Abstract

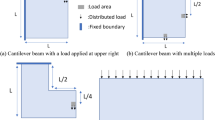

Real-time structural topology optimization (TO) requires making use of the information generated during the TO process. This study proposes a step-to-step training method, built on the solid isotropic material with penalization (SIMP) TO method, to improve the prediction accuracy of deep learning models. By exploiting more of the optimization history (such as the structure density matrix), the step-to-step method extracts more information from each sample, so prediction accuracy improves without increasing the size of the sample set. The method combines several independent deep learning models (sub-models). The sub-models can share the same layers and hyperparameters, and they can be trained in parallel to speed up training. During training, these features make the sub-model parameters easier to tune and reduce the training time. The method is implemented through local end-to-end training. During prediction, the increase in total prediction time is negligible, so the trained models can predict optimized structures in real time. A topology optimization problem that maximizes the first eigenfrequency under three constraint conditions is used to verify the effectiveness of the proposed training method. The method provides an implementation technique for the real-time TO of structures. The authors also provide the deep learning model code and the dataset on GitHub.
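The way the step-to-step idea reuses optimization history can be illustrated with a minimal sketch. It is not the authors' implementation: the checkpoint indices, the pairing of consecutive checkpoints, the helper names (`build_stage_pairs`, `chain_predict`, `make_lookup_model`), and the toy density values are all assumptions made for illustration, and a trivial lookup function stands in for each trained sub-model.

```python
from typing import Callable, List, Sequence, Tuple

Density = List[List[float]]  # structure density matrix, entries in [0, 1]
SubModel = Callable[[Density], Density]


def build_stage_pairs(history: Sequence[Density],
                      checkpoints: Sequence[int]) -> List[Tuple[Density, Density]]:
    """Turn ONE optimization history into one (input, target) pair per sub-model.

    history[i] is the density matrix after SIMP iteration i. The checkpoint
    indices are hypothetical; they only mark where one sub-model hands over
    to the next.
    """
    if len(checkpoints) < 2 or any(a >= b for a, b in zip(checkpoints, checkpoints[1:])):
        raise ValueError("need at least two strictly increasing checkpoints")
    return [(history[a], history[b]) for a, b in zip(checkpoints, checkpoints[1:])]


def chain_predict(sub_models: Sequence[SubModel], start: Density) -> Density:
    """Prediction: feed the output of each sub-model into the next one."""
    density = start
    for model in sub_models:
        density = model(density)
    return density


def make_lookup_model(pair: Tuple[Density, Density]) -> SubModel:
    """Stand-in for a trained network: it memorizes a single training pair."""
    source, target = pair
    return lambda density: target if density == source else density


# Toy history of a 1 x 2 design domain over five iterations (made-up values).
history = [[[0.5, 0.5]], [[0.6, 0.4]], [[0.8, 0.2]], [[0.9, 0.1]], [[1.0, 0.0]]]
pairs = build_stage_pairs(history, checkpoints=[0, 2, 4])
sub_models = [make_lookup_model(p) for p in pairs]  # each could be fit independently
final_density = chain_predict(sub_models, history[0])
print(len(pairs), final_density)
```

In this sketch a single optimization run yields one training pair per sub-model instead of a single (initial, final) pair, which is one plausible reading of how the sample set is used more efficiently without growing. Because each pair is self-contained, the sub-models could be fit independently, consistent with the parallel training described above.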

Data availability

The dataset and the deep learning model code are available on GitHub at https://github.com/893801366/Real-time-topology-optimization-based-on-convolutional-neural-network-by-using-retrain-skill.git.

Funding

This research was financially supported by the National Natural Science Foundation of China (Nos. U1906233 and 11732004), the Key R&D Program of Shandong Province (2019JZZY010801), and the Fundamental Research Funds for the Central Universities (DUT20ZD213 and DUT20LAB308).

Author information

Contributions

All authors contributed to the study conception and design, commented on previous versions of the manuscript, and read and approved the final manuscript. JY: conceptualization, project administration, reviewing and editing. DG: discussion with Jun Yan on the conceptualization, data collection, material preparation, programming, writing of the original draft, reviewing and editing. QX: discussion, reviewing and editing. HL: discussion, reviewing and editing.

Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Informed consent

All authors are aware of the article content and agree to its submission for publication.

Appendix: The total trainable parameters in the model

This table shows the feature map size and the number of trainable parameters in each layer of the two models. Changes in the feature map size indicate where up-sampling and down-sampling are applied. The trainable parameters are the weights and biases of each layer (such as a convolution layer or a down/up-sampling layer), and they are updated during model training. The number of trainable parameters therefore affects the training efficiency. Layers without trainable parameters (such as dropout or add layers) are omitted.

| Feature map size (model with reducing part) | Parameters (model with reducing part) | Feature map size (model without reducing part) | Parameters (model without reducing part) |
|---|---|---|---|
| (221, 31, 1) | 0 | (221, 31, 1) | 0 |
| (221, 31, 8) | 80 | (221, 31, 8) | 80 |
| (220, 30, 32) | 1,056 | (220, 30, 32) | 1,056 |
| (220, 30, 32) | 9,248 | (220, 30, 32) | 9,248 |
| (110, 15, 64) | 8,256 | (110, 15, 64) | 8,256 |
| (110, 15, 64) | 36,928 | (110, 15, 64) | 36,928 |
| (55, 14, 128) | 32,896 | (55, 14, 128) | 32,896 |
| (55, 14, 128) | 147,584 | (55, 14, 128) | 147,584 |
| (27, 7, 256) | 196,864 | (27, 7, 256) | 196,864 |
| (27, 7, 256) | 590,080 | (27, 7, 256) | 590,080 |
| (13, 6, 512) | 786,944 | (13, 6, 512) | 786,944 |
| (27, 7, 256) | 786,688 | (27, 7, 256) | 786,688 |
| (27, 7, 256) | 590,080 | (27, 7, 256) | 590,080 |
| (55, 14, 128) | 196,736 | (55, 14, 128) | 196,736 |
| (55, 14, 128) | 147,584 | (55, 14, 128) | 147,584 |
| (110, 15, 64) | 32,832 | (110, 15, 64) | 32,832 |
| (110, 15, 64) | 36,928 | (110, 15, 64) | 36,928 |
| (220, 30, 32) | 8,224 | (220, 30, 32) | 8,224 |
| (220, 30, 32) | 9,248 | (220, 30, 32) | 9,248 |
| (221, 31, 16) | 2,064 | (221, 31, 16) | 2,064 |
| (221, 31, 16) | 2,320 | (221, 31, 16) | 2,320 |
| (221, 31, 8) | 1,160 | (221, 31, 8) | 1,160 |
| (221, 31, 4) | 292 | (221, 31, 4) | 292 |
| (221, 31, 1) | 37 | (6851, 1) | 187,751,655 |
| Total parameters | 3,624,129 | Total parameters | 191,375,747 |
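The per-layer counts in the table above follow from the usual weights-plus-biases formulas, as the following minimal sketch shows. The kernel sizes used here (3 × 3 and 2 × 2), and the assumption that the final dense layer of the model without the reducing part reads a flattened (221, 31, 4) feature map, are inferred from the tabulated counts; they are not stated in this section. The function names are hypothetical.

```python
def conv2d_params(kernel_h: int, kernel_w: int, c_in: int, c_out: int) -> int:
    """Trainable parameters of a 2D convolution: weights plus one bias per output channel."""
    return (kernel_h * kernel_w * c_in + 1) * c_out


def dense_params(n_in: int, n_out: int) -> int:
    """Trainable parameters of a fully connected layer: weights plus one bias per output."""
    return (n_in + 1) * n_out


# Assumed 3 x 3 kernel, 1 -> 8 channels: matches the (221, 31, 8) row.
first_conv = conv2d_params(3, 3, 1, 8)       # 80
# Assumed 2 x 2 kernel, 8 -> 32 channels: matches the first (220, 30, 32) row.
second_conv = conv2d_params(2, 2, 8, 32)     # 1056
# Assumed 3 x 3 kernel, 32 -> 32 channels: matches the second (220, 30, 32) row.
third_conv = conv2d_params(3, 3, 32, 32)     # 9248
# Assumed dense layer from a flattened (221, 31, 4) map to 221 * 31 = 6851 outputs.
final_dense = dense_params(221 * 31 * 4, 221 * 31)  # 187751655

print(first_conv, second_conv, third_conv, final_dense)
```

Under these assumptions, the single dense output layer accounts for nearly all of the parameters of the model without the reducing part, which is why its total is roughly fifty times larger than that of the model with the reducing part.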

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yan, J., Geng, D., Xu, Q. et al. Real-time topology optimization based on convolutional neural network by using retrain skill. Engineering with Computers 39, 4045–4059 (2023). https://doi.org/10.1007/s00366-023-01846-3

DOI: https://doi.org/10.1007/s00366-023-01846-3