Abstract

This paper presents a comparative analysis of the efficiency of different quantile estimators for various distributions. Additionally, we establish the strong consistency of these quantile estimators and study the Bahadur representation for each of them when the sample is drawn from an NA, \(\varphi \)-mixing, \(\rho ^*\)-mixing or \(\rho \)-mixing population.

1 Introduction

Let \(\{X_n, n\ge 1\}\) be a sequence of identically distributed random variables defined on a fixed probability space \((\Omega , \mathcal {F}, P)\) with a distribution function F. The p-th quantile of F is defined as

$$Q_p=\inf \{x\in {\mathbb {R}}:\ F(x)\ge p\},$$

where \(0<p<1\).
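Because F is nondecreasing and right-continuous, the set \(\{x: F(x)\ge p\}\) is the half-line \([Q_p,\infty )\). Therefore \(Q_p\) can be located numerically by bisection whenever a bracket with \(F(\text {lo})<p\le F(\text {hi})\) is known. The Python sketch below illustrates this definition. The function name `quantile_from_cdf`, the tolerance and the two example CDFs are our own illustrative choices. The Bernoulli example shows that the infimum is attained at a jump of F.

```python
import math

def quantile_from_cdf(F, p, lo, hi, tol=1e-12):
    """Approximate Q_p = inf{x : F(x) >= p} by bisection.

    Assumes F is a nondecreasing, right-continuous CDF and that the bracket
    satisfies F(lo) < p <= F(hi). This invariant is preserved, so Q_p always
    lies in (lo, hi].
    """
    if not (0 < p < 1):
        raise ValueError("p must lie in (0, 1)")
    if not (F(lo) < p <= F(hi)):
        raise ValueError("bracket must satisfy F(lo) < p <= F(hi)")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) >= p:
            hi = mid  # mid belongs to {x : F(x) >= p}, so Q_p <= mid
        else:
            lo = mid  # F(mid) < p, so Q_p > mid
    return hi

def exp_cdf(x):
    # Exponential(1): F(x) = 1 - exp(-x) for x > 0, so Q_p = -log(1 - p)
    return 1.0 - math.exp(-x) if x > 0 else 0.0

def bernoulli_cdf(x):
    # Bernoulli(1/2): F jumps at 0 and at 1, so Q_p = 0 for every 0 < p <= 1/2
    return 0.0 if x < 0 else (0.5 if x < 1 else 1.0)

print(quantile_from_cdf(exp_cdf, 0.5, 0.0, 50.0))        # approximately log 2
print(quantile_from_cdf(bernoulli_cdf, 0.3, -1.0, 2.0))  # approximately 0
```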

Quantiles play an important role in finance, modeling and statistics. In practical applications, quantile estimators are fundamental, as was noticed quite early, e.g. in Galton (1889).

The literature contains numerous quantile estimators. Some were developed for specific distributions, whereas others were designed to be "distribution-free" (in other words, nonparametric estimators), with no assumption about the population density function.

Let \((X_{(1)},X_{(2)},...,X_{(n)})\) be the ordered sample of \((X_1,...,X_n)\), and let \(\lfloor x\rfloor \) denote the integer part of x. Dielman et al. (1994) presented eight different nonparametric estimators of a quantile:

- Weighted average at \(X_{(\lfloor np+0.5\rfloor )}\)

$$\begin{aligned} E_1&=(0.5+\lfloor np+0.5\rfloor -np)X_{(\lfloor np+0.5\rfloor )} \\ &\quad +(0.5-\lfloor np+0.5\rfloor +np)X_{(\lfloor np+0.5\rfloor +1)}, \end{aligned}$$

where \(0.5\le np\le n-0.5\).

Remark 1

It follows from the condition \(0.5\le np\le n-0.5\) that \(\displaystyle n\ge \max \bigg \{\frac{0.5}{1-p},\frac{1}{2p}\bigg \}\).

- Weighted average at \(X_{(\lfloor np\rfloor )}\)

$$\begin{aligned} E_2=(1-(np-\lfloor np\rfloor ))X_{(\lfloor np\rfloor )}+(np-\lfloor np\rfloor )X_{(\lfloor np\rfloor +1)}. \end{aligned}$$

- Lower empirical cumulative distribution function (CDF) value

$$\begin{aligned} E_3=X_{(\lfloor np\rfloor )}. \end{aligned}$$

- Upper empirical cumulative distribution function (CDF) value

$$\begin{aligned} E_4=X_{(\lfloor np\rfloor +1)}. \end{aligned}$$

- Observation numbered closest to np

$$\begin{aligned} E_5=\left\{ \begin{array}{ll} X_{(\lfloor np\rfloor )},&{}\text {if}\ \ np-\lfloor np\rfloor <0.5\\ X_{(\lfloor np\rfloor +1)},&{}\text {if} \ \ np-\lfloor np\rfloor \ge 0.5 \end{array}\right. . \end{aligned}$$

- Empirical cumulative distribution function (CDF)

$$\begin{aligned} E_6=\left\{ \begin{array}{ll} X_{(\lfloor np\rfloor )},&{}\text {if}\ \ np-\lfloor np\rfloor =0\\ X_{(\lfloor np\rfloor +1)},&{}\text {if} \ \ np-\lfloor np\rfloor > 0 \end{array}\right. . \end{aligned}$$

- Weighted average at \(X_{(\lfloor (n+1)p\rfloor )}\)

$$\begin{aligned} E_7&=(1-((n+1)p-\lfloor (n+1)p\rfloor ))X_{(\lfloor (n+1)p\rfloor )}\\ &\quad +((n+1)p-\lfloor (n+1)p\rfloor )X_{(\lfloor (n+1)p\rfloor +1)}. \end{aligned}$$

- Empirical cumulative distribution function (CDF) with averaging

$$\begin{aligned} E_8=\left\{ \begin{array}{ll} \frac{X_{(\lfloor np\rfloor )}+X_{(\lfloor np\rfloor +1)}}{2},&{}\text {if}\ \ np-\lfloor np\rfloor =0\\ X_{(\lfloor np\rfloor +1)},&{}\text {if} \ \ np-\lfloor np\rfloor > 0 \end{array}\right. . \end{aligned}$$
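The eight definitions above translate directly into code. The following Python sketch uses only the standard library and computes all eight estimators from one sample, with 1-based order statistics as in the formulas. The function name `dielman_estimators` is ours and does not come from Dielman et al. (1994). The function raises an error whenever a formula would need \(X_{(0)}\) or \(X_{(n+1)}\); for \(E_1\), this corresponds to violating the condition in Remark 1. The tests on \(np-\lfloor np\rfloor \) are performed in floating point. When np is mathematically an integer but is not computed exactly, passing p as a `fractions.Fraction` avoids a spurious nonzero fractional part.

```python
import math

def dielman_estimators(sample, p):
    """Return the quantile estimators E_1, ..., E_8 as a dict keyed "E1".."E8".

    X(k) is the k-th smallest observation (1-based). A ValueError is raised
    when a formula needs X_(0) or X_(n+1), e.g. E_1 outside 0.5 <= np <= n - 0.5.
    """
    x = sorted(sample)
    n = len(x)

    def X(k):
        if not 1 <= k <= n:
            raise ValueError(f"X_({k}) is undefined for n = {n}")
        return x[k - 1]

    def interp(k, w):
        # (1 - w) X_(k) + w X_(k+1); X_(k+1) is only accessed when its weight w > 0
        value = (1 - w) * X(k)
        if w > 0:
            value += w * X(k + 1)
        return value

    np_ = n * p
    j = math.floor(np_)            # floor(np)
    g = np_ - j                    # np - floor(np)
    k1 = math.floor(np_ + 0.5)     # floor(np + 0.5), used by E_1
    m = math.floor((n + 1) * p)    # floor((n + 1)p), used by E_7

    return {
        "E1": interp(k1, np_ + 0.5 - k1),   # weight on X_(k1+1) is 0.5 - k1 + np
        "E2": interp(j, g),
        "E3": X(j),
        "E4": X(j + 1),
        "E5": X(j) if g < 0.5 else X(j + 1),
        "E6": X(j) if g == 0 else X(j + 1),
        "E7": interp(m, (n + 1) * p - m),
        "E8": (X(j) + X(j + 1)) / 2 if g == 0 else X(j + 1),
    }

print(dielman_estimators(range(1, 11), 0.25))
```

For the sample 1, ..., 10 and p = 0.25 we have np = 2.5, so \(E_2\) interpolates halfway between \(X_{(2)}\) and \(X_{(3)}\), while \(E_1\) puts all its weight on \(X_{(3)}\).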

The most common estimator studied in many papers is \(E_4\). Bahadur (1966) first established an elegant representation for a sample quantile in terms of the empirical distribution function based on independent and identically distributed samples.

Let \(\displaystyle F_n(x) = {1\over n}\sum _{i=1}^n I[X_i\le x]\), \( x\in {\mathbb {R}} \), \(n\ge 1\) be the empirical distribution function for the sample \((X_1, X_2, \dots ,X_ n)\).
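The empirical distribution function is simple to evaluate once the sample is sorted. \(F_n(x)\) is the number of observations not exceeding x divided by n, which is exactly a right binary search. The following Python sketch is a minimal illustration; the helper name `ecdf` is ours.

```python
from bisect import bisect_right

def ecdf(sample):
    """Return F_n, the empirical distribution function of the sample.

    F_n(x) = (1/n) * #{i : X_i <= x}; the sample is sorted once and each
    evaluation is a binary search.
    """
    xs = sorted(sample)
    n = len(xs)

    def F_n(x):
        # bisect_right counts observations <= x, matching the indicator I[X_i <= x]
        return bisect_right(xs, x) / n

    return F_n

F_n = ecdf([3.0, 1.0, 2.0, 2.0])
print(F_n(0.5), F_n(2.0), F_n(3.0))  # 0.0 0.75 1.0
```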

Theorem 1

(Bahadur (1966)) Let \(0< p < 1\) and \(\{X_n, n \ge 1\}\) be a sequence of independent identically distributed random variables with the distribution function F. Assume that F has at least two derivatives in some neighborhood of \(Q_p\) and \(F'(Q_p)~=~f(Q_p) > 0\). Then

$$E_4=Q_p+\frac{p-F_n(Q_p)}{f(Q_p)}+R_n,\qquad R_n=O\big (n^{-3/4}\log n\big )\quad \text {a.s.}$$

In the following years, many researchers studied the Bahadur representation of sample quantiles for dependent sequences. This is an important problem for practical applications, because in practice we often deal with samples that have different dependence structures. Sen (1972), Babu and Singh (1978), Yang et al. (2019) and Wu et al. (2021) obtained the Bahadur representation for \(\varphi \)-mixing sequences. Yoshihara (1995), Sun (2006), Wang et al. (2011) and Zhang et al. (2014) obtained it for \(\alpha \)-mixing (strongly mixing) sequences, and Xing and Yang (2019) obtained it for \(\psi \)-mixing sequences. For negatively dependent structures, Ling (2008), Xing and Yang (2011) and Xu et al. (2013) considered this problem for negatively associated (NA) sequences, and Li et al. (2011) studied it for negatively orthant dependent (NOD) random variables.
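Bahadur's representation approximates the sample quantile by the linear term \(Q_p+(p-F_n(Q_p))/f(Q_p)\). The remainder is of smaller order than the estimation error itself. In the i.i.d. case, this can be checked by simulation. The Python sketch below assumes uniform observations on [0, 1], for which \(Q_p=p\) and \(f(Q_p)=1\), and it uses \(E_4=X_{(\lfloor np\rfloor +1)}\) as the sample quantile. The function name, sample sizes and seed are arbitrary illustrative choices.

```python
import math
import random
from bisect import bisect_right

def bahadur_remainder(n, p, seed=0):
    """Simulate U(0,1) data; return (E_4 - Q_p, Bahadur remainder).

    For U(0,1), Q_p = p and f(Q_p) = 1, so the linear term is p - F_n(p).
    """
    rng = random.Random(seed)
    xs = sorted(rng.random() for _ in range(n))
    e4 = xs[math.floor(n * p)]             # X_(floor(np)+1) in 0-based indexing
    q_p, f_q = p, 1.0
    F_n_at_q = bisect_right(xs, q_p) / n   # empirical CDF evaluated at Q_p
    linear = (p - F_n_at_q) / f_q
    return e4 - q_p, e4 - q_p - linear

for n in (100, 10_000, 100_000):
    err, rem = bahadur_remainder(n, 0.5)
    print(f"n={n:>7}: E4 - Q_p = {err:+.2e}, remainder = {rem:+.2e}")
```

As n grows, the remainder column should shrink noticeably faster than the error column.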

Below, we present the definitions of four types of dependence of random variables that will be considered in this work.

Definition 1

(Joag-Dev and Proschan (1983)) A finite family of random variables \(\{X_i, 1\le i\le n\}\) is said to be negatively associated (NA) if for every pair of disjoint subsets A and B of \(\{1,2,...,n\}\), we have

$$\text {Cov}\big (f_1(X_i, i\in A),\, f_2(X_j, j\in B)\big )\le 0,$$

whenever \(f_1\) and \(f_2\) are coordinatewise nondecreasing, provided the covariance exists. An infinite family of random variables is said to be NA if every finite subfamily is NA.

Many authors have investigated the statistical properties of NA random variables. For example, Joag-Dev and Proschan (1983) studied the fundamental properties of NA, Yang (2003) investigated the uniform asymptotic normality of the regression weighted estimator for NA samples, Liang and Jing (2005) presented asymptotic properties of estimators in nonparametric regression models based on NA sequences, and Liang et al. (2006) studied asymptotic properties of estimators in a semiparametric regression model based on a linear process with NA innovations.

Remark 2

Increasing functions defined on disjoint subsets of a set of NA random variables are NA random variables.

Example 1

(Joag-Dev and Proschan (1983)) Let \({\textbf{Z}}=(Z_1,...,Z_k)\) be a vector having a multinomial distribution, obtained by taking only one observation. Thus only one \(Z_i\) is 1 while the rest are zero. The NA property for \({\textbf{Z}}\) trivially follows from Definition 1. Since the general multinomial is a convolution of independent copies of \({\textbf{Z}}\), the closure property (Remark 2) establishes NA in this case.
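Taking \(A=\{i\}\), \(B=\{j\}\) and \(f_1\), \(f_2\) equal to the identity in Definition 1 shows that NA implies pairwise nonpositive covariances. For the general multinomial vector \((N_1,\dots ,N_k)\), obtained as a sum of N independent copies of \({\textbf{Z}}\) with cell probabilities \(\pi _1,\dots ,\pi _k\), one has \(\text {Cov}(N_i,N_j)=-N\pi _i\pi _j\) for \(i\ne j\). The Python simulation below checks this sign empirically. The parameters and the function name are illustrative. Negative covariance is only a consequence of NA and not a proof of it.

```python
import random

def multinomial_cov(n_trials, probs, i, j, reps=20_000, seed=0):
    """Monte Carlo estimate of Cov(N_i, N_j) for multinomial cell counts."""
    rng = random.Random(seed)
    k = len(probs)
    ni_vals, nj_vals = [], []
    for _ in range(reps):
        counts = [0] * k
        # a multinomial vector is a sum of independent one-observation vectors Z
        for cell in rng.choices(range(k), weights=probs, k=n_trials):
            counts[cell] += 1
        ni_vals.append(counts[i])
        nj_vals.append(counts[j])
    mean_i = sum(ni_vals) / reps
    mean_j = sum(nj_vals) / reps
    return sum((a - mean_i) * (b - mean_j)
               for a, b in zip(ni_vals, nj_vals)) / (reps - 1)

probs = [0.2, 0.3, 0.5]
est = multinomial_cov(10, probs, 0, 1)
print(f"simulated {est:.3f}, theoretical {-10 * probs[0] * probs[1]:.3f}")
```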

Definition 2

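(Rozanov and Volkonski (1959)) A sequence of random variables \(\{X_n, n\ge 1\}\) is said to be \(\varphi \)-mixing if

$$\varphi (n)=\sup _{k\ge 1}\ \sup \big \{|P(B\mid A)-P(B)|:\ A\in {\mathcal {F}}_1^k,\ P(A)>0,\ B\in {\mathcal {F}}_{k+n}^{\infty }\big \}\rightarrow 0$$

as \(n\rightarrow \infty \), where \({\mathcal {F}}_n^m=\sigma (X_i, n\le i\le m)\).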

The \(\varphi \)-mixing property has been studied by many researchers. Utev (1990) studied the central limit theorem, Chen et al. (2009) investigated the limiting behavior of moving average processes, and Yang et al. (2012) obtained a Berry-Esseen bound for sample quantiles. More information on \(\varphi \)-mixing can be found in Chapter 4 of Billingsley (1968).

Example 2

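(Wu et al. (2021)) Let \(\{\epsilon _n, n\ge 1\}\) be a sequence of independent and identically distributed random variables with zero mean and a finite variance. Define

$$X_n=\sum _{k=0}^{m}a_k\epsilon _{n-k}$$

for some positive integer m and constants \(a_k\), \(k=0,1,...,m\). Then \(\{X_n, n\ge 1\}\) is known as a moving average process of order m. It can be verified that \(\{X_n, n\ge 1\}\) is a \(\varphi \)-mixing process. Indeed, for \(j>m\) the variables \(X_n\) and \(X_{n+j}\) are built from disjoint sets of innovations, so the process is m-dependent and \(\varphi (j)=0\) for \(j>m\).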
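The m-dependence in Example 2 is also visible in the autocovariances. With \(\text {Var}\,\epsilon _n=\sigma ^2\), one has \(\text {Cov}(X_n,X_{n+h})=\sigma ^2\sum _{k=0}^{m-h}a_ka_{k+h}\) for \(0\le h\le m\) and 0 for \(h>m\). The Python sketch below computes this exact autocovariance and compares it with a sample estimate from a simulated path. It assumes Gaussian innovations for the simulation; the coefficients, path length, seed and function names are arbitrary.

```python
import random

def ma_autocov(a, h, sigma2=1.0):
    """Exact lag-h autocovariance of X_n = sum_{k=0}^m a_k eps_{n-k}."""
    m = len(a) - 1
    if h > m:
        return 0.0  # X_n and X_{n+h} share no innovation (m-dependence)
    return sigma2 * sum(a[k] * a[k + h] for k in range(m - h + 1))

def simulate_ma(a, length, seed=0):
    """Simulate a path of the MA(m) process with N(0, 1) innovations."""
    rng = random.Random(seed)
    m = len(a) - 1
    eps = [rng.gauss(0.0, 1.0) for _ in range(length + m)]
    # eps[t + m - k] plays the role of eps_{t-k}
    return [sum(a[k] * eps[t + m - k] for k in range(m + 1)) for t in range(length)]

def sample_autocov(x, h):
    """Sample autocovariance at lag h (divisor n)."""
    n = len(x)
    mean = sum(x) / n
    return sum((x[t] - mean) * (x[t + h] - mean) for t in range(n - h)) / n

a = [1.0, 0.5, 0.25]  # an MA(2) example
path = simulate_ma(a, 50_000)
for h in range(5):
    print(h, ma_autocov(a, h), round(sample_autocov(path, h), 3))
```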

Definition 3

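(Bradley (1992)) A sequence of random variables \(\{X_n,n\ge 1\}\) is said to be \(\rho ^*\)-mixing if

$$\rho ^*(n)=\sup \Big \{\rho \big (\sigma (S),\sigma (T)\big ):\ S,T\subset {\mathbb {N}}\ \text {finite, nonempty},\ \text {dist}(S,T)\ge n\Big \}\rightarrow 0$$

as \(n\rightarrow \infty \), where

$$\rho \big (\sigma (S),\sigma (T)\big )=\sup \bigg \{\frac{|\text {Cov}(\xi ,\eta )|}{\sqrt{\text {Var}\,\xi \,\text {Var}\,\eta }}:\ \xi \in L^2(\sigma (S)),\ \eta \in L^2(\sigma (T))\bigg \},$$

\(\displaystyle \text {dist}(S,T)=\min _{i\in S, j\in T}|j-i|\), and \(\sigma (S)\) and \(\sigma (T)\) are the \(\sigma \)-fields generated by \(\{X_i, i\in S\}\) and \(\{X_j, j\in T\}\), respectively.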

\(\rho ^*\)-mixing random variables have been studied extensively. Bradley (1992) obtained the central limit theorem, Bryc and Smolenski (1993), Peligrad and Gut (1999) and Utev and Peligrad (2003) presented moment inequalities, and Sung (2010) analysed the complete convergence of weighted sums of \(\rho ^*\)-mixing random variables.

Remark 3

Note that increasing functions defined on disjoint subsets of a \(\rho ^*\)-mixing field \(\{X_k, k\in N^d\}\) with mixing coefficients \(\rho ^*(s)\) are also \(\rho ^*\)-mixing, with coefficients not greater than \(\rho ^*(s)\).

Example 3

(Wang et al. (2019)) Let \(\{X_n, n\ge 1\}\) be a strictly stationary, finite-state, irreducible and aperiodic Markov chain. Then it is a \(\rho ^*\)-mixing process with \(\rho ^*(k)=o(e^{-Ck})\) for some \(C>0\).
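For a two-state chain, the geometric decay in Example 3 can be made explicit. Take the transition matrix \(P=\begin{pmatrix}1-a&a\\ b&1-b\end{pmatrix}\) with \(0<a,b<1\). For this chain, the stationary correlation satisfies \(\text {Corr}(X_0,X_k)=\lambda ^k\) with \(\lambda =1-a-b\), the second eigenvalue of P. The Python sketch below computes this correlation from matrix powers. Because \(|\text {Corr}(X_0,X_k)|\le \rho ^*(k)\), this only illustrates the exponential rate and does not compute \(\rho ^*\) itself. The values of a and b and the helper names are arbitrary.

```python
def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_pow(P, k):
    """k-th power of a square matrix by repeated multiplication."""
    R = [[1.0 if i == j else 0.0 for j in range(len(P))] for i in range(len(P))]
    for _ in range(k):
        R = mat_mul(R, P)
    return R

def stationary_corr(a, b, k):
    """Corr(X_0, X_k) for the stationary two-state chain on {0, 1}."""
    P = [[1 - a, a], [b, 1 - b]]
    pi1 = a / (a + b)              # stationary probability of state 1
    Pk = mat_pow(P, k)
    joint11 = pi1 * Pk[1][1]       # P(X_0 = 1, X_k = 1)
    cov = joint11 - pi1 * pi1
    var = pi1 * (1 - pi1)
    return cov / var

a, b = 0.2, 0.3
for k in range(1, 6):
    print(k, stationary_corr(a, b, k), (1 - a - b) ** k)
```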

Definition 4

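(Kolmogorov and Rozanov (1960)) A sequence of random variables \(\{X_n, n\ge 1\}\) is said to be \(\rho \)-mixing if

$$\rho (n)=\sup _{k\ge 1}\ \sup \bigg \{\frac{|\text {Cov}(\xi ,\eta )|}{\sqrt{\text {Var}\,\xi \,\text {Var}\,\eta }}:\ \xi \in L^2({\mathcal {F}}_1^k),\ \eta \in L^2({\mathcal {F}}_{k+n}^{\infty })\bigg \}\rightarrow 0$$

as \(n\rightarrow \infty \), where \({\mathcal {F}}_n^m=\sigma (X_i, n\le i\le m)\) and \(L^2(\cdot )\) is the set of real-valued square-integrable functions.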

The \(\rho \)-mixing condition was introduced by Kolmogorov and Rozanov (1960). Shao (1995) explored the central limit theorem, the law of large numbers and the complete convergence of \(\rho \)-mixing sequences.

Example 4

(Peligrad (1987)) Suppose that \(\{Y_k, k\ge 1\}\) and \(\{Z_k, k\ge 1\}\) are independent random variables with the common standard normal distribution function F, and consider the sequence

Since \(\rho (2)=0\), the sequence \(\{X_k^{(\alpha )}, k\ge 1\}\) is \(\rho \)-mixing, with \(\displaystyle \sigma _n^2=\text {Var}\bigg (\sum _{k=1}^nX_k^{(\alpha )}\bigg )=2+n\alpha ,\) \(\inf _n \frac{\sigma _n^2}{n}=\alpha \) and \(E(X_k^{(\alpha )})^2=2+\alpha .\)

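The following Rosenthal-type inequality will be important in further considerations:

$$E\bigg |\sum _{i=1}^{n}X_i\bigg |^q\le C_q\bigg \{\sum _{i=1}^{n}E|X_i|^q+\bigg (\sum _{i=1}^{n}EX_i^2\bigg )^{q/2}\bigg \},\tag{2}$$

where \(C_q>0\), \(q\ge 2\), \(EX_n=0\) and \(E|X_n|^q<\infty \) for every \(n\ge 1.\)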
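Consider Rosenthal-type bounds of the form \(E|\sum _{i=1}^nX_i|^q\le C_q\{\sum _{i=1}^nE|X_i|^q+(\sum _{i=1}^nEX_i^2)^{q/2}\}\) in the simplest independent case, which is a special case of NA. Take i.i.d. Rademacher variables, \(P(X_i=1)=P(X_i=-1)=1/2\), and \(q=4\). Then \(\sum E|X_i|^4=n\) and \((\sum EX_i^2)^2=n^2\), while \(ES_n^4=3n^2-2n\). Hence the ratio of the left side to the bracket stays below 3, and the second term dominates for large n. The Python sketch below verifies \(ES_n^4\) exactly by summing over the binomial distribution of the number of +1's. It is a toy illustration only; the function name is ours.

```python
from fractions import Fraction
from math import comb

def fourth_moment_rademacher_sum(n):
    """Exact E S_n^4 for S_n = X_1 + ... + X_n with i.i.d. Rademacher X_i."""
    # S_n = 2K - n, where K ~ Binomial(n, 1/2) counts the +1's
    return sum(Fraction(comb(n, k), 2 ** n) * (2 * k - n) ** 4 for k in range(n + 1))

for n in (1, 5, 10, 100):
    lhs = fourth_moment_rademacher_sum(n)
    bracket = n + n ** 2   # sum E|X_i|^4 + (sum E X_i^2)^2
    print(n, lhs, lhs == 3 * n * n - 2 * n, float(lhs / bracket))
```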

Remark 4

Inequality (2) holds for NA random variables (Shao and Su (1999)), for \(\rho ^*\)-mixing random variables (Peligrad and Gut (1999)) and for \(\varphi \)-mixing random variables under an additional condition on the mixing coefficients \(\varphi (n), n\ge 1\) (Wu et al. (2021)).

For \(\rho \)-mixing random variables, the following inequality plays the same role as (2).

Lemma 1

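(Shao (1995)) Let \(q\ge 2\) and \(\{X_n, n\ge 1\}\) be a sequence of \(\rho \)-mixing random variables. Assume that \(EX_n=0\), \(E|X_n|^q<\infty \) and

$$\sum _{i=1}^{\infty }\rho ^{2/q}(2^i)<\infty .$$

Then there exists a positive constant \(K=K(q,\rho (\cdot ))\) depending only on q and \(\rho (\cdot )\) such that for any \(k\ge 0\), \(n\ge 1\),

$$E\max _{1\le i\le n}\bigg |\sum _{j=k+1}^{k+i}X_j\bigg |^q\le K\bigg (n^{q/2}\max _{k<i\le k+n}\big (EX_i^2\big )^{q/2}+n\max _{k<i\le k+n}E|X_i|^q\bigg ).$$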
The first aim of this paper is a comparative analysis of the effectiveness of each estimator, depending on the distribution from which the sample is drawn. For this purpose, in Section 2 we compare how closely the values of the above estimators, computed from samples drawn from populations with different distributions, match the theoretical quantile. The second aim is to show the strong consistency of each estimator and to establish the Bahadur representation for each estimator when the sample is drawn from an NA, \(\varphi \)-mixing, \(\rho ^*\)-mixing or \(\rho \)-mixing population.

2 Comparative analysis of estimators

The following well-known probability distributions were used in the comparative analysis of the estimators:

- Normal distribution with \(\mu =0\), \(\sigma =4\),
- Student’s t distribution with 3 degrees of freedom,
- Weibull distribution with scale \(\lambda =1\) and shape \(k=5\),
- Uniform distribution on the interval [0,2],
- \(\chi ^2\) distribution with 3 degrees of freedom,
- Exponential distribution with \(\lambda =1\).

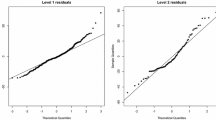

The analysis was carried out for the sample sizes \(n\in \{50,150,555,1130, 2165\}\) and for \(p\in \{0.025, 0.5, 0.975\}\). In each case, the theoretical value of the quantile was computed using the R software. Next, samples of each size n were drawn and each quantile estimator was calculated. The experiment was repeated 1000 times in each case, and the mean square error (MSE) was computed.
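The simulation design described above can be sketched in Python; the authors used R, and this is not their code. The sketch is restricted to the distributions whose quantile functions are available in closed form or in the Python standard library (normal, Weibull, uniform and exponential). It compares only \(E_4\) and \(E_7\). Other estimators from the list can be plugged in as functions of the sorted sample and p. The seeds, helper names and sample size in the loop are illustrative choices, and the output is not meant to reproduce the paper's results.

```python
import math
import random
from statistics import NormalDist

def e4(xs_sorted, p):
    # E_4 = X_(floor(np)+1), i.e. index floor(np) in 0-based terms
    return xs_sorted[math.floor(len(xs_sorted) * p)]

def e7(xs_sorted, p):
    # E_7: weighted average of X_(m) and X_(m+1) with m = floor((n+1)p)
    n = len(xs_sorted)
    m = math.floor((n + 1) * p)
    w = (n + 1) * p - m
    value = (1 - w) * xs_sorted[m - 1]
    if w > 0:
        value += w * xs_sorted[m]
    return value

DISTRIBUTIONS = {
    # name: (sampler taking a random.Random instance, exact quantile function)
    "normal(0, 4)": (lambda r: r.gauss(0.0, 4.0), NormalDist(0.0, 4.0).inv_cdf),
    "weibull(1, 5)": (lambda r: r.weibullvariate(1.0, 5.0),
                      lambda p: (-math.log(1 - p)) ** (1 / 5)),
    "uniform[0, 2]": (lambda r: r.uniform(0.0, 2.0), lambda p: 2 * p),
    "exponential(1)": (lambda r: r.expovariate(1.0), lambda p: -math.log(1 - p)),
}

def mse(estimator, dist, n, p, reps=1000, seed=0):
    """Monte Carlo MSE of `estimator` for the p-quantile of `dist`."""
    sampler, quantile = DISTRIBUTIONS[dist]
    rng = random.Random(seed)
    q_true = quantile(p)
    total = 0.0
    for _ in range(reps):
        xs = sorted(sampler(rng) for _ in range(n))
        total += (estimator(xs, p) - q_true) ** 2
    return total / reps

for dist in DISTRIBUTIONS:
    for p in (0.025, 0.5, 0.975):
        print(dist, p, round(mse(e4, dist, 50, p), 5), round(mse(e7, dist, 50, p), 5))
```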

For the normal distribution, the best results were obtained for the estimators \(E_4\), \(E_6\) and \(E_8\). The estimator \(E_1\) was the only one that gave better results for small p. For \(p=0.5\), the results for all estimators are similar. The results for Student’s t distribution are similar to those for the normal distribution: again, the best results were obtained for \(E_4\), \(E_6\) and \(E_8\). The estimators \(E_2\), \(E_3\) and \(E_5\) gave much worse results than the other estimators for small p and quite good results for high p. Again, the results for \(p=0.5\) are similar for all estimators.

For the Weibull distribution, the errors are much smaller than for the normal and Student’s t distributions. Only \(E_1\) gives a better result for small p than for large p. As the sample size grows, the differences between the errors of the individual estimators practically vanish. For the uniform distribution, the errors are also smaller than for the normal and Student’s t distributions. In this case, the estimator \(E_7\) gave the best results. Additionally, \(E_1\), \(E_2\), \(E_3\) and \(E_5\) gave better results for small p than the others.

For the \(\chi ^2\) distribution, \(E_2\) gave the best results, and good results were also obtained for \(E_4\), \(E_5\), \(E_6\) and \(E_8\). Each of the estimators performs worse at high p than at low p. For the exponential distribution, the greatest differences between the results are seen for high p. The best results are given by \(E_2\), but \(E_4\), \(E_5\), \(E_6\) and \(E_8\) also give quite good results.

Tables 1 and 2 assess the fit of each estimator for each distribution, for \(p=0.025\) and \(p=0.975\), respectively. Within a given distribution, the estimator with the best fit receives three pluses and the estimator with the worst fit receives a minus. For \(p=0.5\), the errors for a given distribution were comparable across all estimators.

In conclusion, none of the estimators performed uniformly well across distributions for both low and high p. Different estimators turn out to be the best, depending on the distribution. Only for \(p=0.5\) is the fit of all estimators at a very similar level for a given distribution. A conclusion common to all estimators is that, as the sample size increases, the error decreases and the results for the individual estimators become very similar. However, financial and practical constraints do not always allow one to obtain a sample of 1000 or 2000 elements. That is why it is so important to choose an appropriate estimator for a given distribution, especially when the research is based on a small sample.

3 Strong consistency and Bahadur representation

In this section we study the strong consistency of quantile estimators and the Bahadur representation for sample quantiles. Detailed arguments are given for the estimator \(E_1\).

Theorem 2

Let \(\{X_n, n\ge 1\}\) be a sequence of random variables with one of the following dependence structures:

1. NA dependence,
2. \(\varphi \)-mixing dependence with coefficients satisfying \(\displaystyle \sum _{n=1}^{\infty }\varphi (2^n)<\infty \),
3. \(\rho ^*\)-mixing dependence,
4. \(\rho \)-mixing dependence with coefficients satisfying \(\displaystyle \sum _{n=1}^{\infty }\rho ^{\frac{2}{q}}(2^n)<\infty \) with \(\displaystyle q>\frac{1}{\delta }\), where \(\displaystyle 0<\delta <\frac{1}{2}\).

Let \(\{X_n, n\ge 1\}\) be identically distributed with a common distribution function F and quantile \(Q_p\). Assume that F possesses a positive continuous density f in some neighborhood \(\mathfrak {D}_p\) of \(Q_p\) such that \( 0< \sup \{f(x); x \in \mathfrak {D}_p \} <\infty . \) Moreover, we assume that \(f'(x)\) is defined in some neighborhood \(\mathfrak {D}_p\) of \(Q_p\),

Then for any \(0<\delta <\frac{1}{2}\)

Proof

It is easy to see that for every \(\varepsilon >0\)

One can obtain that

Put \(\displaystyle \xi _{ni}=I(X_i\le Q_p+\varepsilon n^{-\frac{1}{2}+\delta })-F(Q_p+\varepsilon n^{-\frac{1}{2}+\delta })\) for \(1\le i\le n\). Therefore, we get

Note that, using Taylor’s expansion:

we can obtain that there exists some constant \(c(\varepsilon )>0 \), depending only on \(\varepsilon >0\), such that for a sufficiently large n

Hence, on the basis of Eqs. (6), (7), Markov’s inequality and Eq. (2) (for NA, \(\varphi \)-mixing and \(\rho ^*\)-mixing random variables) or Lemma 1 (for \(\rho \)-mixing random variables), for \(\displaystyle r>\frac{1}{\delta }\), where \(\displaystyle 0<\delta <\frac{1}{2}\), we obtain the following bound

An analogous argument applies to \(A_n^2\), using the lower bound for \(E_1\) in Eq. (5). Hence, we get \(\displaystyle \sum _{n=1}^{\infty } P\bigg [ E_1\le Q_p-\varepsilon n^{-\frac{1}{2}+\delta }\bigg ] <\infty .\) By the Borel-Cantelli lemma, Eq. (4) follows.

\(\square \)
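The following sketch shows where the condition \(r>1/\delta \) enters the proof. It relies on an assumption of ours, taken as a consequence of Eqs. (6) and (7): for large n, the event \(\{E_1\ge Q_p+\varepsilon n^{-\frac{1}{2}+\delta }\}\) is contained in \(\{|\sum _{i=1}^n\xi _{ni}|\ge c(\varepsilon )n^{\frac{1}{2}+\delta }\}\). Since \(E\xi _{ni}=0\) and \(|\xi _{ni}|\le 1\), we have \(E|\xi _{ni}|^r\le 1\) and \(E\xi _{ni}^2\le 1\). Markov's inequality and the Rosenthal-type bound with constant \(C_r\) then give, using \(n\le n^{r/2}\) for \(r\ge 2\):

$$\begin{aligned} P\bigg (\Big |\sum _{i=1}^n\xi _{ni}\Big |\ge c(\varepsilon )\,n^{\frac{1}{2}+\delta }\bigg ) &\le \frac{E\big |\sum _{i=1}^n\xi _{ni}\big |^r}{c(\varepsilon )^r\,n^{r(\frac{1}{2}+\delta )}} \le \frac{C_r\big (n+n^{r/2}\big )}{c(\varepsilon )^r\,n^{\frac{r}{2}+r\delta }} \le \frac{2C_r}{c(\varepsilon )^r}\,n^{-r\delta }. \end{aligned}$$

The series \(\sum _n n^{-r\delta }\) converges exactly when \(r\delta >1\), that is, when \(r>1/\delta \). Since \(0<\delta <\frac{1}{2}\), such an r automatically satisfies \(r>2\). Lemma 1 yields a bound of the same order \(K(n^{r/2}+n)\) for \(\rho \)-mixing sequences.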

Remark 5

One can obtain analogous results for the following estimators:

- \(E_2\): using the fact that \(X_{(\lfloor np\rfloor )}\le E_2\le X_{(\lfloor np\rfloor +1)}\),
- \(E_3\): the calculations are similar to those for the estimator \(E_4\), which was considered in Dudek and Kuczmaszewska (2022),
- \(E_5\) and \(E_6\): the calculations are analogous to those for \(E_3\) and \(E_4\), because the proof does not depend on the value of \(np-\lfloor np\rfloor \),
- \(E_7\): using the fact that \(X_{(\lfloor (n+1)p\rfloor )}\le E_7\le X_{(\lfloor (n+1)p\rfloor +1)}\),
- \(E_8\): it is a combination of the estimators \(E_3\) and \(E_4\).

Now, let us focus on the Bahadur representation of sample quantiles.

Theorem 3

Let \(\{X_n, n\ge 1\}\) be a sequence of random variables with one of the following dependence structures:

1. NA dependence,
2. \(\rho ^*\)-mixing dependence.

Let \(\{X_n, n\ge 1\}\) be identically distributed with a common distribution function F and quantile \(Q_p\). Assume that F possesses a positive continuous density f in some neighborhood \(\mathfrak {D}_p\) of \(Q_p\) such that

Then for any \( \delta >0\)

where \(\mathfrak {I}_n=\big [ Q_p-c_0n^{-\frac{1}{4}+\delta },Q_p+c_0n^{-\frac{1}{4}+\delta }\big ]\) for some \(c_0>0.\)

Proof

Let \(\{a_n, n\ge 1\}\) and \(\{b_n, n\ge 1\}\) be two sequences defined as follows

and

For each \(n\in {\mathbb {N}}\) and any integer j we define

Since \(F_n\) and F are nondecreasing we get for \(x\in \mathfrak {J}_{j,n}\)

and

Hence

By the mean value theorem and (8), it is easy to see that

Therefore we obtain

Moreover, we note that

where \(Y_i^{Q_p}=E(I[X_i\le Q_p])-I[X_i\le Q_p]\) and \(Y_i^{(j,n)} = E(I[X_i\le \eta _{j,n}])-I[X_i\le \eta _{j,n}]\), \(-b_n \le j \le b_n\) are respectively NA or \(\rho ^*\)-mixing random variables.

It follows from Markov’s inequality and Eq. (2) (for NA and \(\rho ^*\)-mixing random variables) that for \(\displaystyle r> \max \bigg \{2,\frac{5}{4\delta }\bigg \}\), where \(\displaystyle \delta >0\), we have

By the Borel-Cantelli lemma, the assertion follows. \(\square \)

Theorem 4

Let \(\{X_n, n\ge 1\}\) be a sequence of random variables with one of the following dependence structures:

1. \(\varphi \)-mixing dependence with coefficients satisfying \(\displaystyle \sum _{n=1}^{\infty }\varphi (2^n)<\infty \),
2. \(\rho \)-mixing dependence with coefficients satisfying \(\displaystyle \sum _{n=1}^{\infty }\rho ^{\frac{2}{q}}(2^n)<\infty \) with \(q> \max \bigg \{2,{5\over 2\delta }\bigg \}\), where \(\delta >0\).

Let \(\{X_n, n\ge 1\}\) be identically distributed with a common distribution function F and quantile \(Q_p\). Assume that F possesses a positive continuous density f in some neighborhood \(\mathfrak {D}_p\) of \(Q_p\) such that

Then for any \( \delta >0\)

where \(\mathfrak {I}_n=\big [ Q_p-c_0n^{-\frac{1}{2}+\delta },Q_p+c_0n^{-\frac{1}{2}+\delta }\big ]\) for some \(c_0>0.\)

Proof

The proof of Theorem 4 is very similar to the proof of Theorem 3. Analogously, let \(\{a_n, n\ge 1\}\) and \(\{b_n, n\ge 1\}\) be two sequences defined as follows

and

Let \(\eta _{j,n}\), \(\alpha _{j,n}\) and \(\mathfrak {J}_{j,n}\) be defined as in Theorem 3.

As in the proof of Theorem 3, it is easy to see that

Therefore we get

Next, we have

where \(Y_i^{(j,n)} = I[Q_p< X_i\le \eta _{j,n}]\), \(-b_n \le j \le b_n\) are respectively \(\varphi \) or \(\rho \)-mixing random variables.

Moreover, by the mean value theorem, it is easy to obtain that for \(r\ge 2\)

It follows from Markov’s inequality and Eq. (2) (for \(\varphi \)-mixing random variables) or Lemma 1 (for \(\rho \)-mixing random variables) that for \(\displaystyle r> \max \bigg \{2,{5\over 2\delta }\bigg \}\), where \(\displaystyle \delta >0\), we have

By the Borel-Cantelli lemma, the assertion follows. \(\square \)

Theorem 5

Suppose that the assumptions of Theorem 2 hold. Then for any \(\displaystyle 0<\delta <\frac{1}{2}\) we have

for NA and \(\rho ^*\)-mixing random variables.

Proof

One can note that

By Taylor’s expansion we obtain for \(0<\theta <1\)

From Eq. (3) and Theorem 2 for \(\displaystyle 0<\delta <\frac{1}{2}\) it follows that

By Eq. (11) and Theorem 3 for \(\displaystyle 0<\delta <\frac{1}{2}\) we get that with probability 1,

which gives that \( f( Q_p)(E_1-Q_p)+F_n(Q_{p})-p = O(n^{-\frac{1}{2}+\delta }),\) when \(n\rightarrow \infty . \) Hence, we get Eq. (10). \(\square \)

Theorem 6

Suppose that the assumptions of Theorem 2 hold. Then for any \(\displaystyle 0<\delta <\frac{1}{4}\) we get

for \(\varphi \) and \(\rho \)-mixing random variables.

Proof

Analogously to the proof of Theorem 5, using Eq. (11) and Theorem 4 for \(\displaystyle 0<\delta <\frac{1}{4}\), we get that with probability 1,

Hence, we get Eq. (12). \(\square \)
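The quantity \(f(Q_p)(E_1-Q_p)+F_n(Q_p)-p\) controlled in the proofs above can be explored numerically for a dependent sample. The Python sketch below assumes a Gaussian MA(1) process \(X_n=\epsilon _n+\theta \epsilon _{n-1}\) with \(\theta =0.5\). This process is 1-dependent, and hence \(\varphi \)-mixing as in Example 2, and its marginal law is \(N(0,1+\theta ^2)\). The sketch computes \(E_1\) and this remainder for growing n. The parameter values, seed and function names are illustrative choices, and the simulation is not part of the paper's argument.

```python
import math
import random
from bisect import bisect_right
from statistics import NormalDist

def e1(xs_sorted, p):
    """E_1, the weighted average at X_(floor(np+0.5)); needs 0.5 <= np <= n - 0.5."""
    n = len(xs_sorted)
    k = math.floor(n * p + 0.5)
    w = n * p + 0.5 - k                  # weight on X_(k+1)
    value = (1 - w) * xs_sorted[k - 1]   # X_(k) in 0-based indexing
    if w > 0:
        value += w * xs_sorted[k]
    return value

def ma1_remainder(n, p, theta=0.5, seed=0):
    """Return f(Q_p)(E_1 - Q_p) + F_n(Q_p) - p for a Gaussian MA(1) sample."""
    rng = random.Random(seed)
    eps = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]
    xs = sorted(eps[t + 1] + theta * eps[t] for t in range(n))
    marginal = NormalDist(0.0, math.sqrt(1 + theta ** 2))
    q_p = marginal.inv_cdf(p)
    f_q = marginal.pdf(q_p)
    F_n_q = bisect_right(xs, q_p) / n    # empirical CDF at Q_p
    return f_q * (e1(xs, p) - q_p) + F_n_q - p

for n in (1_000, 10_000, 100_000):
    print(n, ma1_remainder(n, 0.25))
```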

Remark 6

The calculations for the estimators \(E_2\), \(E_4, E_5,E_6,E_7\) and \(E_8\) proceed analogously, using the condition \(F_n( E_i) = p+O(n^{-1}),\) where \(i=2,4,5,6,7,8\). Assuming that \(F_n( E_3) \le p\), one can obtain analogous results for the estimator \(E_3\).

References

Babu GJ, Singh K (1978) On deviations between empirical and quantile processes for mixing random variables. J Multivar Anal 8:532–549. https://doi.org/10.1016/0047-259X(78)90031-3

Bahadur RR (1966) A note on quantiles in large samples. Ann Math Stat 37:577–580. https://doi.org/10.1214/aoms/1177699450

Billingsley P (1968) Convergence of probability measures. Wiley, New York

Bradley RC (1992) On the spectral density and asymptotic normality of weakly dependent random fields. J Theor Probab 5:355–373. https://doi.org/10.1007/BF01046741

Bryc W, Smolenski W (1993) Moment conditions for almost sure convergence of weakly correlated random variables. Proc Am Math Soc 119:629–635. https://doi.org/10.2307/2159950

Chen PY, Hu TC, Volodin A (2009) Limiting behavior of moving average process under \(\varphi \)-mixing assumption. Stat Probab Lett 79:105–111. https://doi.org/10.1016/j.spl.2008.07.026

Dielman T, Lowry C, Pfaffenberger R (1994) A comparison of quantile estimators. Commun Stat Simul Comput 23:355–371. https://doi.org/10.1080/03610919408813175

Dudek D, Kuczmaszewska A (2022) On the Bahadur representation of quantiles for a sample from \(\rho ^*\)-mixing structure population. Adv Sci Technol Res J 16:316–330. https://doi.org/10.12913/22998624/150480

Galton F (1889) Natural inheritance. Macmillan, New York

Joag-Dev K, Proschan F (1983) Negative association of random variables with applications. Ann Stat 11:286–295. https://doi.org/10.1214/aos/1176346079

Kolmogorov AN, Rozanov YA (1960) On strong mixing conditions for stationary Gaussian processes. Theor Probab Appl 5:204–208. https://doi.org/10.1137/1105018

Li X, Yang W, Hu S, Wang X (2011) The Bahadur representation for sample quantile under NOD sequence. J Nonparam Stat 23:59–65. https://doi.org/10.1080/10485252.2010.486033

Liang HY, Jing BY (2005) Asymptotic properties for estimates of nonparametric regression models based on negatively associated sequences. J Multivar Anal 95:227–245. https://doi.org/10.1016/j.jmva.2004.06.004

Liang HY, Mammitzsch V, Steinebach J (2006) On a semiparametric regression model whose errors form a linear process with negatively associated innovations. Statistics 40:207–226. https://doi.org/10.1080/02331880600688163

Ling NX (2008) The Bahadur representation for sample quantiles under negatively associated sequences. Stat Probab Lett 78:2660–2663. https://doi.org/10.1016/j.spl.2008.03.026

Liu T, Zhang Z, Hu S, Yang W (2014) The Berry Esseen bound of sample quantiles for NA sequences. J Inequal Appl. https://doi.org/10.1186/1029-242X-2014-79

Peligrad M (1987) On the central limit theorem for \(\rho \)-mixing sequences of random variables. Ann Probab 15:1387–1394. https://doi.org/10.1214/aop/1176991983

Peligrad M, Gut A (1999) Almost-sure results for a class of dependent random variables. J Theor Probab 12:87–104. https://doi.org/10.1023/A:1021744626773

Rozanov YA, Volkonski VA (1959) Some limit theorems for random function. Theor Probab Appl 4:186–207. https://doi.org/10.1137/1104015

Sen PK (1972) On the Bahadur representation of sample quantiles for sequences of \(\varphi \)-mixing random variables. J Multivar Anal 2:77–95. https://doi.org/10.1016/0047-259X(72)90011-5

Shao QM (1995) Maximal inequalities for partial sums of \(\rho \)-mixing sequences. Ann Probab 23:948–965. https://doi.org/10.1214/aop/1176988297

Shao QM, Su C (1999) The law of the iterated logarithm for negatively associated random variables. Stoch Process Appl 83:139–148. https://doi.org/10.1016/S0304-4149(99)00026-5

Sun SX (2006) The Bahadur representation for sample quantiles under weak dependence. Stat Probab Lett 76:1238–1244. https://doi.org/10.1016/j.spl.2005.12.021

Sung SH (2010) Complete convergence for weighted sums of \(\rho ^*\)-mixing random variables. Discrete Dyn Nat Soc. https://doi.org/10.1155/2010/630608

Utev SA (1990) On the central limit theorem for \(\varphi \)-mixing arrays of random variables. Theor Probab Appl 35:131–139. https://doi.org/10.1137/1135013

Utev S, Peligrad M (2003) Maximal inequalities and an invariance principle for a class of weakly dependent random variables. J Theor Probab 16:101–115. https://doi.org/10.1023/A:1022278404634

Wang XJ, Hu SH, Yang WZ (2011) The Bahadur representation for sample quantiles under strongly mixing sequence. J Stat Plan Inference 141:655–662. https://doi.org/10.1016/j.jspi.2010.07.008

Wang X, Wu Y, Hu S (2019) The Berry-Esseen bounds of the weighted estimator in a nonparametric regression model. Ann Inst Stat Math 71:1143–1162. https://doi.org/10.1007/s10463-018-0677-6

Wu Y, Yu W, Wang X (2021) The Bahadur representation of sample quantiles for \(\varphi \)-mixing random variables and its application. Statistics 55:426–444. https://doi.org/10.1080/02331888.2021.1923713

Xing G, Yang S (2011) A remark on the Bahadur representation of sample quantiles for negatively associated sequences. J Korean Stat Soc 40:277–280. https://doi.org/10.1016/j.jkss.2010.10.006

Xing G, Yang S (2019) On the Bahadur representation of sample quantiles for \(\psi \)-mixing sequences and its application. Commun Stat Theor Methods 48:1060–1072. https://doi.org/10.1080/03610926.2018.1423696

Xu SF, Ge L, Miao Y (2013) On the Bahadur representation of sample quantiles and order statistics for NA sequences. J Korean Stat Soc 42:1–7. https://doi.org/10.1016/j.jkss.2012.04.003

Yang SC (2003) Uniformly asymptotic normality of regression weighted estimator for negatively associated sample. Stat Probab Lett 62:101–110. https://doi.org/10.1016/S0167-7152(02)00427-3

Yang WZ, Wang XH, Li XQ (2012) Berry-Esseen bound of sample quantiles for \(\varphi \)-mixing random variables. J Math Anal Appl 388:451–462. https://doi.org/10.1016/j.jmaa.2011.10.058

Yang W, Hu S, Wang X (2019) The Bahadur representation for sample quantiles under dependent sequence. Acta Math Appl Sin 35:521–531. https://doi.org/10.1007/s10255-019-0827-5

Yoshihara K (1995) The Bahadur representation of sample quantile for sequences of strongly mixing random variables. Stat Probab Lett 24:299–305. https://doi.org/10.1016/0167-7152(94)00187-D

Zhang Q, Yang W, Hu S (2014) On Bahadur representation for sample quantiles under \(\alpha \)-mixing sequence. Stat Papers 55:285–299. https://doi.org/10.1007/s00362-012-0472-z

Acknowledgements

The authors would like to thank the editor and referees for their valuable comments.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dudek, D., Kuczmaszewska, A. Some practical and theoretical issues related to the quantile estimators. Stat Papers 65, 3917–3933 (2024). https://doi.org/10.1007/s00362-024-01543-3

DOI: https://doi.org/10.1007/s00362-024-01543-3