Abstract

A physical rattleback is a toy that can exhibit counter-intuitive behavior when spun on a horizontal plate. Most notably, it can spontaneously reverse its direction of rotation. Using a standard mathematical model of the rattleback, we prove the existence of reversing motion, reversing motion combined with rolling, and orbits that exhibit such behavior repeatedly.

1 Introduction

We consider the energy-conserving motion of a solid three-dimensional ellipsoid that is in no-slip contact with a fixed horizontal plate and subject to a vertical gravitational force. If the solid is homogeneous, then the axes of inertia agree with the geometric axes of the ellipsoid. In this case, the equations of motion can be solved explicitly (Chaplygin 1897). More interesting behavior is observed when the axes of inertia are rotated by a nonzero angle \(\delta \) about the geometric axis that corresponds to the smallest diameter of the ellipsoid (Walker 1895). This is a standard model for the so-called rattleback or Celtic stone, where \(\delta \) is usually chosen close to zero (Walker 1896; Bondi 1986). Like the homogeneous ellipsoid, it admits a rotating motion with constant angular velocity about the vertical axis, if this axis corresponds to the smallest diameter of the body. But if this angular velocity (lies within a certain range and) gets perturbed in a non-vertical direction, then the rotation is observed to gradually slow down and eventually reverse direction. The reversal is accompanied by a rattling motion, whence the name rattleback. Some videos of such reversing orbits are posted at (https://web.ma.utexas.edu/users/koch/papers/rback/).

This behavior is somewhat counter-intuitive and appears to violate conservation of angular momentum. But angular momentum can be exchanged with the plate; and if the center of gravity is not vertically above the contact point, then the resulting torque can slow down rotation and even reverse its direction. (There is a preferred direction that is related to the sign of \(\delta \).) For a more detailed description of the rattleback reversal, the underlying physics, modeling of contact forces, numerical experiments, and more, we refer to Lindberg and Longman (1983), Garcia and Hubbard (1988), Borisov and Mamaev (2003), Gonchenko et al. (2005), Borisov et al. (2006), Borisov et al. (2012), Franti (2013), Awrejcewicz and Kudra (2019) and references therein.

Similar behavior is observed, both in physical models and numerical experiments, for rattlebacks whose bodies are cut-off elliptic paraboloids. But to our knowledge, there are no rigorous results in either case that establish the existence of reversing orbits. In this paper, we prove the existence of such orbits, including orbits that are periodic and thus reverse infinitely often. Our presentation is self-contained and can serve as an introduction to the rattleback model for a mathematically oriented reader.

To be more specific, let us first introduce the equation of motion. The position of a rigid body in \({\mathbb {R}}^3\) can be described by specifying its center of mass G and an orthonormal \(3\times 3\) matrix Q representing a rotation about G. The unit vector \({\varvec{e}}_3=[0\;0\;1]^{\scriptscriptstyle \top }\) will be referred to as the vertical direction.

Here, and in what follows, \(A^{\scriptscriptstyle \top }\) denotes the transpose of a matrix A. The corresponding vertical direction in the body-fixed frame is the third column \({\varvec{\gamma }}=Q{\varvec{e}}_3\) of the matrix Q. Consider now a body that is moving as a function of time t, and denote by \({d\over dt}{\varvec{x}}\) or \(\dot{\varvec{x}}\) the time-derivative of a vector-valued function \({\varvec{x}}\). Then, \({d\over dt}Q^{\scriptscriptstyle \top }{\varvec{x}}=Q^{\scriptscriptstyle \top }{\varvec{x}}'\), where \({\varvec{x}}'=\dot{\varvec{x}}-{\varvec{x}}\times {\varvec{\omega }}\). Here, \({\varvec{\omega }}\) is the angular velocity, and \({\varvec{a}}\times {\varvec{b}}\) denotes the cross-product of two vectors \({\varvec{a}}\) and \({\varvec{b}}\) in \({\mathbb {R}}^3\).

In the case of the rattleback with mass m, a vertical gravitational force \(-mg{\varvec{\gamma }}\) acts at the center of mass G, where g is a gravitational acceleration. Suppose that the body stays in contact with a fixed horizontal plate and satisfies a no-slip condition \({\varvec{v}}={\varvec{r}}\times {\varvec{\omega }}\). Here, \({\varvec{v}}\) is the velocity of G and \({\varvec{r}}\) denotes the vector from G to the point of contact C. Assuming conservation of momentum, we have \(m{\varvec{v}}'={\varvec{f}}-mg{\varvec{\gamma }}\), where \({\varvec{f}}\) is the force exerted on the body at C. Assuming conservation of angular momentum as well, we have \(({\mathbb {I}}\,{\varvec{\omega }})'={\varvec{r}}\times {\varvec{f}}\), where \({\mathbb {I}}\) is the inertia tensor about G.

Notice that \({\varvec{r}}\times {\varvec{f}}=m{\varvec{r}}\times {\varvec{v}}'+mg{\varvec{r}}\times {\varvec{\gamma }}\) due to momentum conservation. Substituting this expression into the equation \(({\mathbb {I}}\,{\varvec{\omega }})'={\varvec{r}}\times {\varvec{f}}\), we end up with the equation of motion

\[({\mathbb {I}}\,{\varvec{\omega }})'=m{\varvec{r}}\times {\varvec{v}}'+mg{\varvec{r}}\times {\varvec{\gamma }}. \tag{1.1}\]

In addition, we have \(\dot{\varvec{\gamma }}={\varvec{\gamma }}\times {\varvec{\omega }}\), due to the fact that \({\varvec{\gamma }}'=\mathbf{{0}}\). The dynamic variables here are \({\varvec{\gamma }}\) and \({\varvec{\omega }}\). For the velocity \({\varvec{v}}\), we can substitute \({\varvec{r}}\times {\varvec{\omega }}\), and the vector \({\varvec{r}}\) can be expressed in terms of \({\varvec{\gamma }}\) by using the geometry of the body.

In this paper, we consider the body to be an ellipsoid in \({\mathbb {R}}^3\) with principal semi-axes \(b_1>b_2>b_3>0\). Consider the \(3\times 3\) matrix \(B=\,\mathrm{diag}(b_1,b_2,b_3)\). Then, the equation for the surface of the body and the tangency condition at the point of contact C are given by

Using these equations, one easily finds that

The inertia tensor \({\mathbb {I}}\) is assumed to be a symmetric strictly positive definite \(3\times 3\) matrix. Then, \({\mathbb {I}}\) is invertible, and (1.1) together with the equation \(\dot{\varvec{\gamma }}={\varvec{\gamma }}\times {\varvec{\omega }}\) defines a flow on \({\mathbb {R}}^6\). This flow preserves the length \(\ell =\Vert {\varvec{\gamma }}\Vert \). A straightforward computation shows that another flow-invariant quantity is the total energy

The three terms on the right-hand side of this equation can be identified with the rotational kinetic energy, the translational kinetic energy, and the potential energy, respectively. We note that the no-slip condition \({\varvec{v}}={\varvec{r}}\times {\varvec{\omega }}\) is a non-holonomic constraint, so the rattleback model is not a Hamiltonian system.
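The quantities introduced so far can be evaluated numerically. The following is a minimal sketch, not the authors' code. It assumes the closed form \({\varvec{r}}=-B^2{\varvec{\gamma }}/\Vert B{\varvec{\gamma }}\Vert \), which one obtains for an ellipsoid from the surface and tangency conditions, and it assumes that the three energy terms are \({1\over 2}{\varvec{\omega }}\cdot {\mathbb {I}}\,{\varvec{\omega }}\), \({1\over 2}m\Vert {\varvec{v}}\Vert ^2\), and \(-mg\,{\varvec{r}}\cdot {\varvec{\gamma }}\). The semi-axes, mass, and inertia tensor below are hypothetical illustrative values, chosen only so that \(mb_3=4\); the value of g is the one quoted later in this section.

```python
import math

def dot(a, c):
    return sum(x * y for x, y in zip(a, c))

def cross(a, c):
    return [a[1] * c[2] - a[2] * c[1],
            a[2] * c[0] - a[0] * c[2],
            a[0] * c[1] - a[1] * c[0]]

def contact_vector(gamma, b):
    """Assumed formula r = -B^2 gamma / ||B gamma|| for semi-axes b."""
    Bg = [bi * gi for bi, gi in zip(b, gamma)]
    norm = math.sqrt(dot(Bg, Bg))
    return [-bi * bi * gi / norm for bi, gi in zip(b, gamma)]

def energy(gamma, omega, inertia, m, g, b):
    """Rotational + translational kinetic energy + potential energy (assumed form)."""
    r = contact_vector(gamma, b)
    v = cross(r, omega)                      # no-slip condition v = r x omega
    I_omega = [dot(row, omega) for row in inertia]
    return 0.5 * dot(omega, I_omega) + 0.5 * m * dot(v, v) - m * g * dot(r, gamma)

# Hypothetical parameters (NOT the values (1.9) of the paper).
b = (3.0, 2.0, 1.0)
m = 4.0
g = 40141 / 4096
inertia = [[20.0, 1.0, 0.0], [1.0, 25.0, 0.0], [0.0, 0.0, 30.0]]

# Check the surface and tangency conditions at a generic unit vector gamma.
gamma = (0.6, 0.0, 0.8)
r = contact_vector(gamma, b)
surface = sum((ri / bi) ** 2 for ri, bi in zip(r, b))
normal = [ri / bi ** 2 for ri, bi in zip(r, b)]     # proportional to grad F
tangency = cross(normal, gamma)                      # vanishes if parallel

# Energy of the body at rest on its flattest side.
E_rest = energy((0.0, 0.0, 1.0), (0.0, 0.0, 0.0), inertia, m, g, b)
```

With these illustrative values, the energy at rest equals \(mgb_3\), which is the smallest energy on the phase space.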

In what follows, we restrict to \(\Vert {\varvec{\gamma }}\Vert =1\), unless specified otherwise. Then, the phase space for our flow is \({\mathbb {S}}_2\times {\mathbb {R}}^3\), where \({\mathbb {S}}_2\) denotes the unit sphere in \({\mathbb {R}}^3\). The dimension can be reduced further from 5 to 4, if desired, by choosing an energy \(E>mgb_3\) and restricting to the fixed-energy surface

Clearly, these invariant surfaces are all compact. So in particular, every orbit returns arbitrarily close to a point that it has visited earlier. This allows for a variety of different types of motion, including periodic, quasiperiodic, and chaotic orbits. For the parameters and energies considered in this paper, orbits that look periodic are abundant. However, finding nontrivial periodic orbits turns out to be difficult, unless one focuses on reversible orbits.

An important feature of the rattleback flow is reversibility. To be more precise, let \(\Phi \) be the flow for some vector field X on \({\mathbb {R}}^n\). That is, \({d\over dt}\Phi _t=X\circ \Phi _t\) for all \(t\in {\mathbb {R}}\). Given an invertible map R on \({\mathbb {R}}^n\), we say that \(\Phi \) is R-reversible if \(R\circ \Phi _t=\Phi _{-t}\circ R\) for all times t. Reversible dynamical systems share many qualitative properties with Hamiltonian dynamical systems (Devaney 1976, 1977; Golubitsky et al. 1991; Lamb and Roberts 1998). But they need not preserve a volume. In fact, one of our results exploits the existence of stationary solutions that attract (or repel) nearby points with the same energy. Nontrivial attractors of the type seen in dissipative systems have been observed numerically in Borisov and Mamaev (2003), Gonchenko et al. (2005), Borisov et al. (2012).

A well-known consequence of R-reversibility is the following. Assume that \(\Phi \) is R-reversible, and that some orbit of \(\Phi \) includes two distinct points x and \(\Phi _\tau (x)\) that are both R-invariant. Then, the orbit is time-periodic with period \(2\tau \). The proof is one line:

\[\Phi _{2\tau }(x)=\Phi _\tau (\Phi _\tau (x))=\Phi _\tau (R\Phi _\tau (x))=R\Phi _{-\tau }(\Phi _\tau (x))=Rx=x.\]

This property will be used to construct symmetric periodic orbits for the rattleback flow.
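The periodicity argument can be illustrated on a toy system. The sketch below uses the harmonic oscillator \(\dot{q}=p\), \(\dot{p}=-q\) (not the rattleback), which is reversible for the hypothetical reflection \(R(q,p)=(q,-p)\). The flow is approximated with a classical Runge-Kutta scheme, so all identities hold only up to discretization error.

```python
import math

def field(x):
    q, p = x
    return (p, -q)

def rk4_flow(x, t, steps=2000):
    """Approximate Phi_t(x) with classical RK4; t may be negative."""
    h = t / steps
    q, p = x
    for _ in range(steps):
        k1 = field((q, p))
        k2 = field((q + 0.5 * h * k1[0], p + 0.5 * h * k1[1]))
        k3 = field((q + 0.5 * h * k2[0], p + 0.5 * h * k2[1]))
        k4 = field((q + h * k3[0], p + h * k3[1]))
        q += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        p += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return (q, p)

def R(x):
    """Reversing reflection for the toy system."""
    return (x[0], -x[1])

# Reversibility: R(Phi_t(y)) should agree with Phi_{-t}(R(y)).
y = (0.3, 0.7)
lhs = R(rk4_flow(y, 1.0))
rhs = rk4_flow(R(y), -1.0)

# x and Phi_tau(x) are both R-invariant (p = 0), so the period is 2*tau.
x = (1.0, 0.0)
tau = math.pi
half = rk4_flow(x, tau)
full = rk4_flow(x, 2 * tau, steps=4000)
```

Here `half` is approximately \((-1,0)\), which is again R-invariant, and `full` returns to the starting point.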

It is well-known that the rattleback flow is R-reversible for the reflection

\[R({\varvec{\gamma }},{\varvec{\omega }})=({\varvec{\gamma }},-{\varvec{\omega }}).\]

Here, and in what follows, we use the notation \((x_1,\ldots ,x_n)=[x_1\;\ldots \;x_n]^{\scriptscriptstyle \top }\) for vectors in \({\mathbb {R}}^n\). A rattleback with ellipsoid geometry (1.2) has another symmetry: the flow commutes with the reflection \(S_0({\varvec{\gamma }},{\varvec{\omega }})=(-{\varvec{\gamma }},{\varvec{\omega }})\). Additional symmetries exist for special choices of the inertia tensor \({\mathbb {I}}\). A standard choice in experiments is to take \({\mathbb {I}}_{13}={\mathbb {I}}_{23}=0\). Then, the system is invariant under a rotation by \(\pi \) about the vertical axis \({\varvec{e}}_3\). In what follows, we always restrict to this situation. As a consequence, the flow commutes with the reflection \( S({\varvec{\gamma }},{\varvec{\omega }}) =((-\gamma _1,-\gamma _2,\gamma _3),(-\omega _1,-\omega _2,\omega _3)) \). And it commutes with the reflection \(S'=SS_0\) as well. Given that \(S'\) commutes with R, our flows are \(RS'\)-reversible, where

\[RS'({\varvec{\gamma }},{\varvec{\omega }})=((\gamma _1,\gamma _2,-\gamma _3),(\omega _1,\omega _2,-\omega _3)).\]

As part of our investigation, we have carried out numerical simulations for various choices of the model parameters. For simplicity, we focus here on a single set of parameters, namely

and \({\mathbb {I}}_{13}={\mathbb {I}}_{23}=0\). For the gravitational acceleration we choose the value \(g={40141\over 4096}\). These parameters can be realized in a physical experiment, with the proper choice of units for length, mass, and time. (Possible units would be centimeters, decagrams, and deciseconds, respectively.) We note that the matrix \({\mathbb {I}}\) is strictly positive definite, and that the smallest possible energy of a point in \(\mathcal {M}\) is \(mgb_3={40141\over 1024}=39.2001953125\).
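The arithmetic in this paragraph can be checked exactly. The short sketch below uses rational arithmetic; it relies only on the two numbers quoted above, and the conversion factor assumes the suggested units (centimeters and deciseconds).

```python
from fractions import Fraction

g = Fraction(40141, 4096)            # gravitational acceleration from the text
min_energy = Fraction(40141, 1024)   # quoted value of m*g*b_3

# The two quoted values together determine the product m*b_3.
m_times_b3 = min_energy / g

# Decimal expansion of the minimal energy (exact, since 1024 is a power of 2).
min_energy_decimal = float(min_energy)

# One decisecond is 0.1 s, so 1 cm/ds^2 corresponds to 100 cm/s^2.
g_in_cm_per_s2 = float(g * 100)
```

So the quoted values imply \(mb_3=4\), and g corresponds to roughly 980 cm/s\(^2\) in the suggested units.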

The chosen inertia tensor is of the form \({\mathbb {I}}=\mathcal {R}^{-1}{\mathbb {I}}_0\mathcal {R}\), where \({\mathbb {I}}_0\) is roughly the inertia tensor of a homogeneous solid ellipsoid with the given mass m and semi-axes \(b_j\), and where \(\mathcal {R}\) is a rotation about the vertical axis \({\varvec{e}}_3\) by an angle \(\delta \simeq {\pi \over 20}\).

2 Main Results

A trivial solution of the rattleback equation is the stationary solution with \({\varvec{\gamma }}=(0,0,1)\) and \({\varvec{\omega }}=(0,0,\omega _3)\). If \(\omega _3\ne 0\), then this corresponds to a steady rotation about the vertical axis. As mentioned earlier, one of the peculiar features of the rattleback is observed when starting with a nearby initial condition that is not a stationary point. If \(\omega _3\) is within a certain range of values, then the rotation is observed to slow down and eventually reverse direction. In order to give a precise definition of reversal, consider the column vectors \({\varvec{\alpha }}\), \({\varvec{\beta }}\), and \({\varvec{\gamma }}\) of the rotation matrix Q, and define the angle \(\psi _0\) by the equation

When evaluated along an orbit, this “yaw-angle” \(\psi _0\) typically varies as a function of time. Denote by \(\psi :{\mathbb {R}}\rightarrow {\mathbb {R}}\) a continuous lift of \(\psi _0\) to the real line. We say that the body reverses its direction of rotation on a time interval [a, b], if there exists a time \(c\in [a,b]\) such that the differences \(\psi (c)-\psi (a)\) and \(\psi (c)-\psi (b)\) have the same sign and are bounded away from zero by some positive constant C. The largest such constant C will be referred to as the amplitude of the reversal, and the sign of \(\psi (c)-\psi (a)\) will be called the sign of the reversal.
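For sampled data, the definition of a reversal can be sketched as follows. This is an illustrative discretization, not part of the proofs: the function names are hypothetical, the lift assumes that consecutive samples differ by less than \(\pi \), and the amplitude is interpreted as the largest value of \(\min (|\psi (c)-\psi (a)|,|\psi (c)-\psi (b)|)\) over sample times c where the two differences have the same sign.

```python
import math

def lift(angles):
    """Continuous lift of angle samples that are given modulo 2*pi."""
    lifted = [angles[0]]
    for a in angles[1:]:
        # Smallest representative of the increment, in [-pi, pi).
        d = (a - lifted[-1] + math.pi) % (2 * math.pi) - math.pi
        lifted.append(lifted[-1] + d)
    return lifted

def reversal(psi):
    """Return (amplitude, sign) of a reversal on the sampled interval.

    psi[0] and psi[-1] play the roles of psi(a) and psi(b).
    Returns (0.0, 0) if no sample c gives differences of equal sign.
    """
    a, b = psi[0], psi[-1]
    best, sign = 0.0, 0
    for c in psi:
        da, db = c - a, c - b
        if da * db > 0:
            amp = min(abs(da), abs(db))
            if amp > best:
                best, sign = amp, (1 if da > 0 else -1)
    return best, sign

# Synthetic yaw angle: rises from 0 to 5 and falls back to 0 on [-10, 10].
times = [(i - 100) / 10 for i in range(201)]
true_psi = [5.0 * (1.0 - (t / 10.0) ** 2) for t in times]
wrapped = [(p + math.pi) % (2 * math.pi) - math.pi for p in true_psi]
amplitude, direction = reversal(lift(wrapped))
```

For this synthetic signal, the detected reversal has positive sign and an amplitude close to 5.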

Theorem 2.1

There exists an R-symmetric periodic orbit of period \(T=227.471\ldots \) that reverses its direction of rotation on \([-T/2,T/2]\) and on [0, T], with opposite signs and amplitudes larger than 4. The energy for this orbit is \(E=39.683\ldots \)

Our proof of this theorem is computer-assisted, in the sense that it involves estimates that have been verified (rigorously) with the aid of a computer. The same applies to the theorems stated below. The statement \(E=39.683\ldots \) in Theorem 2.1 means that \(39.683\le E<39.684\). The same notation is used for other interval enclosures. We note that our actual bounds are much more accurate.

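The orbit mentioned in Theorem 2.1 is shown in Fig. 2. To be more precise, consider the angular momentum \({\varvec{M}}={\mathbb {I}}\,{\varvec{\omega }}-m{\varvec{r}}\times {\varvec{v}}\) about the contact point C. This angular momentum has been used as primary variable (in place of \({\varvec{\omega }}\)) in several papers. A straightforward computation, using \({\varvec{v}}={\varvec{r}}\times {\varvec{\omega }}\), shows that

\[{\varvec{M}}=\bigl ({\mathbb {I}}+mK({\varvec{r}})\bigr ){\varvec{\omega }},\qquad K({\varvec{r}})=\Vert {\varvec{r}}\Vert ^2\,\mathrm{Id}-{\varvec{r}}{\varvec{r}}^{\scriptscriptstyle \top }. \tag{2.2}\]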

The matrix \({\mathbb {I}}+mK({\varvec{r}})\) is strictly positive, so (2.2) could be used to express \({\varvec{\omega }}\) in terms of \({\varvec{M}}\). We note that the reflections R, S, and \(S'\) commute with the change of variables \(({\varvec{\gamma }},{\varvec{\omega }})\mapsto ({\varvec{\gamma }},{\varvec{M}})\).

Figure 2 shows the components of \({\varvec{\gamma }}\) (left) and of \({\varvec{M}}\) (right) as functions of time t, for the orbit described in Theorem 2.1. The R-reversibility of the orbit is equivalent to the condition that \({\varvec{\gamma }}\) is an even function of t, while \({\varvec{M}}\) is an odd function of t.

Fig. 2 Components \(\gamma _j\) (left) and \(M_j\) (right) for the orbit described in Theorem 2.1

Fig. 3 Components \(\gamma _j\) (left) and \(M_j\) (right) for the first orbit described in Theorem 2.2

Remark 1

All of our results that refer to the parameters (1.9) hold for an open set of parameter values nearby. This is a consequence of nondegeneracy properties that are verified as part of our proofs.

Our next result concerns the existence of a reversing heteroclinic orbit between two stationary points of the form \(z_c=({\varvec{e}}_3,c\,{\varvec{e}}_3)\). As will be shown in the next section, there exists a value \(c_*=1.048\ldots \) such that \(z_c\) is repelling for \(c<-c_*\) and attracting for \(c>c_*\), if the flow is restricted to the surface of fixed energy \(E=\mathcal {H}(z_c)\).

Theorem 2.2

Consider the parameter values (1.9). For \(c=1.849\ldots \) there exists a heteroclinic R-reversible orbit connecting \(z_{-c}\) to \(z_c\). This orbit reverses its direction of rotation on \([-b,b]\) for large \(b>0\), and the amplitude tends to infinity as \(b\rightarrow \infty \). An analogous orbit (in fact a one-parameter family) exists for \(c=1.467\ldots \) that is RS-reversible. The energies of these two orbits are \(E=74.95\ldots \) and \(E=61.72\ldots \), respectively.

The first orbit described in this theorem is shown in Fig. 3. We note that this orbit must pass through an R-invariant point x at time \(t=0\). Since \(z_{-c}\) is repelling and \(z_c\) attracting (for fixed energy), every point that is sufficiently close to x and has the same energy as x lies on some heteroclinic orbit connecting \(z_{-c}\) to \(z_c\). We expect that there exist heteroclinic orbits between \(z_{\pm c}\) for a range of values \(c>c_*\), and it is possible that such orbits exist for some range of values \(c<c_*\) as well.

Numerical experiments are most often carried out for rattlebacks whose body is a cut-off elliptic paraboloid. If we replace \(F({\varvec{r}})=\Vert {\varvec{\rho }}\Vert ^2\) by \(F({\varvec{r}})=\rho _1^2+\rho _2^2-2\rho _3-1\), where \({\varvec{\rho }}=B^{-1}{\varvec{r}}\), then the behavior can be expected to be similar to the behavior of the ellipsoid, as long as \({\varvec{\gamma }}\) stays close to \({\varvec{e}}_3\). Among the features of the ellipsoid-shaped rattleback that cannot be studied in the cut-off paraboloid case is roll-over motion.

A possible definition of “rolling over \({\varvec{e}}_1\)” can be given in terms of the angle \(\phi _0\) defined by the equation

When evaluated along an orbit, this “roll-angle” \(\phi _0\) typically varies as a function of time. Denote by \(\phi :{\mathbb {R}}\rightarrow {\mathbb {R}}\) a continuous lift of \(\phi _0\) to the real line. We say that the body rolls over \({\varvec{e}}_1\) on a time interval [a, b], if the difference \(\phi (b)-\phi (a)\) is no less than \(\pi \) in absolute value. The sign of \(\phi (b)-\phi (a)\) will be called the direction of the roll-over.

Theorem 2.3

Consider the parameter values (1.9). There exists a periodic orbit of period \(T=254.286\ldots \) that rolls over \({\varvec{e}}_1\) on two time-intervals, once in the positive direction, and once in the negative direction. In addition, the orbit reverses its direction of rotation on \([-T/2,T/2]\) and on [0, T], with opposite signs and amplitudes larger than 24. The orbit is R-symmetric, and when translated in time by T/4, it becomes \(RS'\)-symmetric. Its energy is \(E=42.0308\ldots \) Furthermore, there exists a one-parameter family of such orbits.

The orbit described in this theorem is shown in Figs. 4 and 5.

Fig. 4 Components \(\gamma _j\) (left) and \(M_j\) (right) for the orbit described in Theorem 2.3

Fig. 5 Components \(M_j\) (left) and angles \(\psi \), \(\phi \) (right) for the orbit described in Theorem 2.3

The right part of Fig. 5 shows the lifted yaw-angle \(\psi \) and the lifted roll-angle \(\phi \). The left part shows the behavior of \({\varvec{M}}\) near \(t=0\). It illustrates that the rattleback motion exhibits many rapid variations, especially during reversals. Controlling such orbits rigorously involves rather accurate estimates. Typical error bounds in our analysis are of the order \(2^{-2000}\).

3 Some Simpler Solutions

After describing a periodic orbit that rolls over \({\varvec{e}}_1\) repeatedly in the same direction, we will discuss some stationary solutions and their stability.

Theorem 3.1

Consider the parameter values (1.9). There exists an \(RS'\)-symmetric periodic orbit of period \(T=18.061\ldots \) and energy \(E=42.99\ldots \) that rolls over \({\varvec{e}}_1\) on two adjacent time-intervals of combined length T, both times with the same direction. In fact, there exists a two-parameter family of such orbits.

The orbit described in this theorem is shown in Fig. 6. Our numerical results suggest that both the yaw-angle \(\psi \) and the roll-angle \(\phi \) are monotone for this orbit, but we did not try to prove this.

Fig. 6 Components \(\gamma _j\) (left) and \(M_j\) (right) for the first orbit described in Theorem 3.1

We note that there exist trivial roll-over orbits as well as trivial heteroclinic orbits. Consider the manifold \(\mathrm{Fix}(S')=\{({\varvec{\gamma }},{\varvec{\omega }})\in \mathcal {M}: \gamma _3=\omega _1=\omega _2=0\}\) that is invariant under the flow. At energy \(mgb_1\), we have a heteroclinic orbit in \(\mathrm{Fix}(S')\) between the points \(z_\pm =((\pm 1,0,0),\mathbf{{0}})\). For energies below \(mgb_1\), the orbits are all closed and avoid \(z_\pm \). For energies above \(mgb_1\), the orbits are closed and clearly roll over \({\varvec{e}}_2\) with the obvious definition of such a roll-over.

Next, we consider some stationary solutions. A stationary point \(x=({\varvec{\gamma }},{\varvec{\omega }})\) necessarily satisfies \({\varvec{\omega }}=\pm \Vert {\varvec{\omega }}\Vert {\varvec{\gamma }}\), since \(\dot{\varvec{\gamma }}={\varvec{\gamma }}\times {\varvec{\omega }}\) has to vanish. The stability of x is best discussed in terms of the vector field \(X:({\varvec{\gamma }},{\varvec{\omega }})\mapsto (\dot{\varvec{\gamma }},\dot{\varvec{\omega }})\). If x is invariant under R, RS, or \(RS'\), then the set of eigenvalues \(\lambda \) of DX(x) is invariant under \(\lambda \mapsto \bar{\lambda }\) and \(\lambda \mapsto -\lambda \).

The simplest stationary points are \(x_j=({\varvec{e}}_j,\mathbf{{0}})\), where \({\varvec{e}}_j\) is the unit vector parallel to the j-th coordinate axis. A straightforward computation shows that besides two eigenvalues zero (due to the conservation of \(\ell \) and \(\mathcal {H}\)), \(DX(x_1)\) has four real eigenvalues, \(DX(x_2)\) has two real and two imaginary eigenvalues, and \(DX(x_3)\) has four imaginary eigenvalues. This holds for any ellipsoid body with \(b_1>b_2>b_3>0\).

The stationary point \(x_3\) is part of a family of stationary points \(z_c=({\varvec{e}}_3,c\,{\varvec{e}}_3)\) parametrized by a real number c. The stability of these points has been investigated in several papers, including (Markeev 1983; Bondi 1986; Garcia and Hubbard 1988; Pascal 1994; Franti 2013). The consensus is that, for many choices of parameters, an analogue of the following holds.

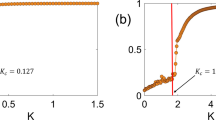

Lemma 3.2

Consider the parameter values (1.9). There exists a constant \(c_*=1.048\ldots \) such that the stationary point \(z_c\) is repelling for \(c<-c_*\), hyperbolic for \(0<|c|<c_*\), and attracting for \(c>c_*\), if the flow is restricted to the surface \(\mathcal {M}_{\scriptscriptstyle E}\) of constant energy \(E=\mathcal {H}(z_c)\).

A pencil-and-paper proof of this lemma is possible, but tedious; so we carried out the necessary (rigorous) computations with a computer. Notice that it suffices to prove the assertions for \(c>0\), since \(DX(z_{-c})=DX(Rz_c)=-DX(z_c)\) by R-reversibility. We remark that Lemma 3.2 excludes the existence of a real analytic first integral that is independent of \(\ell \) and \(\mathcal {H}\). A result on the non-existence of analytic integrals was proved also in Dullin and Tsygvintsev (2008).

Finally, let us describe two one-parameter families of \(RS'\)-invariant stationary points. We have not found them discussed in the literature.

Lemma 3.3

Consider the parameter values (1.9) and points \(z=({\varvec{\gamma }},{\varvec{\omega }})\) with \(\gamma _3=0\) and \({\varvec{\omega }}=\pm \Vert \omega \Vert {\varvec{\gamma }}\). There exists a real number \(q_*=0.025941\ldots \) such that the following holds. If \(-q_*<{\gamma _1\over \gamma _2}\le 0\), then z is a stationary point. For \(\gamma _1=0\) we have \(\Vert \omega \Vert =0\), and \(\Vert \omega \Vert \rightarrow \infty \) as \({\gamma _1\over \gamma _2}\rightarrow -q_*\) from above. Furthermore, if \(0\le {\gamma _2\over \gamma _1}<q_*\), then z is a stationary point. For \(\gamma _2=0\) we have \(\Vert \omega \Vert =0\), and \(\Vert \omega \Vert \rightarrow \infty \) as \({\gamma _2\over \gamma _1}\rightarrow q_*\) from below.

The existence of stationary points near \((\pm {\varvec{e}}_2,\mathbf{{0}})\) or \((\pm {\varvec{e}}_1,\mathbf{{0}})\) may be a known fact. What seems surprising is that the two critical solutions (corresponding to \(\Vert \omega \Vert =\infty \)) are related via a rotation by \({\pi \over 2}\). This part of Lemma 3.3 is not specific to the choice of parameter values (1.9).

The remaining parts of this paper are devoted to our proofs of the results stated in Sects. 2 and 3.

4 Integration and Poincaré Sections

The equation (1.1) determines \(\dot{\varvec{\omega }}\) as a function of \(x=({\varvec{\gamma }},{\varvec{\omega }})\). Together with the equation \(\dot{\varvec{\gamma }}={\varvec{\gamma }}\times {\varvec{\omega }}\), this defines a vector field \(X=(\dot{\varvec{\gamma }},\dot{\varvec{\omega }})\) on \({\mathbb {R}}^6\). This vector field is considered only in a small open neighborhood of \(\mathcal {M}\) in \({\mathbb {R}}^6\) where it is real analytic. The resulting flow \((t,x)\mapsto \Phi _t(x)\) is then real analytic as well.

In order to construct an orbit for a non-small time-interval [0, r], we partition this interval into m small subintervals \([\tau _{k-1},\tau _k]\) with \(\tau _0=0\) and \(\tau _m=r\). On each successive subinterval, starting with \(k=1\), we solve the initial value problem \(\dot{x}=X(x)\) with given initial conditions at time \(\tau _{k-1}\) via the integral equation

If \(\rho =\tau _k-\tau _{k-1}\) is a sufficiently small positive real number, then for \(1\le j\le 6\), the function g defined by \(g(t)=x_j(\tau _{k-1}+t)\) is given by its Taylor series about \(t=0\) and has a finite norm

This norm is convenient for computer-assisted proofs, since it is easy to estimate, and since the corresponding function space \(\mathcal {G}_\rho \) is a Banach algebra for the pointwise product of functions. Each function \(g\in \mathcal {G}_\rho \) extends analytically to the complex disk \(|z|<\rho \) and continuously to its boundary. In what follows, \(\rho \) is a fixed but arbitrary positive real number.
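The Banach algebra property can be illustrated on polynomials. The sketch below assumes that the norm is the weighted sum \(\sum _n|g_n|\rho ^n\) of the Taylor coefficients \(g_n\); this is a common choice with the stated properties, but the precise definition is an assumption here. The function names and sample coefficients are hypothetical.

```python
from fractions import Fraction

def norm(coeffs, rho):
    """Assumed norm: sum of |g_n| * rho^n over the Taylor coefficients."""
    return sum(abs(c) * rho ** n for n, c in enumerate(coeffs))

def product(f, g):
    """Coefficients of the pointwise product of two polynomials."""
    out = [Fraction(0)] * (len(f) + len(g) - 1)
    for i, a in enumerate(f):
        for j, b in enumerate(g):
            out[i + j] += a * b
    return out

def evaluate(coeffs, t):
    return sum(c * t ** n for n, c in enumerate(coeffs))

rho = Fraction(1, 2)
f = [Fraction(1), Fraction(-2), Fraction(3)]
g = [Fraction(1, 2), Fraction(1), Fraction(-1)]
fg = product(f, g)

# Submultiplicativity: ||f g|| <= ||f|| ||g||.
submultiplicative = norm(fg, rho) <= norm(f, rho) * norm(g, rho)

# The norm bounds the function on the disk |t| <= rho (checked at real points).
samples = [Fraction(k, 20) for k in range(-10, 11)]
bounded = all(abs(evaluate(fg, t)) <= norm(fg, rho) for t in samples)
```

Both checks use exact rational arithmetic, so no rounding is involved.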

Consider now the integral equation (4.1), with \(k=1\) to simplify the discussion. Let \(x_0(t)=x(0)\). Since the vector field X defines an analytic function on some open neighborhood of x(0), the equation (4.1) can be solved order by order by iterating the transformation \(\mathcal {K}\) given by

starting with \(x=x_0\). That is, the Taylor polynomial \(x_n\) of order n for \(\mathcal {K}^n(x_0)\) agrees with the Taylor polynomial of order n for x.
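The order-by-order property can be seen on a scalar toy problem. The sketch below iterates the integral transformation \(x\mapsto x(0)+\int _0^tX(x(s))\,ds\) on truncated Taylor polynomials for the hypothetical vector field \(X(x)=x^2\) with \(x(0)=1\), whose exact solution \(1/(1-t)\) has all Taylor coefficients equal to 1. It illustrates the mechanism only and involves no error bounds.

```python
from fractions import Fraction

def multiply(f, g, d):
    """Product of two polynomials, truncated at degree d."""
    out = [Fraction(0)] * (d + 1)
    for i, a in enumerate(f):
        for j, b in enumerate(g):
            if i + j <= d:
                out[i + j] += a * b
    return out

def antiderivative(f, d):
    """Antiderivative vanishing at t = 0, truncated at degree d."""
    out = [Fraction(0)] * (d + 1)
    for n, a in enumerate(f):
        if n + 1 <= d:
            out[n + 1] = a / (n + 1)
    return out

def picard_step(x, x0, d):
    """One application of K for the toy vector field X(x) = x^2."""
    out = antiderivative(multiply(x, x, d), d)
    out[0] += x0
    return out

d = 8
x0 = Fraction(1)
x = [x0] + [Fraction(0)] * d      # constant initial guess
history = []
for n in range(1, d + 1):
    x = picard_step(x, x0, d)
    history.append(list(x))
```

After n iterations, the coefficients up to order n are exact; here they are all equal to 1.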

This is of course the well-known Taylor integration method. In order to estimate the higher-order correction \(x-x_d\) for some large degree d, we use a norm on \(\mathcal {G}_\rho ^6\) of the form

with appropriately chosen weights \(w_j>0\). A common approach is to apply the contraction mapping theorem on a ball centered at \(x_d\). Instead, we use Theorem 5.1 in Arioli and Koch (2015), which only requires that some closed higher-order set gets mapped into itself. In our programs, the radius \(\rho \) and the weights \(w_j\) are chosen adaptively, depending on properties of \(X(x_d)\).

Computing \(\mathcal {K}(x)\) from the Taylor series for x involves only a few basic operations like sums, products, antiderivatives, multiplicative inverses, and square roots. This is done by decomposing each function g involved into a Taylor polynomial \(g_d\) of some (large) degree d and a higher-order remainder \(g-g_d\). For sums, products, and antiderivatives of functions in \(\mathcal {G}_\rho \), it is trivial to estimate the higher-order remainder of the result.

Consider now the multiplicative inverse \(1+h\) of a function \(1+g\), where

The following is straightforward to prove.

Proposition 4.1

Let \(g\in \mathcal {G}_\rho \) with \(\Vert g\Vert <1\). Then, \(h=(1+g)^{-1}-1\) belongs to \(\mathcal {G}_\rho \). The Taylor coefficients \(c_n\) of h are given recursively by

and

We note that the same holds if g takes values in some commutative Banach algebra \(\mathcal {X}\) with unit. Then, the Taylor coefficients \(b_n\) and \(c_n\) in (4.5) are vectors in \(\mathcal {X}\). This fact is used when estimating the flow for initial points that depend on parameters.
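A recursion of this type can be sketched as follows. The formula used in the code is derived here directly from \((1+g)(1+h)=1\), writing \(g=\sum _{n\ge 0}b_nt^n\) and \(h=\sum _{n\ge 0}c_nt^n\); it need not be written in the same form as the recursion in Proposition 4.1, and the function name is hypothetical.

```python
from fractions import Fraction

def inverse_minus_one(b, d):
    """Taylor coefficients c_0..c_d of h = (1 + g)^{-1} - 1.

    Comparing coefficients in h + g + g*h = 0 gives
    (1 + b_0) * c_n = -b_n - sum_{k=1}^{n} b_k * c_{n-k}.
    Requires 1 + b_0 != 0.
    """
    c = []
    for n in range(d + 1):
        b_n = b[n] if n < len(b) else Fraction(0)
        s = sum(b[k] * c[n - k] for k in range(1, min(n, len(b) - 1) + 1))
        c.append(-(b_n + s) / (1 + b[0]))
    return c

# g(t) = t: 1/(1 + t) - 1 = -t + t^2 - t^3 + ...
h1 = inverse_minus_one([Fraction(0), Fraction(1)], 4)

# g(t) = 1 + t: 1/(2 + t) - 1 = -1/2 - t/4 + t^2/8 - ...
h2 = inverse_minus_one([Fraction(1), Fraction(1)], 3)
```

The same loop works with coefficients in any commutative algebra where \(1+b_0\) is invertible, if the division is replaced by multiplication with the inverse.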

Next, consider the (principal branch of the) square root of a function \(1+g\).

Proposition 4.2

Let \(g\in \mathcal {G}_\rho \) with \(\Vert g\Vert <{1\over 2}\). Then \(h=(1+g)^{1/2}-1\) belongs to \(\mathcal {G}_\rho \). The Taylor coefficients \(c_n\) of h are given recursively by

Furthermore,

provided that the norm on the right-hand side of this inequality does not exceed \({1\over 4}\).

Proof

We will use that

Verifying this identity and (4.8) is straightforward.

Let now \(n\ge 2\). Using the power series for \(z\mapsto (1+z)^{1/2}-1\), and the fact that \(\bigl |{1/2\atopwithdelims ()k}\bigr |\le {1\over 8}\) for \(k\ge 2\), one easily finds that \(\Vert h_n\Vert \le {5\over 8}\Vert g\Vert \). This in turn yields a bound \(\bigr \Vert (1+h_n)^{-1}\bigr \Vert \le {8\over 5}\). So from (4.10), we find that \(\delta ={4\over 5}\Vert h-h_n\Vert \) satisfies

Assuming that \(\varepsilon \le {1\over 4}\), this implies the bound (4.9). \(\square \)
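The coefficient recursion for the square root can be sketched in the same way. The code below derives the recursion from \((1+h)^2=1+g\) and, to keep the arithmetic rational, assumes \(b_0=0\) so that \(c_0=0\); this restriction and the function name are choices made for this illustration, not part of Proposition 4.2.

```python
from fractions import Fraction

def sqrt_minus_one(b, d):
    """Taylor coefficients c_0..c_d of h = (1 + g)^{1/2} - 1, assuming b_0 = 0.

    Comparing coefficients in 2*h + h*h = g, with c_0 = 0, gives
    2 * c_n = b_n - sum_{k=1}^{n-1} c_k * c_{n-k}   for n >= 1.
    """
    if b[0] != 0:
        raise ValueError("this sketch assumes b_0 = 0")
    c = [Fraction(0)]
    for n in range(1, d + 1):
        b_n = b[n] if n < len(b) else Fraction(0)
        s = sum(c[k] * c[n - k] for k in range(1, n))
        c.append((b_n - s) / 2)
    return c

# g(t) = t: the coefficients are the binomial coefficients (1/2 choose k).
h = sqrt_minus_one([Fraction(0), Fraction(1)], 4)

# Bound used in the proof: |(1/2 choose k)| <= 1/8 for k >= 2.
binomial_bound = all(abs(ck) <= Fraction(1, 8) for ck in h[2:])
```

For \(g(t)=t\) this reproduces the expansion \(\sqrt{1+t}-1={1\over 2}t-{1\over 8}t^2+{1\over 16}t^3-{5\over 128}t^4+\cdots \).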

Next, we consider the problem of constructing reversible orbits for the given flow. In what follows, we will use \({\varvec{M}}\) as a primary variable instead of \({\varvec{\omega }}\). The equation of motion in the variables \(({\varvec{\gamma }},{\varvec{M}})\) is given by

Here, \({\varvec{r}}\) and \(\dot{\varvec{r}}\) are obtained from (1.3), while \({\varvec{\omega }}\) is determined from \({\varvec{r}}\) and \({\varvec{M}}\) via (2.2). In order to simplify notation, we will now write \(x=({\varvec{\gamma }},{\varvec{M}})\) and \(X=(\dot{\varvec{\gamma }},\dot{\varvec{M}})\). Recall that R and \(RS'\) commute with the change of variables \(({\varvec{\gamma }},{\varvec{\omega }})\mapsto ({\varvec{\gamma }},{\varvec{M}})\).

For the construction of periodic orbits, it is convenient to consider return maps to some codimension 1 surface \(\Sigma \). The surfaces used in our analysis are

for \(j=1\) or \(j=3\). Since we are exploiting reversibility, only half-orbits or quarter-orbits need to be considered. Each partial orbit starts at some symmetric (meaning R-invariant or \(RS'\)-invariant) point. The goal is to determine such a point x, as well as a positive time \(\tau =\tau (x)\), such that \(\Phi _\tau (x)\) is again symmetric. To this end, we first determine a symmetric numerical approximation \(\bar{x}\) for x and an approximation \(\bar{\tau }\) for \(\tau \). After choosing a real number \(\tau '\) slightly smaller than \(\bar{\tau }\), the associated Poincaré map \(\mathcal {P}\) is then defined by setting

for all (symmetric) starting points x in some neighborhood of \(\bar{x}\).
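The role of the time \(\tau '\) can be illustrated with a toy Poincaré map. The sketch below uses the pendulum \(\dot{q}=p\), \(\dot{p}=-\sin q\) with the hypothetical section \(\{p=0\}\) and the reflection \((q,p)\mapsto (q,-p)\). It assumes that the Poincaré map sends x to the first intersection of its orbit with the section after time \(\tau '\); the integrator is a non-rigorous Runge-Kutta scheme.

```python
import math

def field(x):
    q, p = x
    return (p, -math.sin(q))

def rk4_step(x, h):
    def shifted(k, s):
        return (x[0] + s * k[0], x[1] + s * k[1])
    k1 = field(x)
    k2 = field(shifted(k1, 0.5 * h))
    k3 = field(shifted(k2, 0.5 * h))
    k4 = field(shifted(k3, h))
    return (x[0] + h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6,
            x[1] + h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6)

def flow(x, t, h=1e-3):
    n = max(1, math.ceil(abs(t) / h))
    for _ in range(n):
        x = rk4_step(x, t / n)
    return x

def poincare(x, tau_prime, h=1e-3):
    """Return (tau, point): first crossing of {p = 0} after time tau_prime."""
    t, y = tau_prime, flow(x, tau_prime, h)
    while True:
        z = rk4_step(y, h)
        if y[1] * z[1] <= 0:          # sign change of p within this step
            break
        t, y = t + h, z
    lo, hi = 0.0, h                   # bisection for the crossing time
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if y[1] * rk4_step(y, mid)[1] > 0:
            lo = mid
        else:
            hi = mid
    s = 0.5 * (lo + hi)
    return t + s, rk4_step(y, s)

x = (1.0, 0.0)                        # symmetric starting point (p = 0)
tau, image = poincare(x, tau_prime=3.0)
back = flow(x, 2 * tau)               # should return to x by reversibility
```

Choosing \(\tau '\) slightly below the expected return time skips earlier intersections with the section and keeps the search for the crossing short.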

Consider now the problem of constructing the orbit described in Theorem 2.1. The starting point at time \(t=0\) is R-invariant and thus of the form \(x=({\varvec{\gamma }},\mathbf{{0}})\). Restricting to \(\Vert {\varvec{\gamma }}\Vert =1\), the possible starting points are parametrized by a vector \(\gamma =(\gamma _1,\gamma _2)\) in \({\mathbb {R}}^2\) of length less than 1. For the Poincaré section, we choose \(\Sigma =\Sigma _1\). Then, \(\tilde{x}=\mathcal {P}(x)\) is of the form \(\tilde{x}=\bigl (\tilde{\varvec{\gamma }},\tilde{\varvec{M}}\bigr )\) with \(\tilde{M}_1=0\). Define \(P(\gamma )=\bigl (\tilde{M}_2,\tilde{M}_3\bigr )\).

Lemma 4.3

There exists a vector \(\bar{\varvec{\gamma }}\in {\mathbb {S}}_2\) such that the following holds. Let \(\bar{x}=(\bar{\varvec{\gamma }},\mathbf{{0}})\) and \(\tau '=113\). Then, the Poincaré map \(\mathcal {P}\) with \(\Sigma =\Sigma _1\) is well-defined and real analytic in an open neighborhood \(B_g\times B_{\scriptscriptstyle M}\) of \(\bar{x}\) in \(\mathcal {M}\). When restricted to \(B_g\), the associated mapping P is real analytic, has a nonsingular derivative, and takes the value (0, 0) at some R-invariant point. Furthermore, all orbits with starting points in \(B_g\times \{\mathbf{{0}}\}\) have Poincaré time \(\tau (x)=113.7359\ldots \), energy \(E=39.683\ldots \), and reverse as described in Theorem 2.1.

Our proof of this lemma is computer-assisted and will be described in Sect. 6. Notice that if \({\varvec{\gamma }}\in B_g\) is a solution of \(P(\gamma _1,\gamma _2)=(0,0)\), and if we set \(x=({\varvec{\gamma }},\mathbf{{0}})\), then the point \(\Phi _\tau (x)\) is R-invariant for \(\tau =\tau (x)\). Thus, as described earlier, this implies that \(\Phi _T(x)=x\) with \(T=2\tau (x)\). So Theorem 2.1 follows from Lemma 4.3.

In order to construct the orbit described in Theorem 2.3, we use a Poincaré map \(\mathcal {P}\) with \(\Sigma =\Sigma _3\). The starting point x is again R-invariant, but the desired point \(\tilde{x}=\mathcal {P}(x)\) is \(RS'\)-invariant, meaning that \(\gamma _3=M_3=0\). So the goal is to find zeros of the function P defined by \(P(\gamma )=\tilde{\gamma }_3\).

Lemma 4.4

There exists a vector \(\bar{\varvec{\gamma }}\in {\mathbb {S}}_2\) such that the following holds. Let \(\bar{x}=(\bar{\varvec{\gamma }},\mathbf{{0}})\). Then, the Poincaré map \(\mathcal {P}\) with \(\Sigma =\Sigma _3\) and \(\tau '=63\) is well-defined and real analytic in an open neighborhood \(B_g\times B_{\scriptscriptstyle M}\) of \(\bar{x}\) in \(\mathcal {M}\). When restricted to \(B_g\), the associated function P is real analytic and takes the value 0 at some \(RS'\)-invariant point. Furthermore, all orbits with starting points in \(B_g\times \{\mathbf{{0}}\}\) have Poincaré time \(\tau (x)=63.57172\ldots \), energy \(E=42.0308\ldots \) and reverse/roll-over as described in Theorem 2.3. The same holds for a two-parameter family of \(RS'\)-invariant initial points.

Our proof of this lemma will be described in Sect. 6. Notice that if \({\varvec{\gamma }}\in B_g\) is a solution of \(P(\gamma _1,\gamma _2)=0\), and if we set \(x=({\varvec{\gamma }},\mathbf{{0}})\), then the point \(\Phi _\tau (x)\) is \(RS'\)-invariant for \(\tau =\tau (x)\). Thus, by R-reversibility, the point \(x'=\Phi _{-\tau }(x)\) is \(RS'\)-invariant as well. As described earlier, this implies that \(\Phi _T(x')=x'\) with \(T=4\tau (x)\). So Theorem 2.3 follows from Lemma 4.4.

Remark 2

A lemma analogous to Lemma 4.4 holds for the orbit described in Theorem 3.1, with \(RS'\)-invariant starting points x. Choosing again \(\Sigma =\Sigma _3\) and \(P=\tilde{\gamma }_3\), the equation that needs to be solved is \(P(\gamma _1,M_1,M_2)=0\). Here, the value of \(\tau '\) used in (4.14) is \(\tau '=8.5\).

5 Stationary Points and Heteroclinic Orbits

Consider the flow on \({\mathbb {R}}^6\) in the variables \(({\varvec{\gamma }},{\varvec{\omega }})\). Clearly, \(\bar{x}=((0,0,\gamma _3),(0,0,\omega _3))\) is a stationary point for any real values \(\gamma _3\ne 0\) and \(\omega _3\). So the derivative \(D\!X(\bar{x})\) has two trivial eigenvalues 0, with eigenvectors ((0, 0, u), (0, 0, v)). The remaining eigenvalues agree with those of the \(4\times 4\) matrix \(P D\!X(\bar{x})P^{\scriptscriptstyle \top }\), where P is the \(4\times 6\) matrix defined by \(P({\varvec{\gamma }},{\varvec{\omega }})=(\gamma ,\omega )\), with \(\gamma =(\gamma _1,\gamma _2)\) and \(\omega =(\omega _1,\omega _2)\).

In what follows, we fix \(\gamma _3=1\) in the definition of \(\bar{x}\). Define two \(2\times 2\) matrices J and B by setting

where \(d=\bigl ({\mathbb {I}}_{11}+mb_3^2\bigr )\bigl ({\mathbb {I}}_{22}+mb_3^2\bigr )-{\mathbb {I}}_{12}{\mathbb {I}}_{21}\). Notice that J is the inverse of \(P[{\mathbb {I}}+K(\bar{\varvec{r}})]P^{\scriptscriptstyle \top }\). A straightforward computation shows that

where

and

Here, \(a_1=b_1^2/b_3\) and \(a_2=b_2^2/b_3\) are the principal radii of curvature of the ellipsoid at \(r_1=r_2=0\) and \(r_3=\pm b_3\),

Sketch of a proof of Lemma 3.2. Our aim is to apply the Routh–Hurwitz criterion, which is commonly used for such stability problems. It involves the coefficients \(p_0,\ldots ,p_4\) of the characteristic polynomial

and two other polynomials \(p_5\) and \(p_6\) that are constructed from the coefficients \(p_0,\ldots ,p_4\). By the Routh–Hurwitz criterion, the eigenvalues \(\lambda =\lambda (\omega _3)\) of \(\mathcal {L}(\omega _3)\) all have a negative real part if and only if \(p_n(\omega _3)>0\) for all n. For the parameter values (1.9), an explicit computation shows that \(\deg (p_n)=4-n\) for \(n\le 4\) and \(\deg (p_n)=n-2\) for \(n>4\). Furthermore, each polynomial \(p_n\) is either even or odd; and up to a factor \(d^4\), its coefficients are rationals with denominators that are powers of 2. The value of \(c_*\) mentioned in Lemma 3.2 is the positive zero of \(p_5\). The other polynomials \(p_n\) have no zeros on the positive real line, as can be seen immediately from their coefficients. The source code of our program Hurwitz that computes all these coefficients can be found in Supplementary material. \(\square \)
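As a rough illustration of the criterion, the following Python sketch checks the Routh–Hurwitz conditions for a quartic with numerical coefficients. It is not the program Hurwitz mentioned above. The function name and the quantities `d2` and `d3` (the standard Hurwitz determinants) are assumptions made for this sketch; the polynomials \(p_5\) and \(p_6\) of the text may be normalized differently.

```python
from fractions import Fraction

def quartic_is_hurwitz(a0, a1, a2, a3, a4):
    """Return True iff all roots of a0*x^4 + a1*x^3 + a2*x^2 + a3*x + a4
    have negative real part. Assumes a0 > 0. Hypothetical helper; the
    arithmetic is exact for rational input."""
    a0, a1, a2, a3, a4 = (Fraction(a) for a in (a0, a1, a2, a3, a4))
    d2 = a1 * a2 - a0 * a3          # second Hurwitz determinant
    d3 = a3 * d2 - a1 * a1 * a4     # third Hurwitz determinant
    return all(c > 0 for c in (a0, a1, a2, a3, a4, d2, d3))

# (x + 1)^4 has only the root -1, so it passes the test.
stable = quartic_is_hurwitz(1, 4, 6, 4, 1)
# (x^2 + 1)(x + 1)^2 has roots +-i on the imaginary axis, so it fails.
marginal = quartic_is_hurwitz(1, 2, 2, 2, 1)
```

In the setting of Lemma 3.2 the coefficients depend on \(\omega _3\), so each inequality becomes a sign condition on a polynomial in \(\omega _3\) rather than on a number.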

Consider now the two orbits described in Theorem 2.2. The first orbit is chosen to pass at time \(t=0\) through the point \(x=({\varvec{\gamma }},\mathbf{{0}})\) with \(\gamma _1=-43585\times 2^{-17}=-0.3325\ldots \) and \(\gamma _2=-144635\times 2^{-20}=-0.1379\ldots \). Since x is R-invariant, the orbit of x is R-symmetric. The energy of x is \(E=\mathcal {H}(x)=mgs\), with s given by (1.3). The claim is that \(\Phi _t(x)\) approaches one of the above-mentioned stationary points \(\bar{x}=({\varvec{e}}_3,\omega _3{\varvec{e}}_3)\) as \(t\rightarrow \infty \). The value of \(\omega _3>0\) is determined by the equation \(E={1\over 2}{\mathbb {I}}_{33}\omega _3^2+mgb_3\).

The second orbit mentioned in Theorem 2.2 passes at time \(t=0\) through the point \(x=({\varvec{e}}_3,(M_1,M_2,0))\) with \(M_1=-285332\times 2^{-20}=-0.2721\ldots \), and with \(M_2<0\) determined by prescribing the energy \(E=252819\times 2^{-12}=61.72\ldots \). Since x is RS-invariant, the orbit of x is RS-symmetric. Defining \(\omega _3>0\) by the equation \(E={1\over 2}{\mathbb {I}}_{33}\omega _3^2+mgb_3\), the claim is that \(\Phi _t(x)\) approaches the stationary point \(\bar{x}=({\varvec{e}}_3,\omega _3{\varvec{e}}_3)\) as \(t\rightarrow \infty \).

In both cases, the goal is to prove that there exists a time \(\tau >0\) such that \(\Phi _\tau (x)\) belongs to an open neighborhood of \(\bar{x}\) in \(\mathcal {M}_{\scriptscriptstyle E}\) that is attracted to \(\bar{x}\) under the flow. To this end, consider the map \(P_{\scriptscriptstyle E}:\mathcal {M}_E\rightarrow {\mathbb {R}}^4\) given by \(P_{\scriptscriptstyle E}(x)=Px\), where P is as defined at the beginning of this section. Then, the equation of motion on \(\mathcal {M}_{\scriptscriptstyle E}\) near the origin is conjugate via \(P_{\scriptscriptstyle E}\) to the equation

where \(Y=PX\circ P_{\scriptscriptstyle E}^{-1}\) in some open neighborhood of \(\bar{x}\) in \(\mathcal {M}_{\scriptscriptstyle E}\). The stationary point for the associated flow is \(\bar{y}=0\).

Notice that \(DY(0)=\mathcal {L}(\omega _3)\). Using Lemma 3.2, we have chosen \(\bar{x}\) in such a way that \(\omega _3>c_*\). So we know that all eigenvalues of \(\mathcal {L}(\omega _3)\) have a negative real part. We expect that all eigenvalues are simple. Then, there exists an inner product \(\langle \mathbf{.}\,,\mathbf{.}\rangle \) on \({\mathbb {R}}^4\) such that the matrix

is strictly negative definite, where \(\mathcal {L}(\omega _3)^*\) denotes the adjoint of \(\mathcal {L}(\omega _3)\) with respect to the above-mentioned inner product. Assume for now that \(\Lambda \) is strictly negative definite, meaning that \(\langle u,\Lambda u\rangle \) is negative for every nonzero vector \(u\in {\mathbb {R}}^4\). Then, the derivative

is negative, if the nonlinear part N(y) is sufficiently small compared to y.
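To see why such an inner product exists, consider the following sketch. It rests on two assumptions that go beyond the text: that \(\mathcal {L}=\mathcal {L}(\omega _3)\) is diagonalizable, say \(\mathcal {L}=VDV^{-1}\) with \(D=\mathrm{diag}(\lambda _1,\ldots ,\lambda _4)\), and that \(\Lambda \) is the symmetrized operator \(\mathcal {L}+\mathcal {L}^*\), possibly up to a positive factor. Writing \(w=V^{-1}u\), one possible choice is

\[
\langle u,v\rangle =\mathrm{Re}\sum _{j=1}^4\overline{(V^{-1}u)_j}\,(V^{-1}v)_j ,\qquad
\langle u,(\mathcal {L}+\mathcal {L}^*)u\rangle =2\langle u,\mathcal {L}u\rangle =2\sum _{j=1}^4\mathrm{Re}(\lambda _j)\,|w_j|^2<0\quad (u\ne 0).
\]

For a solution of \(\dot{y}=\mathcal {L}y+N(y)\), the same computation gives

\[
{d\over dt}\langle y,y\rangle =\langle y,(\mathcal {L}+\mathcal {L}^*)y\rangle +2\langle y,N(y)\rangle ,
\]

so the norm of y decreases as long as the second term is dominated by the first.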

Let now \(y_\tau =P\Phi _\tau (x)\). In order to prove that \(y_\tau \) is attracted to zero by the flow associated with Y, it suffices to show that \(y_\tau \) belongs to a ball \(\mathcal {B}\subset {\mathbb {R}}^4\) that is centered at the origin, with the property that \(|\langle y,N(y)\rangle |<|\langle y,\Lambda y\rangle |\) for all nonzero \(y\in \mathcal {B}\). This property is equivalent to

Lemma 5.1

Let \(y_\tau =P\Phi _\tau (x)\), with \(\tau =100\) and x as described above (either the R-invariant or the RS-invariant choice). Then, there exists an inner product \(\langle \mathbf{.}\,,\mathbf{.}\rangle \) on \({\mathbb {R}}^4\) such that \(\langle u,\Lambda u\rangle \) is negative for every nonzero \(u\in {\mathbb {R}}^4\). Moreover, there exists \(\delta >0\) such that \(y_\tau \) belongs to the ball \(\mathcal {B}=\bigl \{y\in {\mathbb {R}}^4:|\langle y,y\rangle |^{1/2}<\delta \bigr \}\), and such that the condition (5.10) holds. Furthermore, the orbit for x has the energy and reversing property described in Theorem 2.2.

Our proof of this lemma is computer-assisted and will be described in Sect. 6. Notice that \(\Phi _t(x)\rightarrow \bar{x}\) as \(t\rightarrow \infty \), since the norm of \(y(t)=P\Phi _t(x)\) tends to zero by (5.9) and (5.10). Furthermore \(S\bar{x}=\bar{x}\). So by reversibility, \(\Phi _t(x)\) converges to \(R\bar{x}=RS\bar{x}\) as \(t\rightarrow -\infty \). In other words, we have a heteroclinic orbit connecting \(R\bar{x}=RS\bar{x}=({\varvec{e}}_3,-\omega _3{\varvec{e}}_3)\) to \(\bar{x}=({\varvec{e}}_3,\omega _3{\varvec{e}}_3)\). So Theorem 2.2 follows from Lemma 5.1.

In the remaining part of this section, we give a proof of Lemma 3.3, based in part on (trivial) estimates that have been carried out with the aid of a computer (Supplementary material). These estimates are specific to the choice of parameters (1.9), but analogous estimates should work for many other choices. The remaining arguments only use that \(b_1\ne b_2\) and \({\mathbb {I}}_{13}={\mathbb {I}}_{23}=0\).

Sketch of a proof of Lemma 3.3. We consider the equation for a stationary solution \(({\varvec{\gamma }},{\varvec{\omega }})\) with the property that \(\gamma _3=\omega _3=0\). Then, \(\omega =(\omega _1,\omega _2)\) must be parallel to \(\gamma =(\gamma _1,\gamma _2)\). So \(\omega =\pm \Vert \omega \Vert \gamma \). Consider also the condition \(\dot{\varvec{M}}=\mathbf{{0}}\). From (4.12), we see that the first two components of \(\dot{\varvec{M}}\) vanish automatically. And the condition \(\dot{M}_3=0\) becomes

This condition can be written as an equation for \(r=(r_1,r_2)\) by using that \(\gamma _j=-sb_j^{-2}r_j\) and \(\omega =\pm \Vert \omega \Vert \gamma \). To be more specific, we define two functions \(\mathcal {P}\) and \(\mathcal {Q}\) by the equation

A straightforward computation shows that (5.11) reduces to

If we stay away from the zeros of \(\mathcal {Q}\), then the condition is satisfied for some value of \(\Vert \omega \Vert \) if and only if \(\mathcal {P}(r)\) and \(\mathcal {Q}(r)\) have opposite signs.

In addition to (5.13), we also have the ellipse condition \((r_1/b_1)^2+(r_2/b_2)^2=1\). So define \(p(\theta )=\mathcal {P}(r)\) and \(q(\theta )=\mathcal {Q}(r)\), using \(r_1=b_1\sin (\theta /2)\) and \(r_2=b_2\cos (\theta /2)\). Both p and q are \(2\pi \)-periodic functions, since \(\mathcal {P}\) and \(\mathcal {Q}\) are even functions of r. In the remaining part of this paragraph, we consider just the parameters (1.9). Restricting \(\theta \) to the interval \([-\pi ,\pi ]\), the sign of \(p(\theta )\) is just the sign of \(-\theta \). So it suffices to determine the sign of \(q(\theta )\). This is easily done by using interval arithmetic. By estimating q and its derivative \(q'\) on subintervals, one finds that q has exactly two zeros. Finally, using a (rigorous) Newton method, the zeros are located at values \(\theta _*=-0.242951\ldots \) and \(\theta _*'=3.13056\ldots \). For details, we refer to the source code of the program RSp_Stat in Supplementary material.
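The counting argument (a sign change at the endpoints together with a derivative of fixed sign on the subinterval) can be sketched in Python as follows. This is not the program RSp_Stat. The class `Interval`, the helper `has_exactly_one_zero`, and the toy function \(x^2-2\) standing in for q are assumptions made for illustration. Outward rounding is imitated with `math.nextafter`, which requires Python 3.9 or later.

```python
import math

class Interval:
    """Closed interval [lo, hi]; every operation rounds outward by one ulp."""
    def __init__(self, lo, hi=None):
        self.lo, self.hi = lo, (lo if hi is None else hi)

    @staticmethod
    def _wrap(x):
        return x if isinstance(x, Interval) else Interval(float(x))

    def __add__(self, other):
        o = Interval._wrap(other)
        return Interval(math.nextafter(self.lo + o.lo, -math.inf),
                        math.nextafter(self.hi + o.hi, math.inf))

    def __sub__(self, other):
        o = Interval._wrap(other)
        return Interval(math.nextafter(self.lo - o.hi, -math.inf),
                        math.nextafter(self.hi - o.lo, math.inf))

    def __mul__(self, other):
        o = Interval._wrap(other)
        p = [self.lo * o.lo, self.lo * o.hi, self.hi * o.lo, self.hi * o.hi]
        return Interval(math.nextafter(min(p), -math.inf),
                        math.nextafter(max(p), math.inf))

def has_exactly_one_zero(f, df, a, b):
    """True if the enclosures prove that f has exactly one zero in [a, b]."""
    fa, fb = f(Interval(a)), f(Interval(b))
    opposite_signs = (fa.hi < 0 < fb.lo) or (fb.hi < 0 < fa.lo)
    d = df(Interval(a, b))            # enclosure of f' on the whole interval
    monotone = d.lo > 0 or d.hi < 0
    return opposite_signs and monotone

f = lambda x: x * x - 2               # toy stand-in for q
df = lambda x: x * 2                  # its derivative
one_zero = has_exactly_one_zero(f, df, 1.0, 2.0)
```

A return value of False only means that the test is inconclusive on that interval; in practice one would then subdivide.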

Notice that \({\gamma _1\over \gamma _2}={b_2\over b_1}\tan (\theta /2)\). When computing these ratios numerically, it appears that the vector \(\gamma \) for the angle \(\theta =\theta _*\) is orthogonal to the vector \(\gamma '\) for the angle \(\theta _*'\). The following argument confirms this observation.

Consider now \(\mathcal {Q}\) as a function of \(\gamma \), say \(\mathcal {Q}(r)=Q(\gamma )\). Let \(\gamma \) be a solution of \(Q(\gamma )=0\). This property of \(\gamma \) is equivalent to \(M_2\omega _1-M_1\omega _2=0\), meaning that \(M=(M_1,M_2)\) is parallel to \(\omega \). Recall that \(M=({\mathbb {I}}+mK(r))\omega \), where \(K(r)=\Vert r\Vert ^2\mathrm{I}-rr^{\scriptscriptstyle \top }\). So \(\omega \) is an eigenvector of \({\mathbb {I}}+mK(r)\). Equivalently, \(\omega \) is an eigenvector of \(m^{-1}{\mathbb {I}}-rr^{\scriptscriptstyle \top }\). But \(\omega \) is parallel to \(\gamma \), so \(\gamma \) is an eigenvector as well. Setting \(\rho =B^{-1}r\) with \(B=\,\mathrm{diag}(b_1,b_2)\), we have

for some real number \(\lambda \). This property is equivalent to the condition \(Q(\gamma )=0\).

Using that the matrix \([\cdots ]\) in the above equation is symmetric, we also have

for some real number \(\lambda '\). Notice that \(\rho =-\Vert B\gamma \Vert ^{-1}B\gamma \). Let \(\rho '=-\Vert B\gamma '\Vert ^{-1}B\gamma '\). Then,

for some real numbers \(\mu \) and \(\nu \). Subtracting (5.16) from (5.15) yields

where c is a nonzero constant. Notice that \(\rho '{\rho '}^{\scriptscriptstyle \top }\rho '=\rho '\) and \(\rho ^{\scriptscriptstyle \top }\rho \rho ^{\scriptscriptstyle \top }=\rho ^{\scriptscriptstyle \top }\). Thus, multiplying both sides of (5.17) from the left by \(\rho ^{\scriptscriptstyle \top }B^{-1}\) yields \(0=(\lambda '-\mu )\rho ^{\scriptscriptstyle \top }B^{-2}\rho '-c\nu \rho ^{\scriptscriptstyle \top }B^{-2}\rho \). But \(\rho ^{\scriptscriptstyle \top }B^{-2}\rho '=0\) since \(\gamma ^{\scriptscriptstyle \top }\gamma '=0\). This implies that \(\nu =0\). So the equation (5.16) holds with \(\nu =0\), and this is equivalent to \(Q(\gamma ')=0\). This proves the claim in Lemma 3.3 concerning the limits with \(\Vert \omega \Vert \rightarrow \infty \). \(\square \)

6 Computer Estimates

What remains to be done is to prove Lemmas 4.3, 4.4, and 5.1. (Our proof of the lemma referred to in Remark 2 is analogous to the proof of Lemma 4.4, so we will not discuss it separately here.) The necessary estimates are carried out with the aid of a computer. This part of the proof is written in the programming language Ada [24] and can be found in Supplementary material. The following is a rough guide for the reader who wishes to check the correctness of our programs.

6.1 Enclosures and Data Types

By an enclosure for (or bound on) an element x in a space \(\mathcal {X}\) we mean a set \(X\subset \mathcal {X}\) that includes x and is representable as data on a computer. For points in \({\mathbb {R}}^n\), this could be a rectangle that contains x. Working rigorously with such enclosures is known as interval arithmetic. What we need here are enclosures for elements in Banach spaces, such as functions \(g(t)=\sum _n b_nt^n\) in the spaces \(\mathcal {G}_\rho \) described earlier. In addition, when considering orbits that depend on parameters (such as initial conditions), the coefficients \(b_n\) can be functions themselves.

In our programs, enclosures are associated with a data type. Let \(\mathcal {X}\) be a commutative real Banach algebra with unit \(\mathbf{{1}}\). Our data of type Ball are pairs \(\texttt {B}=(\texttt {B.C},\texttt {B.R})\), where B.C and B.R are representable numbers, with \(\texttt {B.R}\ge 0\). The enclosure associated with a Ball B is the ball \(\texttt {B}_\mathcal {X}=\{x\in \mathcal {X}:\Vert x-(\texttt {B.C})\mathbf{{1}}\Vert \le \texttt {B.R}\}\). For specific spaces \(\mathcal {X}\), other types of enclosures will be described below. In all cases, enclosures are closed convex subsets of \(\mathcal {X}\) that admit a canonical finite decomposition

where each \(x_n\) is a representable element in \(\mathcal {X}\), and where each B(n) is a Ball centered at \(\mathbf{{0}}\) or at \(\mathbf{{1}}\).
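For \(\mathcal {X}={\mathbb {R}}\), the idea behind the type Ball can be sketched in a few lines of Python. This is only an illustration. The actual type is defined in Ada with centers of type MPFloat and radii of type LLFloat, whereas the class below uses exact rationals, so that no rounding control is needed. The field names `c` and `r` stand in for B.C and B.R, and the method `contains` is hypothetical.

```python
from dataclasses import dataclass
from fractions import Fraction

@dataclass(frozen=True)
class Ball:
    """Enclosure {x : |x - c| <= r} of a real number, with exact rational data."""
    c: Fraction
    r: Fraction

    def __add__(self, other):
        # |(x + y) - (c1 + c2)| <= r1 + r2
        return Ball(self.c + other.c, self.r + other.r)

    def __mul__(self, other):
        # |x*y - c1*c2| <= |c1|*r2 + |c2|*r1 + r1*r2
        r = abs(self.c) * other.r + abs(other.c) * self.r + self.r * other.r
        return Ball(self.c * other.c, r)

    def contains(self, x):
        return abs(Fraction(x) - self.c) <= self.r

a = Ball(Fraction(2), Fraction(1, 2))   # encloses [3/2, 5/2]
b = Ball(Fraction(3), Fraction(1, 4))   # encloses [11/4, 13/4]
p = a * b                               # encloses every product x*y
```

The product bound is the one that holds in any commutative Banach algebra with unit, which is why the same data type can serve for spaces other than \({\mathbb {R}}\).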

Assume that \(\mathcal {X}\) carries a type of enclosures named Scalar. For vectors in \(\mathcal {X}^3\), we use a Scalar-type enclosure for each component. The corresponding data type SVector3 is simply an array(1..3) of Scalar. Our type Point defines enclosures for points \(x=({\varvec{\gamma }},{\varvec{M}})\) with \({\varvec{\gamma }},{\varvec{M}}\in \mathcal {X}^3\). But a Point P is in fact a 7-tuple P=(P.Alpha, P.Beta, P.Gamma, P.M, P.Energy, P.YawPi, P.RollPi), where the first four components are of type SVector3. The component P.Energy is a Scalar that defines an enclosure for the energy of a point, while P.YawPi and P.RollPi are integers. More specifically, \({\texttt {P.YawPi}}=(\psi -\psi _0)/\pi \) and \({\texttt {P.RollPi}}=(\phi -\phi _0)/\pi \), where \(\psi \) is the lifted yaw-angle and \(\phi \) the lifted roll-angle for points x in the enclosure given by P. The type Point is defined in the Ada package Rattleback.

Consider now a function \(g:D\rightarrow \mathcal {X}\) on a disk \(D=\{z\in {\mathbb {C}}:|z|<\rho \}\) with representable radius \(\rho >0\). Denote by \(\mathcal {G}\) the space of all such functions that admit a Taylor series representation \(g(z)=\sum _{n=0}^\infty b_nz^n\) and have a finite norm \(\Vert g\Vert =\sum _{n=0}^\infty \Vert b_n\Vert \rho ^n\). Here, \(b_n\in \mathcal {X}\) for all n. A large class of enclosures for functions in this space is determined by the type Taylor1, which is defined in the Ada package Taylors1. Since this type has been used several times before, we refer to (Arioli and Koch 2018) for a rough description and to Supplementary material for details.

Our integration method uses a much simpler type named Taylor. A Taylor P is an array(0..d) of Scalar, where d is some fixed positive integer. The associated enclosure is the set

Here, \({\texttt {P(d)}}_\mathcal {G}\) is obtained from \(S={\texttt {P(d)}}_\mathcal {X}\) by replacing each ball \({\texttt {B(n)}}_\mathcal {X}\) in the decomposition (6.1) of S by the corresponding ball \({\texttt {B(n)}}_\mathcal {G}\). The first d terms in (6.2) provide enclosures for the polynomial part \(g_{d-1}\) of a vector \(g\in \mathcal {G}\), as defined in (4.5), while the last term provides an enclosure for both the coefficient \(b_d\) and the remainder \(g-g_d\). A precise definition of the type Taylor is given in the package ObO (an abbreviation for order-by-order). For analytic curves with values in \(\mathcal {X}^3\), we use Taylor-type enclosures for each component via a type TVector3, which is simply an array(1..3) of Taylor.

Enclosures for real analytic curves \(t\mapsto x(t)\) on D are defined by the type Curve that is introduced in the package Rattleback.Flows. In our programs, a Curve C is a quadruple (C.Alpha,C.Beta,C.Gamma,C.M) whose four components are of type TVector3. These enclosures are used in our bounds on the integral operator \(\mathcal {K}\) defined by (4.3).

We note that the types Point, Taylor, and Curve depend on the choice of the Banach algebra \(\mathcal {X}\) via the type Scalar. In the case \(\mathcal {X}={\mathbb {R}}\), we instantiate the package Rattleback and others with Scalar => Ball. For our analysis of the characteristic polynomial (5.6), which depends on two parameters \(\omega _3\) and \(\lambda \), we use an instantiation of Rattleback with Scalar => TTay, where TTay defines enclosures for real analytic functions of two variables. (Hurwitz.TTay is a Taylor series in \(\lambda \) whose coefficients are Taylor series in \(\omega _3\).)

Another Banach algebra \(\mathcal {T}\) that is very useful consists of pairs \((u,u')\), where \(u\in \mathcal {X}\) and \(u'\in \mathcal {X}^n\). Addition and multiplication by scalars are as in \(\mathcal {X}^{n+1}\). The product of \((u,u')\) with \((v,v')\) is defined as \((uv,uv'+u'v)\). If one thinks of u and v as being functions of n parameters, then \(u'\) and \(v'\) transform like gradients. Enclosures for elements in \(\mathcal {T}\) use a data type Tangent that is defined in the package Tangents. They are used to obtain bounds on the derivative of Poincaré maps (by using Scalar => Tangent) without first having to determine a formula for the derivative.
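The product rule built into \(\mathcal {T}\) is the mechanism behind forward-mode automatic differentiation. A minimal Python sketch for \(\mathcal {X}={\mathbb {R}}\), using plain floats instead of enclosures, might look as follows. The class below only borrows the name Tangent from the text, and the helper `variable` is hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class Tangent:
    """Pair (u, u') with u a value and u' its gradient with respect to n parameters."""
    u: float
    du: Tuple[float, ...]

    def __add__(self, o):
        return Tangent(self.u + o.u,
                       tuple(a + b for a, b in zip(self.du, o.du)))

    def __mul__(self, o):
        # (u, u') * (v, v') = (u*v, u*v' + u'*v)
        return Tangent(self.u * o.u,
                       tuple(self.u * b + a * o.u for a, b in zip(self.du, o.du)))

def variable(value, index, n):
    """The parameter number `index` (out of n), with unit gradient."""
    return Tangent(value, tuple(1.0 if k == index else 0.0 for k in range(n)))

x = variable(2.0, 0, 2)
y = variable(3.0, 1, 2)
f = x * y + x * x      # f.u is the value, f.du the gradient (y + 2x, x) at (2, 3)
```

Any computation written in terms of `+` and `*` then yields its own gradient as a by-product, which is the sense in which no formula for the derivative needs to be determined first.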

6.2 Bounds and Procedures

The next step is to implement bounds on maps between the various spaces. By a bound on a map \(f:\mathcal {X}\rightarrow \mathcal {Y}\), we mean a function F that assigns to a set \(X\subset \mathcal {X}\) of a given type (say Xtype) a set \(Y\subset \mathcal {Y}\) of a given type (say Ytype), in such a way that \(y=f(x)\) belongs to Y whenever \(x\in X\). In Ada, such a bound F can be implemented by defining an appropriate procedure F(X: in Xtype; Y: out Ytype). In practice, the domain of F is restricted: if X does not belong to the domain of F, then F raises an Exception which causes the program to abort.
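The following Python sketch shows what such a bound can look like for the map \(x\mapsto \sqrt{x}\) on real intervals, including the restricted domain. The names `sqrt_bound` and `DomainError` are hypothetical, and the sketch assumes that `math.sqrt` is accurate to within one unit in the last place, so that stepping one float outward with `math.nextafter` (Python 3.9 or later) gives a valid enclosure.

```python
import math

class DomainError(Exception):
    """Raised when the input enclosure is not inside the domain of the bound."""

def sqrt_bound(x_lo, x_hi):
    """Return (y_lo, y_hi) such that sqrt(x) lies in [y_lo, y_hi]
    for every x in [x_lo, x_hi]."""
    if x_lo < 0 or x_hi < x_lo:
        raise DomainError("interval must satisfy 0 <= x_lo <= x_hi")
    y_lo = max(0.0, math.nextafter(math.sqrt(x_lo), -math.inf))
    y_hi = math.nextafter(math.sqrt(x_hi), math.inf)
    return y_lo, y_hi

lo, hi = sqrt_bound(4.0, 9.0)   # an interval slightly larger than [2, 3]
```

Aborting on inputs outside the domain is the safe choice in a computer-assisted proof: a result is either rigorous or absent.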

The type Ball used here is defined in the package MPFR.Floats.Balls, using centers B.C of type MPFloat and radii \(\texttt {B.R}\ge 0\) of type LLFloat. Data of type MPFloat are high-precision floating point numbers, and the elementary operations for this type are implemented by using the open source MPFR library [27]. Data of type LLFloat are standard extended floating-point numbers [26] of the type commonly handled in hardware. Both types support controlled rounding. Bounds on the basic operations for this type Ball are defined and implemented in MPFR.Floats.Balls.

The Ada package that defines a certain type also defines (usually) bounds on the basic operations that involve this type. In particular, bounds on the maps \(g\mapsto g^{-1}\) and \(g\mapsto g^{1/2}\) on \(\mathcal {G}\) are implemented by the procedures Inv and Sqrt, respectively, in the package ObO that defines the type Taylor. In the spirit of order-by-order computations, these procedures include an argument Deg for the order (degree) that needs to be processed. At the top degree, which corresponds to the last term in (6.2), the procedures Inv and Sqrt determine bounds on the higher order terms, using the estimates given in Propositions 4.1 and 4.2, respectively.
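The order-by-order idea can be illustrated with the reciprocal of a power series. The Python sketch below computes only the polynomial part, one degree per call, with exact rational coefficients; it does not bound the higher order terms, which is what Propositions 4.1 and 4.2 are used for. The function names are hypothetical and do not reproduce the interface of Inv.

```python
from fractions import Fraction

def inv_coefficient(b, h, deg):
    """Append to h the degree-`deg` Taylor coefficient of 1/g, where
    g(t) = sum_n b[n] t^n with b[0] != 0, and h already holds the
    coefficients of 1/g of degree less than deg."""
    if deg == 0:
        h.append(1 / Fraction(b[0]))
    else:
        # From g*h = 1: sum_{k=0}^{deg} b[k]*h[deg-k] = 0 for deg >= 1.
        s = sum(Fraction(b[k]) * h[deg - k] for k in range(1, deg + 1))
        h.append(-s * h[0])

def inverse_series(b):
    """Coefficients of 1/g up to the degree of the given list b."""
    h = []
    for deg in range(len(b)):
        inv_coefficient(b, h, deg)
    return h

geometric = inverse_series([1, -1, 0, 0])   # 1/(1 - t), truncated at degree 3
```

Each call uses only coefficients of lower degree that are already known, so the reciprocal can be advanced in lockstep with the series it depends on.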

Bounds involving the type Point are defined mostly in Rattleback. This includes a procedure Ham that implements a bound on the energy function \(\mathcal {H}\), and a procedure VecField that implements a bound on the vector field \(({\varvec{\gamma }},{\varvec{M}})\mapsto (\dot{\varvec{\gamma }},\dot{\varvec{M}})\). Several other procedures deal with the construction of points (initial conditions) with prescribed properties; their role is described by short comments in our programs.

The package Rattleback.Flows implements bounds on the time-t maps \(\Phi _t\) and various Poincaré maps. The first few procedures deal with the order-by-order computation of cross products and other basic operations. They maintain temporary data, so that lower order computations do not have to be repeated. And some of them can run sub-tasks in parallel, using the standard tasking facilities that are part of Ada [24]. The procedure VecField combines these computations into a bound on the vector field \(x\mapsto \dot{x}\) as maps between enclosures of the type Curve.

A bound on the solution of the integral equation \(\mathcal {K}(x)=x\) is implemented by the procedure Integrate. After the polynomial part \(x_d\) of the solution x has been determined, a bound on \(x-x_d\) is obtained by first guessing a possible enclosure \(S\subset \mathcal {G}^6\) for this function, and then checking that \(x_d+S\) is mapped into itself by the operator \(\mathcal {K}\). Using Theorem 5.1 in Arioli and Koch (2015), this guarantees that \(\mathcal {K}\) has a unique fixed point in \(x_d+S\). We note that Integrate first determines a proper value of the domain parameter \(\rho \) for the space \(\mathcal {G}=\mathcal {G}_\rho \). This defines the time-increments \(\tau _k-\tau _{k-1}\) used in (4.1).
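For the polynomial part \(x_d\), solving \(\mathcal {K}(x)=x\) amounts to matching Taylor coefficients degree by degree. The Python sketch below does this for the scalar toy equation \(\dot{x}=x^2\), which is an assumption made here and not the rattleback vector field. It also assumes that \(\mathcal {K}\) has the usual form \(\mathcal {K}(x)(t)=x(0)+\int _0^t X(x(s))\,ds\), and it does not compute any enclosure for the remainder \(x-x_d\).

```python
from fractions import Fraction

def taylor_solution(x0, degree):
    """Taylor coefficients up to the given degree for the solution of
    x' = x^2 with x(0) = x0 (toy example, exact rational arithmetic)."""
    x = [Fraction(x0)]
    for n in range(degree):
        # Degree-n coefficient of x^2, built from coefficients already known.
        square_n = sum(x[k] * x[n - k] for k in range(n + 1))
        # Integration maps the coefficient of t^n to that of t^(n+1).
        x.append(square_n / (n + 1))
    return x

coeffs = taylor_solution(1, 5)   # x(t) = 1/(1 - t)
```

For this toy equation the exact solution \(1/(1-t)\) has radius of convergence 1, which shows why a proper domain parameter \(\rho \) has to be chosen before the remainder can be controlled.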

Poincaré maps are now straightforward to implement. The type Flt_Affine specifies an affine functional \(F:\mathcal {X}^6\rightarrow \mathcal {X}\) whose zero defines a Poincaré section \(\Sigma \). To be more specific, \(F({\varvec{\gamma }},{\varvec{M}})\) only depends on \({\varvec{M}}\). Besides an argument F that specifies F, the procedure Sign_Poincare also includes an argument TNeed for the time \(\tau '\) that enters the definition (4.14). Now, Sign_Poincare uses (an instantiation of) the procedure Generic_Flow to iterate Integrate, until \(\Phi _t(x)\) with \(t\ge \tau '\) lies on \(\Sigma \). A bound on the zero of \(t\mapsto F(\Phi _t(x))\) is determined by using the Newton-based procedure ObO.FindZero. We note that t is of type Scalar, so the stopping time \(\tau =\tau (x)\) can depend on parameters, if \(\mathcal {X}\ne {\mathbb {R}}\).
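The logic of advancing the flow until the section is crossed after time \(\tau '\), and then refining the crossing time by Newton's method, can be sketched in Python. The example is non-rigorous and uses a harmonic oscillator with a closed-form flow as a stand-in. The names `flow`, `section`, and `poincare_time` are hypothetical and unrelated to the interfaces of Sign_Poincare or ObO.FindZero.

```python
import math

def flow(t):
    """Closed-form flow of a harmonic oscillator started at (1, 0)."""
    return (math.cos(t), -math.sin(t))

def section(state):
    """Functional F whose zero set is the section (here: second component)."""
    return state[1]

def poincare_time(t_need, step=0.1, max_steps=10_000, newton_steps=20, h=1e-6):
    """First time t >= t_need (up to the step size) at which the orbit
    crosses the section."""
    t = t_need
    for _ in range(max_steps):
        # Advance in fixed increments until F changes sign.
        if section(flow(t)) * section(flow(t + step)) <= 0:
            break
        t += step
    else:
        raise RuntimeError("no crossing found")
    t += step / 2
    for _ in range(newton_steps):
        f = section(flow(t))
        # Central difference approximation of d/dt F(flow(t)).
        df = (section(flow(t + h)) - section(flow(t - h))) / (2 * h)
        t -= f / df
    return t

tau = poincare_time(1.0)   # the first crossing after t = 1 is at t = pi
```

In a rigorous version, the floating-point values would be replaced by enclosures, and the Newton step would return an interval that provably contains the crossing time.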

The angles \(\psi _0\) and \(\phi _0\) are computed via their definitions (2.1) and (2.3), respectively. This involves integrating the equations \(\dot{\varvec{\alpha }}={\varvec{\alpha }}\times {\varvec{\omega }}\) and \(\dot{\varvec{\beta }}={\varvec{\beta }}\times {\varvec{\omega }}\) besides (4.12). The lifts of these angles to \({\mathbb {R}}\) are obtained by estimating their derivatives

along the flow. This is done via the procedures YawNumPi and RollNumPi, respectively, in the package Rattleback.Flows. The values of \(\psi \) and \(\phi \) at the Poincaré time \(\tau (x)\) and the intermediate times \(\tau _0,\tau _1,\ldots ,\tau _m\) are shown on the standard output. Our claims concerning reversals and roll-over can be (and have been) verified by inspecting the output of our programs.

6.3 Main Programs

Our proof of Lemma 4.3 is organized in the programs R_Der and R_Point. The initial point \(x=({\varvec{\gamma }},\mathbf{{0}})\) is determined from data of type Point that are read from a file (Supplementary material). It suffices to control the map P described before Lemma 4.3 on a square centered at \(\gamma =(\gamma _1,\gamma _2)\). The chosen square is \(2\varepsilon \times 2\varepsilon \), with \(\varepsilon =2^{-2000}\). This square also determines a domain \(B_g\subset {\mathbb {S}}_2\) via the constraint \(\Vert {\varvec{\gamma }}\Vert =1\).

After instantiating the necessary packages, the program R_Der computes an enclosure for the derivative DP on R and saves the result to a file. It also verifies that \(B_g\times B_{\scriptscriptstyle M}\) belongs to the domain of the Poincaré map for some open neighborhood \(B_{\scriptscriptstyle M}\) of the origin in \({\mathbb {R}}^3\). The program R_Point uses the above-mentioned enclosure for DP to verify that a quasi-Newton map associated with P maps R into its interior.
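The principle behind the last step can be illustrated in one dimension. If a quasi-Newton map \(N(x)=x-AP(x)\), with A an approximate inverse of the derivative, maps a closed interval into its interior, then P has a zero in that interval. The Python sketch below checks this for the toy function \(P(x)=x^2-2\) with exact rational arithmetic; the function name and the choice of P are assumptions made here and are unrelated to the program R_Point.

```python
from fractions import Fraction

def newton_maps_into_interior(lo, hi):
    """Check that N(x) = x - A*(x^2 - 2) maps [lo, hi] into (lo, hi),
    where A = 1/(2c) and c is the midpoint (assumed nonzero)."""
    lo, hi = Fraction(lo), Fraction(hi)
    c = (lo + hi) / 2
    r = (hi - lo) / 2
    a = 1 / (2 * c)                  # approximate inverse of P'(c) = 2c
    n_c = c - a * (c * c - 2)        # N(c), computed exactly
    # N'(x) = 1 - 2*a*x is monotone, so its extremes are at the endpoints.
    k = max(abs(1 - 2 * a * lo), abs(1 - 2 * a * hi))
    # Mean value theorem: |N(x) - N(c)| <= k*r for all x in [lo, hi].
    return lo < n_c - k * r and n_c + k * r < hi

ok = newton_maps_into_interior(Fraction(14, 10), Fraction(143, 100))
```

Exact rationals are used because intervals of a very small width cannot be resolved in double precision; the programs described here rely on MPFR for the same reason.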

The necessary bounds for Lemma 4.4 are verified using the program RSpR_Point. The program takes an argument Sign_DG1 with values in \(\{-1,0,1\}\). The starting point is of the form \(x=({\varvec{\gamma }},\mathbf{{0}})\), with \(\gamma _2=-125174\times 2^{-17}\). If \(\texttt {Sign}\_\texttt {DG1}=0\), then the value of \(\gamma _1\) ranges in the interval \([-\delta ,\delta ]\), where \(\delta =2^{-2500}\). To be more precise, the Point-type enclosure P0 for x is chosen to include an open subset of \(\mathcal {M}\), with \({\texttt {P0.Gamma(1)}}\) including \([-\delta ,\delta ]\). In this case, RSpR_Point merely verifies that P0 is included in the domain of the associated Poincaré map. If \({\texttt {Sign}}\_\texttt {DG1}=\pm 1\), then \(\gamma _1=\pm \delta \). In these cases, RSpR_Point computes and shows an interval containing \(\tilde{\gamma }_3=P(\gamma )\). Inspecting the output confirms that the sign of \(\tilde{\gamma }_3\) agrees with the sign of Sign_DG1. Thus, there exists a value \(\gamma _1\in [-\delta ,\delta ]\) such that \(P(\gamma _1,\gamma _2)=0\).

An additional program RSpR_Der can be used (optionally) to prove that DP is nonzero. This implies that the two-parameter family mentioned in Lemma 4.4 is real analytic.

The bounds referred to in Remark 2 are verified via the program Roll_Point. This program is analogous to RSpR_Point. And there is an analogue Roll_Der of RSpR_Der.

The bounds needed for Lemma 5.1 are organized by the programs Het, HetRS, and Basin. Both Het and HetRS run Plain_Flow for a time \(\tau =100\). The initial point x is as described in Sect. 5. Enclosures for x and \(\Phi _\tau (x)\) are saved to data files. These files are then read by the procedure Check in Basin.

An upper bound LambdaMax on the spectrum of the (negative) linear operator \(\Lambda \) defined by (5.8) is determined and shown by Basin.Show_Linear. This is done via approximate diagonalization. The matrix that diagonalizes \(\Lambda \) approximately also defines the inner product used in (5.10). Then, Basin.Show_NonLinear computes and shows an upper bound on the absolute value of the ratio \(\langle y,\vartheta ^{-1}N(\vartheta y)\rangle /\langle y,y\rangle \) for \(y\in \partial \mathcal {B}\). By construction, this bound is non-decreasing in \(\vartheta \), so it suffices to consider \(\vartheta =1\). At the end, (5.10) can be (and has been) checked by inspecting the output from Basin.

All of these programs were run successfully on a standard desktop machine, using a public version of the gcc/gnat compiler (https://www.gnu.org/software/gnat/). Instructions on how to compile and run these programs can be found in the file README that is included with the source code in Supplementary material. We note that the running times are rather long: days for some programs. This is due to the fact that our orbits are quite long, and that we need to use MPFR and rather high Taylor orders to get the accuracy needed.

Change history

21 July 2022

Open access funding note has been updated

References

A free-software compiler for the Ada programming language, which is part of the GNU Compiler Collection; see https://gnu.org/software/gnat/

Ada Reference Manual, ISO/IEC 8652:2012(E), Available e.g. at www.ada-auth.org/arm.html

Arioli, G., Koch, H.: The source code for our programs, data files, and some videos are available at https://web.ma.utexas.edu/users/koch/papers/rback/

Arioli, G., Koch, H.: Existence and stability of traveling pulse solutions of the FitzHugh–Nagumo equation. Nonlinear Anal. 113, 51–70 (2015)

Arioli, G., Koch, H.: Spectral stability for the wave equation with periodic forcing. J. Differ. Equ. 265, 2470–2501 (2018)

Awrejcewicz, J., Kudra, G.: Rolling resistance modelling in the Celtic stone dynamics. Multibody Syst. Dyn. 45, 155–167 (2019)

Bondi, H.: The rigid body dynamics of unidirectional spin. Proc. R. Soc. Lond. A 405, 265–274 (1986)

Borisov, A.V., Mamaev, I.S.: Strange attractors in rattleback dynamics. Physics Uspekhi 46, 393–403 (2003)

Borisov, A.V., Kilin, A.A., Mamaev, I.S.: New effects in dynamics of rattlebacks. Dokl. Phys. 51, 272–275 (2006)

Borisov, A.V., Jalnine, A.Y., Kuznetsov, S.P., Sataev, I.R., Sedova, J.V.: Dynamical phenomena occurring due to phase volume compression in nonholonomic model of the rattleback. Regul. Chaot. Dyn. 17, 512–532 (2012)

Chaplygin, S.A.: On motion of heavy rigid body of revolution on horizontal plane. Proc. Soc. Friends Nat. Sci. 9, 10–16 (1897)

Devaney, R.L.: Reversible diffeomorphisms and flows. Trans. AMS 218, 89–113 (1976)

Devaney, R.L.: Blue sky catastrophes in reversible and Hamiltonian systems. Indiana Univ. Math. 26, 247–263 (1977)

Dullin, H.R., Tsygvintsev, A.V.: On the analytic non-integrability of the Rattleback problem. Ann. Faculté Sci. Toulouse Math. 6(17), 495–517 (2008)

Franti, L.: On the rotational dynamics of the rattleback. Cent. Eur. J. Phys. 11, 162–172 (2013)

Garcia, A., Hubbard, M.: Spin reversal of the rattleback: theory and experiment. Proc. R. Soc. Lond. A 418, 165–197 (1988)

Golubitsky, M., Krupa, M., Lim, C.: Time-reversibility and particle sedimentation. SIAM J. Appl. Math. 51, 49–72 (1991)

Gonchenko, S.V., Ovsyannikov, I.I., Simó, C., Turaev, D.: Three-dimensional Hénon-like maps and wild Lorenz-like attractors. Int. J. Bifurc. Chaos 15, 3493–3508 (2005)

Kondo, Y., Nakanishi, H.: Rattleback dynamics and its reversal time of rotation. Phys. Rev. E 95, 062207 (2017). (11pp)

Lamb, J.S.W., Roberts, J.A.G.: Time-reversal symmetry in dynamical systems: a survey. Physica D 112, 1–39 (1998)

Lindberg, R.E., Longman, R.W.: On the dynamic behavior of the wobblestone. Acta Mech. 49, 81–94 (1983)

Markeev, A.P.: On the dynamics of a solid on an absolutely rough plane. J. Appl. Math. Mech. 47, 473–478 (1983)

Pascal, M.: Asymptotic solution of the equations of motion for a Celtic stone. PMM U.S.S.R 47, 269–276 (1994)

The Institute of Electrical and Electronics Engineers, IEEE Standard for Floating-Point Arithmetic. In: IEEE Std 754-2019, 1–84 (2019). https://doi.org/10.1109/IEEESTD.2019.8766229

The MPFR library for multiple-precision floating-point computations with correct rounding; see www.mpfr.org/

Walker, G.T.: On a curious dynamical property of celt. Proc. Camb. Philos. Soc. 8, 305–306 (1895)

Walker, G.T.: On a dynamical top. Q. J. Pure Appl. Math. 28, 175–184 (1896)

Additional information

Communicated by George Haller.

G. Arioli: Supported in part by the PRIN project “Equazioni alle derivate parziali e disuguaglianze analitico-geometriche associate”

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Arioli, G., Koch, H. Some Reversing Orbits for a Rattleback Model. J Nonlinear Sci 32, 38 (2022). https://doi.org/10.1007/s00332-022-09797-7

DOI: https://doi.org/10.1007/s00332-022-09797-7