Abstract

We analyze the harvesting and stocking of a population that is affected by random and seasonal environmental fluctuations. The main novelty comes from having three layers of environmental fluctuations. The first layer is due to the environment switching at random times between different environmental states. This is similar to having sudden environmental changes or catastrophes. The second layer is due to seasonal variation, where there is a significant change in the dynamics between seasons. Finally, the third layer is due to the constant presence of environmental stochasticity—between the seasonal or random regime switches, the species is affected by fluctuations which can be modelled by white noise. This framework is more realistic because it can capture both significant random and deterministic environmental shifts as well as small and frequent fluctuations in abiotic factors. Our framework also allows for the price or cost of harvesting to change deterministically and stochastically, something that is more realistic from an economic point of view. The combined effects of seasonal and random fluctuations make it impossible to find the optimal harvesting-stocking strategy analytically. We get around this roadblock by developing rigorous numerical approximations and proving that they converge to the optimal harvesting-stocking strategy. We apply our methods to multiple population models and explore how prices, or costs, and environmental fluctuations influence the optimal harvesting-stocking strategy. We show that in many situations the optimal way of harvesting and stocking is not of threshold type.

Similar content being viewed by others

Notes

The value function in a switching state is influenced by the value function in the other state, since there is a probability of transition.

The (price) elasticity of a demand function is a measure of the responsiveness of the quantity demanded to price changes, measured in an unit free manner, defined as \(\epsilon = \frac{du}{dp} \cdot \frac{p}{u}\), and widely used in economics. \(\epsilon = -1\) is defined as “unit elasticity."

Observe that we have not considered models with a distinction between young and old. All individuals are the same at all points in time, and have the same harvested value.

References

Abakuks A, Prajneshu (1981) An optimal harvesting policy for a logistic model in a randomly varying environment. Math Biosci 55(3–4):169–177

Alvarez LH (2000) Singular stochastic control in the presence of a state-dependent yield structure. Stochastic Processes Appl 86(2):323–343

Alvarez E LH, Hening A (2020) Optimal sustainable harvesting of populations in random environments. Stochast Processes Appl

Alvarez LHR, Koskela E (2007) Optimal harvesting under resource stock and price uncertainty. J Econ Dyn Control 31:2461–2485

Alvarez E LHR, Shepp LA (1998) Optimal harvesting of stochastically fluctuating populations. J Math Biol 37(2):155–177

Alvarez LHR, Shepp LA (1998) Optimal harvesting of stochastically fluctuating populations. J Math Biol 37:155–177

Asche F, Chen Y, Smith MD (2015) Economic incentives to target species and fish size: prices and fine-scale product attributes in norwegian fisheries. ICES J Mar Sci 72(3):733–740

Bao J, Shao J (2016) Permanence and extinction of regime-switching predator-prey models. SIAM J Math Anal 48(1):725–739

Benaïm M, Lobry C (2016) Lotka Volterra in fluctuating environment or “how switching between beneficial environments can make survival harder. Ann Appl Probab (to appear)

Bohner M, Streipert S (2016) Optimal harvesting policy for the Beverton-Holt model. Math Biosci Eng 13(4):673

Bourquin A (2021) Persistence in randomly switched Lotka–Volterra food chains. arXiv preprint arXiv:2109.03003

Brauer F, Sànchez DA (2003) Periodic environments and periodic harvesting. Nat Resour Model 16(3):233–244

Braverman E, Mamdani R (2008) Continuous versus pulse harvesting for population models in constant and variable environment. J Math Biol 57(3):413–434

Chesson P (2000) General theory of competitive coexistence in spatially-varying environments. Theor Popul Biol 58(3):211–237

Chesson PL, Ellner S (1989) Invasibility and stochastic boundedness in monotonic competition models. J Math Biol 27(2):117–138

Clark CW (2010) Mathematical bioeconomics, pure and applied mathematics (Hoboken), 3rd edn. Wiley, Hoboken. The mathematics of conservation

Cohen A, Hening A, Sun C (2022) Optimal ergodic harvesting under ambiguity. SIAM J Control Optim

Cromer T (1988) Harvesting in a seasonal environment. Math Comput Model 10(6):445–450

Cushing JM (1977) Periodic time-dependent predator-prey systems. SIAM J Appl Math 32(1):82–95

Cushing JM (1980) Two species competition in a periodic environment. J Math Biol 10(4):385–400

Du NH, Hening A, Nguyen DH, Yin G (2021) Dynamical systems under random perturbations with fast switching and slow diffusion: hyperbolic equilibria and stable limit cycles. J Differ Equ 293:313–358

Evans SN, Hening A, Schreiber SJ (2015) Protected polymorphisms and evolutionary stability of patch-selection strategies in stochastic environments. J Math Biol 71(2):325–359

Fan M, Wang K (1998) Optimal harvesting policy for single population with periodic coefficients. Math Biosci 152(2):165–178

Hening A (2021) Coexistence, extinction, and optimal harvesting in discrete-time stochastic population models. J Nonlinear Sci 31(1):1–50

Hening A, Li Y (2020) Stationary distributions of persistent ecological systems. arXiv preprint arXiv:2003.04398

Hening A, Nguyen D (2018) Coexistence and extinction for stochastic Kolmogorov systems. Ann Appl Probab 28(3):1893–1942

Hening A, Nguyen DH (2020) The competitive exclusion principle in stochastic environments. J Math Biol 80:1323–1351

Hening A, Strickler E (2019) On a predator-prey system with random switching that never converges to its equilibrium. SIAM J Math Anal 51(5):3625–3640

Hening A, Tran KQ (2020) Harvesting and seeding of stochastic populations: analysis and numerical approximation. J Math Biol 81:65–112

Hening A, Nguyen DH, Ungureanu SC, Wong TK (2019a) Asymptotic harvesting of populations in random environments. J Math Biol 78(1–2):293–329

Hening A, Tran K, Phan T, Yin G (2019b) Harvesting of interacting stochastic populations. J Math Biol 79(2):533–570

Hening A, Nguyen DH, Nguyen N, Watts H (2021) Random switching in an ecosystem with two prey and one predator. arxiv

Henson SM, Cushing JM (1997) The effect of periodic habitat fluctuations on a nonlinear insect population model. J Math Biol 36(2):201–226

Kharroubi I, Lim T, Vath VL (2019) Optimal exploitation of a resource with stochastic population dynamics and delayed renewal. J Math Anal Appl 477(1):627–656

Kushner HJ (1984) Approximation and weak convergence methods for random processes, with applications to stochastic systems theory. MIT Press, Cambridge

Kushner HJ (1990) Numerical methods for stochastic control problems in continuous time. SIAM J Control Optim 28(5):999–1048

Kushner HJ, Dupuis PG (1992) Numerical methods for stochastic control problems in continuous time. Springer, Berlin

Lungu EM, Øksendal B (1997) Optimal harvesting from a population in a stochastic crowded environment. Math Biosci 145(1):47–75

Miltersen KR (2003) Commodity price modelling that matches current observables: a new approach. Quant Finan 3(1):51–58. https://doi.org/10.1080/713666159

Nguyen DH, Yin G, Zhu C (2017) Certain properties related to well posedness of switching diffusions. Stochastic Process Appl 127(10):3135–3158

Osborne MF (1959) Brownian motion in the stock market. Oper Res 7(2):145–173

Pooley SG (1987) Demand considerations in fisheries management–Hawaii’s market for bottom fish. In: Tropical snappers and groupers: biology and fisheries management, pp 605–638

Rinaldi S, Muratori S, Kuznetsov Y (1993) Multiple attractors, catastrophes and chaos in seasonally perturbed predator-prey communities. Bull Math Biol 55(1):15–35

Schreiber SJ, Benaïm M, Atchadé KAS (2011) Persistence in fluctuating environments. J Math Biol 62(5):655–683

Song Q, Zhu C (2016) On singular control problems with state constraints and regime-switching: a viscosity solution approach. Automatica 70:66–73

Song QS, Yin G, Zhang Z (2006) Numerical methods for controlled regime-switching diffusions and regime-switching jump diffusions. Automatica 2(7):1147–1157

Song Q, Stockbridge RH, Zhu C (2011) On optimal harvesting problems in random environments. SIAM J Control Optim 49(2):859–889

Sylvia G (1994) Market information and fisheries management: a multiple-objective analysis. North Am J Fish Manag 14(2):278–290

Tran K, Yin G (2015) Optimal harvesting strategies for stochastic competitive Lotka-Volterra ecosystems. Automatica 55:236–246

Tran K, Yin G (2017) Optimal harvesting strategies for stochastic ecosystems. IET Control Theory Appl 11(15):2521–2530

White ER, Hastings A (2020) Seasonality in ecology: progress and prospects in theory. Ecol Complex 44:100867

Winsor CP (1932) The gompertz curve as a growth curve. Proc Natl Acad Sci USA 18(1):1

Xu C, Boyce MS, Daley DJ (2005) Harvesting in seasonal environments. J Math Biol 50(6):663–682

Yin GG, Zhu C (2009) Hybrid switching diffusions: properties and applications, vol 63. Springer, Berlin

Yin G, Zhang Q, Badowski G (2003) Discrete-time singularly perturbed Markov chains: aggregation, occupation measures, and switching diffusion limit. Adv Appl Probab 35:449–476

Zeide B (1993) Analysis of growth equations. For Sci 39(3):594–616

Zhu C (2011) Optimal control of the risk process in a regime-switching environment. Automatica 47:1570–1579

Zhu C, Yin G (2009) On competitive Lotka–Volterra model in random environments. J Math Anal Appl 357(1):154–170

Acknowledgements

A. Hening is supported by the NSF through the grant DMS 2147903. K. Q. Tran is supported by the National Research Foundation of Korea Grant funded by the Korea Government (MIST) NRF-2021R1F1A1062361.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Transition probabilities

1.1 A.1. The formulation from Sect. 2

We first look at the details we need for the setting from Sect. 2. With the notation defined in Sect. 2.1, let \((x, \alpha , u)\in S_h\times {\mathcal {M}}\times \mathcal {U}\) and denote by \({\mathbb E}^{h, u}_{x, \alpha , n}\), \({\mathbb Cov}^{h, u}_{x, \alpha , n}\) the conditional expectation and covariance given by

respectively. Define \(\Delta X^h_n = X^h_{n+1}-X^h_n\). Our objective in this subsection is to define transition probabilities \(q^h ((x,k), (y, l) | u)\) so that the controlled Markov chain \(\{(X^h_n, \alpha ^h_n)\}\) is locally consistent with respect to the controlled diffusion (2.3). By this we mean that the following conditions hold:

Using the procedure developed by Kushner (1990), for \((x, \alpha )\in S_h\times {\mathcal {M}}\) and \(u\in \mathcal {U}\), we define

where for a real number r, \(r^+=\max \{r, 0\}\), \(r^-=-\min \{0, r\}\). Set \(q^h \left( (x, k), (y, l)|u\right) =0\) for all unlisted values of \((y, l)\in S_h\times {\mathcal {M}}\). Note that \( \sup _{x, k, u} \Delta t^h (x, k, u)\rightarrow 0\) as \(h\rightarrow 0\). Using the above transition probabilities, we can check that the locally consistent conditions of \(\{(X^h_n, \alpha ^h_n)\}\) are satisfied.

Lemma A.1

The Markov chain \(\{(X^h_n, \alpha ^h_n)\}\) with transition probabilities \(\{q^h(\cdot )\}\) defined in (A.2) satisfies the local consistence in (A.1).

1.2 A.2. Variable effort harvesting-stocking strategies

For \((x, \alpha , u)\in S_h\times {\mathcal {M}}\times \mathcal {U}\), let \({\mathbb E}^{h, u}_{x, \alpha , n}\), \({\mathbb Cov}^{h, u}_{x, \alpha , n}\) denote the conditional expectation and covariance given by

respectively. Define \(\Delta X^h_n = X^h_{n+1}-X^h_n\). In order to approximate the process \((X(\cdot ), \alpha (\cdot ))\) given in (3.1), the controlled Markov chain \(\{(X^h_n, \alpha ^h_n)\}\) must be locally consistent with respect to \((X(\cdot ), \alpha (\cdot ))\) in the sense that the following conditions hold

To this end, we define the transition probabilities \(q^h ((x,k), (y, l) | u)\) as follows. For \((x, k)\in S_h\times {\mathcal {M}}\) and \(u\in \mathcal {U}\), let

Set \(q^h \left( (x, k), (y, l)|u\right) =0\) for all unlisted values of \((y, l)\in S_h\times {\mathcal {M}}\).

1.3 A.3. Uncertain price functions

Let \((\phi , x, \alpha , u)\in \widehat{S}_h\times {\mathcal {M}}\times \mathcal {U}\) and denote by \({\mathbb E}^{h, u}_{\phi , x, \alpha , n}\), \({\mathbb Cov}^{h, u}_{\phi , x, \alpha , n}\) the conditional expectation and covariance given by

respectively. Define \(\Delta X^h_n = X^h_{n+1}-X^h_n\) and \(\Delta \Phi ^h_n = \Phi ^h_{n+1}-\Phi ^h_n\). In order to approximate \((\Phi (\cdot ), X(\cdot ), \alpha (\cdot ))\) given by (2.3)-and-(3.2), the controlled Markov chain \(\{(\Phi ^h_n, X^h_n, \alpha ^h_n)\}\) must be locally consistent with respect to \((\Phi (\cdot ), X(\cdot ), \alpha (\cdot ))\) in the sense that the following conditions hold:

To this end, we define the transition probabilities \(q^h ((\phi , x,k), (\psi , y, l) | u)\) as follows. For \((\phi , x, k)\in \widehat{S}_h\times {\mathcal {M}}\) and \(u\in \mathcal {U}\), let

Set \(q^h \left( (\phi , x, k), (\psi , y, l)|u\right) =0\) for all unlisted values of \((\psi , y, l)\in \widehat{S}_h\times {\mathcal {M}}\).

1.4 A.4. The combined effects of seasonality and Markovian switching

Recall that \(\widetilde{S}_{h}: = \{(\gamma , x)=(k_1 h_1, k_2 h_2)'\in \mathbb R^2: k_i\in \mathbb {Z}_{\ge 0}, k_1\le T/h_1\}.\) Let \((\gamma , x, \alpha , u)\in \widetilde{S}_h\times {\mathcal {M}}\times \mathcal {U}\) and denote by \({\mathbb E}^{h, u}_{\gamma , x, \alpha , n}\), \({\mathbb Cov}^{h, u}_{\gamma , x, \alpha , n}\) the conditional expectation and covariance given by

respectively. Define \(\Delta X^h_n = X^h_{n+1}-X^h_n\) and \(\Delta \Gamma ^h_n = \Gamma ^h_{n+1}-\Gamma ^h_n\). In order to approximate \((\Gamma (\cdot ), X(\cdot ), \alpha (\cdot ))\) given by (3.4), the controlled Markov chain \(\{(\Gamma ^h_n, X^h_n, \alpha ^h_n)\}\) must be locally consistent with respect to \((\Gamma (\cdot ), X(\cdot ), \alpha (\cdot ))\) in the sense that the following conditions hold:

To this end, we define the transition probabilities \(q^h ((\gamma , x,k), (\lambda , y, l) | u)\) as follows. Let \((\gamma , x, k)\in \widetilde{S}_h\times {\mathcal {M}}\) and \(u\in \mathcal {U}\). If \(\gamma +h_1=T\), \(\gamma +h_1\) in the following definition is understood as 0. Let

Set \(q^h \left( (\gamma , x, k), (\lambda , y, l)|u\right) =0\) for all unlisted values of \((\lambda , y, l)\in \widetilde{S}_h\times {\mathcal {M}}\).

Appendix B: Continuous–time interpolation

We will present the convergence analysis for the formulation in Sect. 2. The other formulas can be handled in a similar way. Our procedure and methods are similar to those in Kushner (1990), Kushner and Dupuis (1992), Song et al. (2006). The convergence result is based on a continuous-time interpolation of the controlled Markov chain, which will be constructed to be piecewise constant on the time interval \([t^h_n, t^h_{n+1}), n\ge 0\). To this end, we define \(n^h(t)=\max \{n: t^h_n\le t\}, t\ge 0.\) The piecewise constant interpolation of \(\{(X^h_n,\alpha ^h_n, U^h_n)\}\), denoted by \(\big (X^h(t),\alpha ^h(t), U^h(t)\big )\) is naturally defined as

Define \(\mathcal {F}^h(t)=\sigma \{X^h(s), \alpha ^h(s), U^h(s): s\le t\}=\mathcal {F}^h_{n^h(t)}\). Also define

It is obvious that

Recall that \(\Delta t^h_m = h^2/Q_h(X^h_m, \alpha ^h_m, U^h_m)\). It follows that

with \(\{\varepsilon _1^h(\cdot )\}\) being an \(\mathcal {F}^h(t)\)-adapted process satisfying

For simplicity, we suppose that \(\inf \limits _{(x, k)}1/|\sigma (x, k)|>0\) [if this is not the case, we can use the trick from Kushner and Dupuis (1992, pp. 288–289)]. Define \(w^h(\cdot )\) by

Then we can write

with \(\{\varepsilon _2^h(\cdot )\}\) being an \(\mathcal {F}^h(t)\)-adapted process satisfying

Using (B.3) and (B.5), we can write (B.2) as

where \(\varepsilon ^h(\cdot )\) is an \(\mathcal {F}^h(t)\)-adapted process satisfying

The performance function from (2.9) can be rewritten as

Appendix C: Convergence

The convergence of the algorithms is established via the weak convergence method. To proceed, let \(D[0, \infty )\) denote the space of functions that are right continuous and have left-hand limits endowed with the Skorokhod topology. All the weak analysis will be on this space or its k-fold products \(D^k[0, \infty )\) for appropriate k. We follow Kushner and Dupuis (1992, Section 4.6) in order to introduce relaxed control representations, which we need in order to prove the weak convergence.

Definition C.1

Let \(\mathcal {B}(\mathcal {U}\times [0, \infty ))\) be the \(\sigma \)-algebra of Borel subsets of \(\mathcal {U}\times [0, \infty )\). An admissible relaxed control, which we will call a relaxed control, \(m(\cdot )\) is a measure on \(\mathcal {B}(\mathcal {U}\times [0, \infty ))\) such that

Given a relaxed control \(m(\cdot )\), there is a probability measure \(m_t(\cdot )\) defined on the \(\sigma \)-algebra \(\mathcal {B} (\mathcal {U})\) such that \(m(du dt)=m_t(du)dt\). Let \(\mathcal {R}(\mathcal {U}\times [0, \infty ))\) denote the set of all relaxed controls on \(\mathcal {U}\times [0, \infty )\).

With the given probability space, we say that \(m(\cdot )\) is an admissible relaxed (stochastic) control if (i) for each fixed \(t\ge 0\), \(m(t, \cdot )\) is a random variable taking values in \(\mathcal {R}(\mathcal {U}\times [0, \infty ))\), and for each fixed \(\omega \), \(m(\cdot , \omega )\) is a deterministic relaxed control; (ii) the function defined by \(m(A\times [0, t])\) is \(\mathcal {F}(t)\)-adapted for any \(A\in \mathcal {B}(\mathcal {U})\). As a result, with probability one, there is a measure \(m_t(\cdot , \omega )\) on the Borel \(\sigma \)-algebra \(\mathcal {B}(\mathcal {U})\) such that \(m(dcdt) = m_t(dc)dt\).

Remark C.2

For a sequence of controls \(U^h=\{U^h_n: n\in \mathbb {Z}_{\ge 0}\}\), we define a sequence of relaxed control equivalence as follows. First, we set \(m_{t}^h(du)=\delta _{U^h(t)}(du)\) for \(t\ge 0\), where \(\delta _{U^h(t)}(\cdot )\) is the probability measure concentrated at \(U^h(t)\). Then \(m^h(\cdot )\) is defined by \(m^h(dudt)=m_t(du)dt\); that is,

Recall that \(\mathcal {R}(\mathcal {U}\times [0, \infty ))\) is the space of all relaxed controls on \(\mathcal {U}\times [0, \infty )\). Then \(\mathcal {R}(\mathcal {U}\times [0, \infty ))\) can be metrized using the Prokhorov metric in the usual way as in Kushner and Dupuis (1992, pp. 263–264). With the Prokhorov metric, \(\mathcal {R}(\mathcal {U}\times [0, \infty ))\) is a compact space. It follows that any sequence of relaxed controls has a convergent subsequence. Moreover, a sequence \((\eta _n)_{n\in \mathbb {N}}\) with \(\eta _n\in \mathcal {R}(\mathcal {U}\times [0, \infty ))\) converges to \(\eta \in \mathcal {R}(\mathcal {U}\times [0, \infty ))\) if and only if for any continuous functions with compact support \(\Psi (\cdot )\) on \(\mathcal {U}\times [0, \infty )\) one has

as \(n\rightarrow \infty \). Note that for a sequence of ordinary controls \(U^h=\{U^h_n: n\in \mathbb {Z}_{\ge 0}\}\), the associated relaxed control \({m}^h(dcdt)\) belongs to \(\mathcal {R}(\mathcal {U}\times [0, \infty ))\). Note also that the limits of the “relaxed control representations” of the ordinary controls might not be ordinary controls, but only relaxed controls.

With the notion of relaxed control given above, we can write (B.6) and (B.7) as

The value function defined in (2.6) can be rewritten as

where

Lemma C.3

The process \(\{\alpha ^h(\cdot )\}\) converges weakly to \(\alpha (\cdot )\), which is a Markov chain with generator \(Q=(q_{kl})\).

Proof

The proof is similar to that of Yin et al. (2003, Theorem 3.1) and is therefore omitted.

Theorem C.4

Suppose Assumption 2.1 holds. Let the chain \(\{(X^h_n, \alpha ^h_n) \}\) be constructed using the transition probabilities defined in (A.2), \(\big (X^h(\cdot ), \alpha ^h(\cdot ), w^h(\cdot )\big )\) be the continuous-time interpolation defined in (B.1) and (B.4), \(\{U^h_n\}\) be an admissible strategy and \(m^h(\cdot )\) be the relaxed control representation of \(\{U^h_n\}\). Then the following assertions hold.

-

(a)

The family of processes \(H^h(\cdot )=\big ({X}^h(\cdot ), {\alpha }^h(\cdot ), m^h(\cdot ), {w}^h(\cdot )\big )\) is tight. As a result, it has a weakly convergent subsequence with limit \(H(\cdot )= \big ({X}(\cdot ), {\alpha }(\cdot ), m(\cdot ), {w}(\cdot )\big ).\)

-

(b)

Let \(\mathcal { F}(t)\) be the \(\sigma \)-algebra generated by \(\big \{H(s): s\le t\big \}\). Then \(w(\cdot )\) is a standard \(\mathcal { F}(t)\) adapted Brownian motion, \(m(\cdot )\) is an admissible control, and

$$\begin{aligned} X(t) = x + \int _0^t \left[ b (X(s), \alpha (s))+u\right] m_s(du)ds + \int _0^t \sigma (X(s), \alpha (s)) dw(s), \quad t\ge 0. \end{aligned}$$(C.3)

Proof

(a) We use the tightness criteria in Kushner (1984, p. 47). Specifically, a sufficient condition for tightness of a sequence of processes \(\zeta ^h(\cdot )\) with paths in \(D^k[0, \infty )\) is that for any \(T_0, \rho \in (0, \infty )\),

The tightness of \(\{\alpha ^h(\cdot )\}\) is obvious by the preceding lemma. The process \(\{m^h(\cdot )\}\) is tight since its range space is compact. It is standard to show the tightness of \(\{w^h(\cdot )\}\) and \(\{X^h(\cdot )\}\)—see Song et al. (2006) for details. As a result, a subsequence of \(H^h(\cdot )=\big (X^h(\cdot ), \alpha ^h(\cdot ), m^h(\cdot ), w^h(\cdot )\big )\) converges weakly to the limit \(H(\cdot )=\big (X(\cdot ), \alpha (\cdot ), m(\cdot ), w(\cdot )\big )\).

(b) For the rest of the proof, we assume the probability space is chosen as required by Skorokhod representation. Thus, with a slight abuse of notation, we assume that \(H^h(\cdot )\) converges to the limit \(H(\cdot )\) with probability one via Skorokhod representation.

To characterize \({w}(\cdot )\), let \(\widetilde{k}, \widetilde{j}\) be arbitrary positive integers. Pick \(t>0\), \(\rho >0\) and \(\{t_k: k\le \widetilde{k}\}\) such that \(t_k\le t\le t+\rho \) for each k. Let \(\phi _j(\cdot )\) be real-valued continuous functions that are compactly supported on \(\mathcal {U}\times [0, \infty )\) for any \(j\le \widetilde{j}\). Define \((\phi _j, m)_t := \int _0^t \int _{\mathcal {U}} \phi _j (u, s)m(duds)\).

Let \(\Psi (\cdot )\) be a real-valued and continuous function of its arguments with compact support. By the definition of \(w^h(\cdot )\) in (B.4), \(w^h(\cdot )\) is an \(\mathcal {F}^h(t)\)-martingale. Thus, we have

and

By using the Skorokhod representation and the dominated convergence theorem, letting \(h\rightarrow 0\) in (C.4), we obtain

Since \({w}(\cdot )\) has continuous paths with probability one, (C.6) implies that \({w}(\cdot )\) is a continuous \({\mathcal {F}}(\cdot )\)-martingale. Moreover, (C.5) gives us that

Thus, the quadratic variation of w(t) is t, which implies that \(w(\cdot )\) is a standard \(\mathcal { F}(t)\) adapted Brownian motion.

By the convergence with probability one via Skorokhod representation, we have

uniformly in t as \(h\rightarrow 0\).

Also, by the weak convergence of \(\{m^h(\cdot )\}\), for any bounded and continuous function \(\phi (\cdot )\) with compact support, \((\phi , m^h)_\infty \rightarrow (\phi , m)_\infty \); see also Remark C.2. The weak convergence and the Skorokhod representation imply that

uniformly in t on any bounded interval with probability one.

For each positive constant \(\rho \) and process \({\nu }(\cdot )\), define the piecewise constant process \({\nu }^\rho (\cdot )\) by \({\nu }^\rho (t)={\nu }(k\rho )\) for \(t\in [k\rho , k\rho +\rho ), k\in \mathbb {Z}_{\ge 0}\). Then, by the tightness of \(({X}^h(\cdot ), {\alpha }^h(\cdot ))\), (C.1) can be rewritten as

where \(\lim \limits _{\rho \rightarrow 0}\limsup \limits _{h\rightarrow 0} {\mathbb E}|{\varepsilon }^{h, \rho }(t)|=0.\) Noting that the processes \({X}^{h, \rho }(\cdot )\) and \({\alpha }^{h, \rho }(\cdot )\) take constant values on the intervals \([n\rho , n\rho +\rho )\), we have

The integrals above are well defined with probability one since they can be written as finite sums. Combining the last results, we have

where \(\lim \limits _{\rho \rightarrow 0}E|{\varepsilon }^{ \rho }(t)|=0.\) Taking the limit as \(\rho \rightarrow 0\) finishes the proof.

Theorem C.5

Suppose Assumption 2.1 holds. Let \(V^h(x, \alpha )\) and \(V(x, \alpha )\) be the value functions defined in (2.6) and (2.9). Then \(V^h(x, \alpha )\rightarrow V(x, \alpha )\) as \(h\rightarrow 0\).

Proof

The proof is motivated by that of Theorem 7 in Song et al. (2006). Let \(U^h(\cdot )\) be an admissible strategy for the chain \(\{(X^h_n, \alpha ^h_n)\}\) and \(m^h(\cdot )\) be the corresponding relaxed control representation. Without loss of generality (passing to an additional subsequence if needed), we assume that \( \big ({X}^{{h}}(\cdot ), {\alpha }^{{h}}(\cdot ), {w}^{{h}}(\cdot ), {m}^{{h}}(\cdot )\big )\) converges weakly to \(\big ({X}(\cdot ), {\alpha }(\cdot ), {w}(\cdot ), {m}(\cdot )\big )\). We show that as \(h\rightarrow 0\) we have

From (C.2) one has

By the weak convergence and the Skorokhod representation, as \(h\rightarrow 0\),

This yields that \(J^h(x, \alpha , U^h(\cdot )) \rightarrow J(x, \alpha ,m(\cdot ))\) as \(h\rightarrow 0\).

Next, we prove that

For any small positive constant \(\varepsilon \), let \(\widetilde{U}^h(\cdot )\) be an \(\varepsilon \)-optimal harvesting strategy for the chain \(\{(X^h_n, \alpha ^h_n)\}\); that is,

Choose a subsequence \(\{\widetilde{h}\}\) of \(\{h\}\) such that

Let \(\widetilde{m}^{\widetilde{h}}(\cdot )\) be the relaxed control representation of \(\widetilde{U}^{\widetilde{h}}(\cdot )\). Without loss of generality (passing to an additional subsequence if needed), we may assume that \( \big ({X}^{\widetilde{h}}(\cdot ), {\alpha }^{\widetilde{h}}(\cdot ), {w}^{\widetilde{h}}(\cdot ), {m}^{\widetilde{h}}(\cdot )\big )\) converges weakly to \(\big ({X}(\cdot ), {\alpha }(\cdot ), {w}(\cdot ), {m}(\cdot )\big )\). It follows from our claim in the beginning of the proof that

where \(J(x, \alpha , m(\cdot ))\le V(x, \alpha )\) by the definition of \(V(x, \alpha )\). Since \(\varepsilon \) is arbitrarily small, (C.10) follows from (C.11) and (C.12).

To prove the reverse inequality \(\liminf \limits _{h} V^h(x, \alpha )\ge V(x, \alpha ) \), for any small positive constant \(\varepsilon \), we choose a particular \(\varepsilon \)-optimal strategy \(\overline{m}(\cdot )\) for (2.3)–(2.4) such that the approximation can be applied to the chain \(\{(X^h_n, \alpha ^h_n)\}\) and the associated cost compared with \(V^h(x, \alpha )\). By the chattering lemma [see for instance Kushner (1990, Theorem 3.1)], for any given \(\varepsilon >0\), there is a constant \(\lambda >0\) and an ordinary control \(\overline{U}^\varepsilon (\cdot )\) for (2.3)–(2.4) with the following properties:

-

(a)

\(\overline{U}^\varepsilon (\cdot )\) takes only finitely many values (denoted by \(\mathcal {U}_\varepsilon \) the set of all such values);

-

(b)

\(\overline{U}^\varepsilon (\cdot )\) is constant on the intervals \([k\lambda , k\lambda + \lambda )\) for \(k\in \mathbb {Z}_{\ge 0};\)

-

(c)

with \(\overline{m}^\varepsilon (\cdot )\) denoting the relaxed control representation of \(\overline{U}^\varepsilon (\cdot )\), we have that \((\overline{X}^\varepsilon (\cdot ), \overline{\alpha }^\varepsilon (\cdot ), \overline{w}^\varepsilon (\cdot ), \overline{m}^\varepsilon (\cdot ))\) converges weakly to \((\overline{X}(\cdot ), \overline{\alpha }(\cdot ), \overline{w}(\cdot ), \overline{m}(\cdot ))\) as \(\varepsilon \rightarrow 0\);

-

(d)

\(J(x, \alpha , \overline{m}^\varepsilon (\cdot ))\ge V(x, \alpha ) -\varepsilon \).

For \(\varepsilon >0\) and the corresponding \(\lambda \) in the chattering lemma, consider an optimal control problem for (2.3) subject to (2.4), but where the controls are constants over the interval \([k\lambda , k\lambda +\lambda )\) for \(k\in \mathbb {Z}_{\ge 0}\) and take values in \(\mathcal {U}_\varepsilon \) (the set of control values of \(\overline{U}^\varepsilon (\cdot )\)). This corresponds to controlling the discrete-time Markov process that is obtained by sampling \(X(\cdot )\) and \(\alpha (\cdot )\) at times \(k\lambda \) for \(k\in \mathbb {Z}_{\ge 0}\). Let \(\widehat{U}^\varepsilon (\cdot )\) denote the \(\varepsilon \)-optimal control, \(\widehat{m}^\varepsilon (\cdot )\) denote the relaxed control representation, and let \(\widehat{X}^\varepsilon (\cdot )\) denote the associated state process. Since \(\widehat{m}^\varepsilon (\cdot )\) is \(\varepsilon \)-optimal in the chosen class of controls, we have

We next approximate \(\widehat{U}^\varepsilon (\cdot )\) by a suitable function of \(w(\cdot )\) and \(\alpha (\cdot )\). Using the same method as in Song et al. (2006), we can approximate \(\widehat{U}^\varepsilon (\cdot )\) by the ordinary control \(U^{\varepsilon , \theta }(\cdot )\) with the corresponding relaxed control \(m^{\varepsilon , \theta }(\cdot )\) and the state process \(X^{\varepsilon , \theta }(\cdot )\) such that

as \(\theta \rightarrow 0\) and

Then a sequence of ordinary controls \(\{\overline{U}^h_n\}\) for the chain \(\{(X^h(\cdot ), \alpha ^h(\cdot ))\}\) can be constructed with the relaxed control representation \(\{\overline{m}^h_n\}\) such that as \(h\rightarrow 0\), the \((X^h(\cdot ), \alpha ^h(\cdot ), \overline{m}^h(\cdot ), w^h(\cdot ))\) converges weakly to \((X^{\varepsilon , \theta }(\cdot ), \alpha (\cdot ), m^{\varepsilon , \theta }(\cdot ), w(\cdot ))\). By the optimality of \(V^h(x, \alpha )\) and the weak convergence above, we have as \(h\rightarrow 0\),

It follows that \(V^h(x, \alpha )\ge V(x, \alpha )-4\varepsilon \) for sufficiently small h. Since any subsequence of \(H^h(\cdot )\) has a subsequence that converges weakly and \(\varepsilon \) is arbitrary, we have \(\liminf \limits _{h}V^h(x, \alpha )\ge V(x, \alpha )\). The conclusion follows. \(\square \)

Appendix D: Numerical experiments

1.1 D.1. Varying the cost dependency

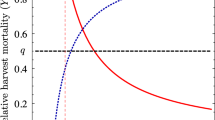

We want to see what effect different specifications of the cost function have on the shape of the optimal harvesting rate, and in particular whether it is bang-bang. We suspect that the convexity of the cost function leads to bang-bang (all or nothing) optimal harvesting. In Fig. 9, we have as an example a cost functions of the form \(C(u) = \sqrt{|u|})\). The rest of the parameters are kept the same as in Sect. 4.1. This example has concave costs, but there is a point of convexity at 0. Experiments with other partly concave cost functions show a similar pattern. Piecewise linear costs like \(C(u)= |u|\), or \(C(u) = \ln (1+|u|)\), lead to optimal controls that are step functions. However, when we use a purely concave cost function, like \(C(u) = \ln (1+u/3)\), seen in Fig. 10, we again obtain bang-bang optimal control. Further experiments confirm the observation.

Value function (left) and optimal harvesting-stocking rate (right) for a model with switching affecting \(\mu (\alpha ) = 4 - \alpha \), and a cost function \(C(u) = \sqrt{|u|}\). Other parameters described in Sect. 4.1

Value function (left) and optimal harvesting-stocking rate (right) for a model with switching affecting \(\mu (\alpha ) = 4 - \alpha \), and a cost function \(C(u) = \ln (1+u/3)\). Other parameters described in Sect. 4.1

1.2 D.2. The Gompertz model of population growth

In this example, the dynamics of the population size without harvesting is given by a Gompertz model (Winsor 1932; Zeide 1993) of the form

where

The generator Q of the Markov chain \(\alpha (\cdot )\) is given by

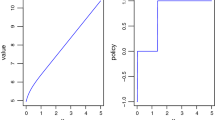

Value function (left) and optimal harvesting-stocking rate (right) for a Gompertz model with absolute harvesting, with switching affectin \(b(x, \alpha )=(4 - \alpha ) x \ln \frac{2}{x}\), constant price \(P(\cdot ) = 1\), and a cost function \(C(\cdot ) = u^2/2\). Other parameters described in Sect. 4.1

Figure 11 shows the value function and the optimal stocking-harvesting rate as a function of population size X(t) and the environmental state \(\alpha \). In the Gompertz model, the deterministic rate of growth near extinction goes to \(\infty \), unlike in the logistic model where it is linear. Comparing these results with the ones in Fig. 2, we can also see that low population values in the Gompertz model are much less unfavorable, both in terms of future value and in terms of the benefit of extraction.

1.3 D.3. The Nisbet–Gurney model of population growth

In this model, the evolution of the population size without harvesting is given by a switched Nisbet–Gurney model; thus,

where

The generator Q of the Markov chain \(\alpha (\cdot )\) is given by

Value function (left) and optimal harvesting-stocking rate (right) for a Nisbet–Gurney model with absolute harvesting, with switching affecting the growth rate \(b(x, \alpha ) = (4-\alpha ) x e^{-x} - x\), constant price \(P(\cdot ) = 1\), and a cost function \(C(\cdot ) = u^2/2\). Other parameters described in Sect. 4.1

Figure 12 shows a numerical estimation of this model. The value function has the usual features, being increasing and concave. The harvesting rate is monotonic, which is not a surprise considering the cost choice and our discussion in Sect. 4.6. Again, the control in state \(\alpha = 1\) shows higher harvesting and seeding, which is consistent with this state being more favourable for growth.

Rights and permissions

About this article

Cite this article

Hening, A., Tran, K.Q. & Ungureanu, S.C. The effects of random and seasonal environmental fluctuations on optimal harvesting and stocking. J. Math. Biol. 84, 41 (2022). https://doi.org/10.1007/s00285-022-01750-2

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00285-022-01750-2

Keywords

- Harvesting

- Stochastic environment

- Density-dependent price

- Controlled diffusion

- Switching environment

- Seasonality