Appendix A: hard selection

With hard selection for reproduction in a population in initial state \(\mathbf{x}\), an offspring is produced by a parent of type \(j=1,\ldots ,n\) in age class \(k=1,\ldots ,d\) with probability

$$\begin{aligned} \frac{(1+r_{j,k}(\mathbf{x }) ) x_{j,k}f_k }{1+ \sum \limits _{j^{\prime }=1}^n \sum \limits _{l=1}^d r_{j^{\prime },l}(\mathbf{x }) x_{j^{\prime },l}f_l} . \end{aligned}$$

(84)

This assumes that an individual of type j in age class k has a relative fertility \(1+ r_{j,k}(\mathbf{x })\) with respect to all individuals in the population. Afterwards, there is mutation to type \(i=1,\ldots ,n\) with probability \( u_{j,i,k}(\mathbf{x})\), so that

$$\begin{aligned} P_i(\mathbf{x}) = \sum _{k=1}^d \sum _{j=1}^n \frac{(1+r_{j,k}(\mathbf{x}))x_{j,k} f_k u_{j,i,k}(\mathbf{x}) }{1+\sum \limits _{j^{\prime }=1}^n \sum \limits _{l=1}^d x_{j^{\prime },l}r_{j^{\prime },l}(\mathbf{x })f_l} \end{aligned}$$

(85)

is the probability for the offspring to be of type \(i=1,\ldots ,n\). This probability can be written in the form

$$\begin{aligned} P_i(\mathbf{x}) = \sum _{k=1}^d x_{i,k}f_k + \frac{1}{N} \sum _{k=1}^d \left( \tilde{\mu }_{i,k}(\mathbf{x}) +x_{i,k}\tilde{\rho }_{i,k}(\mathbf{x}) \right) f_k+ o(N^{-1}) \end{aligned}$$

(86)

with \(No(N^{-1})\rightarrow 0 \) uniformly in \(\mathbf{x}\) as \(N\rightarrow + \infty \), where \( \tilde{\mu }_{i,k}(\mathbf{x}) \) is defined in (11) and

$$\begin{aligned} \tilde{\rho }_{i,k}(\mathbf{x}) = \rho _{i,k}(\mathbf{x} ) - \sum _{l=1}^d \sum _{j=1}^n \rho _{j,l}(\mathbf{x} ) x_{j,l} f_l . \end{aligned}$$

(87)

Here, the conditional expected frequency of type i in the next cohort in age class k is given by

$$\begin{aligned} E_{\mathbf{x}}[x_{i,k}(1)] = (M \mathbf{x}_i)_k + \frac{1}{N}\phi _{i,k}(\mathbf{x}) + o(N^{-1}) \end{aligned}$$

(88)

with

$$\begin{aligned} \phi _{i,1}(\mathbf{x}) =\sum _{k=1}^d \left( \tilde{\mu }_{i,k}(\mathbf{x}) +x_{i,k}\tilde{\rho }_{i,k}(\mathbf{x}) \right) f_k \end{aligned}$$

(89)

and

$$\begin{aligned} \phi _{i,k}(\mathbf{x}) = x_{i,{k-1}} C_{k-1} \tilde{\sigma }_{i,k-1}(\mathbf{x}) \end{aligned}$$

(90)

for \(k=2,\ldots ,d\), with \(\tilde{\sigma }_{i,k-1}(\mathbf{x})\) defined in (19), where

$$\begin{aligned} M =\left( \begin{array}{ccccc} f_1 &{}\quad f_2 &{}\quad f_3 &{}\quad \dots &{}\quad f_d\\ 1 &{}\quad 0 &{}\quad 0 &{}\quad \dots &{}\quad 0\\ 0 &{}\quad 1 &{}\quad 0 &{}\quad \dots &{}\quad 0\\ \vdots &{}\quad \ddots &{}\quad \ddots &{}\quad \ddots &{}\quad \vdots \\ 0 &{}\quad \dots &{}\quad 0 &{}\quad 1 &{}\quad 0 \end{array} \right) \end{aligned}$$

(91)

is a backward transition matrix under neutrality. Note that this matrix coincides with the backward transition matrix in the case of soft selection if \(p_k = f_k\) for \(k=1,\ldots ,d\).

Under the additional assumptions that \(f_d > 0 \) and \(\gcd \{k : 1 \le k \le d, f_{k}>0\}=1\), the stochastic matrix M in (91) is necessarily irreducible and aperiodic. Its stationary distribution \( \mathbf{w} = (w_1,\ldots ,w_d)^T \) is given by

$$\begin{aligned} w_k = \frac{1}{\bar{k}} \sum _{l=k}^d f_l \end{aligned}$$

(92)

for \(k=1,\ldots ,d\) with

$$\begin{aligned} \bar{k} = \sum _{k=1}^{d}kf_k \end{aligned}$$

(93)

being the mean age of reproduction in the neutral model.
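The stationarity property \(\mathbf{w}^T M = \mathbf{w}^T\) of (92) and (93) can be checked in exact rational arithmetic. The Python sketch below builds M as in (91) and w as in (92). The function names and the fertility vector are hypothetical and chosen only for illustration; the vector satisfies \(f_d>0\) and sums to one.

```python
from fractions import Fraction


def backward_matrix(f):
    """Matrix M of (91): f_1..f_d in the first row, ones on the subdiagonal."""
    d = len(f)
    M = [[Fraction(0)] * d for _ in range(d)]
    M[0] = [Fraction(v) for v in f]
    for k in range(1, d):
        M[k][k - 1] = Fraction(1)
    return M


def stationary_distribution(f):
    """Return (w, kbar) with w_k = sum_{l>=k} f_l / kbar and kbar = sum_k k f_k."""
    f = [Fraction(v) for v in f]
    assert sum(f) == 1, "the first row of M must sum to one"
    kbar = sum((k + 1) * fk for k, fk in enumerate(f))  # age classes are 1-based
    w = [sum(f[k:]) / kbar for k in range(len(f))]
    return w, kbar


# Hypothetical fertilities with f_d > 0 and gcd condition satisfied.
f = [Fraction(1, 5), Fraction(1, 2), Fraction(3, 10)]
M = backward_matrix(f)
w, kbar = stationary_distribution(f)
wM = [sum(w[k] * M[k][j] for k in range(len(w))) for j in range(len(w))]
print(kbar, [str(v) for v in w], wM == w)  # 21/10 ['10/21', '8/21', '1/7'] True
```

Exact fractions are used so that the check \(\mathbf{w}^T M = \mathbf{w}^T\) is an equality rather than a floating-point tolerance.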

Appendix B: approximations for a multivariate Wallenius’ non-central hypergeometric distribution

1.1 Definition of Wallenius’ distribution

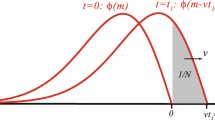

Consider an urn containing R balls of n different colors, in which balls of different colors may carry different weights, so that the sampling is biased. Let \(m_i\) be the number of balls of color i, each with weight \(\omega _i>0\), for \(i=1,\ldots ,n\), so that \( \mathbf{m} = (m_1,m_2,\ldots , m_n)^T \in \mathbb {N}^n\), \( {\varvec{\omega } } = (\omega _1,\omega _2,\ldots , \omega _n)^T \in \mathbb {R}^n\) and \(R = \sum _{i=1}^n{m_i}\). Let us sample \(r<R\) balls one at a time without replacement, each ball remaining in the urn being drawn with probability proportional to its weight, and count the number of balls of each color. The result of the experiment is a random vector \((Y_1,\ldots ,Y_n)\) with state space

$$\begin{aligned} \mathrm {S} = \left\{ \mathbf {y}=(y_1,\ldots ,y_n)^T \in \mathbb {N}^n \, : \, \sum _{i=1}^{n} y_i = r \right\} , \end{aligned}$$

(94)

and mass function

$$\begin{aligned} p(\mathbf{y}, {\varvec{\omega }}) = \left( \prod _{i=1}^n \left( {\begin{array}{c}m_i\\ y_i\end{array}}\right) \right) \int _0^1\left( \prod _{k=1}^n (1-t^{\omega _k/D({\varvec{\omega }})})^{y_k} \right) dt, \end{aligned}$$

(95)

where

$$\begin{aligned} D({\varvec{\omega }})={\varvec{\omega }}^T ({\mathbf m}-{\mathbf y}) = \sum _{i=1}^n \omega _i(m_i-y_i). \end{aligned}$$

(96)

This probability distribution is represented by mwnchypg\((r,R,\mathbf{m},{\varvec{\omega }})\). It was first studied by Wallenius (1963) in the univariate case and by Chesson (1976) in the multivariate case.
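As a sanity check of (95), the integral formula can be compared with the exact law obtained by propagating the distribution of the counts draw by draw, at each draw choosing a remaining ball with probability proportional to its weight. The sketch below substitutes \(t = x^{D}\), so that \(dt = D x^{D-1}dx\) and \(t^{\omega _i/D({\varvec{\omega }})} = x^{\omega _i}\), and integrates with Simpson's rule. This quadrature is our own choice, and the sketch assumes \(D({\varvec{\omega }})\ge 1\) so that the transformed integrand stays bounded. The urn, the weights and all function names are hypothetical.

```python
from itertools import product
from math import comb, isclose


def support(m, r):
    """The set S of (94), restricted to y_i <= m_i."""
    return [y for y in product(*(range(mi + 1) for mi in m)) if sum(y) == r]


def wallenius_pmf_integral(y, m, w, n_steps=4000):
    """Mass function (95), integrated after the substitution t = x**D."""
    D = sum(wi * (mi - yi) for wi, mi, yi in zip(w, m, y))
    if D < 1:
        raise ValueError("sketch assumes D(omega) >= 1 so the integrand is bounded")

    def g(x):
        # D x^(D-1) prod_i (1 - x^{omega_i})^{y_i}: dt/dx times the integrand of (95)
        val = D * x ** (D - 1)
        for wi, yi in zip(w, y):
            val *= (1.0 - x ** wi) ** yi
        return val

    h = 1.0 / n_steps  # composite Simpson rule; n_steps must be even
    total = g(0.0) + g(1.0)
    for k in range(1, n_steps):
        total += (4 if k % 2 else 2) * g(k * h)
    coeff = 1
    for mi, yi in zip(m, y):
        coeff *= comb(mi, yi)
    return coeff * total * h / 3


def wallenius_law_sequential(m, w, r):
    """Exact law of the counts, propagated through the r weighted draws."""
    law = {tuple(0 for _ in m): 1.0}
    for _ in range(r):
        nxt = {}
        for state, p in law.items():
            left = sum(wi * (mi - si) for wi, mi, si in zip(w, m, state))
            for i, (wi, mi, si) in enumerate(zip(w, m, state)):
                if si < mi:
                    new = state[:i] + (si + 1,) + state[i + 1:]
                    nxt[new] = nxt.get(new, 0.0) + p * wi * (mi - si) / left
        law = nxt
    return law


m, w, r = (3, 2, 4), (1.0, 2.0, 3.0), 4  # hypothetical urn: R = 9 balls, r = 4 draws
law = wallenius_law_sequential(m, w, r)
for y in support(m, r):
    assert isclose(wallenius_pmf_integral(y, m, w), law[y], rel_tol=1e-6)
print(round(sum(law.values()), 12))  # 1.0
```

With integer weights, as here, the transformed integrand is a polynomial that vanishes to high order at both endpoints, so Simpson's rule is very accurate. Non-integer weights also work but may need more steps.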

1.2 Approximation of the mass function under weak bias

We want to approximate the distribution mwnchypg\((r,R,\mathbf{m},{\varvec{\omega }})\) around the point \( {\varvec{\omega }} = \mathbf{1}\). This is what is needed to study the first-order effects of differences in viability from one age class to the next that are of order \(N^{-1}\) as \(N \rightarrow +\infty \). Note that

$$\begin{aligned} f(\mathbf{y }, {\varvec{\omega }}, t ) =\prod _{i=1}^n (1-t^{\omega _i/D({\varvec{\omega }})})^{y_i}, \end{aligned}$$

(97)

for \( {\varvec{\omega } } = (\omega _1,\ldots , \omega _k, \ldots , \omega _n)^T \in \mathbb {R}^n\), \(0<t<1\) and \(\mathbf{y}\in \mathrm {S}\), has partial derivative with respect to \(\omega _k\) given by

$$\begin{aligned}&\frac{\partial f}{\partial \omega _k} (\mathbf{y},{\varvec{\omega }},t) \nonumber \\&\quad = \sum _{j=1}^{n} y_j \left( \frac{\omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk}}{D({\varvec{\omega }})^2}\right) \left( \frac{t^{\omega _j/D({\varvec{\omega }})}\ln (t)}{1-t^{\omega _j/D({\varvec{\omega }})}}\right) \left( \prod _{i=1}^{n } (1-t^{\omega _i/D({\varvec{\omega }})})^{y_i} \right) . \end{aligned}$$

(98)

Differentiating under the integral sign (see, e.g., Godement 2005, p. 36), we obtain

$$\begin{aligned} \frac{\partial p}{\partial \omega _k} (\mathbf{y},{\varvec{\omega }})&= \left( \prod _{i=1}^n \left( {\begin{array}{c}m_i\\ y_i\end{array}}\right) \right) \frac{\partial }{\partial \omega _k} \left( \int _0^1 f(\mathbf{y }, {\varvec{\omega }}, t ) dt\right) \nonumber \\&= \left( \prod _{i=1}^{n} \left( {\begin{array}{c}m_i\\ y_i\end{array}}\right) \right) \int _0^1 \frac{\partial f}{\partial \omega _k} (\mathbf{y},{\varvec{\omega }},t)dt. \end{aligned}$$

(99)

Substituting (98) into (99) yields

$$\begin{aligned} \frac{\partial p}{\partial \omega _k} (\mathbf{y},{\varvec{\omega }}) = \left( \prod _{i=1}^{n} \left( {\begin{array}{c}m_i\\ y_i\end{array}}\right) \right) \sum _{j=1}^{n} y_j \frac{\omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk}}{D({\varvec{\omega }})^2} I_j(\mathbf{y},{\varvec{\omega }}) \end{aligned}$$

(100)

with

$$\begin{aligned} I_j(\mathbf{y},{\varvec{\omega }}) = \int _0^1 \frac{t^{\omega _j/D({\varvec{\omega }})}\ln (t)}{1-t^{\omega _j/D({\varvec{\omega }})}} \left( \prod _{i=1}^{n } (1-t^{\omega _i/D({\varvec{\omega }})})^{y_i} \right) dt \end{aligned}$$

(101)

for \(j=1,\ldots ,n.\) The derivative (98) was obtained using the identity

$$\begin{aligned} t^{\omega _i/D({\varvec{\omega }})} = \exp \left\{ \frac{\omega _i}{D({\varvec{\omega }})}\ln (t)\right\} . \end{aligned}$$

(102)

At \({\varvec{\omega }} = \mathbf{1}\), we have

$$\begin{aligned} \frac{\partial p}{\partial \omega _k} (\mathbf{y},\mathbf{1}) = \left( \prod _{i=1}^{n} \left( {\begin{array}{c}m_i\\ y_i\end{array}}\right) \right) \left( \frac{r m_k - Ry_k}{{(R-r)^2}}\right) I(\mathbf{y},{\varvec{1}}) \end{aligned}$$

(103)

with

$$\begin{aligned} I(\mathbf{y},{\varvec{1}}) = \int _0^1 t^{(R-r)^{-1}}\ln (t)(1-t^{(R-r)^{-1}})^{r-1}dt. \end{aligned}$$

With the change of variable \(x=t^{{(R-r)}^{-1}}\), the above integral becomes

$$\begin{aligned} I(\mathbf{y},{\varvec{1}}) = (R-r)^2\int _0^1\ln (x)x^{(R-r)}(1-x)^{r-1}dx =(R-r)^2\left. \frac{\partial B}{\partial z}(z,u)\right| _{(R-r+1,r)}, \end{aligned}$$

(104)

where

$$\begin{aligned} B(z,u) = \int _0^1 x^{z-1} (1-x)^{u-1} dx = \frac{\Gamma (z)\Gamma (u)}{\Gamma (z+u)} \end{aligned}$$

(105)

and

$$\begin{aligned} \Gamma (z) = \int _0^\infty t^{z-1}e^{-t}dt \end{aligned}$$

(106)

are the beta and gamma functions, respectively (see, e.g., Stegun 1964, pp. 255–258).

The partial derivative of the beta function with respect to the first variable is given by

$$\begin{aligned} \frac{\partial B}{\partial z}(z,u) = \Gamma (u)\frac{\Gamma (z+u)\Gamma '(z)-\Gamma '(z+u)\Gamma (z)}{(\Gamma (z+u))^2} = B(z,u) \left( \psi (z)-\psi (z+u)\right) \end{aligned}$$

(107)

with

$$\begin{aligned} \psi (z) = \frac{d}{dz}\ln (\Gamma (z)) = \frac{\Gamma '(z)}{\Gamma (z)}. \end{aligned}$$

(108)

The digamma function \(\psi \) satisfies

$$\begin{aligned} \psi (m_1) - \psi (m_2) = \sum _{k=m_2}^{m_1-1}\frac{1}{k} \end{aligned}$$

(109)

for integers \(m_1\) and \(m_2\) satisfying \(m_1>m_2\ge 1\). Using the fact that \(\Gamma (m) = (m-1)!\) for every integer \(m\ge 1\), we find

$$\begin{aligned} \left. \frac{\partial B}{\partial z}(z,u)\right| _{(R-r+1,r)}&= \frac{\Gamma (R-r+1)\Gamma (r) }{\Gamma (R+1)}\left( \psi (R-r+1)-\psi (R+1)\right) \nonumber \\&=-\frac{(R-r)!(r-1)!}{R!} S_{r,R} \end{aligned}$$

(110)

with

$$\begin{aligned} S_{r,R} = \sum _{k=R-r+1}^{R} \frac{1}{k} . \end{aligned}$$

(111)

Substituting Eq. (110) into Eq. (104), we get

$$\begin{aligned} I(\mathbf{y},{\varvec{1}}) =&(R-r)^2\left. \frac{\partial B}{\partial z}(z,u)\right| _{(R-r+1,r)}\nonumber \\ =&-(R-r)^2\frac{(R-r)!(r-1)!}{R!} S_{r,R}\nonumber \\ =&- \frac{ (R-r)^2S_{r,R} }{ {\left( {\begin{array}{c}R\\ r\end{array}}\right) }r }. \end{aligned}$$

(112)
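Identity (112) can be verified exactly for small urns. The sketch below expands \((1-x)^{r-1}\) binomially in the middle expression of (104), uses \(\int _0^1 x^a\ln (x)\,dx = -1/(a+1)^2\) term by term, and compares the result with the closed form in (112) using exact fractions. The function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def harmonic_tail(r, R):
    """S_{r,R} = sum_{k=R-r+1}^{R} 1/k, as in (111)."""
    return sum(Fraction(1, k) for k in range(R - r + 1, R + 1))


def I_by_expansion(r, R):
    """(R-r)^2 * int_0^1 ln(x) x^(R-r) (1-x)^(r-1) dx via the binomial expansion."""
    total = Fraction(0)
    for j in range(r):
        # int_0^1 x^(R-r+j) ln(x) dx = -1 / (R-r+j+1)^2
        total += comb(r - 1, j) * (-1) ** j * Fraction(-1, (R - r + j + 1) ** 2)
    return (R - r) ** 2 * total


def I_closed_form(r, R):
    """Right-hand side of (112)."""
    return -Fraction((R - r) ** 2) * harmonic_tail(r, R) / (comb(R, r) * r)


for R in range(2, 12):
    for r in range(1, R):
        assert I_by_expansion(r, R) == I_closed_form(r, R)
print(I_closed_form(2, 3))  # -5/36
```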

Then Eq. (103) can be written as

$$\begin{aligned} \frac{\partial p}{\partial \omega _k} (\mathbf{y},\mathbf{1}) = p(\mathbf{y},\mathbf{1}) \left( y_k\frac{R}{r} - m_k \right) S_{r,R} \end{aligned}$$

(113)

with

$$\begin{aligned} p(\mathbf{y},\mathbf{1}) = \dfrac{\prod \limits _{i=1}^{n} \genfrac(){0.0pt}0{m_i}{y_i}}{\genfrac(){0.0pt}0{R}{r}} \end{aligned}$$

(114)

being the mass function of a multivariate hypergeometric distribution with parameters given by \((r,R,\mathbf{m})\), represented by mhypg\((r,R,\mathbf{m})\).
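Formula (113) can also be checked by finite differences: perturb one weight \(\omega _k\) around 1, compute the exact Wallenius law by propagating it draw by draw, and compare the central difference with \(p(\mathbf{y},\mathbf{1})(y_kR/r-m_k)S_{r,R}\). The urn, the step h and all names below are hypothetical.

```python
from math import comb, prod


def wallenius_law(m, w, r):
    """Exact law of the counts after r weighted draws without replacement."""
    law = {tuple(0 for _ in m): 1.0}
    for _ in range(r):
        nxt = {}
        for state, p in law.items():
            left = sum(wi * (mi - si) for wi, mi, si in zip(w, m, state))
            for i, (wi, mi, si) in enumerate(zip(w, m, state)):
                if si < mi:
                    new = state[:i] + (si + 1,) + state[i + 1:]
                    nxt[new] = nxt.get(new, 0.0) + p * wi * (mi - si) / left
        law = nxt
    return law


def dp_domega_113(y, m, k):
    """Right-hand side of (113): p(y,1) (y_k R / r - m_k) S_{r,R}."""
    R, r = sum(m), sum(y)
    p0 = prod(comb(mi, yi) for mi, yi in zip(m, y)) / comb(R, r)  # (114)
    S = sum(1.0 / j for j in range(R - r + 1, R + 1))  # (111)
    return p0 * (y[k] * R / r - m[k]) * S


m, r, h = (3, 2, 4), 4, 1e-5  # hypothetical urn and finite-difference step
worst = 0.0
for k in range(len(m)):
    up = wallenius_law(m, tuple(1.0 + h * (i == k) for i in range(len(m))), r)
    down = wallenius_law(m, tuple(1.0 - h * (i == k) for i in range(len(m))), r)
    for y in up:
        fd = (up[y] - down[y]) / (2 * h)  # central difference in omega_k at omega = 1
        worst = max(worst, abs(fd - dp_domega_113(y, m, k)))
print(worst)
```

Summing the right-hand side of (113) over \(\mathbf{y}\in \mathrm {S}\) gives zero, as it must since the probabilities sum to one; this is a cheap additional check.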

The second partial derivatives of the mass function \(p(\mathbf{y},{\varvec{\omega }})\) of the mwnchypg\((r,R,\mathbf{m},{\varvec{\omega }})\) distribution are given by

$$\begin{aligned} \frac{\partial }{\partial \omega _l}\frac{\partial p}{\partial \omega _k} (\mathbf{y},{\varvec{\omega }})=&\left( \prod _{i=1}^{n} \left( {\begin{array}{c}m_i\\ y_i\end{array}}\right) \right) \sum _{j=1}^{n} y_j \left[ \frac{\partial }{\partial \omega _l}\left( \frac{\omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk}}{D({\varvec{\omega }})^2}\right) I_j(\mathbf{y},{\varvec{\omega }}) \right. \nonumber \\&\left. + \frac{\omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk}}{D({\varvec{\omega }})^2}\frac{\partial }{\partial \omega _l} I_j(\mathbf{y},{\varvec{\omega }}) \right] \end{aligned}$$

(115)

where

$$\begin{aligned} I_j(\mathbf{y},{\varvec{\omega }}) = \int _0^1 \frac{t^{\omega _j/D({\varvec{\omega }})}\ln (t)}{1-t^{\omega _j/D({\varvec{\omega }})}} f(\mathbf{y }, {\varvec{\omega }}, t ) dt. \end{aligned}$$

(116)

We have that

$$\begin{aligned} \frac{\partial }{\partial \omega _l}\left( \frac{\omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk}}{D({\varvec{\omega }})^2}\right) =&\frac{\delta _{lj}(m_k-y_k) - \delta _{jk}(m_l-y_l)}{(D({\varvec{\omega }}))^2} - \frac{2(m_l-y_l)\left( \omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk}\right) }{(D({\varvec{\omega }}))^3} \nonumber \\ =&\frac{\left( \delta _{lj}(m_k-y_k)+\delta _{jk}(m_l-y_l)\right) D({\varvec{\omega }}) - 2 \omega _j(m_k -y_k)(m_l-y_l)}{(D({\varvec{\omega }}))^3}, \end{aligned}$$

(117)

$$\begin{aligned} \frac{\partial }{\partial \omega _l}\left( \frac{t^{\omega _j/D({\varvec{\omega }})}}{1-t^{\omega _j/D({\varvec{\omega }})}} \right) =&\frac{t^{\omega _j/D({\varvec{\omega }})} \ln (t) \left( D({\varvec{\omega }}) \delta _{lj} - \omega _j(m_l - y_l)\right) }{(D({\varvec{\omega }})(1-t^{\omega _j/D({\varvec{\omega }})}))^2} \end{aligned}$$

(118)

and

$$\begin{aligned} \frac{\partial }{\partial \omega _l}\left( f(\mathbf{y }, {\varvec{\omega }}, t ) \right) = \sum _{j=1}^{n} y_j \left( \frac{\omega _j(m_l-y_l) - D({\varvec{\omega }})\delta _{jl}}{D({\varvec{\omega }})^2} \right) \frac{t^{\omega _j/D({\varvec{\omega }})}\ln (t)}{1-t^{\omega _j/D({\varvec{\omega }})}} f(\mathbf{y }, {\varvec{\omega }}, t ). \end{aligned}$$

(119)

Then

$$\begin{aligned} \frac{\partial }{\partial \omega _l}\frac{\partial p}{\partial \omega _k} (\mathbf{y},{\varvec{\omega }})=&\left( \prod _{i=1}^{n} \left( {\begin{array}{c}m_i\\ y_i\end{array}}\right) \right) \sum _{j=1}^{n} y_j \left[ \frac{\left( \delta _{lj}(m_k-y_k)+\delta _{jk}(m_l-y_l)\right) D({\varvec{\omega }}) - 2 \omega _j(m_k -y_k)(m_l-y_l)}{(D({\varvec{\omega }}))^3} I_j(\mathbf{y},{\varvec{\omega }}) \right. \nonumber \\&\left. + \frac{\omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk}}{D({\varvec{\omega }})^2}J_{lj}(\mathbf{y},{\varvec{\omega }})\right] \end{aligned}$$

(120)

with

$$\begin{aligned} J_{lj}(\mathbf{y},{\varvec{\omega }}) =&\frac{\partial }{\partial \omega _l} I_{j}(\mathbf{y},{\varvec{\omega }})\nonumber \\ =&\int _0^1 \frac{t^{\omega _j/D({\varvec{\omega }})} (\ln (t))^2 }{(D({\varvec{\omega }})(1-t^{\omega _j/D({\varvec{\omega }})}))^2} f(\mathbf{y }, {\varvec{\omega }}, t ) \nonumber \\&\cdot \left( D({\varvec{\omega }}) \delta _{lj} - \omega _j(m_l - y_l) \right. \nonumber \\&\left. + \left( 1-t^{\omega _j/D({\varvec{\omega }})}\right) \sum _{h=1}^{n} y_h \frac{\left( \omega _h(m_l-y_l) - D({\varvec{\omega }})\delta _{hl} \right) t^{\omega _h/D({\varvec{\omega }})}}{1-t^{\omega _h/D({\varvec{\omega }})}} \right) dt. \end{aligned}$$

(121)
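The derivative of the coefficient \(g_{jk}({\varvec{\omega }}) = (\omega _j(m_k-y_k) - D({\varvec{\omega }})\delta _{jk})/D({\varvec{\omega }})^2\), which multiplies \(I_j\) in (100), follows from the quotient rule with \(\partial D/\partial \omega _l = m_l - y_l\): \(\partial g_{jk}/\partial \omega _l = [(\delta _{lj}(m_k-y_k) + \delta _{jk}(m_l-y_l))D({\varvec{\omega }}) - 2\omega _j(m_k-y_k)(m_l-y_l)]/D({\varvec{\omega }})^3\). The Python sketch below compares this closed form with central differences at an arbitrary point. The weights, counts and function names are hypothetical.

```python
from itertools import product


def D(w, m, y):
    """D(omega) of (96)."""
    return sum(wi * (mi - yi) for wi, mi, yi in zip(w, m, y))


def g(w, m, y, j, k):
    """g_{jk}(omega) = (omega_j (m_k - y_k) - D(omega) delta_jk) / D(omega)^2."""
    d = D(w, m, y)
    return (w[j] * (m[k] - y[k]) - d * (j == k)) / d ** 2


def dg_closed_form(w, m, y, j, k, l):
    """Quotient-rule derivative of g_{jk} with respect to omega_l."""
    d = D(w, m, y)
    ck, cl = m[k] - y[k], m[l] - y[l]
    return ((ck * (l == j) + cl * (j == k)) * d - 2 * w[j] * ck * cl) / d ** 3


w, m, y, h = [0.7, 1.3, 2.1], (3, 2, 4), (1, 1, 2), 1e-6  # hypothetical values
worst = 0.0
for j, k, l in product(range(3), repeat=3):
    wp, wm = list(w), list(w)
    wp[l] += h
    wm[l] -= h
    fd = (g(wp, m, y, j, k) - g(wm, m, y, j, k)) / (2 * h)  # central difference
    worst = max(worst, abs(fd - dg_closed_form(w, m, y, j, k, l)))
print(worst)
```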

1.3 Approximation of the k-th moments under weak bias

According to Eq. (113), with \(( H_1,\ldots ,H_{n})\) representing a mhypg\((r,R,\mathbf{m})\) random vector, we have

$$\begin{aligned} E\left[ \left( \frac{Y_i}{r}\right) ^k\right] =\,&E\left[ \left( \frac{H_i}{r}\right) ^k\right] \nonumber \\&+ \sum _{l=1}^{n}(\omega _l-1) \sum _{\mathbf{y} \in \mathrm {S}} \left( \frac{y_i}{r}\right) ^k p(\mathbf{y},\mathbf{1}) \left( y_l\frac{R}{r} - m_l\right) S_{r,R}+ o(|{\varvec{\omega }} -\mathbf{1}|),\nonumber \\ \end{aligned}$$

(122)

where

$$\begin{aligned} \sum _{\mathbf{y} \in \mathrm {S}} \left( \frac{y_i}{r}\right) ^k p(\mathbf{y},\mathbf{1}) \left( y_l\frac{R}{r} - m_l\right) = E[H_i^kH_l]\frac{R}{r^{k+1}}-\frac{m_l}{r^k}E[H_i^k] \end{aligned}$$

(123)

and

$$\begin{aligned} \frac{o(|{\varvec{\omega }} -\mathbf{1}|)}{|{\varvec{\omega }} -\mathbf{1}|} \rightarrow 0 \end{aligned}$$

(124)

as \(|{\varvec{\omega }} - \mathbf{1}| \rightarrow {0}\), with \(|\cdot |\) denoting the Euclidean norm. In fact, the convergence is uniform in \({\varvec{\omega }}\), as can be shown by induction on the number r of balls removed, at least for \(k=1,2,3,4\) (Soares 2019). Therefore, we have

$$\begin{aligned} E\left[ \left( \frac{Y_i}{r}\right) ^k\right] =\,&E\left[ \left( \frac{H_i}{r}\right) ^k\right] \nonumber \\&+S_{r,R} \sum _{l=1}^{n}(\omega _l-1) \left( E[H_i^kH_l]\frac{R}{r^{k+1}}-\frac{m_l}{r^k}E[H_i^k] \right) + o(|{\varvec{\omega }} - \mathbf{1}|) \end{aligned}$$

(125)

uniformly in \({\varvec{\omega }}\) for the k-th moments.

For the first moments, for instance, we have

$$\begin{aligned} E\left[ \frac{Y_i}{r}\right] = E\left[ \frac{H_i}{r}\right] +S_{r,R} \sum _{l=1}^{n}(\omega _l-1) \left( E[H_iH_l]\frac{R}{r^2}-\frac{m_l}{r}E[H_i] \right) + o(|{\varvec{\omega }} -\mathbf{1}|)\nonumber \\ \end{aligned}$$

(126)

uniformly in \({\varvec{\omega }}\), where

$$\begin{aligned} E[H_i] = r \frac{m_i}{R} \end{aligned}$$

(127)

and

$$\begin{aligned} E[H_iH_l] = \left\{ \begin{array}{lll} \frac{{m_l} {m_i}r(r-1)}{R(R-1)}&{}\quad \text{ if } &{}\quad i\ne l,\\ \frac{{m_i}(m_i -1)r(r-1)}{R(R-1)}+\frac{{m_i} r}{R}&{}\quad \text{ if } &{}\quad i= l, \end{array} \right. \end{aligned}$$

(128)

for \(i, l = 1, \ldots ,n\). This yields

$$\begin{aligned} E\left[ \frac{Y_i}{r}\right]&= \frac{m_i}{R} + \frac{m_i}{R} \frac{R(R-r)}{r(R-1)} S_{r,R} \left( \omega _i -1 - \sum _{l=1}^{{n}}(\omega _l-1)\frac{m_l}{R} \right) + o(|{\varvec{\omega }} -\mathbf{1}|) \end{aligned}$$

(129)

uniformly in \({\varvec{\omega }}\) for \(i = 1, \ldots ,n\).
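A numerical check of (129): the sketch below computes \(E[Y_i/r]\) exactly for weights \(\omega _i = 1+\varepsilon \sigma _i\), by propagating the Wallenius law draw by draw, and compares it with the first-order approximation (129). If (129) captures the full linear term, the error should be of order \(\varepsilon ^2\), so halving \(\varepsilon \) should divide it by about 4. The urn, the bias direction \(\sigma \) and the function names are hypothetical.

```python
def wallenius_law(m, w, r):
    """Exact law of the counts after r weighted draws without replacement."""
    law = {tuple(0 for _ in m): 1.0}
    for _ in range(r):
        nxt = {}
        for state, p in law.items():
            left = sum(wi * (mi - si) for wi, mi, si in zip(w, m, state))
            for i, (wi, mi, si) in enumerate(zip(w, m, state)):
                if si < mi:
                    new = state[:i] + (si + 1,) + state[i + 1:]
                    nxt[new] = nxt.get(new, 0.0) + p * wi * (mi - si) / left
        law = nxt
    return law


def mean_exact(m, w, r):
    """E[Y_i / r] computed from the exact law."""
    law = wallenius_law(m, w, r)
    return [sum(p * s[i] for s, p in law.items()) / r for i in range(len(m))]


def mean_129(m, w, r):
    """First-order approximation (129) of E[Y_i / r] around omega = 1."""
    R = sum(m)
    S = sum(1.0 / k for k in range(R - r + 1, R + 1))
    avg = sum((wl - 1.0) * ml / R for wl, ml in zip(w, m))
    return [mi / R * (1.0 + R * (R - r) / (r * (R - 1)) * S * (wi - 1.0 - avg))
            for mi, wi in zip(m, w)]


m, r = (5, 3, 4), 6
sigma = (1.0, -0.5, 0.3)  # hypothetical direction of the weak bias
errors = []
for eps in (1e-2, 5e-3):
    w = tuple(1.0 + eps * s for s in sigma)
    exact, approx = mean_exact(m, w, r), mean_129(m, w, r)
    errors.append(max(abs(a - b) for a, b in zip(exact, approx)))
print(errors, errors[0] / errors[1])  # the ratio should be close to 4
```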

1.3.1 Approximation of the covariances under weak bias

According to Eq. (113), we have

$$\begin{aligned} E[Y_iY_j]= & {} E[H_i H_j] \nonumber \\&+ \sum _{l=1}^{n}(\omega _l-1) \sum _{\mathbf{y} \in \mathrm {S}} y_iy_j p(\mathbf{y},\mathbf{1}) \left( y_l\frac{R}{r} - m_l\right) S_{r,R}+ o(|{\varvec{\omega }} -\mathbf{1}|),\nonumber \\ \end{aligned}$$

(130)

where

$$\begin{aligned} \sum _{\mathbf{y} \in \mathrm {S}} y_iy_j p(\mathbf{y},\mathbf{1}) \left( y_l\frac{R}{r} - m_l\right) = E[H_iH_jH_l]\frac{R}{r}-m_lE[H_iH_j]. \end{aligned}$$

(131)

Therefore,

$$\begin{aligned} E\left[ \frac{Y_i}{r}\frac{Y_j}{r}\right] =&E\left[ \frac{H_i}{r}\frac{H_j}{r}\right] \nonumber \\&+S_{r,R} \sum _{l=1}^{n}(\omega _l-1) \left( E[H_iH_jH_l]\frac{R}{r^3}-\frac{m_l}{r^2}E[H_iH_j] \right) + o(|{\varvec{\omega }} -\mathbf{1}|)\nonumber \\ \end{aligned}$$

(132)

for \(i,j=1,\ldots ,n\), where the term \( o(|{\varvec{\omega }} -\mathbf{1}|)\) is in fact uniform in \({\varvec{\omega }}\), as can be proved by induction on the number r of balls removed (Soares 2019). Here, \(E[H_iH_j]\) is given by (128), while

$$\begin{aligned}&E[H_iH_jH_l] \nonumber \\&\quad = \left\{ \begin{array}{lll} m_i m_j m_l \frac{r(r-1)(r-2)}{R(R-1)(R-2)} &{}\quad \text{ if } i\ne j , j\ne l \text{ and } l\ne i, \\ m_i m_j \frac{ r(r-1)}{R(R-1)}\left( 1+(m_i-1)\frac{r-2}{R-2} \right) &{}\quad \text{ if } i\ne j \text{ and } l= i, \\ {m_i} \frac{r}{R} \left[ 1+ (m_i-1)\frac{ r-1}{R-1} \left( 3 +(m_i-2)\frac{r-2}{R-2} \right) \right] &{}\quad \text{ if } i= j = l. \\ \end{array} \right. \end{aligned}$$

(133)

This gives an approximation for the covariances

$$\begin{aligned} Cov\left[ \frac{Y_i}{r},\frac{Y_j}{r}\right] = E\left[ \frac{Y_i}{r}\frac{Y_j}{r}\right] - E\left[ \frac{Y_i}{r}\right] E\left[ \frac{Y_j}{r}\right] \end{aligned}$$

(134)

for \(i, j = 1, \ldots ,n\).
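The hypergeometric moments (128) and (133) can be confirmed by exact enumeration of the mhypg\((r,R,\mathbf{m})\) law (114). In the sketch below, index patterns not listed explicitly in (133), such as \(i=j\ne l\), are handled by symmetry, treating the repeated index as the one appearing twice. The urn and the function names are hypothetical, and \(R\ge 3\) is assumed so that no denominator vanishes.

```python
from collections import Counter
from fractions import Fraction
from itertools import product
from math import comb


def mhypg_law(m, r):
    """Exact mhypg(r, R, m) law as a dict y -> Fraction, following (114)."""
    R = sum(m)
    law = {}
    for y in product(*(range(mi + 1) for mi in m)):
        if sum(y) == r:
            num = 1
            for mi, yi in zip(m, y):
                num *= comb(mi, yi)
            law[y] = Fraction(num, comb(R, r))
    return law


def second_moment_128(m, r, i, l):
    """E[H_i H_l] from (128)."""
    R = sum(m)
    if i != l:
        return Fraction(m[i] * m[l] * r * (r - 1), R * (R - 1))
    return Fraction(m[i] * (m[i] - 1) * r * (r - 1), R * (R - 1)) + Fraction(m[i] * r, R)


def third_moment_133(m, r, i, j, l):
    """E[H_i H_j H_l] from (133); other index patterns follow by symmetry."""
    R = sum(m)
    counts = Counter((i, j, l))
    if len(counts) == 3:
        return Fraction(m[i] * m[j] * m[l] * r * (r - 1) * (r - 2), R * (R - 1) * (R - 2))
    if len(counts) == 1:
        mi = m[i]
        return Fraction(mi * r, R) * (1 + Fraction((mi - 1) * (r - 1), R - 1)
                                      * (3 + Fraction((mi - 2) * (r - 2), R - 2)))
    a = next(key for key, c in counts.items() if c == 2)  # repeated index
    b = next(key for key, c in counts.items() if c == 1)
    return (Fraction(m[a] * m[b] * r * (r - 1), R * (R - 1))
            * (1 + Fraction((m[a] - 1) * (r - 2), R - 2)))


m, r = (3, 2, 4), 4  # hypothetical urn with R = 9
law = mhypg_law(m, r)
for i, l in product(range(3), repeat=2):
    assert second_moment_128(m, r, i, l) == sum(p * y[i] * y[l] for y, p in law.items())
for i, j, l in product(range(3), repeat=3):
    assert third_moment_133(m, r, i, j, l) == sum(p * y[i] * y[j] * y[l] for y, p in law.items())
print("(128) and (133) agree with exact enumeration")
```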

1.4 Approximations in the case of a large sample size in a large population

Suppose that the weight associated with a ball of color i has the form

$$\begin{aligned} \omega _i -1= \frac{f}{R}\sigma _i \end{aligned}$$

(135)

with \(0<f<1\) and \(\sigma _i\) constant for \(i=1,\ldots ,n\). As \(R \rightarrow +\infty \), let us keep constant the ratios

$$\begin{aligned} \beta = \frac{r}{R} \end{aligned}$$

(136)

and

$$\begin{aligned} x_i = \frac{m_i}{ R} \end{aligned}$$

(137)

for \(i = 1,\ldots , n\). Then, defining

$$\begin{aligned} X_i = \frac{Y_i}{r} = \frac{Y_i}{\beta R} \end{aligned}$$

(138)

and using (129) for its first moment, we get

$$\begin{aligned} E[X_i] = x_i + \frac{f}{R}x_i\left( \frac{1-\beta }{\beta }\right) S \left( \sigma _i - \sum _{l=1}^{n} \sigma _lx_l \right) + o(R^{-1}), \end{aligned}$$

(139)

where

$$\begin{aligned} S = \lim _{R\rightarrow +\infty } S_{{\beta R,R}} = \ln \left( \frac{1}{1-\beta }\right) . \end{aligned}$$

(140)

This gives

$$\begin{aligned} E[X_i] = x_i + \frac{x_if}{R}C{{\tilde{\sigma }}_i} + o(R^{-1}), \end{aligned}$$

(141)

where

$$\begin{aligned} C = \frac{1-\beta }{\beta }\ln \left( \frac{1}{1-\beta }\right) =\frac{R-r}{r }\ln \left( \frac{R}{R-r}\right) \end{aligned}$$

(142)

and

$$\begin{aligned} {{\tilde{\sigma }}_i} = \sigma _i - \sum _{l=1}^{n}\sigma _l x_l. \end{aligned}$$

(143)
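The limit (140) and the constant C in (142) are easy to evaluate numerically. The sketch below assumes a hypothetical sampling fraction \(\beta = 0.4\). It prints the gap between \(S_{\beta R,R}\) and \(\ln (1/(1-\beta ))\), which shrinks roughly like \(R^{-1}\), together with the difference between the two expressions for C in (142). The function names are hypothetical.

```python
import math


def S(r, R):
    """S_{r,R} of (111)."""
    return math.fsum(1.0 / k for k in range(R - r + 1, R + 1))


def C(beta):
    """C of (142): ((1 - beta) / beta) * ln(1 / (1 - beta)), for 0 < beta < 1."""
    return (1.0 - beta) / beta * -math.log1p(-beta)


beta = 0.4  # hypothetical sampling fraction r / R
limit = -math.log1p(-beta)  # ln(1 / (1 - beta)), the limit in (140)
for R in (10, 100, 1000, 10000):
    r = round(beta * R)
    print(R, S(r, R) - limit, (R - r) / r * math.log(R / (R - r)) - C(beta))
```

The factor C tends to 1 as \(\beta \rightarrow 0\) and to 0 as \(\beta \rightarrow 1\), so the selective term in (141) is largest when only a small fraction of each age class survives to the next.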

On the other hand, using (128) and (133), it can be checked that the following limits hold as \(R\rightarrow +\infty \) with \(\beta \) and \(x_i\) for \(i=1,\ldots ,n\) kept fixed:

$$\begin{aligned} \begin{array}{|l|c|} \hline i\ne j , l\ne i,j &{} E[H_iH_jH_l] \frac{R}{r^3} - E[H_i H_j] \frac{m_l}{r^2}\rightarrow -\frac{2 (1 - \beta ) x_i x_j x_l}{\beta }\\ \hline i\ne j, l = i&{} E[H_i^2H_j] \frac{R}{r^3} - E[H_i H_j]\frac{m_i}{r^2} \rightarrow \frac{(1-\beta ) x_i (1-2 x_i) x_j}{\beta }\\ \hline i = j, l \ne i&{} E[H_i^2H_l] \frac{R}{r^3} - E[H_i^2 ]\frac{m_l }{r^2} \rightarrow - \frac{2(1-\beta ) x_i^2 x_l}{\beta }\\ \hline i = j = l&{} E[H_i^3] \frac{R}{r^3} - E[H_i^2 ] \frac{m_i}{r^2}\rightarrow \frac{2 (1-\beta ) (1-x_i) x_i^2}{\beta }\\ \hline \end{array} \end{aligned}$$

(144)

Therefore, (144) and (132) lead to

$$\begin{aligned} E[X_iX_j] = E\left[ \frac{H_iH_j}{r^2}\right] +\frac{f}{R}\left( \frac{1-\beta }{\beta } \right) x_i x_j S \left( \sigma _i + \sigma _j-2\sum _{l=1}^{n} \sigma _lx_l \right) + o( R^{-1}). \end{aligned}$$

(145)

On the other hand, owing to (139), we have

$$\begin{aligned} E[X_i]E[X_j] =&E\left[ \frac{H_i}{r}\right] E\left[ \frac{H_j}{r}\right] \nonumber \\&+ \frac{f}{R} \left( \frac{1-\beta }{\beta }\right) x_ix_j S\left( \sigma _i + \sigma _j - 2 \sum _{l=1}^{n} \sigma _lx_l\right) + o(R^{-1}). \end{aligned}$$

(146)

Therefore, for the covariance, we get

$$\begin{aligned} Cov[X_i,X_j] = Cov\left[ \frac{H_i}{r}, \frac{H_j}{r}\right] + o(R^{-1}), \end{aligned}$$

(147)

where

$$\begin{aligned} Cov\left[ \frac{H_i}{r}, \frac{H_j}{r}\right] = \frac{1}{R}x_i(\delta _{ij} - x_j)\left( \frac{1-\beta }{\beta }\right) + o (R^{-1}). \end{aligned}$$

(148)

As for the third moment, we have

$$\begin{aligned} E\left[ \left( \frac{Y_i}{r}\right) ^3\right] =&\frac{1}{r^3} \left( \frac{3 m_i^{(2)} r^{(2)}}{R^{(2)}}+\frac{m_i^{(3)} r^{(3)}}{R^{(3)}}+\frac{m_i r}{R}\right) \nonumber \\&+ \frac{R (R-r)}{r^3}S_{r,R}\Bigg ( \frac{m_i }{R^{(2)}} +\frac{6m_i^{(2)}(r-1) }{R^{(3)}} +\frac{3 m_i^{(3)}(r-1)^{(2)}}{R^{(4)}} \Bigg )\nonumber \\&\cdot \Bigg ( \omega _i - \sum _{l=1}^{n}\omega _l \frac{m_l}{R} \Bigg ) + o(|{\varvec{\omega }} - \mathbf{1}|), \end{aligned}$$

(149)

with the notation \(r^{(k)}=r (r-1)\cdots (r-k+1)\), where

$$\begin{aligned} \frac{3 m_i^{(2)} r^{(2)}}{r^3R^{(2)}} =&\frac{3x_i(x_i -1/R)(\beta -1/R)}{\beta ^2 R(1-1/R)} = o(1), \end{aligned}$$

(150)

$$\begin{aligned} \frac{m_i^{(3)} r^{(3)}}{r^3R^{(3)}} =&\frac{x_i(x_i -1/R)(x_i-2/R) \beta (\beta -1/R)(\beta -2/R)}{\beta ^3(1-1/R)(1-2/R)} = x_i^3 + o(1), \end{aligned}$$

(151)

$$\begin{aligned} \frac{m_i r}{r^3R} =&\frac{x_i}{(\beta R)^2} = o(1) , \end{aligned}$$

(152)

$$\begin{aligned} \frac{m_i }{rR^{(2)}} =&\frac{x_i }{\beta R (R-1)} = o(1), \end{aligned}$$

(153)

$$\begin{aligned} \frac{6m_i^{(2)}(r-1) }{rR^{(3)}} =&\frac{6x_i(x_i-1/R)(\beta -1/R) }{\beta R(1-1/R)(1-2/R)} = o(1) , \end{aligned}$$

(154)

$$\begin{aligned} \frac{3 m_i^{(3)}(r-1)^{(2)}}{rR^{(4)}} =&\frac{3 x_i (x_i-1/R)(x_i-2/R)(\beta -1/R)(\beta -2/R)}{\beta (1-1/R)(1-2/R)(1-3/R)} = 3 x_i^3 \beta + o(1) \end{aligned}$$

(155)

and

$$\begin{aligned} \frac{R (R-r)}{r^2}S_{r,R} = \frac{(1-\beta )}{\beta ^2} S_{\beta R,R} = \frac{ (1-\beta )}{\beta ^2}\ln \left( \frac{1}{1-\beta }\right) + o(1). \end{aligned}$$

(156)

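This yields

$$\begin{aligned} E\left[ X_i^3\right]&= E\left[ \left( \frac{H_i}{r}\right) ^3\right] + \frac{3 x_i^3 f (1-\beta )}{R\beta }\ln \left( \frac{1}{1-\beta }\right) \Bigg ( \sigma _i - \sum _{l=1}^{n}\sigma _l x_l \Bigg ) + o(R^{-1})\nonumber \\&= E\left[ \left( \frac{H_i}{r}\right) ^3\right] + \frac{3 x_i^3f }{R}C {{\tilde{\sigma }}_i} + o(R^{-1}), \end{aligned}$$

(157)

where the neutral part \(E[(H_i/r)^3]\), given by the first line of (149), equals \(x_i^3 + O(R^{-1})\) owing to (150), (151) and (152).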

Similarly, for the fourth moment, we have

$$\begin{aligned} E\left[ \left( \frac{Y_i}{r}\right) ^4\right]&= \frac{1}{r^4}\Bigg ( \frac{7 m_i^{(2)} r^{(2)}}{R^{(2)}}+\frac{6 m_i^{(3)} r^{(3)}}{R^{(3)}}+\frac{m_i^{(4)} r^{(4)}}{R^{(4)}}+\frac{m_i r}{R} \Bigg )\nonumber \\&\quad + \frac{R(R-r)}{r^5}S_{r,R} \Bigg ( \frac{m_i r}{R^{(2)}} +\frac{14 m_i^{(2)} r^{(2)}}{R^{(3)}} + \frac{ 18 m_i^{(3)} r^{(3)}}{R^{(4)}} + \frac{ 4m_i^{(4)} r^{(4)}}{R^{(5)}} \Bigg )\nonumber \\&\quad \cdot \Bigg ( \omega _i - \sum _{l=1}^{n}\omega _l \frac{m_l }{R} \Bigg ) + o(|{\varvec{\omega }} - \mathbf{1}|), \end{aligned}$$

(158)

where

$$\begin{aligned} \frac{7 m_i^{(2)} r^{(2)}}{r^4R^{(2)}} =\,&\frac{7 x_i(x_i-1/R)\beta (\beta -1/R)}{\beta ^4 R(R-1)} = o(1), \end{aligned}$$

(159)

$$\begin{aligned} \frac{6 m_i^{(3)} r^{(3)}}{r^4 R^{(3)}} =\,&\frac{6 x_i(x_i-1/R)(x_i-2/R)\beta (\beta -1/R)(\beta -2/R)}{\beta ^4 R(1-1/R)(1-2/R)} = o(1), \end{aligned}$$

(160)

$$\begin{aligned} \frac{m_i^{(4)} r^{(4)}}{r^4R^{(4)}} =\,&\frac{x_i(x_i-1/R)(x_i-2/R)(x_i-3/R) \beta (\beta -1/R)(\beta -2/R)(\beta -3/R)}{\beta ^4(1-1/R)(1-2/R)(1-3/R)}\nonumber \\ =\,&x_i^4 + o(1), \end{aligned}$$

(161)

$$\begin{aligned} \frac{m_i r}{r^4 R} =\,&\frac{x_i}{\beta ^3 R^3} = o(1), \end{aligned}$$

(162)

$$\begin{aligned} \frac{m_i r}{r^3R^{(2)}} =\,&\frac{x_i }{\beta ^2R^2(R-1)} = o(1), \end{aligned}$$

(163)

$$\begin{aligned} \frac{14 m_i^{(2)}r^{(2)}}{r^3R^{(3)}} =\,&\frac{14 x_i(x_i-1/R) (\beta -1/R)}{\beta ^2(R-1)(R-2)} = o(1), \end{aligned}$$

(164)

$$\begin{aligned} \frac{ 18 m_i^{(3)} r^{(3)}}{r^3R^{(4)}} =\,&\frac{ 18 x_i(x_i-1/R)(x_i-2/R) (\beta -1/R)(\beta -2/R)}{\beta ^2 R(1-1/R)(1-2/R)(1-3/R)} = o(1) \end{aligned}$$

(165)

and

$$\begin{aligned} \frac{ 4m_i^{(4)} r^{(4)}}{r^3R^{(5)}}&= \frac{ 4x_i(x_i-1/R)(x_i-2/R)(x_i-3/R) (\beta -1/R)(\beta -2/R)(\beta -3/R)}{\beta ^2(1-1/R)(1-2/R)(1-3/R)(1-4/R)}\nonumber \\&= 4x_i^4\beta + o(1) . \end{aligned}$$

(166)

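This yields

$$\begin{aligned} E\left[ X_i^4\right]&= E\left[ \left( \frac{H_i}{r}\right) ^4\right] +\frac{4 x_i^4 f(1-\beta )}{R\beta }\ln \left( \frac{1}{1-\beta }\right) \Bigg ( \sigma _i - \sum _{l=1}^{n}\sigma _l x_l \Bigg ) + o(R^{-1})\nonumber \\&= E\left[ \left( \frac{H_i}{r}\right) ^4\right] +\frac{4x_i^4 f}{R} C {{\tilde{\sigma }}_i} + o(R^{-1}), \end{aligned}$$

(167)

where the neutral part \(E[(H_i/r)^4]\), given by the first line of (158), equals \(x_i^4 + O(R^{-1})\) owing to (159), (160), (161) and (162).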

More generally, we expect

$$\begin{aligned} E[X_i^t] = E\left[ \left( \frac{H_i}{r}\right) ^t\right] +\frac{ tx_i^tf}{R}C{{\tilde{\sigma }}_i} + o(R^{-1}) \end{aligned}$$

(168)

for every integer \(t\ge 1\).

Finally, for the fourth central moment, note that the selective contribution to \(E[X_i^t]\) is \(t x_i^t (f/R) C{{\tilde{\sigma }}_i} + o(R^{-1})\) for \(t=1,2,3,4\) (see (141), (145) with \(j=i\), (157) and (167)), while the neutral part satisfies \(E[H_i/r]=x_i\) and \(E[(H_i/r)^t] = x_i^t + O(R^{-1})\). Collecting the neutral terms into the fourth central moment of \(H_i/r\), we have

$$\begin{aligned} E[(X_i - E[X_i])^4] =\,&E[X_i^4] - 4E[X_i^3]E[X_i] + 6E[X_i^2]E[X_i]^2 - 3E[X_i]^4\nonumber \\ =\,&E\left[ \left( \frac{H_i}{r} - x_i\right) ^4\right] + (4x_i^4- 16x_i^4+24x_i^4-12 x_i^4)\frac{f}{R}C{{\tilde{\sigma }}_i} + o(R^{-1}) \nonumber \\ =\,&E\left[ \left( \frac{H_i}{r} - x_i\right) ^4\right] + o(R^{-1}) \nonumber \\ =\,&o(R^{-1}), \end{aligned}$$

(169)

since the fourth central moment of the hypergeometric frequency \(H_i/r\) is of order \(R^{-2}\).
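To see numerically that the fourth central moment in (169) is negligible at order \(R^{-1}\), the sketch below takes two colors and weights of the form (135), and computes the exact law of \(Y_1\) by propagating it draw by draw. It prints \(R\,E[(X_1-E[X_1])^4]\), which should tend to zero roughly like \(R^{-1}\), so that each doubling of R approximately halves it. All parameter values (\(x_1=0.3\), \(\beta =0.4\), \(f=0.5\), \(\sigma =(1,-1)\)) and the function names are hypothetical.

```python
from collections import defaultdict


def wallenius_two_colors(m1, m2, w1, w2, r):
    """Exact law of Y_1 after r weighted draws without replacement from m1 + m2 balls."""
    law = {0: 1.0}
    for t in range(r):
        nxt = defaultdict(float)
        for y1, p in law.items():
            a = w1 * (m1 - y1)        # total weight of remaining color-1 balls
            b = w2 * (m2 - (t - y1))  # total weight of remaining color-2 balls
            if a > 0:
                nxt[y1 + 1] += p * a / (a + b)
            if b > 0:
                nxt[y1] += p * b / (a + b)
        law = nxt
    return law


def scaled_fourth_central_moment(R, x1=0.3, beta=0.4, f=0.5, sigma=(1.0, -1.0)):
    """R * E[(X_1 - E[X_1])^4] with X_1 = Y_1 / r and omega_i = 1 + f sigma_i / R."""
    m1, r = round(x1 * R), round(beta * R)
    w1, w2 = 1.0 + f * sigma[0] / R, 1.0 + f * sigma[1] / R
    law = wallenius_two_colors(m1, R - m1, w1, w2, r)
    mean = sum(p * y / r for y, p in law.items())
    return R * sum(p * (y / r - mean) ** 4 for y, p in law.items())


for R in (50, 100, 200, 400):
    print(R, scaled_fourth_central_moment(R))
```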

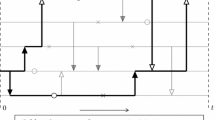

Appendix C: conditions for a diffusion approximation

1.1 Condition I

Using (15), we have

$$\begin{aligned} E_\mathbf{x} [ \mathbf{w}^T \mathbf{x}_i(1) ] = \sum _{k=1}^d w_k E_\mathbf{x} [ x_{i,k}(1) ] = \sum _{k=1}^{d}w_{k} (M \mathbf{x}_i)_k + \frac{1}{N} \sum _{k=1}^{d}w_{k}\phi _{i,k}(\mathbf{x}) + o(N^{-1}) \end{aligned}$$

(170)

with

$$\begin{aligned} \sum _{k=1}^{d}w_{k} (M \mathbf{x}_i)_k = \mathbf{w}^T M \mathbf{x}_i = \mathbf{w}^T \mathbf{x}_i \end{aligned}$$

(171)

owing to the fact that \( \mathbf{w}^T M = \mathbf{w}^T \). Then, using (44), we have

$$\begin{aligned} E_\mathbf{x} [\Delta z_i] = E_\mathbf{x} [ \mathbf{w}^T \mathbf{x}_i(1) - \mathbf{w}^T \mathbf{x}_i ] = \frac{1}{N} \sum _{k=1}^{d}w_{k}\phi _{i,k}(\mathbf{z } \mathbf{1 }^T + \mathbf{y}) + o(N^{-1}) \end{aligned}$$

(172)

as \(N\rightarrow +\infty \).

1.2 Condition II

We have

$$\begin{aligned} E_\mathbf{x} [(\Delta z_i)(\Delta z_j)] =E_\mathbf{x}[ z_i(1) z_j(1)] - z_iE_\mathbf{x} [ z_j(1)] - z_jE_\mathbf{x} [ z_i(1)] + z_i z_j \end{aligned}$$

(173)

with

$$\begin{aligned} E_\mathbf{x}[ z_i(1) z_j(1)] = Cov_\mathbf{x}[ z_{i}(1),z_{j}(1)] + E_\mathbf{x}[ z_i(1)]E_\mathbf{x}[ z_j(1)]. \end{aligned}$$

(174)

Owing to condition I, we have

$$\begin{aligned} E_\mathbf{x}[ z_i(1)]E_\mathbf{x}[ z_j(1)] - z_iE_\mathbf{x} [ z_j(1)] - z_jE_\mathbf{x} [ z_i(1)] + z_i z_j&=E_\mathbf{x} [(\Delta z_i)] E_\mathbf{x} [(\Delta z_j)]\nonumber \\&= o(N^{-1}). \end{aligned}$$

(175)

Therefore,

$$\begin{aligned} E_\mathbf{x} [(\Delta z_i)(\Delta z_j)] =Cov_\mathbf{x}[ z_{i}(1),z_{j}(1)] + o(N^{-1}). \end{aligned}$$

(176)

Since the variables \(x_{i,k}(1)\) and \(x_{j,l}(1)\) are conditionally independent given \(\mathbf{x}\) as soon as \(l\ne k\), we have

$$\begin{aligned} Cov_\mathbf{x}[ z_i(1) ,z_j(1)]&= Cov_\mathbf{x}\left[ \sum _{k=1}^d w_k x_{i,k}(1) , \sum _{k=1}^d w_k x_{j,k}(1)\right] \nonumber \\&= \sum _{k=1}^d w_k^2 Cov_\mathbf{x}[ x_{i,k}(1),x_{j,k}(1)] . \end{aligned}$$

(177)

Since the vector \(N_1(x_{1,1}(1),\ldots ,x_{n,1}(1))\) has a multinomial distribution with parameters \(N_1\) and \(P_{1}(\mathbf{x}),\ldots , P_{n}(\mathbf{x})\), the covariances of the frequencies \(x_{1,1}(1),\ldots ,x_{n,1}(1)\) are given by

$$\begin{aligned} Cov_\mathbf{x}[ x_{i,1}(1),x_{j,1}(1)]=-\frac{1}{N_1}P_i(\mathbf{x})P_j(\mathbf{x}) = -\frac{1}{N_1} (M\mathbf{x}_i)_1(M\mathbf{x}_j)_1 + o(N^{-1}) \end{aligned}$$

(178)

and

$$\begin{aligned} Cov_\mathbf{x}[ x_{i,1}(1),x_{i,1}(1)]=&\frac{1}{N_1}P_i(\mathbf{x})(1-P_i(\mathbf{x})) \nonumber \\ =&\frac{1}{N_1} (M\mathbf{x}_i)_1(1- (M\mathbf{x}_i)_1) + o(N^{-1}) \end{aligned}$$

(179)

for \(i,j =1,\ldots ,n\) with \(i\ne j\), using Eq. (10). Therefore, by (15), we have

$$\begin{aligned} Cov_\mathbf{x}[ x_{i,1}(1),x_{j,1}(1)]= \frac{1}{Nf_1}(M\mathbf{x}_i)_1 \left( \delta _{ij}- (M\mathbf{x}_j)_1 \right) + o(N^{-1}) \end{aligned}$$

(180)

with \(\delta _{ij} = 0\) if \(i\ne j\) and \(\delta _{ii}=1,\) for \(i,j=1,\ldots ,n\). For \(k =2,\ldots ,d\), and with reference to “Appendix B” (with \(R=N_{k-1}\) and \(\beta = f_k/f_{k-1}\)), we have

$$\begin{aligned} Cov_\mathbf{x}[x_{i,k}(1),x_{j,k}(1)]&= \frac{1}{N}x_{i,k-1}(\delta _{ij} - x_{j,k-1})\left( \frac{1}{f_{k}} - \frac{1}{f_{k-1}}\right) + o (N^{-1})\nonumber \\&=\frac{1}{N} (M\mathbf{x}_i)_k (\delta _{ij} - (M\mathbf{x}_j)_k )\left( \frac{1}{f_{k}} - \frac{1}{f_{k-1}}\right) + o (N^{-1}). \end{aligned}$$

(181)

Therefore, condition II holds with

$$\begin{aligned} a_{i,j}(\mathbf{z},\mathbf{y}) = \sum _{k=1}^d w_k^2 ( (M\mathbf{y}_i)_k + z_i ) (\delta _{ij} - (M\mathbf{y}_j)_k - z_j)\left( \frac{1}{f_{k}} - \frac{1}{f_{k-1}}\right) \end{aligned}$$

(182)

using the convention that \(1/f_0 = 0\).

1.3 Condition III

We start with

$$\begin{aligned}&E_\mathbf{x} [(\Delta z_i)^4] - E_\mathbf{x} [( z_i (1)-E_\mathbf{x} [ z_i (1)] )^4]\nonumber \\&\quad =E_\mathbf{x} [( z_i(1)-z_i)^4] - E_\mathbf{x} [( z_i (1)-E_\mathbf{x}[ z_i (1)] )^4]\nonumber \\&\quad =E_\mathbf{x} [ z_i(1)^4] - 4 z_iE_\mathbf{x} [ z_i(1)^3] + 6 z_i^2E_\mathbf{x} [ z_i(1)^2] -4 z_i^3E_\mathbf{x} [ z_i(1)] + z_i^4\nonumber \\&\qquad - \Big ( E_\mathbf{x} [ z_i(1)^4] - 4 E_\mathbf{x} [ z_i (1)]E_\mathbf{x} [ z_i(1)^3] + 6 E_\mathbf{x} [ z_i (1)]^2E_\mathbf{x} [ z_i(1)^2]\nonumber \\&\qquad -4 E_\mathbf{x} [ z_i (1)]^3E_\mathbf{x} [ z_i(1)] + E_\mathbf{x} [ z_i (1)]^4\Big )\nonumber \\&\quad =- 4 z_iE_\mathbf{x} [ z_i(1)^3] + 6 z_i^2E_\mathbf{x} [ z_i(1)^2] -4 z_i^3E_\mathbf{x} [ z_i(1)] + z_i^4\nonumber \\&\qquad +4 E_\mathbf{x} [ z_i (1)]E_\mathbf{x} [ z_i(1)^3] - 6 E_\mathbf{x} [ z_i (1)]^2E_\mathbf{x} [ z_i(1)^2]+3 E_\mathbf{x} [ z_i (1)]^4\nonumber \\&\quad =4E_\mathbf{x} [ z_i(1)^3 ] ( E_\mathbf{x} [ z_i (1)]- z_i ) +6 E_\mathbf{x} [ z_i(1)^2] ( z_i^2 - E_\mathbf{x} [ z_i (1)]^2)\nonumber \\&\qquad -4 z_i^3E_\mathbf{x} [ z_i(1)] + z_i^4 +3 E_\mathbf{x} [ z_i (1)]^4. \end{aligned}$$

(183)

From conditions I and II, we have

$$\begin{aligned} E_\mathbf{x}\, [\Delta z_i]&= b_i \, (\mathbf{z},\mathbf{y})\, N^{-1} + o\left( N^{-1}\right) , \end{aligned}$$

(184a)

$$\begin{aligned} E_\mathbf{x} [(\Delta z_i )^2]&= a_{i,i}(\mathbf{z},\mathbf{y}) N^{-1} + o\left( N^{-1}\right) , \end{aligned}$$

(184b)

from which

$$\begin{aligned}&E_\mathbf{x} [(\Delta z_i)^4] - E_\mathbf{x} [( z_i (1)-E_\mathbf{x} [ z_i (1)] )^4] \nonumber \\&\quad =4E_\mathbf{x} [ z_i(1)^3 ] ( b_i(\mathbf{z},\mathbf{y})N^{-1} ) +6 (z_i^2 + 2z_ib_i(\mathbf{z},\mathbf{y})N^{-1}\nonumber \\&\qquad + a_{i,i}(\mathbf{z},\mathbf{y}) N^{-1} )( -2z_ib_i(\mathbf{z},\mathbf{y})N^{-1})\nonumber \\&\qquad -4 z_i^3(z_i + b_i(\mathbf{z},\mathbf{y})N^{-1} ) + z_i^4 +3 ( z_i^4 + 4z_i^3b_i(\mathbf{z},\mathbf{y})N^{-1} ) + o\left( N^{-1}\right) \nonumber \\&\quad =4 b_i(\mathbf{z},\mathbf{y})N^{-1} (E_\mathbf{x} [ z_i(1)^3 ] - z_i^3) + o\left( N^{-1}\right) . \end{aligned}$$

(185)

But we have

$$\begin{aligned} E_\mathbf{x} [ z_i(1)^3 ] - z_i^3 = o(1) \end{aligned}$$

(186)

since \(z_i (1) = \sum \limits _{k=1}^d w_k x_{i,k} (1)\) with \(x_{i,k}(1), x_{i,l}(1), x_{i,m}(1)\) being conditionally independent for k, l, m all different, and satisfying, for \(t=1,2,3\),

$$\begin{aligned} E_\mathbf{x} [ x_{i,1}(1)^t ]&= P_i(\mathbf{x})^t + o(1)\nonumber \\&= \left( \sum _{l=1}^d p_l x_{i,l} + o(1) \right) ^t + o(1)\nonumber \\&= \left( \sum _{l=1}^d p_l x_{i,l} \right) ^t + o(1) \nonumber \\&= \left( (M \mathbf{x}_i)_1 \right) ^t + o(1), \end{aligned}$$

(187)

since \(N_1 x_{i,1}(1)\) has a conditional binomial distribution with parameters \(N_1\) and \(P_i(\mathbf{x})\) given in (9), and

$$\begin{aligned} E_\mathbf{x} [ x_{i,k}(1)^t ] = x_{i,k-1}^t + o(1) = \left( (M \mathbf{x}_i)_k \right) ^t + o(1) \end{aligned}$$

(188)

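for \(k=2,\ldots ,d\), as shown in “Appendix B”. Indeed, expanding the cube and using the conditional independence of \(x_{i,k}(1)\), \(x_{i,l}(1)\) and \(x_{i,m}(1)\) for distinct k, l, m, we have, with the multinomial coefficients \(c_2=3\) and \(c_3=6\),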

$$\begin{aligned} E_\mathbf{x} [ z_i(1)^3 ]&= E_\mathbf{x} \left[ \left( \sum _{k=1}^d w_k x_{i,k}(1) \right) ^3 \right] \nonumber \\&= \sum _{k=1}^d w_k^3 E_\mathbf{x} \left[ \left( x_{i,k}(1) \right) ^3 \right] + c_2 \sum _{k=1}^d \sum _{l=1, l \ne k}^d w_k^2 w_l E_\mathbf{x} \left[ \left( x_{i,k}(1) \right) ^2\right] E_\mathbf{x} \left[ x_{i,l}(1) \right] \nonumber \\&\quad + c_3 \sum _{k=1}^d \sum _{l> k}^d \sum _{m> l}^d w_k w_l w_m E_\mathbf{x} \left[ x_{i,k}(1) \right] E_\mathbf{x} \left[ x_{i,l}(1)\right] E_\mathbf{x} \left[ x_{i,m}(1) \right] \nonumber \\&= \sum _{k=1}^d w_k^3 \left( (M \mathbf{x}_i)_k \right) ^3 + c_2 \sum _{k=1}^d \sum _{l=1,l \ne k}^d w_k^2 w_l \left( (M \mathbf{x}_i)_k \right) ^2 (M \mathbf{x}_i)_l \nonumber \\&\quad + c_3 \sum _{k=1}^d \sum _{l> k}^d \sum _{m > l}^d w_k w_l w_m (M \mathbf{x}_i)_k (M \mathbf{x}_i)_l (M \mathbf{x}_i)_m +o(1)\nonumber \\&= \left( \sum _{k=1}^d w_k (M \mathbf{x}_i)_k \right) ^3 +o(1)\nonumber \\&= \left( \sum _{k=1}^d w_k x_{i,k} \right) ^3 +o(1) \nonumber \\&= z_i^3 +o(1). \end{aligned}$$

(189)
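Once conditional independence has factored the expectations and the moments have been replaced by powers of \((M \mathbf{x}_i)_k\), the recombination in (189) rests on the multinomial expansion of a cube with \(c_2 = 3\) and \(c_3 = 6\). The sketch below checks that expansion exactly. Here `a` stands for the vector of limits \((M\mathbf{x}_i)_k\), and the random weights and values are illustrative test data only.

```python
from fractions import Fraction
from itertools import combinations, permutations
import random

C2, C3 = 3, 6  # multinomial coefficients of the cube expansion used in (189)

def cube_expansion(w, a):
    """Expand (sum_k w_k a_k)^3 term by term, following the pattern of (189).

    a_k plays the role of (M x_i)_k, the common limit of the conditional moments
    in (187)-(188).
    """
    d = len(w)
    diag = sum(w[k] ** 3 * a[k] ** 3 for k in range(d))
    pairs = sum(w[k] ** 2 * w[l] * a[k] ** 2 * a[l]
                for k, l in permutations(range(d), 2))        # ordered pairs k != l
    triples = sum(w[k] * w[l] * w[m] * a[k] * a[l] * a[m]
                  for k, l, m in combinations(range(d), 3))   # k < l < m
    return diag + C2 * pairs + C3 * triples

rng = random.Random(1)
for d in range(1, 7):
    w = [Fraction(rng.randint(1, 9), 10) for _ in range(d)]
    a = [Fraction(rng.randint(-9, 9), 10) for _ in range(d)]
    assert cube_expansion(w, a) == sum(wk * ak for wk, ak in zip(w, a)) ** 3
```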

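Here, the next-to-last equality in (189) uses \(\mathbf{w}^T M = \mathbf{w}^T\), which follows from Eq. (28), since \(\lim _{t\rightarrow +\infty } M^{t} = \mathbf{1}\mathbf{w}^T\) implies \(\mathbf{1}\mathbf{w}^T M = \mathbf{1}\mathbf{w}^T\). Therefore, by (185) and (186),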

$$\begin{aligned} E_\mathbf{x} [(\Delta z_i)^4] = E_\mathbf{x} [( z_i (1)-E_\mathbf{x} [ z_i (1)] )^4] + o( N^{-1}). \end{aligned}$$

(190)

Furthermore,

$$\begin{aligned} E_\mathbf{x}\left[ \left( z_i (1) - E_\mathbf{x}[z_i (1)]\right) ^4\right]&= E_\mathbf{x}\left[ \left( \sum _{k=1}^d w_k(x_{i,k} (1) -E_\mathbf{x}[ x_{i,k} (1) ] ) \right) ^4\right] \nonumber \\&\le E_\mathbf{x}\left[ \sum _{k=1}^d w_k\left( x_{i,k} (1) -E_\mathbf{x}[ x_{i,k} (1)]\right) ^4\right] \nonumber \\&= \sum _{k=1}^d w_kE_\mathbf{x}\left[ \left( x_{i,k} (1) -E_\mathbf{x}[ x_{i,k} (1) ]\right) ^4\right] . \end{aligned}$$

(191)

The inequality follows from Jensen's inequality for the convex function \(s\mapsto s^4\), applied to the values \(a_k =x_{i,k} (1) -E_\mathbf{x}[ x_{i,k} (1) ]\) with the positive weights \(w_k\), \(k=1,\ldots ,d\), which sum to 1.
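The Jensen step in (191) can be illustrated with exact arithmetic: for positive weights summing to 1, the gap \(\sum_k w_k a_k^4 - (\sum_k w_k a_k)^4\) is never negative. In the minimal sketch below, the helper `jensen_gap` and the random weights and values are hypothetical test data, not model output.

```python
from fractions import Fraction
import random

def jensen_gap(w, a):
    """sum_k w_k a_k^4 - (sum_k w_k a_k)^4, the gap in inequality (191).

    Nonnegative whenever the w_k are nonnegative and sum to 1, because s -> s^4 is convex.
    """
    mean = sum(wk * ak for wk, ak in zip(w, a))
    return sum(wk * ak ** 4 for wk, ak in zip(w, a)) - mean ** 4

rng = random.Random(2)
for _ in range(500):
    d = rng.randint(1, 6)
    raw = [rng.randint(1, 10) for _ in range(d)]
    w = [Fraction(r, sum(raw)) for r in raw]                      # positive weights summing to 1
    a = [Fraction(rng.randint(-50, 50), 100) for _ in range(d)]  # arbitrary test values
    assert jensen_gap(w, a) >= 0
```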

For the first age class \((k=1)\), whose size is \(N_1=f_1N\), the fourth central moment of the binomial distribution of \(N_1x_{i,1}(1)\) gives

$$\begin{aligned}&E_\mathbf{x}\left[ \left( x_{i,1} (1) -E_\mathbf{x}[ x_{i,1} (1) ]\right) ^4\right] \nonumber \\&= \frac{P_i(\mathbf{x})(1-P_i(\mathbf{x}))}{N_1^2} \left( {3P_i(\mathbf{x})(1-P_i(\mathbf{x}))} + \frac{1-6P_i(\mathbf{x})+6P_i(\mathbf{x})^2}{N_1} \right) \nonumber \\&= o\left( N^{-1}\right) . \end{aligned}$$

(192)
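Both binomial facts used for the first age class, namely raw moments of \(x_{i,1}(1)\) close to \(P_i(\mathbf{x})^t\) as in (187) and the closed form (192) for the fourth central moment, can be checked exactly from the probability mass function. In the sketch below, `n` stands for \(N_1\) and `p` for \(P_i(\mathbf{x})\); the function names and the chosen values of `p` and `n` are illustrative assumptions.

```python
from fractions import Fraction
from math import comb

def proportion_moments(n, p):
    """Exact moments of X = K/n with K ~ Binomial(n, p).

    Returns (E[X], E[X^2], E[X^3], E[(X - p)^4]); n plays the role of N_1, p of P_i(x).
    """
    pmf = [comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)]
    raw = [sum(q * Fraction(k, n) ** t for k, q in enumerate(pmf)) for t in (1, 2, 3)]
    central4 = sum(q * (Fraction(k, n) - p) ** 4 for k, q in enumerate(pmf))
    return raw[0], raw[1], raw[2], central4

def central4_closed_form(n, p):
    """Right-hand side of (192) with N_1 = n and P_i(x) = p."""
    return p * (1 - p) / n ** 2 * (3 * p * (1 - p) + (1 - 6 * p + 6 * p ** 2) / n)

p = Fraction(3, 10)
for n in (1, 2, 5, 10, 40):
    m1, m2, m3, c4 = proportion_moments(n, p)
    assert c4 == central4_closed_form(n, p)
    # Raw moments differ from p^t by O(1/n), as used in (187).
    assert m1 == p
    assert m2 - p ** 2 == p * (1 - p) / n
```

Since (192) is of order \(N^{-2}\), multiplying it by \(N\) still gives a quantity that vanishes as \(N\rightarrow +\infty \).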

On the other hand, for the following age classes \((k=2,\dots , d)\), we have

$$\begin{aligned} E_\mathbf{x}\left[ \left( x_{i,k} (1) -E_\mathbf{x}[ x_{i,k} (1) ]\right) ^4\right] = o\left( N^{-1}\right) \end{aligned}$$

(193)

as shown in “Appendix B” (Eq. (169) with \(X_i = x_{i,k} (1)\) and \(R=N_{k-1}=f_{k-1}N\)).

1.4 Condition IV

We have

$$\begin{aligned} E_\mathbf{x}\left[ \left( y_{i,k} (1) - y_{i,k}\right) \right] = E_\mathbf{x}\left[ \left( x_{i,k} (1) - x_{i,k}\right) \right] + o\left( 1\right) \end{aligned}$$

(194)

by condition I. Moreover, for the first age class (\(k=1\)), we have

$$\begin{aligned} E_\mathbf{x}\left[ x_{i,1} (1)\right] = \sum _{k=1}^d p_k x_{i,k} + o\left( 1\right) , \end{aligned}$$

(195)

from which

$$\begin{aligned} c_{i,1}(\mathbf{z},\mathbf{y}) = \sum _{k=1}^d p_k x_{i,k} -x_{i,1}, \end{aligned}$$

(196)

while for the following age classes (\(k = 2, \ldots , d\)), we have

$$\begin{aligned} E_\mathbf{x}\left[ x_{i,k} (1)\right] = x_{i,k-1} + o\left( N^{-1}\right) , \end{aligned}$$

(197)

from which

$$\begin{aligned} c_{i,k}(\mathbf{z},\mathbf{y}) = x_{i,k-1} - x_{i,k}. \end{aligned}$$

(198)

Therefore, we get condition IV with

$$\begin{aligned} c_{i,k}(\mathbf{z},\mathbf{y}) = (M \mathbf{x}_i)_k - x_{i,k} = (M \mathbf{y}_i)_k - y_{i,k}, \end{aligned}$$

(199)

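where M is the matrix defined in (14). The second equality in (199) holds because \(\mathbf{y}_i = \mathbf{x}_i - z_i\mathbf{1}\) and \(M\mathbf{1} = \mathbf{1}\).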
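Reading off (195) to (198), M acts as \((M\mathbf{v})_1 = \sum_k p_k v_k\) and \((M\mathbf{v})_k = v_{k-1}\) for \(k\ge 2\). The sketch below assumes this is the matrix of (14), with a hypothetical vector p of probabilities, and checks exactly that \(M\mathbf{1}=\mathbf{1}\) and that the drift \(c_{i,k}\) is the same whether computed from \(\mathbf{x}_i\) or from \(\mathbf{y}_i\). The weights \(\mathbf{w}\) are obtained by solving \(\mathbf{w}^T M = \mathbf{w}^T\) for this particular M, which gives \(w_j\) proportional to \(p_j+\cdots +p_d\). All function names are illustrative.

```python
from fractions import Fraction

def build_M(p):
    """Matrix with (M v)_1 = sum_k p_k v_k and (M v)_k = v_{k-1} for k >= 2.

    Assumption: this is the form of the matrix defined in (14); p is hypothetical.
    """
    d = len(p)
    M = [[Fraction(0)] * d for _ in range(d)]
    M[0] = [Fraction(pk) for pk in p]
    for k in range(1, d):
        M[k][k - 1] = Fraction(1)
    return M

def matvec(M, v):
    return [sum(m * vj for m, vj in zip(row, v)) for row in M]

def left_fixed_vector(p):
    """w with w^T M = w^T and sum_k w_k = 1; here w_j is proportional to p_j + ... + p_d."""
    tails = [sum(p[j:]) for j in range(len(p))]
    return [Fraction(t) / sum(tails) for t in tails]

def drift(M, v):
    """c_k = (M v)_k - v_k, as in (199)."""
    return [a - b for a, b in zip(matvec(M, v), v)]

p = [Fraction(1, 5), Fraction(1, 2), Fraction(3, 10)]  # hypothetical p_k
M = build_M(p)
w = left_fixed_vector(p)
x = [Fraction(1, 10), Fraction(3, 5), Fraction(2, 5)]  # hypothetical frequencies x_{i,k}
z = sum(wk * xk for wk, xk in zip(w, x))               # z_i = w^T x_i
y = [xk - z for xk in x]                               # y_i = x_i - z_i 1

assert matvec(M, [Fraction(1)] * 3) == [1, 1, 1]       # M 1 = 1
assert drift(M, x) == drift(M, y)                      # second equality in (199)
```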

1.5 Condition V

According to (47), we have

$$\begin{aligned} Var_\mathbf{x}\left[ \Delta y_{i,k} \right] = Var_\mathbf{x}\left[ \Delta x_{i,k}\right] + Var_\mathbf{x}\left[ \Delta z_{i} \right] - 2\,Cov_\mathbf{x}\left[ \Delta x_{i,k} ,\Delta z_{i} \right] . \end{aligned}$$

(200)

Owing to (181) and (180), we have that

$$\begin{aligned} Var_\mathbf{x}\left[ \Delta x_{i,k} \right] =o(1), \end{aligned}$$

(201)

and, owing to (48a) and (48b), that

$$\begin{aligned} Var_\mathbf{x}\left[ \Delta z_{i} \right] =o(1). \end{aligned}$$

(202)

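On the other hand, for \(i=1,\ldots ,n\) and \(k,l=1,\ldots , d\) with \(l\ne k\), the random variables \(x_{i,k}(1)\) and \(x_{i,l}(1)\) are conditionally independent. Since \(\Delta z_i = \sum _{l=1}^d w_l \Delta x_{i,l}\), this gives

$$\begin{aligned} Cov_\mathbf{x}\left[ \Delta x_{i,k},\Delta z_{i} \right] = w_kCov_\mathbf{x}\left[ x_{i,k} (1),x_{i,k} (1)\right] = w_kVar_\mathbf{x}\left[ x_{i,k} (1)\right] = o(1), \end{aligned}$$

(203)

owing to (180) and (181). Hence, by (200), (201) and (202), \(Var_\mathbf{x}\left[ \Delta y_{i,k} \right] = o(1)\).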

1.6 Condition VI

Owing to (199), the recurrence system of equations (50) becomes

$$\begin{aligned} Y_{i,k}(t+1,\mathbf{z},\mathbf{{y}}) = (M \mathbf{Y}_i(t,\mathbf{z},\mathbf{{y}}))_k \end{aligned}$$

(204)

with

$$\begin{aligned} \mathbf{Y}_i(t,\mathbf{z},\mathbf{{y}}) = ( Y_{i,1}(t,\mathbf{z},\mathbf{{y}}), \ldots , Y_{i,d}(t,\mathbf{z},\mathbf{{y}}))^T. \end{aligned}$$

(205)

Then

$$\begin{aligned} \mathbf{Y}_{i}(t+1,\mathbf{z},\mathbf{{y}}) = M^{t+1} \mathbf{Y}_i(0,\mathbf{z},\mathbf{{y}}) =M^{t+1} \mathbf{{y}}_i \end{aligned}$$

(206)

for all integers \(t\ge 0\). According to Eq. (28), we have

$$\begin{aligned} \lim \limits _{t\rightarrow +\infty } M^{t} = \mathbf{1}\mathbf{w}^T \end{aligned}$$

(207)

entrywise. Moreover,

$$\begin{aligned} \mathbf{w}^T \mathbf{{y}}_i = \mathbf{w}^T\left( \mathbf{x}_i - z_i\mathbf{1}\right) = \mathbf{w}^T\left( \mathbf{x}_i - ( \mathbf{w}^T \mathbf{x}_i) \mathbf{1} \right) =\mathbf{w}^T \mathbf{x}_i - ( \mathbf{w}^T \mathbf{x}_i) \mathbf{w}^T\mathbf{1} = 0, \end{aligned}$$

(208)

since \(\mathbf{w}^T\mathbf{1} = 1\). Therefore,

$$\begin{aligned} \lim \limits _{t\rightarrow + \infty } \mathbf{Y}_{i}(t,\mathbf{z},\mathbf{{y}}) = \mathbf{0} \end{aligned}$$

(209)

uniformly in \(\mathbf{z} \in \mathbb {R}^n \) and \(\mathbf{y} \in \mathbb {R}^n\times \mathbb {R}^d \) for \(i = 1,\ldots ,n\) by linearity.
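The convergence (209) can be illustrated numerically by iterating (206) for a small example. The sketch below assumes, as suggested by (195) to (198), that M has first row p and sends \(v_{k-1}\) to position k; the vector p (with \(p_1>0\) and \(p_d>0\), so that M is primitive), the frequencies and the number of steps are hypothetical choices. Starting from \(\mathbf{y}_i = \mathbf{x}_i - z_i\mathbf{1}\), which satisfies \(\mathbf{w}^T\mathbf{y}_i = 0\) as in (208), the iterates shrink towards \(\mathbf{0}\).

```python
def iterate(M, v, t):
    """Return M^t v by repeated matrix-vector products, as in (206)."""
    for _ in range(t):
        v = [sum(m * vj for m, vj in zip(row, v)) for row in M]
    return v

# Hypothetical example: first row p, then (M v)_k = v_{k-1}.
p = [0.2, 0.5, 0.3]
d = len(p)
M = [p[:]] + [[1.0 if j == k - 1 else 0.0 for j in range(d)] for k in range(1, d)]

tails = [sum(p[j:]) for j in range(d)]
w = [tj / sum(tails) for tj in tails]          # w^T M = w^T and sum_k w_k = 1 for this M

x = [0.1, 0.6, 0.4]                            # hypothetical x_i
z = sum(wk * xk for wk, xk in zip(w, x))       # z_i = w^T x_i
y = [xk - z for xk in x]                       # y_i = x_i - z_i 1, so w^T y_i = 0

Y = iterate(M, y, 200)                         # Y_i(200, z, y) = M^200 y_i
print(max(abs(v) for v in Y))                  # close to 0, illustrating (209)
```

The same routine applied to a unit vector \(\mathbf{e}_j\) approximates the j-th column of \(M^t\), whose entries approach \(w_j\), in line with (207).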

Appendix D: analysis of U(f) with selection on fertility only

With the change of variable \(f = (3-y)/2\), we get

$$\begin{aligned} V(y)=U(f(y)) =\frac{\frac{4}{(3-y)y}}{1-\exp \left\{ -\frac{(1+y)}{y}\right\} } \end{aligned}$$

(210)

for \(1<y<2\). The derivative of V(y) is given by

$$\begin{aligned} V'(y) = \frac{4 e^{\frac{1}{y}+1} \left( -2 (y-1) y+e^{\frac{1}{y}+1} (2 y-3) y+3\right) }{\left( e^{\frac{1}{y}+1}-1\right) ^2 (y-3)^2 y^3}. \end{aligned}$$

(211)

In particular, we have

$$\begin{aligned} V'(1) = \frac{e^2 \left( 3-e^2\right) }{\left( e^2-1\right) ^2} < 0, \quad V'(2) = \frac{e^{3/2} \left( 2 e^{3/2}-1\right) }{2 \left( e^{3/2}-1\right) ^2} > 0. \end{aligned}$$

(212)
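The closed forms (210) to (212) lend themselves to a quick numerical check: the derivative (211) can be compared with finite differences of (210), and a zero of \(V'\) on \((1,2)\) can be located by bisection, which is a constructive form of the intermediate value theorem. The helper names, tolerance and step size in this sketch are illustrative choices, not part of the original analysis.

```python
import math

def V(y):
    """V(y) = U(f(y)) from (210), for 1 <= y <= 2."""
    return (4.0 / ((3.0 - y) * y)) / (1.0 - math.exp(-(1.0 + y) / y))

def V_prime(y):
    """Closed-form derivative (211)."""
    E = math.exp(1.0 / y + 1.0)
    num = 4.0 * E * (-2.0 * (y - 1.0) * y + E * (2.0 * y - 3.0) * y + 3.0)
    return num / ((E - 1.0) ** 2 * (y - 3.0) ** 2 * y ** 3)

def bisect_root(g, lo, hi, tol=1e-12):
    """Locate a zero of a continuous g with g(lo) * g(hi) <= 0 by bisection."""
    g_lo = g(lo)
    if g_lo * g(hi) > 0:
        raise ValueError("g does not change sign on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        g_mid = g(mid)
        if g_lo * g_mid <= 0:
            hi = mid                 # sign change in [lo, mid]
        else:
            lo, g_lo = mid, g_mid    # sign change in [mid, hi]
    return 0.5 * (lo + hi)

# Endpoint values (212).
e = math.e
assert math.isclose(V_prime(1.0), e**2 * (3 - e**2) / (e**2 - 1) ** 2)
assert math.isclose(V_prime(2.0), e**1.5 * (2 * e**1.5 - 1) / (2 * (e**1.5 - 1) ** 2))

y_star = bisect_root(V_prime, 1.0, 2.0)
f_star = (3.0 - y_star) / 2.0        # corresponding critical point of U(f)
```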

Since V'(y) is continuous on [1, 2], the intermediate value theorem yields a real number \(y^{*} \in (1,2) \) such that \(V'(y^{*}) = 0\). Furthermore, the second derivative of V(y), given by

$$\begin{aligned} V''(y)=\frac{4 e^{\frac{1}{y}+1} A(y) }{\left( e^{\frac{1}{y}+1}-1\right) ^3 (y-3)^3 y^5} \end{aligned}$$

(213)

is a positive function with

$$\begin{aligned} A(y)&= -6 e^{\frac{2}{y}+2} ((y-3) y+3) y^2 +e^{\frac{1}{y}+1} (y (y (6 y (2 y-5)+5)+42)-9)\nonumber \\&\quad -9 - 30 y + 11 y^2 + 12 y^3 - 6 y^4 \end{aligned}$$

(214)

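being negative for \(1<y<2\) (see below); positivity follows because the factor \((y-3)^3\) makes the denominator in (213) negative on this interval. Then V'(y) is strictly increasing for \(1<y<2\), so the critical point \(y^{*}\) is unique, and it is the global minimum point of V(y) on this interval. Since \(f=(3-y)/2\) is a strictly decreasing affine function of y, \(f^{*} = (3-y^{*})/2\) is the only critical point of U(f) for \(1/2<f<1\), and it is the global minimum point of U(f) on this interval.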

It remains to prove that \(A(y) < 0\) for \(1<y<2\). Note that

$$\begin{aligned}&-6 e^{\frac{2}{y}+2} ((y-3) y+3) y^2 +e^{\frac{1}{y}+1} (y (y (6 y (2 y-5)+5)+42)-9)\nonumber \\&\quad = e^{\frac{1}{y}+1} \left( -6 y^2 e^{\frac{1}{y}+1} ((y-3) y+3) -9 + 42 y + 5 y^2 - 30 y^3 + 12 y^4 \right) \nonumber \\&\quad = e^{\frac{1}{y}+1} \left( -6 y^2 e^{\frac{1}{y}+1} ((y-3) y+3) +y^2\left( 5 - 30 y + 12 y^2\right) -9 + 42 y \right) \nonumber \\&\quad = e^{\frac{1}{y}+1} \left( -6 y^2 ((y-3) y+3) \left( e^{\frac{1}{y}+1} - 4\right) -12 y^4+42 y^3-67 y^2+42 y-9 \right) \end{aligned}$$

(215)

is negative, since \(e^{\frac{1}{y}+1}-4> e^{3/2}-4 \approx 0.48 > 0\) for \(1<y<2\), while \(-12 y^4+42 y^3-67 y^2+42 y-9 <0\) and \( (y-3) y+3>0\), neither polynomial having a real root. Note also that the polynomial \(-9 - 30 y + 11 y^2 + 12 y^3 - 6 y^4\) in (214) has only two real roots, namely \(y \approx -1.38072\) and \(y \approx -0.281155\). Since it goes to \(-\infty \) as \(y\rightarrow +\infty \), it is negative for all \(y > -0.281155\), and in particular for \(1<y<2\). Both parts of A(y) being negative, we conclude that \(A(y)<0\) for \(1<y<2\).
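As a numerical companion to this sign argument, the sketch below evaluates A(y) from (214) on a fine grid of \((1,2)\), checks that the regrouping of the exponential part in (215) agrees with it, and confirms that both parts and the second derivative (213) have the expected signs. A grid check is only an illustration, not a substitute for the proof; the grid spacing and function names are illustrative choices.

```python
import math

def A(y):
    """A(y) as written in (214)."""
    E = math.exp(1.0 / y + 1.0)
    return (-6.0 * E**2 * ((y - 3.0) * y + 3.0) * y**2
            + E * (y * (y * (6.0 * y * (2.0 * y - 5.0) + 5.0) + 42.0) - 9.0)
            - 9.0 - 30.0 * y + 11.0 * y**2 + 12.0 * y**3 - 6.0 * y**4)

def A_split(y):
    """Return (exponential part regrouped as in (215), polynomial part of (214))."""
    E = math.exp(1.0 / y + 1.0)
    q = (y - 3.0) * y + 3.0                                        # positive quadratic
    r = -12.0 * y**4 + 42.0 * y**3 - 67.0 * y**2 + 42.0 * y - 9.0  # negative quartic
    s = -9.0 - 30.0 * y + 11.0 * y**2 + 12.0 * y**3 - 6.0 * y**4   # quartic in (214)
    return E * (-6.0 * y**2 * q * (E - 4.0) + r), s

def V_second(y):
    """Second derivative (213)."""
    E = math.exp(1.0 / y + 1.0)
    return 4.0 * E * A(y) / ((E - 1.0) ** 3 * (y - 3.0) ** 3 * y ** 5)

grid = [1.0 + k / 1000.0 for k in range(1, 1000)]   # interior points of (1, 2)
assert all(A(y) < 0.0 and V_second(y) > 0.0 for y in grid)
```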

Appendix E: derivative of U(f) with selection on survival

With selection on survival only, we have

$$\begin{aligned}&U'(f) \nonumber \\&\quad =\frac{2 A \left( (2 f-3) \left( 4 f^2 \left( A-2\right) +3 A+f \left( 14-4 A\right) -7\right) L+2 f P L^2+\left( A-1\right) (3-2 f)^2\right) }{f^2 (2 f-3)^3 \left( A-1\right) ^2}, \end{aligned}$$

(216)

while with selection on both survival and fertility, we have

$$\begin{aligned}&U'(f) \nonumber \\&\quad = \frac{2 B \left( L \left( Q B -8 f^3+32 f^2-36 f+21\right) +2 (f-1) \left( (6 f-9) B -8 f+15\right) +2 f P L^2 \right) }{f^2 (2 f-3)^3 \left( B -1\right) ^2} \end{aligned}$$

(217)

with

$$\begin{aligned} P&=8 f^3-28 f^2+34 f-11, \end{aligned}$$

(218a)

$$\begin{aligned} Q&= 8 f^3-20 f^2+18 f-9,\end{aligned}$$

(218b)

$$\begin{aligned} L&= \ln \left( \frac{f}{2 f-1}\right) ,\end{aligned}$$

(218c)

$$\begin{aligned} \ln A&=\frac{2 (f-2) (2 f-1)}{2 f-3} \ln \left( \frac{f}{2 f-1}\right) \end{aligned}$$

(218d)

and

$$\begin{aligned} B = \exp \left\{ \frac{2 (f-2) \left( (2 f-1) \ln \left( \frac{f}{2 f-1}\right) +1\right) }{2 f-3}\right\} . \end{aligned}$$

(218e)
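The auxiliary quantities (218a) to (218e) can be coded directly. The sketch below only implements these definitions; it does not evaluate (216) or (217). It assumes \(f>1/2\), so that L is defined, and \(f\ne 3/2\); the function name is illustrative. Comparing (218d) with (218e) also shows that \(B = A\exp \{2(f-2)/(2f-3)\}\), which the test checks numerically.

```python
import math

def auxiliary_quantities(f):
    """P, Q, L, A, B from (218a)-(218e).

    Assumption: f > 1/2 (so that L = ln(f / (2f - 1)) is defined) and f != 3/2.
    """
    if f <= 0.5 or f == 1.5:
        raise ValueError("expected f > 1/2 and f != 3/2")
    P = 8 * f**3 - 28 * f**2 + 34 * f - 11                            # (218a)
    Q = 8 * f**3 - 20 * f**2 + 18 * f - 9                             # (218b)
    L = math.log(f / (2 * f - 1))                                     # (218c)
    A = math.exp(2 * (f - 2) * (2 * f - 1) / (2 * f - 3) * L)         # from ln A in (218d)
    B = math.exp(2 * (f - 2) * ((2 * f - 1) * L + 1) / (2 * f - 3))  # (218e)
    return P, Q, L, A, B
```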