Abstract

This paper is based on the complete classification of evolutionary scenarios for the Moran process with two strategies given by Taylor et al. (Bull Math Biol 66(6):1621–1644, 2004. https://doi.org/10.1016/j.bulm.2004.03.004). Their classification is based on whether each strategy is a Nash equilibrium and whether the fixation probability for a single individual of each strategy is larger or smaller than its value for neutral evolution. We improve on this analysis by showing that each evolutionary scenario is characterized by a definite graph shape for the fixation probability function. A second class of results deals with the behavior of the fixation probability when the population size tends to infinity. We develop asymptotic formulae that approximate the fixation probability in this limit and conclude that some of the evolutionary scenarios cannot exist when the population size is large.

References

Allen LJS (2011) An introduction to stochastic processes with applications to biology. Chapman & Hall, Boca Raton

Antal T, Scheuring I (2006) Fixation of strategies for an evolutionary game in finite populations. Bull Math Biol 68(8):1923–1944. https://doi.org/10.1007/s11538-006-9061-4

Apostol TM (1999) An elementary view of Euler’s summation formula. Am Math Mon 106:409–418

Ashcroft P, Altrock PM, Galla T (2014) Fixation in finite populations evolving in fluctuating environments. J R Soc Interface 11(100):20140663. https://doi.org/10.1098/rsif.2014.0663

Chalub FA, Souza MO (2009) From discrete to continuous evolution models: a unifying approach to drift-diffusion and replicator dynamics. Theor Popul Biol 76(4):268–277. https://doi.org/10.1016/j.tpb.2009.08.006

Chalub FACC, Souza MO (2016) Fixation in large populations: a continuous view of a discrete problem. J Math Biol 72(1):283–330. https://doi.org/10.1007/s00285-015-0889-9

Durand G, Lessard S (2016) Fixation probability in a two-locus intersexual selection model. Theor Popul Biol 109:75–87. https://doi.org/10.1016/j.tpb.2016.03.004

Durney CH, Case SO, Pleimling M (2012) Stochastic evolution of four species in cyclic competition. J Stat Mech Theory Exp 2012(06):P06014

Ewens WJ (2004) Mathematical population genetics I: theoretical introduction. Interdisciplinary applied mathematics. Springer, New York

Healey D, Axelrod K, Gore J (2016) Negative frequency-dependent interactions can underlie phenotypic heterogeneity in a clonal microbial population. Mol Syst Biol 12:877

Hofbauer J, Sigmund K (1998) Evolutionary games and population dynamics. Cambridge University Press, Cambridge

Maynard Smith J, Price G (1973) The logic of animal conflicts. Nature 246:15–18

McLoone B, Fan WTL, Pham A, Smead R, Loewe L (2018) Stochasticity, selection, and the evolution of cooperation in a two-level Moran model of the snowdrift game. Complexity. https://doi.org/10.1155/2018/9836150

Mobilia M (2011) Fixation and polarization in a three-species opinion dynamics model. EPL 95(5):50002. https://doi.org/10.1209/0295-5075/95/50002

Moran PAP (1958) Random processes in genetics. Proc Camb Philos Soc 54(1):60–71

Nowak M (2006) Evolutionary dynamics, 1st edn. The Belknap Press of Harvard University Press, Cambridge

Nowak MA, Sigmund K (1992) Tit for tat in heterogeneous populations. Nature 355:250–253

Nowak MA, Sasaki A, Taylor C, Fudenberg D (2004) Emergence of cooperation and evolutionary stability in finite populations. Nature 428(6983):646–650. https://doi.org/10.1038/nature02414

Núñez Rodríguez I, Neves AGM (2016) Evolution of cooperation in a particular case of the infinitely repeated prisoner’s dilemma with three strategies. J Math Biol 73(6):1665–1690. https://doi.org/10.1007/s00285-016-1009-1

Olver FWJ (1974) Asymptotics and special functions. Academic Press, San Diego

Sample C, Allen B (2017) The limits of weak selection and large population size in evolutionary game theory. J Math Biol 75(5):1285–1317

Taylor C, Fudenberg D, Sasaki A, Nowak MA (2004) Evolutionary game dynamics in finite populations. Bull Math Biol 66(6):1621–1644. https://doi.org/10.1016/j.bulm.2004.03.004

Taylor PD, Jonker LB (1978) Evolutionary stable strategies and game dynamics. Math Biosci 40:145–156

Traulsen A, Claussen JC, Hauert C (2012) Stochastic differential equations for evolutionary dynamics with demographic noise and mutations. Phys Rev E 85(4):041901. https://doi.org/10.1103/PhysRevE.85.041901

Xu Z, Zhang J, Zhang C, Chen Z (2016) Fixation of strategies driven by switching probabilities in evolutionary games. EPL 116(5):58002

Zeeman EC (1980) Population dynamics from game theory. In: Lecture notes in mathematics, vol 819. Springer, Berlin, pp 471–497

Acknowledgements

We thank Max O. Souza for early discussions and encouragement for writing this paper.

Additional information

EPS had scholarships paid by CNPq (Conselho Nacional de Desenvolvimento Científico e Tecnológico, Brazil) and Capes (Coordenação de Aperfeiçoamento de Pessoal de Nível Superior, Brazil). EMF had a Capes scholarship. AGMN was partially supported by Fundação de Amparo à Pesquisa de Minas Gerais (Grant No. CEX - APQ-02909-14) (FAPEMIG, Brazil).

A Proofs


A.1 Proof of Theorem 1

We start with the second scenario in (15). We will show that if the invasion dynamics is \(B{\mathop {}\limits ^{\leftarrow \leftarrow }} A\), then necessarily \( \rho _A<\frac{1}{N} \) and \( \rho _B>\frac{1}{N} \). The invasion arrows mean that both \(r_1\) and \(r_{N-1}\) are smaller than 1. By Lemma 1, regardless of whether the \(r_i\) are increasing, decreasing or constant, \(r_i<1\) for all i. Hence every product \(\prod _{i=1}^{j}r_i^{-1}\) is larger than 1, which implies \(\sum _{j=1}^{N-1}\prod _{i=1}^{j}r_i^{-1}>N-1 \). As \(\rho _A=\pi _1\), (11) then implies \(\rho _A <1/N\).

A formula for \(\rho _B\) similar to (11) may be obtained:

$$\begin{aligned} \rho _B=\frac{1}{1+\sum _{j=1}^{N-1}\prod _{i=j}^{N-1}r_i}. \end{aligned}$$

Since \(r_i<1\) for all i, every product \(\prod _{i=j}^{N-1}r_i\) is smaller than 1, so the sum in the denominator is smaller than \(N-1\) and \(\rho _B>1/N\). This proves that the only possible scenario if the invasion dynamics is \(B{\mathop {}\limits ^{\leftarrow \leftarrow }} A\) is \(B{\mathop {\Leftarrow \Leftarrow }\limits ^{\leftarrow \leftarrow }} A\). An analogous proof holds for the first scenario in (15).
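Both bounds can be checked numerically. The sketch below assumes, as the proof suggests, that \(r_i=f_i/g_i\) and that (11) is the standard Moran expression \(1/\rho _A=1+\sum _{j=1}^{N-1}\prod _{i=1}^{j}r_i^{-1}\), together with the formula for \(\rho _B\) derived from it; the helper name `fixation_from_ratios` and the ratios are hypothetical.

```python
import math


def fixation_from_ratios(r):
    """Return (rho_A, rho_B) from the fitness ratios r = [r_1, ..., r_{N-1}].

    Assumed formulas (standard Moran process, r_i = f_i / g_i):
        1/rho_A = 1 + sum_{j=1}^{N-1} prod_{i=1}^{j} 1/r_i
        1/rho_B = 1 + sum_{j=1}^{N-1} prod_{i=j}^{N-1} r_i
    """
    s_a, prod = 0.0, 1.0
    for ri in r:               # forward products of 1/r_i
        prod /= ri
        s_a += prod
    s_b, prod = 0.0, 1.0
    for ri in reversed(r):     # backward products of r_i, starting at i = N-1
        prod *= ri
        s_b += prod
    return 1.0 / (1.0 + s_a), 1.0 / (1.0 + s_b)


N = 20
r = [0.9 + 0.05 * i / (N - 1) for i in range(1, N)]  # hypothetical ratios, all < 1
rho_a, rho_b = fixation_from_ratios(r)
print(rho_a < 1 / N, rho_b > 1 / N)                  # True True
# Consistency check: these formulas satisfy rho_B = rho_A * prod_i 1/r_i.
print(math.isclose(rho_b, rho_a * math.prod(1 / x for x in r)))
```

With all ratios equal to 1 both probabilities reduce to the neutral value \(1/N\).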

Let us now work with the scenarios in (16), in which the upper arrows mean \( r_1> 1 > r_{N-1}\). In this case, by Lemma 1, the \(r_i\) are decreasing. Let

It is clear that \(1 \le \ell \le N-2\) and the same for \(\ell '\). We define recursively \( H_i \) as

Analogously, we define \( H^{\prime }_i \) as

Notice that the sequences \( H_1, H_2,\ldots ,H_{\ell } \) and \( H^{\prime }_1, H^{\prime }_2,\ldots ,H^{\prime }_{\ell ^{\prime }} \) are decreasing, whereas the sequences \(H_{\ell }, H_{\ell +1},\ldots ,H_{N-1} \) and \( H^{\prime }_{\ell ^{\prime }}, H^{\prime }_{\ell ^{\prime }+1},\ldots ,H^{\prime }_{N-1} \) are increasing. Note also that \( H_1 < 1 \) and \( H^{\prime }_1 < 1 \), but we do not know whether \( H_{N-1} \) and \( H^{\prime }_{N-1} \) are larger than, smaller than or equal to 1. If \(H_i>1\) for some i, then, since \(H_1<1\) and the \(H_i\) first decrease and then increase, i lies in the increasing stretch and we must have \(H_{N-1}>1\). If, on the other hand, \(H_{N-1}\le 1\), then \(H_i\le 1\) for all i. The same conclusions are valid for the \(H'_i\). Moreover, the following relation holds

In this new notation, we have

Suppose now that \( \rho _A<1/N \). Then \(1/\rho _A-1=\sum _{i=1}^{N-1}H_i>N-1 \) and we must have \(H_i>1\) for some i. As we have already seen, this implies that \( H_{N-1}>1 \). By relation (53), we have \( H^{\prime }_{N-1}<1 \) and thus \( H^{\prime }_i<1 \) for all i. This implies \( \sum _{i=1}^{N-1}H^{\prime }_i<N-1 \) and, by the \( \rho _B \) formula in (54), we get \( \rho _B>1/N \).
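The mechanism of this argument can be traced on concrete numbers. The sketch below uses the explicit products \(H_i=\prod _{k=1}^{i}r_k^{-1}\) and \(H'_i=\prod _{k=N-i}^{N-1}r_k\). These closed forms are an assumption: they are consistent with \(1/\rho _A-1=\sum _i H_i\), \(H_1<1\) and \(H'_1<1\), but the recursive definitions above were lost in extraction. Under them \(H_{N-1}H'_{N-1}=1\), which yields exactly the implication drawn from (53). The helper `h_sequences` and the ratios are hypothetical.

```python
def h_sequences(r):
    """Hypothetical helper: H_i = prod_{k=1}^{i} 1/r_k and H'_i = prod_{k=N-i}^{N-1} r_k."""
    H, Hp = [], []
    prod = 1.0
    for rk in r:               # r[0] is r_1
        prod /= rk
        H.append(prod)
    prod = 1.0
    for rk in reversed(r):     # r[-1] is r_{N-1}
        prod *= rk
        Hp.append(prod)
    return H, Hp


N = 30
# Hypothetical decreasing ratios with r_1 = 1.3 > 1 > r_{N-1} = 0.7.
r = [1.3 - 0.6 * (i - 1) / (N - 2) for i in range(1, N)]
H, Hp = h_sequences(r)
print(abs(H[-1] * Hp[-1] - 1.0) < 1e-12)       # H_{N-1} * H'_{N-1} = 1
rho_a = 1.0 / (1.0 + sum(H))                   # 1/rho_A - 1 = sum of H_i
rho_b = 1.0 / (1.0 + sum(Hp))                  # 1/rho_B - 1 = sum of H'_i
print(not (rho_a < 1 / N and rho_b < 1 / N))   # the two cannot both lie below 1/N
```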

We can apply the same reasoning above to show that if \( r_1> 1> r_{N-1} \) and \( \rho _B<1/N \), then \( \rho _A>1/N \).

Together, these two arguments show that it is not possible to have both \( \rho _A<1/N \) and \( \rho _B<1/N \) if \( r_1> 1> r_{N-1}\). In symbols, this means that scenario \(B{\mathop {\Leftarrow \Rightarrow }\limits ^{\rightarrow \leftarrow }} A\) is forbidden, proving our assertion about scenarios in (16).

The assertion about scenarios (17) is proved by an analogous argument. More concretely, we prove that if \( r_1< 1 < r_{N-1} \), then \( \rho _A>1/N \) and \( \rho _B>1/N \) cannot be both true. In symbols, scenario \(B{\mathop {\Rightarrow \Leftarrow }\limits ^{\leftarrow \rightarrow }} A\) is forbidden. \(\square \)
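A randomized sanity check of both forbidden scenarios can be run under the same assumed Moran formulas. The monotone ratio sequences below are randomly generated and purely illustrative, so this is a check, not a proof; `rho_pair` is a hypothetical helper name.

```python
import math
import random


def rho_pair(r):
    """rho_A, rho_B from r = [r_1, ..., r_{N-1}] (assumed standard Moran formulas)."""
    s_a = s_b = 0.0
    p_a = p_b = 1.0
    for ra, rb in zip(r, reversed(r)):
        p_a /= ra          # prod_{i=1}^{j} 1/r_i
        s_a += p_a
        p_b *= rb          # prod_{i=N-j}^{N-1} r_i
        s_b += p_b
    return 1.0 / (1.0 + s_a), 1.0 / (1.0 + s_b)


rng = random.Random(0)
violations = 0
for _ in range(2000):
    N = rng.randint(3, 60)
    logs = sorted((rng.uniform(-1.0, 1.0) for _ in range(N - 1)), reverse=True)
    logs[0] = abs(logs[0]) + 0.01       # largest log-ratio made positive
    logs[-1] = -abs(logs[-1]) - 0.01    # smallest log-ratio made negative
    dec = [math.exp(v) for v in logs]   # decreasing, r_1 > 1 > r_{N-1}: scenarios (16)
    inc = [math.exp(-v) for v in logs]  # increasing, r_1 < 1 < r_{N-1}: scenarios (17)
    ra, rb = rho_pair(dec)
    violations += (ra < 1 / N and rb < 1 / N)   # forbidden in (16)
    ra, rb = rho_pair(inc)
    violations += (ra > 1 / N and rb > 1 / N)   # forbidden in (17)
print(violations)   # Theorem 1 predicts 0
```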

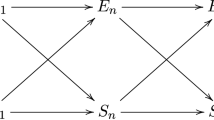

Notice that in the above proof we only showed that some evolutionary scenarios are forbidden. That all the scenarios which are not forbidden actually occur is shown by examples. The examples in Fig. 2 show that scenarios \(B{\mathop {\Rightarrow \Leftarrow }\limits ^{\rightarrow \leftarrow }} A\) and \(B{\mathop {\Rightarrow \Rightarrow }\limits ^{\rightarrow \leftarrow }} A\) are permitted. If the roles of A and B are exchanged, i.e. if the pay-off matrix for the examples in Fig. 2 is replaced by \(P=\left( \begin{array}{cc} 1.85 & 2.2 \\ 2.1 & 2 \\ \end{array} \right) \), then we obtain an example of scenario \(B{\mathop {\Leftarrow \Leftarrow }\limits ^{\rightarrow \leftarrow }} A\) for \(N=250\). Similarly, the examples in Fig. 3 show that scenarios \(B{\mathop {\Leftarrow \Leftarrow }\limits ^{\leftarrow \rightarrow }} A\) and \(B{\mathop {\Leftarrow \Rightarrow }\limits ^{\leftarrow \rightarrow }} A\) do exist. If we exchange A and B, we also obtain an example of the \(B{\mathop {\Rightarrow \Rightarrow }\limits ^{\leftarrow \rightarrow }} A\) case.
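The invasion part of such examples can be recomputed from a pay-off matrix once a fitness model is fixed. The sketch below assumes the common choice \(f_i=\frac{a(i-1)+b(N-i)}{N-1}\), \(g_i=\frac{ci+d(N-i-1)}{N-1}\) with \(P=\left( \begin{array}{cc} a & b \\ c & d \end{array}\right) \), which may differ from (2) and (3); ASCII `->`/`<-` stand for the single (invasion) arrows and `=>`/`<=` for the double (fixation) arrows, and ties are not treated separately. Its fixation verdict should therefore not be read as a reproduction of Figs. 2 and 3. For the matrix P above, this model gives \(r_1>1>r_{N-1}\), matching the upper arrows of the stated scenario.

```python
def fitness_ratios(P, N):
    """r_i = f_i / g_i for i = 1..N-1 under an ASSUMED fitness model (may differ from (2)-(3)):
    f_i = (a(i-1) + b(N-i))/(N-1), g_i = (c i + d(N-i-1))/(N-1), P = ((a, b), (c, d))."""
    (a, b), (c, d) = P
    return [(a * (i - 1) + b * (N - i)) / (c * i + d * (N - i - 1)) for i in range(1, N)]


def rho_pair(r):
    """rho_A, rho_B from the ratios (assumed standard Moran formulas)."""
    s_a = s_b = 0.0
    p_a = p_b = 1.0
    for ra, rb in zip(r, reversed(r)):
        p_a /= ra
        s_a += p_a
        p_b *= rb
        s_b += p_b
    return 1.0 / (1.0 + s_a), 1.0 / (1.0 + s_b)


def scenario(P, N):
    """ASCII scenario symbol as (invasion arrows, fixation arrows); ties are not handled."""
    r = fitness_ratios(P, N)
    rho_a, rho_b = rho_pair(r)
    invasion = ("->" if r[0] > 1 else "<-") + " " + ("<-" if r[-1] < 1 else "->")
    fixation = ("=>" if rho_a > 1 / N else "<=") + " " + ("<=" if rho_b > 1 / N else "=>")
    return invasion, fixation


print(scenario([[1.85, 2.2], [2.1, 2.0]], 250))
```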

A.2 Proofs of the graph shape results

Before we start, let us introduce some notation for the proofs.

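First of all, let \(I_{N-1} = \{1,2, \dots , N-1\}\). The set of points i in \(I_{N-1}\) such that the fixation probability \(\pi _i\) is smaller than the neutral value i/N will be denoted D. More concretely,

$$\begin{aligned} D=\{i \in I_{N-1} : \pi _i < i/N\}. \end{aligned}$$

\(\overline{D}\) will then denote a similar set, but replacing the strict inequality by \(\le \), i.e.

$$\begin{aligned} \overline{D}=\{i \in I_{N-1} : \pi _i \le i/N\}. \end{aligned}$$

Analogously, we define

$$\begin{aligned} U=\{i \in I_{N-1} : \pi _i > i/N\} \end{aligned}$$

and

$$\begin{aligned} \overline{U}=\{i \in I_{N-1} : \pi _i \ge i/N\}. \end{aligned}$$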
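These sets, and the discrete derivative \(d_i=\pi _i-\pi _{i-1}\) used below, are easy to compute from the ratios \(r_i\). The sketch assumes the standard birth–death solution for \(\pi _i\) (taken to agree with (11)) with boundary values \(\pi _0=0\), \(\pi _N=1\); for this solution \(d_{i+1}=d_i/r_i\), which we take to be the content of (8). The helper names and example ratios are hypothetical.

```python
import math


def fixation_profile(r):
    """Return [pi_0, ..., pi_N] for r = [r_1, ..., r_{N-1}] (assumed standard Moran solution)."""
    partial, prod = [0.0, 1.0], 1.0          # numerators of pi_0 and pi_1
    for rk in r:
        prod /= rk
        partial.append(partial[-1] + prod)   # numerator of the next pi_i
    return [p / partial[-1] for p in partial]


def neutral_sets(pi):
    """D, D-bar, U, U-bar: indices i in I_{N-1} compared with the neutral value i/N."""
    N = len(pi) - 1
    I = range(1, N)
    D = {i for i in I if pi[i] < i / N}
    D_bar = {i for i in I if pi[i] <= i / N}
    U = {i for i in I if pi[i] > i / N}
    U_bar = {i for i in I if pi[i] >= i / N}
    return D, D_bar, U, U_bar


r = [1.4 - 0.07 * i for i in range(1, 12)]          # hypothetical ratios, N = 12
pi = fixation_profile(r)
d = [pi[i] - pi[i - 1] for i in range(1, len(pi))]  # d[i-1] holds d_i
D, D_bar, U, U_bar = neutral_sets(pi)
print(sorted(D), sorted(U))
# Discrete-derivative recursion d_{i+1} = d_i / r_i:
print(all(math.isclose(d[i] * r[i - 1], d[i - 1]) for i in range(1, len(d))))
```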

A.2.1 Proof of Proposition 1

The scenario hypotheses are \(r_1<1\), \(r_{N-1}<1\), \(\rho _A<1/N\) and \(\rho _B>1/N\). The former two imply by Lemma 1 that \(r_i<1\) for all \(i \in I_{N-1}\). Using (8), we prove that the discrete derivative is an increasing function.

The latter two imply that \(\pi _1<1/N\) and \(\pi _{N-1}<\frac{N-1}{N}\). Using the boundary conditions in (10) we also have \(d_1<1/N\) and \(d_N>1/N\).

What remains to be proved is that \(D=I_{N-1}\) in this scenario. We know that \(1 \in D\) and \(N-1 \in D\). Suppose that \(I_{N-1}{\setminus }D\) is not empty, and let j be its minimum. Then \(2 \le j \le N-2\), \(\pi _{j-1}<\frac{j-1}{N}\) and \(\pi _j \ge \frac{j}{N}\). It follows that \(d_j>1/N\) and, because the derivative is increasing, \(d_i>1/N\) for \(i > j\). We may then use Lemma 2 and find that \(\pi _{N-1}>\frac{N-1}{N}\), which is absurd because, as already noticed, we must have \(\pi _{N-1}<\frac{N-1}{N}\). Then \(I_{N-1}{\setminus }D\) is empty. \(\square \)
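Proposition 1 can be checked on an example. The sketch assumes the same standard birth–death solution as before; the ratios are hypothetical and all smaller than 1. One can also see the conclusion geometrically: an increasing discrete derivative with \(\pi _0=0\) and \(\pi _N=1\) makes the \(\pi _i\) convex, hence below the neutral line.

```python
def fixation_profile(r):
    """[pi_0, ..., pi_N] from r_1..r_{N-1} via the standard birth-death solution (assumed form of (11))."""
    partial, prod = [0.0, 1.0], 1.0
    for rk in r:
        prod /= rk
        partial.append(partial[-1] + prod)
    return [p / partial[-1] for p in partial]


N = 40
r = [0.8 + 0.15 * i / N for i in range(1, N)]           # hypothetical: every r_i < 1
pi = fixation_profile(r)
d = [pi[i] - pi[i - 1] for i in range(1, N + 1)]         # d_1, ..., d_N
increasing = all(x < y for x, y in zip(d, d[1:]))        # discrete derivative increasing
below_neutral = all(pi[i] < i / N for i in range(1, N))  # D = I_{N-1}
print(increasing, d[0] < 1 / N, d[-1] > 1 / N, below_neutral)   # True True True True
```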

A.2.2 Proof of Proposition 3

The arrows in \(B{\mathop {}\limits ^{\rightarrow \leftarrow }} A\) mean that \(r_1>1\) and \(r_{N-1}<1\). According to Lemma 1, \(r_i\) must then decrease with i. Although the index i in the fitnesses \(f_i\) and \(g_i\), see (2) and (3), and consequently in \(r_i\), is an integer, the very same formulas make mathematical sense for \(i \in {\mathbb {R}}\). If we accept this extension to \({\mathbb {R}}\), then, by continuity and monotonicity, the equation \(r_i=1\) has a single real solution \(x \in (1,N-1)\). If we define \(i^{*}\) to be the smallest integer larger than or equal to x, then using (8) we prove the claims related to \(i^{*}\) in the statement of the proposition.
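The role of \(i^{*}\) can be seen numerically. The sketch assumes a hypothetical fitness model \(f_i=\frac{a(i-1)+b(N-i)}{N-1}\), \(g_i=\frac{ci+d(N-i-1)}{N-1}\) (which may differ from (2) and (3)) and the recursion \(d_{i+1}=d_i/r_i\) of the standard Moran solution, taken here as the content of (8); the pay-off matrix is hypothetical. Since \(r_i>1\) for \(i<x\) and \(r_i<1\) for \(i>x\), under these assumptions the discrete derivative attains its minimum at \(i^{*}\).

```python
import math


def ratio(x, P, N):
    """Real extension of r_i = f_i / g_i under the hypothetical fitness model
    f_i = (a(i-1) + b(N-i))/(N-1), g_i = (c i + d(N-i-1))/(N-1); the 1/(N-1) cancels."""
    (a, b), (c, d) = P
    return (a * (x - 1) + b * (N - x)) / (c * x + d * (N - x - 1))


def crossing(P, N, tol=1e-12):
    """Bisection for the x in (1, N-1) with r(x) = 1, assuming r decreasing, r(1) > 1 > r(N-1)."""
    lo, hi = 1.0, N - 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ratio(mid, P, N) > 1:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


P, N = [[1.0, 3.0], [2.0, 1.0]], 50     # hypothetical pay-offs with r_1 > 1 > r_{N-1}
x = crossing(P, N)
i_star = math.ceil(x)
d = [1.0]                               # d_1, up to a normalizing factor
for i in range(1, N):
    d.append(d[-1] / ratio(i, P, N))    # d_{i+1} = d_i / r_i
print(x, i_star, 1 + d.index(min(d)))   # x is about 100/3 here; the minimum of d_i is at i*
```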

Suppose now that the scenario is \(B{\mathop {\Rightarrow \Leftarrow }\limits ^{\rightarrow \leftarrow }} A\). The double arrows mean \(\rho _A>1/N\) and \(\rho _B>1/N\), which imply \(\pi _1>1/N\) and \(\pi _{N-1}<\frac{N-1}{N}\). So \(1 \in U\) and \(N-1 \in D\). As D is non-empty, let \(\overline{i}\) be its minimum. As \(\overline{i} \in D\) and \(\overline{i}-1 \in \overline{U}\), then \(d_{\overline{i}}<1/N\).

We claim that \(D \supset \{\overline{i}, \overline{i}+1, \dots , N-1 \}\). If this is not true, then \(\{\overline{i}, \overline{i}+1, \dots , N-1 \}{\setminus }D\) is not empty. Let then j be the smallest element in this set. We have \(j> \overline{i}\), \(\pi _{j-1} < \frac{j-1}{N}\) and \(\pi _j \ge \frac{j}{N}\). This implies \(d_j>1/N\). Because the discrete derivative increased when going from \(\overline{i}\) to j, then \(j>i^{*}+1\). It follows that \(d_k> 1/N\) for all \(k \ge j\) and, by Lemma 2, \(\pi _{N-1} > \frac{N-1}{N}\), which contradicts the hypothesis \(\rho _B>1/N\). Then our claim is proved. As D cannot contain elements smaller than its minimum \(\overline{i}\), nor larger than \(N-1\), then \(D = \{\overline{i}, \overline{i}+1, \dots , N-1 \}\), proving what we needed about the scenario \(B{\mathop {\Rightarrow \Leftarrow }\limits ^{\rightarrow \leftarrow }} A\).

Consider now \(B{\mathop {\Rightarrow \Rightarrow }\limits ^{\rightarrow \leftarrow }} A\). The double arrows here imply \(\pi _1>1/N\) and \(\pi _{N-1}>\frac{N-1}{N}\). The latter, together with (10), implies \(d_N<1/N\). What we want to prove is that \(\overline{D}=\emptyset \).

Suppose that \(\overline{D}\) is not empty. Let then \(j_1\) and \(j_2\) be its minimum and maximum elements. Because 1 and \(N-1\) are in U, then \(1<j_1 \le j_2<N-1\). As \(j_1-1 \in U\) and \(j_1 \in \overline{D}\), then \(d_{j_1}<1/N\). Similarly, \(d_{j_2+1}>1/N\). As the discrete derivative increased between \(j_1\) and \(j_2+1\), then \(j_2> i^{*}\). It follows that \(d_j>1/N\) if \(j \ge j_2+1\). Together with Lemma 2, this leads to a contradiction, because we already knew that \(d_N<1/N\).

Finally, the proof for scenario \(B{\mathop {\Leftarrow \Leftarrow }\limits ^{\rightarrow \leftarrow }} A\) may be obtained from the one of \(B{\mathop {\Rightarrow \Rightarrow }\limits ^{\rightarrow \leftarrow }} A\) by using (18). \(\square \)

A.3 Proofs of some auxiliary results

A.3.1 General results

For the sake of completeness, we state here the Euler–Maclaurin formula. For a proof and for the definitions of the Bernoulli numbers and of the periodic Bernoulli functions, the reader is referred to Apostol (1999).

Theorem 7

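(Euler–Maclaurin formula) For any function f with a continuous derivative of order \(2m + 1\) on the interval [0, n], with \(m \ge 0\) and \(n \in {\mathbb {N}}\), we have

$$\begin{aligned} \sum _{k=0}^{n} f(k) = \int _0^n f(x)\,dx + \frac{f(0)+f(n)}{2} + \sum _{k=1}^{m} \frac{B_{2k}}{(2k)!}\left( f^{(2k-1)}(n)-f^{(2k-1)}(0)\right) + \frac{1}{(2m+1)!}\int _0^n P_{2m+1}(x)\, f^{(2m+1)}(x)\,dx, \end{aligned}$$(55)

where the \(P_k\) are the periodic Bernoulli functions and \(B_k=P_k(1)\) are the Bernoulli numbers.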
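A quick numerical check of (55) with \(m=0\), the case used repeatedly below, is sketched here. It assumes the usual convention \(P_1(x)=x-\lfloor x\rfloor -1/2\) and evaluates both integrals with a composite Simpson rule on each unit interval, where \(P_1\) is smooth; the helper names are hypothetical. For \(f(x)=x^3\) Simpson's rule is exact, so both sides agree up to rounding.

```python
import math


def simpson(g, a, b, m=64):
    """Composite Simpson rule on [a, b] with an even number m of subintervals."""
    h = (b - a) / m
    s = g(a) + g(b) + sum((4 if j % 2 else 2) * g(a + j * h) for j in range(1, m))
    return s * h / 3


def euler_maclaurin_m0(f, fprime, n):
    """Right-hand side of the m = 0 Euler-Maclaurin formula on [0, n]."""
    integral = sum(simpson(f, k, k + 1) for k in range(n))
    # On [k, k+1], P_1(x) = x - k - 1/2 (its left limit 1/2 is used at x = k+1).
    remainder = sum(
        simpson(lambda x, k=k: (x - k - 0.5) * fprime(x), k, k + 1) for k in range(n)
    )
    return integral + (f(0) + f(n)) / 2 + remainder


cube, dcube = (lambda x: x ** 3), (lambda x: 3 * x ** 2)
print(sum(cube(k) for k in range(5)), euler_maclaurin_m0(cube, dcube, 4))  # 100 and about 100.0

g, dg = (lambda x: math.exp(-x / 3)), (lambda x: -math.exp(-x / 3) / 3)
print(sum(g(k) for k in range(7)), euler_maclaurin_m0(g, dg, 6))
```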

The next general result is a generalized form of the Riemann–Lebesgue lemma from the theory of Fourier series and transforms. We state it here for completeness and omit its proof, which is essentially the proof of the standard form of the same result.

Lemma 3

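(Generalized Riemann–Lebesgue) Let p be a \(C^1\) 1-periodic function with \(\int _0^1 p(x)dx=0\) and let f be integrable in \([0, \infty )\). Then

$$\begin{aligned} \lim _{\lambda \rightarrow \infty } \int _0^{\infty } p(\lambda x)\, f(x)\, dx = 0. \end{aligned}$$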
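A small illustration of Lemma 3 follows, with the hypothetical choices \(p(y)=\cos (2\pi y)\) (which is \(C^1\), 1-periodic and has zero mean) and \(f(x)=e^{-x}\). For these, \(\int _0^\infty \cos (2\pi \lambda x)e^{-x}dx=1/(1+(2\pi \lambda )^2)\), so the integral visibly vanishes as the scaling factor \(\lambda \) grows. The quadrature parameters are illustrative choices.

```python
import math


def simpson(g, a, b, m):
    """Composite Simpson rule on [a, b] with an even number m of subintervals."""
    h = (b - a) / m
    s = g(a) + g(b) + sum((4 if j % 2 else 2) * g(a + j * h) for j in range(1, m))
    return s * h / 3


def oscillatory_integral(lam):
    """Approximate int_0^inf p(lam x) f(x) dx for p(y) = cos(2 pi y), f(x) = exp(-x).

    The integral is truncated at x = 40 (exp(-40) is negligible) and resolved
    with 256 Simpson subintervals per period of p(lam x).
    """
    integrand = lambda x: math.cos(2 * math.pi * lam * x) * math.exp(-x)
    return simpson(integrand, 0.0, 40.0, 40 * 256 * lam)


for lam in (1, 2, 4, 8):
    exact = 1 / (1 + (2 * math.pi * lam) ** 2)   # closed form of the untruncated integral
    print(lam, oscillatory_integral(lam), exact)
```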

A.3.2 Proof of Proposition 5

For \(x \in [0,1]\) we may write

Notice that \(|\frac{[Nx]}{N}-x| \le \frac{1}{2N}\). If we use a Taylor expansion around x, it is easy to see that if \(x \notin {\mathbb {Q}}_N\), then the second term in the above sum may be estimated as

which is bounded, but in general does not tend to 0 as \(N \rightarrow \infty \). As already remarked, to simplify matters we may add the hypothesis that \(x\in {\mathbb {Q}}_{N_0}\) for some \(N_0\) and that N is a multiple of \(N_0\). Under this further assumption, the term in question vanishes.

From now on we assume that Nx is an integer and write \(\ell _{Nx}\) instead of \(\ell _{[Nx]}\). We may split \(N (\ell _{Nx}-L(x))\) as

For the sum of the second and third terms above, we have

where in the last step we used the Euler–Maclaurin formula (55) with \(m=0\). Making the substitution \(u=\frac{s}{N}\) in the last integral and using Lemma 3, we prove that it tends to 0. So, if Nx is an integer,

The remaining term \(-\sum _{k=1}^{Nx} (\log r_k-\log R(\frac{k}{N}))\) in the above expression for \(N (\ell _{Nx}-L(x))\) can be rewritten by using the definitions (9) for \(r_k\) and (23) for \(R(\frac{k}{N})\). The difference \(r_k-R(\frac{k}{N})\) is

We may then use the Taylor expansion of the logarithm to find

Summing the above expression for k from 1 to Nx, the first terms form a Riemann sum that converges, as \(N \rightarrow \infty \), to the integral wq(x), whereas the sum of the Nx terms of order \(O(1/N^2)\) is \(O(1/N)\) and therefore tends to 0. \(\square \)

A.3.3 Proofs of some results appearing in Theorems 2 and 4

We start with the result that yields the only asymptotically non-vanishing contributions to \(\sum _{j=1}^{N-1} e^{N L(\frac{j}{N})}\), as in the proof of Theorem 2. These contributions are obtained by setting \(a= \frac{R'(x^{*})}{2}\) in the result below:

Proposition 6

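Let \(a>0\). Then, for any non-negative integer m,

$$\begin{aligned} \lim _{N \rightarrow \infty } N^{m}\left( \sum _{k=0}^{\infty } e^{-\frac{a}{N}k^2}-\frac{1}{2}\sqrt{\frac{\pi N}{a}}-\frac{1}{2}\right) =0. \end{aligned}$$(56)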

Proof

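Take \(f(x)=e^{-\frac{a}{N}x^2}\) in (55). We may also take \(n=\infty \) in the same formula, because the improper integrals converge and the limits at infinity of f and of all its derivatives exist and are equal to 0. The odd-order derivatives of f at 0 all vanish, too. Since \(f(0)=1\) and \(\int _0^{\infty } e^{-\frac{a}{N}x^2}dx=\frac{1}{2}\sqrt{\frac{\pi N}{a}}\), the Euler–Maclaurin formula then gives, in this case,

$$\begin{aligned} \sum _{k=0}^{\infty } e^{-\frac{a}{N}k^2} = \frac{1}{2}\sqrt{\frac{\pi N}{a}} + \frac{1}{2} + \frac{1}{(2m+1)!}\int _0^{\infty } P_{2m+1}(x)\, f^{(2m+1)}(x)\,dx. \end{aligned}$$(57)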

To finish the proof, we use the fact that the Hermite polynomials \(H_n(x)\) are related to the derivatives of \(e^{-x^2}\) by

$$\begin{aligned} \frac{d^n}{dx^n}\, e^{-x^2} = (-1)^n H_n(x)\, e^{-x^2}. \end{aligned}$$

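The derivatives of f are therefore

$$\begin{aligned} f^{(n)}(x) = (-1)^n \left( \frac{a}{N}\right) ^{n/2} H_n\!\left( \sqrt{\frac{a}{N}}\,x\right) e^{-\frac{a}{N}x^2}. \end{aligned}$$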

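Substituting this expression in the remainder term of (57) and performing the change of variables \(u=\sqrt{\frac{a}{N}}x\), that term becomes

$$\begin{aligned} -\frac{1}{(2m+1)!}\left( \frac{a}{N}\right) ^{m}\int _0^{\infty } P_{2m+1}\!\left( \sqrt{\frac{N}{a}}\,u\right) H_{2m+1}(u)\, e^{-u^2}\,du. \end{aligned}$$

Multiplying by \(N^m\) and using Lemma 3, the result is proved. \(\square \)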

Proposition 6 tells us that replacing the sum in the left-hand side of (56) with the corresponding integral produces an error very close to 1/2. The difference between this error and 1/2 is so small that, for any \(m>0\), this difference multiplied by \(N^m\) still tends to 0. For the sake of proving Theorem 2, \(m=1\) would be enough, but the result is so remarkable that we could not help stating it in its full generality.
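The size of this difference can be seen directly. The sketch below (the helper name is hypothetical) evaluates the sum minus \(\frac{1}{2}\sqrt{\pi N/a}+\frac{1}{2}\) for \(a=1\). The difference drops below double-precision resolution already for moderate N, consistent with the decay stated in Proposition 6; a Poisson-summation argument shows it is in fact of order \(\sqrt{N}\,e^{-\pi ^2N/a}\).

```python
import math


def gaussian_sum_error(a, N):
    """sum_{k>=0} exp(-a k^2/N) minus (1/2) sqrt(pi N/a) minus 1/2, as in Proposition 6."""
    kmax = int(40 * math.sqrt(N / a)) + 1   # beyond kmax the terms underflow to 0
    s = math.fsum(math.exp(-a * k * k / N) for k in range(kmax + 1))
    return s - 0.5 * math.sqrt(math.pi * N / a) - 0.5


for N in (1, 2, 4, 10, 100):
    print(N, gaussian_sum_error(1.0, N))
# About 9.2e-05 for N = 1 and 6.7e-09 for N = 2; from N = 4 on only rounding noise is left.
```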

In the next lemma we prove some asymptotic estimates, also obtained by means of the Euler–Maclaurin formula, which are necessary for proving Theorem 2.

Lemma 4

(i) Let \(a>0\) and \(A \in {\mathbb {N}}\) be such that \(\frac{A^2}{N} {\mathop {\rightarrow }\limits ^{N \rightarrow \infty }} \infty \). Then

$$\begin{aligned} \sum _{k=A}^{\infty } e^{-\frac{a}{N}k^2} {\mathop {\sim }\limits ^{N \rightarrow \infty }} \left( \frac{1}{2}\, \frac{N}{a} \, A^{-1} \,+\,1\right) \, e^{-\frac{a}{N}A^2}. \end{aligned}$$(58)

(ii) If p is an odd positive integer, then

$$\begin{aligned} \sum _{k=1}^{\infty } k^p \, e^{-\frac{a}{N}k^2} {\mathop {\sim }\limits ^{N \rightarrow \infty }} \frac{1}{2} \, (\frac{p-1}{2})! \, \left( \frac{N}{a}\right) ^{\frac{p+1}{2}}. \end{aligned}$$(59)

Proof

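In order to prove (58) we use (55), again with \(f(x)=e^{-\frac{a}{N} x^2}\) and \(n=\infty \), but now with \(m=0\) and with the summation starting at \(k=A\) (since \(P_1\) is 1-periodic, this is just (55) after a shift of the variable). It gives us

$$\begin{aligned} \sum _{k=A}^{\infty } e^{-\frac{a}{N}k^2} = \int _A^{\infty } e^{-\frac{a}{N}x^2}dx + \frac{1}{2}\, e^{-\frac{a}{N}A^2} + \int _A^{\infty } P_1(x)\, f'(x)\,dx. \end{aligned}$$(60)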

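For the first integral in the above expression, the change of variable \(y=\frac{a}{N}x^2\) gives

$$\begin{aligned} \int _A^{\infty } e^{-\frac{a}{N}x^2}dx = \frac{1}{2}\sqrt{\frac{N}{a}}\; \varGamma \left( \frac{1}{2}, \frac{a}{N}A^2\right) , \end{aligned}$$

where \(\varGamma (s,x)\equiv \int _x^{\infty } y^{s-1} \, e^{-y}dy\) is the complementary (or upper) incomplete Gamma function. It is known (Olver 1974) that \(\varGamma (s,x) {\mathop {\sim }\limits ^{x \rightarrow \infty }} x^{s-1} e^{-x}\). Since \(\frac{a}{N}A^2 \rightarrow \infty \), then

$$\begin{aligned} \int _A^{\infty } e^{-\frac{a}{N}x^2}dx {\mathop {\sim }\limits ^{N \rightarrow \infty }} \frac{1}{2}\, \frac{N}{a}\, A^{-1}\, e^{-\frac{a}{N}A^2}. \end{aligned}$$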

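For the second integral in the right-hand side of (60), we recall that \(|P_1(x)| \le 1/2\) for all \(x \in {\mathbb {R}}\). As f is decreasing on \([A,\infty )\),

$$\begin{aligned} \left| \int _A^{\infty } P_1(x)\, f'(x)\,dx\right| \le \frac{1}{2}\int _A^{\infty } |f'(x)|\,dx = \frac{1}{2}\, e^{-\frac{a}{N}A^2}. \end{aligned}$$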

Putting together the expressions for both integrals, we obtain (58).

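For proving (59) we use (55) again with \(m=0\), now with \(f(x)=x^p e^{-\frac{a}{N} x^2}\). Since \(f(0)=0\) and, by the change of variable \(y=\frac{a}{N}x^2\), \(\int _0^{\infty } x^p e^{-\frac{a}{N}x^2}dx=\frac{1}{2}\varGamma (\frac{p+1}{2})(\frac{N}{a})^{\frac{p+1}{2}}\), we get

$$\begin{aligned} \sum _{k=1}^{\infty } k^p\, e^{-\frac{a}{N}k^2} = \frac{1}{2}\, \varGamma \left( \frac{p+1}{2}\right) \left( \frac{N}{a}\right) ^{\frac{p+1}{2}} + \int _0^{\infty } P_1(x)\, f'(x)\,dx. \end{aligned}$$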

Explicitly calculating \(f'(x)\) and using again \(|P_1(x)|\le 1/2\), the integral in the right-hand side may be easily bounded by a sum of two terms, both of them being \(O(N^{p/2})\), thus negligible with respect to the first term in the right-hand side of the above formula. Using that \(\varGamma (\frac{p+1}{2})= (\frac{p-1}{2})!\) for odd p takes us to the result. \(\square \)
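Both estimates of Lemma 4 can be compared with brute-force sums. The sketch is illustrative only and the helper names are hypothetical. For (58) it uses \(A=[N^{2/3}]+1\), the choice made in the proof of Proposition 7; for this A the ratio approaches 1 only slowly, with corrections of relative order \(N^{-1/3}\). For (59) the agreement is much closer.

```python
import math


def tail_ratio(a, N):
    """Left side of (58) divided by its right side, with A = [N^(2/3)] + 1.

    The common factor exp(-a A^2 / N) is divided out of both sides to avoid underflow.
    """
    A = int(N ** (2 / 3)) + 1
    kmax = A + int(60 * N / (a * A)) + 100   # remaining terms are below exp(-120)
    lhs = math.fsum(math.exp(-a * (k * k - A * A) / N) for k in range(A, kmax))
    return lhs / (0.5 * (N / a) / A + 1)


def moment_ratio(a, N, p):
    """Left side of (59) divided by its right side, for odd p."""
    kmax = int(40 * math.sqrt(N / a)) + 1    # beyond kmax the terms underflow to 0
    lhs = math.fsum(k ** p * math.exp(-a * k * k / N) for k in range(1, kmax + 1))
    rhs = 0.5 * math.factorial((p - 1) // 2) * (N / a) ** ((p + 1) / 2)
    return lhs / rhs


print(tail_ratio(1.0, 10 ** 6), tail_ratio(0.5, 10 ** 6))
print([moment_ratio(1.0, 10 ** 4, p) for p in (1, 3, 5)])
```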

The next result proves that two of the terms appearing in the proof of Theorem 2 vanish asymptotically.

Proposition 7

Both terms

and

appearing in the proof of Theorem 2 tend to 0 when \(N \rightarrow \infty \).

Proof

Using \(a=\frac{R'(x^{*})}{2}\) and \(A= [ N^{2/3}]+1\) in (58), we see that

We proceed now to show that \(\sum _{k=[ N^{2/3}]+1}^{J^{*}-1} e^{N(L(\frac{J^{*}-k}{N})-L(x^{*}))}\) tends to 0 when \(N \rightarrow \infty \). In fact, in scenario \(B\leftarrow \rightarrow A\) we know that \(L''(x)=-\frac{R'(x)}{R(x)} <0\) for all \(x \in [0,1]\). Letting \(-M \equiv \max _{x\in [0,1]} L''(x)\), then \(M>0\). Using a Taylor formula with Lagrange remainder, we know that there exists \(\xi _k\) between \(x^{*}\) and \(\frac{J^{*}-k}{N}\) such that

where we have used (38) and the above definition of M. As \(\delta _N \ge -1/2\), we have

We may now use (58) again and our claim follows. \(\square \)

One last term remains to be controlled in order to complete the proof of Theorem 2. We do so in

Proposition 8

The term

appearing in the proof of Theorem 2 is bounded when \(N \rightarrow \infty \).

Proof

Notice first that \(\frac{J^{*}-k}{N}= x^{*}- \frac{k+\delta _N}{N}\). Using the Taylor expansion (40), we may rewrite

where \(\alpha _k\) is some number between 0 and 1. We now use that for any \(a>0\),

if \(|\theta | \le a\). If we take

and \(M'=\max _{x \in [0,1]}|L'''(x)|\), then

where in the last step we used that \(|\delta _N|\le 1/2\). Since \(k \le N^{2/3}\) in the sum we want to estimate, there exists a constant C, independent of N, such that \(|\theta |<C\) for all such k.

By (61), we obtain that

Substituting this bound and using (56), together with (59) for \(p=1\) and \(p=3\), finishes the proof. \(\square \)

The last result of this appendix is used in the proof of Theorem 4.

Proposition 9

If the invasion scenario is \(B\leftarrow \rightarrow A\) and \(x<x^{*}\), then

Proof

Expanding \(L(x-\frac{k}{N})\) around x up to order 1 with Lagrange remainder, we obtain

for some \(\alpha _k \in (0,1)\). Using that \(L''\) is negative in [0, 1] and that \(|e^{\theta }-1|<|\theta |\) for \(\theta <0\), we get

where \(M =\max _{y \in [0,1]}|L''(y)|\). As the latter sum converges if its upper limit is replaced by \(\infty \), the proposition is proved. \(\square \)

Cite this article

de Souza, E.P., Ferreira, E.M. & Neves, A.G.M. Fixation probabilities for the Moran process in evolutionary games with two strategies: graph shapes and large population asymptotics. J. Math. Biol. 78, 1033–1065 (2019). https://doi.org/10.1007/s00285-018-1300-4

DOI: https://doi.org/10.1007/s00285-018-1300-4