Abstract

We study the optimal arrangement of two conductive materials in order to maximize the first eigenvalue of the corresponding diffusion operator with Dirichlet conditions. The amount of the more conductive material is assumed to be limited. Since problems of this type have no solution in general, we work with a relaxed formulation. We show that the problem has some good concavity properties which allow us to obtain some uniqueness results. By proving that it is related to the minimization of the energy for a two-phase material studied in [4], we also obtain some smoothness results. As a consequence, we show that the unrelaxed problem never has a solution. The paper is completed with some numerical results corresponding to the convergence of the gradient algorithm.


1 Introduction

For two conductive materials (electric or thermal), represented by their diffusion constants \(0<\alpha <\beta \), we are interested in finding the mixture which maximizes the first eigenvalue of the corresponding diffusion operator with Dirichlet conditions. Namely, for a fixed bounded open set \(\Omega \subset {\mathbb {R}}^N\), \(N\ge 2\), we look for a measurable subset \(\omega \subset \Omega \) maximizing

With this formulation the solution is the trivial one given by \(\omega =\emptyset \), which consists in taking, at every point, the material with the highest diffusion coefficient. The problem becomes interesting when the amount of this material is limited, i.e. when we add the constraint

for some \(\kappa \in \big (0,|\Omega |\big )\). From the point of view of applications, the maximization of the first eigenvalue is a criterion to determine the best two-phase conductive material (associated with Dirichlet conditions). To clarify this statement, we can consider the parabolic problem

Due to the diffusion process, the solution u (electric potential or temperature) tends to zero as t tends to infinity. Thus, we can consider that one material is a better diffuser than another if the solution converges to zero faster. At this point we recall the estimate (\(\lambda _1\) being the first eigenvalue of the elliptic operator)

which is optimal in the sense that equality holds when \(u_0\) is an associated eigenfunction. Therefore, a material can be regarded as a good conductor when \(\lambda _1\) is large.
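A short energy argument shows where the estimate comes from. It assumes (the display is not reproduced here) that the parabolic problem is \(\partial _tu-\mathrm{div}(a\nabla u)=0\) in \(\Omega \times (0,\infty )\), \(u=0\) on \(\partial \Omega \), \(u(0)=u_0\), with \(a=\alpha \chi _\omega +\beta \chi _{\Omega \setminus \omega }\) and \(\varphi _1\) an eigenfunction associated with \(\lambda _1\):

$$\begin{aligned} \frac{1}{2}\frac{d}{dt}\int _\Omega u^2dx&=-\int _\Omega a|\nabla u|^2dx\le -\lambda _1\int _\Omega u^2dx,\\ \Vert u(t)\Vert _{L^2(\Omega )}&\le e^{-\lambda _1t}\Vert u_0\Vert _{L^2(\Omega )},\qquad u_0=\varphi _1\ \Longrightarrow \ u(t)=e^{-\lambda _1t}\varphi _1. \end{aligned}$$

The inequality in the first line is the characterization of \(\lambda _1\) as the minimum of the Rayleigh quotient; Gronwall's lemma then gives the second line.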

The opposite problem, corresponding to finding the worst conductive material (i.e. the best insulating one), has been considered in several papers such as [2, 5, 6, 11, 12] and [21] (see also [8] for the p-Laplacian operator). It consists in finding \(\omega \) minimizing (1.1) when (1.2) is replaced by \(|\omega |\le \kappa .\) Assuming \(\Omega \) connected, with connected boundary, it has been proved in [6] (see [5, 8] and [25] for related results) that this problem has a solution if and only if \(\Omega \) is a ball.

The non-existence of solutions for optimal design problems is classical ([22, 23]). Because of that, it is usual to work with a relaxed formulation. This can be achieved using homogenization theory ([1, 24, 25, 26]), which describes the set of materials that can be obtained by mixing some elementary composites at a microscopic level. The resulting materials depend not only on the proportions of the composites but also on their arrangement. In the case of minimizing the first eigenvalue, the most elementary estimates in H-convergence (see e.g. [25], Proposition 9) prove that such a relaxed formulation consists in replacing \(\alpha \chi _\omega +\beta \chi _{\Omega \setminus \omega }\) by the harmonic mean of \(\alpha \) and \(\beta \) with proportions \(\theta \) and \(1-\theta \) respectively, \(\theta \in (0,1)\), i.e. by

This homogenized material is obtained by laminates of \(\alpha \) and \(\beta \) in the direction of the flow (i.e. with the layers orthogonal to the flow). In the case of the maximization considered here, the relaxation consists in replacing \(\alpha \chi _\omega +\beta \chi _{\Omega \setminus \omega }\) by the arithmetic mean, i.e. by

It is also obtained as a laminate of \(\alpha \) and \(\beta \), but now in a direction orthogonal to the flow (i.e. with the layers parallel to the flow).
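The two mixing rules can be compared numerically. The sketch below is plain Python with arbitrary sample values \(\alpha =1\), \(\beta =2\) (an assumption for illustration only). It checks that the arithmetic mean can be written as \(\beta (1-c\theta )\) with \(c=(\beta -\alpha )/\beta \), which is where a coefficient of the form \(1-c\theta \) comes from after dividing by \(\beta \), and that the harmonic mean never exceeds it.

```python
def arithmetic_mean(alpha, beta, theta):
    """Mixture with proportion theta of alpha, layers parallel to the flow."""
    return alpha * theta + beta * (1.0 - theta)


def harmonic_mean(alpha, beta, theta):
    """Mixture with proportion theta of alpha, layers orthogonal to the flow."""
    return 1.0 / (theta / alpha + (1.0 - theta) / beta)


alpha, beta = 1.0, 2.0          # illustrative values with 0 < alpha < beta
c = (beta - alpha) / beta       # normalized contrast
for theta in (0.0, 0.25, 0.5, 0.75, 1.0):
    a = arithmetic_mean(alpha, beta, theta)
    h = harmonic_mean(alpha, beta, theta)
    # the arithmetic mean equals beta * (1 - c * theta)
    assert abs(a - beta * (1.0 - c * theta)) < 1e-12
    # the harmonic mean is never larger than the arithmetic mean
    assert h <= a + 1e-12
    print(f"theta={theta:.2f}  arithmetic={a:.4f}  harmonic={h:.4f}")
```

For instance, with \(\alpha =1\), \(\beta =2\) and \(\theta =1/2\), the arithmetic mean is 1.5 and the harmonic mean is 4/3.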

Setting \(c=(\beta -\alpha )/\beta \), we are then interested in the problem

We show that \(\Lambda \) is concave in \(L^\infty (\Omega ;[0,1])\) and that the minimization in u becomes a convex problem after a change of variables. This allows us to write the max-min problem as a min-max problem. If \(({\hat{\theta }},{\hat{u}})\) is a saddle point we prove that \({\hat{u}}\) is unique (taking it positive, with unit norm in \(L^2(\Omega )\)), and \({\hat{\theta }}\) belongs to a convex set of functions which satisfy

for a certain \({\hat{\mu }}>0\), which depends on \({\hat{u}}\) but not on \({\hat{\theta }}\). Thus, \({\hat{\theta }}\) is unique outside the set \(\{|\nabla {\hat{u}}|={\hat{\mu }}\}.\)

The optimality conditions

are necessary and sufficient. Because of that, it is simple to check that every solution \(({\hat{\theta }},{\hat{u}})\) of (1.3) is also a solution of

with \(f={\hat{\lambda }} {\hat{u}}\). For an arbitrary \(f\in H^{-1}(\Omega )\), this problem has been studied in [4]; see also [3] for a related problem where \(\theta \in L^\infty (\Omega ;(-\infty ,0])\). Applying the results in [4] to our problem, we deduce that \(\Omega \in C^{1,1}\) implies that the solutions \(({\hat{\theta }},{\hat{u}})\) of (1.3) satisfy

As a consequence of this result, we show that the unrelaxed problem (1.1) has no solution for any bounded open set \(\Omega \in C^{1,1}\), and therefore that the set \(\big \{|\nabla {\hat{u}}|={\hat{\mu }}\big \}\), with \({\hat{\mu }}\) satisfying (1.4), always has positive measure.

The connection between (1.3) and (1.5) deserves to be compared with the results in [5] (see also [8] and [10]). In these papers, it has been proved that the minimization of the first eigenvalue can also be formulated as

However, in our case we do not know whether an equivalence result of this type holds. For an arbitrary \(f\in H^{-1}(\Omega )\), problem \(\mathcal{P}_f\) has also been considered by several authors ([1, 5, 7, 17, 25]), especially for \(f=1\), where it applies, for example, to the optimal arrangement of two materials in the cross section of a beam in order to minimize the torsion.

Taking into account the concavity of the functional \(\Lambda \), we finish the paper by showing the convergence of the gradient method applied to (1.3). We also estimate the rate of convergence (see [9] for related results), and we provide some numerical examples corresponding to \(\Omega \) a disk and a square, respectively.

Some results about the relaxation and the optimality conditions for the minimization or maximization of an arbitrary eigenvalue for a two-phase material have also been obtained in [14].

2 Theoretical Results for the Maximization of the Eigenvalue

For a bounded open set \(\Omega \subset {\mathbb {R}}^N\), \(N\ge 2\), and three positive constants \(0<\alpha <\beta \), \(0<\kappa <|\Omega |\), we look for a measurable set \(\omega \subset \Omega \), with \(|\omega |\ge \kappa \), which maximizes the first eigenvalue of the operator \(u\in H^1_0(\Omega )\mapsto -\mathrm{div}\big (\big (\alpha \chi _\omega +\beta (1-\chi _{\omega })\big )\nabla u\big )\in H^{-1}(\Omega )\), i.e. we are interested in the optimal design problem

Since problems of this type have no solution in general ([22, 23]), we work with a relaxed formulation. Using homogenization theory ([1, 25, 26]), it is well known that it is given by

It consists in replacing the mixture \(\alpha \chi _\omega +\beta \chi _{\omega ^c}\) by a more general one, obtained as a laminate of both materials in a direction orthogonal to \(\nabla u\), with proportions \(\theta \) and \(1-\theta \). Dividing by \(\beta \) and introducing

we can also write (2.2) as

Moreover, we can restrict ourselves to the set of functions u which are non-negative and have unit norm in \(L^2(\Omega )\). The following theorem shows the uniqueness of the optimal state function \({\hat{u}}\) and provides an equivalent formulation.

Theorem 2.1

We have

Moreover, if \({\hat{\theta }}\) is a solution of the left-hand side problem and \({\hat{u}}\) is the solution of

then \({\hat{u}}\) is the unique solution of the right-hand side problem and \({\hat{\theta }}\) is a solution of

Related to the above result, we also have

Theorem 2.2

The function \(\Lambda :L^\infty (\Omega ;[0,1])\rightarrow {\mathbb {R}}\) defined by

is concave. Further, for every \(\theta ,\vartheta \in L^\infty (\Omega ;[0,1])\), we have

with u the solution of

and

with \(u'\) the solution of

Corollary 2.3

A function \({\hat{\theta }}\in L^\infty (\Omega ;[0,1])\) is a solution of (2.4) if and only if, defining \({\hat{u}}\) as the unique solution of (2.6), \({\hat{\theta }}\) satisfies (2.7).

Remark 2.4

If \({\hat{\theta }}\) is a solution of (2.4) and \({\hat{u}}\) the solution of (2.6), then \({\hat{u}}\) satisfies

with \({\hat{\lambda }}\) the maximum value in (2.4). By Theorem 2.1, we also have that \({\hat{\theta }}\) is a solution of (2.7). This proves that \(({\hat{\theta }},{\hat{u}})\) is a saddle point of the problem

and then of the optimal design problem studied in [4] with \(f={\hat{\lambda }} {\hat{u}}\). Applying the smoothness results of that paper, we get Theorem 2.5 below.

A problem related to the one in [4] has been studied in [3], where the restriction \(\theta \in L^\infty (\Omega ;[0,1])\) is replaced by \(\theta \in L^\infty (\Omega ;(-\infty ,0])\). In that case, it is proved that the optimal state function u is still in \(W^{1,\infty }(\Omega )\).

Theorem 2.5

Assume \(\Omega \in C^{1,1}\). For \({\hat{\theta }}\) a solution of (2.4) and \({\hat{u}}\) the solution of (2.6), we have

If \({\hat{\theta }}\) is an unrelaxed solution of (2.4), i.e. \({\hat{\theta }}=\chi _{{\hat{\omega }}}\) for some measurable subset \({\hat{\omega }}\subset \Omega \), then

Remark 2.6

By the strong maximum principle, we know that \({\hat{u}}\) is strictly positive in \(\Omega \). Using (2.13) and \({\hat{u}}\in H^2(\Omega )\), we also have \(\nabla {\hat{u}}\not =0\) a.e. in \(\Omega \). Taking into account (2.7), this implies that for every solution \({\hat{\theta }}\) of (2.4), there exists \({\hat{\mu }}>0\) such that

Since \({\hat{\mu }}\) can be chosen as

and \({\hat{u}}\) is unique, we get that \({\hat{\mu }}\) can be chosen independently of \({\hat{\theta }}\), and then, by (2.17), that all the solutions of (2.4) take the same value on the set \(\{|\nabla {\hat{u}}|\not ={\hat{\mu }}\}\). By (2.13), these solutions also satisfy the first order linear PDE

However, \({\hat{u}}\in H^2(\Omega )\) is not enough to conclude that (2.17), (2.18) and the uniqueness of \({\hat{u}}\) imply the uniqueness of \({\hat{\theta }}\). In any case, Theorem 2.2 shows that the set of solutions of (2.4) is convex.

For the problem of minimizing the first eigenvalue, it has been proved in [6] (see [5, 7, 8, 25] for related results) that, if \(\Omega \in C^{1,1}\) is connected with connected boundary, then the unrelaxed problem has a solution if and only if \(\Omega \) is a ball. In the case of the maximization of the first eigenvalue, the following theorem shows that an unrelaxed solution never exists. In particular, this shows that \({\hat{\mu }}\) in (2.17) is such that \(\big |\{|\nabla {\hat{u}}|={\hat{\mu }}\}\big |\) is always positive.

Theorem 2.7

If \(\Omega \) is a \(C^{1,1}\) domain in \({\mathbb {R}}^N\), then problem (2.1) has no solution.

3 Proof of the Theoretical Results

In this section we prove the results stated in the previous one concerning the properties of the solutions of problem (2.4).

Proof of Theorem 2.1

Taking into account that

we deduce that \(\theta \) is a solution of (2.4) if and only if it is a solution of

Here we introduce the change of variables

It transforms the problem in

Let us prove that for every \(\theta \in L^\infty (\Omega ;[0,1])\), the functional \(\Phi \) defined by

is convex. Moreover, it is strictly convex on the set

For this purpose, we take \(z_1,z_2\ge 0,\) with \(\sqrt{z_1},\sqrt{z_2}\in H^1_0(\Omega )\) and \(r\in (0,1)\). Then, using the convexity of the function \(\xi \in {\mathbb {R}}^N\mapsto |\xi |^2\), we have

This proves the convexity of \(\Phi \). In order to prove the strict convexity on the set defined by (3.4), we return to (3.5). Assuming that \(z_1,z_2\) are strictly positive a.e. in \(\Omega \), and using that the function \(\xi \in {\mathbb {R}}^N\mapsto |\xi |^2\) is strictly convex, we deduce that (3.5) is an equality if and only if

This proves that \(\log (z_1/z_2)\) is constant in \(\Omega \), and then that there exists a constant \(C>0\) such that \(z_1=Cz_2\) a.e. in \(\Omega \). Since \(z_1\) and \(z_2\) have the same integral, we must have \(C=1\). Thus \(\Phi \) is strictly convex on that set.
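The pointwise inequality behind the convexity of \(\Phi \) can be made explicit. Assuming, as the conditions \(\sqrt{z_i}\in H^1_0(\Omega )\) indicate, that the change of variables is \(z=u^2\) and that \(\Phi (z)=\int _\Omega (1-c\theta )|\nabla \sqrt{z}|^2dx\), one has \(|\nabla \sqrt{z}|^2=|\nabla z|^2/(4z)\) where \(z>0\). For \(z_1,z_2>0\), \(r\in (0,1)\), \(z=rz_1+(1-r)z_2\) and \(s=rz_1/z\in (0,1)\), the convexity of \(\xi \mapsto |\xi |^2\) gives

$$\begin{aligned} \frac{|\nabla z|^2}{z}&=z\,\Big |\,s\,\frac{\nabla z_1}{z_1}+(1-s)\frac{\nabla z_2}{z_2}\Big |^2\le z\Big (s\,\frac{|\nabla z_1|^2}{z_1^2}+(1-s)\frac{|\nabla z_2|^2}{z_2^2}\Big )\\ &=r\,\frac{|\nabla z_1|^2}{z_1}+(1-r)\frac{|\nabla z_2|^2}{z_2}. \end{aligned}$$

Multiplying by \((1-c\theta )/4\) and integrating gives the convexity of \(\Phi \); equality forces \(\nabla z_1/z_1=\nabla z_2/z_2\), that is \(\nabla \log (z_1/z_2)=0\), which is the condition used in the argument.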

The convexity of \(\Phi \) allows us to apply von Neumann's min-max theorem (see e.g. [27], Chapter 2.13) to (3.2) to deduce

Moreover, if \({\tilde{\theta }}\) is a solution of the left-hand side problem and \({\tilde{z}}\) is the solution of

then \({\tilde{z}}\) is a solution of the right-hand side problem. Returning to the variables \((\theta ,u)\), this proves the statement of Theorem 2.1, except for the uniqueness of \({\hat{u}}\). For this purpose, we recall that, by the strong maximum principle, if \({\hat{u}}\) is a solution of (2.6) then it is positive in \(\Omega \), and therefore \({\hat{z}}:={\hat{u}}^2\) is also positive. As a consequence, we have

Since \(\Phi \) is strictly convex on the set defined by (3.6), we also have that the function

is strictly convex, as a maximum of strictly convex functions. Thus \({\hat{z}}\), and therefore \({\hat{u}}\), is unique. \(\square \)
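A discrete analogue makes the convexity of \(\Phi \) easy to test. The following plain-Python sketch assumes a one-dimensional grid on (0,1) with \(z=0\) at both end points, random positive cell coefficients \(a_j=1-c\theta _j\), and the hypothetical name discrete_energy for the discrete counterpart of \(\Phi \); it is not the discretization used in the paper. Convexity also holds here, because \((\sqrt{x}-\sqrt{y})^2=x+y-2\sqrt{xy}\) and \(\sqrt{xy}\) is concave on \([0,\infty )^2\).

```python
import math
import random


def discrete_energy(z, a, h):
    """Discrete analogue of Phi: sum_j a_j (sqrt(z_{j+1}) - sqrt(z_j))^2 / h.

    z: n interior nodal values (z >= 0); z = 0 is imposed at both end points.
    a: n + 1 positive cell coefficients a_j = 1 - c*theta_j.
    """
    w = [0.0] + [math.sqrt(v) for v in z] + [0.0]
    return sum(a[j] * (w[j + 1] - w[j]) ** 2 for j in range(len(a))) / h


def convexity_gap(z1, z2, a, h, r):
    """r*Phi(z1) + (1 - r)*Phi(z2) - Phi(r*z1 + (1 - r)*z2); >= 0 if Phi is convex."""
    zr = [r * x + (1.0 - r) * y for x, y in zip(z1, z2)]
    return (r * discrete_energy(z1, a, h) + (1.0 - r) * discrete_energy(z2, a, h)
            - discrete_energy(zr, a, h))


random.seed(0)
n, c = 20, 0.5
h = 1.0 / (n + 1)
a = [1.0 - c * random.random() for _ in range(n + 1)]
worst = min(
    convexity_gap([random.random() for _ in range(n)],
                  [random.random() for _ in range(n)], a, h, random.random())
    for _ in range(1000)
)
print("smallest convexity gap:", worst)
```

The smallest gap over the random samples is nonnegative up to rounding errors.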

Proof of Theorem 2.2

Assume \(\theta ,\vartheta \in L^\infty (\Omega ;[0,1])\). We take \(\delta >0\) such that

Taking into account that the eigenvalue \(\Lambda (\theta +\varepsilon (\vartheta -\theta ))\) is simple, we can apply the implicit function theorem to the function \(F:(-\delta ,1+\delta )\times {\mathbb {R}}\times H^1_0(\Omega )\rightarrow H^{-1}(\Omega )\times {\mathbb {R}},\) defined by

to deduce that for \(\lambda _\varepsilon :=\Lambda \big (\theta +\varepsilon (\vartheta -\theta )\big ),\) and \(u_\varepsilon \) the unique solution of

we have that the function \(\varepsilon \in (-\delta ,1+\delta )\mapsto (\lambda _\varepsilon ,u_\varepsilon )\in {\mathbb {R}}\times H^1_0(\Omega )\) is in \(C^\infty (-\delta ,1+\delta ).\) Differentiating (3.7) twice with respect to \(\varepsilon \) and taking \(\varepsilon =0\), we get

with

Equation (3.8) gives (2.12). In order to prove (2.9), we use u as test function in (3.8). Since \(\Vert u\Vert _{L^2(\Omega )}=1,\) we get

Using now \(u'\) as test function in (3.7), with \(\varepsilon =0\), we also have

Replacing (3.11) in (3.10), we conclude (2.9).

It remains to prove (2.11). For this purpose, and reasoning as above, we use u as test function in (3.9), which, taking into account \(\Vert u\Vert _{L^2(\Omega )}=1\) and \(\int _\Omega uu'dx=0\), gives

which using \(u''\) as test function in (3.7), with \(\varepsilon =0\), simplifies to

On the other hand, using \(u'\) as test function in (3.8), we have

By (3.12) and (3.13), we deduce (2.11) and then that \(\Lambda \) is concave. \(\square \)
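The identities used in this proof can be summarized as follows. This is a reconstruction under the normalization \(\int _\Omega u_\varepsilon ^2dx=1\), writing \(\theta _\varepsilon =\theta +\varepsilon (\vartheta -\theta )\), \(\lambda =\Lambda (\theta )\), \(u',u''\) for the derivatives at \(\varepsilon =0\), and \(\Lambda _2(\theta )\) for the second Dirichlet eigenvalue of \(-\mathrm{div}((1-c\theta )\nabla )\); it is meant as a reading aid and is not claimed to reproduce (2.9)-(2.12) verbatim. Differentiating \(-\mathrm{div}((1-c\theta _\varepsilon )\nabla u_\varepsilon )=\lambda _\varepsilon u_\varepsilon \) once and twice, and testing with u and \(u'\), one finds

$$\begin{aligned} &-\mathrm{div}\big ((1-c\theta )\nabla u'\big )+c\,\mathrm{div}\big ((\vartheta -\theta )\nabla u\big )=\lambda 'u+\lambda u',\qquad \int _\Omega uu'dx=0,\\ &\lambda '=-c\int _\Omega (\vartheta -\theta )|\nabla u|^2dx,\\ &\lambda ''=-2c\int _\Omega (\vartheta -\theta )\nabla u\cdot \nabla u'dx=2\Big (\lambda \int _\Omega |u'|^2dx-\int _\Omega (1-c\theta )|\nabla u'|^2dx\Big )\le -2\big (\Lambda _2(\theta )-\lambda \big )\int _\Omega |u'|^2dx. \end{aligned}$$

The last inequality uses that \(u'\) is \(L^2\)-orthogonal to u, so that its Rayleigh quotient is at least \(\Lambda _2(\theta )\); in particular \(\lambda ''\le 0\), which is the concavity of \(\Lambda \) along segments.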

Proof of Theorem 2.7

Reasoning by contradiction, we assume that there exists a solution \({\hat{\theta }}\) of (2.4) such that \({\hat{\theta }}=\chi _{{\hat{\omega }}}\), with \({\hat{\omega }}\) a measurable subset of \(\Omega \). By Theorem 2.5 we know that (2.16) holds, which, taking into account that \({\hat{\lambda }}(1+c/(1-c)\chi _{{\hat{\omega }}}){\hat{u}}\) belongs to \(L^\infty (\Omega )\), implies (see e.g. [16])

By the John-Nirenberg theorem [19], there exists \(\tau >0\) such that

This allows us to use the embedding theorems for Orlicz-Sobolev spaces [15], which, taking into account that

proves the existence of \(C>0\) such that

Since

we then have (see e.g. [13], Theorem 2.5) that, for every \(y\in \Omega \), there exists a unique maximal solution \(\varphi (\cdot ,y)\) of

which is defined in an open interval \(I(y)\subset {\mathbb {R}}.\) Taking into account that

and that \({\hat{u}}\) is strictly positive in \(\Omega \) and vanishes on \(\partial \Omega \), we can apply LaSalle’s theorem [20] to deduce

Now, we observe that the equation \(\nabla \hat{\theta }\cdot \nabla \hat{u}=0\) in \(\Omega \) implies

But this is a contradiction with (3.18), which, recalling that \({\hat{\theta }}=\chi _{{\hat{\omega }}}\) and that \(\nabla {\hat{u}}\) is continuous, implies

where by (1.4)

\(\square \)

4 A Numerical Approximation

In this section, taking into account Theorem 2.2, we prove the convergence of the gradient method applied to (2.4), together with an error estimate. The corresponding algorithm reads as follows:

We fix \(\delta >0\).

- Take \(\theta _0\in L^\infty (\Omega ;[0,1])\) such that

$$\begin{aligned} \int _\Omega \theta _0dx=\kappa .\end{aligned}$$ (4.1)

- Assuming we have constructed \(\theta _n\in L^\infty (\Omega ;[0,1])\) such that

$$\begin{aligned} \int _\Omega \theta _ndx=\kappa ,\end{aligned}$$ (4.2)

we define \((\lambda _n,u_n)\) as the unique solution of

$$\begin{aligned} \left\{ \begin{array}{l}\displaystyle -\mathrm{div}\big ((1-c\theta _n)\nabla u_n\big )=\lambda _n u_n\ \hbox { in }\Omega \\ \displaystyle u_n=0\ \hbox { on }\partial \Omega ,\quad u_n>0\ \hbox { in }\Omega ,\quad \int _\Omega |u_n|^2dx=1.\end{array}\right. \end{aligned}$$ (4.3)

- Take \(\vartheta _n\in L^\infty (\Omega ;[0,1])\) as a solution of

$$\begin{aligned} \min _{\begin{array}{c} \vartheta \in L^\infty (\Omega ;[0,1])\\ \int _\Omega \vartheta dx\ge \kappa \end{array}}\int _\Omega \vartheta |\nabla u_n|^2dx.\end{aligned}$$ (4.4)

- For

$$\begin{aligned} \varepsilon _n=\min \Big \{1,\delta \int _\Omega (\theta _n-\vartheta _n)|\nabla u_n|^2dx\Big \}, \end{aligned}$$

we define

$$\begin{aligned} \theta _{n+1}=(1-\varepsilon _n)\theta _n+\varepsilon _n\vartheta _n.\end{aligned}$$ (4.5)
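The steps of the algorithm can be tried on a toy problem. The plain-Python sketch below makes several assumptions that are not in the text: \(\Omega =(0,1)\) (so N=1, outside the setting \(N\ge 2\) of the paper, although the iteration is the same), a uniform grid with \(\theta \) piecewise constant on cells, a mass-lumped three-point finite-difference discretization, inverse power iteration for (4.3), and, for (4.4), the bathtub rule of setting \(\vartheta =1\) where \(|u_n'|^2\) is smallest until the volume \(\kappa \) is filled. The function names and the parameter values (c=0.5, \(\kappa =0.5\), \(\delta =0.02\)) are hypothetical choices for illustration only.

```python
import math


def first_eigenpair(a, h, max_iter=1000, tol=1e-12):
    """Inverse power method for -(a u')' = lam u on (0, 1), u(0) = u(1) = 0.

    a: n + 1 cell values of 1 - c*theta (finite differences, lumped mass).
    Returns (lam, u) with u > 0 at the n interior nodes and h*sum(u^2) = 1.
    """
    n = len(a) - 1
    diag = [(a[i] + a[i + 1]) / h**2 for i in range(n)]
    off = [-a[i + 1] / h**2 for i in range(n - 1)]
    u, lam = [1.0] * n, 0.0
    for _ in range(max_iter):
        # Thomas algorithm for the tridiagonal system K v = u.
        cp, dp = [0.0] * n, [0.0] * n
        cp[0] = off[0] / diag[0] if n > 1 else 0.0
        dp[0] = u[0] / diag[0]
        for i in range(1, n):
            m = diag[i] - off[i - 1] * cp[i - 1]
            cp[i] = off[i] / m if i < n - 1 else 0.0
            dp[i] = (u[i] - off[i - 1] * dp[i - 1]) / m
        v = dp[:]
        for i in range(n - 2, -1, -1):
            v[i] = dp[i] - cp[i] * v[i + 1]
        norm = math.sqrt(h * sum(x * x for x in v))
        u = [x / norm for x in v]
        w = [0.0] + u + [0.0]
        # Rayleigh quotient; the denominator h*sum(u^2) equals 1.
        new_lam = sum(a[j] * (w[j + 1] - w[j]) ** 2 for j in range(n + 1)) / h
        if abs(new_lam - lam) <= tol * new_lam:
            return new_lam, u
        lam = new_lam
    return lam, u


def bathtub(g, h, kappa):
    """Minimize sum_j h*t_j*g_j over 0 <= t_j <= 1 with h*sum_j t_j >= kappa."""
    t, mass = [0.0] * len(g), kappa
    for j in sorted(range(len(g)), key=g.__getitem__):  # smallest |u'|^2 first
        if mass <= 0.0:
            break
        t[j] = min(1.0, mass / h)
        mass -= t[j] * h
    return t


def gradient_method(n=49, c=0.5, p=0.5, delta=0.02, steps=200):
    h, kappa = 1.0 / (n + 1), p                  # Omega = (0, 1), |Omega| = 1
    theta = [p] * (n + 1)                        # constant start satisfying (4.1)
    history = []
    for _ in range(steps):
        lam, u = first_eigenpair([1.0 - c * t for t in theta], h)   # (4.3)
        history.append(lam)
        w = [0.0] + u + [0.0]
        g = [((w[j + 1] - w[j]) / h) ** 2 for j in range(n + 1)]     # |u_n'|^2 per cell
        vt = bathtub(g, h, kappa)                                      # (4.4)
        slope = h * sum((t - v) * gj for t, v, gj in zip(theta, vt, g))
        eps = max(0.0, min(1.0, delta * slope))                        # step length
        theta = [(1.0 - eps) * t + eps * v for t, v in zip(theta, vt)]  # (4.5)
    return theta, history


theta, history = gradient_method()
print(history[0], history[-1])
```

With the default parameters, history[0] should be close to \(0.75\pi ^2\approx 7.40\) (the value for the constant start \(\theta _0=1/2\)), and the later entries should be larger, while each iterate keeps the volume constraint.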

Remark 4.1

Problem (4.3) can be easily solved using the power method. The solutions of (4.4) are explicitly given by

Our main result is given by the following convergence theorem.

Theorem 4.2

There exists \(\delta _0>0\) such that, for every \(\delta \in (0,\delta _0]\) and every \(\theta _0\in L^\infty (\Omega ;[0,1])\) which satisfies (4.1), the sequence \((\theta _n,u_n)\) defined by the previous algorithm satisfies:

1. There exists \(C>0\), depending only on c and \(\Vert u_0\Vert _{H^1_0(\Omega )}\), such that, defining \({\hat{\lambda }}\) as the maximum value of (2.4), we have

$$\begin{aligned} 0\le {\hat{\lambda }}-\lambda _n\le {C\over \sqrt{n}}.\end{aligned}$$ (4.6)

2. Every \(\hat{\theta }\in L^\infty (\Omega ;[0,1])\) such that there exists a subsequence of \(\theta _n\) converging in \(L^\infty (\Omega )\) weak-\(*\) to \(\hat{\theta }\) is a solution of (2.4).

3. The sequence \(u_n\) satisfies

$$\begin{aligned} \int _\Omega |{\hat{u}}-u_n|^2dx\le C\,{\ln n\over \sqrt{n}},\end{aligned}$$ (4.7)

with \({\hat{u}}\) defined by Theorem 2.1 and \(C>0\) depending only on c and \(\Vert u_0\Vert _{H^1_0(\Omega )}\).

The first and second assertions of the theorem will follow from the following general convergence result for the gradient descent method. It applies to a convex (but not necessarily strictly convex) functional with bounded second derivative. It is strongly related to the classical convergence result for the gradient method with a fixed step. However, the rate of convergence is worse due to the lack of ellipticity.

Theorem 4.3

Assume that X is a reflexive space, \(K\subset X\) a bounded closed convex set and \(F:K\rightarrow {\mathbb {R}}\) a convex Gâteaux differentiable function such that there exists \(M\ge 0\) satisfying (\(\langle \cdot ,\cdot \rangle \) denotes the duality product)

Then, for every \(k_0\in K\), and every \(\delta \in (0,1/M]\), the sequence \(\{k_n\}\) defined by

with \({\tilde{k}}_n\) solution of

and

satisfies

The constant C only depends on \(F(k_0)\) and M. Moreover, every \({\hat{k}}\in K\) such that there exists a subsequence of \(\{k_n\}\) which converges weakly to \({\hat{k}}\), is a minimum point for F.
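When (4.9)-(4.11) are read as the algorithm of Section 4 suggests, that is, \({\tilde{k}}_n\) minimizes the linear functional \(k\mapsto \langle F'(k_n),k\rangle \) over K and \(\varepsilon _n=\min \{1,\delta \langle F'(k_n),k_n-{\tilde{k}}_n\rangle \}\), the iteration is a conditional-gradient (Frank-Wolfe type) method with a step proportional to the duality gap. The plain-Python sketch below follows that reading, which is an assumption since the displays are not reproduced here, on a hypothetical toy problem: a quadratic F on the unit cube of \({\mathbb {R}}^3\), where M is taken as a bound for the second derivative of F along segments of K.

```python
def conditional_gradient(grad, lmo, k0, delta, steps):
    """k_{n+1} = (1 - eps_n) k_n + eps_n * kt_n, where
    kt_n = lmo(grad(k_n)) minimizes <F'(k_n), k> over K and
    eps_n = min(1, delta * <F'(k_n), k_n - kt_n>)."""
    k = list(k0)
    iterates = [k]
    for _ in range(steps):
        d = grad(k)
        kt = lmo(d)
        gap = sum(di * (ki - ti) for di, ki, ti in zip(d, k, kt))  # >= 0 since k is in K
        eps = min(1.0, delta * gap)
        k = [(1.0 - eps) * ki + eps * ti for ki, ti in zip(k, kt)]
        iterates.append(k)
    return iterates


# Hypothetical toy instance: F(k) = |k - TARGET|^2 / 2 on the cube K = [0, 1]^3.
TARGET = [0.3, 1.5, -0.2]


def F(k):
    return 0.5 * sum((ki - ti) ** 2 for ki, ti in zip(k, TARGET))


def grad_F(k):
    return [ki - ti for ki, ti in zip(k, TARGET)]


def cube_lmo(d):
    """A vertex of [0, 1]^3 minimizing the linear function k -> <d, k>."""
    return [1.0 if di < 0.0 else 0.0 for di in d]


M = 3.0  # along any segment of K, the second derivative |h - k|^2 is at most 3
iterates = conditional_gradient(grad_F, cube_lmo, [0.5, 0.5, 0.5], 1.0 / M, 5000)
F_min = F([0.3, 1.0, 0.0])  # the minimizer is the projection of TARGET onto K
print(F(iterates[-1]) - F_min)
```

For this toy problem the values \(F(k_n)\) decrease monotonically and the error after 5000 steps is well below \(10^{-2}\). The linear subproblem is solved exactly by picking a vertex of the cube, which plays the role of the explicit solution of (4.4).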

Remark 4.4

The assumption that F is Gâteaux differentiable in K can be relaxed to: for every \(k\in K\), there exists \(F'(k)\in X'\) such that

We also observe that this property combined with the convexity of F implies that F is lower semicontinuous in K, and then, since K is weakly compact (bounded, closed and convex in a reflexive space), that F has a minimum in K.

Proof of Theorem 4.3

Thanks to (4.8), for every \(\varepsilon \in [0,1]\) we have

Defining \({\tilde{k}}_n\) by (4.10), \(\varepsilon _n\) by (4.11), and \(\delta _n\) by

we then have

Now, we take \({\hat{k}}\in K\) a minimum point for F. Using the convexity of F and the definition (4.10) of \({\tilde{k}}_n\), we have

Taking \(e_n:=F(k_n)-F({\hat{k}})\ge 0\), we deduce from this inequality and (4.15)

Lemma 1 in [18] then proves (4.12).

On the other hand, the convexity and lower semicontinuity of F imply that F is sequentially lower semicontinuous for the weak topology. Therefore, for every subsequence of \(k_n\), still denoted by \(k_n\), which converges weakly to some \({\tilde{k}}\in K\), we have

and then \({\tilde{k}}\) is a minimum point of F in K. \(\square \)

In order to prove Theorem 4.2, we also need the following two lemmas.

Lemma 4.5

Let \(\Omega \) be a bounded open set, then there exists \(\rho >0\) such that defining \(\Lambda (\theta )\) by (2.8), and \(\Lambda _2(\theta )\) as the second eigenvalue of \(-\mathrm{div}((1-c\theta )\nabla )\) with Dirichlet conditions, we have

Proof

Reasoning by contradiction, we assume that there exists a sequence \(\theta _n\in L^\infty (\Omega ;[0,1])\) such that \(\Lambda _2(\theta _n)-\Lambda (\theta _n)\) tends to zero. Extracting a subsequence if necessary (see e.g. [24, 26]), we can assume that there exists \(A\in L^\infty (\Omega )^{N\times N}\) symmetric, with

such that \((1-c\theta _n)I\) converges to A in the sense of the H-convergence in \(\Omega \) ([1, 24, 26]). Denoting by \(\lambda _1\), \(\lambda _2\) the first and second eigenvalues of the operator \(-\mathrm{div}(A\nabla )\) with Dirichlet conditions, this implies

Since \(\lambda _2-\lambda _1>0\), this contradicts the fact that \(\Lambda _2(\theta _n)-\Lambda (\theta _n)\) tends to zero. \(\square \)
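The spectral gap of Lemma 4.5 and the concavity of Theorem 2.2 can both be observed on a one-dimensional finite-difference toy model. The plain-Python sketch below is illustrative only: it assumes \(\Omega =(0,1)\), \(\theta \) piecewise constant on a uniform grid and a mass-lumped three-point discretization, and all function names are hypothetical. The first two eigenvalues are computed by bisection on Sturm sequence counts of the symmetric tridiagonal matrix. In this discrete setting concavity is exact, since the first eigenvalue is the minimum over u of Rayleigh quotients that are affine in \(\theta \).

```python
import random


def tridiag(theta, c, h):
    """Diagonal and off-diagonal of the finite-difference matrix of -((1 - c*theta) u')'."""
    a = [1.0 - c * t for t in theta]          # one coefficient per cell
    n = len(a) - 1                             # number of interior nodes
    diag = [(a[i] + a[i + 1]) / h**2 for i in range(n)]
    off = [-a[i + 1] / h**2 for i in range(n - 1)]
    return diag, off


def count_below(diag, off, x):
    """Sturm count: number of eigenvalues smaller than x (negative pivots of T - xI)."""
    count, q = 0, 1.0
    for i in range(len(diag)):
        q = diag[i] - x - (off[i - 1] ** 2 / q if i > 0 else 0.0)
        if q == 0.0:
            q = 1e-300  # perturb an exact zero pivot
        if q < 0.0:
            count += 1
    return count


def kth_eigenvalue(diag, off, k, tol=1e-12):
    """k-th smallest eigenvalue (k = 1, 2, ...) by bisection on the Sturm count."""
    n = len(diag)
    lo = 0.0  # the matrix is positive definite
    hi = max(diag[i] + (abs(off[i - 1]) if i > 0 else 0.0)
             + (abs(off[i]) if i < n - 1 else 0.0) for i in range(n))  # Gershgorin bound
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if count_below(diag, off, mid) >= k:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


random.seed(1)
n, c = 40, 0.5
h = 1.0 / (n + 1)
min_gap = min_concavity = float("inf")
for _ in range(50):
    th = [random.random() for _ in range(n + 1)]
    vt = [random.random() for _ in range(n + 1)]
    r = random.random()
    mix = [r * x + (1 - r) * y for x, y in zip(th, vt)]
    d, e = tridiag(th, c, h)
    lam1, lam2 = kth_eigenvalue(d, e, 1), kth_eigenvalue(d, e, 2)
    lam_vt = kth_eigenvalue(*tridiag(vt, c, h), 1)
    lam_mix = kth_eigenvalue(*tridiag(mix, c, h), 1)
    min_gap = min(min_gap, lam2 - lam1)  # spectral gap (Lemma 4.5)
    min_concavity = min(min_concavity, lam_mix - (r * lam1 + (1 - r) * lam_vt))  # Theorem 2.2
print(min_gap, min_concavity)
```

With c=0.5 the printed gap stays well away from zero, and the concavity defect is nonnegative up to the bisection tolerance.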

Lemma 4.6

Assume \(\Omega \) a bounded open set, then there exist three constants \(R,M,\gamma >0\) such that for every \(\theta ,\vartheta \in L^\infty (\Omega ;[0,1])\), \(u'\) defined by (2.12), and \(\lambda ''\) defined by (2.11), satisfy

Proof

We define \(\lambda _1\) and \(\lambda _2\) as the first and second eigenvalues of \(-\mathrm{div}((1-c\theta )\nabla )\) with Dirichlet conditions, and u as the unique positive eigenfunction with unit norm in \(L^2(\Omega )\) corresponding to \(\lambda _1\). Since \(u'\) is orthogonal to u in \(L^2(\Omega )\), we have

Using then \(u'\) as test function in (2.12), we have

Therefore

Using here Lemma 4.5, Cauchy-Schwarz’s inequality and

with \(\lambda ^*_1\) the first eigenvalue of \(-\Delta \) in \(\Omega \) with Dirichlet conditions, we conclude the existence of \(R>0\) such that the first assertion in (4.18) holds. From this inequality, (4.17), (4.20), and (2.11), we easily conclude (4.18). \(\square \)

Proof of Theorem 4.2

Taking into account Lemma 4.6 and Remark 4.4, we have that (4.6), and the fact that \({\hat{\theta }}\) is a solution of (2.4), are an immediate consequence of Theorem 4.3 applied to \(K=L^\infty (\Omega ;[0,1])\subset L^2(\Omega )\) and \(F(\theta )=-\Lambda (\theta )\).

It remains to prove (4.7). Given \({\hat{\theta }}\) a solution of (2.4), and defining \(\Lambda \) by (2.8), we have

where thanks to \({\hat{\theta }}\) solution of (2.4), we have

Using then \(\Lambda ({\hat{\theta }})-\Lambda (\theta _n)={\hat{\lambda }}-\lambda _n\) and the second inequality in (4.18), we get

with \(u'_t\) the solution of (2.12) for \(\theta ={\hat{\theta }}\) and \(\vartheta =\theta _n\).

Now, we use that (4.22) and (4.18) imply

where C only depends on \(u_0\) and c. This proves (4.7). \(\square \)

5 Numerical Examples

In this last section, we present some numerical experiments corresponding to the algorithm described in Section 4.

Our first example refers to the case where \(\Omega \) is a ball in \({\mathbb {R}}^N\). In this case, as a simple application of the uniqueness of the optimal state function given by Theorem 2.1, we can easily show that the solutions are radial. The corresponding result is given in Proposition 5.1 below. In the case of the minimization of the first eigenvalue, a similar result has been obtained in [2] (see [11, 12, 21] for related results); there the proof is more delicate than in the present paper due to the lack of uniqueness. We also recall that for the minimization problem, the corresponding solutions \({\hat{\theta }}\) are unrelaxed, i.e. they are characteristic functions. In the maximization problem, Theorem 2.7 shows that this is not the case.

Proposition 5.1

Let \(\Omega \) be the unit ball in \({\mathbb {R}}^N\). Then, there exists a unique solution \(({\hat{\theta }},{\hat{u}})\) of (2.5). Moreover \({\hat{u}}\) is a positive decreasing radial function in \(W^{2,\infty }(\Omega )\) and \({\hat{\theta }}\) is a radial function in \(W^{1,\infty }(\Omega )\).

Proof

Along the proof, we denote by \(B_R\) the ball of center 0 and radius R and by \(|A|_{N-1}\) the \((N-1)\)-Hausdorff measure of a subset \(A\subset {\mathbb {R}}^N\).

Assume \({\hat{\theta }}\) is a solution of (2.4) and \({\hat{u}}\) the solution of (2.6). Then, thanks to the symmetry properties of the unit ball, \({\tilde{\theta }}(x):={\hat{\theta }}(Px)\) is also a solution of (2.4) for every orthogonal matrix P. Moreover, the solution of (2.6) corresponding to \({\tilde{\theta }}\) is given by \({\tilde{u}}(x)={\hat{u}}(Px)\). Since \({\tilde{u}}={\hat{u}}\) by Theorem 2.1, we get \({\hat{u}}(Px)={\hat{u}}(x)\) for every orthogonal matrix P. This proves that \({\hat{u}}\) is a radial function.

Once we know that \({\hat{u}}\) is radial, we get that, for every solution \({\tilde{\theta }}\) of (2.4), the function \({\hat{\theta }}\) defined by

is also a solution of (2.4), which is radial.

With a slight abuse of notation, let us also denote by \({\hat{u}}\), \({\hat{\theta }}\) the real functions on (0, 1) satisfying

These functions satisfy

with \({\hat{\lambda }}>0\) the value of the maximum in (2.4). From (2.17), we have \({\hat{\theta }}=1\) in \([0,\delta ]\) for some \(\delta >0\), and by Theorem 2.5, the function \({d{\hat{u}}\over dr}\) is continuous in [0, 1]. Then, (5.1) shows that \({\hat{\theta }}\) is a continuous function. Using also (2.17) and that (5.1) implies

we conclude that \({\hat{\theta }}\) is in \(W^{1,\infty }(0,1)\) and then by (5.1), the function \({\hat{u}}\) is in \(W^{2,\infty }(0,1)\).

To finish, it remains to prove the uniqueness of \({\hat{\theta }}\). Using the method of characteristics, this follows from (2.17), \({\hat{u}}\in W^{1,\infty }(0,1)\) and the fact that \({\hat{\theta }}\) is a solution of

for every solution \({\hat{\theta }}\) of (2.4). \(\square \)

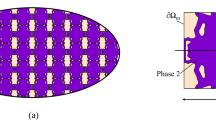

In Figs. 1, 2 and 3, we represent the optimal proportion \(\theta \) of the best conductive material for \(\Omega \) the unit disk, \(c=0.5\) and \(\kappa =p|\Omega |\), with \(p=0.2\), \(p=0.5\) and \(p=0.8\), respectively. Since we know that \({\hat{\theta }}\) is radial, we have applied the algorithm of Section 4 directly to the corresponding one-dimensional problem.
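For radial functions on the unit ball, the one-dimensional problem can be written explicitly. The following is the standard radial form of the operator, stated here as an illustration (the precise one-dimensional discretization used for the figures is not described in the text), with \(|\partial B_1|_{N-1}\) the \((N-1)\)-Hausdorff measure of the unit sphere, as in the notation of the proof of Proposition 5.1:

$$\begin{aligned} &-\frac{1}{r^{N-1}}\frac{d}{dr}\Big (r^{N-1}\big (1-c\,\theta (r)\big )\frac{du}{dr}\Big )=\lambda \,u\ \hbox { in }(0,1),\qquad \frac{du}{dr}(0)=0,\quad u(1)=0,\\ &\int _\Omega \theta \,dx=|\partial B_1|_{N-1}\int _0^1\theta (r)\,r^{N-1}dr,\qquad \int _\Omega (1-c\theta )|\nabla u|^2dx=|\partial B_1|_{N-1}\int _0^1\big (1-c\theta (r)\big )\Big |\frac{du}{dr}\Big |^2r^{N-1}dr. \end{aligned}$$

For the disk (N=2), \(|\partial B_1|_{1}=2\pi \), so each step of the algorithm only requires one-dimensional integrals with the weight r.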

In Figs. 4, 5 and 6, we represent the optimal proportion \(\theta \) of the worse conductive material for \(\Omega \) the unit square, \(c=0.5\) and \(\kappa =p|\Omega |\), with p as above. As expected, the solution has the symmetries of the square.

References

Allaire, G.: Shape optimization by the homogenization method. Appl. Math. Sci. 146. Springer-Verlag, New York (2002)

Alvino, A., Trombetti, G., Lions, P.L.: On optimization problems with prescribed rearrangements. Nonlinear Anal. 13(2), 185–220 (1989)

Buttazzo, G., Oudet, E., Velichkov, B.: A free boundary problem arising in PDE optimization. Calc. Var. Partial Differential Equations 54(4), 3829–3856 (2015)

Casado-Díaz, J.: Some smoothness results for the optimal design of a two-composite material which minimizes the energy. Calc. Var. Partial Differential Equations 53(3–4), 465–486 (2015)

Casado-Díaz, J.: Smoothness properties for the optimal mixture of two isotropic materials: the compliance and eigenvalue problems. SIAM J. Control Optim. 53(4), 2319–2349 (2015)

Casado-Díaz, J.: A characterization result for the existence of a two-phase material minimizing the first eigenvalue. Ann. Inst. H. Poincaré 34(15), 1215–1226 (2017)

Casado-Díaz, J., Conca, C., Vásquez-Varas, D.: The maximization of the \(p\)-Laplacian energy for a two-phase material. SIAM J. Control Optim. 59(2), 1497–1519 (2021)

Casado-Díaz, J., Conca, C., Vásquez-Varas, D.: Minimization of the \(p\)-Laplacian first eigenvalue for a two-phase material. J. Comput. Appl. Math. 399 (2022)

Casado-Díaz, J., Conca, C., Vásquez-Varas, D.: Numerical maximization of the \(p\)-Laplacian energy of a two-phase material. SIAM J. Numer. Anal. 59(6), 3077–3097 (2021)

Cherkaev, A., Cherkaeva, E.: Stable optimal design for uncertain loading conditions. In: Berdichevsky, V., Jikov, V., Papanicolaou, G. (eds.) Homogenization: in memory of Serguei Kozlov. Series on Advances in Mathematics for Applied Sciences 50, pp. 193–213. World Scientific, Singapore (1999)

Conca, C., Laurain, A., Mahadevan, R.: Minimization of the ground state for two phase conductors in low contrast regime. SIAM J. Appl. Math. 72(4), 1238–1259 (2012)

Conca, C., Mahadevan, R., Sanz, L.: An extremal eigenvalue problem for a two-phase conductor in a ball. Appl. Math. Optim. 60(2), 173–184 (2009)

Corduneanu, C.: Principles of differential and integral equations. Allyn and Bacon Inc, Boston (1971)

Cox, S., Lipton, R.: Extremal eigenvalue problems for two-phase conductors. Arch. Rational Mech. Anal. 136(2), 101–117 (1996)

Donaldson, T.K., Trudinger, N.S.: Orlicz-Sobolev spaces and imbedding theorems. J. Functional Analysis 8, 52–75 (1971)

Fefferman, C., Stein, E.M.: \(H^p\) spaces of several variables. Acta Math. 129(3–4), 137–193 (1972)

Goodman, J., Kohn, R.V., Reyna, L.: Numerical study of a relaxed variational problem for optimal design. Comput. Methods Appl. Mech. Engrg. 57(1), 107–127 (1986)

Huang, Y.Q., Li, R., Liu, W.: Preconditioned descent algorithms for p-Laplacian. J. Sci. Comput. 32(2), 343–371 (2007)

John, F., Nirenberg, L.: On functions of bounded mean oscillation. Comm. Pure Appl. Math. 14, 415–426 (1961)

LaSalle, J.P.: Some extensions of Liapunov’s second method. IRE Trans. Circuit Theory CT-7, 520–527 (1960)

Mohammadi, S.A., Yousefnezhad, M.: Optimal ground state energy of two-phase conductors. Electron. J. Diff. Eqns. 171, 8 (2014)

Murat, F.: Un contre-exemple pour le problème du contrôle dans les coefficients. CRAS Sci. Paris A 273, 708–711 (1971)

Murat, F.: Théorèmes de non existence pour des problèmes de contrôle dans les coefficients. CRAS Sci. Paris A 274, 395–398 (1972)

Murat, F.: \(H\)-convergence. Séminaire d’Analyse Fonctionnelle et Numérique, 1977-78, Université d’Alger, multicopied, 34 pp. English translation: Murat, F., Tartar, L.: H-convergence. In: Cherkaev, A., Kohn, R.V. (eds.) Topics in the Mathematical Modelling of Composite Materials. Progress in Nonlinear Differential Equations and their Applications 31, pp. 139–174. Birkhäuser, Boston (1998)

Murat, F., Tartar, L.: Calcul des variations et homogénéisation. In: Les méthodes de l’homogénéisation: théorie et applications en physique, pp. 319–369. Eyrolles, Paris (1985). English translation: Murat, F., Tartar, L.: Calculus of variations and homogenization. In: Cherkaev, A., Kohn, R.V. (eds.) Topics in the Mathematical Modelling of Composite Materials. Progress in Nonlinear Differential Equations and their Applications 31, pp. 139–174. Birkhäuser, Boston (1998)

Tartar, L.: The general theory of homogenization. A personalized introduction. Springer, Berlin Heidelberg (2009)

Zeidler, E.: Applied Functional Analysis. Main principles and their applications. Appl. Math. Sci. 109, Springer-Verlag, New York, (1995)

Acknowledgements

This work has been partially supported by the project PID2020-116809GB-I00 of the Ministerio de Economía, Industria y Competitividad of Spain.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.



About this article

Cite this article

Casado-Díaz, J.: The Maximization of the First Eigenvalue for a Two-Phase Material. Appl. Math. Optim. 86, 11 (2022). https://doi.org/10.1007/s00245-022-09825-8
