Abstract

The Lott–Sturm–Villani Curvature-Dimension condition provides a synthetic notion for a metric-measure space to have Ricci-curvature bounded from below and dimension bounded from above. We prove that it is enough to verify this condition locally: an essentially non-branching metric-measure space \((X,\mathsf {d},{\mathfrak {m}})\) (so that \((\text {supp}({\mathfrak {m}}),\mathsf {d})\) is a length-space and \({\mathfrak {m}}(X) < \infty \)) verifying the local Curvature-Dimension condition \({\mathsf {CD}}_{loc}(K,N)\) with parameters \(K \in {\mathbb {R}}\) and \(N \in (1,\infty )\), also verifies the global Curvature-Dimension condition \({\mathsf {CD}}(K,N)\). In other words, the Curvature-Dimension condition enjoys the globalization (or local-to-global) property, answering a question which had remained open since the beginning of the theory. For the proof, we establish an equivalence between \(L^1\)- and \(L^2\)-optimal-transport–based interpolation. The challenge is not merely a technical one, and several new conceptual ingredients which are of independent interest are developed: an explicit change-of-variables formula for densities of Wasserstein geodesics depending on a second-order temporal derivative of associated Kantorovich potentials; a surprising third-order theory for the latter Kantorovich potentials, which holds in complete generality on any proper geodesic space; and a certain rigidity property of the change-of-variables formula, allowing us to bootstrap the a-priori available regularity. As a consequence, numerous variants of the Curvature-Dimension condition proposed by various authors throughout the years are shown to, in fact, all be equivalent in the above setting, thereby unifying the theory.


1 Introduction

The Curvature-Dimension condition \({\mathsf {CD}}(K,N)\) was first introduced in the 1980’s by Bakry and Émery [15, 16] in the context of diffusion generators, having in mind primarily the setting of weighted Riemannian manifolds, namely smooth Riemannian manifolds endowed with a smooth density with respect to the Riemannian volume. The \({\mathsf {CD}}(K,N)\) condition serves as a generalization of the classical condition in the non-weighted Riemannian setting of having Ricci curvature bounded below by \(K \in {\mathbb {R}}\) and dimension bounded above by \(N \in [1,\infty ]\) (see e.g. [56, 60] for further possible extensions). Numerous consequences of this condition have been obtained over the past decades, extending results from the classical non-weighted setting and at times establishing new ones directly in the weighted one. These include diameter bounds, volume comparison theorems, heat-kernel and spectral estimates, Harnack inequalities, topological implications, Brunn–Minkowski-type inequalities, and isoperimetric, functional and concentration inequalities—see e.g. [17, 48, 77] and the references therein.

Being a differential and Hilbertian condition, it was for many years unclear how to extend the Bakry–Émery definition beyond the smooth Riemannian setting, as interest in (measured) Gromov-Hausdorff limits of Riemannian manifolds and other non-Hilbertian singular spaces steadily grew. In parallel, and apparently unrelatedly, the theory of Optimal-Transport was being developed in increasing generality following the influential work of Brenier [21] (see e.g. [2, 36, 53, 65, 75,76,77]). Given two probability measures \(\mu _0,\mu _1\) on a common geodesic space \((X,\mathsf {d})\) and a prescribed cost of transporting a single mass from point x to y, the Monge-Kantorovich idea is to optimally couple \(\mu _0\) and \(\mu _1\) by minimizing the total transportation cost, and as a byproduct obtain a Wasserstein geodesic \([0,1] \ni t \mapsto \mu _t\) connecting \(\mu _0\) and \(\mu _1\) in the space of probability measures \({\mathcal {P}}(X)\). This gives rise to the notion of displacement convexity of a given functional on \({\mathcal {P}}(X)\) along Wasserstein geodesics, introduced and studied by McCann [52]. Following the works of Cordero-Erausquin–McCann–Schmuckenschläger [33], Otto–Villani [62] and von Renesse–Sturm [70], it was realized that the \({\mathsf {CD}}(K,\infty )\) condition in the smooth setting may be equivalently formulated synthetically as a certain convexity property of an entropy functional along \(W_2\) Wasserstein geodesics (associated to \(L^2\)-Optimal-Transport, when the transport-cost is given by the squared-distance function).

This idea culminated in the seminal works of Lott–Villani [51] and Sturm [73, 74], where a synthetic definition of \({\mathsf {CD}}(K,N)\) was proposed on a general (complete, separable) metric space \((X,\mathsf {d})\) endowed with a (locally-finite Borel) reference measure \({\mathfrak {m}}\) (“metric-measure space”, or m.m.s.); it was moreover shown that the latter definition coincides with the Bakry–Émery one in the smooth Riemannian setting (and in particular in the classical non-weighted one), that it is stable under measured Gromov-Hausdorff convergence of m.m.s.’s, and that it implies various geometric and analytic inequalities relating metric and measure, in complete analogy with the smooth setting. It was subsequently also shown [58, 64] that Finsler manifolds and Alexandrov spaces satisfy the Curvature-Dimension condition. Thus emerged an overwhelmingly convincing notion of Ricci curvature lower bound K and dimension upper bound N for a general (geodesic) m.m.s. \((X,\mathsf {d},{\mathfrak {m}})\), leading to a rich and fruitful theory exploring the geometry of m.m.s.’s by means of Optimal-Transport.

One of the most important and longstanding open problems in the Lott–Sturm–Villani theory (see [73, 74] and [77, pp. 888, 907]) is whether the Curvature-Dimension condition on a general geodesic m.m.s. (say, having full support \(\text {supp}({\mathfrak {m}}) = X\)) enjoys the globalization (or local-to-global) property: if the \({\mathsf {CD}}(K,N)\) condition is known to hold on a neighborhood \(X_o\) of any given point \(o \in X\) (a property henceforth denoted by \({\mathsf {CD}}_{loc}(K,N)\)), does it also necessarily hold on the entire space? Clearly this is indeed the case in the smooth setting, as both curvature and dimension may be computed locally (by equivalence with the differential \({\mathsf {CD}}\) definition). However, for reasons which we will expand on shortly, this is not at all clear and in some cases is actually false on general m.m.s.’s. An affirmative answer to this question would immensely facilitate the verification of the \({\mathsf {CD}}\) condition, which at present requires testing all possible \(W_2\)-geodesics on X, instead of only those contained in each neighborhood \(X_o\). The analogous question for sectional curvature on Alexandrov spaces (where the dimension N is absent) does indeed have an affirmative answer, as shown by Toponogov and, in full generality, by Perelman (see [22]).

Several partial answers to the local-to-global problem have already been obtained in the literature. A geodesic space \((X,\mathsf {d})\) is called non-branching if geodesics are forbidden to branch at an interior-point into two separate geodesics. On a non-branching geodesic m.m.s. \((X,\mathsf {d},{\mathfrak {m}})\) having full support, it was shown by Sturm in [73, Theorem 4.17] that the local-to-global property is satisfied when \(N = \infty \) (assuming that the space of probability measures with finite \({\mathfrak {m}}\)-relative entropy is geodesically convex; see also [77, Theorem 30.42] where the same globalization result was proved under a different condition involving the existence of a full-measure totally-convex subset of X of finite-dimensional points). Still for non-branching geodesic m.m.s.’s having full support, a positive answer was also obtained by Villani in [77, Theorem 30.37] for the case \(K=0\) and \(N \in [1,\infty )\).

We stress that in these results, the restriction to non-branching spaces is not merely a technical assumption: an example of a heavily-branching m.m.s. verifying \({\mathsf {CD}}_{loc}(0,4)\) which does not verify \({\mathsf {CD}}(K,N)\) for any fixed \(K \in {\mathbb {R}}\) and \(N\in [1,\infty ]\) was constructed by Rajala in [67]. Consequently, a natural assumption is to require that \((X,\mathsf {d})\) be non-branching, or more generally, to require that the \(L^2\)-Optimal-Transport on \((X,\mathsf {d},{\mathfrak {m}})\) be concentrated (i.e. up to a null-set) on a non-branching subset of geodesics, an assumption introduced by Rajala and Sturm in [68] under the name essentially non-branching (see Sect. 6 for precise definitions). For instance, it is known [68] that measured Gromov-Hausdorff limits of Riemannian manifolds satisfying \({\mathsf {CD}}(K,\infty )\), and more generally, \({\mathsf {RCD}}(K,\infty )\) spaces, always satisfy the essentially non-branching assumption (see Sect. 13).

In this work, we provide an affirmative answer to the globalization problem in the remaining range of parameters: for \(N \in (1,\infty )\) and \(K \in {\mathbb {R}}\), the \({\mathsf {CD}}(K,N)\) condition satisfies the local-to-global property on an essentially non-branching geodesic m.m.s. \((X,\mathsf {d},{\mathfrak {m}})\) having finite total-measure and full support. The exclusion of the case \(N=1\) is to avoid unnecessary pathologies, and is not essential. Our assumption that \({\mathfrak {m}}\) has finite total-measure (or equivalently, by scaling, that it is a probability measure) is most probably technical, but we did not verify that it can be removed, so as to avoid overloading the paper even further. This result is new even under the additional assumption that the space is infinitesimally Hilbertian (see [40]); such spaces are said to verify \({\mathsf {RCD}}(K,N)\), and for them the assumption of being (globally) essentially non-branching is in fact superfluous.

To better explain the difference between the previously known cases when \(\frac{K}{N} = 0\) and the conceptual challenge which the newly treated case \(\frac{K}{N} \ne 0\) poses, as well as to sketch our solution and its main new ingredients, which we believe are of independent interest, we provide some additional details below and refer to Sect. 6 for precise definitions.

1.1 Disentangling volume-distortion coefficients

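Roughly speaking, the \({\mathsf {CD}}(K,N)\) condition prescribes a synthetic second-order bound on how an infinitesimal volume changes when it is moved along a \(W_2\)-geodesic: the volume distortion (or transport Jacobian) J along the geodesic should satisfy the following interpolation inequality for \(t_0 = 0\) and \(t_1 = 1\):

$$\begin{aligned} J^{\frac{1}{N}}\big ((1-t)\,t_0 + t\,t_1\big ) \ge \tau _{K,N}^{(1-t)}\big (|t_1-t_0|\theta \big )\, J^{\frac{1}{N}}(t_0) + \tau _{K,N}^{(t)}\big (|t_1-t_0|\theta \big )\, J^{\frac{1}{N}}(t_1) \;\;\; \forall t \in [0,1], \end{aligned} \tag{1.1}$$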

where \(\tau _{K,N}^{(t)}(\theta )\) is an explicit coefficient depending on the curvature \(K \in {\mathbb {R}}\), dimension \(N \in [1,\infty ]\), the interpolating time parameter \(t \in [0,1]\) and the total length of the geodesic \(\theta \in [0,\infty )\) (with an appropriate interpretation of (1.1) when \(N=\infty \)). When \(N <\infty \), the latter coefficient is obtained by geometrically averaging two different volume distortion coefficients:

$$\begin{aligned} \tau _{K,N}^{(t)}(\theta ) := t^{\frac{1}{N}} \, \sigma _{K,N-1}^{(t)}(\theta )^{\frac{N-1}{N}} , \end{aligned} \tag{1.2}$$

where the \(\sigma _{K,N-1}^{(t)}(\theta )\) term encodes an \((N-1)\)-dimensional evolution orthogonal to the transport and thus affected by the curvature, and the linear term t represents a one-dimensional evolution tangential to the transport and thus independent of any curvature information. As with the Jacobi equation in the usual Riemannian setting, the function \([0,1] \ni t \mapsto \sigma (t) := \sigma _{K,N-1}^{(t)}(\theta )\) is explicitly obtained by solving the second-order differential equation:

$$\begin{aligned} \sigma ''(t) + \theta ^2 \frac{K}{N-1}\, \sigma (t) = 0 \;\; \text {on } [0,1], \qquad \sigma (0) = 0 , \;\; \sigma (1) = 1 . \end{aligned} \tag{1.3}$$
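A short numerical sketch of (1.2) and (1.3), assuming the standard closed forms of the \(\sigma\) coefficients, which are not spelled out above: a sine profile for \(K>0\), the linear profile for \(K=0\), a hyperbolic-sine profile for \(K<0\), and the usual convention \(\sigma = +\infty\) once \(K\theta^2 \ge M\pi^2\). The helper names `sigma`, `tau` and `ode_residual` are our own. The sketch lets one check that \(\sigma_{K,N-1}^{(t)}(\theta)\) solves (1.3) up to finite-difference error, and that \(\tau_{K,N}^{(t)}(\theta) = t\) when \(K = 0\).

```python
import math


def sigma(t, theta, K, M):
    """sigma_{K,M}^{(t)}(theta): solves s'' + (theta^2 K / M) s = 0, s(0) = 0, s(1) = 1."""
    D = K * theta ** 2 / M  # coefficient of the normalized ODE on [0, 1]
    if D >= math.pi ** 2:
        return math.inf  # conventional value once K theta^2 >= M pi^2
    if D > 0:
        r = math.sqrt(D)
        return math.sin(t * r) / math.sin(r)
    if D < 0:
        r = math.sqrt(-D)
        return math.sinh(t * r) / math.sinh(r)
    return t  # K = 0 or theta = 0: linear profile


def tau(t, theta, K, N):
    """tau_{K,N}^{(t)}(theta) = t^{1/N} sigma_{K,N-1}^{(t)}(theta)^{(N-1)/N}, for N > 1."""
    s = sigma(t, theta, K, N - 1)
    if math.isinf(s):
        return math.inf
    return t ** (1.0 / N) * s ** ((N - 1.0) / N)


def ode_residual(t, theta, K, M, h=1e-4):
    """Central finite-difference residual of s'' + (theta^2 K / M) s at time t."""
    s = lambda u: sigma(u, theta, K, M)
    second = (s(t + h) - 2.0 * s(t) + s(t - h)) / h ** 2
    return second + theta ** 2 * K / M * s(t)


if __name__ == "__main__":
    for K in (-2.0, 0.0, 2.0):
        print(K, sigma(0.3, 1.5, K, 3.0), tau(0.3, 1.5, K, 3.0))
```

For \(K > 0\) one observes \(\sigma \ge t\), and hence \(\tau \ge t\), which is the concavity of the sine profile at work.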

The common feature of the previously known cases \(\frac{K}{N} = 0\) for the local-to-global problem is the linear behaviour in time of the distortion coefficient: \(\tau _{K,N}^{(t)}(\theta ) = t\). A major obstacle with the remaining cases \(\frac{K}{N} \ne 0\) is that the function \([0,1] \ni t \mapsto \tau _{K,N}^{(t)}(\theta )\) does not satisfy a second-order differential characterization such as (1.3). If it did, it would be possible to express the interpolation inequality (1.1) on \([t_0,t_1] \subset [0,1]\) as a second-order differential inequality for \(J^{\frac{1}{N}}\) on \([t_0,t_1]\) (see Lemmas A.5 and A.6), and so if (1.1) were known to hold for all \(\left\{ [t_0^i,t_1^i]\right\} _{i=1\ldots k}\) so that \(\cup _{i=1}^k (t_0^i,t_1^i) = (0,1)\), it would follow that (1.1) also holds for \([t_0,t_1] = [0,1]\). However, a counterexample to the latter implication was constructed by Deng and Sturm in [34], thereby showing that:

$$\begin{aligned} {\mathsf {CD}}_{loc}(K,N) \text { along a given } W_2\text {-geodesic} \;\; \not \Rightarrow \;\; {\mathsf {CD}}(K,N) \text { along the same geodesic.} \end{aligned} \tag{1.4}$$

On the other hand, the above argument does work if we were to replace \(\tau \) by the slightly smaller \(\sigma \) coefficients. This motivated Bacher and Sturm in [14] to define for \(K \in {\mathbb {R}}\) and \(N \in (1,\infty )\) the slightly weaker “reduced” Curvature-Dimension condition, denoted by \({\mathsf {CD}}^{*}(K,N)\), where the distortion coefficients \(\tau _{K,N}^{(t)}(\theta )\) are indeed replaced by \(\sigma _{K,N}^{(t)}(\theta )\). Using the above gluing argument (after resolving numerous technicalities), the local-to-global property for \({\mathsf {CD}}^*(K,N)\) was established in [14] on non-branching spaces (see also the work of Erbar–Kuwada–Sturm [35, Corollary 3.13, Theorem 3.14 and Remark 3.26] for an extension to the essentially non-branching setting, cf. [29, 68]). Let us also mention here the work of Ambrosio–Mondino–Savaré [10], who, independently of a similar result in [35], established the local-to-global property for \({\mathsf {RCD}}^*(K,N)\) proper spaces, \(K \in {\mathbb {R}}\) and \(N \in [1,\infty ]\), without assuming a-priori any non-branching condition (although, a-posteriori, such spaces must be essentially non-branching by [68]).
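To see concretely why the \(\sigma\) coefficients glue, here is a sketch of the comparison principle behind the differential characterization, for the reduced inequality \(f((1-s)t_0 + s t_1) \ge \sigma_{K,N}^{(1-s)}(|t_1-t_0|\theta) f(t_0) + \sigma_{K,N}^{(s)}(|t_1-t_0|\theta) f(t_1)\) with \(f = J^{1/N}\). If \(f'' + \theta^2 \frac{K}{N} f = 0\), the inequality holds with equality on every subinterval; a strict supersolution satisfies it strictly, and a strict subsolution violates it. The helper `sigma_gap`, the test functions \(\sin(\sqrt{c}\, t + 0.2)\) and the parameters \(\theta = 1\), \(K = N = 3\) are hypothetical choices. They keep \(\theta |t_1 - t_0| \sqrt{K/N} < \pi\) and \(f > 0\). This only samples a few points and is not a substitute for Lemmas A.5 and A.6.

```python
import math


def sigma(t, theta, K, N):
    """sigma_{K,N}^{(t)}(theta) via the standard closed forms (assumed, see lead-in)."""
    D = K * theta ** 2 / N
    if D >= math.pi ** 2:
        return math.inf
    if D > 0:
        r = math.sqrt(D)
        return math.sin(t * r) / math.sin(r)
    if D < 0:
        r = math.sqrt(-D)
        return math.sinh(t * r) / math.sinh(r)
    return t


def sigma_gap(f, t0, t1, s, theta, K, N):
    """f((1-s) t0 + s t1) minus the sigma-interpolation of f(t0), f(t1).

    A nonnegative gap means the reduced (sigma-based) inequality holds at s."""
    length = abs(t1 - t0) * theta  # length of the restricted geodesic
    lhs = f((1.0 - s) * t0 + s * t1)
    rhs = sigma(1.0 - s, length, K, N) * f(t0) + sigma(s, length, K, N) * f(t1)
    return lhs - rhs


if __name__ == "__main__":
    theta, K, N = 1.0, 3.0, 3.0  # so theta^2 K / N = 1
    for c in (1.0, 2.0, 0.25):  # solution, supersolution, subsolution of f'' + f = 0
        f = lambda t, c=c: math.sin(math.sqrt(c) * t + 0.2)
        print(c, sigma_gap(f, 0.0, 1.0, 0.5, theta, K, N))
```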

Without requiring any non-branching assumptions, the \({\mathsf {CD}}^*(K,N)\) condition was shown in [14] to imply the same geometric and analytic inequalities as the \({\mathsf {CD}}(K,N)\) condition, but with slightly worse constants (typically missing the sharp constant by a factor of \(\frac{N-1}{N}\)), suggesting that the latter is still the “right” notion of Curvature-Dimension. We conclude that the local-to-global challenge is to properly disentangle the orthogonal and tangential components of the volume distortion J before attempting to integrate each of them individually as above. This also highlights the geometric nature of the globalization problem, and demonstrates that it is not merely a technical challenge.

1.2 Comparing \(L^2\)- and \(L^1\)-Optimal-Transport and main result

There have been a couple of prior attempts to disentangle the volume distortion into its orthogonal and tangential components, by comparing between \(W_2\) and \(W_1\) Wasserstein geodesics (associated to \(L^2\)- and \(L^1\)-Optimal-Transport, respectively). In [30], this strategy was implicitly employed by Cavalletti and Sturm to show that \({\mathsf {CD}}_{loc}(K,N)\) implies the measure-contraction property \({\mathsf {MCP}}(K,N)\), which in a sense is a particular case of \({\mathsf {CD}}(K,N)\) when one end of the \(W_2\)-geodesic is a Dirac delta at a point \(o \in X\) (see [57, 74]). In that case, all of the transport-geodesics have o as a common end point, so by considering a disintegration of \({\mathfrak {m}}\) on the family of spheres centered at o, and restricting the \(W_2\)-geodesic to these spheres, the desired disentanglement was obtained. In the subsequent work [24], Cavalletti generalized this approach to a particular family of \(W_2\)-geodesics, having the property that for a.e. transport-geodesic \(\gamma \), its length \(\ell (\gamma )\) is a function of \(\varphi (\gamma _{0})\), where \(\varphi \) is a Kantorovich potential associated to the corresponding \(L^2\)-Optimal-Transport problem. Here the disintegration was with respect to the individual level sets of \(\varphi \), and again the restriction of the \(W_2\)-geodesic enjoying the latter property to these level sets (formally of co-dimension one) induced a \(W_1\)-geodesic, enabling disentanglement.

Another application of \(L^1\)-Optimal-Transport, seemingly unrelated to disentanglement of \(W_2\)-geodesics, appeared in the recent breakthrough work of Klartag [47] on localization in the smooth Riemannian setting. The localization paradigm, developed by Payne–Weinberger [63], Gromov–Milman [44] and Kannan–Lovász–Simonovits [46], is a powerful tool to reduce various analytic and geometric inequalities on the space \(({\mathbb {R}}^n,\mathsf {d},{\mathfrak {m}})\) to appropriate one-dimensional counterparts. The original approach by these authors was based on a bisection method, and thus inherently confined to \({\mathbb {R}}^n\). In [47], Klartag extended the localization paradigm to the weighted Riemannian setting, by disintegrating the reference measure \({\mathfrak {m}}\) on \(L^1\)-Optimal-Transport geodesics (or “rays”) associated to the inequality under study (cf. Feldman–McCann [38]), and proving that the resulting conditional one-dimensional measures inherit the Curvature-Dimension properties of the underlying manifold.

Klartag’s idea is quite robust, and permitted Cavalletti and Mondino in [27] to avoid the smooth techniques used in [47] and to extend the localization paradigm to the framework of essentially non-branching geodesic m.m.s.’s \((X,\mathsf {d},{\mathfrak {m}})\) of full-support verifying \({\mathsf {CD}}_{loc}(K,N)\), \(N \in (1,\infty )\). By a careful study of the structure of \(W_1\)-geodesics, Cavalletti and Mondino were able to transfer the Curvature-Dimension information encoded in the \(W_2\)-geodesics to the individual rays along which a given \(W_1\)-geodesic evolves, thereby proving that on such spaces,

$$\begin{aligned} \text {the conditional measures of } {\mathfrak {m}} \text { on the transport rays of any } W_1\text {-geodesic are one-dimensional } {\mathsf {CD}}(K,N) \text { densities.} \end{aligned} \tag{1.5}$$

Note that the densities of one-dimensional \({\mathsf {CD}}(K,N)\) spaces are characterized via the \(\sigma \) (as opposed to \(\tau \)) volume-distortion coefficients (see the “Appendix”), so by applying the gluing argument described in the previous subsection, only local \({\mathsf {CD}}_{loc}(K,N)\) information was required in [27] to obtain global control over the entire one-dimensional transport ray.

This allowed Cavalletti and Mondino (see [27, 28]) to obtain a series of sharp geometric and analytic inequalities for \({\mathsf {CD}}_{loc}(K,N)\) spaces as above, in particular extending from the smooth Riemannian setting the sharp Lévy-Gromov [42] and Milman [55] isoperimetric inequalities, as well as the sharp Brunn-Minkowski inequality of Cordero-Erausquin–McCann–Schmuckenschläger [33] and Sturm [74], all in global form (see also Ohta [59]).

We would like to address at this point a certain general belief shared by some in the Optimal-Transport community, stating that the property \({\mathsf {BM}}(K,N)\) of satisfying the Brunn-Minkowski inequality (with sharp coefficients correctly depending on K, N), should be morally equivalent to the \({\mathsf {CD}}(K,N)\) condition. Rigorously establishing such an equivalence would immediately yield the local-to-global property of \({\mathsf {CD}}(K,N)\), by the Cavalletti–Mondino localization proof that \({\mathsf {CD}}_{loc}(K,N) \Rightarrow {\mathsf {BM}}(K,N)\). However, we were unsuccessful in establishing the missing implication \({\mathsf {BM}}(K,N) \Rightarrow {\mathsf {CD}}(K,N)\), and in fact a careful attempt in this direction seems to lead back to the circle of ideas we were ultimately able to successfully develop in this work.

Instead of starting our investigation from \({\mathsf {BM}}(K,N)\), our strategy is to directly start from a suitable modification of the property (1.5), which we dub \({\mathsf {CD}}^1(K,N)\), in which (1.5) is required to hold for transport rays associated to (signed) distance functions from level sets of continuous functions. A stronger condition, in which (1.5) is required to hold for transport rays associated to all 1-Lipschitz functions, is denoted by \({\mathsf {CD}}^1_{Lip}(K,N)\) (see Sect. 8 for precise definitions). The main result of this work consists of showing that \({\mathsf {CD}}^1(K,N) \Rightarrow {\mathsf {CD}}(K,N)\), by means of transferring the one-dimensional \({\mathsf {CD}}(K,N)\) information encoded in a family of suitably constructed \(L^1\)-Optimal-Transport rays, onto a given \(W_2\)-geodesic, thereby obtaining the correct disentanglement between tangential and orthogonal distortions. This goes in exactly the opposite direction to the one studied by Cavalletti and Mondino in [27], and completes the cycle:

$$\begin{aligned} {\mathsf {CD}}(K,N) \Rightarrow {\mathsf {CD}}_{loc}(K,N) \Rightarrow {\mathsf {CD}}^1(K,N) \Rightarrow {\mathsf {CD}}(K,N) . \end{aligned}$$

To the best of our knowledge, this decisive feature of our work (deducing \({\mathsf {CD}}(K,N)\) for a given \(W_2\)-geodesic by considering the \({\mathsf {CD}}_{\text {loc}}(K,N)\) information encoded in a family (in accordance with (1.4)) of different associated \(W_2\)-geodesics, manifesting itself in the \({\mathsf {CD}}^1(K,N)\) information along a family of different \(L^1\)-Optimal-Transport rays) has not been previously explored.

Main Theorem 1.1

Let \((X,\mathsf {d},{\mathfrak {m}})\) be an essentially non-branching m.m.s. with \({\mathfrak {m}}(X) < \infty \), and let \(K \in {\mathbb {R}}\) and \(N \in (1,\infty )\). Then the following statements are equivalent:

(1) \((X,\mathsf {d},{\mathfrak {m}})\) verifies \({\mathsf {CD}}(K,N)\).

(2) \((X,\mathsf {d},{\mathfrak {m}})\) verifies \({\mathsf {CD}}^*(K,N)\).

(3) \((X,\mathsf {d},{\mathfrak {m}})\) verifies \({\mathsf {CD}}^1_{Lip}(K,N)\).

(4) \((X,\mathsf {d},{\mathfrak {m}})\) verifies \({\mathsf {CD}}^1(K,N)\).

If in addition \((\text {supp}({\mathfrak {m}}),\mathsf {d})\) is a length-space, the above statements are equivalent to:

(5) \((X,\mathsf {d},{\mathfrak {m}})\) verifies \({\mathsf {CD}}_{loc}(K,N)\).

To this list one can also add the entropic Curvature-Dimension condition \({\mathsf {CD}}^e(K,N)\) of Erbar–Kuwada–Sturm [35], which is known to be equivalent to \({\mathsf {CD}}^*(K,N)\) for essentially non-branching spaces. In other words, all synthetic definitions of Curvature-Dimension are equivalent for essentially non-branching m.m.s.’s, and in particular, the local-to-global property holds for such spaces (recall that this is known to be false on m.m.s.’s where branching is allowed by [67]). The equivalence with \({\mathsf {CD}}_{loc}(K,N)\) is clearly false without some global assumption ultimately ensuring that \((\text {supp}({\mathfrak {m}}),\mathsf {d})\) is a geodesic-space, see Remark 13.4.

As already mentioned, and being slightly imprecise (see Sect. 13 for precise statements), the implications \({\mathsf {CD}}(K,N) \Rightarrow {\mathsf {CD}}^*(K,N) \Rightarrow {\mathsf {CD}}_{loc}(K,N)\) follow from the work of Bacher and Sturm [14], and the implication \({\mathsf {CD}}_{loc}(K,N) \Rightarrow {\mathsf {CD}}^1_{Lip}(K,N)\) follows by adapting to the present framework what was already proved by Cavalletti and Mondino in [27] (after taking care of the important maximality requirement of transport-rays, see Theorem 7.10). So almost all of our effort goes into proving that \({\mathsf {CD}}^1(K,N) \Rightarrow {\mathsf {CD}}(K,N)\). For a smooth weighted Riemannian manifold \((M,\mathsf {d},{\mathfrak {m}})\), it is an easy exercise to show the latter implication using the Bakry–Émery differential characterization of \({\mathsf {CD}}(K,N)\): simply use an appropriate umbilic hypersurface H passing through a given point \(p \in M\) and perpendicular to a given direction \(\xi \in T_p M\), and apply the \({\mathsf {CD}}^1(K,N)\) definition to the distance function from H. Of course, this provides no insight towards how to proceed in the m.m.s. setting, so it is natural to try and obtain an alternative synthetic proof, still in the smooth setting. While this is possible, it already poses a much greater challenge, which in some sense provided the required insight leading to the strategy we ultimately employ in this work.

1.3 Main new ingredients of proof

To achieve the right disentanglement, we are required to develop several new ingredients beyond the present state-of-the-art, which, being conceptual in nature, are in our opinion of independent interest.

(1) The first is a change-of-variables formula for the density of an \(L^2\)-Optimal-Transport geodesic in X (see Theorem 11.4), which depends on a second-order derivative of associated interpolating Kantorovich potentials.

Let \(\mathrm{Geo}(X)\) denote the collection of constant speed geodesics on X parametrized on the interval [0, 1], and let \(\mathrm{e}_t : \mathrm{Geo}(X) \ni \gamma \mapsto \gamma _t \in X\) denote the evaluation map at time \(t \in [0,1]\). Given two Borel probability measures \(\mu _0,\mu _1 \in {\mathcal {P}}(X)\) with finite second moments, any \(W_2\)-geodesic \([0,1] \ni t\mapsto \mu _t \in {\mathcal {P}}(X)\) can be lifted to an optimal dynamical plan \(\nu \in {\mathcal {P}}(\mathrm{Geo}(X))\), so that \((\mathrm{e}_t)_{\sharp } \nu = \mu _t\) for all \(t \in [0,1]\). Let \(\varphi \) denote a Kantorovich potential associated to the \(L^2\)-transport problem between \(\mu _0\) and \(\mu _1\). Given \(s,t\in (0,1)\), we introduce the time-propagated intermediate Kantorovich potential \(\Phi _s^t\) by pushing forward \(\varphi _s\) via \(\mathrm{e}_t \circ \mathrm{e}_s^{-1}\), where \(\left\{ \varphi _t\right\} _{t\in [0,1]}\) is the family of interpolating Kantorovich potentials obtained via the Hopf–Lax semi-group applied to \(\varphi \). While \(\mathrm{e}_t^{-1}\) may be multi-valued, Theorem 3.11 ensures that \(\Phi _s^t = \varphi _s \circ \mathrm{e}_s \circ \mathrm{e}_t^{-1}\) is well-defined on \(\mathrm{e}_t(G_\varphi )\), the set of t-mid-points of transport geodesics.

Theorem 11.4 states that if \((X,\mathsf {d},{\mathfrak {m}})\) is an essentially non-branching m.m.s. verifying \({\mathsf {CD}}^{1}(K,N)\) (with \({\mathfrak {m}}(X) < \infty \) and \(N \in (1,\infty )\)), and if \(\mu _0,\mu _1 \ll {\mathfrak {m}}\), then for \(\nu \)-a.e. transport-geodesic \(\gamma \in \mathrm{Geo}(X)\) of positive length:

$$\begin{aligned} \frac{\rho _{s} (\gamma _{s})}{\rho _{t}(\gamma _{t})} = \frac{\ell ^{2}(\gamma )}{\partial _{\tau }|_{\tau = t}\Phi _{s}^{\tau }(\gamma _{t})} \cdot h^\gamma _s(t) \;\;\; \text {for a.e. }t,s \in (0,1), \end{aligned} \tag{1.6}$$

where \(\rho _t\) are appropriate versions of the densities \(d\mu _t / d{\mathfrak {m}}\), and for every \(s \in (0,1)\), \(h^\gamma _s\) is a \({\mathsf {CD}}(\ell (\gamma )^2 K ,N)\) density on [0, 1] with \(h^\gamma _s(s) = 1\). In particular, for a.e. \(t,s\in (0,1)\), \(\partial _{\tau }|_{\tau = t}\Phi _{s}^{\tau }(\gamma _{t})\) exists and is positive. Here \(h^\gamma _s\) is obtained from the \({\mathsf {CD}}^1(K,N)\) condition applied to the transport-ray associated to the (signed) distance function from the level set \(\left\{ \varphi _s = \varphi _s(\gamma _s)\right\} \).
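As a sanity check of (1.6), consider a toy example of our own, not taken from the text. Let \(X = [0,3]\) carry Lebesgue measure, so that \({\mathsf {CD}}(0,N)\) holds. Take \(\mu_0\) uniform on [0, 1] and \(\mu_1\) uniform on [0, 2]. The optimal map is \(x \mapsto 2x\), and a Kantorovich potential for the cost \(\mathsf d^2/2\) is \(\varphi(x) = -x^2/2\). This gives \(\gamma_t = (1+t)x_0\), \(\ell(\gamma) = x_0\), \(\varphi_t(y) = -y^2/(2(1+t))\) and \(\rho_t = 1/(1+t)\). On this flat one-dimensional space we take \(h^\gamma_s \equiv 1\) (our assumption). The sketch differentiates \(\tau \mapsto \Phi_s^\tau(\gamma_t)\) numerically and compares both sides of (1.6).

```python
import math

# Toy setting (our assumption, not from the text): X = [0, 3] with Lebesgue measure,
# mu_0 = U[0,1], mu_1 = U[0,2], optimal map x -> 2x, phi(x) = -x^2/2 for the cost d^2/2.


def gamma(x0, t):
    """Transport geodesic starting at x0 in [0, 1]; its length is ell(gamma) = x0."""
    return (1.0 + t) * x0


def rho(t):
    """Density of mu_t = U[0, 1+t] with respect to Lebesgue measure."""
    return 1.0 / (1.0 + t)


def phi_t(y, t):
    """Interpolating potential phi_t = -Q_t(-phi), in closed form for this toy case."""
    return -y * y / (2.0 * (1.0 + t))


def Phi(s, tau, y):
    """Phi_s^tau(y) = phi_s(e_s(e_tau^{-1}(y))): follow the geodesic whose tau-point is y back to time s."""
    x0 = y / (1.0 + tau)
    return phi_t(gamma(x0, s), s)


def d_tau_Phi(x0, s, t, dt=1e-6):
    """Central difference of tau -> Phi_s^tau(gamma_t) at tau = t."""
    y = gamma(x0, t)
    return (Phi(s, t + dt, y) - Phi(s, t - dt, y)) / (2.0 * dt)


def cov_rhs(x0, s, t):
    """Right-hand side of (1.6) with h_s^gamma = 1 (flat, one-dimensional toy case)."""
    return x0 ** 2 / d_tau_Phi(x0, s, t)


if __name__ == "__main__":
    x0, s, t = 0.7, 0.3, 0.6
    print(rho(s) / rho(t), cov_rhs(x0, s, t))  # both close to 1.6 / 1.3
```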

Theorem 11.4 constitutes the culmination of Part II of this work, which is mostly dedicated to introducing the \({\mathsf {CD}}^{1}(K,N)\) condition and rigorously establishing the change-of-variables formula (1.6). Note that we refrain from making any assumptions on (the challenging) spatial regularity of \(\Phi _{s}^{t}\) when \(t\ne s\), so we are precluded from invoking the coarea formula in our derivation. Our main tool for deriving (1.6) is a comparison between two disintegrations of appropriate measures, one encoding \(W_2\) information and another encoding \(W_1\) information—see Sect. 11 for a heuristic derivation.

(2) To obtain disentanglement of the “Jacobian” \(t \mapsto 1/\rho _t(\gamma _t)\) into its orthogonal and tangential components, we need to understand the first-order variation of the change-of-variables formula (1.6) at \(t=s\), i.e. the second-order variation of \(t \mapsto \Phi _s^t\) at \(t=s\), which amounts to a third-order variation of \(t \mapsto \varphi _t\). Our second main new ingredient in this work is a surprising third-order bound on the variation of \(t \mapsto \varphi _t\) along the Hopf–Lax semi-group (Theorem 5.5), which holds in complete generality on any proper geodesic space.

To this end, we develop in Part I of this work a first, second, and finally third order temporal theory of intermediate Kantorovich potentials in a purely metric setting \((X,\mathsf {d})\), without specifying any reference measure \({\mathfrak {m}}\) and without any non-branching assumptions. This part, which may be read independently of the other components of this work, is presented first (in Sects. 2–5), since its results are constantly used throughout the rest of this work.

Our starting point here is the pioneering work by Ambrosio–Gigli–Savaré [5, 6, Section 3], who already investigated in a very general (extended) metric space setting the first and second order temporal behaviour of the Hopf-Lax semi-group \(Q_t\) applied to a general function \(f : X \rightarrow {\mathbb {R}}\cup \left\{ +\infty \right\} \). However, the essential point we observe in our treatment is that when f is itself a Kantorovich potential \(\varphi \), characterized by the property that \(\varphi = Q_1(-\varphi ^c)\) and \(\varphi ^c = Q_1(-\varphi )\), much more may be said regarding the behaviour of \(t \mapsto \varphi _t := -Q_t(-\varphi )\), even in first and second order. This is due to the fact that if we reverse time and define \({\bar{\varphi }}_t := Q_{1-t}(-\varphi ^c)\), then we obtain two-sided control over \(\varphi _t\) on the set \(\left\{ \varphi _t = {\bar{\varphi }}_t\right\} \), which turns out to coincide with the set \(\mathrm{e}_t(G_\varphi )\). So for instance, two apparently novel observations which we constantly use throughout this work are that for all \(t \in (0,1)\), \(\ell _t^2/2 := \partial _t \varphi _t\) exists on \(\mathrm{e}_t(G_\varphi )\), and that transport geodesics having a given \(x \in X\) as their t-midpoint all have the same length \(\ell _t(x)\). In Sect. 3, we establish Lipschitz regularity properties of \(t \mapsto \ell ^2_t(x)\) for all \(x \in X\), as well as upper and lower derivative estimates, both pointwise and a.e., for appropriate times t. These are then transferred in Sect. 4 to corresponding estimates for the function \(\Phi _s^t\).
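The identity \(\partial_t \varphi_t = \ell_t^2/2\) on \(\mathrm{e}_t(G_\varphi)\) can be observed numerically in a toy example of our own. Take \(X = [0,3]\), \(\varphi(x) = -x^2/2\) (the potential transporting the uniform measure on [0, 1] to the uniform measure on [0, 2] by \(x \mapsto 2x\)), and geodesics \(\gamma_t = (1+t)x_0\), so that \(\mathrm{e}_t(G_\varphi) = [0,1+t]\) and \(\ell_t(x) = x/(1+t)\). The sketch computes \(\varphi_t = -Q_t(-\varphi)\) by minimizing \(y \mapsto -\varphi(y) + \mathsf d(x,y)^2/(2t)\) with a ternary search. This is valid only because this particular inner problem is convex; a general Hopf–Lax evaluation would need a global minimization. The names `hopf_lax`, `phi_t`, `dphi_dt` and `ell_t` are ours.

```python
import math

# Toy setting (our assumption): X = [0, 3], phi(x) = -x^2/2, geodesics gamma_t = (1+t) x0.


def hopf_lax(f, x, t, lo=0.0, hi=3.0, iters=200):
    """Q_t f(x) = inf_{y in [lo, hi]} f(y) + (x - y)^2 / (2t).

    Ternary search is only valid when y -> f(y) + (x - y)^2 / (2t) is convex
    on [lo, hi], as it is for the toy potential below."""
    g = lambda y: f(y) + (x - y) ** 2 / (2.0 * t)
    a, b = lo, hi
    for _ in range(iters):
        m1, m2 = a + (b - a) / 3.0, b - (b - a) / 3.0
        if g(m1) < g(m2):
            b = m2  # the minimizer lies in [a, m2]
        else:
            a = m1  # the minimizer lies in [m1, b]
    return g(0.5 * (a + b))


def phi(x):
    return -0.5 * x * x


def phi_t(x, t):
    """Interpolating Kantorovich potential phi_t = -Q_t(-phi)."""
    return -hopf_lax(lambda y: -phi(y), x, t)


def dphi_dt(x, t, dt=1e-5):
    """Central difference of t -> phi_t(x)."""
    return (phi_t(x, t + dt) - phi_t(x, t - dt)) / (2.0 * dt)


def ell_t(x, t):
    """Length of the transport geodesic whose t-midpoint is x (here x = (1+t) x0)."""
    return x / (1.0 + t)


if __name__ == "__main__":
    x, t = 1.3, 0.4  # x lies in e_t(G_phi) = [0, 1.4]
    print(dphi_dt(x, t), ell_t(x, t) ** 2 / 2)
```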

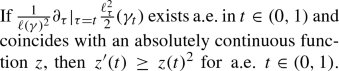

Part I culminates in Sect. 5, whose goal is to prove a quantitative version of the following (somewhat oversimplified) statement, which crucially provides second order information on \(\ell _t\), or equivalently, third order information on \(\varphi _t\), along \(\gamma _t\):

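$$\begin{aligned} z(t) := \frac{1}{\ell (\gamma )^2}\, \partial _\tau |_{\tau =t} \frac{\ell _\tau ^2}{2}(\gamma _t) \quad \text {satisfies} \quad z'(t) \ge z(t)^2 \;\;\; \text {for } t \in (0,1). \end{aligned} \tag{1.7}$$

Equivalently, this amounts to the statement that: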

$$\begin{aligned} (0,1) \ni r \mapsto L(r) := \exp \left( - \frac{1}{\ell (\gamma )^2} \int ^r_{r_0} \partial _\tau |_{\tau =t} \frac{\ell _\tau ^2}{2}(\gamma _t)\, dt\right) \text { is concave}, \end{aligned} \tag{1.8}$$

since (formally), writing \(z := -(\log L)'\),

$$\begin{aligned} \frac{L''}{L} = (\log L) '' + ((\log L)')^2 = - z' + z^2 \le 0 . \end{aligned}$$

It turns out that L(t) precisely corresponds to the tangential component of \(1/\rho _t(\gamma _t)\), and its concavity ensures that it is synthetically controlled by the linear term appearing in the definition of \(\tau ^{(t)}_{K,N}(\theta )\) in (1.2). The novel observation that it is possible to extract in a general metric setting third order information from the Hopf-Lax semi-group, which formally solves the first-order Hamilton-Jacobi equation, is in our opinion one of the most surprising parts of this work. Even in the smooth Riemannian setting, we were not able to find a synthetic proof which is easier than the one in the general metric setting; a formal differential proof of (1.7) assuming both temporal and (more challenging) spatial higher-order regularity of \(\varphi _t\) is provided in Sect. 5.1, but the latter seems to wrongly suggest that it would not be possible to extend (1.7) beyond a Hilbertian setting. Our proof in the general metric setting (Theorem 5.2) is based on a careful comparison of second order expansions of \(\varepsilon \mapsto \varphi _{\tau +\varepsilon }(\gamma _\tau )\) at \(\tau =t,s\), and subtle differences between the usual second derivative and the second Peano derivative (see Sect. 2) come into play.
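The formal identity behind (1.8) is easy to probe numerically. The sketch below uses the hypothetical test family \(z_c(t) = -c/(1+t)\), for which \(z_c' - z_c^2 = c(1-c)/(1+t)^2\), so \(z_c' \ge z_c^2\) exactly when \(c \le 1\). It builds \(L(r) = \exp(-\int_{r_0}^r z)\) by Simpson quadrature and compares a finite-difference \(L''/L\) with \(-z' + z^2\). For these choices \(L(r) = ((1+r)/(1+r_0))^c\), which is concave precisely when \(c \le 1\). The base point \(r_0 = 0.5\) and all helper names are our own.

```python
import math


def simpson(g, a, b, n=2000):
    """Composite Simpson rule for the integral of g over [a, b] (n even)."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * g(a + i * h)
    return total * h / 3.0


def L_of(z, r, r0=0.5):
    """L(r) = exp(-int_{r0}^{r} z(t) dt), i.e. (1.8) written in terms of z = -(log L)'."""
    return math.exp(-simpson(z, r0, r))


def second_derivative(F, r, h=1e-2):
    """Central second difference of F at r."""
    return (F(r + h) - 2.0 * F(r) + F(r - h)) / h ** 2


def z_c(c):
    """Hypothetical test family z_c(t) = -c/(1+t): z_c' - z_c^2 = c(1-c)/(1+t)^2."""
    return lambda t: -c / (1.0 + t)


if __name__ == "__main__":
    r = 0.8
    for c in (0.5, 1.0, 2.0):
        z = z_c(c)
        F = lambda u, z=z: L_of(z, u)
        ratio = second_derivative(F, r) / F(r)
        predicted = -c / (1.0 + r) ** 2 + (c / (1.0 + r)) ** 2  # -z' + z^2 at r
        print(c, ratio, predicted)
```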

(3) Our third main new ingredient, described in Part III, is a certain rigidity property of the change-of-variables formula (1.6). This property allows us to bootstrap the a-priori available temporal regularity, and, in combination with the first and second ingredients, it enables us to achieve disentanglement.

Indeed, the definition of \(\Phi _s^t\) may be naturally extended to an appropriate domain beyond \(\mathrm{e}_t(G_\varphi )\) as follows, allowing us to easily (formally) calculate its partial derivative:

$$\begin{aligned} \Phi _s^t = \varphi _t + (t-s) \frac{\ell _t^2}{2} , \;\;\; \partial _t \Phi _s^t = \ell _t^2 + (t-s) \partial _t\frac{\ell _t^2}{2} . \end{aligned}$$

Evaluating at \(x = \gamma _t\) and plugging this into the change-of-variables formula (1.6), it follows that for \(\nu \)-a.e. geodesic \(\gamma \):

$$\begin{aligned} \frac{\rho _s(\gamma _s)}{\rho _t(\gamma _t)} = \frac{h^\gamma _s(t)}{1 + (t-s) \frac{\partial _\tau |_{\tau =t}\ell _\tau ^2/2(\gamma _t)}{\ell ^2(\gamma )}} \;\;\; \text {for a.e. } t,s \in (0,1). \end{aligned} \tag{1.9}$$

Thanks to the idea of considering both the initial-point s and the end-point t together, the latter formula takes on a very rigid structure. On the left-hand side the s and t variables are separated, and the denominator on the right-hand side depends linearly on s. Consequently, we can easily bootstrap the a-priori available regularity in s and t of all terms involved. It follows that \(\frac{1}{\ell ^2(\gamma )} \partial _\tau |_{\tau =t}\ell _\tau ^2/2(\gamma _t)\) must coincide for a.e. \(t \in (0,1)\) with a locally-Lipschitz function z(t), so that (1.7) applies. In addition, by redefining \(\left\{ h^\gamma _s\right\} \) for s in a null subset of (0, 1), we can guarantee that \((0,1) \ni s \mapsto h^\gamma _s(t)\) is locally Lipschitz (for any given \(t \in (0,1)\)), even though there is a-priori no relation between the different densities \(\left\{ h^\gamma _s\right\} _{s \in (0,1)}\).

At this point, if \(\rho _t(\gamma _t)\) and z(t) were known to be \(C^2\) smooth, and equality were to hold in (1.9) for all \(s,t \in (0,1)\), we could then define

$$\begin{aligned} Y(r) := \exp \left( \int _{r_0}^r \partial _t|_{t=s} \log h^\gamma _s(t) ds \right) , \end{aligned} \tag{1.10}$$

and as \(\partial _t|_{t=s} \log (1 + (t-s) z(t)) = z(s)\), it would follow, recalling the definition (1.8) of L, that

$$\begin{aligned} \frac{\rho _{r_0}(\gamma _{r_0})}{\rho _r(\gamma _r)} = L(r) Y(r) \;\;\; \forall r \in (0,1) . \end{aligned} \tag{1.11}$$

We use the fact that all \(\left\{ h^\gamma _s\right\} _{s \in (0,1)}\) are \({\mathsf {CD}}(\ell (\gamma )^2 K,N)\) densities to control \(\partial ^2_t|_{t=r} \log h^\gamma _r(t)\). Surprisingly, we also use the concavity of L (again!) to control the mixed partial derivatives \(\partial _s \partial _t|_{t=s=r} \log h^\gamma _s(t)\). With these two ingredients, a formal computation described in Sect. 12.2 verifies that Y is itself a \({\mathsf {CD}}(\ell (\gamma )^2 K,N)\) density. A rigorous justification without all of the above non-realistic assumptions turns out to be extremely tedious, due to the difficulty in applying an approximation argument while preserving the rigidity of the equation; this is worked out in Sect. 12 and the “Appendix”.
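For the reader's convenience, here is the formal computation leading from (1.9) to (1.11), under the same non-realistic assumptions (\(C^2\) smoothness and equality in (1.9) for all \(s,t\)), with z(t) denoting \(\frac{1}{\ell ^2(\gamma )} \partial _\tau |_{\tau =t}\ell _\tau ^2/2(\gamma _t)\). The first line is the logarithm of (1.9). The second line is obtained by differentiating in t at \(t=s\). The third line is obtained by integrating in s over \([r_0,r]\) and recalling (1.8) and (1.10):

$$\begin{aligned} \log \rho _s(\gamma _s) - \log \rho _t(\gamma _t)&= \log h^\gamma _s(t) - \log \left( 1 + (t-s) z(t)\right) , \\ - \partial _t|_{t=s} \log \rho _t(\gamma _t)&= \partial _t|_{t=s} \log h^\gamma _s(t) - z(s) , \\ \log \rho _{r_0}(\gamma _{r_0}) - \log \rho _r(\gamma _r)&= \int _{r_0}^r \partial _t|_{t=s} \log h^\gamma _s(t) \, ds - \int _{r_0}^r z(s) \, ds = \log Y(r) + \log L(r) . \end{aligned}$$

Exponentiating the last line gives (1.11).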

After taking care of all these details, we finally obtain the desired disentanglement (1.11) of the Jacobian: L is concave and so controlled synthetically by a linear distortion coefficient, whereas Y is a \({\mathsf {CD}}(\ell (\gamma )^2 K,N)\) density and so (by definition) \(Y^{1/(N-1)}\) is controlled synthetically by the \(\sigma ^{(t)}_{K,N-1}(\ell (\gamma ))\) coefficient. A standard application of Hölder’s inequality then verifies that \(J^{1/N}(r) = \rho _r(\gamma _r)^{-1/N}\) is controlled by the \(\tau ^{(t)}_{K,N}(\ell (\gamma ))\) distortion coefficient, i.e. satisfies (1.1)—in fact for all \(t_0,t_1 \in [0,1]\)—thereby establishing \({\mathsf {CD}}(K,N)\), see Theorem 13.2.
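The Hölder step can be sanity-checked numerically. The sketch below makes the following assumptions:

- It uses the standard distortion coefficients for \(K>0\), since (1.2) is not reproduced here and these formulas are therefore an assumption.
- It normalizes the endpoints to \(t_0 = 0\) and \(t_1 = 1\).
- It lets `theta` play the role of \(\ell (\gamma )\).
- It replaces L(t) by its chord and \(Y(t)^{1/(N-1)}\) by its \(\sigma _{K,N-1}\) lower bound.

Under these assumptions, Hölder's inequality with exponents N and N/(N-1) predicts that the slack is non-negative.

```python
import math
import random

# Assumed standard distortion coefficients for K > 0 (definition (1.2) is not reproduced here):
#   sigma^{(t)}_{K,N}(theta) = sin(t theta sqrt(K/N)) / sin(theta sqrt(K/N)),
#   tau^{(t)}_{K,N}(theta)   = t^{1/N} sigma^{(t)}_{K,N-1}(theta)^{(N-1)/N}.
def sigma(t, K, N, theta):
    a = theta * math.sqrt(K / N)
    return math.sin(t * a) / math.sin(a)

def tau(t, K, N, theta):
    return t ** (1.0 / N) * sigma(t, K, N - 1, theta) ** ((N - 1.0) / N)

def holder_slack(t, L0, L1, Y0, Y1, K=1.0, N=3.0, theta=1.5):
    """J(t)^{1/N} - [tau^{(1-t)} J(0)^{1/N} + tau^{(t)} J(1)^{1/N}] for J = L * Y, where L(t) is
    replaced by its chord (concavity) and Y(t)^{1/(N-1)} by its sigma_{K,N-1} lower bound."""
    L_t = (1 - t) * L0 + t * L1
    y_t = (sigma(1 - t, K, N - 1, theta) * Y0 ** (1 / (N - 1))
           + sigma(t, K, N - 1, theta) * Y1 ** (1 / (N - 1)))
    J_t = L_t * y_t ** (N - 1)
    rhs = (tau(1 - t, K, N, theta) * (L0 * Y0) ** (1 / N)
           + tau(t, K, N, theta) * (L1 * Y1) ** (1 / N))
    return J_t ** (1 / N) - rhs

random.seed(0)
slacks = []
for _ in range(10000):
    t = random.uniform(0.01, 0.99)
    L0, L1, Y0, Y1 = (random.uniform(0.1, 10.0) for _ in range(4))
    slacks.append(holder_slack(t, L0, L1, Y0, Y1))
min_slack = min(slacks)
```

Since J is monotone in both factors, it suffices to test the lower bounds themselves. Each term on the right-hand side is exactly \(a_i^{1/N} b_i^{(N-1)/N}\) with \(a_i\) the chord terms and \(b_i\) the \(\sigma \)-terms, which is why Hölder applies.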

The definition (1.10) of Y finally sheds light on the crucial role which the parameter \(s \in (0,1)\) plays in our strategy—its role is to vary between the different \(W_2\)-geodesics from which the \({\mathsf {CD}}_{loc}(K,N)\) information is extracted into the \({\mathsf {CD}}^1(K,N)\) information on the disintegration into transport-rays from the (signed) distance functions from level sets \(\left\{ \varphi _s = \varphi _s(\gamma _s)\right\} \), thereby coming full circle with the observation of (1.4).

Besides establishing the local-to-global property of \({\mathsf {CD}}(K,N)\) and the equivalence of its various variants (in our setting), we emphasize that as a by-product of our proof, we obtain a remarkable new self-improvement property of \({\mathsf {CD}}(K,N)\): the \(\tau _{K,N}\)-concavity (1.1) of the transport Jacobian \(J_t(\gamma _t)\) along all \(W_2\)-geodesics implies the (a-priori) stronger “L-Y” decomposition \(J_t(\gamma _t) = L_\gamma (t) Y_\gamma (t)\), where \(L_\gamma \) is concave and \(Y_\gamma \) is a \({\mathsf {CD}}(\ell (\gamma )^2 K, N)\) density on (0, 1). As already mentioned above, this self-improvement is false for a single \(W_2\)-geodesic. We believe that the stronger “L-Y” information will prove to be of further use in the study of \({\mathsf {CD}}(K,N)\) essentially non-branching spaces.

We refer to Sect. 13 for the final details and for additional immediate corollaries of the Main Theorem 1.1 pertaining to \({\mathsf {RCD}}(K,N)\) and strong \({\mathsf {CD}}(K,N)\) spaces. We also provide there several concluding remarks and suggestions for further investigation.

Part I Temporal theory of Optimal-Transport

2 Preliminaries

2.1 Geodesics

A metric space \((X,\mathsf {d})\) is called a length space if for all \(x,y \in X\), \(\mathsf {d}(x,y) = \inf \ell (\sigma )\), where the infimum is over all (continuous) curves \(\sigma : I \rightarrow X\) connecting x and y, and \(\ell (\sigma ) := \sup \sum _{i=1}^k \mathsf {d}(\sigma (t_{i-1}),\sigma (t_{i}))\) denotes the curve’s length, where the latter supremum is over all \(k \in {\mathbb {N}}\) and \(t_0 \le \cdots \le t_k\) in the interval \(I \subset {\mathbb {R}}\). A curve \(\gamma \) is called a geodesic if \(\ell (\gamma |_{[t_0,t_1]}) = \mathsf {d}(\gamma (t_0),\gamma (t_1))\) for all \([t_0,t_1] \subset I\). If \(\ell (\gamma ) = 0\) we will say that \(\gamma \) is a null geodesic. The metric space is called a geodesic space if for all \(x,y \in X\) there exists a geodesic in X connecting x and y. We denote by \(\mathrm{Geo}(X)\) the set of all closed directed constant-speed geodesics parametrized on the interval [0, 1]:

$$\begin{aligned} \mathrm{Geo}(X) := \left\{ \gamma : [0,1] \rightarrow X \; ; \; \mathsf {d}(\gamma (s),\gamma (t)) = \left| s-t\right| \mathsf {d}(\gamma (0),\gamma (1)) \;\; \forall s,t \in [0,1] \right\} . \end{aligned}$$

We regard \(\mathrm{Geo}(X)\) as a subset of all Lipschitz maps \(\text {Lip}([0,1], X)\) endowed with the uniform topology. We will frequently use \(\gamma _t := \gamma (t)\).

The metric space is called proper if every closed ball (of finite radius) is compact. It follows from the metric version of the Hopf-Rinow Theorem (e.g. [22, Theorem 2.5.28]) that for complete length spaces, local compactness is equivalent to properness, and that complete proper length spaces are in fact geodesic.

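Given a subset \(D \subset X \times {\mathbb {R}}\), we denote its sections by

$$\begin{aligned} D(t) := \left\{ x \in X \; ; \; (x,t) \in D\right\} , \;\;\; D(x) := \left\{ t \in {\mathbb {R}}\; ; \; (x,t) \in D\right\} . \end{aligned}$$

Given a subset \(G \subset \mathrm{Geo}(X)\), we denote by \(\mathring{G} := \left\{ \gamma |_{(0,1)} \;;\; \gamma \in G\right\} \) the corresponding open-ended geodesics on (0, 1). For a subset of (closed or open) geodesics \({\tilde{G}}\), we denote

$$\begin{aligned} D({\tilde{G}}) := \left\{ (\gamma _t,t) \; ; \; \gamma \in {\tilde{G}} , \; t \text { in the domain of } \gamma \right\} , \;\;\; {\tilde{G}}(x) := D({\tilde{G}})(x) . \end{aligned}$$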

We denote by \(\mathrm{e}_t : \mathrm{Geo}(X) \ni \gamma \mapsto \gamma _t \in X\) the (continuous) evaluation map at \(t \in [0,1]\), and abbreviate given \(I \subset [0,1]\) as follows:

2.2 Derivatives

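For a function \(g : A \rightarrow {\mathbb {R}}\) on a subset \(A \subset {\mathbb {R}}\), denote its upper and lower derivatives at a point \(t_0 \in A\) which is an accumulation point of A by

$$\begin{aligned} \frac{{\overline{d}}}{dt} g(t_0) := \limsup _{A \ni t \rightarrow t_0} \frac{g(t) - g(t_0)}{t - t_0} , \;\;\; \underline{\frac{d}{dt}} g(t_0) := \liminf _{A \ni t \rightarrow t_0} \frac{g(t) - g(t_0)}{t - t_0} . \end{aligned}$$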

We will say that g is differentiable at \(t_0\) iff \(\frac{d}{dt} g(t_0) := \frac{{\overline{d}}}{dt} g(t_0) = \underline{\frac{d}{dt}} g(t_0)\) and this common value is finite. This is a slightly more general definition of differentiability than the traditional one, which requires that \(t_0\) be an interior point of A.

Remark 2.1

Note that there are at most countably many isolated points in A, so a.e. point in A is an accumulation point. In addition, it is clear that if \(t_0 \in B \subset A\) is an accumulation point of B and g is differentiable at \(t_0\), then \(g|_B\) is also differentiable at \(t_0\) with the same derivative. In particular, if g is a.e. differentiable on A then \(g|_B\) is also a.e. differentiable on B and the derivatives coincide.

Remark 2.2

Denote by \(A_1 \subset A\) the subset of density one points of A (which are in particular accumulation points of A). By Lebesgue’s Density Theorem \({\mathcal {L}}^1(A \setminus A_1) = 0\), where we denote by \({\mathcal {L}}^1\) the Lebesgue measure on \({\mathbb {R}}\) throughout this work. If \(g : A \rightarrow {\mathbb {R}}\) is locally Lipschitz, consider any locally Lipschitz extension \({\hat{g}} : {\mathbb {R}}\rightarrow {\mathbb {R}}\) of g. Then it is easy to check that for \(t_0 \in A_1\), g is differentiable in the above sense at \(t_0\) if and only if \({{\hat{g}}}\) is differentiable at \(t_0\) in the usual sense, in which case the derivatives coincide. In particular, as \({{\hat{g}}}\) is a.e. differentiable on \({\mathbb {R}}\), it follows that g is a.e. differentiable on \(A_1\) and hence on A, and it holds that \(\frac{d}{dt} g = \frac{d}{dt} {{\hat{g}}}\) a.e. on A.

Let \(f : I \rightarrow {\mathbb {R}}\) denote a convex function on an open interval \(I \subset {\mathbb {R}}\). It is well-known that the left and right derivatives \(f^{\prime ,-}\) and \(f^{\prime ,+}\) exist at every point in I and that f is locally Lipschitz there; in particular, f is differentiable at a given point iff the left and right derivatives coincide there. Denoting by \(D \subset I\) the differentiability points of f in I, it is also well-known that \(I \setminus D\) is at most countable. Consequently, any point in D is an accumulation point, and we may consider the differentiability in D of \(f' : D \rightarrow {\mathbb {R}}\) as defined above. We will require the following elementary one-dimensional version (probably due to Jessen) of the well-known Aleksandrov’s theorem about twice differentiability a.e. of convex functions on \({\mathbb {R}}^n\) (see [45, Theorem 5.2.1] or [20, Section 2.6], and [71, p. 31] for historical comments). Clearly, all of these results extend to locally semi-convex and semi-concave functions as well; recall that a function \(f : I \rightarrow {\mathbb {R}}\) is called semi-convex (semi-concave) if there exists \(C \in {\mathbb {R}}\) so that \(I \ni x \mapsto f(x) + C x^2\) is convex (concave).

Lemma 2.3

(Second Order Differentiability of Convex Functions) Let \(f : I \rightarrow {\mathbb {R}}\) be a convex function on an open interval \(I \subset {\mathbb {R}}\), and let \(\tau _0 \in I\) and \(\Delta \in {\mathbb {R}}\). Then the following statements are equivalent:

(1) f is differentiable at \(\tau _0\), and if \(D \subset I\) denotes the subset of differentiability points of f in I, then \(f' : D \rightarrow {\mathbb {R}}\) is differentiable at \(\tau _0\) with

$$\begin{aligned} (f')'(\tau _0) := \lim _{D \ni \tau \rightarrow \tau _0} \frac{f'(\tau ) - f'(\tau _0)}{\tau -\tau _0} = \Delta . \end{aligned}$$

(2) The right derivative \(f^{\prime ,+} : I \rightarrow {\mathbb {R}}\) is differentiable at \(\tau _0\) with \((f^{\prime ,+})'(\tau _0)=\Delta \).

(3) The left derivative \(f^{\prime ,-} : I \rightarrow {\mathbb {R}}\) is differentiable at \(\tau _0\) with \((f^{\prime ,-})'(\tau _0)=\Delta \).

(4) f is differentiable at \(\tau _0\) and has the following second order expansion there:

$$\begin{aligned} f(\tau _0 + \varepsilon ) = f(\tau _0) + f'(\tau _0) \varepsilon + \Delta \frac{\varepsilon ^2}{2} + o(\varepsilon ^2)\quad \text { as }\varepsilon \rightarrow 0. \end{aligned}$$

In this case, f is said to have a second Peano derivative at \(\tau _0\).

We remark that even for a differentiable function f, while the implication \((1) \Rightarrow (4)\) follows by Taylor’s theorem (existence of the second derivative at a point implies existence of the second Peano derivative there), the converse implication is in general false (see e.g. [61] for a nice discussion). For a locally semi-convex or semi-concave function f, we will say that f is twice differentiable at \(\tau _0\) if any (all) of the above equivalent conditions hold for some \(\Delta \in {\mathbb {R}}\), and write \((\frac{d}{d\tau })^{2}|_{\tau = \tau _0} f(\tau ) = \Delta \).

Finally, we will require the following slightly more refined notation.

Definition

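Given an open interval \(I \subset {\mathbb {R}}\) and a function \(f : I \rightarrow {\mathbb {R}}\) which is differentiable at \(\tau _0 \in I\), we define its upper and lower second Peano derivatives at \(\tau _0\), denoted \({\overline{{\mathcal {P}}}}_2 f(\tau _0)\) and \({\underline{{\mathcal {P}}}}_2 f(\tau _0)\) respectively, by

$$\begin{aligned} {\overline{{\mathcal {P}}}}_2 f(\tau _0) := \limsup _{\varepsilon \rightarrow 0} h_{\tau _0}(\varepsilon ) , \;\;\; {\underline{{\mathcal {P}}}}_2 f(\tau _0) := \liminf _{\varepsilon \rightarrow 0} h_{\tau _0}(\varepsilon ) , \end{aligned}$$

where

$$\begin{aligned} h_{\tau _0}(\varepsilon ) := \frac{2 \left( f(\tau _0 + \varepsilon ) - f(\tau _0) - f'(\tau _0) \varepsilon \right) }{\varepsilon ^2} . \end{aligned}$$

Clearly f has a second Peano derivative at \(\tau _0\) iff \({\overline{{\mathcal {P}}}}_2 f(\tau _0) = {\underline{{\mathcal {P}}}}_2 f(\tau _0)\) and this common value is finite.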

The following is a type of Stolz–Cesàro lemma:

Lemma 2.4

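Given an open interval \(I \subset {\mathbb {R}}\) and a locally absolutely continuous function \(f : I \rightarrow {\mathbb {R}}\) which is differentiable at \(\tau _0 \in I\), we have

$$\begin{aligned} \underline{\frac{d}{d\tau }}\Big |_{\tau = \tau _0} f'(\tau ) \le {\underline{{\mathcal {P}}}}_2 f(\tau _0) \le {\overline{{\mathcal {P}}}}_2 f(\tau _0) \le \frac{{\overline{d}}}{d\tau }\Big |_{\tau = \tau _0} f'(\tau ) , \end{aligned}$$

where \(f'\) is regarded as a function on the set of differentiability points of f, and its upper and lower derivatives are understood in the sense defined above.

Proof

By local absolute continuity, f is differentiable a.e. in I and we have for small enough \(\left| \varepsilon \right| \):

$$\begin{aligned} f(\tau _0 + \varepsilon ) - f(\tau _0) - f'(\tau _0) \varepsilon = \int _0^{\varepsilon } \left( f'(\tau _0 + s) - f'(\tau _0) \right) ds , \end{aligned}$$

and hence, since \(\frac{2}{\varepsilon ^2} \int _0^{\varepsilon } s \, ds = 1\),

$$\begin{aligned} \frac{2 \left( f(\tau _0 + \varepsilon ) - f(\tau _0) - f'(\tau _0) \varepsilon \right) }{\varepsilon ^2} = \frac{2}{\varepsilon ^2} \int _0^{\varepsilon } \frac{f'(\tau _0 + s) - f'(\tau _0)}{s} \, s \, ds \end{aligned}$$

is an average of the difference quotients of \(f'\) at \(\tau _0\) over the interval between \(\tau _0\) and \(\tau _0 + \varepsilon \).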

Taking appropriate subsequential limits as \(\varepsilon \rightarrow 0\), the asserted inequalities readily follow. \(\square \)
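The classical function \(f(x) = x^3 \sin (1/x)\), \(f(0)=0\) (not taken from the text), illustrates both the remark following Lemma 2.3 and the sandwich in Lemma 2.4. This f is \(C^1\) with \(f'(0)=0\) and \(|f(x)| \le |x|^3\), so its second Peano derivative at 0 exists and equals 0. However, the difference quotients of \(f'\) at 0 oscillate between values near \(-1\) and \(+1\), so \(f'\) is not differentiable at 0. Since f is not convex, this does not contradict Lemma 2.3.

```python
import math

# Classical example (not from the text): f(x) = x^3 sin(1/x), f(0) = 0.  It is C^1 with
# f'(0) = 0 and |f(x)| <= |x|^3, but it is not convex, so Lemma 2.3 does not apply to it.
def f(x):
    return x ** 3 * math.sin(1.0 / x) if x != 0 else 0.0

def fprime(x):
    return 3 * x ** 2 * math.sin(1.0 / x) - x * math.cos(1.0 / x) if x != 0 else 0.0

def peano_quotient(eps):
    """2 (f(eps) - f(0) - f'(0) eps) / eps^2; its lim sup / lim inf are the second Peano derivatives."""
    return 2.0 * (f(eps) - f(0.0) - fprime(0.0) * eps) / eps ** 2

def derivative_quotient(eps):
    """(f'(eps) - f'(0)) / eps; its lim sup / lim inf are the upper / lower derivatives of f' at 0."""
    return (fprime(eps) - fprime(0.0)) / eps

ns = [10 ** k for k in range(1, 6)]
eps_up = [1.0 / (2 * math.pi * n) for n in ns]          # here cos(1/eps) = 1
eps_down = [1.0 / ((2 * n + 1) * math.pi) for n in ns]  # here cos(1/eps) = -1
peano_values = [peano_quotient(e) for e in eps_up + eps_down]
low_quotients = [derivative_quotient(e) for e in eps_up]     # close to -1
high_quotients = [derivative_quotient(e) for e in eps_down]  # close to +1
```

In the notation of Lemma 2.4, this gives \(-1 \le {\underline{{\mathcal {P}}}}_2 f(0) = 0 = {\overline{{\mathcal {P}}}}_2 f(0) \le 1\), with both outer inequalities strict.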

3 Temporal theory of intermediate-time Kantorovich potentials: first and second order

In the next sections, we will only consider the quadratic cost function \(c=\mathsf {d}^2/2\) on \(X \times X\).

Definition

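(c-Concavity, Kantorovich Potential) The c-transform of a function \(\psi : X \rightarrow {\mathbb {R}}\cup \left\{ \pm \infty \right\} \) is defined as the following (upper semi-continuous) function:

$$\begin{aligned} \psi ^c(x) := \inf _{y \in X} \left( \frac{\mathsf {d}^2(x,y)}{2} - \psi (y) \right) . \end{aligned}$$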

A function \(\varphi : X \rightarrow {\mathbb {R}}\cup \left\{ \pm \infty \right\} \) is called c-concave if \(\varphi = \psi ^c\) for some \(\psi \) as above. It is well known [76, Exercise 2.35] that \(\varphi \) is c-concave iff \((\varphi ^c)^c = \varphi \). In the context of Optimal-Transport with respect to the quadratic cost c, a c-concave function \(\varphi : X \rightarrow {\mathbb {R}}\cup \left\{ -\infty \right\} \) which is not identically equal to \(-\infty \) is also known as a Kantorovich potential, and this is how we will refer to such functions in this work. In that case, \(\varphi ^c : X \rightarrow {\mathbb {R}}\cup \left\{ -\infty \right\} \) is also a Kantorovich potential, called the dual or conjugate potential.

There is a natural way to interpolate between a Kantorovich potential and its dual by means of the Hopf-Lax semi-group, resulting in intermediate-time Kantorovich potentials \(\left\{ \varphi _t\right\} _{t \in (0,1)}\). The goal of the next three sections is to provide first, second and third order information on the time-behavior \(t \mapsto \varphi _t(x)\) at intermediate times \(t \in (0,1)\). In these sections, we only assume that \((X,\mathsf {d})\) is a proper geodesic metric space.

In this section, we focus on first and second order information. The main new result is Theorem 3.11.

3.1 Hopf-Lax semi-group

We begin with several well-known definitions which we slightly modify and specialize to our setting.

Definition

(Hopf-Lax Transform) Given \(f : X \rightarrow {\mathbb {R}}\cup \left\{ \pm \infty \right\} \) which is not identically \(+\infty \) and \(t > 0\), define the Hopf-Lax transform \(Q_t f : X \rightarrow {\mathbb {R}}\cup \left\{ -\infty \right\} \) by

$$\begin{aligned} Q_t f(x) := \inf _{y \in X} \left( f(y) + \frac{\mathsf {d}^2(x,y)}{2t} \right) . \end{aligned} \tag{3.1}$$

Clearly either \(Q_t f \equiv -\infty \) or \(Q_t f(x)\) is finite for all \(x \in X\) (as our metric \(\mathsf {d}\) is finite). Consequently, we denote:

$$\begin{aligned} t_*(f) := \sup \left\{ t > 0 \; ; \; Q_t f \not \equiv -\infty \right\} , \end{aligned}$$

setting \(t_*(f) = 0\) if the supremum is over an empty set. Finally, we set \(Q_0 f := f\).

It is not hard to check (see e.g. [49, Theorem 2.5 (i)]) that when \((X,\mathsf {d})\) is a length space (and in particular geodesic), the Hopf-Lax transform is in fact a semi-group on \([0,\infty )\):

$$\begin{aligned} Q_{t+s} f = Q_t (Q_s f) \;\;\; \forall t,s \ge 0 . \end{aligned}$$

Remark 3.1

It is also possible to extend the definition of \(Q_t f\) to negative times \(t < 0\) by setting

$$\begin{aligned} Q_t f := -Q_{-t}(-f) , \;\;\; t < 0 . \end{aligned}$$

This is called the backwards Hopf-Lax semi-group on \((-\infty ,0]\). However, \(({\mathbb {R}},+) \ni t \mapsto (Q_t,\circ )\) is in general not an abelian group homomorphism, not even for \(t \in [0,1]\) when applied to a Kantorovich potential \(\varphi \) (characterized by \(Q_{-1} \circ Q_1(-\varphi ) = -\varphi \))—see Sect. 3.3. This will be a rather significant nuisance we will need to cope with in this work.

Clearly \((0,\infty ) \times X \ni (t,x) \mapsto Q_t f(x)\) is upper semi-continuous as the infimum of continuous functions in (t, x), and by definition \([0,\infty ) \ni t \mapsto Q_t f(x)\) is monotone non-increasing for each \(x \in X\). Consequently, \((0,\infty ) \ni t \mapsto Q_t f(x)\) must be continuous from the left.

It may also be shown (see [5, Lemma 3.1.2]) that \(X \times (0,t_*(f)) \ni (x,t) \mapsto Q_t f (x)\) is continuous (and in fact locally Lipschitz, see Theorem 3.4 below). Together with the left-continuity, we deduce that for every \(x \in X\), \((0,t_*(f)] \ni t \mapsto Q_t f(x)\) is continuous.
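A brute-force discretization illustrates two things: the semi-group property, and the failure of the group structure from Remark 3.1. As an assumption of this sketch, X is replaced by a uniform grid in [-4, 4], and only points away from the truncation boundary are compared. Since a grid is not a length space, the semi-group property holds there only up to discretization error. For \(f(y) = y^2/2\) one has \(Q_t f(x) = x^2/(2(1+t))\). For \(f(y) = -|y|\) and \(s=1\), a direct computation gives \(Q_{-1} Q_1 f(x) = -1/2 - x^2/2 < f(x)\) for \(|x| < 1\), where \(Q_{-1}\) is the backward transform defined above.

```python
def hopf_lax(f_vals, grid, t):
    """Discrete Q_t f(x) = min_y [f(y) + |x - y|^2 / (2t)] over a 1D grid (t > 0)."""
    return [min(fy + (x - y) ** 2 / (2.0 * t) for y, fy in zip(grid, f_vals)) for x in grid]

def backward_hopf_lax(f_vals, grid, t):
    """Discrete backward transform -Q_t(-f), i.e. max_y [f(y) - |x - y|^2 / (2t)] (t > 0)."""
    return [-v for v in hopf_lax([-fy for fy in f_vals], grid, t)]

H = 0.02
grid = [-4.0 + H * k for k in range(401)]
interior = [i for i, x in enumerate(grid) if abs(x) <= 2.0]   # away from truncation effects

# (i) Semi-group: Q_{0.8} f versus Q_{0.3}(Q_{0.5} f) for f(y) = y^2 / 2.
f_quad = [y * y / 2.0 for y in grid]
lhs = hopf_lax(f_quad, grid, 0.8)
rhs = hopf_lax(hopf_lax(f_quad, grid, 0.5), grid, 0.3)
semigroup_err = max(abs(lhs[i] - rhs[i]) for i in interior)
exact_err = max(abs(lhs[i] - grid[i] ** 2 / (2.0 * 1.8)) for i in interior)

# (ii) No group structure: Q_{-1} Q_1 f <= f, strictly near the kink of f(y) = -|y|.
f_kink = [-abs(y) for y in grid]
back = backward_hopf_lax(hopf_lax(f_kink, grid, 1.0), grid, 1.0)
max_violation = max(b - fv for b, fv in zip(back, f_kink))     # should be <= 0
i0 = min(range(len(grid)), key=lambda i: abs(grid[i]))          # index of x = 0
```

The inequality in (ii) holds exactly on the grid, by the same one-line argument as in the continuous case: every grid point x is itself a candidate in the infimum defining \(Q_1 f(y)\).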

Note that by definition \(f^c = Q_1(-f)\), and that a Kantorovich pair of conjugate potentials \(\varphi ,\varphi ^c : X \rightarrow {\mathbb {R}}\cup \left\{ -\infty \right\} \) is characterized by not being identically equal to \(-\infty \) and satisfying:

$$\begin{aligned} \varphi ^c = Q_1(-\varphi ) , \;\;\; \varphi = Q_1(-\varphi ^c) . \end{aligned}$$

In particular, \(t_*(-\varphi ),t_*(-\varphi ^c) \ge 1\), and we a-posteriori deduce that \(\varphi , \varphi ^c\) are both finite on the entire space X (we have used above the fact that the metric \(\mathsf {d}\) is finite, which differs from other more general treatments).

Definition

(Interpolating Intermediate-Time Kantorovich Potentials) Given a Kantorovich potential \(\varphi : X \rightarrow {\mathbb {R}}\), the interpolating Kantorovich potential at time \(t \in [0,1]\), \(\varphi _t : X \rightarrow {\mathbb {R}}\), is defined by

$$\begin{aligned} \varphi _t(x) := -Q_t(-\varphi )(x) . \end{aligned}$$

Note that \(\varphi _0 = \varphi \), \(\varphi _1 = -\varphi ^c\), and

Applying the above mentioned general properties of the Hopf-Lax semi-group to \(\varphi _t\), it will be useful to record:

Lemma 3.2

(1) \((x,t) \mapsto \varphi _t(x)\) is lower semi-continuous on \(X \times (0,1]\) and continuous on \(X \times (0,1)\).

(2) For every \(x \in X\), \([0,1] \ni t \mapsto \varphi _t(x)\) is monotone non-decreasing and continuous on (0, 1].

Definition

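(Kantorovich Geodesic) Given a Kantorovich potential \(\varphi : X \rightarrow {\mathbb {R}}\), a geodesic \(\gamma \in \mathrm{Geo}(X)\) is called a \(\varphi \)-Kantorovich (or optimal) geodesic if

$$\begin{aligned} \varphi (\gamma _0) + \varphi ^c(\gamma _1) = \frac{\mathsf {d}^2(\gamma _0,\gamma _1)}{2} . \end{aligned}$$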

We denote all \(\varphi \)-Kantorovich geodesics by \(G_\varphi \). Note that \(\gamma \in G_{\varphi }\) iff \(\gamma ^c \in G_{\varphi ^c}\), where \(\gamma ^c(t) := \gamma (1-t)\) is the time-reversed geodesic. By upper semi-continuity of \(\varphi \) and \(\varphi ^c\), it follows that \(G_\varphi \) is a closed subset of \(\mathrm{Geo}(X)\).

The following is not hard to check (see e.g. [24, Corollary 2.16]):

Lemma 3.3

Let \(\gamma \) be a \(\varphi \)-Kantorovich geodesic. Then

3.2 Distance functions

The following important definition was given by Ambrosio–Gigli–Savaré [5, 6]:

Definition

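(Distance functions \(D^{\pm }_f\)) Given \(f : X \rightarrow {\mathbb {R}}\cup \left\{ +\infty \right\} \) which is not identically \(+\infty \), denote

$$\begin{aligned} D^+_f(x,t) := \sup \limsup _{n \rightarrow \infty } \mathsf {d}(x,y_n) , \;\;\; D^-_f(x,t) := \inf \liminf _{n \rightarrow \infty } \mathsf {d}(x,y_n) , \end{aligned}$$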

where the supremum and infimum above run over the set of minimizing sequences \(\left\{ y_n\right\} \) in the definition of the Hopf-Lax transform (3.1). A simple diagonal argument shows that the (outer) supremum and infimum above are in fact attained.

The following properties were established in [5, 6, Chapter 3]:

Theorem 3.4

(Ambrosio–Gigli–Savaré) For any metric space \((X,\mathsf {d})\) (not necessarily proper, complete nor geodesic):

(1) Both functions \(D^{\pm }_f(x,t)\) are locally finite on \(X \times (0,t_*(f))\), and \((x,t) \mapsto Q_t f(x)\) is locally Lipschitz there.

(2) \((x,t) \mapsto D^{\pm }_f(x,t)\) is upper (\(D^{+}_f(x,t)\)) / lower (\(D^{-}_f(x,t)\)) semi-continuous on \(X \times (0,t_*(f))\).

(3) For every \(x \in X\), both functions \((0,t_*(f)) \ni t \mapsto D^{\pm }_f(x,t)\) are monotone non-decreasing and coincide except where they have (at most countably many) jump discontinuities.

(4) For every \(x \in X\), \(\partial _t^{\pm } Q_t f(x) = - \frac{(D^{\pm }_f(x,t))^2}{2 t^2}\) for all \(t \in (0,t_*(f))\), where \(\partial _t^{-}\) and \(\partial _t^+\) denote the left and right partial derivatives, respectively. In particular, the map \((0,t_*(f)) \ni t \mapsto Q_t f(x)\) is locally Lipschitz and locally semi-concave, and differentiable at \(t \in (0,t_*(f))\) iff \(D^+_f(x,t) = D^-_f(x,t)\).
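Items (3) and (4) can be seen at work on a small hypothetical example, not taken from the text. Take \(X = {\mathbb {R}}\) and \(f = +\infty \) except at the two points 1 and 2, where \(f(1)=0\) and \(f(2)=-1.5\). At \(x=0\), both points minimize in (3.1) exactly when \(t=1\). Hence \(D^-_f(0,1) = 1 < D^+_f(0,1) = 2\), and the one-sided t-derivatives of \(Q_t f(0)\) differ, as predicted by (4).

```python
# Hypothetical example (not from the text): X = R and f = +infinity outside {1, 2},
# with f(1) = 0 and f(2) = -1.5.  Only these two points can minimize in (3.1).
SUPPORT = {1.0: 0.0, 2.0: -1.5}   # y -> f(y)

def Q(t, x=0.0):
    """Q_t f(x) = min over the support of f(y) + |x - y|^2 / (2t)."""
    return min(fy + (x - y) ** 2 / (2 * t) for y, fy in SUPPORT.items())

def D_minus_plus(t, x=0.0, tol=1e-12):
    """(D^-_f(x,t), D^+_f(x,t)): smallest / largest |x - y| among the minimizers y.
    With finitely many candidates, minimizing sequences eventually consist of minimizers."""
    best = Q(t, x)
    dists = [abs(x - y) for y, fy in SUPPORT.items()
             if fy + (x - y) ** 2 / (2 * t) <= best + tol]
    return min(dists), max(dists)

t0, h = 1.0, 1e-6
left_derivative = (Q(t0) - Q(t0 - h)) / h     # one-sided difference quotients in t
right_derivative = (Q(t0 + h) - Q(t0)) / h
D_minus, D_plus = D_minus_plus(t0)
```

Before the tie the nearer point 1 is optimal, and after it the farther point 2 is optimal. This matches the monotone jump of \(t \mapsto D^{\pm }_f(0,t)\) in (3).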

It may be instructive to recall the proof of property (3) above, which is related to some ensuing properties, so for completeness, we present it below. For simplicity, we restrict to the case of interest for us, and first record:

Lemma 3.5

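Given a proper metric space X, a lower semi-continuous \(f : X \rightarrow {\mathbb {R}}\), \(x \in X\) and \(t \in (0,t_*(f))\), there exist \(y^{\pm }_t \in X\) so that

$$\begin{aligned} Q_t f(x) = f(y^{\pm }_t) + \frac{\mathsf {d}^2(x,y^{\pm }_t)}{2t} \;\;\; \text {and} \;\;\; \mathsf {d}(x,y^{\pm }_t) = D^{\pm }_f(x,t) . \end{aligned}$$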

Recall that \(-\varphi \) is indeed lower semi-continuous for any Kantorovich potential \(\varphi \).

Proof of Lemma 3.5

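Let \(\{y_t^{\pm ,n}\}\) denote a minimizing sequence so that

$$\begin{aligned} \lim _{n \rightarrow \infty } \left( f(y_t^{\pm ,n}) + \frac{\mathsf {d}^2(x,y_t^{\pm ,n})}{2t}\right) = Q_t f(x) \;\;\; \text {and} \;\;\; \lim _{n \rightarrow \infty } \mathsf {d}(x,y_t^{\pm ,n}) = D^{\pm }_f(x,t) . \end{aligned}$$

By property (1), \(D^{\pm }_f(x,t) < R\) for some \(R < \infty \), and properness implies that the closed ball \(B_R(x)\) is compact. Consequently \(\{y_t^{\pm ,n}\}\) has a subsequence converging to some \(y^{\pm }_t\), and the lower semi-continuity of f implies that:

$$\begin{aligned} Q_t f(x) \le f(y^{\pm }_t) + \frac{\mathsf {d}^2(x,y^{\pm }_t)}{2t} \le \liminf _{n \rightarrow \infty } \left( f(y_t^{\pm ,n}) + \frac{\mathsf {d}^2(x,y_t^{\pm ,n})}{2t}\right) = Q_t f(x) , \;\;\; \mathsf {d}(x,y^{\pm }_t) = D^{\pm }_f(x,t) , \end{aligned}$$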

as asserted. \(\square \)

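Proof of (3) for proper X and lower semi-continuous f. The assertion will follow immediately after establishing

$$\begin{aligned} D^+_f(x,s) \le D^-_f(x,t) \;\;\; \forall \, 0< s< t < t_*(f) , \end{aligned}$$

since trivially \(D^-_f \le D^+_f\) and since a monotone function can only have at most countably many jump discontinuities. By Lemma 3.5, there exist \(y^+_s\) and \(y^-_t\) so that

$$\begin{aligned} Q_s f(x) = f(y^+_s) + \frac{\mathsf {d}^2(x,y^+_s)}{2s} , \;\;\; \mathsf {d}(x,y^+_s) = D^+_f(x,s) , \end{aligned}$$

and:

$$\begin{aligned} Q_t f(x) = f(y^-_t) + \frac{\mathsf {d}^2(x,y^-_t)}{2t} , \;\;\; \mathsf {d}(x,y^-_t) = D^-_f(x,t) . \end{aligned}$$

It follows that

$$\begin{aligned} f(y^+_s) + \frac{D^+_f(x,s)^2}{2s} \le f(y^-_t) + \frac{D^-_f(x,t)^2}{2s} , \;\;\; f(y^-_t) + \frac{D^-_f(x,t)^2}{2t} \le f(y^+_s) + \frac{D^+_f(x,s)^2}{2t} . \end{aligned}$$

Summing these two inequalities and rearranging terms, one deduces

$$\begin{aligned} \left( \frac{1}{2s} - \frac{1}{2t}\right) D^+_f(x,s)^2 \le \left( \frac{1}{2s} - \frac{1}{2t}\right) D^-_f(x,t)^2 , \end{aligned}$$

as required, since \(s < t\). \(\square \)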

3.3 Intermediate-time duality and time-reversed potential

It is immediate to show by inspecting the definitions that we always have (e.g. [77, Theorem 7.34 (iii)] or [3, Proposition 2.17 (ii)]):

$$\begin{aligned} Q_{-s} \circ Q_s f \le f \;\;\; \forall s > 0 ; \end{aligned}$$

this is an inherent group-structure incompatibility of the Hopf-Lax forward and backward semi-groups. Note that for \(f = -\varphi \) where \(\varphi \) is a Kantorovich potential, we do have equality for \(s=1\), and in fact for all \(s \in [0,1]\). However, for \(f = Q_t(-\varphi )\), \(t \in (0,1)\) and \(s=1-t\), we can only assert the inequality ([77, Theorem 7.36], [3, Corollary 2.23 (i)]):

$$\begin{aligned} Q_{-(1-t)} (\varphi ^c) = Q_{-(1-t)} \circ Q_{1-t} \left( Q_t(-\varphi )\right) \le Q_t(-\varphi ) , \end{aligned} \tag{3.2}$$

and equality may not hold at every point of X (cf. [77, Remark 7.37]). Nevertheless, in our setting, the subset where equality is attained may be characterized as in the next proposition. We first introduce the following convenient notion:

Definition

(Time-Reversed Interpolating Potential) Given a Kantorovich potential \(\varphi : X \rightarrow {\mathbb {R}}\), define the time-reversed interpolating Kantorovich potential at time \(t \in [0,1]\), \({\bar{\varphi }}_t : X \rightarrow {\mathbb {R}}\), as

$$\begin{aligned} {\bar{\varphi }}_t(x) := Q_{1-t}(-\varphi ^c)(x) . \end{aligned}$$

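Note that \({\bar{\varphi }}_0 = \varphi \), \({\bar{\varphi }}_1 = -\varphi ^c\), and

$$\begin{aligned} {\bar{\varphi }}_t = -(\varphi ^c)_{1-t} \;\;\; \forall t \in [0,1] . \end{aligned}$$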

Proposition 3.6

(1) \(\varphi _0 = {\bar{\varphi }}_0 = \varphi \) and \(\varphi _1 = {\bar{\varphi }}_1 = -\varphi ^c\).

(2) For all \(t \in [0,1]\), \(\varphi _t \le {\bar{\varphi }}_t\).

(3) For any \(t \in (0,1)\), \(\varphi _t(x) = {\bar{\varphi }}_t(x)\) if and only if \(x \in \mathrm{e}_t(G_\varphi )\). In other words:

$$\begin{aligned} D(\mathring{G}_\varphi ) = \left\{ (x,t) \in X \times (0,1) \; ; \; \varphi _t(x) = {\bar{\varphi }}_t(x) \right\} . \end{aligned} \tag{3.3}$$

(1) is immediate by c-concavity, and (2) is a reformulation of (3.2), so the only assertion requiring proof is (3). The if direction is well-known (e.g. [77, Theorem 7.36], [3, Corollary 2.23 (ii)]), but the other direction appears to be new. It is based on the following simple lemma, which we will use again later on:

Lemma 3.7

Assume that for some \(x,y,z \in X\) and \(t \in (0,1)\):

Then x is a t-intermediate point between y and z:

and there exists a \(\varphi \)-Kantorovich geodesic \(\gamma : [0,1] \rightarrow X\) with \(\gamma (0) = y\), \(\gamma (t) =x\) and \(\gamma (1) = z\).

Proof

Using that

our assumption yields

On the other hand, the reverse inequality is always valid by the triangle and Cauchy–Schwarz inequalities:

It follows that we must have equality everywhere above, and (3.4) amounts to the equality case in the Cauchy–Schwarz inequality. Consequently, the concatenation \(\gamma : [0,1] \rightarrow X\) of any constant speed geodesic \(\gamma _1 : [0,t] \rightarrow X\) between y and x, with any constant speed geodesic \(\gamma _2 : [t,1] \rightarrow X\) between x and z, so that \(\gamma (0) = y\), \(\gamma (t) = x\) and \(\gamma (1) = z\), must be a constant speed geodesic itself (by the triangle inequality). Lastly, the equality in (3.5) implies that \(\gamma \in G_\varphi \), thereby concluding the proof. \(\square \)
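The triangle and Cauchy–Schwarz step can be illustrated in the Euclidean plane, a hypothetical choice of X. We assume, as a reading of the proof whose displays are not reproduced here, that the reverse inequality takes the form \(\mathsf {d}^2(y,z) \le \mathsf {d}^2(x,y)/t + \mathsf {d}^2(x,z)/(1-t)\). This follows from \(\mathsf {d}(y,z) \le \mathsf {d}(y,x) + \mathsf {d}(x,z)\) and \((a+b)^2 \le a^2/t + b^2/(1-t)\), with equality iff \(a = t(a+b)\). The sketch checks the inequality on random triples and the equality case when x is the t-intermediate point between y and z.

```python
import math
import random

def dist(p, q):
    """Euclidean distance in the plane."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def slack(x, y, z, t):
    """d(x,y)^2/t + d(x,z)^2/(1-t) - d(y,z)^2, non-negative by the triangle and Cauchy-Schwarz inequalities."""
    return dist(x, y) ** 2 / t + dist(x, z) ** 2 / (1 - t) - dist(y, z) ** 2

random.seed(1)
min_slack = math.inf
for _ in range(10000):
    x, y, z = [(random.uniform(-5, 5), random.uniform(-5, 5)) for _ in range(3)]
    min_slack = min(min_slack, slack(x, y, z, random.uniform(0.01, 0.99)))

# Equality case: x is the t-intermediate point on the segment from y to z.
y0, z0, t0 = (0.0, 0.0), (3.0, 4.0), 0.3
x0 = ((1 - t0) * y0[0] + t0 * z0[0], (1 - t0) * y0[1] + t0 * z0[1])
equality_slack = slack(x0, y0, z0, t0)
```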

Proof of Proposition 3.6 (3)

We begin with the known direction. Let \(x = \gamma _t\) with \(\gamma \in G_\varphi \). Apply Lemma 3.3 to \(\gamma \) with \(s=0\) and \(r=t\):

and to \(\gamma ^c \in G_{\varphi ^c}\) with \(s=1\) and \(r=1-t\):

where we used that \((\varphi ^c)_1 = -(\varphi ^c)^c = -\varphi \). Summing these two identities, we obtain:

as asserted.

For the other direction, assume that \(\varphi _t(x) = - (\varphi ^c)_{1-t}(x)\) for some \(x \in X\) and \(t \in (0,1)\). By Lemma 3.5 applied to the lower semi-continuous functions \(-\varphi \) and \(-\varphi ^c\), there exist \(y_t,z_t \in X\) so that

Summing the two equations, the assertion follows immediately from Lemma 3.7. \(\square \)

We also record the following immediate corollary of Lemma 3.2:

Corollary 3.8

(1) \((x,t) \mapsto {\bar{\varphi }}_t(x)\) is upper semi-continuous on \(X \times [0,1)\) and continuous on \(X \times (0,1)\).

(2) For every \(x \in X\), \([0,1] \ni t \mapsto {\bar{\varphi }}_t(x)\) is monotone non-decreasing and continuous on [0, 1).

Finally, in view of (3.3), we deduce for free:

Corollary 3.9

\(D(\mathring{G}_\varphi )\) is a closed subset of \(X \times (0,1)\).

Proof

Immediate from (3.3) by the continuity of \(\varphi _t(x)\) and \({\bar{\varphi }}_t(x)\) on \(X \times (0,1)\).

\(\square \)

3.4 Length functions \(\ell _t^{\pm }\) and \({\bar{\ell }}_t^{\pm }\)

Definition

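(Length functions \(\ell _t^{\pm },{\bar{\ell }}_t^{\pm }\)) Given a Kantorovich potential \(\varphi : X \rightarrow {\mathbb {R}}\), denote

$$\begin{aligned} \ell ^{\pm }_t(x) := \frac{D^{\pm }_{-\varphi }(x,t)}{t} , \;\;\; {\bar{\ell }}^{\pm }_t(x) := \frac{D^{\pm }_{-\varphi ^c}(x,1-t)}{1-t} , \;\;\; (x,t) \in X \times (0,1) . \end{aligned}$$

To provide motivation for these definitions, let us mention that we will shortly see that if \(x = \gamma _t\) with \(\gamma \in G_\varphi \) and \(t \in (0,1)\), then

$$\begin{aligned} \ell ^{+}_t(x) = \ell ^{-}_t(x) = {\bar{\ell }}^{+}_t(x) = {\bar{\ell }}^{-}_t(x) = \ell (\gamma ) . \end{aligned}$$

In particular, all \(\varphi \)-Kantorovich geodesics having x as their t-midpoint have the same length. These facts do not seem to have been noted previously in the literature, and they will be crucially exploited in this work.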

Definition

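For \({\tilde{\ell }} = \ell ,{\bar{\ell }}\), introduce the following set:

$$\begin{aligned} D_{{\tilde{\ell }}} := \left\{ (x,t) \in X \times (0,1) \; ; \; {\tilde{\ell }}^+_t(x) = {\tilde{\ell }}^-_t(x) \right\} , \end{aligned}$$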

and on it define \({\tilde{\ell }}_t(x)\) as the common value \({\tilde{\ell }}_t^+(x) = {\tilde{\ell }}_t^-(x)\).

Recalling that \(\varphi _t = -Q_t(-\varphi )\) and \({\bar{\varphi }}_t = Q_{1-t}(-\varphi ^c)\), we begin by translating Theorem 3.4 into the following corollary. We freely use standard properties of semi-convex (semi-concave) functions, like twice a.e. differentiability, non-negativity (non-positivity) of the singular part of the distributional second derivative (see e.g. Lemma A.11), etc.

Corollary 3.10

Let \(\varphi : X \rightarrow {\mathbb {R}}\) denote a Kantorovich potential. Then:

(1) For \({\tilde{\ell }}= \ell ,{\bar{\ell }}\) and \({\tilde{\varphi }}=\varphi ,{\bar{\varphi }}\), \({\tilde{\ell }}^{\pm }_t(x)\) are locally finite on \(X \times (0,1)\), and \((x,t) \mapsto {\tilde{\varphi }}_t(x)\) is locally Lipschitz there.

(2) For \({\tilde{\ell }} = \ell ,{\bar{\ell }}\), \((x,t) \mapsto {\tilde{\ell }}^{\pm }_t(x)\) is upper (\({\tilde{\ell }}^{+}_t(x)\)) / lower (\({\tilde{\ell }}^{-}_t(x)\)) semi-continuous on \(X \times (0,1)\). In particular, the subset \(D_{{\tilde{\ell }}} \subset X \times (0,1)\) is Borel and \((x,t) \mapsto {\tilde{\ell }}_t(x)\) is continuous on \(D_{{\tilde{\ell }}}\).

(3) For every \(x \in X\) we have

$$\begin{aligned} \partial _t^{\pm } \varphi _t(x) = \frac{\ell ^{\pm }_t(x)^2}{2} ~,~ \partial _t^{\pm } {\bar{\varphi }}_t(x) = \frac{{\bar{\ell }}^{\mp }_t(x)^2}{2}\;\;\; \forall t \in (0,1) . \end{aligned}$$

In particular, for \({\tilde{\ell }}= \ell ,{\bar{\ell }}\) and \({\tilde{\varphi }}=\varphi ,{\bar{\varphi }}\), respectively, the map \((0,1) \ni t \mapsto {\tilde{\varphi }}_t(x)\) is locally Lipschitz, and it is differentiable at \(t \in (0,1)\) iff \(t \in D_{{\tilde{\ell }}}(x)\), the set on which both maps \((0,1) \ni t \mapsto {\tilde{\ell }}^{\pm }_t(x)\) coincide. \(D_{{\tilde{\ell }}}(x)\) is precisely the set of continuity points of both maps, and thus coincides with (0, 1) up to at most countably many exceptions. Consequently

$$\begin{aligned} {\tilde{\varphi }}_{t_2}(x) - {\tilde{\varphi }}_{t_1}(x) = \int _{t_1}^{t_2} \frac{{\tilde{\ell }}^2_\tau (x)}{2} d\tau \;\;\; \forall t_1,t_2 \in (0,1) . \end{aligned}$$

(4) For every \(x \in X\):

(a) Both maps \((0,1) \ni t \mapsto t \ell ^{\pm }_t(x)\) are monotone non-decreasing. In particular, \(D_{\ell }(x) \ni t \mapsto \ell ^2_t(x)\) is differentiable a.e., the singular part of its distributional derivative is non-negative, \((0,1) \ni t\mapsto \varphi _t(x)\) is locally semi-convex, and

$$\begin{aligned} {\underline{\partial }}_t \frac{\ell _t^2(x)}{2} \ge -\frac{1}{t} \ell _t^2(x) \;\;\; \forall t \in D_{\ell }(x) . \end{aligned} \tag{3.6}$$

(b) Both maps \((0,1) \ni t \mapsto (1-t) {\bar{\ell }}^{\pm }_t(x)\) are monotone non-increasing. In particular, \(D_{{\bar{\ell }}}(x) \ni t \mapsto {\bar{\ell }}^2_t(x)\) is differentiable a.e., the singular part of its distributional derivative is non-positive, \((0,1) \ni t \mapsto {\bar{\varphi }}_t(x)\) is locally semi-concave, and

$$\begin{aligned} {\overline{\partial }}_t \frac{{\bar{\ell }}_t^2(x)}{2} \le \frac{1}{1-t} {\bar{\ell }}_t^2(x) \;\;\; \forall t \in D_{{\bar{\ell }}}(x) . \end{aligned} \tag{3.7}$$

Proof

The only point requiring verification is that monotonicity of \(t \mapsto t \ell _t(x)\) in (4a) and \(t \mapsto (1-t) {\bar{\ell }}_t\) in (4b) implies (3.6) and (3.7), respectively. For instance, using the continuity of \(t \mapsto \ell _t(x)\) on \(D_{\ell }(x)\), (3.6) is clearly equivalent to

Now, if \(\ell _t(x) = 0\) the monotonicity directly implies \({\underline{\partial }}_t \ell _t(x) \ge 0\) and establishes (3.8), whereas otherwise, (3.8) is equivalent by the chain-rule (and again the continuity of \(t \mapsto \ell _t(x)\) on \(D_{\ell }(x)\)) to

which in turn is a consequence of the aforementioned monotonicity. The proof of (3.7) follows identically. \(\square \)
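The conclusions of Corollary 3.10 can be tested on a hypothetical one-dimensional example, not taken from the text. Take \(X = {\mathbb {R}}\) and \(\varphi (x) = -c x^2/2\) with \(c > -1\). This is a Kantorovich potential for the quadratic cost, since \(x^2/2 - \varphi (x) = (1+c)x^2/2\) is convex. Solving the supremum in \(\varphi _t = -Q_t(-\varphi )\) by hand gives the maximizer \(y = x/(1+ct)\), hence \(\varphi _t(x) = -c x^2/(2(1+ct))\) and \(\ell _t(x) = |c||x|/(1+ct)\). These closed forms are our own computation. The sketch checks (3), (4a) and (3.6) by finite differences.

```python
import itertools

# Hypothetical example, not from the text: X = R and phi(x) = -c x^2 / 2 with c > -1.
# Closed forms derived by hand from phi_t = -Q_t(-phi), with maximizer y = x / (1 + c t):
#   phi_t(x) = -c x^2 / (2 (1 + c t)),   ell_t(x) = |x - y| / t = |c| |x| / (1 + c t).
def phi_t(x, t, c):
    return -c * x * x / (2.0 * (1.0 + c * t))

def ell_t(x, t, c):
    return abs(c) * abs(x) / (1.0 + c * t)

def check(x, t, c, h=1e-6):
    """Finite-difference test of Corollary 3.10 (3), (4a) and of (3.6) at the point (x, t)."""
    dphi = (phi_t(x, t + h, c) - phi_t(x, t - h, c)) / (2 * h)        # central difference in t
    deriv_err = abs(dphi - ell_t(x, t, c) ** 2 / 2)                    # d/dt phi_t = ell_t^2 / 2
    monotone = (t + h) * ell_t(x, t + h, c) >= t * ell_t(x, t, c)      # t * ell_t non-decreasing
    d_half_ell2 = (ell_t(x, t + h, c) ** 2 - ell_t(x, t - h, c) ** 2) / (4 * h)
    slack_36 = d_half_ell2 + ell_t(x, t, c) ** 2 / t                    # (3.6): should be >= 0
    return deriv_err, monotone, slack_36

results = [check(x, t, c) for x, t, c in
           itertools.product((-2.0, 0.0, 0.7, 3.0), (0.1, 0.5, 0.9), (-0.9, -0.5, 0.5, 2.0))]
```

For this example the slack in (3.6) equals \(c^2 x^2 / (t(1+ct)^3) \ge 0\). It tends to be small only when \(\ell _t(x)\) itself is small.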

We now arrive at the main new result of this section, which will be constantly and crucially used in this work:

Theorem 3.11

Let \(\varphi : X \rightarrow {\mathbb {R}}\) denote a Kantorovich potential.

-

(1)

For all \(x \in \mathrm{e}_t(G_\varphi )\) with \(t \in (0,1)\), we have

$$\begin{aligned} \ell ^{+}_t(x) = \ell ^{-}_t(x) = {\bar{\ell }}^{+}_t(x) = {\bar{\ell }}^{-}_t(x) = \ell (\gamma ) , \end{aligned}$$for any \(\gamma \in G_\varphi \) so that \(\gamma _t = x\). In other words

$$\begin{aligned} D(\mathring{G}_\varphi ) = \left\{ (x,t) \in X \times (0,1) \; ; \; x = \gamma _t \; , \; \gamma \in G_\varphi \right\} \subset D_\ell \cap D_{{\bar{\ell }}}, \end{aligned}$$and moreover \(\ell _t(x) = {\bar{\ell }}_t(x)\) there.

(2) For all \(x \in X\), \(\mathring{G}_\varphi (x) \ni t \mapsto \ell _t(x)={\bar{\ell }}_t(x)\) is locally Lipschitz:

$$\begin{aligned}&\left| \sqrt{t (1-t)} \ell _{t}(x) - \sqrt{s (1-s)} \ell _{s}(x)\right| \nonumber \\&\quad \le \sqrt{\ell _{t}(x) \ell _{s}(x)} \left| \sqrt{t (1-s)} - \sqrt{s (1-t)} \right| \;\;\; \forall t,s \in \mathring{G}_\varphi (x).\qquad \end{aligned}$$(3.9)

(3) For all \((x,t) \in D(\mathring{G}_\varphi ) \subset D_\ell \cap D_{{\bar{\ell }}}\) we have, for both \(* = {\underline{{\mathcal {P}}}}_2 {\bar{\varphi }}_t(x) ,{\overline{{\mathcal {P}}}}_2 \varphi _t(x)\):

$$\begin{aligned} -\frac{1}{t} \ell _t^2(x) \le {\underline{\partial }}_t \frac{\ell _t^2(x)}{2} \le {\underline{{\mathcal {P}}}}_2 \varphi _t(x) \le * \le {\overline{{\mathcal {P}}}}_2 {\bar{\varphi }}_t(x) \le {\overline{\partial }}_t \frac{{\bar{\ell }}_t^2(x)}{2} \le \frac{1}{1-t} \ell _t^2(x) , \end{aligned}$$where the Peano (partial) derivatives are with respect to the t variable.

(4) For all \((x,t) \in D(\mathring{G}_\varphi ) \subset D_\ell \cap D_{{\bar{\ell }}}\) we have:

$$\begin{aligned} {\overline{\partial }}_t \frac{\ell _t^2(x)}{2}&\le {\overline{\partial }}_t \frac{{\bar{\ell }}_t^2(x)}{2} + \left( \frac{1}{1-t} + \frac{1}{t}\right) \ell _t^2(x) \le \left( \frac{2}{1-t} + \frac{1}{t}\right) \ell _t^2(x) , \\ {\underline{\partial }}_t \frac{{\bar{\ell }}_t^2(x)}{2}&\ge {\underline{\partial }}_t \frac{\ell _t^2(x)}{2} -\left( \frac{1}{t} + \frac{1}{1-t}\right) \ell _t^2(x) \ge -\left( \frac{2}{t} + \frac{1}{1-t}\right) \ell _t^2(x) . \end{aligned}$$

In particular, for every \(x \in X\), we have:

with \(t \mapsto \frac{\ell ^2_t(x)}{2}\) and \(t \mapsto \frac{{\bar{\ell }}^2_t(x)}{2}\) continuous on \(D_\ell (x) \cap D_{{\bar{\ell }}}(x)\), differentiable a.e. there, and having locally bounded lower and upper derivatives on \(\mathring{G}_{\varphi }(x) \subset D_\ell (x) \cap D_{{\bar{\ell }}}(x)\) as in (3) and (4).

Proof

To see (1), let \((x,t) \in D(\mathring{G}_\varphi )\). By Proposition 3.6 (3), this is equivalent to \(\varphi _t(x) = {\bar{\varphi }}_t(x)\). In addition, Lemma 3.5 assures the existence of \(y^{\pm }\) and \(z^{\pm }\) in X so that

Equating both expressions and applying Lemma 3.7, we deduce that x is the t-midpoint of a geodesic connecting \(y^{\pm }\) and \(z^{\pm }\) (for all four possibilities), and that

so that all four possibilities above coincide. We remark in passing that this already implies, in a non-branching setting, that necessarily \(y^+ = y^-\) and \(z^+=z^-\), i.e. the uniqueness of a \(\varphi \)-Kantorovich geodesic with t-midpoint x.

Furthermore, if \(x = \gamma _t\) for some \(\gamma \in G_\varphi \), then by Lemma 3.3:

It follows by definition of \(D^{\pm }_{-\varphi }(x,t)\) that:

which together with (3.10) establishes that \(\ell (\gamma ) = \ell _t(x) = {\bar{\ell }}_t(x)\).

To see (2), let \(\gamma ^t, \gamma ^s \in G_{\varphi }\) be so that \(\gamma ^t_t = \gamma ^s_s = x\), for some \(t,s \in (0,1)\). Then

for \((p,q) = (t,s)\) and \((p,q) = (s,t)\). Summing these two inequalities, we obtain the well-known c-cyclic monotonicity of the set \(\left\{ (\gamma ^t_0,\gamma ^t_1),(\gamma ^s_0,\gamma ^s_1)\right\} \):

To evaluate the right-hand side, we simply pass through x and employ the triangle inequality:

Plugging this above and rearranging terms, we obtain

Completing the square by subtracting \(2 \sqrt{t(1-t)s(1-s)} \ell (\gamma ^t) \ell (\gamma ^s)\) from both sides, and recalling that \(\ell (\gamma ^p) = \ell _p(x)\) for \(p=t,s\), we readily obtain (3.9). In particular, using \(t=s\), the above argument recovers the last assertion of (1) that \(\ell (\gamma )\) is the same for all \(\gamma \in G_\varphi \) so that \(\gamma _t = x\).
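For the reader's convenience, the elementary algebra behind the last two steps can be written out as follows. Here \(a := \ell (\gamma ^t)\) and \(b := \ell (\gamma ^s)\) are abbreviations introduced only for this sketch. The first line combines the c-cyclic monotonicity of the pair for the quadratic cost with the triangle-inequality bounds \(\mathsf {d}(\gamma ^t_0,\gamma ^s_1) \le t a + (1-s) b\) and \(\mathsf {d}(\gamma ^s_0,\gamma ^t_1) \le s b + (1-t) a\), obtained by passing through \(x = \gamma ^t_t = \gamma ^s_s\):

$$\begin{aligned} a^2 + b^2&\le \left( t a + (1-s) b\right) ^2 + \left( s b + (1-t) a\right) ^2 , \\ t(1-t) a^2 + s(1-s) b^2&\le \left( t(1-s) + s(1-t)\right) a b , \\ \left( \sqrt{t(1-t)}\, a - \sqrt{s(1-s)}\, b\right) ^2&\le a b \left( \sqrt{t(1-s)} - \sqrt{s(1-t)}\right) ^2 . \end{aligned}$$

The second line uses \(1 - t^2 - (1-t)^2 = 2t(1-t)\) and its analogue in s, and the third subtracts \(2 \sqrt{t(1-t)s(1-s)}\, a b\) from both sides, noting that \(t(1-t)s(1-s) = t(1-s) \cdot s(1-t)\). Taking square roots yields (3.9).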

To see (3), recall that given \(x \in X\), we know by Proposition 3.6 that \(\varphi _t(x) \le {\bar{\varphi }}_t(x)\) for all \(t \in (0,1)\) with equality iff \(t \in \mathring{G}_\varphi (x)\). Since \(\mathring{G}_\varphi (x) \subset D_\ell (x) \cap D_{{\bar{\ell }}}(x)\) by (1), we know that both maps \(t \mapsto {\tilde{\varphi }}_t(x)\), \({\tilde{\varphi }} = \varphi ,{\bar{\varphi }}\), are differentiable at \(t_0 \in \mathring{G}_\varphi (x)\), and we see again that \(\frac{\ell ^2_{t_0}(x)}{2} = \partial _t \varphi _{t_0}(x) = \partial _t {\bar{\varphi }}_{t_0}(x) = \frac{{\bar{\ell }}^2_{t_0}(x)}{2}\), since the derivatives of a function and its majorant must coincide at a mutual point of differentiability where they touch. Moreover, defining \({\tilde{h}} = h,{\bar{h}}\) as

it follows that \(h \le {\bar{h}}\) (on \((-t_0,1-t_0)\)). Dividing by \(\varepsilon ^2\) and taking appropriate subsequential limits, we obviously obtain

Combining these inequalities with those of Lemma 2.4, (3.6) and (3.7), the chain of inequalities in (3) readily follows.

To see (4), let \(t_0 \in \mathring{G}_\varphi (x)\). Consider the function \(f(t) := {\bar{\varphi }}_t(x) - \varphi _t(x)\) on (0, 1), which is locally semi-concave by Corollary 3.10. By Proposition 3.6, we know that \(f \ge 0\) with \(f(t_0) = 0\). The function f is differentiable on \(D_{\ell }(x) \cap D_{{\bar{\ell }}}(x)\) and satisfies \(f'(t) = \frac{{\bar{\ell }}^2_{t}(x)}{2} - \frac{\ell ^2_{t}(x)}{2}\) there. In particular, this holds at \(t_0 \in \mathring{G}_\varphi (x) \subset D_{\ell }(x) \cap D_{{\bar{\ell }}}(x)\) by (1) and \(f'(t_0) = 0\). Note that by Corollary 3.10:

In particular, since both \(D_{{\tilde{\ell }}}(x) \ni t \mapsto {\tilde{\ell }}_t(x)\) are continuous at \(t = t_0 \in D_{\ell }(x) \cap D_{{\bar{\ell }}}(x)\), for \({\tilde{\ell }} = \ell ,{\bar{\ell }}\), it follows that

It follows that on the open interval \(I_\delta := (t_0-\delta ,t_0 + \delta ) \cap (0,1)\), \(f - C_\varepsilon \frac{t^2}{2}\) is concave with \(C_\varepsilon \) defined as the constant on the right-hand side above. Applying Lemma 3.12 below to the translated function \(f(\cdot + t_0)\) on the interval \(I_\delta - t_0\), it follows that:

As \({\bar{\ell }}_{t_0}(x) = \ell _{t_0}(x)\) by (1), we obtain

The assertion of (4) now follows by taking appropriate subsequential limits as \(t \rightarrow t_0\) and using the fact that \(\varepsilon > 0\) was arbitrary. \(\square \)

Lemma 3.12

Given \(I \subset {\mathbb {R}}\) an open interval containing 0, let \(f : I \rightarrow {\mathbb {R}}\) denote a C-semi-concave function, so that \(I \ni t \mapsto f - C \frac{t^2}{2}\) is concave, \(C \ge 0\). Assume that \(f \ge 0\) on I, that f is differentiable at 0 and that \(f(0) = f'(0) = 0\). Then \(\underline{\partial _t}|_{t=0} f'(t) \ge -C\), and moreover, \(\frac{f'(t)}{t} \ge -C\) for all \(t \in D \cap I/2\), where \(D \subset I\) denotes the subset (of full measure) of differentiability points of f.

Note that the C-semi-concavity implies \({\overline{\partial }}_t|_{t=0} f'(t) \le C\), whereas the conclusion is a bound in the opposite direction. It is not hard to verify that the asserted lower bound is in fact best possible.

Proof of Lemma 3.12

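Set \(g = f'\) on D. The C-semi-concavity is equivalent to the statement that \(g(t) - C t\) is non-increasing on D, so that \(g(t_2) \le g(t_1) + C (t_2 - t_1)\) for all \(t_1,t_2 \in D\) with \(t_1 < t_2\). It follows that necessarily \(g(t) \ge -C t\) for all \(t \in D \cap I/2\) with \(t \ge 0\), since for \(t > 0\):

$$\begin{aligned} 0 \le f(2t) = \int _0^t g(s) \, ds + \int _t^{2t} g(s) \, ds \le \int _0^t C s \, ds + \int _t^{2t} \left( g(t) + C (s-t)\right) ds = t \, g(t) + C t^2 , \end{aligned}$$

where we used that f is locally Lipschitz (being semi-concave), hence absolutely continuous, that \(f(0) = g(0) = 0\), and the above monotonicity with \((t_1,t_2) = (0,s)\) and \((t_1,t_2) = (t,s)\), respectively; dividing by \(t > 0\) yields the claim.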

Repeating the same argument for \(t \mapsto f(-t)\), we see that \(-g(t) \ge C t\) for all \(t \in D \cap I/2\) with \(t \le 0\). This concludes the proof. \(\square \)
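The remark that the lower bound of Lemma 3.12 is best possible can be checked on a concrete function. The sketch below uses one hypothetical example of our own choosing, the "double well" \(f(s) = \frac{C}{2}\min \{s^2,(s-2t_0)^2\}\) on \(I = (-1,1)\) with \(0 < t_0 < 1/2\): it is non-negative, satisfies \(f(0) = f'(0) = 0\), and \(f(s) - C\frac{s^2}{2} = \min \{0, 2Ct_0(t_0 - s)\}\) is concave. The helper names `double_well_prime` and `ratios_on_half_interval` are illustrative only; the code evaluates \(f'(s)/s\) at grid points of \(I/2\) where f is differentiable.

```python
from typing import List


def double_well_prime(s: float, C: float, t0: float) -> float:
    """Derivative of f(s) = (C/2) * min(s**2, (s - 2*t0)**2), valid for s != t0.

    For s < t0 the first branch is active (f' = C*s); for s > t0 the second
    branch is active (f' = C*(s - 2*t0)). The only kink is at s = t0.
    """
    if s == t0:
        raise ValueError("f is not differentiable at s = t0")
    return C * s if s < t0 else C * (s - 2 * t0)


def ratios_on_half_interval(C: float, t0: float, n: int = 2000) -> List[float]:
    """Values of f'(s)/s at grid points s in I/2 = (-1/2, 1/2), skipping 0 and t0."""
    ratios = []
    for k in range(1, n):
        s = -0.5 + k / n  # grid strictly inside (-0.5, 0.5)
        if s == 0.0 or s == t0:
            continue
        ratios.append(double_well_prime(s, C, t0) / s)
    return ratios


C, t0 = 3.0, 0.25
r = ratios_on_half_interval(C, t0)
print(min(r) >= -C)        # True: the bound f'(s)/s >= -C holds on the grid
print(min(r) < -0.99 * C)  # True: it is nearly attained just to the right of t0
```

Analytically, \(f'(s)/s = C(1 - 2t_0/s)\) for \(s \in (t_0, 1/2)\), which tends to \(-C\) as \(s \downarrow t_0\); this is the equality case of the estimate \(0 \le f(2t) \le t\, g(t) + C t^2\) used in the proof.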

In a sense, Theorem 3.11 (2) is the temporal analogue of the spatial 1/2-Hölder regularity proved by Villani in [77, Theorem 8.22]. Formally taking \(s \rightarrow t\) in (3.9), it is easy to check that one obtains (for both \({\tilde{\ell }} = \ell ,{\bar{\ell }}\)) stronger bounds than in Theorem 3.11 (3) and (4):

$$\begin{aligned} -\frac{1}{t} \ell _t^2(x) \le \partial _t \frac{{\tilde{\ell }}_t^2(x)}{2} \le \frac{1}{1-t} \ell _t^2(x) . \end{aligned}$$(3.11)

However, we do not know how to rigorously pass from (3.9) to (3.11) or vice versa (by differentiation or integration, respectively), since we cannot exclude the possibility that the (relatively closed in (0, 1)) set \(\mathring{G}_\varphi (x)\) has isolated points, nor that it is disconnected. Instead, we can obtain the following stronger version of (3.11) which only holds for a.e. \(t \in \mathring{G}_\varphi (x)\), but will prove to be very useful later on.
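As a sanity check of (3.9) and of the formal bounds (3.11), one can test them on a hypothetical one-dimensional toy model of our own choosing, which plays no role in the argument. On \(X = {\mathbb {R}}\), consider the dilation \(y \mapsto (1+\lambda ) y\) with \(\lambda > -1\), which is increasing and hence optimal for the quadratic cost, and assume that its transport segments \(\gamma _t = (1+t\lambda ) y\) are the \(\varphi \)-Kantorovich geodesics. By Theorem 3.11 (1), \(\ell _t(x)\) is then the length \(|\lambda y|\) of the segment through x at time t, namely \(\ell _t(x) = |\lambda x|/(1+t\lambda )\). The function names below are illustrative, and the time derivative in (3.11) is approximated by a central difference.

```python
import math


def ell(x: float, t: float, lam: float) -> float:
    """Length of the toy transport segment through x at time t.

    Hypothetical model: segments t -> (1 + t*lam) * y on the real line with
    lam > -1, so the segment through x at time t starts at y = x / (1 + t*lam)
    and has length |lam * y|.
    """
    return abs(lam * x) / (1.0 + t * lam)


def holds_3_9(x: float, t: float, s: float, lam: float, tol: float = 1e-12) -> bool:
    """Check inequality (3.9) for the pair of times t, s in (0, 1)."""
    lt, ls = ell(x, t, lam), ell(x, s, lam)
    lhs = abs(math.sqrt(t * (1 - t)) * lt - math.sqrt(s * (1 - s)) * ls)
    rhs = math.sqrt(lt * ls) * abs(math.sqrt(t * (1 - s)) - math.sqrt(s * (1 - t)))
    return lhs <= rhs + tol


def holds_3_11(x: float, t: float, lam: float, h: float = 1e-6, rtol: float = 1e-6) -> bool:
    """Check -l^2/t <= d/dt (l^2/2) <= l^2/(1-t), derivative by central difference."""

    def half_sq(r: float) -> float:
        return 0.5 * ell(x, r, lam) ** 2

    deriv = (half_sq(t + h) - half_sq(t - h)) / (2 * h)
    l2 = ell(x, t, lam) ** 2
    slack = rtol * max(1.0, l2 / (t * (1 - t)))  # absorbs finite-difference error
    return -l2 / t - slack <= deriv <= l2 / (1 - t) + slack


lams = [-0.9, -0.5, 0.5, 2.0, 10.0]
times = [k / 20 for k in range(1, 20)]
points = [-1.5, -0.3, 0.0, 0.7, 2.0]
print(all(holds_3_9(x, t, s, lam) for lam in lams for x in points for t in times for s in times))
print(all(holds_3_11(x, t, lam) for lam in lams for x in points for t in times))
```

In this model, a direct computation gives \(\partial _t \frac{\ell _t^2(x)}{2} = -\frac{\lambda }{1+t\lambda } \ell _t^2(x)\); the lower bound in (3.11) holds whenever \(1 + t\lambda > 0\), while the upper bound is equivalent to \(\lambda \ge -1\). For, e.g., \(\lambda = -1.2\) the map is decreasing, hence not optimal, and both (3.9) and the upper bound in (3.11) fail at suitable sample points.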

Corollary 3.13

For all \(x \in X\), for a.e. \(t \in \mathring{G}_\varphi (x)\), \(\partial _t \ell ^2_t(x)\) and \(\partial _t {\bar{\ell }}^2_t(x)\) exist, coincide, and satisfy:

Proof

By Corollary 3.10, for all \(x \in X\) and \({\tilde{\ell }} = \ell ,{\bar{\ell }}\), \(t \mapsto {\tilde{\ell }}^2_t(x)\) is differentiable a.e. on \(D_{{\tilde{\ell }}}(x)\). Consequently, the first and third equalities in (3.12) follow for a.e. \(t \in \mathring{G}_\varphi (x) \subset D_{\ell }(x) \cap D_{{\bar{\ell }}}(x)\) by Remark 2.1. The second equality follows since \(\ell _t(x) = {\bar{\ell }}_t(x)\) for \(t \in \mathring{G}_\varphi (x)\) by Theorem 3.11. The lower and upper bounds in (3.12) then follow from Theorem 3.11 (3) (or as in (3.11), by taking the limit as \(s \rightarrow t\) in Theorem 3.11 (2)). \(\square \)

4.5 Null-geodesics

Definition 3.14

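(Null-Geodesics and Null-Geodesic Points) Given a Kantorovich potential \(\varphi : X \rightarrow {\mathbb {R}}\), denote the subset of null \(\varphi \)-Kantorovich geodesics by

$$\begin{aligned} G_\varphi ^0 := \left\{ \gamma \in G_\varphi \; ; \; \ell (\gamma ) = 0 \right\} . \end{aligned}$$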

Its complement in \(G_\varphi \) will be denoted by \(G_\varphi ^+\). The subset of X of null \(\varphi \)-Kantorovich geodesic points is denoted by

$$\begin{aligned} X^0 := \mathrm{e}_0(G_\varphi ^0) . \end{aligned}$$

Its complement in X will be denoted by \(X^+\).

The following provides a convenient equivalent characterization of \(X^0\) and \(X^+\):

Lemma 3.15

Given \(x \in X\), the following statements are equivalent:

(1) \(x \in X^0\), i.e. \(\varphi (x) + \varphi ^c(x) = 0\).

(2) \(\forall t \in (0,1)\), \(\varphi _t(x) = {\bar{\varphi }}_t(x) = \varphi (x) = -\varphi ^c(x)\).

(3) \(\forall t \in (0,1)\), \(\varphi _t(x) = c\) and \({\bar{\varphi }}_t(x) = {\bar{c}}\) for some \(c,{\bar{c}} \in {\mathbb {R}}\).

(4) \(D_{\ell }(x) = D_{{\bar{\ell }}}(x) = (0,1)\) and \(\;\forall t \in (0,1) \;\; \ell _t(x) = {\bar{\ell }}_t(x) = 0\).

(5) \(\exists t_0 \in \mathring{G}_\varphi (x)\) so that \(\varphi _{t_0}(x) = \varphi (x)\) or \({\bar{\varphi }}_{t_0}(x) = \varphi (x)\) or \(\varphi _{t_0}(x) = -\varphi ^c(x)\) or \({\bar{\varphi }}_{t_0}(x) = -\varphi ^c(x)\).

(6) \(\exists t_0 \in \mathring{G}_\varphi (x)\) so that \(\ell _{t_0}^{-}(x) = 0\) or \(\ell _{t_0}^{+}(x) = 0\) or \({\bar{\ell }}_{t_0}^{-}(x) = 0\) or \({\bar{\ell }}_{t_0}^+(x) = 0\).

In other words, we have the following dichotomy: all \(\varphi \)-Kantorovich geodesics having \(x \in X\) as some interior mid-point have either strictly positive length (iff \(x \in X^+\)) or zero length (iff \(x \in X^0\)).

Remark 3.16

In fact, we always have \(\varphi _t(x) = {\bar{\varphi }}_t(x)\) and \(\ell _t(x) = {\bar{\ell }}_t(x)\) for \(t \in \mathring{G}_\varphi (x) \subset D_{\ell }(x) \cap D_{{\bar{\ell }}}(x)\) by Theorem 3.11, so we may simply write “\(\varphi _{t_0}(x) = \varphi (x)\) or \(\varphi _{t_0}(x)= -\varphi ^c(x)\)” and “\(\ell _{t_0}(x) = {\bar{\ell }}_{t_0}(x) = 0\)” in statements (5) and (6), respectively. However, we chose to formulate these statements with the (a-priori) minimal requirements.

Proof of Lemma 3.15

\((1) \Rightarrow (2)\) is straightforward: for instance, (1) is by definition identical to \(\varphi _1(x) = \varphi _0(x)\) and (2) follows by the monotonicity of \([0,1] \ni t \mapsto {\tilde{\varphi }}_t(x)\) for both \({\tilde{\varphi }} = \varphi ,{\bar{\varphi }}\); alternatively, apply Lemma 3.3 to the null geodesic \(\gamma ^0 \equiv x\) with respect to both Kantorovich potentials \(\varphi \) and \(\varphi ^c\).

\((2) \,\!\Rightarrow \!\, (3)\) is trivial.

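\((3)\,\!\!\Leftrightarrow \!\!\,(4)\) follows by using that \(D_{{\tilde{\ell }}}(x)\) is characterized as the subset of t-differentiability points of \({\tilde{\varphi }}_t(x)\) on (0, 1), with \(\partial _t {\tilde{\varphi }}_t(x) = {\tilde{\ell }}_t^2(x)/2\) there, for both \({\tilde{\ell }} = \ell ,{\bar{\ell }}\) and the corresponding \({\tilde{\varphi }} = \varphi ,{\bar{\varphi }}\).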

\((3)\,\!\Rightarrow \!\,(1)\): by the continuity of \(t \mapsto \varphi _t(x)\) from the left at \(t=1\) it follows that \(c = \varphi _1(x)\), and similarly the continuity of \(t \mapsto {\bar{\varphi }}_t(x)\) from the right at \(t=0\) yields that \({\bar{c}} = {\bar{\varphi }}_0(x) = \varphi (x)\). Since always \(\varphi \le {\bar{\varphi }}\), we deduce \(\varphi _1(x) = c \le {\bar{c}} = \varphi (x)\). On the other hand, we always have \(\varphi (x) \le \varphi _1(x)\) by monotonicity, so we conclude that \(\varphi (x) = \varphi _1(x)\), establishing statement (1). This concludes the proof of the equivalence \((1)\,\! \Leftrightarrow \!\,(2) \,\!\Leftrightarrow \!\,(3) \,\!\Leftrightarrow \!\, (4)\).

\((2) \,\!\Rightarrow \!\, (5)\) and \((4)\,\! \Rightarrow \!\,(6)\) are trivial.

\((5) \,\!\!\Rightarrow \!\!\,(6)\) is straightforward: for instance, if \({\tilde{\varphi }}_{t_0}(x) = {\tilde{\varphi }}_0(x) = \varphi (x)\) for some \(t_0 \in (0,1)\) and \({\tilde{\varphi }} \in \left\{ \varphi ,{\bar{\varphi }}\right\} \), then by monotonicity, \({\tilde{\varphi }}_t(x) = \varphi (x)\) for all \(t \in [0,t_0]\), and hence the left derivative at \(t=t_0\) satisfies \(\ell ^{-}_{t_0}(x) = \partial _t^{-}|_{t=t_0} \varphi _t(x) = 0\) if \({\tilde{\varphi }} = \varphi \) and \({\bar{\ell }}^{+}_{t_0}(x) = \partial _t^{-}|_{t=t_0} {\bar{\varphi }}_t(x) = 0\) if \({\tilde{\varphi }} = {\bar{\varphi }}\). If \({\tilde{\varphi }}_{t_0}(x) = {\tilde{\varphi }}_1(x) = -\varphi ^c(x)\), repeat the argument using the right derivative.