Abstract

We explore the supercritical phase of the vertex-reinforced jump process (VRJP) and the \(\mathbb {H}^{2|2}\)-model on rooted regular trees. The VRJP is a random walk, which is more likely to jump to vertices on which it has previously spent a lot of time. The \(\mathbb {H}^{2|2}\)-model is a supersymmetric lattice spin model, originally introduced as a toy model for the Anderson transition. On infinite rooted regular trees, the VRJP undergoes a recurrence/transience transition controlled by an inverse temperature parameter \(\beta > 0\). Approaching the critical point from the transient regime, \(\beta \searrow \beta _{\textrm{c}}\), we show that the expected total time spent at the starting vertex diverges as \(\sim \exp (c/\sqrt{\beta - \beta _{\textrm{c}}})\). Moreover, on large finite trees we show that the VRJP exhibits an additional intermediate regime for parameter values \(\beta _{\textrm{c}}< \beta < \beta _{\textrm{c}}^{\textrm{erg}}\). In this regime, despite being transient in infinite volume, the VRJP on finite trees spends an unusually long time at the starting vertex with high probability. We provide analogous results for correlation functions of the \(\mathbb {H}^{2|2}\)-model. Our proofs rely on the application of branching random walk methods to a horospherical marginal of the \(\mathbb {H}^{2|2}\)-model.



1 Introduction and Main Results

1.1 History and introduction

Our work will focus on two distinct but related models: The \(\mathbb {H}^{2|2}\)-model, a lattice spin model which is related to the Anderson transition, and the vertex-reinforced jump process (VRJP), a random walk on graphs which is more likely to jump to vertices on which it has already spent a lot of time.

The \(\mathbb {H}^{2|2}\)-model was initially introduced by Zirnbauer [1] as a toy model for studying the Anderson transition. Formally, it is a lattice spin model taking values in the hyperbolic superplane \(\mathbb {H}^{2|2}\), a supersymmetric analogue of hyperbolic space. Independently, the VRJP was introduced by Davis and Volkov [2] as a natural example of a reinforced (and consequently non-Markovian) continuous-time random walk. Somewhat surprisingly, Sabot and Tarrès [3] observed that these two models are intimately related. Namely, the time the VRJP asymptotically spends on vertices can be expressed in terms of the \(\mathbb {H}^{2|2}\)-model. This has been used to see the VRJP as a random walk in random environment, with the environment being given by the \(\mathbb {H}^{2|2}\)-model. Furthermore, the two models are linked by a Dynkin-type isomorphism theorem due to Bauerschmidt, Helmuth and Swan [4, 5], analogous to the connection between simple random walk and the Gaussian free field [6].

Both models are parametrised by an inverse temperature \(\beta > 0\) and, depending on the background geometry of the graph under consideration, may exhibit a phase transition at some critical parameter \(\beta _{\textrm{c}} \in \left( 0,\infty \right] \). For the \(\mathbb {H}^{2|2}\)-model the expected transition is between a disordered high-temperature phase (\(\beta < \beta _{\textrm{c}}\)) and a symmetry-broken low-temperature phase (\(\beta > \beta _{\textrm{c}}\)) exhibiting long-range order. For the VRJP the transition is between a recurrent phase due to strong reinforcement effects and a transient phase due to weak reinforcement effects.

On \(\mathbb {Z}^{D}\) a fair bit is known about the phase diagram of the two models. In dimension \(D\le 2\) both models are never delocalised (i.e. they are always disordered and recurrent, respectively) [2,3,4, 7,8,9]. In dimensions \(D\ge 3\), however, they exhibit a phase transition from a localised to a delocalised phase at a unique \(\beta _{\textrm{c}} \in (0,\infty )\) [3, 8, 10,11,12,13,14].

In this article we consider both models on the geometry of a rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\) with \(d\ge 2\) (see Fig. 1). For the VRJP this setting was previously explored by various authors [15,16,17,18,19]. In particular, Basdevant and Singh [17] showed that the VRJP on Galton–Watson trees with mean offspring \(m>1\) has a phase transition from recurrence to transience at some explicitly characterised \(\beta _{\textrm{c}} \in (0,\infty )\). For simplicity, we focus on the “deterministic case”, but our results should translate to Galton–Watson trees as well (up to some technical restrictions on the offspring distribution).

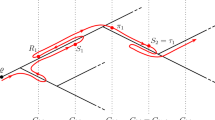

The main goal of this work is to provide new information on the supercritical phase (\(\beta > \beta _{\textrm{c}}\)), including the near-critical regime. Roughly speaking, we show that on the infinite rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\) the order parameters of the VRJP and the \(\mathbb {H}^{2|2}\)-model diverge as \(\exp (c/\sqrt{\beta -\beta _{\textrm{c}}})\) as one approaches the critical point from the supercritical regime, \(\beta \searrow \beta _{\textrm{c}}\) (see Theorems 1.2 and 1.5, respectively). Such behaviour has previously been predicted by Zirnbauer for Efetov’s model [20]. This “infinite-order” behaviour at the critical point is rather surprising, as it conflicts with the usual scaling hypotheses of statistical mechanics, which predict algebraic singularities as one approaches a critical point. Moreover, we show that on finite rooted \((d+1)\)-regular trees, the VRJP and the \(\mathbb {H}^{2|2}\)-model exhibit an additional multifractal intermediate regime for \(\beta \in (\beta _{\textrm{c}}, \beta _{\textrm{c}}^{\textrm{erg}})\) (see Theorems 1.3, 1.4 and 1.6). An illustration of some of our results for the VRJP is given in Fig. 2.

Fig. 2: Sketch of the phase diagram for the VRJP on \(\mathbb {T}_{d}\) with \(d\ge 2\). The recurrence/transience transition at \(\beta _{\textrm{c}}\) is phrased in terms of \(\mathbb {E}[L^{0}_{\infty }]\), i.e. the expected total time the walk (on the infinite rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\)) spends at the starting vertex. In this article, we obtain precise asymptotics for \(\mathbb {E}[L^{0}_{\infty }]\) as \(\beta \searrow \beta _{\textrm{c}}\). Moreover, we show that there is an additional transition point \(\beta _{\textrm{c}}^{\textrm{erg}} > \beta _{\textrm{c}}\). It is phrased in terms of how the fraction of time \(\lim _{t\rightarrow \infty } L^{0}_{t}/t\) that the VRJP on the finite tree \(\mathbb {T}_{d,n}\) spends at the origin scales with the volume. Here, the symbol “\(\sim \)” is understood loosely, and we refer to the text for precise error terms.

Connection to the Anderson Transition and Efetov’s Model. Inspiration for our work originates from predictions in the physics literature on Efetov’s model [20,21,22,23,24,25]. The latter is a supersymmetric lattice sigma model that is considered to capture the Anderson transition [26, 27]. To be more precise, Efetov’s model can be derived from a granular limit (similar to a Griffiths–Simon construction [28]) of the random band matrix model, followed by a sigma model approximation [29, 30]. The connection to our work is due to Zirnbauer, who introduced the \(\mathbb {H}^{2|2}\)-model as a simplification of Efetov’s model [1]. Namely, in Efetov’s model spins take values in the symmetric superspace \(\textrm{U}(1,1|2)/[\textrm{U}(1|1)\otimes \textrm{U}(1|1)]\). According to Zirnbauer, the essential features of this target space are its hyperbolic symmetry and its supersymmetry. In this sense, \(\mathbb {H}^{2|2}\) is the simplest target space with these two properties. The study of the \(\mathbb {H}^{2|2}\)-model may guide the analysis of supersymmetric field theories more closely related to the Anderson transition.

Moreover, the \(\mathbb {H}^{2|2}\)-model and the VRJP are directly and rigorously related to an Anderson-type model, which we refer to as the STZ-Anderson model (see Definition 1.8). This fact was already hinted at by Disertori, Spencer and Zirnbauer [10], but only fully appreciated by Sabot, Tarrès and Zeng [31, 32], who exploited the relationship to gain new insights on the VRJP. It is an interesting open problem to better understand the spectral properties of this model and how it relates to the VRJP and the \(\mathbb {H}^{2|2}\)-model.

Notably, the phase diagram of the \(\mathbb {H}^{2|2}\)-model is better understood than that of Efetov’s model or the Anderson model on a lattice. For example, for the \(\mathbb {H}^{2|2}\)-model there is proven absence of long-range order in 2D [4] as well as proven existence of a phase transition in 3D [10, 11]. For the Anderson model on \(\mathbb {Z}^{D}\), the existence of a phase transition in \(D\ge 3\) and the absence of one in \(D=2\) are arguably among the most prominent open problems in mathematical physics. A good example of the Anderson model’s intricacies is given by the work of Aizenman and Warzel [33, 34]: although the model on the regular tree had been studied extensively before, they were the first to obtain a reasonably complete understanding of its spectral properties in that setting. However, many questions remain open; in particular, there are no rigorous results on the Anderson model’s (near-)critical behaviour. In this sense one might (somewhat generously) interpret this article as a step towards a better understanding of the near-critical behaviour of a model in the “Anderson universality class”.

We would also like to comment on the methods used in the physics literature on Efetov’s model. The analysis of the model on a regular tree, initiated by Efetov and Zirnbauer [20, 21], relies on a recursion/consistency relation that is specific to the tree setting. Using this approach, Zirnbauer predicted the divergence of the order parameter (relevant for the symmetry-breaking transition of Efetov’s model) for \(\beta \searrow \beta _{\textrm{c}}\). We should mention that Mirlin and Gruzberg [35] argued that this analysis should essentially carry through for the \(\mathbb {H}^{2|2}\)-model. In our case, we take a different path, exploiting a branching random walk structure in the “horospherical marginal” of the \(\mathbb {H}^{2|2}\)-model (the t-field).

After completion of this work, we were made aware by Martin Zirnbauer of recent numerical investigations of the Anderson transition on random tree-like graphs [36, 37]. The observed scaling behaviour near the transition point might suggest the need for a field-theoretic description beyond the supersymmetric approach of Efetov (also see [38, 39]). At this point, there does not seem to be a consensus on the theoretical description of near-critical scaling for the Anderson transition on tree-like graphs, and rigorous results would be of great value.

Notation: In multi-line estimates, we occasionally use “running constants” \(c,C > 0\) whose precise value may vary from line to line. We denote by \([n] = \{1,\ldots ,n\}\) the set of positive integers up to n. For a graph \(G = (V,E)\) an unoriented edge \(\{x,y\} \in E\) will be denoted by the juxtaposition xy, whereas an oriented edge is denoted by a tuple (x, y), which is oriented from x to y. Write \(\vec {E}\) for the set of oriented edges. For a vertex x in a rooted tree (or a particle of a branching random walk), we denote its generation (i.e. distance from the origin) by \(\left| x\right| \). We use the short-hand \(\sum _{\left| x\right| = n}\ldots \) to denote summation over all vertices/particles at generation n. Variants of this convention will be used and the meaning should be clear from context. When our results concern the \((d+1)\)-regular rooted tree \(\mathbb {T}_{d}\), we assume \(d\ge 2\) and will typically suppress the d-dependence of all involved constants, unless specified otherwise. Mentions of \(\beta _{\textrm{c}}\) implicitly refer to the critical parameter \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(d)\) as given by Proposition 2.14.
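For orientation, the value of \(\beta _{\textrm{c}}(d)\) can be computed numerically. The following Python sketch is illustrative only: it assumes the characterisation known from the literature on the VRJP on trees, namely that \(\beta _{\textrm{c}}(d)\) is the unique \(\beta > 0\) with \(d\sqrt{\beta /(2\pi )}\int _{\mathbb {R}} e^{-\beta (\cosh s - 1)}\,\text {d}{s} = 1\); this assumption should be checked against Proposition 2.14, which is not reproduced here. The function names, the trapezoidal quadrature and the bisection bracket are hypothetical choices made for this example.

```python
import math


def edge_integral(beta, num=2000):
    """Trapezoidal approximation of the integral of exp(-beta (cosh s - 1))
    over the real line; outside [-cut, cut] the integrand is below e^-50."""
    cut = math.acosh(1.0 + 50.0 / beta)
    h = 2.0 * cut / num
    total = 0.0
    for k in range(num + 1):
        s = -cut + k * h
        weight = 0.5 if k in (0, num) else 1.0
        total += weight * math.exp(-beta * (math.cosh(s) - 1.0))
    return total * h


def criticality_gap(beta, d):
    """d * sqrt(beta / (2 pi)) * integral - 1 (assumed criterion for beta_c)."""
    return d * math.sqrt(beta / (2.0 * math.pi)) * edge_integral(beta) - 1.0


def beta_c(d, lo=1e-6, hi=50.0, tol=1e-9):
    """Bisection for the zero of criticality_gap, assumed unique on [lo, hi]."""
    if not criticality_gap(lo, d) < 0.0 < criticality_gap(hi, d):
        raise ValueError("bracket does not contain a sign change")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if criticality_gap(mid, d) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for d in (2, 3, 4):
    print(d, beta_c(d))
```

Since \(\int _{\mathbb {R}} e^{-\beta \cosh s}\,\text {d}{s} = 2K_{0}(\beta )\), the same criterion can be written as \(d\sqrt{2\beta /\pi }\,e^{\beta }K_{0}(\beta ) = 1\), so a modified Bessel function routine could replace the quadrature.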

1.2 Model definitions and results

In this section, we define the VRJP, the \(\mathbb {H}^{2|2}\)-model, the t-field and the STZ-Anderson model. We are aware that spin systems with fermionic degrees of freedom, such as the \(\mathbb {H}^{2|2}\)-model, might be foreign to some readers. However, understanding this model is not necessary for the main results on the VRJP, and the reader may safely skip references to the \(\mathbb {H}^{2|2}\)-model on a first reading. We also note that all models that we introduce are intimately related (as illustrated in Fig. 3) and Sect. 2 will illuminate some of these connections.

1.2.1 Vertex-Reinforced jump process

Definition 1.1

Let \(G = (V,E)\) be a locally finite graph equipped with positive edge-weights \((\beta _e)_{e\in E}\), and a starting vertex \(i_0\in V\). The VRJP \((X_t)_{t\ge 0}\) starting at \(X_{0} = i_0\) is the continuous-time jump process that at time t jumps from a vertex \(X_{t} = x\) to a neighbour y at rate

\[ \beta _{xy}\,(1 + L_{t}^{y}), \qquad \text{where } L_{t}^{y} = \int _{0}^{t}\mathbbm {1}\{X_{s} = y\}\,\text {d}{s}. \tag{1.1} \]

We refer to \(L_{t}^{y}\) as the local time at y up to time t.

Unless specified otherwise, the VRJP on a graph G refers to the case of constant weights \(\beta _{e} \equiv \beta \), and the dependence on the weight \(\beta \) is indicated by a subscript, as in \(\mathbb {E}_{\beta }\) or \(\mathbb {P}_{\beta }\). By a slight abuse of language, we refer to \(\beta \) as an inverse temperature.
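To make the dynamics concrete, the following Python sketch simulates the VRJP with constant weight \(\beta \) on a finite graph. It assumes the standard linearly reinforced rate \(\beta (1 + L_{t}^{y})\) for a jump from x to a neighbour y, where \(L_{t}^{y}\) is the time spent at y up to time t. Since \(L_{t}^{y}\) does not change while the walk sits at \(x \ne y\), the rates are constant during each sojourn, so the process can be simulated exactly with exponential holding times. The helpers `rooted_tree` and `simulate_vrjp` are hypothetical names introduced for illustration, and `rooted_tree` assumes that every non-leaf vertex has d children as a stand-in for \(\mathbb {T}_{d,n}\). This is a simulation aid, not a method used in this article.

```python
import random


def rooted_tree(d, n):
    """Adjacency lists of a rooted tree of depth n in which every non-leaf
    vertex has d children (interior vertices then have degree d + 1).
    Vertices are labelled 0, 1, 2, ... with the root labelled 0."""
    adj = {0: []}
    frontier, next_id = [0], 1
    for _ in range(n):
        new_frontier = []
        for v in frontier:
            for _ in range(d):
                adj[v].append(next_id)
                adj[next_id] = [v]
                new_frontier.append(next_id)
                next_id += 1
        frontier = new_frontier
    return adj


def simulate_vrjp(adj, beta, start, t_max, rng):
    """Run the VRJP with constant weight beta up to time t_max and return
    the local times L[v] = time spent at v during [0, t_max]."""
    local_time = {v: 0.0 for v in adj}
    x, t = start, 0.0
    while True:
        # While the walk sits at x, the local times of its neighbours are
        # frozen, so these jump rates stay constant until the next jump.
        rates = [beta * (1.0 + local_time[y]) for y in adj[x]]
        hold = rng.expovariate(sum(rates))
        remaining = t_max - t
        if hold >= remaining:
            local_time[x] += remaining  # the final sojourn is cut off at t_max
            return local_time
        local_time[x] += hold
        t += hold
        x = rng.choices(adj[x], weights=rates)[0]


# Crude Monte Carlo estimate of the fraction of time spent at the root.
rng = random.Random(1)
tree = rooted_tree(2, 4)
t_max = 200.0
L = simulate_vrjp(tree, beta=1.0, start=0, t_max=t_max, rng=rng)
print("fraction of time at the root:", L[0] / t_max)
```

A single run at finite `t_max` only gives a rough finite-time proxy for the limit \(\lim _{t\rightarrow \infty } L^{0}_{t}/t\) studied below; it carries no rigorous information about its volume scaling.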

Results for the VRJP. Figure 2 gives a rough picture of our statements for the VRJP; we now state the precise results.

In the following, \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(d)\) will denote the critical inverse temperature for the recurrence/transience transition of the VRJP on the infinite rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\) with \(d\ge 2\). By Basdevant and Singh [17] this inverse temperature is well-defined and finite: \(\beta _{\textrm{c}} \in (0,\infty )\) (cf. Proposition 2.14). Alternatively, \(\beta _{\textrm{c}}\) is characterised in terms of divergence of the expected total local time at the origin: \(\beta _{\textrm{c}} = \inf \{\beta > 0: \mathbb {E}_{\beta }[L^{0}_{\infty }] < \infty \}\). The following theorem provides information about the divergence of \(\mathbb {E}_{\beta }[L^{0}_{\infty }]\) as we approach the critical point from the transient regime.

Theorem 1.2

(Local-Time Asymptotics as \(\beta \searrow \beta _{\textrm{c}}\) for the VRJP on \(\mathbb {T}_{d}\)). Consider the VRJP, started at the root 0 of the infinite rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\) with \(d\ge 2\). Let \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(d) \in (0,\infty )\) be as in Proposition 2.14. Let \(L^{0}_{\infty } = \lim _{t\rightarrow \infty }L^{0}_{t}\) denote the total time the VRJP spends at the root. There are constants \(c,C>0\) such that for sufficiently small \(\epsilon > 0\):

The above result concerned the infinite rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\). On a finite rooted \((d+1)\)-regular tree \(\mathbb {T}_{d,n}\) the total local time at the origin always diverges, but we may consider the fraction of time the walk spends at the starting vertex. In terms of this quantity we can identify both the recurrence/transience transition point \(\beta _{\textrm{c}}\) as well as an additional intermediate phase inside the transient regime.

Theorem 1.3

(Intermediate Phase for VRJP on Finite Trees). Consider the VRJP started at the root of the rooted \((d+1)\)-regular tree of depth n, \(\mathbb {T}_{d,n}\), with \(d\ge 2\). Let \(L_{t}^{0}\) denote the total time the walk spent at the root up until time t. We have

with \(\beta \mapsto \nu (\beta )\) continuous and non-decreasing such that

for some \(\beta _{\textrm{c}}^{\textrm{erg}} = \beta _{\textrm{c}}^{\textrm{erg}}(d) > \beta _{\textrm{c}}\). More precisely, we have

with \(\psi _{\beta }(\eta )\) given in (3.7).

Moreover, in the intermediate phase the inverse fraction of time at the origin shows a multifractal scaling behaviour:

Theorem 1.4

(Multifractality in the Intermediate Phase). Consider the setup of Theorem 1.3 and suppose \(\beta \in (\beta _{\textrm{c}}, \beta _{\textrm{c}}^{\textrm{erg}})\). For \(\eta \in (0,1)\) we have

where

where \(\psi _{\beta }\) is given in (3.7) and \(\eta _{\beta } = \textrm{argmin}_{\eta >0} \psi _{\beta }(\eta )/\eta \in (0,1)\).

1.2.2 The \(\mathbb {H}^{2|2}\)-model

Definition of the \(\mathbb {H}^{2|2}\)-Model. We start by writing down the formal expressions defining the \(\mathbb {H}^{2|2}\)-model and then make sense of them afterwards. Conceptually, we think of the hyperbolic superplane \(\mathbb {H}^{2|2}\) as the set of vectors \(\textbf{u} = (z,x,y,\xi ,\eta )\), satisfying

Here, z, x, y are even/bosonic coordinates and \(\xi ,\eta \) are odd/fermionic, a notion that will be explained shortly. For two vectors \(\textbf{u}_{i} = (z_{i},x_{i},y_{i},\xi _{i},\eta _{i})\) and \(\textbf{u}_{j} = (z_{j},x_{j},y_{j},\xi _{j},\eta _{j})\), we define the inner product

In other words, this pairing is of hyperbolic type in the even variables and of symplectic type in the odd variables.

Consider a finite graph \(G = (V,E)\) with non-negative edge weights \((\beta _{e})_{e \in E}\) and magnetic field \(h > 0\). Morally, we think of the \(\mathbb {H}^{2|2}\)-model on G as a probability measure on spin configurations \(\underline{\textbf{u}} = (\textbf{u}_{i})_{i\in V} \in (\mathbb {H}^{2|2})^{V}\), such that the formal expectation of a functional \(F \in C^{\infty }((\mathbb {H}^{2|2})^{V})\) is given by

with \(\text {d}{\textbf{u}}\) denoting the Haar measure over \(\mathbb {H}^{2|2}\). In other words, formally everything is analogous to the definition of spin/sigma models with “usual” target spaces, such as spheres \(S^{n}\) or hyperbolic spaces \(\mathbb {H}^{n}\). The only subtlety is that we still need to understand what a functional such as \(F \in C^{\infty }((\mathbb {H}^{2|2})^{V})\) means and how to interpret the integral above.

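Rigorously, the space \(\mathbb {H}^{2|2}\) is not understood as a set of points, but rather is defined in a dual sense by directly specifying its set of smooth functions to be

\[ C^{\infty }(\mathbb {H}^{2|2}) := C^{\infty }(\mathbb {R}^{2}) \otimes \Lambda (\xi ,\eta ). \tag{1.11} \]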

In other words, this is the exterior algebra in two generators with coefficients in \(C^{\infty }(\mathbb {R}^{2})\) (which is the same as \(C^{\infty }(\mathbb {R}^{2|2})\), analogous to the fact that \(\mathbb {H}^{2} \cong \mathbb {R}^{2}\) as smooth manifolds). Note that this set naturally carries the structure of a graded-commutative algebra. More concretely, any superfunction \(f\in C^{\infty }(\mathbb {H}^{2|2})\) can be written as

\[ f = f_{0} + f_{\xi }\,\xi + f_{\eta }\,\eta + f_{\xi \eta }\,\xi \eta \tag{1.12} \]

with smooth functions \(f_{0},f_{\xi },f_{\eta },f_{\xi \eta } \in C^{\infty }(\mathbb {R}^{2})\) and \(\xi ,\eta \) generating a Grassmann algebra, i.e. they satisfy the algebraic relations \(\xi \eta = -\eta \xi \) and \(\xi ^{2} = \eta ^{2} = 0\). We think of such f as a smooth function in the variables \(x,y,\xi ,\eta \) and write \(f = f(x,y,\xi ,\eta )\). In particular, the coordinate functions \(x,y,\xi ,\eta \) are themselves superfunctions. In light of (1.8), we define the z-coordinate to be the (even) superfunction

\[ z = \sqrt{1 + x^{2} + y^{2} - 2\xi \eta }. \tag{1.13} \]

In this sense the coordinate vector \(\textbf{u} = (z,x,y,\xi ,\eta )\) satisfies \(\textbf{u}\cdot \textbf{u} = -1\). By abuse of notation we write \(\textbf{u} \in \mathbb {H}^{2|2}\), but more correctly one might say that \(\textbf{u}\) parametrises \(\mathbb {H}^{2|2}\). For a superfunction \(f \in C^{\infty }(\mathbb {H}^{2|2})\) we write \(f(\textbf{u}) = f(x,y,\xi ,\eta ) = f\) and in line with physics terminology we might say that f is a function of the even/bosonic variables z, x, y and the odd/fermionic variables \(\xi ,\eta \).

The definition of z in (1.13) shows a particular example of a more general principle: The composition of an ordinary function (the square root in the example) with a superfunction (in the example that is \(1 + x^{2} + y^{2} - 2\xi \eta \)) is defined by formal Taylor expansion in the Grassmann variables. Due to nilpotency of the Grassmann variables this is well-defined.
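The composition rule can be illustrated numerically. The Python sketch below (the class `Super2` and the helper `compose` are hypothetical names introduced here) represents a superfunction of one copy of \(\mathbb {H}^{2|2}\) by its four coefficients in \(1, \xi , \eta , \xi \eta \), frozen at a single point (x, y), implements the graded-commutative product, and composes the square root with \(1 + x^{2} + y^{2} - 2\xi \eta \). It only illustrates the algebra; it is not a general-purpose implementation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Super2:
    """The superfunction f0 + fxi*xi + feta*eta + fxieta*xi*eta, with its
    coefficient functions evaluated at one fixed point (x, y)."""
    f0: float
    fxi: float = 0.0
    feta: float = 0.0
    fxieta: float = 0.0

    def __add__(self, other):
        return Super2(self.f0 + other.f0, self.fxi + other.fxi,
                      self.feta + other.feta, self.fxieta + other.fxieta)

    def __mul__(self, other):
        a, b = self, other
        return Super2(
            a.f0 * b.f0,
            a.f0 * b.fxi + a.fxi * b.f0,
            a.f0 * b.feta + a.feta * b.f0,
            # xi*eta contributes +1, eta*xi = -xi*eta contributes -1,
            # and xi*xi = eta*eta = 0 drop out.
            a.f0 * b.fxieta + a.fxieta * b.f0 + a.fxi * b.feta - a.feta * b.fxi,
        )


def compose(f, df, s):
    """Compose an ordinary smooth function f (derivative df) with s by Taylor
    expansion around s.f0. The nilpotent part n = s - s.f0 satisfies
    n * n = 0 when there are only two generators, so the first-order
    expansion f(s0) + f'(s0) * n is exact here."""
    n = Super2(0.0, s.fxi, s.feta, s.fxieta)
    return Super2(f(s.f0)) + Super2(df(s.f0)) * n


x, y = 0.3, -1.2
a = 1.0 + x**2 + y**2
s = Super2(a, fxieta=-2.0)  # 1 + x^2 + y^2 - 2 xi eta
z = compose(math.sqrt, lambda u: 0.5 / math.sqrt(u), s)
print(z)      # z = sqrt(a) - xi*eta / sqrt(a)
print(z * z)  # recovers 1 + x^2 + y^2 - 2 xi eta up to rounding
```

With more Grassmann generators (as in the multivariate setting) the nilpotent part no longer squares to zero, and higher-order Taylor terms have to be kept until they vanish.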

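Next we would like to introduce a notion of integrating a superfunction \(f(\textbf{u})\) over \(\mathbb {H}^{2|2}\). Expressing f as in (1.12), we define the derivations \(\partial _{\xi }, \partial _{\eta }\) acting via

\[ \partial _{\xi } f = f_{\xi } + f_{\xi \eta }\,\eta , \qquad \partial _{\eta } f = f_{\eta } - f_{\xi \eta }\,\xi . \tag{1.14} \]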

In particular, note that these derivations are odd: they anticommute, \(\partial _{\xi }\partial _{\eta } = -\partial _{\eta }\partial _{\xi }\), and satisfy a graded Leibniz rule. The \(\mathbb {H}^{2|2}\)-integral of \(f \in C^{\infty }(\mathbb {H}^{2|2})\) is then defined to be the linear functional

The factor \(\tfrac{1}{z}\) plays the role of a \(\mathbb {H}^{2|2}\)-volume element in the coordinates \(x,y,\xi ,\eta \). Note that this integral evaluates to a real number.
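As a small worked example of these conventions, take left derivatives, so that \(\partial _{\xi }(\xi \eta ) = \eta \) and, since \(\xi \eta = -\eta \xi \), \(\partial _{\eta }(\xi \eta ) = -\xi \) (this choice of convention is our assumption for the example). One can then check the anticommutation of the odd derivations and extract the top coefficient of the volume element. Writing \(a = 1 + x^{2} + y^{2}\) and expanding \(1/z = (a - 2\xi \eta )^{-1/2}\) by the Taylor rule for composing ordinary functions with superfunctions:

```latex
\[
\partial_{\xi}\partial_{\eta}(\xi\eta) = \partial_{\xi}(-\xi) = -1,
\qquad
\partial_{\eta}\partial_{\xi}(\xi\eta) = \partial_{\eta}(\eta) = 1,
\]
\[
\frac{1}{z} = (a - 2\xi\eta)^{-1/2} = a^{-1/2} + a^{-3/2}\,\xi\eta,
\qquad
\partial_{\eta}\partial_{\xi}\Big(\frac{1}{z}\Big) = (1 + x^{2} + y^{2})^{-3/2}.
\]
```

Thus, up to the sign and normalising constant fixed in the definition of the \(\mathbb {H}^{2|2}\)-integral, the integral of the constant function 1 reduces to the finite quantity \(\int _{\mathbb {R}^{2}} (1+x^{2}+y^{2})^{-3/2}\,\text {d}{x}\,\text {d}{y} = 2\pi \).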

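In a final step to formalise (1.10) we define multivariate superfunctions over \((\mathbb {H}^{2|2})^{V}\),

\[ C^{\infty }((\mathbb {H}^{2|2})^{V}) := C^{\infty }(\mathbb {R}^{2\left| V\right| }) \otimes \Lambda (\{\xi _{i},\eta _{i}\}_{i\in V}), \tag{1.16} \]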

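that is the Grassmann algebra in \(2\left| V\right| \) generators \(\{\xi _{i}, \eta _{i}\}_{i\in V}\) with coefficients in \(C^{\infty }(\mathbb {R}^{2\left| V\right| })\). An element of this algebra is considered a functional over spin configurations \(\underline{\textbf{u}} = \{\textbf{u}_{i}\}_{i\in V}\) and we write \(F = F(\underline{\textbf{u}})\). Any superfunction \(F \in C^{\infty }((\mathbb {H}^{2|2})^{V})\) can be expressed, analogously to (1.12), as

\[ F = \sum _{I,J\subseteq V} F_{I,J}\, \prod _{i\in I}\xi _{i} \prod _{j\in J}\eta _{j}, \qquad F_{I,J} \in C^{\infty }(\mathbb {R}^{2\left| V\right| }), \tag{1.17} \]

where the products of Grassmann generators are taken in a fixed order.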

The integral of such F over \((\mathbb {H}^{2|2})^{V}\) is defined as

With this notion of integration, the definition of the \(\mathbb {H}^{2|2}\)-model in (1.10) can be understood in a rigorous sense: The “Gibbs factor” is the composition of a regular function (exponential) with a superfunction (the exponent). As such it is defined by expansion in the Grassmann variables.

Results for the \(\mathbb {H}^{2|2}\)-Model. In the following we rephrase the above theorems in terms of the \(\mathbb {H}^{2|2}\)-model.

Theorem 1.5

(Asymptotics as \(\beta \searrow \beta _{\textrm{c}}\) for the \(\mathbb {H}^{2|2}\)-model on \(\mathbb {T}_{d}\)). Consider the \(\mathbb {H}^{2|2}\)-model on \(\mathbb {T}_{d,n}\). Suppose \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(d) \in (0,\infty )\) is as in Proposition 2.14. The quantity

is well-defined and finite for any \(\epsilon > 0\). There exist constants \(c,C > 0\) such that for sufficiently small \(\epsilon > 0\)

The above statement concerns the infinite-volume limit, i.e. taking \(n\rightarrow \infty \) before removing the magnetic field \(h \searrow 0\). One may also consider a finite-volume limit (also referred to as the inverse-order thermodynamic limit [40]): in that case, we consider scaling limits of observables as \(h\searrow 0\) before taking \(n\rightarrow \infty \). In this limit, we also demonstrate an intermediate multifractal regime for the \(\mathbb {H}^{2|2}\)-model.

Theorem 1.6

(Intermediate Phase for the \(\mathbb {H}^{2|2}\)-Model on \(\mathbb {T}_{d,n}\)). There exist \(0< \beta _{\textrm{c}}< \beta _{\textrm{c}}^{\textrm{erg}} < \infty \) as in Theorem 1.3, such that for \(\beta _{\textrm{c}}< \beta < \beta _{\textrm{c}}^{\textrm{erg}}\) we have for \(\eta \in (0,1)\)

with \(\tau _{\beta }(\eta )\) as given in (1.7).

At first glance, the observable in (1.21) might seem somewhat obscure. However, in the physics literature on Efetov’s model and the Anderson transition, analogous quantities are predicted to encode disorder-averaged (fractional) moments of eigenstates at a given vertex and energy level, see for example [25, Equation (6)]. The volume-scaling of these quantities provides information about the (de)localisation behaviour of the eigenstates.

1.2.3 The t-field

Despite the inconspicuous name, the t-field is the most relevant object for our analysis. It is directly related to both the VRJP, encoding the time the VRJP asymptotically spends on each vertex, as well as the \(\mathbb {H}^{2|2}\)-model, arising as a marginal in horospherical coordinates (see Sect. 2 for details).

Definition 1.7

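(t-field Distribution). Consider a finite graph \(G = (V,E)\), a vertex \(i_{0} \in V\) and non-negative edge-weights \((\beta _e)_{e\in E}\). The law of the t-field, with weights \((\beta _e)_{e\in E}\), pinned at \(i_{0}\), is a probability measure on configurations \(\textbf{t} = \{t_{i}\}_{i\in V} \in \mathbb {R}^{V}\) given by

\[ \mathcal {Q}_{\beta }^{(i_{0})}(\text {d}{\textbf{t}}) = \prod _{ij\in E} e^{-\beta _{ij}(\cosh (t_{i}-t_{j})-1)}\, \sqrt{D_{\beta }(\textbf{t})}\; \delta _{0}(\text {d}{t_{i_{0}}}) \prod _{i\in V\setminus \{i_{0}\}} \frac{\text {d}{t_{i}}}{\sqrt{2\pi }}, \tag{1.22} \]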

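with the determinantal term

\[ D_{\beta }(\textbf{t}) = \sum _{T\in {\mathcal {T}}^{(i_{0})}} \prod _{(i,j)\in T} \beta _{ij}\, e^{t_{i}-t_{j}}, \tag{1.23} \]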

where \({\mathcal {T}}^{(i_{0})}\) is the set of spanning trees in G oriented away from \(i_{0}\).

Alternatively, one can write \(D_{\beta }(\textbf{t}) = \prod _{i\in V\setminus \{i_{0}\}} e^{-2t_{i}} \det _{i_{0}}(-\Delta _{\beta (\textbf{t})})\), where \(\det _{i_{0}}\) denotes the principal minor with respect to \(i_{0}\) and \(-\Delta _{\beta (\textbf{t})}\) is the discrete Laplacian for edge-weights \(\beta (\textbf{t}) = (\beta _{ij}e^{t_{i}+t_{j}})_{ij}\).
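The equality of the spanning-tree sum and the principal-minor expression is the weighted matrix-tree theorem, combined with the observation that in a spanning tree oriented away from \(i_{0}\) every vertex \(i \ne i_{0}\) is the head of exactly one edge, which absorbs the factor \(e^{-2t_{i}}\). The Python sketch below checks this numerically on a small graph containing a cycle; the function names and the example weights are hypothetical.

```python
import itertools
import numpy as np


def principal_minor_form(t, beta, i0):
    """prod_{i != i0} e^{-2 t_i} * det_{i0}(-Laplacian with weights beta_ij e^{t_i + t_j})."""
    n = len(t)
    M = np.zeros((n, n))
    for (i, j), b in beta.items():
        w = b * np.exp(t[i] + t[j])
        M[i, i] += w
        M[j, j] += w
        M[i, j] -= w
        M[j, i] -= w
    keep = [k for k in range(n) if k != i0]
    return np.exp(-2.0 * t[keep].sum()) * np.linalg.det(M[np.ix_(keep, keep)])


def spanning_tree_form(t, beta, i0):
    """Sum over spanning trees oriented away from i0 of prod beta_ij e^{t_i - t_j}."""
    n = len(t)
    total = 0.0
    for subset in itertools.combinations(beta, n - 1):
        nbrs = {v: [] for v in range(n)}
        for i, j in subset:
            nbrs[i].append(j)
            nbrs[j].append(i)
        # Breadth-first search from i0 records each vertex's parent, which
        # orients the chosen edges away from i0.
        parent, queue = {i0: None}, [i0]
        for v in queue:
            for u in nbrs[v]:
                if u not in parent:
                    parent[u] = v
                    queue.append(u)
        if len(parent) < n:  # n - 1 edges reaching every vertex form a spanning tree
            continue
        term = 1.0
        for j, i in parent.items():
            if i is not None:
                b = beta[(i, j)] if (i, j) in beta else beta[(j, i)]
                term *= b * np.exp(t[i] - t[j])
        total += term
    return total


beta = {(0, 1): 0.7, (1, 2): 1.3, (0, 2): 0.5, (2, 3): 2.0}  # triangle plus a pendant edge
t = np.array([0.0, 0.4, -0.3, 1.1])
print(principal_minor_form(t, beta, 0), spanning_tree_form(t, beta, 0))
```

On a tree, `itertools.combinations` yields exactly one edge subset that passes the spanning check, which is the single-summand simplification used below.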

In general the determinantal term renders the law \(\mathcal {Q}_{\beta }^{(i_{0})}\) highly non-local. However, in case the underlying graph G is a tree, only a single summand contributes to (1.23) and the measure factorises in terms of the oriented edge-increments \(\{t_{i}-t_{j}\}_{(i,j)}\). This simplification is essential for this article and gives us the possibility to analyse the t-field on rooted \((d+1)\)-regular trees in terms of a branching random walk.
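On a tree the factorisation can be made explicit. In this case the principal-minor expression gives \(D_{\beta }(\textbf{t}) = \prod _{(i,j)} \beta _{ij} e^{t_{i}-t_{j}}\), with edges oriented away from the root. Assume a t-field density of the usual form \(\prod _{ij} e^{-\beta _{ij}(\cosh (t_{i}-t_{j})-1)}\sqrt{D_{\beta }(\textbf{t})}\prod _{i\ne i_{0}}\text {d}{t_{i}}/\sqrt{2\pi }\); this normalisation is our assumption in this sketch. Then each increment \(s = t_{j}-t_{i}\) has density \(\sqrt{\beta /(2\pi )}\,e^{-\beta (\cosh s - 1) - s/2}\). Substituting \(w = e^{s}\) gives \(\sqrt{\beta /(2\pi w^{3})}\,e^{-\beta (w-1)^{2}/(2w)}\), which is the inverse Gaussian (Wald) law with mean 1 and shape \(\beta \), sampled by NumPy's `Generator.wald`. The Python sketch below uses this to sample the t-field as a branching random walk. The function name is hypothetical, and the tree is assumed to have d children per non-leaf vertex.

```python
import numpy as np


def sample_tree_t_field(d, n, beta, rng):
    """Sample the t-field pinned at the root (t_root = 0) of a rooted tree of
    depth n in which every non-leaf vertex has d children.

    Assumes the increments s = t_child - t_parent are i.i.d. with density
    sqrt(beta / (2 pi)) * exp(-beta (cosh s - 1) - s / 2), i.e. exp(s) is
    inverse Gaussian with mean 1 and shape beta (NumPy's Wald distribution).
    Returns a list whose k-th array holds the t-values of generation k,
    with the children of one parent stored contiguously."""
    generations = [np.zeros(1)]
    for _ in range(n):
        parents = np.repeat(generations[-1], d)     # d children per vertex
        w = rng.wald(1.0, beta, size=parents.size)  # exp(increment)
        generations.append(parents + np.log(w))
    return generations


rng = np.random.default_rng(0)
gens = sample_tree_t_field(d=2, n=16, beta=2.0, rng=rng)
increments = gens[-1] - np.repeat(gens[-2], 2)
print(np.exp(increments).mean())  # close to 1, the mean of the inverse Gaussian law
```

Generation-by-generation sampling keeps memory linear in the number of vertices, which grows like \(d^{n}\).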

1.2.4 STZ-Anderson model

The following introduces a random Schrödinger operator, which is related to the previously introduced models. It will only be required for translating our results on the intermediate phase to the \(\mathbb {H}^{2|2}\)-model (Sect. 5.2), so the reader may skip this definition on a first reading. As Sabot, Tarrès and Zeng [31, 32] were the first to study this system in detail, we refer to it as the STZ-Anderson model.

Definition 1.8

(STZ-Anderson model). Consider a locally finite graph \(G = (V,E)\), equipped with non-negative edge-weights \((\beta _e)_{e\in E}\). For \(B = (B_{i})_{i\in V} \in \mathbb {R}_{+}^{V}\) define the Schrödinger-type operator

\[ (H_{B})_{ij} = \begin{cases} B_{i}, & i = j,\\ -\beta _{ij}, & ij \in E,\\ 0, & \text{otherwise}. \end{cases} \tag{1.24} \]

Define a probability distribution \(\nu _{\beta }\) over configurations \(B = (B_{i})_{i\in V}\) by specifying the Laplace transforms of its finite-dimensional marginals: For any vector \((\lambda _{i})_{i\in V} \in \left[ 0,\infty \right) ^{V}\) with only finitely many non-zero entries, we have

Subject to this distribution, we refer to B as the STZ-field and to \(H_{B}\) as the STZ-Anderson model.

One may note that on finite graphs, the density of \(\nu _{\beta }\) is explicit:

where \(H_{B} > 0\) means that the matrix \(H_{B}\) is positive definite. The definition via (1.25) is convenient, since it allows us to directly consider the infinite-volume limit. We also note that while the density (1.26) seems highly non-local, the Laplace transform in (1.25) only involves values of \(\lambda \) at adjacent vertices and therefore implies 1-dependency of the STZ-field.

In the original literature the STZ-field is denoted by \(\beta \) and referred to as the \(\beta \)-field. In order to be consistent with the statistical physics literature and avoid confusion with the inverse temperature, we introduced this slightly different notation. To be precise, we used this change of notation to also introduce a slightly more convenient normalisation: one has \(B_{i} = 2\beta _{i}\) compared to the normalisation of the \(\beta \)-field \(\{\beta _{i}\}\) used by Sabot, Tarrès and Zeng.
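The support condition \(H_{B} > 0\) of the explicit density can be tested numerically. The Python sketch below assumes that the operator has diagonal entries \(B_{i}\) and off-diagonal entries \(-\beta _{ij}\) for \(ij \in E\), i.e. the Sabot–Tarrès–Zeng form \(2\beta - P^{W}\) rewritten with \(B_{i} = 2\beta _{i}\); the function names are hypothetical.

```python
import numpy as np


def stz_operator(B, edges):
    """Assumed form of H_B: diagonal entries B_i, entry -beta_ij for each edge ij."""
    H = np.diag(np.asarray(B, dtype=float))
    for (i, j), b in edges.items():
        H[i, j] -= b
        H[j, i] -= b
    return H


def is_positive_definite(H):
    """Test H > 0 via a Cholesky factorisation, which exists if and only if
    the symmetric matrix H is positive definite."""
    try:
        np.linalg.cholesky(H)
    except np.linalg.LinAlgError:
        return False
    return True


path = {(0, 1): 1.0, (1, 2): 1.0}  # path on three vertices with beta = 1
print(is_positive_definite(stz_operator([2.0, 2.0, 2.0], path)))  # True: eigenvalues 2 - sqrt(2), 2, 2 + sqrt(2)
print(is_positive_definite(stz_operator([1.0, 1.0, 1.0], path)))  # False: smallest eigenvalue 1 - sqrt(2) < 0
```

A Cholesky attempt is cheaper than a full eigenvalue computation and is the usual numerical test for positive definiteness of a symmetric matrix.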

1.3 Further comments

Comments on Related Work. As noted earlier, the VRJP on tree geometries has already been studied by various authors [15,16,17,18,19]. One notable difference from these works is that we do not consider the more general setting of Galton–Watson trees. While this is mostly to avoid unnecessary notational and technical difficulties, the Galton–Watson setting might be more subtle, due to an “extra” phase transition in the transient phase observed by Chen and Zeng [18]. This phase transition depends on the probability that a vertex of the Galton–Watson tree has precisely one offspring. It is an interesting question how this would interact with our analysis.

In regard to our results, the recent work by Rapenne [19] is of particular interest. He provides precise quantitative information on the (sub-)critical phase \(\beta \le \beta _{\textrm{c}}\). The results are phrased in terms of a certain martingale, associated with the STZ-Anderson model, but they can be formulated in terms of the \(\mathbb {H}^{2|2}\)-model with wired boundary conditions (or analogously the VRJP started from the boundary) on a rooted \((d+1)\)-regular tree of finite depth. In this sense, Rapenne’s article can be considered as complementary to our work.

Another curious connection to our work is given by the Derrida–Retaux model [41,42,43,44,45,46,47,48]. The latter is a toy model for a hierarchical renormalisation procedure related to the depinning transition. It has recently been shown [48] that the free energy of this model may vanish as \(\sim \exp (-c/\sqrt{p - p_{\textrm{c}}})\) when approaching the critical point from the supercritical phase, \(p\searrow p_{\textrm{c}}\). There are further formal similarities between their analysis and the present article. It would be of interest to shed further light on the universality of this type of behaviour.

Debate on Intermediate Phase. We would like to highlight that the presence or absence of such an intermediate phase for the Anderson transition on tree geometries has recently been a topic of debate in the physics literature (see [40, 49] and references therein). In short, the debate concerns the question of whether the intermediate phase only arises due to finite-volume and boundary effects on the tree.

While the presence of a non-ergodic delocalised phase on finite regular trees has been established in recent years [24, 25, 50], it was not clear whether this behaviour persists in the absence of a large “free” boundary. To study this, one can consider systems on large random regular graphs (RRGs), viewed as “trees without boundary” (alternatively, one could consider trees with wired boundary conditions). For the Anderson transition on RRGs, early numerical simulations [23, 51, 52] suggested the existence of an intermediate phase, in conflict with existing theoretical predictions [22, 53,54,55]. Shortly afterwards, it was argued that the discrepancy was due to finite-size effects that vanish at very large system sizes [24, 49, 56], although this does not seem to be the consensus [40, 52].

We should note that Aizenman and Warzel [33, 57] have shown the existence of an energy-regime of “resonant delocalisation” for the Anderson model on regular trees. It would be interesting to understand if/how this phenomenon is related to the intermediate phase discussed here.

In accordance with the physics literature, we refer to the intermediate phase (\(\beta _{\textrm{c}}< \beta < \beta _{\textrm{c}}^{\textrm{erg}}\)) as multifractal as opposed to the ergodic phase (\(\beta > \beta _{\textrm{c}}^{\textrm{erg}}\)).

1.4 Structure of this article

In Sect. 2 we provide details on the connections between the various models and recall previously known results for the VRJP. In particular, we recall that the VRJP can be seen as a random walk in random conductances given in terms of a t-field (referred to as the t-field environment). On the tree, the t-field can be seen as a branching random walk (BRW) and we recall various facts from the BRW literature. In Sect. 3 we apply BRW techniques to establish a statement on effective conductances in random environments given in terms of critical BRWs (Theorem 3.2). With Theorem 3.1 we prove a result on effective conductances in the near-critical t-field environment. We close the section by showing how the result on effective conductances implies Theorem 1.2 on expected local times for the VRJP. In Sect. 4 we continue to use BRW techniques for the t-field to establish Theorem 1.3 on the intermediate phase for the VRJP. We also prove Theorem 1.4 on the multifractality in the intermediate phase. Moreover, we argue that Rapenne’s recent work [19] implies the absence of such an intermediate phase on trees with wired boundary conditions. In Sect. 5 we show how to establish the results for the \(\mathbb {H}^{2|2}\)-model. For the near-critical asymptotics (Theorem 1.5) this is an easy consequence of a Dynkin isomorphism between the \(\mathbb {H}^{2|2}\)-model and the VRJP. For Theorem 1.6 on the intermediate phase, we make use of the STZ-field to connect the observable for the \(\mathbb {H}^{2|2}\)-model with the observable \(\lim _{t\rightarrow \infty } L^{0}_{t}/t\) that we study for the VRJP.

2 Additional Background

2.1 Dynkin isomorphism for the VRJP and the \(\mathbb {H}^{2|2}\)-Model

Analogous to the connection between the Gaussian free field and the (continuous-time) simple random walk, there is a Dynkin-type isomorphism theorem relating correlation functions of the \(\mathbb {H}^{2|2}\)-model with the local time of a VRJP.

Theorem 2.1

([5, Theorem 5.6]). Suppose \(G = (V,E)\) is a finite graph with positive edge-weights \(\{\beta _{ij}\}_{ij\in E}\). Let \(\langle \cdot \rangle _{\beta ,h}\) denote the expectation of the \(\mathbb {H}^{2|2}\)-model and suppose that under \(\mathbb {E}_{i}\), the process \((X_{t})_{t\ge 0}\) denotes a VRJP started from i. Suppose \(g:\mathbb {R}^{V} \rightarrow \mathbb {R}\) is a smooth bounded function. Then, for any \(i,j\in V\)

where \(\textbf{L}_{t} = (L_{t}^{x})_{x\in V}\) denotes the VRJP’s local time field.

This result will be key to deduce Theorem 1.5 from Theorem 1.2.

2.2 VRJP as random walk in a t-field environment

As a continuous-time process, there is some freedom in the time-parametrisation of the VRJP. While the definition in (1.1) (the linearly reinforced timescale) is the “usual” parametrisation, we also make use of the exchangeable timescale VRJP \((\tilde{X}_t)_{t\in \left[ 0,+\infty \right) }\):

Writing \(\tilde{L}_{t}^{x} = \int _{0}^{t}\mathbbm {1}\{\tilde{X}_{s} = x\}\text {d}{s}\), the local times in the two timescales are related by

The above reparametrisation is motivated by the following result of Sabot and Tarrès [3], showing that the VRJP in exchangeable timescale can be seen as a (Markovian) random walk in random conductances given in terms of the t-field.

Theorem 2.2

(VRJP as Random Walk in Random Environment [3]). Consider a finite graph \(G = (V,E)\), a starting vertex \(i_{0} \in V\) and edge-weights \((\beta _e)_{e\in E}\). The exchangeable timescale VRJP, started at \(i_{0}\), equals in law an (annealed) continuous-time Markov jump process, with jump rates from i to j given by
\[ \tfrac{1}{2}\beta _{ij}e^{T_{j} - T_{i}}, \tag{2.4} \]

where \(\textbf{T} = (T_x)_{x\in V}\) are random variables distributed according to the law of the t-field (1.22) pinned at \(i_{0}\).

As a consequence of Theorem 2.2, the t-field can be recovered from the VRJP’s asymptotic local time:

Corollary 2.3

(t-field from Asymptotic Local Time [31]). Consider the setting of Theorem 2.2. Let \((L_{t}^{x})_{x\in V}\) and \((\tilde{L}_{t}^{x})_{x\in V}\) denote the local time field of the VRJP in linearly reinforced and exchangeable timescale, respectively. Then

exist and follow the law \(\mathcal {Q}^{(i_{0})}_{\beta }\) of the t-field in (1.22).

Proof

For the exchangeable timescale, Sabot, Tarrès and Zeng [31, Theorem 2] provide a proof. The statement for the usual (linearly reinforced) VRJP then follows by the time change formula for local times (2.3). \(\square \)

Considering the VRJP as a random walk in random environment enables us to study its local time properties with the tools of random conductance networks. For a t-field \(\textbf{T} = (T_x)_{x\in V}\) pinned at \(i_{0}\), we refer to the collection of random edge weights (or conductances)
\[ \{\beta _{ij}e^{T_{i} + T_{j}}\}_{ij\in E} \tag{2.6} \]

as the t-field environment. This should be thought of as a symmetrised version of the VRJP’s random environment (2.4). A random walk with symmetric jump rates is easier to study, since it is amenable to the methods of conductance networks. The following lemma relates local times in the t-field environment to local times in the environment of the exchangeable timescale VRJP:

Lemma 2.4

Consider the setting of Theorem 2.2. Let \((\tilde{X}_{t})_{t\ge 0}\) and \((Y_{t})_{t\ge 0}\) denote two continuous-time Markov jump processes started from \(i_{0}\) with rates given by (2.4) and (2.6), respectively. We write \(\tilde{L}_{t}^{x}\) and \(l_{t}^{x}\) for their respective local time fields. Let \(B \subseteq V\) and write \(\tilde{\mathcal {T}}_{B}\) and \(\mathcal {T}_{B}\) for the respective hitting times of B. Then
\[ \tilde{L}_{\tilde{\mathcal {T}}_{B}}^{x} {\mathop {=}\limits ^{\tiny \text {law}}} 2e^{2T_{x}}\, l_{\mathcal {T}_{B}}^{x} \tag{2.7} \]

for \(x \in V\). In particular, since \(T_{i_{0}} = 0\), we have \(\tilde{L}_{\tilde{\mathcal {T}}_{B}}^{i_{0}} {\mathop {=}\limits ^{\tiny \text {law}}} 2 l_{\mathcal {T}_{B}}^{i_{0}}\).

Proof

The discrete-time processes associated to \((\tilde{X}_{t})_{t\ge 0}\) and \((Y_{t})_{t\ge 0}\) agree, since their jump probabilities from x to y are both proportional to \(\beta _{xy}e^{T_{y}}\). In particular, they both visit a vertex x the same number of times before hitting B. Every time \(\tilde{X}_{t}\) visits the vertex x, it spends an \(\textrm{Exp}(\sum _{y}\tfrac{1}{2}\beta _{xy}e^{T_{y} - T_{x}})\)-distributed time there, before jumping to another vertex. \(Y_{t}\), on the other hand, spends time distributed as \(\textrm{Exp}(\sum _{y}\beta _{xy}e^{T_{x} + T_{y}}) = \tfrac{1}{2} e^{-2T_{x}} \textrm{Exp}(\sum _{y}\tfrac{1}{2}\beta _{xy}e^{T_{y} - T_{x}})\). This concludes the proof. \(\square \)

2.3 Effective conductance

Our approach to proving Theorem 1.2 will rely on establishing asymptotics for the effective conductance in the t-field environment (Theorem 3.1).

Definition 2.5

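Consider a locally finite graph \(G = (V,E)\) with edge weights (or conductances) \(\{w_{ij}\}_{ij \in E}\). For two disjoint sets \(A,B \subseteq V\), the effective conductance between them is defined as
\[ C^{\textrm{eff}}(A,B) = \inf \Big \{ \sum _{ij\in E} w_{ij} (U(i) - U(j))^{2} \,:\, U :V\rightarrow \mathbb {R},\ U\vert _{A} \equiv 0,\ U\vert _{B} \equiv 1 \Big \}. \tag{2.8} \]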

The variational definition (2.8) makes it easy to deduce monotonicity and boundedness properties:

Lemma 2.6

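Consider the situation of Definition 2.5. Suppose \(S \subseteq E\) is an edge-cutset separating A, B. Then
\[ C^{\textrm{eff}}(A,B) \le \sum _{e\in S} w_{e}. \tag{2.9} \]

Alternatively, suppose \(C \subseteq V\) is a vertex-cutset separating A, B. Then
\[ C^{\textrm{eff}}(A,B) \le C^{\textrm{eff}}(A,C). \tag{2.10} \]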

Proof

For the first statement, consider (2.8) for the function \(U:V \rightarrow \mathbb {R}\) that is constant one on the connected component(s) of B in the graph \((V, E\setminus S)\) and zero elsewhere (in particular, zero on the component(s) of A). Then only edges in S contribute to the sum in (2.8), each by at most \(w_{e}\). For the second statement, note that for any function \(U :V \rightarrow \mathbb {R}\) with \(U\vert _{A} \equiv 0\) and \(U\vert _{C} \equiv 1\) we can define a function \(\tilde{U}\) that agrees with U outside the union K of those connected components of \(V\setminus C\) that intersect B, and is constant equal to one on K. Then \(\tilde{U}\vert _{A} \equiv 0\) and \(\tilde{U}\vert _{B} \equiv 1\). Moreover, every edge with an endpoint in K has its other endpoint in K or in C, so its contribution vanishes for \(\tilde{U}\); hence \(\sum _{ij\in E} w_{ij} (\tilde{U}(i) - \tilde{U}(j))^{2} \le \sum _{ij\in E} w_{ij} (U(i) - U(j))^{2}\). Taking the infimum over such U proves the claim. \(\square \)

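The monotonicity in (2.10) makes it possible to define an effective conductance to infinity. For an increasing exhaustion \(V_{1} \subseteq V_{2} \subseteq \cdots \) of the vertex set \(V = \bigcup _{n}V_{n}\) and a given finite set \(A\subseteq V\), we define the effective conductance from A to infinity by
\[ C^{\textrm{eff}}_{\infty }(A) = \lim _{n\rightarrow \infty } C^{\textrm{eff}}(A, V\setminus V_{n}), \tag{2.11} \]
where the limit exists since, by (2.10), the sequence is non-increasing once \(A \subseteq V_{n}\).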

One may check that this is independent of the choice of exhaustion. For us, the main use of effective conductances stems from their relation to escape times:

Lemma 2.7

Consider a locally finite graph \(G = (V,E)\) with edge weights (or conductances) \(\{w_{ij}\}_{ij \in E}\). Let \(C^{\textrm{eff}}(i_{0},B)\) denote the effective conductance between the singleton \(\{i_{0}\}\) and a disjoint set B. Consider a continuous-time random walk \((X_{t})_{t\ge 0}\) on G, starting at \(X_{0} = i_{0}\) and jumping from \(X_{t} = i\) to j at rate \(w_{ij}\). Let \(L_{\textrm{esc}}(i_{0},B)\) denote the total time the walk spends at \(i_{0}\) before visiting B for the first time. Then \(L_{\textrm{esc}}(i_{0},B)\) is exponentially distributed with mean \(1/C^{\textrm{eff}}(i_{0},B)\).

For an infinite graph G, the above conclusions also hold for B “at infinity”: we let \(L_{\textrm{esc},\infty }(i_{0})\) denote the total time spent at \(i_{0}\) and understand \(C_{\infty }^{\textrm{eff}}(i_{0})\) as in (2.11). Then \(L_{\textrm{esc},\infty }(i_{0})\) is exponentially distributed with mean \(1/C^{\textrm{eff}}_{\infty }(i_{0})\).

Proof

According to [6, Sect. 2.2], the walk’s number of visits to \(i_{0}\) before hitting B is a geometric random variable \(N\sim \textrm{Geo}(p_{\textrm{esc}})\) with the escape probability \(p_{\textrm{esc}} = C^{\textrm{eff}}(i_{0},B)/(\sum _{j\sim i_{0}} w_{i_{0}j})\). Moreover, for the continuous-time process, every time we visit \(i_{0}\) we spend an \(\textrm{Exp}(\sum _{j\sim i_{0}} w_{i_{0}j})\)-distributed time there (rate parametrisation), before jumping to a neighbour. Hence, \(L_{\textrm{esc}}(i_{0},B)\) is distributed as the sum of N independent \(\textrm{Exp}(\sum _{j\sim i_{0}} w_{i_{0}j})\)-distributed random variables, independent of N. A geometric sum of i.i.d. exponential random variables is again exponential, with rate multiplied by the success probability (easily checked via the moment-generating function); here the resulting rate is \(p_{\textrm{esc}}\sum _{j\sim i_{0}} w_{i_{0}j} = C^{\textrm{eff}}(i_{0},B)\), which implies the claim. Note that this argument also holds true for B “at infinity”, in which case \(N\sim \textrm{Geo}(p_{\textrm{esc}})\) with \(p_{\textrm{esc}} = C^{\textrm{eff}}_{\infty }(i_{0})/(\sum _{j\sim i_{0}} w_{i_{0}j})\) simply denotes the total number of visits to \(i_{0}\) (see [6, Sect. 2.2] for more details). \(\square \)
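Lemma 2.7 can be checked on small networks. The following sketch (the helper names `solve`, `neighbours`, `effective_conductance` and `expected_local_time` are our own, hypothetical choices) computes \(C^{\textrm{eff}}(i_{0},B)\) from the harmonic minimiser of the variational problem in Definition 2.5 and, independently, the expected time spent at \(i_{0}\) before hitting B from the Green's-function equations of the jump process, both in exact rational arithmetic. It only verifies the mean \(1/C^{\textrm{eff}}(i_{0},B)\), not the full exponential law.

```python
from fractions import Fraction


def solve(matrix, rhs):
    """Solve matrix @ x = rhs exactly by Gauss-Jordan elimination over Fractions."""
    n = len(matrix)
    a = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [a[i][n] / a[i][i] for i in range(n)]


def neighbours(edges):
    """Adjacency dict {x: {y: w_xy}} from an undirected edge dict {(x, y): w_xy}."""
    adj = {}
    for (x, y), w in edges.items():
        adj.setdefault(x, {})[y] = Fraction(w)
        adj.setdefault(y, {})[x] = Fraction(w)
    return adj


def effective_conductance(edges, i0, B):
    """C^eff(i0, B): energy of the harmonic U with U(i0) = 0 and U = 1 on B."""
    adj = neighbours(edges)
    interior = [x for x in adj if x != i0 and x not in B]
    idx = {x: k for k, x in enumerate(interior)}
    mat = [[Fraction(0)] * len(interior) for _ in interior]
    rhs = [Fraction(0)] * len(interior)
    for x in interior:
        for y, w in adj[x].items():
            mat[idx[x]][idx[x]] += w
            if y in idx:
                mat[idx[x]][idx[y]] -= w
            elif y in B:
                rhs[idx[x]] += w  # boundary value U(y) = 1; U(i0) = 0 adds nothing
    U = solve(mat, rhs)

    def value(y):
        if y in B:
            return Fraction(1)
        return Fraction(0) if y == i0 else U[idx[y]]

    # For the harmonic minimiser the energy equals the current leaving i0.
    return sum(w * value(y) for y, w in adj[i0].items())


def expected_local_time(edges, i0, B):
    """E[time spent at i0 before hitting B] for the walk jumping x -> y at rate w_xy."""
    adj = neighbours(edges)
    states = [x for x in adj if x not in B]
    idx = {x: k for k, x in enumerate(states)}
    mat = [[Fraction(0)] * len(states) for _ in states]
    rhs = [Fraction(1 if x == i0 else 0) for x in states]
    # g(x) = E_x[time at i0 before B] solves pi_x g(x) - sum_{y not in B} w_xy g(y) = 1{x = i0}.
    for x in states:
        for y, w in adj[x].items():
            mat[idx[x]][idx[x]] += w
            if y in idx:
                mat[idx[x]][idx[y]] -= w
    return solve(mat, rhs)[idx[i0]]
```

For the path \(0 - 1 - 2\) with unit weights and \(B = \{2\}\) one gets \(C^{\textrm{eff}} = 1/2\) and an expected local time of 2 at the origin. Exact fractions make the identity \(C^{\textrm{eff}}(i_{0},B)\cdot \mathbb{E}[L_{\textrm{esc}}(i_{0},B)] = 1\) testable without tolerances.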

2.4 The t-field from the \(\mathbb {H}^{2|2}\)- and STZ-Anderson model

t-Field as a Horospherical Marginal of the \(\mathbb {H}^{2|2}\)-model First we introduce horospherical coordinates on \(\mathbb {H}^{2|2}\). In these coordinates, \(\textbf{u} \in \mathbb {H}^{2|2}\) is parametrised by \((t,s,\bar{\psi },\psi )\), with \(t,s \in \mathbb {R}\) and Grassmann variables \(\bar{\psi },\psi \) via

A particular consequence of this is that \(e^{t} = z + x\). By rewriting the Gibbs measure for the \(\mathbb {H}^{2|2}\)-model, defined in (1.10), in terms of horospherical coordinates and integrating out the fermionic variables \(\psi , \bar{\psi }\), one obtains a marginal density in \(\underline{t} = \{t_{x}\}_{x\in V}\) and \(\underline{s} = \{s_{x}\}_{x\in V}\), which can be interpreted probabilistically:

Lemma 2.8

(Horospherical Marginal of the \(\mathbb {H}^{2|2}\)-Model [4, 10, 11]). Consider a finite graph \(G = (V,E)\), a vertex \(i_{0} \in V\), and non-negative edge-weights \((\beta _{ij})_{ij\in E}\). There exist random variables \(\underline{T} = \{T_{x}\}_{x\in V} \in \mathbb {R}^{V}\) and \(\underline{S} = \{S_{x}\}_{x \in V} \in \mathbb {R}^{V}\), such that for any \(F \in C^{\infty }_{\textrm{c}}(\mathbb {R}^{V}\times \mathbb {R}^{V})\)

The law of \(\underline{T}\) is given by the t-field pinned at \(i_{0}\) (see Definition 1.7). Moreover, conditionally on \(\underline{T}\), the s-field follows the law of a Gaussian free field in conductances \(\{\beta _{ij}e^{T_{i} + T_{j}}\}_{ij \in E}\), pinned at \(i_{0}\), \(S_{i_{0}} = 0\).

t-Field and the STZ-Anderson Model. It turns out that the (zero-energy) Green’s function of the STZ-Anderson model is directly related to the t-field:

Proposition 2.9

[31] For \(H_{B}\) denoting the STZ-Anderson model as in Definition 1.8, define the Green’s function \(G_{B}(i,j) = [H_{B}^{-1}]_{i,j}\). For a vertex \(i_{0} \in V\), define \(\{T_{i}\}_{i\in V}\) via

Then \(\{T_{i}\}\) follows the law \(\mathcal {Q}_{\beta }^{(i_{0})}\) of the t-field, pinned at \(i_{0}\). Moreover, with \(\{T_{i}\}\) as above we have \(B_{i} = \sum _{j\sim i}\beta _{ij}e^{T_{j} - T_{i}}\) for all \(i \in V\setminus \{i_{0}\}\).

This provides a way of coupling the STZ-field with the t-field, as well as a coupling of t-fields pinned at different vertices.

Remark 2.10

(Natural Coupling). Lemma 2.8 and Proposition 2.9 give us a way to define a natural coupling of STZ-field, t-field and s-field as follows: Fix some pinning vertex \(i_{0} \in V\). Sample an STZ-Anderson model \(H_{B}\) with respect to edge weights \(\{\beta _{ij}\}_{ij \in E}\). Then define the t-field \(\{T_{i}\}_{i\in V}\), pinned at \(i_{0}\) via (2.14). Then, conditionally on the t-field, sample the s-field \(\{S_{i}\}_{i\in V}\) as a Gaussian free field in conductances \(\{\beta _{ij}e^{T_{i} + T_{j}}\}_{ij \in E}\), pinned at \(i_{0}\), \(S_{i_{0}} = 0\).

2.5 Monotonicity properties of the t-field

A rather surprising property of the t-field, proved by the first author, is the monotonicity of various expectation values with respect to the edge-weights. The following is a restatement of [8, Theorem 6] after applying Proposition 2.9:

Theorem 2.11

([8, Theorem 6]). Consider a finite graph \(G = (V,E)\) and fix some vertex \(i_{0} \in V\). Under \(\mathbb {E}_{\pmb {\beta }}\), we let \(\textbf{T} = \{T_{i}\}_{i\in V}\) denote a t-field pinned at \(i_{0}\) with respect to non-negative edge weights \(\pmb {\beta } = \{\beta _{e}\}_{e\in E}\). Then, for any convex \(f:\left[ 0,\infty \right) \rightarrow \mathbb {R}\) and non-negative \(\{\lambda _{i}\}_{i\in V}\), the map

is decreasing.

A direct corollary of the above is that expectations of the form \(\mathbb {E}_{\beta }[e^{\eta T_{x}}]\) are increasing in \(\beta \) for \(\eta \in [0,1]\) and decreasing for \(\eta \ge 1\). This is the extent to which we will make use of the result.

2.6 The t-field on \(\mathbb {T}_{d}\)

Consider the t-field measure (1.22) on \(\mathbb {T}_{d,n} = (V_{d,n}, E_{d,n})\), the rooted \((d+1)\)-regular tree of depth n, pinned at the root \(i_{0} = 0\). Only one term contributes to the determinantal term (1.23), namely the term corresponding to \(\mathbb {T}_{d,n}\) itself, oriented away from the root:

where \({E}_{d,n}\) is the set of edges in \(\mathbb {T}_{d,n}\) oriented away from the root. In other words, the increments of the t-field along outgoing edges are i.i.d. and distributed according to the following:

Definition 2.12

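(t-field Increment Measure). For \(\beta > 0\) define the probability distribution
\[ \mathcal {Q}^{\textrm{inc}}_{\beta }(\text{d}t) = \sqrt{\tfrac{\beta }{2\pi }}\, e^{-\beta (\cosh (t) - 1) - t/2}\, \text{d}t, \qquad t\in \mathbb {R}. \tag{2.17} \]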

We refer to this as the t-field increment distribution and if not specified otherwise, T will always denote a random variable with distribution \(\mathcal {Q}^{\textrm{inc}}_{\beta }\). The dependence on \(\beta \) is either implicit or denoted by a subscript, such as in \(\mathbb {E}_{\beta }\) or \(\mathbb {P}_{\beta }\).

The density (2.17) implies that

where IG (RIG) denotes the (reciprocal) inverse Gaussian distribution (cf. (A.4)). Note that changing variables to \(t\mapsto e^{t}\) and comparing to the density of the inverse Gaussian, we see that (2.17) is normalised.
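As a numerical sanity check on the increment law, the sketch below evaluates \(\mathbb{E}_{\beta}[f(T)]\) by Simpson's rule. It assumes the increment density \(\sqrt{\beta/(2\pi)}\,e^{-\beta(\cosh t - 1) - t/2}\) (our reading of (2.17)); the truncation interval and grid size are ad hoc choices, and the function names are hypothetical. With it one can confirm numerically that the density is normalised, that \(\mathbb{E}_{\beta}[e^{T}] = 1\), and the monotonicity in \(\beta \) stated after Theorem 2.11 (increasing for \(\eta = 1/2\), decreasing for \(\eta = 3/2\)).

```python
import math


def increment_density(t, beta):
    """Assumed t-field increment density sqrt(beta/(2 pi)) * exp(-beta (cosh t - 1) - t/2)."""
    return math.sqrt(beta / (2 * math.pi)) * math.exp(-beta * (math.cosh(t) - 1) - t / 2)


def expect(f, beta, n=20000):
    """E_beta[f(T)] by composite Simpson's rule on [-L, L] (n must be even).

    L is chosen so that beta * (cosh L - 1) exceeds 500, which makes the truncated
    tails negligible for moments e^{eta T} with |eta| <= 2."""
    L = math.log(400.0 / beta) + 1.0
    h = 2 * L / n
    total = 0.0
    for k in range(n + 1):
        t = -L + k * h
        weight = 1 if k in (0, n) else (4 if k % 2 else 2)
        total += weight * f(t) * increment_density(t, beta)
    return total * h / 3


if __name__ == "__main__":
    for beta in (0.3, 1.0, 3.0):
        # total mass, E[e^T], E[e^{T/2}], E[e^{3T/2}]
        print(beta, expect(lambda t: 1.0, beta), expect(math.exp, beta),
              expect(lambda t: math.exp(0.5 * t), beta),
              expect(lambda t: math.exp(1.5 * t), beta))
```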

Definition 2.13

(Free Infinite Volume t-field on \(\mathbb {T}_{d}\)). For \(\beta > 0\), associate to every edge e of the infinite rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\) a t-field increment \(\tilde{T}_{e}\), distributed according to (2.17). For every vertex \(x \in \mathbb {T}_{d}\) let \(\gamma _{x}\) denote the unique self-avoiding path from 0 to x and define \(T_{x} :=\sum _{e\in \gamma _{x}} \tilde{T}_{e}\). The random field \(\{T_{x}\}_{x\in \mathbb {T}_{d}}\) is the free infinite volume t-field on \(\mathbb {T}_{d}\) at inverse temperature \(\beta > 0\). In particular, its restriction \(\{T_{x}\}_{x \in \mathbb {T}_{d,n}}\) onto vertices up to generation n follows the law \(\mathcal {Q}^{(0)}_{\beta ;\mathbb {T}_{d,n}}\).

By construction, \(\{T_{x}\}_{x\in \mathbb {T}_{d}}\) can be considered a branching random walk (BRW) with a deterministic number of offspring (every particle gives rise to d new particles in the next generation). In Sect. 2.8 we will elaborate on this perspective.
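The BRW description translates directly into a simulation. The following sketch samples the free t-field on the first n generations of \(\mathbb{T}_{d}\) and computes the effective conductance from the root to generation k in the network with conductances \(\beta e^{T_{x}+T_{y}}\), using series/parallel reduction on the tree. It assumes that \(e^{T}\) is inverse Gaussian with mean 1 and shape \(\beta \), which is what the change of variables \(t\mapsto e^{t}\) gives for the density \(\sqrt{\beta/(2\pi)}\,e^{-\beta(\cosh t - 1) - t/2}\); inverse Gaussian draws use the Michael-Schucany-Haas method. Function names and the heap indexing of vertices are our own, hypothetical choices. For a fixed sample, the computed conductances should be non-increasing in k, in line with the monotonicity (2.10).

```python
import math
import random


def sample_inverse_gaussian(mu, lam, rng):
    """One IG(mu, lam) draw via the Michael-Schucany-Haas transformation method."""
    y = rng.gauss(0.0, 1.0) ** 2
    x = mu + mu * mu * y / (2 * lam) - (mu / (2 * lam)) * math.sqrt(4 * mu * lam * y + (mu * y) ** 2)
    return x if rng.random() <= mu / (mu + x) else mu * mu / x


def sample_t_field(d, n, beta, rng):
    """Free t-field on the d-ary tree (d >= 2) of depth n, pinned at the root (T[0] = 0).

    Vertices use heap order: the children of x are d*x + 1, ..., d*x + d.
    Increments are T = log W with W ~ IG(1, beta) (assumed increment law)."""
    size = (d ** (n + 1) - 1) // (d - 1)
    T = [0.0] * size
    for x in range(1, size):
        T[x] = T[(x - 1) // d] + math.log(sample_inverse_gaussian(1.0, beta, rng))
    return T


def conductance_to_generation(T, d, k, beta):
    """Effective conductance from the root to generation k (k >= 1) in the network
    with conductances beta * exp(T_x + T_y), by series/parallel reduction."""
    first = (d ** k - 1) // (d - 1)  # heap index of the first vertex in generation k
    C = {}
    for x in range(first - 1, -1, -1):  # vertices of generations k-1, ..., 0
        total = 0.0
        for y in range(d * x + 1, d * x + d + 1):
            c_xy = beta * math.exp(T[x] + T[y])
            # Edge xy in series with the subtree below y (just y if y is in generation k).
            total += c_xy if y >= first else 1.0 / (1.0 / c_xy + 1.0 / C[y])
        C[x] = total
    return C[0]


if __name__ == "__main__":
    rng = random.Random(0)
    T = sample_t_field(2, 10, 1.0, rng)
    print([round(conductance_to_generation(T, 2, k, 1.0), 4) for k in range(1, 11)])
```

Storing the field in heap order keeps the sketch dependency-free; for deep trees one would instead reduce generation by generation to save memory.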

2.7 Previous results for VRJP on trees

As we have already noted in the introduction, the VRJP on tree graphs has received considerable attention [15,16,17,18,19]. In particular, Basdevant and Singh [17] studied the VRJP on Galton–Watson trees with general offspring distribution, and located the recurrence/transience phase transition exactly:

Proposition 2.14

(Basdevant-Singh [17]). Let \(\mathcal {T}\) denote a Galton–Watson tree with mean offspring \(b > 1\). Consider the VRJP started from the root of \(\mathcal {T}\), conditionally on non-extinction of the tree. There exists a critical parameter \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(b)\), such that the VRJP is

-

recurrent for \(\beta \le \beta _\textrm{c}\),

-

transient for \(\beta > \beta _\textrm{c}\).

Moreover, \(\beta _{\textrm{c}}\) is characterised as the unique positive solution to

We also take the opportunity to highlight Rapenne’s recent results [19] concerning the (sub)critical phase, \(\beta \le \beta _{\textrm{c}}\). His statements can be seen to complement our results, which focus on the supercritical phase \(\beta > \beta _{\textrm{c}}\).

2.8 Background on branching random walks

We briefly recall some basic results from the theory of branching random walks. For a more comprehensive treatment we refer to Shi’s monograph [58].

A branching random walk (BRW) with offspring distribution \(\mu \in \textrm{Prob}(\mathbb {N}_{0})\) and increment distribution \(\nu \) is constructed as follows: We start with a “root” particle \(x = 0\) at generation \(\left| 0\right| = 0\) and starting position \(V(0) = v_{0}\). We sample its number of offspring according to \(\mu \). They constitute the particles at generation one, \(\{\left| x\right| = 1\}\). Every such particle is assigned a position \(v_{0} + \delta V_{x}\) with \(\{\delta V_{x}\}_{\left| x\right| = 1}\) being i.i.d. according to the increment distribution \(\nu \). This process is repeated recursively and we end up with a random collection of particles \(\{x\}\), each equipped with a position \(V(x) \in \mathbb {R}\), a generation \(\left| x\right| \in \mathbb {N}_{0}\) and a history \(0=x_{0}, x_{1}, \ldots , x_{\left| x\right| } = x\) of predecessors. Unless otherwise stated, we assume from now on that a BRW always starts from the origin, \(v_{0} = 0\).

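A particularly useful quantity for the study of BRWs is the \(\log \)-Laplace transform of the offspring process:
\[ \psi (\eta ) = \log \mathbb {E}\Big [\sum _{\left| x\right| = 1} e^{-\eta V(x)}\Big ], \tag{2.20} \]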

where the sum goes over all particles in the first generation. A priori, we have \(\psi (\eta ) \in [-\infty ,\infty ]\), but we typically assume \(\psi (0) > 0\) and \(\inf _{\eta >0} \psi (\eta ) < \infty \). The first assumption corresponds to supercriticality of the offspring distribution (see Footnote 4), whereas the second assumption enables us to study the average over histories of the BRW in terms of a single random walk:

Proposition 2.15

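(Many-To-One Formula). Consider a BRW with log-Laplace transform \(\psi (\eta )\). Choose \(\eta > 0\) such that \(\psi (\eta ) < \infty \) and define a random walk \(0 = S_{0}, S_{1}, \ldots \) with i.i.d. increments such that for any measurable \(h:\mathbb {R}\rightarrow \mathbb {R}\)
\[ \mathbb {E}[h(S_{1})] = \mathbb {E}\Big [\sum _{\left| x\right| = 1} e^{-\eta V(x) - \psi (\eta )} h(V(x))\Big ]. \tag{2.21} \]

Then, for all \(n\ge 1\) and \(g:\mathbb {R}^{n} \rightarrow \left[ 0,\infty \right) \) measurable we have
\[ \mathbb {E}\Big [\sum _{\left| x\right| = n} g(V(x_{1}), \ldots , V(x_{n}))\Big ] = \mathbb {E}\big [e^{\eta S_{n} + n\psi (\eta )} g(S_{1}, \ldots , S_{n})\big ]. \tag{2.22} \]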

For a proof we refer to Shi’s lecture notes [58, Theorem 1.1]. An application of the many-to-one formula is the following statement about the velocity of extremal particles (cf. [58, Theorem 1.3]).
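For a BRW in which every particle has exactly d children and the increments take finitely many values, both sides of (2.22) are finite sums, so the many-to-one formula can be checked by exhaustive enumeration. The sketch below (hypothetical function names; the increment law, depth and test function are arbitrary choices) builds the tilted step law \(d\,p_{v}e^{-\eta v - \psi(\eta)}\) of the walk \((S_{i})\) from Proposition 2.15 and compares the two sides for several values of \(\eta \). It only illustrates the change of measure in this special case; it is not a proof of the general statement.

```python
import itertools
import math


def log_laplace(d, nu, eta):
    """psi(eta) = log E[sum_{|x|=1} e^{-eta V(x)}] for exactly d children with i.i.d. increments ~ nu."""
    return math.log(d * sum(p * math.exp(-eta * v) for v, p in nu.items()))


def branching_side(d, nu, n, g):
    """E[sum_{|x|=n} g(V(x_1), ..., V(x_n))]: each of the d**n ancestral lines is a
    random walk with step law nu, so enumerate step sequences and multiply by d**n."""
    total = 0.0
    for steps in itertools.product(nu.items(), repeat=n):
        prob = math.prod(p for _, p in steps)
        total += prob * g(list(itertools.accumulate(v for v, _ in steps)))
    return d ** n * total


def random_walk_side(d, nu, n, g, eta):
    """E[e^{eta S_n + n psi(eta)} g(S_1, ..., S_n)] for the tilted walk of the many-to-one formula."""
    psi = log_laplace(d, nu, eta)
    tilted = {v: d * p * math.exp(-eta * v - psi) for v, p in nu.items()}  # sums to 1
    total = 0.0
    for steps in itertools.product(tilted.items(), repeat=n):
        prob = math.prod(q for _, q in steps)
        path = list(itertools.accumulate(v for v, _ in steps))
        total += prob * math.exp(eta * path[-1] + n * psi) * g(path)
    return total


if __name__ == "__main__":
    nu = {-1.0: 0.3, 0.5: 0.5, 2.0: 0.2}
    g = lambda path: max(path) ** 2 + 1 + math.sin(path[-1])
    print(branching_side(2, nu, 4, g), [random_walk_side(2, nu, 4, g, eta) for eta in (0.5, 1.0, 1.7)])
```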

Proposition 2.16

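(Asymptotic Velocity of Extremal Particles). Suppose \(\psi (0) > 0\) and \(\inf \limits _{\eta > 0} \psi (\eta ) < \infty \). Then, almost surely under the event of non-extinction, we have
\[ \lim _{n\rightarrow \infty } \frac{1}{n} \min _{\left| x\right| = n} V(x) = -\inf _{\eta > 0} \frac{\psi (\eta )}{\eta }. \tag{2.23} \]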

Critical Branching Random Walks. A common assumption, under which BRWs exhibit various universal properties, is \(\psi (1) = \psi '(1) = 0\). While not common terminology in the literature, we will refer to this as criticality:
\[ \psi (1) = \psi '(1) = 0. \tag{2.24} \]

This definition can be motivated by considering the many-to-one formula (Proposition 2.15) applied to a critical BRW for \(\eta = 1\): In that case, the random walk \(S_{i}\) has mean zero increments, \(\mathbb {E}[S_{1}] = -\psi '(1) = 0\), and the exponential drift in (2.22) vanishes, \(e^{n\psi (1)} = 1\). Consequently, as far as the many-to-one formula is concerned, critical BRWs inherit some of the universality of mean zero random walks (e.g. Donsker’s theorem, say under an additional second moment assumption). Moreover, the notion of criticality is particularly useful, since in many cases we can reduce a BRW to the critical case by a simple rescaling/drift transformation:

Lemma 2.17

(Critical Rescaling of a BRW). Consider a BRW with log-Laplace transform \(\psi (\eta ) = \log \mathbb {E}[\sum _{\left| x\right| = 1} e^{-\eta V(x)}]\). Suppose there exists \(\eta ^{*} > 0\) solving the equation
\[ \eta ^{*} \psi '(\eta ^{*}) = \psi (\eta ^{*}). \tag{2.25} \]

Equivalently, \(\eta ^{*}\) is a critical point of \(\eta \mapsto \psi (\eta )/\eta \). Define a BRW with the same particles \(\{x\}\) and rescaled positions
\[ V^{*}(x) = \eta ^{*} V(x) + \left| x\right| \psi (\eta ^{*}). \tag{2.26} \]

The resulting BRW is critical.

Proof

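Write \(\psi ^{*}(\gamma ) = \log \mathbb {E}\sum _{\left| x\right| = 1} e^{-\gamma V^{*}(x)}\) for the log-Laplace transform of the rescaled BRW. We easily check
\[ \psi ^{*}(\gamma ) = \psi (\gamma \eta ^{*}) - \gamma \psi (\eta ^{*}), \qquad \text{in particular} \qquad \psi ^{*}(1) = 0. \tag{2.27} \]

Equivalently, \(1 = \mathbb {E}\sum _{\left| x\right| =1}e^{-\eta ^{*}V(x) - \psi (\eta ^{*})}\), which together with (2.25) yields
\[ (\psi ^{*})'(1) = \eta ^{*}\psi '(\eta ^{*}) - \psi (\eta ^{*}) = 0, \tag{2.28} \]

which concludes the proof. \(\square \)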
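To illustrate Lemma 2.17, take d children with i.i.d. Gaussian increments \(\mathcal{N}(m, s^{2})\) (an illustrative choice, not taken from the text). Then \(\psi(\eta) = \log d - \eta m + \eta^{2}s^{2}/2\), and the equation \(\eta\psi'(\eta) = \psi(\eta)\) reduces to \(\eta^{2}s^{2}/2 = \log d\), i.e. \(\eta^{*} = \sqrt{2\log d}/s\). The sketch (hypothetical function names) finds \(\eta^{*}\) by bisection on \(\eta\psi'(\eta) - \psi(\eta)\), which is increasing whenever \(\psi \) is strictly convex, and forms the rescaled log-Laplace transform \(\psi^{*}(\gamma) = \psi(\gamma\eta^{*}) - \gamma\psi(\eta^{*})\); both \(\psi^{*}(1)\) and \((\psi^{*})'(1)\) should vanish.

```python
import math


def critical_eta(psi, dpsi, lo=1e-6, hi=50.0, tol=1e-12):
    """Root of f(eta) = eta * psi'(eta) - psi(eta) by bisection.

    Assumes psi is strictly convex (so f is increasing) and f(lo) < 0 < f(hi)."""
    f = lambda eta: eta * dpsi(eta) - psi(eta)
    if not f(lo) < 0 < f(hi):
        raise ValueError("f does not change sign on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def rescaled_log_laplace(psi, eta_star):
    """Log-Laplace transform of V*(x) = eta* V(x) + |x| psi(eta*), as in Lemma 2.17."""
    return lambda gamma: psi(gamma * eta_star) - gamma * psi(eta_star)


# Example: d children with i.i.d. N(m, s^2) increments (illustrative parameters).
d, m, s = 3, 0.4, 1.3
psi = lambda eta: math.log(d) - eta * m + 0.5 * (eta * s) ** 2
dpsi = lambda eta: -m + eta * s ** 2
eta_star = critical_eta(psi, dpsi)
psi_star = rescaled_log_laplace(psi, eta_star)
```

Bisection is used instead of Newton's method because it needs nothing beyond the sign change and the monotonicity that strict convexity guarantees.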

3 VRJP and the t-Field as \(\beta \searrow \beta _{\textrm{c}}\)

The main goal of this section is to prove Theorem 1.2 on the asymptotic escape time of the VRJP as \(\beta \searrow \beta _{\textrm{c}}\). The main work will be in establishing the following result on the effective conductance in a t-field environment:

Theorem 3.1

(Near-Critical Effective Conductance). Let \(\{T_{x}\}_{x\in \mathbb {T}_{d}}\) denote the (free) t-field on \(\mathbb {T}_{d}\), pinned at the origin. Let \(C^{\textrm{eff}}_{\infty }\) denote the effective conductance from the origin to infinity in the network given by conductances \(\{\beta e^{T_{i} + T_{j}} \mathbbm {1}_{i\sim j}\}_{i,j\in \mathbb {T}_{d}}\). There exist constants \(c,C > 0\) such that

as \(\epsilon \searrow 0\), where \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(d) > 0\) is given by Proposition 2.14.

For establishing this result, the BRW perspective on the t-field is essential. The lower bound will follow from a mild modification of a result by Gantert, Hu and Shi [59] (see Theorem 3.8). For the upper bound we will consider the critical rescaling of the near-critical t-field (cf. Lemma 2.17). The bound will then follow by a perturbative argument applied to a result on effective conductances in a critical BRW environment. We prove the latter in a more general form, for which it is convenient to introduce some additional notions.

For a random variable V and a fixed offspring degree d we write
\[ \psi _{V}(\eta ) = \log \big (d\, \mathbb {E}[e^{-\eta V}]\big ). \tag{3.2} \]

Analogous to Definition 2.13, for an increment distribution given by V, we define a random field \(\{V_{x}\}_{x\in \mathbb {T}_{d}}\) and refer to it as the BRW with increments V. We say that V is a critical increment if \(\{V_{x}\}_{x \in \mathbb {T}_{d}}\) is critical, i.e. \(\psi _{V}(1) = \psi _{V}'(1) = 0\). Note that this implicitly depends on our choice of \(d \ge 2\), but we choose to suppress this dependency. For a critical increment V we write
\[ \sigma _{V}^{2} = d\, \mathbb {E}[V^{2}e^{-V}]. \tag{3.3} \]

Note that this is the variance of the (mean-zero) increments of the random walk \((S_{i})_{i\ge 0}\) given by the many-to-one formula (Proposition 2.15 for \(\eta = 1\)).

Theorem 3.2

Fix some offspring degree \(d \ge 2\) and consider a critical increment V with \(\sigma _{V}^{2} < \infty \) and \(\psi _{V}(1+2a) < \infty \) for some constant \(a > 0\). Write \(\{V_{x}\}_{x \in \mathbb {T}_{d}}\) for the BRW with increments V and define the conductances \(\{e^{-\gamma (V_{x} + V_{y})}\}_{xy}\). Let \(C_{n,\gamma }^{\textrm{eff}}\) denote the effective conductance between the origin 0 and the vertices in the n-th generation. Then, for \(\gamma \in (1/2, 1/2 + a)\), we have

Moreover, this is uniform with respect to \(\gamma \), \(\sigma _{V}^{2}\) and \(\psi _{V}(1+2a)\) in the following sense: Suppose there is a family \(V^{(k)}\), \(k\in \mathbb {N}\), of critical increments and define \(C^{\textrm{eff}}_{n,\gamma ;k}\) as above. Further assume \(0< \inf _{k}\sigma ^{2}_{V^{(k)}} \le \sup _{k}\sigma ^{2}_{V^{(k)}} < \infty \) and \(\sup _{k} \psi _{V^{(k)}}(1+2a) < \infty \). Then we have

We note that random walks in (critical) multiplicative environments on trees have previously been studied, see for example [60,61,62,63,64,65]. In particular, Hu and Shi [63, Theorem 2.1] established bounds analogous to (3.4) for escape probabilities instead of effective conductances. While the quantities are related, bounds on the expected escape probability do not directly translate into bounds on the expected effective conductance. Moreover, their setup for the random environment does not directly apply to our setting (see Footnote 5). Last but not least, for our applications we require additional uniformity of the bounds with respect to the underlying BRW.

3.1 The t-field as a branching random walk

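Considered as a BRW, the t-field \(\{T_{x}\}_{x\in \mathbb {T}_{d}}\) on the rooted \((d+1)\)-regular tree \(\mathbb {T}_{d}\) (or more precisely the negative t-field) has a log-Laplace transform given by
\[ \psi _{\beta }(\eta ) = \log \big (d\, \mathbb {E}_{\beta }[e^{\eta T}]\big ), \tag{3.6} \]

where T denotes the t-field increment as introduced in Definition 2.12. One easily checks that \(\psi _{\beta }(0) = \psi _{\beta }(1) = \log d\). More generally, using the density for T we have
\[ \psi _{\beta }(\eta ) = \log d + \beta + \tfrac{1}{2}\log \Big (\frac{2\beta }{\pi }\Big ) + \log K_{\eta - 1/2}(\beta ), \tag{3.7} \]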

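where \(K_{\alpha }\) denotes the modified Bessel function of the second kind. An illustration of \(\psi _{\beta }\) for different values of \(\beta \) is given in Fig. 4. In particular, it is a smooth function in \(\beta ,\eta > 0\) and one may check that it is strictly convex, since
\[ \psi _{\beta }''(\eta ) = \frac{\mathbb {E}_{\beta }[T^{2}e^{\eta T}]}{\mathbb {E}_{\beta }[e^{\eta T}]} - \Big (\frac{\mathbb {E}_{\beta }[Te^{\eta T}]}{\mathbb {E}_{\beta }[e^{\eta T}]}\Big )^{2} \tag{3.8} \]

equals the variance of a non-deterministic random variable (namely T under the tilted law with density \(e^{\eta T}/\mathbb {E}_{\beta }[e^{\eta T}]\)). Moreover, by the symmetry and monotonicity properties of the Bessel function (\(K_{\alpha } = K_{-\alpha }\) and \(K_{\alpha } \le K_{\alpha '}\) for \(0 \le \alpha \le \alpha '\)), the infimum of \(\psi _{\beta }(\eta )\) is attained at \(\eta = 1/2\):
\[ \inf _{\eta > 0} \psi _{\beta }(\eta ) = \psi _{\beta }(\tfrac{1}{2}). \tag{3.9} \]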

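The critical inverse temperature \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(d) > 0\), as given in Proposition 2.14, is equivalently characterised by the vanishing of this infimum:
\[ \inf _{\eta > 0} \psi _{\beta _{\textrm{c}}}(\eta ) = \psi _{\beta _{\textrm{c}}}(\tfrac{1}{2}) = 0. \tag{3.10} \]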

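In particular, by Lemma 2.17, this implies that \(\{-\tfrac{1}{2} T_{x}\}_{x\in \mathbb {T}_{d}}\) is a critical BRW at \(\beta =\beta _{\textrm{c}}\). More generally, it will be useful to consider critical rescalings of \(\{T_{x}\}\) for general \(\beta > 0\). For this we write
\[ \eta _{\beta } = \mathop {\textrm{arg\,min}}\limits _{\eta > 0} \frac{\psi _{\beta }(\eta )}{\eta }, \qquad \gamma _{\beta } = \min _{\eta > 0} \frac{\psi _{\beta }(\eta )}{\eta } = \frac{\psi _{\beta }(\eta _{\beta })}{\eta _{\beta }}. \tag{3.11} \]

An illustration of these quantities is given in Fig. 5. If \(\eta _{\beta }\) as above is well-defined, then it satisfies (2.25) and hence by Lemma 2.17 the rescaled field
\[ \{-\eta _{\beta }(T_{x} - \gamma _{\beta }\left| x\right| )\}_{x\in \mathbb {T}_{d}} \tag{3.12} \]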

defines a critical BRW. The following lemma lends rigour to this:

Lemma 3.3

\(\eta _{\beta }\) as given in (3.11) is well-defined and the unique positive root of the strictly increasing map \(\eta \mapsto \eta \psi _{\beta }^{\prime }(\eta ) - \psi _{\beta }(\eta )\). Consequently, the maps \(\beta \mapsto \eta _{\beta }\) and \(\beta \mapsto \gamma _{\beta }\) are continuously differentiable.

Proof

Recall the Bessel function asymptotics \(K_{\alpha }(\beta ) \sim \tfrac{1}{2} (2/\beta )^{\alpha } \Gamma (\alpha )\) as \(\alpha \rightarrow \infty \), hence by (3.7) we have \(\psi _{\beta }(\eta ) \sim \eta \log \eta \) for \(\eta \rightarrow \infty \). Consequently, \(\psi _{\beta }(\eta )/\eta \) diverges as \(\eta \rightarrow \infty \) (and it also diverges as \(\eta \searrow 0\)). Hence it attains its infimum at some finite value. We claim that there is a unique minimiser \(\eta _{\beta }\). Since \(\psi _{\beta }(\eta )/\eta \) is continuously differentiable in \(\eta > 0\), at any minimum it will have vanishing derivative \(\partial _{\eta } (\psi _{\beta }(\eta )/\eta ) = [\eta \psi _{\beta }^{\prime }(\eta ) - \psi _{\beta }(\eta )]/\eta ^{2}\). And in fact the map \(\eta \mapsto \eta \psi _{\beta }^{\prime }(\eta ) - \psi _{\beta }(\eta )\) is strictly increasing, since its derivative equals \(\eta \psi _{\beta }^{\prime \prime }(\eta ) > 0\), see (3.8), and as such has at most one root. This implies that \(\eta _{\beta }\) as in (3.11) is well-defined and the unique root of \(\eta \psi _{\beta }^{\prime }(\eta ) - \psi _{\beta }(\eta )\).

Continuous differentiability of \(\beta \mapsto \eta _{\beta }\) follows from the implicit function theorem applied to \(f(\eta , \beta ) :=\eta \psi _{\beta }'(\eta ) - \psi _{\beta }(\eta )\), noting that \(\partial _{\eta }f(\eta ,\beta ) = \eta \psi _{\beta }^{\prime \prime }(\eta ) > 0\). This directly implies continuous differentiability of \(\beta \mapsto \gamma _{\beta } = \psi _{\beta }(\eta _{\beta })/\eta _{\beta }\). \(\square \)
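The quantities \(\beta _{\textrm{c}}\), \(\eta _{\beta }\) and \(\gamma _{\beta }\) can be approximated numerically. The sketch below computes the log-Laplace transform \(\psi _{\beta }(\eta ) = \log d + \log \mathbb{E}_{\beta}[e^{\eta T}]\) of the negative t-field by quadrature, assuming the increment density \(\sqrt{\beta/(2\pi)}\,e^{-\beta(\cosh t - 1) - t/2}\). It finds \(\beta _{\textrm{c}}\) as the zero of the increasing map \(\beta \mapsto \psi _{\beta }(1/2)\) (the characterisation by the vanishing infimum) and \(\eta _{\beta }\) as the unique root from Lemma 3.3. Bracketing intervals, grid sizes and tolerances are ad hoc, and the function names are hypothetical. One expects \(\eta _{\beta _{\textrm{c}}} \approx 1/2\) and \(\gamma _{\beta _{\textrm{c}}} \approx 0\), with \(\gamma _{\beta } > 0\) above \(\beta _{\textrm{c}}\).

```python
import math


def moment(beta, eta, power=0, n=4000):
    """E_beta[T**power * e^{eta T}] by Simpson's rule, assuming the increment density
    sqrt(beta/(2 pi)) exp(-beta (cosh t - 1) - t/2); adequate for 0 <= eta <= 10."""
    L = math.log(400.0 / beta) + 1.0
    c = math.sqrt(beta / (2 * math.pi))
    h = 2 * L / n
    total = 0.0
    for k in range(n + 1):
        t = -L + k * h
        weight = 1 if k in (0, n) else (4 if k % 2 else 2)
        total += weight * t ** power * math.exp(eta * t - beta * (math.cosh(t) - 1) - t / 2)
    return c * total * h / 3


def psi(beta, eta, d):
    return math.log(d) + math.log(moment(beta, eta))


def dpsi(beta, eta, d):
    return moment(beta, eta, 1) / moment(beta, eta)


def bisect(f, lo, hi, tol=1e-9):
    """Root of an increasing function f with f(lo) < 0 < f(hi)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def beta_c(d):
    # psi_beta(1/2) is increasing in beta and vanishes exactly at beta_c.
    return bisect(lambda b: psi(b, 0.5, d), 1e-3, 10.0)


def eta_beta(beta, d):
    # Unique root of the increasing map eta -> eta psi'(eta) - psi(eta) (Lemma 3.3).
    return bisect(lambda e: e * dpsi(beta, e, d) - psi(beta, e, d), 1e-3, 10.0)


def gamma_beta(beta, d):
    eta = eta_beta(beta, d)
    return psi(beta, eta, d) / eta


if __name__ == "__main__":
    bc = beta_c(2)
    print(bc, eta_beta(bc, 2), gamma_beta(bc, 2), gamma_beta(2 * bc, 2))
```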

Fig. 5. Illustration of \(\eta _{\beta }\), \(\gamma _{\beta }/\log d\) and \(\psi _{\beta }(\eta )/(\eta \log d)\) for \(d=2\). For the figure on the left, note that \(\gamma _{\beta }\) is positive for \(\beta > \beta _{\textrm{c}}\) and attains its maximum at \(\beta _{\textrm{c}}^{\textrm{erg}}\), at the same point at which \(\eta _{\beta } = 1\). The right figure illustrates the same point: the minima of \(\psi _{\beta }(\eta )/\eta \) move to the right with increasing \(\beta \) and attain their highest value at \(\beta = \beta _{\textrm{c}}^{\textrm{erg}}\).

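Considering the graphs in Fig. 5, one would conjecture that \(\eta _{\beta }\) is strictly increasing in \(\beta \). One can apply the implicit function theorem to \(f(\eta , \beta ) :=\eta \psi _{\beta }'(\eta ) - \psi _{\beta }(\eta )\) to obtain
\[ \partial _{\beta }\eta _{\beta } = \frac{\partial _{\beta }\psi _{\beta }(\eta ) - \eta \, \partial _{\beta }\psi _{\beta }'(\eta )}{\eta \, \psi _{\beta }''(\eta )}\Big \vert _{\eta = \eta _{\beta }}. \tag{3.13} \]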

The denominator is positive by (3.8), but we do not know how to show non-negativity of the numerator for general \(\beta \). We can, however, make use of this in the special case \(\beta = \beta _{\textrm{c}}\), which will be relevant in Sect. 3.3 for the proof of Theorem 3.1.

Proposition 3.4

Let \(\psi _{\beta }(\eta )\) and \(\eta _{\beta }\) be as in (3.7) and (3.11), for some \(d\ge 2\). For \(\beta _{\textrm{c}} = \beta _{\textrm{c}}(d) > 0\), as given in Proposition 2.14, we have \(\eta _{\beta _{\textrm{c}}} = 1/2\) and

Proof

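By (3.10) we have \(\tfrac{1}{2} \psi _{\beta _{\textrm{c}}}^{\prime }(\tfrac{1}{2}) - \psi _{\beta _{\textrm{c}}}(\tfrac{1}{2}) = -\psi _{\beta _{\textrm{c}}}(\tfrac{1}{2}) = 0\). Lemma 3.3 therefore implies \(\eta _{\beta _{\textrm{c}}} = 1/2\). Applying (3.13) and recalling that \(\psi _{\beta }'(\tfrac{1}{2}) = 0\) for all \(\beta > 0\), we get
\[ \partial _{\beta }\vert _{\beta =\beta _{\textrm{c}}}\eta _{\beta } = \frac{\partial _{\beta }\vert _{\beta =\beta _{\textrm{c}}}\psi _{\beta }(\tfrac{1}{2})}{\tfrac{1}{2}\psi _{\beta _{\textrm{c}}}''(\tfrac{1}{2})}. \tag{3.15} \]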

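The denominator is positive by (3.8). As for the numerator, we recall (3.7) for \(\eta = 1/2\), written in terms of the increment density:
\[ \psi _{\beta }(\tfrac{1}{2}) = \log d + \log \Big (\sqrt{\tfrac{\beta }{2\pi }} \int _{\mathbb {R}} e^{-\beta (\cosh (t) - 1)}\, \text{d}t\Big ). \tag{3.16} \]

To see monotonicity of the integral in \(\beta \) it is convenient to apply the change of variables
\[ u = e^{t/2} - e^{-t/2} = 2\sinh (t/2), \qquad \text{d}u = \cosh (t/2)\, \text{d}t = \sqrt{1 + (u/2)^{2}}\, \text{d}t. \tag{3.17} \]

Note that \(u^{2}/2 = \tfrac{1}{2}(e^{t} + e^{-t}) - 1 = \cosh (t)-1\), hence, after additionally rescaling \(u = s/\sqrt{\beta }\),
\[ \sqrt{\tfrac{\beta }{2\pi }} \int _{\mathbb {R}} e^{-\beta (\cosh (t) - 1)}\, \text{d}t = \sqrt{\tfrac{\beta }{2\pi }} \int _{\mathbb {R}} \frac{e^{-\beta u^{2}/2}}{\sqrt{1 + (u/2)^{2}}}\, \text{d}u = \frac{1}{\sqrt{2\pi }} \int _{\mathbb {R}} \frac{e^{-s^{2}/2}}{\sqrt{1 + s^{2}/(4\beta )}}\, \text{d}s. \tag{3.18} \]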

Clearly, the integrand in the last line is strictly increasing in \(\beta \), hence \(\partial _{\beta }\psi _{\beta }(\tfrac{1}{2})>0\). This implies the first statement in (3.14). For the second statement note that \(\psi _{\beta _{\textrm{c}}}^{\prime }(\tfrac{1}{2}) = 0\). Hence, \(\partial _{\beta }\vert _{\beta =\beta _{\textrm{c}}}\psi _{\beta }(\eta _{\beta }) = \partial _{\beta }\vert _{\beta =\beta _{\textrm{c}}}\psi _{\beta }(\tfrac{1}{2}) > 0\). \(\square \)

As already suggested in Fig. 5, there is a second natural transition point \(\beta _{\textrm{c}}^{\textrm{erg}} > \beta _{\textrm{c}}\), which is “special” due to \(\gamma _{\beta }\) attaining its maximum there. This transition point will be relevant for the study of the intermediate phase in Sect. 4.

Proposition 3.5

(Characterisation of \(\beta _{\textrm{c}}^{\textrm{erg}}\)). Let \(\psi _{\beta }(\eta )\) and \(\eta _{\beta }\) be as in (3.7) and (3.11), for some \(d\ge 2\). The map \(\beta \mapsto \psi _{\beta }^{\prime }(1) - \psi _{\beta }(1)\) is strictly decreasing and there exists a unique \(\beta _{\textrm{c}}^{\textrm{erg}} = \beta _{\textrm{c}}^{\textrm{erg}}(d) > 0\), such that
\[ \psi _{\beta _{\textrm{c}}^{\textrm{erg}}}^{\prime }(1) = \psi _{\beta _{\textrm{c}}^{\textrm{erg}}}(1). \tag{3.19} \]

Equivalently, \(\beta _{\textrm{c}}^{\textrm{erg}} > 0\) is characterised by any of the following conditions:

Moreover, for \(\beta < \beta _{\textrm{c}}^{\textrm{erg}}\) we have that \(\eta _{\beta } < 1\) and that \(\beta \mapsto \gamma _{\beta }\) is increasing, while for \(\beta > \beta _{\textrm{c}}^{\textrm{erg}}\) one has \(\eta _{\beta } > 1\) and \(\beta \mapsto \gamma _{\beta }\) is decreasing.

Proof

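By definition of \(\psi _{\beta }\) and the t-field increment measure we have
\[ \psi _{\beta }^{\prime }(1) - \psi _{\beta }(1) = \mathbb {E}_{\beta }[Te^{T}] - \log d = -\mathbb {E}_{\beta }[T] - \log d, \tag{3.21} \]
where we used \(\mathbb {E}_{\beta }[e^{T}] = 1\) and the fact that the density of T, multiplied by \(e^{t}\), equals the density of \(-T\).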

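We claim that \(\beta \mapsto \mathbb {E}_{\beta }[T]\) is strictly increasing. In fact, using the change of variables in (3.17), so that \(t = 2{\text {arsinh}}(u/2)\), and noting that \(e^{-t/2} = \cosh (t/2) - \sinh (t/2) = \sqrt{1+(u/2)^{2}} - u/2\), we have
\[ \mathbb {E}_{\beta }[T] = \sqrt{\tfrac{\beta }{2\pi }} \int _{\mathbb {R}} 2{\text {arsinh}}(u/2) \Big (1 - \frac{u/2}{\sqrt{1+(u/2)^{2}}}\Big ) e^{-\beta u^{2}/2}\, \text{d}u = -\sqrt{\tfrac{2\beta }{\pi }} \int _{\mathbb {R}} \frac{(u/2)\,{\text {arsinh}}(u/2)}{\sqrt{1+(u/2)^{2}}}\, e^{-\beta u^{2}/2}\, \text{d}u, \tag{3.22} \]
where the first term vanishes since its integrand is odd in u.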

It is easy to check that \(x {\text {arsinh}}(x)/\sqrt{1+x^{2}}\) is strictly increasing in \(\left| x\right| \). Consequently, rescaling \(u = s/\sqrt{\beta }\) as in (3.18), we see that the right-hand side of (3.22), and hence \(\mathbb {E}_{\beta }[T]\), is strictly increasing in \(\beta \). Moreover, one observes that \(\mathbb {E}_{\beta }[T] \rightarrow -\infty \) for \(\beta \searrow 0\), whereas \(\mathbb {E}_{\beta }[T] \rightarrow 0\) for \(\beta \rightarrow \infty \). Hence by (3.21), there exists a unique \(\beta _{\textrm{c}}^{\textrm{erg}} > 0\), such that \(\psi _{\beta _{\textrm{c}}^{\textrm{erg}}}^{\prime }(1) = \psi _{\beta _{\textrm{c}}^{\textrm{erg}}}(1)\). In particular, \(\eta _{\beta _{\textrm{c}}^{\textrm{erg}}} = 1\).

The first two alternative characterisations in (3.20) follow from (3.21) and our previous considerations. Also, by Theorem 2.11, we have

which by Lemma 3.3 implies that \(\eta _{\beta } \lessgtr 1\) for \(\beta \lessgtr \beta _{\textrm{c}}^{\textrm{erg}}\).

To show the last characterisation in (3.20), we calculate the derivative of \(\beta \mapsto \gamma _{\beta } = \psi _{\beta }(\eta _{\beta })/\eta _{\beta }\):

where in the last line we used that \(\eta _{\beta }\psi ^{\prime }_{\beta }(\eta _{\beta }) - \psi _{\beta }(\eta _{\beta }) = 0\). By Theorem 2.11, the last line in (3.24) is non-negative if \(\eta _{\beta } \le 1\) and non-positive if \(\eta _{\beta } \ge 1\). Since \(\eta _{\beta } \lessgtr 1\) for \(\beta \lessgtr \beta _{\textrm{c}}^{\textrm{erg}}\), this implies the last statement in (3.20) as well as the stated monotonicity of \(\beta \mapsto \gamma _{\beta }\). \(\square \)
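For the reader's convenience, here is a version of the chain-rule computation behind (3.24), written out under the assumption (implicit in the argument) that \(\beta \mapsto \eta _{\beta }\) is differentiable. Write \(\partial _{\beta }\psi _{\beta }\) for the derivative in \(\beta \) at fixed argument and \(\psi ^{\prime }_{\beta }\) for the derivative in \(\eta \). Then
\[ \frac{d\gamma _{\beta }}{d\beta } = \frac{(\partial _{\beta }\psi _{\beta })(\eta _{\beta }) + \psi ^{\prime }_{\beta }(\eta _{\beta })\,\frac{d\eta _{\beta }}{d\beta }}{\eta _{\beta }} - \frac{\psi _{\beta }(\eta _{\beta })}{\eta _{\beta }^{2}}\,\frac{d\eta _{\beta }}{d\beta } = \frac{(\partial _{\beta }\psi _{\beta })(\eta _{\beta })}{\eta _{\beta }} + \frac{\eta _{\beta }\psi ^{\prime }_{\beta }(\eta _{\beta }) - \psi _{\beta }(\eta _{\beta })}{\eta _{\beta }^{2}}\,\frac{d\eta _{\beta }}{d\beta }. \]
The second term vanishes because \(\eta _{\beta }\psi ^{\prime }_{\beta }(\eta _{\beta }) = \psi _{\beta }(\eta _{\beta })\). Hence, since \(\eta _{\beta } > 0\), the sign of \(d\gamma _{\beta }/d\beta \) is that of \((\partial _{\beta }\psi _{\beta })(\eta _{\beta })\), and the implicit dependence of \(\eta _{\beta }\) on \(\beta \) plays no role.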

3.2 Effective conductance in a critical environment (Proof of Theorem 3.2)

First, we recall some results on small deviations of random walks. Specifically, we use an extension of Mogulskii’s Lemma [66] due to Gantert, Hu and Shi [59].

Lemma 3.6

(Triangular Mogulskii’s Lemma [59, Lemma 2.1]). For each \(n\ge 1\), let \(X_i^{(n)}\), \(1\le i \le n\), be i.i.d. real-valued random variables. Let \(g_1<g_2\) be continuous functions on [0, 1] with \(g_1(0)<0<g_2(0)\). Let \((a_n)\) be a sequence of positive numbers such that \(a_n \rightarrow \infty \) and \(a^{2}_n/n \rightarrow 0\) as \(n \rightarrow \infty \). Assume that there exist constants \(\eta >0\) and \(\sigma ^2>0\) such that:

Consider the measurable event

where \(S_i^{(n)}:= X_1^{(n)}+\cdots +X_i^{(n)},\ 1\le i \le n\). We have

Lemma 3.7

For each \(k\ge 1\), let \(X_{i}^{(k)}\), \(i\in \mathbb {N}\), be i.i.d. real-valued random variables with \(\mathbb {E}[X_{i}^{(k)}] = 0\) and \(\sigma _{k}^{2} :=\mathbb {E}[(X_{i}^{(k)})^{2}]\). Suppose that \(0< \inf _{k}\sigma _{k}^{2} \le \sup _{k}\sigma _{k}^{2} < \infty \). Write \(S_{i}^{(k)} = X_{1}^{(k)} + \cdots + X_{i}^{(k)}\). For \(\gamma > 0\) and \(\nu \in (0,\tfrac{1}{2})\), define the events

then we have

Proof

We proceed by contradiction. Write \(b_{n}^{(k)} :=-n^{1-2\nu }\log \mathbb {P}[E^{(k)}_{n}]\) and \(b_{\infty }^{(k)} :=\big (\frac{\pi \sigma _{k}}{2\gamma }\big )^{2}\), and suppose (3.29) does not hold. Then there exist \(\epsilon > 0\), a sequence \((k_{n})_{n\in \mathbb {N}}\), and a subsequence \(\mathcal {N}_{0} \subseteq \mathbb {N}\) such that

Since the \(\sigma _{k}^{2}\) are bounded and bounded away from zero, we can refine to a subsequence \(\mathcal {N}_{1} \subseteq \mathcal {N}_{0}\) such that \(\sigma ^{2}_{k_{n}} \rightarrow \tilde{\sigma }^{2} > 0\) along \(\mathcal {N}_{1}\). But by Lemma 3.6 (with \(a_{n} = n^{\nu }\), \(g_{1} = -\gamma \), and \(g_{2} = +\gamma \)) we have \(b_{n}^{(k_{n})} \rightarrow \big (\frac{\pi \tilde{\sigma }}{2\gamma }\big )^{2}\) along \(\mathcal {N}_{1}\), while also \(b_{\infty }^{(k_{n})} \rightarrow \big (\frac{\pi \tilde{\sigma }}{2\gamma }\big )^{2}\) along \(\mathcal {N}_{1}\). This contradicts (3.30). \(\square \)
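The small-deviation rate behind Lemmas 3.6 and 3.7 can be seen numerically in the simplest case. The following is an illustration only, not part of the proof. It takes a simple \(\pm 1\) random walk (so \(\sigma ^{2} = 1\)) in the classical, non-triangular setting, with tube \(g_{1} = -1\), \(g_{2} = +1\) and \(a_{n} = m\). It then computes \(\mathbb {P}(|S_{i}| < m \text { for all } i \le n)\) exactly by dynamic programming; the helper name `survival_probability` is hypothetical. In the standard form of Mogulskii’s estimate, \((a_{n}^{2}/n)\log \mathbb {P} \rightarrow -\tfrac{\pi ^{2}\sigma ^{2}}{2}\int _{0}^{1}(g_{2}(t)-g_{1}(t))^{-2}\,dt\), which here equals \(-\pi ^{2}/8\). Taking \(n = m^{3}\) mirrors the choice \(a_{n} = n^{\nu }\) with \(\nu = 1/3\) made in the proof of Theorem 3.2.

```python
import math


def survival_probability(m: int, n: int) -> float:
    """Exact P(|S_i| < m for all 1 <= i <= n) for a simple +-1 random walk from 0.

    Probability mass is propagated on the admissible sites -m+1, ..., m-1;
    mass that steps onto +-m is discarded (the walk has left the tube).
    """
    size = 2 * m - 1
    p = [0.0] * size
    p[m - 1] = 1.0  # index m - 1 corresponds to site 0
    for _ in range(n):
        q = [0.0] * size
        for j, mass in enumerate(p):
            if mass == 0.0:
                continue
            if j - 1 >= 0:
                q[j - 1] += 0.5 * mass
            if j + 1 < size:
                q[j + 1] += 0.5 * mass
        p = q
    return sum(p)


if __name__ == "__main__":
    # Limit (pi^2 sigma^2 / 2) / (g2 - g1)^2 with sigma = 1 and g2 - g1 = 2.
    target = math.pi ** 2 / 8
    for m in (5, 10, 20):
        n = m ** 3  # a_n = m = n^(1/3)
        rate = -math.log(survival_probability(m, n)) * m * m / n
        print(f"m={m:3d}  n={n:6d}  -(a_n^2/n) log P = {rate:.4f}  (limit {target:.4f})")
```

The printed rates approach \(\pi ^{2}/8 \approx 1.2337\) as m grows. The remaining discrepancy comes from the \(O(1)\) overlap term in \(\log \mathbb {P}\), whose contribution is of order \(m^{2}/n\).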

Proof of Theorem 3.2

Recall the notation in Theorem 3.2. We prove the statement for an individual increment V, but indicate at which steps care has to be taken to establish the uniformity claimed in (3.5).

Write \(\partial \Lambda _{n} :=\{x \in \mathbb {T}_{d} :\left| x\right| = n\}\) for the vertices at distance n from the origin. Set \(\alpha :=\frac{1}{2}(\pi ^{2}\sigma _{V}^{2})^{1/3}\). Define the stopping lines of \(\{V_{x}\}_{x\in \mathbb {T}_{d}}\) at level \(\alpha n^{1/3}\):

where we write \({E}\) for the set of edges oriented away from the origin and “\(a \prec b\)” means that a is an ancestor of b. Let \(A_{n}\) denote the event that \(\mathcal {L}^{(n)}\) is a cut-set between the origin and \(\partial \Lambda _{n}\). By (2.9), conditionally on the event \(A_{n}\), we have the pointwise bound

We thus have:

Bounding the second summand. Clearly, we have

To bound the first summand on the right-hand side, we apply the many-to-one formula (Proposition 2.15) with \(\eta = 1\) and obtain a random walk \((S_{i})_{i\ge 0}\) such that

In the third line we used that \(\psi _{V}(1) = 0\). Recall that (since \(\psi _{V}(1) = \psi _{V}'(1) = 0\)) we have \(\mathbb {E}[S_{1}] = 0\) and \(\mathbb {E}[S_{1}^{2}] = \sigma _{V}^{2}\). Applying Lemma 3.7 (with \(\gamma = \alpha \) and \(\nu = 1/3\)) yields

where we used that \((\tfrac{\pi \sigma _{V}}{2\alpha })^{2} = 2 \alpha \). Moreover, Lemma 3.7 states that the convergence in (3.36) is uniform over a family \(V^{(k)}\), \(k\in \mathbb {N}\), of critical increments, provided that \(0< \inf _{k}\sigma ^{2}_{V^{(k)}} \le \sup _{k}\sigma ^{2}_{V^{(k)}} < \infty \). In conclusion, we have

For the second summand in (3.34), we have

where we used that \(e^{i \psi _{V}(\eta )} = \sum _{\left| x\right| = i} \mathbb {E}[e^{-\eta V_{x}}]\), which one may check inductively. In conclusion, (3.34), (3.37) and (3.38) yield \(\mathbb {P}(A_{n}^{\textrm{c}}) \le e^{-(\alpha +o(1)) n^{1/3}}\). We proceed by controlling the second summand in (3.33) using the Cauchy–Schwarz inequality and properties of the effective conductance (Lemma 2.6):

To bound the first factor on the right-hand side, note that \(C_{n,\gamma }^{\textrm{eff}} \le {\textstyle \sum _{\left| x\right| =1}}e^{-\gamma V_{x}}\) by Lemma 2.6. By Jensen’s and Hölder’s inequalities,

where we used \(1 = e^{\psi _{V}(1)} = d\, \mathbb {E}[e^{-V}]\). The last line in (3.40) is continuous in \(\gamma \in \mathbb {R}\), hence uniformly bounded for \(\gamma \in (1/2, 1/2 + a)\). In conclusion, we have

for a constant \(C(\psi _{V}(1+2a)) > 0\) depending continuously on \(\psi _{V}(1+2a)\). In particular, this yields a uniform bound over a family of critical increments \(V^{(k)}\) with \(0< \inf _{k}\sigma ^{2}_{V^{(k)}} \le \sup _{k}\sigma ^{2}_{V^{(k)}} < \infty \) and \(\sup _{k}\psi _{V^{(k)}}(1+2a) < \infty \).
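Two elementary identities were used above in bounding \(\mathbb {P}(A_{n}^{\textrm{c}})\). First, the choice \(\alpha = \frac{1}{2}(\pi ^{2}\sigma _{V}^{2})^{1/3}\) means \(\alpha ^{3} = \pi ^{2}\sigma _{V}^{2}/8\), so that
\[ \Big (\frac{\pi \sigma _{V}}{2\alpha }\Big )^{2} = \frac{\pi ^{2}\sigma _{V}^{2}}{4\alpha ^{2}} = \frac{8\alpha ^{3}}{4\alpha ^{2}} = 2\alpha . \]
Since the left-hand side is decreasing and the right-hand side increasing in \(\alpha > 0\), this \(\alpha \) is the unique positive solution. Second, the identity \(e^{i\psi _{V}(\eta )} = \sum _{\left| x\right| = i}\mathbb {E}[e^{-\eta V_{x}}]\) follows by induction on i. We spell this out under three assumptions, chosen to be consistent with \(1 = e^{\psi _{V}(1)} = d\,\mathbb {E}[e^{-V}]\): that \(\psi _{V}(\eta ) = \log (d\,\mathbb {E}[e^{-\eta V}])\), that \(V_{0} = 0\) at the origin, and that each vertex has d children whose increments are i.i.d. copies of V, independent of everything above them. Then
\[ \begin{aligned} \sum _{\left| x\right| = i+1}\mathbb {E}[e^{-\eta V_{x}}] &= \sum _{\left| y\right| = i}\ \sum _{x \text { child of } y}\mathbb {E}[e^{-\eta V_{y}}]\,\mathbb {E}[e^{-\eta (V_{x} - V_{y})}] \\ &= d\,\mathbb {E}[e^{-\eta V}]\sum _{\left| y\right| = i}\mathbb {E}[e^{-\eta V_{y}}] = e^{\psi _{V}(\eta )}\sum _{\left| y\right| = i}\mathbb {E}[e^{-\eta V_{y}}], \end{aligned} \]
and the base case \(i = 0\) reads \(\mathbb {E}[e^{-\eta V_{0}}] = 1 = e^{0\cdot \psi _{V}(\eta )}\).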

Bounding the first summand. For a vertex \(x \in \partial \Lambda _{n}\) we write \((x_{k})_{k=0,\ldots ,n}\) for its sequence of predecessors (\(x_{0} = 0, x_{n} = x\)). For a walk \(X = (X_{i})_{i\ge 0}\), analogously to our stopping lines, we introduce the stopping time at level \(\alpha n^{1/3}\):

Note that on the event \(A_{n}\), we know for every \(x\in \partial \Lambda _{n}\) that the sequence \((V_{x_{i}})_{i=0,\ldots ,n}\) crosses level \(\alpha n^{1/3}\). In other words, \(T^{(n)}_{(V_{x_{i}})} \le n\).

Consequently, the first summand in (3.33) is bounded via

The last line is amenable to the many-to-one formula (Theorem 2.15). Writing \((S_{i})_{i\ge 0}\) for the associated random walk (with \(\eta = 1\)), the last line in (3.43) is equal to

Now, since \(S_{k}\ge \alpha n^{1/3}\) when \(T^{(n)}_{S} = k\), and since \(\gamma > 1/2\) by assumption, we can bound the right-hand side and obtain

Using the definition of \((S_{i})\) in (2.21), we have

for a constant \(C(\psi _{V}(1+2a)) > 0\) that is independent of \(\gamma \in (1/2, 1/2 + a)\) and continuous with respect to \(\psi _{V}(1+2a)\). Hence,

and this bound holds uniformly with respect to \(\gamma \in (1/2, 1/2 + a)\) and over a family of critical increments \(V^{(k)}\), provided that \(\sup _{k} \psi _{V^{(k)}}(1+2a) < \infty \). In conclusion, (3.32), (3.41) and (3.47) yield

uniformly over \(\gamma \in (1/2, 1/2 + a)\) as \(n\rightarrow \infty \). As noted, this bound is also uniform over a family of critical increments \(V^{(k)}\), given the assumptions in the theorem. This concludes the proof. \(\square \)

3.3 Near-critical effective conductance (Proof of Theorem 3.1)

The upper bound in Theorem 3.1 will follow from Theorem 3.2 and a perturbative argument. For the lower bound, we will apply a modification of a result due to Gantert, Hu and Shi [59]. They give the asymptotics, as \(\delta \searrow 0\), of the probability that some trajectory of a critical branching random walk stays below the linear barrier \(\delta |i|\). We apply this result to the critical rescaling of the t-field \(\{\tau _{x}^{\beta }\}_{x\in \mathbb {T}_{d}}\) given in (3.12). Compared with Gantert, Hu and Shi’s result, we require additional uniformity in \(\beta \):

Theorem 3.8

Let \(\{\tau _{x}^{\beta }\}_{x\in \mathbb {T}_{d}}\) be as in (3.12). For any sufficiently small \(a>0\), there exists a constant \(C>0\) such that for all \(\beta \in [\beta _c,\beta _c+a]\) and all sufficiently small \(\delta > 0\):

This theorem will be proven in Appendix B, as it closely follows the arguments of Gantert, Hu and Shi, while taking some extra care to ensure the required uniformity.

Proof of Theorem 3.1

The main idea is to consider, for \(\beta = \beta _{\textrm{c}} + \epsilon \), the critical rescaling of the t-field (see Lemma 2.17, (3.11) and Lemma 3.3)

We recall the near-critical behaviour of the constants appearing in the definition of the rescaled field (Proposition 3.4):

Together with these asymptotics, applying Theorem 3.8 and Theorem 3.2 to \(\{\tau ^{\beta }_{i}\}_{i\in \mathbb {T}_{d}}\) yields the lower and upper bounds, respectively.

Lower Bound: By Theorem 3.8, there exist constants \(a, C>0\) such that for all sufficiently small \(\delta >0\):

Note that \(\tau _{\gamma _{i}} \le \delta i\) is equivalent to \(T_{\gamma _{i}} \ge \eta _{\beta }^{-1} [\psi _{\beta }(\eta _{\beta }) - \delta ] i\). Choosing \(\delta (\epsilon ) = \tfrac{1}{2} c_{\psi } \epsilon \), we have \(\eta _{\beta _{\textrm{c}}+\epsilon }^{-1} [\psi _{\beta _{\textrm{c}}+\epsilon }(\eta _{\beta _{\textrm{c}}+\epsilon }) - \delta (\epsilon )] = c_{\psi }\epsilon + O(\epsilon ^{2})\). Hence, for \(\epsilon >0\) small enough

Write \(A_{\epsilon }\) for the event in brackets. Conditionally on this event, we can bound \(C_{\infty }^{\textrm{eff}}\) from below by the conductance along the path \(\gamma \) (which is given by Kirchhoff’s rule for conductors in series):

Consequently, (3.52) and (3.53) yield

This concludes the proof of the lower bound in (3.1).
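The electrical-network facts used here and in the upper bound are easy to check numerically on a finite tree. The sketch below uses arbitrary lognormal conductances rather than a t-field environment, and the helper names `build_tree`, `effective_conductance` and `series_conductance` are hypothetical. It computes the effective conductance between the root of a finite rooted d-ary tree and its (shorted) bottom level by the series/parallel recursion. It then checks the two bounds invoked in this section. The conductance of any single root-to-leaf path, computed by Kirchhoff’s series rule, is a lower bound (Rayleigh monotonicity). The sum of the conductances of the edges at the root is an upper bound, as in Lemma 2.6.

```python
import random


def build_tree(d: int, n: int):
    """Rooted d-ary tree of depth n. Vertices are tuples (the path from the root ())."""
    children = {}
    level = [()]
    for _ in range(n):
        nxt = []
        for v in level:
            children[v] = [v + (k,) for k in range(d)]
            nxt.extend(children[v])
        level = nxt
    return children, level  # level = the vertices at distance n from the root


def effective_conductance(children, cond, v=()):
    """Conductance between v and the grounded bottom level of its subtree.

    cond[w] is the conductance of the edge from parent(w) to w. Each child edge
    is in series with the child's subtree; the branches at v act in parallel.
    """
    total = 0.0
    for w in children.get(v, []):
        if w in children:  # internal vertex: edge in series with the subtree below w
            total += 1.0 / (1.0 / cond[w] + 1.0 / effective_conductance(children, cond, w))
        else:  # w lies on the grounded bottom level
            total += cond[w]
    return total


def series_conductance(conds):
    """Kirchhoff's series rule: resistances (inverse conductances) add."""
    return 1.0 / sum(1.0 / c for c in conds)


if __name__ == "__main__":
    rng = random.Random(0)
    d, n = 3, 6
    children, leaves = build_tree(d, n)
    # Arbitrary positive conductances (NOT a t-field environment).
    cond = {w: rng.lognormvariate(0.0, 1.0) for v in children for w in children[v]}
    C = effective_conductance(children, cond)
    best_path = max(series_conductance([cond[x[:k]] for k in range(1, n + 1)]) for x in leaves)
    first_level = sum(cond[(k,)] for k in range(d))
    print(f"path lower bound {best_path:.4f} <= C_eff {C:.4f} <= first-level sum {first_level:.4f}")
```

On a tree the recursion is exact: once the bottom level is shorted, the network is a nested series/parallel combination, so no linear solve is needed.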

Upper Bound: Recalling the definition (3.49), we have for any \(i,j \in \mathbb {T}_{d,n} \subseteq \mathbb {T}_{d}\) that

Hence, if we write \(\tilde{C}^{\textrm{eff}}_{n}\) for the effective conductance between the origin and \(\partial \Lambda _{n} = \{x\in \mathbb {T}_{d}:\left| x\right| = n\}\) in the electrical network with conductances \(\{e^{-\eta ^{-1}_{\beta }(\tau ^{\beta }_{i} + \tau ^{\beta }_{j})}\}_{ij \in E}\), we have

For any \(\beta > 0\), the field \(\tau ^{\beta }_{i}\) is the BRW for the critical increment \(\tau ^{\beta } :=-\eta _{\beta } T + \psi _{\beta }(\eta _{\beta })\), where T is distributed as a t-field increment (at inverse temperature \(\beta \)). Hence, Theorem 3.2 implies

and moreover this holds uniformly as \(\beta \searrow \beta _{\textrm{c}}\). Note that by (3.50) we have \(\min (\tfrac{1}{4},\eta ^{-1}_{\beta } - 1/2) = \tfrac{1}{4}\) for \(\beta \) sufficiently close to \(\beta _{\textrm{c}}\). In the following, write \(\beta = \beta _{\textrm{c}} + \epsilon \). By (3.50) we have \(\psi _{\beta _{\textrm{c}} + \epsilon }(\eta _{\beta _{\textrm{c}} + \epsilon })/\eta _{\beta _{\textrm{c}} + \epsilon } \sim 2 c_{\psi } \epsilon \) as \(\epsilon \searrow 0\). Hence, choosing \(n = n(\epsilon ) = c' \epsilon ^{-3/2}\), we have

Consequently, for \(c' > 0\) sufficiently small, (3.56) and (3.57) together with Lemma 2.6 yield

for some constant \(C > 0\). \(\square \)
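The choice \(n(\epsilon ) = c'\epsilon ^{-3/2}\) comes from a simple scaling count, which we sketch schematically. For illustration only (the exact form is that of (3.56) and (3.57)), suppose the bound to be optimised is \(\exp (K n \gamma _{\beta } - (b + o(1)) n^{1/3})\) for some constants \(K, b > 0\). Here \(\gamma _{\beta } = \psi _{\beta }(\eta _{\beta })/\eta _{\beta } \le 3c_{\psi }\epsilon \) for small \(\epsilon \), by the asymptotics from (3.50). With \(n = c'\epsilon ^{-3/2}\) we have \(n\epsilon = c'\epsilon ^{-1/2}\) and \(n^{1/3} = c'^{1/3}\epsilon ^{-1/2}\), so
\[ K n\gamma _{\beta } - (b+o(1))\,n^{1/3} \le \big (3Kc_{\psi }\,c' - (b+o(1))\,c'^{1/3}\big )\,\epsilon ^{-1/2}. \]
Since \(c' = o(c'^{1/3})\) as \(c' \searrow 0\), the bracket is negative for small \(c'\), which produces a bound of the form \(e^{-c/\sqrt{\epsilon }}\). Taking \(n \asymp \epsilon ^{-p}\) with \(p > 3/2\) would let the linear term dominate. With \(p < 3/2\), one would only obtain decay of order \(e^{-c\epsilon ^{-p/3}}\).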

A corollary of the proof above (in particular of (3.52) and (3.53)) is the following lemma.

Lemma 3.9

In the setting of Theorem 3.1 one has, for some constants \(c, C > 0\)

as \(\epsilon \searrow 0\).

3.4 Average escape time of the VRJP as \(\beta \searrow \beta _{\textrm{c}}\) (Proof of Theorem 1.2)

Lemma 3.10

(Local Time and Effective Conductance). Let \(L^{0}_{\infty }\) denote the total time the VRJP spends at the origin. Let \(C_{\infty }^{\textrm{eff}}\) be the effective conductance between the origin and infinity in the t-field environment, and let Z be an independent exponential random variable with unit mean. Then we have

Proof

Write \(\tilde{L}^{0}_{\infty }\) for the total time the exchangeable timescale VRJP spends at the origin. By the time change formula for the local times (2.3), we have:

By Theorem 2.2, Lemma 2.4, and Lemma 2.7, \(\tilde{L}^{0}_{\infty }\) is \(\textrm{Exp}(2/C_{\infty }^{\textrm{eff}})\)-distributed. \(\square \)

Lemma 3.11

Let \(C^\textrm{eff}_{\infty }\) be as in Theorem 3.1. For any \(\alpha >0\), there exists a constant \(c = c(d,\alpha ) > 0\), such that for \(\epsilon > 0\) small enough and \(x \ge e^{c/\sqrt{\epsilon }}\)

In particular, there exists a constant \(C > 0\) such that

Proof

Recall that the t-field environment is given by edge-weights \(\{\beta _{ij}e^{T_{i} + T_{j}}\}_{ij \in E(\mathbb {T}_{d})}\), where the t-field \(T_{i}\) has independent increments along outgoing edges and is defined to equal 0 at the origin. In particular, the environment on the subtree emanating from x (which is isomorphic to \(\mathbb {T}_{d}\)) is distributed as a t-field environment on \(\mathbb {T}_{d}\) multiplied by \(e^{2T_{x}}\) (which is the same as requiring that the t-field equals \(T_{x}\) at the “origin” x). For \(n\in \mathbb {N}\) and a vertex x in generation n, write \(\omega _{n,x}\) for the effective conductance from x to infinity within this subtree. By the above, the random variables \(\{e^{-2T_{x}}\omega _{n,x}\}_{\left| x\right| = n}\) are independent and each distributed as \(C_{\infty }^{\textrm{eff}}\). Moreover, they are independent of the t-field up to generation n.
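For \(n = 1\) this decomposition can be written out explicitly; we record it as an illustration, and the notation \(C^{(x)}\) is introduced only here. Each vertex x with \(\left| x\right| = 1\) is joined to the origin by an edge of conductance \(\beta _{0x}e^{T_{0}+T_{x}} = \beta _{0x}e^{T_{x}}\). This edge is in series with the conductance \(\omega _{1,x}\) of the subtree emanating from x, and the resulting branches act in parallel. Writing \(C^{(x)} :=e^{-2T_{x}}\omega _{1,x}\), the series and parallel laws give
\[ C^{\textrm{eff}}_{\infty } = \sum _{\left| x\right| = 1}\Big (\frac{1}{\beta _{0x}e^{T_{x}}} + \frac{1}{e^{2T_{x}}C^{(x)}}\Big )^{-1}, \]
where, by the above, the \(C^{(x)}\) are independent copies of \(C^{\textrm{eff}}_{\infty }\) that are also independent of \(\{T_{x}\}_{\left| x\right| = 1}\). This is a recursive distributional equation for the law of \(C^{\textrm{eff}}_{\infty }\).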