Abstract

We show that certain singular limits of relative entropy of vacuum states in two dimensional massive free fermion theory exist and compute these limits. As an application we show that the c function computed by Casini and Huerta based on heuristic arguments arises naturally as a consequence of our results.

1 Introduction

Von Neumann entropy is a basic concept in quantum information and extends Shannon’s classical notion of information entropy to the noncommutative setting. The role of entropy in Quantum Field Theory is more recent and increasingly important; it appears in connection with several primary research topics in theoretical physics, such as area theorems, c-theorems, the quantum null energy inequality, etc. (see for instance [2, 3, 12, 13, 28] and refs. therein).

The singular limits of certain relative entropies contain rich information about the underlying QFT. See Section 4 of [15] for an example where the global index of the underlying conformal net appears. Another such example is the paper [3], in which the celebrated c-theorem in 2d QFT was derived from these singular limits (assuming they exist) by using monotonicity and covariance. The paper [3] contains physical arguments for the c-theorem, and at the beginning of Sect. 2 we give a brief discussion. We refer interested readers to [3] for more details. It is clear that these arguments use certain unproved assumptions about singular limits of relative entropy. These singular limits are described precisely in Section 2, and are the main focus of this paper. To describe our main results, let us first recall some work in the physics literature. In [5, 7] Casini–Huerta computed the c function in the case of 2d free massive fermions from singular limits of relative entropy and obtained the remarkable result that this function can be expressed in terms of functions related to solutions of Painlevé equations of type V (cf. Eq. 31). However, the starting point of [5, 7] is the replica trick, which uses formal manipulations of a density matrix that does not exist. There are identities in [4] where both sides are infinite, and in deriving an integral formula for the c-function the authors used integration by parts on these infinite functions, assuming certain vanishing properties on the boundary of the integral (cf. Remark 6.12). In fact it is not even known that the function that appears in formula (148) of [5] is integrable. This makes it very challenging to justify the formulas in [5]. See the second paragraph in the introduction of [14] for recent comments.

In this paper we take the point of view that relative entropy is fundamental in QFT, and that by investigating the properties of its singular limits we should recover the above mentioned formulas in [5]. Our main results (cf. Th. 6.8, Th. 6.11 and Cor. 6.13) give an explicit formula for the singular limits in terms of the \(\tau \) function, and as a consequence (cf. Cor. 6.14) the formula for the c-function in [5] follows. For the reader’s convenience, here is an explicit formula for singular limits of relative entropy as described in Cor. 6.13: the singular limits of relative entropy as defined in Eq. (6), where the intervals are as in Fig. 6, are given by the following formula

where

and \(\tau _0(t,\beta ) \) is as defined after Eq. (32).

To prove these results, one of our key observations is that the deep results of [22, 23] provide the right mathematical framework to investigate the properties of the singular limits; indeed our theorems are proved in this framework, and our earlier results in [26] and [29] also play a crucial role. Even though the starting point of our computation is different from that of [5], some of the ideas are similar, since the computations of Green’s functions in [5] are special cases of the general theory of [22, 23]. Here is a more detailed account of our results: (1) in Sect. 3 we show that the singular limits exist based on a result in [25]; (2) we show that a suitably modified two point “wave function” of [22, 23] gives the resolvent of an operator, and this gives a formula to compute the singular limits; (3) by using the results of [22] and [23] we reduce the computation of the trace of the kernel to a local computation around the ends of the interval, allowing us to express the singular limits in terms of the \(\tau \) function. Along the way we also find some seemingly new properties of the \(\tau \) function (cf. Remark ).

The rest of this paper is organized as follows. In Sect. 2 we define the singular limits we want to compute. In Sect. 3 we show that the singular limits defined in Sect. 2 exist and analyze their properties. In Sect. 4 we collect the results from [22] and [23] that we will use in the paper, and set up notation. In Sect. 5 we prove a resolvent formula which will enable us to compute the singular limits. In Sect. 6 we use the results from the previous sections to give an explicit formula for these singular limits. As a consequence, in the last Sect. 6.3 we derive the formula of Casini–Huerta for the c-function.

There are many interesting questions left open by this work. It will be interesting to extend our computation of singular limits to more general non-equal time slice configurations. We expect that the deep results of [23] will be useful. In the case of free bosons, since the entropy formula involves unbounded operators, our methods do not apply immediately. Finally, it seems to be a challenging question to have well controlled singular limits in space-time dimension greater than two, since the singularities are no longer of logarithmic type, and the dependence of these singularities on the boundary is more complicated. We expect that our results and ideas may be useful in addressing these questions.

We’d like to thank E. Witten for stimulating discussions.

2 The Singular Limits of Relative Entropy

In this section we describe the question that will be addressed in this paper. First we make some general comments. See [11] for more details on the algebraic formulation of QFT. Let \(O_1, O_2\) be two double cones in Minkowski space-time such that \(O_1\) is in the causal complement of \(O_2\), and the intersection of the closures of \(O_1\) and \(O_2\) is empty. Let \({{\mathcal {A}}}(O_1\cup O_2), {{\mathcal {A}}}(O_1)\) and \({{\mathcal {A}}}(O_2)\) be the algebras of observables that are associated with \(O_1\cup O_2\), \(O_1, O_2\) respectively. Denote by \(\omega _{O_1\cup O_2}, \omega _{O_1}, \omega _{ O_2}\) the restriction of the vacuum state to \({{\mathcal {A}}}(O_1\cup O_2)\), \({{\mathcal {A}}}(O_1)\) and \({{\mathcal {A}}}(O_2)\) respectively. Under the split condition the tensor state \(\omega _{O_1}\otimes \omega _{O_2}\) (graded tensor in the case of fermions, cf. Section 3.2 of [15]) is a normal state on \({{\mathcal {A}}}(O_1\cup O_2)\). Let \(F(O_1, O_2):=S(\omega _{O_1\cup O_2},\omega _{O_1}\otimes \omega _{O_2})\) where \(S(\omega _{O_1\cup O_2},\omega _{O_1}\otimes \omega _{O_2})\) is Araki’s relative entropy of the two states (cf. Section 2.1 of [15]). It is expected (cf. [19]) that \(F(O_1, O_2)\) is finite and goes to infinity when \(O_1\) and \(O_2\) get closer to each other.

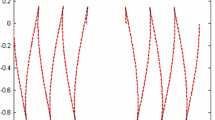

We will focus on the two dimensional case in this paper, with the double cones based on the time zero slice. In that case we can represent a double cone by its time zero slice. Let \(O_1=[b_1, b], O_2=[c,c_1], b<c\). It is expected that \(F(O_1,O_2)\) will have a \(\ln (c-b)\) divergence when \(c-b\rightarrow 0\) (cf. Remark 5.11 for precise statements in the massive free fermion case). The singular limits in this case are already interesting (cf. (2) of Th. 4.2 in [15]). As already observed in [3] (also cf. Section 4.2 of [15]), if we consider \(F(b_1,b,c;c_2,c_1):=F([b_1,c], [c_2,c_1])- F([b,c], [c_2,c_1])\) in Fig. 1, then when \(c_2\rightarrow c^+\) heuristically the logarithmic divergence will cancel out at the end c and we may have a well defined function, denoted by \(F(b_1,b,c,c_1)\). Casini and Huerta argued in [3] that such a function can be written as \(F(b_1,b,c,c_1)= G(c-b_1)+G(c_1-b)- G(c_1-b_1)-G(c-b)\) where \(G(t), t>0\) is the “renormalized” entropy. Let \(t=e^s\), and define the c function to be \(c(s)= \frac{d G(e^s)}{ds}\). Casini and Huerta argued that \(c(s)\ge 0\) and \(c'(s)\le 0\) based on covariance and monotonicity of relative entropy. In Section 4.2 of [15] a large class of such functions in the case of conformal field theories is determined; these have c function equal to a constant proportional to the central charge.
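The cancellation of the logarithmic divergence can be made concrete in a toy model. The sketch below assumes, purely for illustration and not as a statement taken from this paper, that the relative entropy of two disjoint intervals has the conformal-type form \(F([a,b],[c,d])= k\ln \frac{(c-a)(d-b)}{(c-b)(d-a)}\) with a hypothetical constant k. In this model each term diverges like \(-k\ln (c_2-c)\), the difference converges to the combination of G's written above with \(G(t)=k\ln t\), and the c function is the constant k. All function names are hypothetical.

```python
import math

K = 1.0 / 3.0  # hypothetical normalization constant, chosen only for illustration


def F_pair(a, b, c, d, k=K):
    """Toy relative entropy of [a, b] and [c, d] with b < c (assumed conformal-type form)."""
    return k * math.log((c - a) * (d - b) / ((c - b) * (d - a)))


def F_diff(b1, b, c, c2, c1, k=K):
    """F([b1, c], [c2, c1]) - F([b, c], [c2, c1]) in the toy model."""
    return F_pair(b1, c, c2, c1, k) - F_pair(b, c, c2, c1, k)


def G(t, k=K):
    """Toy 'renormalized' entropy G(t) = k ln t."""
    return k * math.log(t)


def F_limit(b1, b, c, c1, k=K):
    """G(c - b1) + G(c1 - b) - G(c1 - b1) - G(c - b)."""
    return G(c - b1, k) + G(c1 - b, k) - G(c1 - b1, k) - G(c - b, k)


def c_function(s, h=1e-5, k=K):
    """Central-difference approximation of c(s) = d G(e^s) / ds."""
    return (G(math.exp(s + h), k) - G(math.exp(s - h), k)) / (2 * h)
```

Evaluating `F_diff(0, 1, 2, 2 + eps, 4)` for decreasing `eps` shows the difference stabilizing at `F_limit(0, 1, 2, 4)` while each `F_pair` term grows without bound. The massive theory treated in this paper is of course not of this form; the toy model only illustrates the mechanism.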

Our goal in this paper is to show that \(\lim _{c_2\rightarrow c^+}F(b_1,b,c;c_2,c_1)\) exists and compute the limiting function \(F(b_1,b,c,c_1)\) in the case of massive free fermions. We refer the reader to the introduction part of the paper [8] where general free fermion theory is described in Algebraic QFT framework. We will only be interested in the case of massive free fermions in two dimensional Minkowski spacetime. In the following we will introduce the formula for relative entropy \(F(b_1,b,c;c_2,c_1)\) as discussed on Page 1475 of [29]. First we will do some preparations. Specializing the formulas in the introduction part of the paper [8] to the case of massive free fermions in two dimensional Minkowski spacetime, our problem can be formulated as follows (also cf. [6]). Let

where \(K_0,K_1\) are modified Bessel functions of the second kind.

Let

where \(I_2\) is the two by two identity matrix.

Denote by C the operator on \(L^2({\mathbb {R}},{\mathbb {C}}^2)\) given by \((Cf)(x)= \int C(y,x)f(y)dy\). Here we have chosen somewhat unconventional notation by integrating with respect to the first variable. The reason will be explained in Sect. 5.4. We write

By the paragraph after Eq. (14), C is a projection. To compare with the notation in [8], this is the projection that is denoted by P in equation (10) of [8] and that defines the Fock state which is the ground state for 2-dimensional Minkowski space-time. The reader can also find a similar formula in equation (64) of [6].

If I is a closed interval, we will denote by \(P_I\) the projection on \(L^2({\mathbb {R}},{\mathbb {C}}^2)\) which is given by multiplication by the characteristic function of I. In Fig. 1 we have used 1, 2, 3 to denote the intervals \([b_1,b], [b,c], [c_2,c_1]\) respectively which is more convenient. For example \(P_1\) is \(P_{[b_1,b]}\) and \(F(1\cup 2, 2\cup 3)\) is \(F(b_1,b,c;c_2,c_1).\)

We introduce the following:

Definition 2.1

\(\hat{C_I}:=P_I C(1-P_I)CP_I. \) When x is in the resolvent set of the operators \(T,\hat{C}_{12}, \hat{C}_{23}, \hat{C}_{2}, \hat{C}_{123}\), we define

When it is necessary to indicate the dependence of \(\hat{C}(1\cup 2, 2\cup 3,x)\) on the intervals, we will write \(\hat{C}(b_1,b,c;c_2,c_1,x)\). Let \(0\le E\le 1\) be an operator. Let \(R_E(\beta )=(E-1/2+\beta )^{-1}\). Note that \(R_E(\beta )\) is holomorphic in \(\beta \in {\mathbb {C}}-[-1/2,1/2]\) in the operator norm topology. It is now useful to recall the following formula (cf. [5]):

By Lemma 3.12 of [26] the above formula is more conveniently written as

where \(\tilde{E}= E(1-E),x=\beta ^2-\frac{1}{4}.\) This follows as a direct computation as in Lemma 3.12 of [26] that

By the first paragraph on Page 1475 of [29], the relative entropy is given by the trace of an operator constructed from the covariance operator as in definition (2). Following the definitions in a straightforward way we have the following formula

The main question in this paper is to determine \(\lim _{c_2\rightarrow c^+} F(1\cup 2, 2\cup 3)\) and study its properties.
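The passage from \(R_E(\beta )\) to the variables \(\tilde{E}= E(1-E)\), \(x=\beta ^2-\frac{1}{4}\) used above rests on an elementary resolvent identity. The following derivation is only a sketch of that algebraic step; the particular combination \(R_E(\beta )-R_E(-\beta )\) is chosen here for illustration and is not claimed to be the displayed formula of the text. Write \(A=E-\frac{1}{2}\), so that \(-\frac{1}{2}\le A\le \frac{1}{2}\), and take \(\beta \in {\mathbb {C}}-[-1/2,1/2]\).

```latex
\begin{align*}
(A+\beta)(A-\beta) &= A^2-\beta^2 = E^2-E+\tfrac14-\beta^2 = -\bigl(\tilde{E}+x\bigr),\\
R_E(\beta)-R_E(-\beta) &= (A+\beta)^{-1}-(A-\beta)^{-1}
 = \bigl[(A-\beta)-(A+\beta)\bigr]\,(A^2-\beta^2)^{-1}
 = 2\beta\,\bigl(\tilde{E}+x\bigr)^{-1}.
\end{align*}
```

All operators here are functions of E and therefore commute. Since \(0\le \tilde{E}\le \frac{1}{4}\), the inverse \((\tilde{E}+x)^{-1}\) exists exactly when \(x\notin [-1/4,0]\), which is the same condition as \(\beta \notin [-1/2,1/2]\).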

In Sect. 6 we will frequently encounter functions or operators H(a, b, w), where a, b are the end points of an interval [a, b] and w denotes the other variables H may depend on. We make the following:

Definition 2.2

For the intervals as in Fig. 1, \(H(12,23,w):= H(12,w)+H(23,w)- H(2,w)-H(123,w).\)

When it is necessary to specify the dependence of H(12, 23, w) on the end points of intervals, we will write H(12, 23, w) as \(H(b_1,b,c;c_2,c_1,w)\), and when \(c_2=c\), \(H(b_1,b,c,c_1,w)\).

3 Existence of Singular Limits in the Massive Free Fermion Case

3.1 Schatten-von Neumann ideals

This paper relies on the results for general quasi-normed ideals of compact operators. Here we limit our attention to the case of Schatten-von Neumann operator ideals \(S_q, q>0\). Detailed information on these ideals can be found e.g. in [20] and [24]. We shall point out only some basic facts. For a compact operator A on a separable Hilbert space H, denote by \(s_n(A), n =1, 2,...\) its singular values, that is, the eigenvalues of the operator \(|A|:=\sqrt{A^*A}\). Note that if \(R_1, R_2\) are bounded operators, then (cf. [24])

where ||A|| denotes the norm of an operator A. We denote the identity operator on H by 1. The Schatten-von Neumann ideal \(S_q, q>0\) consists of all compact operators A, for which \(|A|_{S_q}:= (\sum _{k=1}^\infty s_k(A)^q)^{\frac{1}{q}} < \infty \). Note that \(|A|_{S_q}=|A^*|_{S_q}\). When \(q=\infty \), \(|A|_{S_\infty }\) is the operator norm of A.
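The definition of \(|A|_{S_q}\) can be tried out on small matrices. The sketch below computes singular values of real \(2\times 2\) matrices in closed form and evaluates the functional for \(q=1/2\). It illustrates that for \(q<1\) the ordinary triangle inequality can fail, while the q-th powers remain subadditive on this example; the latter is the standard form of the q-triangle inequality in the literature, and we assume, without having the displayed formula at hand, that it is the one meant in the text. The helper names are hypothetical.

```python
import math


def singular_values_2x2(a):
    """Singular values (s1 >= s2 >= 0) of a real 2x2 matrix given as nested lists."""
    (a11, a12), (a21, a22) = a
    frob2 = a11 * a11 + a12 * a12 + a21 * a21 + a22 * a22  # equals s1^2 + s2^2
    det = abs(a11 * a22 - a12 * a21)                       # equals s1 * s2
    disc = math.sqrt(max(frob2 * frob2 - 4.0 * det * det, 0.0))
    return (math.sqrt((frob2 + disc) / 2.0),
            math.sqrt(max((frob2 - disc) / 2.0, 0.0)))


def schatten(a, q):
    """|A|_{S_q} = (sum_k s_k(A)^q)^(1/q) for q > 0."""
    return sum(sv ** q for sv in singular_values_2x2(a)) ** (1.0 / q)


def add(a, b):
    """Entrywise sum of two 2x2 matrices."""
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]


A = [[1.0, 0.0], [0.0, 0.0]]
B = [[0.0, 0.0], [0.0, 1.0]]
q = 0.5
lhs = schatten(add(A, B), q)           # |A + B|_{S_q}
rhs = schatten(A, q) + schatten(B, q)  # |A|_{S_q} + |B|_{S_q}
```

Here `lhs` exceeds `rhs`, so \(|\cdot |_{S_{1/2}}\) is not a norm, whereas `lhs ** q` does not exceed `schatten(A, q) ** q + schatten(B, q) ** q`.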

If \(q\ge 1\), then the above functional defines a norm; if \(0<q<1\), then it is a so-called quasi-norm. There is nevertheless a convenient analogue of the triangle inequality, which is called the q-triangle inequality:

We also have the Hölder inequality:

See [17] and also [2]. In what follows we focus on the case \(q\in (0, 1]\). We will use ||A|| to denote the norm of an operator, and \(||A||_1\) the trace of |A|. By definition

3.2 A result from [25]

Let \(p(x,y,\xi )\) be a smooth function on \({\mathbb {R}}^3\). Define a class of operators on \(L^2({\mathbb {R}}^1)\) with symbol \(p(x,y,\xi )\) as follows

where \(u\in L^2({\mathbb {R}}^1)\).

Let \( r>0\). Denote by \(L_{\textrm{loc}}^r ({\mathbb {R}}^1)\) the set of measurable functions h such that \(|h|^r\) is integrable on bounded measurable sets. Let \(0<\delta \le \infty \), and let \(C_n\) be the unit interval centered at n. The lattice square norm is defined by

Let \(l^\delta (L^r)({\mathbb {R}}^1):=\{h\in L_{\textrm{loc}}^r ({\mathbb {R}}^1): |h|_{r,\delta }< \infty \}.\)

Proposition 3.1

Let \(h_1, h_2\in l^\infty (L^2)({\mathbb {R}})\) be two functions such that the distance between the supports of \(h_1\) and \(h_2\) is greater than or equal to \(\epsilon _0>0\). Let \(q\in (0,1]\) be a number, and let \(n=[q^{-1}]+1\), where \([q^{-1}]\) is the largest integer less than or equal to \(q^{-1}\). Suppose that \(a=a(\xi )\) satisfies \(\partial ^m a\in l^q(L^1)({\mathbb {R}}^1)\), for some \(m\ge n\). Note that a depends only on one variable. We will also use \(h_1,h_2\) to denote the corresponding multiplication operators. Then

where \(C(q,m,\epsilon _0)\) is a constant which only depends on \(q,m,\epsilon _0\).

Proof

When \(\epsilon _0\ge 1\) and when \(h_1(x)=0 \) for \(x\ge -\epsilon _0/2,\) and \(h_2(x)=0 \) for \(x\le \epsilon _0/2\), this is Th. 2.7 of [25]. For general \(\epsilon _0>0\), let \(U_{\epsilon _0}\) be the unitary operator on \(L^2({\mathbb {R}})\) defined by \( (U_{\epsilon _0}u)(x)= \epsilon _0^{1/2} u(\epsilon _0x)\). We have \(U_{\epsilon _0}h_i(x)U_{\epsilon _0}^{-1}= h_i(\epsilon _0x)\), and by formula 3.2 of [25] we have \(U_{\epsilon _0}Op(a(\xi )) U_{\epsilon _0}^{-1}= Op(a(\frac{1}{\epsilon _0}\xi )).\) Note that the distance between the supports of \(h_1(\epsilon _0x)\) and \(h_2(\epsilon _0x)\) is now greater than or equal to 1, and \(Op(a(\xi )), |\cdot |_{2,\infty } \) are translation invariant. The proposition now follows from the fact that \(|\cdot |_{S_q}\) is unitarily invariant and Th. 2.7 of [25]. \(\square \)

Suppose that \(a_0(\xi )= \frac{1}{\sqrt{\xi ^2+m^2}}, a_1(\xi )= \frac{\xi }{\sqrt{\xi ^2+m^2}}, m>0\).

Lemma 3.2

Let \(q\in (0,1],\epsilon _0>0\). (1) If \(q(k+1)>1\), then

where \(C_0(\epsilon _0,k,q)\) is a constant which depends only on \(\epsilon _0,k,q;\)

(2) If \(q(k+2)>1,k\ge 1,\) then

where \(C_1(\epsilon _0,k,q)\) is a constant which depends only on \(\epsilon _0,k,q\).

Proof

Ad (1): First note \(a_0(\xi )\) has no singularity for finite \(\xi \). When \(\xi > m\), we have the following convergent series expansion for \(a_0(\xi )\)

It follows that when \(\xi \) is sufficiently large we have \(\partial ^ka_0(\frac{1}{\epsilon _0}\xi ) = O(\frac{1}{\xi ^{k+1}})\). The same bound holds when \(-\xi \) is sufficiently large. When \(q(k+1)>1\), the series \(\sum _{|n|>0}\frac{1}{|n|^{q(k+1)}}\) converges, and (1) is proved. (2) is proved similarly. \(\square \)
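The decay used in this proof can be checked numerically. The sketch below assumes that the convergent expansion referred to above is the standard binomial series \(a_0(\xi )=\sum _{j\ge 0}\binom{-1/2}{j} m^{2j}\xi ^{-2j-1}\) for \(\xi >m\) (the displayed formula is not available here, so this identification is an assumption). It compares a partial sum with \(a_0\) and checks the case \(k=1\) of the bound \(\partial ^k a_0=O(\xi ^{-k-1})\) using the closed form of the first derivative. Function names are hypothetical.

```python
import math


def a0(xi, m):
    """a_0(xi) = 1 / sqrt(xi^2 + m^2)."""
    return 1.0 / math.sqrt(xi * xi + m * m)


def a0_series(xi, m, terms=40):
    """Partial sum of the binomial expansion of a_0 in powers of 1/xi (assumes xi > m > 0)."""
    total = 0.0
    coeff = 1.0  # generalized binomial coefficient binom(-1/2, j), starting at j = 0
    for j in range(terms):
        total += coeff * m ** (2 * j) / xi ** (2 * j + 1)
        coeff *= (-0.5 - j) / (j + 1)  # binom(-1/2, j + 1) from binom(-1/2, j)
    return total


def da0(xi, m):
    """First derivative of a_0 with respect to xi, in closed form."""
    return -xi / (xi * xi + m * m) ** 1.5
```

Each term of the series is a multiple of \(\xi ^{-2j-1}\), so differentiating k times term by term lowers the leading power to \(\xi ^{-k-1}\), which is the decay rate entering the summability condition \(q(k+1)>1\).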

Corollary 3.3

Assume that \(h_i=\chi _{E_i}, i=1,2\) are characteristic functions of measurable sets \(E_i\), and the distance between \(E_1,E_2\) is greater than or equal to \(\epsilon _0>0\). Let \(a_0(\xi ), a_1(\xi )\) be as in Lemma 3.2. Then for any \(q\in (0,1], i=1,2 \) we have

where \( C(\epsilon _0, q)\) is a constant which only depends on \(\epsilon _0, q\).

Proof

For any \(q\in (0,1]\), let \(n=[q^{-1}]+1\). Choose \(k=n\). Then \((k+2)q> (k+1)q> 1\). Since \(h_i, i=1,2\) are characteristic functions, we have \(\sup _{u\in {\mathbb {R}}} (\int _{C_u} |h_i(\epsilon _0x)|^2 dx)^{1/2} \le \epsilon _0,\) and the corollary follows from Prop. 3.1 and Lemma 3.2. \(\square \)

where \(\tilde{v}_l\) are defined as in Eq. (19).

where \(I_2\) is the two by two identity matrix. Note that the Fourier transform of \(\tilde{v}_0(x)\) is \(\frac{\pi }{2} a_0(\xi )\), and the Fourier transform of \(\tilde{v}_1(x)\) is \(-i \frac{\pi }{2}a_1(\xi )\) (cf. Section 6.5 of [9]), with \(a_0, a_1\) as in Lemma 3.2. In momentum space, that is, after Fourier transform (note that the Fourier transform maps convolutions into products), the operator \(D_m\) is simply multiplication by

Using \(a_1(\xi )= \frac{\xi }{\sqrt{\xi ^2+m^2}}, a_1^2+ m^2 a_0^2=1\) we see that \(D_m\) is self adjoint and \(D_m^2=1/4\).
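The algebra behind \(D_m^2=1/4\) can be checked pointwise in \(\xi \). The sketch below uses a hypothetical symbol matrix \(\frac{1}{2}\begin{pmatrix} a_1 &{} m a_0\\ m a_0 &{} -a_1\end{pmatrix}\), chosen only because it is self adjoint and built from \(a_0, a_1\); the matrix actually used in the paper may differ by phases or ordering. It verifies that the square is \(\frac{1}{4}I_2\) by virtue of \(a_1^2+m^2a_0^2=1\), and that \(\frac{1}{2}+D\) is then idempotent, which is the mechanism that makes an operator of this form a projection.

```python
import math


def symbol_matrix(xi, m):
    """Hypothetical symbol (1/2) [[a1, m*a0], [m*a0, -a1]]; the paper's matrix may differ."""
    root = math.sqrt(xi * xi + m * m)
    a0, a1 = 1.0 / root, xi / root
    return [[0.5 * a1, 0.5 * m * a0], [0.5 * m * a0, -0.5 * a1]]


def matmul(x, y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def projection_symbol(xi, m):
    """1/2 + D, which is idempotent whenever D is self adjoint with D^2 = 1/4."""
    d = symbol_matrix(xi, m)
    return [[0.5 + d[0][0], d[0][1]], [d[1][0], 0.5 + d[1][1]]]
```

In general, if \(D=D^*\) and \(D^2=\frac{1}{4}\), then \((\frac{1}{2}+D)^2=\frac{1}{4}+D+D^2=\frac{1}{2}+D\), so no property of the specific matrix beyond these two is needed.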

Denote by C the operator on \(L^2({\mathbb {R}},{\mathbb {C}}^2)\) whose kernel is given by C(x, y) in Eq. (2). We write

Using the fact that \(D_m\) is self adjoint and \(D_m^2=1/4\) we see that C is a projection. Let \(h_1,h_2\) be characteristic functions of two measurable sets whose distance is greater than or equal to \(\epsilon _0\). By inequality (8) we have

Since up to constants each \( C_{ij}\) is \(\textrm{Op}(a_0)\) or \(\textrm{Op}(a_1)\), by Cor. 3.3 we have proved the following:

Corollary 3.4

Let \(E_i, i=1,2\) be two measurable subsets of \({\mathbb {R}}\) whose distance is greater than or equal to \(\epsilon _0\), and denote by \(P_{E_i}\) the projections on \(L^2({\mathbb {R}},{\mathbb {C}}^2)\) which are given by multiplication by the characteristic functions of \(E_i\). Then for any \(q\in (0,1]\), we have

where \(D(q,\epsilon _0)\) is a constant which only depends on \(q,\epsilon _0\).

Definition 3.5

If z is a complex number, \(z_*\) will denote the distance between z and the closed interval \([-1/4,0]\).

Note that if \(x>0\), then \(x_*=x\).
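Definition 3.5 is easy to evaluate explicitly. The following minimal sketch (the function name `z_star` is hypothetical) computes \(z_*\) by projecting the real part of z onto \([-1/4,0]\). It also illustrates how \(z_*\) enters the resolvent bounds of this section: for a number \(0\le t\le 1/4\) one has \(|z+t|\ge z_*\), because \(-t\) lies in \([-1/4,0]\).

```python
def z_star(z):
    """Distance from the complex number z to the closed interval [-1/4, 0] of the real axis."""
    z = complex(z)
    nearest = min(max(z.real, -0.25), 0.0)  # point of the interval closest to z
    return abs(z - nearest)
```

By the spectral theorem the scalar inequality \(|z+t|\ge z_*\) for \(t\in [0,1/4]\) gives \(||\frac{1}{z+T}||\le \frac{1}{z_*}\) for any operator \(0\le T\le 1/4\) when \(z_*>0\).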

Lemma 3.6

Suppose \(Q_i, i=1,2,3\) are three projections with \(Q_1Q_3=0\). Then \(0\le Q_1Q_2Q_3Q_2Q_1\le 1/4\).

Proof

It is clear that \(0\le Q_1Q_2Q_3Q_2Q_1\). Since \(Q_1Q_3=0, Q_3\le 1-Q_1\). We have \(Q_1Q_2Q_3Q_2Q_1\le Q_1Q_2(1-Q_1)Q_2Q_1\). Note that \(Q_1Q_2(1-Q_1)Q_2Q_1= Q_1Q_2Q_1 (1- Q_1Q_2Q_1)\), and \(0\le Q_1Q_2Q_1\le 1\). If \(0\le x\le 1\), then \(x(1-x)\le 1/4\). It follows that \(Q_1Q_2Q_1 (1- Q_1Q_2Q_1)\le 1/4\) and the Lemma is proved. \(\square \)
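For convenience, the two steps of the proof that are stated without computation can be written out as follows; this only expands the argument above and uses nothing beyond \(Q_i^2=Q_i=Q_i^*\) and \(Q_1Q_3=0\).

```latex
\begin{align*}
Q_1Q_3=0 \;&\Longrightarrow\; Q_3(1-Q_1)=Q_3 \;\Longrightarrow\; Q_3\le 1-Q_1,\\
Q_1Q_2(1-Q_1)Q_2Q_1 &= Q_1Q_2Q_2Q_1-Q_1Q_2Q_1Q_2Q_1\\
 &= Q_1Q_2Q_1-(Q_1Q_2Q_1)(Q_1Q_2Q_1)
  = X(1-X),\qquad X:=Q_1Q_2Q_1 .
\end{align*}
```

Since \(0\le X\le 1\), the spectral theorem and the scalar inequality \(x(1-x)\le 1/4\) on \([0,1]\) give \(X(1-X)\le 1/4\).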

Lemma 3.7

Suppose that \(0\le T_i\le 1/4, i=1,2,\) and z is a complex number with \(z_*>0\). Then (1)

(2)

where \(0<\sigma < 1\).

Proof

(1) follows from definition. Note that \(||\frac{1}{z+T_i}||\le \frac{1}{z_*}, i=1,2\). Let \(R_i(z)=\frac{1}{z+T_i}, V=z(T_1-T_2), W=R_1(z)VR_2(z)\) in the notation of Lemma 3.11 of [26]. Then \(||R_i(z)||\le \frac{1}{z_*}, i=1,2, \) and

By Lemma 3.11 of [26] we have proved the Lemma. \(\square \)

3.3 Split of intervals

The intervals are as in Fig. 1, where \(1:=[b_1,b], 2:=[b,c], 3:=[c_2,c_1], c\le c_2\). We assume that the lengths of intervals 1, 2 are bounded from below by \(\epsilon _0\). Note that we allow \(b_1\) (resp. \(c_1\)) to be \(-\infty \) (resp. \(\infty \)). Fix \(1/2< \sigma <1\). Given two operators \(T_1, T_2\), we write \(T_1\thicksim T_2\) if \(|T_1-T_2|_{S_\sigma } \le C(\epsilon _0)\) for some constant \(C(\epsilon _0)\) which only depends on \(\epsilon _0\). Note that inequality (8) implies that if \(T_1\thicksim T_2, T_2\thicksim T_3\), then \(T_1\thicksim T_3\), and similarly if \(T_1 \thicksim T_2, T_3\thicksim T_4\), then \(c_1 T_1 + c_2 T_3 \thicksim c_1 T_2 + c_2 T_4\) for any bounded operators \(c_1, c_2\) by Eq. (9). Also note that \(T_1\thicksim T_2\) iff \(T_1^*\thicksim T_2^*\). For two subsets E, F of the real line we use d(E, F) to denote the distance between them.

Proposition 3.8

Proof

We have \(1-P_{123}= P_0+P_4\) as in Fig. 2. Split interval 4 as the union of two intervals \(4_1, 4_2\) such that \(d(4_1,23)\ge \epsilon _0, d(4_2,1)\ge \epsilon _0\) (we define the distance of an empty set to an interval to be \(\infty \)). Note that when either \(b_1=-\infty \) or \(c_1=\infty \), \(4_1=\varnothing \) or \(4_2=\varnothing \). By Cor. 3.4 we have

and the proposition is proved. \(\square \)

Proposition 3.9

Let intervals be as in Fig. 3. Then

Proof

By our choice in Fig. 3, \(d(5,04)\ge \epsilon _0/2, d(6,1)\ge \epsilon _0/2\). Applying Cor. 3.4 we have

It follows that

Similarly

and

\(\square \)

Theorem 3.10

where \(\hat{C}(1\cup 2, 2\cup 3, z)\) is defined in Definition 2.1, \(C(\epsilon _0)\) is a constant which only depends on \(\epsilon _0\), and \(z_*>0\) is defined as in Definition 3.5.

Proof

The theorem is proved by a series of reductions, and in each step we will throw away terms T which satisfy \(||T||_1\le C(\epsilon _0) \frac{|z|}{z_*^{1+\sigma }}\) until we get to 0.

First, by Prop. 3.8, \(\hat{C}_{123}\thicksim P_1 C(1-P_{123}) CP_1 + P_{23} C(1-P_{123}) CP_{23}\).

By Lemmas 3.6 and 3.7, after throwing away a term T which satisfies \(||T||_1\le C(\epsilon _0) \frac{|z|}{z_*^{1+\sigma }}\), we can replace \(g_0(\hat{C}_{123},z) \) by \(g_0(P_1 C(1-P_{123}) CP_1+P_{23} C(1-P_{123}) CP_{23},z).\)

Since \(P_1 P_{23}=0\), we have

Since \(P_1 C P_3 \thicksim 0\), we can replace \(g_0(P_1 C(1-P_{123}) CP_1,z)\) by \(g_0(P_1 C(1-P_{12}) CP_{1},z)\). By Prop. 3.9, Lemmas 3.6 and 3.7 we can replace \(g_0( P_{23} C(1-P_{123}) CP_{23},z)\) by \(g_0(P_6 C(P_0+P_4) CP_{6},z)\). Similarly by Prop. 3.9, Lemmas 3.6 and 3.7 we can replace \(\hat{C}_{23}\) by \(P_5CP_1CP_5 +P_6 C(P_0+P_4) CP_{6}\). Hence we can replace

by

Note that this term is independent of interval 3. Hence by repeating the above reductions for

while taking 3 to be an empty set, and recalling the definition of \(\hat{C}(1\cup 2, 2\cup 3, z)\) in Definition 2.1, we have proved the theorem. \(\square \)

Definition 3.11

When \(b_1=-\infty , c_2=c\) in Fig. 2, dropping \(-\infty \) we will write the corresponding \(\hat{C}(12,23,x)\) in definition (2.1) simply as \(\hat{C}(b,c,c_1, x)\). Similarly for \(c_1=\infty \). When \(b_1=-\infty , c_1=\infty , c_2=c\) in Fig. 2, we will write the corresponding \(\hat{C}(12,23,x)\) in definition (2.1) simply as \(\hat{C}(b,c,x)\). Similarly for any H(12, 23, w) as in definition 2.2 we will write H(12, 23, w) as H(b, c, w) when \(b_1=-\infty , c_1=\infty , c_2=c\) in Fig. 2.

Lemma 3.12

(1) Assume that \(f(y)= f_1(y) A f_2(y)\) where A is a fixed trace class operator and \(f_i(y)\rightarrow f_i(0)\) strongly as \(y\rightarrow 0\). Then \(\lim _{y\rightarrow 0} {\hbox {tr}}(f_1(y) A f_2(y))={\hbox {tr}}(f_1(0) A f_2(0))\); (2) Assume that \(f(z)= f_1(z) A f_2(z)\) where A is a trace class operator and \(f_i(z)\) is holomorphic in z in the strong operator topology. Then \( {\hbox {tr}}(f_1(z) A f_2(z))\) is holomorphic in z.

Proof

Ad (1), since A is trace class, \({\hbox {tr}}(f_1(y) A f_2(y))={\hbox {tr}}(f_2(y) f_1(y) A )\) and (1) follows by Lemma 3.10 of [15]. (2) follows from (1) and definitions. \(\square \)

Proposition 3.13

Assume that z is a complex number with \(z_*>0\). Then

Proof

All equations are proved in the same way. Let us prove the first equation. It is enough to examine the “thrown away terms” in the proof of Th. 3.10. By Lemma 3.6, all such “thrown away terms” are as in (1) of Lemma 3.7. A typical such term is of the form \(g_1(y) f_2(y)g_3(y)\), where \(f_2(y) = P_i CP_j \), \(i\ne j\) denote intervals with \(d(i,j)\ge \epsilon _0/2\), and either \(P_i\) or \(P_j\) depends on \(y=c_2-c\); this projection, denoted by P(y), decreases or increases to a projection P strongly as \(y\rightarrow 0^+\). For definiteness assume that \(P_j=P(y)\). We can replace P(y) by \(P(y)P(\eta )\) when \(y<\eta \) and P(y) is decreasing, or P(y) by P(y)P when P(y) is increasing. Apply Lemma 3.12 to \(g_1(y) P_i CP (\eta )P(y) g_3(y)\) with \(A= P_i CP (\eta )\), or to \(g_1(y) P_i CP P(y) g_3(y)\) with \(A= P_i CP \). Noting that in both cases A is trace class by Cor. 3.4, we conclude that when \(y\rightarrow 0\), the thrown away terms in \( \hat{C}(b_1,b,c;c_2,c_1,z)\) converge to the corresponding thrown away terms in \( \hat{C}(b_1,b,c,c_1,z)\) in trace, and similarly for the rest of the equations. \(\square \)

Theorem 3.14

where \(F(b_1,b,c; c_2,c_1), F(b_1,b,c,c_1) \) are defined as in Eq. (6).

Proof

By Th. 3.10, since \(\beta ^2- 1/4 {>}0\), \(||\hat{C}(b_1,b,c;c_2,c_1, \beta ^2- 1/4)||_1 {\le } C(\epsilon _0) \frac{1}{(\beta ^2- 1/4)^\sigma }\). Recall that \(1/2<\sigma <1\). Since the function \( \frac{2\beta }{\beta +1/2} \frac{1}{(\beta ^2- 1/4)^\sigma }\) is integrable on \((1/2,\infty )\), the theorem now follows from Dominated Convergence Theorem and Prop. 3.13. \(\square \)
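The integrability claim in this proof uses both constraints on \(\sigma \). The following estimate is a short sketch of why: the bound \(\sigma <1\) controls the endpoint \(\beta =1/2\), and the bound \(\sigma >1/2\) controls the behavior at infinity.

```latex
\begin{align*}
\frac12<\beta\le 1:&\quad
\frac{2\beta}{\beta+\frac12}\,\frac{1}{(\beta^2-\frac14)^{\sigma}}
 \le \frac{2}{(\beta-\frac12)^{\sigma}(\beta+\frac12)^{\sigma}}
 \le \frac{2}{(\beta-\frac12)^{\sigma}},
 &\int_{1/2}^{1}\frac{d\beta}{(\beta-\frac12)^{\sigma}} &= \frac{(1/2)^{1-\sigma}}{1-\sigma},\\
\beta\ge 1:&\quad
\beta^2-\tfrac14\ge \tfrac34\beta^2
 \;\Longrightarrow\;
\frac{2\beta}{\beta+\frac12}\,\frac{1}{(\beta^2-\frac14)^{\sigma}}
 \le 2\left(\tfrac43\right)^{\sigma}\beta^{-2\sigma},
 &\int_{1}^{\infty}\beta^{-2\sigma}\,d\beta &= \frac{1}{2\sigma-1}.
\end{align*}
```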

Proposition 3.15

\({\hbox {tr}}(\hat{C}(b,c,\beta ^2- 1/4))\) is holomorphic in \(\beta \in {\mathbb {C}}-[-1/2,1/2]\).

Proof

As in the proof of Prop. 3.13, it is enough to show that the trace of “thrown away term" in the proof of Th. 3.10 is holomorphic in \(z=\beta ^2- 1/4\). Since \(\beta \in {\mathbb {C}}-[-1/2,1/2]\), \(z\in {\mathbb {C}}-[-1/4,0]\). By Lemma 3.6, all such terms are as in (1) of Lemma 3.7. A typical such term is \(f_1(z) A f_2(z)\) where A is a fixed trace class operator, and \(f_1(z), f_2(z)\) are holomorphic in z with respect to the norm topology since \(z\in {\mathbb {C}}-[-1/4,0].\) By Lemma 3.12 the proposition is proved. \(\square \)

Note that by Th. 3.10 and Th. 3.14 the singular limit F(12, 23) is well defined for the intervals 1, 2, 3 as in Fig. 1 with \(c_2=c\). For any interval I, we introduce

By using the convention as in definition (2.2), from Eq. (5) we have

By Eq. (6) we have the following formula

We will compute this function in Sect. 6.

4 Some Results from [22, 23]

In this section we will review some of the results of [22] and [23] that will be used in this paper. The reader is encouraged to consult [22] and [23] for more details. For a survey, see [21]. The functions introduced at the end of this section will play a crucial role in Sects. 5 and 6. Unfortunately there is no clear rigorous short cut to explain why these functions may be useful for our purpose. See [18] for a different perspective which may be helpful. Let \(z=(x^1+ ix^2)/2, {\bar{z}}=(x^1- ix^2)/2\) denote the complex coordinate of the Euclidean 2-space. We write \(\partial _z=\partial , {\bar{\partial }}= \partial _{{\bar{z}}}\). Consider the Euclidean Klein–Gordon equation

with positive mass \(m>0\). We introduce a series of multi-valued special solutions of the above equation. For \(l\in {\mathbb {C}}\) let \(I_l(x)\) and \(K_l(x)\) denote the modified Bessel functions of the first and second kind respectively. Set

where \(z=\frac{1}{2} re^{i\theta },{\bar{z}}=\frac{1}{2} re^{-i\theta }, r\ge 0, \theta \in {\mathbb {R}}.\)

These functions are multi-valued solutions (outside the origin) of Eq. (18), having the following local behavior as \(|z|\rightarrow 0\):

where \(l!= \Gamma (l+1)\), and ... are higher order terms. We also have

where \(\gamma _0\) is Euler’s constant. These functions satisfy the following recursion relations

where \(\partial :=\partial _z, {\bar{\partial }}:=\partial _{{{\bar{z}}}}.\) In this paper we will make use of two point “wave functions” as defined in section 3.2 of [22]. Let \((a_i,\bar{a_i}), i=1,2\) be two distinct points of \({\mathbb {R}}^2\). Denote by \(\tilde{X}'\) the universal covering manifold of \(X'={\mathbb {R}}^2 -\{(a_i,{\bar{a}}_i)\}_{i=1,2}\) with the covering projection \(\pi : \tilde{X}'\rightarrow X'\). We fix base points \(\tilde{x}_0 \in \tilde{X}', {x}_0 \in {X}' \) so that \(\pi (\tilde{x}_0) =x_0\), and denote by \(\pi _1(X'; x_0)\) the fundamental group of \(X'\). An element \(\gamma \in \pi _1(X'; x_0)\) is identified with the covering transformation it induces on \(\tilde{X}'\). For a function u on \(\tilde{X}'\) we set \((\gamma u)(\tilde{x})= u(\gamma ^{-1}(\tilde{x}))\).

Fix a set of generators \(\gamma _1,\gamma _2 \in \pi _1(X'; x_0)\), where \(\gamma _i\) is a closed path encircling \((a_i,\bar{a_i})\) clockwise once.

Definition 4.1

For \(l_\nu \in {\mathbb {R}} {\smallsetminus } {\mathbb {Z}}\), \(W_{a_1, a_2}^{l_1,l_2}\) will denote the space consisting of complex valued real analytic functions v on \(\tilde{X}'\) with the following properties: (1) v satisfies Eq. (18) on \(\tilde{X}'\); (2) \((\gamma _\nu v)(z,{\bar{z}}) = e^{-2\pi il_\nu } v(z,{\bar{z}})\) in a small neighborhood of \((a_\nu ,{\bar{a}}_\nu ), \nu =1,2\); (3)

when \(|z-a_\nu |<\eta \) for some \(\eta >0\), \(v_k[a_\nu ](z):=v(z-a_\nu )\) where k is \(\pm l_{\nu }+j\), \(c's\) are constants independent of z, and the sum is absolutely convergent on any compact subset of \(|z-a_\nu |<\eta \);

(4) \(|v|= O(e^{-2m |z|})\) as \(|z|\rightarrow \infty \).

Remark 4.2

When we say that a function \(f(z,{\bar{z}}, a_\mu ,{\bar{a}}_\mu )\) verifies any of the conditions listed in Definition 4.1, we simply mean that those conditions are satisfied with v replaced by f.

We note that our \(W_{a_1, a_2}^{l_1,l_2}\) is \(W_{B,a_1,a_2}^{l_1,l_2,\textrm{strict}}\) on page 589 of [22]. Denote by L the matrix

and by slightly abusing notations we denote by \(1-L\) the matrix

For each such fixed L we will make use of two canonical elements in \(W_{a_1, a_2}^{l_1,l_2}\) (cf. Page 592 of [22]) given by \(v_\mu (L)\) which satisfies

where ... are higher order terms. Here we have suppressed the dependence of \(v_\mu (L)\) on \(z,{\bar{z}},a_\mu , {\bar{a}}_\mu , \mu =1,2\), and the dependence of \(\alpha _{\mu \nu }(L), \beta _{\mu \nu }(L) \) on \(a_\mu , {\bar{a}}_\mu , \mu =1,2\) in the notation when no confusion arises. In general if \(k\in {\mathbb {Z}}\), we have

It follows that \(\alpha _{\mu \nu }(L+k)= \alpha _{\mu \nu }(L),\beta _{\mu \nu }(L+k)= \beta _{\mu \nu }(L)\).

Only when it is necessary to indicate the dependence of \(\alpha _{\mu \nu }(L), \beta _{\mu \nu }(L)\) on \(a_\nu ,{\bar{a}}_\nu \) will we write them as \(\alpha _{\mu \nu }(a_1,a_2,L), \beta _{\mu \nu }(a_1,a_2,L)\). We shall make use of the next two lemmas in Sect. 5.

Lemma 4.3

Assume that \(|l_\mu |<1/2, \mu =1,2\). Suppose that v satisfies (1) and (2) of Definition 4.1, and close to \(a_\mu \) we have \(v=O(|z-a_\mu |^{-|l_\mu |})\). Then \(v\in W^{l_1,l_2}\).

Proof

Let us first consider the case when \(1/2> l_\mu >0, \mu =1,2. \) By Prop. 3.1.3 of [22] we have the following expansion of v around \(a_\mu \):

Since \(1/2> l_\mu >0 \), the above expansion is

When \(-1/2<l_\mu <0\), since \(v=O(|z-a_\mu |^{l_\mu })\), the expansion takes the form

In both cases we see that \(v\in W^{l_1,l_2}\). \(\square \)

Lemma 4.4

Assume that \(0<|l_1|<1/2, l_2=-l_1\) and \(v\in W^{{l_1,l_2}}\). If \(\partial _{{\bar{z}}}v \in L^2[a_1,a_2]\), then \(v=0\).

Proof

By definition, around \(a_1\) the function v has the expansion

where ... are higher order terms. Note that when \(0<l_1<1/2\), \(({\bar{z}}-a_1)^{l_1-1}\) is not in \(L^2[a_1,a_2]\). Since \(\partial _{{\bar{z}}}v \in L^2[a_1,a_2]\), we must have \(c_{l_1}^*(v)=0\). Similarly near \(a_2\) v has expansion

and we have \(c_{l_2}^*(v)=0\). Hence \(v\in W^{{l_1,l_2+1}}\). Since \(c_{-l_2-1}(v)=0, c_{l_1}^*(v)=0\), by Prop. 3.2.2 of [22] we have \(v=0\). The case when \(-1/2<l_1<0\) is similar. \(\square \)
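The non-integrability used in this proof can be illustrated on a model one-dimensional integral. The sketch below is only an illustration, not part of the argument: it assumes \(a_1=0\), restricts to the real interval, and uses the closed form of \(\int _\epsilon ^1 x^{2l_1-2}dx\); the function name is hypothetical. For \(0<l_1<1/2\) the truncated integrals grow without bound as \(\epsilon \rightarrow 0\).

```python
def truncated_l2_norm_sq(l1, eps):
    """Closed form of the integral of x**(2*l1 - 2) over [eps, 1], valid for l1 != 1/2.

    This models the squared L^2 norm of |x - a_1|**(l1 - 1) near a_1 = 0 (an assumption
    made only for illustration).
    """
    p = 2.0 * l1 - 1.0  # exponent after integration; negative when l1 < 1/2
    return (1.0 - eps ** p) / p


if __name__ == "__main__":
    for l1 in (0.1, 0.25, 0.4):
        # The values increase without bound as the cutoff eps shrinks.
        values = [truncated_l2_norm_sq(l1, 10.0 ** (-k)) for k in (2, 4, 8)]
        print(l1, values)
```

The growth rate is \(\epsilon ^{2l_1-1}\), so the divergence is slower as \(l_1\) approaches 1/2 but never disappears on the range considered.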

Lemma 4.5

Let

when \(r=2|z-a_\mu |\le \eta ,0<\eta <1\). Then for \(\delta \ge 0\), if \(r\le \eta /2\) we have

where in the first inequality \(\delta = -l_\mu \textrm{mod} {\mathbb {Z}} \), and in the second inequality \(\delta = l_\mu \textrm{mod} {\mathbb {Z}}\), \(l_\mu , \mu =1,2\) are not integers, and C is a constant independent of r.

Proof

The proof is implicitly contained in the proof of Prop. 3.1.3 of [22]. We will prove the first inequality since the second one is proved in a similar way. As in the proof of Prop. 3.1.3 of [22], let \(z-a_\mu = \frac{1}{2} r e^{i\theta } \), we have

where \(c_{\delta +k}(v)\in {\mathbb {C}}\) is independent of r. Recall that \(I_\delta (x) = \sum _{0\le m} \frac{1}{m!\Gamma (m+\delta +1)} (\frac{x}{2})^{2\,m+\delta }= (\frac{x}{2})^{\delta }\sum _{0\le m} \frac{1}{m!\Gamma (m+\delta +1)} (\frac{x}{2})^{2\,m}.\)

When \(\delta \ge 0\), we note that \(\sum _{0\le m} \frac{1}{m!\Gamma (m+\delta +1)} (\frac{x}{2})^{2\,m}\) is an increasing function of \(x>0\), so we have

when \(0<x\le \eta \).
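As a numerical sanity check of the series for \(I_\delta \) recalled above, the following sketch sums the series directly and confirms that the factor remaining after removing \((x/2)^\delta \) is increasing in x, so that \(I_\delta (x)\) is bounded by \((x/2)^\delta \) times the value of that factor at \(\eta \) for \(0<x\le \eta \). The function names and the truncation at 60 terms are assumptions made for illustration only.

```python
import math


def stripped_series(delta, x, terms=60):
    """Partial sum of sum_m (x/2)**(2m) / (m! * Gamma(m + delta + 1))."""
    return sum(
        (x / 2.0) ** (2 * m) / (math.factorial(m) * math.gamma(m + delta + 1))
        for m in range(terms)
    )


def bessel_i(delta, x, terms=60):
    """Modified Bessel function I_delta(x) from its power series (delta >= 0, x > 0)."""
    return (x / 2.0) ** delta * stripped_series(delta, x, terms)


if __name__ == "__main__":
    eta = 0.9
    delta = 0.3
    for x in (0.1, 0.3, 0.6, 0.9):
        bound = (x / 2.0) ** delta * stripped_series(delta, eta)
        print(x, bessel_i(delta, x), bound)
```

For \(\delta =1/2\) the output can be compared with the classical closed form \(I_{1/2}(x)=\sqrt{2/(\pi x)}\sinh x\).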

We have

Since \(\sum _{0\le k<\infty } |c_{\delta +k}(v) v_{\delta +k}[a_\mu ](a_\mu + \frac{1}{2}\eta )| < \infty \), we conclude that

The second inequality is proved similarly. \(\square \)

Recall that L is the matrix

We use the following notation

The next proposition follows from Prop. 3.2.5 in [22]:

Proposition 4.6

(1) when \(l_1=-l_2\in {\mathbb {R}} -{\mathbb {Z}},\) we have

(2)

The entries of \(\alpha (L), \beta (L)\) satisfy a remarkable set of equations. These equations are derived by considering the deformation of the equations satisfied by \(v_\mu (L)\) in [22] and more generally by using product formula in [23]. Let us now describe these equations as on Page 935 of [23] (also cf. Page 623 of [22]). Let us first define an invertible matrix G whose inverse is given by \(\beta (L) \sin (\pi L)= -G^{-1}\). Let

Then G takes the following form

where

Then \(\beta (L) \sin (\pi L)= -G^{-1},\) and \(G^{-1}\) takes the following form

Here \(k:=k_l(t),\psi :=\psi _l(t)\) depend only on l and t, where \(k_l(t),\psi _l(t)\) are as in Eq. (29), and \(k, \psi \) satisfy the following:

The above equation for \(\psi \) can be converted to the following Painlevé equation of the fifth kind by the substitution \(s=t^2, \sigma =\tanh ^2(\psi )\):

4.1 \(\tau \) Function and related functions

Let us also recall some basic facts about \(\tau \) function. We will only consider the case when \(l_1=-l_2, l=2l_1\). This is a function which is analytic in l when |l| is small (cf. Lemma 4.11), and real analytic in \(a_{i}, {\bar{a}}_i, i=1,2\). The defining equation is (cf. Remark on Page 628 of [22] or Page 936 of [23]):

Equation (32) will play an important role in Section 6.2. Note that \(\tau _0:=\ln \tau \) depends only on l and on t as in Eq. (27); we will write such a function as \(\tau _0(t,l)\), and suppress its dependence on t, l in the following for ease of notation. Let \(\tau _1= -\tau _0.\) Then by equation (4) in [27] \(\tau _1\) satisfies the following equation:

\(\psi \) satisfies the Eq. (30) with boundary condition

as \(t\rightarrow \infty \). We note that when \(l_1\in {\mathbb {R}},4 i\sin (\pi l_1) K_{2l_1}(t) \in i{\mathbb {R}}\) and when \(l_1\in i{\mathbb {R}},4 i\sin (\pi l_1) K_{2l_1}(t) \in {\mathbb {R}}\). We have (cf. Appendix of [7]) \(\psi \in i{\mathbb {R}}\) when \(l_1\in {\mathbb {R}}\), and \(\psi \in {\mathbb {R}}\) when \(l_1\in i{\mathbb {R}}\).
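The reality properties of \(4 i\sin (\pi l_1) K_{2l_1}(t)\) stated above can be checked numerically. The sketch below is a hedged illustration only: it evaluates \(K_\nu (t)\) through the standard integral representation \(K_\nu (t)=\int _0^\infty e^{-t\cosh s}\cosh (\nu s)ds\) with a trapezoidal rule, and the function names, the cutoff `upper` and the step count are assumptions chosen for this example.

```python
import cmath
import math


def bessel_k(nu, t, upper=20.0, steps=4000):
    """Approximate K_nu(t) = int_0^inf exp(-t cosh s) cosh(nu s) ds (t > 0, nu complex)."""
    h = upper / steps
    total = 0.0 + 0.0j
    for j in range(steps + 1):
        s = j * h
        weight = 0.5 if j in (0, steps) else 1.0  # trapezoidal end-point weights
        total += weight * math.exp(-t * math.cosh(s)) * cmath.cosh(nu * s)
    return total * h


def leading_term(l1, t):
    """The quantity 4 i sin(pi l1) K_{2 l1}(t) appearing in the text."""
    return 4j * cmath.sin(math.pi * l1) * bessel_k(2 * l1, t)


if __name__ == "__main__":
    print(leading_term(0.2, 1.0))    # purely imaginary for real l1
    print(leading_term(0.2j, 1.0))   # real for purely imaginary l1
```

For \(\nu =1/2\) the routine can be compared with the closed form \(K_{1/2}(t)=\sqrt{\pi /(2t)}e^{-t}\).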

Remark 4.7

In Sect. 6 we will sometimes consider real analytic function f(z, ...) restricted to \(z\in {\mathbb {R}}\), where ... stands for possible other variables, and consider the partial derivative of f with respect to the real variable z. To avoid possible confusions with \(\partial _z\) which is defined to be the partial derivative of f with respect to the complex variable z, we will use \(\frac{df}{dz}\) to denote the partial derivative of f with respect to the real variable z.

We also have the following facts from [22]:

Proposition 4.8

Assume that \(l_1, l_2\in {\mathbb {R}}-{\mathbb {Z}}\). (1)

where \(c, \epsilon \) are as in Eq. (27);

(2) When \(l_1=-l_2\in {\mathbb {R}}\), we have \({\bar{\beta }}_{21}(L){\bar{\beta }}_{21}(1-L)= \sinh ^2\psi ;\)

(3)

(4) When \(a_1, a_2\) are real and \(t=2m(a_2-a_1)>0\), we have

Here we have used \(\frac{d \alpha _{11}}{da_i}\) to denote the partial derivative of \(\alpha _{11}\) with respect to the real variable \(a_i\) as in Remark 4.7.

Proof

(1) follows from (2) of Prop. 4.6 and Eq. (28). When \(l_1=-l_2\in {\mathbb {R}}\), by the remark after Eq. (34) we have \(\sinh \psi \in i{\mathbb {R}}\), and (2) follows from (1) and Eq. (27). (3) follows from equation 3.3.11 of [22]. For (4), note that in this case from Eq. (32) we have \(\frac{d\tau _0}{dt}= \alpha _{11}\). Recall that \(\tau _1=-\tau _0\), and by Eq. (33) we have \(\frac{d \alpha _{11}}{dt}=\sinh ^2\psi - \frac{1}{t} \alpha _{11}.\) From (3) we have \(\frac{\alpha _{11}}{t}= \frac{1}{2}(\alpha _{12}(L) \alpha _{21}(L)+\sinh ^2\psi )\). Hence we have

(4) now follows since \(t=2\,m(a_2-a_1)\) and \(\alpha _{11}\) only depends on t. \(\square \)

When \(|l|=2|l_1|\) is sufficiently small, we have the following convergent power series expansion for \(\tau _0=\ln \tau \) (cf. Page 933 of [23]):

where

and \(u_k^{\pm l}\) stands for \(u_k^{-l}\) for even k and \(u_k^{l}\) for odd k, \(\lambda = i\sin (\pi l_1)\). Let \(K(u,v):=e^{-t/4 (u+u^{-1} +v+v^{-1})} \frac{1}{u+v} \times u^{l} v^{-l}. \) Define K to be the operator on \(L^2((0,\infty ),dx)\) with kernel K(u, v). Let \(\tau _2:=\ln \textrm{det}(I-\lambda _1^2 KK')\) where \(\lambda _1= 2i \sin (\pi l_1)\), and \(K'\) is the transpose of K. When \(\lambda _1\) is sufficiently small we have

So we see that \(\tau _0= -\tau _1=-\tau _2\). For an operator T we use ||T|| and \(||T||_2\) to denote its norm and Hilbert-Schmidt norm respectively.
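The relation between the logarithm of a determinant and a trace series that underlies the expansion above is the general identity \(\ln \textrm{det}(I-A)= -\sum _{k\ge 1}\frac{1}{k}{\hbox {tr}}(A^k)\), valid when the spectral radius of A is less than 1. The following sketch checks it for a two by two matrix; the matrix A is a hypothetical stand-in for \(\lambda _1^2 KK'\) and is not derived from the kernel K(u, v).

```python
import math


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def trace(a):
    return a[0][0] + a[1][1]


def log_det_via_traces(a, terms=80):
    """Partial sum of -sum_{k>=1} tr(a**k) / k."""
    power = [[1.0, 0.0], [0.0, 1.0]]
    total = 0.0
    for k in range(1, terms + 1):
        power = matmul(power, a)
        total -= trace(power) / k
    return total


def log_det_direct(a):
    """ln det(I - a) computed from the 2x2 determinant formula."""
    return math.log((1.0 - a[0][0]) * (1.0 - a[1][1]) - a[0][1] * a[1][0])


if __name__ == "__main__":
    A = [[0.2, 0.1], [0.05, 0.3]]  # hypothetical matrix with spectral radius < 1
    print(log_det_via_traces(A), log_det_direct(A))
```

In the operator setting the same series is controlled by the norm and Hilbert-Schmidt norm estimates rather than by a finite determinant.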

Lemma 4.9

Assume that \(t\ge \eta >0, |l|<1\). Then

where \(C_i(\eta )\) are functions of \(\eta \) only and \(C_i(\eta )\rightarrow 0\) as \(\eta \rightarrow \infty , i=0,1.\)

Proof

Fix \(u>0\), and let us evaluate the maximum of \(\int _0^\infty |K(u,v)| dv\). Denote by r the real part of l. Since \(|r|<1 \) we have

where \(f_r(u):= e^{-t/4 (u+u^{-1})} u^{r}\). Note that \(\int _0^\infty e^{-t/4 (v+v^{-1})} v^{-r-1}dv \le \int _0^1 e^{-\eta /4 (v+v^{-1})} v^{-2}dv + \int _1^\infty e^{-\eta /4 (v+v^{-1})} v^{-1+|r|}dv\), and both \(\int _0^1 e^{-\eta /4 (v+v^{-1})} v^{-2}dv\) and \(\int _1^\infty e^{-\eta /4 (v+v^{-1})} v^{-1+|r|}dv \) are decreasing functions of \(\eta \) and go to 0 as \(\eta \rightarrow \infty \). Also \(f_r(u)= f_{-r}(u^{-1})\). To find the maximum of \(f_r(u), u>0\), it is sufficient to assume that \(1>r\ge 0\). Since \(u^{r} \le 1\) if \(0<u<1\) and \(u^{r+1} < u^2\) if \(1<u\), we have \(f_r(u) \le e^{-\eta /4 (u+u^{-1})} (1+u^2) \le e^{-\eta /2} + e^{-\eta /4 (u+u^{-1})} u^2.\) The critical point of the function \( f_1(u):=e^{-\eta /4 (u+u^{-1})} u^2\) is the positive solution of the equation \(f_1'(u)=0\), that is, of \(\eta u^2-8u-\eta =0\), and it is given by \(u=\frac{8/\eta + \sqrt{\frac{64 }{\eta ^2} + 4}}{2}\). One easily checks that the maximum of \(f_1(u)\) is a function of \(\eta \), and goes to 0 as \(\eta \rightarrow \infty \).
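The behaviour of the maximum of \(f_1(u)=e^{-\eta /4 (u+u^{-1})} u^2\) can be checked numerically. Differentiating \(\ln f_1\) gives \(-\frac{\eta }{4}(1-u^{-2})+\frac{2}{u}=0\), i.e. \(\eta u^2-8u-\eta =0\), whose positive root is used below. This is a minimal sketch with hypothetical function names; it only illustrates that the maximal value decreases to 0 as \(\eta \) grows.

```python
import math


def f1(u, eta):
    """f_1(u) = exp(-eta/4 * (u + 1/u)) * u**2 for u > 0."""
    return math.exp(-eta / 4.0 * (u + 1.0 / u)) * u * u


def critical_point(eta):
    """Positive root of eta*u**2 - 8*u - eta = 0, where f_1'(u) vanishes."""
    return (8.0 / eta + math.sqrt(64.0 / eta ** 2 + 4.0)) / 2.0


def max_value(eta):
    """Value of f_1 at its unique critical point on (0, infinity)."""
    return f1(critical_point(eta), eta)


if __name__ == "__main__":
    for eta in (1.0, 4.0, 16.0, 64.0):
        print(eta, critical_point(eta), max_value(eta))
```

For large \(\eta \) the critical point approaches 1 and the maximum behaves like \(e^{-\eta /2}\), consistent with the decay claimed in the proof.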

A similar estimate holds when we fix v and integrate with respect to u. By Th. 6.18 of [10] we have proved \(||K||\le C_0(\eta )\) where \(C_0(\eta ) \rightarrow 0\) as \(\eta \rightarrow \infty \). We note that \(||K||_2^2 =\int _0^\infty \int _0^\infty |K(u,v)K(v,u)| dv du\). The integrand |K(u, v)K(v, u)| is a decreasing function of t and is dominated by an integrable function independent of t for any \(t\ge \eta \). Since |K(u, v)K(v, u)| goes to 0 as \(t\rightarrow \infty \), Lebesgue’s dominated convergence theorem implies that \(\int _0^\infty \int _0^\infty |K(u,v)K(v,u)| dv du\) goes to 0 as \(t\rightarrow \infty \). The proof for \(K'\) is similar. \(\square \)

Given two series \(\sum _{n\ge 0} f_n, \sum _{n\ge 0} g_n\) we say \(\sum _{n\ge 0} f_n\) is dominated by \(\sum _{n\ge 0} g_n\) if \(|f_n|\le |g_n|\) for all \(n\ge 0\).

The following Lemma follows from Cauchy’s formula and the proof can be found in Chapter 6 of [1]:

Lemma 4.10

Suppose that \(f(z), f_n(z), \forall n\ge 0\) are holomorphic functions on an open set U.

Assume that \(f(z)=\sum _{n\ge 0} f_n(z)\) and that \(\sum _{n\ge 0} f_n(z)\) is dominated by a series \(\sum _{n\ge 0} C_n\) with \(C_n\) independent of z and \(\sum _{n\ge 0} |C_n|<\infty \). Then \(f(z)'=\sum _n f_n(z)'\) and the series converges absolutely and uniformly on any compact subset of U.

(1) of the following Lemma is a special case of a more general result Prop. 4.5.1 of [23]. We present a simple proof which also gives us part (2) and (3) of the Lemma that will be used later.

Lemma 4.11

Assume that \(l_1\) is a complex number. (1) If \(t\ge \eta >0\), then the power series in (38) is dominated by a convergent geometric series for \(|l|=2|l_1|< C_0(\eta )'\) where \(C_0(\eta )'\) only depends on \(\eta \); (2) \(\tau _0\rightarrow 0, \partial _{l_1} \tau _0\rightarrow 0\) as \(l\rightarrow 0,\) and \(\tau _0\rightarrow 0, \partial _{l_1} \tau _0\rightarrow 0\) as \(t\rightarrow \infty ;\) (3) \(\tau _0\) is a holomorphic function of l, and \(\partial _{l_1} \tau _0\) is continuous in t for \(t\ge \eta >0\) and \(|l|< C_0'(\eta ).\)

Proof

Ad (1): Note that \(-\tau _0 = \sum _{k\ge 1} \lambda ^{2k} {\hbox {tr}}((KK')^k)\).

By Lemma 4.9, \(|{\hbox {tr}}((KK')^k)|\le ||K||^{k-1} ||K'||^{k-1} ||K||_2 ||K'||_2 \le C_0(\eta )^{2k-2} C_1(\eta )^{2}.\) Since \(\lambda \rightarrow 0\) as \(l\rightarrow 0\), it follows that there is a constant \(C_0(\eta )'\) such that if \(|l|\le C_0(\eta )'\) then the series is dominated by a convergent geometric series. It follows immediately that \(\tau _0\rightarrow 0\) as \(t\rightarrow \infty \) or as \(l\rightarrow 0\), and \(\tau _0\) is a holomorphic function of \(l_1\) for \(|l|< C_0(\eta )'\).

By (1)

where by Lemma 4.10 the series on the righthand side converges absolutely and uniformly on any compact subset of \(|l_1|< C_0(\eta )'\). To prove the rest of the cases in (2) and (3), it is sufficient to check that each term \(\partial _{l_1} (\frac{ (2i \sin (\pi l_1))^{2k} }{k} e_{l}^{(2k)}(t))\) has the properties described in (2) and (3). For example, since \(\partial _{l_1}(2i \sin (\pi l_1))^{2k} \rightarrow 0\) as \(l_1\rightarrow 0\), and \( (2i \sin (\pi l_1))^{2k} \rightarrow 0\) as \(l_1\rightarrow 0\), we have \(\partial _{l_1} \tau _0\rightarrow 0\) as \(l\rightarrow 0.\) The rest of the cases are proved in a similar way. \(\square \)

Lemma 4.12

(1) Let

and assume that \(t=2\,m (a_2-a_1)\ge \eta >0\), \(l_1=k+l_0, l_2=k-l_0, k\in {\mathbb {Z}}.\) Then there is a constant \(C_2(\eta )\) which only depends on \(\eta \) such that if \(|l_0| < C_2(\eta )\) then \(v_\mu (L)\) is holomorphic in \(l_0\); (2) \(\partial _{l_i} v_\mu (L)=O(e^{-m|z|})\) when \(|z|\rightarrow \infty , i=1,2, |l_0| < C_2(\eta ).\)

Proof

(1) follows from Prop. 4.4.1 and Remark 1 on page 929 of [23]. Write \(z=1/2 r e^{i\theta }\). Recall that \(v_\mu (L)\) verifies (4) in definition 4.1. Note since \(l_1+l_2\in {\mathbb {Z}}\), when \(|z|=1/2 r\ge R\) where R is a fixed large positive number, by Prop. 3.1.5 of [22] we have the expansion \(v_\mu (L)=\sum _{k\ge 0} c_k K_k(mr)\). Here \(c_k\) is independent of r and is holomorphic in \(l_1,l_2\). As on page 587 of [22] we have

The term \(K_0(mr)\) can be estimated directly as \(K_0(mr)=O(\frac{1}{\sqrt{mr}} e^{-mr})\), and (2) is proved. \(\square \)

4.2 An analogue of Green’s function

There exists an analogue of Green’s function \(v_0(z^*,z,L)\) (Note that this is a two variable function) (cf. Page 596 of [22]) which verifies (1), (2) and (4) in definition 4.1 for variable z and: when \(z^*\ne a_\nu ,z^*\ne {\bar{a}}_\nu \), (1) near \(z^* \),

where ... is a smooth function; (2) when z is near \((a_\nu ,{\bar{a}}_\nu )\),

where

and ... are lower order terms.

\(v_0(z^*, z,L)\) (cf. Cor. 3.3.11 of [22]) is a real analytic function of \((z,{{\bar{z}}}, z^*,\bar{z^*},a_\mu , {\bar{a}}_\mu ), \mu =1,2\), and satisfies a remarkable set of equations as described on Page 626 of [22]:

For \(\nu =1,2\),

Note that even though \(v_0(z^*, z,L)\) is singular at \(z=z^*\), \(m^{-1}\partial _{a_\nu } v_0(z^*, z,L)\) is non-singular at \(z=z^*\).

5 The Resolvent

Let \(I:=[a_1, a_2] \subset {\mathbb {R}}\) be a closed interval. Throughout this section we shall assume that the following conditions are satisfied:

where \(C_0'(\eta ), C_2(\eta )\) are constants in Lemmas 4.11 and 4.12 respectively.

5.1 Condition H

We first recall some results from Chapter 2 of [17].

Definition 5.1

A function f defined on \([a_1,a_2]\) satisfies \(H(\mu ), 0<\mu \le 1\) if there is a constant \(C\ge 0\) such that

for all \(x_1,x_2\in [a_1,a_2]\). A function f is said to satisfy condition H on \((a_1, a_2)\) if for any closed interval \([a_1',a_2']\subset (a_1,a_2)\), there exists \(0<\mu '\le 1\) which may depend on \([a_1',a_2']\) such that f satisfies \(H(\mu ')\) on \([a_1',a_2']\).
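To make the condition \(H(\mu )\) concrete, the sketch below estimates on a grid the smallest constant C for which \(|f(x_2)-f(x_1)|\le C|x_2-x_1|^\mu \) holds. The test function \(f(x)=\sqrt{x}\) on [0, 1] and all names are assumptions chosen for illustration; \(\sqrt{x}\) satisfies H(1/2) with \(C=1\), while no uniform constant works for \(\mu =1\) near \(x=0\).

```python
import math


def holder_constant(f, mu, a1, a2, n=400):
    """Largest ratio |f(x2) - f(x1)| / |x2 - x1|**mu over a uniform grid on [a1, a2]."""
    xs = [a1 + (a2 - a1) * j / n for j in range(n + 1)]
    ys = [f(x) for x in xs]
    best = 0.0
    for i in range(n + 1):
        for j in range(i + 1, n + 1):
            ratio = abs(ys[j] - ys[i]) / (xs[j] - xs[i]) ** mu
            best = max(best, ratio)
    return best


if __name__ == "__main__":
    print(holder_constant(math.sqrt, 0.5, 0.0, 1.0))  # stays bounded by 1
    print(holder_constant(math.sqrt, 1.0, 0.0, 1.0))  # grows as the grid is refined
```

A grid estimate of this kind can only suggest, not prove, a Hölder bound, since the supremum is taken over finitely many pairs.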

The proof of the following formula, also known as the Sokhotski–Plemelj formula, can be found on Page 49 of [17]:

Lemma 5.2

Assume that f(x) verifies condition H on \(I=(a_1, a_2)\). Then if \(x\in (a_1,a_2)\)

We will also need the properties of singular integrals with Cauchy kernels at the ends of the interval I (cf. Page 74 of [17] for more precise estimates):

Lemma 5.3

Suppose that \(\phi (x)\), given on \(I=(a_1, a_2)\), satisfies the following conditions: (1) On any closed interval \([a_1',a_2']\subset (a_1,a_2)\), \(\phi (x)\) satisfies the \(H(\mu )\) condition

where A does not depend on \(x_1,x_2\) but may depend on \([a_1',a_2']\), \(0<\mu <1\).

(2) Assume that near the ends \(c=a_1\) or \(c=a_2\) the function \(\phi (x)\) is of the form

where \(\phi ^*(x)\) satisfies condition H near and at c.

Let \(\Phi (z)=\frac{1}{2\pi i}\int _I \frac{\phi (x)}{x-z}dx\). First assume that z is near c but not on I. Then (3) If \(\gamma =0, \Phi (z)=\pm \frac{\phi (c)}{2\pi i}\ln \frac{1}{z-c} + \Phi _0(z)\) where the upper sign is taken for \(c=a_1,\) the lower for \(c=a_2\). \(\ln \frac{1}{z-c}\) is to be understood to be any branch, single valued in the plane cut along I; \(\Phi _0(z)\) is a bounded function; (4) If \(\gamma = \alpha +i\beta , 1>\alpha >0\), \(\Phi (z)=\pm \frac{\phi ^*(c)}{(z-c)^\gamma } \frac{e^{{\pm }\gamma \pi i}}{2i \sin \gamma \pi } + \Phi _0(z)\) where the signs are as in (3), \((z-c)^\gamma \) is any branch, single valued near c in the plane cut along I and taking the value \((x-c)^\gamma \) on the upper side of I, and \(|\Phi _0(z)|< \frac{C}{|z-c|^{\alpha _0}}\) where \(C,\alpha _0\) are real constants with \(\alpha _0< \alpha \).

For the point \(z=x_0\) lying on I, the following results hold: (5) If \(\gamma =0, \Phi (x_0)=\pm \frac{\phi (c)}{2\pi i}\ln \frac{1}{x_0-c} + \Phi _0^*(x_0)\) where \(\Phi _0^*(x_0)\) satisfies the condition H near and at c, and the signs are again as in (3); (6) If \(\gamma = \alpha +i\beta , 1>\alpha >0\), \(\Phi (x_0)=\pm \frac{\phi ^*(c)}{(x_0-c)^\gamma } \frac{\cot \gamma \pi }{2i} + \Phi _0(x_0)\) where the signs are as in (3), \(\Phi _0(x_0)= \frac{\Phi ^{**}(x_0)}{|x_0-c|^{\alpha _0}}\) where \(\Phi ^{**}(x_0)\) satisfies condition H near and at c and \(\alpha _0 <\alpha \).

We will also make use of the following

Lemma 5.4

Suppose that \(h\in L^2[a_1,a_2]\) and \(h_0(x_0,x)\) is a smooth function of \((x_0,x)\) in an open set containing the square \([a_1,a_2]\times [a_1,a_2]\). Then the function \(h_1(x_0)=\int _I \ln |x-x_0| h_0(x_0,x) h(x)dx\) verifies \(H(\mu )\) on \(I=[a_1,a_2]\) for any \(0<\mu <\frac{1}{4}\).

Proof

Define \(\psi _0(x_1,x):= |x_1-x|^\mu \ln |x_1-x|\) where \(0<\mu <\frac{1}{4}\). By a result on Page 17 of [17], for fixed \(x\in I\), \(|\psi _0(x_1,x)-\psi _0(x_2,x)|\le C_0(\mu -\epsilon )|x_1-x_2|^{\mu -\epsilon } \) for any \(0<\epsilon <\mu \) where the constant \(C_0(\mu -\epsilon )\) does not depend on x. Let \(\psi (x_1,x):= \psi _0(x_1,x)h_0(x_1,x)\). Since \(|\psi (x_1,x)-\psi (x_2,x)|\le |\psi _0(x_1,x)-\psi _0(x_2,x)| |h_0(x_1,x)|+ |\psi _0(x_2,x)| |h_0(x_1,x)-h_0(x_2,x)|\), by our assumption on \(h_0\) we have \(|\psi (x_1,x)-\psi (x_2,x)|\le C(\mu -\epsilon )|x_1-x_2|^{\mu -\epsilon } \) for any \(0<\epsilon <\mu \) where the constant \(C(\mu -\epsilon )\) does not depend on x. Let \(x_1,x_2\in I\). Note that \(\psi (x_1,x), |x-x_1|^\mu \) and \(|x-x_2|^\mu \) are bounded functions on I and we will denote by M a constant which is greater than the upper bounds of these three functions.

We have

Note that

Since

we have

where \(M'\) is a constant independent of x. By Hölder's inequality we have

Note that by Hölder's inequality again we have \( \int _I |x_1-x|^{-2\mu } |x_2-x|^{-2\mu }dx \le (\int _I |x_1-x|^{-4\mu }dx)^{1/2} (\int _I |x_2-x|^{-4\mu }dx)^{1/2}<M''\) where \(M''\) is a constant independent of \(x_1,x_2\) since \(\mu < 1/4\), and the Lemma is proved. \(\square \)
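The uniform bound on \(\int _I |x_1-x|^{-4\mu }dx\) used in the last step can be made explicit. With \(p=4\mu <1\), the integral equals \(\frac{(x_1-a_1)^{1-p}+(a_2-x_1)^{1-p}}{1-p}\), which is at most \(\frac{2(a_2-a_1)^{1-p}}{1-p}\) for every \(x_1\in I\). The sketch below evaluates this closed form; the function names and the sample interval are hypothetical.

```python
def singular_integral(x1, a1, a2, p):
    """Closed form of the integral of |x1 - x|**(-p) over [a1, a2], for a1 <= x1 <= a2, p < 1."""
    return ((x1 - a1) ** (1.0 - p) + (a2 - x1) ** (1.0 - p)) / (1.0 - p)


def uniform_bound(a1, a2, p):
    """A bound independent of x1: 2 * (a2 - a1)**(1 - p) / (1 - p)."""
    return 2.0 * (a2 - a1) ** (1.0 - p) / (1.0 - p)


if __name__ == "__main__":
    a1, a2, mu = 0.0, 2.0, 0.2  # sample interval and exponent with mu < 1/4
    p = 4.0 * mu
    for x1 in (0.0, 0.5, 1.0, 1.7, 2.0):
        print(x1, singular_integral(x1, a1, a2, p), uniform_bound(a1, a2, p))
```

As \(\mu \) approaches 1/4 the factor \(1/(1-p)\) blows up, which is why the restriction \(\mu <1/4\) is needed.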

5.2 Solving the linear equation

Recall from definition (1)

where \(I_2\) is two by two identity matrix. We denote by \(D_0(y,x)\) the massless limit of \(D_m(y,x)\) and it is given by

A key idea for finding the resolvent of the operator defined in definition (1) is to extend x from I to the complex plane. This is well defined because \(\tilde{v}_i(y-z), i=0,1\) are real analytic functions of \(z,{{\bar{z}}}\) when \(z\ne y\). More precisely we define

For a function f(z) defined on \({\mathbb {C}}-[a_1,a_2]\) and \(x\in (a_1,a_2)\), we define \(f(x):=f(x+i0)= \lim _{\epsilon \rightarrow 0^+} f(x+i\epsilon )\) if the limit exists, i.e., the value of f on the upper cut of I. Define \(f(x-i0):=\lim _{\epsilon \rightarrow 0^+}f(x-i\epsilon ) \) if the limit exists, i.e., the value of f on the lower cut of I.

Lemma 5.5

Let \(G(z)= \int _I D_m(y,z) g(y) dy\), g verifies condition H as in definition 5.1 on I, and assume that \(\forall x\in (a_1,a_2)\), f(x) is defined by

Then \(\forall x\in (a_1,a_2)\)

Proof

Fix \(x\in (a_1,a_2)\). We have \(D_m(y,z)= D_0(y,z) + E_1(y,z)\) where \(E_1(y,z)= E_2(y,z)+E_3(y,z)\), \(E_2(y,z)\) is a smooth function, and \(E_3(y,z)\) is \(\ln |z-y|\) multiplied by a smooth function \(F_3(y,z)\).

We have

Notice that when \(y\ne x\), the integrand converges pointwise to 0 as \(\epsilon \rightarrow 0^+\). For fixed x, the integral over y can be divided into two parts: the first part is over those \(y\in I \) with \(|y-x|\ge 1/2 \), and the second part is over \(y\in I \) with \(|y-x|\le 1/2 \). On the first part of the integral the integrand can be dominated by a constant, and on the second part of the integral, when \(\epsilon \) is smaller than 1/2, \(|x-y|^2+\epsilon ^2<1\), and

hence the integrand is dominated by a constant multiplied by \(|\ln |y-x| g(y)|\), which is an integrable function on I. By the Dominated Convergence Theorem we have \(\lim _{\epsilon \rightarrow 0^+}\int _I (\ln |x+i\epsilon -y| F_3(y,x+i\epsilon )g(y)-\ln |x-i\epsilon -y| F_3(y,x-i\epsilon )g(y))dy= 0.\)

It is enough to prove the first equation in the Lemma for \(D_0(y,x)\), and this follows immediately from Lemma 5.2. The second equation also follows in a similar way. Notice that

the last equation follows from the first two by solving \(G(x+i0),G(x-i0)\) in terms of f(x), g(x). \(\square \)

5.3 Resolvent in the case of \(m=0\)

This is a well-known instance of the Riemann–Hilbert problem; a more general case is discussed, for example, on Page 130 of [16]. We will give a sketch of the proof in our case.

Recall from condition (45) that \(l_1=l\) and \(e^{2\pi i l_1}= \frac{\beta -1/2}{\beta +1/2},\beta \in i{\mathbb {R}}\). Denote by \(h(z)=\frac{(z-a_1)^{-l}}{(a_2-z)^l}\). We will choose the branch cut to be \([a_1,a_2]\) and define h(z) so that \(h(x+i0)=\frac{(x-a_1)^{-l}}{(a_2-x)^l}, \forall x\in (a_1,a_2)\) and \(h(x-i0)=e^{- 2\pi il}\frac{(x-a_1)^{-l}}{(a_2-x)^l}, \forall x\in (a_1,a_2)\).
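The relation \(e^{2\pi i l}= \frac{\beta -1/2}{\beta +1/2}\) with \(\beta \in i{\mathbb {R}}\) can be inverted numerically. For \(\beta =ib\) with real \(b\ne 0\), the right-hand side has modulus 1 and is different from \(-1\), so there is a unique real l with \(|l|<1/2\). The sketch below is an illustration under these assumptions; the function names and the choice of the principal argument are not taken from the paper.

```python
import cmath
import math


def ratio(b):
    """(beta - 1/2) / (beta + 1/2) for beta = i*b with real b."""
    beta = 1j * b
    return (beta - 0.5) / (beta + 0.5)


def l_from_beta(b):
    """Real l in (-1/2, 1/2) with exp(2 pi i l) = ratio(b), via the principal argument."""
    return cmath.phase(ratio(b)) / (2.0 * math.pi)


if __name__ == "__main__":
    for b in (0.1, 1.0, 10.0, -3.0):
        l = l_from_beta(b)
        print(b, l, abs(ratio(b)))
```

As \(|b|\rightarrow \infty \) the ratio tends to 1 and l tends to 0, so large \(|\beta |\) corresponds to small \(|l|\).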

Introduce

Theorem 5.6

For \(D_0\) as in Eq. (48) we have \((D_0 +\beta )^{-1} = \frac{1}{\beta ^2- \frac{1}{4}}(\beta - R_0).\)

Proof

Since \(D_0\) is diagonal, it is enough to check that

It is sufficient to check the equation on smooth functions. Let g(x) be a smooth function on I. We will solve the following linear equation:

Since g is smooth, f is smooth on \((a_1,a_2)\). Recall that \(h(z)=\frac{(z-a_1)^{-l}}{(a_2-z)^l}\), and we have chosen the branch cut to be \([a_1,a_2]\) and define h(z) so that \(h(x+i0)=\frac{(x-a_1)^{-l}}{(a_2-x)^l}, \forall x\in (a_1,a_2)\) and \(h(x-i0)=e^{- 2\pi il}\frac{(x-a_1)^{-l}}{(a_2-x)^l}, \forall x\in (a_1,a_2)\).

Recall that \(G_l(y,z)= \frac{h(z)(y-a_1)^{l}(a_2-y)^{-l}}{z-y}\). By our choice of h(z) we have \(G_l(y,x+i0)=G_l(y,x), \forall x\ne y \in (a_1,a_2)\) and \(G_l(y,x-i0)=e^{- 2\pi il} G_l(y,x), \forall x\ne y \in (a_1,a_2)\).

Consider the following functions: \(G_1(z):= \int _I G_l(y,z) \frac{f(y)}{\beta +1/2} dy \) and \(G_2(z):= \int _I \frac{-1}{2\pi i} \frac{1}{z-y} g(y)dy, G_3(z):= G_1(z)-G_2(z)\) on \({\mathbb {C}}-[a_1,a_2]\).

Using Lemma 5.2 exactly as in the proof of Lemma 5.5 we have

Hence

Near \(a_\nu , \nu =1,2, \) by using Lemma 5.3 we have that \(G_i(z)\) is either O(1) or \(O(|z-a_\nu |^{-l})\) if \(l>0\). Hence \(G_3(t)\) is in \(L^2([a_1,a_2])\). By equation (20) on Page 130 of [16], \(G_3(z)=C \frac{1}{(z-a_1)^{1-l}(z-a_2)^{l}}\) for some constant C. But since \(|l|<1/2,\frac{1}{(z-a_1)^{1-l}(z-a_2)^{l}}\) is not in \(L^2([a_1,a_2])\), we conclude that \(G_3(z)=0\) and \(G_1(z)=G_2(z)\). It follows that we have \(g(x)= G_{1}(x+i0)- G_{1}(x-i0)\). By the formula on Page 130 of [16] the theorem is proved. \(\square \)

We note that \(G_l(y,z)\) satisfies many of the properties of \(v_0\) in Eq. (40). For example, \(\partial _{a_1} G_l(y,z)= l (z-a_1)^{-l-1}(a_2-z)^l (y-a_1)^{l-1}(a_2-y)^{-l}\) is non-singular at \(z=y\) and factorizes as a product of a function of y and a function of z, similar to Eq. (43). This strongly suggests that the resolvent for the \(m>0\) case can be obtained from suitable functions of \(v_0\), and we will prove in the next section that this is indeed the case.

5.4 The general case

Recall the function \(v_0(z^*,z, L)\) defined at the end of Sect. 4. Consider the following function

Note that by definition \({\textbf{R}}_m(z^*,z)\) also depends on \(L, a_1, a_2\), and we have suppressed this dependence for ease of notation. In fact, we make the following declaration: from Sect. 5.4 until the end of this paper, whenever a function is defined and it is clear from the definition that it depends on L (or equivalently l) and \(a_1, a_2\), we will, unless otherwise stated, suppress its dependence on any of L (or equivalently l), \(a_1, a_2\) for ease of notation, following the convention in [22].

We come up with \({\textbf{R}}_m(z^*,z)\) by demanding that \({\textbf{R}}_m(z^*,z)\) share the following properties with \(D_m(z^*,z)\) as defined in Eq. (49): the second row is obtained from the first row by applying \(i m^{-1} \partial _{{\bar{z}}}.\) Moreover the entries of \({\textbf{R}}_m(z^*,z)\) as a function of z satisfy (1), (2) and (4) of definition 4.1.

We will now give a formula for the resolvent. We choose the cut of the complex plane to be \(I=[a_1,a_2]\). We choose a branch of \({\textbf{R}}_m(z^*,z)\) on \({\mathbb {C}}-[a_1,a_2]\), denoted by \(R_m(z^*,z)\), as follows. For \(y\ne x\in I\), we define \(R_m(y,x):=R_m(y,x+i0)= \lim _{\epsilon \rightarrow 0^+} R_m(y,x+i\epsilon )\), i.e., the value of \(R_m\) on the upper cut of I. Define \(R_m(y,x-i0)=\lim _{\epsilon \rightarrow 0^+} R_m(y,x-i\epsilon )\), i.e., the value of \(R_m\) on the lower cut of I. Note that from Eq. (53) and the definition of \(v_0(z^*,z, L+1)\) before Eq. (39) we have \(R_m(y,x-i0)=e^{-2\pi i l}R_m(y,x+i0)\). By Eq. (39) we can choose \({R}_m(y,x)\) such that when x is close to y, and \(y\ne x\), \(R_m(y,x)=D_m(y,x)+\) non-singular terms, where both x, y are in \((a_1,a_2)\). Since \(l_1=-l_2\), \(R_m(z^*,z)\) is a well defined single valued function for \(z\ne z^*\), and both \(z,z^*\) are in \({\mathbb {C}}-[a_1,a_2]\).

Let \(R_m\) be the integral operator on \(L^2(I, {\mathbb {C}}^2)\) given by the kernel \(R_m(y,x)\), i.e.,

Note that the notation \(R_m\) follows the declaration after Eq. (53). Here we have chosen a somewhat unconventional notation by integrating with respect to the first variable in \(R_m\): this is in tune with the notation of \(v_0(z^*,z,L)\), where \(z^*\) corresponds to the integration variable. The most singular part of \(R_m(y,x)\) when \(x-y\rightarrow 0\) is (up to multiplication by constants) \(\frac{1}{x-y}\) and the integral is understood as a Cauchy principal value. When \(x\ne y\) and both are in \((a_1,a_2)\), \(R_m(y,x)\) is a smooth function of y.

For fixed \(x\in (a_1,a_2) \), by Eq. (40) near \(a_i\) \(R_m (y,x)\) has the worst singularity of the form \((x-a_i)^{-l}\) when \(l>0, i=1,2.\)

Let f(y) be a smooth function on I. Let \(\hat{G}(z):=\frac{1}{\beta +1/2}\int _I R_m(y,z)f(y)dy\). Note that the notation \(\hat{G}(z)\) follows the declaration after Eq. (53).

\(\hat{G}(z)\) verifies (1), (2) and (4) of definition 4.1 on the plane minus the cut I, since \(R_m(y,z)\) as a function of z does when \(z\ne y\). The following gives the properties of \(\hat{G}(z)\) near and on the cut I:

Lemma 5.7

Proof

Let us choose a second cut on the complex plane as given by the three line segments below I joining the ends of I in Fig. 4. Let \(S_1(z^*,z)\) be the function from \({\textbf{R}}_m(z,z^*)\) in Eq. (53) defined on this second cut, such that \(S_1(y,z)=R_m(y, z)\) when \(\textrm{Im} z>0,y\in I\). When we move z from above \([a_1,a_2]\) to the region between the two cuts (the interior of the square in Fig. 4), we have crossed the cut for \(R_m(y,z)\), and by definition we have that when z is in the region between the two cuts,

It follows that

Note that when y is close to x, by Eq. (39) that gives the asymptotics when y is close to x, and definition of \(D_m\), \(S_1(y,x+i\epsilon )- S_1(y,x-i\epsilon )=D_m(y,x+i\epsilon )- D_m(y,x-i\epsilon ) + \)regular term. The first equation now follows as in the proof of Lemma 5.5. For the second equation, choose \(\eta >0\) to be small and let \(I_\eta :=(x-\eta ,x+\eta ). \) When \(\epsilon \rightarrow 0^+\), we have

where we have used \(e^{2\pi il}= \frac{\beta -1/2}{\beta +1/2}\).

On \(I_\eta \), up to terms which go to 0 as \(\eta \rightarrow 0\), we have

where in the last \(=\) we have used Lemma 5.2, and o(1) denotes a term that goes to 0 as \(\eta \rightarrow 0. \) The proof now is complete. \(\square \)

Theorem 5.8

where \(I_2\) is the identity two by two matrix.

Proof

We will check the resolvent formula on a dense subspace of \(L^2(I)\).

Let f(x) be a smooth function on I with support of f contained in \((a_1,a_2)\). We will solve the following linear equation:

First, since \(\beta \in i{\mathbb {R}}, \beta \ne 0\), and \(D_m\) is self-adjoint, \(-\beta \) lies in the resolvent set of \(D_m\), and the above linear equation has a unique solution \(g\in L^2(I)\). Let us first show that g satisfies condition H on \((a_1,a_2)\). We re-write the above equation as

Since the entries of \(D_m(y,x)-D_0(y,x)\), up to addition of a smooth function of (x, y), are equal to \(\ln |x-y|\) multiplied by a smooth function of (x, y), by Lemma 5.4 \(g_1(x):=\int _I (D_m(y,x)-D_0(y,x)) g(y) dy\) satisfies condition H on I. Applying the resolvent formula in Th. 5.6 we have

Since \(f(x)-g_1(x)\) satisfies condition H on I, by the result on Page 49 of [17] we see that g(x) satisfies condition H on \((a_1,a_2)\). By applying items (5) and (6) in Lemma 5.3, we see that g(x) is always bounded by \(O(|x-a_i|^{-|l|})\) at the ends \(a_i,i=1,2\).

Now consider two functions

Let \(G_3(z):= G_1(z)- G_2(z)\). Our goal is to show that \(G_3(z)=0\). Notice that \(G_3(z)\) satisfies (1) and (4) in Definition 4.1 on the plane with cut I. Applying Lemma 5.5 to \(G_2\) and Lemma 5.7 to \(G_1\) for \(x\in (a_1,a_2)\) we have

Now we examine the growth properties of \(G_1(z), G_2(z)\) near the ends \(a_i\). By the second paragraph after Eq. (53) we can write the components of \(G_1(z), G_2(z), G_3(z)\) as

respectively. Since \(G_1(z):= \int _I R_m(y,z) \frac{f(y)}{\beta +1/2}dy\) and the support of f is contained in \((a_1,a_2)\), when z is close to \(a_i\) we only need to consider the property of \( R_m(y,z)\) when \(|z-y|\ge \eta _0>0\) where \(\eta _0\) is a fixed small constant. By Eq. (40), it follows that \(v(z)=O(|z-a_i|^{-|l_i|})\) and \(\partial _{{\bar{z}}} v=O(|z-a_i|^{-|l_i|})\).

As for \(G_2(z)\), note that \(D_m(y,z)-D_0(y,z)\), up to addition of smooth functions, is \(\ln |z-y|\) multiplied by a smooth function. By Lemma 5.4 the integrals involving \(\ln |z-y|\) are bounded around \(a_i\). So up to bounded functions around \(a_i\) we can replace \(D_m(y,z)\) by \(D_0(y,z)\) in the definition of \(G_2(z)\).

Applying (3) and (4) of Lemma 5.3 to such modified \(G_2(z)\), we see that near \(a_i,\) \(v', \partial _{{\bar{z}}} v'\) are either bounded or bounded by \(O(|z-a_i|^{-l_i})\) when \(l_i>0\). It follows that \(v-v'\) verifies the conditions in Lemmas 4.3 and 4.4, and we conclude that \(G_3(z)=0\). The theorem now follows from Lemmas 5.7 and 5.5 as follows. Fix x in the interior of I. Applying Lemma 5.7 to \(G_1\) we have \(G_1(x+i0)-G_1(x-i0)= g(x).\) Applying Lemma 5.5 to \(G_2\) we have

Using \(G_1=G_2\) we have proved the theorem. \(\square \)

5.5 Some properties of the resolvent

Recall from the definition of \(R_m(z^*,z,L)\) below Eq. (53)

where we have put in extra L dependence. By definition \(R_m(z^*,z,L)\) also depends on \(a_1,a_2\) and we have followed the declaration after Eq. (53). We will use \(R_m(L)\) to denote the corresponding integral operator. Recall from condition (45)

with \(l=l_1=-l_2\), and \(e^{2\pi il}= \frac{\beta -1/2}{\beta +1/2}\).

Lemma 5.9

Proof

By Th. 5.8

Since \(\beta \in i{\mathbb {R}}\) and \(D_m\) is self adjoint, we have

where we have used \(e^{-2\pi i l}= \frac{\beta +1/2}{\beta -1/2}\).

It follows that \(R_m(L)^H = R_m(-L)\), where \(R_m(L)^H\) is the adjoint of integral operator \(R_m(L)\), and the Lemma is proved. \(\square \)

It is convenient to introduce for fixed L

Note that by definition \(R(z^*,z)\) also depends on \(a_1, a_2, L\) and we have followed the declaration after Eq. (53). By equation (3.2.23) of [22] we have the complex conjugate of \(\frac{i}{\pi }\partial _z v_0(z,z^*,L+1)\) is \( \frac{i}{\pi }\partial _z v_0(z,z^*, L)\). Note that by Eq. (39) singularities of \( v_0(z^*,z, L)\) and \(v_0(z^*,z,-L)\) canceled out at \(z=z^*\in (a_1,a_2)\). It follows that \(R_m(z,z)\) is smooth for \(z\in (a_1,a_2)\), and

where \({\hbox {tr}}_2\) here means the sum of the diagonal entries of the two by two matrix.

Using Eqs. (43) and (24) we have

Similarly

When \(a_\mu =x_\mu + i y_\mu \) with \(x_\mu , y_\mu \) real, we will be interested in computing \(m^{-1}\frac{d R(z,z)}{dx_\mu } = m^{-1} \partial _{a_\mu }R(z,z) + m^{-1}\partial _{{\bar{a}}_\mu }R(z,z)\). Note that when \(a_\mu \) is real this is the same as \(m^{-1}\frac{d R(z,z)}{da_\mu }\). Following the convention in Remark 4.7 from Eqs. (57), (58) we have

When \(a_\mu \) is real, and \(z\in (a_1,a_2)\), from Lemma 5.9 \(R(z,z)\in i{\mathbb {R}}\). It follows that \(m^{-1}\frac{d R(z,z)}{da_\mu } \in i{\mathbb {R}}\) and we conclude that \(v_\mu (z,1-L)v_\mu (z,1+L) + {\bar{v}}_\mu (z^*,L){\bar{v}}_\mu (z,-L)\) is real. So when \(a_\mu , \mu =1,2\) are real we have the following equation

By definition R(z, z) also depends on \(a_1, a_2, L\) and we have followed the declaration after Eq. (53). We note that R(x, x) is smooth in \(x,a_1,a_2\) when \(x\in (a_1,a_2)\). The following Proposition describes the behavior of R(x, x) near the endpoints \(a_1,a_2\).

Proposition 5.10

(1) Near \(a_\mu \) we have the following expansion of \(\frac{dR(x,x)}{dx}, x\in (a_1,a_2)\):

where \(C_3, A_1, A_2\) are constants which depend smoothly on \(a_1,a_2\), and \(\tilde{R}(x)\) is a continuous function that vanishes at \(a_\mu , \mu =1,2;\)

(2) near \(a_\mu \) we have the following expansion of \(R(x,x), x\in (a_1,a_2)\):

where \(C_2(\mu ), A_1, A_2\) are constants which depend smoothly on \(a_1,a_2\), and \(\tilde{R_1}(x)\) is a function with continuous first derivative that vanishes at \(a_\mu , \mu =1,2; \)

(3) near \(a_\mu \) we have the following expansion of \(\frac{dR(x,x)}{da_\mu }, x\in (a_1,a_2)\):

where \(C_3', A_1', A_2'\) are constants which depend smoothly on \(a_1,a_2\), and \(\tilde{R}(x)\) is a continuous function that vanishes at \(a_\mu , \mu =1,2;\) near \(a_\nu \) when \(\nu \ne \mu \), \(m^{-1}\frac{dR(x,x)}{da_\mu }\) is a continuous function;

(4) \(m^{-1}\frac{dR(x,x)}{da_\mu }+ i l_\mu (mx-ma_\mu )^{-2}\) is an integrable function on \([a_1,a_2]\).

Proof

Note that (2) follows from (1), and (4) follows from (3) since \(|l_\mu | < 1/2\). It is therefore sufficient to prove (1) and (3). By Eq. (44) we have

Using Eq. (57) and setting \(z^*=z\) we have

Similarly, using Eq. (58) and setting \(z^*=z\),

Let us examine the expansion of \(\frac{\pi i}{\sin (\pi l_1)}v_1(1-L)v_1(1+L)\) around \(a_1\): by Eq. (23), which gives the expansions of \(v_1(1-L)\) and \( v_1(1+L)\) around \(a_1\), we have

where the dots denote higher order terms. Using the identity for the Gamma function

we have \(\Gamma (l)\Gamma (-l)= \frac{\pi }{-l\sin (\pi l)}\). The coefficient of \((mz-ma_1)^{-2}\) in the above expansion is \(-il_1\). The coefficient of \((mz-ma_1)^{-1}\) in the above expansion is

which is 0 due to the fact that \(\alpha _{11}(1-L)=\alpha _{11}(1+L)\) and \(\Gamma (1+l) \Gamma (-l)= - \Gamma (l) \Gamma (1-l)\). From the above expansion the next order is, up to multiplication by constants which only depend on \(l_1\), \(\beta _{11}(1+L)(mz-ma_1)^{2l_1}\) and \(\beta _{11}(1-L)(mz-ma_1)^{-2l_1}\). By Lemma 4.5 the rest of the expansion is continuous and vanishes at \(x=a_1\).
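The Gamma-function relations quoted in this step follow from the reflection formula \(\Gamma (z)\Gamma (1-z)= \frac{\pi }{\sin (\pi z)}\) together with \(\Gamma (z+1)=z\Gamma (z)\). As a quick sanity check, the following sketch verifies them numerically for a few real values of \(l\) with \(0<|l|<1/2\). The helper name `gamma_identities` and the sample values are illustrative assumptions and do not come from the paper; the third relation is the one used later in the proof of Lemma 6.3.

```python
import math


def gamma_identities(l):
    """Return (lhs, rhs) pairs for three Gamma identities, for real l with 0 < |l| < 1/2."""
    g = math.gamma
    return [
        # Gamma(l) Gamma(-l) = pi / (-l sin(pi l))
        (g(l) * g(-l), math.pi / (-l * math.sin(math.pi * l))),
        # Gamma(1+l) Gamma(-l) = -Gamma(l) Gamma(1-l)
        (g(1 + l) * g(-l), -g(l) * g(1 - l)),
        # Gamma(1+l) Gamma(-l) = pi / (-sin(pi l))
        (g(1 + l) * g(-l), math.pi / (-math.sin(math.pi * l))),
    ]


# Sample values of l (hypothetical, chosen only to exercise both signs).
for l in (0.1, -0.3, 0.45):
    for lhs, rhs in gamma_identities(l):
        assert math.isclose(lhs, rhs, rel_tol=1e-9)
```

A numerical check of this kind does not replace the algebra, but it guards against sign slips in the cancellation of the \((mz-ma_1)^{-1}\) coefficient.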

Let us examine the expansion of \(\frac{\pi i}{\sin (\pi l_1)}v_2(1-L)v_2(1+L)\) around \(a_1\): by Eq. (23) we find that the first few leading order terms are, up to multiplication by constants which only depend on \(l_1\), \(\alpha _{21}(1-L) \alpha _{21}(1+L)\), \(\alpha _{21}(1+L)\beta _{21}(1-L)(mz-ma_1)^{1-2l_1},\alpha _{21}(1-L)\beta _{21}(1+L)(mz-ma_1)^{1+2l_1} \), which are non-singular since \(|l_1|< 1/2\), and the rest of the expansion is continuous and vanishes at \(x=a_1\).

Similarly one can check that the expansion of \(\frac{\pi i}{\sin (\pi l_1)}v_1(-L)v_1(L)\) around \(a_1\) has leading order, up to multiplication by constants which only depend on \(l_1\), \(\alpha _{11}(-L) \alpha _{11}(L) +\beta _{11}(-L) \beta _{11}(L), \alpha _{11}(-L)\beta _{11}(L)(mz-ma_1)^{2l_1}\) and \(\alpha _{11}(L)\beta _{11}(-L)(mz-ma_1)^{-2l_1}\). The rest of the expansion is continuous and vanishes at \(x=a_1\). The expansion of \(\frac{\pi i}{\sin (\pi l_1)}v_2(-L)v_2(L)\) around \(a_1\) has no singular terms and is a continuous function.

The same argument works for the expansion around \(a_2\), with \(l_1\) replaced by \(l_2=-l_1\).

Let \(z=x+iy, x\in (a_1,a_2)\). Then \(m^{-1}\frac{dR}{dx}= m^{-1}\partial _z R + m^{-1}\partial _{{\bar{z}}} R \), and (1) is proved.

To prove (3), by Eq. (60) we have

We need to examine the expansion of \(v_\mu (z,1-L)v_\mu (z,1+L)+{v}_\mu (z,L){v}_\mu (z,-L)\) and its complex conjugate around \(a_1, a_2\). The argument is now the same as in the proof of (1). \(\square \)

Remark 5.11

From Prop. 5.10, if one integrates R(x, x) over \([a_1,a_2]\), one gets a \(\log \) divergence from each of the two ends \(a_1\), \(a_2\). Moreover these singularities depend on the ends \(a_1,a_2\) only through \(l_1,l_2\).
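To make the source of the logarithm explicit, here is a schematic computation. It assumes only that the most singular term of \(R(x,x)\) near \(a_1\) is a simple pole in \(mx-ma_1\), as obtained by integrating the \((mx-ma_1)^{-2}\) term in (1) of Prop. 5.10, and that the remaining terms are bounded near \(a_1\). The coefficient \(\kappa _1\) and the interior point \(x_0\in (a_1,a_2)\) are placeholders introduced here for illustration and are not notation from the paper.

```latex
\[
\int_{a_1+\epsilon}^{x_0} \frac{\kappa_1}{mx-ma_1}\,dx
  = \frac{\kappa_1}{m}\bigl(\log (x_0-a_1)-\log \epsilon \bigr),
\]
```

so the cut-off integral grows like \(-\frac{\kappa _1}{m}\log \epsilon \) as \(\epsilon \rightarrow 0\), and the same computation applies at \(a_2\).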

5.6 The constant term

We remind the reader that we will follow the declaration after Eq. (53) for the rest of this paper.

Proposition 5.12

The constant term \(C_2(\mu )\) in (2) of Prop. 5.10 is given by \(C_2(\mu )= -\frac{i}{l_\mu } \alpha _{\mu \mu }(L) +c\) where c is a constant independent of \(a_1,a_2 \in {\mathbb {R}}\).

Proof

It is sufficient to check \(m\frac{dC_2(\mu )}{da_j}= -i/l_1 \frac{d\alpha _{jj}(L)}{da_j}, j=1,2\), where we have followed the notation in Remark 4.7. We will check the case \(\mu =1\); the case \(\mu =2\) is similar.

From Prop. 5.10 near \(a_1\) we have the following expansion of R(z, z):

By Eq. (44), \( m^{-1}\partial _z R(z,z)= -m^{-1} (\partial _{a_1}+\partial _{a_2})R(z,z)\). Expanding both sides around \(a_1\), we see that the constant term on the LHS is \( C_3\), while the constant term on the RHS is \(C_3- [ m^{-1} \partial _{a_1} C_2(1)+ C_1]\), where \(C_1\) is the constant term of \(m^{-1} \partial _{a_2}R(z,z)\) in its expansion around \(a_1\). It follows that \( m^{-1} \partial _{a_1} C_2(1)= -C_1\). On the other hand, \(m^{-1}\partial _{a_2} C_2(1)\) is the constant term of \(m^{-1} \partial _{a_2}R(z,z)\) in its expansion around \(a_1\); it follows that \(m^{-1}\partial _{a_1} C_2(1)= -C_1=-m^{-1}\partial _{a_2} C_2(1)\). From Eq. (57)

Using Eq. (23) for the expansion near \(a_1\), we find that the constant term \(C_1\) of \(m^{-1}\partial _{a_2} R(z,z)\) near \(a_1\) is given by

It follows that

where we have used \(\alpha _{21}(1-L)= -\alpha _{12}(L)=-\alpha _{12}(1+L)\) in Lemma 4.6.

Similarly we have \(m^{-1}\partial _{{\bar{a}}_1} C_2(1)= -C_4=-m^{-1}\partial _{{\bar{a}}_2} C_2(1)\), where \(C_4\) is the constant term of \(m^{-1}\partial _{{\bar{a}}_2} R(z,z)\) near \(a_1\). From Eq. (58)

we find the constant term around \(a_1\) is given by

Using \({\bar{\beta }}(-L){\bar{\beta }}(L)= {\sinh }^2(\psi )\) in (2) of Prop. 4.8, we find

We conclude from (4) of Prop. 4.8 that

and

and the Proposition is proved. \(\square \)

6 The Computation of Singular Limits

Throughout this section, except in the last Sect. 6.3, we shall assume that the conditions in (45) are satisfied.

Let us first give a heuristic reason for our approach. We would like to compute the singular limit F(12, 23) from (16), where 1, 2, 3 are intervals as in Fig. 6. Ignoring the singularities for the moment, we need to compute \(\int _{a_1}^{a_2} R(x,x)dx\), where \(a_1, a_2\) are the end points of intervals in Fig. 6. Formally differentiating with respect to \(a_1\), for example, we get \(\int _{a_1}^{a_2} \frac{dR(x,x)}{da_1}dx -R(a_1,a_1)\). By Eq. (60) we need to compute \( \int _{a_1}^{a_2} (v_\mu (1-L)v_\mu (1+L) + v_\mu (-L)v_\mu (L)) dx \), its complex conjugate, and \(R(a_1,a_1)\). It turns out that, with suitable linear combinations as in Sect. 6.2, the singularities cancel in the integrals, and the contribution from \(R(a_1,a_1)\) is the constant term determined in Prop. 5.12. We therefore turn to the computation of \(\int _{a_1}^{a_2} (v_\mu (1-L)v_\mu (1+L) + v_\mu (-L)v_\mu (L)) dx\).
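The formal differentiation in this heuristic is an instance of the Leibniz integral rule: for an integrand \(F(x;a_1)\) that depends on the lower endpoint, \(\frac{d}{da_1}\int _{a_1}^{a_2}F\,dx = \int _{a_1}^{a_2}\partial _{a_1}F\,dx - F(a_1;a_1)\). The sketch below checks this rule numerically on a smooth toy integrand \(F(x;a)=\sin (ax)+x^2\). The toy integrand and all function names are assumptions made for illustration; the actual \(R(x,x)\) is singular at the endpoints, which is why the regularization developed in this section is needed.

```python
import math


def simpson(f, lo, hi, n=2000):
    """Composite Simpson rule with n (even) subintervals."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(lo + k * h)
    return total * h / 3


def F(x, a):
    # Toy integrand (illustrative assumption), smooth in both x and a.
    return math.sin(a * x) + x * x


def dF_da(x, a):
    # Partial derivative of the toy integrand with respect to the parameter a.
    return x * math.cos(a * x)


def integral(a1, a2):
    # The endpoint a1 also enters the integrand as a parameter.
    return simpson(lambda x: F(x, a1), a1, a2)


def leibniz_rhs(a1, a2):
    # Integral of the parameter derivative minus the boundary term at x = a1.
    return simpson(lambda x: dF_da(x, a1), a1, a2) - F(a1, a1)


def finite_difference(a1, a2, h=1e-5):
    # Central difference of the integral with respect to a1.
    return (integral(a1 + h, a2) - integral(a1 - h, a2)) / (2 * h)


assert abs(finite_difference(0.3, 1.7) - leibniz_rhs(0.3, 1.7)) < 1e-6
```

The boundary term \(-F(a_1;a_1)\) is the analogue of \(-R(a_1,a_1)\) above.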

6.1 A reduction of a line integral to a local computation

We will use \(I_{\epsilon +},I_{\epsilon -}\) to denote the upper side and the lower side of the cut \([a_1+\epsilon ,a_2-\epsilon ]\) respectively, each with its usual orientation, namely from left to right. \(-J\) will denote the interval J with the opposite orientation. The values of the functions on the left hand side of the integral in the next Lemma are by definition their values on the upper side of the cut \([a_1+\epsilon ,a_2-\epsilon ].\)

Lemma 6.1

Proof

Since \(v_1(z-i0,1-L)= e^{2\pi i l} v_1(z+i0, 1-L)\) when \(z\in (a_1,a_2)\), we have

Note \(v_1(z-i0,1+L)= e^{-2\pi i l} v_1(z+i0, 1+L)\). We have

and the first equation follows. The second equation follows similarly. \(\square \)
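For the reader's convenience, the differentiation step behind this proof can be spelled out as follows. This is a sketch that assumes \(\partial _l\) is applied to the first monodromy relation above with \(z\) held fixed; it reconstructs the reasoning and is not a quotation of the displayed equations.

```latex
\[
\begin{aligned}
\partial_l v_1(z-i0,1-L)
  &= e^{2\pi i l}\bigl( 2\pi i\, v_1(z+i0,1-L) + \partial_l v_1(z+i0,1-L) \bigr),\\
\partial_l v_1(z-i0,1-L)\, v_1(z-i0,1+L)
  &= 2\pi i\, v_1(z+i0,1-L)\, v_1(z+i0,1+L)
   + \partial_l v_1(z+i0,1-L)\, v_1(z+i0,1+L).
\end{aligned}
\]
```

The second line uses \(v_1(z-i0,1+L)= e^{-2\pi i l} v_1(z+i0, 1+L)\), so the phases cancel and the values on the two sides of the cut differ exactly by \(2\pi i\, v_1(1-L)v_1(1+L)\).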

Lemma 6.2

(1) Let \(f=\partial _l v_1(1-L) v_1(1+L), g= \partial _l v_1(-L) v_1(L)\), then \(d(fdz+ gd{\bar{z}})=0\); (2) \(\int _{J_{\epsilon ,\epsilon _1}} \partial _l v_1(1-L) v_1(1+L)+ \partial _l v_1(-L) v_1(L)=0\), where \(J_{\epsilon ,\epsilon _1}\) is the contour as in Fig. 5; (3)

where \(J_\epsilon \) denotes the union of two circles centered at \(a_1,a_2\) with radius \(\epsilon >0\) and oriented anti-clockwise.

Proof

Ad (1): By Eq. (24)

So we have

It follows that \(\partial _z g = \partial _{{\bar{z}}} f\), and \(d(fdz + gd{\bar{z}})= (\partial _z g - \partial _{{\bar{z}}} f) dz\wedge d{\bar{z}} =0\).

Ad (2): By Stokes' theorem, \(\int _{J_{\epsilon ,\epsilon _1}\cup C_R} d(fdz + gd{\bar{z}})=0\), where \(C_R\) is a circle centered at the origin with anti-clockwise orientation and radius R very large. We have \(\int _{J_{\epsilon ,\epsilon _1}\cup C_R} \partial _l v_1(1-L) v_1(1+L)+ \partial _l v_1(-L) v_1(L)dz=0.\)

By Lemma 4.12, letting \(R\rightarrow \infty \) proves (2).

Ad (3): Letting \(\epsilon _1\rightarrow 0\) in (2) and using Lemma 6.1, we obtain (3). \(\square \)

Lemma 6.3

Let \(J_\epsilon \) be the union of two circles centered at \(a_1,a_2\) with radius \(\epsilon >0\) and oriented anti-clockwise. Then:

(1)

(2)

Proof

Ad (1): First we note that \(e^{-il\theta } v_1(1-L)\) is a periodic function of \(\theta \) (with period \(2\pi \)), and can be thought of as a function on the circle \( z=a_1+\epsilon e^{i\theta }\). We have \(\partial _l (e^{-i l\theta } v_1(1-L))= e^{-il\theta } (-i\theta ) v_1(1-L) + e^{-i l\theta } \partial _l v_1(1-L).\)

Hence

and

We note that \(e^{il\theta } \partial _l(e^{-i l\theta } v_1(1-L))v_1(1+L)\) is a smooth periodic function of \(\theta \) with period \(2\pi \). When integrating over \(0\le \theta \le 2\pi \), the only possible nonzero contribution comes from the coefficient of \((mz-ma_1)^{-1}\) (multiplied by \(m^{-1}\)) in the expansion of \(e^{il\theta } \partial _l(e^{-i l\theta } v_1(1-L))v_1(1+L)\) around \(a_1\). This is the same as the coefficient of \((mz-ma_1)^{-1}\) in the expansion of \(\partial _l v_1(1-L)v_1(1+L)\) around \(a_1\). By Eq. (23) we see that there is only one such term in the expansion, and the coefficient of \((mz-ma_1)^{-1}\) is given by

Using the identity \(\Gamma (l+1)\Gamma (-l)= \frac{\pi }{-\sin {\pi l}}\) this can be simplified to \( \frac{-\sin \pi l_1}{\pi }(\partial _l \alpha _{11}(L) - \frac{\alpha _{11}}{l_1}) \).

The expansion of \(\partial _l v_1(-L)v_1(L)\) is less singular and has no \((z-a_1)^{-1}\) term around \(a_1\). Similarly both \(\partial _l v_1(1-L)v_1(1+L)\) and \(\partial _l v_1(-L)v_1(L)\) have no \((z-a_1)^{-1}\) term around \(a_2\).

As in the proof of Prop. 5.10, the leading term in the expansion of \(v_1(1-L)v_1(1+L)\) around \(a_1\) is given by \(\frac{-l_1\sin \pi l_1}{\pi } (mz-ma_1)^{-2}\). This term gives the first term on the right hand side of the equation in (1). The next term is \( (mz-ma_1)^{2l_1}\) or \((mz-ma_1)^{-2l_1}\). When such a term is multiplied by \(i\theta \) and integrated over \(|z-a_1|=\epsilon \), the result is bounded, up to a constant, by \(\epsilon ^{1-2|l_1|}\). Similarly by Lemma 4.5 the integral of the non-singular term in the expansion is bounded by \(O(\epsilon )\). The expansion of \(v_1 (-L)v_1(L)\) is less singular and its leading term is \((mz-ma_1)^{2l_1}\) or \((mz-ma_1)^{-2l_1}\); when such a term is multiplied by \(i\theta \) and integrated over \(|z-a_1|=\epsilon \), the result is again bounded, up to a constant, by \(\epsilon ^{1-2|l_1|}\), and by Lemma 4.5 the integral of the non-singular term in the expansion is bounded by \(O(\epsilon )\).

The leading term in the expansion of \(v_1(1-L)v_1(1+L)\) around \(a_2\) starts with \((mz-ma_2)^{0}\) by Eq. (23), and similarly there are no singular terms in the expansion of \(v_1(-L)v_1(L)\) around \(a_2\). From this it follows that the integral of \((\partial _l v_1(1-L) v_1(1+L)+ \partial _l v_1(-L) v_1(L))\) on \(|z-a_2|=\epsilon \) is \(O(\epsilon )\), and (1) is proved. (2) follows from (1) and Lemma 6.2. \(\square \)
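Two elementary facts about circle integrals are used in this proof: only the \((z-a_1)^{-1}\) term of a Laurent expansion survives integration over \(|z-a_1|=\epsilon \), and a term \((z-a_1)^{2l}\) weighted by \(i\theta \) contributes at most \(2\pi ^2\epsilon ^{1+2l}\le 2\pi ^2\epsilon ^{1-2|l|}\) for \(\epsilon <1\). The sketch below checks both numerically. It is illustrative only: it sets \(m=1\) and \(a_1=0\), uses real \(l\), and the function names are assumptions that do not come from the paper.

```python
import cmath
import math


def circle_integral(integrand, eps, n=20000):
    """Midpoint-rule integral over z = eps * e^{i theta}, 0 <= theta <= 2 pi.

    `integrand(z, theta)` is evaluated on the circle, and dz = i z dtheta.
    """
    h = 2 * math.pi / n
    total = 0j
    for k in range(n):
        theta = (k + 0.5) * h
        z = eps * cmath.exp(1j * theta)
        total += integrand(z, theta) * 1j * z * h
    return total


def power_branch(eps, theta, p):
    # (eps e^{i theta})^p on the branch fixed by 0 <= theta < 2 pi.
    return eps ** p * cmath.exp(1j * p * theta)


def weighted_power_integral(eps, l):
    # Integral of i * theta * z^{2l} dz over the circle of radius eps.
    return circle_integral(
        lambda z, theta: 1j * theta * power_branch(eps, theta, 2 * l), eps
    )


# Only the simple pole contributes; the double pole integrates to zero.
assert abs(circle_integral(lambda z, t: 1 / z, 0.1) - 2j * math.pi) < 1e-9
assert abs(circle_integral(lambda z, t: z ** -2, 0.1)) < 1e-9
```

The bound on the weighted term is just the triangle inequality, since \(|z^{2l}|=\epsilon ^{2l}\) for real \(l\), \(|dz|=\epsilon \, d\theta \), and \(\int _0^{2\pi }\theta \, d\theta =2\pi ^2\).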

Lemma 6.4

Proof

When \(\mu =1\) this follows from (2) of Lemma 6.3 and Eq. (60), and the fact that both sides of (2) of Lemma 6.3 are real. The case \(\mu =2\) is proved in the same way as the proof of (3) of Lemma 6.3. \(\square \)

6.2 Computation of singular limits

For an interval I (our interval is connected), we will simplify our notation further by writing R(I, x) for the (1, 1) entry of the resolvent \(R_m(x,x,L)-R_m(x,x,-L)\) (cf. Eq. 54) on the interval I when \(x\in I\), and 0 when x is not in I. Recall from Definition 2.2 that \(R(12,23,x)= R(1\cup 2,x)+ R(2\cup 3,x)-R(2,x)-R(1\cup 2\cup 3,x)\), where intervals 1, 2, 3 are as in Fig. 6. Define

where we have followed the convention as in definition 3.11. As usual by definition \(R_1(b_1,b,c,c_1)\) also depends on l and we have followed the declaration after Eq. (53).

Note that the dependence of \(R_1(b_1,b,c,c_1)\) on its variables is very different from that of \(R_m\); we hope that this causes no confusion. By Prop. 5.10, R(12, 23, x) is continuous in the interior of intervals 1, 2, 3 and bounded near the ends of intervals 1, 2, 3.

From Eq. (16), Theorem 5.8 and Eq. (56) we have

where \(\hat{C}(12,23,\beta ^2-1/4)\) is as in Definition (2.1).

Proposition 6.5

where we have added the dependence of \(\alpha _{ii}(L),i=1,2 \) on the interval where it is defined.

Proof

We will prove the first equation. The second equation is proved in a similar way.