Abstract

We study fast/slow systems driven by a fractional Brownian motion B with Hurst parameter \(H\in (\frac{1}{3}, 1]\). Surprisingly, the slow dynamic converges on suitable timescales to a limiting Markov process and we describe its generator. More precisely, if \(Y^\varepsilon \) denotes a Markov process with sufficiently good mixing properties evolving on a fast timescale \(\varepsilon \ll 1\), the solutions of the equation

converge to a regular diffusion without having to assume that F averages to 0, provided that \(H< \frac{1}{2}\). For \(H > \frac{1}{2}\), a similar result holds, but this time it does require F to average to 0. We also prove that the n-point motions converge to those of a Kunita type SDE. One nice interpretation of this result is that it provides a continuous interpolation between the time homogenisation theorem for random ODEs with rapidly oscillating right-hand sides (\(H=1\)) and the averaging of diffusion processes (\(H= \frac{1}{2}\)).

1 Introduction

The setting considered in this article is as follows. Consider a particle in a rapidly evolving random medium, so that it is governed by a stochastic differential equation of the type \(dx_t= A(x_t, t/\varepsilon )\,dt + \sigma (x_t,t/\varepsilon )\,dB\) (see Footnote 1) for a small parameter \(\varepsilon >0\). The situation we are interested in is where, in the “static” case (i.e. when A and \(\sigma \) have no explicit time dependence), the system is either super- or subdiffusive. This is the case if the driving noise B is modelled by fractional Brownian motion (fBM) with Hurst parameter \(H \ne \frac{1}{2}\). Recall that fractional noises (i.e. the time derivative of fBM) can be obtained as scaling limits in statistical mechanics models [11, 38, 65] and that fBM with Hurst parameter H is a Gaussian process with stationary increments and self-similarity exponent H. It is therefore characterised (up to an irrelevant global shift) by the fact that \(\mathbf{E}(B_t-B_s)^2=|t-s|^{2H}\), so that it is superdiffusive for \(H > \frac{1}{2}\) and subdiffusive for \(H < \frac{1}{2}\). The covariance of its increments, \(\mathbf{E}(B_{t+1}-B_{t})(B_{s+1}-B_s)\), decays at rate \(|t-s|^{2H-2}\) for large \(|t-s|\) and therefore exhibits long-range dependence when \(H > \frac{1}{2}\).
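The decay rate of the increment covariance follows from the variance formula alone, and a short numerical check makes this concrete. The sketch below is only an illustration (the function names are ours and do not appear in the article): it expands \(\mathbf{E}(B_{t+1}-B_{t})(B_{s+1}-B_s)\) using \(\mathbf{E}(B_t-B_s)^2=|t-s|^{2H}\) and compares the result with the leading-order term \(H(2H-1)|t-s|^{2H-2}\).

```python
def increment_covariance(lag, hurst):
    """Exact covariance of two unit fBm increments separated by `lag`.

    Obtained by polarisation from E(B_t - B_s)^2 = |t - s|^(2H):
    cov = (|lag + 1|^(2H) + |lag - 1|^(2H) - 2 |lag|^(2H)) / 2.
    """
    two_h = 2.0 * hurst
    return 0.5 * (abs(lag + 1) ** two_h + abs(lag - 1) ** two_h
                  - 2.0 * abs(lag) ** two_h)


def asymptotic_covariance(lag, hurst):
    """Leading-order behaviour H (2H - 1) |lag|^(2H - 2) for large |lag|."""
    return hurst * (2.0 * hurst - 1.0) * abs(lag) ** (2.0 * hurst - 2.0)


if __name__ == "__main__":
    for hurst in (0.4, 0.75):
        for lag in (10, 100, 1000):
            exact = increment_covariance(lag, hurst)
            approx = asymptotic_covariance(lag, hurst)
            print(hurst, lag, exact, approx)
```

The sign of the prefactor \(H(2H-1)\) shows that increments are positively correlated for \(H > \frac{1}{2}\) and negatively correlated for \(H < \frac{1}{2}\), while they are uncorrelated at non-zero integer lags for \(H = \frac{1}{2}\).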

We furthermore assume that the rapid time evolution of the environment is described by a hidden Markov variable, thus leading to the model

with B an fBM with Hurst parameter \(H\in (0,1)\) in \(\mathbf{R}^m\) and \(F(x,y)\in {{\mathbb {L}}}(\mathbf{R}^m, \mathbf{R}^d)\). The stochastic integral appearing in the first term is problematic when \(H < \frac{1}{2}\): one should really interpret this equation as \(x_t^\varepsilon = \lim _{\delta \rightarrow 0} x_t^{\varepsilon ,\delta }\) with \(x_t^{\varepsilon ,\delta }\) driven by a smooth approximation \(B^\delta \) to B with relevant timescale \(\delta \ll \varepsilon \ll 1\), see Sect. 1.1 below. Regarding the fast Markov variable, a prototypical situation is that of a system of the type

where W is a Wiener process independent of the fBM B appearing in (1.1). This allows for the case where the variable x feeds back into the evolution of y, but for most of this article we assume that there is no x-dependence in (1.2). We also assume that \(y_t\) admits a unique invariant probability measure \(\mu \). In the case with feedback, we have a family of invariant measures \(\mu _x\) obtained by “freezing” the value of the variable x in (1.2).

It was recently shown by the authors in [31] that in the case \(H > \frac{1}{2}\) the process \(x_\varepsilon \) converges in probability to the solution to

where the average of any function h is given by \({\bar{h}}(x) = \int h(x,y)\,\mu _x(dy)\). The aim of the present article is to investigate the two cases left out by the aforementioned analysis, namely what happens when either \(H < \frac{1}{2}\) or when \(H > \frac{1}{2}\) but \({\bar{F}} = 0\) in (1.3)?

1.1 Description of the model

It turns out that the effect of the rapid oscillatory motion described by the fast variable y is to slow down the motion of x in the superdiffusive case and to speed it up in the subdiffusive case. This can be explained by the following heuristics. For times of order \(t \lesssim \varepsilon \), the process Y doesn’t evolve much so that, by the scaling property of the driving fBM, one expects the process x to move by about \(\varepsilon ^H\) in a time of order \(\varepsilon \). On large times \(t \gtrsim \varepsilon \) on the other hand we will see that the limiting process is actually Markovian, even in the case with long-range dependence. This suggests that over times of order t the process x performs about \(t/\varepsilon \) steps of a random walk with step size \(\varepsilon ^H\) and therefore moves by about \(\varepsilon ^H \sqrt{t/\varepsilon }\). This suggests that one should multiply F by \(\varepsilon ^{\frac{1}{2}-H}\) in order to obtain a non-trivial limit.
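The random-walk heuristic above can be written out as a two-line computation. The following sketch is purely illustrative (the function name and the `rescale` flag are ours): it multiplies the step size \(\varepsilon ^H\) by the square root of the number of steps \(t/\varepsilon \), with and without the prefactor \(\varepsilon ^{\frac{1}{2}-H}\).

```python
import math


def heuristic_displacement(t, eps, hurst, rescale=True):
    """Typical displacement after time t according to the random-walk heuristic.

    The slow variable performs about t/eps independent steps of size eps**hurst.
    With rescale=True the coefficient F is multiplied by eps**(0.5 - hurst).
    """
    step_size = eps ** hurst
    number_of_steps = t / eps
    prefactor = eps ** (0.5 - hurst) if rescale else 1.0
    return prefactor * step_size * math.sqrt(number_of_steps)


if __name__ == "__main__":
    for hurst in (0.4, 0.75):
        for eps in (1e-2, 1e-4, 1e-6):
            print(hurst, eps,
                  heuristic_displacement(1.0, eps, hurst, rescale=False),
                  heuristic_displacement(1.0, eps, hurst, rescale=True))
```

Without rescaling, the displacement behaves like \(\varepsilon ^{H-\frac{1}{2}}\sqrt{t}\), which vanishes as \(\varepsilon \rightarrow 0\) for \(H > \frac{1}{2}\) and blows up for \(H < \frac{1}{2}\); with the prefactor it equals \(\sqrt{t}\) for every \(\varepsilon \), consistent with a diffusive limit.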

As a consequence, the equations we actually study in this article are of the form:

(summation over i is implied), where B is a fractional Brownian motion with Hurst parameter H ranging from \(\frac{1}{3}\) to 1 (see Footnote 2), and Y is an independent stationary Markov process with values in some Polish space \(\mathcal{Y}\), invariant measure \(\mu \) and generator \(-\mathcal{L}\) (see Footnote 3). At the moment, we are unfortunately unable to cover the case when X feeds back into the dynamics of Y. When \(H > \frac{1}{2}\), we furthermore assume that \(\int F_i(x,y)\,\mu (dy) = 0\) for every \(i \ne 0\) and every x.

Our main result is that, as \(\varepsilon \rightarrow 0\), solutions to (1.4) converge in law to a limiting Markov process and we provide an expression for its generator. In fact, we have an even stronger form of convergence, namely we show that the flow generated by (1.4) converges to the one generated by a limiting stochastic differential equation of Kunita type (i.e. driven by an infinite-dimensional noise).

Remark 1.1

Of course, (1.4) is not quite the same as (1.2) which was our starting point. One way of relating them more directly is to perform a time change and set \(X^\varepsilon = x_\varepsilon (\varepsilon ^{1-2H}t)\) with \(x_\varepsilon \) solving (1.2). Then \(X^\varepsilon \) solves the equation \(dX^\varepsilon ={\tilde{\varepsilon }}^{{1\over 2}-H} F_i(X^\varepsilon ,Y^{{\tilde{\varepsilon }}})\,dB_i+ {\tilde{\varepsilon }}^{\frac{1}{2H}-1}F_0(X^\varepsilon ,Y^{{\tilde{{\varepsilon }}}})\,dt\) where we have set \({\tilde{{\varepsilon }}}=\varepsilon ^{2H}\). When \(H < \frac{1}{2}\), this then converges to the same limit as (1.4), but of course with \(F_0\) in (1.4) set to 0. When \(H > \frac{1}{2}\), then one would need to take \(F_i\) centred in (1.2) in order to obtain a non-trivial limit and our results imply that one again converges to the same limit as (1.4), at least in the case \(F_0 = 0\).
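The exponents in Remark 1.1 can be checked by bookkeeping alone. The sketch below is an illustration under stated assumptions: it assumes that the unscaled equation has a noise term \(F_i\,dB_i\), a drift term \(F_0\,dt\) and a fast variable evolving on timescale \(\varepsilon \), and it uses the self-similarity of fBM (in law, \(B(ct)\) behaves like \(c^H B(t)\)). The function names are ours.

```python
def prefactors_from_time_change(eps, hurst):
    """Prefactors obtained by substituting t -> c * t with c = eps**(1 - 2H)."""
    c = eps ** (1.0 - 2.0 * hurst)
    noise_prefactor = c ** hurst   # self-similarity: dB(ct) scales like c**H dB(t)
    drift_prefactor = c            # d(ct) = c dt
    fast_timescale = eps / c       # Y(ct / eps) = Y(t / (eps / c))
    return noise_prefactor, drift_prefactor, fast_timescale


def prefactors_from_remark(eps, hurst):
    """Prefactors as stated in Remark 1.1, in terms of eps_tilde = eps**(2H)."""
    eps_tilde = eps ** (2.0 * hurst)
    noise_prefactor = eps_tilde ** (0.5 - hurst)
    drift_prefactor = eps_tilde ** (1.0 / (2.0 * hurst) - 1.0)
    return noise_prefactor, drift_prefactor, eps_tilde


if __name__ == "__main__":
    for hurst in (0.4, 0.5, 0.75):
        print(hurst,
              prefactors_from_time_change(1e-3, hurst),
              prefactors_from_remark(1e-3, hurst))
```

Both functions return the same triple, which confirms that the time change turns the fast timescale into \({\tilde{\varepsilon }}=\varepsilon ^{2H}\) and produces the prefactors \({\tilde{\varepsilon }}^{\frac{1}{2}-H}\) and \({\tilde{\varepsilon }}^{\frac{1}{2H}-1}\). For \(H < \frac{1}{2}\) the drift exponent is positive, so the drift prefactor vanishes as \(\varepsilon \rightarrow 0\), in line with the remark that \(F_0\) is effectively set to 0 in that regime.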

The special case when \(F_0 = 0\) and the \(F_i\) are independent of the x-variable yields a functional central limit theorem for stochastic integrals against fractional Brownian motion. This already appears to be new by itself and might be of independent interest.

As already hinted at, the map \(t \mapsto F_i(\cdot , Y^\varepsilon _t)\) is too irregular to fit into the standard theory of differential equations driven by a fractional Brownian motion, especially when \(H < \frac{1}{2}\), so that it is not even completely clear a priori how to interpret (1.4) for fixed \(\varepsilon > 0\). These questions will be addressed in more detail in Sect. 2 below. Let us put these aside for the moment and consider the following ordinary differential equation

where v is a smooth stationary Gaussian random process with covariance C such that \(C(t) \sim |t|^{2H-2}\) for |t| large. When \(H < \frac{1}{2}\) we furthermore assume that \(\int C(t)\,dt = 0\) and, when \(H = \frac{1}{2}\), we assume that C decays exponentially and satisfies \(\int C(t)\,dt = 1\). One way of obtaining such a process v is to set \(v = \phi * \dot{B}\) for \(\phi \) a Schwartz test function integrating to 1 (and \(*\) denoting convolution in time). This in particular shows that, at least in law, one has \((\varepsilon \delta )^{H-1}v\big ({t \over \varepsilon \delta }\big ) =( \phi _{\varepsilon \delta }* \dot{B})(t)\), where we set \(\phi _\varepsilon (t) = \varepsilon ^{-1}\phi (t/\varepsilon )\). Since this converges in law to \(\dot{B}\) as \(\varepsilon \delta \rightarrow 0\), we can view (1.5) as an approximation to (1.4).

It is then possible to show that the limit \(X^\varepsilon = \lim _{\delta \rightarrow 0} X^{\varepsilon ,\delta }\) exists and our results hold with \(X^\varepsilon \) interpreted in this way. Furthermore, we will see that all our results hold uniformly over \(\delta \in (0,1]\) as \(\varepsilon \rightarrow 0\). This in particular shows that the converse limit obtained by first sending \(\varepsilon \rightarrow 0\) and then \(\delta \rightarrow 0\) is the same, as are all limits obtained by other ways of jointly sending \(\varepsilon ,\delta \rightarrow 0\).

1.2 Description of the main results

We now give a precise formulation of our main results, albeit with a simplified set of assumptions. The reason is that while the simplified assumptions are straightforward to state, they are very stringent regarding the Markov process Y. The more realistic set of assumptions used in the remainder of the article however is quite technical to formulate. We first recall the following standard definition of the fractional powers of the generator of the process Y.

Definition 1.2

We write \(\mathcal{H}= L^2(\mu )\) with \(\mu \) the invariant measure of Y and \(\langle \cdot ,\cdot \rangle _\mu \) for its scalar product. For \(\alpha \in (0,1)\), we then say that \(f\in \mathrm {Dom}(\mathcal{L}^{\alpha } )\) if, for every \(g \in \mathcal{H}\), the integral

converges and determines a bounded functional on \(\mathcal{H}\) (which we then call \(\mathcal{L}^\alpha f\)). Recall that the generator of the process Y is \(-\mathcal{L}\), so that \(\mathcal{L}\) is indeed a positive operator in the reversible case and \(\mathcal{L}^\alpha \) does then coincide with the definition using functional calculus.

Similarly, for \(\alpha \in (-1,0)\), we write \(\mathcal{L}^\alpha \) for the operator given by

Since \(t \mapsto t^{-\alpha -1}\) is locally integrable, it follows from the first point of Assumption 1.3 below that \(\mathcal{L}^\alpha \) is a bounded operator on the subspace of \({\mathrm {Lip}}(\mathcal{Y})\) consisting of mean zero functions.
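To see why a semigroup integral with weight \(t^{-\alpha -1}\) produces a fractional power, it helps to look at a single eigenfunction. The sketch below is an illustration only: the displayed formulas of Definition 1.2 are not reproduced in this text, so the normalisation by \(\Gamma (-\alpha )\) used here is an assumption. If \(\mathcal{L}f = \lambda f\) with \(\lambda > 0\), then \(P_t f = e^{-\lambda t} f\), and for \(\alpha \in (-1,0)\) the standard Gamma-function identity gives \(\int _0^\infty t^{-\alpha -1} e^{-\lambda t}\,dt = \Gamma (-\alpha )\lambda ^{\alpha }\). The code checks this identity numerically.

```python
import math


def weighted_semigroup_integral(lam, alpha, upper=30.0, n=300_000):
    """Approximate int_0^inf t**(-alpha - 1) * exp(-lam * t) dt for alpha in (-1, 0).

    The substitution u = t**beta with beta = -alpha removes the integrable
    singularity at t = 0: the integral becomes
    (1 / beta) * int_0^inf exp(-lam * u**(1 / beta)) du,
    which is evaluated with the midpoint rule on [0, upper].
    """
    if not -1.0 < alpha < 0.0:
        raise ValueError("alpha must lie in (-1, 0)")
    beta = -alpha
    h = upper / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        total += math.exp(-lam * u ** (1.0 / beta))
    return total * h / beta


if __name__ == "__main__":
    lam = 2.0
    for alpha in (-0.3, -0.5):
        numeric = weighted_semigroup_integral(lam, alpha)
        exact = math.gamma(-alpha) * lam ** alpha
        print(alpha, numeric, exact)
```

Dividing by \(\Gamma (-\alpha )\) therefore returns \(\lambda ^\alpha \) on each eigenfunction, which is the sense in which such an integral agrees with the functional calculus in the reversible case. The truncation at `upper` is adequate only when \(\lambda \) is not too small.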

Assuming that \(X^\varepsilon \) takes values in \(\mathbf{R}^d\), we then define the \(d\times d\) matrix-valued function

where \(\mathcal{L}\) acts on the second argument of \(F_k\). As we will see in Remark 1.7, the expression (1.6) is naturally interpreted as the limit \(\delta \rightarrow 0\) of a “local” Green–Kubo formula associated to the fluctuations of (1.5).

Note that the condition \({\bar{F}}_k = 0\) is necessary in the case \(H > \frac{1}{2}\) since the negative power of \(\mathcal{L}\) appearing in this expression does not make sense otherwise, see also Remark 2.5 below. We shall assume mixing conditions and Hölder continuity of the Y variable, see Assumptions 2.1–2.3 below, as well as a regularity condition on \(x \mapsto F(x,\cdot )\) (and also \(F_0\)) as spelled out in Assumption 2.7. A simpler set of conditions is as follows, the first of which is a strengthening of Assumptions 2.1 and 2.3, the second is a strengthening of Assumption 2.2, and the last is just a restatement of Assumption 2.7 in this context.

Assumption 1.3

(Simplified Assumptions). The functions \(F_i\) appearing in (1.4) as well as the Markov process Y satisfy the following.

1. The Markov semigroup associated to the process Y is strongly continuous and has a spectral gap in \({\mathrm {Lip}}(\mathcal{Y})\), the space of bounded Lipschitz continuous functions on \(\mathcal{Y}\).

2. In the case \(H < 1/2\) we assume that, for any \(\alpha < H\), the process \(t \mapsto Y_t\) admits \(\alpha \)-Hölder continuous trajectories and its Hölder seminorm (over intervals of length 1 say) has bounded moments of all orders.

3. When \(H > \frac{1}{2}\), we also assume that \(\int F_i(x,y)\,\mu (dy) = 0\) for every \(i \ne 0\) and every x.

4. There exists \(\kappa > 0\) such that, for every \(i \ge 0\), \(x\mapsto F_i(x,\cdot )\) is \(\mathcal{C}^4\) with values in \({\mathrm {Lip}}(\mathcal{Y})\) and its derivatives of order at most 4 are bounded by \(C (1+|x|)^{-\kappa }\) for some \(C>0\).

Remark 1.4

Recall that a Markov semigroup \((P_t)_{t \ge 0}\) admits a spectral gap in any given Banach space \(E \subset L^2(\mu )\) if \(P_t:E \rightarrow E\) is a bounded linear operator for every t and if there exist constants \(c,C > 0\) such that \(\Vert P_t f - \mu (f)\Vert _E\le Ce^{-ct}\Vert f\Vert _E\) for all \(f \in E\). For this definition to make sense, E of course needs to contain all constant functions.

The reason why we are aiming for a more general result at the expense of a much more technical set of assumptions is that having a spectral gap in \({\mathrm {Lip}}(\mathcal{Y})\) is a very restrictive condition which is not even satisfied for the Ornstein–Uhlenbeck process (see Footnote 4).
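As a toy illustration of the spectral gap estimate in Remark 1.4, consider a two-state continuous-time Markov chain, which is far simpler than the processes treated in this article. The sketch below assumes jump rates a (from state 0 to 1) and b (from state 1 to 0); these names, and the function itself, are ours. In this case \(P_t f - \mu (f)\) decays exactly like \(e^{-(a+b)t}\), so the estimate holds in the supremum norm with \(C = 1\) and \(c = a+b\).

```python
import math


def two_state_semigroup(a, b, t, f):
    """Apply P_t = exp(t Q) to f = (f0, f1) for Q = [[-a, a], [b, -b]].

    The invariant measure is mu = (b, a) / (a + b), and Q has eigenvalues
    0 (constants) and -(a + b) (mean-zero functions), so that
    P_t f = mu(f) + exp(-(a + b) t) * (f - mu(f)).
    Returns the pair (P_t f, mu(f)).
    """
    mu = (b / (a + b), a / (a + b))
    mean = mu[0] * f[0] + mu[1] * f[1]
    decay = math.exp(-(a + b) * t)
    return tuple(mean + decay * (value - mean) for value in f), mean


if __name__ == "__main__":
    a, b, f = 1.0, 2.0, (3.0, -1.0)
    for t in (0.0, 0.5, 1.0, 2.0):
        p_t_f, mean = two_state_semigroup(a, b, t, f)
        sup_norm = max(abs(value - mean) for value in p_t_f)
        print(t, sup_norm, math.exp(-(a + b) * t))
```

Note that, in the convention of this article, the generator of the chain is \(-\mathcal{L}\), so that \(\mathcal{L} = -Q\) has the non-negative eigenvalues 0 and \(a+b\).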

Theorem 1.5

Let \(H\in (\frac{1}{3}, 1)\) and let Assumption 1.3 hold. For fixed \(\varepsilon > 0\), \(\alpha < H\), and \(T>0\), the process \(X^{\varepsilon ,\delta }\) converges in law in \(\mathcal{C}^\alpha ([0,T])\) as \(\delta \rightarrow 0\) to a limit \(X^\varepsilon \) which we interpret as the solution to (1.4).

The solution flow of (1.4) converges in law to that of the Kunita-type stochastic differential equation written in Itô form as

where \({\bar{F}}_0(x)=\int F_0(x,y)\mu (dy)\), \(G_i(x) = (\partial ^{(2)}_j\Sigma _{ji})(x,x)\), W is a Gaussian random field with correlation

and where \(\partial ^{(2)}_j\) denotes differentiation in the jth direction of the second argument.

Proof

As already suggested, this is a special case of our main result, Theorem 2.8 below. The fact that Assumptions 2.1–2.3 and 2.7 are implied by Assumption 1.3 is immediate. (Take \(E_n = {\mathrm {Lip}}(\mathcal{Y})\) for every n.) \(\square \)

As a consequence, we also have the following functional CLT.

Corollary 1.6

Let \(H\in (\frac{1}{3}, 1)\) and let Assumption 1.3 hold (or let Assumptions 2.1–2.3 hold and, when \(H > \frac{1}{2}\), let \(\int F_i(y)\,\mu (dy) = 0\) for every \(i \ge 1\)).

Then the stochastic process \(Z^\varepsilon _t = \sqrt{\varepsilon }\int _0^{t/\varepsilon } F(Y_r)\, dB_r\) converges to a Wiener process W, weakly in \(\mathcal{C}^\alpha ([0,T])\) for any \({\alpha <\frac{1}{2}\wedge H}\). Furthermore, defining the random smooth function \(Z^{\varepsilon ,\delta }_t = \sqrt{\varepsilon }\int _0^{t/\varepsilon } F(Y_r)\, dB_r^\delta \) with \(B^\delta = \phi _\delta * B\), its iterated integral satisfies

where the matrix \(\Sigma \) is given by (1.6) (which is independent of \(x,{\bar{x}}\) in this case).

Remark 1.7

Theorem 1.5 characterises \(\lim _{\varepsilon \rightarrow 0}\lim _{\delta \rightarrow 0}X^{\varepsilon ,\delta }\) and shows that it is a Markov process with generator \({\mathcal {A}}\) given by

Our proof actually carries over with minor modifications to the case when \(\varepsilon \rightarrow 0\) for fixed \(\delta \) (but with convergence bounds that are uniform in \(\delta \)!), in which case the limit is given by the same expression (1.7), but with the matrix \(\Sigma \) given by

where \(R_\delta (t) = \delta ^{2H-2} \mathbf{E}v(0)v(t/\delta )\) and \(P_t = e^{-\mathcal{L}t}\) denotes the Markov semigroup for Y. We will derive this formula in Sect. 1.3 where we will also see that, for frozen values of x, it is a special case of the Green–Kubo formula [42, 45, 61]. Note that (1.7)–(1.10) (in particular the convergence of the flow) is also consistent with [22, Theorem 4.3] where a somewhat analogous situation is considered. It follows from Definition 1.2 that \(\Sigma _\delta \rightarrow \Sigma \) as \(\delta \rightarrow 0\), so that the two limits commute (in law).

Remark 1.8

There has recently been a surge in interest in the study of slow/fast systems involving fractional Brownian motion. We already mentioned the averaging result [31] which considers the case \(H > \frac{1}{2}\) but with \({\bar{F}} \ne 0\). The work [60] considers the case \(H \in (\frac{1}{3},1)\) like the present article, but with the very strong assumption that F is independent of the fast variable, in which case only \(F_0\) exhibits rapid fluctuations and one essentially recovers classical averaging results. In [2], the authors consider the case \(H > \frac{1}{2}\), but with F independent of the slow variable x and, as in [31], not necessarily averaging to zero. They obtain a description of the fluctuations for (a generalisation of) such systems in the regime where there is an additional small parameter in front of F.

Formula (1.6) holds for the continuum of parameters \(H\in (\frac{1}{3}, 1)\). There are two special cases that were previously known. The case \(H = \frac{1}{2}\) reduces of course to the classical stochastic averaging results [17, 30, 66, 67] which state that the generator of the limiting diffusion is obtained by averaging the generator for the slow diffusion with the x variable frozen against the invariant measure for the fast process. Note that for this to match (1.9) one needs to interpret the stochastic integral in (1.4) in the Stratonovich sense. This is natural given that this is the interpretation that one obtains when replacing B by a smooth approximation, which is consistent with Remark 1.7. The fact that one also has convergence of flows however (in the case without feedback considered here) appears to be new even in this case.

Another set of closely related classical results deals with “time homogenisation”, also known as the Kramers–Smoluchowski limit or diffusion creation [44, 61]. There, one considers random ODEs of the type

with F averaging to zero against the stationary measure \(\mu \) for the fast process Y. In this case, one also obtains a Markov process in the limit \(\varepsilon \rightarrow 0\) and its generator coincides with (1.9) if one sets \(H=1\). This can be understood by noting that, at least formally, fractional Brownian motion with Hurst parameter \(H=1\) is given by \(B(t) = ct\) with c a normal random variable, so that (1.4) reduces to (1.11), except for the random constant c, which then appears quadratically in (1.9) and therefore disappears when averaged out.

The standard proofs of averaging/homogenisation results found in the literature tend to fall roughly into two groups. The first contains functional analytic proofs based on general methods for studying singular limits of the form \(\exp (t \mathcal{L}_\varepsilon )\) for \(\mathcal{L}_\varepsilon = \varepsilon ^{-1} \mathcal{L}_0 + \mathcal{L}_1\). This of course requires the full process (slow plus fast) to be Markovian and completely breaks down in our situation. The second group consists of more probabilistic arguments, which typically rely on using corrector techniques to construct sufficiently many martingales to be able to exploit the well-posedness of the martingale problem for the limiting Markov process. The latter are in principle more promising in our situation since the limiting process is still Markovian, but the lack of Markov property makes it unclear how to construct martingales from our process. (But see Sect. 5.3 for a construction that does go in this direction.)

Instead, our proof relies on rough paths theory [15, 53], which has recently been used to recover homogenisation results (formally corresponding to the case \(H=1\)), for example in [40]. See also [1, 7, 12, 14] for more recent results with a similar flavour. In the case when the fast dynamics is non-Markovian and solves an equation driven by a fractional Brownian motion, a collection of homogenisation results were obtained in [23,24,25,26], while stochastic averaging results with non-Markovian fast motions are obtained in [51, 52] for the case \(H>\frac{1}{2}\). The former group of results are proved using rough path techniques, but there is of course an extensive literature on functional limit theorems based on either central or non-central limit theorems, see for example [3, 5, 6, 10, 54, 62, 63].

Finally, note that many physical systems can be regarded as slow/fast systems; these include second order Langevin equations and tagged particles in a turbulent random field [4, 8, 16, 41, 42, 61, 68]. They also arise in the context of perturbed completely integrable Hamiltonian systems [20, 49] and geometric stochastic systems [21, 48, 50, 59]. See also [13, 47, 70] for some review articles/monographs.

Remark 1.9

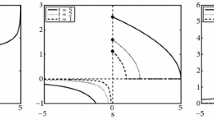

It may be surprising that, when \(H < \frac{1}{2}\), even though \(X_\varepsilon \) is driven by a fractional Brownian motion and F(x, y) isn’t assumed to be centred in the y variable, the limit \({\bar{X}}\) is a regular diffusion. This is unlike the case \(H > \frac{1}{2}\) [31, 52] where a non-centred F leads to an averaging result with a process driven by fBm in the limit. This change in behaviour can be understood heuristically as follows. With \(\eta \) as in (3.5), the covariance of \(t\mapsto f(Y_t^\varepsilon ) \dot{B}_t\) is given by \(\zeta _\varepsilon (t-s) = \eta ''(t-s) g((t-s)/\varepsilon )\) for some function \(g(t)=\langle f, P_{t}f\rangle \), that would typically converge quite fast to a non-zero limit. The scaling properties of \(\eta \) then show that

for some constant \(C_g\) which has no reason to vanish in general. As a consequence, \(\varepsilon ^{1-2H} \zeta _\varepsilon \) converges pointwise to 0 while its integral remains constant, suggesting that \(\varepsilon ^{\frac{1}{2}-H} f(Y_t^\varepsilon ) \dot{B}_t\) indeed converges to a white noise. When \(H > \frac{1}{2}\) however, \(\eta ''\) is not absolutely integrable at infinity and one needs to assume that g vanishes there, which leads to a centering condition. A similar transition from diffusive to super-diffusive behaviour at \(H = \frac{1}{2}\) was observed in a different context in [41].

Remark 1.10

As explained, our result implies more, namely that the (random) flow induced by the SDE (1.4) converges in law to that induced by the Kunita-type SDE [46]

In other words, the flows \(\psi _{s,t}^{\varepsilon }\), where \(\psi _{s,t}^\varepsilon (x)\) denotes the solution at time t to the x-component of (1.4) with initial condition x at time s, converge to a limit \(\psi _{s,t}\) which is Markovian in the sense that \(\psi _{s,t}\) and \(\psi _{u,v}\) are independent whenever \([s,t) \cap [u,v) = \emptyset \). This remark appears to be novel even when \(H=\frac{1}{2}\), but it is unclear whether it extends to the case when x feeds back into the dynamic of y as in (1.2).

Remark 1.11

The term \(\partial ^{(2)}_j\Sigma _{ji}\) appearing in (1.12) looks “almost” like an Itô-Stratonovich correction. In fact, when \(\mathcal{L}\) is self-adjoint on \(L^2(\mu )\), one has \(\Sigma _{ij}(x,{\bar{x}}) = \Sigma _{ji}({\bar{x}},x)\) in which case (1.12) is equivalent to \(dx_i = W_i(x,{\circ }\, dt)+ {\bar{F}}_0^i(x)\,dt\).

1.3 Heuristics for general slow/fast random ODEs

We now show how to heuristically derive (1.10). Consider a random ODE of the form

where \(Z_t^\varepsilon = Z(t/\varepsilon )\) for some stationary (but not necessarily Markovian!) stochastic process Z and \({\hat{F}}(x,\cdot )\) is assumed to be centred with respect to the stationary measure of Z. In the case when \({\hat{F}}(x,z) = {\hat{F}}(z)\) does not depend on x, it follows from the Green–Kubo formula [42, 45, 61] that, at least when Z has sufficiently nice mixing properties, \(X^\varepsilon \) converges as \(\varepsilon \rightarrow 0\) to a Wiener process with covariance \(\Sigma + \Sigma ^\top \), where

This suggests that a natural quantity to consider in the general case is

and that the limit of \(X^\varepsilon \) as \(\varepsilon \rightarrow 0\) is a diffusion with generator of the form

for some drift term b.

To derive the correct expression for the drift b, we note that one expects

in the regime \(\varepsilon \ll \delta t \ll 1\). The left-hand side of this expression is given by

To lowest order, one can approximate this expression by replacing \(X_s^{\varepsilon }\) by \(X_t^{\varepsilon }\), but the resulting expression vanishes rapidly for \(s \gtrsim t+\varepsilon \) due to the centering condition on \({\hat{F}}\). To the next order, one has

where the last identity follows from the substitution \(u = (s-r)/\varepsilon \) combined with the fact that, provided that Z is sufficiently rapidly mixing, we expect the main contribution from this integral to come from \(|u| \approx 1\), while typical values of s are such that \((s-t)/\varepsilon \approx \delta t/\varepsilon \gg 1\). Combining this with (1.16) eventually yields the expression

Comparing this with (1.14), we conclude that

(summation over repeated indices is implied) so that (1.15) can be written as

which does coincide with the expression (1.9) as desired.

In order to link this calculation with the setting of the previous section, we note that (1.5) (with \(F_0 = 0\) for simplicity) can be coerced into the form (1.13) by setting \(Z_t = (\delta ^{H-1}v(t/\delta ), Y_t)\) as well as \({\hat{F}}(x,(v,y)) = F(x,y) v\). In this case, one has

so that one does indeed recover the expression (1.10) for any fixed \(\delta \).
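The scalar, x-independent case of the Green–Kubo formula used above can be verified by hand. The sketch below is a toy illustration under explicit assumptions: Z is a scalar stationary process with covariance \(C(t) = e^{-|t|}\) (our choice), and \(\Sigma \) is taken to be the time-integrated autocovariance \(\int _0^\infty C(t)\,dt\), which is the usual scalar form. For any stationary process, the variance of \(T^{-1/2}\int _0^T Z_s\,ds\) equals \(2\int _0^T (1-t/T)\,C(t)\,dt\), and this should approach \(\Sigma + \Sigma ^\top = 2\Sigma \) as \(T \rightarrow \infty \).

```python
import math


def variance_closed_form(T):
    """Var(T**-0.5 * int_0^T Z_s ds) for covariance C(t) = exp(-|t|).

    Uses int_0^T exp(-t) dt = 1 - exp(-T) and
    int_0^T t * exp(-t) dt = 1 - exp(-T) * (1 + T).
    """
    return 2.0 * ((1.0 - math.exp(-T))
                  - (1.0 - math.exp(-T) * (1.0 + T)) / T)


def variance_by_quadrature(T, covariance, n=100_000):
    """Midpoint rule for 2 * int_0^T (1 - t / T) * covariance(t) dt."""
    h = T / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * h
        total += (1.0 - t / T) * covariance(t)
    return 2.0 * total * h


if __name__ == "__main__":
    sigma = 1.0  # int_0^inf exp(-t) dt
    for T in (1.0, 10.0, 100.0, 1000.0):
        print(T, variance_closed_form(T), 2.0 * sigma)
```

The gap to the limit \(2\Sigma = 2\) is of order \(1/T\), which reflects the finite correlation time of Z. For a covariance decaying like \(|t|^{2H-2}\) with \(H > \frac{1}{2}\) the integral of C diverges, which is one way to see why that case needs the additional centering condition discussed in Remark 1.12.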

Remark 1.12

The eagle-eyed reader will have spotted that since the stationary measure of Z is \(\mathcal{N}(0,C) \otimes \mu \) for some multiple C of the identity matrix and since \({\hat{F}}(x,(v,y))\) is linear in v, the centering condition for \({\hat{F}}\) is always satisfied, independently of the choice of F. This explains why our main result does not require any centering condition when \(H \le \frac{1}{2}\). When \(H > \frac{1}{2}\) however, the covariance function R decays too slowly for the heuristic derivation just given to apply. The centering condition for F then guarantees that correlations decay sufficiently fast to justify the second step in (1.17).

The remainder of this article is structured as follows. In Sect. 2 we introduce the assumptions on the nonlinearities \(F_i\) as well as the fast process Y, we discuss a few examples, and we provide the statement of our main result. In Sect. 3 we then show that solutions to (1.5) converge as \(\delta \rightarrow 0\), which yields in particular a precise interpretation of what we mean by (1.4) when \(H < \frac{1}{2}\). The strategy of proof is as follows. Given a smooth mollification \(B^\delta \) of B, we first show convergence of \(\int _s^t \int _s^r f(u)\, \dot{B}^\delta (u) du\, g(r)\, \dot{B}^\delta (r)dr\) as \(\delta \rightarrow 0\) for any deterministic H-Hölder continuous functions f, g. While we are able to reduce this to existing criteria for canonical rough path lifts of Gaussian processes [9, 18] in the case where the two fractional Brownian motions appearing in this expression are independent, the case where they are equal requires a bit more care and relies on a simple trick given in Proposition 3.4, which is of independent interest. This then allows us to build an infinite-dimensional rough path \({{\mathbf {Z}}}^\varepsilon \) (taking values in a space of vector fields on \(\mathbf{R}^d\)) associated to (1.4) in a similar way as in [40, Sec. 1.5] (see also the “nonlinear rough paths” of [58] and [26]) and to reformulate (1.4) as an RDE driven by \({{\mathbf {Z}}}^\varepsilon \) with nonlinearity given by point evaluation. Section 3.2 provides details of the construction of \({{\mathbf {Z}}}^\varepsilon \), while Sect. 3.3 then uses it to formulate our main technical result, namely Theorem 3.14 which shows that \({{\mathbf {Z}}}^\varepsilon \) converges to a certain rough path lift of an infinite-dimensional Wiener process with covariance function given by \(\Sigma \). The remainder of the article is devoted to the proof of this convergence statement. Section 4 shows tightness of the family \(\{{{\mathbf {Z}}}^\varepsilon \}_{\varepsilon \le 1}\), while we identify its limit in Sect. 5. In both sections, the cases \(H < \frac{1}{2}\) and \(H > \frac{1}{2}\) are treated in a completely different way.

The fact that we have convergence of the full infinite-dimensional rough path allows us to conclude that we do not just have convergence of solutions for fixed initial conditions, but of the full solution flow. One point of note is that there are two separate sources of randomness, namely the Markov process Y and the fractional Brownian motion B. Our convergence result is “annealed” in the sense that our convergence in law requires both sources, but a number of intermediate results are “quenched” in the sense that they hold for almost every realisation of Y. It is an open question whether our final convergence result also holds in the quenched sense.

2 Precise Formulation and Results

In this section, we collect the precise assumptions on the functions \(F_i\) as well as the Markov process Y.

Convention. We write \(A \lesssim B\) as shorthand for \(A \le KB\) with a constant K that will differ from statement to statement.

2.1 Technical assumptions on the fast variable Y

Throughout the article we fix \(H \in (\frac{1}{3},1)\) as well as a sequence \((E_n)_{n \ge 0}\) of Banach spaces such that \(E_n \subset E_{n+1}\) and \(E_n \subset L^1(\mathcal{Y},\mu )\) for every \(n \ge 0\), and such that pointwise multiplication is a continuous operation from \(E_0 \times E_n\) into \(E_{n+1}\) for every \(n\ge 0\). We also write simply E instead of \(E_0\) and assume E contains constant functions. See Sect. 2.2 below for two classes of examples showing what type of spaces we have in mind here.

First, we impose that Y has “nice” ergodic properties in the following sense, which in particular implies that \(\mu \) is its unique invariant measure on \(\mathcal{Y}\).

Assumption 2.1

Let \(N = \infty \) for \(H > \frac{1}{2}\) and \(N=2\) for \(H \in (\frac{1}{3},\frac{1}{2}]\). For every \(n \in [1,N)\), the semigroup \(P_t\) extends to a strongly continuous semigroup on \(E_n\) and there exist constants C and \(c> 0\) (possibly depending on n) such that, for every \(f \in E_n\) with \(\int _\mathcal{Y}f d\mu =0\), one has

In the low regularity case, we also assume that the process Y has some sample path continuity when composed with a function in \(E_2\).

Assumption 2.2

For \(H \in (\frac{1}{3},\frac{1}{2})\) there exists \(p_\star > \max \{4d,12/(3H-1)\}\) such that for every \(f \in E_2\)

for some constant \(c > 0\).
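To get a feeling for how demanding the moment condition in Assumption 2.2 is, one can tabulate the lower bound \(\max \{4d,12/(3H-1)\}\) on \(p_\star \). The helper below is a small illustration (its name is ours); it simply evaluates this expression for \(H \in (\frac{1}{3},\frac{1}{2})\) and dimension d.

```python
def p_star_lower_bound(hurst, dim):
    """Evaluate max{4 d, 12 / (3 H - 1)}, the strict lower bound on p_star."""
    if not (1.0 / 3.0 < hurst < 0.5):
        raise ValueError("Assumption 2.2 concerns H in (1/3, 1/2)")
    return max(4.0 * dim, 12.0 / (3.0 * hurst - 1.0))


if __name__ == "__main__":
    for hurst in (0.34, 0.4, 0.45, 0.49):
        for dim in (1, 10):
            print(hurst, dim, p_star_lower_bound(hurst, dim))
```

The bound blows up as \(H \downarrow \frac{1}{3}\), so that more and more moments are required near the lower end of the admissible range, whereas for H close to \(\frac{1}{2}\) and large d the term 4d dominates.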

We also need some integrability.

Assumption 2.3

For \(H \ge \frac{1}{2}\), one has \(E_n \subset L^2(\mathcal{Y},\mu )\) for every \(n \ge 0\). For \(H < \frac{1}{2}\), one has \(E_2 \subset L^2(\mathcal{Y},\mu )\) and \(E \subset L^{p_\star }(\mathcal{Y},\mu )\).

Remark 2.4

When combining it with the inclusion of the product, Assumption 2.3 implies that \(E \subset \bigcap _{p \ge 1} L^p(\mathcal{Y},\mu )\) for \(H\ge \frac{1}{2}\).

Another consequence of these assumptions is as follows.

Remark 2.5

As a consequence of Assumption 2.2, we conclude that if \(f \in E_2\) and \(H < \frac{1}{2}\), then

Recalling the definition of \(\mathcal{L}^\alpha \) from Definition 1.2, it follows that \(E_2 \subset \mathrm {Dom}(\mathcal{L}^\alpha )\) for every \(\alpha < H\) so that (1.6) is indeed well defined provided that \(F_k(x,\cdot ) \in E_2\) for every x. This will be guaranteed by Assumption 2.7 below.

2.2 Examples of fast variables

One possible concrete framework is as follows. Fix two weights \(V:\mathcal{Y}\rightarrow [1,\infty ]\) and \(W :\mathcal{Y}\rightarrow (0,\infty )\) and a metric d on \(\mathcal{Y}\) generating its topology with the property that there exists \(C>0\) such that, for all \(x,y \in \mathcal{Y}\) with \(d(x,y) \le 1\), one has

For \(n \ge 1\), we then let \(\mathcal{B}_{V,W}\) be the Banach space of functions \(f :\mathcal{Y}\rightarrow \mathbf{R}\) such that

One choice of scale of function spaces that is suitable for a large class of Markov processes is to take \(E_n = \mathcal{B}_{V,W}\) for every \(n \ge 1\) (and suitably chosen V and W), while \(E_0\) is chosen to be the space of bounded Lipschitz continuous functions, namely \(\mathcal{B}_{1,1}\).

This framework is relatively general since it allows for a wide variety of choices of V, W, and of distance functions on \(\mathcal{Y}\), see [33, 36]. For example, it was shown in [32, Thm. 1.4] that the 2D stochastic Navier–Stokes equations exhibit a spectral gap in such spaces under extremely weak conditions on the driving noise. More precisely, for every \(\eta \) small enough there exist constants C and \(\gamma \) such that

for every Fréchet differentiable function f and every \(t\ge 0\), where

This at first sight appears to fall outside our framework, but one notices that if one sets

then the norm \(\Vert \cdot \Vert _{V,W}\) with \(V(x) = \exp (\eta |x|^2)\) and \(W(x) = 1/(1+|x|)\) is equivalent to the norm (2.4). The reason for the choice of d as in (2.5), which is then “undone” by our choice of W, is to guarantee that (2.3) holds for V, which would not be the case for \(|x-y| \le 1\) in the Euclidean distance.

To verify Assumption 2.2 one can then for example make use of the following.

Lemma 2.6

Suppose that \(\int \big (V(x)(1+W(x))\big )^{p_\star }\,\mu (dx) < \infty \) and there exists a constant c such that, for some \(\alpha _0>1-2H\),

Let \(f \in \mathcal{B}_{V,W}\) and \(2p \le p_\star \), then

In particular, on any fixed time interval, we have \(\mathbf{E}\Vert f(Y_\cdot )\Vert _{\mathcal{C}^\alpha }^p < \infty \) provided that \(p(\alpha _0-\alpha ) > 1\).

Proof

For \(f \in E\) with \(\Vert f\Vert _E \le 1\) and for \(p \le \frac{1}{2} p_\star \), one has

where we combined (2.6) with Markov’s inequality in the last step. \(\square \)

When \(H \ge \frac{1}{2}\), Assumption 2.2 is empty, so only integrability conditions are required on the spaces \(E_n\). This allows one, for example, to use Harris’s theorem [29, 34, 55] to verify Assumption 2.1 for spaces of functions with weighted supremum norms. More precisely, one would then take E to be the space of all bounded Borel measurable functions and \(E_n = \mathcal{B}_V\), the Banach space of functions \(f :\mathcal{Y}\rightarrow \mathbf{R}\) such that

In order to verify our assumptions, it then suffices that V is a square integrable Lyapunov function for the Markov process Y and that the sublevel sets of V satisfy a ‘small set’ condition for the transition probabilities of Y [55].

2.3 Main results

One final assumption we need is that the nonlinearities F and \(F_0\) appearing in (1.4) are sufficiently nice E-valued functions of their first argument. More precisely, we assume the following.

Assumption 2.7

The map \(x \mapsto F(x,\cdot )\) is of class \(\mathcal{C}^4\) with values in E and there exists an exponent \(\kappa > \frac{16 d}{p_\star }\) with \(p_\star \) as in Assumption 2.2 (and simply \(\kappa > 0\) when \(H > \frac{1}{2}\)) such that, for every multi-index \(\ell \) of length at most 4,

The same is assumed to hold true for \(F_0\). When \(H > \frac{1}{2}\), we further assume that \(\int F_i(x,y)\,\mu (dy) = 0\) for every \(i \ne 0\) and every x.

The condition \(F \in \mathcal{C}^4\) is of course suboptimal and could probably be lowered to \(F \in \mathcal{C}^\beta \) for \(\beta > \max \{H^{-1},2\}\) and \(F_0 \in \mathcal{C}^\beta \) for \(\beta > 1\), at least if enough integrability is assumed in Assumption 2.3. We also now fix a Schwartz function \(\phi \) integrating to 1 and set \(\phi _\delta (t)=\frac{1}{\delta }\phi (t/ \delta )\). We then write \(B^\delta \) for the convolution of B with this mollifier, namely \(B^\delta (t) = (\phi _\delta * B)(t) = \int _\mathbf{R} \phi _\delta (t-s)\,B(s)\,ds\).

With this notation, the solutions to (1.5) are equal in law to the process given by

Since \(B^{\varepsilon \delta }\) is smooth, this equation should be interpreted as an ordinary differential equation that just happens to have random coefficients. With all these preliminaries at hand, our main result is the following.

Theorem 2.8

For \(H \in (\frac{1}{3},1]\) and under Assumptions 2.1–2.3, and 2.7, the conclusions of Theorem 1.5 hold. With \(X^{\varepsilon ,\delta }\) defined in (2.7), the convergence \(X^{\varepsilon ,\delta } \rightarrow X^\varepsilon \) furthermore holds in probability.

Proof

The convergence in probability of the flow \(X^{\varepsilon ,\delta } \rightarrow X^{\varepsilon }\) is the content of Proposition 3.2 below. The conclusion of Theorem 1.5, namely the convergence in law of the flow for (1.4) as \(\varepsilon \rightarrow 0\), is the content of Corollary 3.15. \(\square \)
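The role of the mollified noise \(B^\delta \) can be illustrated numerically. The following is a minimal sketch and not part of the argument: it assumes a Gaussian density for \(\phi \), a uniform grid on [0, 1], and a row renormalisation of the discretised kernel near the ends of the grid (all of these are choices made here for illustration only). It computes the root mean square of \(B^\delta - B\) exactly from the covariance of fractional Brownian motion, so no sampling is involved.

```python
import numpy as np

def fbm_covariance(times, H):
    """Covariance E[B_s B_t] = (|s|^{2H} + |t|^{2H} - |t-s|^{2H}) / 2 of fBM."""
    s, t = np.meshgrid(times, times, indexing="ij")
    return 0.5 * (np.abs(s) ** (2 * H) + np.abs(t) ** (2 * H) - np.abs(t - s) ** (2 * H))

def mollifier_matrix(times, delta):
    """Discretised convolution with phi_delta, where phi is a Gaussian density.

    Rows are renormalised to sum to 1; this boundary treatment is an
    assumption of the sketch, not something taken from the text.
    """
    diff = (times[:, None] - times[None, :]) / delta
    weights = np.exp(-0.5 * diff**2)
    return weights / weights.sum(axis=1, keepdims=True)

def rms_mollification_error(times, H, delta):
    """Root mean square over the grid of E|B^delta_t - B_t|^2."""
    cov = fbm_covariance(times, H)
    residual = mollifier_matrix(times, delta) - np.eye(len(times))
    # Diagonal of residual @ cov @ residual.T, i.e. the variance of B^delta_t - B_t.
    pointwise_variance = np.sum((residual @ cov) * residual, axis=1)
    return float(np.sqrt(np.mean(pointwise_variance)))

times = np.linspace(0.0, 1.0, 401)
H = 0.4
errors = [rms_mollification_error(times, H, delta) for delta in (0.2, 0.05, 0.0125)]
```

The entries of `errors` decrease with \(\delta \), in line with the expectation that \(B^\delta \rightarrow B\) at a rate governed by the Hölder regularity of B. This says nothing about the iterated integrals, which are the delicate part of the convergence.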

3 Convergence of Smooth Approximations

We first address the question of the convergence in probability of solutions to (1.5) to those of (1.4) as \(\delta \rightarrow 0\) for \(\varepsilon > 0\) fixed. In fact, we will directly provide an interpretation of (1.4) and show that this interpretation is sufficiently stable to allow for the approximation (1.5).

Our convergence proof relies on the theory of rough paths; we refer to [15] for an introduction. The main insight of this theory is that even though, for \(H \le \frac{1}{2}\), the solution map \(B \mapsto X\) for equations of the type (1.4) isn’t continuous when viewing B as an element of any classical function space large enough to contain typical sample paths of fractional Brownian motion, it does become continuous when enhancing B with its iterated integrals \({{\mathbb {B}}}= \int B\otimes dB\) and endowing the space of pairs \((B,{{\mathbb {B}}})\) with a suitable topology.

For this, consider for any \(x \in \mathbf{R}^d\) the processes

Here, the first integral is interpreted as a Wiener integral which makes sense also when \(\delta = 0\) and, when \(\delta > 0\), coincides with the Riemann–Stieltjes integral. Recall that the Wiener integral of a deterministic (or independent) integrand against any Gaussian process B is well-defined provided that the integrand belongs to the reproducing kernel Hilbert space \(\mathcal{H}_B\) of B and provides an isometric embedding \(\mathcal{H}_B \ni f \mapsto \int f\,dB \in L^2(\Omega ,\mathbf{P})\). In the case of fractional Brownian motion, it is known that \(L^2 \subset \mathcal{H}_B\) when \(H \ge \frac{1}{2}\) while for \(H < \frac{1}{2}\) one has \(\mathcal{C}^\alpha \subset \mathcal{H}_B\) for every \(\alpha > \frac{1}{2}-H\). The fact that for fixed x and \(\varepsilon > 0\), \(t \mapsto F(x,Y^\varepsilon _t)\) belongs to \(\mathcal{H}_B\) for all \(H > \frac{1}{3}\) is then a simple consequence of Assumptions 2.2 and 2.3 combined with Kolmogorov’s continuity criterion (when \(H < \frac{1}{2}\)).

Write \(\mathcal{B}= \mathcal{C}_b^3(\mathbf{R}^d,\mathbf{R}^d)\) and \(\mathcal{B}_k = \mathcal{C}_b^3(\mathbf{R}^{d\cdot k},(\mathbf{R}^{d})^{\otimes k})\), so that one has canonical inclusions of the algebraic tensor product \(\mathcal{B}_k \otimes _0 \mathcal{B}_\ell \subset \mathcal{B}_{k+\ell }\) with the usual identification \((f\otimes g)(x,y)= f(x) g(y)\) thanks to the fact that \(\Vert f\otimes g\Vert _{\mathcal{B}_{k+\ell }}\le \Vert f\Vert _{\mathcal{B}_k}\Vert g\Vert _{\mathcal{B}_\ell }\). Given a final time \(T>0\) and \(\alpha \in (\frac{1}{3},\frac{1}{2})\), we define the space \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) of \(\alpha \)-Hölder rough paths in the usual way [15, Def. 2.1], but with all norms of level-2 objects in \(\mathcal{B}_2\). Recall that an \(\alpha \)-Hölder rough path \((X, {{\mathbb {X}}})\) is a pair of functions where \(X\in \mathscr {C}^\alpha ([0,T], \mathcal{B})\) with \(X_0=0\) and \({{\mathbb {X}}}: \Delta _T \rightarrow \mathcal{B}_2\) where \(\Delta _T :=\{(s,t): 0\le s\le t \le T\}\) is the two-simplex with \(|{{\mathbb {X}}}|_{2 \alpha }:=\sup _{(s,t)\in \Delta _T}\frac{\Vert {{\mathbb {X}}}_{s,t}\Vert _{\mathcal{B}_2}}{|t-s|^{2 \alpha }}<\infty \). In addition, Chen’s relation is imposed, namely \({{\mathbb {X}}}_{s,t}-{{\mathbb {X}}}_{s,u}-{{\mathbb {X}}}_{u,t}=X_{s,u}\otimes X_{u,t}\).
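Chen’s relation is easiest to see in finite dimensions. The following sketch is a toy illustration only: it assumes an \(\mathbf{R}^3\)-valued piecewise linear path in place of the \(\mathcal{B}\)-valued paths considered here, and the helper names are hypothetical. It computes the canonical second-level increments \(\int _s^t (X_r - X_s)\otimes dX_r\) exactly and measures how far Chen’s relation is from holding, together with the integration by parts identity \({{\mathbb {X}}}_{s,t} + {{\mathbb {X}}}_{s,t}^\top = X_{s,t}\otimes X_{s,t}\) satisfied by canonical lifts of smooth paths.

```python
import numpy as np

def increment(path, s, t):
    """First-level increment X_{s,t} = X_t - X_s for grid indices s <= t."""
    return path[t] - path[s]

def iterated_integral(path, s, t):
    """Second-level increment int_s^t (X_r - X_s) (x) dX_r of a piecewise linear path.

    On each linear segment the contribution is computed exactly as
    (X_k - X_s) (x) step + 1/2 step (x) step.
    """
    dim = path.shape[1]
    total = np.zeros((dim, dim))
    for k in range(s, t):
        step = path[k + 1] - path[k]
        total += np.outer(path[k] - path[s], step) + 0.5 * np.outer(step, step)
    return total

rng = np.random.default_rng(0)
path = np.cumsum(rng.normal(size=(50, 3)), axis=0)  # arbitrary vertices in R^3
s, u, t = 5, 20, 42

# Chen's relation: XX_{s,t} - XX_{s,u} - XX_{u,t} = X_{s,u} (x) X_{u,t}
lhs = iterated_integral(path, s, t) - iterated_integral(path, s, u) - iterated_integral(path, u, t)
rhs = np.outer(increment(path, s, u), increment(path, u, t))
chen_defect = float(np.max(np.abs(lhs - rhs)))

# Integration by parts: XX_{s,t} + XX_{s,t}^T = X_{s,t} (x) X_{s,t}
level_two = iterated_integral(path, s, t)
first = increment(path, s, t)
symmetry_defect = float(np.max(np.abs(level_two + level_two.T - np.outer(first, first))))
```

Both defects vanish up to floating point error. For the rough paths considered in this article these identities are inherited from smooth approximations by passing to the limit, whereas the analytic bounds require separate arguments.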

We define the second-order processes \({{\mathbb {Z}}}^{\varepsilon ,\delta }\) and \({\bar{{{\mathbb {Z}}}}}^\varepsilon \) by

(the differentials are taken in the r variable) and we define \({{\mathbf {Z}}}^{\varepsilon ,\delta } = (Z^{\varepsilon ,\delta },{{\mathbb {Z}}}^{\varepsilon ,\delta })\), \({\bar{{{\mathbf {Z}}}}}^\varepsilon = ({\bar{Z}}^\varepsilon ,{\bar{{{\mathbb {Z}}}}}^\varepsilon )\). Note here that \(r \mapsto Z_{s,r}^{\varepsilon ,\delta }(x)\) is smooth and \(r \mapsto {\bar{Z}}_{s,r}^{\varepsilon }(x)\) is Hölder continuous for any exponent strictly less than 1, so these integrals should be interpreted as regular Riemann–Stieltjes integrals. In Sect. 3.2 below we will give a proof of the following result.

Proposition 3.1

Let \(H \in (\frac{1}{3},1]\), let \(\alpha \in (\frac{1}{3},H\wedge \frac{1}{2})\) and \(\beta \in (1-\alpha ,1)\), and let Assumptions 2.1–2.3, and 2.7 hold. Then, \({{\mathbf {Z}}}^{{\varepsilon },\delta }\) and \({\bar{{{\mathbf {Z}}}}}^\varepsilon \) admit versions that are random elements in \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) and \(\mathscr {C}^\beta ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) respectively. Furthermore, \({{\mathbf {Z}}}^{{\varepsilon },\delta }\) converges in probability in \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) as \(\delta \rightarrow 0\) to the random rough path \({{\mathbf {Z}}}^{{\varepsilon }}\) characterised in Proposition 3.11 below. (In particular, the first order component \(Z^{{\varepsilon }}\) of \({{\mathbf {Z}}}^{{\varepsilon }}\) is given, for any fixed x, by the Wiener integral (3.1) with \(\delta = 0\).)

For now, we take this result for granted. With it in place, we obtain the following convergence result as \(\delta \rightarrow 0\).

Proposition 3.2

The second claim of Theorem 2.8 holds.

Proof

With the space \(\mathcal{B}\) as above, let \(\delta :\mathbf{R}^d \rightarrow L(\mathcal{B},\mathbf{R}^d)\) be the function given by \(\delta (x)(f) = f(x)\). We then claim that, for any \(\varepsilon , \delta > 0\), (2.7) can be rewritten as the rough differential equation (RDE) driven by the infinite-dimensional rough paths \({{\mathbf {Z}}}^{{\varepsilon },\delta }\) and \({\bar{{{\mathbf {Z}}}}}^{{\varepsilon }}\) defined above and given by

Note that since \(\alpha + \beta > 1\), there is no need to specify cross-integrals between \({{\mathbf {Z}}}^{\varepsilon ,\delta }\) and \({\bar{{{\mathbf {Z}}}}}^{\varepsilon }\) since they can be defined in a canonical way using Young integration [72].

To check that this RDE is well-posed for any rough paths \({{\mathbf {Z}}}^{{\varepsilon },\delta }\) and \({\bar{{{\mathbf {Z}}}}}^\varepsilon \) belonging to \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) and \(\mathscr {C}^\beta ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) respectively, we first note that the map \(\delta \) is Fréchet differentiable, and actually even \(\mathcal{C}^3_b\). Its differential \(D\delta \) at \(x\in \mathbf{R}^d\) in the direction of \(y\in \mathbf{R}^d\) is given by \((D\delta )_x(y)(h) = (Dh)_x(y)\) where \(h\in \mathcal{B}\). Moreover, \(|(D\delta )_x(h)|\le |h|_\mathcal{B}\). In particular we may consider the map \(D\delta \cdot \delta :\mathbf{R}^d \rightarrow L(\mathcal{B}\otimes \mathcal{B},\mathbf{R}^d)\) which for \(h = h_1 \otimes h_2\in \mathcal{B}_2\) is given by

for a suitable partial trace \(\mathop {\mathrm {tr}}\limits \). This shows that \(D\delta \cdot \delta \) extends continuously to a \(\mathcal{C}^2_b\) map from \(\mathbf{R}^d\) into \(L(\mathcal{B}_2, \mathbf{R}^d)\). (Since \(\mathcal{B}_2\) differs from the projective tensor product of \(\mathcal{B}\) with itself, this doesn’t automatically follow from the fact that \(\delta \) itself is \(\mathcal{C}^3_b\).) Retracing the standard existence and uniqueness proof for RDEs, [15, Sec. 8.5] then shows that (3.2) admits unique (global) solutions for every initial condition and every driving path. Furthermore, if the sample path \(t \mapsto Y(t)\) is given by any continuous \(\mathcal{Y}\)-valued function then, under the stated regularity conditions on \(F, F_0\), it is straightforward to verify that the solutions to (3.2) coincide with those of (2.7).

Since the RDE solution is a jointly locally Lipschitz continuous function of both the initial data \(x_0\) and the driving paths \({{\mathbf {Z}}}^{\varepsilon ,\delta }\) and \({\bar{{{\mathbf {Z}}}}}^{\varepsilon }\) into \(\mathcal{C}^\alpha (\mathbf{R}_+,\mathbf{R}^d)\), the claim that \(X^{\varepsilon ,\delta } \rightarrow X^\varepsilon \) in probability then follows immediately from Proposition 3.1. \(\square \)

Remark 3.3

This shows that it is consistent to define solutions to (1.4) in the general case as simply being a shorthand for solutions to (3.2) driven by the pair of rough paths \({{\mathbf {Z}}}^\varepsilon \) and \({\bar{{{\mathbf {Z}}}}}^\varepsilon \). This is the interpretation that we will use from now on. The fact that in the special case \(H =\frac{1}{2}\) this coincides with the Stratonovich interpretation of the equation follows as in [15, Thm 9.1].

3.1 Preliminary results

In this section we present a few general results that will be used in the proof of Proposition 3.1. We start with the following elementary property of the second Wiener chaos.

Proposition 3.4

Let \(\mathcal{H}\subset \mathcal{E}\) be an abstract Wiener space and let \(B, {\tilde{B}}\) be two i.i.d. Gaussian random variables on \(\mathcal{E}\) with Cameron–Martin space \(\mathcal{H}\). Let \(K^\delta :\mathcal{E}\times \mathcal{E}\rightarrow \mathbf{R}\) be continuous bilinear maps such that the limit \({\tilde{K}} = \lim _{\delta \rightarrow 0} K^\delta (B,{\tilde{B}})\) exists in \(L^2\). Then, the limit

exists in \(L^2\). Furthermore, the limit in (3.4) depends only on the limit \({\tilde{K}}\) and not on the approximating sequence \(K^\delta \) and one has the bound \(\mathbf{E}K^2 \le 2 \mathbf{E}{\tilde{K}}^2\).

Proof

The Gaussian probability space generated by the pair \((B,{\tilde{B}})\) has Cameron–Martin space \(\mathcal{H}\oplus {\tilde{\mathcal{H}}}\) where \({\tilde{\mathcal{H}}}\) is a copy of \(\mathcal{H}\). Since \(K^\delta \) is bilinear and \(K^\delta (B,{\tilde{B}})\) has vanishing expectation, it belongs to the second homogeneous Wiener chaos, so that there exists \({\hat{\mathrm {K}}}^\delta \in (\mathcal{H}\oplus {\tilde{\mathcal{H}}}) \otimes _s (\mathcal{H}\oplus {\tilde{\mathcal{H}}})\) with \(K^\delta (B,{\tilde{B}}) = \mathcal{I}_2({\hat{\mathrm {K}}}^\delta )\), where \(\mathcal{I}_k\) denotes the usual isometry between the kth symmetric tensor power and the kth homogeneous Wiener chaos, see [57].

Note now that, interpreting \({\hat{\mathrm {K}}}^\delta \) as a Hilbert–Schmidt operator on \(\mathcal{H}\oplus {\tilde{\mathcal{H}}}\), there exists \(\mathrm {K}^\delta \in {\tilde{\mathcal{H}}} \otimes \mathcal{H}\) such that \({\hat{\mathrm {K}}}^\delta = \iota \mathrm {K}^\delta \), where \(\iota :{\tilde{\mathcal{H}}} \otimes \mathcal{H}\rightarrow (\mathcal{H}\oplus {\tilde{\mathcal{H}}}) \otimes _s (\mathcal{H}\oplus {\tilde{\mathcal{H}}})\) is given by

with the obvious matrix notation and \(\tau :{\tilde{\mathcal{H}}} \otimes \mathcal{H}\rightarrow \mathcal{H}\otimes {\tilde{\mathcal{H}}}\) the transposition operator.

This is because the first diagonal block is obtained by testing against \(\langle k, (B, {\tilde{B}})\rangle \) where \(k=(f, 0) \otimes _s (g,0)\) with \(f,g \in \mathcal{H}\), yielding

The second diagonal block vanishes for the same reason with the roles of B and \({\tilde{B}}\) exchanged. (Here we denote by \(x\mapsto \langle x,f\rangle \) the unique element of \(L^2(\mathcal{E})\) which is linear on a set of full measure containing \(\mathcal{H}\) and coincides with \(\langle f,\cdot \rangle \) there. In fact, \(\langle x,f\rangle =f^*(x)\) when \(f^*\in \mathcal{E}^*\) and \(\langle B,f\rangle =f^*\circ B\).)

On the other hand, one has

where this time \(\mathcal{I}_2\) refers to the isometry between \(\mathcal{H}\otimes _s\mathcal{H}\) and the second chaos generated by B only. Since \(\mathcal{I}_2\) and \(\tau \) are both isometries, it immediately follows that \(\mathbf{E}(K^\delta _\diamond (B,B))^2 \le \Vert \mathrm {K}^\delta \Vert ^2 = 2 \Vert \iota \mathrm {K}^\delta \Vert ^2 = 2\mathbf{E}(K^\delta (B,{\tilde{B}}))^2\), and similarly for differences \(K^\delta - K^{\delta '}\), so that the claim follows. \(\square \)
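The bound \(\mathbf{E}K^2 \le 2 \mathbf{E}{\tilde{K}}^2\) can be checked by hand in finite dimensions. The sketch below is an illustration under stated assumptions: it takes \(\mathcal{E}= \mathcal{H}= \mathbf{R}^n\) with standard Gaussian vectors, a bilinear form \(K(x,y) = x^\top A y\) for an arbitrary matrix A, and it assumes that the diagonal object is the centred quantity \(K(B,B) - \mathbf{E}K(B,B)\). In that setting both second moments are explicit, and the inequality reduces to \(\mathrm {tr}(A^2) \le \Vert A\Vert _F^2\), where \(\Vert \cdot \Vert _F\) is the Frobenius norm.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 6
A = rng.normal(size=(n, n))  # matrix of the bilinear form K(x, y) = x^T A y

# Exact second moments for independent standard Gaussian vectors B, B_tilde in R^n:
#   E[(B^T A B_tilde)^2]     = ||A||_F^2
#   E[(B^T A B - tr A)^2]    = ||A||_F^2 + tr(A^2)
decoupled = float(np.sum(A**2))
diagonal = float(np.sum(A**2) + np.trace(A @ A))

# Monte Carlo sanity check of the two closed-form expressions.
samples = 200_000
B = rng.normal(size=(samples, n))
B_tilde = rng.normal(size=(samples, n))
mc_decoupled = float(np.mean(np.einsum("ki,ij,kj->k", B, A, B_tilde) ** 2))
mc_diagonal = float(np.mean((np.einsum("ki,ij,kj->k", B, A, B) - np.trace(A)) ** 2))
```

Equality \(\mathbf{E}K^2 = 2\mathbf{E}{\tilde{K}}^2\) holds in this toy model exactly when A is symmetric, which suggests that the constant 2 cannot be improved in general.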

Remark 3.5

It follows immediately from (3.4) that if we replace \(K^\delta \) by the same sequence of bilinear maps, but with their two arguments exchanged, the limit one obtains is the same.

Before we turn to the precise statement, we introduce the following notation which will be used repeatedly in the sequel. We write \(\eta \) for the distribution on \(\mathbf{R}\) given by

and \(\eta ''\) for its second distributional derivative. For \(a< 0 < b\), we will then make the abuse of notation \(\int _a^b \eta ''(t)\,\phi (t)\,dt\) as a shorthand for \(\lim _{\varepsilon \rightarrow 0} \langle \eta '', \phi \mathbf {1}^\varepsilon _{[a,b]}\rangle \), where \(\mathbf {1}^\varepsilon _{[a,b]}\) denotes a mollification of the indicator function \(\mathbf {1}_{[a,b]}\). The following is elementary.

Lemma 3.6

Let \(a<0<b\) and \(H \in (\frac{1}{3},\frac{1}{2})\). Setting \(\alpha _H= H(1-2H)\) and \(\phi _0 = \phi (0)\), the limit above is given by

independently of the choice of mollifier, thus justifying the notation. For \(a = 0\), we set

which can be justified in a similar way, provided that the mollifier one uses is symmetric. (This in turn is the case if we view \(\eta \) as the limit of covariances of smooth approximations to fractional Brownian motion.)

For \(H = \frac{1}{2}\), one similarly has \(\int _a^b \eta ''(t)\,\phi (t)\,dt = \phi _0\) and \(\int _0^b \eta ''(t)\,\phi (t)\,dt = \frac{1}{2} \phi _0\), while for \(H \in (\frac{1}{2},1)\), \(\eta ''\) is given by the locally integrable function \(t \mapsto -2\alpha _H |t|^{2H-2}\).

\(\square \)
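The formula for \(\eta ''\) in the regular case can be verified numerically. The sketch below assumes \(\eta (t) = |t|^{2H}\), which is the normalisation suggested by the proof of Proposition 3.8, and compares a central finite difference of \(\eta \) with \(-2\alpha _H |t|^{2H-2}\) away from the origin for one value \(H \in (\frac{1}{2},1)\). The grid, the step size and the function names are arbitrary choices made for this illustration.

```python
import numpy as np

def eta(t, H):
    """Assumed normalisation eta(t) = |t|^{2H}."""
    return np.abs(t) ** (2 * H)

def eta_second_derivative(t, H):
    """Claimed density of eta'' for H in (1/2, 1): -2 alpha_H |t|^{2H-2}."""
    alpha_H = H * (1 - 2 * H)
    return -2 * alpha_H * np.abs(t) ** (2 * H - 2)

H = 0.7
t = np.linspace(0.5, 3.0, 26)  # stay away from the singularity at t = 0
h = 1e-3
finite_difference = (eta(t + h, H) - 2 * eta(t, H) + eta(t - h, H)) / h**2
max_error = float(np.max(np.abs(finite_difference - eta_second_derivative(t, H))))
```

The discrepancy `max_error` is of the order of the truncation error of the finite difference scheme. For \(H < \frac{1}{2}\) the same pointwise identity holds away from the origin, but \(|t|^{2H-2}\) is no longer locally integrable, which is why the renormalised expression of Lemma 3.6 is needed there.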

We now show that for any fixed \(\varepsilon > 0\), the processes \(Z^\varepsilon \) satisfy a suitable form of Hölder regularity. To keep notations shorter, we define the collection of processes

indexed by \(\mathbf{R}^m\)-valued functions f that belong to the reproducing kernel space of the fractional Brownian motion B. Here \(\{e^i\}\) is an orthonormal basis of \(\mathbf{R}^m\) and \(B_r=(B_r^1, \dots , B_r^m)\). We start our analysis with a preliminary result for the irregular case \(H < \frac{1}{2}\).

Lemma 3.7

Let \(H \in (\frac{1}{3},\frac{1}{2})\) and let \(f\in \mathcal{C}^\beta ([0,1],\mathbf{R}^m)\) for some \(\beta > (1-2H) \vee 0\). The processes \(Z^f\) satisfy the Coutin–Qian conditions [9, 18, Def. 14] in the sense that

for all \(0< s< t < 1\) and all \(h \in (0, t-s]\).

Proof

The mixed second order distributional derivative of \( \mathbf{E}(B^\delta _sB_t^\delta )\) is given by \(\frac{1}{2} \eta _\delta ''(s-t)\), the convolution of \(\frac{1}{2}\eta ''\) with a symmetric mollifier at scale \(\delta \). Mollifying B and taking limits shows that we have the identity

(with summation over the components of f implied). For \(H < \frac{1}{2}\), this yields the bound

as required.

Regarding the covariance, we have

when \(0<h\le t-s\), so that the intervals overlap in at most one point, as required. \(\square \)

If \({\tilde{B}}\) is an independent copy of B, we can combine this result with those of [18] to conclude that there is a canonical rough path associated with the path \(\big (\int f(r)\,dB(r), \int g(r)\,d{\tilde{B}}(r)\big )\) for any \(f,g\in \mathcal{C}^\beta ([0,1],\mathbf{R}^m)\). We now show that there also exists a canonical lift for \((Z^f, Z^g)\), where the integrals are defined with respect to the same fractional Brownian motion B. With this notation, we then have the following result.

Proposition 3.8

For \(H \in (\frac{1}{3},\frac{1}{2})\), we set \(\alpha = H\) and \(U = \mathcal{C}^\beta ([0,1],\mathbf{R}^m)\) for some \(\beta \in (\frac{1}{3} , H)\). For \(H \in [\frac{1}{2}, 1)\), we fix \(p > 3/(3H-1)\) and set \(\alpha = H-\frac{1}{p}\) and \(U = L^p([0,1],\mathbf{R}^m)\).

Then, for any finite collection \(\{f_i\}_{i \le N} \subset U\), there is a “canonical lift” of

given by (3.7) to a geometric rough path \({{\mathbf {Z}}}^f = (Z^f, {{\mathbb {Z}}}^f)\) on \(\mathbf{R}^N\) such that

for every \(q \ge 1\). This is obtained by taking the limit as \(\delta \rightarrow 0\) of the canonical lift of the smooth paths \(Z^{\delta ,f}\) defined as in (3.7) but with B replaced by \(B^\delta \).

Remark 3.9

As usual, “geometric” here means that \({{\mathbf {Z}}}^f\) is the limit of canonical lifts of smooth functions. Indeed, for \(B^\delta \) the convolution of B with a mollifier at scale \(\delta > 0\), \({{\mathbb {Z}}}^{f}\) is given by

and this limit is independent of the choice of mollifier (and therefore “canonical”).

Proof

We only need to show (3.9) for \(q=2\) since Z and \({{\mathbb {Z}}}\) belong to a Wiener chaos of fixed order. (Recall that the \(f_i\) are considered deterministic here.)

We start with the case \(H \in (\frac{1}{3},\frac{1}{2})\). Let \({\tilde{B}}\) denote an independent copy of the fractional Brownian motion B and let \({\tilde{Z}}^{f,g} = (Z^f, {\tilde{Z}}^g)\) where \({\tilde{Z}}^g\) is defined like \(Z^{g}\) but with B replaced by \({\tilde{B}}\). By Lemma 3.7, for any \(f,g \in \mathcal{C}^\beta \), we can then apply [18, Thm 35] to construct a second-order process \({\tilde{{{\mathbb {Z}}}}}^{f,g}_{s,t}\) satisfying the Chen identity

It furthermore coincides with the Wiener integral

which makes sense since the Coutin–Qian condition guarantees that \(r \mapsto Z^f_{s,r}\) belongs to the reproducing kernel space of \(Z^g\). It is furthermore such that smooth approximations to (3.10) (replace B and \({\tilde{B}}\) by \(B^\delta \) and \({\tilde{B}}^\delta \), obtained by convolution with a mollifier at scale \(\delta \rightarrow 0\)) converge to it in \(L^2\). In particular, \({\tilde{{{\mathbb {Z}}}}}^{f,g}\) belongs to the second Wiener chaos generated by \((B,{\tilde{B}})\) and is of the form of the limits considered in Proposition 3.4.

We now want to replace \({\tilde{B}}\) by B. For an approximation \(B^\delta \) as mentioned above, setting

we have

where \(\eta _\delta \) is an even \(\delta \)-mollification of \(t \mapsto |t|^{2H}\), so that in particular \(\int _0^\infty \eta _\delta ''(s)\,ds=0\), since \(\eta _\delta '(0)=0\). Similarly to (3.8), we can then rewrite (3.12) as

It follows immediately that one has

which is bounded by some multiple of

Combining this with Proposition 3.4, we conclude that

exists in probability, is independent of the choice of mollification, and satisfies the bound

It now suffices to set \({{\mathbb {Z}}}^{f,ij}_{s,t} = {{\mathbb {Z}}}^{f_i,f_j}_{s,t}\). Both the fact that Chen’s relation holds and the fact that the resulting rough path is geometric follow at once from the fact that these properties hold for the smooth approximations.

Combining (3.15) with the fact that the rough path obtained from [18, Thm 35] satisfies the bound (3.9) with \(\alpha = H\) as a consequence of Lemma 3.7, the claim follows.

We now turn to the case \(H = \frac{1}{2}\) where it is well known that \({{\mathbb {Z}}}^{\delta ,f,g}_{s,t}\) defined in (3.11) converges to the Stratonovich integral \( \int _s^t \int _s^r f(u)\,dB(u)\, g(r)\, \circ dB(r)\), so that

A simple consequence of Hölder’s inequality then leads to the bounds

where \( \Vert f\Vert _{L^p}= \Vert f\Vert _{L^p([0,1])}\). This shows again that the bound (3.9) holds, this time with \(\alpha = \frac{1}{2}-\frac{1}{p}\) (and q arbitrary), and our condition on p guarantees that this is greater than \(\frac{1}{3}\).

For \(H > \frac{1}{2}\), the first identity in (3.8) above combined with the positivity of the distribution \(-\eta ''\) and Hölder’s inequality yields the bound

Similarly, again as a consequence of the positivity of \(-\eta ''\), we have the bound

which shows again that (3.9) holds. \(\square \)

3.2 Construction and convergence of the rough driver as \({\delta \rightarrow 0}\)

The aim of this section is to construct the rough path \({{\mathbf {Z}}}^\varepsilon \) (this is the content of Proposition 3.11) and to show that this construction enjoys good stability properties. This is done by stitching together the “canonical” rough path lift for the collection \(\{Z^\varepsilon (x)\}_{x \in \mathbf{R}^d}\) obtained in Proposition 3.8. For \(H>\frac{1}{2}\), this is just iterated Young integrals. For \(H=\frac{1}{2}\) the iterated integrals are considered in the Stratonovich sense and, thanks to the independence of Y and B, the first order process can be interpreted indifferently as either an Itô or a Stratonovich integral.

In order to make use of Proposition 3.8, we use the following lemma, where \(Y^\varepsilon _t\) denotes the Markov process from Sect. 2.1.

Lemma 3.10

Let U be as in Proposition 3.8 and let Assumption 2.2 hold for some \(p_\star \). When \(H < \frac{1}{2}\), we further assume \(E\subset L^{p_\star }\) and \(\beta < H - \frac{1}{p_\star }\), where \(U = \mathcal{C}^\beta \). Then, given \(f \in E\) and setting \({\hat{f}}(t) = f(Y^\varepsilon _t)\), one has for every \(p < p_\star \) the bound

uniformly over E. (Here we use the convention \(p_\star = \infty \) when \(H>\frac{1}{2}\).)

Proof

For \(H \in (\frac{1}{3},\frac{1}{2})\), the assertion follows immediately from Kolmogorov’s continuity test, using Assumption 2.2 and \(E\subset L^{p}\). For \(H \ge \frac{1}{2}\), it suffices to note that if \(f \in L^p(\mathcal{Y},\mu )\), then for any fixed \(\varepsilon > 0\) the map \({\hat{f}} :t \mapsto f(Y^\varepsilon _t)\) belongs to \(L^p([0,1])\) almost surely and \(\mathbf{E}\Vert {\hat{f}}\Vert _{L^p}^p \le \Vert f\Vert _{L^p}^p\). \(\square \)

We now show how to collect these objects into one “large” Banach space-valued rough path. The process itself will take values in \(\mathcal{B}= \mathcal{C}_b^3(\mathbf{R}^d,\mathbf{R}^d)\), with the second order processes taking values in \(\mathcal{B}_2 = \mathcal{C}_b^3(\mathbf{R}^{d}\times \mathbf{R}^{d},(\mathbf{R}^{d})^{\otimes 2})\). Our aim is then to define a \(\mathcal{B}\oplus \mathcal{B}_2\)-valued rough path \((Z^{\varepsilon },{{\mathbb {Z}}}^{\varepsilon })\) which is the canonical lift (in the sense of Proposition 3.8 for any finite collection of x’s) of

Let \(\{f_{i,x}^\varepsilon \}_{i\le d}\) be the collection of maps from \(\mathbf{R}_+\) to \( \mathbf{R}^m\) determined by

With this notation at hand and recalling the construction of \(Z^f\) and \({{\mathbb {Z}}}^{f,g}\) as in the proof of Proposition 3.8, it is then natural to look for a \(\mathcal{B}\oplus \mathcal{B}_2\)-valued rough path \((Z^{\varepsilon },{{\mathbb {Z}}}^{\varepsilon })\) such that, for every \(x, {\bar{x}} \in \mathbf{R}^d\), the identities

hold almost surely. (Provided that \(F(x,\cdot ) \in E\) for every x, the right-hand sides make sense by combining Lemma 3.10 with Proposition 3.8.) We claim that this does indeed define a bona fide infinite-dimensional rough path.

Recall also that a rough path \({{\mathbf {Z}}}= (Z,{{\mathbb {Z}}})\) is weakly geometric if the identity

holds, where the transposition map \((\cdot )^\top :\mathcal{B}\otimes \mathcal{B}\rightarrow \mathcal{B}\otimes \mathcal{B}\) swapping the two factors is continuously extended to \(\mathcal{B}_2\). With these notations, we have the following.

Proposition 3.11

Let Assumptions 2.2, 2.3, and 2.7 hold and let \(\alpha \in (\frac{1}{3} ,H - \frac{1}{p_\star })\) if \(H < \frac{1}{2}\) and \(\alpha \in (\frac{1}{3},\frac{1}{2})\) otherwise. Then, for any \(\varepsilon > 0\), there exists a random rough path \({{\mathbf {Z}}}^\varepsilon = (Z^\varepsilon ,{{\mathbb {Z}}}^\varepsilon )\) in \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) that is weakly geometric and such that, for every x, (3.17) holds almost surely.

Proof

Since the Chen relations and (3.18) are obviously satisfied for smooth approximations as in (3.11), we only need to show that the analytic constraints hold. In other words, for any fixed \(T >0\) and \(\varepsilon > 0\), we look for an almost surely finite random variable \(C_\varepsilon \) such that

holds uniformly over all \(0\le s < t \le T\). By the Kolmogorov criterion for rough paths [15, Thm 3.1], it suffices to show that, for some \(\gamma > 0\) and \(p \ge 1\) such that \(\gamma - \frac{1}{p} > \alpha \), one has the bounds

By Lemma A.1 below, it suffices to show that

for \(k + \ell \le 4\) and some p such that \(p > (4d/\kappa ) \vee (\gamma -\alpha )^{-1}\).

Since for \(\ell \le 4\) we have \(D_x^\ell F_i^*(x,\cdot ) \in E\) with \(\Vert D_x^\ell F_i^*(x,\cdot )\Vert _E \lesssim (1+|x|)^{-\kappa }\) by Assumption 2.7, it follows immediately from Proposition 3.8 combined with Lemma 3.10 that the bound (3.20a) holds for \(\gamma = H\) and \(p \le p_\star \) when \(H < \frac{1}{2}\) and for any \(\gamma < H\) and \(p \ge 1\) when \(H \ge \frac{1}{2}\). The bound (3.20b) follows in the same way. (These arguments are somewhat formal, but can readily be justified by taking limits of smooth approximations.) \(\square \)

In order to prove Proposition 3.1, we make use of the following variant “in probability” of the usual tightness criterion for convergence in law.

Proposition 3.12

Let \((\mathcal{Z},d)\) be a complete separable metric space and let \(\{L_k\,:\, k \in \mathbf{N}\}\) be a countable collection of continuous maps \(L_k :\mathcal{Z}\rightarrow \mathbf{R}\) that separate elements of \(\mathcal{Z}\) in the sense that, for every \(x,y \in \mathcal{Z}\) with \(x\ne y\) there exists k such that \(L_k(x) \ne L_k(y)\).

Let \(\{Z_n\}_{n \ge 0}\) and \(Z_\infty \) be \(\mathcal{Z}\)-valued random variables such that the collection of their laws is tight and such that \(L_k(Z_n) \rightarrow L_k(Z_\infty )\) in probability for every k. Then, \(Z_n \rightarrow Z_\infty \) in probability.

Proof

Let \({\hat{d}}:\mathcal{Z}^2 \rightarrow \mathbf{R}_+\) be the continuous distance function given by

and note first that our assumption implies that \({\hat{d}}(Z_n, Z_\infty ) \rightarrow 0\) in probability. Given \(\varepsilon > 0\), tightness implies that there exists \(K_\varepsilon \subset \mathcal{Z}\) compact such that \(\mathbf{P}(Z_n \not \in K_\varepsilon ) \le \varepsilon \) for every \(n \in \mathbf{N}\cup \{\infty \}\). Furthermore, the set \(\{(x,y) \in K_\varepsilon \times K_\varepsilon \,:\, d(x,y) \ge \varepsilon \}\) is compact, so that \({\hat{d}}\) attains its infimum \(\delta \) on it. Since \({\hat{d}}\) only vanishes on the diagonal, one has \(\delta > 0\) and, since \({\hat{d}}(Z_n, Z_\infty ) \rightarrow 0\) in probability, we can find \(N>0\) such that \(\mathbf{P}({\hat{d}}(Z_n, Z_\infty ) \ge \delta ) \le \varepsilon \) for every \(n \ge N\).

It follows that, for every \(n \ge N\) one has

which implies the claim. \(\square \)

Regarding tightness itself, the following lemma is a slight variation of well known results.

Lemma 3.13

Let \({\hat{\mathcal{B}}} \subset \mathcal{B}\) and \({\hat{\mathcal{B}}}_2 \subset \mathcal{B}_2\) be compact embeddings of Banach spaces such that \({\hat{\mathcal{B}}} \otimes {\hat{\mathcal{B}}} \subset {\hat{\mathcal{B}}}_2\) with \(\Vert v\otimes w\Vert _{{\hat{\mathcal{B}}}_2} \lesssim \Vert v\Vert _{{\hat{\mathcal{B}}}}\Vert w\Vert _{{\hat{\mathcal{B}}}}\). Let \(\mathcal{A}\) be a collection of random \({\hat{\mathcal{B}}} \oplus {\hat{\mathcal{B}}}_2\)-valued rough paths such that for some \(\alpha _0>\frac{1}{3}\) and every \({{\mathbf {Z}}}= (Z,{{\mathbb {Z}}})\in \mathcal{A}\),

for some \(p > 3/(3\alpha _0-1)\). Then, the laws of the \({{\mathbf {Z}}}\)’s in \(\mathcal{A}\) are tight in \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) for any \(T > 0\) and \(\alpha \in (\frac{1}{3}, \alpha _0 - \frac{1}{p})\).
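The lower bound on p is exactly what makes the admissible range of \(\alpha \) non-empty. The short computation below spells this out; the two numerical instances are illustrative values only and are not taken from the text.

```latex
% Since \alpha_0 > 1/3, the quantity 3\alpha_0 - 1 is strictly positive, so
\[
  p > \frac{3}{3\alpha_0 - 1}
  \;\Longleftrightarrow\;
  p\,(3\alpha_0 - 1) > 3
  \;\Longleftrightarrow\;
  \alpha_0 - \frac{1}{p} > \frac{1}{3}.
\]
% Illustrative instances: \alpha_0 = 1/2 requires p > 6, while \alpha_0 = 2/5 requires p > 15.
```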

Proof

Write \(\mathcal{G}\) for the metric space given by \(\mathcal{B}\oplus \mathcal{B}_2\) endowed with the metric

Recall then that \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) can be identified with the usual space of \(\alpha \)-Hölder functions with values in \(\mathcal{G}\) by identifying \({{\mathbf {Z}}}= (Z,{{\mathbb {Z}}})\) with the function \(t \mapsto {{\mathbf {Z}}}_t {\mathop {=}\limits ^{\tiny {\hbox {def}}}}Z_{0,t} \oplus {{\mathbb {Z}}}_{0,t}\) and noting that, thanks to Chen’s relations,

(See [19, Sec. 7.5] for more details and motivation.) Since d generates the same topology on \(\mathcal{G}\) as that given by the Banach space structure of \(\mathcal{B}\oplus \mathcal{B}_2\), balls of \({\hat{\mathcal{B}}} \oplus {\hat{\mathcal{B}}}_2\) are compact in \(\mathcal{G}\). The claim then follows at once from Kolmogorov’s continuity test, combined with the fact that, given a compact metric space \((\mathcal{X}, d)\) and a compact subset \(\mathcal{K}\) of a Polish space \((\mathcal{Y},{\bar{d}})\), the set \(\mathcal{C}^\beta (\mathcal{X},\mathcal{K})\) is compact in \(\mathcal{C}^\alpha (\mathcal{X},\mathcal{Y})\) for any \(\beta > \alpha \). \(\square \)
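As a reminder of why the path \(t \mapsto {{\mathbf {Z}}}_t\) determines all increments of the rough path, the standard form of Chen's relations is recalled below in the notation of this section. The intermediate time u is a label introduced here for illustration.

```latex
% Chen's relations for a rough path (Z, \mathbb{Z}) and times s, u, t:
\[
  Z_{s,t} = Z_{s,u} + Z_{u,t},
  \qquad
  \mathbb{Z}_{s,t} = \mathbb{Z}_{s,u} + \mathbb{Z}_{u,t} + Z_{s,u} \otimes Z_{u,t}.
\]
% Applying them to the triple (0, s, t) and solving for the increment over [s,t]:
\[
  Z_{s,t} = Z_{0,t} - Z_{0,s},
  \qquad
  \mathbb{Z}_{s,t} = \mathbb{Z}_{0,t} - \mathbb{Z}_{0,s}
     - Z_{0,s} \otimes \bigl(Z_{0,t} - Z_{0,s}\bigr).
\]
```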

Proof of Proposition 3.1

We apply Proposition 3.12 with the metric space \(\mathcal{Z}\) given by \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\), \(Z_n = {{\mathbf {Z}}}^{\varepsilon ,\delta _n}\) for any given sequence \(\delta _n \rightarrow 0\), and \(Z_\infty = {{\mathbf {Z}}}^\varepsilon \) as constructed in Proposition 3.11. The continuous maps \(L_k\) appearing in the statement are given by the collection of maps \((Z,{{\mathbb {Z}}}) \mapsto Z_t(x)\) and \((Z,{{\mathbb {Z}}}) \mapsto {{\mathbb {Z}}}_{s,t}(x,{\bar{x}})\) for a countable dense set of times s and t and elements \(x, {\bar{x}} \in \mathbf{R}^d\).

It follows from (3.20) and Lemma A.1 that the bound (3.21) holds for \({{\mathbf {Z}}}^{\varepsilon ,\delta }\), uniformly in \(\delta \) (but with \(\varepsilon \) fixed), so that the required tightness condition holds by Lemma 3.13. For any fixed \(\varepsilon > 0\), the convergences in probability \(Z^{\varepsilon ,\delta }_{t}(x)_{i} \rightarrow Z^{\varepsilon }_{t}(x)_{i}\) and \({{\mathbb {Z}}}^{\varepsilon ,\delta }_{s,t}(x,{\bar{x}})_{ij} \rightarrow {{\mathbb {Z}}}^{\varepsilon }_{s,t}(x,{\bar{x}})_{ij}\) were shown in Proposition 3.8. (It suffices to apply it with the choices \(f = f^\varepsilon _{i,x}\) and \(g = f^\varepsilon _{j,{\bar{x}}}\).) \(\square \)

3.3 Formulation of the main technical result

The main technical result of this article can then be formulated in the following way.

Theorem 3.14

Let \(H\in (\frac{1}{3},1)\), let Assumptions 2.1–2.3 and 2.7 hold, and let \(\alpha \) and \({{\mathbf {Z}}}^\varepsilon \) be as in Proposition 3.11. Then, as \(\varepsilon \rightarrow 0\), \({{\mathbf {Z}}}^\varepsilon \) converges weakly in the space of \(\alpha \)-Hölder continuous \((\mathcal{B},\mathcal{B}_2)\)-valued rough paths to a limit \({{\mathbf {Z}}}\). Furthermore, there is a Gaussian random field W as in (1.8) such that

where \(\mathbb {W}^{\mathrm{It}\hat{\mathrm{o}}}_{s,t}(x,{\bar{x}}) = \int _s^t Z_{s,r}(x)\otimes W({\bar{x}},dr)\) is interpreted as an Itô integral, and \(\Sigma \) is as in (1.6).

The proof of this result will be given in Sects. 4 and 5 below; see Proposition 5.1, which is a slight reformulation of Theorem 3.14. We first show in Sect. 4 that the family \(\{ {{\mathbf {Z}}}^\varepsilon \}_{\varepsilon \le 1}\) is tight in a suitable space of rough paths and then identify its limit in Sect. 5.

Corollary 3.15

Under the assumptions of Theorem 3.14, the solution flow of (1.4) converges weakly to that of the Kunita-type SDE (1.7).

Proof

Define the \(\mathcal{B}\)-valued process

It follows from Assumption 2.7 that, for \(k \le 4\) and \(p \le p_\star \),

uniformly over \(\varepsilon \). This shows that the family \(\{Z^{0, {\varepsilon }}\}_{\varepsilon \le 1}\) is tight in \(\mathcal{C}^\beta ([0,1],\mathcal{B})\) for every \(\beta < 1\).

Furthermore, by the ergodic theorem which holds under Assumption 2.1, for every x,

almost surely. Since we can choose \(\beta \) and \(\alpha \) such that \(\alpha + \beta > 1\) and \(2\beta > 1\), it follows that there is no need to control any cross terms between \(Z^{0,{\varepsilon }}\) and either \(Z^{\varepsilon }\) or \(Z^{0,{\varepsilon }}\) itself in order to be able to solve equations driven by both [27, 53]. Furthermore, since the limit of \(Z^{0,{\varepsilon }}\) is deterministic, one deduces joint convergence from Theorem 3.14.
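The choice of exponents can be made explicit as follows. This is a sketch of the standard Young integration criterion, with the range \(\beta > \frac{2}{3}\) chosen here for illustration.

```latex
% Young's criterion: \int f \, dg is well defined whenever f \in \mathcal{C}^{a},
% g \in \mathcal{C}^{b} and a + b > 1.
% With \alpha > 1/3 given, any \beta \in (2/3, 1) satisfies
\[
  \alpha + \beta > \tfrac{1}{3} + \tfrac{2}{3} = 1,
  \qquad
  2\beta > \tfrac{4}{3} > 1,
\]
% so both \int Z^{0,\varepsilon} \otimes dZ^{\varepsilon} (and its transpose)
% and \int Z^{0,\varepsilon} \otimes dZ^{0,\varepsilon} are Young integrals.
```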

By the continuity theorem for rough differential equations, the solutions of (1.4) written in the form (3.2) converge weakly to those of the rough differential equation

It remains to identify solutions to this equation with those of (1.7).

This is straightforward and follows as in [15, Sec 5.1] for example. Since the Gubinelli derivative \(x'\) of the solution \(X = (x,x')\) to (3.22) is given by \(\delta (x)\), the integral \(\int _0^t \delta (X_s) d{{\mathbf {Z}}}_s\) is obtained as the limit of the compensated Riemann sum

where \({{\mathcal {P}}}\) is a partition of [0, t] and \(D\delta \cdot \delta \) is as in (3.3). Since x is continuous and adapted to the filtration generated by W, the first term converges to

The last term on the other hand converges to 0 in probability since it is a discrete martingale and its summands are centred random variables of variance \(\mathcal{O}(|v-u|^2)\). \(\square \)
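The last step can be spelled out as follows. Here \(M_{[u,v]}\) is a label introduced for the summand of the last term associated with the interval \([u,v] \in {{\mathcal {P}}}\), and \(|{{\mathcal {P}}}|\) denotes the mesh of the partition; both are notational assumptions of this sketch.

```latex
% Martingale differences are orthogonal in L^2, so the cross terms vanish:
\[
  \mathbf{E}\Bigl|\sum_{[u,v] \in \mathcal{P}} M_{[u,v]}\Bigr|^2
  = \sum_{[u,v] \in \mathcal{P}} \mathbf{E}\,|M_{[u,v]}|^2
  \lesssim \sum_{[u,v] \in \mathcal{P}} |v-u|^2
  \le |\mathcal{P}| \sum_{[u,v] \in \mathcal{P}} |v-u|
  = |\mathcal{P}|\, t .
\]
% The right-hand side tends to 0 as |\mathcal{P}| \to 0, and Chebyshev's inequality
% then yields convergence to 0 in probability.
```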

4 Tightness of the Rough Driver as \({\varepsilon \rightarrow 0}\)

The content of this section is the proof of the following tightness result. Let \(\{{{\mathbf {Z}}}^\varepsilon \}_{\varepsilon \le 1}\) be given as in Proposition 3.11.

Proposition 4.1

Let Assumptions 2.1–2.3 and 2.7 hold. For \(H\in (\frac{1}{3}, \frac{1}{2}]\), there exists \( \alpha \in (\frac{1}{3}, H)\) such that the family \(\{{{\mathbf {Z}}}^\varepsilon \}_{\varepsilon \le 1}\) is tight in \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\).

For \(H \in (\frac{1}{2},1)\), if in addition \(\int F(x,y)\,\mu (dy) = 0\) for every x, then the family of rough paths \({{\mathbf {Z}}}^\varepsilon \) is tight in \(\mathcal{C}^\alpha \) for every \(\alpha \in (\frac{1}{3},\frac{1}{2})\).

It will be convenient to introduce the following notation. Given \(f,g \in E\), we use the shorthand

We then have the following tightness criterion.

Lemma 4.2

Let \(p>d+1\). Assume that for any \(f,g\in E\), \(|s-t|\le 1\), and \(\varepsilon \in (0,1]\),

where \(p > 3/(3\alpha _0-1)\). Let furthermore Assumption 2.7 hold with \(\kappa > \frac{8d}{p}\). Then the family \(\{{{\mathbf {Z}}}^\varepsilon \}_{\varepsilon \le 1}\) is tight in \(\mathscr {C}^\alpha ([0,T], \mathcal{B}\oplus \mathcal{B}_2)\) for any \( \alpha < \alpha _0-1/p\).

Proof

Recall that with the above notations, one has from (3.17)

By assumption, for \(|\ell | \le 3\), \(|s-t|\le 1\), and \(|x-x'| \le 1\), one has

where we wrote \([x,x']\) for the convex hull of \(\{x,x'\}\). Here, the last bound follows from Assumption 2.7. It then follows from Lemma A.1 that, for \({\hat{\mathcal{B}}}\) as defined in the appendix, \(\mathbf{E}\Vert Z^\varepsilon _{s,t}\Vert _{{\hat{\mathcal{B}}}}^p \lesssim |t-s|^{p \alpha _0}\). We choose \(\zeta \) to be any number in \((0, 1-d/p)\).

It similarly follows that for \(|k+\ell | \le 3\) and \(|x-x'|\le 1\)

(and analogously when varying \({\bar{x}}\)). Since \(\kappa p /8 > d\) by assumption, we can again apply Lemma A.1 with p/2, thus yielding \(\mathbf{E}\Vert {{\mathbb {Z}}}^\varepsilon _{s,t}\Vert _{{\hat{\mathcal{B}}}_2}^{p/2} \lesssim |t-s|^{p\alpha _0}\). Since furthermore \(p > 3/(3\alpha _0-1)\) by assumption, the conditions of Lemma 3.13 are satisfied and the claim follows for any \(\alpha < \alpha _0 - 1/p\). \(\square \)

Proof of Proposition 4.1

The arguments are quite different for the different ranges of H, but they will always reduce to verifying the assumptions of Lemma 4.2.

First let \(H\in (\frac{1}{3}, \frac{1}{2})\). The first assumption of Lemma 4.2 follows from Proposition 4.5 below with \(\alpha _0=H\) and from the trivial bound

while the second assumption follows from Proposition 4.7 below. Both hold for any \(p\le p_\star /4\) where \(p_\star >\max \{4d, \frac{6}{(3H-1)}\}\), and the proofs of the propositions are the content of Sect. 4.1.

The ingredients for showing tightness of \({{\mathbf {Z}}}^\varepsilon \) when \(H\in (\frac{1}{2}, 1)\) are given in Sect. 4.2, starting with a bound on J analogous to that of Proposition 4.5. Unlike in the proof of that statement though, we do not show this by bounding the conditional variance \(\mathbf{E}\bigl (|J_{s,t}^\varepsilon (f)|^2\,|\,\mathcal{F}^Y\bigr )\). This is because, as a consequence of the lack of integrability at infinity of \(\eta ''\) when \(H > \frac{1}{2}\), it appears difficult to obtain a sufficiently good bound on it, especially for H close to 1. (In particular, the best bounds one can expect to obtain from a quantitative law of large numbers do not appear to be sufficient when \(H > \frac{3}{4}\).) The required bounds are collected in Corollary 4.17, which yields the assumptions of Lemma 4.2 with \(\alpha _0 = \frac{1}{2}\) and arbitrary p.

Finally, when \(H=\frac{1}{2}\), we take \(\alpha _0=\frac{1}{2}\). In this case, \( \Vert J^\varepsilon _{s,t}(f)\Vert _{L^p}\lesssim \bigl \Vert \bigl (\int _s^t |f(Y_r^\varepsilon )|^2\, dr\bigr )^{1/2}\bigr \Vert _{L^p} \lesssim \Vert f\Vert _E \sqrt{ |t-s|}\) by the Burkholder–Davis–Gundy inequality, and similarly the second-order process satisfies the bound

allowing us to again apply Lemma 4.2 and concluding the proof. \(\square \)
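The passage from the quadratic variation to the factor \(\sqrt{|t-s|}\) in the case \(H = \frac{1}{2}\) can be sketched as follows. This assumes \(p \ge 2\) and that \(\sup _r \Vert f(Y^\varepsilon _r)\Vert _{L^p} \lesssim \Vert f\Vert _E\), which is how we read Assumption 2.3; neither is restated in this proof.

```latex
% Minkowski's integral inequality in L^{p/2} (valid since p/2 \ge 1):
\[
  \Bigl\| \Bigl( \int_s^t |f(Y^\varepsilon_r)|^2 \, dr \Bigr)^{1/2} \Bigr\|_{L^p}
  = \Bigl\| \int_s^t |f(Y^\varepsilon_r)|^2 \, dr \Bigr\|_{L^{p/2}}^{1/2}
  \le \Bigl( \int_s^t \bigl\| f(Y^\varepsilon_r) \bigr\|_{L^p}^2 \, dr \Bigr)^{1/2}
  \le \sup_{r} \bigl\| f(Y^\varepsilon_r) \bigr\|_{L^p} \, \sqrt{|t-s|} .
\]
```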

4.1 The low regularity case

This section consists of a number of a priori moment bounds, which we then combine at the end to provide the proof of Proposition 4.7. These moment bounds, which are uniform in \(\varepsilon \), follow from the Hölder continuity of Y in a subspace of \(L^{p^*}\) for a sufficiently large \(p^*\); in particular, the ergodicity of Y does not play any role.

We will make repeated use of the following simple calculation, where we recall (3.5) for the definition of the distribution \(\eta \).

Lemma 4.3

Given \(t > 0\) and \(H < \frac{1}{2}\), let \(\Psi :[0,t]^2 \rightarrow \mathbf{R}\) be a continuous function such that for some numbers \(\varepsilon > 0\), \(\beta > -2H\), \(\gamma , \zeta > 1-2H\), and \(C, {\hat{C}}, {\bar{C}} \ge 0\) it holds that

for all \(s,r \in [0,t]\). Then, one has the bound

with the proportionality constant K depending only on \(\beta \), \(\gamma \) and \(\zeta \). The same bound holds if the upper limit of the inner integral in (4.2) is given by r instead of t.

Proof

Let I be the double integral appearing in (4.2). As a consequence of Lemma 3.6, we can rewrite it as

We then have

Here, we used the conditions imposed on \(\beta , \gamma \) and \(\zeta \). Regarding \(I_2\), we have the bound

and the claim follows. \(\square \)

Note that replacing \(\Psi (r,s)\) by \(\Psi _\tau (r,s) = \tau ^{2H}\Psi (\tau r,\tau s)\) and t by \(t/\tau \), the left-hand side of (4.2) is left unchanged. Regarding the bounds (4.1), such a change leads to the substitutions \(C \mapsto C \tau ^{2H+\beta }\), \(\varepsilon \mapsto \varepsilon /\tau \), \({\hat{C}} \mapsto {\hat{C}} \tau ^{2H+\beta }\), and \({\bar{C}} \mapsto {\bar{C}} \tau ^{2H+\beta }\). All three terms appearing in the right-hand side of (4.2) are invariant under these substitutions.

Remark 4.4

The proof of Lemma 4.3 works mutatis mutandis for \(\Psi \) taking values in a Banach space, for example \(L^p\). We also see that if \(\Psi \) is upper bounded by a finite sum of terms of the type (4.1) with different exponents \(\beta \) and \(\gamma \), then the bound (4.2) still holds with the corresponding sum in the right-hand side.

We perform a number of preliminary calculations. For this, it will be notationally convenient to introduce the shortcuts

for \(f \in E\) (with values in \(\mathbf{R}^m\)).

Proposition 4.5

Let \(H\in (\frac{1}{3}, \frac{1}{2})\) and let Assumptions 2.2 and 2.3 hold for some \(p_\star \ge 2\). Then there exists a constant C such that, uniformly over \(s\ge 0\), \(t\ge 0\), and \(f\in E\),

Proof

Let \(\mathcal{F}^Y\) denote the \(\sigma \)-algebra generated by all point evaluations of the process Y, and \(\mathcal{F}^Y_t\) the corresponding filtration. Write p for \(p_\star \) for brevity. Since B is independent of \(\mathcal{F}^Y\) and the \(L^p\) norm of an element of a Wiener chaos of fixed degree is controlled by its \(L^2\) norm, we have

for some universal constant c depending only on p and on the degree of the Wiener chaos, so that

Since \(f\in E\) is in \(L^{p}\) by Assumption 2.3, it follows from Assumption 2.2 that

We can therefore apply Lemma 4.3 with \(\gamma = H\) and \(\beta = 0\) so that, for \(\Vert f\Vert _E \le 1\), one has

whence the desired bound follows. (The condition \(\gamma > 1-2H\) is satisfied since \(H > \frac{1}{3}\) by assumption.) \(\square \)
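Two elementary facts used in this proof can be recorded explicitly. The first is the standard hypercontractivity estimate on a homogeneous Wiener chaos, stated here in its usual form with the explicit constant as an illustration; the proof only needs the existence of some constant c. The second is the arithmetic behind the admissibility of the exponents in Lemma 4.3.

```latex
% Hypercontractivity: for X in the k-th homogeneous Wiener chaos and p \ge 2,
\[
  \| X \|_{L^p} \le (p-1)^{k/2} \, \| X \|_{L^2} .
\]
% Admissibility of \gamma = H and \beta = 0 in Lemma 4.3:
\[
  \gamma = H > 1 - 2H \;\Longleftrightarrow\; 3H > 1 \;\Longleftrightarrow\; H > \tfrac{1}{3},
  \qquad
  \beta = 0 > -2H \;\Longleftrightarrow\; H > 0 .
\]
```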

We now consider the second-order process \({{\mathbb {J}}}\) given by

and bound it in a similar way. Recalling that \( \langle f,g\rangle _\mu =\int _\mathcal{Y}\langle f, g\rangle d\mu \), we first obtain a bound on its expectation.

Proposition 4.6

Let \(H\in (\frac{1}{3}, \frac{1}{2})\), let Assumptions 2.2 and 2.3 hold for some \(p_\star \ge 2\), and let \(f ,g\in E\). One has

provided that \(2p \le p_\star \).

Proof

It follows from (4.7) that we have the identity