Abstract

We study the spectra of quantum trees of finite cone type. These are quantum graphs whose geometry has a certain homogeneity, and which carry a finite set of edge lengths, coupling constants and potentials on the edges. We show the spectrum consists of bands of purely absolutely continuous spectrum, along with a discrete set of eigenvalues. Afterwards, we study random perturbations of such trees, at the level of edge length and coupling, and prove the stability of pure AC spectrum, along with resolvent estimates.

1 Introduction

Our aim in this paper is to establish the existence of bands of purely absolutely continuous (AC) spectrum for a large family of quantum trees. One of our motivations is to provide a collection of examples relevant for the Quantum Ergodicity result proven in [6].

For discrete trees, the problem is quite well understood when the tree is somehow homogeneous. The adjacency matrix of the \((q+1)\)-regular tree \({\mathbb {T}}_q\) has pure AC spectrum \([-2\sqrt{q},2\sqrt{q}]\) as is well-known [21]. If we fix a root \(o\in {\mathbb {T}}_q\) and regard the tree as descending from o, then the subtree descending from any offspring is the same (each is a q-ary tree), except for the subtree at the origin (which has \((q+1)\) children). We say that \({\mathbb {T}}_q\) has two “cone types”. It was shown in [19] that if \({\mathbb {T}}\) is a general tree with finitely many cone types, such that each vertex has a child of its own type, and all types arise in each progeny subtree, then the spectrum consists of bands of pure AC spectrum. This problem was revisited in [5], where these assumptions were relaxed to allow \({\mathbb {T}}\) to be any universal cover of a finite graph of minimal degree at least 2 (see also [9] for similar results concerning more general operators on these discrete trees). In this case however, besides the bands of AC spectrum, a finite number of eigenvalues may appear.

A natural question is whether AC spectrum survives if we add a potential. This is motivated by the famous Anderson model [8] where random independent, identically-distributed potentials are attached at lattice sites. It remains a major open problem to prove such stability for the Anderson model on the Euclidean lattice \({\mathbb {Z}}^d\), \(d\ge 3\) [30]. The first mathematical proof showing the stability of pure AC spectrum was obtained in [22] in the case of regular trees (Bethe lattice) under weak random perturbations, thus providing the first example of spectral delocalization for an Anderson model. More general trees were subsequently treated in [16, 20], always in the setting of discrete Schrödinger operators. The stability of AC spectrum under perturbation by a non-random radial potential was proved in [19] in the case of non-regular trees of finite cone type.

In this article we consider quantum trees, i.e. each edge is endowed with some length \(L_e\) and we study differential operators acting on the edges with appropriate boundary conditions at the vertices specified by certain coupling constants. The presence of AC spectrum for quantum trees appears to have been studied less systematically than in the case of discrete Schrödinger operators. In the case of regular trees \({\mathbb {T}}_q\), it was shown in [12] that the quantum tree obtained by endowing each edge with the same length L, the same symmetric potential W on the edges and the same coupling constant \(\alpha \) at the vertices, has a spectrum consisting of bands of pure AC spectrum, along with eigenvalues between the bands. This setting was recently generalized somewhat in [13], where each vertex in a 2q-regular tree is surrounded by the same set of lengths \((L_1,\dots ,L_q)\), each length repeated twice, and similarly by the same set of symmetric potentials \((W_1,\dots ,W_q)\), with Kirchhoff boundary conditions. The nature of the spectrum is partly addressed, but the possibility that it consists of a discrete set of points is not excluded. Finally, it was shown in [1] that the AC spectrum of the equilateral quantum tree [12] remains stable under weak random perturbation of the edge lengths. The theorem however does not yield purity of the AC spectrum in some interval; one can only infer that the Lebesgue measure of the perturbed AC spectrum is close to the unperturbed one. We also mention the papers [17, 28] which consider radial quantum trees, for which a reduction to a half-line model can be performed.

Our aim here is twofold. First, we go beyond regular graphs. We are mainly interested in the case where the tree is the universal cover of some compact quantum graph. This implies the set of different lengths, potentials and coupling constants is finite, but the situation can be much more general than the special Cayley graph setting considered in [13]. We show in this framework that the spectrum will consist of (nontrivial) bands of pure AC spectrum, plus some discrete set of eigenvalues. Next, we consider random perturbations of these trees. We can perturb both the edge lengths and coupling constants. This setting is more general than [1], where the tree was regular and the coupling constants were zero. Our main motivation, however, is to derive the purity of the perturbed AC spectrum, along with a strong control on the resolvent, which is an important ingredient to prove quantum ergodicity for large quantum graphs. We do this in a companion paper [6].

1.1 Some definitions

1.1.1 Quantum graphs

Let \(G=(V,E)\) be a graph with vertex set V and edge set E. We will assume that there are no self-loops and that there is at most one edge between any two vertices, so that we can see E as a subset of \(V\times V\). For each vertex \(v\in V\), we denote by d(v) the degree of v. We let \(B= B(G)\) be the set of oriented edges (or bonds), so that \(|B|=2|E|\). If \(b\in B\), we shall denote by \({\hat{b}}\) the reverse bond. We write \(o_b\) for the origin of b and \(t_b\) for the terminus of b. We define the map \(e: B\longrightarrow E\) by \(e( (v,v')) = \{v,v'\}\). An orientation of G is a map \(\mathfrak {or}: E \longrightarrow B\) such that \(e \circ \mathfrak {or} = Id_E\).

A length graph (V, E, L) is a connected combinatorial graph (V, E) endowed with a map \(L: E\rightarrow (0,\infty )\). If \(b\in B\), we denote \(L_b:= L(e(b))\).

A quantum graph \({\mathbf {Q}}=(V,E,L,W,\alpha )\) is the data of:

- A length graph (V, E, L),

- A potential \(W=(W_b)_{b\in B} \in \bigoplus _{b\in B} C^0([0,L_b]; {\mathbb {R}})\) satisfying for \(x\in [0,L_b]\),

  $$\begin{aligned} W_{\widehat{b}}(L_b-x) = W_b(x)\,. \end{aligned} \tag{1.1}$$

- Coupling constants \(\alpha = (\alpha _v)_{v\in V} \in {\mathbb {R}}^V\).

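The underlying metric graph is the quotient

$$\begin{aligned} {\mathscr {G}} := \{ x=(b,x_b) : b\in B,\ x_b\in [0,L_b] \}/\sim \,, \end{aligned}$$

where \((b,x_b)\sim (b',x'_{b'})\) if \(b'= {\hat{b}}\) and \(x'_{b'}= L_b-x_b\).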

A function on the graph will be a map \(f: {\mathscr {G}}\longrightarrow {\mathbb {R}}\). It can also be identified with a collection of maps \((f_b)_{b\in B}\) such that \(f_b (L_b - \cdot ) = f_{{\hat{b}}}(\cdot )\). We say that f is supported on e for some \(e\in E\) if \(f_b \equiv 0\) unless \(e(b)= e\).

If each \(f_b\) is positive and measurable, we define \(\int _{\mathscr {G}} f(x) \mathrm {d}x := \frac{1}{2}\sum _{b\in B} \int _0^{L_b} f_{b}(x_b) \mathrm {d}x_b\). We may then define the spaces \(L^p({\mathscr {G}})\) for \(p\in [1, \infty ]\) in the usual way.

Condition (1.1) simply requires W to be well-defined on \({\mathscr {G}}\) (no symmetry assumption).

When \(G=(V,E)\) is a tree, i.e., contains no cycles (which will be the case in most of the paper), we say that \({\mathbf {Q}}\) is a quantum tree, and we denote it by the letter \({\mathbf {T}}\) rather than \({\mathbf {Q}}\), while the set \({\mathscr {G}}\) is called a metric tree and is denoted by \({\mathscr {T}}\).

1.1.2 Orienting quantum trees

Let \({\mathbb {T}}\) be a combinatorial tree, that is, a graph containing no cycles. We denote its vertex set by \(V({\mathbb {T}})\) or just V, its edge set by \(E({\mathbb {T}})\), and its set of oriented edges by \(B({\mathbb {T}})\). Throughout the paper, we often write \(v\in {\mathbb {T}}\) instead of \(v\in V({\mathbb {T}})\) to lighten the notation.

In this paragraph, we explain how we can present the tree \({\mathbb {T}}\) in a coherent view, that is to say, fix an oriented edge \(b_o \in B({\mathbb {T}})\), and give an orientation to all the other edges of \({\mathbb {T}}\), by asking that they “point in the same direction as \(b_o\)”.

More precisely, let us fix once and for all an oriented edge \(b_o \in B({\mathbb {T}})\), corresponding to an edge \(e_o\in E({\mathbb {T}})\). If we remove the edge \(e_o\) from \({\mathbb {T}}\), we obtain two connected components which are still combinatorial trees. We will write \({\mathbb {T}}_{b_o}^+\) for the connected component containing \(t_{b_o}\), and \({\mathbb {T}}_{b_o}^-\) for the component containing \(o_{b_o}\).

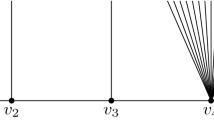

Let \(v\in {\mathbb {T}}_{b_o}^+\) be at a distance n from \(t_{b_o}\). Amongst the neighbours of v, one of them is at distance \(n-1\) from \(t_{b_o}\): we denote it by \(v_-\), and say that \(v_-\) is the parent of v. The other neighbours of v are at a distance \(n+1\) from \(t_{b_o}\), and are called the children of v. The set of children of v is denoted by \({\mathscr {N}}_v^+\). Conversely, if \(v\in {\mathbb {T}}_{b_o}^-\) is at distance n from \(o_{b_o}\), its unique neighbour at a distance \(n-1\) from \(o_{b_o}\) is called the child of v, and denoted by \(v_+\), and its other neighbours are its parents, whose set we denote by \({\mathscr {N}}_v^-\). These definitions are natural if we see the tree at the left of Fig. 1 as a genealogical tree.

Let \(V^{*} = \big (V({\mathbb {T}}) {\setminus } \{o_{b_o},t_{b_o}\}\big )\cup \{o\}\). We define a map \({\mathfrak {b}}: V^* \longrightarrow B({\mathbb {T}})\) as follows: we set \({\mathfrak {b}}(o) = b_o\), and, if \(v\in {\mathbb {T}}_{b_o}^+\), then \({\mathfrak {b}}(v) = (v_-, v)\), while if \(v\in {\mathbb {T}}_{b_o}^-\), then \({\mathfrak {b}}(v) = (v, v_+)\). One easily sees that \(e\circ {\mathfrak {b}}: V^* \longrightarrow E({\mathbb {T}})\) is a bijection, so that \({\mathfrak {b}} \circ (e\circ {\mathfrak {b}})^{-1}\) is an orientation of \({\mathbb {T}}\). The map \({\mathfrak {b}}\) serves to index all oriented edges: those in \({\mathbb {T}}_{b_o}^+\) by their terminus, those in \({\mathbb {T}}_{b_o}^-\) by their origin, and \(b_o\) by its “midpoint” o. The latter makes sense once we turn \({\mathbb {T}}\) into a quantum tree \({\mathbf {T}}\). We denote \(L_v:=L_{{\mathfrak {b}}(v)}\) and \(W_v:=W_{{\mathfrak {b}}(v)}\). The metric tree \({\mathscr {T}}\) can be identified with the set

A function on \({\mathscr {T}}\) will then be the data of \(\psi = (\psi _v)_{v\in V^*}\), where each \(\psi _v\) is a function of the variable \(x_v\in [0, L_v]\).

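On a quantum tree, we consider the Schrödinger operator

$$\begin{aligned} ({\mathscr {H}}_{{\mathbf {T}}}\psi _v)(x_v) = -\psi _v''(x_v) + W_v(x_v)\psi _v(x_v) \end{aligned} \tag{1.2}$$

with domain \(D({\mathscr {H}}_{{\mathbf {T}}})\), the set of functions \((\psi _v)\in \underset{v\in V^*}{\bigoplus } W^{2,2}(0,L_v)\) satisfying the so-called \(\delta \)-conditions. Namely, for all \(v\in {\mathbb {T}}_{b_o}^+\),

$$\begin{aligned} \psi _v(L_v) = \psi _{v_+}(0) \quad \forall v_+\in {\mathscr {N}}_v^+ \qquad \text {and} \qquad \sum _{v_+\in {\mathscr {N}}_v^+} \psi _{v_+}'(0) = \psi _v'(L_v) + \alpha _v \psi _v(L_v)\,, \end{aligned}$$

while for all \(v\in {\mathbb {T}}_{b_o}^-\),

$$\begin{aligned} \psi _{v_-}(L_{v_-}) = \psi _v(0) \quad \forall v_-\in {\mathscr {N}}_v^- \qquad \text {and} \qquad \sum _{v_-\in {\mathscr {N}}_v^-} \psi _{v_-}'(L_{v_-}) + \alpha _v \psi _v(0) = \psi _v'(0)\,. \end{aligned}$$

Finally, we have \(\psi _o(L_o) = \psi _{o_+}(0)\) \(\forall o_+\in {\mathscr {N}}_o^+\), \(\sum _{o_+} \psi _{o_+}'(0) = \psi _o'(L_o) + \alpha _{t_{b_o}} \psi _o(L_o)\), and \(\psi _{o_-}(L_{o_-}) = \psi _o(0)\), \(\sum _{o_-}\psi _{o_-}'(L_{o_-}) + \alpha _{o_{b_o}} \psi _o(0) = \psi _o'(0)\). Following a common convention, we refer to the \(\alpha _v=0\) case as the Kirchhoff-Neumann condition.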

Remark 1.1

The above conventions mean that we see \({\mathbb {T}}\) as a doubly infinite genealogical tree. This is what we called the coherent view; it can also be pictured by saying that we imagine an electric flow moving from \({\mathbb {T}}_{b_o}^-\) to \({\mathbb {T}}_{b_o}^+\).

There is another way of orienting the graph which we call the twisted view. This is done by turning \(b_o\) into a V-shape and viewing \(V({\mathbb {T}})\) as offspring of o. See Fig. 1 for an illustration; here one should think that o is a source from which the electric flow moves outwards. When necessary to highlight this genealogical structure, we will write \({{\mathbb {T}}}_o^+\) for the set of offspring of o. Each vertex v has a single parent \(v_-\) and several children; all the edges take the form \(\{v, v_-\}\) for a unique v.

The link between the two views is immediate: functions on \({\mathbb {T}}_{b_o}^+\) in both views coincide, while on \({\mathbb {T}}_{b_o}^-\), one replaces b by \({\hat{b}}\) and derivatives change sign. Here \({\hat{b}}=(t_b,o_b)\) is the edge reversal of b. Hence, in the twisted view (Footnote 1), all functions in the domain of \({\mathscr {H}}\) satisfy (1.3), (1.4).

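Given \(v\in V^{*}\), \(z\in {\mathbb {C}}\), let \(C_{z}(x)\) and \(S_{z}(x)\) be a basis of solutions of the problem \(-\psi _v''+W_v\psi _v = z \psi _v\) satisfying

$$\begin{aligned} C_z(0) = 1,\quad C_z'(0) = 0,\quad S_z(0) = 0,\quad S_z'(0) = 1\,. \end{aligned}$$

Then any solution \(\psi _v\) of the problem satisfies

$$\begin{aligned} \psi _v(x) = \psi _v(0)\, C_z(x) + \psi _v'(0)\, S_z(x)\,. \end{aligned}$$

If \(W_v\equiv 0\) then the basis of solutions is

$$\begin{aligned} C_z(x) = \cos (\sqrt{z}\,x)\,,\qquad S_z(x) = \frac{\sin (\sqrt{z}\,x)}{\sqrt{z}}\,; \end{aligned}$$

if \(W_v(x)=c_1+c_2\cos (2\pi x/L_v)\) then \(S_z, C_z\) would be Mathieu functions. It is a standard fact that \(S_z(x), C_z(x)\) are analytic functions of \(z\in {\mathbb {C}}\) (see for instance [26, Chapter 1]).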
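As a quick numerical sanity check of the free case \(W_v\equiv 0\), the following minimal sketch evaluates \(C_z\) and \(S_z\) and their Wronskian. It assumes the usual normalisation \(C_z(0)=1\), \(C_z'(0)=0\), \(S_z(0)=0\), \(S_z'(0)=1\); the function names `free_basis` and `wronskian` are hypothetical and only serve the illustration.

```python
import cmath


def free_basis(z: complex, x: float):
    """Return (C_z(x), S_z(x), C_z'(x), S_z'(x)) for the free problem -psi'' = z psi.

    Assumed normalisation: C_z(0) = 1, C_z'(0) = 0, S_z(0) = 0, S_z'(0) = 1.
    """
    k = cmath.sqrt(z)
    if k == 0:
        # Limit z -> 0: C_0(x) = 1 and S_0(x) = x.
        return 1 + 0j, complex(x), 0j, 1 + 0j
    c = cmath.cos(k * x)
    s = cmath.sin(k * x) / k
    dc = -k * cmath.sin(k * x)
    ds = cmath.cos(k * x)
    return c, s, dc, ds


def wronskian(z: complex, x: float) -> complex:
    """C_z S_z' - C_z' S_z, which is constant in x for a Schrodinger equation."""
    c, s, dc, ds = free_basis(z, x)
    return c * ds - dc * s


if __name__ == "__main__":
    z = 2 + 1j
    print(free_basis(z, 0.0))
    print(wronskian(z, 0.7))
```

Both functions are even in \(\sqrt{z}\), so the choice of branch in `cmath.sqrt` does not affect the result; this is consistent with the analyticity in z recalled above.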

1.2 Trees of finite cone type

We define a cone in \({\mathbb {T}}\) to be a subtree of the form \({\mathbb {T}}_b^+\) or \({\mathbb {T}}_b^-\), for some \(b\in B({\mathbb {T}})\). Each cone \({\mathbb {T}}_b^+\) has an origin \(t_b\), and each cone \({\mathbb {T}}_b^-\) has an end \(o_b\).

We say that two quantum cones \({\mathbf {T}}_b^+\) and \({\mathbf {T}}_{b'}^+\) are isomorphic if there is an isomorphism of combinatorial graphs \(\varphi :{\mathbb {T}}_b^+ \rightarrow {\mathbb {T}}_{b'}^+\) such that \(L_{\varphi (v)} = L_v\), \(W_{\varphi (v)} = W_v\) and \(\alpha _{\varphi (v)} = \alpha _v\) for all \(v\in {\mathbb {T}}_b^+\). Isomorphic \({\mathbf {T}}_b^-\) and \({\mathbf {T}}_{b'}^-\) are defined the same way.

We say that \({\mathbf {T}}\) is a tree of finite cone type if there exists \(b_o\in B({\mathbb {T}})\) such that:

- (i) There are finitely many non-isomorphic quantum cones \({\mathbf {T}}_{(v_-,v)}^+\) as \(v\in {\mathbb {T}}_{b_o}^+\).

- (ii) There are finitely many non-isomorphic quantum cones \({\mathbf {T}}_{(w,w_+)}^-\) as \(w\in {\mathbb {T}}_{b_o}^-\).

Here \((t_{b_o})_-=o_{b_o}\) and \((o_{b_o})_+=t_{b_o}\). Note that in a regular tree, all cones \({\mathbb {T}}_b^{\pm }\) are isomorphic, but a necessary condition for it to be a quantum tree of finite cone type, is that its edges and vertices be endowed with finitely many lengths, potentials and coupling constants (Footnote 2).

If \({\mathbf {T}}\) is a tree of finite cone type, with \(b_o\in B({\mathbb {T}})\) fixed, we may introduce a type function \(\ell :{\mathbb {T}}_{b_o}^+ \rightarrow {\mathbb {N}}_0={\mathbb {N}}\cup \{0\}\), taking values in a finite set, such that \(\ell (v) = \ell (w)\) iff \({\mathbf {T}}_{(v_-,v)}^+ \equiv {\mathbf {T}}_{(w_-,w)}^+\) as quantum trees. Similarly, \(\ell :{\mathbb {T}}_{b_o}^- \rightarrow {\mathbb {N}}_0\) satisfies \(\ell (v)=\ell (w)\) iff \({\mathbf {T}}_{(v,v_+)}^- \equiv {\mathbf {T}}_{(w,w_+)}^-\). Note that if \(\ell (v)=\ell (w)\), then \(W_v = W_w\), \(L_v = L_w\) and \(\alpha _v = \alpha _w\), since the corresponding isomorphism respects this information. Hence, any coherent quantum tree \({\mathbf {T}}\) of finite cone type comes with the following structure:

- (a) A fixed \(b_o\in B({\mathbb {T}})\).

- (b) Two finite sets of labels \({\mathfrak {A}}^+ = \{i_1,\dots ,i_m\}\), \({\mathfrak {A}}^- = \{j_1,\dots ,j_n\}\) and two matrices \(M=(M_{i,j})_{i,j\in {\mathfrak {A}}^+}\), \(N=(N_{i,j})_{i,j\in {\mathfrak {A}}^-}\). If \(v\in {\mathbb {T}}_{b_o}^+\) has type j, it has \(M_{j,k}\) children of type k. If \(w\in {\mathbb {T}}_{b_o}^-\) has type j, it has \(N_{j,k}\) parents of type k.

- (c) Finite sets \(\{L_i\}_{i\in {\mathfrak {A}}^\pm }\), \(\{W_i\}_{i\in {\mathfrak {A}}^\pm }\) and \(\{\alpha _i\}_{i\in {\mathfrak {A}}^\pm }\) encoding the lengths, potentials and coupling constants, respectively. More precisely, \(b_o\) is endowed with a special length \(L_o\) and potential \(W_o\). If \((v_-,v)\in {\mathbb {T}}_{b_o}^+\) with \(\ell (v) = i\), then \(L_v = L_i\), \(W_v = W_i\) and \(\alpha _v = \alpha _i\). The same attribution is made if \((v,v_+)\in {\mathbb {T}}_{b_o}^-\) with \(\ell (v) = i\).

If we take the twisted view instead, we only need one alphabet \({\mathfrak {A}} = {\mathfrak {A}}^+\cup {\mathfrak {A}}^-\) and one corresponding matrix \(M=(M_{i,j})_{i,j\in {\mathfrak {A}}}\).

A trivial example is the equilateral, \((q+1)\)-regular quantum tree, with identical symmetric potentials W on each edge and identical coupling constant \(\alpha \) on each vertex [12]. In this case, all vertices in \({\mathbb {T}}_{b_o}^{\pm }\) have the same type, and we get two \(1\times 1\) matrices \(M = N = \begin{pmatrix} q \end{pmatrix}\).

An important class of examples comes from universal covers of finite undirected graphs. More precisely, if G is a finite undirected graph and \({\mathbb {T}}\) is its universal cover, then \({\mathbb {T}}\) is a combinatorial tree satisfying condition (i). If we endow G with a quantum structure \({\mathbf {G}}\) and lift it to \({\mathbb {T}}\) in the natural way, then the corresponding \({\mathbf {T}}\) will be a quantum tree of finite cone type.
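To make the universal-cover example concrete, here is a minimal sketch that builds a candidate type matrix from a finite simple graph. It relies on a standard observation that is not spelled out in the text above: in the universal cover, the cone \({\mathbb {T}}_b^+\) is determined by the bond of G that b projects to, so the bonds of G can serve as a (possibly redundant) alphabet, with one child of type \(b'\) for each bond \(b'\) that starts at \(t_b\) and is not the reversal \({\hat{b}}\). The function name `bond_matrix` and the vertex labels are hypothetical.

```python
def bond_matrix(edges):
    """Return (bonds, M) for a finite simple graph given as a list of edges (u, v).

    bonds lists every oriented edge once in each direction. M[i][j] = 1 when
    bond j starts where bond i ends and is not the reversal of bond i, so that
    row i counts the children of a vertex whose incoming bond projects to bond i.
    """
    bonds = [(u, v) for u, v in edges] + [(v, u) for u, v in edges]
    index = {b: i for i, b in enumerate(bonds)}
    size = len(bonds)
    M = [[0] * size for _ in range(size)]
    for (u, v) in bonds:
        for (v2, w) in bonds:
            if v2 == v and w != u:  # continue from t_b without backtracking
                M[index[(u, v)]][index[(v2, w)]] = 1
    return bonds, M


if __name__ == "__main__":
    # Complete graph on 4 vertices: its universal cover is the 3-regular tree.
    k4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
    bonds, M = bond_matrix(k4)
    print(len(bonds), [sum(row) for row in M])
```

For the complete graph on 4 vertices every row sums to 2, in agreement with the regular example above where \(M=(q)\) with \(q=2\) once identical types are merged. Lengths, potentials and couplings attached to the bonds of \({\mathbf {G}}\) may prevent such merging.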

Quantum trees of finite cone type satisfying (a)–(c) will be our basic, “unperturbed” trees. We denote the Schrödinger operator (1.2) acting in this setting as \({\mathscr {H}}_0\). It is self-adjoint, as a consequence of Theorem 17 in [23]. Later on, we shall study random perturbations of these trees, and denote the corresponding self-adjoint operator by \({\mathscr {H}}^{\omega }_\varepsilon \), where \(\varepsilon \) is the strength of the disorder.

We make the following assumption on \({\mathbb {T}}\):

- (C1*): For any \(k,l\in {\mathfrak {A}}^+\), there is \(n=n(k,l)\) such that \((M^n)_{k,l}\ge 1\). Similarly, for \(i,j\in {\mathfrak {A}}^-\), there is \(n=n(i,j)\) with \((N^n)_{i,j}\ge 1\).
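Condition (C1*) can be tested mechanically for a given matrix. Since the entries of M are nonnegative integers, \((M^n)_{k,l}\ge 1\) holds exactly when there is a path of length n from k to l in the directed graph with an arc \(k\rightarrow l\) whenever \(M_{k,l}\ge 1\). The sketch below uses Warshall's transitive closure for this reachability check; the function name `satisfies_c1_star` is hypothetical, and the same routine applies to N.

```python
def satisfies_c1_star(M):
    """Return True if for all k, l there is n >= 1 with (M^n)[k][l] >= 1.

    M is a square matrix (list of lists) of nonnegative integers.
    """
    m = len(M)
    # reach[k][l] is True when some path of length >= 1 leads from k to l.
    reach = [[M[k][l] >= 1 for l in range(m)] for k in range(m)]
    for j in range(m):  # allow j as an intermediate type
        for k in range(m):
            if reach[k][j]:
                for l in range(m):
                    if reach[j][l]:
                        reach[k][l] = True
    return all(all(row) for row in reach)


if __name__ == "__main__":
    print(satisfies_c1_star([[2]]))             # regular tree with q = 2
    print(satisfies_c1_star([[0, 1], [1, 0]]))  # two alternating types
    print(satisfies_c1_star([[1, 1], [0, 1]]))  # type 0 never reappears below type 1
```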

See Remark 1.2 below for a discussion of this condition. We may now state a first theorem, which describes the structure of the spectrum of \({\mathscr {H}}_0= {\mathscr {H}}_{\mathbf {T}}\) on a tree \({\mathbf {T}}\) of finite cone type. We denote by \(G_0^z(x,y)=({\mathscr {H}}_0-z)^{-1}(x,y)\) the Green’s function of \({\mathscr {H}}_0\).

Theorem 1.1

Let M, N satisfy (C1*). Then the spectrum of \({\mathscr {H}}_0\) consists of a disjoint union of closed intervals and of isolated points: \(\sigma ({\mathscr {H}}_0)= \left( \bigsqcup _rI_r \right) \cup {\mathfrak {P}}\), where the \(I_r\) are closed intervals, and \({\mathfrak {P}}\) is a discrete set. The spectrum is purely absolutely continuous in the interior of each band \(\mathring{I}_r\). For \(\lambda \in \mathring{I}_r\), and for any \(v\in {\mathbb {T}}\), the limit \(G^{\lambda +{\mathrm {i}}0}_0(v,v)\) exists and satisfies \({\text {Im}}G^{\lambda +{\mathrm {i}}0}_0(v,v) >0\), where \(G_0^z\) is the Green’s function of \({\mathscr {H}}_0\).

Let \(R_{z,0}^{\pm }\) be the Weyl-Titchmarsh functions of \({\mathscr {H}}_0\) as defined in [1], see (2.2). Let \(R_{\lambda ,0}^{\pm }=R_{\lambda +{\mathrm {i}}0,0}^{\pm }\) when the limit exists. Theorem 1.1 implies that \({\text {Im}}R_{\lambda ,0}^+(v) + {\text {Im}}R_{\lambda ,0}^-(v)>0\) in \(\mathring{I}_r\). We will need the stronger property that \({\text {Im}}R_{\lambda ,0}^+(v)>0\) for all v. For this, we introduce the following strengthening of (C1*).

- (C1): The quantum tree \({\mathbf {T}}\) is the universal cover of a finite quantum graph \({\mathbf {G}}\) of minimal degree \(\ge 2\) which is not a cycle.

Remark 1.2

Condition (C1*) means that on \({\mathbb {T}}_{b_o}^+\), any cone type \(l\in {\mathfrak {A}}^+\) appears as offspring of any \(k\in {\mathfrak {A}}^+\) after a finite number of generations, and similarly for \({\mathbb {T}}_{b_o}^-\). It is not required that cone types in \({\mathfrak {A}}^-\) appear in \({\mathbb {T}}_{b_o}^+\) - we only need the matrices M and N to be separately irreducible. We can also allow for “rooted” trees where the root o has degree one. In this case the situation is a bit simpler actually; we only have to deal with one matrix M. Condition (C1*) applies in particular to trees with a “radial periodic” data, i.e. data that are periodic functions of the distance to the origin (such as some examples appearing in [28]).

Assumption (C1) implies (C1*) (see Remark 3.2), and is in fact more restrictive. In particular, \({\mathbf {T}}\) is “unimodular”, that is, all data is somehow homogeneous as we move along the tree. This excludes for example the binary tree and more generally radial periodic trees, where the root plays a special role. However, such unimodular trees are still very general, they are actually the most interesting for us, and many techniques (such as a reduction to a half-line model) fail to tackle them. Even in the very simple case where the base graph \({\mathbf {G}}\) is regular but the edge lengths are not equal, the lifted structure in general will be neither radial periodic, nor identical around each vertex (in contrast to [13]).

Note that the case where \({\mathbf {G}}\) is a cycle is already known when the couplings are zero. In this case \({\mathscr {H}}_{{\mathbf {T}}}\) is just a periodic Schrödinger operator on \({\mathbb {R}}\) (of period \(\le |{\mathbf {G}}|\)), and it is well known that the spectrum is then purely AC [27, Sect. XIII.16].

Theorem 1.2

If \({\mathbf {T}}\) satisfies (C1), then the spectrum of \({\mathscr {H}}_0\) consists of a disjoint union of closed intervals and of isolated points: \(\sigma ({\mathscr {H}}_0)= \left( \bigsqcup _rI_r \right) \cup {\mathfrak {P}}\), where the \(I_r\) are closed intervals, and \({\mathfrak {P}}\) is a discrete set. The spectrum is purely absolutely continuous in the interior of each band \(\mathring{I}_r\). For \(\lambda \in \mathring{I}_r\), the limit \(R_{\lambda +{\mathrm {i}}0,0}^+(v)\) exists for any \(v\in V\) and satisfies \({\text {Im}}R_{\lambda ,0}^+(v) >0\).

Remark 1.3

In Theorems 1.1 and 1.2, it is not excluded in principle that \(\bigcup _r I_r = \emptyset \), i.e. the spectrum consists of isolated points. We think this never happens for infinite quantum trees of finite cone type with the \(\delta \)-conditions we consider, i.e. we believe these should always have some continuous spectrum. We did not find such a result in the literature however. This is why we dedicate Sect. 4 to prove the following: if \({\mathbf {T}}\) satisfies either:

- (1) Assumption (C1) and has a single data \((L,\alpha ,W)\) (all edges carry the same length, coupling and symmetric potential),

- (2) Or has a general data \((L_e,\alpha _{o_e},W_e)_{e\in E({\mathbf {G}})}\), but the finite graph G is moreover Hamiltonian,

then \({\mathscr {H}}_{{\mathbf {T}}}\) always has some continuous spectrum, i.e. \(\bigcup _r\mathring{I}_r \ne \emptyset \). Recall that a finite graph is Hamiltonian if it has a cycle that visits each vertex exactly once. Note that as a discrete tree, \({\mathbb {T}}\) may cover many different graphs. We only need one of these finite graphs to be Hamiltonian. For example, we can consider any regular tree, despite the fact that some regular graphs (like the Petersen graph) are not Hamiltonian.

In particular, the Cayley tree considered in [13] can be realized as the universal cover of the complete bipartite graph \(K_{2q,2q}\), which is Hamiltonian. For this, use the fact that \(K_{2q,2q}\) has a proper 2q-edge-colouring and put the same length/potential on edges of the same colour. The lift of this is then a tree which has the same data around each vertex, and we may take \(L_{q+j}=L_j\), \(W_{q+j}=W_j\) to be in the setting [13]. Then our theorems imply this tree has nontrivial bands of pure AC spectrum, thus enriching the results of [13]. Again, this is just one very special application of our framework.

1.3 Random perturbations of trees of finite cone type

Fix a quantum tree \({\mathbf {T}}\) satisfying (C1). As explained in Remark 3.2, any such tree is a tree of finite cone type. We fix an edge \(e\in E({\mathbb {T}})\), and see our quantum tree in the twisted view (in which all vertices are descendants of a vertex o), so as to deal with a single alphabet \({\mathfrak {A}}\) and a corresponding matrix M. We denote the lengths and coupling constants of the unperturbed tree \({\mathbf {T}}\) by \((L_v^0)_{v\in V^{*}}\) and \((\alpha _v^0)_{v\in V}\). These can also be denoted \((L_i^0)_{i\in {\mathfrak {A}}\cup \{o\}}\) and \((\alpha _i^0)_{i\in {\mathfrak {A}}}\). We assume there are no potentials on the edges and the couplings are nonnegative:

$$\begin{aligned} W_v \equiv 0 \quad \text {and}\quad \alpha _v^0 \ge 0 \qquad \text {for all } v\,. \end{aligned} \tag{1.7}$$

We now want to analyze random perturbations of \({\mathbf {T}}\). For this purpose, we introduce a probability space \((\varOmega , {\mathscr {F}}, {{\,\mathrm{{\mathbb {P}}}\,}})\), a family of random variables \(\omega \in \varOmega \mapsto (L_v^{\omega })_{v\in V^{*}}\) representing random lengths, and a family of random variables \(\omega \in \varOmega \mapsto (\alpha _v^{\omega })_{v\in V}\) representing random coupling constants. In principle we could also consider random potentials \(\omega \in \varOmega \mapsto (W_v^{\omega })_{v\in V^{*}}\), however here we assume there are no potentials on the edges even after perturbation. We also assume the perturbed couplings are nonnegative:

$$\begin{aligned} \alpha _v^{\omega } \ge 0 \qquad \text {for all } v\in V \text { and } \omega \in \varOmega \,. \end{aligned} \tag{1.8}$$

We make the following assumptions on the random perturbation (see Remark 1.1 for the notation \({\mathbb {T}}_{o}^+\)):

- (P0): The operator \({\mathscr {H}}_{\varepsilon }^{\omega }\) is the Laplacian on the edges acting on \(\bigoplus W^{2,2}(0,L_v^{\omega })\), satisfying \(\delta \)-conditions with coupling constants \((\alpha _v^{\omega })_{v\in {\mathbb {T}}}\), which are assumed to satisfy

  $$\begin{aligned} L_v^{\omega } \in \left[ L_v^0 - \varepsilon , L_v^0 + \varepsilon \right] \quad \text {and} \quad \alpha _v^{\omega } \in \left[ \alpha _v^0 - \varepsilon , \alpha _v^0 + \varepsilon \right] . \end{aligned}$$

- (P1): For all \(v,w\in {\mathbb {T}}_{o}^+\), the random variables \((\alpha _v^{\omega },L_v^{\omega })\) and \((\alpha _w^{\omega },L_w^{\omega })\) are independent if the forward trees of v and w do not intersect, i.e. if \({\mathbb {T}}_{(v_-,v)}^+ \cap {\mathbb {T}}_{(w_-,w)}^+ = \emptyset \).

- (P2): For all \(v,w\in {\mathbb {T}}_{o}^+\) that share the same label, the restrictions of the random variables \((\alpha ^{\omega },L^{\omega })\) to the isomorphic forward trees of v and w are identically distributed.

Remark 1.4

Assumptions (P1) and (P2) hold, in particular, for independent identically distributed random variables (which is the main case we have in mind).

We shall consider intervals I lying in the interior of the unperturbed AC spectrum:

$$\begin{aligned} I \subset \varSigma := \bigcup _r \mathring{I}_r\,, \end{aligned}$$

where \(I_r\) are given in Theorem 1.2.

We will also need to ensure that the various \(\sin \big (\sqrt{\lambda }L_v\big )\) do not vanish. More precisely, by (P0), the perturbed lengths all lie in \(\bigcup _{j\in {\mathfrak {A}}\cup \{o\}} [L_{j,\min }(\varepsilon ), L_{j,\max }(\varepsilon )]\), where \(L_{j,\min }(\varepsilon ) = L_j^0-\varepsilon \) and \(L_{j,\max }(\varepsilon ) = L_{j}^0+\varepsilon \). We then assume

$$\begin{aligned} I\cap {\mathscr {D}} = \emptyset \,, \end{aligned}$$

where the set \({\mathscr {D}}={\mathscr {D}}_{\varepsilon }\) is a “thickening” of the Dirichlet spectrum, given by

$$\begin{aligned} {\mathscr {D}}_{\varepsilon } = \bigcup _{j\in {\mathfrak {A}}\cup \{o\}} \bigcup _{n\ge 1} \left[ \frac{\pi ^2n^2}{L_{j,\max }(\varepsilon )^2}, \frac{\pi ^2n^2}{L_{j,\min }(\varepsilon )^2}\right] . \end{aligned}$$

This ensures that \(\sin \big (\sqrt{\lambda }L_v^{\omega }\big ),\sin \big (\sqrt{\lambda }L_v^0\big )\ne 0\) for any \(\lambda \in I\), \(v\in {\mathbb {T}}\) and \(\omega \).
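The non-vanishing requirement can be checked numerically for a candidate interval. For \(\lambda >0\), \(\sin (\sqrt{\lambda }L)=0\) exactly when \(\lambda = n^2\pi ^2/L^2\) for some integer \(n\ge 1\), so as L ranges over \([L^0-\varepsilon , L^0+\varepsilon ]\) the excluded energies fill the intervals \([n^2\pi ^2/(L^0+\varepsilon )^2, n^2\pi ^2/(L^0-\varepsilon )^2]\). The sketch below takes the thickened Dirichlet set to be the union of these intervals, which is an assumption of this illustration; the function names are hypothetical.

```python
import math


def dirichlet_bands(length0: float, eps: float, lam_max: float):
    """Intervals of lambda > 0, starting below lam_max, on which
    sin(sqrt(lambda) * L) = 0 for some L in [length0 - eps, length0 + eps]."""
    if not 0 <= eps < length0:
        raise ValueError("need 0 <= eps < length0 so that all lengths stay positive")
    l_min, l_max = length0 - eps, length0 + eps
    bands = []
    n = 1
    while (n * math.pi / l_max) ** 2 <= lam_max:
        bands.append(((n * math.pi / l_max) ** 2, (n * math.pi / l_min) ** 2))
        n += 1
    return bands


def avoids_dirichlet(interval, lengths0, eps: float) -> bool:
    """True if the closed interval (a, b) with 0 < a <= b meets none of the bands."""
    a, b = interval
    for length0 in lengths0:
        for low, high in dirichlet_bands(length0, eps, b):
            if low <= b and high >= a:
                return False
    return True


if __name__ == "__main__":
    print(dirichlet_bands(1.0, 0.1, 50.0))
    print(avoids_dirichlet((1.0, 5.0), [1.0], 0.1))
    print(avoids_dirichlet((9.0, 10.0), [1.0], 0.1))
```

As \(\varepsilon \) decreases the bands shrink to the points \(n^2\pi ^2/(L_j^0)^2\), so a compact interval that avoids the unperturbed Dirichlet values also avoids the thickened set once \(\varepsilon \) is small enough.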

Recall that the Weyl-Titchmarsh functions \(R^+_z(v)\) will be introduced in (2.2). Introduce the following condition:

- (Green -s): There is a non-empty open set \(I_1\) and some \(s>0\) such that for all \(b\in {\mathbb {T}}\),

  $$\begin{aligned} \sup _{\lambda \in I_1,\eta \in (0,1)} {{\,\mathrm{{\mathbb {E}}}\,}}\left( \left| {\text {Im}}R_{\lambda +{\mathrm {i}}\eta }^+(o_{b})\right| ^{-s} \right) <\infty \,. \end{aligned}$$

Condition (Green -s) implies in particular that the spectrum in \(I_1\) is purely AC, as long as it stays away from the Dirichlet spectrum, see “Appendix A.2”. Here (Green -s) refers to “Green’s function” and the moment value s. In fact, such inverse bounds on the WT function imply moments bounds on the Green’s function; see Corollary 2.1.

Introduce the following assumptions:

- (C0): The minimal degree of \({\mathbb {T}}\) is at least 3.

- (C2): For each \(k\in {\mathfrak {A}}\), there is \(k'\) with \(M_{k,k'}\ge 1\) such that for any \(l\in {\mathfrak {A}}\): \(M_{k,l}\ge 1\) implies \(M_{k',l}\ge 1\).

The second assumption ensures that each vertex \(v\in {\mathbb {T}}\) has at least one child \(v'\) such that each label found in \({\mathscr {N}}_v^+\) can also be found in \({\mathscr {N}}_{v'}^+\). See [5] for examples of such trees.
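Condition (C2) is a finite check on the matrix M. The following minimal sketch tests it directly from the definition; the function name `satisfies_c2` is hypothetical, and types are indexed by row and column positions.

```python
def satisfies_c2(M):
    """Return True if every type k has a child type k' (M[k][k'] >= 1) such that
    every child type of k is also a child type of k'."""
    m = len(M)
    for k in range(m):
        children = [l for l in range(m) if M[k][l] >= 1]
        # Look for a child type whose own children cover all of k's child types.
        if not any(all(M[kp][l] >= 1 for l in children) for kp in children):
            return False
    return True


if __name__ == "__main__":
    print(satisfies_c2([[2]]))             # regular tree: one type, its own child
    print(satisfies_c2([[1, 1], [1, 1]]))  # every type has children of both types
    print(satisfies_c2([[0, 1], [1, 0]]))  # alternating types
```

In the alternating example a vertex of type 0 only has children of type 1, whose children are all of type 0, so no child reproduces the labels of its parent's offspring and the check fails.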

Theorem 1.3

Let \({\mathbf {T}}\) satisfy (C0), (C1), (C2) and \((\alpha ,L)\) satisfy (P0), (P1) and (P2), and be without edge potentials. Let \(I\subset \varSigma \) be compact with \(I\cap {\mathscr {D}} = \emptyset \). Then for any \(s>1\), we may find \(\varepsilon _0(I,s)\) such that (Green -s) holds on I for any \(\varepsilon \le \varepsilon _0\). In particular, \({\mathscr {H}}^{\omega }_{\varepsilon }\) has purely absolutely continuous spectrum almost-surely in I.

The “in particular” part is due to Theorem A.1.

In the above theorem, the disorder window \(\varepsilon _0(I,s)\) depends on the value of the moment s. We can actually obtain a disorder window valid uniformly for all s, but at the price of assuming some regularity on the \(\delta \)-potential:

- (P3): For any \(v\in {\mathbb {T}}\), \(\ell (v)=j\), \(j\in {\mathfrak {A}}\), the distribution \(\nu _j\) of \(\alpha _v^\omega \) is Hölder continuous: there exist \(C_{\nu }>0\) and \(\beta \in (0,1]\) such that for any bounded \(I\subset {\mathbb {R}}\),

  $$\begin{aligned} \max _{j\in {\mathfrak {A}}}\nu _j(I) \le C_{\nu } \cdot |I|^\beta \, . \end{aligned}$$

This holds e.g. if the \(\nu _j\) are absolutely continuous with a bounded density (then \(\beta =1\)).

Theorem 1.4

Suppose in addition to the assumptions of Theorem 1.3 that (P3) is satisfied. Then there exists \(\varepsilon _0(I)\) such that for any \(\varepsilon \le \varepsilon _0\) and any \(s\ge 1\), (Green -s) holds on I.

We finally mention that assumptions (1.7)–(1.8) in the last two theorems come from our choice to work with the quantity \(\frac{R_z^+(o_b)}{\sqrt{z}}\) in the proof, as it behaves quite nicely with respect to Cayley transforms and transmission from edge to edge. The sign assumption \(\alpha _v\ge 0\) (and where relevant \(W_v\ge 0\)) ensures that \({\text {Im}}\frac{R_z^+(o_b)}{\sqrt{z}} > 0\) when \({\text {Im}}z > 0\) (see the proof of Lemma 2.3 in “Appendix A.1”). This “Herglotz” property is a necessary input for our proof.

2 Green’s Function on Quantum Trees

The aim of this section is to derive quantum analogs for the well-known recursive formulas of the Green’s function on combinatorial trees. These identities will play a key role in the spectral analysis of the quantum tree, and may be of independent interest. In fact, we shall also need them when studying quantum ergodicity in [6]. Some of these identities appeared before in [1].

Throughout this section, we fix a quantum tree \({\mathbf {T}}\), and denote by \(W_{\max }^{2,2}({\mathbf {T}})\) the set of \(\psi =(\psi _v)\) such that \(\psi _v\in W^{2,2}(0,L_v)\), \(\sum _{v}\Vert \psi _v\Vert _{W^{2,2}}^2<\infty \).

If \(b\in B({\mathbb {T}})\), we write, as in Sect. 1.1, \({\mathbb {T}}_b^+\) for the connected component of \({\mathbb {T}}{\setminus } \{e(b)\} \) containing \(t_{b}\), and \({\mathbb {T}}_{b}^-\) for the component of \({\mathbb {T}}{\setminus } \{e(b)\} \) containing \(o_{b}\), where \({\mathbb {T}}{\setminus } \{e(b)\}\) is the combinatorial tree \({\mathbb {T}}\) from which we removed the edge corresponding to b.

If \(x = (b, x_b)\in {\mathscr {T}}\), we define a quantum tree \({\mathbf {T}}_x^+\) by \({\mathbf {T}}_x^+ = [x_b,t_b]\cup {\mathbf {T}}_b^+\). More precisely, add a vertex \(v_x\) at x, let \(V({\mathbf {T}}_x^+)= V({\mathbf {T}}^+_b) \cup \{v_x\}\), \(E({\mathbf {T}}_x^+)= E({\mathbf {T}}^+_b) \cup \{v_x,t_b\}\), \(L_{\{v_x,t_b\}} = L_b-x_b\), \(W_{(v_x,t_b)} = (W_b)|_{[L_b-x_b, L_b]}\), \(\alpha _{v_x}=0\), and the lengths, potentials and coupling constants be the same as those of \({\mathbf {T}}^+_b\) on the rest of the edges. In a similar fashion, we define \({\mathbf {T}}_x^- = {\mathbf {T}}_b^-\cup [o_b,x_b]\).

Let \(u=(b, u_b)\in {\mathscr {T}}\). By [1, Theorem 2.1], which remains true in our context, if we define \({\mathscr {H}}_{{\mathbf {T}}_u^{\pm }}^{\max }\) on \({\mathscr {T}}_u^{\pm }\) to be the Schrödinger operator \(-\varDelta +W\) with domain \(D({\mathscr {H}}_{{\mathbf {T}}_u^{\pm }}^{\max })\), the set of \(\psi \in W_{\max }^{2,2}({\mathbf {T}}_u^{\pm })\) satisfying \(\delta \)-conditions on inner vertices of \({\mathbf {T}}_u^{\pm }\), then for any \(z\in {\mathbb {C}}^+:={\mathbb {H}}:= \{z\in {\mathbb {C}}: {\text {Im}}z >0\}\), there are unique z-eigenfunctions \(V_{z;u}^+\in D({\mathscr {H}}_{{\mathbf {T}}_u^+}^{\max })\), \(U_{z;u}^-\in D({\mathscr {H}}_{{\mathbf {T}}_u^-}^{\max })\) satisfying \(U_{z;u}^-(u)=V_{z;u}^+(u)=1\). Complex eigenvalues exist because \({\mathscr {H}}_{{\mathbf {T}}_u^\pm }^{\max }\) is not self-adjoint, as there are no domain conditions at u.

Note that, by uniqueness, if \(v,v'\in {\mathscr {T}}\) with \(v\in {\mathscr {T}}_{v'}^-\), then on \({\mathscr {T}}_v^-\), the functions \(U_{z;v}^-\) and \(U_{z;v'}^-\) must be proportional. Similarly, \(V_{z;o}^+\) and \(V_{z;o'}^+\) must be proportional on \({\mathscr {T}}_o^+\) if \(o\in {\mathscr {T}}_{o'}^+\). Therefore, the quantity introduced in (2.1) below is independent of the choice of o and v.

Lemma 2.1

Let \(z\in {\mathbb {C}}^+\). The resolvent \(({\mathscr {H}}_{{\mathbf {T}}}-z)^{-1}\) of \({\mathscr {H}}_{{\mathbf {T}}}\) is an integral operator with kernel \(G^{z}(x,y)\) defined as follows. Given \(x,y\in {\mathscr {T}}\), fix o, v such that \(x,y\in {\mathscr {T}}_o^+ \cap {\mathscr {T}}_v^-\). Then

where \({\mathscr {W}}^{z}_{v,o}(x)\) is the Wronskian

Versions of this lemma previously appeared in [1, Lemma A.2] and [17, Lemma D.15]. We give the proof in “Appendix A” for completeness.

Since for each \(z\in {\mathbb {C}}^+\), \(G^z\) satisfies the \(\delta \)-boundary conditions in each of its arguments, we deduce that, whenever \(o_b= o_{b'}=v\), we have \(G^z( (b, 0), \cdot ) = G^z ((b', 0), \cdot )\) and \(G^z(\cdot , (b, 0)) = G^z (\cdot , (b', 0))\). These quantities will therefore be denoted by \(G^z (v, \cdot )\) and \(G^z(\cdot , v)\) respectively.

As in [1], we define the Weyl-Titchmarsh (WT) functions (which are analogous to Dirichlet-to-Neumann functions) for \(x\in {\mathscr {T}}\) by

Note that we take here the coherent point of view, which is why there is a negative sign in the definition of \(R^-_{z}(x)\).

Given an oriented edge \(b=(o_b,t_b)\), we define

Remark 2.1

If \(a< \inf \sigma ({\mathscr {H}}_{{\mathbf {T}}})\), then \(\zeta ^{z}(b)\) is well-defined on \({\mathbb {C}}{\setminus } (a,\infty )\), i.e. the denominator does not vanish, as follows from (2.1) and the proof of [1, Theorem 2.1(ii)]. The proof also shows that \(z \mapsto \zeta ^{z}(b)\) is holomorphic on \({\mathbb {C}}{\setminus } (a,\infty )\) and real-valued on \((-\infty ,a]\).

In the case of combinatorial trees, \(\zeta ^{z}(b)\) coincides with what was denoted \(\zeta ^{z}_{o_b}(t_b)\) in [3]. In fact, by the multiplicative property of the Green function, we have \(\frac{G^{z}(o_b,t_b)}{G^{z}(o_b,o_b)} = \frac{G^{z}(o_b,o_b)\zeta _{o_b}^{z}(t_b)}{G^{z}(o_b,o_b)} = \zeta _{o_b}^{z}(t_b)\).

If \({\mathbf {T}}\) is the \((q+1)\)-regular tree with equilateral edges and identical coupling constants and potentials, then \(\zeta ^{z}(b)\) is the quantity \(\mu ^-(z)\) in [12], and is independent of b. Moreover, the limit \(\mu ^-(\lambda ) = \lim _{\eta \downarrow 0} \mu ^-(\lambda +{\mathrm {i}}\eta )\) exists in this case, provided that \(\lambda \) is not in the Dirichlet spectrum, i.e., that \(\sin (\lambda L)\ne 0\).

Finally, for the quantum Cayley graphs of [13], the \(\zeta ^{z}(b)\) coincide with the multipliers \(\mu _m(z)\). Hence, there are finitely many distinct \(\zeta ^{z}(b)\). Moreover, in this setting, \(\zeta ^{z}({\hat{b}}) = \zeta ^{z}(b)\), and \(\zeta ^{z}(gb) = \zeta ^{z}(b)\), where g is an element of the group acting on the graph.

Given an oriented edge b, we will denote by \({\mathscr {N}}_b^+\) the set of outgoing edges from b, i.e. the set of \(b'\) with \(o_{b'}=t_b\) and \(b'\ne {\hat{b}}\).

Lemma 2.2

Let \(z\in {\mathbb {C}}^+\). We have the following relations between \(\zeta ^{z}\) and the WT functions \(R_{z}^{\pm }\):

Moreover,

and

where \(b'=(t_b,t_{b'})\). Given a non-backtracking path \(b_1,\ldots , b_k\) (that is to say, \(o_{b_{i+1}} = t_{b_i}\) and \(t_{b_{i+1}} \ne o_{b_i}\) for all \(i \in \{1,\ldots ,k-1\}\)), we have the multiplicative property

Finally, for any path \(b_1,\ldots , b_k\), we have

Proof

By (2.1), \(\zeta ^{z}(b) = \frac{V_{z;o}^+(t_b)}{V_{z;o}^+(o_b)} \cdot \frac{{\mathscr {W}}_{v,o}^{z}(o_b)}{{\mathscr {W}}_{v,o}^{z}(t_b)}\). But the Wronskian is constant on b, as checked by differentiating it. Moreover, since \(V_{z;o}^+(x_b)\) is a z-eigenfunction on b, we have \(V_{z;o}^+(t_b) = C_{z}(L_b) V_{z;o}^+(o_b) + S_{z}(L_b) (V_{z;o}^+)'(o_b)\). Hence, we have as claimed \(\zeta ^{z}(b) = C_{z}(L_b) + R^+_{z}(o_b)S_{z}(L_b)\). Next, \(\zeta ^{z}({\hat{b}}) = \frac{G^{z}(t_b,o_b)}{G^{z}(t_b,t_b)} = \frac{U_{z;v}^-(o_b)}{U_{z;v}^-(t_b)}\) again by constancy of \({\mathscr {W}}_{v,o}^{z}\) on b. Writing (1.6) in the form

we get \(U_{z;v}^-(o_b) = S_{z}'(L_b) U_{z;v}^-(t_b) -S_{z}(L_b)(U_{z;v}^-)'(t_b)\), so \(\zeta ^{z}({\hat{b}}) = S_{z}'(L_b) + R^-_{z}(t_b) S_{z}(L_b)\) as claimed.

Next, \(V_{z;o}^+(y_b) = V_{z;o}^+(o_b) C_{z}(y_b) + (V_{z;o}^+)'(o_b) S_{z}(y_b)\), so

Using \(R_{z}^+(o_b) = \frac{\zeta ^{z}(b)-C_{z}(L_b)}{S_{z}(L_b)}\), we get

The first part of (2.5) follows by the Wronskian identity \(C_z(x)S_z'(x)-C_z'(x)S_z(x)=1\).

For the second part, by (2.11),

The claim now follows as before using (2.4).

Since \(V_{z;o}^+\) satisfies the \(\delta \)-conditions, we have

Recalling (2.4), this proves (2.6).

By (2.1),

so

We have \(R_{z}^+(t_b) = \frac{S_{z}'(L_b)}{S_{z}(L_b)} - \frac{1}{S_{z}(L_b)\zeta ^{z}(b)}\) and \(R^-_{z}(t_b) = \frac{\zeta ^{z}({\hat{b}})-S_{z}'(L_b)}{S_{z}(L_b)}\) by (2.4) and (2.5). Hence,

proving the first part of (2.7).

For the second part, we showed that \(\frac{S_{z}(L_b)}{G^{z}(t_b,t_b)} = \frac{1-\zeta ^{z}(b)\zeta ^{z}({\hat{b}})}{\zeta ^{z}(b)}\), so replacing b by \({\hat{b}}\) we deduce that \(\frac{S_{z}(L_b)}{G^{z}(o_b,o_b)} = \frac{1-\zeta ^{z}({\hat{b}})\zeta ^{z}(b)}{\zeta ^{z}({\hat{b}})}\), so \(\frac{\zeta ^{z}({\hat{b}})}{\zeta ^{z}(b)} = \frac{G^{z}(o_b,o_b)}{G^{z}(t_b,t_b)}\).

It follows from (2.13) and (2.14) that

Inserting this expression in (2.6), we obtain (2.8).

As previously observed, the Wronskian is constant on each b, so \(\zeta ^{z}({\hat{b}}) = \frac{U_{z;v}^-(o_b)}{U_{z;v}^-(t_b)}\). Hence,

By (2.7), \(\zeta ^{z}(b_1)\cdots \zeta ^{z}(b_k) = \zeta ^{z}({\hat{b}}_1)\cdots \zeta ^{z}({\hat{b}}_k) \cdot \frac{G^{z}(t_{b_k},t_{b_k})}{G^{z}(o_{b_1}, o_{b_1})}\), proving the other equality.

Finally, it follows from (2.9) that

\(\square \)
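The Wronskian identity \(C_z(x)S_z'(x)-C_z'(x)S_z(x)=1\) used in the proof above can be checked numerically. The following is a minimal sketch, assuming that \(C_z\) and \(S_z\) are the solutions of \(-\psi ''+W\psi =z\psi \) on an edge with \(C_z(0)=1\), \(C_z'(0)=0\), \(S_z(0)=0\), \(S_z'(0)=1\). The function names, the Runge-Kutta discretization and the sample potential are illustrative choices and do not come from the paper.

```python
import cmath


def fundamental_solutions(z, length, potential=lambda x: 0.0, steps=2000):
    """Integrate -psi'' + W psi = z psi on [0, length] with classical RK4.

    Returns (C, C', S, S') evaluated at x = length, where C(0)=1, C'(0)=0
    and S(0)=0, S'(0)=1. The potential W is a hypothetical user-supplied
    real function of x.
    """
    h = length / steps

    def rhs(x, psi, dpsi):
        # First-order form: (psi, psi')' = (psi', (W - z) psi).
        return dpsi, (potential(x) - z) * psi

    def integrate(psi, dpsi):
        x = 0.0
        for _ in range(steps):
            a1, b1 = rhs(x, psi, dpsi)
            a2, b2 = rhs(x + h / 2, psi + h / 2 * a1, dpsi + h / 2 * b1)
            a3, b3 = rhs(x + h / 2, psi + h / 2 * a2, dpsi + h / 2 * b2)
            a4, b4 = rhs(x + h, psi + h * a3, dpsi + h * b3)
            psi += h / 6 * (a1 + 2 * a2 + 2 * a3 + a4)
            dpsi += h / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
            x += h
        return psi, dpsi

    c, dc = integrate(1.0 + 0j, 0.0 + 0j)
    s, ds = integrate(0.0 + 0j, 1.0 + 0j)
    return c, dc, s, ds


def wronskian_defect(z, length, potential=lambda x: 0.0):
    """Return |C S' - C' S - 1| at x = length; it should be close to zero."""
    c, dc, s, ds = fundamental_solutions(z, length, potential)
    return abs(c * ds - dc * s - 1)
```

For \(W=0\) the same routine should reproduce the closed forms \(C_z(x)=\cos (\sqrt{z}x)\) and \(S_z(x)=\sin (\sqrt{z}x)/\sqrt{z}\), which gives an independent check of the integrator.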

For the following lemma, fix \(o,v\in V\) and consider the WT functions (2.2). Assume that \(o_b,t_b\in V({\mathbf {T}}_o^+)\cap V({\mathbf {T}}_v^-)\), that is to say, that \(b\in B({\mathbf {T}}_o^+)\) and \({\hat{b}}\in B({\mathbf {T}}_v^-)\). Let \({\mathbf {T}}_{o_b}^+ \subseteq {\mathbf {T}}_o^+\) and \({\mathbf {T}}_{t_b}^- \subseteq {\mathbf {T}}_v^-\) be the subtrees starting at \(o_b\) and \(t_b\), respectively. Let \(G_{{\mathbf {T}}_{o_b}^+}(x,y)\) be the Green kernel of the \(\delta \)-problem on \({\mathbf {T}}_{o_b}^+\). This means the usual \(\delta \)-conditions at \(v\in V({\mathbf {T}}_{o_b}^+)\), with \(\alpha _{o_b}=0\). Similarly, \(G_{{\mathbf {T}}_{t_b}^-}(x,y)\) is the Green kernel of the \(\delta \)-problem on \({\mathbf {T}}_{t_b}^-\).

We will need the notion of Herglotz functions [14] throughout the paper. A Herglotz function (a.k.a. Nevanlinna function or Pick function) is an analytic function from \({\mathbb {C}}^+\) to \({\mathbb {C}}^+\). Herglotz functions form a positive cone: if \(f_1\), \(f_2\) are Herglotz and \(a_1, a_2\) are positive constants, then \(a_1f_1 + a_2f_2\) is Herglotz. Composition of two Herglotz functions is again a Herglotz function. The functions \(z\mapsto \sqrt{z}\) and \(z\mapsto -1/z\) for example are Herglotz.
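The closure properties just listed can be probed on random samples of \({\mathbb {C}}^+\). This is only a sanity check on finitely many points, not a proof; the helper name and the sampling box are hypothetical choices, and \(\sqrt{z}\) is taken to be the principal branch, as assumed here.

```python
import cmath
import random


def maps_samples_into_upper_half_plane(f, samples):
    """Return True if f sends every sample point of C^+ to a point with Im > 0."""
    return all(f(z).imag > 0 for z in samples)


random.seed(0)
# Random points with Im z > 0 in a bounded box of the upper half-plane.
samples = [complex(random.uniform(-5, 5), random.uniform(1e-3, 5))
           for _ in range(1000)]


def neg_inverse(z):
    return -1 / z


def cone_combination(z):
    # Positive combination a1*f1 + a2*f2 with a1 = 2, a2 = 0.5.
    return 2.0 * cmath.sqrt(z) + 0.5 * neg_inverse(z)


def composition(z):
    # Composition of the two Herglotz functions: z -> -1/sqrt(z).
    return neg_inverse(cmath.sqrt(z))


def square(z):
    # z -> z^2 is analytic but not Herglotz: it fails when Re z < 0.
    return z * z


results = {
    "sqrt": maps_samples_into_upper_half_plane(cmath.sqrt, samples),
    "-1/z": maps_samples_into_upper_half_plane(neg_inverse, samples),
    "cone": maps_samples_into_upper_half_plane(cone_combination, samples),
    "composition": maps_samples_into_upper_half_plane(composition, samples),
    "z^2": maps_samples_into_upper_half_plane(square, samples),
}
```

The last entry is included as a negative control: an analytic function that does not preserve the upper half-plane is detected by the same test.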

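Every Herglotz function f has a canonical representation [14, Theorem II.I] of the form

$$\begin{aligned} f(z) = Az + B + \int _{{\mathbb {R}}} \left( \frac{1}{t-z} - \frac{t}{1+t^2}\right) {\mathrm {d}}{\mathfrak {m}}(t) \, , \end{aligned}$$

where \(A\ge 0\) and \(B\in {\mathbb {R}}\) are constants and \({\mathfrak {m}}\) is a Borel measure satisfying \(\int _{\mathbb {R}}(1+t^2)^{-1}\,{\mathrm {d}}{\mathfrak {m}}<\infty \).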

Lemma 2.3

Let \(b\in {\mathbf {T}}\) and \(z\in {\mathbb {C}}^+\). Let \(o,v\in V\) be such that \(b\in {\mathbf {T}}_o^+\) and \({\hat{b}}\in {\mathbf {T}}_v^-\). Then we may express

where \(G_{{\mathbf {T}}_v^{\pm }}^z(v,v)\) are defined with the Neumann condition at v.

The functions \(F(z) = R_{z}^+(o_b)\), \(R_{z}^-(t_b)\) and \(G^{z}(v,v)\) are Herglotz functions. If all \(W_v\ge 0\) and \(\alpha _v\ge 0\), then \({\widetilde{F}}(z) = \frac{R_{z}^+(o_b)}{\sqrt{z}}\), \(\frac{R_{z}^-(t_b)}{\sqrt{z}}\) are also Herglotz.

Moreover, we have the following “current” relations:

Equality holds in both cases if \({\text {Im}}z=0\), whenever defined.

Most statements of this lemma appear in [1]. We give the proof in “Appendix A” for completeness. We also deduce that \(-\frac{S_z'(L_b)}{S_z(L_b)}\) and \(S_{z}(L_b)\zeta ^{z}(b)\in {\mathbb {C}}^+\), see Remark A.2.

The following corollary says that the inverse moments of the imaginary part of the WT functions essentially control all relevant spectral quantities on the tree:

Corollary 2.1

Let \(I\subset {\mathbb {R}}\) be compact, \(I\cap {\mathscr {D}}=\emptyset \), and \(z\in {\mathbb {C}}^+\). Fix \(c_1,c_2,c_3>0\) such that for all \(z\in I+{\mathrm {i}}[0,1]\), \(L_b\in [L_{\min },L_{\max }]\),

Then for any \(p\ge 1\), and \(b\in {\mathbb {T}}\),

and

Also note that \(R_z^-(t_b) = R_z^+(o_{\widehat{b}})+\frac{C_z(L_b)-S_z'(L_b)}{S_z(L_b)}\) using (2.4), so, up to choosing \(c_4>0\) with \(|S_z'(L_b)|\le c_4\), a control over all \(R_z^+(o_b)\), \(b\in {\mathbb {T}}\), implies a control over all \(R_z^-(t_b)\).

Proof

We have by (2.13), \(|G^z(o_b,o_b)|^p = |R_z^+(o_b)+R_z^-(o_b)|^{-p}\le |{\text {Im}}R_z^+(o_b)|^{-p}\). By (2.12),

where we used that \(-\frac{S_z'(L_b)}{S_z(L_b)}\) and \(R_z^+(o_e)\) are Herglotz in the last line, with \(b^+\in {\mathscr {N}}_b^+\) arbitrary. Hence,

\(\square \)

3 AC Spectrum for the Unperturbed Tree

The aim of this section is to prove Theorems 1.1 and 1.2.

Let \({\mathbf {T}}\) be a quantum tree of finite cone type, with the structure described in Sect. 1.2. Given \((v_-,v)\in B({\mathbb {T}}_{b_o}^+)\), we denote

This notation is simply analogous to the one introduced in Sect. 1.1.2, and does not mean that \(\zeta ^{z}\) is a function of the terminus alone. It simply means that each discrete edge in \({\mathbb {T}}_{b_o}^+\) can be specified by indicating the terminus alone. We also let \(\zeta ^z(t_{b_o})=\zeta ^z(b_o)\).

Denote \(\zeta _j^{z} = \zeta ^{z}(v)\) if \(\ell (v)=j\). Then (2.6) says that for each \(j\in {\mathfrak {A}}^+\),

The matrix elements \(M_{j,k}\) were defined in Sect. 1.2(b). The system (3.1) is reminiscent of the finite system of equations that appears in the combinatorial case [5, 19] for \(\zeta _j^{z} = \zeta _{v_-}^{z}(v)\). In order to put it in a nicer form, we denote \(h_j = S_{z}(L_j)\zeta _j^{z}\). Then we get the following system of polynomial equations:

$$\begin{aligned} \sum _{k=1}^m \frac{M_{j,k}}{S_{z}^2(L_k)}\, h_k h_j - F_j(z)\, h_j + 1 = 0 \, , \qquad j=1,\dots ,m \, , \end{aligned}$$

where \(F_j(z) = \alpha _j + \sum _{k=1}^m M_{j,k} \frac{C_{z}(L_k)}{S_{z}(L_k)} + \frac{S_{z}'(L_j)}{S_{z}(L_j)}\).

An analogous system of equations involving the matrix \(N=(N_{i,j})\) arises when considering cones in \({\mathbb {T}}_{b_o}^-\). We restrict ourselves to the above system; the other one is analyzed similarly.

We mention that a similar system of equations in a more special framework appeared recently in [13, Eq. (4.8)]. In this case, one has \(M_{j,j} =1\) for each j and \(M_{j,k}=2\) for \(k\ne j\).

Our aim in the following is to control the values of \(\zeta ^{\lambda +{\mathrm {i}}\eta }_j\) as \(\eta \downarrow 0\). For the models [12, 13], the \(\zeta ^{z}_j\) are uniformly bounded. The following simple criterion gives a sufficient condition for this to happen. Note the condition \(M_{j,j}>0\) below implies that each vertex of label j has at least one offspring of its own type. Later we will relax that restriction.

Lemma 3.1

Suppose \(M_{j,j}>0\) for some j. Then \(|\zeta _j^{z}|<1\) for any \(z\in {\mathbb {C}}{\setminus } {\mathbb {R}}\). In fact, \(|\zeta _j^{z}|^2<\frac{1}{M_{j,j}}\).

This lemma parallels the combinatorial case [19, Lemma 3], see [13, Lemma 3.9] for a special case.

Proof

Let \(z\in {\mathbb {C}}^+\) and b with \(\ell (t_b)=j\). Then (2.17) becomes \(\sum _{k=1}^m M_{j,k} {\text {Im}}R_z^+(k) \le \frac{{\text {Im}}R_z^+(j)}{|\zeta _j^z|^2}\), where \(R_z^+(k):=R_z^+(o_e)\) if \(\ell (t_e)=k\). The inequality is actually strict if \({\text {Im}}z>0\), as seen from the proof of (2.17). Thus, \(|\zeta _j^z|^2 < \frac{{\text {Im}}R_z^+(j)}{M_{j,j} {\text {Im}}R_z^+(j)} =\frac{1}{M_{j,j}}\).

The case \({\text {Im}}z<0\) can be adapted without difficulty, in this case \({\text {Im}}R_z^+(o_e)\) should be replaced by \(|{\text {Im}}R_z^+(o_e)|\) in (2.17). \(\quad \square \)

The lemma implies in particular that \(|\zeta _j^{\lambda +{\mathrm {i}}0}|^2 \le \frac{1}{M_{j,j}}\) for any \(\lambda \in {\mathbb {R}}\) at which the limit exists.
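As an illustration of Lemma 3.1, consider the simplest situation, taken here as an assumption: the \((q+1)\)-regular tree with equal edge lengths L, equal couplings \(\alpha \) and zero potential, so that there is a single cone type with \(M_{1,1}=q\), \(C_z(x)=\cos (\sqrt{z}x)\) and \(S_z(x)=\sin (\sqrt{z}x)/\sqrt{z}\). Substituting \(h=S_z(L)\zeta \) into the polynomial system then gives the quadratic \(q\zeta ^2-t(z)\zeta +1=0\) with \(t(z)=(q+1)\cos (\sqrt{z}L)+\alpha \sin (\sqrt{z}L)/\sqrt{z}\). The two roots have product 1/q, so the root of smaller modulus is the only candidate compatible with the lemma. The sketch below selects it and checks the bound on a grid; all function names are hypothetical.

```python
import cmath


def zeta_regular_tree(z, q=2, length=1.0, alpha=0.0):
    """Root of q*zeta^2 - t(z)*zeta + 1 = 0 with the smaller modulus.

    Assumes the free equilateral (q+1)-regular tree, where
    t(z) = (q+1) cos(kL) + alpha sin(kL)/k and k = sqrt(z).
    """
    k = cmath.sqrt(z)
    t = (q + 1) * cmath.cos(k * length) + alpha * cmath.sin(k * length) / k
    disc = cmath.sqrt(t * t - 4 * q)
    return min((t + disc) / (2 * q), (t - disc) / (2 * q), key=abs)


def polynomial_residual(z, q=2, length=1.0, alpha=0.0):
    """|P(h)| for P(h) = q h^2 / S^2 - F h + 1 evaluated at h = S * zeta."""
    k = cmath.sqrt(z)
    c = cmath.cos(k * length)          # C_z(L) in the free case
    s = cmath.sin(k * length) / k      # S_z(L) in the free case
    ds = c                             # S_z'(L) in the free case
    f = alpha + q * c / s + ds / s     # F(z) for a single cone type
    h = s * zeta_regular_tree(z, q, length, alpha)
    return abs(q * h * h / (s * s) - f * h + 1)


def lemma_bound_holds(q=2, length=1.0, alpha=0.0):
    """Check |zeta|^2 < 1/q on a grid of points with Im z > 0."""
    grid = [complex(0.5 * n, eta) for n in range(1, 61)
            for eta in (0.05, 0.5, 2.0)]
    return all(abs(zeta_regular_tree(z, q, length, alpha)) ** 2 < 1.0 / q
               for z in grid)
```

The residual function ties the quadratic back to the polynomial form of the system, while the grid check shows the strict inequality for \({\text {Im}}z>0\) in this special case.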

There are many models of interest for which the condition of Lemma 3.1 is not satisfied, so we next consider the general case. Now the limit \(\zeta _j^{\lambda +{\mathrm {i}}0}\) may no longer exist, but we aim to show this problem can only occur on a discrete subset of \({\mathbb {R}}\).

Proposition 3.1

There is a discrete set \( {\mathfrak {D}}\subset {\mathbb {R}}\) such that, for all \(j=1,\dots ,m\), the solutions \(h_j(\lambda +{\mathrm {i}}\eta ) = S_{\lambda +{\mathrm {i}}\eta }(L_j) \zeta _j^{\lambda +{\mathrm {i}}\eta }\) of (3.2) have a finite limit as \(\eta \downarrow 0\) for all \(\lambda \in {\mathbb {R}}{\setminus } {\mathfrak {D}}\). The map \(\lambda \mapsto S_{\lambda }(L_j)\zeta _j^{\lambda +{\mathrm {i}}0}\) is continuous on \({\mathbb {R}}{\setminus } {\mathfrak {D}}\), and there is a discrete set \({\mathfrak {D}}'\) such that it is analytic on \({\mathbb {R}}{\setminus } ({\mathfrak {D}}\cup {\mathfrak {D}}')\).

Proof

We follow the strategy in [5, Sect. 4]. The aim is essentially to decouple the system (3.2) and show that each \(h_j\) satisfies an algebraic equation \(Q_j(h_j)=0\). For this, we will use an algebraic tool from [24].

Let \(\lambda _0\in {\mathbb {R}}\), and let \(P_j(h_1,\dots ,h_m) = \sum _{k=1}^m\frac{M_{j,k}}{S_{z}^2(L_k)} h_k h_j -F_j(z) h_j + 1 \). Clearly, \(P_j \in K[h_1,\dots ,h_m]\), where \(K={\mathscr {K}}_{\lambda _0}\) is the field of functions f(z) possessing a convergent Laurent series \(f(z)=\sum _{j=-n_0}^{\infty }a_j(\lambda _0) (z-\lambda _0)^j\) in some neighbourhood \(N_{\lambda _0}\subset {\mathbb {C}}\) of \(\lambda _0\).

Let \(K'={\mathscr {J}}_{\lambda _0}\) be the field of functions f which are meromorphic on \(N_{\lambda _0}\cap {\mathbb {C}}^+\) for some neighbourhood \(N_{\lambda _0}\) of \(\lambda _0\). Then \(K'\) is an extension of K, and we know that the functions \(S_{z}(L_j)\zeta _j^{z}\) belong to \(K'\) (see Remark 2.1) and satisfy \(P_j(S_{z}\zeta _1^{z},\dots ,S_{z}\zeta _m^{z})=0\). Calculating the Jacobian \(\left( \frac{\partial P_j}{\partial h_k}(h)\right) \), we find

Let

We will show that \(z\mapsto J^{z}\) is not the zero element of \(K'\). For this, we first study the asymptotics of \(J^{z}\) as \(z \rightarrow -\infty \).

Take \(z=-r^2\) with \(r>0\) large. We remark that

This follows from classical estimates [26, Chapter 1]. In fact,

More precisely, we write

where \(R(r,L) = C_{-r^2}(L) - {{\,\mathrm{cosh}\,}}rL\) and \(R'(r,L) = S_{-r^2}(L) - \frac{{{\,\mathrm{sinh}\,}}rL}{r}\). By [26, p. 13], \(\frac{rR'(r,L)}{{{\,\mathrm{sinh}\,}}rL} \rightarrow 0\) and \(\frac{R(r,L)}{{{\,\mathrm{sinh}\,}}rL} \rightarrow 0\) as \(r\rightarrow \infty \). Since \(\frac{{{\,\mathrm{cosh}\,}}rL}{{{\,\mathrm{sinh}\,}}rL} \rightarrow 1\), (3.3) follows. Hence,

On the other hand, since \(h_j\) is Herglotz (see Remark A.2), it has a representation of the form (2.15). If \(t_0=\inf \sigma ({\mathscr {H}}_{{\mathbf {T}}})\), we also know from Remark 2.1 that \(h_j(\lambda )\) is well-defined and real-valued for \(\lambda <t_0\). By [32, Theorem 3.23], the measure \({\mathfrak {m}}\) is thus supported on \([t_0,\infty )\). Hence, for large r (say \(r^2>-t_0+1\)),

where we used that \(h_j(-r^2)= \lim _{\eta \downarrow 0}h_j(-r^2+{\mathrm {i}}\eta )\) and dominated convergence (recall that \(\frac{{\mathrm {d}}{\mathfrak {m}}(t)}{1+t^2}\) is a finite measure). Thus,

Using dominated convergence again, we see that \(h_j(-r^2)/(-r^2)\rightarrow A\) as \(r\rightarrow \infty \). This implies that

Therefore, recalling that the \(C_j\) were defined in (3.4), we find that as \(r\rightarrow \infty \),

Hence, \(J^{-r^2} \sim (-1)^mC_1\cdots C_m r^m = Cr^m\ne 0\) for r large enough. Since \(z\mapsto J^{z}\) is holomorphic on \({\mathbb {C}}{\setminus } [a_0,\infty )\), it follows that it cannot vanish identically on any neighbourhood \(N_{\lambda _0}\cap {\mathbb {C}}^+\). Hence, \(J^{z}\) is not the zero element of \(K'\).

It follows by [24, Proposition VIII.5.3] that each \(S_{z}\zeta _j^{z}\) is algebraic over K. By the Newton-Puiseux theorem (see e.g. [29, Theorem 3.5.2]), each \(h_j\) thus has an expansion of the form

in some neighbourhood \(N_{\lambda _0}\) of \(\lambda _0\). Here \(m\in {\mathbb {Z}}\), \(d\in {\mathbb {N}}\), and the power series \(\sum _{n\ge 0} a_n z^n\) has a positive radius of convergence. In particular, \(z \mapsto S_{z}\zeta _j^{z}\) is analytic near any \(\lambda \in N_{\lambda _0}{\setminus } \{\lambda _0\}\). The set \( {\mathfrak {D}}\) corresponds to those \(\lambda _0\) for which \(m<0\) in the Newton-Puiseux expansion at \(\lambda _0\), and the set \({\mathfrak {D}}'\) corresponds to those \(\lambda _0\) for which \(d>1\).

Since, for any \(\lambda _0\), the map \(z\mapsto h_j(z)\) is analytic in \(N_{\lambda _0}\backslash \{\lambda _0\}\), we see that, for any \(\lambda _0\), we have \(\left( {\mathfrak {D}}\cup {\mathfrak {D}}'\right) \cap \left( N_{\lambda _0}\backslash \{\lambda _0\}\right) = \emptyset \). Therefore, the sets \({\mathfrak {D}}\) and \({\mathfrak {D}}'\) are discrete. \(\quad \square \)
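The existence of boundary values asserted in Proposition 3.1 can be observed numerically in a special case. The sketch below assumes the free equilateral \((q+1)\)-regular tree, for which the system reduces to \(q\zeta ^2-t(z)\zeta +1=0\) with \(t(z)=(q+1)\cos (\sqrt{z}L)+\alpha \sin (\sqrt{z}L)/\sqrt{z}\), and takes the root of smaller modulus for \({\text {Im}}z>0\). Under these assumptions the limit as \(\eta \downarrow 0\) has a positive imaginary part where \(|t(\lambda )|<2\sqrt{q}\) and is real where \(|t(\lambda )|>2\sqrt{q}\); the points with \(t(\lambda )^2=4q\) are square-root branch points, that is, examples with \(d=2\) in the Newton-Puiseux expansion. The function names and the sample energies are illustrative.

```python
import cmath


def zeta(z, q=2, length=1.0, alpha=0.0):
    """Smaller-modulus root of q*zeta^2 - t(z)*zeta + 1 = 0 (free regular tree)."""
    k = cmath.sqrt(z)
    t = (q + 1) * cmath.cos(k * length) + alpha * cmath.sin(k * length) / k
    disc = cmath.sqrt(t * t - 4 * q)
    return min((t + disc) / (2 * q), (t - disc) / (2 * q), key=abs)


def boundary_values(lam, etas=(1e-3, 1e-5, 1e-7), **kwargs):
    """Values of zeta at lam + i*eta along a decreasing sequence of eta."""
    return [zeta(complex(lam, eta), **kwargs) for eta in etas]


# lam = 5.0: t(lam) = 3 cos(sqrt(5)) is about -1.85, inside (-2 sqrt(2), 2 sqrt(2)).
band = boundary_values(5.0)
# lam = 9.8: t(lam) = 3 cos(sqrt(9.8)) is about -3.0, outside that interval.
gap = boundary_values(9.8)
```

Comparing consecutive entries of `band` and `gap` shows the values stabilising as \(\eta \) decreases, with \(|\zeta |^2\) approaching 1/q at the first energy and \({\text {Im}}\zeta \) approaching 0 at the second.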

Our next aim is to show that all WT functions have a positive imaginary part on most of the spectrum.

Let \(\sigma _D\) be the union of the Dirichlet spectra:

We would like to index the WT functions by vertices, but the notation \(R_z^+(v)\) is a bit ambiguous since \(R_z^+(t_b)\ne R_z^+(o_{b^+})\) even if \(t_b=o_{b^+}=v\). So we take the convention that

Here \((t_{b_o})_-=o_{b_o}\) and \((o_{b_o})_+=t_{b_o}\). This keeps with the convention of Sect. 1.1.2 of indexing functions \(\psi (b)\) by their terminus on \({\mathbb {T}}_{b_o}^+\) and their origin on \({\mathbb {T}}_{b_o}^-\).

As there are finitely many types of \(\zeta ^z(b)\) for \(b=(v_-,v)\in B({\mathbb {T}}_{b_o}^+)\), we see by (2.4) there are finitely many types of \(R_z^+(o_b)\) (this may not be true for \(R_z^-(o_b)\) for such b). We denote \(R_{z}^+(j) := R_{z}^+(o_b)\) if \(b=(v_-,v)\in {\mathbb {T}}_{b_o}^+\) and \(\ell (v)=j\in {\mathfrak {A}}^+\). Similarly, we denote \(R_{z}^-(k) = R_{z}^-(t_b)\) if \(b=(v,v_+)\in {\mathbb {T}}_{b_o}^-\) and \(\ell (v) = k\in {\mathfrak {A}}^-\).

By some abuse of notation, we assume the discrete sets \({\mathfrak {D}}\), \({\mathfrak {D}}'\) of Proposition 3.1 are the same for the system analogous to (3.2) which involves the matrix \((N_{i,j})\).

Remark 3.1

Denote \(R_{\lambda }^{\pm }:=R_{\lambda +{\mathrm {i}}0}^{\pm }\). Then the limits \(R_{\lambda }^+(j)\) exist for \(\lambda \in {\mathbb {R}}{\setminus } ({\mathfrak {D}}\cup \sigma _D)\) and \(j\in {\mathfrak {A}}^+\). This follows from Proposition 3.1 and (2.4), which implies that \(R_{\lambda }^+(j) = \frac{\zeta _j^{\lambda }-C_{\lambda }(L_j)}{S_{\lambda }(L_j)}\). Similarly, the limits \(R_{\lambda }^-(k)\) exist for \(k\in {\mathfrak {A}}^-\).

It follows that \(R_{\lambda }^{\pm }(v)\) exist for \(v\in \{o_{b_o},t_{b_o}\}\) and \(\lambda \notin {\mathfrak {D}}\cup \sigma _D\). In fact, \(\zeta ^{\lambda }(b_o)=\frac{h_{j_o}}{S_{\lambda }(L_o)}\) for some \(j_o\in {\mathfrak {A}}^+\), which exists by Proposition 3.1, so \(R_{\lambda }^+(o_{b_o})\) exists by (2.4). Similarly the result for the \((N_{ij})\) system implies the existence of \(\zeta ^{\lambda }({\hat{b}}_o)\) and \(R_{\lambda }^-(t_{b_o})\). Finally if \(t_{b_o}\) has type \(j_o\in {\mathfrak {A}}^+\), then \(R_{\lambda }^+(t_{b_o})=\sum _{k=1}^mM_{j_o,k}R_{\lambda }^+(k)-\alpha _{t_{b_o}}\) by (2.12), which exists by the previous paragraph. Similarly \(R_\lambda ^-(o_{b_o})=\sum _{k=1}^nN_{j'_o,k}R_{\lambda }^-(k)+\alpha _{o_{b_o}}\) exists.

Proposition 3.1 tells us moreover that \(R_{\lambda +{\mathrm {i}}0}^{\pm }(v)\) are analytic on \({\mathbb {R}}{\setminus } ({\mathfrak {D}}\cup {\mathfrak {D}}' \cup \sigma _D)\). In particular, their zeroes do not accumulate. Hence, there is a discrete set \({\mathfrak {D}}''\) such that \(R_{\lambda }^+(v) + R_{\lambda }^-(v) \ne 0\) for \(\lambda \in {\mathbb {R}}{\setminus } ({\mathfrak {D}}\cup {\mathfrak {D}}'\cup {\mathfrak {D}}'' \cup \sigma _D)\) and \(v\in \{o_{b_o},t_{b_o}\}\). We therefore define

We may actually generalize the result of Remark 3.1 as follows.

Lemma 3.2

(a) If \(\lambda \notin {\mathfrak {D}}\cup \sigma _D\), then \(R_{\lambda }^{\pm }(o_b)\) exists for all \(b=(v_-,v)\in {\mathbb {T}}_{b_o}^+\) and \(R_{\lambda }^{\pm }(t_b)\) exists for all \(b=(w,w_+)\in {\mathbb {T}}_{b_o}^-\).

(b) If moreover \(\lambda \notin {\mathfrak {D}}_0\), then \(G^{\lambda }(v,v)\) exists for any \(v\in {\mathbb {T}}\), and \(G^{\lambda }(v,v)\ne 0\).

Proof

By symmetry it suffices to prove (a) for \({\mathbb {T}}_{b_o}^+\). Consider \(b=(v_-,v)\in {\mathbb {T}}_{b_o}^+\). We already know that \(R_{\lambda }^+(o_b)=R_{\lambda }^+(j)\) exists from Remark 3.1. Next, we show by induction that \(\frac{1}{\zeta ^{\lambda }(\widehat{b})S_{\lambda }(L_b)}\) is finite. Note that we already know \(\frac{1}{\zeta ^{\lambda }(\widehat{b_o})S_{\lambda }(L_o)}\) is finite by (2.5). So consider any oriented edge \(b=(t_{b_o},v)\). Applying (2.6) to \(\widehat{b}\) instead of b, we may express \(\frac{1}{\zeta ^z(\widehat{b})S_z(L_b)}\) in terms of some \(C_z,S_z\) functions, plus \(\zeta ^z(b')\) for \(b'\in {\mathscr {N}}_{\widehat{b}}^+\). One of them is \(\zeta ^z(\widehat{b}_o)\), whose limit on the real axis exists from Remark 3.1. The rest are precisely those with \(b'\in {\mathscr {N}}_{b_o}^+{\setminus } \{b\}\), which also exist by Proposition 3.1. Thus, \(\frac{1}{\zeta ^\lambda (\widehat{b})S_\lambda (L_b)}\) exists for any \(b=(t_{b_o},v)\). By induction we get existence for any \((v_-,v)\in {\mathbb {T}}_{b_o}^+\). It follows from (2.5) that \(R_{\lambda }^-(o_b)\) exists for all such \(b=(v_-,v)\in {\mathbb {T}}_{b_o}^+\).

(b) By Remark 3.1, \(R_{\lambda }^+(v) + R_{\lambda }^-(v) \ne 0\) for \(v=o_{b_o},t_{b_o}\). Using (2.13), this implies \(G^{\lambda }(v,v)\) exists. We now observe that

In fact, by (2.7),

as claimed. Using Proposition 3.1, we thus deduce the existence of \(G^{\lambda }(w,w)\) for all \(w\in {\mathbb {T}}_{b_o}^+\). Similarly the existence of \(G^{\lambda }(w,w)\) for \(w\in {\mathbb {T}}_{b_o}^-\) follows from the analog of Proposition 3.1 with the \((N_{i,j})\) system. Finally using (2.13) we see that (a) implies \(G^{\lambda }(v,v)\ne 0\). \(\quad \square \)

We now observe that under (C1*), all the WT functions are related as follows:

Lemma 3.3

Suppose \({\mathbb {T}}\) satisfies (C1*) and let \(\lambda \in {\mathbb {R}}{\setminus }({\mathfrak {D}}\cup \sigma _D)\).

(i) If \({\text {Im}}R_{\lambda +{\mathrm {i}}0}^\pm (j) = 0\) for some \(j\in {\mathfrak {A}}^\pm \), then \({\text {Im}}R_{\lambda +{\mathrm {i}}0}^\pm (j) = 0\) for all \(j\in {\mathfrak {A}}^\pm \).

(ii) Assume \(\lambda \in {\mathbb {R}}{\setminus }{\mathfrak {D}}_0 \). If \({\text {Im}}R_{\lambda +{\mathrm {i}}0}^+(j) = 0\) for some \(j\in {\mathfrak {A}}^+\) and \({\text {Im}}R_{\lambda +{\mathrm {i}}0}^-(k) = 0\) for some \(k\in {\mathfrak {A}}^-\), then \({\text {Im}}G^{\lambda +{\mathrm {i}}0}(v,v) = 0\) and \({\text {Im}}R_{\lambda +{\mathrm {i}}0}^{\pm }(v) = 0\) for all \(v\in {\mathbb {T}}\). The same conclusion holds if \({\text {Im}}G^{\lambda +{\mathrm {i}}0}(w,w)=0\) for some \(w\in {\mathbb {T}}\).

(iii) For any \(v\in {\mathbb {T}}\), we have

$$\begin{aligned} \sigma ({\mathscr {H}}_0){\setminus } {\mathfrak {D}}_0 = \overline{\{ \lambda \in {\mathbb {R}}{\setminus } {\mathfrak {D}}_0 : {\text {Im}}G^{\lambda +{\mathrm {i}}0}(v,v)>0\}}{\setminus }{\mathfrak {D}}_0 \, . \end{aligned}$$

Proof

We first note that \(\zeta _j^{\lambda }\ne 0\), due to the relation

and the fact that the \(h_k\) are finite.

Suppose that \({\text {Im}}R^+_\lambda (k)>0\) for some \(k\in {\mathfrak {A}}^+\) and let \(l\in {\mathfrak {A}}^+\). Then by (C1*), \((M^n)_{l,k}\ge 1\), so if v has label l and w has label k, there is a path \((u_0,\dots ,u_r)\) with \(u_1=v\) and \(u_r=w\). Denote \(b_j = (u_{j-1},u_j)\). Then applying (2.17) repeatedly,

where the sum runs over all \((r-1)\)-paths \((e_2;e_r)\) outgoing from \(b_1\), and the last inequality holds because \({\text {Im}}R^+_\lambda (o_{b_r}) = {\text {Im}}R^+_\lambda (k)>0\) and all \(\zeta _j^{\lambda }\ne 0\).

So under (C1*), if \({\text {Im}}R^+_\lambda (j)>0\) for some \(j\in {\mathfrak {A}}^+\), then \({\text {Im}}R^+_\lambda (k)>0\) for all \(k\in {\mathfrak {A}}^+\). So if \({\text {Im}}R^+_\lambda (j)=0\) for some \(j\in {\mathfrak {A}}^+\), then it must be zero for all \(j\in {\mathfrak {A}}^+\).

The proof for \(R_{\lambda +{\mathrm {i}}0}^-\) is the same.

For (ii), say \(t_{b_o}\) has type \(j_o\). Since \(R_{\lambda }^+(t_{b_o}) = \sum _{k=1}^m M_{j_o,k} R_{\lambda }^+(k) - \alpha _{t_{b_o}}\) by (2.12), we get \({\text {Im}}R_{\lambda }^+(t_{b_o}) = 0\) by (i). Using (2.5), this implies \({\text {Im}}\zeta ^{\lambda }(b_o) = 0\), which by (2.4) implies that \({\text {Im}}R_{\lambda }^+(o_{b_o}) = 0\). Similarly, if \(o_{b_o}\) has type j, then \(R_{\lambda }^-(o_{b_o}) = \sum _{k=1}^n N_{j,k} R_{\lambda }^-(k) + \alpha _{o_{b_o}}\), so \({\text {Im}}R_{\lambda }^-(o_{b_o}) = 0\) by (i), also implying \({\text {Im}}R_{\lambda }^-(t_{b_o})=0\) via (2.5), (2.4). Now \(R_{\lambda }^+(v) + R_{\lambda }^-(v) \ne 0\) for \(v=o_{b_o},t_{b_o}\). Using (2.13), this implies \(G^{\lambda }(v,v)\) exists, and \({\text {Im}}G^{\lambda }(v,v) = 0\) for \(v=o_{b_o},t_{b_o}\). Since \({\text {Im}}G^{\lambda }(t_{b_o},t_{b_o})=0\) and \({\text {Im}}\zeta ^{\lambda }(t_{b_o},v_+)={\text {Im}}\zeta ^{\lambda }_j=0\), then using (3.7), we get \({\text {Im}}G^{\lambda }(v_+,v_+)=0\) for any \(v_+\in {\mathscr {N}}_{t_{b_o}}^+\), so \({\text {Im}}G^{\lambda }(w,w)=0\) for all \(w\in {\mathbb {T}}_{b_o}^+\) by induction. Similarly, we may use \({\text {Im}}G^{\lambda }(o_{b_o},o_{b_o}) = 0\) along with (2.7) to deduce that \({\text {Im}}G^{\lambda }(v,v) = 0\) for all \(v\in {\mathbb {T}}_{b_o}^-\). This proves claim (ii) for the Green function.

Next, if \(v\in {\mathbb {T}}_{b_o}^+\), we know that \({\text {Im}}R_{\lambda }^+(v) = {\text {Im}}R_{\lambda }^+(j) = 0\). By Lemma 3.2, \(G^{\lambda }(v,v)\) exists, and we showed \({\text {Im}}G^{\lambda }(v,v)=0\). Using (2.13), it follows that \({\text {Im}}R_{\lambda }^-(v) = 0\). Hence, \({\text {Im}}R_{\lambda }^{\pm }(v) = 0\) for \(v\in {\mathbb {T}}_{b_o}^+\). The claim for \({\mathbb {T}}_{b_o}^-\) follows similarly.

Now suppose that \({\text {Im}}G^{\lambda }(w,w)=0\) for some \(w\in {\mathbb {T}}\). By symmetry we may assume \(w\in {\mathbb {T}}_{b_o}^+\). Recall that \(G^{\lambda }(v,v),R_{\lambda }^\pm (v)\) exist by Lemma 3.2. By (2.13), we get \({\text {Im}}R_{\lambda }^+(w)={\text {Im}}R_{\lambda }^-(w)=0\). Consider \(w_+\in {\mathscr {N}}_w^+\). By Proposition 3.1, \(\zeta ^\lambda (w,w_+)\) exists, so using (2.4), we get \({\text {Im}}\zeta ^{\lambda }(w,w_+)=0\), hence \({\text {Im}}G^\lambda (w_+,w_+)=0\). On the other hand \({\text {Im}}R_\lambda ^+(w)=0\) implies \({\text {Im}}R_\lambda ^+(w_-)=0\) by (i), so we similarly get \({\text {Im}}\zeta ^\lambda (w_-,w)=0\) and \({\text {Im}}G^\lambda (w_-,w_-)=0\). This shows that \({\text {Im}}G^\lambda (v,v)=0\) for all \(v\in {\mathbb {T}}_{b_o}^+\) and also for \(v=o_{b_o}\), since by definition (3.6), \({\text {Im}}R_\lambda ^+(o_{b_o})=:{\text {Im}}R_{\lambda }^+(j_o)=0\) for \(v=t_{b_o}\), if \(\ell (t_{b_o})=j_o\). Now if \(u\in {\mathscr {N}}_{o_{b_o}}^-\), then as in (3.7) we have \(G^\lambda (u,u)=S_\lambda (L_u)\zeta ^\lambda (o_{b_o},u)+\zeta ^\lambda (o_{b_o},u)^2G^\lambda (o_{b_o},o_{b_o})\), but \({\text {Im}}R_\lambda ^-(o_{b_o})=0\) implies we have \(\sum _{b^-\in {\mathscr {N}}_{b_o}^-}{\text {Im}}R_\lambda ^-(t_{b^-})={\text {Im}}R_\lambda ^-(o_{b_o})=0\), so \({\text {Im}}R_\lambda ^-(t_{b^-})=0\) for each \(b^-\), so \({\text {Im}}R_\lambda ^-(k)=0\) for all \(k\in {\mathfrak {A}}^-\) by (i) and we deduce again that \({\text {Im}}G^\lambda (u,u)=0\) for \(u\in {\mathscr {N}}_{o_{b_o}}^-\), hence for all \(u\in {\mathbb {T}}_{b_o}^-\) by induction.

Finally, to prove (iii), recall that if \(E_{{\mathscr {H}}_0}\) is the projection-valued measure \(E_{{\mathscr {H}}_0}(J) = \chi _J({\mathscr {H}}_0)\), then \(\sigma ({\mathscr {H}}_0) = {{\,\mathrm{supp}\,}}E_{{\mathscr {H}}_0}\). Moreover, \(E_{{\mathscr {H}}_0}(J)=0\) if and only if \(\mu _f(J)=0\) for all \(f\in L^2({\mathbf {T}})\), where \(\mu _f(J) = \langle f, \chi _J({\mathscr {H}}_0) f\rangle \). By [32, Lemma 3.13], we know that \({{\,\mathrm{supp}\,}}\mu _f = \overline{\{\lambda \in {\mathbb {R}}: {\text {Im}}\langle f,G^{\lambda } f\rangle >0\}}\). Since \({\mathfrak {D}}_0\) is a discrete set, we deduce that \({{\,\mathrm{supp}\,}}\mu _f {\setminus } {\mathfrak {D}}_0 = \overline{\{\lambda \in {\mathbb {R}}{\setminus } {\mathfrak {D}}_0 : {\text {Im}}\langle f,G^{\lambda } f\rangle >0\}}{\setminus } {\mathfrak {D}}_0\).

Let \(\lambda _0\in {\mathbb {R}}{\setminus } {\mathfrak {D}}_0\) and suppose there is \(\varepsilon >0\) such that \({\text {Im}}G^{\lambda }(v,v)=0\) for all \(\lambda \in I:=(\lambda _0-\varepsilon ,\lambda _0+\varepsilon )\). Then \({\text {Im}}R_{\lambda }^{\pm }(w)=0\) for all w by (ii), so \({\text {Im}}\langle f,G^{\lambda } f\rangle =0\) for any f by Lemma A.1. Thus, \(\mu _f(I)=0\) for all \(f\in L^2({\mathbf {T}})\), so \(E_{{\mathscr {H}}_0}(I)=0\) and thus \(\lambda _0\notin \sigma ({\mathscr {H}}_0)\).

Conversely, fix \(\lambda \in {\mathbb {R}}{\setminus } {\mathfrak {D}}_0\) and \(v\in {\mathbb {T}}\). If \({\text {Im}}G^{\lambda }(v,v)>0\), then \({\text {Im}}R_{\lambda }^+(v)>0\) or \({\text {Im}}R_{\lambda }^-(v)>0\), say the former holds and let \(v=o_e\). Then by Lemma A.1, \({\text {Im}}\langle f, G^{\lambda } f\rangle >0\) for \(f=\phi _{\lambda ;e}^-\), since \(g_{\phi _{\lambda ;e}^-}^-(\lambda ) \ge \frac{1}{6} \Vert \phi _{\lambda ;e}^-\Vert ^4>0\). In fact, \(\Vert \phi _{\lambda ;e}^-\Vert =0\) would imply \(C_{\lambda }(x)=R_{\lambda }^-(o_e)S_{\lambda }(x)\) for all \(x\in e\), contradicting the fact that \(C_{\lambda }\) and \(S_{\lambda }\) are linearly independent. We thus showed that \(\overline{\{\lambda \in {\mathbb {R}}{\setminus } {\mathfrak {D}}_0:{\text {Im}}G^{\lambda }(v,v)>0\}}{\setminus } {\mathfrak {D}}_0 \subseteq {{\,\mathrm{supp}\,}}\mu _f {\setminus } {\mathfrak {D}}_0 \subseteq \sigma ({\mathscr {H}}_0){\setminus }{\mathfrak {D}}_0\). \(\quad \square \)

Lemma 3.4

If \({\mathbb {T}}\) satisfies (C1*), then:

(i) For any \(j\in {\mathfrak {A}}^+\), \(k\in {\mathfrak {A}}^-\), the map \(\sigma ({\mathscr {H}}){\setminus } {\mathfrak {D}}_0\ni \lambda \mapsto {\text {Im}}R_{\lambda +{\mathrm {i}}0}^+(j) + {\text {Im}}R_{\lambda +{\mathrm {i}}0}^-(k)\) has a discrete set of zeroes. The same holds for \(\sigma ({\mathscr {H}}){\setminus } {\mathfrak {D}}_0\ni \lambda \mapsto {\text {Im}}G^{\lambda +{\mathrm {i}}0}(v,v)\), for any \(v\in {\mathbb {T}}\).

(ii) \(\sigma ({\mathscr {H}})\) is a union of closed intervals and isolated points, \(\bigcup _rI_r \cup {\mathfrak {P}}\). The limits \(G^{\lambda +{\mathrm {i}}0}(v,v)\) exist in the interior \(\mathring{I}_r\) and satisfy \({\text {Im}}G^{\lambda +{\mathrm {i}}0}(v,v)>0\), for any \(v\in {\mathbb {T}}\).

(iii) The spectrum of \(H_{{\mathbf {T}}}\) is purely absolutely continuous in any compact subset \(K\subset \mathring{I}_r\).

Proof

We know that \(f(\lambda )= R_{\lambda }^+(j)+ R_{\lambda }^-(k)\) is analytic on \({\mathbb {R}}{\setminus }{\mathfrak {D}}_0\), so if \({\text {Im}}f(\lambda _0)=0\) for some \(\lambda _0\notin {\mathfrak {D}}_0\), we may expand \({\text {Im}}f(\lambda ) = \sum _{n\ge 0} b_n(\lambda -\lambda _0)^n\) for \(\lambda \in (\lambda _0-\varepsilon ,\lambda _0+\varepsilon )\), where \(b_n={\text {Im}}a_n\) and \((a_n)\) are the coefficients for \(f(\lambda )\). Suppose \(\lambda _0\in \sigma ({\mathscr {H}}){\setminus }{\mathfrak {D}}_0\). If all \(b_n=0\) then \({\text {Im}}f\) is identically zero on \((\lambda _0-\varepsilon ,\lambda _0+\varepsilon )\). In view of Lemma 3.3 (ii)–(iii), this contradicts that \(\lambda _0\in \sigma ({\mathscr {H}})\). Hence let k be the smallest index with \(b_k\ne 0\). Then \({\text {Im}}f(\lambda ) = (\lambda -\lambda _0)^kg(\lambda )\), where \(g(\lambda )=\sum _{n\ge 0}b_{n+k}(\lambda -\lambda _0)^n\). Clearly \(g(\lambda _0)=b_k\ne 0\) and g is continuous, so we may find \(\varepsilon '\le \varepsilon \) such that both \((\lambda -\lambda _0)^k\) and \(g(\lambda )\) are nonzero on \((\lambda _0-\varepsilon ',\lambda _0+\varepsilon '){\setminus } \{\lambda _0\}\). This shows that \(\lambda _0\) is an isolated zero of \({\text {Im}}f\), as required.

This proves the first part of (i). For the second part, suppose \({\text {Im}}G^{\lambda }(w,w)=0\) for some \(w\in {\mathbb {T}}\). By Lemma 3.3, this implies \({\text {Im}}R_{\lambda }^+(j)+{\text {Im}}R_{\lambda }^-(k)=0\). Hence, \(\lambda \) must lie in the preceding discrete set of zeroes.

For (ii), recall that \(\sigma ({\mathscr {H}}_0){\setminus } {\mathfrak {D}}_0 = \overline{\{ \lambda \in {\mathbb {R}}{\setminus } {\mathfrak {D}}_0 : {\text {Im}}G^{\lambda }(v,v)>0\}}{\setminus }{\mathfrak {D}}_0\), again by Lemma 3.3. By Proposition 3.1 and (2.4), we know \(\lambda \mapsto R_{\lambda }^{\pm }(v)\) is continuous, so \({\mathbb {R}}{\setminus }{\mathfrak {D}}_0 \ni \lambda \mapsto G^{\lambda }(v,v)\) is continuous by (2.13). Hence, \(\{\lambda \in {\mathbb {R}}{\setminus }{\mathfrak {D}}_0 : {\text {Im}}G^{\lambda }(v,v)>0\}\) is a union of intervals \(\bigcup _rJ_r\) which is independent of v by Lemma 3.3. We take \(I_r\) as the closure of \(J_r\) and \({\mathfrak {P}} = \sigma (H)\cap {\mathfrak {D}}_0\).

Finally, for (iii), if K is a compact subset of \(\mathring{I}_r\), we know that \(G^{\lambda }(v,v)\) is uniformly bounded, and the same holds for \(R_{\lambda }^{\pm }(v)\). In particular, if \(v=o_e\) and \(\psi \) is supported in e, we get, using representation (A.2) along with (2.13), that \(\sup _{\lambda \in K}|\langle \psi , G^{\lambda } \psi \rangle |<\infty \). The claim follows by the density of the linear span of such \(\psi \). \(\quad \square \)

This completes the proof of Theorem 1.1. We next move to Theorem 1.2.

Remark 3.2

Condition (C1) implies (C1*). In fact, as remarked in [5], all cone types are indexed by the directed edges of the finite graph \({\mathbf {G}}\). If we consider the universal cover \({\mathbf {T}}\) rooted at the midpoint o of some \(b_o\in B({\mathbf {G}})\) (here o is not viewed as an added vertex, just a reference point), this means that the type of each vertex \(v\in {\mathbb {T}}\) is determined by a directed edge, so there are at most \(|B({\mathbf {G}})|\) types. By [25, Lemma 3.1], we know the non-backtracking matrix of \(B({\mathbf {G}})\) is irreducible. This implies that if \({\mathbf {T}}\) is considered in the twisted view, and if M is the single matrix over some alphabet \({\mathfrak {A}}\) encoding all cone types, then M satisfies: for any \(k,l\in {\mathfrak {A}}\), there is \(n=n(k,l)\) such that \((M^n)_{k,l}\ge 1\). In particular, (C1*) holds if we take the matrices \({\tilde{M}},N\) encoding the types in \({\mathbb {T}}_{b_o}^+\) and \({\mathbb {T}}_{b_o}^-\), respectively.
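The irreducibility property used in this remark, namely that for any \(k,l\) some power satisfies \((M^n)_{k,l}\ge 1\), can be checked mechanically on small examples. The following is a minimal sketch, assuming a finite simple graph given as a list of undirected edges; the function names and the two test graphs are illustrative and do not come from [5] or [25]. It builds the non-backtracking matrix on directed edges and tests irreducibility by a reachability search.

```python
from itertools import product


def non_backtracking_matrix(edges):
    """Non-backtracking matrix of a simple graph (illustrative helper).

    Rows and columns are indexed by directed edges (u, v); the entry from
    (u, v) to (x, y) is 1 when v == x and y != u, i.e. the walk continues
    without immediately reversing.
    """
    bonds = [(u, v) for u, v in edges] + [(v, u) for u, v in edges]
    index = {b: i for i, b in enumerate(bonds)}
    mat = [[0] * len(bonds) for _ in bonds]
    for (u, v), (x, y) in product(bonds, repeat=2):
        if v == x and y != u:
            mat[index[(u, v)]][index[(x, y)]] = 1
    return mat


def is_irreducible(mat):
    """True if for all k, l there is n >= 1 with (M^n)[k][l] >= 1."""
    size = len(mat)
    for start in range(size):
        seen = set()
        frontier = [j for j in range(size) if mat[start][j]]
        while frontier:
            j = frontier.pop()
            if j in seen:
                continue
            seen.add(j)
            frontier.extend(i for i in range(size) if mat[j][i] and i not in seen)
        if len(seen) < size:
            return False
    return True


# Assumed test graphs: the complete graph on 4 vertices and a triangle.
K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
TRIANGLE = [(0, 1), (1, 2), (2, 0)]
```

On `K4` the check succeeds, while on `TRIANGLE` it fails because the two orientations of a cycle never mix. The sketch only illustrates the property and does not reproduce the hypotheses of [25, Lemma 3.1].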

Proof of Theorem 1.2

Since (C1) implies (C1*), we already know that \(\sigma ({\mathscr {H}}_0)\) has the structure given in Theorem 1.1. Let \(\lambda \in \mathring{I}_r\) be in the interior of an AC band.

Within the twisted view, all vertices are offspring of o and we deal with the single, combined alphabet \({\mathfrak {A}}\). Under the stronger assumption (C1), we know the larger matrix M is irreducible. Consequently, if we suppose that \({\text {Im}}R_{\lambda +{\mathrm {i}}0}^+(j)=0\) for some \(j\in {\mathfrak {A}}\), then the statement in Lemma 3.3 (i) now implies that \({\text {Im}}R_{\lambda +{\mathrm {i}}0}^+(j)=0\) for all \(j\in {\mathfrak {A}}\).

Now let \(v\in {\mathbb {T}}\). We know \({\text {Im}}G^{\lambda }(v,v)>0\), so by (2.13), \({\text {Im}}R_\lambda ^+(v)+{\text {Im}}R_\lambda ^-(v)>0\), so either \({\text {Im}}R_\lambda ^+(v)>0\) or \({\text {Im}}R_{\lambda }^-(v)>0\) by the Herglotz property. In the former case we are done. Suppose that \({\text {Im}}R_\lambda ^+(v)=0\). Say \(v=t_b\) for some \(b\in B({\mathbb {T}})\) and \(\ell (v)=j\). Then \(0={\text {Im}}R_\lambda ^+(t_b) = \sum _{k=1}^m M_{j,k}{\text {Im}}R_\lambda ^+(k)\) implies that \({\text {Im}}R_\lambda ^+(k)=0\) for some, hence all, \(k\in {\mathfrak {A}}\). But by (2.4), \(R_{\lambda }^-(t_b) = R_{\lambda }^+(o_{\widehat{b}})+\frac{C_{\lambda }(L_b)-S_\lambda '(L_b)}{S_\lambda (L_b)}\), so \({\text {Im}}R_\lambda ^-(t_b) = {\text {Im}}R_\lambda ^+(o_{\widehat{b}})\). As mentioned in Remark 3.2, under (C1), all cone types are indexed by the directed edges of \({\mathbf {G}}\), in particular \({\mathbb {T}}_{\widehat{b}}^+\) is one of the finitely many nonisomorphic cones (see Footnote 5). In other words, \({\text {Im}}R_\lambda ^+(o_{\widehat{b}}) = {\text {Im}}R_\lambda ^+(r)\) for some \(r\in {\mathfrak {A}}\). Hence, \({\text {Im}}R_\lambda ^-(t_b)=0\). We thus get \({\text {Im}}R_\lambda ^+(v)+{\text {Im}}R_\lambda ^-(v)=0\), a contradiction. Thus, \({\text {Im}}R_\lambda ^+(v)>0\). \(\quad \square \)

4 Examples of Nontrivial Spectrum

For Theorem 1.2 to be interesting, we need to know that \(\sigma ({\mathscr {H}}_0)\) is not reduced to the isolated points \({\mathfrak {P}}\). Our aim in this section is to give some examples in which this can be proved. We believe the phenomenon to be true for a wider class of examples.

4.1 Equilateral trees

Let G be a discrete graph of minimal degree \(\ge 2\) and \({\mathbb {T}}={\widetilde{G}}\) its universal cover. We know from [10, Sect. 1.6] that the spectrum of the adjacency matrix \(\sigma ({\mathscr {A}}_{{\mathbb {T}}})\) has a continuous part. Actually their argument remains valid for the normalized adjacency matrix \(Pf(x) = \frac{1}{d(x)} ({\mathscr {A}}f)(x)\) (and also if we add potentials). Consequently, using [11, Theorem 3.18], the induced quantum tree with equilateral edge length, identical symmetric potentials, and identical coupling constants, will also have some continuous spectrum. Using Theorem 1.2, we can now conclude:

If G is a graph of minimal degree \(\ge 2\), if \({\mathbb {T}}={\widetilde{G}}\) is its universal cover, and we endow each edge of \({\mathbb {T}}\) with the same length L and potential W, and each vertex with the same coupling constant \(\alpha \), then \(\sigma ({\mathscr {H}}_{{\mathbf {T}}})\) consists of non-empty bands of purely absolutely continuous spectrum, and possibly some isolated eigenvalues.

This generalizes the case of regular trees previously considered in [12, 31].

We may easily extend this to graphs with several lengths which are rationally dependent. More precisely, if in \({\mathbf {G}}\), we have \(L_j = n_j L\) for some \(n_j\in {\mathbb {N}}^{*}\), add \(n_j-1\) equally spaced vertices of degree 2 to the edge \(e_j\), with Kirchhoff-Neumann conditions, so that \(e_j\) is cut into \(n_j\) edges of length L. Then using the previous claim, we see that \({\mathbf {T}}\) also has nontrivial AC spectrum in this case.
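The reduction to the equilateral case can be sketched as follows, assuming a hypothetical encoding in which each edge is a triple `(u, v, n)` standing for an edge of length \(nL\); the function name and the naming of the new vertices are illustrative only. Each such edge is cut into n pieces of length L by inserting degree-2 vertices at the interior points.

```python
def subdivide(edges, unit):
    """Cut every edge (u, v, n) of length n * unit into n edges of length unit.

    New degree-2 vertices are named (u, v, i), for the interior points at
    distance i * unit from u, i = 1, ..., n - 1 (a hypothetical convention).
    """
    result = []
    for u, v, n in edges:
        if n < 1:
            raise ValueError("each multiplicity n must be a positive integer")
        chain = [u] + [(u, v, i) for i in range(1, n)] + [v]
        result.extend((a, b, unit) for a, b in zip(chain, chain[1:]))
    return result
```

For instance, `subdivide([("a", "b", 3), ("b", "c", 1)], 0.5)` returns four edges of length 0.5, the first three forming a path from `"a"` to `"b"`.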

4.2 An argument of Bordenave-Sen-Virág

We now consider the non-equilateral case. For this, we start by adapting an argument from [10] to quantum graphs.

We begin with some definitions, which appear in a more general framework in [10].

Let G be a discrete graph and \({\mathbb {T}}={\widetilde{G}}\) its universal cover.

A labeling (or colouring) of the vertices of \({\mathbb {T}}\) is a map \(\eta : V({\mathbb {T}})\rightarrow {\mathbb {Z}}\). With respect to a given labeling, we call a vertex v:

(a) prodigy if it has a neighbour w with \(\eta (w)<\eta (v)\) and such that all other neighbours of w also have label less than \(\eta (v)\),

(b) level if it is not a prodigy and if all of its neighbours have the same or lower labels,

(c) bad if it is neither prodigy nor level.
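The three cases (a)–(c) can be made concrete with a minimal sketch, assuming a finite graph stored as an adjacency dictionary `adj` and a labeling stored as a dictionary `eta`; both encodings and the function name are assumptions made for illustration, since the definition itself concerns the infinite tree \({\mathbb {T}}\).

```python
def classify(adj, eta, v):
    """Return 'prodigy', 'level' or 'bad' for the vertex v.

    adj maps each vertex to an iterable of its neighbours,
    eta maps each vertex to an integer label.
    """
    # (a) some neighbour w has a smaller label, and every neighbour
    #     of w other than v also has a label smaller than eta[v]
    for w in adj[v]:
        if eta[w] < eta[v] and all(eta[u] < eta[v] for u in adj[w] if u != v):
            return "prodigy"
    # (b) not a prodigy, and no neighbour has a strictly larger label
    if all(eta[w] <= eta[v] for w in adj[v]):
        return "level"
    # (c) everything else
    return "bad"
```

On the path a, b, c, d with labels 0, 1, 2, 2, this gives: a is bad, b and c are prodigies, and d is level.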

The tree \({\mathbb {T}}={\widetilde{G}}\) is equipped with a natural unimodular measure (see Footnote 6) on the space of rooted graphs, namely \({{\,\mathrm{{\mathbb {P}}}\,}}= \frac{1}{|G|} \sum _{x\in G} \delta _{[{\widetilde{G}},{\tilde{x}}]}\).

We say the labeling \(\eta \) on \({\mathbb {T}}\) is invariant if there exists a unimodular probability measure on the set of coloured rooted graphs, which is concentrated on \(\{[{\mathbb {T}},v,\eta ]\}_{v\in {\mathbb {T}}}\). See e.g. [3, Appendix A] for some background on coloured rooted graphs.

Let \(S\subset \ell ^2({\widetilde{G}})\) be a subspace and let \(P_S\) be the orthogonal projection onto S. We say that S is invariant if \(P_S(gv,gw)=P_S(v,w)\) for any \(g\in \varGamma \), where \(\varGamma \) is the group of covering transformations with \({\widetilde{G}}/ \varGamma \equiv G\).

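Given an invariant subspace \(S\subset \ell ^2({\widetilde{G}})\), we define its von-Neumann dimension by \(\dim _{VN}(S) = \frac{1}{|G|}\sum _{x\in G} P_S({\tilde{x}},{\tilde{x}})\), where \({\tilde{x}}\) is any lift of x; by invariance of S, this does not depend on the choice of lifts.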

A line ensemble in \({\mathbb {T}}\) is a disjoint union of bi-infinite lines \((l_i)\). More precisely, \({\mathscr {L}}:V({\mathbb {T}})\times V({\mathbb {T}})\rightarrow \{0,1\}\) is a line ensemble if:

- \({\mathscr {L}}(u,v)=0\) if \(\{u,v\}\notin E({\mathbb {T}})\),

- \({\mathscr {L}}(u,v)={\mathscr {L}}(v,u)\),

- for any \(v\in V({\mathbb {T}})\), we have \(\sum _u {\mathscr {L}}(u,v) \in \{0,2\}\).

Abusing notation, we then let \({\mathscr {L}}= \{e: {\mathscr {L}}(e)=1\}\), which gives a subgraph consisting of disjoint lines.
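The three defining conditions can be tested on a finite piece of a graph. The sketch below is an illustration under stated assumptions: the graph is finite and simple, edges are unordered pairs, and the function name is hypothetical. Storing the chosen edges as unordered pairs makes the symmetry condition automatic. On a finite graph the degree condition selects disjoint cycles; in a tree, which has no cycles, the same condition forces every component to be a bi-infinite line.

```python
def is_line_ensemble(vertices, edges, chosen):
    """Check the line ensemble conditions for a set of chosen edges.

    vertices: iterable of vertices; edges, chosen: iterables of pairs (u, v).
    """
    edge_set = {frozenset(e) for e in edges}
    chosen_set = {frozenset(e) for e in chosen}
    # L(u, v) = 0 unless {u, v} is an edge of the graph
    if not chosen_set <= edge_set:
        return False
    # every vertex meets either 0 or 2 chosen edges
    degree = {v: 0 for v in vertices}
    for e in chosen_set:
        for v in e:
            degree[v] += 1
    return all(d in (0, 2) for d in degree.values())
```

For a 4-cycle 0, 1, 2, 3 with a pendant edge {3, 4}, the cycle edges pass the test, while the path {0, 1}, {1, 2} fails because its endpoints meet only one chosen edge.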

We say a line ensemble \({\mathscr {L}}\) is invariant if there exists a unimodular probability measure on the space of weighted rooted graphs, which is concentrated on the collection \(\{[{\mathbb {T}},v,{\mathscr {L}}]\}_{v\in {\mathbb {T}}}\).

We say that \({\mathbb {T}}\) is Hamiltonian if there exists an invariant line ensemble \({\mathscr {L}}\) that contains the root with probability 1.

Remark 4.1

Recall that a finite graph G is Hamiltonian if there is a cycle in G which visits each vertex exactly once. If G is Hamiltonian, then \({\widetilde{G}}\) is Hamiltonian in the above sense. In fact, if \(C=(x_0,\dots ,x_m)\) is a cycle in G, then its lift to \({\widetilde{G}}\) is a line ensemble \({\mathscr {L}}\) which generally consists of a disjoint union of countably many lines \((l_i)\), where \(l_i=(\dots ,{\tilde{x}}_0,\dots ,{\tilde{x}}_m,{\tilde{x}}_0,\dots )\) (see Fig. 2). Since it is a lift, this line ensemble is invariant. More precisely, if [H, v, R] denotes an equivalence class of graph H with root v and edge weight R(e) for \(e\in E(H)\), then \([{\widetilde{G}},v,{\mathscr {L}}] = [{\widetilde{G}},gv,{\mathscr {L}}]\) for any covering transformation g. This holds by definition of the universal cover and of \({\mathscr {L}}\). So the measure \({\tilde{{{\,\mathrm{{\mathbb {P}}}\,}}}} = \frac{1}{|G|}\sum _{x\in G} \delta _{[{\widetilde{G}},{\tilde{x}},{\mathscr {L}}]}\) is well-defined and unimodularity follows from \(\sum _{(x,y)\in B(G)} f(x,y) = \sum _{(x,y)\in B(G)} f(y,x)\).

Moreover, \({\tilde{{{\,\mathrm{{\mathbb {P}}}\,}}}}(o\in {\mathscr {L}}) = \frac{1}{|G|}\sum _{x\in G} 1_{{\tilde{x}}\in {\mathscr {L}}} = \frac{1}{|G|}\sum _{x\in G}1_{x\in C} = \frac{|C|}{|G|}\). If C covers G, we thus get \({\tilde{{{\,\mathrm{{\mathbb {P}}}\,}}}}(o\in {\mathscr {L}})=1\).

In particular, the \((q+1)\)-regular tree \({\mathbb {T}}_q\) is Hamiltonian, since it covers the complete bipartite \((q+1)\)-regular graph on \(2(q+1)\) vertices, which is Hamiltonian.
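The last claim can be verified by hand for small q. The sketch below, with hypothetical function names and vertex labels \(a_i, b_i\) chosen for illustration, builds the complete bipartite graph on \(2(q+1)\) vertices, exhibits the zigzag cycle \(a_0,b_0,a_1,b_1,\dots ,a_q,b_q\) and checks that it visits every vertex exactly once, so that \(|C|/|G|=1\).

```python
def complete_bipartite(m):
    """Adjacency sets of K_{m,m} with vertices ('a', i) and ('b', i)."""
    left = [("a", i) for i in range(m)]
    right = [("b", i) for i in range(m)]
    adj = {v: set() for v in left + right}
    for u in left:
        for w in right:
            adj[u].add(w)
            adj[w].add(u)
    return adj


def zigzag_cycle(m):
    """The cycle a_0, b_0, a_1, b_1, ..., a_{m-1}, b_{m-1}, closing at a_0."""
    cycle = []
    for i in range(m):
        cycle += [("a", i), ("b", i)]
    return cycle


def is_hamiltonian_cycle(adj, cycle):
    """True if cycle lists every vertex once and consecutive ones are adjacent."""
    if len(cycle) < 3 or sorted(cycle) != sorted(adj):
        return False
    n = len(cycle)
    return all(cycle[(i + 1) % n] in adj[cycle[i]] for i in range(n))
```

With `m = q + 1`, every vertex of `complete_bipartite(m)` has degree \(q+1\), and `is_hamiltonian_cycle(complete_bipartite(m), zigzag_cycle(m))` holds for every \(q\ge 1\).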