Abstract

In this note we study the limiting behaviour of real valued functions on hyperbolic groups as we travel along typical geodesic rays in the Gromov boundary of the group. Our results apply to group homomorphisms, certain quasimorphisms and to the displacement functions associated to convex cocompact group actions on CAT\((-1)\) metric spaces.

1 Introduction

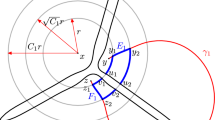

Let G be a non-elementary hyperbolic group and suppose that G acts cocompactly (or convex cocompactly) by isometries on a complete hyperbolic geodesic metric space (X, d). Fix a finite generating set S for G and an origin o for X. Let C(G) denote the Cayley graph of G with respect to S and write \(\partial G\) for the Gromov boundary of G. By the Švarc–Milnor Lemma, there exist constants \(C_1,C_2>0\) such that, for any infinite geodesic ray \(\gamma \) based at the identity in C(G),

\[ C_1 n \le d(o,\gamma _n o) \le C_2 n \]

for all \(n \ge 1\). Here \(\gamma _n\) denotes the end point of \(\gamma \) after n steps. This inequality describes the coarse behaviour of the displacement function \(g\mapsto d(o,go)\) along geodesic rays. It is then natural to ask whether we can describe more precisely how the displacement grows along typical geodesic rays in \(\partial G\). The Patterson–Sullivan measure provides us with a natural way of quantifying typicality in this setting. We say that a property exhibited by elements of \(\partial G\) is typical if it holds on a set of full Patterson–Sullivan measure.

Gekhtman, Taylor and Tiozzo asked the above question in a more general setting. They prove the following theorem in [11]. Let \(\nu \) denote the Patterson–Sullivan measure obtained as the weak star limit

where \(\delta _g\) denotes the Dirac measure based at \(g \in G\) and |g| denotes the word length of g. We write \([\gamma ] \in \partial G\) for the element in \(\partial G\) that contains \(\gamma \).

Proposition 1.1

(Theorem 1.3 [11]) Suppose a hyperbolic group G has a non-elementary action by isometries on a separable, hyperbolic geodesic metric space X. Then, there is \(L>0\) such that for every \(x \in X\) and \(\nu \) almost every \([\widetilde{\gamma }] \in \partial G\),

\[ \lim _{n\rightarrow \infty } \frac{d(x,\gamma _n x)}{n} = L, \]

where \(\gamma \) is any geodesic ray in \([\widetilde{\gamma }]\).

To prove this, Gekhtman, Taylor and Tiozzo exploit the strongly Markov structure of G. That is, they use the fact that there exists a finite directed graph \(\mathcal {G}\) that in some sense encodes the key properties of G. They obtain the above theorem by studying random walks on the loop graph associated to \(\mathcal {G}\).

This is one way to exploit the structure provided by \(\mathcal {G}\). It is however possible to make use of the strongly Markov property in a different way. The graph \(\mathcal {G}\) gives rise to a dynamical system \((\Sigma , \sigma :\Sigma \rightarrow \Sigma )\) known as a subshift of finite type. We can embed G into \(\Sigma \) via a function \(i:G \rightarrow \Sigma \) and use this to translate questions about the displacement function on G to questions about \(\Sigma \) and a suitable function \(f: \Sigma \rightarrow \mathbb {R}\). The connection between G and \(\Sigma \) is exploited by Pollicott and Sharp in [20]. They prove an almost sure invariance principle, as well as other limit laws, for the displacement function associated to the action of surface groups and convex cocompact free groups on the hyperbolic plane. In [7] similar ideas are used to derive limit laws for real-valued functions satisfying two conditions, called Condition (1) and Condition (2) in that paper. Real-valued group homomorphisms, certain quasimorphisms and the displacement function associated to convex cocompact group actions on \(\text {CAT}(-1)\) metric spaces all satisfy these conditions.

This leads us to ask whether Proposition 1.1 remains true if we replace the displacement function with a different real valued function. Furthermore, can we formulate a more precise statement describing how these functions behave along geodesic rays? These are the questions that we consider in this paper. Our main theorems are the following. We will define and discuss Condition (1) and Condition (2) in Sect. 3. Let \(\nu \) denote the Patterson–Sullivan measure as defined above.

Theorem 1.2

Let G be a non-elementary hyperbolic group equipped with a finite generating set S. Suppose that \(\varphi :G\rightarrow \mathbb {R}\) satisfies Condition (1) and Condition (2). Then there exists \(\Lambda \in \mathbb {R}\) such that for \(\nu \) almost every \([\widetilde{\gamma }] \in \partial G\),

\[ \lim _{n\rightarrow \infty } \frac{\varphi (\gamma _n)}{n} = \Lambda \]

for any \(\gamma \) belonging to \([\widetilde{\gamma }]\).

Remark 1.3

When \(\varphi \) is the displacement function associated to a convex cocompact group action on a \(\text {CAT}(-1)\) metric space, we recover a special case of Proposition 1.1. We note that the non-elementary actions to which Proposition 1.1 applies are more general than convex cocompact.

This shows that, along typical elements of \(\partial G\), a function \(\varphi \) satisfying the hypotheses of Theorem 1.2 grows asymptotically like \(\Lambda n\). We can then ask if it is possible to describe more precisely how \(\varphi \) grows along elements of \(\partial G\). To achieve this, we need to impose an additional assumption on \(\varphi \) to ensure that \(\varphi (\cdot )-|\cdot |\Lambda \) grows along typical geodesic rays. Specifically, we need that the set

is non-empty. The fact that this set is well-defined will follow from Condition (2). Surprisingly, this is the only additional hypothesis we need in order to obtain the following, more precise description of how \(\varphi \) grows.

Theorem 1.4

Let G be a non-elementary hyperbolic group equipped with a finite generating set S. Fix a bounded subset H of the Cayley graph of G. Suppose \(\varphi :G\rightarrow \mathbb {R}\) satisfies Condition (1) and Condition (2) and that \(\Lambda \) is the quantity defined in Theorem 1.2. Then, if the set

is non-empty, there exists \(\sigma ^2>0\) such that for \(x\in \mathbb {R}\),

as \(n \rightarrow \infty \), where

The implied constant is uniform in \(x \in \mathbb {R}\).

Remark 1.5

The reason that we ask for \(\gamma _0 \in H\) is due to the following fact. For \(\nu \) almost every \([\widetilde{\gamma }] \in \partial G\) and every \(n \ge 1\), we can find \(\gamma \in [\widetilde{\gamma }]\) for which \(\varphi (\gamma _n) - n\Lambda \) is arbitrarily large. Therefore without this assumption, \(\mathcal {A}_n\) would have zero \(\nu \) measure for all \(n\in \mathbb {Z}_{\ge 0}\).

The following result from [7] then shows that real-valued group homomorphisms satisfy the hypotheses of Theorem 1.4.

Proposition 1.6

([7] Lemma 7.11, Corollary 7.12) Let G be a non-elementary hyperbolic group equipped with a finite generating set S. Suppose \(\varphi :G\rightarrow \mathbb {R}\) is a non-trivial group homomorphism. Then the constant \(\Lambda \) obtained from Theorem 1.2 is zero and the set

is non-empty and in fact has full \(\nu \) measure.
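As an informal illustration of Theorem 1.2 and Proposition 1.6, the following sketch samples a geodesic ray in the free group on two generators and tracks a homomorphism along it. It is a toy experiment under stated assumptions, not part of the proofs: we assume the standard fact that, for the free group with its free generating set, the Patterson–Sullivan measure is the uniform measure on reduced infinite words, and the homomorphism values in `PHI` are hypothetical choices.

```python
import random

# Free group F_2 on generators a, b; uppercase letters denote inverses.
LETTERS = "aAbB"
INVERSE = {"a": "A", "A": "a", "b": "B", "B": "b"}

# Hypothetical homomorphism phi: F_2 -> R, fixed by its values on a and b.
PHI = {"a": 1.0, "A": -1.0, "b": 0.5, "B": -0.5}


def sample_ray_prefix(n, rng):
    """Return the first n letters of a uniformly random geodesic ray from e.

    Each step picks uniformly among letters that do not cancel the previous one.
    """
    word = []
    for _ in range(n):
        choices = [s for s in LETTERS if not word or s != INVERSE[word[-1]]]
        word.append(rng.choice(choices))
    return word


def phi_along_ray(word):
    """Return the values phi(gamma_1), ..., phi(gamma_n) along the ray."""
    values, total = [], 0.0
    for s in word:
        total += PHI[s]
        values.append(total)
    return values


rng = random.Random(0)
n = 20000
values = phi_along_ray(sample_ray_prefix(n, rng))
average = values[-1] / n
print(f"phi(gamma_n)/n for n={n}: {average:+.4f}")
```

The printed average should be close to zero, in line with \(\Lambda = 0\) for homomorphisms, while \(\varphi (\gamma _n)\) itself fluctuates on the scale of \(\sqrt{n}\).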

To conclude the introduction, we briefly outline the contents of this paper. In the second section we cover preliminary material concerning hyperbolic groups, their strongly Markov structure and the Patterson–Sullivan measure. In the third section we discuss the regularity conditions, Condition (1) and Condition (2). In Sect. 4 we study the properties of the Patterson–Sullivan measure. We prove Theorems 1.2 and 1.4 in the final section.

Notation: Throughout the paper, we use the following notation to describe the asymptotic behaviour of sequences. Suppose \(f_n, g_n,h_n\) are real valued sequences. We write \(f_n = O(g_n)\) if there exists \(C>0\) such that eventually \(|f_n| \le C|g_n|\). If \(|f_n/g_n| \rightarrow 0\) as \(n\rightarrow \infty \) we write \(f_n = o(g_n)\). We write \(f_n = O(g_n,h_n)\) if \(f_n=O(\max \{|g_n|,|h_n|\})\).

2 Hyperbolic groups and symbolic codings

In this section we cover preliminary material related to hyperbolic groups and symbolic dynamics.

Definition 2.1

Let G be a finitely generated group with finite generating set S. We define the left and right word metrics on G by

\[ d_L(g,h) = |g^{-1}h| \quad \text{and} \quad d_R(g,h) = |gh^{-1}| \]

for \(g,h\in G\). Here \(|\cdot |\) denotes the word length, i.e. |g| is the length of the shortest word(s) representing g with letters in \(S\cup S^{-1}\). We say that G is hyperbolic if there exists \(\delta \ge 0\) such that any geodesic triangle in the \(d_L\) metric is \(\delta \)-thin (i.e. any point on the side of a geodesic triangle is within distance \(\delta \) of one of the other two sides).

We say that a hyperbolic group is non-elementary if it is not virtually cyclic, i.e. it does not contain a finite index cyclic subgroup. Suppose that G is a non-elementary hyperbolic group equipped with a finite generating set and let \(W(n) = \# \{g \in G: |g|=n\}\) denote the word length counting function. Coornaert proved that the growth rate of W(n) is purely exponential [9], i.e. there exist \(\lambda >1\) and \(C_0,C_1 >0\) such that

\[ C_0\lambda ^n \le W(n) \le C_1\lambda ^n \quad \text {for all } n \ge 1. \]

This fact will be key to our analysis.
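To make purely exponential growth concrete, the following sketch counts the elements of each word length in the free group on two generators, where \(W(n) = 4\cdot 3^{n-1}\) for \(n \ge 1\) and so \(\lambda = 3\). The free group is our choice of example, and the function name `sphere_sizes` is illustrative.

```python
def sphere_sizes(max_n):
    """Return [W(0), ..., W(max_n)] for F_2 = <a, b> with its free generators.

    Elements of word length n correspond to reduced words of length n,
    i.e. words in which no letter is followed by its inverse.
    """
    inverse = {"a": "A", "A": "a", "b": "B", "B": "b"}
    sphere = [""]  # reduced words of the current length
    sizes = [1]
    for _ in range(max_n):
        sphere = [w + s for w in sphere for s in "aAbB"
                  if not w or s != inverse[w[-1]]]
        sizes.append(len(sphere))
    return sizes


sizes = sphere_sizes(8)
ratios = [sizes[n] / 3 ** n for n in range(1, 9)]
print(sizes)
print(ratios)
```

Here the ratio \(W(n)/\lambda ^n\) is constant, so both constants in the two-sided bound can be taken equal to 4/3; for a general hyperbolic group the ratio is only bounded above and below.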

Let C(G) denote the Cayley graph of G with respect to S. The Gromov boundary \(\partial G\) of G consists of equivalence classes of infinite geodesic rays in C(G). Two geodesic rays \(\gamma \) and \(\gamma '\) are said to be equivalent if \(d_L(\gamma _n,\gamma '_n)\) is bounded uniformly for \(n\in \mathbb {Z}_{\ge 0}\). Here, \(\gamma _n, \gamma '_n\) denote the end points of \(\gamma \), \(\gamma '\) after n steps. Given an infinite geodesic ray \(\gamma \) we use \([\gamma ]\) to denote the element of \(\partial G\) containing \(\gamma \). There is a natural compact topology for \(G \cup \partial G\) that extends the topology on G given by the word metric. The action of G extends continuously to \(G \cup \partial G\) by sending \([\gamma ] \in \partial G\) to \([g\gamma ] \in \partial G\).

The Patterson–Sullivan measure \(\nu \) is a measure on \(\partial G\) obtained as the weak star limit, as \(n \rightarrow \infty \), of the following sequence of measures

on \(G \cup \partial G\). Here \(\delta _g\) denotes the Dirac measure based at \(g \in G\). The measure \(\nu \) is ergodic with respect to the action of G on \(\partial G\). See [9] and [14] for a comprehensive account of the above material concerning the Patterson–Sullivan measure. We will now discuss the combinatorial properties of hyperbolic groups.

As mentioned in the introduction, hyperbolic groups have nice combinatorial properties that arise due to their strongly Markov structure.

Definition 2.2

A finitely generated group G is strongly Markov if given any finite generating set S there exists a finite directed graph \(\mathcal {G}\) with vertex set V, edge set E and a labeling map \(\rho : E \rightarrow S\) such that:

1. there exists an initial vertex \(*\in V\) such that no directed edge ends at \(*\);

2. the map taking finite paths in \(\mathcal {G}\) starting at \(*\) to G that sends a path with concurrent edges \((*,x_1), \ldots ,(x_{n-1},x_n)\) to \(\rho (*,x_1)\rho (x_1,x_2) \ldots \rho (x_{n-1},x_n)\), is a bijection;

3. the word length of \(\rho (*,x_1) \ldots \rho (x_{n-1},x_n)\) is n.

Cannon introduced this property and proved that cocompact Kleinian groups are strongly Markov [6]. Ghys and de la Harpe showed that Cannon’s method worked for arbitrary hyperbolic groups.

Proposition 2.3

([12] Theorem 13) Any hyperbolic group is strongly Markov.

Throughout the rest of this paper we will assume that G is a non-elementary hyperbolic group equipped with a finite generating set S. Let \(\mathcal {G}\) be a graph associated to G via the strongly Markov property. We augment \(\mathcal {G}\) by adding an extra vertex \(0\in V\) and edges (v, 0) for all \(v\in V\cup \{0\}\backslash \{*\}\). We define \(\rho (v,0) = e\) for \(v\in V\cup \{0\}\backslash \{*\}\), where \(e \in G\) is the identity element. We will assume that any graph \(\mathcal {G}\) associated to G has been augmented in this way.

As mentioned in the introduction, we can use this strongly Markov structure to construct a dynamical system that encodes the properties of G. Suppose that \(\mathcal {G} = (E,V)\) is a directed graph associated to G via the strongly Markov property. We define a transition matrix A, indexed by \(V \times V\), by

\[ A(v_1,v_2) = \begin{cases} 1 & \text {if } (v_1,v_2) \in E, \\ 0 & \text {otherwise.} \end{cases} \]

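Using A we define

\[ \Sigma _A = \left\{ (x_n)_{n=0}^\infty : x_n \in V \text { and } A(x_n,x_{n+1}) = 1 \text { for all } n \in \mathbb {Z}_{\ge 0} \right\} \]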

and \(\sigma :\Sigma _A \rightarrow \Sigma _A\) by \(\sigma ((x_n)_{n=0}^\infty ) = (x_{n+1})_{n=0}^\infty \). The system \((\Sigma _A,\sigma )\) is known as a subshift of finite type. We embed G into \(\Sigma _A\) via the function \(i:G\rightarrow \Sigma _A\) that sends a group element \(g \in G\) to the unique element \((*,x_1,x_2, \ldots ,x_n,0,0, \ldots )\) for which \(\rho (*,x_1) \ldots \rho (x_{n-1},x_n) = g\) and \(|g|=n\). This correspondence will allow us to prove facts about G by studying the properties of \(\Sigma _A\). For the rest of this section we recount (following [16]) the properties of subshifts that we require for our proofs.
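The coding can be sketched for the free group on two generators, where a suitable graph has one vertex per generator (recording the last letter read), the initial vertex \(*\) and the padding vertex 0. This is a minimal sketch assuming that particular graph; the names `allowed`, `embed`, `shift`, `is_admissible` and `count_paths` are illustrative, and sequences are truncated to finite prefixes.

```python
INVERSE = {"a": "A", "A": "a", "b": "B", "B": "b"}
LETTERS = list(INVERSE)
VERTICES = ["*"] + LETTERS + ["0"]


def allowed(u, v):
    """Transition rule A(u, v) for the augmented graph of F_2 (1 = edge)."""
    if v == "*":
        return 0                      # no edge enters the initial vertex
    if v == "0":
        return 0 if u == "*" else 1   # padding edges (u, 0) for u != *
    if u == "0":
        return 0                      # the padding vertex only loops to itself
    return 0 if u != "*" and v == INVERSE[u] else 1


A = {(u, v): allowed(u, v) for u in VERTICES for v in VERTICES}


def embed(word, length):
    """First `length` symbols of i(g) = (*, x_1, ..., x_n, 0, 0, ...)."""
    seq = ["*"] + list(word)
    return (seq + ["0"] * length)[:length]


def shift(seq):
    """The shift map sigma, acting on a finite prefix."""
    return seq[1:]


def is_admissible(seq):
    """Check that consecutive symbols are joined by edges of the graph."""
    return all(A[(u, v)] == 1 for u, v in zip(seq, seq[1:]))


def count_paths(n):
    """Number of length-n paths from * that avoid the padding vertex 0."""
    counts = {"*": 1}
    for _ in range(n):
        nxt = {}
        for u, c in counts.items():
            for v in LETTERS:
                if A[(u, v)]:
                    nxt[v] = nxt.get(v, 0) + c
        counts = nxt
    return sum(counts.values())


print(embed("abA", 8))
print([count_paths(n) for n in range(6)])
```

In this example the path counts agree with the number of group elements of each word length, which is what the bijection in Definition 2.2 requires.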

Let B be a zero-one matrix. We say that B is irreducible if given i, j, there exists N such that \(B^N(i,j) >0\). If there exists N such that \(B^N(i,j)>0\) for all pairs i, j then we say that B is aperiodic. For each \(0<\theta <1\) there is a metric \(d_\theta \) on \(\Sigma _B\) defined by \(d_\theta (x,y) = \theta ^{s(x,y)}\) where \(s(x,y) \in \mathbb {Z}_{\ge 0}\) is the first integer n such that \(x_n \ne y_n\). We write \(F_\theta (\Sigma _B) = \{ f: \Sigma _B \rightarrow \mathbb {R} : f \text { is Lipschitz in the } d_\theta \text { metric}\}.\) Given \(f \in F_\theta (\Sigma _B)\), we write \(f^n(x)=f(x)+f(\sigma (x))+ \cdots +f(\sigma ^{n-1}(x))\) for \(x \in \Sigma _B\). Throughout the following, we assume that B is irreducible. When this is the case, the system \((\Sigma _B,\sigma )\) is transitive and admits a unique measure of maximal entropy \(\mu \) [15], i.e. there exists a unique \(\mu \) such that

\[ h_\mu (\sigma ) = \sup _{m} h_m(\sigma ), \]

where the above supremum is taken over all \(\sigma \)-invariant probability measures. The measure \(\mu \) is ergodic with respect to \(\sigma \). If \(f \in F_\theta (\Sigma _B)\) for some \(0<\theta <1\) and \(\int f \ d\mu =0\), then there exists \(\sigma _f^2 \ge 0\) such that for \(x \in \mathbb {R}\)

as \(n\rightarrow \infty \) [8]. Furthermore, \(\sigma _f^2 =0\) if and only if there exists a continuous \(h:\Sigma _B \rightarrow \mathbb {C}\) such that \(f = h \circ \sigma - h\). In [8] this result is proved under the assumption that B is aperiodic; however, it is easy to see that it passes to the irreducible case.
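The notions of irreducibility, aperiodicity, the metric \(d_\theta \) and the sums \(f^n\) can be checked on small examples. The sketch below is illustrative only: sequences are represented by finite prefixes, the example matrices are our own, and the aperiodicity test relies on Wielandt's bound that a primitive \(n \times n\) matrix has a strictly positive power with exponent at most \((n-1)^2+1\).

```python
def bool_mul(X, Y):
    """Boolean matrix product: records which entries of X*Y are positive."""
    n = len(X)
    return [[any(X[i][k] and Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_irreducible(B):
    """For all i, j there is N >= 1 with B^N(i, j) > 0 (N <= n suffices)."""
    n = len(B)
    P = [[bool(x) for x in row] for row in B]
    power, reach = P, [row[:] for row in P]
    for _ in range(n - 1):
        power = bool_mul(power, P)
        reach = [[reach[i][j] or power[i][j] for j in range(n)]
                 for i in range(n)]
    return all(all(row) for row in reach)


def is_aperiodic(B):
    """There is a single N with B^N(i, j) > 0 for all i, j."""
    n = len(B)
    P = [[bool(x) for x in row] for row in B]
    power = P
    for _ in range((n - 1) ** 2 + 1):   # Wielandt's bound on the exponent
        if all(all(row) for row in power):
            return True
        power = bool_mul(power, P)
    return False


def d_theta(x, y, theta):
    """theta^s, where s is the first index at which the prefixes differ."""
    for n, (a, b) in enumerate(zip(x, y)):
        if a != b:
            return theta ** n
    return 0.0  # prefixes agree wherever they can be compared


def birkhoff_sum(f, x, n):
    """f^n(x) = f(x) + f(sigma x) + ... + f(sigma^{n-1} x) on a prefix x."""
    return sum(f(x[k:]) for k in range(n))


golden_mean = [[1, 1], [1, 0]]   # irreducible and aperiodic
swap = [[0, 1], [1, 0]]          # irreducible but not aperiodic
print(is_irreducible(golden_mean), is_aperiodic(golden_mean))
print(is_irreducible(swap), is_aperiodic(swap))
```

A function depending only on the first coordinate, such as the indicator of \(x_0 = 0\), is Lipschitz for every \(d_\theta \), and its sums \(f^n\) count visits to the symbol 0.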

We note that since \(\mathcal {G}\) has no edges that enter \(*\), the matrix A associated to \(\mathcal {G}\) will never be irreducible. It is possible however that if we remove, from A, the rows/columns corresponding to the 0 and \(*\) vertices, then the resulting matrix is irreducible (or aperiodic). We say that A is irreducible (or aperiodic) if this is the case. Although in general it is possible that A is not irreducible, we can, by relabeling the vertex set V, assume A has the form

where \(A_{i,i}\) are irreducible for \(i=1,\ldots ,m\). We call the \(A_{i,i}\) the irreducible components of A. Let \(\lambda >1\) denote the exponential growth rate of W(n). It is easy to see from Properties (2) and (3) in Definition 2.2 that all of the \(A_{i,i}\) must have spectral radius at most \(\lambda \). Furthermore there must be at least one \(A_{i,i}\) with spectral radius exactly \(\lambda \). We call an irreducible component maximal if it has spectral radius \(\lambda \). We label the maximal components \(B_i\) for \(i=1,\ldots ,m\). The following key result follows from Coornaert’s estimates on W(n).

Proposition 2.4

([5] Lemma 4.10) The maximal components of A are disjoint. There does not exist a path in \(\mathcal {G}\) that begins in one maximal component and ends in another.

3 Regularity conditions

In this section we discuss Condition (1) and Condition (2). This will be a brief survey of the functions satisfying these conditions; see Sect. 4 of [7] for a more comprehensive account. Condition (1) and Condition (2) are defined as follows.

Condition (1) There exists a graph \(\mathcal {G}\) associated to G, S via the strongly Markov property with transition matrix A and a function \(f\in F_\theta (\Sigma _A)\) (for some \(0<\theta <1\)) such that \(\varphi (g) = f^{|g|}(x)\) for \(g \in G \text { and } x = i(g) \in \Sigma _A.\)

Condition (2) \(\varphi \) is Lipschitz in the left and right word metrics on G.

Although Condition (1) relies on the properties of \(\Sigma _A\), there is a natural assumption we can place on \(\varphi :G \rightarrow \mathbb {R}\) to guarantee the existence of appropriate \(\Sigma _A\) and \(f: \Sigma _A \rightarrow \mathbb {R}\). Given \(g,h \in G\), let (g, h) denote their Gromov product

\[ (g,h) = \frac{1}{2}\left( |g| + |h| - |g^{-1}h| \right) . \]

Definition 3.1

We say that \(\varphi :G\rightarrow \mathbb {R}\) is Hölder if for any fixed finite generating set S and \(a \in G\), there exists \(C >0\) and \(0<\theta <1\) such that

\[ |\Delta _a\varphi (g) - \Delta _a\varphi (h)| \le C\theta ^{(g,h)} \]

for any \(g,h \in G\). Here, \(\Delta _a\varphi (g)= \varphi (ag)-\varphi (g)\) for \(a,g \in G\).

Pollicott and Sharp prove that Hölder functions satisfy Condition (1) in [18]. In [5] and [7], combable and edge combable functions are defined. We refer the reader to these papers for the definitions. Both these classes of functions satisfy Condition (1), see Lemma 4.5 in [7]. It is clear that homomorphisms to \(\mathbb {R}\) are edge combable and so satisfy Condition (1). The homomorphism property implies that real-valued homomorphisms also satisfy Condition (2). In fact, the more general class of quasimorphisms satisfies Condition (2).

Definition 3.2

A function \(\varphi : G \rightarrow \mathbb {R}\) is a quasimorphism if there exists a constant \(A >0\) such that

\[ |\varphi (gh) - \varphi (g) - \varphi (h)| \le A \]

for all \(g,h \in G\).
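The defining bound on \(|\varphi (gh) - \varphi (g) - \varphi (h)|\) can be explored numerically in the free group on two generators. The sketch below compares a homomorphism with a Brooks-type counting function, which counts occurrences of a fixed word w minus occurrences of \(w^{-1}\) in the reduced form of g. The choice of group, the word `"ab"` and all function names are assumptions made for illustration, and a finite search of this kind only gives a lower bound for the defect over the whole group.

```python
import itertools

INVERSE = {"a": "A", "A": "a", "b": "B", "B": "b"}


def reduce_word(word):
    """Freely reduce a word over a, A, b, B (uppercase = inverse)."""
    out = []
    for s in word:
        if out and out[-1] == INVERSE[s]:
            out.pop()
        else:
            out.append(s)
    return "".join(out)


def inverse(word):
    return "".join(INVERSE[s] for s in reversed(word))


def count_occurrences(w, g):
    """Number of (possibly overlapping) occurrences of w as a subword of g."""
    return sum(1 for k in range(len(g) - len(w) + 1) if g[k:k + len(w)] == w)


def brooks(w):
    """Counting function g -> #w(g) - #w^{-1}(g) on reduced words."""
    w_inv = inverse(w)

    def phi(g):
        g = reduce_word(g)
        return count_occurrences(w, g) - count_occurrences(w_inv, g)

    return phi


def exponent_sum_a(g):
    """A homomorphism F_2 -> Z: the exponent sum of the generator a."""
    return g.count("a") - g.count("A")


def defect(phi, elements):
    """Largest value of |phi(gh) - phi(g) - phi(h)| over the given sample."""
    return max(abs(phi(reduce_word(g + h)) - phi(g) - phi(h))
               for g in elements for h in elements)


# All elements of word length at most 3.
elements = sorted({reduce_word("".join(t))
                   for k in range(4)
                   for t in itertools.product("aAbB", repeat=k)})

hom_defect = defect(exponent_sum_a, elements)
brooks_defect = defect(brooks("ab"), elements)
print(hom_defect, brooks_defect)
```

On this sample the homomorphism has defect zero, while the counting function has a positive but small defect, as one expects from a quasimorphism that is not a homomorphism.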

It is easy to check that quasimorphisms satisfy Condition (2). In [5], Calegari and Fujiwara show that Brooks counting quasimorphisms (see [3] for a definition) satisfy Condition (1) and so by the above discussion, our theorems apply to these functions. The following example, due to Barge and Ghys [1], is a quasimorphism that satisfies the Hölder condition.

Example: Suppose G acts cocompactly by isometries on a simply connected Riemannian manifold X with all sectional curvatures bounded above by \(-1\). Write \(M=X/G\). Given a smooth 1-form \(\omega \) on M, we can lift \(\omega \) to a G-invariant smooth 1-form \(\widetilde{\omega }\) on X. Fix an origin \(o \in X\) and define \(\varphi : G \rightarrow \mathbb {R}\) by

Note that

where T(g, h) denotes the triangle in X with vertices o, go and gho. By compactness and hyperbolicity, the right hand side of the above is bounded uniformly in g, h. This proves that \(\varphi \) is a quasimorphism. In [17] Picaud proved that these quasimorphisms satisfy Condition (1).

Another example of a function satisfying Condition (1) and Condition (2) was mentioned in the introduction. Suppose G acts properly discontinuously, convex cocompactly by isometries on a complete \(\text {CAT}(-1)\) geodesic metric space (X, d). Fix a finite generating set for G and an origin o for X. A result of Pollicott and Sharp (Proposition 3 from [19]) proves that the displacement function satisfies Condition (1). Furthermore, it is easy to see that this function satisfies Condition (2). See Lemma 4.6 of [7] for a more detailed discussion.

This concludes our brief survey of functions satisfying Condition (1) and Condition (2). See [1, 10] and [12] for further examples as well as Chapter 3 of [13] for a more comprehensive account of these functions.

4 Properties of the Patterson–Sullivan measure

The results presented in [7] and [11] as well as this paper rely on the work of Calegari and Fujiwara [5] that compares the Patterson–Sullivan measure \(\nu \) to a natural measure \(\mu \) on \(\Sigma _A\). In this section we construct this measure and compare it to \(\nu \). To deduce our results we need to extend the work in [5] to obtain a deeper understanding of how the measures \(\mu \) and \(\nu \) compare.

Suppose G has associated subshift \(\Sigma _A\) which is obtained from the directed graph \(\mathcal {G}\). Let V denote the vertex set of \(\mathcal {G}\). For \(v \in \mathbb {R}^V\), define the function \(p:\mathbb {R}^V \rightarrow \mathbb {R}^V\) by

This function projects v to the eigenspace of A corresponding to the eigenvalue \(\lambda \). Similarly, the function \(r: \mathbb {R}^V \rightarrow \mathbb {R}^V\) defined by

projects v to the eigenspace of \(A^T\) corresponding to the eigenvalue \(\lambda \). To obtain the error term in Theorem 1.4 we need to know the rate of convergence associated to the limit defining p.

Lemma 4.1

For \(v \in \mathbb {R}^V\) we have that

where the implied constant depends only on v.

Proof

Given \(v \in \mathbb {R}^V\) we can write v as a linear combination of elements in a Jordan basis for A. Since maximal components are disjoint, if an eigenvalue x of A has absolute value \(\lambda \), then there does not exist a Jordan chain of length strictly greater than one associated to x. A simple calculation then shows that if \(\widetilde{v}\) belongs to the generalised eigenspace associated to the eigenvalue \(x\ne \lambda \), then

The result follows. \(\square \)

Let \(\mathbf 1 \in \mathbb {R}^V\) denote the vector consisting of 1 in each coordinate and let \(v_*\) denote the vector consisting of a 1 in the coordinate corresponding to the \(*\) vertex and zeros elsewhere. Using p and r, we define a measure \(\mu \) on \(\Sigma _A\) via a stochastic matrix \(N : \mathbb {R}^V \rightarrow \mathbb {R}^V\) and vertex distribution \(\rho : V\rightarrow \mathbb {R}\). For a vector \(v\in \mathbb {R}^V\), let \(v_j\) denote the coordinate of v corresponding to the vertex \(j \in V\). The matrix N is defined as follows. If \(p(\mathbf 1 )_i \ne 0\) then set

and if \(p(\mathbf 1 )_i=0\) let \(N_{i,i}=1\) and \(N_{i,j} = 0\) when \(i\ne j\). The vertex distribution \(\rho \) is defined by

As for the usual construction of Markov measures, this defines a \(\sigma \)-invariant measure on \(\Sigma _A\). We normalise this measure to obtain the probability measure \(\mu \). There is a nice description of \(\mu \) in terms of thermodynamic formalism.

Proposition 4.2

There exists \(0< \alpha _i <1\) for \(i=1,\ldots ,m\) with \(\sum _{i=1}^m \alpha _i =1\) such that

where each \(\mu _i\) is the measure of maximal entropy for the system \((\Sigma _{B_i},\sigma )\).

Proof

Choose a maximal component \(B_i\). One can check that the vector obtained from restricting \(p(\mathbf 1 )\) or \(r(v_*)\) to the vertices in \(B_i\) is a right or left eigenvector respectively for \(B_i\) (with eigenvalue \(\lambda \)). Then by comparing the construction of \(\mu \) to Parry’s construction of the measure of maximal entropy for a subshift of finite type [15], we see that the restriction of \(\mu \) to the maximal component \(\Sigma _{B_i}\) is up to scaling, the measure of maximal entropy \(\mu _i\) on this component. Furthermore, from the definitions of p and r and the fact that \(\mu \) is \(\sigma \)-invariant, it is clear that \(\mu \) assigns zero mass to the complement of the union of the maximal components. The result follows. \(\square \)
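For a single irreducible component, Parry's construction referred to in the proof can be sketched as follows. Given a right eigenvector v and a left eigenvector u for the eigenvalue \(\lambda \), the standard formulas are \(P_{i,j} = B_{i,j}v_j/(\lambda v_i)\) for the stochastic matrix and \(\pi _i = u_iv_i/\sum _k u_kv_k\) for the stationary vector. The example matrix (the golden mean shift) and the helper names are our own, and the power iteration used here assumes the matrix is aperiodic.

```python
def power_iteration(M, iterations=200):
    """Approximate the leading eigenvalue and a positive right eigenvector."""
    n = len(M)
    v = [1.0] * n
    lam = 1.0
    for _ in range(iterations):
        w = [sum(M[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = max(w)
        v = [x / lam for x in w]
    return lam, v


def transpose(M):
    return [list(col) for col in zip(*M)]


def parry(B):
    """Return (lam, P, pi) for the Parry measure of the matrix B."""
    n = len(B)
    lam, v = power_iteration(B)              # right eigenvector: B v = lam v
    _, u = power_iteration(transpose(B))     # left eigenvector:  u B = lam u
    P = [[B[i][j] * v[j] / (lam * v[i]) for j in range(n)] for i in range(n)]
    norm = sum(u[i] * v[i] for i in range(n))
    pi = [u[i] * v[i] / norm for i in range(n)]
    return lam, P, pi


def cylinder_measure(path, P, pi):
    """Measure of the cylinder set of sequences starting with `path`."""
    mass = pi[path[0]]
    for i, j in zip(path, path[1:]):
        mass *= P[i][j]
    return mass


B = [[1, 1], [1, 0]]  # golden mean shift: the word 11 is forbidden
lam, P, pi = parry(B)
print(lam, pi, cylinder_measure([0, 1, 0], P, pi))
```

In this example \(\lambda \) is the golden ratio, and cylinders of the same length with the same first and last symbols receive equal mass, which reflects the maximal entropy property.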

Let \(A'\) denote the matrix A with the row/column corresponding to the 0 vertex removed.

Definition 4.3

Define sets \(Y, Y_1, \ldots ,Y_m \subset \Sigma _{A'}\) by

Let \(h: Y \rightarrow \partial G\) be the natural map associated to the bijection defined in Definition 2.2. Given \(y \in Y\), we use \(h(y)_n\) to denote the nth step in the geodesic ray determined by y.

There is a unique measure \(\widehat{\nu }\) on Y that pushes forward under h to the Patterson–Sullivan measure on \(\partial G\). We denote the pushforward map by \(h_*\) so that \(h_*\widehat{\nu } = \nu \). The measure \(\widehat{\nu }\) can be constructed as in Section 4 of [5]. We will not provide the construction here but will instead present the properties of \(\widehat{\nu }\) that we require for our proofs. One of these properties is the following. We can explicitly calculate the \(\widehat{\nu }\) measure of certain subsets of \(\Sigma _{A'}\) called cylinder sets. Given a finite path y in \(\mathcal {G}\), let [y] denote the set of elements in \(\Sigma _{A'}\) that have y as an initial segment.

Lemma 4.4

Let y be a finite path in \(\mathcal {G}\) starting at \(*\). We have that

where |y| is the length of y and \(v_y\) denotes the last vertex in y.

Proof

This is a simple calculation that can be found in Section 4 of [5]. Note that in this work, we are using a slightly different scaling for \(\widehat{\nu }\). This introduces the \(p(\mathbf 1 )_{*}\) term, which is not present in [5]. \(\square \)

For \(k \in \mathbb {Z}_{\ge 0}\), let \(\sigma _*^k\widehat{\nu }\) denote the pushforward of \(\widehat{\nu }\) under \(\sigma ^k\). The following lemma compares these pushforward measures to the measure \(\mu \).

Lemma 4.5

For each \(v \in V\) with \(\mu [v]>0\) and \(k \in \mathbb {Z}_{\ge 0}\) there exists \(\alpha _v^k \ge 0\) such that

There exists a length k path from \(*\) to v if and only if \(\alpha _v^k >0\). If \(\mu [v]=0\) we define \(\alpha _v^k = \widehat{\nu }(\sigma ^{-k}[v])\) for all \(k\in \mathbb {Z}_{\ge 0}\). Furthermore,

The implied constants can be taken to be independent of v and n.

Proof

This is a consequence of Lemma 4.1, the construction of \(\widehat{\nu }\) and the proof of Lemma 4.22 in [5]. A simple calculation using the definition of \(\widehat{\nu }\) shows the existence of \(\alpha _v^k\) satisfying the first condition of the lemma. The convergence associated to the final statement is proved in Lemma 4.22 of [5]. By inspecting the proof of this lemma, we see that Lemma 4.1 quantifies the convergence as \(O(n^{-1})\). \(\square \)

It follows that

converges in the weak star topology to the measure \(\mu \). There is a much stronger relationship between \(\widehat{\nu }\) and \(\mu \) however. Given two measures, \(\lambda _1\) and \(\lambda _2\) on \(\Sigma _{A}\), recall that their total variation \(\Vert \lambda _1 - \lambda _2\Vert _{TV}\) is given by \(\sup _{E \subset \Sigma _{A}} |\lambda _1(E)-\lambda _2(E)|\).
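On a finite set the supremum in the definition of total variation can be computed directly, which may help in reading the estimates that follow. The sketch below is a toy illustration with hypothetical measures given as dictionaries. It computes \(\sup _E |\lambda _1(E)-\lambda _2(E)|\) both from the positive and negative parts of the difference and by brute force over all subsets.

```python
from itertools import combinations


def total_variation(p, q):
    """sup over subsets E of |p(E) - q(E)| for measures on a finite set."""
    keys = set(p) | set(q)
    diffs = [p.get(k, 0.0) - q.get(k, 0.0) for k in keys]
    positive = sum(d for d in diffs if d > 0)
    negative = -sum(d for d in diffs if d < 0)
    # The supremum is attained on the set where p > q or on its complement.
    return max(positive, negative)


def total_variation_brute_force(p, q):
    """The same quantity, computed by enumerating every subset E."""
    keys = sorted(set(p) | set(q))
    best = 0.0
    for r in range(len(keys) + 1):
        for subset in combinations(keys, r):
            gap = sum(p.get(k, 0.0) - q.get(k, 0.0) for k in subset)
            best = max(best, abs(gap))
    return best


p = {"x": 0.5, "y": 0.3, "z": 0.2}
q = {"x": 0.25, "y": 0.25, "z": 0.5}
print(total_variation(p, q), total_variation_brute_force(p, q))
```

For two probability measures this quantity equals half of the \(\ell ^1\) distance between them.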

Proposition 4.6

We have that,

as \(n\rightarrow \infty \).

Proof

For any \(E \subset \Sigma _{A}\),

where \(\alpha _v^j\) are as defined in the previous lemma. Applying the previous lemma concludes the proof. \(\square \)

We will need the following definition and lemma later.

Definition 4.7

For each \(j \in \mathbb {Z}_{\ge 0}\) let

Then, for each \(n\in \mathbb {Z}_{\ge 0}\), define a measure \(\widehat{\nu }_n\) on \(\Sigma _{A'}\) by

for \(E \subset \Sigma _{A'}\).

Intuitively, each \(A_j\) consists of elements in \(\Sigma _{A'}\) that correspond to a path in \(\mathcal {G}\) that starts at \(*\), enters a maximal component on exactly its jth step and then never leaves this component.

Lemma 4.8

There exists \(0<\theta <1\) such that \( \left\| \widehat{\nu }_n - \widehat{\nu } \right\| _{TV} = O(\theta ^n),\) as \(n\rightarrow \infty \). The implied constant is independent of n.

Proof

We claim that

exponentially quickly as \(n\rightarrow \infty \). To see this, note that the number of length n paths in \(\mathcal {G}\) that start at \(*\) and do not enter a maximal component is \(O((\lambda -\delta )^n)\) for some \(0<\delta < \lambda \). Combining this observation with Lemma 4.4 implies that there exists \(C>0\) independent of j, n such that

This proves the claim. Along with Lemma 4.4, this shows that \(Y \backslash \cup _{i=1}^m Y_i\) can be written as a countable union of zero \(\widehat{\nu }\) measure sets. Hence \(\widehat{\nu }\left( Y\backslash \cup _{i=1}^m Y_i\right) =0\) and for any \(E \subset Y\),

Applying the claim a further time concludes the proof. \(\square \)

We end this section by observing that, for any \(E \subset \cup _i \Sigma _{B_i}\),

We are now ready to prove our results.

5 Proofs of results

Throughout the rest of the paper, suppose that \(\varphi : G \rightarrow \mathbb {R}\) satisfies Condition (1) and Condition (2) and let \(f: \Sigma _A \rightarrow \mathbb {R}\) be the function related to \(\varphi \). Fix a bounded subset \(H \subset C(G)\) (i.e. \(\sup _{g\in H}\{|g|\} < \infty \)).

We begin by noting that Theorem 1.2 is equivalent to the fact that there exists \(\Lambda \in \mathbb {R}\) for which the set

is well-defined and has full \(\nu \) measure.

Lemma 5.1

For any \(\Lambda \in \mathbb {R}\) the set \(\mathcal {U}_\Lambda \) is well-defined and G-invariant.

Proof

Since \(\varphi \) is Lipschitz in the right word metric, if \([\gamma ] \in \partial G\) and \(g \in G\), then there exists \(C>0\) for which

uniformly for \(n\in \mathbb {Z}_{\ge 0}\). Hence

This proves G-invariance assuming that \(\mathcal {U}_\Lambda \) is well-defined. To prove that \(\mathcal {U}_\Lambda \) is well-defined we can follow the same argument as above, this time using that \(\varphi \) is Lipschitz in the left word metric. \(\square \)

We are now ready to prove Theorem 1.2.

Proof of Theorem 1.2

Since the action of G on \(\partial G\) is ergodic with respect to \(\nu \), it suffices, by Lemma 5.1, to prove that there exists \(\Lambda \) for which \(\mathcal {U}_\Lambda \) has positive \(\nu \) measure. Consider a maximal component \(B_i\). By the ergodic theorem, \(\mu (E_\Lambda ) >0,\) where

and \(\Lambda = \int _{\Sigma _{B_i}} f \ d\mu _i\). Hence by Proposition 4.6 there exists \(k \in \mathbb {Z}_{\ge 0}\) for which \(\sigma _*^k\widehat{\nu }(E_\Lambda )>0\). We now note that if \(y \in E_\Lambda \) and \(x \in \bigcup _{n\ge 0} \sigma ^{-n}(\{y\}) \) then

as \(n\rightarrow \infty \). Hence,

By Condition (1), for \(y \in Y,\) \(f^n(y_n) = \varphi (h(y)_n) + O(1)\) where the implied constant is independent of both n and y. Combining this with the fact that \(h_*\widehat{\nu } =\nu \) implies that \(\nu \left( \mathcal {U}_\Lambda \right) >0\) and thus concludes the proof. \(\square \)

We now move on to the proof of Theorem 1.4. By replacing \(\varphi (\cdot )\) with \(\varphi (\cdot ) - \Lambda |\cdot |\) and \(f(\cdot )\) with \(f(\cdot ) - \Lambda \), it suffices to prove Theorem 1.4 under the assumption that \(\Lambda =0\). We will assume this from now on.

The intuition behind our proof of Theorem 1.4 is the following. By Proposition 4.6, \(\mu \) is obtained from averaging the pushforwards of \(\widehat{\nu }\). If we could therefore, in some sense, reverse this averaging and express \(\widehat{\nu }\) in terms of \(\mu \), then we could use our knowledge of \(\mu \) to learn about \(\widehat{\nu }\). The relationship between these measures is particularly nice and allows us to carry out such a procedure.

Recall that we want to study the convergence of the following distributions.

Definition 5.2

Define, for \(n\in \mathbb {Z}_{\ge 0}\) and \(x\in \mathbb {R}\),

and

We want to prove that there exists \(\sigma ^2 \ge 0\) for which

as \(n\rightarrow \infty \). To simplify notation we will express this as \(R_n = N(\sigma ) + O(n^{-1/4})\). We will use the following fact multiple times.

Lemma 5.3

Let \(F_n,H_n: \mathbb {R} \rightarrow \mathbb {R}\) be sequences of distributions and suppose that \(k_n, l_n\) are sequences of integers with \(k_n \rightarrow \infty \) and \(l_n \rightarrow \infty \) as \(n \rightarrow \infty \). Suppose further that there exists a constant \(C>0\) independent of n and x such that

for all n, x. Then, if \(H_n = N(\sigma ) +O(k_n^{-1})\), we have that \(F_n = N(\sigma ) +O(k_n^{-1},l_n^{-1})\).

Proof

This is a simple consequence of the fact that the derivative of \(N(\sigma )\) is uniformly bounded. \(\square \)

Our aim is to construct a sequence of distributions on Y with respect to \(\widehat{\nu }\) from which we can gain an understanding of the \(R_n.\) The following two lemmas are the first step in achieving this. The first lemma is an easy consequence of the hyperbolicity of G and so we exclude the proof.

Lemma 5.4

There exists \(C>0\) such that

uniformly for \([\widetilde{\gamma }] \in \partial G\).

Using this lemma we obtain the following.

Lemma 5.5

Define, for \(n\in \mathbb {Z}_{\ge 0}\) and \(x\in \mathbb {R}\),

Then, if \(\widetilde{R}_n = N(\sigma ) +O(n^{-1/4}),\) we have that \(R_n = N(\sigma ) +O(n^{-1/4})\).

Proof

Clearly \(R_n(x) \le \widetilde{R}_n(x)\) for all \(x \in \mathbb {R}\) and \(n\in \mathbb {Z}_{\ge 0}\). Also, by the previous lemma and the fact that \(\varphi \) is Lipschitz in the \(d_L\) metric, there exists \(C>0\) independent of x and n such that

for all x, n. Combining these two bounds and applying Lemma 5.3 concludes the proof. \(\square \)

The previous two lemmas show that, without loss of generality, we may assume that the identity element of G belongs to H. We will assume this from now on. We can now construct distributions on Y from which we can deduce the convergence of \(R_n\). Recall that given \(y \in Y\), \(h(y)_n\) for \(n\in \mathbb {Z}_{\ge 0}\) denotes the nth group element in the geodesic ray determined by y.

Definition 5.6

Define distributions

for \(n\in \mathbb {Z}_{\ge 0}\) and \(x\in \mathbb {R}\).

The following lemma shows that to prove Theorem 1.4, it suffices to prove the analogous statement for the distributions \(H_n\).

Lemma 5.7

If \(H_n = N(\sigma ) +O(n^{-1/4})\) then \(R_n = N(\sigma ) +O(n^{-1/4})\).

Proof

It is proven in [4] that h is surjective, see Lemma 3.5.1. Hence there exists \(K>0\) independent of n, x such that

for all \(n \in \mathbb {Z}_{\ge 0}\) and \(x \in \mathbb {R}\). Since \(h_*\widehat{\nu } = \nu \),

and applying Lemmas 5.3 and 5.4 completes the proof. \(\square \)

The next step is to study the \(H_n\). We do this by constructing distributions on \(\cup _i \Sigma _{B_i}\) with respect to \(\mu \) and then, by relating \(\mu \) to \(\widehat{\nu }\), use these to understand the \(H_n\) distributions. To simplify notation, we define, for \(x\in \mathbb {R}\) and \(n\in \mathbb {Z}_{\ge 0}\),

The following lemma along with Proposition 4.6 will allow us to compare the \(\widehat{\nu }\) and \(\mu \) measures.

Lemma 5.8

For any sequence of integers \(k_n\) such that \(k_n\rightarrow \infty \) as \(n\rightarrow \infty \),

where the implied constant is independent of n, x.

Proof

By Lemma 4.8 there exists \(0<\theta <1\) such that for each \(j \in \mathbb {Z}_{\ge 0}\),

where the implied constant is independent of j, n and x. Taking the average of \(\widehat{\nu }_1(E_{n}(x)), \ldots ,\widehat{\nu }_{k_n}(E_n(x))\) and letting \(n\rightarrow \infty \) gives the result. \(\square \)
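The averaging step can be illustrated with a short computation. The sketch below assumes that the error in the estimate from Lemma 4.8 decays like \(\theta ^j\) (an assumption made here for illustration) and checks that the average of \(\theta ^1, \ldots ,\theta ^k\) is at most \(\theta /((1-\theta )k)\), that is, of order \(k^{-1}\). The function names are hypothetical.

```python
def averaged_geometric_error(theta: float, k: int) -> float:
    """Average of theta**j over j = 1, ..., k, for 0 < theta < 1 and k >= 1."""
    return sum(theta ** j for j in range(1, k + 1)) / k


def geometric_average_bound(theta: float, k: int) -> float:
    """Upper bound theta / ((1 - theta) * k), from summing the full geometric series."""
    return theta / ((1.0 - theta) * k)


if __name__ == "__main__":
    for theta in (0.5, 0.9):
        for k in (1, 10, 1000):
            print(theta, k, averaged_geometric_error(theta, k), geometric_average_bound(theta, k))
```

Under this assumption, averaging over \(j \le k_n\) turns exponentially small individual errors into a total error of order \(k_n^{-1}\).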

We now, using work from [7], describe how f distributes over \(\Sigma _A\) with respect to the measure \(\mu \). Along with the previous lemma, this will allow us to deduce the convergence of the \(H_n\) distributions.

Proposition 5.9

There exists \(\sigma ^2 \ge 0\) such that for each \(x \in \mathbb {R}\),

as \(n\rightarrow \infty \) and the above error term is uniform in \(x \in \mathbb {R}\). Furthermore, \(\sigma ^2>0\) if and only if

is non-empty.

Proof

By Proposition 4.2, the measure \(\mu \) is a weighted sum of the measures of maximal entropy \(\mu _i\) on each maximal component \(B_i\). We obtain a central limit theorem, with mean \(\Lambda _i\) and variance \(\sigma _i\), for \(\mu _i\) and f on each \(\Sigma _{B_i}\). Proposition 6.2 from [7] uses an argument of Calegari and Fujiwara to show that \(\Lambda _i\) and \(\sigma _i\) do not depend on the maximal component \(B_i\) (and by assumption \(\Lambda _i =0\) for each \(i=1, \ldots ,m\)). From this and the Berry–Esseen Theorem for subshifts of finite type [8] we obtain the desired central limit theorem, with error term, for \(\mu \) and f. The criterion for positive variance follows from Lemma 7.2 and Proposition 7.7 of [7]. \(\square \)

We are now ready to prove Theorem 1.4.

Proof of Theorem 1.4

By Lemma 5.7 it suffices to prove that for \(x \in \mathbb {R}\)

as \(n\rightarrow \infty \).

We begin by applying Propositions 4.6 and 5.9 to deduce that for any integer valued sequence \(k_n\), with \(k_n\rightarrow \infty \) as \(n\rightarrow \infty \),

as \(n\rightarrow \infty \), uniformly for \(x \in \mathbb {R}\). We then define, for \(n\in \mathbb {Z}_{\ge 0}\) and \(x \in \mathbb {R}\),

If we suppose further that \(k_n = o(\sqrt{n})\), then expression (5.1) implies that

We now note that, by containment,

for all n, \(j \le k_n\) and x. Recall that, by (4.2), \(\sigma ^j_*\widehat{\nu }(C_n^{\pm }(x)) = \sigma ^j_*\widehat{\nu }_j(C_n^{\pm }(x))\) for all n, x. Hence, if we choose \(k_n = \lfloor n^{1/4} \rfloor \), then (5.2) along with inequality (5.3) imply that

and so by Lemma 5.8,

Lastly, using Lemma 5.3 and the fact that, for \(y \in Y\), \(f^n(y_n) = \varphi (h(y)_n) + O(1)\), it is easy to see that

concluding the proof. \(\square \)

Remark 5.10

The \(O(n^{-1/4})\) error term arises because \(\nu \) is supported on Y whereas \(\mu \) is supported on \(\cup _i \Sigma _{B_i}\). To pass the central limit theorem in Proposition 5.9 to one for \(\nu \) and Y, we need to compare the values f takes on Y to the values f takes on \(\cup _i \Sigma _{B_i}\). This comparison introduces an error term that can be seen explicitly as the \(2k_n|f|_\infty n^{-1/2}\) terms in the sets \(C_{n}^\pm (x)\). In the case that A is aperiodic (or irreducible) this term is no longer needed since for any \(y\in Y\), \(\sigma (y)\) belongs to the only (necessarily maximal) component.
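The choice \(k_n = \lfloor n^{1/4} \rfloor \) can be understood as balancing two competing errors. The sketch below is a heuristic model only: it assumes that the shift in the sets \(C_n^\pm (x)\) costs an error of order \(k_n n^{-1/2}\) and that the averaging step costs an error of order \(k_n^{-1}\), ignores all constants, and takes \(k_n = n^a\) without rounding. The function names are hypothetical.

```python
def combined_error(n: float, a: float) -> float:
    """Model error n**(a - 1/2) + n**(-a) for the choice k_n = n**a."""
    return n ** (a - 0.5) + n ** (-a)


def best_exponent(n: float, steps: int = 100) -> float:
    """Exponent a in [0, 1/2], on a grid with steps + 1 points, minimising the model error."""
    candidates = [i / (2 * steps) for i in range(steps + 1)]
    return min(candidates, key=lambda a: combined_error(n, a))


if __name__ == "__main__":
    for n in (10 ** 4, 10 ** 6, 10 ** 8):
        a = best_exponent(n)
        print(n, a, combined_error(n, a))
```

In this model the two terms are equal exactly when \(a = 1/4\), where each is \(n^{-1/4}\). When the first term is absent, as in the aperiodic case, nothing forces \(k_n\) to grow slowly and the model no longer limits the rate to \(n^{-1/4}\).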

In [2] (see also [21]), Bowen and Series provide a geometrical condition for Fuchsian groups and their generating sets that guarantees the existence of a coding \(\Sigma _A\) described by an aperiodic matrix. This condition is satisfied by the fundamental groups of compact hyperbolic surfaces (i.e. surface groups) with presentation

\[\left\langle a_1, b_1, \ldots , a_g, b_g \ \Big | \ \prod _{i=1}^g [a_i,b_i] = 1 \right\rangle \]

where \(g \ge 2\) is the genus of the surface. Free groups equipped with their canonical generating set also satisfy this condition. The above remark then implies the following.

Corollary 5.11

If G and \(\varphi :G \rightarrow \mathbb {R}\) satisfy the hypotheses of Theorem 1.4 and G is a free group or surface group equipped with the generating set described above, then the error term in Theorem 1.4 can be improved to \(O(n^{-1/2})\).
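The free group case of this corollary lends itself to a simple numerical experiment. The following is a minimal sketch, assuming that for the free group \(F_2\) with its canonical generating set the law of \(\gamma _n\) under \(\nu \) is the uniform distribution on reduced words of length n. The homomorphism PHI, with values 1 on a and 0.5 on b, is a hypothetical example; by symmetry it has \(\Lambda = 0\). The code estimates the Kolmogorov distance between the empirical law of \(\varphi (\gamma _n)/\sqrt{n}\) and a centred normal law whose variance is estimated from the sample, so it illustrates the statement rather than verifying the rate.

```python
import math
import random

# Letters of F_2; an upper case letter denotes the inverse generator.
INVERSE = {"a": "A", "A": "a", "b": "B", "B": "b"}
LETTERS = sorted(INVERSE)

# Hypothetical homomorphism phi: F_2 -> R, determined by its values on a and b.
PHI = {"a": 1.0, "A": -1.0, "b": 0.5, "B": -0.5}


def random_reduced_word(n, rng):
    """Sample uniformly a reduced word of length n (no letter follows its inverse)."""
    word = []
    for _ in range(n):
        allowed = [s for s in LETTERS if not word or s != INVERSE[word[-1]]]
        word.append(rng.choice(allowed))
    return word


def phi(word):
    """Value of the homomorphism on a word, as the sum over its letters."""
    return sum(PHI[s] for s in word)


def normal_cdf(x, sigma):
    """Distribution function of a centred normal law with standard deviation sigma > 0."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def distance_to_normal(n, trials, rng):
    """Kolmogorov distance between the empirical law of phi(gamma_n)/sqrt(n), n >= 1,
    and a centred normal law with variance estimated from the sample."""
    samples = sorted(phi(random_reduced_word(n, rng)) / math.sqrt(n) for _ in range(trials))
    sigma = math.sqrt(sum(s * s for s in samples) / trials)
    if sigma == 0.0:
        raise ValueError("degenerate sample: all values are zero")
    gap = 0.0
    for i, s in enumerate(samples):
        cdf = normal_cdf(s, sigma)
        # Compare the normal law with the empirical law just before and at the jump.
        gap = max(gap, abs((i + 1) / trials - cdf), abs(i / trials - cdf))
    return gap


if __name__ == "__main__":
    rng = random.Random(0)
    for n in (25, 100, 400):
        print(n, distance_to_normal(n, 2000, rng))
```

The reported distance also contains the sampling error of the empirical distribution and the lattice effect of a function taking finitely many values on generators, so it should be read as a qualitative check only.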

Remark 5.12

It seems plausible that the optimal error term in Theorem 1.4 is \(O(n^{-1/2})\); however, the author has not pursued this.

References

Barge, J., Ghys, É.: Surfaces et cohomologie bornée. Invent. Math. 92, 509–526 (1988)

Bowen, R., Series, C.: Markov maps associated with Fuchsian groups. Publ. Math. Inst. Hautes Études Sci. 50, 153–170 (1979)

Brooks, R.: Some remarks on bounded cohomology. In: Riemann Surfaces and Related Topics: Proceedings of the 1978 Stony Brook Conference (State Univ. New York, Stony Brook, NY, 1978), Annals of Mathematics Studies 97, Princeton University Press, Princeton, pp. 53–63 (1981)

Calegari, D.: The ergodic theory of hyperbolic groups. In: Geometry and Topology Down Under. Contemp. Math. 597, 15–52 (2013)

Calegari, D., Fujiwara, K.: Combable functions, quasimorphism, and the central limit theorem. Ergod. Theory Dyn. Syst. 30, 1343–1369 (2009)

Cannon, J.: The combinatorial structure of cocompact discrete hyperbolic groups. Geom. Dedic. 16, 123–148 (1984)

Cantrell, S.: Statistical limit laws for hyperbolic groups (2019). arXiv:1905.08147 [math.DS]

Coelho, Z., Parry, W.: Central limit asymptotics for shifts of finite type. Isr. J. Math. 69, 235–249 (1990)

Coornaert, M.: Mesures de Patterson-Sullivan sur le bord d’un espace hyperbolique au sens de Gromov. Pac. J. Math. 159, 241–270 (1993)

Epstein, D., Fujiwara, K.: The second bounded cohomology of word-hyperbolic groups. Topology 36, 1275–1289 (1997)

Gekhtman, I., Taylor, S., Tiozzo, G.: Counting loxodromics for hyperbolic actions. J. Topol. 11, 379–419 (2018)

Ghys, É., de la Harpe, P.: Sur les groupes hyperboliques d’après Mikhael Gromov, Progress in Mathematics 83 (1990)

Horsham, M.: Central limit theorems for quasimorphisms of surface groups, Ph.D. Thesis, Manchester (2008)

Kapovich, I., Nagnibeda, T.: The Patterson-Sullivan embedding and minimal volume entropy for outer space. Geom. Funct. Anal. 17, 1201–1236 (2007)

Parry, W.: Intrinsic Markov chains. Trans. Am. Math. Soc. 112, 55–66 (1964)

Parry, W., Pollicott, M.: Zeta functions and periodic orbit structure of hyperbolic dynamics. Astérisque 186–187, 1–268 (1990)

Picaud, J.: Cohomologie bornée des surfaces et courants géodésiques. Bulletin de la Société Mathématique de France 125, 115–142 (1997)

Pollicott, M., Sharp, R.: Comparison theorems and orbit counting in hyperbolic geometry. Trans. Am. Math. Soc. 350, 473–499 (1998)

Pollicott, M., Sharp, R.: Poincaré series and comparison theorems for variable negative curvature. In: Turaev, V., Vershik, A. (eds.) Topology, Ergodic Theory, Real Algebraic Geometry: Rokhlin’s Memorial, pp. 229–240 (2001)

Pollicott, M., Sharp, R.: Statistics of matrix products in hyperbolic geometry. In: Dynamical Numbers: Interplay Between Dynamical Systems and Number Theory. Contemp. Math. 532, 213–230 (2011)

Series, C.: Geometrical Markov coding on surfaces of constant negative curvature. Ergod. Theory Dyn. Syst. 4, 601–625 (1986)


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cantrell, S. Typical behaviour along geodesic rays in hyperbolic groups. Math. Z. 297, 711–727 (2021). https://doi.org/10.1007/s00209-020-02529-1
