Abstract

We prove a theorem which gives a bijection between the support \(\tau \)-tilting modules over a given finite-dimensional algebra A and the support \(\tau \)-tilting modules over A / I, where I is the ideal generated by the intersection of the center of A and the radical of A. This bijection is both explicit and well-behaved. We give various corollaries of this, with a particular focus on blocks of group rings of finite groups. In particular we show that there are \(\tau \)-tilting-finite wild blocks with more than one simple module. We then go on to classify all support \(\tau \)-tilting modules for all algebras of dihedral, semidihedral and quaternion type, as defined by Erdmann, which include all tame blocks of group rings. Note that since these algebras are symmetric, this is the same as classifying all basic two-term tilting complexes, and it turns out that a tame block has at most 32 different basic two-term tilting complexes. We do this by using the aforementioned reduction theorem, which reduces the problem to ten different algebras only depending on the ground field k, all of which happen to be string algebras. To deal with these ten algebras we give a combinatorial classification of all \(\tau \)-rigid modules over (not necessarily symmetric) string algebras.

1 Introduction

The theory of support \(\tau \)-tilting modules, as introduced by Adachi, Iyama and Reiten in [4], is related to, and to some extent generalizes, several classical concepts in the representation theory of finite dimensional algebras.

On the one hand, it is related to silting theory for triangulated categories, which was introduced by Keller and Vossieck in [23] and provides a generalization of tilting theory. Just like tilting objects, silting objects generate the triangulated category they live in, but in contrast to tilting objects they are allowed to have negative self-extensions. Using Keller’s version [22] of Rickard’s derived Morita theorem, a silting object S in an algebraic triangulated category \({\mathcal T}\) gives rise to an equivalence between \({\mathcal T}\) and the perfect complexes over the derived endomorphism ring \(\mathbf {R}{\text {End}}_{{\mathcal T}}(S)\). This ring is a non-positively graded DGA, which can however be very hard to present in a reasonable way (see for example [26]).

On the other hand, \(\tau \)-tilting theory is related to mutation theory, which has its origins in the Bernstein–Gelfand–Ponomarev reflection functors. The basic idea is to replace an indecomposable summand of a tilting object by a new summand to obtain a new tilting object. This mutation procedure has played an important role in several results concerning Broué’s abelian defect group conjecture, see [19, 25, 28]. However, it is not always possible to replace a summand of a tilting object and get a new tilting object in return, which may be seen as a sign that one needs to consider a larger class of objects. This is why Aihara and Iyama introduced the concept of silting mutation [6], where one observes quite the opposite behavior: any summand of a silting object can be replaced to get (infinitely) many new silting objects, and among all of those possibilities one is distinguished as the “right mutation” and another one as the “left mutation”. So in this setting it is natural to ask whether the action of iterated silting mutation on the set of basic silting objects in \({\mathcal K}^b(\mathbf {proj}_A)\) is transitive (for an explicit reference, see Question 1.1 in [6]). In general this question is hard, but to make it more manageable, one can start by studying not all of the basic silting complexes, but just the two-term ones. These have the benefit of being amenable to the theory of support \(\tau \)-tilting modules mentioned above.

A support \(\tau \)-tilting module M is a module which satisfies \({{\mathrm{Hom}}}_{A}(M,\tau M)=0\) and which has as many non-isomorphic indecomposable summands as it has non-isomorphic simple composition factors. These modules correspond bijectively to two-term silting complexes, and possess a compatible mutation theory as well. Using \(\tau \)-tilting theory, the computation of all the two-term silting complexes and their mutations is a lot more manageable, and in nice cases, one can deduce from the finiteness of the number of two-term silting complexes, the transitivity of iterated silting mutation.

In this article, we will be concerned with determining all basic two-term silting complexes (or equivalently support \(\tau \)-tilting modules) for various finite dimensional algebras A defined over an algebraically closed field. To this end, we prove the following very general reduction theorem:

Theorem 1

(see Theorem 11) For an ideal I which is generated by central elements and contained in the Jacobson radical of A, the g-vectors of indecomposable \(\tau \)-rigid (respectively support \(\tau \)-tilting) modules over A coincide with the ones for A / I, as do the mutation quivers.

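For the purpose of this introduction we will call an algebra obtained from A by taking successive central quotients a good quotient of A. The proof of the theorem is an application of a four-term exact sequence

$$\begin{aligned} 0 \rightarrow {{\mathrm{Hom}}}_{{\mathcal C}^b(\mathbf {proj}_A)}(C(\alpha ),C(\beta )) \rightarrow {{\mathrm{Hom}}}_A(P,R) \times {{\mathrm{Hom}}}_A(Q,S) \rightarrow {{\mathrm{Hom}}}_A(P,S) \rightarrow {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C(\alpha ),C(\beta )[1]) \rightarrow 0, \end{aligned}$$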

which is constructed in Proposition 3, where P, Q, R and S are projective modules, and \(C(\alpha )\) and \(C(\beta )\) are two-term complexes with terms P and Q, respectively R and S. The power of this theorem lies in its generality. For example, as an immediate corollary, we recover a result of [3] saying that the mutation quiver and the g-vectors of a Brauer graph algebra do not depend on the multiplicities of the exceptional vertices, without having to classify all \(\tau \)-tilting modules beforehand.

One can often use Theorem 1 to effectively compute all two-term silting complexes over a given algebra. In fact, it turns out that many algebras of interest such as all special biserial algebras, in particular all Brauer graph algebras, and all algebras of dihedral, semidihedral and quaternion type have a string algebra as a good quotient. Thus by Theorem 1 it is enough to consider string algebras for all aforementioned classes of algebras. In Sect. 5 we give a combinatorial algorithm to determine the indecomposable \(\tau \)-rigid modules, the support \(\tau \)-tilting modules and the mutation quiver of a string algebra, provided it is \(\tau \)-tilting-finite i.e. there are only a finite number of isomorphism classes of \(\tau \)-tilting modules (otherwise one still gets a description, but no algorithm for obvious reasons).

As an application, in Sect. 6, we consider blocks of group algebras. Note that because these algebras are symmetric, silting and tilting complexes coincide. We show that all tame blocks are \(\tau \)-tilting-finite, and we give non-trivial (i.e. non-local) examples of wild blocks of (in some sense) arbitrarily large defect which are \(\tau \)-tilting-finite.

For tame blocks, there is a list of algebras containing all possible basic algebras of these blocks, which is due to Erdmann [14]. It turns out that all algebras of dihedral, semidihedral and quaternion type (which are the algebras that Erdmann classifies) have a string algebra as good quotient, and we exploit this to determine the g-vectors and Hasse quivers (of the poset of support \(\tau \)-tilting modules) of all of them. In particular, we obtain the following theorem.

Theorem 2

(see Theorem 16) All algebras of dihedral, semidihedral or quaternion type are \(\tau \)-tilting-finite and their g-vectors and Hasse quivers (of the poset of support \(\tau \)-tilting modules) are independent of the characteristic of k and the parameters involved in the presentations of their basic algebras.

The actual computation of the g-vectors and Hasse quivers, which we present in the form of several tables, has been relegated to Appendix 7.

Using a result of Aihara and Mizuno [7], we deduce the following theorem:

Theorem 3

All tilting complexes over an algebra of dihedral, semidihedral or quaternion type can be obtained from A (as a module over itself) by iterated tilting mutation.

This implies in particular that if B is another algebra and \(X\in \mathcal D^b(A^\mathrm{op} \otimes B)\) is a two-sided tilting complex, then there exists a sequence of algebras \(A=A_0,\ A_1, \ldots , A_n=B\) and two-sided two-term tilting complexes \(X_i\in \mathcal D^b(A_{i-1}^\mathrm{op}\otimes A_{i})\) such that \(X\cong X_1\otimes _{A_1}^{L} \cdots \otimes _{A_{n-1}}^L X_{n}\).

2 Preliminaries

Throughout this paper, k denotes an algebraically closed field of arbitrary characteristic, and A is a basic finite-dimensional k-algebra with Jacobson radical \({\text {rad}}(A)\). The category of finitely generated right A-modules is denoted by \(\mathbf {mod}_A\) and the subcategory of finitely generated projective A-modules is denoted by \(\mathbf {proj}_A\). Let \(P_1, \ldots , P_l\) denote the non-isomorphic projective indecomposable A-modules. By \(\tau \) we denote the Auslander–Reiten translate for A. The category of bounded complexes of projective modules is denoted by \({\mathcal C}^b(\mathbf {proj}_A)\). Moreover, \({\mathcal K}^b(\mathbf {proj}_A)\) denotes the corresponding homotopy category and \(K_0(\mathbf {proj}_A)\) denotes its Grothendieck group. For any \(M \in \mathbf {mod}_A\), |M| is defined as the number of indecomposable direct summands of M. We will use the same notation for complexes.

We will now give a short summary of the theory of silting complexes and the theory of support \(\tau \)-tilting modules introduced in [4].

2.1 Two-term silting complexes

Definition 1

A complex \(C=C^{\bullet } \in {\mathcal K}^b(\mathbf {proj}_A)\) is called two-term if \(C^i =0\) for all \(i \ne 0,-1\).

Definition 2

A complex \(C \in {\mathcal K}^b(\mathbf {proj}_A)\) is called

1. presilting if \({{\mathrm{Hom}}}_{ {\mathcal K}^b(\mathbf {proj}_A)}(C,C[i])=0\) for \(i>0\),

2. silting if it is presilting and generates \({\mathcal K}^b(\mathbf {proj}_A)\).

It can be shown that a silting complex has exactly |A| summands.

Remark 1

A two-term presilting complex is also known as a rigid two-term complex. These terms will be used interchangeably.

On the set of basic silting complexes, one can define a partial order as follows:

Theorem 4

[6, Theorem 2.11] For basic silting complexes C and D, we write \(D \le C\) if

$$\begin{aligned} {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C,D[i])=0 \end{aligned}$$

for all \(i>0\). This defines a partial order on the set of silting complexes.

Let us denote the Hasse quiver of this poset by H(A). Now let \(C=D \oplus E\) be a basic silting complex with D indecomposable. Then there is a triangle (in \(\mathcal K^b(\mathbf {proj}_A)\))

$$\begin{aligned} D \xrightarrow {f} E' \rightarrow D' \rightarrow D[1] \end{aligned}$$

such that f is a minimal left \(\mathtt{add}\ E\)-approximation of D.

Definition 3

The left mutation of C with respect to D is defined to be

$$\begin{aligned} \mu _{D}^-(C) := D' \oplus E. \end{aligned}$$

The right mutation \(\mu _{D}^+(C)\) is defined dually.

We denote by Q(A) the left mutation quiver of A with vertices corresponding to basic silting complexes, there being an arrow \(C \rightarrow C'\) whenever \(C'=\mu _{D}^-(C)\) for some indecomposable direct summand D of C.

Remark 2

For symmetric algebras, silting complexes are in fact tilting complexes, so Q(A) is the mutation quiver of tilting complexes.

Theorem 5

[6, Theorem 2.35] The quivers H(A) and Q(A) are the same.

In general, this quiver can be disconnected and has no regularity properties. However, if we restrict our attention to basic two-term silting complexes, then more structure appears. In fact, using the theory of support \(\tau \)-tilting modules, one can prove the following theorem.

Theorem 6

[4, Corollary 3.8] Any basic two-term rigid complex C with \(| C |=|A|-1\) is a direct summand of exactly two basic two-term silting complexes. Moreover, if two basic two-term silting complexes C and D have \(|A|-1\) summands in common, then C is a left or right mutation of D.

This means that if we denote by \(Q_2(A)\) the full subquiver of Q(A) containing the vertices corresponding to basic two-term silting complexes, then we get an |A|-regular graph. With an eye towards explicit calculations, the following properties are very useful.

Proposition 1

[4, Corollary 3.10] If \(Q_2(A)\) has a finite connected component C, then \(Q_2(A)=C\).

In some cases, finiteness of \(Q_2(A)\) implies that for every n, there are only finitely many n-term silting complexes.

Proposition 2

[7, Theorem 2.4][3, Proposition 6.9] Let A be a symmetric algebra. If for any tilting complex C in the connected component of Q(A) containing A, the set of basic two-term \({\text {End}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C)\)-tilting complexes is finite, then for every n, the set of basic n-term A-tilting complexes is finite.

Theorem 7

[5, Theorem 3.5] If for every n, there are only finitely many isomorphism classes of basic n-term silting complexes, then Q(A) is connected, i.e. mutation acts transitively on basic silting complexes.

Rigid two-term complexes have a complete numerical invariant.

Theorem 8

[4, Theorem 5.5] A two-term rigid complex C is uniquely determined by its class \([C] \in K_0(\mathbf {proj}_A)\).

Expanding out [C] in terms of the basis \([P_1], \ldots , [P_l]\), we get

$$\begin{aligned} [C]=\sum _{i=1}^{l} g_i^{C}\,[P_i]. \end{aligned}$$

The tuple \(g^{C}=(g_1^{C},\ldots ,g_l^{C})\) is known as the g-vector of C. So Theorem 8 says that two-term rigid complexes are uniquely determined by their g-vectors.
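The bookkeeping behind g-vectors can be illustrated with a minimal Python sketch. It assumes the usual alternating-sum convention \([C]=[C^0]-[C^{-1}]\), so that a two-term complex \(P \rightarrow Q\) has class \([Q]-[P]\). The function names and the encoding of a projective module as a list of indices of its indecomposable summands are hypothetical choices made only for this illustration. The second function recovers the terms from the g-vector under the additional assumption that P and Q share no indecomposable summand.

```python
from collections import Counter


def g_vector(p_summands, q_summands, l):
    """g-vector of a two-term complex P -> Q (P in degree -1, Q in degree 0).

    p_summands and q_summands list the indices i in 1..l of the indecomposable
    summands P_i of P and of Q, repeated according to multiplicity.
    Assumed convention: [C] = [Q] - [P].
    """
    p_count, q_count = Counter(p_summands), Counter(q_summands)
    return tuple(q_count[i] - p_count[i] for i in range(1, l + 1))


def terms_from_g_vector(g):
    """Recover (P, Q) from g, assuming P and Q have no common summand."""
    p = [i for i, gi in enumerate(g, start=1) for _ in range(max(-gi, 0))]
    q = [i for i, gi in enumerate(g, start=1) for _ in range(max(gi, 0))]
    return p, q


# Example with l = 3: the complex P_1 + P_1 -> P_2 + P_3.
g = g_vector([1, 1], [2, 3], l=3)
terms = terms_from_g_vector(g)
```

Negative entries of the g-vector record summands of the degree \(-1\) term and positive entries record summands of the degree 0 term, which is why the terms can be read off once common summands are excluded.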

2.2 Support \(\tau \)-tilting modules

Definition 4

A module \(M \in \mathbf {mod}_A\) is called

1. \(\tau \)-rigid if \({{\mathrm{Hom}}}_A(M,\tau M)=0\),

2. \(\tau \)-tilting if it is \(\tau \)-rigid and \(|M|=|A|\),

3. support \(\tau \)-tilting if there is an idempotent \(e \in A\) such that M is a \(\tau \)-tilting A / (e)-module.

We will often think of a support \(\tau \)-tilting module as a pair \((M,e\cdot A)\), and say that it is basic if both M and \(e\cdot A\) are basic. Also, direct sums are defined componentwise.

For basic support \(\tau \)-tilting modules, there is again a notion of mutation, and one can then similarly define a left mutation quiver \(Q_{\tau }(A)\). Also, there is a partial order on this set giving rise to a Hasse quiver \(H_{\tau }(A)\). For details, see [4, Sect. 2.4]. These quivers are again the same (see [4, Corollary 2.34]) and isomorphic to \(Q_2(A)\), as the following shows:

Theorem 9

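[4, Theorem 3.2, Corollary 3.9] There are mutually inverse functions

$$\begin{aligned} \{\text {basic two-term silting complexes for } A\} \longleftrightarrow \{\text {basic support } \tau \text {-tilting } A\text {-modules}\} \end{aligned}$$

which are defined in the following way:

$$\begin{aligned} C \mapsto H^0(C), \qquad M \mapsto \left( P\oplus R \xrightarrow {(p\ \, 0)} Q\right) , \end{aligned}$$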

where \(P \xrightarrow {p} Q \rightarrow M\) is a minimal projective presentation of M, and R is the (uniquely determined, up to isomorphism) basic projective module such that (M, R) is a support \(\tau \)-tilting pair. Moreover, this bijection gives an isomorphism of posets between the left mutation quivers \(Q_2(A)\) and \(Q_{\tau }(A)\).

3 Geometry of two-term complexes of projective modules

In this section we construct an exact sequence which will be useful for proving our first main theorem. It also serves to provide an elementary proof of Theorem 8.

For two fixed projective A-modules P and Q, \({{\mathrm{Hom}}}_A(P,Q)\) can be considered as an algebraic variety, isomorphic to affine space. The connected algebraic group \(G={{\mathrm{Aut}}}_A P \times {{\mathrm{Aut}}}_A Q\) acts on \({{\mathrm{Hom}}}_A(P,Q)\), in such a way that there is a bijection between the set of isomorphism classes of two-term complexes in \({\mathcal C}^b(\mathbf {proj}_A)\) with terms P and Q, and the set of orbits of G in \({{\mathrm{Hom}}}_A(P,Q)\). For a morphism of smooth algebraic varieties \(f:X \rightarrow Y\) and a point \(x \in X\), we denote by \(d(f)_x:T_xX \rightarrow T_{f(x)}Y\) the differential of f at x, where \(T_x X\) and \(T_{f(x)}Y\) denote the tangent spaces of X (respectively Y) at the point x (respectively f(x)). In the specific case where X is the algebraic group \({{\mathrm{Aut}}}_A(P) \times {{\mathrm{Aut}}}_A(Q)\) and \(e \in X\) is the identity, \(T_e X = {{\mathrm{Hom}}}_A(P,P) \times {{\mathrm{Hom}}}_A(Q,Q)\).

When we consider \(\alpha \in {{\mathrm{Hom}}}_A(P,Q)\) as a complex in \({\mathcal C}^b(\mathbf {proj}_A)\), we will denote it as \(C(\alpha )\). The orbit of \(\alpha \in {{\mathrm{Hom}}}_A(P,Q)\) will be denoted by \(G \cdot \alpha \) and its stabilizer by \(G_{\alpha }\).

Proposition 3

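For \(\alpha \in {{\mathrm{Hom}}}_A(P,Q)\) and \(\beta \in {{\mathrm{Hom}}}_A(R,S)\) (P, Q, R, S projective A-modules), there is an exact sequence

$$\begin{aligned} 0 \rightarrow {{\mathrm{Hom}}}_{{\mathcal C}^b(\mathbf {proj}_A)}(C(\alpha ),C(\beta )) \rightarrow {{\mathrm{Hom}}}_A(P,R) \times {{\mathrm{Hom}}}_A(Q,S) \xrightarrow {f_{\alpha ,\beta }} {{\mathrm{Hom}}}_A(P,S) \xrightarrow {g} {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C(\alpha ),C(\beta )[1]) \rightarrow 0 \end{aligned}$$

where

$$\begin{aligned} f_{\alpha ,\beta }(X,Y) = Y\circ \alpha - \beta \circ X \end{aligned}$$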

and g is the natural map (we have \(C(\alpha )^{-1} = P\) and \(C(\beta )[1]^{-1}=C(\beta )^{0}=S\), so we may view an element of \({{\mathrm{Hom}}}_A(P,S)\) as a map of chain complexes).

Proof

The only place where exactness is not immediately clear is at the \({{\mathrm{Hom}}}_A(P,S)\) term. The kernel of g consists of all \(\gamma \in {{\mathrm{Hom}}}_A(P,S)\) such that \(g(\gamma )\) is homotopic to zero. This is exactly the image of \(f_{\alpha ,\beta }\) (the negative sign in the definition of \(f_{\alpha ,\beta }\) does not affect the image).

In Corollary 1 we will use basic properties of algebraic varieties equipped with an action of an algebraic group, which are summarised in the following proposition.

Proposition 4

Let X be an algebraic variety equipped with an algebraic action of a connected algebraic group G and let \(x \in X\). Then:

1. the stabilizer subgroup \(G_x\) is closed,

2. the orbit \(G \cdot x\) is a locally closed, smooth, connected subvariety of X,

3. the dimension of \(G \cdot x\) can be computed via:

$$\begin{aligned} \dim G \cdot x= \dim G - \dim G_x. \end{aligned}$$(3.1)

Proof

Since G is connected, it is also irreducible by [11, Proposition 1.2(b)], and so is the orbit \(G \cdot x\), since it is the image of G under \(\phi (x):G\longrightarrow G\cdot x: g \mapsto g\cdot x\). By [11, Proposition 6.7] \(G\cdot x\) is smooth and locally closed in X, and \(\phi (x)\) is an “orbit map” (defined earlier in the same section) for the action of \(G_x\) on G by right translation. Then [11, Proposition 6.4(b)] applied to this orbit map yields (3.1).

Corollary 1

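With the same notation as in Proposition 3, if \(\alpha =\beta \in {{\mathrm{Hom}}}_A(P,Q)\), let again \(G={{\mathrm{Aut}}}_A(P)\times {{\mathrm{Aut}}}_A(Q)\) and

$$\begin{aligned} \phi _\alpha : G \rightarrow {{\mathrm{Hom}}}_A(P,Q), \quad (g_P,g_Q) \mapsto g_Q\circ \alpha \circ g_P^{-1}. \end{aligned}$$

Then \(d(\phi _\alpha )_{e}=f_{\alpha ,\alpha }\) (where e denotes the unit element of G), and we get

$$\begin{aligned} T_{\alpha }(G\cdot \alpha ) = {\text {im}}\, f_{\alpha ,\alpha }. \end{aligned}$$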

Proof

That \(f_{\alpha ,\alpha }\) can be identified with the differential at the identity of the orbit map is clear.

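Let us consider the last statement. We should first remark that the equality \(G_\alpha ={{\mathrm{Aut}}}_{\mathcal C^b(\mathbf {proj}_A)}(C(\alpha ))\) follows immediately from the definition, and hence \(\dim G_\alpha = \dim {\text {End}}_{\mathcal C^b(\mathbf {proj}_A)}(C(\alpha ))\). Since orbits are smooth by Proposition 4(2), we find

$$\begin{aligned} \dim T_{\alpha }(G\cdot \alpha )&= \dim G\cdot \alpha \\&= \dim G - \dim G_\alpha \\&= \dim \left( {{\mathrm{Hom}}}_A(P,P)\times {{\mathrm{Hom}}}_A(Q,Q)\right) - \dim {\text {End}}_{\mathcal C^b(\mathbf {proj}_A)}(C(\alpha ))\\&= \dim {\text {im}}\, f_{\alpha ,\alpha }, \end{aligned}$$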

where we used Proposition 4(3) in the second line, and also the exactness statement from Proposition 3 in the last equality. By the identification of \(f_{\alpha ,\alpha }\) and \(d(\phi _{\alpha })_e\), we are done.

Using this proposition we can easily reprove the following well-known results by Jensen–Su–Zimmermann in the special case of two-term complexes.

Lemma 1

[21, Lemma 4.5] A two-term complex \(C(\alpha ) \in {\mathcal K}^b(\mathbf {proj}_A)\) with terms P and Q is rigid if and only if the orbit \(G \cdot \alpha \) is open (and thus dense) in \({{\mathrm{Hom}}}_A(P,Q)\).

Proof

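By Proposition 4, orbits are locally closed and smooth, so they are open exactly when there is a point \(x \in G \cdot \alpha \) such that \(\dim T_x(G \cdot \alpha )=\dim T_x {{\mathrm{Hom}}}_A(P,Q)\). By Proposition 3, there is an isomorphism

$$\begin{aligned} {{\mathrm{Hom}}}_A(P,Q)/{\text {im}}\, f_{\alpha ,\alpha } \cong {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C(\alpha ),C(\alpha )[1]) \end{aligned}$$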

and hence the lemma follows. Note that the denseness of \(G \cdot \alpha \) follows from the fact that \({{\mathrm{Hom}}}_A(P,Q)\) is an affine space, and hence irreducible.
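The open-orbit criterion can be checked by hand in the simplest case \(A=k\), where \(P=k^m\), \(Q=k^n\), \({{\mathrm{Hom}}}_A(P,Q)\) is the space of \(n\times m\) matrices and the orbits of \(G=GL_m\times GL_n\) are the sets of matrices of a fixed rank. The following Python sketch is only an illustration under stated assumptions: it works over the rational numbers as a stand-in for k, it uses the map \((X,Y)\mapsto Y\alpha -\alpha X\) as the differential of the orbit map (a sign convention that does not affect the image), and all function names are hypothetical. It computes the dimension of the image of this map and declares \(\alpha \) rigid when the image is all of \({{\mathrm{Hom}}}_A(P,Q)\).

```python
from fractions import Fraction
from itertools import product


def rank(rows):
    """Rank over Q of a matrix given as a list of rows (Gaussian elimination)."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def elementary(size, i, j):
    """The size x size matrix with a single 1 in position (i, j)."""
    return [[int((r, c) == (i, j)) for c in range(size)] for r in range(size)]


def tangent_dim(alpha):
    """Dimension of the image of (X, Y) -> Y*alpha - alpha*X, alpha an n x m matrix."""
    n, m = len(alpha), len(alpha[0])
    images = []
    for i, j in product(range(m), repeat=2):  # basis vectors (X, 0)
        img = matmul(alpha, elementary(m, i, j))
        images.append([-x for row in img for x in row])
    for i, j in product(range(n), repeat=2):  # basis vectors (0, Y)
        img = matmul(elementary(n, i, j), alpha)
        images.append([x for row in img for x in row])
    return rank(images)


def is_rigid(alpha):
    """Open-orbit criterion: the tangent space of the orbit fills Hom(P, Q)."""
    return tangent_dim(alpha) == len(alpha) * len(alpha[0])


rank_one = [[1, 0, 0], [0, 0, 0]]   # a 2 x 3 matrix of rank 1
full_rank = [[1, 0, 0], [0, 1, 0]]  # a 2 x 3 matrix of rank 2
```

In this toy case the computed dimension agrees with the classical formula \(r(m+n-r)\) for the dimension of the set of \(n\times m\) matrices of rank r, so only the matrices of full rank have an open orbit.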

We can use this lemma to obtain an alternative proof of Theorem 8. Note that a slightly weaker form of this theorem was also obtained in [21, Corollary 4.8].

Theorem 10

Two-term rigid complexes in \({\mathcal K}^b(\mathbf {proj}_A)\) are determined up to isomorphism by their g-vectors.

Proof

We first show that any two-term rigid complex C is isomorphic in \({\mathcal K}^b(\mathbf {proj}_A)\) to a complex with terms having no direct summands in common. So assume C can be represented by a complex

$$\begin{aligned} 0 \rightarrow P \xrightarrow {d} Q \rightarrow 0 \end{aligned}$$

which is minimal with respect to the number of direct summands of P and Q. This minimality ensures that \({\text {im}}\,d \subseteq {\text {rad}}(Q)\). To now prove that P and Q have no summands in common, it suffices to show that the image of any morphism \(f:P \rightarrow Q\) is contained in \({\text {rad}}(Q)\). By rigidity, there exist \(h_P \in {\text {End}}_A(P)\) and \(h_Q \in {\text {End}}_A(Q)\) such that

$$\begin{aligned} f = d\circ h_P + h_Q\circ d. \end{aligned}$$

But since \({\text {im}}\,d \subseteq {\text {rad}}(Q)\), also \({\text {im}}\,f \subseteq {\text {rad}}(Q)\).

Now let \(C(\alpha )\) and \(C(\beta )\) denote two-term rigid complexes, both with terms P and Q. Then by Lemma 1, the orbits \(G \cdot \alpha \) and \(G \cdot \beta \) are dense in \({{\mathrm{Hom}}}_A(P,Q)\), so they intersect and we get an isomorphism \(C(\alpha ) \cong C(\beta )\) in \({\mathcal C}^b(\mathbf {proj}_A)\). In particular, we find that two-term rigid complexes are uniquely determined by their terms.

Since we know by the first part of the proof that P and Q do not have any summands in common, the class \([C(\alpha )] \in K_0(\mathbf {proj}_A)\) already suffices to determine \(C(\alpha )\) up to isomorphism, which is exactly what we needed to prove.

4 Quotients by a centrally generated ideal

Suppose \(z \in Z(A)\cap {\text {rad}}(A)\) is an element such that \(z^2=0\) and consider the ideal \(I=(z)\) of A. By \(\bar{P_1}, \ldots , \bar{P_l}\) we denote the projective indecomposable \(\bar{A}=A/I\) modules. Note that since \(z \in {\text {rad}}(A)\), the number of projectives is the same.

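We know that \({{\mathrm{Hom}}}_A(P_i, P_j)\) is isomorphic to \(e_jAe_i\), and under this isomorphism the kernel of the natural epimorphism

$$\begin{aligned} {{\mathrm{Hom}}}_A(P_i, P_j) \twoheadrightarrow {{\mathrm{Hom}}}_{\bar{A}}(\bar{P_i}, \bar{P_j}), \quad \alpha \mapsto \bar{\alpha } \end{aligned}$$

corresponds to \(e_jIe_i\). Since \(I=(z)\) we therefore have for any \(\alpha \in {{\mathrm{Hom}}}_A(P_i, P_j)\)

$$\begin{aligned} \bar{\alpha } = 0 \iff \alpha = z\cdot \alpha ' \text { for some } \alpha ' \in {{\mathrm{Hom}}}_A(P_i, P_j). \end{aligned}$$(4.1)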

The following is our main reduction theorem, which will turn out to be very powerful in the remainder of this article.

Theorem 11

For an ideal \(I \subseteq (Z(A)\cap {\text {rad}}(A))\cdot A\) of A, the g-vectors of two-term rigid (respectively silting) complexes for A coincide with the ones for A / I, as do the mutation quivers.

Proof

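It suffices to consider \(I=(z)\) a principal ideal, with \(z \in Z(A)\) such that \(z^2=0\). From Proposition 3, we know that for all \(\alpha \in {{\mathrm{Hom}}}_A(P,Q)\), and \(\beta \in {{\mathrm{Hom}}}_A(R,S)\) (P, Q, R, S projective A-modules) there is a commutative diagram with exact rows:

$$\begin{array}{ccccccc} {{\mathrm{Hom}}}_A(P,R) \times {{\mathrm{Hom}}}_A(Q,S) & \xrightarrow {f_{\alpha ,\beta }} & {{\mathrm{Hom}}}_A(P,S) & \rightarrow & {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C(\alpha ),C(\beta )[1]) & \rightarrow & 0 \\ \downarrow \phi & & \downarrow \psi & & \downarrow & & \\ {{\mathrm{Hom}}}_{\bar{A}}(\bar{P},\bar{R}) \times {{\mathrm{Hom}}}_{\bar{A}}(\bar{Q},\bar{S}) & \xrightarrow {f_{\bar{\alpha },\bar{\beta }}} & {{\mathrm{Hom}}}_{\bar{A}}(\bar{P},\bar{S}) & \rightarrow & {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_{\bar{A}})}(C(\bar{\alpha }),C(\bar{\beta })[1]) & \rightarrow & 0 \end{array}$$

Here \(\phi \) and \(\psi \) denote the natural maps induced by reduction modulo I; both are surjective because the modules involved are projective. Since \(\psi \) is surjective, by commutativity of the rightmost square, so is the rightmost vertical arrow. This ensures that if \({{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C(\alpha ),C(\beta )[1])=0\), also \({{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_{\bar{A}})}(C(\bar{\alpha }),C(\bar{\beta })[1])=0\).

The other way round, if \({{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_{\bar{A}})}(C(\bar{\alpha }),C(\bar{\beta })[1])=0\), we claim that also \({{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_{A})}(C(\alpha ),C(\beta )[1])=0\). From the exact sequence, we see that \(f_{\bar{\alpha },\bar{\beta }}\) is surjective, and it suffices to prove that \(f_{\alpha ,\beta }\) is also surjective. Let \(\gamma \in {{\mathrm{Hom}}}_A(P,S)\) be arbitrary, then there exists an element \((X,Y) \in {{\mathrm{Hom}}}_A(P,R) \times {{\mathrm{Hom}}}_A(Q,S)\) such that

$$\begin{aligned} \psi (\gamma ) = f_{\bar{\alpha },\bar{\beta }}(\phi (X,Y)) = \psi (f_{\alpha ,\beta }(X,Y)), \end{aligned}$$

so \(\gamma -f_{\alpha ,\beta }(X,Y) \in {\text {ker}}\psi \), and therefore, by (4.1),

$$\begin{aligned} \gamma - f_{\alpha ,\beta }(X,Y) = z\cdot \gamma ' \end{aligned}$$

for some \(\gamma ' \in {{\mathrm{Hom}}}_A(P,S)\). Using surjectivity of \(f_{\bar{\alpha },\bar{\beta }} \circ \phi \) again, there exists \((X',Y') \in {{\mathrm{Hom}}}_A(P,R) \times {{\mathrm{Hom}}}_A(Q,S)\) such that

$$\begin{aligned} \psi (\gamma ') = f_{\bar{\alpha },\bar{\beta }}(\phi (X',Y')) = \psi (f_{\alpha ,\beta }(X',Y')). \end{aligned}$$

Thus we find that

$$\begin{aligned} \gamma = f_{\alpha ,\beta }(X,Y) + z\cdot \gamma ' = f_{\alpha ,\beta }(X,Y) + z\cdot f_{\alpha ,\beta }(X',Y') = f_{\alpha ,\beta }(X+zX',Y+zY'), \end{aligned}$$

where we used that \(z \in Z(A)\), and that \(z\cdot (\gamma ' - f_{\alpha ,\beta }(X',Y'))=0\) because \(\gamma ' - f_{\alpha ,\beta }(X',Y') \in {\text {ker}}\psi \) is a multiple of z by (4.1) and \(z^2=0\). Thus \(f_{\alpha ,\beta }\) is surjective.

We conclude that for all \(\alpha \) and \(\beta \):

$$\begin{aligned} {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_A)}(C(\alpha ),C(\beta )[1])=0 \iff {{\mathrm{Hom}}}_{{\mathcal K}^b(\mathbf {proj}_{\bar{A}})}(C(\bar{\alpha }),C(\bar{\beta })[1])=0. \end{aligned}$$(4.2)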

Since the assignment \(C(\alpha )\in \mathcal K^b(\mathbf {proj}_A) \mapsto C(\bar{\alpha })\in \mathcal K^b(\mathbf {proj}_{A/I})\) does not change the g-vectors, and these uniquely determine a rigid complex by Theorem 8, it is bijective. For the same reason it preserves and reflects direct sums, which means that the aforementioned assignment induces a bijection between the two-term rigid complexes for A and the two-term rigid complexes for A / I which restricts to a bijection between the indecomposable complexes, and therefore also between the silting complexes. The mutation quivers will coincide as well since by Theorem 5 they coincide with the Hasse quivers of the posets formed by the two-term silting complexes, and the order is preserved due to (4.2).

As an immediate corollary of Theorem 11, we obtain [1, Theorem B] in the symmetric case.

Corollary 2

For a symmetric algebra A with \({{\mathrm{soc}}}(A)\subseteq {\text {rad}}(A)\), the g-vectors of two-term rigid (respectively silting) complexes for A coincide with the ones for \(A/{{\mathrm{soc}}}(A)\), as do the mutation quivers.

Proof

We may assume without loss of generality that A is basic. In this case \(A/{\text {rad}}(A)\) is a commutative ring. That implies that \(a\cdot m = m\cdot a\) for all \(m\in A/{\text {rad}}(A)\) (which we now see as an A-A-bimodule), and all \(a\in A\). Since A is symmetric we have \({{\mathrm{soc}}}(A) \cong {{\mathrm{Hom}}}_k(A/{\text {rad}}(A), k)\) as an A-A-bimodule, which implies that \(a\cdot m = m\cdot a\) for all \(m\in {{\mathrm{soc}}}(A)\) and all \(a\in A\). That is, \({{\mathrm{soc}}}(A)\subseteq Z(A)\).

Here is another immediate application of the foregoing theorem, which recovers the result of [3] saying that the mutation quiver and the g-vectors of a Brauer graph algebra do not depend on the multiplicities of the exceptional vertices, without having to classify all \(\tau \)-tilting modules beforehand.

Definition 5

Recall that an algebra \(A=kQ/I\) is called special biserial if

1. There are at most two arrows emanating from each vertex of Q.

2. There are at most two arrows ending in each vertex of Q.

3. For any path \(\alpha _1\cdots \alpha _n\not \in I\) (\(n\ge 1\)) there is at most one arrow \(\alpha _0\) in Q such that \(\alpha _0\cdot \alpha _1\cdots \alpha _n\not \in I\) and there is at most one arrow \(\alpha _{n+1}\) in Q such that \(\alpha _1\cdots \alpha _n\cdot \alpha _{n+1} \not \in I\).

Now suppose A is symmetric special biserial. By the main result of [29], these correspond exactly to the Brauer graph algebras. Using Theorem 11 we now get as a corollary [3, Proposition 6.16].

Corollary 3

The poset of two-term tilting complexes of a Brauer graph algebra is independent of the multiplicities involved.

Proof

The centers of such algebras have been described in [9, Proposition 2.1.1]. More precisely, the sum of all walks around a vertex in the Brauer graph, with each adjacent edge occurring as a starting point precisely once, is a central element. Now one can fairly easily check that all relations involving the exceptional multiplicities become zero modulo the ideal generated by these central elements. Thus all Brauer graph algebras with the same Brauer graph but different exceptional multiplicities have the same quotient \(A/{\text {rad}}(Z(A))A\) and Theorem 11 indeed yields the corollary.

5 String algebras

As a consequence of Theorem 11 the classification of indecomposable \(\tau \)-rigid modules over an algebra A often reduces to the same problem over a quotient A / I, which will typically have a simpler structure than A itself. But of course this quotient still needs to be dealt with. One class of algebras for which one might hope to determine all indecomposable \(\tau \)-rigid modules are the algebras of radical square zero (see [2]), but this class is not large enough for our purposes. In this section we study the \(\tau \)-rigid modules of string algebras, which are special biserial algebras (as defined in Definition 5) with monomial relations. There is a well-known classification of indecomposable modules over these algebras, in terms of combinatorial objects known as “strings”, which are certain walks around the \({\text {Ext}}\)-quiver of the algebra. All Auslander–Reiten sequences are known as well. Hence it is clear that it should be possible to give a combinatorial description of the \(\tau \)-rigid modules and support \(\tau \)-tilting modules in terms of these “strings”. Note that for symmetric special biserial algebras such a classification exists already (see [3]). But, as A being symmetric does not imply that A / I is symmetric as well, it is useful to consider non-symmetric special biserial algebras even if one is merely interested in symmetric algebras A. By Remark 5 below we may then restrict our attention to string algebras, even if we are interested in arbitrary special biserial algebras.

Definition 6

(String algebra) Let Q be a finite quiver and let I be an ideal contained in the k-span of all paths of length \(\ge 2\). We say that \(A = kQ/I\) is a string algebra if the following conditions are met:

1. There are at most two arrows emanating from each vertex of Q.

2. There are at most two arrows ending in each vertex of Q.

3. I is generated by monomials.

4. (Unique Continuation) For any path \(\alpha _1\cdots \alpha _n\not \in I\) (\(n\ge 1\)) there is at most one arrow \(\alpha _0\) in Q such that \(\alpha _0\cdot \alpha _1\cdots \alpha _n\not \in I\) and there is at most one arrow \(\alpha _{n+1}\) in Q such that \(\alpha _1\cdots \alpha _n\cdot \alpha _{n+1} \not \in I\).
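The conditions of this definition can be tested mechanically. The Python sketch below is a hedged illustration, not part of the original text: the function name, the encoding of the quiver as a dictionary and the example quiver are all hypothetical. It assumes that I is given by a list of generating paths of length at least two, read from left to right (first arrow first), so that condition 3 holds by construction. It also relies on the observation that, since I is a two-sided ideal, \(\alpha _0\cdot \alpha _1\in I\) forces \(\alpha _0\cdot \alpha _1\cdots \alpha _n\in I\), so unique continuation only needs to be checked for paths consisting of a single arrow; a composable path of length two lies in a monomial ideal exactly when it is one of the generators.

```python
def is_string_algebra(arrows, relations):
    """Check conditions 1, 2 and 4 for kQ/I with I generated by monomials.

    arrows: dict mapping an arrow name to its (source, target) pair.
    relations: list of paths, each a tuple of arrow names of length >= 2,
    read left to right, generating the monomial ideal I.
    """
    out_degree, in_degree = {}, {}
    for src, tgt in arrows.values():
        out_degree[src] = out_degree.get(src, 0) + 1
        in_degree[tgt] = in_degree.get(tgt, 0) + 1
    # Conditions 1 and 2: at most two arrows start or end at any vertex.
    if any(d > 2 for d in out_degree.values()):
        return False
    if any(d > 2 for d in in_degree.values()):
        return False

    zero_paths = {tuple(r) for r in relations if len(r) == 2}

    def nonzero(first, second):
        """True if the length-two path first.second is composable and not in I."""
        composable = arrows[first][1] == arrows[second][0]
        return composable and (first, second) not in zero_paths

    # Condition 4, reduced to single arrows as explained in the lead-in.
    for middle in arrows:
        if sum(nonzero(before, middle) for before in arrows) > 1:
            return False
        if sum(nonzero(middle, after) for after in arrows) > 1:
            return False
    return True


# Hypothetical example: arrows a: 1 -> 2, b: 3 -> 2, c: 2 -> 4, d: 3 -> 5.
example_arrows = {"a": (1, 2), "b": (3, 2), "c": (2, 4), "d": (3, 5)}
with_relation = is_string_algebra(example_arrows, [("a", "c")])
without_relation = is_string_algebra(example_arrows, [])
```

In the example the relation \(a\cdot c\) is what makes unique continuation hold at the arrow c: without it both \(a\cdot c\) and \(b\cdot c\) are non-zero and the check fails.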

We will now introduce the combinatorial notions which are needed to classify \(\tau \)-rigid modules over string algebras. For the most part we use the same terminology as used by Butler and Ringel in [13], where they classify all (finite dimensional) indecomposable modules over string algebras, as well as all Auslander–Reiten sequences. We will nonetheless make some definitions which are particular to our situation, since we only have the very specific goal of classifying \(\tau \)-rigid modules in mind. One noteworthy detail on which we deviate from [13] is that, since the convention we use for multiplication in path algebras is the opposite of the one used in [13], the string module \(M(\alpha _1\cdots \alpha _m)\) we define below is going to correspond to the string module \(M(\alpha _1^{-1}\cdots \alpha _m^{-1})\) as defined in [13].

Definition 7

(Direct, inverse and unidirectional strings) Let \(A = kQ/I\) be a string algebra, and let \(Q_1 =\{\alpha _1,\ldots , \alpha _h\}\) denote the set of arrows in Q. By \(\alpha _i^{-1}\) for \(i\in \{1,\ldots ,h\}\) we denote formal inverses of the arrows \(\alpha _i\).

-

1.

A string C is a word \(c_1\cdots c_m\), where \(c_i \in \{\alpha _1, \alpha _1^{-1},\ldots ,\alpha _h,\alpha _h^{-1}\}\) such that \(c_i\ne c_{i+1}^{-1}\) for all \(i\in \{1,\ldots ,m-1\}\) and for every subword W of C, \(W \notin I\) and \(W^{-1} \notin I\). We also ask that if C contains a subword of the form \(\alpha _i\cdot \alpha _j^{-1}\), then the target of \(\alpha _i\) is equal to the target of \(\alpha _j\), and if C contains a subword of the form \(\alpha _i^{-1}\cdot \alpha _j\), then the source of \(\alpha _i\) is equal to the source of \(\alpha _j\). Moreover, for each vertex e of Q, we define two paths of length zero, one of which is called “direct” and one of which is called “inverse” (this will make sense in the context of the next point below).

-

2.

We call a string of length greater than zero direct if all \(c_i\)’s are arrows, and inverse if all \(c_i\)’s are inverses of arrows. We call a string unidirectional if it is either direct or inverse.

-

3.

For an arrow \(\alpha _i\) we denote by \(s(\alpha _i)\) its source and by \(t(\alpha _i)\) its target. We define \(s(\alpha _i^{-1})=t(\alpha _i)\) and \(t(\alpha _i^{-1})=s(\alpha _i)\). We extend this notion to strings by defining \(s(c_1\cdots c_m)=s(c_1)\) and \(t(c_1\cdots c_m)=t(c_m)\). For a string C of length zero, given by a vertex e, we define \(s(C)=t(C)=e\).

-

4.

If C is unidirectional, then we define the corresponding direct string \(\bar{C}\) as follows: if C is direct, then \(\bar{C} := C\), and if C is inverse, then \(\bar{C} := C^{-1}\).

-

5.

Given a string C of length greater than zero let \(C_1\cdots C_l\) be the unique factorization of C such that each \(C_i\) is unidirectional of length greater than zero, and for each \(i\in \{1,\ldots ,l-1\}\) exactly one of the strings \(C_i\) and \(C_{i+1}\) is direct. If C is of length zero we set \(l=1\) and define \(C_1=C_l:=C\).
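
The bookkeeping in Definition 7 can be sketched in a few lines of Python. The encoding is an assumption made for illustration and is not notation from the text: a letter is a pair `(name, sign)` with sign `+1` for an arrow and `-1` for a formal inverse, and `ARROWS` is a hypothetical quiver on two vertices. The sketch ignores the relations in I and strings of length zero.

```python
from itertools import groupby

# Hypothetical quiver on the vertices 0 and 1 (an assumption for illustration):
# each arrow name is mapped to the pair (source, target).
ARROWS = {"eps": (0, 0), "alpha": (0, 1), "eta": (1, 1), "beta": (1, 0)}


def source(letter):
    """s(alpha) for an arrow, and s(alpha^-1) = t(alpha) for a formal inverse."""
    name, sign = letter
    return ARROWS[name][0] if sign == 1 else ARROWS[name][1]


def target(letter):
    """t(alpha) for an arrow, and t(alpha^-1) = s(alpha) for a formal inverse."""
    name, sign = letter
    return ARROWS[name][1] if sign == 1 else ARROWS[name][0]


def inverse(string):
    """C^-1: reverse the word and invert every letter."""
    return [(name, -sign) for name, sign in reversed(string)]


def direct_version(string):
    """The direct string associated with a unidirectional string."""
    signs = {sign for _, sign in string}
    if signs <= {1}:
        return list(string)
    if signs == {-1}:
        return inverse(string)
    raise ValueError("string is not unidirectional")


def factorize(string):
    """Factorization C = C_1 ... C_l into maximal unidirectional pieces."""
    return [list(group) for _, group in groupby(string, key=lambda c: c[1])]


# The word eps^-1 * alpha * eta^-1 over the hypothetical quiver above.
C = [("eps", -1), ("alpha", 1), ("eta", -1)]
factors = factorize(C)
```

Grouping consecutive letters by sign gives exactly the factorization of item 5, since adjacent factors are forced to alternate between direct and inverse.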

While the above definition is essentially standard, the next definition is rather specific to the problem we are considering. We should note at this point that we use strings in two different ways. Usually, a string C corresponds to a string module M(C). However, the string \(_PC_P\) we construct in Definition 8 (1) below will correspond to the differential of a two-term complex of projective modules, namely a minimal projective presentation of M(C). It is helpful to keep this in mind.

Definition 8

(\(_{P}C_{P}\) and intermediate points)

-

1.

We call \(C_1\) a loose end if \(C_1\) is inverse and \(C_1^{-1}\cdot \alpha _i \in I\) for all arrows \(\alpha _i\). Similarly, we call \(C_l\) a loose end if \(C_l\) is direct and \(C_l\cdot \alpha _i\in I\) for all arrows \(\alpha _i\). The other constituent factors \(C_{2},\ldots , C_{l-1}\) are never considered loose ends.

-

2.

Assume that C is not of length zero. Then we define a string \(_PC\) as follows: if \(C_1\) is a loose end, we define \(_PC=C_2\cdots C_l\) (or one of the strings of length zero corresponding to \(t(C_1)\) if \(l=1\)). If \(C_1\) is not a loose end then there is at most one arrow \(\alpha _i\) such that \(\alpha _{i}^{-1}\cdot C\) is a string, and we define \(_PC:= \alpha _{i}^{-1}\cdot C\) if such an \(\alpha _i\) exists, and \(_PC := C\) otherwise. In the same vein, if \(C_l\) is a loose end we define \(C_P := C_1\cdots C_{l-1}\) (or one of the strings of length zero corresponding to \(s(C_l)\) if \(l=1\)). If \(C_l\) is not a loose end, then there is at most one arrow \(\alpha _i\) such that \(C\cdot \alpha _i\) is a string and we define \(C_{P} := C\cdot \alpha _i\) if such an \(\alpha _i\) exists, and \(C_P := C\) otherwise.

Now, if C is of length zero, then C is given by a vertex e, and we define \(C_P = {_PC}=\alpha _i^{-1}\) for some \(\alpha _i\) emanating from e, provided such an arrow exists, and \(C_P ={ _PC}:=C\) if no such arrow exists (note that we make a choice here, so in order to make \(_PC\) and \(C_P\) well-defined, we technically have to designate one of the arrows emanating from each vertex as the one to be used).

Unless \(l=1\) and \(C_1=C_l\) is a loose end, we define \({_PC_P} := (_PC)_P={_P(C_P)}\). If \(l=1\) and \(C_1=C_l\) is an inverse loose end, then we define \(_PC_P := {_P(C_P)}\), and if \(C_1=C_l\) is a direct loose end we define \({_PC_P} := (_PC)_P\).

Note that we always have \(_P(C^{-1})_P = ({_PC_P})^{-1}\).

-

3.

If C has length greater than zero, then we call

$$\begin{aligned} I_C(0)=s(C_1),\ I_C(1)=t(C_1),\ I_C(2)=t(C_2),\ \ldots ,\ I_C(l)=t(C_l) \end{aligned}$$the intermediate points of C, and for \(1\le i < l\) we call \(C_{i}\) and \(C_{i+1}\) the adjacent unidirectional strings of the intermediate point \(I_C(i)\). We say that \(C_1\) is the adjacent unidirectional string of \(I_C(0)\) and \(C_l\) is the adjacent unidirectional string of \(I_C(l)\). We say that \(I_C(i)\) is an upper intermediate point if \(C_{i}\) (if it exists, i.e. if \(i>0\)) is inverse and \(C_{i+1}\) (if it exists) is direct. We call \(I_C(i)\) a lower intermediate point if \(C_i\) (if it exists) is direct and \(C_{i+1}\) (if it exists) is inverse. In particular, \(I_C(0)\) is an upper (respectively lower) intermediate point if \(C_1\) is direct (respectively inverse) and \(I_C(l)\) is an upper (respectively lower) intermediate point if \(C_l\) is inverse (respectively direct).

If C is of length zero, then it corresponds to a vertex e, which we consider an upper intermediate point of C. That is, \(I_C(0)=e\) is an upper intermediate point (and, by definition, the only intermediate point of C), and we say that there are no adjacent unidirectional strings.
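
As a minimal sketch of how intermediate points and their types can be read off from a string of positive length, consider the following Python fragment. The encoding of letters as `(name, sign)` pairs and the quiver `ARROWS` are assumptions made for illustration. Strings of length zero, which have a single upper intermediate point, are not handled.

```python
from itertools import groupby

# Hypothetical quiver (an assumption): arrow name -> (source, target).
ARROWS = {"eps": (0, 0), "alpha": (0, 1), "eta": (1, 1), "beta": (1, 0)}


def source(letter):
    name, sign = letter
    return ARROWS[name][0] if sign == 1 else ARROWS[name][1]


def target(letter):
    name, sign = letter
    return ARROWS[name][1] if sign == 1 else ARROWS[name][0]


def intermediate_points(string):
    """Return [(vertex, kind), ...] for I_C(0), ..., I_C(l).

    kind is "upper" or "lower". Only strings of positive length are handled.
    """
    if not string:
        raise ValueError("length-zero strings are not handled in this sketch")
    factors = [list(g) for _, g in groupby(string, key=lambda c: c[1])]
    first = factors[0][0]
    # I_C(0) = s(C_1) is upper if C_1 is direct and lower if C_1 is inverse.
    points = [(source(first), "upper" if first[1] == 1 else "lower")]
    # I_C(i) = t(C_i) is lower if C_i is direct and upper if C_i is inverse;
    # the type of C_{i+1} is then forced, because the factors alternate.
    for factor in factors:
        kind = "lower" if factor[0][1] == 1 else "upper"
        points.append((target(factor[-1]), kind))
    return points


# The word eps^-1 * alpha * eta^-1 over the hypothetical quiver above.
C = [("eps", -1), ("alpha", 1), ("eta", -1)]
points = intermediate_points(C)
```

For this word the sketch returns the vertices 0, 0, 1, 1 with types lower, upper, lower, upper.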

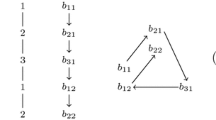

Example 1

Let

Consider the string \(C=\varepsilon ^{-1}\cdot \alpha \cdot \eta ^{-1}\). We also denote this string by \(0{\mathop {\leftarrow }\limits ^{\varepsilon }}0{\mathop {\rightarrow }\limits ^{\alpha }}1{\mathop {\leftarrow }\limits ^{\eta }}1\). In the latter notation, the intermediate points can easily be read off: \(I_C(0)=0\), \(I_C(1)=0\), \(I_C(2)=1\) and \(I_C(3)=1\). Among these, \(I_C(0)\) and \(I_C(2)\) are lower intermediate points (as arrows are pointing towards them), and \(I_C(1)\) as well as \(I_C(3)\) are upper intermediate points (arrows pointing away).

Note that \(C_1=\varepsilon ^{-1}\) is not a loose end, since \(C_1^{-1}\cdot \alpha =\varepsilon \alpha \) is non-zero. Hence \(_PC=1{\mathop {\leftarrow }\limits ^{\varepsilon \alpha }}0{\mathop {\rightarrow }\limits ^{\alpha }}1{\mathop {\leftarrow }\limits ^{\eta }}1\). The other end, \(C_3=\eta ^{-1}\) is not a loose end either, since a loose end on the right has to be direct by definition. Hence \(_PC_P = 1{\mathop {\leftarrow }\limits ^{\varepsilon \alpha }}0{\mathop {\rightarrow }\limits ^{\alpha }}1{\mathop {\leftarrow }\limits ^{\eta }}1{\mathop {\rightarrow }\limits ^{\beta }}0\). As a second example, consider the string \(D=0{\mathop {\rightarrow }\limits ^{\alpha \eta }}1\). In this case \(l=1\), and \(D_1=D\) is a direct loose end. To form \(_PD_P\) we therefore first need to form \(_PD=0{\mathop {\leftarrow }\limits ^{\varepsilon }}0{\mathop {\rightarrow }\limits ^{\alpha \eta }}1\), and then remove the loose end to form \(_PD_P=0{\mathop {\leftarrow }\limits ^{\varepsilon }}0\).

Definition 9

(Presilted strings) Let C and D be two strings. Write \(C'={_PC_P}=C'_1\cdots C'_m\) and \(D'={_PD_P}=D'_1\cdots D'_n\). We say that D is C-presilted if the following two conditions are met:

-

1.

For any \(i\in \{0,\ldots ,m\}\) such that \(I_{C'}(i)\) is a lower intermediate point of \(C'\) and any \(j\in \{0,\ldots ,n\}\) such that \(I_{D'}(j)\) is an upper intermediate point of \(D'\) we have that any direct string W with \(s(W)=I_{D'}(j)\) and \(t(W)=I_{C'}(i)\) factors as either \(W= \bar{X} \cdot W'\), where X is an adjacent string of \(I_{D'}(j)\), or as \(W=W'\cdot \bar{Y}\), where Y is an adjacent string of \(I_{C'}(i)\).

-

2.

Assume that there are \(i\in \{0,\ldots ,m\}\) and \(j\in \{0,\ldots ,n\}\) such that \(I_{C'}(i)=I_{D'}(j)\) and \(I_{C'}(i)\) and \(I_{D'}(j)\) are either both upper intermediate points or they are both lower intermediate points. By replacing, if necessary, \(D'\) by \(D'^{-1}\) (and, as a consequence, \(D'_x\) by \(D'^{-1}_{n-x+1}\) for each x) and j by \(n-j\) we can assume without loss of generality that if \(i+1 \le m\) and \(j+1\le n\), then the strings \(C_{i+1}'\) and \(D_{j+1}'\) both start with the same arrow or inverse of an arrow, and if \(i>0\) and \(j>0\) then the strings \(C_i'\) and \(D_j'\) both end on the same arrow or inverse of an arrow. Define \(t(1)=1\) and \(t(-1)=0\). For \(\sigma \in \{1,-1\}\) we define \(e(\sigma )\in \mathbb Z_{\ge 0}\) to be the maximal integer with respect to the property that

$$\begin{aligned} C'_{i+\sigma x+t(\sigma )}=D'_{j+\sigma x+t(\sigma )}\text { for all }0\le x < e(\sigma ) \end{aligned}$$whilst at the same time satisfying \(0 \le i+\sigma e(\sigma )\le m\) and \(0 \le j+\sigma e(\sigma )\le n\). Now we ask that one of the following holds for at least one of the two choices for \(\sigma \):

-

(a)

\(I_{C'}(i+\sigma e(\sigma ))\) is an upper intermediate point and \(i+\sigma (e(\sigma )+1) \in \{-1, m+1\}\)

-

(b)

\(I_{C'}(i+\sigma e(\sigma ))\) is an upper intermediate point, the previous condition is not met, \(j+\sigma (e(\sigma )+1)\not \in \{-1, n+1\}\) and

$$\begin{aligned} \bar{C}'_{i+\sigma e(\sigma )+t(\sigma )}=\bar{D}'_{j+\sigma e(\sigma )+t(\sigma )} \cdot W \end{aligned}$$for some direct string W (which, by the maximality of \(e(\sigma )\), must have positive length).

-

(c)

\(I_{C'}(i+\sigma e(\sigma ))\) is a lower intermediate point and \(j+\sigma (e(\sigma )+1) \in \{-1, n+1\}\)

-

(d)

\(I_{C'}(i+\sigma e(\sigma ))\) is a lower intermediate point, the previous condition is not met, \(i+\sigma (e(\sigma )+1)\not \in \{-1, m+1\}\) and

$$\begin{aligned} \bar{D}'_{j+\sigma e(\sigma )+t(\sigma )}=W\cdot \bar{C}'_{i+\sigma e(\sigma )+t(\sigma )} \end{aligned}$$for some direct string W (which, by the maximality of \(e(\sigma )\), must have positive length).


Example 2

Let A be as in Example 1.

-

1.

Let \(C=D=0{\mathop {\rightarrow }\limits ^{\varepsilon }}0\). We would like to check whether D is C-presilted. We have \(C'=D'={_PC_P}=1{\mathop {\leftarrow }\limits ^{\alpha }}0{\mathop {\rightarrow }\limits ^{\varepsilon \alpha }}1\). We need to check the first condition of Definition 9 for \(I_{C'}(a)\) with \(a \in \{0, 2\}\) and \(I_{D'}(1)\). For \(a=0\) this means checking that every direct string W from \(I_{D'}(1)=0\) to \(I_{C'}(0)=1\) factors either as \(\alpha \cdot W'\), \(\varepsilon \alpha \cdot W'\) or as \(W'\cdot \alpha \). For \(a=2\) we require a factorization either as \(\alpha \cdot W'\), \(\varepsilon \alpha \cdot W'\) or as \(W'\cdot \varepsilon \alpha \). The direct strings from 0 to 1 are \(\alpha \), \(\varepsilon \alpha \), \(\alpha \eta \) and \(\varepsilon \alpha \eta \), and all of these have factorizations as required. Since \(C=D\) we only need to check the second condition for \(i\ne j\). For \(i=j\) we always end up with either 2a or 2c holding. Hence it suffices to check \((i,j)=(0,2)\) and \((i,j)=(2,0)\). In either case we need to replace \(D'\) by \((D')^{-1}\), that is, assume from now on that \(D'=1{\mathop {\leftarrow }\limits ^{\varepsilon \alpha }}0{\mathop {\rightarrow }\limits ^{\alpha }}1\), and look at \((i,j)=(0,0)\) and \((i,j)=(2,2)\). By definition, \(e(\sigma )\) is the largest non-negative integer such that the constituent factors of \(C'\) between the intermediate points \(I_{C'}(i)\) and \(I_{C'}(i+\sigma e(\sigma ))\) coincide with the constituent factors of \(D'\) between the intermediate points \(I_{D'}(i)\) and \(I_{D'}(i+\sigma e(\sigma ))\). In our case, \(C'\) and \(D'\) share no common constituent factors (recall that \(D'\) was replaced by \(D'^{-1}\)), so \(e(+1)=e(-1)=0\) both if \((i,j)=(0,0)\) and if \((i,j)=(2,2)\). If \((i,j)=(0,0)\), then condition 2c holds for \(\sigma =-1\). If \((i,j)=(2,2)\), then condition 2c holds for \(\sigma =+1\). It follows that D is C-presilted.

-

2.

Let us now consider an example where the conditions 2b and 2d come into play. Let \(C=D=1{\mathop {\leftarrow }\limits ^{\varepsilon \alpha \eta }}0{\mathop {\rightarrow }\limits ^{\alpha }}1 {\mathop {\leftarrow }\limits ^{\varepsilon \alpha \eta }}0{\mathop {\rightarrow }\limits ^{\alpha \eta }}1{\mathop {\leftarrow }\limits ^{\alpha }}0{\mathop {\rightarrow }\limits ^{\varepsilon \alpha \eta }}1\). Note that both \(C_1\) and \(C_6\) are loose ends. Hence \(C'=D'={_PC_P}=0{\mathop {\rightarrow }\limits ^{\alpha }}1 {\mathop {\leftarrow }\limits ^{\varepsilon \alpha \eta }}0{\mathop {\rightarrow }\limits ^{\alpha \eta }}1{\mathop {\leftarrow }\limits ^{\alpha }}0\). We will perform part of the verification that D is C-presilted. Namely, we will check that the second condition holds for \((i,j)=(2,0)\). In this case it is not necessary to replace \(D'\) by \(D'^{-1}\). Since \(j=0\) we get \(e(-1)=0\) and since \(D'_{j+1}\ne C'_{i+1}\) we also get \(e(+1)=0\). One can check that for \(\sigma =-1\) neither one of the conditions 2a–2d is satisfied. For \(\sigma =+1\), only 2b has a chance of holding. This condition asks that \(\bar{C}'_{i+1}=\bar{D}'_{j+1}\cdot W\) for some direct string W. Concretely, we need a W such that \(\alpha \eta =\alpha \cdot W\). Hence condition 2b holds with \(W=\eta \).

Definition 10

(Presilting strings and support)

-

1.

We say that a string C is presilting if C is C-presilted.

-

2.

We say that a vertex e lies in the support of a string C if one of the following holds (again \(C'={_PC_P}=C_1'\cdots C_m'\)):

-

(a)

\(I_{C'}(i)=e\) for some lower intermediate point \(I_{C'}(i)\) of \(C'\) with \(i\ne 0, m\).

-

(b)

There is a direct string W whose source is an upper intermediate point \(I_{C'}(i)\) of \(C'\) and whose target is e, such that W does not factor as \(W=\bar{C}_j'\cdot W'\) for any adjacent unidirectional string \(C_j'\) of \(I_{C'}(i)\).


For a vertex e of Q we denote by \(P_e=e\cdot A\) the corresponding projective indecomposable module. Given two vertices e and f we will identify direct strings C with \(s(C)=e\) and \(t(C)=f\) with the homomorphism from \(P_f\) to \(P_e\) induced by left multiplication with C (considered as an element of A). We will define the string module associated to a string C as the cokernel of a map \(\psi _C\), which is constructed in the following definition.

Definition 11

(The map \(\psi _C\)) Let \(A=kQ/I\) be a string algebra and let C be a string. Decompose \(C'={_PC_P}= C'_1\cdots C'_m\) and consider the length of \(C'\):

-

1.

If \(C'\) is of length zero, then it is given by a vertex e, and we define \(\psi _C:0 \rightarrow P_e\).

-

2.

If \(C'\) has length greater than zero then there are four cases to consider:

-

(a)

If \(C'_1\) is direct and \(C'_m\) is inverse (note that in this case m is even): define \(Q(i) := P_{t(C'_{2i-1})}\) and \(P(i) := P_{s(C'_{2i-1})}\) for \(i\in \{1,\ldots ,\frac{m}{2}\}\). Define \(P(\frac{m}{2}+1):= P_{t(C'_{m})}\). Define

$$\begin{aligned} Q := \bigoplus _{i=1}^{\frac{m}{2}} Q(i)\quad \text { and }\quad P := \bigoplus _{i=1}^{\frac{m}{2}+1} P(i) \end{aligned}$$Furthermore, for each \(i\in \{1,\ldots , \frac{m}{2}\}\), we denote by \(\pi _{Q(i)}\) the projection from Q onto Q(i), and for each \(i\in \{1,\ldots , \frac{m}{2}+1\}\) we denote by \(\iota _{P(i)}\) the embedding of P(i) into P. We define a homomorphism \(\psi _C:\ Q \longrightarrow P\) as follows:

$$\begin{aligned} \psi _C = \sum _{i=1}^{\frac{m}{2}} \iota _{P(i)}\circ C'_{2i-1}\circ \pi _{Q(i)} + \iota _{P(i+1)} \circ C'^{-1}_{2i} \circ \pi _{Q(i)} \end{aligned}$$

-

(b)

If \(C'_1\) is inverse and \(C'_m\) is direct (in this case m is even): define \(Q(i) := P_{s(C'_{2i-1})}\) and \(P(i) := P_{t(C'_{2i-1})}\) for \(i\in \{1,\ldots ,\frac{m}{2}\}\). Define \(Q(\frac{m}{2}+1):= P_{t(C'_{m})}\). Define

$$\begin{aligned} Q := \bigoplus _{i=1}^{\frac{m}{2}+1} Q(i)\quad \text { and }\quad P := \bigoplus _{i=1}^{\frac{m}{2}} P(i) \end{aligned}$$Furthermore, for each \(i\in \{1,\ldots , \frac{m}{2}+1\}\), we denote by \(\pi _{Q(i)}\) the projection from Q onto Q(i), and for each \(i\in \{1,\ldots , \frac{m}{2}\}\) we denote by \(\iota _{P(i)}\) the embedding of P(i) into P. We define a homomorphism \(\psi _C:\ Q \longrightarrow P\) as follows:

$$\begin{aligned} \psi _C = \sum _{i=1}^{\frac{m}{2}} \iota _{P(i)}\circ C'^{-1}_{2i-1}\circ \pi _{Q(i)} + \iota _{P(i)} \circ C'_{2i} \circ \pi _{Q(i+1)} \end{aligned}$$

-

(c)

If \(C'_1\) is direct and \(C'_m\) is direct (in this case m is odd): define \(Q(i) := P_{t(C'_{2i-1})}\) and \(P(i) := P_{s(C'_{2i-1})}\) for \(i\in \{1,\ldots ,\frac{m+1}{2}\}\). Define

$$\begin{aligned} Q := \bigoplus _{i=1}^{\frac{m+1}{2}} Q(i)\quad \text { and }\quad P := \bigoplus _{i=1}^{\frac{m+1}{2}} P(i) \end{aligned}$$Furthermore, for each \(i\in \{1,\ldots , \frac{m+1}{2}\}\), we denote by \(\pi _{Q(i)}\) the projection from Q onto Q(i), and by \(\iota _{P(i)}\) the embedding of P(i) into P. We define a homomorphism \(\psi _C:\ Q \longrightarrow P\) as follows:

$$\begin{aligned} \psi _C = \iota _{P(\frac{m+1}{2})}\circ C'_m \circ \pi _{Q(\frac{m+1}{2})}+\sum _{i=1}^{\frac{m-1}{2}} \iota _{P(i)}\circ C'_{2i-1}\circ \pi _{Q(i)} + \iota _{P(i+1)} \circ C'^{-1}_{2i} \circ \pi _{Q(i)} \end{aligned}$$

-

(d)

If \(C'_1\) and \(C'_m\) are inverse, then \(C^{-1}\) falls under case (2c), so we can define \(\psi _C:=\psi _{C^{-1}}\).


Remark 3

Note that Definition 11 is much less technical than it looks: given a string C, we form \(C'={_PC_P}\), and then define a presentation \(Q\longrightarrow P\) such that the indecomposable direct summands of P are in bijection with the upper intermediate points of \(C'\) and the indecomposable direct summands of Q are in bijection with the lower intermediate points of \(C'\). The map between Q and P is then the sum of the direct versions \(\bar{C}'_1,\ldots , \bar{C}'_m\) of the factors \(C'_1,\ldots , C'_m\), each being considered as a map from the summand of Q corresponding to its target to the summand of P corresponding to its source.
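
The recipe of Remark 3 can be sketched as follows. The function takes \(C'={_PC_P}\) as its input, since computing \(_PC_P\) from C requires the relations of the algebra. The encoding of letters as `(name, sign)` pairs, the quiver `ARROWS`, and the function name `presentation_shape` are assumptions made for illustration. The sketch records only which projectives occur and which direct strings connect them, not the homomorphisms themselves.

```python
from itertools import groupby

# Hypothetical quiver (an assumption): arrow name -> (source, target).
ARROWS = {"eps": (0, 0), "alpha": (0, 1), "eta": (1, 1), "beta": (1, 0)}


def source(letter):
    name, sign = letter
    return ARROWS[name][0] if sign == 1 else ARROWS[name][1]


def target(letter):
    name, sign = letter
    return ARROWS[name][1] if sign == 1 else ARROWS[name][0]


def presentation_shape(c_prime):
    """Shape of the presentation Q -> P attached to C' (positive length).

    Returns (P, Q, components), where P and Q map the index of an upper
    (respectively lower) intermediate point to its vertex, and each component
    is a triple (lower index, upper index, direct path as arrow names).
    """
    factors = [list(g) for _, g in groupby(c_prime, key=lambda c: c[1])]
    first = factors[0][0]
    vertices = [source(first)] + [target(f[-1]) for f in factors]
    kinds = ["upper" if first[1] == 1 else "lower"]
    kinds += ["lower" if f[0][1] == 1 else "upper" for f in factors]
    P = {i: v for i, (v, k) in enumerate(zip(vertices, kinds)) if k == "upper"}
    Q = {i: v for i, (v, k) in enumerate(zip(vertices, kinds)) if k == "lower"}
    components = []
    for k, factor in enumerate(factors, start=1):
        if factor[0][1] == 1:
            # A direct factor runs from the upper point k-1 to the lower point k.
            lower, upper = k, k - 1
            path = [name for name, _ in factor]
        else:
            # The direct version of an inverse factor runs from the upper
            # point k to the lower point k-1.
            lower, upper = k - 1, k
            path = [name for name, _ in reversed(factor)]
        components.append((lower, upper, path))
    return P, Q, components


# The word alpha^-1 * eps * alpha, used here as a hypothetical C'.
P, Q, components = presentation_shape([("alpha", -1), ("eps", 1), ("alpha", 1)])
```

For this input the sketch produces one summand of P at vertex 0 and two summands of Q at vertex 1, connected by the direct strings `alpha` and `eps alpha`.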

Definition 12

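(String modules) Let \(A=kQ/I\) be a string algebra and let C be a string. The associated string module M(C) is defined as the cokernel of the map \(\psi _C\) from Definition 11:

$$\begin{aligned} M(C) := {{\mathrm{Coker}}}(\psi _C). \end{aligned}$$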

In fact, \(\psi _C\) is a minimal projective presentation of M(C).

Remark 4

Note \(M(C)\cong M(C^{-1})\) for cases (2a), (2b) and (2c), justifying the seemingly ad hoc definition in (2d).

Proposition 5

Let \(A=kQ/I\) be a string algebra and let M be an indecomposable \(\tau \)-rigid A-module. Then M is a string module.

Proof

By [13, Theorem on page 161] each indecomposable A-module is either a string module or a so-called band module. By [13, Bottom of page 165] each band module occurs in an Auslander–Reiten sequence as both the leftmost and the rightmost term, which means that each band module is isomorphic to its Auslander–Reiten translate. But by definition such a module cannot be \(\tau \)-rigid.

The next proposition is the main technical result of this section.

Proposition 6

Let \(A=kQ/I\) be a string algebra and let C and D be two strings. Denote by \(T(C)^\bullet \in \mathcal K^b(\mathbf {proj}_A)\) and \(T(D)^\bullet \in \mathcal K^b(\mathbf {proj}_A)\) minimal projective presentations of M(C) respectively M(D). Then \({{\mathrm{Hom}}}_{\mathcal K^b(\mathbf {proj}_A)}(T(C)^\bullet , T(D)^\bullet [1])=0\) if and only if D is C-presilted.

Proof

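We know that a minimal projective presentation of the string module M(C) is given by the following two-term complex:

$$\begin{aligned} T(C)^\bullet = \left( \bigoplus _i Q^{(C)}(i) {\mathop {\longrightarrow }\limits ^{\psi _C}} \bigoplus _j P^{(C)}(j) \right) \end{aligned}$$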

where i ranges over all lower intermediate points of \(C'={_PC_P}\) and j ranges over all upper intermediate points of \(C'\) (just as in Definition 11, we merely added the superscript (C), and are intentionally less explicit about the range of the direct sum in order to avoid having to deal with three different cases again). In the same vein we have the minimal projective presentation

$$\begin{aligned} T(D)^\bullet = \left( \bigoplus _i Q^{(D)}(i) {\mathop {\longrightarrow }\limits ^{\psi _D}} \bigoplus _j P^{(D)}(j) \right) \end{aligned}$$

of M(D), where i and j range over the lower respectively upper intermediate points of \(D'={_PD_P}\). We adopt the following notation for homomorphisms: given a direct string W whose source is the upper intermediate point of \(D'\) associated with \(P^{(D)}(j)\) and whose target is the lower intermediate point of \(C'\) associated with \(Q^{(C)}(i)\), we denote by \(W_{j,i}\) the element of \({{\mathrm{Hom}}}_A(Q^{(C)}(i), P^{(D)}(j))\) induced by left multiplication with W. Whenever we write \(W_{j,i}\) below, we will mean this to tacitly imply that W starts and ends in the right vertices. Moreover, we identify

$$\begin{aligned} {{\mathrm{Hom}}}_A(Q^{(C)}, P^{(D)}) = \bigoplus _{i,j} {{\mathrm{Hom}}}_A(Q^{(C)}(i), P^{(D)}(j)). \end{aligned}$$

Note that the \(W_{j,i}\) form a basis of the above vector space, and we will refer to them as basis elements in what follows. We say that \(W_{j,i}\) is involved in an element \(\varphi \) of the above space if the coefficient of \(W_{j,i}\) is non-zero when we write \(\varphi \) as a linear combination of the basis elements.

Now the condition \({{\mathrm{Hom}}}_{\mathcal K^b(\mathbf {proj}_A)}(T(C)^\bullet , T(D)^\bullet [1])=0\) is equivalent to asking that for each summand \(Q^{(C)}(i)\) of \(Q^{(C)}\) (that is, for each lower intermediate point i of \(C'\)) and each summand \(P^{(D)}(j)\) of \(P^{(D)}\) (that is, for each upper intermediate point j of \(D'\)) each basis element \(W_{j,i}\) is zero-homotopic. One deduces from the definition of \(\psi _C\) and \(\psi _D\) that the space of zero-homotopic maps from \(T(C)^\bullet \) to \(T(D)^\bullet [1]\) is spanned by the following two families of maps:

-

1.

Let u be an upper intermediate point of \(D'\) and let \(u'\) be an upper intermediate point of \(C'\). We define

$$\begin{aligned} \begin{array}{rcll} h_C(W,u,u') &{}=&{} (W\cdot \bar{C}'_{u'})_{u,u'-1} + (W\cdot \bar{C}'_{u'+1})_{u,u'+1} &{} \text {if } 0< u'< m \\ \\ h_C(W,u,u') &{}=&{} (W\cdot \bar{C}'_{u'+1})_{u,u'+1} &{} \text {if }0 = u'< m \\ \\ h_C(W,u,u') &{}=&{} (W\cdot \bar{C}'_{u'})_{u,u'-1} &{} \text {if } 0 < u' = m \\ \\ \end{array} \end{aligned}$$if \(C'\) has length greater than zero, and \(h_C(W,u,u') = 0\) otherwise.

-

2.

Let l be a lower intermediate point of \(D'\) and let \(l'\) be a lower intermediate point of \(C'\). We define

$$\begin{aligned} \begin{array}{rcll} h_D(W,l,l') &{}=&{} (\bar{D}'_{l}\cdot W)_{l-1,l'}+(\bar{D}'_{l+1} \cdot W)_{l+1,l'} &{} \text {if } 0<l<n \\ \\ h_D(W,l,l') &{}=&{} (\bar{D}'_{l+1} \cdot W)_{l+1,l'} &{} \text {if } 0=l<n \\ \\ h_D(W,l,l') &{}=&{} (\bar{D}'_{l}\cdot W)_{l-1,l'}&{} \text {if } 0<l=n \\ \\ \end{array} \end{aligned}$$By definition, \(D'\) having a lower intermediate point implies that \(D'\) is of length greater than zero, so the length zero case does not need to be considered.

The source and target of the direct string W are \(I_{D'}(u)\) and \(I_{C'}(u')\) in the first case and \(I_{D'}(l)\) and \(I_{C'}(l')\) in the second.

The first condition in the definition of C-presiltedness is fulfilled if and only if each basis element \(W_{j,i}\) is involved in some \(h_C(W',u,u')\) or some \(h_D(W', l, l')\) for some \(W'\). So clearly the first condition is necessary.

Now notice that if W has positive length, then the unique continuation condition (see Definition 6(4)) ensures that \(h_C(W,u,u')\) respectively \(h_D(W,l,l')\) actually involves at most one basis element. Hence every \(W_{j,i}\) is zero-homotopic if and only if all basis vectors involved in maps of the form \(h_C(I_{C'}(u'),u,u')\) with \(I_{C'}(u')=I_{D'}(u)\) and \(h_D(I_{C'}(l'),l,l')\) with \(I_{C'}(l')=I_{D'}(l)\) are zero-homotopic. So assume that we have such a pair \(l',l\) respectively \(u',u\). These correspond precisely to the pairs i, j which are considered in the second part of the definition of C-presiltedness. We may assume that \(D'\) is oriented as in the definition of C-presiltedness, and we get non-negative integers \(e(\sigma )\) for \(\sigma \in \{1,-1\}\) just as in said definition. For ease of notation we will write h instead of \(h_C\) and \(h_D\) (the parameters do in fact determine which of the two we are dealing with). So we want to know when the basis elements involved in \(h(I_{C'}(i), j, i)\) are zero-homotopic, that is, can be written as a linear combination of other h’s. Without loss of generality we can assume that all h’s occurring in such a linear combination lie in the equivalence class of \(h(I_{C'}(i), j, i)\) with respect to the transitive closure of the relation \(h(W, a, b)\sim h(W',c,d)\) if there is a basis element which is involved in both h(W, a, b) and \(h(W',c,d)\). We call h(W, a, b) and \(h(W',c,d)\) neighbors of each other. Note that either \(c=a+1\) and \(d=b+1\), in which case we call \(h(W',c,d)\) a right neighbor of h(W, a, b), or \(c=a-1\) and \(d=b-1\), in which case \(h(W',c,d)\) is called a left neighbor of h(W, a, b). Left and right neighbors are unique if they exist. For any \(-e(-1)< x < e(1)\) we have

$$\begin{aligned} h(I_{C'}(i+x), j+x,i+x) = \left\{ \begin{array}{l} (\bar{C}'_{i+x})_{j+x,i+x-1} + (\bar{C}'_{i+x+1})_{j+x,i+x+1} \\ (\bar{D}'_{j+x})_{j+x-1,i+x} + (\bar{D}'_{j+x+1})_{j+x+1,i+x} \end{array} \right. \end{aligned}$$

depending on whether \(I_{C'}(i+x)\) is an upper or a lower intermediate point. Hence \(h(I_{C'}(i+x), j+x,i+x)\) has exactly two neighbors, namely the right neighbor \(h(I_{C'}(i+x+1), j+x+1,i+x+1)\) and the left neighbor \(h(I_{C'}(i+x-1), j+x-1,i+x-1)\). It hence suffices to check what the right neighbor of \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))\) and the left neighbor of \(h(I_{C'}(i-e(-1)), j-e(-1),i-e(-1))\) are.

If \(I_{C'}(i+e(1))\) is an upper intermediate point, and \(i+e(1)=m\) (i.e. 2a for \(\sigma =+1\) is met), then \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))\) has no right neighbors, but in this case \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))=(\bar{C}'_{i+e(1)})_{j+e(1),i+e(1)-1}\) or \(h(I_{C'}(i+e(1)), j+e(1),i+e(1)) =0\) (if \(C'\) has length zero). If 2a is met neither for \(\sigma =1\) nor for \(\sigma =-1\) then \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))\) involves two different basis elements. If \(i+e(1)<m\), then a right neighbor of \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))\) must have the form \(h(W, j+e(1)+1, i+e(1)+1)\) where \(\bar{D}'_{j+e(1)+1}\cdot W = \bar{C}'_{i+e(1)+1}\). That is, a right neighbor exists if and only if the factorization condition 2b for \(\sigma =+1\) is met, and this right neighbor involves just a single basis element.

Similarly one verifies that if \(I_{C'}(i+e(1))\) is a lower intermediate point, and \(j+e(1)=n\) (i.e. 2c for \(\sigma =+1\) is met), then \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))\) has no right neighbors, but in this case \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))=(\bar{D}'_{j+e(1)})_{j+e(1)-1,i+e(1)}\). If 2c is met neither for \(\sigma =1\) nor for \(\sigma =-1\) then \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))\) involves two different basis elements. If 2c is not met for \(\sigma =+1\), then \(h(I_{C'}(i+e(1)), j+e(1),i+e(1))\) has a right neighbor (which necessarily involves but a single basis element) if and only if 2d is met for \(\sigma =+1\).

We can of course apply the same line of reasoning to the left neighbors of \(h(I_{C'}(i-e(-1)), j-e(-1),i-e(-1))\). What we obtain then is the statement that the equivalence class of \(h(I_{C'}(i), j, i)\) with respect to the neighborhood relation contains an element involving only a single basis element if and only if one of the conditions 2a–2d is met for either \(\sigma =+1\) or \(\sigma =-1\).

Now one just has to realize that if some element in the equivalence class of \(h(I_{C'}(i), j, i)\) involves just a single basis element, then any basis element involved in any element of the equivalence class can be written as a linear combination of the elements of the equivalence class. Conversely, if every element of the equivalence class of \(h(I_{C'}(i), j, i)\) involves two basis elements, then no basis element involved in any of the elements of the equivalence class can be written as a linear combination of elements of the equivalence class (note that this is just linear algebra, since such an equivalence class written as row vectors with respect to the basis consisting of all involved basis elements in the right order, looks like \((1,1,0,\ldots ,0)\), \((0,1,1,0,\ldots ,0)\), ..., \((0,\ldots , 0,1,1)\), and possibly \((1,0,\ldots ,0)\) and/or \((0,\ldots ,0,1)\)).
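
The linear algebra at the end of the proof can be checked numerically on small cases. The sketch below works over the rational numbers, which is an assumption made for illustration, since the ground field k of the text is arbitrary. The helper names `rank`, `in_span`, `chain` and `unit` are hypothetical. It tests whether a unit vector lies in the span of the rows \((1,1,0,\ldots ,0)\), ..., \((0,\ldots ,0,1,1)\), with or without an additional row having a single non-zero entry.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a list of rows over the rationals, by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    ncols = len(m[0]) if m else 0
    r = 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][col] != 0:
                factor = m[i][col] / m[r][col]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def in_span(vector, rows):
    """True if vector is a linear combination of rows."""
    return rank(rows + [vector]) == rank(rows)


def chain(n, left_end=False, right_end=False):
    """Rows (1,1,0,...), (0,1,1,0,...), ..., optionally with end rows."""
    rows = [[1 if c in (k, k + 1) else 0 for c in range(n)] for k in range(n - 1)]
    if left_end:
        rows.append([1] + [0] * (n - 1))
    if right_end:
        rows.append([0] * (n - 1) + [1])
    return rows


def unit(n, k):
    """The k-th standard basis vector of length n."""
    return [1 if c == k else 0 for c in range(n)]


n = 4
none_without_end = all(not in_span(unit(n, k), chain(n)) for k in range(n))
all_with_end = all(in_span(unit(n, k), chain(n, left_end=True)) for k in range(n))
```

Without an end row, no basis element lies in the span, because the alternating sum of coordinates vanishes on every row. A single end row makes every basis element a combination of the rows.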

Proposition 7

Let \(A=kQ/I\) be a string algebra and let C be a string. Denote by \(T(C)^\bullet \in \mathcal K^b(\mathbf {proj}_A)\) a minimal projective presentation of M(C). Let e be a vertex of Q, and denote by \(P_e^\bullet \) the stalk complex belonging to the projective indecomposable \(P_e\). Then we always have \({{\mathrm{Hom}}}_{\mathcal K^b(\mathbf {proj}_A)}(T(C)^\bullet , P_e^\bullet [2])=0\), and \({{\mathrm{Hom}}}_{\mathcal K^b(\mathbf {proj}_A)}(P_e^\bullet [1], T(C)^\bullet [1])=0\) if and only if e is not in the support of C.

Proof

Analogous to Proposition 6. \(\square \)

These propositions allow us to give a combinatorial characterisation of the indecomposable \(\tau \)-rigid modules over a string algebra.

Theorem 12

Let \(A=kQ/I\) be a string algebra.

-

1.

There are bijections

$$\begin{aligned} \begin{array}{c} \{ \text {presilting strings for } A \} \\ \updownarrow \\ \{\text {indecomposable } \tau \text {-rigid } A\text {-modules} \} \\ \updownarrow \\ \{ \text {indecomposable rigid two-term complexes } T^\bullet \in \mathcal K^b(\mathbf {proj}_A)\text { with } \mathrm{H}^0(T^\bullet ) \ne 0 \} \end{array} \end{aligned}$$where the first bijection is given by the correspondence between strings and indecomposable A-modules, and the second bijection is given by taking a minimal projective presentation of an indecomposable \(\tau \)-rigid module and, in the other direction, taking homology in degree zero.

-

2.

If \(\{C(1),\ldots , C(l)\}\) is a collection of presilting strings, and \(\{e(1), \ldots , e(m)\}\) is a collection of vertices of Q, then

$$\begin{aligned} \left( \bigoplus _{i=1}^l M(C(i)),\ \bigoplus _{j=1}^{m} P_{e(j)} \right) \end{aligned}$$is a support \(\tau \)-tilting pair if and only if \(l+m =|A|\), each C(i) is C(j)-presilted for all \(i,j\in \{1,\ldots , l\}\), and none of the e(j)’s is in the support of any of the C(i)’s.

-

3.

If \(\{C(1),\ldots , C(l)\}\), \(\{e(1), \ldots , e(m)\}\) and \(\{D(1),\ldots , D(l')\}\),\(\{f(1),\ldots ,f(m') \}\) both give rise to a support \(\tau \)-tilting module in the sense of the previous point, say M and N, then \(M \ge N\) if and only if D(i) is C(j)-presilted for all i, j.

In fact, Theorem 12 shows that there is a combinatorial algorithm to determine the indecomposable \(\tau \)-rigid modules, the support \(\tau \)-tilting modules and the mutation quiver of a string algebra, provided A has only finitely many indecomposable \(\tau \)-rigid modules. The steps involved are as follows:

-

1.

Run through a list of all strings up to a given length, check which of these strings are presilting and which vertices lie in their support.

-

2.

Determine the support \(\tau \)-tilting modules involving only the presilting strings from Step 1 using Theorem 12(2).

-

3.

Determine which of the support \(\tau \)-tilting modules from Step 2 are mutations of one another, which gives a subquiver of the Hasse quiver of \(s\tau \text {-tilt}\) (the direction of the arrows follows from Theorem 12(3) above).

-

4.

If each vertex in the quiver has exactly |A| neighbors, then we are done by Theorem 6 and Proposition 1. Otherwise we need to use a bigger maximal length in Step 1 and start over.

Of course, in practice, this can be done somewhat more efficiently.
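
The enumeration part of Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used by the authors: the ideal I is assumed to be generated by paths, each given as a list of arrow names, and the function name `enumerate_strings` as well as the example quiver are hypothetical. The presilting test of Definition 9 and the support test of Definition 10 are not implemented here.

```python
def enumerate_strings(arrows, relations, max_length):
    """List all strings of length 1..max_length as tuples of (name, sign).

    arrows maps an arrow name to (source, target); relations is a list of
    paths (lists of arrow names) generating the ideal I.
    """
    letters = [(name, sign) for name in arrows for sign in (1, -1)]

    def source(letter):
        name, sign = letter
        return arrows[name][0] if sign == 1 else arrows[name][1]

    def target(letter):
        name, sign = letter
        return arrows[name][1] if sign == 1 else arrows[name][0]

    # A word is forbidden if it contains a relation or the inverse of one.
    forbidden = set()
    for rel in relations:
        forbidden.add(tuple((name, 1) for name in rel))
        forbidden.add(tuple((name, -1) for name in reversed(rel)))

    def ends_in_forbidden(word):
        return any(word[-len(f):] == f for f in forbidden if len(f) <= len(word))

    strings = []
    frontier = [(c,) for c in letters if not ends_in_forbidden((c,))]
    for _ in range(max_length):
        strings.extend(frontier)
        longer = []
        for word in frontier:
            last = word[-1]
            for letter in letters:
                if letter == (last[0], -last[1]):
                    continue  # c_i must differ from the inverse of c_{i+1}
                if target(last) != source(letter):
                    continue  # consecutive letters must be composable
                candidate = word + (letter,)
                if not ends_in_forbidden(candidate):
                    longer.append(candidate)
        frontier = longer
    return strings


# Hypothetical example: the linearly oriented quiver 1 -> 2 -> 3.
arrows = {"a": (1, 2), "b": (2, 3)}
without_relations = enumerate_strings(arrows, [], 5)
with_relation = enumerate_strings(arrows, [["a", "b"]], 5)
```

Each string is listed together with its inverse, so the output has to be reduced modulo inversion before strings are matched with string modules. It suffices to test only the end of each new word against the relations, because every shorter prefix has already been tested.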

Example 3

(cf. [20]) Consider the quiver

and define \(A=kQ/I\), where

Then

is central in A. We have

So by Theorem 11 the poset of 2-term silting complexes over A is isomorphic to the poset of 2-term silting complexes over kQ / J, which is a string algebra.

In the same vein, the algebra

also has kQ / J as a central quotient, because z obviously remains central modulo \(\beta _n\cdot \alpha _{n-1}\).

This shows that A, which is the Auslander algebra of \(k[x]/(x^n)\), and B, which is the preprojective algebra of type \(A_n\), have isomorphic posets of 2-term silting complexes. In fact, Theorem 11 immediately recovers all of [20, Theorem 5.3]. Now by a result of Mizuno [24], the poset of 2-term silting complexes over B is isomorphic to the group \(S_{n+1}\) with the generation order as its poset structure.

One could in principle try to reprove that last assertion using string combinatorics for the algebra kQ / J, but it is not clear whether this would make matters easier. However, what we can easily see is that each string for kQ / J is presilting (and strings can easily be counted in this case), and hence both A and B have exactly \(2\cdot (2^n-1) - n\) indecomposable \(\tau \)-rigid modules. Note that for \(n=3\), the algebra kQ / J is equal to the algebra R(3C) given in the appendix. R(3C) has, as expected, 24 support \(\tau \)-tilting modules and 11 indecomposable \(\tau \)-rigid modules, and all (presilting) strings are listed in Fig. 4.
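
The two counts quoted for \(n=3\) can be checked with a few lines of arithmetic. The function names are hypothetical. The first formula is the one stated in the text, and the second is the order of the symmetric group \(S_{n+1}\) appearing in the result of Mizuno cited there.

```python
from math import factorial


def count_indecomposable_tau_rigid(n):
    """The count 2 * (2^n - 1) - n stated in the text."""
    return 2 * (2**n - 1) - n


def count_support_tau_tilting(n):
    """The order of the symmetric group S_{n+1}."""
    return factorial(n + 1)


rigid_3 = count_indecomposable_tau_rigid(3)  # 2 * 7 - 3 = 11
tilting_3 = count_support_tau_tilting(3)     # 4! = 24
```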

Remark 5

Suppose \(A=kQ/I\) is a special biserial algebra and denote by \({\mathcal P}\) a full set of non-isomorphic indecomposable projective-injective non-uniserial A-modules. Then it is well known (see for example [30]) that the quotient algebra

$$\begin{aligned} B := A \Big / \bigoplus _{P\in {\mathcal P}} {\text {soc}}(P) \end{aligned}$$

is a string algebra. By [1, Theorem B], the support \(\tau \)-tilting modules of A can be explicitly computed from those of B, so the techniques in this section can be used for arbitrary special biserial algebras.

6 Blocks of group algebras

Now we will apply our Theorem 11 and the results of Sect. 5 to blocks of group algebras. Throughout this section, k is an algebraically closed field of characteristic p, and G is a finite group. Remember that since (blocks of) kG are symmetric, two-term silting complexes are in fact tilting.

6.1 \(\tau \)-tilting-finite blocks

Let us first recall the definition of \(\tau \)-tilting-finiteness, which was already briefly mentioned in the introduction.

Definition 13

(\(\tau \)-tilting-finite algebras) An algebra is called \(\tau \)-tilting-finite if there are only finitely many isomorphism classes of basic support \(\tau \)-tilting modules.

There is a nice sufficient criterion for \(\tau \)-tilting-finiteness, which is particularly useful for blocks.

Theorem 13

Let A be a symmetric algebra with positive definite Cartan matrix and a derived equivalence class in which the entries of the Cartan matrices are bounded. Then A is \(\tau \)-tilting-finite.

Proof

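For a two-term tilting complex \(T=T_1 \oplus \cdots \oplus T_l\) (remember that \(l=|A|\)), write

\[T_i = \Big(\bigoplus_{j=1}^{l} P_j^{t_{ij}^-} \longrightarrow \bigoplus_{j=1}^{l} P_j^{t_{ij}^+}\Big),\]

with \(P_1,\ldots ,P_l\) the indecomposable projective A-modules,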

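and consider \(B={\text {End}}_{{\mathcal K}^b(\mathbf {proj}_A)}(T)\). Denote by \(C_A\) (respectively \(C_B\)) the Cartan matrix of A (respectively B), and denote by \(\chi \) the Euler form on \(K_0(\mathbf {proj}_A)\). Then:

\[(C_B)_{ij} = \dim _k {\text {Hom}}_{{\mathcal K}^b(\mathbf {proj}_A)}(T_i,T_j) = \chi (T_i,T_j) = \sum _{r,s=1}^{l} (t_{ir}^+ - t_{ir}^-)\,(C_A)_{rs}\,(t_{js}^+ - t_{js}^-),\]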

where in the second equality we used that the \(T_i\) are tilting. Defining \(M \in M_l({\mathbb Z})\) by \(M_{ij}=t_{ij}^+ - t_{ij}^-\), we obtain

\[C_B = M C_A M^{t}. \tag{6.1}\]

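For a fixed \(C_B\), there are only finitely many such M. To see this, suppose that \(M' \in M_l({\mathbb Z})\) also satisfies \(C_B=M'C_AM'^{t}\). Since M is invertible over \({\mathbb Z}\) (the g-vectors of the \(T_i\) form a basis of \(K_0(\mathbf {proj}_A)\)), it follows that

\[C_A = (M^{-1}M')\, C_A\, (M^{-1}M')^{t},\]

which shows that \(M^{-1}M' \in O({\mathbb Z}^l,C_A)\), the group of orthogonal, integral matrices preserving \(C_A\). Since the Cartan matrix \(C_A\) is positive definite by assumption, this is a finite group.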

As we assume that the entries of the Cartan matrices of algebras derived equivalent to A are bounded, it follows that only finitely many matrices occur as Cartan matrices of algebras in the derived equivalence class of A (as there are only finitely many \(l\times l\)-matrices with integer entries between 0 and the assumed upper bound). For each of these finitely many Cartan matrices, there are only finitely many matrices M satisfying (6.1). Since the matrix M above is just the matrix of g-vectors of the \(T_i\), this matrix already determines the tilting complex T by Theorem 8, so we are done.
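The finiteness of the group of integral matrices preserving a positive definite form can be made concrete by brute force. The sketch below is only an illustration under stated assumptions: the matrix `C` is a small positive definite symmetric matrix chosen for the example (it is not taken from the source), the names `form` and `integral_isometries` are hypothetical, and the search box `bound` is assumed to be large enough to contain every solution, which holds here because positive definiteness bounds the entries of each row.

```python
from itertools import product


def form(v, w, C):
    """Evaluate the bilinear form v * C * w^T."""
    n = len(C)
    return sum(v[i] * C[i][j] * w[j] for i in range(n) for j in range(n))


def integral_isometries(C, bound):
    """All integer matrices M with entries in [-bound, bound] and M C M^T = C.

    C is assumed to be symmetric, so it suffices to check the entries on and
    above the diagonal of M C M^T.
    """
    l = len(C)
    candidates = list(product(range(-bound, bound + 1), repeat=l))
    found = []

    def extend(rows):
        i = len(rows)
        if i == l:
            found.append(tuple(rows))
            return
        for v in candidates:
            # Row i must have the right self-pairing ...
            if form(v, v, C) != C[i][i]:
                continue
            # ... and the right pairing with every earlier row.
            if all(form(rows[k], v, C) == C[k][i] for k in range(i)):
                extend(rows + [v])

    extend([])
    return found


# Illustrative positive definite symmetric matrix (an assumption, not from the source).
C = [[2, 1], [1, 2]]
isometries = integral_isometries(C, bound=2)
print(len(isometries))
```

For this choice of `C` the search finds 12 matrices, so a fixed target matrix \(C_B\) admits at most 12 solutions M of (6.1) in this toy case.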

Now we would like to get some idea of how, if at all, \(\tau \)-tilting-finiteness of a block relates to its defect group and representation type. The following theorem determines the representation type of (blocks of) group algebras.

Theorem 14

[10, 12, 16] Let B be a block of kG and let P be a defect group of B. The block algebra B and the group algebra kP have the same representation type. Moreover:

1. kP is of finite type if P is cyclic.

2. kP is of tame type if \(p=2\) and P is the Klein four-group, or a generalized quaternion, dihedral or semi-dihedral group.

3. In all other cases kP is of wild type.
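The trichotomy of Theorem 14 can be written as a small lookup. The following is a minimal sketch, assuming the caller supplies the characteristic p and a string label for the isomorphism type of the defect group; the labels and the function name `representation_type` are hypothetical conventions introduced here, and the labels "dihedral", "semidihedral" and "generalized quaternion" are assumed to refer to non-cyclic 2-groups.

```python
def representation_type(p, defect_group):
    """Representation type of kP, and of any block with defect group P, following Theorem 14.

    `defect_group` is a hypothetical label such as "cyclic", "klein four",
    "dihedral", "semidihedral" or "generalized quaternion"; any other label
    is treated as falling under "all other cases".
    """
    if defect_group == "cyclic":
        return "finite"
    tame_families = {"klein four", "dihedral", "semidihedral", "generalized quaternion"}
    if p == 2 and defect_group in tame_families:
        return "tame"
    return "wild"


print(representation_type(2, "dihedral"))
print(representation_type(3, "elementary abelian of rank 2"))
```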

Corollary 4

There exist \(\tau \)-tilting-finite blocks of group algebras of every representation type and of arbitrarily large defect.

Proof

Let G denote a finite p-group, so kG is local with defect group G. It is easy to see that local algebras are \(\tau \)-tilting-finite, and by Theorem 14 they can be of arbitrary representation type.

A more interesting question is whether there exist non-local blocks of group algebras which are \(\tau \)-tilting-finite but not representation finite. Using Theorem 11 we can show the following:

Theorem 15

There exist non-local \(\tau \)-tilting-finite wild blocks of group algebras with arbitrarily large defect groups, in the sense that every p-group occurs as a subgroup of the defect group of a \(\tau \)-tilting-finite non-local block.

Proof

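Assume B is a block of kG, with defect group P. For Q an arbitrary p-group, the algebra \(kQ \otimes _k B\) is a block of \(k(Q \times G)\) with defect group \(Q \times P\) (see for example [8, Ch. IV, §15, Lemma 6]). Since Q is a p-group, there is a non-trivial element \(z \in Z(Q)\). The element \((z-1)\otimes 1\) is central and nilpotent, hence lies in the radical of \(kQ \otimes _k B\), and we can form the quotient

\[(kQ \otimes _k B)/\big((z-1)\otimes 1\big) \cong k\bar{Q} \otimes _k B\]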

with \(\bar{Q}=Q/\langle z \rangle \). Since \(\bar{Q}\) is again a p-group and Q is finite we can keep repeating this until we get B as a quotient. Now Theorem 11 provides a bijection between the support \(\tau \)-tilting modules for B and the support \(\tau \)-tilting modules for \(kQ \otimes _k B\), so it suffices to take for B a block of cyclic defect or (as we will see below) a tame block, to obtain examples as in the statement of the theorem.

6.2 Tame blocks

In [14], Erdmann determined the basic algebras of all algebras satisfying the following definition, which is satisfied in particular by all tame blocks of group algebras.

Definition 14

A finite-dimensional algebra A defined over an algebraically closed field k of arbitrary characteristic is of dihedral, semidihedral or quaternion type if it satisfies the following conditions:

1. A is tame, symmetric and indecomposable.

2. The Cartan matrix of A is non-singular.

3. The stable Auslander–Reiten quiver of A has the following properties:

| | Dihedral type | Semidihedral type | Quaternion type |
|---|---|---|---|
| Tubes: | Rank 1 and 3 | Rank \(\le 3\) | Rank \(\le 2\) |
| | At most two 3-tubes | At most one 3-tube | |
| Others: | \({\mathbb Z}A_{\infty }^{\infty }/\Pi \) | \({\mathbb Z}A_{\infty }^{\infty }\) and \({\mathbb Z}D_{\infty }\) | |

The Appendix of Erdmann [14] furnishes a complete list of basic algebras satisfying said definition. Later, Holm [17] showed that non-local tame blocks must actually be of one of the following types:

- Dihedral: \(D(2A), D(2B), D(3A), D(3B)_1, D(3K)\)

- Semidihedral: \(SD(2A)_{1,2}, SD(2B)_{1,2}, SD(3A)_1, SD(3B)_{1,2}, SD(3C), SD(3D), SD(3H)\)

- Quaternion: \(Q(2A), Q(2B)_1, Q(3A)_2, Q(3B), Q(3K)\)

We can show that all tame blocks are \(\tau \)-tilting-finite, even without looking at Erdmann’s classification [14] in greater detail. Namely, the class of algebras defined in Definition 14 is closed under derived equivalences (cf. [18, Proposition 2.1]), and it follows from [14] that the entries of the Cartan matrices of algebras in the derived equivalence class of an algebra satisfying Definition 14 are bounded (to see this one has to use the fact that the dimension of the center is a derived invariant). Moreover, a block of a group algebra always has a positive definite Cartan matrix. Hence, Theorem 13 implies that all tame blocks are \(\tau \)-tilting-finite.

So it is in principle possible to completely classify the two-term tilting complexes over tame blocks, and describe the associated Hasse quivers (see Theorem 4 for the poset structure). Using Theorem 11 and the results on string algebras, we are able to achieve this (in fact, for all algebras in Erdmann’s list, not just blocks). In Appendix 7, we provide the presentations of the algebras from the appendix of [14], along with central elements and the quotients one obtains. A direct application of Theorem 11 then reduces the computation of g-vectors and Hasse quivers for all tame blocks to the same computation for five explicitly given finite dimensional algebras (with trivial center): R(2AB), R(3ABD), R(3C), R(3H) and R(3K) (see Table 2 below), which do not depend on any extra data. The computation of g-vectors and Hasse quivers for all algebras of dihedral, semidihedral or quaternion type reduces to the same computation for the aforementioned five algebras, and in addition the five algebras W(2B), W(3ABC), \(W(Q(3A)_1)\), W(3F) and W(3QLR) (also given in Table 2). The following theorem follows immediately from these considerations.

Theorem 16

All algebras of dihedral, semidihedral or quaternion type are \(\tau \)-tilting-finite and their g-vectors and Hasse quivers (of the poset of support \(\tau \)-tilting modules) are independent of the characteristic of k and the parameters involved in the presentations of their basic algebras.

If we restrict our attention to tame blocks of group algebras, the results listed in Appendix 7 imply that the g-vectors and Hasse quiver for such a block depend only on the Ext-quiver of its basic algebra. The isomorphism class of the defect group (dihedral, semidihedral or quaternion) does not play a role.

Corollary 5

All tilting complexes over an algebra A of dihedral, semidihedral, or quaternion type can be obtained from the regular module \(A_A\) by iterated tilting mutation.

Proof

By [18, Proposition 2.1], the class of algebras satisfying Definition 14 is closed under derived equivalence, so the result follows immediately from Proposition 2 and Theorem 7.

Since R(2AB), R(3ABD), R(3C), R(3H), R(3K), W(2B), W(3ABC), \(W(Q(3A)_1)\), W(3F) and W(3QLR) are all string algebras, one can go further and actually compute (using the results in Sect. 5) the g-vectors and Hasse quivers of the poset of support \(\tau \)-tilting modules for all algebras of dihedral, semidihedral and quaternion type. For details we refer to Appendix 7.

References

Adachi, T.: The classification of \(\tau \)-tilting modules over Nakayama algebras, arXiv preprint arXiv:1309.2216 (2013)

Adachi, T.: \(\tau \)-rigid-finite algebras with radical square zero. In: Proceedings of the 47th Symposium on ring theory and representation theory, Symp. Ring Theory Represent. Theory Organ. Comm., Okayama, pp 1–6 (2016)

Adachi, T., Aihara, T., Chan, A.: Tilting Brauer graph algebras I: classification of two-term tilting complexes, arXiv preprint arXiv:1504.04827 (2015)

Adachi, T., Iyama, O., Reiten, I.: \(\tau \)-tilting theory. Compos. Math. 150(3), 415–452 (2014)

Aihara, T.: Tilting-connected symmetric algebras. Algebr. Represent. Theory 16(3), 873–894 (2013). (MR 3049676)

Aihara, T., Iyama, O.: Silting mutation in triangulated categories. J. Lond. Math. Soc. (2) 85(3), 633–668 (2012)

Aihara, T., Mizuno, Y.: Tilting complexes over preprojective algebras of Dynkin type. In: Proceedings of the 47th Symposium on ring theory and representation theory, Symp. ring theory represent. Theory Organ. Comm., Okayama, pp 14–19 (2015)

Alperin, J.L.: Local representation theory. Modular representations as an introduction to the local representation theory of finite groups. Cambridge Studies in Advanced Mathematics, vol. 11. Cambridge University Press, Cambridge (1986) (MR 860771). https://doi.org/10.1017/CBO9780511623592

Antipov, M.A.: Derived equivalence of symmetric special biserial algebras, Zap. Nauchn. Sem. S.-Peterburg. Otdel. Mat. Inst. Steklov. (POMI) 343 (2007), no. Vopr. Teor. Predts. Algebr. i Grupp. 15, 5–32, 272 (MR 2469411)

Bondarenko, V.M., Drozd, J.A.: The representation type of finite groups, Zap. Naučn. Sem. Leningrad. Otdel. Mat. Inst. Steklov. (LOMI) 71 (1977), 24–41, 282, Modules and representations

Borel, A.: Linear algebraic groups. In: Graduate Texts in Mathematics, second ed., vol. 126. Springer-Verlag, New York (1991). https://doi.org/10.1007/978-1-4612-0941-6

Brenner, S.: Modular representations of \(p\) groups. J. Algebra 15, 89–102 (1970)

Butler, M.C.R., Ringel, C.M.: Auslander-Reiten sequences with few middle terms and applications to string algebras. Comm. Algebra 15(1–2), 145–179 (1987)

Erdmann, K.: Blocks of tame representation type and related algebras. Lecture notes in mathematics, vol. 1428. Springer-Verlag, Berlin (1990)

The GAP Group, GAP – Groups, Algorithms, and Programming, Version 4.8.2, (2016)

Higman, D.G.: Indecomposable representations at characteristic \(p\). Duke Math. J. 21, 377–381 (1954)

Holm, T.: Derived equivalent tame blocks. J. Algebra 194(1), 178–200 (1997)

Holm, T.: Derived equivalence classification of algebras of dihedral, semidihedral, and quaternion type. J. Algebra 211(1), 159–205 (1999)

Hoshino, M., Kato, Y.: Tilting complexes defined by idempotents. Commun. Algebra 30(1), 83–100 (2002)

Iyama, O., Zhang, X.: Classifying \(\tau \)-tilting modules over the Auslander algebra of \(K[x]/(x^{n})\), arXiv preprint arXiv:1602.05037 (2016)

Jensen, B.T., Su, X., Zimmermann, A.: Degenerations for derived categories. J. Pure Appl. Algebra 198(1–3), 281–295 (2005)

Keller, B.: On the construction of triangle equivalences, Derived equivalences for group rings, Lecture Notes in Math., vol. 1685, Springer, Berlin, 1998, pp. 155–176

Keller, B., Vossieck, D.: Aisles in derived categories, Bull. Soc. Math. Belg. Sér. A 40 (1988), no. 2, 239–253, Deuxième Contact Franco-Belge en Algèbre (Faulx-les-Tombes, 1987)

Mizuno, Y.: Classifying \(\tau \)-tilting modules over preprojective algebras of Dynkin type. Math. Z. 277(3–4), 665–690 (2014)

Okuyama, T.: Some examples of derived equivalent blocks of finite groups, preprint (1997)

Oppermann, S.: Quivers for silting mutation, arXiv preprint arXiv:1504.02617 (2015)

The QPA-team, QPA - Quivers, path algebras and representations, Version 1.23, (2015)

Rickard, J.: Derived categories and stable equivalence. J. Pure Appl. Algebra 61(3), 303–317 (1989)

Schroll, S.: Trivial extensions of gentle algebras and Brauer graph algebras. J. Algebra 444, 183–200 (2015)

Skowroński, A., Waschbüsch, J.: Representation-finite biserial algebras. J. Reine Angew. Math. 345, 172–181 (1983)