Abstract

We consider sequential multi-player games with perfect information and with deterministic transitions. The players receive a reward upon termination of the game, which depends on the state where the game was terminated. If the game does not terminate, then the rewards of the players are equal to zero. We prove that, for every game in this class, a subgame perfect \(\varepsilon \)-equilibrium exists, for all \(\varepsilon > 0\). The proof is constructive and suggests a finite algorithm to calculate such an equilibrium.

1 Introduction

We study multi-player games where play proceeds from one state to another and where each transition is decided by one of the players. That is, each state is controlled by one of the players and it is the controlling player of a state who decides what the next state will be. We do not consider chance moves in our model and the number of states is finite. The players receive a (possibly negative) reward upon termination of the game. Termination is decided by the controlling player of the active state, who always has this option instead of moving to another state. The rewards to the players depend on the state where the game is terminated. Infinite play is a possibility in these games, as no player is forced to terminate the game (unless the structure of the game leaves him no other option). Infinite play is associated with a zero reward for all players.

Our games belong to the more general class of dynamic games with perfect information, which have numerous applications in economic theory, computer science, and other disciplines. One of the main goals in the literature has always been to identify conditions that guarantee the existence of a subgame perfect equilibrium, or at least of a subgame perfect \(\varepsilon \)-equilibrium for every positive error term \(\varepsilon \). For our class of games, we prove the existence of a subgame perfect \(\varepsilon \)-equilibrium, for every \(\varepsilon >0\). Our existence result extends several earlier results, where further restrictions were imposed on either the transition structure or the reward structure of the game. A subgame perfect \(\varepsilon \)-equilibrium, for every \(\varepsilon >0\), was previously established in games with only nonnegative rewards (Flesch et al. 2010a), in free transition games (Kuipers et al. 2013), and in games where each player only controls one state (Kuipers et al. 2016). In the literature, we can also find sufficient conditions for other classes of games, such as in the classical papers by Fudenberg and Levine (1983) and Harris (1985), and more recently, in the papers by Solan and Vieille (2003), Flesch et al. (2010b), Purves and Sudderth (2011), Brihaye et al. (2013), Roux and Pauly (2014), Flesch and Predtetchinski (2016), Roux (2016), Mashiah-Yaakovi (2014), Cingiz et al. (2019) and Flesch et al. (2019). We further refer to the recent book by Alós-Ferrer and Ritzberger (2016), and the surveys by Jaśkiewicz and Nowak (2016) and Bruyère (2017).

In most economic models, payoffs are bounded and discounted, and this automatically guarantees continuity at infinity, a condition defined by Fudenberg and Levine (1983). For the topological meaning of continuity at infinity, we refer to Alós-Ferrer and Ritzberger (2016, 2017). Even though in our model payoffs are not discounted, our results have an implication for the discounted case. Indeed, the joint strategies that we construct are subgame perfect \(\varepsilon \)-equilibria not only in the undiscounted game, but also in the discounted game, provided that the discount factor is sufficiently close to 1 [cf. the notion of uniform \(\varepsilon \)-equilibrium, e.g., the survey by Jaśkiewicz and Nowak (2016)]. The strategy profile is thus independent of the discount factor, provided it is large enough, so knowledge of the exact discount factor is not required.

The undiscounted game on its own is also interesting in the context of negotiations or delegation problems when there is no specific deadline for an agreement. An example can be found in the paper by Bloch (1996), where the negotiation process for coalition formation is modeled as a positive recursive game. A positive recursive model is limited to situations where any agreement is always better than no agreement, for all players. The generalization to recursive games that are not necessarily positive removes this limitation and allows for models in which some players may wish to sabotage certain outcomes.

The relevance of our paper, we think, mostly lies in the insight it provides into the structure of equilibria in perfect information games with deterministic transitions. Let us briefly discuss this. In general, one can distinguish two essentially different reasons why an error term may be needed for equilibrium play in a dynamic game. It could be that, in subgame perfect \(\varepsilon \)-equilibrium play, every action that is played with positive probability gives the player a reward that is very close to or equal to his initially expected reward. Let us say that this is an error term of the first type. It could also be that, in subgame perfect \(\varepsilon \)-equilibrium play, a player must place a very small probability on an action that leads to a substantially lower reward than initially expected. Let us say that this is an error term of the second type. For a game in our class, if an error term \(\varepsilon \) is required, it is always of the second type, and the suboptimal action that is played with small probability invariably serves as a threat to one of the other players to make him follow the plan (Fig. 1).

Example 1

The following game was introduced in Solan and Vieille (2003). There are two players, player 1 and player 2, and two states, \(s_1\) and \(s_2\). Player 1 controls state \(s_1\) and player 2 controls state \(s_2\). In state \(s_1\), player 1 has two actions: he can nominate state \(s_2\), or terminate the game with reward \(-1\) for himself and reward 2 for player 2. In state \(s_2\), player 2 has two actions: he can nominate state \(s_1\), or terminate the game with reward \(-2\) for player 1 and reward 1 for himself. If no player ever terminates the game, then the reward is 0 for each player. This game is represented in Fig. 1.

Player 1 prefers that the game never terminates, as he would then obtain reward 0, whereas he obtains a negative reward, either \(-1\) or \(-2\), upon termination. Player 2 is interested in termination of the game. He can always force termination at state \(s_2\) with reward 1 for himself, but he prefers that player 1 terminates at state \(s_1\), in which case he obtains reward 2. Notice that, if the game terminates, then player 1 also prefers termination at state \(s_1\) to termination at \(s_2\).

As Solan and Vieille (2003) show, this game has the following two important properties: There is no subgame perfect \(\varepsilon \)-equilibrium in pure strategies for small \(\varepsilon >0\), and there is no subgame perfect 0-equilibrium, not even in randomized strategies.

Nevertheless, they show that the following stationary strategies constitute a subgame perfect \(\varepsilon \)-equilibrium for \(\varepsilon \in (0,1)\): player 1 in state \(s_1\) terminates with probability 1 (regardless of the history), whereas player 2 in state \(s_2\) nominates state \(s_1\) with probability \(1-\varepsilon \) and terminates with probability \(\varepsilon \) (regardless of the history).

Let us briefly argue that this strategy profile is indeed a subgame perfect \(\varepsilon \)-equilibrium. It is easy to see that player 2 cannot improve his reward by more than \(\varepsilon \), as his expected reward, when starting in state \(s_2\), is \((1-\varepsilon )\cdot 2+\varepsilon \cdot 1=2-\varepsilon \), while no strategy gives him more than 2. Player 1 cannot improve his reward at all. Indeed, player 2’s strategy prescribes termination with the same positive probability \(\varepsilon \) whenever play is in state \(s_2\), so it ensures that the game eventually terminates; since termination at \(s_1\) gives player 1 his best terminal reward \(-1\), he cannot do better than terminating at \(s_1\) himself. Intuitively, termination by player 2 with probability \(\varepsilon \) can be seen as a threat against player 1, which punishes any deviation by player 1. Note that the error term \(\varepsilon \) is of the second type. We remark that all subgame perfect \(\varepsilon \)-equilibria in this game have this feature: player 2 threatens player 1 with termination at \(s_2\). \(\square \)
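The expected rewards in this argument can be checked mechanically. The following Python sketch is illustrative only: the function `expected_rewards`, the state labels and the restriction to stationary deviations (checked on a finite grid) are our own assumptions, not part of the original analysis. It computes absorption probabilities in Example 1 when player 1 terminates at \(s_1\) with probability p and player 2 terminates at \(s_2\) with probability q.

```python
from fractions import Fraction as F

# Rewards upon termination in Example 1: state -> (reward of player 1, reward of player 2).
REWARDS = {"s1": (F(-1), F(2)), "s2": (F(-2), F(1))}

def expected_rewards(p, q, start):
    """Expected rewards in Example 1 under stationary strategies.

    p: probability that player 1 terminates when s1 is active.
    q: probability that player 2 terminates when s2 is active.
    One visit to each state forms a round; a round passes without
    termination with probability (1 - p) * (1 - q).
    """
    d = 1 - (1 - p) * (1 - q)  # probability that a round ends in termination
    if d == 0:                 # nobody ever terminates: every player gets 0
        return (F(0), F(0))
    if start == "s1":
        prob = {"s1": p / d, "s2": (1 - p) * q / d}
    else:
        prob = {"s2": q / d, "s1": (1 - q) * p / d}
    return tuple(sum(prob[s] * REWARDS[s][i] for s in prob) for i in range(2))

eps = F(1, 10)
grid = [F(k, 20) for k in range(21)]  # stationary deviations checked on a grid
print(expected_rewards(F(1), eps, "s2"))  # player 2 expects 2 - eps from s2
# Player 1 gets at most -1 from s1, whatever stationary p he uses.
print(max(expected_rewards(p, eps, "s1")[0] for p in grid))
# Player 2 gets at most 2 from s2, i.e. he gains at most eps.
print(max(expected_rewards(F(1), q, "s2")[1] for q in grid))
```

Exact fractions are used so that the equalities \(2-\varepsilon \) and \(-1\) can be compared without rounding.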

Flesch et al. (2010a), who studied perfect information games with positive recursive rewards and with chance moves, showed that a subgame perfect \(\varepsilon \)-equilibrium exists for every \(\varepsilon > 0\) in their model. Here, the error term \(\varepsilon \) is always of the first type and arises as a consequence of chance moves in the model. Indeed, they showed that, in the absence of chance moves, a subgame perfect equilibrium in pure strategies exists. When chance moves are eliminated from the model studied by Flesch et al. (2010a), we obtain a special case of the model we study here, with nonnegative rewards. Thus, the error term \(\varepsilon \) is not needed when the rewards in our model are nonnegative. Some intuition for this comes from the observation that, when the rewards are all nonnegative, all players have an interest in termination of the game. By contrast, in the presence of negative rewards, some players may want to obstruct termination of the game when they foresee termination with a negative reward for them. This is when a threat, executed with small probability, is necessary to keep such players in check.

Another interesting feature is that subgame perfect \(\varepsilon \)-equilibrium play for a game in our class is mostly deterministic: At most once after each deviation will there be a stage where a small probability is placed on a threat action. In order to play such a strategy, a player needs to have two pieces of data in memory: (i) the most recent deviation from equilibrium play (if there was indeed a deviation) and (ii) whether a lottery took place after the most recent deviation to decide on the execution of a threat action, and if so, the outcome of the lottery.

Example 2

The following instructive game shows that, in order to obtain a subgame perfect \(\varepsilon \)-equilibrium, it may be necessary to make detours in the game, such that the same state must be visited twice, first on the way to reach a player who can execute a threat action, then to reach the player who should terminate the game (Fig. 2).

This game is played by three players and has three states. The controlling player, the state, the possible actions of the controlling player and the rewards upon termination are represented similarly to Example 1. The rewards for players 1 and 2 in states \(s_1\) and \(s_2\) are exactly as in Example 1, i.e., \(-1\) and 2 in state \(s_1\) and \(-2\) and 1 in state \(s_2\). Player 3 can be seen as an additional player, who is not interested in terminating himself, as it gives him the worst possible reward \(-1\).

One can verify that, for \(\varepsilon \in (0,1)\), this game has the following subgame perfect \(\varepsilon \)-equilibrium, which is very similar to the one in Example 1. Player 1 in state \(s_1\) terminates with probability 1 (regardless of the history). Player 2 in state \(s_2\) nominates state \(s_3\) with probability \(1-\varepsilon \) and terminates with probability \(\varepsilon \) (regardless of the history). Player 3’s strategy is not stationary: if state \(s_3\) is reached from state \(s_1\), then player 3 nominates state \(s_2\) with probability 1, whereas if state \(s_3\) is reached from state \(s_2\), then player 3 nominates state \(s_1\) with probability 1.

In this strategy profile, if player 1 deviates by nominating state \(s_3\), then instead of moving back to state \(s_1\) directly, a detour is made via player 2, because player 2 is the only player who has a threat action against player 1. One can verify that such a detour, at least with a positive probability, is necessary to obtain a subgame perfect \(\varepsilon \)-equilibrium. This underlines the difficulty of constructing subgame perfect \(\varepsilon \)-equilibria in our class of games. We remark that, in more complex games, a threat is sometimes not immediate termination by a player with small probability, but rather a complete sequence of actions that a player can start with small probability. \(\square \)

Interestingly, our analysis shows that just one computational effort suffices to find a subgame perfect \(\varepsilon \)-equilibrium for every \(\varepsilon > 0\). The only difference between these equilibria is the probability with which a threat action should be executed. Examples 1 and 2 indicate how this works. The analysis in this paper suggests that it is unlikely that such a computation can be done efficiently: A naive implementation of the procedure we propose in this paper obviously requires super-exponential time. This is in contrast with the situation of nonnegative rewards, for which Flesch et al. (2010a) proved that a subgame perfect equilibrium can be computed in polynomial time.

Although the exact computation of a subgame perfect \(\varepsilon \)-equilibrium likely becomes intractable already for moderately sized problems, our results are probably useful for finding solutions of good quality. As an illustration, let us see what happens if we introduce a discount factor \(\beta \in (0,1)\) in the model to simplify the analysis. It follows from a result by Fink (1964) and Takahashi (1964) that the discounted model has a subgame perfect equilibrium in stationary strategies. For Example 1, we then have precisely one stationary equilibrium, which is also subgame perfect, and where both players should terminate the game with probability \(\frac{1-\beta ^2}{\beta (2-\beta )}\) when they are active. This means that the game will terminate with probability 1 and, when \(\beta \) is close to 1, each of the two states has a probability of approximately \(\frac{1}{2}\) of being the state where the game terminates. This totally ignores the fact that, given termination of the game, both players have an interest in termination at state \(s_1\).
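The stationary probability \(\frac{1-\beta ^2}{\beta (2-\beta )}\) can be checked numerically. The Python sketch below assumes one particular timing convention: a terminating player receives his reward immediately, while moving to the other state multiplies the continuation value by \(\beta \). This convention is our assumption; it reproduces the formula, but the text does not spell it out. The function names are hypothetical.

```python
from fractions import Fraction as F

def stationary_prob(beta):
    """Termination probability of both players in the discounted Example 1."""
    return (1 - beta**2) / (beta * (2 - beta))

def values(p, q, beta):
    """Discounted values under stationary (p, q), assuming immediate rewards on
    termination and a factor beta per move. Returns a dict (player, state) -> value,
    obtained by solving
        v(s1) = p*r(s1) + (1-p)*beta*v(s2),  v(s2) = q*r(s2) + (1-q)*beta*v(s1).
    """
    k = 1 - (1 - p) * (1 - q) * beta**2
    out = {}
    for player, (r1, r2) in {1: (-1, -2), 2: (2, 1)}.items():
        out[player, "s1"] = (p * r1 + (1 - p) * beta * q * r2) / k
        out[player, "s2"] = (q * r2 + (1 - q) * beta * p * r1) / k
    return out

beta = F(9, 10)
p = q = stationary_prob(beta)
v = values(p, q, beta)
# Indifference: terminating equals moving on, for player 1 at s1 and player 2 at s2.
print(-1 == beta * v[1, "s2"], 1 == beta * v[2, "s1"])
# Probability that play starting at s1 terminates at s1 (p = q); tends to 1/2 as beta -> 1.
print(p / (1 - (1 - p) * (1 - q)))
```

The indifference conditions are what make mixing optimal for both players, which is why the same probability appears for both.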

Readers who are only interested in the construction of a subgame perfect \(\varepsilon \)-equilibrium for a game in our class, and in why it is indeed an equilibrium, can limit themselves to reading the first four sections of this paper. We formally introduce our model in Sect. 2, we introduce terminology and strategic concepts in Sect. 3, and we give a proof of our main result in Sect. 4. The proof in Sect. 4 makes use of a fixed point theorem, which we prove in Sect. 5.

2 Formal model

Our class of games was informally introduced as consisting of games that potentially have infinite horizon, but where players only obtain a nonzero reward if one of them chooses to terminate. We formally introduce our class as always having infinite play. This is done by letting termination correspond to entering an absorbing state, after which the game continues, but is strategically over. We consider the class \({\mathcal {G}}\) of dynamic games given by

(1) a non-empty set of players \(N = \{1,\ldots , n\}\), where \(n\in {{\mathbb {N}}}\),

(2) a non-empty and finite set S of non-absorbing states and a set \(S^*\) of absorbing states such that there is a one-to-one correspondence between states in S and \(S^*\); the state in \(S^*\) that corresponds to \(t\in S\) is denoted by \(t^*\),

(3) for each state \(t\in S\cup S^*\), an associated controlling player \(i_t\in N\),

(4) for each state \(t \in S\), a set of actions \(A(t) \subseteq \{t^*\} \cup (S{\setminus } \{t\})\) with \(t^*\in A(t)\); for each state \(t^*\in S^*\), we have \(A(t^*) = \{t^*\}\),

(5) for each state \(t\in S\), an associated reward vector \(r(t) \in {{\mathbb {R}}}^N\).

A game in \({\mathcal {G}}\) is played at stages in \({{\mathbb {N}}}\) in the following way. At any stage m, one state is called active. If \(t\in S\) is active, then player \(i_t\) announces a state in A(t), and the announced state will be active at the next stage. If \(t^*\in S^*\) becomes active, then the unique state \(t^*\in A(t^*)\) will be active at the next stage and thus, \(t^*\) will be active forever. The game is then strategically finished and the players receive the rewards r(t). The game starts with an initial state \(s\in S\).

We assume complete information (i.e., the players know all the data of the game), full monitoring (i.e., the players observe the active state and the action chosen by the active player), and perfect recall (i.e., the players remember the entire sequence of active states and actions).
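For readers who want to experiment, the following Python sketch encodes a game in \({\mathcal {G}}\) and checks conditions (1)-(5). It is a hypothetical encoding of our own: non-absorbing states are strings, the absorbing state \(t^*\) is written as the string t followed by "*", and the class name `RecursiveGame` does not come from the paper. Example 1 serves as the usage example.

```python
from dataclasses import dataclass

@dataclass
class RecursiveGame:
    """A game in the class G (hypothetical encoding).

    controller[t] is i_t, actions[t] is A(t), reward[t] is r(t) as a dict
    player -> reward; the absorbing copy t* of t is the string t + "*".
    """
    players: set
    controller: dict
    actions: dict
    reward: dict

    def validate(self):
        S = set(self.controller)
        assert self.players, "(1) the player set N must be non-empty"
        assert S, "(2) the set S must be non-empty"
        for t in S:
            assert self.controller[t] in self.players, "(3) i_t must be in N"
            A = set(self.actions[t])
            assert t + "*" in A, "(4) termination t* must be available at t"
            assert A <= {t + "*"} | (S - {t}), "(4) A(t) lies in {t*} and S minus t"
            assert set(self.reward[t]) == self.players, "(5) r(t) must lie in R^N"
        return True

    def step(self, t, a):
        """State active at the next stage when a is announced at active state t."""
        if t.endswith("*"):  # absorbing: A(t*) = {t*}, so t* stays active forever
            return t
        assert a in self.actions[t]
        return a

example1 = RecursiveGame(
    players={1, 2},
    controller={"s1": 1, "s2": 2},
    actions={"s1": ["s1*", "s2"], "s2": ["s2*", "s1"]},
    reward={"s1": {1: -1, 2: 2}, "s2": {1: -2, 2: 1}},
)
example1.validate()
```

Condition (3) is only checked for non-absorbing states, since the controller of an absorbing state has no real choice.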

3 Strategic concepts

3.1 Basic concepts and terminology

It will be necessary to develop a rather extensive notation and terminology in this paper. Here, we introduce the basics.

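Let us define the directed graph \({\mathbf {G}}\) by \({\mathbf {G}} = (S\cup S^*, E)\), with edge set \(E = \{(x,y) \mid x\in S\cup S^*,\ y\in A(x)\}\).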

This graph can obviously be interpreted as the graph on which the game is played. Whenever we refer to an ordered pair (x, y) as an edge, it is implicit that (x, y) is an edge of the directed graph \({\mathbf {G}}\), and hence that \(y\in A(x)\).

Let us also have notation for the set of non-absorbing states that are controlled by one particular player. For every \(i\in N\), we define \(S^i = \{t\in S \mid i_t = i\}\).

Obviously, the sets \(S^i\) form a partition of the set S of non-absorbing states.
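As an illustration, the Python sketch below builds the edge set of \({\mathbf {G}}\) and the sets \(S^i\) for Example 1. It assumes a hypothetical encoding in which the absorbing state \(t^*\) is the string t followed by "*"; the function names are ours.

```python
# Example 1 in a hypothetical dictionary encoding; t + "*" stands for t*.
controller = {"s1": 1, "s2": 2}
actions = {"s1": ["s1*", "s2"], "s2": ["s2*", "s1"]}

def edges(actions):
    """Edges (x, y) of G with y in A(x); each absorbing t* carries the loop (t*, t*)."""
    E = {(x, y) for x, A in actions.items() for y in A}
    E |= {(t + "*", t + "*") for t in actions}
    return E

def states_by_player(controller):
    """The sets S^i = {t in S : i_t = i}; players controlling no state are omitted."""
    S_i = {}
    for t, i in controller.items():
        S_i.setdefault(i, set()).add(t)
    return S_i

print(sorted(edges(actions)))
print(states_by_player(controller))
```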

Let us now introduce the basic concepts of this paper.

Plans: A plan is an infinite sequence of states \(g = (t_m)_{m\in {{\mathbb {N}}}}\), such that \((t_m , t_{m+1} )\) is an edge for all \(m \in {{\mathbb {N}}}\). A plan is interpreted as a prescription for play for a game with initial active state \(t_1\). The set of non-absorbing states that become active during play if plan g is executed is denoted by \({\textsc {S}}(g)\), i.e., \({\textsc {S}}(g) = \{t_m \mid m\in {{\mathbb {N}}}\}\cap S\).

Notice that, if the initial state of g is an element of \(S^*\), then g is of the form \((t^*, t^*, \ldots )\), with \(t^*\in S^*\). Such a plan will also be denoted as \((t^*)\). Also, if plan g contains a state in \(S^*\), say \(t^*\), and the initial state of g is an element of S, then we must have \(t \in {\textsc {S}}(g)\) and there must be a stage M with \(t_M = t\) and with \(t_m = t^*\) for all \(m > M\). This is interpreted as a prescription for player \(i_t\) to announce his absorbing state \(t^*\) at stage M. We say that the plan absorbs at t if this is the case. Otherwise, we say that the plan is non-absorbing. An absorbing plan, for example \((r,s,t,t^*,t^*,\ldots )\), will also be denoted as \((r,s,t,t^*)\). We denote by \(\phi _i(g)\) the reward to player \(i \in N\) when play is according to g, i.e., \(\phi _i(g) = r_i(t)\) if g absorbs at t, and \(\phi _i(g) = 0\) if g is non-absorbing. The initial state of plan g is denoted by \({\textsc {first}}(g)\).
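Plans are infinite sequences, but the plans used in our examples are eventually periodic. The Python sketch below represents such a plan by a finite prefix followed by a cycle that repeats forever. This lasso encoding, the trailing "*" for absorbing states and the class name `Plan` are our own assumptions, and plans that are not eventually periodic cannot be represented this way. The sketch computes \({\textsc {first}}(g)\), \({\textsc {S}}(g)\), the state of absorption and \(\phi _i(g)\).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Plan:
    """Eventually periodic plan: `prefix`, then `cycle` repeated forever.

    The absorbing plan (r, s, t, t*) is Plan(("r", "s", "t"), ("t*",)), i.e. an
    absorbing plan has cycle (t*,). Plans are assumed to start in S.
    """
    prefix: tuple
    cycle: tuple

    def first(self):
        return (self.prefix + self.cycle)[0]

    def states(self):
        """S(g): the non-absorbing states that become active on g."""
        return {x for x in self.prefix + self.cycle if not x.endswith("*")}

    def absorbs_at(self):
        """The state t at which g absorbs, or None if g is non-absorbing."""
        c = self.cycle[0]
        return c[:-1] if c.endswith("*") else None

    def phi(self, i, reward):
        """phi_i(g) = r_i(t) if g absorbs at t, and 0 if g is non-absorbing."""
        t = self.absorbs_at()
        return 0 if t is None else reward[t][i]

reward = {"s1": {1: -1, 2: 2}, "s2": {1: -2, 2: 1}}   # Example 1
g = Plan(("s1", "s2", "s1"), ("s1*",))                # (s1, s2, s1, s1*)
h = Plan((), ("s1", "s2"))                            # s1, s2, s1, s2, ...
print(g.first(), g.absorbs_at(), g.phi(2, reward), h.phi(2, reward))
```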

Paths: A path (or history) is a finite sequence \(p = (t_m)_{m=1}^k\) with \(k \ge 1\), such that \((t_m , t_{m+1} )\) is an edge for all \(m\in \{1,\ldots ,k-1\}\). The number \(k-1\) is called the length of p. The initial state \(t_1\) of p is denoted by \({\textsc {first}}(p)\) and the final state \(t_k\) is denoted by \({\textsc {last}}(p)\). We will sometimes want to concatenate a number of paths to make a longer path or a plan, or we may want to concatenate a finite number of paths and a plan to make another plan. We allow concatenation if \(p_1, p_2, \ldots , p_m\) are paths that satisfy \({\textsc {last}}(p_k) = {\textsc {first}}(p_{k+1})\) for all \(k \in \{1,\ldots , m - 1\}\). The concatenation of these paths is denoted by \(\langle p_1, p_2,\ldots , p_m\rangle \) and it represents the path that follows the prescription of \(p_1\) from \({\textsc {first}}(p_1)\) to \({\textsc {last}}(p_1) = {\textsc {first}}(p_2)\), then follows the prescription of \(p_2\) until \({\textsc {last}}(p_2) = {\textsc {first}}(p_3)\) is reached, and so on, until \({\textsc {last}}(p_m)\) is reached. Also, if g is a plan with \({\textsc {first}}(g) = {\textsc {last}}(p_m)\), then the plan that first follows the prescription of \(\langle p_1, p_2,\ldots , p_m\rangle \) and then switches to g is denoted by \(\langle p_1,\ldots , p_m, g\rangle \). Finally, if we have an infinite number of paths \(p_1, p_2,\ldots \) with the property \({\textsc {last}}(p_k) = {\textsc {first}}(p_{k+1})\) for all \(k\in {{\mathbb {N}}}\), then \(\langle p_1, p_2, \ldots \rangle \) represents the path or plan that subsequently follows the prescription of \(p_1\), \(p_2\), etc.
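The concatenation of finitely many paths can be sketched in Python as follows, with paths encoded as tuples of state labels (an encoding we assume for illustration; `concat` and `length` are hypothetical helpers). Because \(p_{k+1}\) starts where \(p_k\) ends, the shared state is listed only once.

```python
def concat(*paths):
    """<p_1, ..., p_m>: requires last(p_k) == first(p_{k+1}); the shared state
    is listed once, since p_{k+1} starts where p_k ends."""
    result = list(paths[0])
    for p in paths[1:]:
        if result[-1] != p[0]:
            raise ValueError("concatenation needs last(p_k) == first(p_{k+1})")
        result.extend(p[1:])
    return tuple(result)

def length(p):
    """The length of a path (t_1, ..., t_k) is k - 1, its number of edges."""
    return len(p) - 1

# A path of length 0, such as ("s1",), is allowed and changes nothing.
p = concat(("s1", "s2"), ("s2", "s1"), ("s1",), ("s1", "s1*"))
print(p, length(p))
```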

Strategies: A strategy \(\pi ^i\) for player \(i\in N\) is a decision rule that, for any path p with \({\textsc {last}}(p) \in S^i\), prescribes a probability distribution \(\pi ^i(p)\) over the elements of \(A({\textsc {last}}(p))\). We use the notation \(\varPi ^i\) for the set of strategies for player i. A strategy \(\pi ^i \in \varPi ^i\) is called pure if every prescription \(\pi ^i(p)\) places probability 1 on one of the elements of \(A({\textsc {last}}(p))\). We use the notation \(\varPi \) for the set of joint strategies \(\pi = (\pi ^i)_{i\in N}\) with \(\pi ^i \in \varPi ^i\) for \(i\in N\). A joint strategy \(\pi = (\pi ^i)_{i\in N}\) is called pure if \(\pi ^i\) is pure for all \(i\in N\).

Expected rewards: Consider a joint strategy \(\pi \in \varPi \) and a path p. Suppose that the game has developed along the path p and that state \({\textsc {last}}(p)\) is now active. Suppose further that all players, starting at \({\textsc {last}}(p)\), follow the joint strategy \(\pi \), taking p as the history of the game. Denote the overall probability of absorption at t by \({{\mathbb {P}}}^{p,\pi }(t)\). In our model, where nonzero rewards are only obtained in absorbing states, the expected reward for player \(i\in N\) can then be expressed as \(\sum _{t\in S}{{\mathbb {P}}}^{p,\pi }(t)\, r_i(t)\).

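Equilibria: Consider a joint strategy \(\pi \in \varPi \) and a game that has developed along the path p. The joint strategy \(\pi =(\pi ^i)_{i\in N}\in \varPi \) is called a (Nash) \(\varepsilon \)-equilibrium for path p, for some \(\varepsilon \ge 0\), if \(\sum _{t\in S}{{\mathbb {P}}}^{p,\pi }(t)\, r_i(t) \ge \sum _{t\in S}{{\mathbb {P}}}^{p,(\sigma ^i,\pi ^{-i})}(t)\, r_i(t) - \varepsilon \) for all \(i\in N\) and all \(\sigma ^i\in \varPi ^i\), where \((\sigma ^i,\pi ^{-i})\) denotes the joint strategy in which player i uses \(\sigma ^i\) and every other player j uses \(\pi ^j\),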

which means that, given history p, no player i can gain more than \(\varepsilon \) by a unilateral deviation from his proposed strategy \(\pi ^i\) to an alternative strategy \(\sigma ^i\). The joint strategy \(\pi \) is called an \(\varepsilon \)-equilibrium for initial state \(s\in S\) if \(\pi \) is an \(\varepsilon \)-equilibrium for path (s). The joint strategy \(\pi \) is called a subgame perfect \(\varepsilon \)-equilibrium if \(\pi \) is an \(\varepsilon \)-equilibrium for every path p.

3.2 Strategic concepts and an update procedure

In this section, we introduce the strategic concepts that we need for the description of a subgame perfect \(\varepsilon \)-equilibrium. These concepts all involve the assignment of a real number to each of the non-absorbing states, represented by a real vector \(\alpha \in {{\mathbb {R}}}^S\). One of the key concepts in the paper is that of an \(\alpha \)-viable plan. These are plans g where every player who controls a state \(t\in S\) on g will receive a reward of at least \(\alpha _t\) when plan g is executed. The vector \(\alpha \) is chosen such that every plan that can possibly occur in a subgame perfect \(\varepsilon \)-equilibrium is surely contained in the set of \(\alpha \)-viable plans. Initially, the set of \(\alpha \)-viable plans may also contain plans that do not occur in any subgame perfect \(\varepsilon \)-equilibrium play for small enough \(\varepsilon \). Our aim is to eliminate those plans by increasing one or more coordinates of \(\alpha \) in an update procedure. The update procedure is repeated until no further increase in the coordinates of \(\alpha \) is possible. The final vector \(\alpha \) will then be used to construct a subgame perfect \(\varepsilon \)-equilibrium for every \(\varepsilon >0\).

Viable plans: For \(\alpha \in {{\mathbb {R}}}^S\), a plan g and a state \(t \in S\), we say that t is \(\alpha \)-satisfied by g if \(\phi _{i_t}(g) \ge \alpha _t\). We define \({\textsc {sat}}(g,\alpha ) = \{t \in S \mid t\hbox { is } \alpha \hbox {-satisfied by } g\}\). We say that plan g is \(\alpha \)-viable if \({\textsc {S}}(g) \subseteq {\textsc {sat}}(g,\alpha )\). This means that, if play is according to g, the controlling player of every non-absorbing state t that becomes active during play will receive a reward of at least \(\alpha _t\). For every state \(t \in S \cup S^*\), we denote the set of \(\alpha \)-viable plans g with \({\textsc {first}}(g) = t\) by \({\textsc {viable}}(t,\alpha )\). Notice that a plan of the form \(g = (t^*, t^*, \ldots )\) with \(t^*\in S^*\) is trivially \(\alpha \)-viable, since \({\textsc {S}}(g) = \varnothing \), and that the set \({\textsc {viable}}(t^*,\alpha )\) consists of only the plan \((t^*)\).

Compatible plans: Suppose that a player \(i\in N\) can influence play by choosing a specific action if play visits one of his states, say \(t\in S\). Now, if every \(\alpha \)-viable plan after the selected action yields a strictly higher reward for player i than \(\alpha _t\), then \(\alpha _t\) can be increased without eliminating any plan that may occur in equilibrium. This idea formed the basis for the iterative procedure in Flesch et al. (2010b) and Kuipers et al. (2016). In those papers, it was sufficient to consider only one state per iteration to eventually eliminate all non-equilibrium plans.

The approach fails for the trivial 1-player game in Fig. 3. Note that this game has a unique subgame perfect equilibrium, in which the player never terminates the game. The values \(\alpha _{1_A} = \alpha _{1_B} = 0\) correspond to this equilibrium. As an illustration, we set \(\alpha _{1_A} = \alpha _{1_B} = -1\), which are the rewards upon termination. Then every plan is \(\alpha \)-viable. If player 1 specifies action \(1_B \in A(1_A)\) for when state \(1_A\) is visited, but does not specify a particular action for when \(1_B\) is visited, then termination at \(1_B\) is in accordance with the specification and \(\alpha \)-viable. An iterative procedure that considers one state at a time would reflect this by leaving \(\alpha \) unchanged and would thus fail to eliminate any of the absorbing plans. Our iterative procedure should reflect the fact that player 1 is able to coordinate his actions in \(1_A\) and \(1_B\). We therefore consider that a player can select an action at multiple states simultaneously. This leads to the definition of compatible plans.

For \(\alpha \in {{\mathbb {R}}}^S\) and \(t,u\in S\), we say that state t is \(\alpha \)-safe at state u if \(t \in {\textsc {sat}}(g,\alpha )\) for all \(g \in {\textsc {viable}}(u,\alpha )\). For \(t^*\in S^*\), it will be convenient to say that \(t^*\) is \(\alpha \)-safe at \(t^*\). We define, for all \(t \in S\),

For \(\alpha \in {{\mathbb {R}}}^S\) and a non-empty set \(F\subseteq S\), we say that F is an \(\alpha \)-plateau if there exists \(i\in N\) such that \(i_t = i\) for all \(t\in F\) and if \(\alpha _s = \alpha _t\) for all \(s,t\in F\). An \(\alpha \)-plateau that is maximal with respect to inclusion is called an \(\alpha \)-level.
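Since an \(\alpha \)-plateau is a non-empty set of states with a common controller and a common value of \(\alpha \), the \(\alpha \)-levels are exactly the classes of states sharing the pair \((i_t,\alpha _t)\). The Python sketch below makes this explicit; the function names and the dictionary encoding are our assumptions, and the states \(1_A\), \(1_B\) of the 1-player game in Fig. 3 are written "1A" and "1B".

```python
def is_plateau(F, alpha, controller):
    """F is an alpha-plateau: non-empty, one controlling player, one value of alpha."""
    return (bool(F) and len({controller[t] for t in F}) == 1
            and len({alpha[t] for t in F}) == 1)

def levels(alpha, controller):
    """The alpha-levels: classes of states with the same pair (i_t, alpha_t)."""
    classes = {}
    for t in alpha:
        classes.setdefault((controller[t], alpha[t]), set()).add(t)
    return sorted(classes.values(), key=sorted)

controller = {"1A": 1, "1B": 1}
print(levels({"1A": -1, "1B": -1}, controller))  # one level containing both states
print(levels({"1A": -1, "1B": 0}, controller))   # two singleton levels
```

Every plateau lies inside one of these classes and each class is itself a plateau, so the classes are precisely the maximal plateaus.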

For \(\alpha \in {{\mathbb {R}}}^S\), we say that a function \(U : F \rightarrow S \cup S^*\) is an \(\alpha \)-safe combination if the domain F of U is an \(\alpha \)-plateau and if we have \(U(t)\in {\textsc {safestep}}(t,\alpha )\) for all \(t \in F\). If the domain of an \(\alpha \)-safe combination U is not explicitly specified, then it will be denoted by F(U). We denote the set of all \(\alpha \)-safe combinations by \({\mathcal {U}}(\alpha )\) and the set of \(\alpha \)-safe combinations with given domain F by \({\mathcal {U}}(F,\alpha )\).

For a plan g and an \(\alpha \)-safe combination U, we say that plan g is U-compatible if, for every state \(t\in {\textsc {S}}(g) \cap F(U)\), the first occurrence of t on g is followed by U(t). A path p is U-compatible if, for every state \(t\in F(U)\) that occurs on p, the first occurrence of t on p is followed by U(t), unless this first occurrence is at the end of p. For every \(t\in S\), we denote the set of plans in \({\textsc {viable}}(t, \alpha )\) that are U-compatible by \({\textsc {viacomp}}(t, U, \alpha )\).
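Since U-compatibility only concerns first occurrences, it can be checked in one pass. The Python sketch below assumes that plans are eventually periodic, encoded as a prefix followed by a cycle that repeats forever, and that an \(\alpha \)-safe combination U is given as a dictionary from F(U) to states; these encodings and the function names are ours. It is applied to the 1-player game of Fig. 3 with \(U(1_A) = 1_B\) and \(U(1_B) = 1_A\).

```python
def first_successors(seq):
    """Map each state in seq to the state that follows its first occurrence."""
    succ = {}
    for x, y in zip(seq, seq[1:]):
        succ.setdefault(x, y)
    return succ

def plan_is_compatible(prefix, cycle, U):
    """The first occurrence of each t in F(U) on prefix + cycle^inf is followed by U(t)."""
    # One period plus the wrap-around step covers every first occurrence.
    succ = first_successors(tuple(prefix) + tuple(cycle) + tuple(cycle[:1]))
    return all(succ[t] == u for t, u in U.items() if t in succ)

def path_is_compatible(path, U):
    """Same test for a finite path; a first occurrence at the end of the path is exempt."""
    succ = first_successors(tuple(path))
    return all(succ[t] == u for t, u in U.items() if t in succ)

U = {"1A": "1B", "1B": "1A"}  # the safe combination used for Fig. 3
print(plan_is_compatible(("1A",), ("1A*",), U))              # (1A, 1A*)
print(plan_is_compatible(("1A", "1B", "1A"), ("1A*",), U))   # (1A, 1B, 1A, 1A*)
print(path_is_compatible(("1A", "1B"), U))
```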

Now consider again the 1-player game in Fig. 3, where we set \(\alpha \) by \(\alpha _{1_A} = -1\) and \(\alpha _{1_B} = -1\). We define U by \(U(1_A) = 1_B\) and \(U(1_B) = 1_A\). Notice that U is indeed an \(\alpha \)-safe combination. Also notice that the \(\alpha \)-viable plans \((1_A,1_A^*)\), \((1_A,1_B,1_B^*)\), \((1_B,1_B^*)\), and \((1_B,1_A,1_A^*)\) are not elements of \({\textsc {viacomp}}(t, U, \alpha )\). Nevertheless, there are still plans in \({\textsc {viacomp}}(t, U, \alpha )\) that should be eliminated if we wish to find the unique subgame perfect equilibrium associated with this example. The plans \((1_A,1_B,1_A, 1_A^*)\) and \((1_B,1_A,1_B,1_B^*)\) are examples of this. The set \({\textsc {viacomp}}(t, U, \alpha )\) thus only serves as a pre-selection of plans that are subject to further scrutiny to see if they can remain. For this, we introduce the concept of an admissible plan.

Admissible plans: Consider again the game depicted in Fig. 1, where we set \(\alpha \) by \(\alpha _{s_1} = -1\) and \(\alpha _{s_2} = 2\). We define U by \(U(s_1) = s_2\). Then U is an \(\alpha \)-safe combination, and the plan \((s_1,s_2,s_1,s_1^*)\) is an element of \({\textsc {viacomp}}(s_1, U, \alpha )\). Here, we do not wish to eliminate the plan, as it is a plan that can occur in equilibrium play. The reason this plan will be considered admissible is the fact that player 2, who controls state \(s_2\) on the plan, can threaten player 1 with termination of the game at \(s_2\). If player 1 does not follow the plan and always nominates \(s_2\) when \(s_1\) is active, then the threat will eventually become reality if player 2 places a small probability on executing the threat. So the intuition here is that player 1 has no possibility to force a better outcome than \((s_1,s_2,s_1,s_1^*)\). This is in contrast with the plan \((1_A,1_B,1_A,1_A^*)\) for the game in Fig. 3, where player 1 can easily force a non-absorbing plan without the possibility of retaliation. The formal criteria for admissibility, given below, distinguish between these two situations.

Let \(\alpha \in {{\mathbb {R}}}^S\), let \(U\in {\mathcal {U}}(\alpha )\), and let \(t\in F(U)\). For a plan \(g\in {\textsc {viacomp}}(t, U, \alpha )\), we say that g is \((t, U, \alpha )\)-admissible if it satisfies at least one of the following four conditions.

- AD-i: \(\alpha _t > 0\), or there exists a state x on g with \(i_x = i_t\) and \(\alpha _x > \alpha _t\) that appears on g before any state of F(U) has appeared for the second time;

- AD-ii: g is non-absorbing;

- AD-iii: each state of F(U) occurs at most once on g;

- AD-iv: there exists a threat pair (x, v) for g. Here, x and v are a state and a plan, respectively, that satisfy the following properties:

  (a) \(x\in S\) and x appears on g before any state of F(U) has appeared for the second time on g,

  (b) \(i_{x} \ne i_t\),

  (c) v is an \(\alpha \)-viable plan with \({\textsc {first}}(v)\in A(x)\),

  (d) \({\textsc {first}}(v)\) differs from the state on g that follows the first occurrence of x on g,

  (e) \(x, t \notin {\textsc {sat}}(v, \alpha )\).

We denote the set of plans that are \((t, U, \alpha )\)-admissible by \({\textsc {admiss}}(t, U, \alpha )\). We can gain some additional insight into the definition of a \((t,U,\alpha )\)-admissible plan by considering, for a plan \(g\in {\textsc {viacomp}}(t, U, \alpha )\), an associated plan \(g^U\). Plan \(g^U\) is the plan where player \(i_t\) always chooses the actions selected by U, and the other players keep their actions the same as in g. We may have \(g^U = g\), for example when every state in F(U) appears at most once on g. The plans g and \(g^U\) may also differ, which happens when at least one state \(s\in F(U)\) appears at least twice on g and s is not always followed by U(s). In the latter case, \(g^U\) is a non-absorbing plan, where a certain part of g is followed infinitely many times. We compare the plans g and \(g^U\). If the comparison comes out in favor of \(g^U\), then plan g can be discarded, i.e., plan g will not be considered admissible. Let us interpret the conditions for admissibility one by one in this way.
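The comparison between g and \(g^U\) can be made concrete. The Python sketch below implements one reading of the construction of \(g^U\), which is our interpretation rather than a definition taken from the paper: play follows g until the first stage at which a state s of F(U) is active and g does not continue with U(s); choosing U(s) there returns play to the stage after the first occurrence of s, so that part of g repeats forever. It also checks conditions AD-i, AD-ii and AD-iii; AD-iv is omitted because it requires enumerating \(\alpha \)-viable plans. Plans are encoded as (prefix, cycle) pairs with a trailing "*" for absorbing states, and all names are hypothetical.

```python
def g_U(prefix, cycle, U):
    """One reading of g^U for a U-compatible plan g = prefix + cycle^inf.
    Returns g^U as a (prefix, cycle) pair."""
    seq = list(prefix) + list(cycle) + list(cycle[:1])
    first = {}
    for m in range(len(seq) - 1):
        s, nxt = seq[m], seq[m + 1]
        first.setdefault(s, m)
        if s in U and nxt != U[s]:
            k = first[s]
            assert k < m, "g must be U-compatible"
            return tuple(seq[:k]), tuple(seq[k:m])  # t_k, ..., t_{m-1} repeats
    return tuple(prefix), tuple(cycle)             # g never deviates from U

def ad_i(prefix, cycle, t, F, alpha, controller):
    """AD-i: alpha_t > 0, or a state x of player i_t with alpha_x > alpha_t appears
    before any state of F = F(U) appears for the second time."""
    if alpha[t] > 0:
        return True
    seen = set()
    for x in list(prefix) + list(cycle):
        if x in F and x in seen:
            break  # second appearance of a state of F(U)
        seen.add(x)
        if x in alpha and controller[x] == controller[t] and alpha[x] > alpha[t]:
            return True
    return False

def ad_ii(prefix, cycle):
    """AD-ii: g is non-absorbing."""
    return not cycle[0].endswith("*")

def ad_iii(prefix, cycle, F):
    """AD-iii: each state of F(U) occurs at most once (states on the cycle recur)."""
    return all(t not in cycle and tuple(prefix).count(t) <= 1 for t in F)

# Example 1 with alpha_{s1} = -1, alpha_{s2} = 2 and U(s1) = s2.
g = (("s1", "s2", "s1"), ("s1*",))
U = {"s1": "s2"}
print(g_U(*g, U))  # player 1 always nominates s2: s1, s2, s1, s2, ...
print(ad_i(*g, "s1", set(U), {"s1": -1, "s2": 2}, {"s1": 1, "s2": 2}),
      ad_ii(*g), ad_iii(*g, set(U)))
```

For this plan the three checks all fail, so its admissibility has to rest on AD-iv, in line with the threat of player 2 described for the game of Fig. 1.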

Condition AD-i: If \(\alpha _t >0\) and the plan \(g^U\) is non-absorbing, then \(g^U\) gives a lower reward to \(i_t\) than g does. Indeed, \(g^U\) yields the reward 0, whereas \(\phi _{i_t}(g) \ge \alpha _t > 0\) because g is \(\alpha \)-viable. If \(\alpha _t >0\) and the plan \(g^U\) is absorbing, then \(g^U = g\). In either case, g cannot be discarded in favor of \(g^U\). Now suppose there exists x on the plan g with \(i_x = i_t = i\) and \(\alpha _x > \alpha _t\). Then, again because g is \(\alpha \)-viable, g gives player i the reward \(\phi _i(g) \ge \alpha _x > \alpha _t\). We keep g in this case because it will not hinder an increase in \(\alpha _t\). It will also be convenient to exclude this situation when we later consider plans that satisfy AD-iv, but not AD-i, AD-ii, or AD-iii.

Condition AD-ii: If g is non-absorbing, then both g and \(g^U\) are non-absorbing, with the same reward 0. There is therefore no reason to discard g.

Condition AD-iii: If each state of F(U) appears at most once on g, then \(g=g^U\), so there is no alternative plan in favor of which g could be discarded.

Condition AD-iv: This describes a situation in which a player other than \(i_t\), who controls a state x on the U-compatible plan g, has the possibility to switch from g to an \(\alpha \)-viable plan v with \(t,x\notin {\textsc {sat}}(v,\alpha )\). Due to condition AD-iv-(a), state x is also on plan \(g^U\). State x does not necessarily lie on the part of \(g^U\) that is repeated, but to obtain intuition we assume that it does. Now imagine that the players are supposed to follow plan g, except for player \(i_x\), who is required to place a very small probability on the switch to v when state x is active. If the players follow this prescription, then play will be according to g with very high probability. Suppose, however, that player \(i_t\) deviates by always playing U(s) for all \(s\in F(U)\) when s is active, in an attempt to force play according to \(g^U\). This attempt will eventually fail, since the switch to v will then be made with probability 1. Thus, the deviation by player \(i_t\) is not profitable for him, since \(t\notin {\textsc {sat}}(v,\alpha )\). The requirement \(x\notin {\textsc {sat}}(v,\alpha )\) ensures that player \(i_x\) is not tempted to increase the probability of a switch to v. These considerations motivate calling g admissible rather than discarding it in favor of \(g^U\).
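The purely combinatorial parts of the admissibility test can be made concrete. The sketch below uses a hypothetical encoding that is not taken from the paper:

- an absorbing plan is a finite Python list of states ending in a termination marker such as `"3*"`;
- `owner` maps each state s to its controlling player \(i_s\);
- `alpha` holds the vector \(\alpha \).

It checks AD-i, AD-iii, and the structural parts (a), (b), and (d) of AD-iv. It does not implement AD-ii (non-absorbing plans are not representable here), \(\alpha \)-viability in (c), or the condition on \({\textsc {sat}}(v,\alpha )\) in (e). The toy input reuses the plan \((3,1_a,2,1_b,3,3^*)\) and the threat pair \((1_b,(1_b^*))\) that appear in Example 3. The owners match the state labels, but the \(\alpha \)-values are made up for illustration.

```python
from typing import Dict, Hashable, List, Sequence, Set

State = Hashable
# Hypothetical encoding: an absorbing plan is a finite list of states that ends in a
# termination marker such as "3*"; owner[s] is the controlling player i_s.


def prefix_before_repeat(g: Sequence[State], F_U: Set[State]) -> List[State]:
    """Part of g before any state of F(U) appears on g for the second time."""
    seen: Set[State] = set()
    for k, s in enumerate(g):
        if s in F_U:
            if s in seen:
                return list(g[:k])
            seen.add(s)
    return list(g)


def satisfies_ad_i(g, t, F_U, alpha: Dict[State, float], owner: Dict[State, int]) -> bool:
    """AD-i: alpha_t > 0, or a state x of player i_t with alpha_x > alpha_t occurs early enough."""
    if alpha[t] > 0:
        return True
    # owner.get(...) is None for termination markers, so alpha is never looked up for them.
    return any(owner.get(x) == owner[t] and alpha[x] > alpha[t]
               for x in prefix_before_repeat(g, F_U))


def satisfies_ad_iii(g: Sequence[State], F_U: Set[State]) -> bool:
    """AD-iii: every state of F(U) occurs at most once on g."""
    return all(list(g).count(s) <= 1 for s in F_U)


def threat_pair_structure_ok(g, t, F_U, x, first_v, owner: Dict[State, int]) -> bool:
    """Parts (a), (b), (d) of AD-iv only; (c) viability and (e) the sat-condition are not checked."""
    if x not in prefix_before_repeat(g, F_U):          # (a)
        return False
    if owner[x] == owner[t]:                            # (b)
        return False
    k = list(g).index(x)                                # first occurrence of x on g
    return k + 1 < len(g) and first_v != g[k + 1]       # (d)


if __name__ == "__main__":
    g = ["3", "1a", "2", "1b", "3", "3*"]
    owner = {"3": 3, "1a": 1, "1b": 1, "2": 2}
    alpha = {"3": -1.0, "1a": 2.0, "1b": 2.0, "2": 2.0}  # made-up values
    print(satisfies_ad_i(g, "3", {"3"}, alpha, owner),
          satisfies_ad_iii(g, {"3"}),
          threat_pair_structure_ok(g, "3", {"3"}, "1b", "1b*", owner))
```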

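An update procedure: Let \(\alpha \in {{\mathbb {R}}}^S\) and let \(U\in {\mathcal {U}}(\alpha )\). We define, for all \(t\in F(U)\),
\[\beta (t, U, \alpha ) = \min \{\phi _{i_t}(g) \mid g\in {\textsc {admiss}}(t, U, \alpha )\}.\]

We use the convention \(\min \varnothing = \infty \), so that \(\beta (t, U, \alpha )\) is well defined for all \(t\in F(U)\).

We also define
\[\gamma (U, \alpha ) = \min \{\beta (t, U, \alpha ) \mid t\in F(U)\}.\]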

Note that the plans in \({\textsc {admiss}}(t, U, \alpha )\) are all \(\alpha \)-viable, for every \(t\in F(U)\). Thus, \(\beta (t,U,\alpha ) \ge \alpha _t\) for all \(t\in F(U)\), and hence also \(\gamma (U,\alpha ) \ge \alpha _t\) for any representative \(t\in F(U)\). One can interpret the number \(\gamma (U,\alpha )\) as the worst possible reward for the player controlling the states of F(U) when play visits a state \(t\in F(U)\) and he selects action U(t) there.

We now replace \(\alpha _t\) by \(\gamma (U, \alpha )\) at every coordinate \(t \in F(U)\), leaving all other coordinates of \(\alpha \) unchanged, and denote the updated vector by \(\delta (U,\alpha )\).

The update procedure performs a simultaneous update on the states of a given \(\alpha \)-plateau. The idea is to repeat the procedure until no update changes any \(\alpha \)-value, for any \(\alpha \)-plateau.
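The following is a minimal sketch of one update step and of its repetition until nothing changes. It assumes that the hard parts are supplied from outside by two hypothetical callables:

- `safe_combinations(alpha)` enumerates the \(\alpha \)-safe combinations \({\mathcal {U}}(\alpha )\). Each combination is encoded as a dict `{t: U(t)}`, so its keys form F(U).
- `admiss_rewards_fn(U, alpha)` returns, for every \(t\in F(U)\), the rewards \(\phi _{i_t}(g)\) of the plans \(g\in {\textsc {admiss}}(t,U,\alpha )\).

The three helpers follow the definitions:

- `beta` computes \(\beta (t,U,\alpha )\), the minimum of these rewards, with \(\min \varnothing = \infty \);
- `gamma` computes \(\gamma (U,\alpha )\), the minimum of \(\beta \) over F(U);
- `delta` returns \(\delta (U,\alpha )\).

The loop stops when no \(\alpha \)-safe combination changes \(\alpha \), which is property F-ii of Sect. 4. It does not check F-i. The `max_rounds` guard is an arbitrary safety limit, not part of the paper.

```python
import math
from typing import Callable, Dict, Hashable, Iterable, List, Mapping

State = Hashable
Alpha = Dict[State, float]
# Hypothetical encoding: an alpha-safe combination U is a dict {t: U(t)}, so F(U) = U.keys().
Combination = Mapping[State, State]


def beta(rewards: Iterable[float]) -> float:
    """beta(t, U, alpha): least reward to i_t over admiss(t, U, alpha); min of empty set = +inf."""
    return min(rewards, default=math.inf)


def gamma(U: Combination, admiss_rewards: Mapping[State, List[float]]) -> float:
    """gamma(U, alpha): the smallest beta(t, U, alpha) over t in F(U)."""
    return min(beta(admiss_rewards[t]) for t in U)


def delta(alpha: Alpha, U: Combination, admiss_rewards: Mapping[State, List[float]]) -> Alpha:
    """delta(U, alpha): alpha with every coordinate t in F(U) replaced by gamma(U, alpha)."""
    g = gamma(U, admiss_rewards)
    updated = dict(alpha)
    for t in U:
        updated[t] = g
    return updated


def update_until_stable(
    alpha: Alpha,
    safe_combinations: Callable[[Alpha], Iterable[Combination]],
    admiss_rewards_fn: Callable[[Combination, Alpha], Mapping[State, List[float]]],
    max_rounds: int = 10_000,
) -> Alpha:
    """Apply updates until delta(U, alpha) == alpha for every alpha-safe U (property F-ii)."""
    for _ in range(max_rounds):
        for U in safe_combinations(alpha):
            new_alpha = delta(alpha, U, admiss_rewards_fn(U, alpha))
            if new_alpha != alpha:
                alpha = new_alpha
                break  # alpha changed, so the safe combinations must be recomputed
        else:
            return alpha  # no combination changed alpha: fixed point reached
    raise RuntimeError("no fixed point reached within max_rounds")
```

Placing all search effort in the two callables keeps the sketch faithful to the definitions without committing to any particular way of enumerating plans.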

Example 3

The example in Fig. 4 represents a game with 3 players and with 4 non-absorbing states.

The game admits the following subgame perfect \(\varepsilon \)-equilibrium. When state \(1_a\) is active, player 1 should nominate state 2. When state 2 is active, player 2 should nominate state \(1_b\). When state \(1_b\) is active, player 1 should nominate state 3 with high probability and place a small probability on terminating the game. Finally, player 3 should terminate the game when state 3 is active. We now go through the update procedure step by step to see how this equilibrium arises.

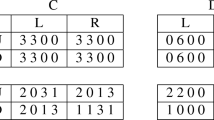

Initialization: We choose \(\alpha \) such that every plan used in equilibrium play is guaranteed to be \(\alpha \)-viable. A natural choice is to set \(\alpha _t\) equal to the reward that player \(i_t\) receives if play terminates at t. Thus, we set

Iteration 1: To obtain an overview of all \(\alpha \)-safe combinations, we determine the sets \({\textsc {safestep}}(t,\alpha )\) for all \(t\in S\). The result is shown in Fig. 5. There, (x, y) is represented by a solid arrow if \(y\in {\textsc {safestep}}(x,\alpha )\) and by a dashed arrow otherwise.

Note that an \(\alpha \)-safe combination U with \(U(t) = t^*\) for all \(t\in F(U)\) will not lead to an increase of any \(\alpha \)-value. Thus, there is only one \(\alpha \)-safe combination of interest, defined by \(U(2) = 1_b\). For the update associated with this choice of U, it is important to see that the plans \((2,1_b,1_a,1_a^*)\) and \((2,1_b,1_a,2,2^*)\) are not \(\alpha \)-viable, and therefore not in \({\textsc {admiss}}(2,U,\alpha )\). Here, the set \({\textsc {admiss}}(2,U,\alpha )\) coincides with \({\textsc {viacomp}}(2,U,\alpha )\). Both sets consist of the plans that start at 2, then visit \(1_b\), and terminate either at \(1_b\) or at 3. Thus, \(\beta (2,U,\alpha ) = \gamma (U,\alpha ) = 2\), and we update the value of \(\alpha _2\) from 1 to 2. We proceed with

Iteration 2: We now have (Fig. 6)

There is again one \(\alpha \)-safe combination of interest, defined by \(U(1_a) = 2\). Here, the plan \((1_a,2,1_b,1_b^*)\) is \((1_a,U,\alpha )\)-admissible due to condition AD-iii. It is also the plan that determines the number \(\beta (1_a,U,\alpha ) = \gamma (U,\alpha )\). We thus have \(\gamma (U,\alpha ) = -1\). We proceed with

Iteration 3: We now have (Fig. 7)

Here, we have several \(\alpha \)-safe combinations to consider, all involving the states of player 1. The relevant choice is to define U by \(U(1_a) = 2\) and \(U(1_b) = 3\). Observe that all plans in \({\textsc {viacomp}}(1_a,U,\alpha )\) and \({\textsc {viacomp}}(1_b,U,\alpha )\) terminate at state 3. Observe also that \({\textsc {admiss}}(t,U,\alpha ) = {\textsc {viacomp}}(t,U,\alpha )\) for \(t = 1_a,1_b\). Thus, \(\beta (1_a,U,\alpha ) = \beta (1_b,U,\alpha ) = 2\). The updated vector \(\alpha \) is defined by

Iteration 4: Further attempts to update \(\alpha \) do not lead to an increase in any of its coordinates. The current \(\alpha \)-values indicate that, under equilibrium play, the game will terminate at state 3 (with high probability) (Fig. 8).

A final calculation demonstrates how the subgame perfect \(\varepsilon \)-equilibrium should be played. Note that \(1_a \in {\textsc {safestep}}(3,\alpha )\). We define the \(\alpha \)-safe combination U by \(U(3) = 1_a\). Then the plan \((3,1_a,2,1_b,3,3^*)\) is an element of \({\textsc {viacomp}}(3,U,\alpha )\). This plan is also an element of \({\textsc {admiss}}(3,U,\alpha )\) due to condition AD-iv. The threat pair is \((1_b,v)\) with \(v = (1_b^*)\), which is also the threat pair used in equilibrium play.

4 Construction of a subgame perfect \(\varepsilon \)-equilibrium

4.1 Introduction

For this section, we fix an arbitrary game G in the class \({\mathcal {G}}\) and a parameter \(\varepsilon > 0\). We keep G and \(\varepsilon \) fixed throughout this section and prove that the game G has a subgame perfect \(\varepsilon \)-equilibrium.

For the description of a subgame perfect \(\varepsilon \)-equilibrium for G, we will use the fact that a vector \(\alpha ^*\in {{\mathbb {R}}}^S\) exists with the following properties:

- F-i: For every \(t\in S\), we have \({\textsc {viable}}(t,\alpha ^*) \ne \varnothing \).
- F-ii: For every \(U \in {\mathcal {U}}(\alpha ^*)\), we have \(\delta (U, \alpha ^*) = \alpha ^*\).

The proof that such a vector indeed exists is deferred to Sect. 5. The properties F-i and F-ii essentially describe the existence of a fixed point for the update procedure from Sect. 3.

Property F-i can be used to formulate a (pure) joint strategy such that, for every state \(t\in S\) that is visited during play, player \(i_t\) can expect a reward of at least \(\alpha _t^*\). This can be achieved by prescribing an \(\alpha ^*\)-viable plan that should be executed in its entirety with probability 1.

Property F-ii can be used to formulate a (pure) joint strategy such that, for every state \(t\in S\) that is visited during play, player \(i_t\) can expect a reward of at most \(\alpha _t^*\) if he plays an action that is not prescribed by the joint strategy. This can be achieved by selecting a new \(\alpha ^*\)-viable plan to follow after a deviation. The following lemma shows that property F-ii makes the selection of such a new plan indeed possible.

Lemma 1

Let \(t\in S\) and \(u\in A(t)\).

(i) If \(u\in {\textsc {safestep}}(t,\alpha ^*)\), then there exists \(g\in {\textsc {viable}}(u,\alpha ^*)\) with \(\phi _{i_t}(g) = \alpha ^*_t\).

(ii) If \(u\notin {\textsc {safestep}}(t,\alpha ^*)\), then there exists \(g\in {\textsc {viable}}(u,\alpha ^*)\) with \(\phi _{i_t}(g) < \alpha ^*_t\).

Proof

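Proof of (i): Let \(t\in S\) and let \(u\in {\textsc {safestep}}(t, \alpha ^*)\). Let U be the \(\alpha ^*\)-safe combination with domain \(\{t\}\) and with \(U(t) = u\). By property F-ii, we have \(\delta (U,\alpha ^*) = \alpha ^*\). Since \(F(U) = \{t\}\), we have \(\gamma (U,\alpha ^*) = \beta (t,U,\alpha ^*)\), and therefore
\[\beta (t,U,\alpha ^*) = \alpha ^*_t.\]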

By definition of the number \(\beta (t,U,\alpha ^*)\), there exists a plan \(h\in {\textsc {admiss}}(t,U,\alpha ^*)\) with \(\phi _{i_t}(h) = \beta (t,U,\alpha ^*)\). Thus, we have \(\phi _{i_t}(h) = \alpha ^*_t\). The plan h is \(\alpha ^*\)-viable, since \({\textsc {admiss}}(t,U,\alpha ^*)\) is by definition a subset of \({\textsc {viable}}(t,\alpha ^*)\). The part of h that starts at the second state (i.e., at u) is the required plan \(g\in {\textsc {viable}}(u,\alpha ^*)\) with \(\phi _{i_t}(g) = \alpha ^*_t\).

Claim (ii) of the lemma follows by the definition of the set \({\textsc {safestep}}(t,\alpha ^*)\). \(\square \)

An informal description of a subgame perfect \(\varepsilon \)-equilibrium. Consider a deterministic joint strategy in which, initially, an \(\alpha ^*\)-viable plan is selected for the players to follow in its entirety. A new \(\alpha ^*\)-viable plan is selected only if a player deviates, and the new plan is chosen to minimize the payoff to the deviating player. By Lemma 1, a single deviation, or even finitely many deviations, does not profit the deviating player. If \(\alpha ^*\ge 0\), then infinitely many deviations do not help the deviating player either, since infinite play yields the reward 0. Indeed, if \(\alpha ^*\ge 0\), then the formulated joint strategy constitutes a subgame perfect 0-equilibrium. The situation is more complex when the vector \(\alpha ^*\) has negative coordinates. The game of Example 1 is typical for this situation: there, a subgame perfect \(\varepsilon \)-equilibrium was achieved by placing a small probability on a non-credible threat. This way of playing a subgame perfect \(\varepsilon \)-equilibrium can be generalized to work for every game in our class. Specifically, at each stage of the game, the players are given a prescription of play. It consists of a main plan g and possibly, depending on the properties of g, a threat pair (x, v) for g. If the prescription consists of only the main plan g, then the players are supposed to follow plan g. If the prescription consists of a main plan g together with a threat pair (x, v), then the players are supposed to follow plan g until the first occurrence of state x on g is reached. The controlling player of state x is then required to perform a lottery. He places a high probability on the continuation of plan g and a small probability on the switch to plan v.

A joint strategy can now be formulated as follows. The game begins with an initial prescription, which could be any \(\alpha ^*\)-viable main plan g. A new prescription is selected when a player chooses an action that has zero probability under the current prescription. The lottery player may deviate from the prescribed lottery probabilities without triggering a new prescription, as long as he chooses either the continuation of the main plan or the switch to the threat plan. A new prescription is chosen such that its main plan minimizes the reward of the deviating player among the available admissible plans. A threat pair (x, v) can be part of the new prescription only if the main plan g is admissible due to condition AD-iv, as threat pairs are defined only for such plans. In that case, the execution of plan v is indeed a threat to the deviating player, who is identified as the player controlling the initial state of g. By AD-iv-(e), this player strictly prefers g over v. Moreover, the execution of v is a non-credible threat: by AD-iv-(e), player \(i_x\), who must make the switch from g to v, also strictly prefers g over v. Because the threat is non-credible, player \(i_x\) cannot make a profit by increasing the probability of a switch to v.

Prescriptions consisting of a main plan with a threat pair are essentially there to make it impossible for a deviating player to deviate infinitely many times. Conceivably, however, the deviating player could still bring about infinite play when lotteries with a threat are prescribed as retaliation for his deviations. This would happen if the deviating player became active again after every deviation, before the lottery state is reached and before absorption takes place. By choosing the prescriptions appropriately, however, we can bound the number of times that a deviating player can avoid absorption or the execution of a lottery. This ensures that lotteries take place at more or less regular intervals. As a result, the threat plan is eventually executed with probability 1 if a player keeps deviating.
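The probability-1 claim rests on an elementary computation. Suppose each lottery independently switches to the threat plan with probability q. Here q is a hypothetical stand-in for \(q_\varepsilon \), whose definition is not reproduced in this section. The probability that n lotteries all continue the main plan is then \((1-q)^n\), which tends to 0 as n grows. The helper names below are illustrative only.

```python
def prob_no_switch(q: float, n: int) -> float:
    """Probability that n independent lotteries, each switching with probability q, never switch."""
    return (1.0 - q) ** n


def lotteries_needed(q: float, tol: float) -> int:
    """Smallest n such that the no-switch probability (1 - q)^n is at most tol."""
    if not (0.0 < q < 1.0 and tol > 0.0):
        raise ValueError("need 0 < q < 1 and tol > 0")
    n, p = 0, 1.0
    while p > tol:          # p tracks (1 - q)^n
        p *= 1.0 - q
        n += 1
    return n
```

For example, with q = 0.5, seven lotteries push the no-switch probability below 0.01. With a small q, many more lotteries are needed, but the limit is still 0.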

In Sect. 4.2, we will establish a ranking of the states of each \(\alpha ^*\)-level. The ranking will be the tool to make sure that infinitely many deviations cannot occur. In Sect. 4.3, we give a description of a joint strategy \(\pi _{\varepsilon }\), which is the detailed and complete version of the description given here. Then, in Sect. 4.4 we prove our main result, which is that \(\pi _{\varepsilon }\) is a subgame perfect \(\varepsilon \)-equilibrium.

Let us choose \(\alpha ^*\in {{\mathbb {R}}}^S\) such that it has properties F-i and F-ii and let us keep \(\alpha ^*\) fixed for the remainder of this section.

4.2 A ranking of the states

Let \(U\in {\mathcal {U}}(\alpha ^*)\). We will be interested in all admissible plans that can be associated with the \(\alpha ^*\)-safe combination U. We therefore define
\[{\textsc {admiss}}(U,\alpha ^*) = \bigcup _{t\in F(U)} {\textsc {admiss}}(t,U,\alpha ^*).\]

For every \(g\in {\textsc {admiss}}(U,\alpha ^*)\), we wish to identify the set of states on g, where the deviating player could sensibly deviate from g, to avoid a lottery or to avoid absorption at a state with negative reward for him. The following definition of the set D(g, U) simply lists the cases. We define, for \(g\in {\textsc {admiss}}(U,\alpha ^*)\), the set D(g, U) by

- D-i: \(D(g,U) = \varnothing \) if g satisfies AD-i or AD-ii. (Here, infinitely many deviations do not profit the deviating player.)
- D-ii: If g violates the conditions AD-i and AD-ii, but satisfies condition AD-iii, then we define \(D(g,U) = {\textsc {S}}(g^-) \cap F(U)\), where \(g^-\) is the part of g that starts at the second state of g. (Here, any deviation before absorption could be profitable, if it could be infinitely repeated.)
- D-iii: In all other cases, i.e., if g violates the conditions AD-i, AD-ii, and AD-iii, but satisfies condition AD-iv, there exists a threat pair (x, v) for g. In this case, we choose state x as close as possible to the initial state of g. We define D(g, U) as the set of states in F(U) that appear on g from the second state of g until the first occurrence of x on g. (Here, a deviation should really be before the lottery, as there are only finitely many opportunities available after the lottery.)

Notice that the first state of g can be a member of D(g, U) only if that state reappears on g. This is because we interpret the first state of a plan in \({\textsc {admiss}}(U,\alpha ^*)\) as the state where a deviation has just taken place, and the second state as the state nominated by that deviation.
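The three cases D-i to D-iii translate directly into a case distinction. This sketch assumes that the following are computed elsewhere and passed in:

- `conditions`: which of the conditions AD-i to AD-iv hold for g;
- `threat_states`: which states x admit a threat pair (x, v) for g.

Both parameters are hypothetical. Plans are finite lists of states. This is enough because D-ii and D-iii only apply to plans that violate AD-ii and are therefore absorbing.

```python
from typing import Hashable, Iterable, Sequence, Set

State = Hashable


def deviation_set(g: Sequence[State], F_U: Set[State], conditions: Set[str],
                  threat_states: Iterable[State] = ()) -> Set[State]:
    """Compute D(g, U) by the cases D-i, D-ii and D-iii.

    `conditions` holds the labels "AD-i", ..., "AD-iv" that g satisfies, and
    `threat_states` lists the states x for which a threat pair (x, v) for g exists.
    """
    if "AD-i" in conditions or "AD-ii" in conditions:      # D-i
        return set()
    if "AD-iii" in conditions:                              # D-ii: states of g^- that lie in F(U)
        return set(g[1:]) & F_U
    if "AD-iv" not in conditions:
        raise ValueError("g is not admissible")
    # D-iii: take x as close as possible to the initial state of g, then collect the
    # states of F(U) from the second state of g up to the first occurrence of x.
    # (x itself is never in F(U), because i_x differs from the owner of F(U).)
    x_pos = min(list(g).index(x) for x in threat_states)
    return set(g[1:x_pos]) & F_U
```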

Let \(t\in S\) and \(u\in A(t)\). Suppose that the choice of u at state t is not according to the prescription, and that \(u\in {\textsc {safestep}}(t,\alpha ^*)\). Then, for the purpose of punishment, we choose \(U\in {\mathcal {U}}(\alpha ^*)\) with \(t\in F(U)\) and \(U(t) = u\), and a plan g in \({\textsc {admiss}}(t,U,\alpha ^*)\) that minimizes the reward to player \(i_t\). By property F-ii, U can be chosen such that this reward equals \(\alpha ^*_t\), for instance with domain \(\{t\}\) as in the proof of Lemma 1. Ideally, we choose g such that also \(D(g,U) = \varnothing \) holds. This may not always be possible, but we do have the following lemma.

Lemma 2

For every \(U\in {\mathcal {U}}(\alpha ^*)\), there exists \(g\in {\textsc {admiss}}(U,\alpha ^*)\) with \(D(g,U) = \varnothing \) and with \(\phi _{i}(g) = \alpha _t^*\), where i is the controlling player of the states in F(U) and t is any state in F(U).

Proof

Let \(U\in {\mathcal {U}}(\alpha ^*)\). Because we have \(\delta (U,\alpha ^*) = \alpha ^*\) by F-ii, there exists \(s\in F(U)\) with \(\beta (s,U,\alpha ^*) = \alpha _s^*\). Further, by the definition of the number \(\beta (s,U,\alpha ^*)\), there exists a plan \(h\in {\textsc {admiss}}(s,U,\alpha ^*)\) with \(\phi _{i_s}(h) = \beta (s,U,\alpha ^*)\), hence with \(\phi _{i_s}(h) =\alpha _s^*\). Now, if \(D(h,U) = \varnothing \), then the claim of the lemma follows immediately by setting \(t=s\) and \(g=h\). We assume further that \(D(h,U) \ne \varnothing \). This rules out the possibility that h satisfies AD-i or AD-ii. We distinguish between the two remaining possibilities.

Case 1: Assume that plan h satisfies AD-iii. Then each element of D(h, U) is visited exactly once on h. We define t as the state of D(h, U) that is visited last on h and we define g as the plan with \({\textsc {first}}(g) = t\) that follows the prescription of h from the unique occurrence of t on h. It is obvious that \(g\in {\textsc {admiss}}(t,U,\alpha ^*)\) due to property AD-iii and that \(D(g,U) = \varnothing \).

Case 2: Assume that plan h satisfies AD-iv but not AD-iii. Then there exists a threat pair (x, v) for h. By condition AD-iv, every element of D(h, U) is visited exactly once on h before the first occurrence of x on h. Define t as the state of D(h, U) visited last on h before x. Construct plan g with \({\textsc {first}}(g) = t\) as follows.

Follow plan h from the first occurrence of t on h until the next occurrence of a state in F(U), say r. If r is a state of F(U) that is visited for the first time during the construction of g and if the corresponding location of r on h is not the first occurrence of r on h, then we jump back to the first occurrence of r on h. From there, we follow h again. We proceed, jumping back to an earlier location on h every time a state of F(U) is visited for the first time during construction of g and if the corresponding location on h is not the first occurrence of that state on h.

The construction trivially results in a plan g with \({\textsc {S}}(g) \subseteq {\textsc {S}}(h)\). A jump back can occur only finitely many times during the construction, since each jump back is triggered by the first visit of a state of F(U). The resulting plan g therefore has the same tail as h, which implies that \(\phi (g) = \phi (h)\). It follows that \({\textsc {S}}(g) \subseteq {\textsc {S}}(h) \subseteq {\textsc {sat}}(h,\alpha ^*) = {\textsc {sat}}(g,\alpha ^*)\), proving that g is \(\alpha ^*\)-viable. Further, g is U-compatible: at the first visit of a state in F(U) during construction of g, the action of that state’s first occurrence on h is copied to g. Thus, \(g\in {\textsc {viacomp}}(t,U,\alpha ^*)\).

Notice that the construction of g starts at the first occurrence of t on h, after which it proceeds without jump backs until x is reached. Indeed, by the choice of t, there are no states of F(U) on h between t and x where a jump back might occur. This shows that x appears on plan g, that the only element of F(U) appearing on g before x is t, and that t appears exactly once before x. Thus, the threat pair (x, v) for h can also serve as a threat pair for plan g, and we may conclude that \(g\in {\textsc {admiss}}(t,U,\alpha ^*)\) due to property AD-iv. Now suppose that g does not satisfy AD-iii. If g satisfies AD-i or AD-ii, then \(D(g,U) = \varnothing \) by D-i. Otherwise, definition D-iii applies, and D(g, U) consists of the states of \(F(U){\setminus } \{t\}\) that appear before x on g. Since there are no such states, we may conclude \(D(g,U) = \varnothing \).

It remains to prove that AD-iii does not apply to g, i.e., that one of the states of F(U) appears more than once on g. By assumption, plan h does not satisfy AD-iii, so we have a state \(r\in F(U)\) that appears more than once on h. At least one of the occurrences of r on h comes after the first occurrence of x on h, as all states of F(U) before x are different. It follows that state r appears on plan g, since obviously, all states on h that come after t are eventually visited during the construction of g. If the first appearance of r during the construction of g is upon arrival at the first location of r on h, then r will obviously reappear during the construction of g at a later stage, at the latest upon arrival at the second location of r on h. If the first appearance of r during the construction of g corresponds to the arrival at the second location of r on plan h, then a jump back to the first location on h will take place. Then too state r will reappear during the construction of g, as there will be another arrival at the second location of r on h. \(\square \)
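The jump-back construction in Case 2 of the proof is a small algorithm on the absorbing plan h. The sketch below assumes a representation that is not the paper's notation: h is a finite list of states ending in a termination marker. The function returns the constructed plan g. Every jump back is triggered by the first visit of a new state of F(U). Hence there are at most |F(U)| jumps, the loop terminates, and g ends with the same tail as h.

```python
from typing import Dict, Hashable, List, Sequence, Set

State = Hashable


def jump_back_construction(h: Sequence[State], t: State, F_U: Set[State]) -> List[State]:
    """Build g from the absorbing plan h as in Case 2 of the proof of Lemma 2.

    Start at the first occurrence of t on h and follow h. Whenever a state r of F(U)
    is reached for the first time during the construction and the current location is
    not the first occurrence of r on h, jump back to that first occurrence.
    """
    first_occ: Dict[State, int] = {}
    for idx, s in enumerate(h):
        first_occ.setdefault(s, idx)

    g: List[State] = []
    visited: Set[State] = set()   # states of F(U) already visited during the construction
    i = first_occ[t]
    while i < len(h):
        s = h[i]
        if s in F_U and s not in visited:
            visited.add(s)
            i = first_occ[s]      # a genuine jump back unless i already was the first occurrence
        g.append(h[i])
        i += 1
    return g


if __name__ == "__main__":
    h = ["s", "p", "t", "x", "p", "q", "q*"]
    print(jump_back_construction(h, "t", {"s", "p", "t"}))
```

In this toy run, state p of F(U) is first met at its second location on h, which triggers a jump back. The output is `['t', 'x', 'p', 't', 'x', 'p', 'q', 'q*']`, in which p appears twice, as the last paragraph of the proof predicts.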

Lemma 2 does not guarantee that, for a given \(t\in S\) and \(u\in {\textsc {safestep}}(t,\alpha ^*)\), there exist \(U\in {\mathcal {U}}(\alpha ^*)\) and \(g\in {\textsc {admiss}}(t,U,\alpha ^*)\) that are ideal for punishment. However, the result suffices to prove the following for an arbitrary \(\alpha ^*\)-plateau F. There is at least one \(t\in F\) such that, for every \(u\in {\textsc {safestep}}(t,\alpha ^*)\), an ideal pair \(U\in {\mathcal {U}}(\alpha ^*)\) and \(g\in {\textsc {admiss}}(t,U,\alpha ^*)\) for punishment exists.

Let F be an \(\alpha ^*\)-plateau and let \(t\in F\). We say that t is tied to F if the following holds for every \(u\in {\textsc {safestep}}(t,\alpha ^*)\): there exist \(U\in {\mathcal {U}}(F,\alpha ^*)\) with \(U(t) = u\) and a plan \(g\in {\textsc {admiss}}(t,U,\alpha ^*)\) with \(\phi _{i_t}(g) = \alpha _t^*\) and \(D(g,U) = \varnothing \). We define
\[{\textsc {tied}}(F) = \{t\in F \mid t \text{ is tied to } F\}.\]

Lemma 3

For every \(\alpha ^*\)-plateau F, the set \({\textsc {tied}}(F)\) is a non-empty subset of F.

Proof

Let F be an \(\alpha ^*\)-plateau and suppose that \({\textsc {tied}}(F) = \varnothing \). Then, for every \(s\in F\), we can choose \(u_s \in {\textsc {safestep}}(s,\alpha ^*)\) such that, for all \(U\in {\mathcal {U}}(F,\alpha ^*)\) with \(U(s) = u_s\), every plan \(v\in {\textsc {admiss}}(s,U,\alpha ^*)\) satisfies \(D(v,U) \ne \varnothing \) or \(\phi _{i_s}(v) > \alpha _s^*\).

Now, define \({\widehat{U}}: F \rightarrow S\cup S^*\) by \({\widehat{U}}(s) = u_s\) for all \(s\in F\). Then obviously \({\widehat{U}}\in {\mathcal {U}}(F,\alpha ^*)\). By Lemma 2, we can choose \(t\in F\) and \(g\in {\textsc {admiss}}(t,{\widehat{U}},\alpha ^*)\) such that \(D(g,{\widehat{U}}) = \varnothing \) and \(\phi _{i_t}(g) = \alpha _t^*\). On the other hand, since \({\widehat{U}}(t) = u_t\), every plan \(v\in {\textsc {admiss}}(t,{\widehat{U}},\alpha ^*)\) satisfies \(D(v,{\widehat{U}}) \ne \varnothing \) or \(\phi _{i_t}(v) > \alpha _t^*\). Contradiction. \(\square \)

Let us apply Lemma 3 to an \(\alpha ^*\)-level L. The fact that \({\textsc {tied}}(L)\) is non-empty shows that there exist states in L, where a deviation can always be retaliated by an ideal punishment plan, that is, a punishment plan which avoids all states of L, until absorption or until a lottery is executed. Let us apply Lemma 3 again, now to the set \(L{\setminus } {\textsc {tied}}(L)\) (assuming that this set is non-empty). The lemma then shows that there is a non-empty subset of states of \(L{\setminus } {\textsc {tied}}(L)\), where any deviation can be retaliated by a plan that may visit other states of L before absorption or lottery, but only those in \({\textsc {tied}}(L)\). So, after a deviation at a state in \({\textsc {tied}}(L{\setminus } {\textsc {tied}}(L))\), another deviation before absorption or lottery may be possible, but after the second deviation, there will be an ideal punishment plan in place. This suggests that an \(\alpha ^*\)-level L can be partitioned into a hierarchy of \(\alpha ^*\)-plateaus, where each plateau is given a rank indicating the maximum number of deviations to go before an ideal punishment plan is in place.

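Let L be an \(\alpha ^*\)-level. We define
\[{\textsc {rank}}(1,L) = {\textsc {tied}}(L).\]

Then, for \(k > 1\), we define recursively
\[{\textsc {rank}}(k,L) = {\textsc {tied}}\Big (L{\setminus } \bigcup _{\ell = 1}^{k - 1} {\textsc {rank}}(\ell ,L)\Big ).\]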

We stop the recursive definition as soon as \(\bigcup _{\ell =1}^{k} {\textsc {rank}}(\ell ,L) = L\). It follows by repeated application of Lemma 3 that the process will indeed terminate, say at iteration K, and that the sets \({\textsc {rank}}(k,L)\) for \(k = 1, \ldots , K\) form a partition of L. We define the rank of a state \(t\in L\) as the unique index k for which \(t\in {\textsc {rank}}(k,L)\), and we denote its rank by \({\textsc {r}}(t)\).
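The recursive definition of \({\textsc {rank}}(k,L)\) is a peeling loop: repeatedly remove \({\textsc {tied}}(F)\) from the states that have not yet been ranked. In the sketch, `tied` is a hypothetical oracle for the set \({\textsc {tied}}(F)\). Deciding whether a state is tied requires the admissible plans and the sets D(g, U), which are not computed here. The check inside the loop mirrors Lemma 3, which guarantees that each layer is a non-empty subset of the remaining states. Hence the loop runs at most |L| times.

```python
from typing import Callable, Dict, FrozenSet, Hashable, Iterable, Set

State = Hashable


def rank_states(level: Iterable[State],
                tied: Callable[[FrozenSet[State]], Set[State]]) -> Dict[State, int]:
    """Return r(t) for every t in the alpha*-level L.

    rank(1, L) = tied(L), and rank(k, L) = tied(L minus the union of rank(l, L) for l < k).
    """
    remaining = set(level)
    ranks: Dict[State, int] = {}
    k = 0
    while remaining:
        k += 1
        layer = set(tied(frozenset(remaining)))
        if not layer or not layer <= remaining:
            raise ValueError("tied(F) must be a non-empty subset of F (Lemma 3)")
        for t in layer:
            ranks[t] = k
        remaining -= layer
    return ranks
```

With a toy oracle that always returns the alphabetically smallest remaining state, `rank_states(["a", "b", "c"], lambda F: {min(F)})` assigns the ranks 1, 2, 3 in that order.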

We now show the following for a state \(t\in L\) and a deviation \(u\in {\textsc {safestep}}(t,\alpha ^*)\). There exists a punishment plan that, insofar as it visits other states of L before absorption or a lottery, visits only states of rank strictly less than \({\textsc {r}}(t)\). The implication is that, starting at state t, at most \({\textsc {r}}(t)\) deviations are possible (including the one at t) before absorption or a lottery takes place.

Lemma 4

Let L be an \(\alpha ^*\)-level, let \(t\in L\), and let \(F = \{x\in L\mid {\textsc {r}}(x) \ge {\textsc {r}}(t)\}\). Then, for all \(u\in {\textsc {safestep}}(t,\alpha ^*)\), there exists \(U\in {\mathcal {U}}(F,\alpha ^*)\) with \(U(t) = u\), together with a plan \(g\in {\textsc {admiss}}(t,U,\alpha ^*)\), such that \(D(g,U) = \varnothing \) and \(\phi _{i_t}(g) = \alpha _t^*\).

Proof

We have \({\textsc {rank}}({\textsc {r}}(t),L) = {\textsc {tied}}(L{\setminus } \cup _{\ell = 1}^{{\textsc {r}}(t) - 1} {\textsc {rank}}(\ell ,L))\) by the recursive definition, and we have \(F = L{\setminus } \cup _{\ell = 1}^{{\textsc {r}}(t) - 1} {\textsc {rank}}(\ell ,L)\) by the definition of F. Thus, we have \(t\in {\textsc {rank}}({\textsc {r}}(t),L) = {\textsc {tied}}(F)\). Now, let \(u\in {\textsc {safestep}}(t,\alpha ^*)\). Then, by definition of the set \({\textsc {tied}}(F)\) and by the fact that t is an element of this set, there exists an \(\alpha ^*\)-safe combination \(U\in {\mathcal {U}}(F,\alpha ^*)\) with \(U(t) = u\) and a plan \(g\in {\textsc {admiss}}(t,U,\alpha ^*)\) such that \(D(g,U) = \varnothing \) and \(\phi _{i_t}(g) = \alpha ^*_t\). \(\square \)

4.3 Description of the joint strategy \(\pi _{\varepsilon }\)

We will associate with each pair (t, u), where \(t\in S\) and \(u\in A(t)\), a main plan \(g^{tu}\). In some cases, depending on the properties of the main plan \(g^{tu}\), we additionally associate a threat pair \((x^{tu},v^{tu})\) with (t, u). These plans, and combinations of a plan and a threat pair, will be used as prescriptions for play as outlined in Sect. 4.1.

Let \(t\in S\) and \(u\in A(t)\).

- Case 1: \(\alpha _t^*\ge 0\). Then we choose \(g^{tu} \in {\textsc {viable}}(u,\alpha ^*)\) with \(\phi _{i_t}(g^{tu}) \le \alpha _t^*\). This is possible by Lemma 1. We do not choose a threat pair.
- Case 2: \(\alpha _t^*< 0\) and \(u\notin {\textsc {safestep}}(t,\alpha ^*)\). Then we choose \(g^{tu} \in {\textsc {viable}}(u,\alpha ^*)\) with \(\phi _{i_t}(g^{tu}) < \alpha _t^*\). This is possible by Lemma 1. We do not choose a threat pair.
- Case 3: \(\alpha _t^*< 0\) and \(u\in {\textsc {safestep}}(t,\alpha ^*)\). Then let L denote the \(\alpha ^*\)-level to which t belongs and let \(F = \{x\in L\mid {\textsc {r}}(x) \ge {\textsc {r}}(t)\}\). By Lemma 4, there exist \(U\in {\mathcal {U}}(F,\alpha ^*)\) with \(U(t) = u\) and a plan \(g^{tu}\in {\textsc {admiss}}(t,U,\alpha ^*)\) such that \(\phi _{i_t}(g^{tu}) = \alpha _t^*\) and \(D(g^{tu},U) = \varnothing \). If \(g^{tu}\) is admissible due to condition AD-iv and not due to AD-i, AD-ii, or AD-iii, then we additionally choose a threat pair \((x^{tu},v^{tu})\) for \(g^{tu}\).

(The reader may note that the plan \(g^{tu}\) starts at t in Case 3, and that it starts at u in Cases 1 and 2. This is inconsequential regarding its use as a prescription. In all three cases, if the prescription becomes current, the active state is already u when that happens.)

Table 1 lists the choices of the main plan \(g^{tu}\) and the threat pair \((x^{tu},v^{tu})\) for the game of Example 3, for every pair (t, u).

With the above choices, we are ready to formulate a joint strategy as outlined in Sect. 4.1, by providing a prescription for play at every stage of the game. Here, we fill in the details.

The prescription for the players, at any stage, is given in two possible forms. A type I prescription consists of a main plan g alone. A type II prescription consists of a main plan g together with a threat pair (x, v). If the prescription is of type I, then the players are supposed to follow the main plan g in its entirety. If the prescription is of type II, then the players are supposed to follow the main plan g until the first occurrence of x on g. The player who controls x is then required to perform a lottery, where it is decided whether plan g is continued or whether a switch to plan v is made. A type II prescription will only be current until the lottery. After the lottery, a prescription of type II automatically reduces to a prescription of type I. It reduces to the main plan g if the lottery player chose continuation of g, or to the threat plan v if the lottery player decided to make the switch to v.

A renewal of the prescription becomes necessary if one of the players chooses an action with zero probability according to the current prescription. If this should happen, the new prescription becomes the one associated with the pair (t, u), where \(t\in S\) is the state where the deviation took place, and \(u\in A(t)\) is the state that was nominated.

To complete our description of a joint strategy, it remains to provide the specifics of the lotteries that may have to take place. This is where the parameter \(\varepsilon \) plays a role. Let us determine an upper bound M on the absolute value of the expected reward to any player in the game G. We define

To play according to the joint strategy \(\pi _\varepsilon \), a lottery player must always place probability \(1-q_\varepsilon \) on continuation of the main plan and probability \(q_\varepsilon \) on a switch to the threat plan.
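The bookkeeping for type I and type II prescriptions can be sketched as a small data structure. The encoding is an assumption, not the paper's notation:

- A prescription stores the main plan g as a tuple of states (absorbing, ending in a termination marker) and an optional threat pair (x, v).
- `q` stands for \(q_\varepsilon \), whose definition is not reproduced here.
- `table` is a hypothetical lookup from pairs (t, u) to the prescriptions chosen in Sect. 4.3.

The toy example uses the plan \((3,1_a,2,1_b,3,3^*)\) with the threat pair \((1_b,v)\), \(v=(1_b^*)\), from Example 3. Position tracking after a renewal is left out, because \(g^{tu}\) starts at t in Case 3 and at u in Cases 1 and 2.

```python
from dataclasses import dataclass
from typing import Dict, Mapping, Optional, Tuple

# Hypothetical encoding: a plan is a finite tuple of states; an absorbing plan ends in a
# termination marker such as "3*". Only finite (absorbing) main plans are handled here.
Plan = Tuple[str, ...]


@dataclass(frozen=True)
class Prescription:
    main: Plan                                  # main plan g
    threat: Optional[Tuple[str, Plan]] = None   # threat pair (x, v); None means type I


def prescribed_distribution(p: Prescription, pos: int, q: float) -> Dict[str, float]:
    """Distribution over the nominated state when play is at position `pos` of the main plan."""
    g = p.main
    if pos + 1 >= len(g):
        return {}                               # play has already terminated
    continuation = g[pos + 1]
    if p.threat is not None:
        x, v = p.threat
        if pos == g.index(x):                   # first occurrence of x on g: the lottery
            return {continuation: 1.0 - q, v[0]: q}
    return {continuation: 1.0}


def after_lottery(p: Prescription, switched: bool) -> Prescription:
    """After the lottery, a type II prescription reduces to type I (threat plan v or main plan g)."""
    assert p.threat is not None, "only type II prescriptions have a lottery"
    _, v = p.threat
    return Prescription(main=v) if switched else Prescription(main=p.main)


def renew(p: Prescription, pos: int, t: str, u: str, q: float,
          table: Mapping[Tuple[str, str], Prescription]) -> Prescription:
    """Keep p if nominating u at t has positive probability under p; otherwise use table[(t, u)]."""
    if prescribed_distribution(p, pos, q).get(u, 0.0) > 0.0:
        return p
    return table[(t, u)]
```

At the first occurrence of \(1_b\), the sketch returns the lottery `{"3": 1 - q, "1b*": q}`. Everywhere else, the main plan is followed with probability 1.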

4.4 Main result

Before we prove that \(\pi _{\varepsilon }\) is a subgame perfect \(\varepsilon \)-equilibrium for the game G, we first establish a property of play when one player deviates while the other players stick to \(\pi _\varepsilon \).

Lemma 5

Let p be an arbitrary path. Assume that play has developed along path p and that \(t = {\textsc {last}}(p)\) is the current state. Suppose that player \(i = i_t\) chooses an action that has probability 0 according to the prescription dictated by \(\pi _{\varepsilon }\) at state t, and suppose that each player \(j\in N{\setminus } \{i\}\) is going to use strategy \(\pi _{\varepsilon }^j\) after p. Suppose further that player i becomes active again after his deviation at t, say at state s. (Player i may or may not be active between t and s.) Then \(\alpha ^*_s \le \alpha ^*_t\). Moreover, if \(\alpha ^*_s = \alpha ^*_t < 0\) and if no lottery took place during play from t to s, then \({\textsc {r}}(s) < {\textsc {r}}(t)\).

Proof

Say that player i violates the prescription at t with the action \(u\in A(t)\). After this, the new prescription of \(\pi _\varepsilon \) is given by the main plan \(g^{tu}\), possibly together with the threat pair \((x^{tu}, v^{tu})\).

Let us first assume that all players acted according to the prescription from u to s, so that player i deviated only at t. For the claim \(\alpha ^*_s \le \alpha ^*_t\), we distinguish between two cases.

Case 1: Assume that state s does not lie on plan \(g^{tu}\). This is only possible if the main plan \(g^{tu}\) is associated with a threat pair \((x^{tu},v^{tu})\), if state \(x^{tu}\) was active before s, and if the lottery player chose the switch to plan \(v^{tu}\). Thus, state s lies on plan \(v^{tu}\). We then have \(t\notin {\textsc {sat}}(v^{tu},\alpha ^*)\) by the properties of a threat plan (see AD-iv), that is, \(\phi _i(v^{tu}) < \alpha ^*_t\), and \(s\in {\textsc {sat}}(v^{tu},\alpha ^*)\), that is, \(\phi _i(v^{tu}) \ge \alpha ^*_s\), because plan \(v^{tu}\) is \(\alpha ^*\)-viable. It follows that \(\alpha ^*_s \le \phi _i(v^{tu}) < \alpha ^*_t\).

Case 2: Assume that state s lies on plan \(g^{tu}\). We have \(\phi _i(g^{tu}) \le \alpha ^*_t\) by the choice of plan \(g^{tu}\). We also have \(\phi _i(g^{tu}) \ge \alpha ^*_s\), since s lies on the \(\alpha ^*\)-viable plan \(g^{tu}\). It follows that \(\alpha ^*_s \le \alpha ^*_t\).

For the second claim of the lemma, assume that \(\alpha ^*_s = \alpha ^*_t <0\) and that no lottery took place during play from t to s. Then \(u\in {\textsc {safestep}}(t,\alpha ^*)\). Otherwise, a plan \(g^{tu}\) with \(\phi _i(g^{tu}) < \alpha ^*_t\) would have been chosen, and since s lies on the \(\alpha ^*\)-viable plan \(g^{tu}\) (no lottery took place), we would have \(\alpha ^*_t > \phi _i(g^{tu}) \ge \alpha ^*_s\), contradicting \(\alpha ^*_s = \alpha ^*_t\).

We have \(g^{tu}\in {\textsc {admiss}}(t,U,\alpha ^*)\), where L denotes the \(\alpha ^*\)-level to which t belongs and U is an \(\alpha ^*\)-safe combination with domain \(F = \{x\in L\mid {\textsc {r}}(x) \ge {\textsc {r}}(t)\}\) and with \(U(t) = u\). Moreover, we have \(D(g^{tu},U) = \varnothing \) and \(\phi _{i}(g^{tu}) = \alpha _t^*\), by the choice of \(g^{tu}\). We see that \(g^{tu}\) does not satisfy AD-i, as we assume \(\alpha ^*_t < 0\). We also see that \(g^{tu}\) does not satisfy AD-ii, since \(\phi _{i}(g^{tu}) = \alpha _t^*< 0\) implies that \(g^{tu}\) is absorbing. Thus, plan \(g^{tu}\) satisfies AD-iii or AD-iv.

Note that s and t must be different states. Indeed, if \(g^{tu}\) satisfies AD-iii, this follows from the fact that all states of F on \(g^{tu}\) are pairwise different. If \(g^{tu}\) satisfies AD-iv, then s also differs from t, by our assumption in the lemma that no lottery took place between t and s, together with the fact that, by AD-iv-(a), all states of F on \(g^{tu}\) before \(x^{tu}\) are pairwise different.

We now prove that \(s\notin F\). Suppose to the contrary that \(s\in F\), and hence that \(s\in ({\textsc {S}}(g^{tu}) \cap F) {\setminus } \{t\}\). If \(g^{tu}\) satisfies AD-iii, then it follows that \(s\in ({\textsc {S}}(g^{tu}) \cap F) {\setminus } \{t\} = D(g^{tu},U)\). If \(g^{tu}\) satisfies AD-iv, then it follows that s is a state in \(({\textsc {S}}(g^{tu}) \cap F) {\setminus } \{t\}\) that comes before the lottery, hence that \(s\in D(g^{tu},U)\). In both cases, this contradicts \(D(g^{tu},U) = \varnothing \).

We proved that \(s\notin F\), hence that \(s\in L {\setminus } F = \{x\in L\mid {\textsc {r}}(x) < {\textsc {r}}(t)\}\). Thus, \({\textsc {r}}(s) < {\textsc {r}}(t)\).

Now assume that player i did not only deviate at state t, but deviated multiple times before s was reached. Then we apply the result for a single deviation to each stretch of play between one deviation and the next (and from the last deviation to s), and chain the resulting inequalities. This shows that the lemma also holds for multiple deviations. \(\square \)
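Lemma 5 is used later as a potential argument: along the successive states at which player i deviates, \(\alpha ^*\) never increases, and while it stays constant and negative without an intervening lottery, the rank strictly decreases. The hypothetical helper below checks these two conclusions on a recorded sequence of (\(\alpha ^*\)-value, rank, lottery-since-previous) triples, for example as a sanity test for an implementation of \(\pi _\varepsilon \); it does not compute \(\alpha ^*\) or ranks itself.

```python
from typing import List, Tuple


def satisfies_lemma5(visits: List[Tuple[float, int, bool]]) -> bool:
    """visits[k] = (alpha_star, rank, lottery_since_previous) at player i's k-th deviation state.

    Checks: alpha_star never increases; and if it stays equal and negative with
    no lottery in between, the rank strictly decreases.
    """
    for (a_prev, r_prev, _), (a_cur, r_cur, lottery) in zip(visits, visits[1:]):
        if a_cur > a_prev:
            return False
        if a_cur == a_prev < 0 and not lottery and not r_cur < r_prev:
            return False
    return True
```

Checking consecutive pairs suffices: \(\alpha ^*\) is non-increasing, so equal endpoints force equal intermediate values, and strict rank decreases chain transitively.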

Theorem 1

Joint strategy \(\pi _\varepsilon \) is a subgame perfect \(\varepsilon \)-equilibrium for the game G.

Proof

Let p be an arbitrary path and let \(i\in N\). Assume that play has developed along path p, that all players \(j\in N{\setminus } \{i\}\) are going to use strategy \(\pi ^j_\varepsilon \) after p, and that i is the only player who does not necessarily play according to \(\pi _\varepsilon \). Let us denote the strategy of player i by \(\sigma ^i\) and the resulting joint strategy by \(\sigma \). We will prove that the reward to player i is at most \(\varepsilon \) higher in expectation if, after p, play is according to \(\sigma \), compared to i’s reward if play is according to \(\pi _{\varepsilon }\): \(\psi _i^p(\sigma ) \le \psi _i^p(\pi _\varepsilon ) + \varepsilon \).

Let us first provide a lower bound for the expected reward for i if play is according to the joint strategy \(\pi _\varepsilon \). For this, we denote by \(g^p\) the main plan from the prescription of \(\pi _{\varepsilon }\) given to the players when play has reached the last state of path p. If the prescription of \(\pi _\varepsilon \) comes without a threat pair, then plan \(g^p\) will be executed with probability 1, and the expected reward for player i equals \(\phi _i(g^p)\). If the prescription consists of the main plan \(g^p\) together with a threat pair \((x^p,v^p)\), then the expected reward for player i equals \((1-q_\varepsilon ) \phi _i(g^p) + q_\varepsilon \phi _i(v^p) = \phi _i(g^p) + q_\varepsilon (\phi _i(v^p) - \phi _i(g^p))\), as \(g^p\) will be executed with probability \(1- q_\varepsilon \) and \(v^p\) with probability \(q_\varepsilon \); since \(|\phi _i(v^p) - \phi _i(g^p)| \le 2M\), this is at least \(\phi _i(g^p) - 2 q_\varepsilon M\). In both cases, the number \(\phi _i(g^p) - 2 q_\varepsilon M\) is therefore a lower bound for the expected reward for player i under joint strategy \(\pi _\varepsilon \), i.e.,

\[\psi _i^p(\pi _\varepsilon ) \ge \phi _i(g^p) - 2 q_\varepsilon M. \tag{1}\]

Recall that, by definition of \(q_\varepsilon \), we have \(2 q_\varepsilon M \le \frac{1}{2} \varepsilon \). Thus, it will be sufficient to prove that the expected reward for player i under joint strategy \(\sigma \) is bounded from above by \(\phi _i(g^p) + 2 q_\varepsilon M\), i.e.,

\[\psi _i^p(\sigma ) \le \phi _i(g^p) + 2 q_\varepsilon M. \tag{2}\]
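The lower bound for player i under \(\pi _\varepsilon \) and the target upper bound under \(\sigma \) combine with \(2 q_\varepsilon M \le \frac{1}{2} \varepsilon \) (that is, \(4 q_\varepsilon M \le \varepsilon \)) to give the required inequality:

```latex
\begin{aligned}
\psi_i^p(\sigma)
  &\le \phi_i(g^p) + 2 q_\varepsilon M
   = \bigl(\phi_i(g^p) - 2 q_\varepsilon M\bigr) + 4 q_\varepsilon M \\
  &\le \psi_i^p(\pi_\varepsilon) + 4 q_\varepsilon M
   \le \psi_i^p(\pi_\varepsilon) + \varepsilon .
\end{aligned}
```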

We divide the proof into three cases, depending on the number of deviations by player i after p. For each case, we either bound the expected reward of the deviating player i from above by \(\phi _i(g^p) + 2 q_\varepsilon M\), or we prove that the case has probability 0 of happening.

Case I: Player i does not deviate during play after history p under \(\sigma \). We distinguish three subcases.

(a): Assume that the prescription is given by the main plan \(g^p\) without a threat pair. Then the expected reward to player i is equal to \(\phi _i(g^p)\).

(b): Assume that the prescription is given by the main plan \(g^p\) together with the threat pair \((x^p,v^p)\), and that player i is the controlling player of state \(x^p\). Then it is still true that either plan \(g^p\) or plan \(v^p\) will be executed, because we assume no deviations. By the properties of a threat pair, we have \(x^p\in {\textsc {sat}}(g^p,\alpha ^*)\) and \(x^p\notin {\textsc {sat}}(v^p,\alpha ^*)\); since \(i = i_{x^p}\), this means \(\phi _i(v^p) < \alpha ^*_{x^p} \le \phi _i(g^p)\). Therefore, whatever probabilities player i uses in the lottery at \(x^p\), his expected reward in this subcase is bounded from above by \(\phi _i(g^p)\).

(c): Assume that the prescription is given by the main plan \(g^p\) together with the threat pair \((x^p,v^p)\), and that player i is not the controlling player of state \(x^p\). Then, like under strategy \(\pi _{\varepsilon }\), plan \(g^p\) will be executed with probability \(1-q_\varepsilon \) and plan \(v^p\) will be executed with probability \(q_\varepsilon \). Here, player i may gain if \(v^p\) is executed, but in expectation the gain will be small: The expected reward for i under \(\sigma \) is \((1-q_\varepsilon ) \phi _i(g^p) + q_\varepsilon \phi _i(v^p) \le \phi _i(g^p) + 2 q_\varepsilon M\).

We see that (2) holds in each of the cases (a), (b), and (c).

Case II: Player i deviates at least once after history p under \(\sigma \), but only finitely many times. Let us denote the state where player i makes his first deviation by \(t\in S\) and the state where i makes his last deviation by \(s\in S\). Let us further denote the action chosen by player i at state s by \(u\in A(s)\). After the last deviation by player i, prescribed play according to \(\pi _\varepsilon \) is given by the main plan \(g^{su}\), possibly together with the threat pair \((x^{su},v^{su})\). Since no further deviations will take place, either plan \(g^{su}\) or \(v^{su}\) will be executed in its entirety. We have \(\phi _{i}(g^{su}) \le \alpha _s^*\) and \(\phi _{i}(v^{su}) < \alpha _s^*\) by the choices for \(g^{su}\) and \(v^{su}\). Therefore, the expected reward for player i is at most \(\alpha _s^*\). By Lemma 5, we have \(\alpha _s^*\le \alpha _t^*\), so we can bound the expected reward to player i from above by \(\alpha _t^*\).

Let h denote the main plan in the prescription of \(\pi _{\varepsilon }\) just before player i wants to make his first deviation at t. (We have \(h = g^p\) or possibly \(h=v^p\) if a lottery takes place before player i makes his first deviation.) Then \(\phi _i(h) \ge \alpha ^*_t\), since t lies on h and since h is an \(\alpha ^*\)-viable plan. Thus, the reward to player i will be at least as good if he just follows the prescription of the main plan with probability 1, which is a strategy where he does not deviate. We have already seen in Case I that his reward is then bounded from above by \(\phi _i(g^p) + 2q_\varepsilon M\).

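Case III: Player i deviates infinitely many times after history p under \(\sigma \). We show that, depending on the sign of \(\alpha ^*\) at the first deviation, this case either yields player i no more than in Case I or occurs with probability 0.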

Let t denote the state where player i first deviates. First assume that \(\alpha ^*_t \ge 0\). Infinitely many deviations by player i imply infinite play along non-absorbing states. Therefore, the reward to player i is zero if this happens, which is at most \(\alpha ^*_t\). Let h denote the main plan in the prescription of \(\pi _\varepsilon \) just before player i wants to make his first deviation at t. We have \(\phi _i(h) \ge \alpha ^*_t\), since t lies on h and since h is \(\alpha ^*\)-viable. Thus, the reward to player i will be at least as good if he just follows the prescription of the main plan with probability 1, which is a strategy where he does not deviate. We have seen in Case I that his reward is then bounded from above by \(\phi _i(g^p) + 2q_\varepsilon M\).

Now assume that \(\alpha ^*_t < 0\). If player i deviates infinitely many times, then a state in \(S^i\) becomes active infinitely many times. By Lemma 5, the \(\alpha ^*\)-value of subsequent states in \(S^i\) does not increase. Since \(\alpha ^*\) takes only finitely many values (S is finite), after a while the \(\alpha ^*\)-value of the visited states in \(S^i\) becomes a constant, say c, and \(c \le \alpha ^*_t < 0\). Then, by Lemma 5, the rank of the visited states in \(S^i\) strictly decreases until a lottery is executed by a player \(j\ne i\). Since the rank of a state can decrease only finitely many times, a lottery will be executed infinitely many times. If at such a lottery, where the prescription is given by, say, main plan h together with a threat pair (y, w), the lottery player \(i_y\) chooses plan w, then player i will not be able to deviate again at a state with the constant \(\alpha ^*\)-value c. Thus, at every one of these infinitely many lotteries, the outcome must be continuation of the main plan. Since \(q_\varepsilon > 0\) and each lottery independently selects continuation with probability \(1-q_\varepsilon \), this happens with probability 0. \(\square \)
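The final step of Case III uses only that each lottery selects continuation independently with probability \(1-q_\varepsilon \), where \(q_\varepsilon > 0\): the probability that n lotteries all continue is \((1-q_\varepsilon )^n\), which tends to 0 as n grows. The sketch below (function names hypothetical) computes how many lotteries suffice to push this probability below a given threshold, so no positive probability survives infinitely many lotteries.

```python
import math


def prob_all_continue(q: float, n: int) -> float:
    """Probability that n independent lotteries, each switching with probability q,
    all choose continuation of the main plan."""
    return (1.0 - q) ** n


def lotteries_until_below(q: float, delta: float) -> int:
    """Smallest n with (1 - q)^n <= delta, for 0 < q <= 1 and 0 < delta < 1."""
    if not (0.0 < q <= 1.0 and 0.0 < delta < 1.0):
        raise ValueError("need 0 < q <= 1 and 0 < delta < 1")
    if q == 1.0:
        return 1  # a single lottery already switches with certainty
    n = math.ceil(math.log(delta) / math.log(1.0 - q))
    # guard against floating-point rounding in the logarithms
    while prob_all_continue(q, n) > delta:
        n += 1
    while n > 1 and prob_all_continue(q, n - 1) <= delta:
        n -= 1
    return n
```

For example, with \(q = 0.5\) and threshold 0.01, seven lotteries suffice, since \(0.5^7 \approx 0.0078\) while \(0.5^6 \approx 0.0156\).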

5 A fixed point theorem

There is one thing left to do, which is to prove that a vector \(\alpha \in {{\mathbb {R}}}^S\) with properties F-i and F-ii exists. For this, we introduce, in Sect. 5.1, a non-empty set \(\Omega \subseteq {{\mathbb {R}}}^S\) of semi-stable vectors, for which we prove that

This implies that, for \(\alpha \in \Omega \) and \(U\in {\mathcal {U}}(\alpha )\), the updated vector \(\delta (U,\alpha )\) is finite and satisfies \(\delta (U,\alpha ) \ge \alpha \) (see Sect. 5.2). If we could additionally prove that \(\delta (U,\alpha ) \in \Omega \), then the existence of a fixed point in \(\Omega \) would follow as an easy corollary. However, as an example in Kuipers et al. (2016) demonstrates, for certain vectors \(\alpha \in \Omega \) and \(U\in {\mathcal {U}}(\alpha )\), we have \(\delta (U,\alpha ) \notin \Omega \). This motivates the definition of a set \(\Omega ^*\subseteq \Omega \) of stable vectors, in Sect. 5.3. The results derived in Sects. 5.1 and 5.2 for vectors of the set \(\Omega \) hold for vectors of the set \(\Omega ^*\) as well, as \(\Omega ^*\) is by definition a subset of \(\Omega \). The main effort in Sects. 5.3, 5.4, and 5.5 will therefore go into proving that, for all \(\alpha \in \Omega ^*\) and all \(U\in {\mathcal {U}}(\alpha )\), the vector \(\delta (U,\alpha )\) is an element of \(\Omega ^*\). The fixed point theorem is subsequently established in Sect. 5.6.
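One natural reading of the "easy corollary" above is a monotone-iteration argument: if the update never decreases a coordinate and the iterates can take only finitely many values, repeating the update must stop at a fixed point. The sketch below illustrates only that pattern. The update `delta` is a hypothetical callable (the choice of U at each step is folded into it), the finiteness of the value set is an assumption of the sketch, and the toy update in the test is not the map \(\delta \) of the text.

```python
from typing import Callable, Dict, Hashable

Vector = Dict[Hashable, float]


def iterate_to_fixed_point(delta: Callable[[Vector], Vector], alpha: Vector,
                           max_steps: int = 10_000) -> Vector:
    """Apply alpha <- delta(alpha) until alpha no longer changes.

    Assumptions of this sketch: delta never decreases a coordinate, and the
    iterates take values in a finite set, so the loop stops at a fixed point.
    """
    for _ in range(max_steps):
        new_alpha = delta(alpha)
        if any(new_alpha[s] < alpha[s] for s in alpha):
            raise ValueError("delta decreased a coordinate; monotonicity assumption violated")
        if new_alpha == alpha:
            return alpha  # delta(alpha) == alpha: a fixed point
        alpha = new_alpha
    raise RuntimeError("no fixed point reached within max_steps")
```

For example, on a three-state cycle with a toy update that replaces each coordinate by its maximum with the successor's coordinate, the iteration from (-1, 0, 2) passes through (0, 2, 2) and stabilises at (2, 2, 2).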

5.1 Semi-stable vectors and their properties

In this subsection, we present a condition for \(\alpha \in {{\mathbb {R}}}^S\), which we call semi-stability. This condition guarantees the existence of a \((t,U,\alpha )\)-admissible plan for all \(U\in {\mathcal {U}}(\alpha )\) and all \(t\in F(U)\), even with the additional property that, for every edge (x, y) of the plan, x is \(\alpha \)-safe at state y. For \(\alpha \in {{\mathbb {R}}}^S\), let us therefore define the edge set

and the graph
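Restricting the game graph to a set of permitted edges, and then looking for plans only inside that subgraph, can be sketched as follows. The precise edge condition is supplied here as a hypothetical callback `is_safe_edge(x, y)`; the adjacency-list representation of \(A(\cdot )\) and the helper names are assumptions as well.

```python
from typing import Callable, Dict, Hashable, List, Set

State = Hashable


def safe_edge_graph(actions: Dict[State, List[State]],
                    is_safe_edge: Callable[[State, State], bool]) -> Dict[State, List[State]]:
    """Keep only the edges (x, y), y in A(x), that pass the supplied safety test;
    returns the subgraph as an adjacency list on the same state set."""
    return {x: [y for y in ys if is_safe_edge(x, y)] for x, ys in actions.items()}


def reachable(graph: Dict[State, List[State]], start: State) -> Set[State]:
    """States that a plan starting at `start` can visit in the filtered graph (iterative DFS)."""
    seen, stack = {start}, [start]
    while stack:
        x = stack.pop()
        for y in graph.get(x, []):
            if y not in seen:
                seen.add(y)
                stack.append(y)
    return seen
```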

In the following, our aim is to impose an appropriate set of properties on the subsets of S, and then deduce the existence of a plan \(g\in {\textsc {admiss}}(t,U,\alpha )\) in \({\mathbf {G}}(\alpha )\) for all \(U\in {\mathcal {U}}(\alpha )\) and all \(t\in F(U)\). For \(\alpha \in {{\mathbb {R}}}^S\) and \(X\subseteq S\), we define

We also define

and for \(\alpha \in {{\mathbb {R}}}^S\), we define