Abstract

Hong and Kao (2004) proposed a class of generally applicable wavelet-based tests for serial correlation of unknown form in the residuals from a panel regression model. The tests can be applied to both static and dynamic panel models. Their test, however, is computationally difficult to implement, and simulation studies show that it has poor small-sample properties. In this paper, we extend Gençay’s (2010) time-series test for serial correlation to the panel data case. Our new test is also wavelet based and maintains the advantages of the Hong and Kao (2004) test, but it is much simpler and easier to implement. Furthermore, simulation results show that our test has a faster convergence rate and hence better small-sample properties than the Hong and Kao (2004) test. We also compare our test with several other existing tests for serial correlation and find that our test generally has better statistical properties in terms of both size and power.

1 Introduction

Serially correlated errors in regression models have several implications for econometric modeling, such as making parameter estimation inefficient and invalidating the commonly used Student’s t and F tests. Moreover, for the panel data case, commonly used estimators of dynamic models such as system GMM and the Arellano–Bond (1991) estimator are only valid as long as the model errors are serially uncorrelated. Testing for serially correlated errors in panel models is thus an essential part of econometric modeling.

Most panel data tests, for example, those of Breusch and Pagan (1980), Bhargava et al. (1982), Baltagi and Li (1995) and Bera et al. (2001), test for no serial correlation against the alternative of serial correlation of some known form. Lee and Hong (2001) and Hong and Kao (2004) relaxed the assumption that the form of the serial correlation should be known. This new class of tests is constructed using the wavelet spectrum, which can detect serial correlation where the spectrum has peaks or kinks. This class of tests can be applied to residuals from a wide range of panel data models: static models, dynamic models, one- or two-way error-component models, and fixed-effects and random-effects models. Because the Hong and Kao (2004) test is more general than other tests, it also has higher power. Among the weaknesses of the Hong and Kao (2004) test is its complex structure, which causes the convergence rate to be slow. Additionally, although the Hong and Kao test statistic has an asymptotic standard normal distribution under the null, critical values for small samples have to be generated by simulation, which further complicates the test and makes it computationally time-consuming.

In this paper, we propose an alternative serial correlation test for panel data models that maintains the strengths of the Hong and Kao (2004) test and at the same time has a simpler structure, a higher convergence rate and better small-sample properties. Our test is still wavelet based and is constructed by combining the variance ratio test proposed by Gençay (2010) for time-series models with the Fisher-type test applied in Choi (2001). Our test has a simple construction and converges to a normal distribution quickly. No empirical critical values need to be simulated; thus, the computational burden is significantly reduced. Simulations show that the small-sample properties of our test compare favorably to those of other commonly used tests.

The rest of this paper is structured as follows: Sect. 2 introduces the wavelet transform and the Hong and Kao test, Sect. 3 introduces the panel data test, Sect. 4 contains the simulation study, and Sect. 5 concludes the paper.

2 Wavelet method and the Hong and Kao test

2.1 Introduction to the wavelet transform

Wavelet transform methods began to gain the attention of statisticians and econometricians after a series of articles by, for example, Schleicher (2002) and Crowley (2007), Vidakovic (1999), Percival and Walden (2000) and Gençay et al. (2001). The wavelet methodology represents an arbitrary time series in both time and frequency domains by convolution of the time series with a series of small wavelike functions. Corresponding to the time-infinite sinusoidal waves in the Fourier transform, the time-located wavelet basis functions \( \left\{ {\psi_{jk} ( \cdot ):j,k \in {\mathbb{Z}}} \right\} \) used in the wavelet transform are generated by translations and dilations of a basic mother wavelet \( \psi \in L^{2} ({\mathbb{R}}) \). The function basis is constructed through \( \psi_{jk} (t) = 2^{j/2} \psi (2^{j} t - k) \), where k is the location index and j is the scale index that corresponds to the information inside the frequency band \( \left[ {\frac{1}{{2^{j + 1} \Delta }};\frac{1}{{2^{j} \Delta }}} \right] \), in which \( \Delta \) is the length of sampling interval of the series. For a continuous signal f, its wavelet transform is given by the wavelet coefficients \( f^{*} = \{ \gamma (j,k)\}_{{k,j \in {\mathbb{Z}}}} \) with \( \gamma (j,k) = \langle f,\psi_{jk} \rangle = \int {f(t)\psi_{jk}^{*} (t)\,{\text{d}}t} \), which represent the resolution at time k and scale j. The resolutions in the time domain and the frequency domain are achieved by shifting the time index k and the scale index j, respectively. A lower level of j corresponds to higher-frequency bands, and a higher level of j corresponds to lower-frequency bands. For a time series \( Z = \{ Z_{t} ,t = 0, \ldots ,T - 1\} \) sampled at discrete time points, its wavelet coefficients are obtained via the discrete wavelet transform (DWT) or maximum overlap discrete wavelet transform (MODWT).

The DWT is implemented by applying a cascade of orthonormal high-pass and low-pass filters to a time series, which separates its characteristics into different frequency bands (Mallat 1989). The level J DWT transforms a dyadic time series into \( T - 1 \) wavelet coefficients structured as J scales and one scaling coefficient. Scale \( j = 1, \ldots ,J \) contains information in the frequency band \( \left[ {\frac{1}{{2^{j + 1} }};\frac{1}{{2^{j} }}} \right] \) and consists of \( \frac{T}{{2^{j} }} \) coefficients that correspond to strictly adjacent wavelet functions. The maximum overlap discrete wavelet transform (MODWT) is a variation of the DWT. The level J MODWT projects the time series Z on \( J \times T \) wavelet functions. After the level J MODWT, we obtain \( J + 1 \) transformed vectors \( {\mathbf{W}}_{1} , \ldots ,{\mathbf{W}}_{J} ,{\mathbf{V}}_{J} \). The T-dimensional vectors \( {\mathbf{W}}_{j} \) and \( {\mathbf{V}}_{J} \) are computed as \( {\mathbf{W}}_{j} = w_{j} Z \) and \( {\mathbf{V}}_{J} = v_{J} Z \) with \( j = 1,2, \ldots ,J \). The \( T \times T \) matrices \( w_{j} \) (\( j = 1,2, \ldots ,J \)) can be viewed as high-pass filters that extract the higher part of the frequency band in Z. The output from this high-pass filtering is the wavelet coefficients \( {\mathbf{W}}_{j} \), which correspond to fluctuations or changes in averages on a scale of \( \tau_{j} = 2^{j - 1} \). The \( T \times T \) matrix \( v_{J} \) is the low-pass filter, which extracts the lowest part of the frequency band in Z. The output from this low-pass filtering is the scaling coefficients \( {\mathbf{V}}_{J} \), which correspond to averages on a scale of \( \lambda_{J} = 2^{J} \). In the MODWT, the T functions in each scale are translated by only one time period per iteration and thus overlap to a great extent, in contrast to the strictly adjacent wavelet functions of the DWT. The overlapping property allows considerably greater smoothness in the reconstruction of selected frequency bands at the cost of losing the orthogonality property. For a detailed treatment of the DWT and MODWT, we refer to Vidakovic (1999), Percival and Walden (2000) and Gençay et al. (2001).
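To make the MODWT description concrete, the following is a minimal sketch of a level-1 Haar MODWT. It assumes circular (periodic) boundary handling and the standard Haar MODWT filters (1/2, -1/2) and (1/2, 1/2); the function names `haar_modwt_level1` and `energy` are hypothetical and chosen only for this illustration.

```python
def haar_modwt_level1(z):
    """Level-1 Haar MODWT with circular boundary handling (an assumption).

    Returns (w, v): wavelet and scaling coefficients, each of length len(z).
    """
    T = len(z)
    # z[t - 1] with t = 0 wraps to the last element, which gives the
    # circular boundary treatment.
    w = [(z[t] - z[t - 1]) / 2.0 for t in range(T)]  # high-pass: changes
    v = [(z[t] + z[t - 1]) / 2.0 for t in range(T)]  # low-pass: averages
    return w, v


def energy(x):
    """Sum of squared coefficients."""
    return sum(a * a for a in x)


# T = 5 is not a power of 2, which the MODWT handles without padding.
z = [2.0, 4.0, 1.0, 3.0, 5.0]
w, v = haar_modwt_level1(z)
print(w, v)
print(energy(w) + energy(v), energy(z))  # the two energies coincide
```

Each output vector has the same length as the input, and the energy of the series is split between the wavelet and scaling coefficients, which is the property the variance ratio in Sect. 3 relies on.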

Generally, the wavelet coefficients from either the DWT or the MODWT are separated into different scales of wavelet and scaling coefficients representing different frequencies. When the series \( Z = \{ Z_{t} ,t = 0, \ldots ,T - 1\} \) is pure white noise, its variance is evenly distributed over the whole frequency interval, so after the DWT or MODWT the variance of the wavelet and scaling coefficients should be evenly distributed as well. On the other hand, if \( Z = \{ Z_{t} ,t = 0, \ldots ,T - 1\} \) is not white noise and contains serial correlation, this even distribution of variance will be violated. This idea underlies the variance-ratio-based test for serial correlation developed in Sect. 3.
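As a worked illustration of this idea, consider the simplest case, assumed here only for concreteness: a level-1 Haar MODWT applied to a zero-mean covariance-stationary series with variance \( \sigma^{2} \) and lag-1 autocorrelation \( \rho (1) \) (both symbols are introduced for this sketch). The wavelet and scaling coefficients are then \( W_{t} = (Z_{t} - Z_{t - 1} )/2 \) and \( V_{t} = (Z_{t} + Z_{t - 1} )/2 \), so that

\[ E(W_{t}^{2} ) = \frac{{\sigma^{2} }}{2}\left( {1 - \rho (1)} \right),\quad E(V_{t}^{2} ) = \frac{{\sigma^{2} }}{2}\left( {1 + \rho (1)} \right),\quad \frac{{E(W_{t}^{2} )}}{{E(W_{t}^{2} ) + E(V_{t}^{2} )}} = \frac{1 - \rho (1)}{2}. \]

Under white noise, \( \rho (1) = 0 \) and the wavelet coefficients carry exactly half of the variance. Positive serial correlation pushes this share below one half and negative serial correlation pushes it above one half.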

2.2 The Hong and Kao (2004) test

The panel data model in Hong and Kao (2004) is given by:

where \( X_{it} \) can be either static or dynamic, in the sense of including lagged values of \( Y_{it} \), \( \left\{ {\mu_{i} } \right\} \) is an individual effect that is mutually independent across individuals, and \( \left\{ {\lambda_{t} } \right\} \) is a common time effect. In the Hong and Kao test, the test framework is:

The test is performed on the demeaned estimated residuals \( \hat{v}_{it} = \hat{u}_{it} - \bar{u}_{i.} - \bar{u}_{.t} + \bar{u},\quad (t = 1, \ldots ,T_{i} ; \, i = 1, \ldots ,n) \), where \( \hat{u}_{it} = Y_{it} - X_{it}^{\prime } \hat{\beta } \), \( \bar{u}_{i.} = T_{i}^{ - 1} \sum\nolimits_{t = 1}^{{T_{i} }} {\hat{u}_{it} } \), \( \bar{u}_{.t} = n^{ - 1} \sum\nolimits_{i = 1}^{n} {\hat{u}_{it} } \), \( \bar{u} = (nT_{i} )^{ - 1} \sum\nolimits_{i = 1}^{n} {\sum\nolimits_{t = 1}^{{T_{i} }} {\hat{u}_{it} } } \) and \( \hat{\beta } \) denotes a consistent estimator under \( H_{0} \). Instead of using the lag h autocovariance function \( R_{i} (h) = E(v_{it} v_{it - \left| h \right|} ) \), Hong and Kao (2004) use the power spectrum \( f_{i} (\omega ) = (2\pi )^{ - 1} \sum\nolimits_{h = - \infty }^{ + \infty } {R_{i} (h){\text{e}}^{{ - {\text{i}}h\omega }} } ,\omega \in [ - \pi ,\pi ] \) to build the test statistic because it contains the information on serial correlation at all lags. Moreover, instead of the Fourier representation of the spectral density, a wavelet-based spectral density \( \varPsi_{jk} (\omega ) \) using the above-mentioned wavelet basis \( \psi \in L^{2} ({\mathbb{R}}) \) is used, with \( \varPsi_{jk} (\omega ) \) defined as:

\( \varPsi_{jk} (\omega ) \) can effectively capture the local peaks and spikes in spectral density by shifting the time effect index k. Based on the empirical wavelet coefficients \( \hat{\alpha }_{ijk} = (2\pi )^{ - 1/2} \sum\nolimits_{h} {R_{i} (h)\varPsi_{jk}^{*} (\omega )} \), the heteroscedasticity-consistent test statistic \( \tilde{W}_{1} \) and heteroscedasticity-corrected test statistic \( \tilde{W}_{2} \), as well as their distribution under \( H_{0} \), are defined separately as:

The Hong and Kao (2004) test is spectral density based and requires no specification of the alternative form; thus, it is generally applicable to a wide range of serial correlations. However, the Hong and Kao (2004) test has three main disadvantages. First, both test statistics are constructed using the hyperparameters \( J_{i} \) (the resolution levels in the wavelet decomposition), which are determined by a computationally intensive, data-driven method. Second, although Hong and Kao (2004) show that \( \tilde{W}_{1} \mathop{\longrightarrow}\limits^{d}N(0,1) \) and \( \tilde{W}_{2} \mathop{\longrightarrow}\limits^{d}N(0,1) \), both test statistics converge slowly, so the empirical size falls seriously below the nominal size when the asymptotic critical values from the standard normal distribution are used directly. It is therefore necessary to use bootstrapped or simulated critical values, which further complicates the test. Third, because the test statistics are based on the DWT, the data set is restricted in that \( T_{i} \) must be a power of 2. All of these disadvantages hinder the test’s popularity. We therefore propose a much simpler test and show that it overcomes the shortcomings of \( \tilde{W}_{1} \) and \( \tilde{W}_{2} \) while still giving good results in testing a wide range of serial correlations in the generalized panel model.

3 A panel data test based on wavelet variance ratio

The test in Hong and Kao (2004) is the panel extension of the wavelet spectrum-based serial correlation test for a single series proposed by Lee and Hong (2001). However, the Lee and Hong (2001) test has a slow convergence rate because of the estimation of the nonparametric spectral density. An alternative time-series test for serial correlation of unknown form is the Gençay (2010) variance ratio test, which converges to the normal distribution at a much faster parametric rate. In this paper, we extend the Gençay (2010) test to the panel data case by using a Fisher-type test that combines the p-values from individual serial correlation tests based on Gençay (2010). This p-value combination strategy is inspired by Maddala and Wu (1999) and Choi (2001). Choi (2001) noted that combining p-values allows more general assumptions on the underlying panel models, such as stochastic or non-stochastic models, balanced or unbalanced data, and homogeneous or heterogeneous alternatives. This generality matches the aforementioned wide range of panel models covered by Hong and Kao (2004), which makes our comparisons possible. Choi (2001) also shows that this p-value combination test generally has better size and power performance than previous panel unit root tests. A potential shortcoming of this testing procedure is that it requires the cross-sectional units to be uncorrelated. This assumption is also imposed by the Hong and Kao test, and we therefore do not consider the case of cross-sectional dependence in this paper.

Our test procedure for serial correlation is straightforward. First, the errors in Eq. (1) for each individual i are estimated. Second, the estimated errors are transformed to the wavelet domain using the Haar filter-based MODWT. Unlike the DWT, the MODWT does not impose any restrictions on the sample size, whereas the DWT-based Hong and Kao test restricts the sample size to a power of 2. The MODWT of the estimated errors yields two sets of transform coefficients, \( W_{i} \) and \( V_{i} \). For a discrete time series, which has the frequency band \( \left( {0,\frac{1}{2}} \right] \), \( W_{i} \) represents the upper half of the frequency content of the errors (frequency band \( \left( {\frac{1}{4},\frac{1}{2}} \right] \)), and \( V_{i} \) represents the lower half (frequency band \( \left( {0,\frac{1}{4}} \right] \)). Third, if the errors are white noise, each half of the frequency band has the same energy. Thus, the wavelet-ratio test statistic \( G_{i} = \frac{{\sum\nolimits_{t = 1}^{{T_{i} }} {W_{it}^{2} } }}{{\sum\nolimits_{t = 1}^{{T_{i} }} {W_{it}^{2} } + \sum\nolimits_{t = 1}^{{T_{i} }} {V_{it}^{2} } }} \) has the expected value \( \frac{1}{2} \). Gençay (2010) shows that the test statistic \( S_{i} = \sqrt {4T_{i} } \left( {\frac{1}{2} - G_{i} } \right) = N(0,1) + O_{p} (T_{i}^{ - 1} )\mathop{\longrightarrow}\limits^{d}N(0,1) \) under \( H_{0} \), and it can be used to test for serial correlation of unknown order in a time series. Gençay (2010) further shows that the test based on \( S_{i} \) performs well in small samples. The last step in our test procedure is to combine the individual wavelet-ratio tests using a Fisher-type test based on the p-values of the individual tests (Choi 2001).
Three p-value-based test statistics are defined in Choi (2001) as \( P = - 2\sum\nolimits_{i = 1}^{n} {\ln (p_{i} )} \), \( Z = \frac{1}{\sqrt n }\sum\nolimits_{i = 1}^{n} {\varPhi^{ - 1} (p_{i} )} \), \( L = \sum\nolimits_{i = 1}^{n} {\ln \left( {\frac{{p_{i} }}{{1 - p_{i} }}} \right)} \), where \( p_{i} \) is the p-value of the test for individual i and \( \varPhi \) is the cumulative distribution function of the standard normal distribution. Among the three test statistics, we choose the inverse normal test statistic \( Z = \frac{1}{\sqrt n }\sum\nolimits_{i = 1}^{n} {\varPhi^{ - 1} (p_{i} )} \). Stouffer et al. (1949) already proved that \( Z\mathop{\longrightarrow}\limits^{d}N(0,1) \) under \( H_{0} \), and Choi (2001) showed that, compared to the other two Fisher-type tests P and L, the inverse normal test statistic Z performs best and is recommended for empirical applications. Furthermore, the p-value in the Z test statistic is defined as \( p_{i} = 1 - \varGamma (S_{i}^{2} ) \), where \( \varGamma \) is the cumulative distribution function of the chi-square distribution with one degree of freedom. The reason why we use \( S_{i}^{2} \) instead of applying \( S_{i} \) directly to obtain the p-values in the panel framework is that the test based on \( S_{i} \) is a two-sided normal test and will lose power seriously when the panel data contain both positive and negative correlations. As \( S_{i}^{2} \mathop{\longrightarrow}\limits^{d}\chi^{2} (1) \), the test based on \( S_{i}^{2} \) is a one-sided test and can be used to test against heterogeneous alternatives. Another advantage of using \( S_{i}^{2} \) instead of \( S_{i} \) is that the convergence rate becomes \( O_{p} (T_{i}^{ - 2} ) \), which leads to even better small-sample performance. This last advantage can be derived directly from the product rule for big-O terms (Lemma 1.18; p. 16, Dinneen et al. 2009): if \( g_{1} \in O_{p} (f_{1} ) \) and \( g_{2} \in O_{p} (f_{2} ) \), then \( g_{1} g_{2} \in O_{p} (f_{1} f_{2} ) \).
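The steps of the procedure can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: it assumes a level-1 Haar MODWT with circular boundary handling, uses the identity that the upper tail of a chi-square(1) variable at \( S_{i}^{2} \) equals `erfc(|S_i| / sqrt(2))`, clamps the p-values away from 0 and 1 as a numerical safeguard, and assumes that the null is rejected in the lower tail of Z (small p-values give large negative Z). All function names are hypothetical.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def wavelet_ratio_stat(e):
    """S_i = sqrt(4 T_i) * (1/2 - G_i) from a level-1 Haar MODWT of e."""
    T = len(e)
    # e[t - 1] wraps around at t = 0 (circular boundary, an assumption).
    w2 = sum(((e[t] - e[t - 1]) / 2.0) ** 2 for t in range(T))
    v2 = sum(((e[t] + e[t - 1]) / 2.0) ** 2 for t in range(T))
    g = w2 / (w2 + v2)
    return math.sqrt(4.0 * T) * (0.5 - g)


def chi2_1_pvalue(s):
    """p_i = 1 - Gamma(S_i^2), with Gamma the chi-square(1) CDF."""
    return math.erfc(abs(s) / math.sqrt(2.0))


def inverse_normal_z(pvalues, eps=1e-12):
    """Z = n^(-1/2) * sum of Phi^(-1)(p_i); eps is a numerical safeguard."""
    clipped = [min(max(p, eps), 1.0 - eps) for p in pvalues]
    total = sum(_STD_NORMAL.inv_cdf(p) for p in clipped)
    return total / math.sqrt(len(clipped))


def panel_test(residuals, alpha=0.05):
    """Return (Z, p-values, reject) for a list of residual series."""
    s = [wavelet_ratio_stat(e) for e in residuals]
    p = [chi2_1_pvalue(si) for si in s]
    z = inverse_normal_z(p)
    # Assumption: reject H0 when Z falls in the lower alpha tail of N(0, 1).
    reject = z < _STD_NORMAL.inv_cdf(alpha)
    return z, p, reject


# Toy residuals for two individuals with unequal patterns of correlation.
e1 = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
e2 = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]
print(panel_test([e1, e2]))
```

Because the series may have different lengths, the sketch applies unchanged to unbalanced panels. Under the circular Haar assumption, \( \frac{1}{2} - G_{i} \) equals half of the circular lag-1 sample autocorrelation (computed without demeaning), so \( S_{i} \) is \( \sqrt {T_{i} } \) times that autocorrelation.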

4 Simulation study

The small-sample properties of our proposed inverse normal test statistic Z are compared with those of the Hong and Kao (2004) tests, \( \hat{W}_{1} \) and \( \hat{W}_{2} \), in a simulation study. We also compare it with Hong’s (1996) kernel-based tests, \( \hat{K}_{1} \) and \( \hat{K}_{2} \), Baltagi and Li’s (1995) Lagrange multiplier (LM) test BL and Bera et al.’s (2001) modified LM test BSY. We use the same data generation processes as Hong and Kao (2004) for direct comparison. To evaluate the size of the test, we consider both a static panel model with data-generating process DGP1: \( Y_{it} = 5 + 0.5X_{it} + \mu_{i} + u_{it} \), \( X_{it} = 5 + 0.5X_{it - 1} + \eta_{it} \) with \( \eta_{it} \sim {\text{i.i.d.}}\;U[ - 0.5,0.5] \), and a dynamic panel model with data-generating process DGP2: \( Y_{it} = 5 + 0.5Y_{it - 1} + \mu_{i} + u_{it} \). In both DGP1 and DGP2, the individual effect is \( \mu_{i} \sim {\text{i.i.d.}}\;N(0,0.4) \) and the error process is \( u_{it} \sim {\text{i.i.d.}}\;N(0,1) \). The error processes \( \left\{ {u_{it} } \right\} \) and \( \left\{ {u_{ls} } \right\} \) are mutually independent for \( i \ne l \) and all \( t,s \); thus, we assume independence of cross-sectional units here. For the power part, the error process \( \left\{ {u_{it} } \right\} \) has two alternatives:

In our simulations, we employ the Haar wavelet to limit the number of boundary coefficients, which may negatively affect the size and power of the test. Although other wavelet filters such as the Daubechies filters or the least asymmetric filters come closer to representing an ideal band-pass filter, they also generate more boundary coefficients. These filters are therefore more appropriate for longer time dimensions than those considered in our simulations. See Percival and Walden (2000) for a detailed discussion of how to choose an appropriate wavelet filter.

For the size and power of the Z test, we take the critical values at the 10% and 5% significance levels directly from the N(0, 1) distribution and set the number of replications to 1000, the same as in Hong and Kao (2004). We use \( Z_{1} \) to denote our Z test constructed from the static data generation process (DGP1) and \( Z_{2} \) to denote the Z test from the dynamic data generation process (DGP2). Table 1 shows the size performance of our test statistic \( Z_{1} \), and Table 2 shows the size performance of our test statistic \( Z_{2} \). Table 1 is compared with Hong and Kao’s (2004) Table I for the static model, and Table 2 is compared with Hong and Kao’s (2004) Table II for the dynamic model.
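A size experiment of this kind can be sketched as follows for the static DGP1. The sketch is not intended to reproduce Tables 1 and 2 and rests on several assumptions that are not stated in the text: 0.4 is read as the variance of the individual effect, the regressor is started at its stationary mean with a burn-in period, the slope is estimated with the fixed-effects (within) estimator, the Haar MODWT uses circular boundary handling, and the null is rejected in the lower tail of Z. All function names are hypothetical.

```python
import math
import random
from statistics import NormalDist

ND = NormalDist()


def simulate_dgp1(n, T, rng, burn=50):
    """Static panel DGP1 under H0; returns (ys, xs) as lists of series."""
    ys, xs = [], []
    for _ in range(n):
        mu = rng.gauss(0.0, math.sqrt(0.4))  # assumption: 0.4 is a variance
        x_prev = 10.0  # assumption: stationary mean 5 / (1 - 0.5)
        y_i, x_i = [], []
        for t in range(burn + T):
            x_t = 5.0 + 0.5 * x_prev + rng.uniform(-0.5, 0.5)
            x_prev = x_t
            if t >= burn:
                x_i.append(x_t)
                y_i.append(5.0 + 0.5 * x_t + mu + rng.gauss(0.0, 1.0))
        ys.append(y_i)
        xs.append(x_i)
    return ys, xs


def within_residuals(ys, xs):
    """Residuals from a within (fixed-effects) fit with one regressor."""
    yd = [[v - sum(y) / len(y) for v in y] for y in ys]
    xd = [[v - sum(x) / len(x) for v in x] for x in xs]
    sxy = sum(a * b for y, x in zip(yd, xd) for a, b in zip(y, x))
    sxx = sum(b * b for x in xd for b in x)
    beta = sxy / sxx
    return [[a - beta * b for a, b in zip(y, x)] for y, x in zip(yd, xd)]


def z_statistic(residuals):
    """Inverse normal combination of chi-square(1) p-values of S_i^2."""
    total = 0.0
    for e in residuals:
        T = len(e)
        w2 = sum((e[t] - e[t - 1]) ** 2 for t in range(T)) / 4.0
        v2 = sum((e[t] + e[t - 1]) ** 2 for t in range(T)) / 4.0
        s = math.sqrt(4.0 * T) * (0.5 - w2 / (w2 + v2))
        p = math.erfc(abs(s) / math.sqrt(2.0))
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # numerical safeguard
        total += ND.inv_cdf(p)
    return total / math.sqrt(len(residuals))


def empirical_size(n, T, alpha=0.05, reps=1000, seed=0):
    """Share of replications in which H0 is rejected under DGP1."""
    rng = random.Random(seed)
    crit = ND.inv_cdf(alpha)  # assumption: lower-tail rejection
    rejections = 0
    for _ in range(reps):
        ys, xs = simulate_dgp1(n, T, rng)
        if z_statistic(within_residuals(ys, xs)) < crit:
            rejections += 1
    return rejections / reps


print(empirical_size(n=5, T=8, alpha=0.05, reps=1000))
```

The critical value comes directly from the N(0, 1) distribution, so no additional simulation is needed for each \( (n,T) \) combination.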

In Tables 1 and 2, \( \hat{K}_{1} \), \( \hat{K}_{2} \), BL and BSY denote the heteroscedasticity-consistent Daniell kernel-based test, the heteroscedasticity-corrected Daniell kernel-based test, the Baltagi–Li test (Baltagi and Li 1995) and the Bera, Sosa-Escudero and Yoon test (Bera et al. 2001), respectively. When the replication number of the simulation is set to 1000, the 95% confidence intervals for an unbiased size at the 10% and 5% significance levels are \( 0.10 \pm 1.96 \times \sqrt {\frac{0.10(1 - 0.10)}{1000}} = (0.0814,0.1186) \) and \( 0.05 \pm 1.96 \times \sqrt {\frac{0.05(1 - 0.05)}{1000}} = (0.0365,0.0635) \), respectively. Tables 1 and 2 show that the sizes of our test statistic Z are almost all unbiased for both the static panel model and the dynamic panel model, even for very small sample sizes such as \( (n,T) \) = (5, 8) or (8, 5). In contrast, Tables I and II in Hong and Kao (2004) show that when the asymptotic critical values are used, the size is seriously under-biased for \( \hat{W}_{1} \), \( \hat{W}_{2} \), \( \hat{K}_{1} \) and \( \hat{K}_{2} \); for BL, the size is seriously over-biased, and for BSY, the size is either under-biased or over-biased. Bootstrapped critical values are then used in Hong and Kao (2004) to adjust the size, but an under- or over-bias problem remains for all of the tests. By contrast, our test uses critical values directly from the normal distribution, and the results are mostly unbiased.
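The confidence bounds quoted above follow from the normal approximation to a binomial proportion and can be reproduced with a short calculation. The function name `size_interval` is hypothetical.

```python
import math


def size_interval(alpha, reps, z=1.96):
    """Interval for the rejection rate of a test with exact size alpha."""
    half_width = z * math.sqrt(alpha * (1.0 - alpha) / reps)
    return alpha - half_width, alpha + half_width


for a in (0.10, 0.05):
    lower, upper = size_interval(a, 1000)
    print(f"nominal {a:.2f}: ({lower:.4f}, {upper:.4f})")
# prints:
# nominal 0.10: (0.0814, 0.1186)
# nominal 0.05: (0.0365, 0.0635)
```

An empirical rejection rate outside these bounds indicates a size that differs significantly from the nominal level at 1000 replications.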

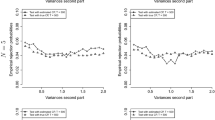

To evaluate the power of the proposed test, we let the error process follow the AR(1) process in Eq. (2) and the ARMA(12, 4) process in Eq. (3), and we obtain Tables 3 and 4, which correspond to Tables III and IV in Hong and Kao (2004). We use \( Z_{1} \) and \( Z_{2} \) to report the results for the static and dynamic cases, respectively, whereas Hong and Kao (2004) did not show results for the dynamic model.

In the power case for DGP1, because of the poor small-sample performance of their tests, Hong and Kao (2004) use simulated empirical critical values and bootstrap critical values for the power comparison. This is a demanding task because critical values must be simulated for all combinations of \( (n,T) \). In contrast, we can directly use the critical values from the standard normal distribution, making the testing procedure much more straightforward and easier to conduct. For the AR(1) type of error, Table 3 shows that the performance of our test is modestly better than that of the other tests overall; it is much better than almost all of the tests for sample size (5, 8) and better in all three models for sample size (50, 64). All of these examples demonstrate the much faster convergence rate of our test statistic. For sample sizes (10, 16) and (25, 32), the power of our test is more modest but still quite acceptable.

Table 4 shows that for the ARMA(12, 4) type of error, the tests in Hong and Kao (2004) have almost no power when the sample size is (10, 16), whereas our test performs much better. For sample sizes (25, 32) and (50, 64) in all three models, the only Hong and Kao (2004) test that compares well with our test is their \( \tilde{W}_{1} (J_{0} ) \) test. However, this test requires a computationally intensive, data-driven procedure for the choice of \( J_{0} \), which makes an already complex test even more cumbersome. Moreover, our test places no restrictions on T, whereas \( \tilde{W}_{1} \) and \( \tilde{W}_{2} \) require T to be a power of 2.

5 Conclusion

Compared with the test statistics \( \tilde{W}_{1} \) and \( \tilde{W}_{2} \) in Hong and Kao (2004), our test based on the statistic Z has a much simpler construction, a faster convergence rate and better small-sample performance, especially in terms of size. The faster convergence rate of Z may be explained by two factors: first, the nonparametric spectral density estimation in \( \tilde{W}_{1} \) and \( \tilde{W}_{2} \) slows the convergence rate; second, the p-values in Z are derived from \( S_{i}^{2} \) instead of \( S_{i} \), which leads to an individual convergence rate of \( O_{p} (T_{i}^{ - 2} ) \) instead of \( O_{p} (T_{i}^{ - 1} ) \). Moreover, by using the inverse normal test, our test can be easily extended to a test that is robust to cross-sectional dependence, either by using a modified inverse normal test (Hartung 1999) when combining the p-values or by obtaining critical values for the Fisher-type test by a wavestrapping (bootstrapping) method. Generally speaking, just by using the N(0, 1) distribution, we obtain unbiased size and quite comparable power performance when compared with all previous tests. Another shortcoming of Hong and Kao (2004) is that their wavelet transform is based on the orthonormal DWT, which requires T to be a power of 2. By using the MODWT in our test, this restriction is relaxed. Moreover, in a small sample, the MODWT yields a more accurate energy decomposition due to its smoothing property. A shortcoming of both the Hong and Kao (2004) test and our test is that they require the assumption of no cross-sectional dependence. We have left the case of cross-sectional dependence to future research since the main aim of this paper is to develop a test that maintains the strengths of the Hong and Kao test but has a faster convergence rate and does not require bootstrapping the critical values when the cross-sectional units are independent.

References

Arellano M, Bond S (1991) Some tests of specification for panel data: Monte Carlo evidence and an application to employment equations. Rev Econ Stud 58:277–297

Baltagi BH, Li Q (1995) Testing AR(1) against MA(1) disturbances in an error component model. J Econom 68:133–151

Bera AK, Sosa-Escudero W, Yoon M (2001) Tests for the error component model in the presence of local misspecification. J Econom 101:1–23

Bhargava A, Franzini L, Narendranathan W (1982) Serial correlation and the fixed effects model. Rev Econ Stud 49(4):533–549

Breusch TS, Pagan A (1980) The Lagrange multiplier test and its applications to model specification in econometrics. Rev Econ Stud 47:239–253

Choi I (2001) Unit root tests for panel data. J Int Money Finance 20:249–272

Crowley PM (2007) A guide to wavelets for economists. J Econ Surveys 21(2):207–267

Dinneen MJ, Gimel’farb G, Wilson MC (2009) An introduction to algorithms, data structures and formal languages, 2nd edn. Pearson Education, New York

Gençay R (2010) Serial correlation tests with wavelets. https://www.researchgate.net/publication/228534169_Serial_Correlation_Tests_with_Wavelets

Gençay R, Selçuk F, Whitcher B (2001) An introduction to wavelets and other filtering methods in finance and economics. Academic Press, San Diego

Hartung J (1999) A note on combining dependent tests of significance. Biom J 41(7):849–855

Hong Y (1996) Consistent testing for serial correlation of unknown form. Econometrica 64:837–864

Hong Y, Kao C (2004) Wavelet-based testing for serial correlation of unknown form in panel models. Econometrica 72:1519–1563

Lee J, Hong Y (2001) Testing for serial correlation of unknown form using wavelet methods. Econom Theory 17:386–423

Percival DB, Walden AT (2000) Wavelet methods for time series analysis. Cambridge University Press, Cambridge

Schleicher C (2002) An introduction to wavelets for economists. Staff Working Papers, Bank of Canada

Stouffer SA, Suchman EA, DeVinney LC, Star SA, Williams RM Jr (1949) The American soldier. Adjustment during army life, vol 1. Princeton University Press, Princeton

Vidakovic B (1999) Statistical modeling by wavelets. Wiley, New York

Acknowledgements

Open Access funding provided by University of Bergen. Fredrik N. G. Andersson gratefully acknowledges funding from Swedish Research Council (Project No. 421-2009-2663). Yushu Li gratefully acknowledges funding from Finance Market Fund, Norwegian Research Council (Project No. 274569).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Y., Andersson, F.N.G. A simple wavelet-based test for serial correlation in panel data models. Empir Econ 60, 2351–2363 (2021). https://doi.org/10.1007/s00181-020-01830-6
