Abstract

Partial least squares path modeling is a statistical method for analyzing complex dependence relationships among several blocks of observed variables, each one represented by a latent variable. The computation of latent variable scores is an essential step of the method, achieved through an iterative procedure named here Hanafi–Wold’s procedure. The present paper generalizes properties already known in the literature for this procedure, from which additional convergence results are obtained.


1 Introduction

Partial least squares path modeling (PLS-PM), originally developed by Wold (1982, 1985), is a powerful multivariate statistical method that allows us to analyze relationships among several blocks of observed variables, usually called manifest variables (MVs). Each block is assumed to represent an unobserved variable, the so-called latent variable (LV).

PLS-PM is considered to be an alternative approach to the covariance structure analysis (Jöreskog 1970), traditionally used for parameter estimation in Structural Equation Modeling (SEM). However, PLS-PM is based on a statistical approach quite different from covariance structure analysis for SEM, leading to different parameters to be estimated. It is actually better defined as an estimation method for composite-based path modeling, according to the most recent literature (Dijkstra 2017).

PLS-PM focuses on LV score computation, accounting for variances of MVs and correlations among LVs. It shows a number of interesting features (e.g., great flexibility, robustness, few demands concerning distributional assumptions and requirement for identification) and has become increasingly important in many areas (Esposito Vinzi et al 2010; Hair et al 2017).

The fundamental principle in PLS-PM is that all the information concerning the relationships between \(\textit{K}\) blocks of observable variables \(\mathbf{X }_{1}\),\(\mathbf{X }_{2},\ldots ,\mathbf{X }_{K}\) is assumed to be conveyed by \(\textit{K}\) composites \(\mathbf{z }_{1}\),\(\mathbf{z }_{2},\ldots ,\mathbf{z }_{K}\). Each composite, \(\mathbf{z }_{k}\), is a proxy of the corresponding LV, \( \varvec{\xi }_{k}\), which cannot be directly observed and is assumed to represent the block of \(\textit{p}_{k}\) MVs \(\mathbf{X }_{k}= [\mathbf{x }_{k,1}, \mathbf{x }_{k,2}, \ldots , \mathbf{x }_{k,p_{k}}]\). The same \(\textit{N}\) observations are measured on all blocks of MVs, stacked in the matrices \(\mathbf{X }_{1}\),\(\mathbf{X }_{2},\ldots ,\mathbf{X }_{K}\).

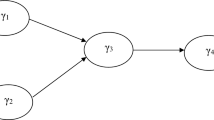

In PLS-PM the investigator starts with a conceptual picture of the model. In the conventional graphical representation of the conceptual model (see Fig. 1), the MVs \(\mathbf{x }_{k,j}\) \((1\le j\le p_{k}\); \(1\le k\le \textit{K})\) are represented by squares and LVs by circles. Using prior substantive knowledge and intuition the investigator can specify the links between LVs (represented by arrows) when these LVs are assumed to be related. The investigator therefore defines which LVs are to be connected to others and which are not.

Once the conceptual model is designed, the design of the formal model and the estimation algorithm are straightforward. Two models are considered in PLS-PM. The first one, called the measurement model, relates the MVs to the LVs. Each MV \(\mathbf{x }_{\mathrm{\textit{k,j}}}\) is related to its LV \( \varvec{\xi }_{k}\) by a simple regression \((1\le j\le p_{k}\); \(1\le k\le \textit{K})\):

$$\begin{aligned} \mathbf{x }_{k,j} = \pi ^{0}_{k,j} + \pi _{k,j}\varvec{\xi }_{k} + \varvec{\epsilon }_{k,j} \end{aligned}$$(1)

The only hypothesis made on the model in Eq. (1) is called the predictor specification condition:

$$\begin{aligned} E\left( \mathbf{x }_{k,j} \mid \varvec{\xi }_{k}\right) = \pi ^{0}_{k,j} + \pi _{k,j}\varvec{\xi }_{k} \end{aligned}$$(2)

This condition implies that the residuals \(\varvec{\epsilon }_{k,j}\) have zero mean and are mean-independent of the LV \(\varvec{\xi }_{k}\). The error terms of each block are allowed to correlate freely.

The second model, called the structural model, specifies the relationship between LVs. An LV that never appears as a dependent variable is called exogenous; otherwise, it is called endogenous. In the structural model, a dependent LV \(\varvec{\xi }_{k'}\) is related by a multiple regression model to the corresponding predictor LVs \(\varvec{\xi }_{k}\), \(k\in {J_{k'}}\), where \(J_{k'}=\lbrace k: \varvec{\xi }_{k'} \text { is predicted by } \varvec{\xi }_{k} \rbrace \):

$$\begin{aligned} \varvec{\xi }_{k'} = \beta _{k'0} + \sum \limits _{k \in J_{k'}} \beta _{k'k}\varvec{\xi }_{k} + \varvec{\zeta }_{k'} \end{aligned}$$(3)

where \(\varvec{\zeta }_{k'}\) denotes the residual term, on which the usual hypotheses implied by the predictor specification condition are made.

The estimation of the parameters of the models in Eqs. (1) and (3) is based on the PLS-PM algorithm which proceeds in three stages. The first stage computes the LV scores, \(\mathbf{z }_{1}\),\(\mathbf{z }_{2},\ldots ,\mathbf{z }_{K}\). Each \(\mathbf{z }_{k}\) is constructed as a linear combination of its indicators \(\mathbf{z }_{k}=\mathbf{X }_{k}\mathbf{w }_{k}\) with unit variance \((\frac{\mathbf{w }^{'}_{k}\mathbf{X }^{'}_{k}\mathbf{X }_{k}\mathbf{w }_{k}}{N}=1)\). The second stage estimates the model parameters in Eqs. (1) and (3), i.e., the parameters \(\pi _{k,j}(1\le j\le p_{k}, 1\le k\le K)\) and \(\beta _{k'k}\). Finally, the third stage estimates the location parameters, the parameters \(\pi ^{0}_{k,j}(1\le j\le p_{k}, 1\le k\le K)\) and \(\beta _{k'0}\). The first two stages use centred data \(\mathbf{X }_{1},\mathbf{X }_{2}\ldots \mathbf{X }_{K}\), and the two last stages use classical OLS regression and do not show any difficulty concerning the computation.
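The unit-variance constraint on a composite can be illustrated with a minimal sketch. The function name `unit_variance_composite` and the demonstration data are assumptions introduced here for illustration; the only ingredients taken from the text are the centred block, the linear combination \(\mathbf{z }_{k}=\mathbf{X }_{k}\mathbf{w }_{k}\) and the constraint \(\mathbf{w }^{'}_{k}\mathbf{X }^{'}_{k}\mathbf{X }_{k}\mathbf{w }_{k}/N=1\).

```python
import numpy as np

def unit_variance_composite(X, w):
    """Return rescaled weights and the composite z = Xc w with w'Xc'Xc w / N = 1."""
    Xc = X - X.mean(axis=0)                      # centre each manifest variable
    z = Xc @ w                                   # raw composite
    scale = np.sqrt(X.shape[0]) / np.linalg.norm(z)
    return scale * w, scale * z                  # same direction, unit variance

# Hypothetical block with N = 40 observations and p_k = 3 manifest variables.
rng = np.random.default_rng(0)
X_demo = rng.standard_normal((40, 3))
w_demo, z_demo = unit_variance_composite(X_demo, np.array([1.0, 0.5, -0.2]))
print(float(z_demo @ z_demo) / 40)               # variance of the composite
```

Rescaling the weights rather than the score keeps the relation \(\mathbf{z }_{k}=\mathbf{X }_{k}\mathbf{w }_{k}\) intact after normalisation.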

The present paper focuses on the computation of LV scores (i.e. the first stage of the PLS-PM algorithm). Hanafi (2007) points out that there are two main procedures for the computation of scores in the first stage of the PLS-PM algorithm: the original procedure proposed by Wold (1982, 1985) and extended by Hanafi (2007) (called here Hanafi–Wold’s procedure), and an alternative procedure introduced by Lohmöller (1989).

As demonstrated by Hanafi (2007), Hanafi–Wold’s procedure has the advantage of being monotonically convergent, and it reaches a stable solution faster than Lohmöller’s procedure. The latter does not converge monotonically, but it is the one implemented in most PLS-PM software applications, such as PLS-Graph (Chin 2003), PLS-GUI (Hubona 2015), SmartPLS (Ringle et al 2015) and XLSTAT’s PLSPM package (Addinsoft XLSTAT 2019), among others. The present paper focuses on Hanafi–Wold’s procedure.

Hanafi (2007) showed that the sequence of LV scores computed through Hanafi–Wold’s procedure increases one of two criteria, depending on which of two schemes is used to compute the weights relating LV scores (see Theorem 1 below); these schemes are well known in the PLS-PM community as the centroid and factorial schemes. Both criteria are functions of the correlation matrix of the LV scores. These monotony properties make it easy to establish the monotone convergence of Hanafi–Wold’s procedure. Here, monotone convergence means that the values taken iteratively by each criterion define a nondecreasing real sequence that converges.

The present paper proposes generalizations of some properties already established in the literature (Hanafi 2007) and from which additional convergence results for Hanafi–Wold’s procedure will be established.

The paper is organized as follows. Section 2 briefly describes Hanafi–Wold’s procedure for the computation of LV scores and summarizes its monotony properties as obtained by Hanafi (2007). Generalized forms of these properties are provided in Sect. 3. Conclusions and future research are presented in Sect. 4.

2 Hanafi–Wold’s procedure

The basic idea of Hanafi–Wold’s procedure was initially proposed by Wold (1985, p. 586) in the particular case of six blocks, and extended by Hanafi (2007, p. 280) to (i) any number of blocks and (ii) any conceptual design model.

Let \(\mathbf{C }=[\textit{c}_{\mathrm{\textit{k,l}}}]\) be a (\(\textit{K}\),\(\textit{K}\)) symmetric matrix that encodes the links between the LVs. It is defined from the conceptual design of the model. The elements of the matrix \({\mathbf{C }}\) are defined as follows: \(c_{k,l}=c_{l,k}=1\) if there is an arrow between the LVs \(\varvec{\xi }_{k}\) and \(\varvec{\xi }_{l}\), and \(c_{k,l}=c_{l,k}=0\) otherwise.
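As a small illustration of this definition, the sketch below builds \(\mathbf{C }\) from a list of arrows. The helper name `design_matrix`, the 0-based indexing and the example path diagram are assumptions made here, not part of the original description.

```python
import numpy as np

def design_matrix(n_blocks, arrows):
    """Build the symmetric 0/1 matrix C from arrows given as (k, l) pairs (0-based)."""
    C = np.zeros((n_blocks, n_blocks), dtype=int)
    for k, l in arrows:
        if k == l:
            raise ValueError("an LV cannot be linked to itself")
        C[k, l] = C[l, k] = 1        # the direction of the arrow is ignored
    return C

# Hypothetical diagram with three LVs: xi_1 -> xi_3 and xi_2 -> xi_3.
C_demo = design_matrix(3, [(0, 2), (1, 2)])
print(C_demo)
```

Because only the presence of an arrow matters, an arrow from \(\varvec{\xi }_{k}\) to \(\varvec{\xi }_{l}\) and one from \(\varvec{\xi }_{l}\) to \(\varvec{\xi }_{k}\) produce the same matrix.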

Overall, it is a symmetrical procedure (Dolce et al. 2018) for computing the weight vectors \(\mathbf{w }_{k}\) associated with \(\mathbf{z }_{k}=\mathbf{X }_{k}\mathbf{w }_{k} (1\le k\le \textit{K})\), under the constraints that these LV scores are centered and have unit variance \((\frac{\mathbf{w }^{'}_{k}\mathbf{X }^{'}_{k}\mathbf{X }_{k}\mathbf{w }_{k}}{N}=1)\).

Hanafi–Wold’s procedure consists of iteratively building a sequence of LV scores \(\mathbf{z }^{(s)}_{k}=\mathbf{X }_{k}\mathbf{w }^{(s)}_{k},\) \( (1 \le k\le \textit{K})\) and \( (\textit{s} =0,1,2,\ldots )\), as follows:

For \(k_{0} = 1,2,\ldots ,K\):

1. For the block \(\mathbf{X }_{k_{0}}\), compute \({r^{(s)}_{k_{0},l}} = \left\{ {\begin{array}{ll}{r(\mathbf{z }^{(s)}_{k_{0}},\mathbf{z }^{(s+1)}_{l})}, & \hbox {if} \quad l < {k_{0}} \\ {r(\mathbf{z }^{(s)}_{k_{0}},\mathbf{z }^{(s)}_{l})}, & \hbox {if} \quad l > {k_{0}} \end{array}} \right. \), where r denotes the Pearson correlation coefficient.

2. \(\theta ^{(s)}_{k_{0},l} = \left\{ {\begin{array}{ll} \mathrm{sign}(r^{(s)}_{k_{0},l}) & \hbox {(centroid)} \\ r^{(s)}_{k_{0},l} & \hbox {(factorial)} \end{array}} \right. \)

3. \(\mathbf{Z }^{(s)}_{k_{0}}=\sum ^{k_{0}-1}_{l=1}c_{k_{0},l} \theta ^{(s)}_{k_{0},l}\mathbf{z }^{(s+1)}_{l}+\sum ^{K}_{l=k_{0}+1} c_{k_{0},l}\theta ^{(s)}_{k_{0},l}\mathbf{z }^{(s)}_{l}\)

4. \(\tilde{\mathbf{w }}^{(s+1)}_{k_{0}}= ({\mathbf{X }^{'}_{k_{0}}\mathbf{X }_{k_{0}}})^{-1} \mathbf{X }^{'}_{k_{0}}{\mathbf{Z }^{(s)}_{k_{0}}}\)

5. \({\mathbf{w }}^{(s+1)}_{k_{0}}=\sqrt{N} \frac{\tilde{\mathbf{w }}^{(s+1)}_{k_{0}}}{\left\| {\mathbf{X }_{k_{0}} \tilde{\mathbf{w }}^{(s+1)}_{k_{0}}}\right\| }\)

6. \(\mathbf{z }^{(s+1)}_{k_{0}} =\mathbf{X }_{k_{0}}\mathbf{w }^{(s+1)}_{k_{0}}\)

The procedure begins with an arbitrary choice of initialization. Once \(\mathbf{z }^{(s+1)}_{1}\),\(\mathbf{z }^{(s+1)}_{2},\ldots , {\mathbf{z }}^{(s+1)}_{{k_{0}-1}}\) have been computed for the blocks \(\mathbf{X }_{1}\),\(\mathbf{X }_{2},\ldots , \mathbf{X }_{k_{0}-1}\), the scores \(\mathbf{z }^{(s+1)}_{k_{0}}\),\(\mathbf{z} ^{(s+1)}_{k_{0}+1},\ldots , {\mathbf{z }}^{(s+1)}_{{K}}\) are computed in turn following the steps above. The process is iterated over s until the quantity \(\sum _{k=1}^{K} || \mathbf{z }^{(s+1)}_{k} - \mathbf{z }^{(s)}_{k} ||^2\) is smaller than or equal to a fixed threshold.
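The steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions rather than a reference implementation: the function name `hanafi_wold`, the random initialisation, the default threshold and the use of `numpy.linalg.lstsq` for the mode B regression (equivalent to step 4 when \(\mathbf{X }^{'}_{k_{0}}\mathbf{X }_{k_{0}}\) is invertible) are choices made here. It also assumes that every block is linked to at least one other block, so that the inner estimate is not zero.

```python
import numpy as np

def hanafi_wold(blocks, C, scheme="centroid", tol=1e-12, max_iter=1000, seed=0):
    """Sketch of the mode B score computation; returns weights, scores, sweeps used."""
    N, K = blocks[0].shape[0], len(blocks)
    X = [np.asarray(B, dtype=float) - np.mean(B, axis=0) for B in blocks]  # centred
    rng = np.random.default_rng(seed)

    def normalise(Xk, w):
        # Rescale w so that z = Xk w has unit variance, i.e. ||z||^2 = N (step 5).
        return np.sqrt(N) * w / np.linalg.norm(Xk @ w)

    # Arbitrary initialisation (assumption: random weights).
    W = [normalise(Xk, rng.standard_normal(Xk.shape[1])) for Xk in X]
    Z = [Xk @ wk for Xk, wk in zip(X, W)]

    for sweep in range(1, max_iter + 1):
        Z_prev = [z.copy() for z in Z]
        for k in range(K):
            # Step 1: Z[l] already holds z_l^(s+1) for l < k and z_l^(s) for l > k.
            # Scores are centred with ||z||^2 = N, so the correlation is z_k'z_l / N.
            r = np.array([Z[k] @ Z[l] / N for l in range(K)])
            # Step 2: inner weights for the chosen scheme.
            theta = np.sign(r) if scheme == "centroid" else r
            # Step 3: inner estimate built from the linked blocks only.
            inner = sum(C[k][l] * theta[l] * Z[l] for l in range(K) if l != k)
            # Step 4 (mode B): regression of the inner estimate on the block.
            w_tilde = np.linalg.lstsq(X[k], inner, rcond=None)[0]
            # Steps 5-6: rescale the weights and update the score in place.
            W[k] = normalise(X[k], w_tilde)
            Z[k] = X[k] @ W[k]
        # Stopping rule: total squared change of the scores over one sweep.
        change = sum(np.sum((z - zp) ** 2) for z, zp in zip(Z, Z_prev))
        if change <= tol:
            break
    return W, Z, sweep

# Hypothetical data: three blocks sharing a common signal, all LVs linked.
rng = np.random.default_rng(1)
common = rng.standard_normal((60, 1))
blocks = [common + rng.standard_normal((60, p)) for p in (3, 4, 2)]
C = np.array([[0, 1, 1], [1, 0, 1], [1, 1, 0]])
W, Z, sweeps = hanafi_wold(blocks, C, scheme="factorial")
print(sweeps, [round(float(z.var()), 6) for z in Z])
```

Updating `Z[k]` in place is what makes the sweep use the newest scores for the blocks already visited and the previous scores for the others, as in step 3.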

Clearly, the procedure depends on the scheme chosen in step 2, that is, on how the \(\theta ^{(s)}_{k_{0},l}\) elements are calculated. Step 4 considers only the calculation of the weights using the so-called mode B. An alternative for the weights calculation, known as mode A (see Hanafi 2007), can also be used, but the present work is limited to mode B.

The Hanafi–Wold’s procedure can be presented in a compact form, depending on the two chosen schemes (i.e., centroid or factorial). For the centroid scheme, the procedure can be presented as follows:

where

For the factorial scheme, the procedure can be presented as follows:

where

The compact forms (4) and (6) are obtained straightforwardly by substituting step 4 in step 3 in the Hanafi–Wold’s procedure.
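The substitution can be sketched as follows for the mode B steps listed above. The projector \(\mathbf{P }_{k_{0}}\) is a symbol introduced here for illustration, and this display is only one way of writing the update, not necessarily the exact arrangement of the numbered compact forms.

$$\begin{aligned} \mathbf{P }_{k_{0}}&= \mathbf{X }_{k_{0}}\left( \mathbf{X }^{'}_{k_{0}}\mathbf{X }_{k_{0}}\right) ^{-1}\mathbf{X }^{'}_{k_{0}}, \\ \mathbf{X }_{k_{0}}\tilde{\mathbf{w }}^{(s+1)}_{k_{0}}&= \mathbf{P }_{k_{0}}\mathbf{Z }^{(s)}_{k_{0}}, \\ \mathbf{z }^{(s+1)}_{k_{0}}&= \sqrt{N}\,\frac{\mathbf{P }_{k_{0}}\mathbf{Z }^{(s)}_{k_{0}}}{\left\| \mathbf{P }_{k_{0}}\mathbf{Z }^{(s)}_{k_{0}}\right\| }. \end{aligned}$$

In words, each update projects the inner estimate \(\mathbf{Z }^{(s)}_{k_{0}}\) onto the column space of the block \(\mathbf{X }_{k_{0}}\) and rescales the result to unit variance.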

Hanafi (2007) establishes two monotony properties for the iterative procedure presented above. These two properties are summarized in the following theorem.

Theorem 1

(Hanafi 2007, pp. 282)

(i) Let \({\mathbf{z }}_k^{\left( s \right) } = {{\mathbf{X }}_k}{\mathbf{w }}_k^{\left( s \right) } (1 \le k \le K)\), \(s = 0,1,2,\ldots \), be a sequence of LV scores generated by Hanafi–Wold’s procedure. When the centroid scheme is considered, then the following inequalities hold:

$$\begin{aligned} \forall s\quad f\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \le f\left( {{\mathbf{z }}_1^{\left( {s + 1} \right) },{\mathbf{z }}_2^{\left( {s + 1} \right) }, \ldots ,{\mathbf{z }}_K^{\left( {s + 1} \right) }} \right) , \end{aligned}$$(8)

where f is given as follows:

$$\begin{aligned} f\left( {{\mathbf{z }}_1^{},{\mathbf{z }}_2^{}, \ldots ,{\mathbf{z }}_K^{}} \right) = \sum \limits _{k,l = 1,k \ne l}^K {{c_{kl}}\left| {r\left( {{\mathbf{z }}_k^{},{\mathbf{z }}_l^{}} \right) } \right| } \end{aligned}$$(9)

(ii) Let \({\mathbf{z }}_k^{\left( s \right) } = {{\mathbf{X }}_k}{\mathbf{w }}_k^{\left( s \right) }\) be a sequence of LV scores generated by Hanafi–Wold’s procedure. When the factorial scheme is considered, then the following inequalities hold:

$$\begin{aligned} \forall s\quad g\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \le g\left( {{\mathbf{z }}_1^{\left( {s + 1} \right) },{\mathbf{z }}_2^{\left( {s + 1} \right) }, \ldots ,{\mathbf{z }}_K^{\left( {s + 1} \right) }} \right) \end{aligned}$$(10)

where g is given as follows:

$$\begin{aligned} g\left( {{\mathbf{z }}_1^{},{\mathbf{z }}_2^{}, \ldots ,{\mathbf{z }}_K^{}} \right) = \sum \limits _{k,l = 1,k \ne l}^K {{c_{kl}}{r^2}\left( {{\mathbf{z }}_k^{},{\mathbf{z }}_l^{}} \right) } \end{aligned}$$(11)

As a direct consequence of Theorem 1, the monotone convergence of Hanafi–Wold’s procedure was established.

Corollary 1 (respectively, Corollary 2) in Hanafi (2007, pp. 284–287) establishes the monotone convergence of Hanafi–Wold’s procedure; that is to say, the real sequence \({a^{\left( s \right) }} = f\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \) (respectively, the real sequence \({b^{\left( s \right) }} = g\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \)) converges.
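The two criteria in (9) and (11) are easy to evaluate from a set of scores, which is convenient for tracking the sequences \(a^{(s)}\) and \(b^{(s)}\) numerically. The sketch below is an illustration only; the function name `criteria` and the toy scores are assumptions introduced here.

```python
import numpy as np

def criteria(scores, C):
    """Evaluate f (centroid criterion, Eq. 9) and g (factorial criterion, Eq. 11)."""
    R = np.corrcoef(np.column_stack(scores), rowvar=False)  # correlations of the scores
    off = ~np.eye(len(scores), dtype=bool)                   # keep only pairs with k != l
    C = np.asarray(C)
    f = float(np.sum(C[off] * np.abs(R[off])))
    g = float(np.sum(C[off] * R[off] ** 2))
    return f, g

# Toy scores: z2 = -z1, and z3 is uncorrelated with both.
z1 = np.array([1.0, -1.0, 1.0, -1.0])
z2 = -z1
z3 = np.array([1.0, 1.0, -1.0, -1.0])
C_full = np.ones((3, 3), dtype=int) - np.eye(3, dtype=int)
f_demo, g_demo = criteria([z1, z2, z3], C_full)
print(f_demo, g_demo)
```

Each linked pair is counted twice because the sums in (9) and (11) run over all ordered pairs with \(k \ne l\).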

3 Generalization of Hanafi’s Theorem 1 and its consequences

The present section provides a generalisation of Hanafi’s Theorem 1.

Theorem 2

(i) Let \({\mathbf{z }}_k^{\left( s \right) } = {{\mathbf{X }}_k}{\mathbf{w }}_k^{\left( s \right) }\) (\(1 \le k \le K\)), \(s = 0,1,2,\ldots \), be a sequence of LV scores generated by Hanafi–Wold’s procedure. When the centroid scheme is considered, the following equality holds:

$$\begin{aligned} f\left( {\mathbf{{z}}_1^{\left( {s + 1} \right) },\mathbf{{z}}_2^{\left( {s + 1} \right) }, \cdots ,\mathbf{{z}}_K^{\left( {s + 1} \right) }} \right) - f \left( {\mathbf{{z}}_1^{\left( s \right) },\mathbf{{z}}_2^{\left( s \right) }, \cdots , \mathbf{{z}}_K^{\left( s \right) }} \right) =\dfrac{1}{N} \sum \limits _{k = 1}^K {\lambda _k^{\left( s \right) }{{\left\| {\mathbf{{z}}_k^{\left( {s + 1} \right) } - \mathbf{{z}}_k^{\left( s \right) }} \right\| }^2}} \qquad \end{aligned}$$(12)

where f is given in (9) and \(\lambda _k^{\left( s \right) }\) is given in (5).

(ii) Let \({\mathbf{z }}_k^{\left( s \right) } = {{\mathbf{X }}_k}{\mathbf{w }}_k^{\left( s \right) }\) (\(1 \le k \le K\)), \(s = 0,1,2,\ldots \), be a sequence of LV scores generated by Hanafi–Wold’s procedure. When the factorial scheme is considered, the following equality holds:

$$\begin{aligned}&g\left( {{\mathbf{z }}_1^{\left( {s + 1} \right) },{\mathbf{z }}_2^{\left( {s + 1} \right) }, \ldots ,{\mathbf{z }}_K^{\left( {s + 1} \right) }} \right) - g\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \nonumber \\&\quad =\dfrac{1}{N} \sum \limits _{k = 1}^K {\mu _k^{\left( s \right) } {{\left\| {\mathbf{{z}}_k^{\left( {s + 1} \right) } - \mathbf{{z}}_k^{\left( s \right) }} \right\| }^2}} \end{aligned}$$(13)

where g is given in (11) and \(\mu _k^{\left( s \right) }\) is given in (7).

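Theorem 2 generalizes Theorem 1. Indeed, it is sufficient to note that the right-hand side of Eq. (12) in Theorem 2(i) is always nonnegative:

$$\begin{aligned} \dfrac{1}{N} \sum \limits _{k = 1}^K {\lambda _k^{\left( s \right) }{{\left\| {\mathbf{{z}}_k^{\left( {s + 1} \right) } - \mathbf{{z}}_k^{\left( s \right) }} \right\| }^2}} \ge 0 \end{aligned}$$

It follows that:

$$\begin{aligned} f\left( {{\mathbf{z }}_1^{\left( {s + 1} \right) },{\mathbf{z }}_2^{\left( {s + 1} \right) }, \ldots ,{\mathbf{z }}_K^{\left( {s + 1} \right) }} \right) - f\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \ge 0 \end{aligned}$$

As a consequence, inequalities (8) in Theorem 1(i) hold.

In the same way, the right-hand side of Eq. (13) in Theorem 2(ii) is also always nonnegative:

$$\begin{aligned} \dfrac{1}{N} \sum \limits _{k = 1}^K {\mu _k^{\left( s \right) }{{\left\| {\mathbf{{z}}_k^{\left( {s + 1} \right) } - \mathbf{{z}}_k^{\left( s \right) }} \right\| }^2}} \ge 0 \end{aligned}$$

As a consequence, inequalities (10) in Theorem 1(ii) hold.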
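A short worked identity may help in reading the squared norms that appear in Theorem 2. It uses only the constraints stated in Sect. 2, namely that every score is centred and has unit variance, so that \(\left\| \mathbf{z }_k^{(s)}\right\| ^2 = N\) for every s:

$$\begin{aligned} \left\| \mathbf{z }_k^{(s+1)} - \mathbf{z }_k^{(s)}\right\| ^2&= \left\| \mathbf{z }_k^{(s+1)}\right\| ^2 + \left\| \mathbf{z }_k^{(s)}\right\| ^2 - 2\,\mathbf{z }_k^{(s+1)'}\mathbf{z }_k^{(s)} \\&= 2N\left( 1 - r\left( \mathbf{z }_k^{(s+1)},\mathbf{z }_k^{(s)}\right) \right) . \end{aligned}$$

Each squared norm therefore measures how far the correlation between two successive scores of the same block is from 1.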

Proof of Theorem 2 (i)

For \(k = 1,2,\ldots ,K\), let \({f_k}\) be the function defined by:

It is worth noting that \({f_k}\) can be also written as the function of \({\mathbf{Z }}_k^{\left( {s} \right) }\) given in (5), as:

where cov denotes the covariance.

Hanafi (2007, pp. 281) shows that \({f_k}\left( {{\mathbf{w }}_k^{\left( s \right) }} \right) \le {f_k}\left( {{\mathbf{w }}_k^{\left( {s + 1} \right) }} \right) \) with

Evaluating \({f_k}\) respectively in \({\mathbf{w }}_k^{\left( {s + 1} \right) }\) and \({\mathbf{w }}_k^{\left( {s} \right) }\) as follows:

and

it follows that

Considering the following equalities:

and summing over k, it follows

Substituting (16) into the right-hand side of equality (17) gives:

Proof of Theorem 2 (ii)

For \(k = 1,2,\ldots ,K\), let \({g_k}\) be the function defined by :

Hanafi (2007, pp. 285) shows that

\({\mathbf{w }}_k^{\left( {s + 1} \right) }\) can be written equivalently as

Noting that

it results that

The proof of Theorem 2 (ii) then follows from the following equalities:

Indeed, summing these equalities over k, it follows:

and substituting Eq. (18) into the right-hand side of Eq. (19), it follows

\(\square \)

Theorem 2 allows additional convergence results of Hanafi–Wold’s procedure, as summarized in the following corollary.

Corollary 3

Let \({\mathbf{z }}_k^{\left( s \right) }\) be a sequence of LV scores generated by Hanafi–Wold’s procedure, then

(i) when the centroid scheme is considered, the sequence \({\lambda _k^{\left( s \right) }} \left( {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right) \) converges to 0;

(ii) when the factorial scheme is considered, the sequence \({\mu _k^{\left( s \right) }} \left( {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right) \) converges to 0.

Proof of Corollary 3

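As a matter of fact, starting from Theorem 2(i), the following inequalities hold for each \(k (1\le k\le K)\):

$$\begin{aligned} 0 \le \dfrac{1}{N}\lambda _k^{\left( s \right) }{\left\| {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right\| ^2} \le f\left( {{\mathbf{z }}_1^{\left( {s + 1} \right) },{\mathbf{z }}_2^{\left( {s + 1} \right) }, \ldots ,{\mathbf{z }}_K^{\left( {s + 1} \right) }} \right) - f\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \end{aligned}$$

Because \(f\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \) converges (see Corollary 1 in Hanafi 2007, p. 284), then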

\(f\left( {{\mathbf{z }}_1^{\left( {s + 1} \right) },{\mathbf{z }}_2^{\left( {s + 1} \right) }, \ldots ,{\mathbf{z }}_K^{\left( {s + 1} \right) }} \right) - f\left( {{\mathbf{z }}_1^{\left( s \right) },{\mathbf{z }}_2^{\left( s \right) }, \ldots ,{\mathbf{z }}_K^{\left( s \right) }} \right) \) converges to 0. Therefore, \({\lambda _k^{\left( s \right) }} \left( {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right) \) converges to 0. \(\square \)

The convergence of the sequence \({\mu _k^{\left( s \right) }} \left( {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right) \) is proved in the same manner, replacing f by g and \(\lambda _k^{\left( s \right) }\) by \(\mu _k^{\left( s \right) }\).
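The step from the convergence of the criterion to the vanishing of its increments can be spelled out with a telescoping sum. The display below is a sketch for the centroid criterion, using the notation \(a^{(s)}\) of Sect. 2 and the fact that \(\left| r \right| \le 1\); the same argument applies to \(b^{(s)}\) and g.

$$\begin{aligned} a^{(s)}&\le \sum \limits _{k,l = 1,k \ne l}^K c_{kl} \quad \text {for every } s, \\ \sum \limits _{s = 0}^{S - 1} \left( a^{(s+1)} - a^{(s)}\right)&= a^{(S)} - a^{(0)} \le \sum \limits _{k,l = 1,k \ne l}^K c_{kl}. \end{aligned}$$

Since every increment is nonnegative by Theorem 1 and the partial sums are bounded, the increments \(a^{(s+1)} - a^{(s)}\) are summable and hence tend to 0.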

4 Conclusion and perspectives

The main contribution of the present paper is the generalization of some properties of Hanafi–Wold’s procedure established in Hanafi (2007). A generalized form of Theorem 1 in Hanafi (2007) is given here by Theorem 2. Moreover, convergence results that go beyond those already established by Corollary 1 and Corollary 2 in Hanafi (2007) are presented in Corollary 3 of the present paper.

The results presented in this paper do not allow us to conclude that the sequence \({{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }}\) converges to zero, but they make a substantial contribution towards this goal. Indeed, Corollary 3 suggests focusing further work on finding strictly positive lower bounds for the sequences \(\lambda _k^{\left( s \right) }\) and \(\mu _k^{\left( s \right) }\). More precisely, if \(\lambda _k^{\left( s \right) }\) and \(\mu _k^{\left( s \right) }\) admit strictly positive lower bounds, denoted \(\tau \) and \(\kappa \), respectively, Corollary 3 allows us to conclude directly that the sequences \({{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }}\) converge to zero, as follows.

For the centroid scheme, using Corollary 3 (i) it follows that for every k and every s, \( {\left\| {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right\| ^2} \le \frac{1}{\tau }\left[ \lambda _k^{\left( s \right) } {\left\| {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right\| ^2} \right] \), which implies that the sequences \({{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }}\) converge to zero. In the same way, for the factorial scheme, using Corollary 3 (ii) it follows that for every k and every s, \( {\left\| {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right\| ^2} \le \frac{1}{\kappa }\left[ \mu _k^{\left( s \right) } {\left\| {{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }} \right\| ^2} \right] \), which implies that the sequences \({{\mathbf{z }}_k^{\left( {s + 1} \right) } - {\mathbf{z }}_k^{\left( s \right) }}\) converge to zero.

References

Addinsoft XLSTAT (2019). https://www.xlstat.com/en

Chin WW (2003) PLS graph 3.0. Soft Modeling Inc, Houston

Dijkstra TK (2017) A perfect match between a model and a mode. In: Latan H, Noonan R (eds) Partial least squares path modeling: basic concepts, methodological issues and applications. Springer, Cham, pp 55–80

Dolce P, Esposito Vinzi V, Lauro C (2018) Non-symmetrical composite-based path modeling. Adv Data Anal Classif 12(3):759–784

Esposito Vinzi V, Chin WW, Henseler J, Wang H (2010) Handbook of partial least squares: concepts, methods and applications. Springer, Berlin

Hair J, Hult G, Ringle C, Sarstedt M (2017) A primer on partial least squares structural equation modeling (PLS-SEM), 2nd edn. Sage, Thousand Oaks

Hanafi M (2007) PLS path modeling: computation of latent variables with the estimation mode B. Comput Stat 22:275–292

Hubona G (2015) PLS-GUI [Software program]. http://www.pls-gui.com

Jöreskog K (1970) A general method for analysis of covariance structure. Biometrika 57:239–251

Lohmöller J (1989) Latent variable path modeling with partial least squares. Physica-Verlag, Heidelberg

Ringle CM, Wende S, Becker JM (2015) SmartPLS 3 [software]. SmartPLS, Bönningstedt

Wold H (1982) Soft modeling: the basic design and some extensions. In: Jöreskog K, Wold H (eds) Systems under indirect observation, vol 2. North-Holland, Amsterdam, pp 1–54

Wold H (1985) Partial least squares. In: Kotz S, Johnson N (eds) Encyclopedia of statistical sciences, vol 6. Wiley, New York, pp 581–591

Acknowledgements

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hanafi, M., Dolce, P. & El Hadri, Z. Generalized properties for Hanafi–Wold’s procedure in partial least squares path modeling. Comput Stat 36, 603–614 (2021). https://doi.org/10.1007/s00180-020-01015-w


DOI: https://doi.org/10.1007/s00180-020-01015-w