Abstract

Model-based predictive control (MPC) describes a set of advanced control methods that use a process model to predict the future behavior of the controlled system. By solving a (potentially constrained) optimization problem, MPC determines the control law implicitly. This shifts the effort of controller design towards modeling the process to be controlled. Since such models are available in many fields of engineering, MPC lowers the initial hurdle for applying control. Its implicit formulation preserves the physical meaning of the system parameters, which facilitates tuning of the controller. MPC can even control systems that cannot be controlled by conventional feedback controllers. With most of the theory laid out, it is time for a concise summary of it and an application-driven survey. This review article should serve as such. While several widely noticed review papers were published in the early days of MPC, a comprehensive overview of the latest developments and of applications is missing today. This article reviews the current state of the art, including theory, historic evolution, and practical considerations, to create an intuitive understanding. We pay special attention to applications in order to demonstrate what is already possible today. Furthermore, we provide a detailed discussion of implementation details in general and of strategies to cope with the computational burden, which is still a major factor in the design of MPC. Besides key methods in the development of MPC, this review points to future trends and emphasizes why they are the next logical steps in MPC.

1 Introduction

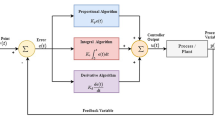

For the automation of technical systems, feedback controllers (also called closed-loop controllers) compare a reference r with a measured variable y and determine a suitable value for the manipulated variable u on the basis of the resulting deviation e = r − y (Fig. 1). Based on their working principle, they can be divided into three categories: classical controllers, predictive controllers, and repetitive controllers. Classical controllers, such as PID controllers, bang-bang controllers, or state controllers, only consider past and current system behavior (i.e. they are “reactive” to a deviation). Predictive controllers use a system model to predict the future behavior and thereby anticipate deviations from the reference [101]. Repetitive controllers, on the other hand, consider the system behavior of the previous cycle and calculate an optimal trajectory for the next cycle [46].

The PID controller is the best-known controller and is of outstanding importance and prevalence in industrial applications [4]. Although several tuning rules exist, it is often difficult to find a suitable parametrization, especially for nonlinear or time-variant systems [131].

“The effectiveness of any feedback design is fundamentally” limited by system dynamics and model accuracy. Hence, even in theory, perfect tracking of time-varying reference trajectories is not possible with feedback control alone—regardless of design methodology [58].

Special cases, such as technical limitations of actuators, require individual solutions that are often heuristic and hard to understand and maintain. More advanced control methods, such as sliding mode controllers or back-stepping controllers, are similarly abstract and complex in their interpretation [146].

In fact, the founders of MPC theory ([34] and [104]) stressed that classic control suits 90% of all control problems perfectly and that advanced control needs to be applied only to the remaining fraction. In contrast, we argue that MPC is a suitable approach for almost all problems, even for those that have not been controlled so far due to a lack of control-theoretic understanding or missing trust in feasibility. MPC is based on a repeated real-time optimization of a mathematical system model [101]. Based on this system model, MPC predicts the future system behavior and considers it in the optimization that determines the optimal trajectory of the manipulated variable u (Fig. 2). Thus, MPC offers an intuitive parameterization through adjusting a process model, at the cost of a higher computational effort than classical controllers.

The anticipating behavior and the ability to consider hard constraints make the method valuable for controlling real systems. With the rise of computational power, and as models of complex processes become increasingly available for all kinds of systems, MPC now enables the control of systems that were previously unthinkable.

MPC relies on models, which are available in almost every discipline. This makes it possible to use this long-grown knowledge and saves the tedious formulation of an explicit control law, a task that is usually reserved for control experts. Instead, MPC determines the control law automatically through a model-based optimization. This implicit formulation, the flexibility, and the explicit use of models are the main advantages of MPC and the reasons for us to campaign for MPC in the engineering community. This paper gives a summary from the application point of view, but it does not claim that MPC is the optimal choice over all control algorithms for every particular problem.

When MPC was new, several widely noticed review papers were published on both theory [13, 44, 77, 85] and applications [99]. In contrast, this review is driven by the idea that MPC will not forever remain a topic for control engineers only. Today, the development of MPC theory is driven by applications, among which manufacturing technology has just emerged as an important contributor, often with challenging requirements on reliability, constraints, and time. This work should inspire non-control experts to jump on the bandwagon and to develop new use cases that push technological limits further.

The article starts with the fundamental theory and a rough sketch of the historic evolution to learn from the visions and detours of the beginnings. The focus lies on practical considerations of feasibility, stability, and robustness together with representative applications. Along the way, we discuss the different flavors of MPC; related keywords are DMC, model(-based) predictive control, receding horizon control, etc. [12, 70, 101].

2 Theory

MPC is a set of advanced control methods that explicitly use a model to predict the future behavior of the system. Taking this prediction into account, the MPC determines an optimal output u by solving a constrained optimization problem. It is one of the few control methods that directly consider constraints. Often, the cost function is formulated in such a way that the system output y tracks a given reference r over a horizon N2 (Fig. 3). Only the first value of the optimized output trajectory is applied to the system. This prediction and optimization are repeated at each time instant. This is why MPC is also referred to as “receding horizon” control. In essence, the idea is that a short-term (predictive) optimization achieves optimality over a long time. This is assumed to be true since the error of a proximal forecast is considered to be small compared to that of a distant prediction. The combination of prediction and optimization is the main difference from conventional control approaches, which use precomputed control laws [77].

Function principle of a model-based predictive controller with horizons N1, N2, and Nu (in accordance with [105])

The prediction horizon N2 must be long enough to represent the effect of a change in the manipulated variable u on the control variable y. Delays can be considered by the lower prediction horizon N1 or by incorporating them into the system model. Often, the latter is more intuitive, and the lower prediction horizon is set to N1 = 1 to account for the computation time (since the computation takes up one time step, the solution u is not applied before the next time step).
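The receding-horizon principle can be illustrated with a minimal sketch. It assumes a scalar linear plant x(k+1) = a·x(k) + b·u(k) that the model matches perfectly, a quadratic tracking cost over the prediction horizon N2, and a control horizon Nu = 1 (one constant input over the whole horizon). The function names and all numerical values are hypothetical and are not taken from the reviewed works.

```python
def mpc_step(x, r, a, b, n2, u_min, u_max):
    """One receding-horizon step for x(k+1) = a*x(k) + b*u(k) with Nu = 1.

    Minimizes sum_{i=1..n2} (r - x(k+i|k))**2 over one constant input u
    and respects the hard input constraint u_min <= u <= u_max.
    """
    num = 0.0
    den = 0.0
    p = 1.0  # p_i = a**i: effect of the current state after i steps
    g = 0.0  # g_i = b*(1 + a + ... + a**(i-1)): effect of a constant input
    for _ in range(n2):
        g = a * g + b
        p = a * p
        num += g * (r - p * x)
        den += g * g
    u_unconstrained = num / den
    # With a single decision variable and a convex quadratic cost,
    # clipping the unconstrained minimizer gives the constrained optimum.
    return min(max(u_unconstrained, u_min), u_max)


def simulate(x0=0.0, r=1.0, a=0.9, b=0.1, n2=10,
             u_min=-2.0, u_max=2.0, steps=60):
    """Closed loop: re-optimize at every step, apply only the first input."""
    x, xs, us = x0, [x0], []
    for _ in range(steps):
        u = mpc_step(x, r, a, b, n2, u_min, u_max)
        x = a * x + b * u  # the plant equals the model in this sketch
        xs.append(x)
        us.append(u)
    return xs, us


if __name__ == "__main__":
    xs, us = simulate()
    print(f"first input: {us[0]:.3f}, final state: {xs[-1]:.4f}")
```

In this setting the first input saturates at the upper bound, after which the state settles at the reference. Longer control horizons or multivariable models would require a proper quadratic programming solver instead of the closed-form step used here.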

Assuming an arbitrary system

MPC minimizes a user-defined cost function J, Eq. 3, e.g. the tracking error between the reference vector r and the model output y, Eq. 4:

This formulation uses an arbitrary norm \(\lVert \cdot \rVert \).

We will refer to the state at time k + i, as predicted at time point k, as x(k + i|k). Bold variables such as A indicate higher dimensions, i.e. a vector (lowercase characters) or a matrix (uppercase characters). A sequence of states will be indicated by x(⋅):

In this way, the constraint formulation will be abbreviated by

indicating that the sequence x(⋅) lies in the feasible set \(\mathbb {X}_{f}\).
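As a compact sketch of the problem these definitions describe, one consistent formulation is given below. It assumes a discrete-time model with placeholder functions f and h, which are not named in the text; the horizons N1 and N2, the reference r, the norm, and the feasible set \(\mathbb {X}_{f}\) are those introduced above.

\[
\begin{aligned}
\boldsymbol{x}(k+1) &= f\bigl(\boldsymbol{x}(k), \boldsymbol{u}(k)\bigr), \qquad \boldsymbol{y}(k) = h\bigl(\boldsymbol{x}(k)\bigr),\\
\min_{\boldsymbol{u}(\cdot)} J, \qquad J &= \sum_{i=N_{1}}^{N_{2}} \lVert \boldsymbol{r}(k+i|k) - \boldsymbol{y}(k+i|k) \rVert,\\
\boldsymbol{x}(\cdot) &\in \mathbb{X}_{f}.
\end{aligned}
\]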

3 History

In the late 1970s, [105] and [24] independently laid the foundation of MPC theory. With the advent of digital controllers, they were able to efficiently control complex problems, demonstrating a massive economic potential. [105] introduced model predictive heuristic control (MPHC) in 1978, which already included all characteristics of an MPC:

- an explicit process model, described by impulse response functions (IRFs),
- a receding horizon,
- input and output constraints, and
- an iterative determination of the controls (value of the manipulated variable u).

However, [105] did not claim to obtain optimal controls. Instead, the future controls were determined iteratively until they met the constraints. The additional term “heuristic” stressed the missing explicit control law. The technique was developed for the process industry with its multiple-input multiple-output (MIMO) systems, distinctive delays, and long processing times [105]. They even considered identifying the process model on-line, although only for changes in the set points.

Roughly at the same time, [24] from Shell Oil Company developed dynamic matrix control (DMC). They used a piecewise linear model to predict the future behavior of a catalytic cracking unit. Thus, the controller gained awareness of the plant’s time delay and its dynamic system behavior. Cutler and Ramaker used a receding prediction horizon and updated the model coefficients based on the error between the previously predicted output and the currently measured output. They showed that DMC outperformed classic cascaded PID control and claimed that DMC had been applied to control problems at Shell Oil since 1974. The main difference from MPHC was that DMC calculates optimal control variables. However, the matrix formulation of the control problem restricts DMC to linear process models.

Both works laid the basis for a wide and fast spread of MPC in the petrochemical process industry. Even with linear models, the sampling times were several hours [97]. At the beginning, the focus was on simplifying the controller design and establishing a comprehensive theory so that the method could be used in industry [24, 34, 105]. The potential of MPC was not solely based on prediction but also on the fact that it can use non-linear models—both not supported by classic control. In fact, the process model formulation was a hot topic in the beginning of MPC theory: impulse response formulation (IRF) [105], piecewise linear step response functions [24], ARMA models [22, 23], or state space formulations [56]. This flexibility in the choice of model formulation was one of the key reasons for the fast success of MPC.

The first approaches simply neglected model uncertainties and process instabilities because most chemical engineering processes were open-loop stable [35]. From the late 1980s on, the research focus shifted to robustness and stability of MPC, which was especially pursued by the research group around Manfred Morari [13, 18, 19, 53, 144]. Section 4 provides a detailed discussion of the stability and robustness of MPC.

With a finite horizon, i.e. a fixed moving window, the (linear) estimation problem could be formulated as a quadratic programming problem [100], which was computationally favorable. With computation being a pressing issue, [14] introduced “explicit MPC”, which shifts the computation to a massive a priori optimization (Section 8.1).

With the millennium and computers becoming more and more powerful, research shifted towards application. The trend moved from large problems with long calculation times towards problems with fewer control variables and much stricter requirements on computation time.

4 Feasibility, stability, and robustness

One has to distinguish several aspects of MPC:

- feasibility of the open-loop optimization problem,
- stability of the closed-loop controller, and
- robustness regarding uncertainties.

The first concerns the formulation of the optimization problem, the second the controller as a whole with regard to disturbances, and the last mainly the accuracy of the process model.

In a stable system, the controller manages to bring the output to a constant value at the end of the horizon N2 in spite of disturbances to the control loop. Robustness, in contrast, addresses uncertainties. It is mostly related to model inaccuracies regarding the output prediction. The model is the key element of MPC, but it is never perfect [101]. However, for stability analysis, a perfect model is assumed. Robustness is examined only in a subsequent step. Furthermore, signal noise is an important topic for robustness [13]. Garcia and Morari [34] pointed out early that optimal control improves the control behavior but complicates the examination of robustness. Robustness does not follow from stability or vice versa [13], but a closed-loop stable system always reduces the effect of disturbances.

In the following, this work draws clear lines between these separate problems of MPC design.

4.1 Feasibility

Hard input constraints (on u) represent physical limitations of, e.g., actuators, which must not be violated. In contrast, hard output constraints (on y) are often desired rather than required. They may render the optimization problem infeasible. Relaxing these output constraints by introducing slack variables ξ to the optimization problem creates an extra degree of freedom [84]. The extent of the violation is penalized in the objective function:

Both terms possess an individual weighting matrix W. If the norm is quadratic, it can be resolved to a matrix multiplication: \(\lVert \boldsymbol {x}\rVert ^{2}_{\boldsymbol {W}} = \boldsymbol {x}^{\intercal } \boldsymbol {W} \boldsymbol {x}\).

The weight Wξ is a trade-off between the amount and duration of a violation [101]. The slack variables ξ do not resemble a function but represent individual series for every time step k. Note that they are vectors of length N2 − N1 as they cover the prediction horizon.
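A soft-constrained objective of this kind can be sketched as follows. The weighting matrices \(\boldsymbol{W}_{y}\) and \(\boldsymbol{W}_{\xi}\) and the output bounds \(\boldsymbol{y}_{\min}\) and \(\boldsymbol{y}_{\max}\) are symbols assumed for this illustration; the stacked sequences cover the prediction horizon.

\[
\begin{aligned}
J &= \lVert \boldsymbol{r}(\cdot) - \boldsymbol{y}(\cdot) \rVert^{2}_{\boldsymbol{W}_{y}} + \lVert \boldsymbol{\xi} \rVert^{2}_{\boldsymbol{W}_{\xi}},\\
\text{subject to}\quad & \boldsymbol{y}_{\min} - \boldsymbol{\xi} \le \boldsymbol{y}(\cdot) \le \boldsymbol{y}_{\max} + \boldsymbol{\xi}, \qquad \boldsymbol{\xi} \ge \boldsymbol{0}.
\end{aligned}
\]

With \(\boldsymbol{\xi} = \boldsymbol{0}\) the original hard output constraints are recovered, so a large weight \(\boldsymbol{W}_{\xi}\) makes violations rare while keeping the problem feasible.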

All commercial (linear) MPC software packages soften hard output constraints through slack variables to guarantee feasibility [85].

Nevertheless, the input constraints are still hard and render the optimization problem non-linear [101]. An infeasible desired trajectory w provokes instabilities [112]. To tackle the problem of infeasible desired trajectories, [39] suggested filtering the trajectory w to generate a feasible reference trajectory r. Thereby, the problem of stabilizing a closed-loop system with input constraints was separated from the problem of fulfilling these constraints [13, 39]. This approach was called “reference governor”. It avoided constraint violations on the input by adjusting the desired trajectory beforehand with regard to the response behavior of the plant. This adjustment could be a simple smoothing of abrupt changes [13] or a dynamic optimization of its own [112]. Even a second MPC could be used to build the new reference trajectory r [112]. The separation was appealing as it was applicable to non-linear problems in discrete and continuous time.
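The simplest variant mentioned here, smoothing abrupt changes of the desired trajectory, can be sketched with a rate limiter. This is only an illustration of the idea and not the governor proposed in the cited works; the function name and the parameter `max_step` are hypothetical.

```python
def rate_limited_reference(w, max_step, r0=0.0):
    """Filter a desired trajectory w into a reference r whose change
    per sample never exceeds max_step (smoothing-type governor)."""
    r = []
    prev = r0
    for target in w:
        # Move towards the target, but by at most max_step per sample.
        delta = max(-max_step, min(max_step, target - prev))
        prev = prev + delta
        r.append(prev)
    return r


if __name__ == "__main__":
    desired = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0]  # abrupt step in w
    print(rate_limited_reference(desired, max_step=0.25))
```

A governor based on dynamic optimization would instead choose r by solving a problem that accounts for the plant response and the input limits.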

4.2 Stability

In its most basic formulation, stability is the property of a system that a bounded input results in a bounded output (BIBO stability). If the transient behavior converges to an equilibrium, the closed-loop system is called asymptotically stable. Furthermore, if the equilibrium is reached from every possible initial state, the system is labeled “globally asymptotically stable”. This can be guaranteed for all linear time-invariant (LTI) discrete-time systems with hard input and soft output constraints if the optimization problem is solved over infinite horizons [144]. Infinite prediction and control horizons \(N_{2} = N_{u} = \infty \) result in a linear-quadratic-Gaussian (LQG) optimal control problem, for which a comprehensive stability theory exists: global asymptotic stability is guaranteed if and only if all eigenvalues of the closed-loop system are located inside the unit disk.
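The eigenvalue criterion can be checked numerically in a small sketch. It uses the deterministic, unconstrained infinite-horizon linear-quadratic regulator for a scalar plant as a stand-in for the LQG setting; the plant parameters, the weights, and the function name are assumptions made for this example.

```python
def scalar_lqr(a, b, q, r, iterations=500):
    """Infinite-horizon LQ gain for x(k+1) = a*x(k) + b*u(k) with stage
    cost q*x**2 + r*u**2, obtained by iterating the scalar Riccati
    recursion until it has (numerically) converged."""
    p = q
    for _ in range(iterations):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    gain = a * b * p / (r + b * b * p)
    closed_loop_pole = a - b * gain  # the only eigenvalue of the loop
    return gain, closed_loop_pole


if __name__ == "__main__":
    gain, pole = scalar_lqr(a=1.2, b=1.0, q=1.0, r=1.0)
    print(f"gain = {gain:.4f}, closed-loop pole = {pole:.4f}")
    print("inside unit disk:", abs(pole) < 1.0)
```

The open-loop plant in this example is unstable (|a| > 1), while the closed-loop eigenvalue lies inside the unit disk.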

However, a finite prediction horizon is obviously a severe restriction, and computational limits generally confine MPC to a finite horizon. To still guarantee asymptotic stability, the optimal cost of the MPC must decrease monotonically over time.

To illustrate this, let us assume a system behaving as illustrated in Fig. 4. It could represent a continuous active cooling of glass at the end of the production line. In this case, the measurement y would be the temperature difference between glass and environment. Likewise, the optimal control applied at time t0 would correspond to u0, and the corresponding value of the objective function would be J0.

The depicted output y as well as the change in u0 tend towards the system’s equilibrium (as desired for the stable closed-loop behavior).

The cost J is not explicitly a function of time, so the desired monotonically decreasing behavior over time needs to be artificially imposed on it. One way to do this is to formulate the optimization problem such that the control function is bounded by a Lyapunov function.

A Lyapunov function is a continuously differentiable scalar function \(V\left (\boldsymbol {x} \right ):\mathbb {R}^{n} \rightarrow \mathbb {R}\) with \(V \left (\boldsymbol {0} \right ) = 0\). It is positive everywhere except at the origin and does not increase over time:
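In the discrete-time setting of MPC, these two properties are commonly written as follows (a sketch in the notation used here, with the continuous-time counterpart being \(\dot{V}(\boldsymbol{x}) \le 0\)):

\[
V(\boldsymbol{x}) > 0 \quad \forall\, \boldsymbol{x} \neq \boldsymbol{0}, \qquad V\bigl(\boldsymbol{x}(k+1)\bigr) - V\bigl(\boldsymbol{x}(k)\bigr) \le 0 .
\]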

The Lyapunov theorem essentially defines a prototypical function resulting in a bounded system state over time. Thus, the state of the art for stability schemes for (non-linear) MPC is to define the cost function in such a way that the optimal cost behaves as a Lyapunov function—or to prove this to be the case respectively. For this purpose, the optimization problem is extended by additional cost terms or constraints.

An adequate Lyapunov function to the optimal cost J0 of Fig. 4 is illustrated in Fig. 5, where the decreasing optimal cost is depicted over two system states.

One approach to make the optimal cost J0 behave like a Lyapunov function is to introduce a terminal cost J(k + N2). This offsets the advantage of an infinite horizon, since the cost is assumed to stay the same until infinity, \(J(k+N_{2}) \approx J(\infty )\) [75, 77]. However, adding more constraints to guarantee stability of the controller may again cause feasibility problems of the optimization, especially for short prediction horizons. Therefore, it is common practice to constrain a terminal region instead of imposing, e.g., a zero terminal constraint \(\lVert \boldsymbol {x}(k+N_{2})\rVert =0\).

The most common stability approach, which avoids a Lyapunov analysis, is to introduce so-called “contraction constraints” ensuring that (usually the Euclidean norm of) the state vector is decreasing over time [13]:
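One common way to write such a constraint is sketched below. The contraction factor α is an assumption of this illustration, and other variants impose the contraction at the end of the prediction horizon instead of after one step.

\[
\lVert \boldsymbol{x}(k+1|k) \rVert \le \alpha\, \lVert \boldsymbol{x}(k) \rVert, \qquad \alpha \in [0, 1).
\]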

Some applications even use both, a Lyapunov-based cost function and contraction constraints, e.g. [116].

Mayne et al. [75, 77] concluded that stability of MPC-controlled (linear) systems was at a “mature” stage in 2000, whereas for robustness, only conceptual approaches existed.

With the understanding of stability analysis for linear MPC, [44] pointed out that a stability analysis for non-linear MPC became more urgent.

While the approaches to designing a stable system elaborated above (Lyapunov-based cost function or contraction constraints) apply equally to linear and non-linear systems, many implementations of MPC still handle non-linearity by successive linearization and thus avoid a non-linear stability analysis [101] (Section 7).

For a more complete discussion and mathematical foundation regarding stability, the authors refer to [3, 72, 77, 98] and [31, 81, 87].

4.3 Robustness

In contrast to what has been claimed, [35] stressed that MPC is not inherently more or less robust than classic feedback control (e.g. PID controller).

Robustness follows from stability of the closed-loop system only if no input constraints are present [106]. “When we say that a control system is robust we mean that stability is maintained and that the performance specifications are met for a specified range of model variations (uncertainty range)” [85].

Essentially, robustness deals with model uncertainty, which can be formulated in several ways [13]:

- by uncertainty intervals,
- by structured feedback, or
- by using a set of models.

For the latter, one describes the plant by multiple models and optimizes, e.g., the worst case among them (\(L_{\infty }\)-norm) [19].
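The worst-case idea over a set of models can be sketched with a one-step horizon and a simple search over candidate inputs. The scalar models, the candidate grid, and the function name are hypothetical; practical min-max MPC formulations optimize over the full horizon with a dedicated solver.

```python
def worst_case_input(x, r, models, candidates):
    """Pick the candidate input whose worst one-step tracking error
    over all models (a, b) of x(k+1) = a*x(k) + b*u(k) is smallest."""
    def worst_error(u):
        return max(abs(r - (a * x + b * u)) for a, b in models)

    return min(candidates, key=worst_error)


if __name__ == "__main__":
    # Three models that differ only in the uncertain input gain b.
    models = [(0.9, 0.08), (0.9, 0.10), (0.9, 0.12)]
    candidates = [i / 10 for i in range(-20, 21)]
    u = worst_case_input(x=0.0, r=0.1, models=models, candidates=candidates)
    print("min-max input:", u)
```

The chosen input balances the errors of the two extreme models rather than being optimal for any single one, which illustrates why worst-case designs tend to be conservative.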

A similar approach was pursued by [53], who distinguished different types of uncertainty: uncertainty in the gain, the time constant, and the time delay. They considered them all simultaneously. The approach was later taken up again as a matrix formulation [25]. This assumes structured noise in the feedback loop so that it can be considered in the model. Assuming an LTI system and LTI uncertainty in the feedback loop, robustness can be guaranteed if the norm of the uncertainty matrix is lower than a defined threshold [13].

Uncertainty intervals can often be assigned to model coefficients of an empirical transfer function. In this idea, the model structure remains the same and only the coefficients change. However, [13] concluded that allowing model coefficients to vary within intervals is not sufficient to achieve robustness. A comprehensible example is that oscillating step responses would be allowed.

All these approaches require quantifying the uncertainty in the model of the system. The robustness calculations come at the cost of performance (regarding optimality and computation) [13].

An entirely different approach is to define a cost function that favors robustness by design: e.g. minimizing the maximum error in the prediction horizon would result in less extreme control actions, which in turn lead to a smoother process guidance [18]. This suggests using the \(L_{\infty }\)-norm to formulate the optimization problem instead of a standard least squares (L2) formulation.

In this case, the \(L_{\infty }\)-norm is the maximum of all errors between the predicted model outcome and the desired reference. [18] motivated its use with the smoothing influence on the control outputs u. Using the \(L_{\infty }\)-norm prevents the controller from making full use of the plant potential due to very conservative control actions [13]. However, if the process model is linear and no constraints are present, the optimization problem remains tractable: an L2 cost yields a quadratic problem, and an \(L_{\infty }\) cost can be recast as a linear program [13]. Such problems are favorable because they can be solved efficiently.

Both approaches, a more elaborate model or a special objective function, undermine the key advantage of MPC: optimality. One idea to overcome this is to enforce robustness by introducing a contraction constraint (similar to stability), i.e. requiring the worst-case prediction to contract [85, 144]. This lets MPC still implement the optimal trajectory as long as the additional constraint is fulfilled.

4.4 Summary on feasibility, stability, and robustness

García et al. [35] noted that for every unconstrained, linear MPC there exists an equivalent classic feedback controller with all the benefits of its well-proven stability theory. However, giving up constraints loses much of the charm of MPC. Therefore, it is more of an academic exercise than a practical option. The same is true for infinite-horizon MPC.

There exists an extensive stability theory for linear MPCs. For systems in state space form, the stability analysis is based on eigenvalues and on the unit disk as it is familiar from the stability analysis of conventional (linear) control [144]. However, optimization problems with hard input constraints are often non-linear [101].

Establishing stability—especially robust stability—is extremely difficult for non-linear problems. This is mainly due to the lack of an explicit functional description of the control algorithm, which is required for most stability analysis [84]. Today, stability of non-linear, constrained, finite-horizon MPC is achieved by formulating the cost function as a Lyapunov function and introducing a terminal set constraint [75, 77]. Using a terminal set links the stability problem with the constraint satisfaction problem [17]—ironically, additional constraints stabilize a constrained, non-linear MPC.

Robustness is a trade-off against performance. Several approaches increase robustness at the cost of computation and optimality (e.g. the \(L_{\infty }\)-norm). Nevertheless, it can only be achieved if the amount of uncertainty can be quantified.

A practical compromise to maintain optimality, the key feature of MPC, is to add the requirement that the worst-case prediction must contract [85, 144].

5 Recent developments in MPC theory

Once again motivated by the chemical process industry, [58] integrated an MPC into an iterative learning control (ILC), building a controller dedicated to batch processing. A classic ILC works as an open-loop control during the process but adjusts its profile of commands between cycles or “iterations”. In this way, it approaches the ideal profile incrementally from cycle to cycle and may react to trends over multiple cycles. The essence is that the “information gathered during previous runs can be used to improve the performance of a present run” [57]. In contrast to this, MPC is a closed-loop controller but considers repetitive tasks as independent of each other.
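The cycle-to-cycle adjustment of a classic ILC can be sketched with a simple proportional-type update law, in which the command profile of the next cycle is the previous profile plus a gain times the previous tracking error. The plant parameters, the learning gain, and the function names are assumptions chosen so that the iteration converges; this is not the scheme of the cited works.

```python
def run_cycle(u, a=0.3, b=1.0):
    """Run one open-loop cycle of y(t+1) = a*y(t) + b*u(t), y(0) = 0.
    out[t] is the output one step after u[t] was applied."""
    y, out = 0.0, []
    for u_t in u:
        y = a * y + b * u_t
        out.append(y)
    return out


def ilc(r, cycles=30, gain=0.8):
    """Proportional-type ILC: u_next(t) = u(t) + gain * e(t+1)."""
    u = [0.0] * len(r)
    history = []  # maximum absolute tracking error of each cycle
    for _ in range(cycles):
        y = run_cycle(u)
        e = [r_t - y_t for r_t, y_t in zip(r, y)]
        history.append(max(abs(e_t) for e_t in e))
        # Learning happens between cycles; within a cycle u is fixed.
        u = [u_t + gain * e_t for u_t, e_t in zip(u, e)]
    return u, history


if __name__ == "__main__":
    _, history = ilc([1.0] * 20)
    print(f"error in first cycle: {history[0]:.3f}")
    print(f"error in last cycle:  {history[-1]:.2e}")
```

Within a cycle the command profile is applied without feedback, so a disturbance that occurs during a run is corrected only in the following cycles. This is the gap that the combination with MPC is meant to close.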

Combining both methods builds a system that reacts to disturbances within a cycle or process (“as they occur”) and minimizes the tracking error over multiple cycles. However, integrating MPC into ILC limits the use to fixed-time operations, i.e. the number of time samples must stay the same over cycles [58]. Separating both techniques and letting the ILC work as an upper-level reference governor for the MPC, as was done, e.g., by [86], may overcome such limitations. In this combination, MPC introduces constraints to ILC [57].

Li et al. [59] presented a third flavor of such a combination, effectively an optimal ILC: they took the optimization part of MPC, i.e. optimizing the manipulated variable over a horizon, and transplanted it into an ILC. The resulting system determined an optimal profile of the manipulated variable(s) for each cycle. In a subsequent work, [60] suggested smoothing the commands over cycles. This essentially states that the optimal solution is not entirely trusted. Such systems are only loosely related to MPC, because they lack a receding horizon and effectively filter their optimal control recursively.

The works of [66] and [128] combine iterative learning MPC with the rising field of data-based learning in control theory. The former extracts new trajectories of a linear-quadratic regulator (LQR) based on overall objectives and data of previous trajectories with the help of the k-nearest-neighbor algorithm. The latter extends the idea of an iterative, data-driven adjustment of trajectories to the application of MPC.

Although also applied to a repetitive task, [78] focused on learning a model of the system dynamics rather than a trajectory. The authors used data and weighted linear Bayesian regression to model uncertainties of vehicle dynamics on a repeating path. Similarly, [50] applied Gaussian process modeling to derive confidence intervals on possible trajectories to guarantee safety.

Data-driven modeling, such as machine learning, can be used for the system model that the MPC uses in its optimization, or to approximate the solution space of an explicit MPC, as e.g. in [45, 71, 88], Section 8.1.

The possibilities of learning are enhanced especially for multi-agent systems, where every single agent contributes to the data acquisition and policy exploration. [68] utilized such swarm intelligence to learn the trajectory for a distributed MPC. For this purpose, the learning problem was defined as a quadratic optimization problem with collision avoidance as a constraint.

6 Applications

The idea of optimal control in the presence of constraints and the intuitive design of the control law as an optimization problem have made MPC interesting for many different tasks. Applications have recently spread widely throughout all fields of engineering. The following highlights the main movements.

6.1 Process industry

For a long time, the process industry used MPC almost exclusively. This is not surprising as the petrochemical industry promoted the development decisively [24, 97, 99, 105]. Motivated by its complex, multi-variable processes with time delay, MPC spread quickly since optimal control led to significant economic benefit due to the large throughput. Darby et al. [26] acknowledged that MPC is “the standard approach for implementing constrained, multi-variable control in the process industries today”.

In the founding paper of MPC, [105] described three applications: a distillation column of a catalytic cracker in an oil refinery, a steam generator, and a polyvinyl chloride (PVC) plant. The catalytic cracker had two manipulated variables (mass flow rates) and three control variables (temperatures), of which only one was constrained. The plant was modeled through twelve impulse response functions and the sample time was \(T_{s} = 3\,\text{min}\), manageable only because it used a heuristic control law.

With the control of the PVC plant, they wanted to demonstrate the versatility of MPC by controlling five subprocesses. The results showed a substantial reduction in the variance of the controlled variables, leading to higher quality and energy savings. The impressive demonstration paved the way for the popularity of MPC. Richalet later also described how a distillation column and a vacuum unit were controlled in a refinery of Mobil Oil [104]. The objective function was already formulated as a quadratic Lyapunov function, which, as was shown, is favorable for stability. He did not address robustness but mentioned a back-up control system in case of failure. The results showed that the controller reduced the variance in the quality criteria, resulting in a payout time of less than a year.

Oil companies were the promoters of model-based advanced controllers. Cutler and Ramaker [24] used a piecewise-linear model to control the furnace of a catalytic cracking unit at Shell Oil. With a prediction horizon of N2 = 30 and a control horizon of Nu = 10, they exploited the predictive potential.

Prett and Gillette [97] used even longer horizons (N2 = 35, Nu = 15) with a sampling time of “a few hours”. They successively linearized a non-linear process model to determine the optimal operation point of the reactor and the regenerator of a catalytic cracker.

With distillation being one of the workhorses of the chemical process industry for the separation of molecules, it is still a popular application example for MPC today, as in [21, 80], both of which were simulation studies on linear MPC. However, [80] successively linearized a non-linear model of a methanol/water mixture to apply linear MPC.

Piche et al. [95] introduced a neural network (NN) in MPC to control the set point change in a polyethylene (PE) reactor. A neural network (NN) is a non-linear empirical model based on historic data. This type of machine learning model is experiencing extraordinary attention nowadays. Linear dynamic models were constructed from conventional (open-loop) plant tests to control the plant at its set points. Piche et al. achieved 30% faster transitions and an overall reduction in variation of the controlled variables. The idea is still under active research. Li et al. [63] also explored successive linearization of a neural network (NN) in MPC, but to control the temperature of a stirred reactor, a common application in process industry, e.g. for bioreactors. Shin et al. [117] used a neural network (NN) (fully connected, 14-15-2) with MPC for a propane devaporizer (e.g. specialized distillation column). Although claiming that neural network (NN)–based non-linear MPC achieved better performance than linear MPC, they benchmarked the new controller against conventional PI control, demonstrating a 60% quicker settling time (35 min with neural network (NN)-MPC compared to 92 min with PI control). They further stressed the easier modeling with data-driven models as an additional benefit of using NNs in conjunction with MPC. Nunez et al. [89] used a more complicated neural network (NN) structure, a recurrent neural network (RNN) (in fact, an attention-based encoder decoder recurrent neural network (RNN) with 23,000 free parameters) to model an industrial paste thickening process. The sampling time was Ts = 5 min, giving the controller enough time to conduct a global optimization with particle swarm optimization (PSO) – a rather unusual choice – for a prediction and control horizon of N2 = 10 and Nu = 5, respectively. Presenting one rare example of an actual industrial deployment, they demonstrated the effectiveness of the control on an industrial plant for a working day.
The recurrent neural network (RNN)–based MPC was capable of maintaining the target concentration of the paste thickener in spite of a severe disturbance when a pump failed. A recurrent neural network (RNN) structure was also used to control chained stirred reactors [136]. There are applications with further network types with distinct features, such as echo state networks to model time delay of buffer tanks, e.g. for a refrigerator compressor test rig with (non-linear) MPC [9].

In general, besides oil and gas and the chemical industry, the pharmaceutical and biological industries use MPC to manage the non-linearity coupled with large time-delays of their processes, e.g. in a fermentation process [42]. Ławryńczuk [6] compared linear MPC to non-linear MPC, again for a stirred reactor and for a distillation column. He concluded that, in particular for the distillation process, the non-linear controller was more economic. Against this background, he suggested combining both approaches to reduce the computational burden of pure non-linear MPC: applying non-linear optimization only for the first time instant k = 1 and using a linearized model for the other steps 1 < k < N2. To the knowledge of the authors, such an approach has not been examined further.

Prasad et al. [96] took a different route, preferring to use multiple linear models rather than a single non-linear one. They controlled the fill height of a conical tank. Since the diameter varies continuously with the height, they suggested identifying three separate linear models at different heights, designing one controller for each, and combining the outputs as an ensemble to obtain a general output for the manipulated variable (the inlet flow rate).
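The ensemble idea can be illustrated with a minimal sketch. It assumes triangular (linear interpolation) weights between the operating heights, which is one common blending choice and not necessarily the scheme used in [96]; the function names, operating heights, and controller outputs are hypothetical.

```python
def blend_weights(height, operating_points):
    """Triangular weights over sorted operating points; the weights sum to one."""
    pts = operating_points
    if height <= pts[0]:
        return [1.0] + [0.0] * (len(pts) - 1)
    if height >= pts[-1]:
        return [0.0] * (len(pts) - 1) + [1.0]
    weights = [0.0] * len(pts)
    for i in range(len(pts) - 1):
        lo, hi = pts[i], pts[i + 1]
        if lo <= height <= hi:
            frac = (height - lo) / (hi - lo)
            weights[i] = 1.0 - frac      # share of the lower local controller
            weights[i + 1] = frac        # share of the upper local controller
            break
    return weights


def ensemble_output(height, operating_points, local_outputs):
    """Weighted sum of the local controller outputs (e.g. inlet flow rates)."""
    weights = blend_weights(height, operating_points)
    return sum(w * u for w, u in zip(weights, local_outputs))


# Hypothetical values: three models identified at 10, 30, and 50 cm fill height,
# each local controller proposing its own inlet flow rate.
u = ensemble_output(25.0, [10.0, 30.0, 50.0], [2.0, 4.0, 8.0])
```

Near an operating point, the corresponding local controller dominates; in between, the output changes smoothly instead of switching abruptly.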

In 2003, [99] already counted over 4 600 industrial applications reviewing the available commercial software packages for MPC. They differed in the model structure, its identification, and in how constraints were implemented (as hard constraints or as an additional penalization term in the cost function). Nevertheless, all models were linear, time-invariant, and derived from empirical test data. Online adaptation of the model was not supported by any software, although there had been (academic) works on this issue already from the beginning, e.g. [105].

Although stability theory is at a mature level, AspenTech as a major vendor of commercial MPC software assumed an infinite horizon control to ensure stability, which was implemented in practice by a prediction horizon much larger than the reaction time of the system [33]. With regard to academia, the software MATLAB/Simulink from The MathWorks is very popular, e.g. [80, 96, 108].

Today, process industry is still the major user of MPC [76] evolving towards faster, mechanical processes such as paper machines [145] or stone mills [108, 124].

Again, a report of an industrial application was presented by the Anglo American Platinum company, where a linear MPC (to be more precise, dynamic matrix control (DMC)) outperformed a back-then famous fuzzy controller [124]. The power consumption of a large stone mill was reduced by 66% using the commercial system from AspenTech. Nevertheless, not fully trusting the novel control method, the operators ran the established fuzzy controller as a back-up option for abnormal states.

Olivier and Craig [92] and coworkers [55] detected faults of actuators within the process to update the available manipulated variables of the MPC maintaining the control performance. They used a particle filter in order to estimate whether a certain actuator could still be used or not (binary decision). Self-awareness was especially important for continuously-running large systems in rough environments. They simulated a mill of a mining facility to grind ore. The simulation demonstrated that the MPC can manage actuator failure if it knew about it.

Table 1 summarizes the key parameters of the discussed works in process industry. Only works that provided their implementation details on MPC are listed. The order has no significance besides order of publication.

MPC often served as a supervisory control of classic PID controllers forming a cascaded control loop. Large multiple input multiple output (MIMO) systems, empirical models—mostly derived through step or impulse tests [99]—and long calculation times Ts > 1h favored MPC in process industry. Today, the sampling times have largely decreased to the region of minutes and seconds [26], Table 1. Complex couplings between process variables require empirical, nonlinear models, which are at the beginning often linearized.

6.2 Power electronics

Not until the mid 2000s did an opposite trend take shape in power electronics. These extremely fast single input single output (SISO) systems used pure analytical models to work at sampling times below the ms-range [15, 52, 65, 129]. The characteristics are diametrically opposed to those of process industry. Richalet [104] foresaw this counter movement early, reporting on an application to control a servo drive with a sampling time of Ts < 1ms. To achieve such short sample times, relatively simple models, short horizons, and often an explicit solution of the optimization problem were used. Explicit MPC solves the optimization in advance for a variety of cases to obtain a polytope of explicit (linear) control laws [14]. This increases the overall computational effort but shifts it to offline optimization.
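The online part of explicit MPC reduces to a table lookup, which the following minimal sketch illustrates. The partition is hand-made for illustration (a saturated linear feedback on a scalar state) and is not the result of an actual offline optimization; the names `REGIONS`, `in_region`, and `explicit_mpc` are hypothetical.

```python
def in_region(x, A, b, tol=1e-9):
    """Check whether the state x lies in the polyhedron {x : A x <= b}."""
    return all(
        sum(a_ij * x_j for a_ij, x_j in zip(row, x)) <= b_i + tol
        for row, b_i in zip(A, b)
    )


def explicit_mpc(x, regions):
    """Find the region containing x and evaluate its affine law u = K x + c."""
    for region in regions:
        if in_region(x, region["A"], region["b"]):
            return [
                sum(k * x_j for k, x_j in zip(row, x)) + c
                for row, c in zip(region["K"], region["c"])
            ]
    raise ValueError("state outside the precomputed partition")


# Hypothetical partition for a scalar state: u = -0.5 * x, clipped to [-1, 1].
REGIONS = [
    {"A": [[1.0]], "b": [-2.0], "K": [[0.0]], "c": [1.0]},               # x <= -2
    {"A": [[1.0], [-1.0]], "b": [2.0, 2.0], "K": [[-0.5]], "c": [0.0]},  # -2 <= x <= 2
    {"A": [[-1.0]], "b": [-2.0], "K": [[0.0]], "c": [-1.0]},             # x >= 2
]

u = explicit_mpc([1.0], REGIONS)
```

The lookup itself involves no optimization, which is why this formulation suits very short sample times; the price is a partition whose number of regions can grow quickly with the problem size.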

Linder and Kennel [65] applied MPC for “field oriented control” of electrical AC drives using such an explicit MPC. The results were sobering: there was hardly any improvement over a conventional PID controller for large signal steps. For small steps, the MPC reached the new target value faster and better, but in summary, Linder and Kennel attributed the potential of MPC more to features such as intuitive tuning and constraint satisfaction.

Nevertheless, Bolognani et al. [15] saw MPC as being ideal for electric motor control since there existed analytical linear models describing the motor behavior accurately. They also used an explicit MPC formulation to achieve a sample time of Ts = 83ms. Since the prediction horizon N2 = 5 was far from covering the complete drive dynamics, the assumption of an infinite prediction horizon did not hold, making stability a major (unconsidered) concern. The control was perfect if the load torque matched the torque assumed in the MPC design. Otherwise, an offset occurred between the desired and the actual values (current, voltage, etc.). Nevertheless, the controller operated stably and enforced the current and voltage limits reliably.

Kouro et al. [52] examined MPC regarding control of power converters. Power converters have only a finite number of discrete states n. This hampers the optimization and requires heuristic approaches (mixed-integer optimization). They took a brute force approach testing every possible control action, resulting in an exponential increase of calculations: \(n^{N_{2}}\). With n = 7 converter states, the prediction horizon was limited to N2 = 2 in order to achieve a sample time of Ts = 100ms. Compared to a classic PID control, they concluded that the advantage of MPC is its flexibility regarding control variables and constraints, similar to [65] before.
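The exponential growth of the brute force search can be made concrete with a minimal sketch. The scalar model, its coefficients, and the quadratic tracking cost are assumptions for illustration and do not represent the converter model of [52]; only the enumeration of all \(n^{N_{2}}\) sequences mirrors the approach described above.

```python
from itertools import product


def enumerate_best_sequence(x0, reference, actions, horizon, a=0.9, b=0.5):
    """Evaluate every action sequence of length `horizon` and keep the best one.

    Assumed toy model: x[k+1] = a * x[k] + b * u[k] (hypothetical coefficients).
    """
    best_cost, best_seq, evaluated = float("inf"), None, 0
    for seq in product(actions, repeat=horizon):
        x, cost = x0, 0.0
        for u in seq:
            x = a * x + b * u                 # one-step prediction
            cost += (x - reference) ** 2      # quadratic tracking error
        evaluated += 1
        if cost < best_cost:
            best_cost, best_seq = cost, seq
    return best_seq, best_cost, evaluated


# n = 7 discrete actions and N2 = 2 give 7**2 = 49 candidate sequences.
ACTIONS = [-3, -2, -1, 0, 1, 2, 3]
best_seq, best_cost, evaluated = enumerate_best_sequence(0.0, 1.0, ACTIONS, 2)
```

Only the first element of `best_seq` would be applied before the search is repeated at the next time step. Extending the horizon by a single step already multiplies the number of evaluations by n, here from 49 to 343.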

Geyer et al. [38] used MPC for direct torque control of electrical drives. The control problem consisted of keeping the motor torque, the magnitude of the stator flux, and the inverter’s neutral point potential within their (hysteresis) bounds while minimizing the switching frequency of the inverter. To reduce the computational complexity and to solve the MPC within Ts = 25ms, the control and prediction horizon were limited to Nu = N2 = 2. As a compromise between computational effort and system behavior, the value at the end of the prediction horizon was extrapolated linearly over 100 steps to roughly capture future system behavior. The simulation results showed that MPC respected the constraints only slightly better but reduced the switching frequency on average by 25%, thus reducing the power dissipation.

As an experimental validation for this, Papafotiou et al. [93] implemented MPC for direct torque control on a 1.5 MW motor drive. Again, the major concern was the computational speed, so that the control horizon was further reduced to a single step Nu = 1. The two control tasks, motor flux and motor speed, were split into separate control tasks with different execution times (25 ms and 100 ms, respectively). The results did not fully live up to the expectations raised by the simulation above. On average, the control reduced the inverter’s switching frequency by 16.5% while maintaining the same output quality as standard control. For motor drives of this size, the achieved faster torque response was even more valuable for certain applications. Especially high-voltage applications, such as motor control, must consider the time delay of the converter [10]. Converters often exhibit a programmed time delay after switching in order to avoid a shoot-through. Model-based predictive control (MPC) can manage this natively, e.g. in the system model [10].

The number of applications in power electronics increased so rapidly that Vazquez et al. [129] felt impelled to give an extensive review of the academic implementations. They concluded that the lack of proper models is still the major obstacle towards an industrial application. MPC for power converters and rectifiers (electrical devices that convert alternating current (AC) to direct current (DC)) is still the subject of active research due to their ubiquity. It is likely to increase even further due to the transformation of society in the context of combating climate change and the accompanying electrification of whole industries. Efficiency is paramount, and researchers found MPC to provide a valuable contribution, e.g. for determining optimal switching sequences of converters and rectifiers already for mid-level voltage ratings [40, 83]. Although computation is still an issue, e.g. [2], both formulations are still competing in this field of very fast control problems in power electronics: the standard implicit formulation of MPC, which solves the control problem online, and the explicit formulation, where the optimization problem is solved a priori for all cases. Section 8.1 includes a detailed general discussion on explicit MPC.

Again, Table 2 provides a condensed overview of the works on the application of MPC in power electronics. It emphasizes the diversity of the used parameters of MPC in this field. Having started with the control of individual electrical components, in particular converters, the application in electrical engineering has widened towards the control of systems of multiple components as the next section will show.

6.3 Building climate and energy

Since 2010, MPC has attracted the attention of the building climate control community. Analytical and empirical models were combined in non-linear multiple input multiple output (MIMO) systems with long prediction horizons. Typical sample times were in the order of minutes to 1 h with prediction times usually smaller than 48 h [113]. The objective was always to reduce the energy consumption while maintaining a certain (thermal) comfort. The success of MPC in this field was due to its ability to incorporate statistical uncertainties and even weather forecasts [5], e.g. as in [90].

MPC for heating, ventilation and air conditioning (HVAC) had been applied to a broad range of buildings, ranging from a single room to large spaces such as airport buildings or multi-room problems such as office buildings [1]. The overwhelming majority of the works addressed non-residential buildings; only 4% included residential buildings, often as one energy sink among others in a micro-grid [74]. In their latest review, the authors of [74] noted that heating, ventilation and air conditioning (HVAC) plays an important role in the field of building energy management systems with more than 50% of all publications, and that MPC is the most used strategy. The authors ascribed this to its native consideration of weather and occupation forecasts, e.g. demand forecasting. Google reported that MPC increased the efficiency of the air handling in one of their data centers so that they cut cooling costs by 9% [54].

Most works in the field of climate and energy management were simulations due to the large implementation effort and the risk of discomfort. Gunay et al. [43] demonstrated their findings on an actual room of their university offices, and Ma et al. [69] implemented an MPC controller for the cooling system of their university building. The main component was a cold water storage tank, whose operation was controlled (when to fill, how fast to fill, how cold the water input coming from the chillers should be, etc.). They reduced the energy costs by 19%, introducing the interesting idea of optimizing financial costs instead of pure energy consumption, which [1] later picked up again in this field. With “economic MPC”, a dedicated term for such formulations exists nowadays.

Yu et al. [141] conducted a whole benchmark of different temperature control approaches on a small mock-up building in a thermal chamber. Model-based predictive control (MPC) outperformed the other approaches—including a commercial thermostat with a programmable schedule—and reduced the energy consumption by 43% compared to a constant temperature controller. However, the results suggested that for small buildings the main benefit came from an enhanced temperature measurement.

Industrial applications of MPC in building climate control are still rare, which is due to the enormous modeling effort (being up to 70% of the control effort) [5, 94].

Often, individual rooms were modeled as capacity resistor elements [82, 90, 91, 107]. Coupled resistance-capacitance models based on physical principles and pure empirical approaches are the two main types of modeling building energy systems for MPC [113].
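To make the resistance-capacitance idea concrete, the following minimal sketch models a single room as one thermal resistance and one thermal capacitance (a so-called 1R1C model), discretized with the forward Euler method. The parameter values and function names are hypothetical and are not taken from the cited works, which typically use larger networks.

```python
def rc_step(T_room, T_out, Q_heat, R=0.005, C=2.0e6, Ts=600.0):
    """One forward-Euler step of C * dT/dt = (T_out - T_room) / R + Q_heat.

    R in K/W, C in J/K, Ts in s, Q_heat in W (all values are illustrative).
    """
    return T_room + Ts / C * ((T_out - T_room) / R + Q_heat)


def predict(T0, T_out_forecast, Q_plan, **params):
    """Predict the room temperature for a weather forecast and a heating plan."""
    trajectory = [T0]
    for T_out, Q in zip(T_out_forecast, Q_plan):
        trajectory.append(rc_step(trajectory[-1], T_out, Q, **params))
    return trajectory


# Unheated room at 20 degC cooling down towards 10 degC outside temperature.
cooling = predict(20.0, [10.0] * 3, [0.0] * 3)
```

With these values the time constant is R * C = 10 000 s, so the chosen sample time of 10 min keeps the explicit discretization stable. An MPC would search over the heating plan while the outside temperature forecast enters as a known disturbance.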

One way to approach the modeling effort and the related requirement of domain knowledge was to use black box modeling approaches, namely from the field of machine learning. Qin and Badgwell [99] already noted that NNs were popular to model unknown non-linear behavior for MPC. Afram et al. [1] used NNs to model the individual subsystems of an energy management system, such as ventilation, heat storage, or a heat pump. The increase in model accuracy came at the cost of a non-linear optimization in the MPC. The system was tested on historic weather data, assuming an ideal weather forecast at every point as is common practice, e.g. also in [36, 90, 91]. Unfortunately, no details on the MPC parameters were given in [1]. The objective was to optimize the cost of the energy consumption and not the amount of consumption itself. For this, the proposed neural network (NN)–based MPC shifted the energy consumption to the off-peak hours of the electricity price using the mass of the building as a storage. This worked excellently for moderate weather conditions but failed at extreme conditions such as in midsummer, when such passive thermal storage is not sufficient.

The interlaced individual models in building climate control led to a complex optimization problem, where gradient-based algorithms may fail and heuristic-based global optimization was more desirable [82]. This increased the computational effort further and, thus, enlarged the sample time, which was seldom a problem due to the inertial nature of thermal behavior. If the number of rooms became large, the control problem was broken down into multiple decoupled MPCs achieving a near optimal solution at a lower computational cost [82]. Shaltout et al. [5] argued for a distributed network of MPC controllers cooperating with each other.

Gunay et al. [43] claimed that a shorter sample time favors temperature control (Ts,short = 10min compared to Ts,long = 1h, both N2 = 6) since the model accuracy usually deteriorates with the predicted time. Furthermore, long horizons may be undermined by stochastic disturbances such as the occupancy behavior. They claimed that a short prediction horizon of \(T_{N_{2}} = 6 h\) would even have eliminated the need for accurate weather forecasts and made the MPC more reactive. Yu et al. [141] supported the finding that shorter horizons enabled a more accurate tracking of a given temperature reference. In contrast, [91] argued that \(T_{N_{2}}=24 h\) should be used as a prediction horizon for heating, ventilation and air conditioning (HVAC) systems.

Park and Nagy [94] identified MPC as a recent trend in heating, ventilation and air conditioning (HVAC) control by mining the keywords of publications and predicted that it will spread towards the control of smart grids. Another recent review on MPC for heating, ventilation and air conditioning (HVAC) systems [113] stressed that its importance will increase in step with the transformation in power generation towards renewable sources and their higher variability. In fact, the increasing pressure to integrate flexible sources and sinks into power grids (introduced by renewable energy plants and plug-in electric vehicles (PEVs)) called for advanced control methods, e.g. [126].

In particular, the ability to include stochastic models and, thus, modeling uncertainty explicitly was considered a unique feature especially in the field of energy management [11]. Oldewurtel et al. [91] formulated the MPC problem as a probability problem considering the uncertainty of weather forecast. Instead of using weather forecasts, Morrison et al. [86] learned the day-to-day changes in solar radiation due to seasonal trends. The algorithm learned the behavior of humans in terms of hot water demand over days and weeks, while the MPC implements this learned reference on a lower-level (\(T_{N_{2}} = 12 h\)). In a simulation study, they mimicked four weeks from midsummer to midwinter for the considered thermal-storage-tank system.

Also in the field of renewable energies, Dickler et al. [27] applied a time-variant MPC for load alleviation and power leveling of wind turbines, where the model for the mechanical demand on the turbine was linearized at every control step for the current prediction and control horizon. The wind speed as one major load on the mechanical structure was handled by incorporating wind speed predictions. Sun et al. [125] used MPC to smooth the effect of fluctuations in wind speed for wind turbines on the resulting frequency of the power generation. The idea was to consider both the dynamics of the turbine and of the wind itself in a linearized MPC. Shaltout et al. [114] picked up the same idea, coupling the wind turbine with an energy storage system. Targeting multiple objectives, some with non-technical motivation, they formulated a so-called economic MPC. Adding fluctuating energy consumers to such a system, [126] simulated a (connected) micro grid with a wind power supplier and 100 PEVs. The objective was to minimize the overall operation costs: maximizing the consumption of wind energy and minimizing the exchange with the main grid, i.e. balancing the energy consumption over consumption and production peaks. PEVs could be used as sources or sinks as long as they were fully charged at the end of a working day. The energy demand of the PEVs was modeled as a truncated Gaussian model, and the supply of a wind turbine as an auto-regressive integrated moving average (ARIMA) model. They proposed a two-layer MPC where the top layer balanced the overall power demand, aggregating the PEVs to a single value, while the underlying MPC handled the energy distribution to the individual PEVs. The top layer optimized the cost of the energy and the risk, which was determined through a Monte Carlo simulation and stochastic models.
A simulation showed that the costs were reduced by more than 30% compared to an immediate maximum charge strategy, in which the batteries were charged to full capacity as soon as they were connected to the grid. Such a strategy may exacerbate the energy imbalance of the micro grid at peak hours. Schmitt et al. [109] optimized energy management for hybrid electric vehicles by also establishing a two-layer MPC. On the higher-level non-linear MPC, the driving strategy including a rule-based gear selection was optimized, and the control and actuation of the physical system were realized on the faster lower-level linear MPC.

With the advent of the electrification of mobility, MPC is experiencing a renaissance, e.g. in balancing the fuel consumption of a hybrid-electric vehicle while also taking the individual driving behavior into account [61], or in health-aware battery charging [147].

Again, the mega trend of energy transition and energy efficiency will lead to an increasing demand for intelligent strategies for energy balancing in (micro) grids and for building energy management systems. This in turn will call for more applications of advanced control strategies, especially MPC [74, 113]. The field has developed from the control of pure heating, ventilation and air conditioning (HVAC) systems to entire consumer-producer systems (or grids). The complexity of the models reflects this evolution (Table 3).

6.4 Manufacturing

Manufacturing is a comparatively new field for MPC and can be considered representative of a new development: MPC no longer merely substitutes existing controllers but opens up new control tasks.

We want to emphasize the field of manufacturing in general and cutting technology in particular, where several papers already showed the potential benefit of advanced control, e.g. on a conceptual basis [28].

At first, however, fixed-gain controllers for the position control loop of machining centers were substituted to achieve higher precision [122, 123]. Compensating the dynamics in high-precision milling with MPC is still an active field of research, e.g. [73]. Nonetheless, the application evolved towards introducing additional high-level control with MPC. The control turned into process control rather than implementing machine tool settings, creating previously unseen benefits. Mehta and Mears [79] described a concept for controlling the deflection of slender bars in turning. Zhang et al. [142] examined MPC to avoid chatter, an undesired resonance phenomenon, in milling. The MPC used a linearized oscillation model assuming that mass, damping, and stiffness were given. The controller manipulated an external force actuator at the tool holder. In simulation, the system enlarged the chatter-free region by 60%.

The first constrained MPC for force control in milling was implemented at the RWTH Aachen University, Germany [111, 112, 119, 120]. They manipulated the feed velocity in order to achieve a constant force in this highly dynamic process. Later, a black box model (support vector regression (SVR)) was added to consider non-linearities of machining centers [7, 8].

Staying in the area of metal processing, Liu and Zhang [67] introduced MPC-based control to welding. Predicting the next N2 = Nu = 5 steps (Ts = 0.5s), they controlled the penetration depth of the weld as a measure of quality. While the first approach relied on a dedicated vision system and a linearized model of the penetration depth, a newer approach dropped the vision system [148]. The feedback loop was closed by identifying a model online, which described the relation to the penetration depth. This was a similar set-up as for the milling process above. The approaches demonstrated the control of system variables that were hard or even impossible to control without MPC.

Wehr et al. [133] applied a linear MPC to control the gap during precision cold rolling of thin and narrow strips. The structure of the given process is anatomically overactuated by the existence of two redundant actuators for gap control. The overactuation and computational effort of the MPC are tackled at the same time by the introduction of a single time-varying optimization variable, which exploits the different availability of the actuators during the process.

Wu et al. [135] addressed a different field of production technology, optimizing the air jet that inserts the weft in weaving. This is the key to reducing the energy consumption (in terms of compressed air) of weaving machines.

For injection molding of plastics, Reiter et al. [103] (conceptual) and later Stemmler et al. [121] built an MPC controlling the pressure within the mold. The idea was to obtain a constant weight of the product as a quality criterion. It was standard to control the process with separate controllers for the different phases (injection and packing phases), while MPC was able to handle both phases and to optimize the transition (which was originally a switch of the controller) [121]. The contribution to a higher usability of the MPC was the main driver in this work.

More generally, the field of “production” adds automation and handling systems to the scope. These are often modeled as graphs or state-based systems, e.g. by Petri Nets as Cataldo et al. [20] did with a pallet transportation and processing system. Using an MPC, they enabled the system to adapt to faults on the transportation line such as a blocked section. Automation applications with discrete states present mixed-integer optimization problems. They require dedicated solvers, which are often heuristic-based and come with a larger computational burden than gradient-based optimizers.

Table 4 provides a quick overview on the chosen parameters. The sampling times are quite low with rather large prediction horizons compared to the early works on power electronics.

6.5 Further applications

Apart from these main movements, the range of applications in engineering is immense, ranging from balancing walking robots [134], hanging crane loads [110], and cruise control for heavy duty trucks [62, 140] to optimizing buffering and quality in video streaming [138]. MPC was even applied to path tracking of underwater robots [116]. In almost all applications, MPC outperforms classic controllers.

In particular, robotics is an emerging field of applications of MPC, e.g. [47, 88, 134]. While humanoid robots are a special case [134], industrial robots are ubiquitous on shop floors today. The success of light-weight, economic, and collaborating robots has contributed to a significant increase of MPC related works in this field. Nubert et al. [88] improved the tracking robustness in general with a robust MPC, whereas [47] made use of the force feedback of a lightweight robot to polish the free-form surface of a metal workpiece. The MPC maintained a given pressure on a varying area while moving over the surface.

The emergence of new concepts of how vehicles are powered was accompanied by new applications of control strategies, including MPC, be it traction control of in-wheel electric motors [? ], cruise control [61, 62], or path planning for autonomous driving [48]. The focus of advanced cruise control is as yet on larger commercial vehicles, such as (hybrid) electric buses [61, 137], due to their faster return on investment. It seems that the electrification of the power train spread electrical-engineering know-how to the development cycle of vehicles and, with it, control engineering expertise.

6.6 Notes

While many researchers show an extraordinary meticulousness when describing the models they have used, some fail to provide basic information on MPC tuning. We want to emphasize that at least the sample time Ts and all horizons (lower prediction horizon N1, upper prediction horizon N2, and the control horizon Nu) should be listed, as Table 1 to Table 4 demonstrate.

Ideally, the cost function should also be provided, including the weights of the slack variables ξ. With the horizons given, applications can be compared and the computational effort can be estimated. The exact cost function is required to reproduce the results, ensuring good scientific practice.

7 Controller design and tuning

The initial hurdle to use MPC is relatively small, provided an adequate model describing the process in question is available. The effort is shifted from controller design towards modeling [35, 104, 105]. Nonetheless, MPC offers an enormous flexibility regarding its design and tuning [37]. The following have the most significant effect:

- the model,
- the cost function,
- the constraints (what is constrained and how it is: bound, inequality, or non-linear constraints), and
- the choice of the solver itself.

The model is the essence of an MPC. As [101] put it: “models are not perfect forecasters, and feedback can overcome some effects of poor models, but starting with a poor process model is akin to driving a car at night without headlights; the feedback may be a bit late to be truly effective”.

Both theory and commercial application software favor linear models or a linear MPC. To apply linear control even to non-linear systems, successive linearization can be used, e.g. [6, 8, 63, 80, 97, 147], or model switching, e.g. [95]. The idea is to take advantage of a linear optimization, i.e. linear MPC, with a comparably low computational burden and a non-linear prediction.
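A minimal sketch of one linearization step may help to illustrate successive linearization. It assumes a scalar, tank-like toy model and numerical differentiation by central differences; the model, its coefficients, and all function names are hypothetical and do not correspond to any of the cited works.

```python
import math


def tank_model(x, u, Ts=1.0, a=0.5, b=1.0):
    """Assumed non-linear toy model: outflow grows with the square root of the level."""
    return x + Ts * (-a * math.sqrt(x) + b * u)


def linearize(f, x_op, u_op, eps=1e-6):
    """Affine approximation x[k+1] ~ A * x[k] + B * u[k] + c around (x_op, u_op)."""
    A = (f(x_op + eps, u_op) - f(x_op - eps, u_op)) / (2 * eps)
    B = (f(x_op, u_op + eps) - f(x_op, u_op - eps)) / (2 * eps)
    c = f(x_op, u_op) - A * x_op - B * u_op   # offset so both models agree at the operating point
    return A, B, c


def linear_prediction(x0, inputs, A, B, c):
    """Roll the affine model forward over the given input sequence."""
    x, trajectory = x0, []
    for u in inputs:
        x = A * x + B * u + c
        trajectory.append(x)
    return trajectory


# Re-linearize at the current operating point before every optimization.
A, B, c = linearize(tank_model, 4.0, 1.0)
prediction = linear_prediction(4.0, [1.0, 1.0, 1.0], A, B, c)
```

In a successive linearization scheme, `linearize` would be called again at every sample with the latest state and input, so that the linear MPC always works with a locally valid model.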

Few applications use non-linear MPC to account for the fact that the available models are often non-linear. However, not all check stability. Others focus explicitly on the stability aspect in their applications, e.g. [116]. In particular with the popularity of machine learning models, non-linear MPC applications increase. A sometimes ignored drawback of non-linear MPC is the larger computational effort of non-linear optimization. However, a new computation scheme was introduced recently: real-time iteration (RTI). Gros et al. [41] summarize this approach, presenting linear MPC as a special case of it. The main idea is as simple as it is charming: making use of the previous solution. At time step k, the controller calculates a solution for time steps k + 1 to k + Nu. Given a good optimization, the solution for time step k + 2 presents a reasonably good starting point for the next optimization at time step k + 1. Thus, one can limit the number of iterations of each optimization, assuming that the next optimizations continue improving the solution of the trajectory of the manipulated variable. “The RTI approach consists in performing the Newton steps always using the latest information on the system evolution” [41]. This idea of “warm starting” relies on a sufficiently high sampling frequency to ensure only small changes between iterations. Because the RTI scheme implements one single full Newton step per time step, it generally works better if the non-linearity between time steps is mild and if the prediction horizon is longer.
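The shifting step behind warm starting can be sketched in a few lines. This is only the initialization, not a full RTI implementation; repeating the last element to fill the end of the horizon is one common assumption, and the function name is hypothetical.

```python
def shift_warm_start(previous_solution):
    """Build the initial guess for the next optimization from the last solution.

    The first element has already been applied to the plant and is dropped;
    the last element is repeated to keep the horizon length unchanged.
    """
    if not previous_solution:
        raise ValueError("previous solution must contain at least one element")
    return previous_solution[1:] + previous_solution[-1:]


# Input trajectory computed at time step k (hypothetical values).
u_previous = [0.4, 0.3, 0.1]
u_initial_guess = shift_warm_start(u_previous)
```

The optimizer at the next time step then starts from `u_initial_guess` instead of from scratch, which is what allows the number of iterations per sample to be limited.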

Controlling large multiple input multiple output (MIMO) systems with a single MPC may be difficult [32]. For this reason, cascaded or hierarchical MPC structures are sometimes suggested, e.g. a two-layer MPC [112, 126] whose layers run at different sample rates.

Slack variables soften constraints by moving them to the cost function, where the amount of their violation is penalized. This generates the additional tuning factor Wξ, a weight matrix that ensures feasibility by softening constraints on the model output (and with this, on the reaction of the system). It is usually a scaled identity matrix whose entries are several orders of magnitude larger than those of the weight matrix of the control error Ww.

A trade-off between accurate tracking of the reference and smooth control behavior can be performed by considering the change of the manipulated variable in the cost function:

The same constraints apply as before in Eq. 5. The cost function penalizes the deviation from the reference r over the prediction horizon N2. It additionally considers the change in the manipulated variable Δuk = uk − uk−1. The last term includes the slack variables ξ, which quantify the violation of output constraints. The weight Wu must be tuned manually until the controller shows the desired behavior. In the experience of the authors, a good starting point lies between Wu = 0.01 I and Wu = 1 I, where lower values let the MPC use its potential unhindered in exchange for more (usually small) violations of the boundaries.
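The three terms described here can be evaluated as in the following sketch. It assumes scalar signals, scalar weights, and quadratic penalties, which may differ from the exact form of Eq. 11; the function name and the default weights are hypothetical.

```python
def mpc_cost(r, y, u, u_prev, xi, w_w=1.0, w_u=0.1, w_xi=1000.0):
    """Tracking error + input moves + slack penalty for scalar signals.

    r, y : reference and predicted output over the prediction horizon
    u    : planned inputs over the manipulation horizon
    xi   : slack variables quantifying output-constraint violations
    """
    tracking = sum(w_w * (ri - yi) ** 2 for ri, yi in zip(r, y))
    moves, last = 0.0, u_prev
    for uk in u:
        moves += w_u * (uk - last) ** 2   # penalize the change between consecutive inputs
        last = uk
    slack = sum(w_xi * s ** 2 for s in xi)
    return tracking + moves + slack
```

The large default for `w_xi` mirrors the statement that the slack weight is several orders of magnitude larger than the tracking weight, so that constraint violations are only accepted when no feasible alternative exists.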

Typical solvers are based on linear programming (LP) or quadratic programming (QP) [26]. If one uses commercial tools, e.g. from the popular program MATLAB by The MathWorks, the choice of an optimization algorithm does not arise. For deeper dives into the design, however, a good option for a solver is the quadratic programming online active set strategy (qpOASES). It is an open-source solver for quadratic programs, which has “several theoretical features that make it particularly suited for model predictive control (MPC) applications” as the project stated [30]. The choice of the solver influences the demand for computational resources.
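To give an impression of the kind of problem such a solver handles, the sketch below minimizes a small quadratic program with box constraints on the inputs. It deliberately uses a plain projected-gradient iteration, not the active set strategy of the solver named above, and it assumes a symmetric positive definite matrix H; all names are hypothetical.

```python
def solve_box_qp(H, g, lo, hi, iters=500):
    """Minimize 0.5*u'Hu + g'u subject to lo <= u <= hi by projected gradient."""
    n = len(g)
    # Gershgorin bound on the largest eigenvalue of H gives a safe step size 1/L.
    L = max(sum(abs(h) for h in row) for row in H)
    u = [min(max(0.0, lo[i]), hi[i]) for i in range(n)]   # feasible start
    for _ in range(iters):
        grad = [sum(H[i][j] * u[j] for j in range(n)) + g[i] for i in range(n)]
        # Gradient step followed by projection onto the box constraints.
        u = [min(max(u[i] - grad[i] / L, lo[i]), hi[i]) for i in range(n)]
    return u
```

The fixed iteration count makes the run time predictable, which is one reason why the solver choice matters for the computational budget; dedicated QP solvers typically reach the same solution in far fewer operations.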

Besides those major design building blocks, the MPC exhibits a whole slew of tuning parameters: the horizons (N1, N2, Nu), the weights in the cost function, Eq. 11, and the time step or sampling time Ts. The latter is unique for every case, but this review can provide tips and best practices for the other tuning parameters.

The horizons are crucial for the system’s performance and must be determined for every case. The prediction horizon N2 must be long enough to capture the effect of a change of the manipulated variable u. In this way the minimum length of the manipulation horizon Nu can be estimated by

To reduce the complexity of algorithmic tuning, [118] suggested neglecting the difference between the prediction and the manipulation horizon: N2 = Nu. The effect on computation is small if the time delay of the system is small in terms of multiples of the sampling time.

The lower prediction horizon N1 describes the time delay of the system. It is best practice to consider this delay in the model of the system and, therefore, to set N1 = 1. This takes into account that the manipulated variable is not implemented instantly, which would make the exact moment non-deterministic, as it depends on the time the MPC requires for solving the optimization problem. Instead, the obtained optimal command u is implemented at the next time step. These considerations reduce the problem of finding suitable prediction horizons to the problem of determining the necessary prediction horizon N2. It can be estimated using the system model by simulating all possible step changes in the manipulated variable(s). If the combination that has the longest effect on the controlled variable is known, it is sufficient to simulate this combination.
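The step-response estimate of N2 can be sketched for the simplest case. The example assumes a stable first-order discrete-time model x+ = a·x + b·u and a 2 % settling band; both the model and the tolerance are illustrative assumptions, not recommendations from this review.

```python
def settling_steps(a, b, tol=0.02, max_steps=10_000):
    """Samples until the unit-step response of x+ = a*x + b*u is within tol of its final value."""
    y_final = b / (1.0 - a)          # steady-state value for a unit step, requires |a| < 1
    x = 0.0
    for k in range(1, max_steps + 1):
        x = a * x + b                # simulate one sample with u = 1
        if abs(x - y_final) <= tol * abs(y_final):
            return k                 # candidate lower bound for N2
    raise ValueError("response did not settle; is the system stable?")
```

For a system with several manipulated variables, the same simulation would be repeated for each step combination and the largest result taken as the estimate.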

8 Computation

It does not help to talk about MPC, i.e. repeatedly solving an optimization problem online, without talking about its computational effort. In the control of power electronics, the prediction horizon was often limited to N2 = 1 due to tight time requirements [38]. Nevertheless, there are more sophisticated strategies to reduce computation than sacrificing prediction. Morari [84] argued that computational effort was irrelevant given the computing power of 1994. This is remarkable from today’s perspective: although computing power has increased exponentially (Fig. 6), control intervals have shrunk at the same time, and thus computation is still an issue.

Moore’s law states that the number of transistors on a microprocessor doubles roughly every two years [132]. That usually implies that computational performance doubles too, while prices dropped in sync, Fig. 6. This comfortable development may not continue forever; in fact, special-purpose chips are on the advance (think of the low-energy CPUs that power smartphones), letting the microprocessor landscape diverge. The tremendous success of machine learning techniques and the increasing parallelization in software were made possible by replacing CPUs with GPUs. At the same time, clock speeds have been limited by the heat dissipation in the transistors. To still keep up with Moore’s law, multiple cores have been integrated on the same chip since the early 2000s. With this in mind, strategies to reduce the computational load become important again. With increasing computational resources, systems were controlled that are more demanding than was even imaginable before.

8.1 Explicit MPC

In the year 2000, [14] still claimed that MPC was only applicable to slow or small systems due to the computational effort that solving an optimization problem imposes. In parallel to the increasing computational power, many dedicated approaches have been introduced to make MPC more efficient. As an intermezzo, hybrid MPC or explicit MPC approaches emerged [13]. They combine an offline solved optimization problem with online control. The optimization problem, and thereby the control law, is solved for a multitude of possible situations and stored in a look-up table. This shifts the task of computation to a non-time-critical offline calculation. Essentially, MPC in this way becomes an online gain-scheduling algorithm. The advantage is that closed-loop control can be performed at higher rates, which in some cases made closed-loop control feasible in the first place and in other cases improved the control behavior due to quicker feedback.
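The split into an offline and an online phase can be sketched with a toy problem. The example assumes a scalar system x+ = a·x + b·u, a horizon of one step, and a regular grid with nearest-neighbour look-up; these simplifications and all names are hypothetical and only illustrate the principle, not the region-based formulations used in the literature.

```python
def solve_mpc(x, a=0.9, b=0.5, rho=0.1, u_max=1.0):
    """Horizon-one MPC for x+ = a*x + b*u: minimize (x+)^2 + rho*u^2 with |u| <= u_max."""
    u = -a * b * x / (b * b + rho)          # unconstrained minimizer
    return max(-u_max, min(u_max, u))       # clipping is optimal for this scalar convex problem

def build_table(x_min=-5.0, x_max=5.0, n=101):
    """Offline phase: solve the optimization problem on a grid of states."""
    step = (x_max - x_min) / (n - 1)
    return x_min, step, [solve_mpc(x_min + i * step) for i in range(n)]

def lookup(table, x):
    """Online phase: nearest-neighbour look-up replaces the optimization."""
    x_min, step, values = table
    i = round((x - x_min) / step)
    i = max(0, min(len(values) - 1, i))     # saturate outside the tabulated range
    return values[i]
```

Online, the controller only evaluates `lookup`, so the run time no longer depends on the optimization; the price is the quantization error of the grid and the memory for the table.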

The major drawback is the increasing computational effort of solving the problem for all possible situations, in conjunction with the increasing memory demand. Explicit MPC also lacks flexibility regarding unexpected disturbances and the opportunity to adjust the process model.

Explicit MPC increases the overall computation because every possible state needs to be calculated a priori. This might be the reason why it emerged from the control of power converters with simple (mostly binary) problems, short horizons, and almost no time for calculation [130]. For complex systems, the advantage at execution is somewhat diminished if searching the a priori solved result takes long [122]. The solution space scales exponentially with the problem size, making look-up-table approaches inefficient; this is sometimes dubbed the “curse of dimensionality” [102].
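The exponential growth can be made concrete with a short calculation. The sketch assumes a regular grid with the same number of points per state and one scalar input stored per entry as a 64-bit float; both are illustrative assumptions.

```python
def table_entries(points_per_dim, n_states):
    """Number of stored solutions for a regular grid over the state space."""
    return points_per_dim ** n_states

def table_megabytes(points_per_dim, n_states, bytes_per_entry=8):
    """Memory demand in MB for one scalar input per grid point."""
    return table_entries(points_per_dim, n_states) * bytes_per_entry / 1e6
```

With 101 grid points per state, a single state requires 101 entries, whereas four states already require about 104 million entries, i.e. roughly 832 MB under the stated assumptions.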

One way to reduce the general computational effort is to approximate the solution space by a non-linear function. Recent studies suggested using neural networks (NNs) for this [64, 143]. This sped up the required online computation by a factor of 65–100 in [143]. Approximating the solution space by a function lets the MPC work with only near-optimal solutions, but shifting the computational burden to the offline training may decrease the online computation time. [143] built a second model to quantify the approximation error at every point in the solution space. The charm of an approximation through machine learning is that the training can be flexibly stopped once a defined accuracy is reached. Hertneck et al. [45] took up this thought, focusing on accurate learning of the solution space by the NN. They quantified the probability of a wrong approximation. In this way, they were able to adjust and extend the training until it reached the desired quality. The procedure was demonstrated on a simple numerical example, reducing the computation time by a factor of 200 at the cost of a training effort of 20 days. Only recently was the idea tested on an industrial robot as a real system [88]. The to-be-approximated MPC was designed for robust control with regard to the output of the MPC. In this way, neither measurement noise nor an inaccurate approximation of the solution space through the NN affected the stability of the to-be-controlled system.

Maddalena et al. [71] generalized the idea, proposing a neural network (NN) with two linear layers and a parametric quadratic program layer in between to learn the control law of any linear MPC with a quadratic cost function. They showed that the resulting explicit MPC was still closed-loop stable in the sense of Lyapunov by applying the certification technique proposed by [51] out of the box. The technique was applicable because the NN structure essentially presented a linear mapping with polynomial inequalities. In fact, [102] concluded that NNs, in particular those with rectified linear units (ReLUs), present a continuous piece-wise linear mapping ideal for approximating large solution spaces of explicit MPC policies.
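Why ReLUs fit piece-wise linear control laws can be seen in a small example. The sketch assumes a saturated linear feedback law for a scalar state as a stand-in for a piece-wise linear explicit MPC policy; the gain and the bound are hypothetical. The saturation is written exactly with two ReLUs, so no approximation error arises in this special case.

```python
def relu(z):
    """Rectified linear unit."""
    return max(0.0, z)

def clip_via_relu(v, lo, hi):
    """Saturation expressed with two ReLUs: lo + relu(v - lo) - relu(v - hi)."""
    return lo + relu(v - lo) - relu(v - hi)

def saturated_law(x, k=1.5, u_max=1.0):
    """Piece-wise linear law u = sat(-k*x), built only from linear maps and ReLUs."""
    return clip_via_relu(-k * x, -u_max, u_max)
```

The three linear pieces (lower bound, linear region, upper bound) correspond to the three regions an explicit controller would store for this toy case; a ReLU network encodes them in its weights instead of a table.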

8.2 Move blocking

The move blocking strategy for MPC (in the sense of input blocking, its most common formulation) is a scheme in which the degrees of freedom of the optimization are reduced by trimming the number of calculated control outputs. To this end, the control output is held constant over defined steps of the control horizon. In this way, the computational burden decreases because the control output no longer has to be calculated at every time step of the control horizon.
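Input blocking can be sketched as a mapping from a few free decision variables to the full input sequence. The function name and the block pattern in the usage note are hypothetical.

```python
def expand_blocked_inputs(u_free, block_lengths):
    """Map the reduced decision variables to the full input sequence over the control horizon."""
    if len(u_free) != len(block_lengths):
        raise ValueError("one free input per block is required")
    u_full = []
    for value, length in zip(u_free, block_lengths):
        u_full.extend([value] * length)   # hold the input constant within the block
    return u_full
```

With block lengths of 1, 2, and 3, a control horizon of six steps is described by only three decision variables, so the optimizer searches a three-dimensional instead of a six-dimensional space.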

Overall, the result of move blocking strongly depends on the choice of blocked time steps. One conceivable approach is to block the later time steps to obtain a higher degree of freedom at the beginning of the control horizon. Such an approach is appealing for uncertain systems, where the predicted system behavior is more trusted at early time steps. Nevertheless, one has to be aware of the drawback described below. A more sophisticated, but also more computationally expensive, approach is to optimize the choice of blocked time steps as a mixed-integer problem [115].

One major drawback of the strategy is that the continuity of the optimization for a receding horizon can no longer be ensured. This is due to the shift of fixed (or blocked) time steps with the receding horizon between iterations. Therefore, the degree of freedom at a certain point in time in the future cannot be guaranteed at the following iteration of the optimization. In the worst case, neither the satisfaction of the constraints of the optimization nor the controller stability can be guaranteed. One way to overcome this is to adapt the fixed time steps such that the degrees of freedom remain defined at the same points in time [16].

9 Conclusion

The popularity of MPC “comes in great part from the fact that a suitable model being given, the controller can be easily implemented with a direct physical understanding of the parameters to be tuned and easy constraints handling” [104]. With the great advances in microprocessors and the omnipresent availability of models, this is truer than ever. One key characteristic of MPC is the implicit determination of the control law by solving the constrained optimization problem online. The incorporation of physical constraints in the optimization problem shifts the effort of designing a controller towards modeling the to-be-controlled system [35, 104, 105].

The hurdle to overcome for a lasting impact of MPC on industry is the complexity of modeling and algorithmic tuning. In most cases, the potential benefit is not worth the effort of building up expert knowledge in modeling, optimization, and control theory.

Modeling is often the most time-consuming activity [44]. As the age of microprocessors removed computational resources as the largest obstacle and paved the way for an enduring success of MPC, the second era may be heralded by the use of data-driven modeling, lowering the barriers even more. Machine learning enables an easy description of complex systems, lowering the hurdle of applying MPC to new processes.

In applications, the extremes were covered first: large and complex multiple input multiple output (MIMO) systems with long sample times (petrochemical industry). Then, almost as a counter movement, fast systems with short sample times and often explicit formulations were developed (power converters). These days, the craziness has settled, leaving the field to reasonable sample times. Although computational power has increased tremendously, efficient calculation should remain the maxim even today; it requires expert knowledge in programming, however, which hinders plug-and-play usage. Forbes et al. [32] concluded that industry requires a higher usability of existing techniques rather than new MPC algorithms. Nowadays, it is almost as if the focus has shifted from theory to application, letting both advance in conjunction. The theory becomes application-driven again, as it was in its beginnings.

We are convinced that the global megatrend of decarbonization will further boost MPC applications in electronics due to the expansion of electrification as well as the constantly pressing demand for high efficiency of electric components. Model-based predictive control (MPC) can contribute to efficiency in many fields, e.g. in climate control systems (more precisely, heating, ventilation and air conditioning (HVAC)). These deal with sluggish systems and comparably precise forecasting models, e.g. for room occupancy or for the weather, which makes MPC predestined for them.

The buds of the new trends and the thick trunks of the established disciplines suggest, to our eyes, that we are one step away from an exponential increase in the number of MPC applications.