Abstract

The vehicle structure is a highly complex system, as it is subject to different requirements from many engineering disciplines. Multidisciplinary optimization (MDO) is a simulation-based approach for capturing this complexity and achieving the best possible compromise by integrating all relevant CAE-based disciplines. However, to enable the operational application of MDO even when crash is considered, various adjustments must be made to reduce the high numerical resource requirements and to integrate all disciplines in a targeted way. They can be grouped as follows: the use of efficient optimization strategies, the identification of relevant load cases and sensitive variables, and the reduction of the CAE calculation time of costly crash load cases by so-called finite element (FE) submodels. By combining these components, a novel, adaptively controllable MDO process based on metamodels is developed. Three special features are presented in this paper: First, a module named global sensitivity matrix, which helps with the targeted planning and implementation of an MDO by structuring the multitude of variables and disciplines. Second, a local, heuristic prediction uncertainty measure that can therefore be computed for all metamodel types and that is further used in the definition of the optimization problem. Third, a module called adaptive complexity control, which progressively reduces the complexity and dimensionality of the optimization problem. Compared to the standard MDO procedure, the reduction of resource requirements and the increase in the quality of results are significant. This statement is confirmed by results for an FE full vehicle example in six load cases (five crash load cases and one frequency analysis).


1 Introduction

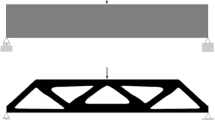

In the vehicle development process, many CAE departments work both in parallel and sequentially on the structural design of a vehicle. They cannot make design decisions independently of each other without restrictions, as their decisions may affect not only the requirements of their own discipline (e.g., crash, stiffness and durability) but also those of others. Multidisciplinary optimization (MDO) is a tool to capture this complexity and these interdisciplinary interactions (Fig. 1). It searches for designs that meet all discipline-specific and cross-discipline requirements in a targeted and automated (algorithm-based) way. The outcome is the best possible compromise and is thus full-vehicle-oriented rather than single-discipline-oriented. MDO promotes both the development quality and the development speed of complex systems. For this reason, MDO has gained importance in recent decades and has been applied repeatedly in both the automotive and aerospace environments. MDO is therefore feasible in principle. However, for operational use, it requires an approach for the efficient use of CPU resources and for a targeted integration of all disciplines, and thus R&D departments, relevant to the specific problem. Previous studies and applications address these points to varying degrees and are discussed below.

Fig. 1 Basic idea of multidisciplinary optimization (Büttner 2022)

In the 1980s and 1990s, Sobieszczanski-Sobieski contributed significantly to the establishment of MDO from an organizational point of view. The areas of application considered are the development of aircraft and spacecraft. Sobieszczanski-Sobieski and Haftka (1987) propose to decompose the complex MDO problem either aspect-oriented (into different disciplines) or object-oriented (into different physical components). This study focuses on the aspect-oriented decomposition. Sobieszczanski-Sobieski (1992) implements the global sensitivity matrix to identify and quantify the coupling of different disciplines. As it is developed in an aerospace environment, the disciplines may not only be coupled via design variables but also via so-called interdisciplinary mappings, as explained in Cramer et al. (1994). They describe the latter with an illustrative example: the disciplines of aerodynamics and structure are strongly and mutually coupled (feedforward and feedback). Aerodynamic forces cause a structural deformation of the aircraft wings. At the same time, this deformation in turn causes a change in the aerodynamic forces. This coupling is so strong that it cannot be neglected. Interdisciplinary mappings therefore only exist when the requirements of several disciplines are interdependent. Multidisciplinary consistency is only reached if all design variables and interdependent requirement values converge in the course of the iterations.

Based on the coupling information obtained from the global sensitivity matrix, various optimization procedures are developed, which can be divided into single-level- and multi-level-methods. Single-level means that only one system optimizer changes all design variables of all disciplines together. However, if there are interdisciplinary mappings, multidisciplinary consistency is not automatically given. Cramer et al. (1994) therefore present the single-level-methods multidisciplinary feasible method (MDF), individual discipline feasible method (IDF) and all-at-once (AAO). A major disadvantage of single-level approaches is that the different disciplines cannot make their own design decisions. Therefore, the potential of the global sensitivity matrix is not fully exploited. Multi-level-methods overcome that problem. The basic idea is to decompose the optimization problem in such a way that each discipline can work autonomously on its own subsystem optimization problem and choose its own strategies and algorithms. This is advantageous but leads to a problem: it does not guarantee multidisciplinary consistency, neither regarding the design variables nor the interdisciplinary mappings. This creates the need for a system optimizer that interacts sequentially with the subsystem optimizers and for complex compatibility or consistency formulations in the optimization equations at both levels. Some well-known multi-level-methods are: concurrent subspace optimization (CSSO) developed by Sobieszczanski-Sobieski (1989) and further developed by, among others, Renaud and Gabriele (1991) and Wujek et al. (1995); collaborative optimization (CO) developed by Kroo et al. (1994); enhanced collaborative optimization (ECO) developed by Roth (2008); analytical target cascading (ATC) developed by Kim (2001) and further developed by, among others, Tosserams et al. (2006); bi-level integrated system synthesis (BLISS) developed by Sobieszczanski-Sobieski et al. (1998) and BLISS 2000 developed by Sobieszczanski-Sobieski et al. (2003). Martins and Lambe (2013) provide a comprehensive overview of several single-level- and multi-level-methods. According to Agte et al. (2010), the decision to decompose an MDO depends on the coupling breadth and strength of the problem at hand.

Agte et al. (2010) reflect on the use of multi-level-methods, whereas Bäckryd et al. (2017) as well as Ryberg et al. (2012) reflect on the use of both single-level- and multi-level-methods in the automotive industry. They conclude that there are no strong interdisciplinary mappings or that they are so small that they can be neglected. In the absence of interdisciplinary mappings, the various single-level-formulations become redundant, as multidisciplinary consistency is achieved in each state of the optimization. Multi-level-formulations are also greatly simplified by this circumstance. Bäckryd et al. (2017) provide a detailed overview of the reduced formulations (in the absence of interdisciplinary mappings) usable for automotive developments. However, multi-level-formulations are still more complex than single-level-formulations. Therefore, Ryberg et al. (2012) and Bäckryd et al. (2017) only recommend their use if the benefits (e.g., concurrent and autonomous work of all disciplines) outweigh the complexity of the optimization formulation. As described in Sect. 2 and mentioned by Ryberg et al. (2012) and Bäckryd et al. (2017), metamodels are commonly used to support optimization with highly relevant non-linear load cases such as crash simulations. Their use is also a way to give the disciplines their autonomy without the need for a complex multi-level-method.

Nevertheless, the global sensitivity matrix can be used for variable screening to reduce the number of variables to the most sensitive ones. It can also identify relevant load cases for the optimization problem at hand. Therefore, it supports the targeted integration of all disciplines and thus R&D-departments relevant to the specific problem. Both possibilities are shown in Sect. 3.1 and are also opportunities for efficient use of CPU resources as explained in Sect. 2. Another opportunity for efficient use of CPU resources is an efficient optimization strategy that reduces the number of necessary FE simulations without compromising the optimization quality, e.g., through insufficient metamodel qualities and therefore insufficient local predictions. Section 2 explains this in more detail while Sect. 3.2 shows the potentials. The last way to use CPU resources efficiently mentioned in this study is the reduction of CAE calculation time of costly crash load cases as explained in more detail in Sects. 2 and 3.3. To exploit the full potential of the three mentioned opportunities, they should be adaptively controllable during the optimization process. Section 3.4 gives a detailed insight.

Table 1 provides an overview of previous studies in the automotive environment that focus on one or more of the above-mentioned opportunities for efficient use of CPU resources and targeted integration of disciplines shown in Fig. 1. We do not claim this table to be exhaustive.

As can be seen from Table 1, previous studies mainly focus only on some components required for an operational use of MDO. Therefore, MDO has rarely been used productively in the vehicle development process. The aim of this study is to address all components that make MDO fit for practical usage in car body development.

2 Requirements and challenges for MDO application

As explained in Büttner et al. (2020), significant preparations on an organizational and technical level are necessary for the implementation of MDO. At the organizational level, all disciplines relevant to the optimization problem at hand must be selected. Together, they must define discipline-specific and cross-disciplinary requirements and set up the optimization problem as follows:

In common automotive problems, the objective function \(f({\varvec{x}})\) is the vehicle weight which should be minimized while maintaining all relevant discipline-specific and cross-disciplinary requirements defined in the \({n}_{g}\in {\mathbb{N}}_{0}\) functions \(g\left({\varvec{x}}\right)\) and \({n}_{h}\in {\mathbb{N}}_{0}\) functions \(h\left({\varvec{x}}\right)\) (constraints) collected in the vectors \({\varvec{g}}\) and \({\varvec{h}}\). The objective function and the constraints together form the group of design criteria, which can be summarized in the vector \({\varvec{y}}\) with length \({n}_{y}={n}_{g}+{n}_{h}+1\) (\({n}_{y}\in {\mathbb{N}}\)). To affect the design criteria, all disciplines must develop design variables that are relevant for their load cases and that exist in their own, usually discipline-specific FE models. For each of the \({n}_{x}\in {\mathbb{N}}\) design variables \({x}_{i}\), the upper and lower bounds (restrictions) have to be set:

where \({{\varvec{x}}}^{*}\) represents the optimal design variable configuration within these bounds. The appropriate design space \(X\) for all disciplines can be summarized as follows:
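In the usual notation of constrained optimization, the setup described in this section can be sketched as follows. This is an illustrative form that is consistent with the symbols defined above; the sign convention \({g}_{j}\le 0\), the index names \(j\) and \(k\), and the notation \({x}_{i}^{l}\), \({x}_{i}^{u}\) for the lower and upper bounds are assumptions.

$$\begin{aligned} &\underset{{\varvec{x}}}{\mathrm{min}}\; f\left({\varvec{x}}\right) \\ &\text{subject to}\quad {g}_{j}\left({\varvec{x}}\right)\le 0,\quad j=1,\dots ,{n}_{g}, \\ &\phantom{\text{subject to}\quad } {h}_{k}\left({\varvec{x}}\right)=0,\quad k=1,\dots ,{n}_{h}, \\ &\phantom{\text{subject to}\quad } {x}_{i}^{l}\le {x}_{i}\le {x}_{i}^{u},\quad i=1,\dots ,{n}_{x}, \\ &X=\left\{{\varvec{x}}\in {\mathbb{R}}^{{n}_{x}}\;|\;{x}_{i}^{l}\le {x}_{i}\le {x}_{i}^{u},\; i=1,\dots ,{n}_{x}\right\}. \end{aligned}$$

With sheet thicknesses as design variables, \({x}_{i}^{l}\) and \({x}_{i}^{u}\) would be the smallest and largest admissible thickness of part \(i\).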

At the technical level, a fully automated process is necessary to coordinate the complexity of usually discipline-specific software, computational architectures (e.g., supercomputing, local workstations) and scripts. The architecture of the automated process therefore depends on the integrated disciplines but also on the chosen design variables and the optimization strategy.

Design variables which are directly definable in the FE input files (e.g., sheet thicknesses) are easier to integrate in the automated process than non-directly definable variables (e.g., shape variables). Depending on the strategy and the degree of change, shape variables require the integration of a pre-processor and, if necessary, CAD-based software prior to that. Therefore, sheet thicknesses are chosen as design variables to focus on the reduction of the numerical resource requirement rather than making the process chain for this study unnecessarily complicated.

The last point that influences the architecture of the automated process is the chosen optimization strategy. One can distinguish whether the algorithm works directly with the FE model or indirectly via metamodels and whether the algorithm uses local or global information. The use of local algorithms (e.g., LAGRANGE-methods like SQP or SLP) that obtain the gradient information directly from the FE simulation in a (semi-)analytical way not only leads to a quite accurate (local) result but is also very efficient. Unfortunately, efficient gradient-based algorithms can no longer be used once crash load cases are integrated into the MDO process. On the one hand, the sensitivities cannot be provided efficiently. On the other hand, the use of this local information in the optimization does not make sense for the strongly oscillating structural responses of crash. Algorithms that use global information (e.g., evolutionary algorithms) by exploring the entire design space overcome this problem. On the positive side, global optimization algorithms have the potential to converge asymptotically to the global optimum. On the negative side, they generally require more function calls than gradient-based algorithms.

In automotive MDO workflows, it is common that global algorithms work on metamodels instead of working directly on FE simulations. The use of metamodels that approximate the structural responses in the entire design space has several advantages: First, performing the function calls on metamodels rather than on FE simulations is less time- and cost-intensive. A modification of the optimization problem (e.g., modification of the objective function, addition of further constraints or adjustment of the limits) is easily possible. This is particularly advantageous for highly non-linear load cases, as the desired structural behavior is difficult to describe. Second, the models can be used for data mining purposes. This means, for example, that design variable sensitivities can be determined through a global sensitivity study, as can any correlations and trends of the design criteria. Third, the metamodel strategy offers immense advantages on the organizational level as addressed in Sect. 1: While the use of algorithms on FE simulations causes a permanent alternation between optimization and FE simulations and requires all necessary FE models to be available and evaluable simultaneously, this problem no longer arises when using metamodels. The metamodels can not only be created decentrally, i.e., in the respective CAE department, but also sequentially at different points in time in the product development process (PDP). This also makes it possible to easily include non-simulative (experimental) problems. Once all metamodels are provided, the optimization problem can be solved centrally or decentrally, but it is solved on a single level by one algorithm which takes Eqs. 1 to 5 into account. A complex optimization formulation as in multi-level approaches is no longer necessary to enable all disciplines to work simultaneously and independently of each other.

Figure 2 shows a typical architecture of the automated MDO process using metamodels as optimization strategy. Before the process begins, the setup must be carried out at the organizational level as described above. The automated process then starts with a design of experiments (DOE). The DOE generates well-distributed samples (design variable configurations) in the entire design space. A suitable and well-known space-filling strategy is the optimal Latin hypercube (OLH). It additionally optimizes the distance between the samples and therefore tries to avoid an insufficient scanning of the design space. In the next step, FE models are automatically set up with these design variable configurations and calculated by the respective FE solver on the corresponding computational architectures. The respective post-processors or scripts then extract the needed design criteria values of each corresponding sample. The design variable configurations (input) with the corresponding design criteria values (output) are summarized in tabular form and serve as a basis for metamodel-training. Since the entire optimization success now depends on the quality of the metamodels, well-chosen and adapted models are needed. Therefore, it is necessary to allow design criteria-specific metamodel-training, as each design criterion is subject to a different degree of nonlinearity. To avoid badly chosen models, a possible strategy is to fit several metamodel types per design criterion and determine the best model through a metamodel competition. To ensure that each of these models is well-fitted, hyperparameter optimization is used to avoid underfitting and a cross-validation strategy (test-train split) is used to avoid overfitting (Bäck et al. 2015). Subsequently, the best metamodels for each criterion (possibly of different metamodel types) are provided and serve as the basis for the optimization. Finally, the calculated optimum (optimal design variable configurations) must be verified by FE simulations (verification).
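The DOE and metamodel-competition steps described above can be illustrated with a small, self-contained sketch. It is not the Isight or CVA implementation used in this study: the maximin selection over random Latin hypercubes is only a crude stand-in for an OLH, the three candidate models (`mean`, `linear`, `nearest`) are hypothetical placeholders for real metamodel types, and the response `3x + 1` replaces an FE result.

```python
import math
import random


def latin_hypercube(n_samples, n_vars, rng):
    """Random Latin hypercube in [0, 1]^n_vars: one sample per stratum and variable."""
    columns = []
    for _ in range(n_vars):
        strata = list(range(n_samples))
        rng.shuffle(strata)
        columns.append([(s + rng.random()) / n_samples for s in strata])
    return [tuple(col[i] for col in columns) for i in range(n_samples)]


def min_pairwise_distance(samples):
    return min(math.dist(a, b) for i, a in enumerate(samples) for b in samples[i + 1:])


def maximin_latin_hypercube(n_samples, n_vars, n_candidates=50, seed=0):
    """Assumed stand-in for an OLH: keep the candidate with the largest minimum distance."""
    rng = random.Random(seed)
    candidates = [latin_hypercube(n_samples, n_vars, rng) for _ in range(n_candidates)]
    return max(candidates, key=min_pairwise_distance)


# Three toy one-dimensional "metamodel types"; each fit function returns a predictor.
def fit_mean(xs, ys):
    mean = sum(ys) / len(ys)
    return lambda x: mean


def fit_linear(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: my + slope * (x - mx)


def fit_nearest(xs, ys):
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


CANDIDATES = {"mean": fit_mean, "linear": fit_linear, "nearest": fit_nearest}


def cv_rmse(fit, xs, ys, n_folds=5):
    """k-fold cross-validation error: every sample is predicted by a model that never saw it."""
    squared_error = 0.0
    for fold in range(n_folds):
        train = [i for i in range(len(xs)) if i % n_folds != fold]
        test = [i for i in range(len(xs)) if i % n_folds == fold]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        squared_error += sum((model(xs[i]) - ys[i]) ** 2 for i in test)
    return math.sqrt(squared_error / len(xs))


def metamodel_competition(xs, ys, candidates):
    """Return the name of the candidate with the lowest cross-validation error and all scores."""
    scores = {name: cv_rmse(fit, xs, ys) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores


doe = maximin_latin_hypercube(n_samples=20, n_vars=1)
xs = [point[0] for point in doe]
ys = [3.0 * x + 1.0 for x in xs]  # placeholder for FE results
winner, scores = metamodel_competition(xs, ys, CANDIDATES)
print(winner, scores)
```

In a real process the competition would run once per design criterion, so that different criteria can end up with different metamodel types.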

Fig. 2 Standard MDO process chain [modified from Büttner (2022)]

In this study, the software SIMULIA Isight® by Dassault Systèmes is used for process control and for the generation of the OLH. ClearVu Analytics® (CVA) software by divis intelligent solutions GmbH is used for metamodel-training, as it contains all the necessary strategies explained above. The following metamodel types are used in this study: linear model (LM), Gaussian process (GP), support vector machines (SVM), kernel quantile regression (KQR) and neural network (NN). There are also more types available (e.g., fuzzy models or decision trees), but they are not suitable for the problem of this study or take too long to adapt. The global optimization algorithm used in this study is the Derandomized ES 2 from the family of evolutionary algorithms, implemented in ClearVu Analytics® (CVA) (Bäck et al. 2013).

Looking at Fig. 2, the points mentioned in Sect. 1 can be identified for an operational use of MDO: The numerical resource requirement depends on the type and number of load cases. The organizational effort depends on both the number of load cases and their degree of coupling. The meaning of the latter is explained in Sect. 3.1. A targeted integration of all disciplines is necessary and at the same time difficult to manage on the basis of engineering know-how alone. There is a large number of disciplines located in many different R&D departments that work not only in parallel but also sequentially in a vehicle development process. In addition, the departments need to know the most sensitive design variables among the many hundreds to thousands of design variables in a full vehicle. The need for numerical resources depends on this number of variables, since the appropriate design space \(X\) (Eq. 5) becomes larger as the number of variables increases. More DOE samples are required to scan it sufficiently. As each sample has to be calculated in an FE simulation, the need for numerical resources also strongly depends on the FE calculation time. Especially highly non-linear load cases like full vehicle crash simulations are extremely time- and cost-intensive. They require a large number of CPUs and take hours or days to calculate. Based on this knowledge, it is obvious that an efficient optimization strategy saves numerical resources. An efficient optimization strategy gains the most information from the smallest possible number of FE simulations without significantly reducing the optimization success. Using the above-mentioned metamodel-training strategies is a good start, but much potential remains unexploited. In addition, a metamodel that is well suited globally is not necessarily suitable for local predictions. The standard MDO process does not take the local prediction quality into account. For this reason, it is possible that the predicted, valid optimum lies in a region of high error, so that the FE verified result is no longer valid. Consequently, more samples and thus FE simulations become necessary, which further reduces the efficiency of the already cost-intensive process.

3 Potentials for reducing the numerical resource requirements and increasing the quality of optimization results

To overcome these bottlenecks, some important adjustments need to be made to the standard MDO process to realize its full potential.

3.1 Global sensitivity matrix

The global sensitivity matrix proposed by Sobieszczanski-Sobieski (1992) is a tool to identify and quantify the couplings between disciplines, as the strength and direction of influence are not always predictable due to the complexity of a vehicle development process.

In this study, the global sensitivity matrix is based on metamodels. The underlying data can either be taken from existing simulations, if available and correctly prepared, or it has to be created. The latter makes new simulations necessary. To save numerical resources, the DOE should therefore scan the design space only roughly. Based on the initial metamodels, a global sensitivity analysis such as the variance-based Sobol-Indices (Sobol 1993, 2001) can be evaluated. The Sobol-method has two main advantages: First, it works independently of the metamodel type. For this reason, this method is usable and well-suited for non-linear structural responses. The requirement, of course, is that the underlying metamodels approximate the structural responses well. Second, the method is able to detect interactions between variables. The Sobol-Indices are calculated in general as follows: The variance \({\widetilde{s}}^{2}\left({\widetilde{y}}_{j}\right)\) is calculated for each of the \({n}_{y}\) approximated design criteria \({\widetilde{y}}_{j}\) using a Monte Carlo simulation. Afterwards, it is determined which of the \({n}_{x}\) design variables or their interactions cause this variance and in which proportions. Thus, each variable has a Sobol-Index between zero and one, whereby the sum of all Sobol-Indices per approximated design criterion \({\widetilde{y}}_{j}\) is equal to one. By setting a threshold (e.g., \({Th}_{Sobol}\ge 0.05\)), the variables can be divided into insensitive and sensitive variables with respect to this single design criterion. Since a discipline usually has several design criteria, a variable should be defined as sensitive for that discipline if the threshold is reached for at least one of these criteria.
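The Monte Carlo evaluation and the threshold-based classification can be sketched as follows. This is a minimal illustration, not the procedure implemented in the software used in this study: it estimates first-order indices only (interaction terms are omitted) with a centered pick-freeze estimator, assumes variables uniformly distributed in \([0, 1]\), and uses a hypothetical additive function `toy_metamodel` in place of a trained metamodel. For this toy function the analytical first-order indices are 0.8, 0.2 and 0.

```python
import random


def first_order_sobol(model, n_vars, n_samples=20000, seed=0):
    """Estimate first-order Sobol indices of model on [0, 1]^n_vars (pick-freeze scheme)."""
    rng = random.Random(seed)
    a = [[rng.random() for _ in range(n_vars)] for _ in range(n_samples)]
    b = [[rng.random() for _ in range(n_vars)] for _ in range(n_samples)]
    y_a = [model(x) for x in a]
    y_b = [model(x) for x in b]
    y_all = y_a + y_b
    mean = sum(y_all) / len(y_all)
    variance = sum((y - mean) ** 2 for y in y_all) / (len(y_all) - 1)
    indices = []
    for i in range(n_vars):
        # Matrix A with column i replaced by column i of B.
        y_ab = [model(ra[:i] + [rb[i]] + ra[i + 1:]) for ra, rb in zip(a, b)]
        # Centering y_b leaves the expectation unchanged but lowers the estimator noise.
        partial_variance = sum(
            (yb - mean) * (yab - ya) for yb, yab, ya in zip(y_b, y_ab, y_a)
        ) / n_samples
        indices.append(partial_variance / variance)
    return indices


def sensitive_variables(indices, threshold=0.05):
    """Variables whose index reaches the threshold for a single design criterion."""
    return [i for i, s in enumerate(indices) if s >= threshold]


def sensitive_for_discipline(indices_per_criterion, threshold=0.05):
    """A variable is sensitive for a discipline if it is sensitive for at least one criterion."""
    sensitive = set()
    for indices in indices_per_criterion:
        sensitive.update(sensitive_variables(indices, threshold))
    return sorted(sensitive)


def toy_metamodel(x):
    # Hypothetical additive response; the third variable has no influence.
    return 4.0 * x[0] + 2.0 * x[1] + 0.0 * x[2]


sobol = first_order_sobol(toy_metamodel, n_vars=3)
print([round(s, 3) for s in sobol], sensitive_variables(sobol))
```

Total-order indices would be needed to capture the interaction effects mentioned in the text; they require an additional estimator that is not shown here.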

With this information at hand, one can now decompose the full design variable vector \({\varvec{x}}\) as follows (note that in this context the design variable vector \({\varvec{x}}\) represents the variable names instead of their associated values): \({{\varvec{x}}}_{local,d}\) is the vector of design variables that are sensitive to only one specific discipline \(d\) of all \({n}_{D}\) disciplines. The vector \({{\varvec{x}}}_{global}\) collects the variables that are sensitive to more than one discipline. There are also variables that are insensitive to all disciplines (\({{\varvec{x}}}_{insensitive}\)). A discipline-specific sensitive variable vector \({{\varvec{x}}}_{sensitive,d}\) therefore contains \({{\varvec{x}}}_{local,d}\) and a subset of \({{\varvec{x}}}_{global}\). The degree of influence on the design criteria by changing these sensitive variables can be determined by simple predictions on the available metamodels. Therefore, the global sensitivity matrix is also very helpful in daily development situations, as shown by Büttner et al. (2021).

Figure 3 illustrates the information provided by the global sensitivity matrix using four exemplary disciplines and twelve design variables. The insensitive variable \({x}_{1}\) can be excluded from the optimization problem at hand, as it does not influence any design criteria. Also, discipline 4 is not relevant for an MDO. Since it does not share any design variables with other disciplines (\({{\varvec{x}}}_{sensitive,4}\) only contains \({{\varvec{x}}}_{local,4}\)), there is no need for discipline 4 to plan and conduct an MDO. However, the sensitivity matrix provides more information than just the sensitive variables and relevant load cases. It also detects the coupling breadth and strength, although not in the common way described in Agte et al. (2010), since no interdisciplinary mappings exist. Instead, the coupling breadth and strength between disciplines can be measured based on the shared variables \({{\varvec{x}}}_{global}\) (in this example \({x}_{4}\), \({x}_{5}\) and \({x}_{6}\)). As shown in Fig. 3, disciplines 1 and 2 share two variables \({x}_{4}\) and \({x}_{5}\), whereas disciplines 2 and 3 only share one variable \({x}_{6}\). Therefore, disciplines 1 and 2 have a bigger coupling breadth than disciplines 2 and 3. Disciplines 1 and 3 share no variables and are therefore not coupled. The coupling strength is measured by the influence that a variable change within one discipline (e.g., \({x}_{4}\) in discipline 1) causes in another discipline (e.g., \(\frac{\partial {{\varvec{y}}}_{2}}{\partial {x}_{4}}\), where \({{\varvec{y}}}_{2}\) represents the design criteria of discipline 2). This can also be equated with the level of the respective Sobol-Index of the shared sensitive design variable, as a large Sobol-Index also means a high influence on the associated design criterion. Assuming that disciplines 1 and 2 have the variables with the highest Sobol-Indices in common, they show high coupling strength.
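The decomposition and the coupling breadth can be derived mechanically once the sensitive variables per discipline are known. The sketch below encodes the Fig. 3 example as a dictionary. The shared variables, the insensitive variable and the isolation of discipline 4 follow the text; the assignment of \({x}_{2}\), \({x}_{3}\) to discipline 1, of \({x}_{7}\), \({x}_{8}\) to discipline 2 and of \({x}_{11}\), \({x}_{12}\) to discipline 4 is an assumption made for illustration, as are all function names.

```python
from itertools import combinations

# Sensitive variables per discipline (partly assumed, see lead-in).
SENSITIVE = {
    1: {"x2", "x3", "x4", "x5"},
    2: {"x4", "x5", "x6", "x7", "x8"},
    3: {"x6", "x9", "x10"},
    4: {"x11", "x12"},
}
ALL_VARIABLES = {f"x{i}" for i in range(1, 13)}


def decompose(sensitive, all_variables):
    """Split the variable names into local (per discipline), global and insensitive sets."""
    counts = {v: sum(v in s for s in sensitive.values()) for v in all_variables}
    x_global = {v for v, c in counts.items() if c > 1}
    x_insensitive = {v for v, c in counts.items() if c == 0}
    x_local = {d: s - x_global for d, s in sensitive.items()}
    return x_local, x_global, x_insensitive


def coupling_breadth(sensitive):
    """Number of shared sensitive variables for every pair of disciplines."""
    return {
        (d1, d2): len(sensitive[d1] & sensitive[d2])
        for d1, d2 in combinations(sorted(sensitive), 2)
    }


x_local, x_global, x_insensitive = decompose(SENSITIVE, ALL_VARIABLES)
print(sorted(x_global), sorted(x_insensitive))
print(coupling_breadth(SENSITIVE))
```

A measure of coupling strength could be added by storing the Sobol-Index of each shared variable instead of only its name.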

With this knowledge, two ways of planning and conducting an MDO can be defined:

(1) Disciplines 1, 2 and 3 perform the newly implemented process together. Furthermore, they use a fully shared variable vector that contains all design variables that are sensitive to at least one of these disciplines:

$${{\varvec{x}}}_{sens.}^{T}=\left({x}_{2}\, {x}_{3}\, {x}_{4}\, {x}_{5}\, {x}_{6}\, {x}_{7}\, {x}_{8}\, {x}_{9}\, {x}_{10}\right). \tag{6}$$

This variant is called single-system (SiS) in this study.

(2) All disciplines perform the newly implemented process separately, except those that have a large coupling breadth and strength. The latter is necessary because the location of the resampling depends on the constraints. Disciplines 1 and 2 therefore perform the process together and discipline 3 separately due to the small coupling. Furthermore, they use a partially shared variable vector which depends on the respective system. Disciplines 1 and 2 use these design variables:

$${{\varvec{x}}}_{sens.}^{T}=\left({x}_{2}\, {x}_{3}\, {x}_{4}\, {x}_{5}\, {x}_{6}\, {x}_{7}\, {x}_{8}\right), \tag{7}$$

while discipline 3 uses these design variables:

$${{\varvec{x}}}_{sens.}^{T}=\left({x}_{6}\, {x}_{9}\, {x}_{10}\right). \tag{8}$$

This variant is called multi-system (MuS) in this study. As there are several systems, the metamodels have to be provided at the end of the process, and a final, interdisciplinarily consistent optimization has to be performed.
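The two variants can be reproduced with a short sketch that groups disciplines into systems and builds the variable vector of each system. The grouping rule used here (merge two disciplines when they share at least `min_shared` variables) is a simplifying assumption that considers coupling breadth only and ignores coupling strength. The split of the unshared variables between disciplines 1 and 2 is also assumed, and the function names are hypothetical.

```python
# Sensitive variables of the three coupled disciplines of the example (partly assumed).
SENSITIVE = {
    1: {"x2", "x3", "x4", "x5"},
    2: {"x4", "x5", "x6", "x7", "x8"},
    3: {"x6", "x9", "x10"},
}


def group_into_systems(sensitive, min_shared=2):
    """Merge disciplines that share at least min_shared variables into one system."""
    systems = [{d} for d in sorted(sensitive)]
    merged = True
    while merged:
        merged = False
        for i in range(len(systems)):
            for j in range(i + 1, len(systems)):
                strongly_coupled = any(
                    len(sensitive[a] & sensitive[b]) >= min_shared
                    for a in systems[i]
                    for b in systems[j]
                )
                if strongly_coupled:
                    other = systems.pop(j)
                    systems[i] |= other
                    merged = True
                    break
            if merged:
                break
    return systems


def variable_vector(system, sensitive):
    """All variables that are sensitive to at least one discipline of the system."""
    names = set().union(*(sensitive[d] for d in system))
    return sorted(names, key=lambda name: int(name[1:]))


# Single-system (SiS): one fully shared vector for all three disciplines.
print(variable_vector({1, 2, 3}, SENSITIVE))

# Multi-system (MuS): one partially shared vector per system.
for system in group_into_systems(SENSITIVE):
    print(sorted(system), variable_vector(system, SENSITIVE))
```

With `min_shared=1`, disciplines 2 and 3 would also be merged through \({x}_{6}\), and the MuS variant would collapse into the SiS variant.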

This study differs from the studies of Craig et al. (2002) and Ryberg et al. (2015), which also use the fully shared and partially shared variables but ignore the coupling of disciplines caused by the shared variables.

While the SiS variant is less resource-efficient and has greater coordinative interdisciplinary dependencies than the MuS variant, the MuS variant has some disadvantages in terms of optimization success: the optimization success depends on the variables being classified correctly and on this classification also holding locally at the optimal point. It also depends on a sufficient quality of the metamodels in the interdisciplinary area of interest.

Note: In this study, the calculation of Sobol-Indices and thus the generation of the global sensitivity matrix is only based on each of the \({n}_{g}+{n}_{h}\) approximated constraints as they describe the structural, mechanical behaviour within each discipline. The variables defined as insensitive to each discipline-specific structural behaviour can then be adjusted at the beginning of the optimization in favor of the geometric objective (weight minimization).

3.2 Efficient optimization algorithms and strategies

3.2.1 Local uncertainty measurement and integration in the optimization problem definition

Since metamodels are only approximations of the real structural responses, one has to take the uncertainty of the predictions into account in order to reliably produce valid results. One way to obtain these uncertainty measurements analytically at each point \({{\varvec{x}}}^{new}\) of the metamodel \(\widetilde{y}\) is to use a Gaussian process, since the expected value and the associated variance \({\widetilde{s}}^{2}\left({{\varvec{x}}}^{new}\right)\) (and hence the standard deviation \(\widetilde{s}\left({{\varvec{x}}}^{new}\right)\)) can be determined a posteriori. The problem with this strategy is that it eliminates the possibility of using a metamodel competition to determine the most useful metamodel type for the problem at hand. To overcome this bottleneck, a local, heuristic prediction uncertainty measure must be used that can be computed for all metamodel types. The implementation of van Stein et al. (2018) is adopted with minimal changes as defined in Eqs. 9 to 13:

This heuristic approach determines the local prediction uncertainty \({\widetilde{U}}_{L}\) based on the existing \({n}_{L}\in {\mathbb{N}}\) nearest neighbors (samples and thus FE simulations) in the immediate vicinity. It uses \({n}_{L}\ll {n}_{S}\), where \({n}_{S}\in {\mathbb{N}}\) is the number of samples of the whole DOE. van Stein et al. (2018) recommend a value of \({n}_{L}=20\) to obtain a stable and smooth confidence function. The function \({\widetilde{U}}_{L}\) (Eq. 9) is composed of an empirically determined prediction error \({\widetilde{P}}_{L}\) and the variability of the local data \({\widetilde{V}}_{L}\). Since both depend on the range of the respective design criterion \(y\), they are divided by the mean value \(\overline{y}\) of all \({n}_{S}\) samples of the corresponding design criterion. The empirical prediction error \({\widetilde{P}}_{L}\) (Eq. 10) includes the difference between the prediction value \(\widetilde{y}\left({{\varvec{x}}}^{new}\right)\) of the new variable configuration and the existing FE calculated value of the \(k\) th nearest-neighbor \(y({{\varvec{x}}}_{k})\). In addition, this difference is weighted with a weighting factor \({w}_{k}\), which is penalized by the \(k\) th power, and finally divided by the sum of all \({n}_{L}\) penalized weighting factors \({w}_{k}^{k}\). The penalty helps to make near neighbors, measured by the Euclidean distance \(d({{\varvec{x}}}_{k},{{\varvec{x}}}^{new})\), matter more than distant ones. The variability of the local data \({\widetilde{V}}_{L}\) (Eq. 11) is calculated mainly based on the standard deviation \(\widetilde{s}\) of the FE values of the \({n}_{L}\) samples and the predicted value \(\widetilde{y}\left({{\varvec{x}}}^{new}\right)\). Subsequently, \({\widetilde{V}}_{L}\) is also scaled in space, where \(d({\varvec{x}},{{\varvec{x}}}^{^{\prime}})\) represents the maximum distance within the entire design space \(X\).

In the best case, \({\widetilde{U}}_{L}\) becomes one when the empirical prediction error and the variability of the local data are zero.
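The verbal description of the local uncertainty measure can be illustrated with a minimal Python sketch. Eqs. 9, 10, 11, 12, 13 are not reproduced in this text, so several details are assumptions made only to stay consistent with the description: the weight \({w}_{k}\) is taken as one minus the neighbor's share of the summed neighbor distances, the predicted value is simply appended to the neighbor values before the standard deviation is taken, the spatial scaling uses the nearest-neighbor distance divided by the diagonal of the sample bounding box, and the confidence is formed as one minus the scaled sum of both parts. The function name `local_confidence` is hypothetical.

```python
import math
from statistics import fmean, pstdev


def local_confidence(x_new, y_pred, X, y, n_l=20):
    """Heuristic confidence of a metamodel prediction y_pred at x_new.

    X: existing DOE samples, y: their FE-calculated values for one design
    criterion. Returns 1.0 when prediction error and local variability are zero.
    """
    if n_l < 2 or len(X) < 2:
        raise ValueError("at least two neighbors are required")

    # Euclidean distance to every sample, keeping the n_l nearest (nearest first).
    ranked = sorted((math.dist(x_new, x), v) for x, v in zip(X, y))[:n_l]
    dists = [d for d, _ in ranked]
    values = [v for _, v in ranked]

    # ASSUMPTION: weight = 1 - share of the summed neighbor distances.
    total = sum(dists)
    weights = [1.0 if total == 0 else 1.0 - d / total for d in dists]
    # Penalty by the k-th power (k = 1 for the nearest neighbor), so that
    # distant neighbors lose influence quickly.
    penalized = [w ** k for k, w in enumerate(weights, start=1)]

    # Empirical prediction error: weighted mean deviation from neighbor values.
    p_l = sum(p * abs(y_pred - v) for p, v in zip(penalized, values)) / sum(penalized)

    # Variability of the local data, scaled by relative distance.
    # ASSUMPTION: maximum distance = diagonal of the sample bounding box.
    lo = [min(c) for c in zip(*X)]
    hi = [max(c) for c in zip(*X)]
    v_l = pstdev(values + [y_pred]) * dists[0] / math.dist(lo, hi)

    # Both parts are scaled by the mean of all samples of the design criterion.
    return 1.0 - (p_l + v_l) / abs(fmean(y))
```

Because the measure only needs the samples and a predicted value, it can be evaluated for any metamodel type, which is the property the text relies on.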

This information can now be used for optimization by searching for solutions in the areas of the metamodels where the confidence is high. Equations 14, 15, 16 show three possibilities in terms of an additional objective function chosen in this study:

The first option (Eq. 14) is to maximize the sum of all \({n}_{y}\) confidences \(\tilde{U}_{Lj}\), while the second option (Eq. 15) is to maximize the minimal confidence of these \({n}_{y}\) confidences. Both possibilities can have a disadvantage: With the first option, the confidence of some predictions can be very poor if most are good. In the second option, the worst confidence is limited since it is maximized. However, the others do not have to be better than this one. For this reason, the third option (Eq. 16) is a hybrid solution of options 1 and 2. The minimum confidence, which can have a maximum value of 1, is multiplied by a factor \(a\) and the number of design criteria \({n}_{y}\). Afterwards the sum of all confidences, which can have a maximum value of \({n}_{y}\), is added. This term is maximized. In this study, the factor \(a\) is chosen to be 2.7 because in this example we have 34 design criteria (\({n}_{y}=34)\) and often a minimum confidence value of about 0.4. With this knowledge in mind, term 1 and 2 of Eq. 16 become almost equal.
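The three confidence objectives and the reasoning behind \(a=2.7\) can be sketched in a few lines of Python. This is an illustration based only on the verbal description; the exact form and sign convention of Eqs. 14, 15, 16 are not reproduced here, and the function names are hypothetical.

```python
def confidence_sum(conf):
    """Option 1: sum of all n_y confidences (to be maximized)."""
    return sum(conf)


def confidence_min(conf):
    """Option 2: the worst of the n_y confidences (to be maximized)."""
    return min(conf)


def confidence_hybrid(conf, a=2.7):
    """Option 3: a * n_y * min(conf) + sum(conf) (to be maximized)."""
    n_y = len(conf)
    return a * n_y * min(conf) + sum(conf)


# Balance check for the factor a, using the numbers quoted in the text.
n_y, a, typical_min = 34, 2.7, 0.4
term_1 = a * n_y * typical_min   # roughly 36.7
term_2_max = n_y                 # the sum of confidences is at most 34
```

With a typical minimum confidence of about 0.4, the first term is roughly 36.7, while the second term is bounded by 34, so neither term dominates the hybrid objective.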

Combining each of these new data-driven objectives individually (Eq. 14, 15, 16) with the standard geometrical/mechanical objective (Eq. 1, here weight minimization) results in a two-dimensional PARETO-front in each case, and thus in a variety of solutions that always represent a compromise between the two objective functions. To save numerical resources, a script automatically selects only two PARETO-optimal solutions per option for the FE verification: first, the one with the highest confidence function value (and thus the highest mass function value) and second, the one with the smallest confidence function value. In order to check the success of the newly implemented objective functions, the standard optimization (without confidence integration), which only leads to one optimal result, is still maintained. Finally, the four different optimization problem formulations that differ in the choice of objective/s (only Eq. 1 and three combinations of Eq. 1 with Eqs. 14, 15, 16) result in seven optimal designs (\({n}_{O,q}\)).
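The automatic selection of two PARETO-optimal solutions per option can be sketched as follows. The script used in the study is not shown in the text, so this is only a plausible minimal version: designs are assumed to be given as hypothetical `(mass, confidence)` pairs, with mass to be minimized and confidence to be maximized.

```python
def pareto_front(designs):
    """Return the non-dominated (mass, confidence) pairs.

    A design is dominated if another one is at least as light and at least
    as confident, and strictly better in one of the two objectives.
    """
    front = []
    for i, (m_i, c_i) in enumerate(designs):
        dominated = any(
            m_j <= m_i and c_j >= c_i and (m_j < m_i or c_j > c_i)
            for j, (m_j, c_j) in enumerate(designs)
            if j != i
        )
        if not dominated:
            front.append((m_i, c_i))
    return front


def select_for_verification(designs):
    """Pick the two ends of the front: highest and lowest confidence."""
    front = pareto_front(designs)
    most_confident = max(front, key=lambda d: d[1])
    least_confident = min(front, key=lambda d: d[1])
    return most_confident, least_confident


# One standard optimum plus two selected designs for each of the three
# confidence objectives gives the seven designs per iteration.
n_o_q = 1 + 3 * 2
```

On a PARETO-front, the design with the highest confidence is also the heaviest one, which matches the remark in the text.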

Consequently, if a multiple-step procedure as explained in the next section is chosen, the number of optimization results increases with the number of iterations \(q\) (\({n}_{O}\) is incremented by \({n}_{O,q}\), i.e., \({n}_{O}={n}_{O}+{n}_{O,q}\)). Note that these \({n}_{O}\) results are only interdisciplinary consistent if the process is performed in a single-system (SiS) way. Otherwise, with a multi-system (MuS) variant, only \({n}_{O,q}\) interdisciplinary consistent optimization results are available after all metamodels are provided for the final optimization.

If only the valid results after verification simulation of these predicted optima are considered, interesting results may be overlooked. Even with the use of local uncertainty measures, complete elimination of the deviation between prediction and FE value is unlikely. With the definition of optimization success thresholds, one can filter out so-called interesting points from all results. These results lead to minor violations so that they have the potential to gain full validity through fine-tuning. The number of violated constraints \({Th}_{ViolQuant}\), their mean degree of violation in percentage \({Th}_{MeanViolQual}\), and the maximum degree of violation \({Th}_{MaxViolQual}\) can serve as thresholds for the optimization success.
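The filtering of interesting points can be sketched as a simple threshold check. The sketch assumes, for illustration only, that all constraints are upper limits \(y\le g\) with nonzero \(g\) and that the degree of violation is measured in percent of the limit. The function names are hypothetical, and the threshold values passed in are placeholders rather than the settings of this study.

```python
def violation_stats(values, limits):
    """Return (number of violated constraints, mean violation %, max violation %).

    ASSUMPTION: every constraint has the form y <= g with g != 0.
    """
    violations = [
        100.0 * (y - g) / abs(g) for y, g in zip(values, limits) if y > g
    ]
    if not violations:
        return 0, 0.0, 0.0
    return len(violations), sum(violations) / len(violations), max(violations)


def is_interesting(values, limits, th_viol_quant, th_mean_viol_qual, th_max_viol_qual):
    """True if an FE-verified design stays within all three success thresholds."""
    n_viol, mean_viol, max_viol = violation_stats(values, limits)
    return (
        n_viol <= th_viol_quant
        and mean_viol <= th_mean_viol_qual
        and max_viol <= th_max_viol_qual
    )
```

A fully valid design passes trivially, so the valid results are always a subset of the interesting points.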

3.2.2 Resampling strategy in multiple-step procedure

In order to exploit the full potential of optimization, it is important not only to react to local inadequacies of the metamodels within the optimization formulation but also to actively improve the metamodels themselves. A common solution for this is a multiple-step procedure in which samples are selectively added on the basis of the knowledge gained during an initial DOE. Typically, the new samples are set in areas of high uncertainty and thus poor local metamodel quality. This improves the quality of the metamodels globally, even though this is not necessary for the optimization, since usually not the entire design space \(X\) leads to valid solutions. A more targeted strategy is therefore to set the samples in an area that forms the largest possible contiguous solution space. This new design space \({X}_{valid}\) is smaller than the original design space \(X\). Thus, fewer samples are needed for sufficient sampling, or better sampling can be achieved with the same number of samples. The solution space algorithm is implemented in the software ClearVu Solution Spaces (CVSS) by divis intelligent solutions GmbH. The basic idea is based on the algorithms of Fender et al. (2017) and Daub et al. (2020). The necessary equations for the implemented algorithm are shown below:

In the context of solution space analysis (Eqs. 17, 18, 19) an optimization problem is solved, where the individuals are spaces instead of single variable configurations. Within each space, \({n}_{ST}\) samples can now be set by means of a Monte Carlo study. The associated design criteria values can be determined based on the metamodels \(\widetilde{{\varvec{y}}}\left({{\varvec{x}}}_{s}\right)\) (with \(s=1,\dots ,{n}_{ST}\)). Using these design criterion values, the fitness value \({F}_{m}\) can be determined for every \(m\) th space. The fitness value calculation depends on the fulfilment of the \({n}_{g}\) constraints defined in the optimization problem. As soon as one of the solutions generated by the \({n}_{ST}\) samples is invalid, the fitness value is calculated as Sum-max-dist-to-valid value \({\widetilde{D}}_{m}\) (Eq. 18). For this measurement \({\widetilde{D}}_{m}\), the \({n}_{V}\) violated constraints (\({n}_{V}\le {n}_{g}, {n}_{V}\in {\mathbb{N}}_{0}\)) per sample \({{\varvec{x}}}_{s}\) are considered, choosing the maximum normalized deviation between predicted design criteria value \({\widetilde{y}}_{n}\left({{\varvec{x}}}_{s}\right)\) and defined constraint limit \({g}_{n}\). This max-dist-to-valid value is calculated and summed up for each of the \({n}_{ST}\) samples. \({\widetilde{D}}_{m}\) thus represents the summed maximum normalized distance to a valid solution space.

If all \({n}_{g}\) constraints of all \({n}_{ST}\) samples are within the defined limits, \({\widetilde{D}}_{m}\) becomes 0. In this case, the \({n}_{x}\)-dimensional volume measured by the new lower and upper bounds \({x}_{i,space}^{l}\) and \({x}_{i,space}^{u}\) defines the new fitness value \({F}_{m}\) (Eq. 19).

The objective of the optimization is to minimize this fitness value, whereby a “fitter” space has qualitatively less constraint violation (\({\widetilde{D}}_{m}\), Eq. 18) or is larger (\(-{V}_{m}\), Eq. 19) than an “unfit” one.
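The fitness evaluation of a single candidate space can be sketched in Python as follows. This is not the CVSS implementation; it only mirrors the verbal description of Eqs. 17, 18, 19. It assumes upper-limit constraints \({\widetilde{y}}_{n}\le {g}_{n}\) with nonzero limits, a deviation normalized by the limit, and uniform Monte Carlo sampling. The names `space_fitness` and `metamodel` are hypothetical.

```python
import random


def space_fitness(lower, upper, metamodel, limits, n_st=200, seed=0):
    """Fitness F_m of the box [lower, upper]; smaller is fitter.

    metamodel(x) returns the predicted design criteria for configuration x.
    """
    rng = random.Random(seed)
    d_m = 0.0
    for _ in range(n_st):
        # Monte Carlo sample inside the candidate space.
        x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        y = metamodel(x)
        # Normalized deviations of the violated constraints only.
        deviations = [
            (y_n - g_n) / abs(g_n) for y_n, g_n in zip(y, limits) if y_n > g_n
        ]
        if deviations:
            d_m += max(deviations)  # max-dist-to-valid, summed over samples

    if d_m > 0.0:
        return d_m  # invalid space: summed distance to validity

    # All samples valid: the negative volume rewards larger spaces.
    volume = 1.0
    for lo, hi in zip(lower, upper):
        volume *= hi - lo
    return -volume
```

Any invalid space therefore has a positive fitness value and any fully valid space a negative one, so a minimizer first removes constraint violations and then enlarges the space.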

3.3 Reduction of the FE calculation times of computationally intensive crash analyses

A potential for reducing the numerical resource requirements is the reduction of the FE calculation time and thus the associated analysis costs, especially for computationally intensive crash load cases. In addition to the resource savings, the quality of the reduced FE model (also known as FE submodels) compared to the full model as well as the modeling effort and thus the automation capability play a major role. The latter does not only guarantee user independence, but also makes extensive MDO with many load cases possible with respect to working time. Furthermore, there is the possibility of integrating and adaptively adjusting the reduced FE models in an automated optimization process in order to ensure quality in large design spaces and thus strongly changing solution spaces.

To check this validity of the submodel, one has to evaluate it not only in the initial design but in \(p>1\) designs as a subset of all DOE samples of the current \(q\) th iteration (\({n}_{S,q}\)) of the multiple-step procedure. Thus, a value between 2 and \({n}_{S,q}\) must be chosen for \(p\). The user-definable value should be closer to 2 than to \({n}_{S,q}\) in order to save numerical resources, since FE full model calculations are necessary for comparison. To obtain designs that are as different as possible, a \(k\)-nearest-neighbor algorithm is used to identify the \(p\) furthest points in the \({n}_{x}\)-dimensional design space. The correlation per design criterion between the values calculated by the FE submodel and FE full model is used as a measure of quality.
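The selection of \(p\) strongly differing designs and the quality measure can be illustrated with the sketch below. The text names a \(k\)-nearest-neighbor algorithm for the selection; the sketch swaps in a simple greedy max-min (farthest-point) selection instead, which serves the same purpose but is not necessarily what the study uses. The Pearson correlation is used as the correlation measure, and all function names are hypothetical.

```python
import math


def pick_diverse_designs(X, p):
    """Greedily choose the indices of p samples that are far apart."""
    if not 2 <= p <= len(X):
        raise ValueError("p must lie between 2 and the number of samples")
    # Start with the sample farthest from the centroid of all samples.
    centroid = [sum(c) / len(X) for c in zip(*X)]
    chosen = [max(range(len(X)), key=lambda i: math.dist(X[i], centroid))]
    while len(chosen) < p:
        # Add the sample whose nearest already-chosen sample is farthest away.
        nxt = max(
            (i for i in range(len(X)) if i not in chosen),
            key=lambda i: min(math.dist(X[i], X[j]) for j in chosen),
        )
        chosen.append(nxt)
    return chosen


def pearson(a, b):
    """Pearson correlation between submodel and full-model values."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)
```

Only the \(p\) chosen designs need an additional FE full model calculation, which is why a small \(p\) keeps the validation cost low.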

The FE submodel generation and validation process only needs to be carried out for a load case if the use of the FE full model (FM) is to be avoided in the optimization process (trigger FM = False in the newly implemented process) or if an existing FE submodel (SM) has to be adapted in the running process (trigger SM_adapt. = True in the newly implemented process).

In this study, load case-specific submodels are generated automatically by a tool (Büttner (2022)). In preparation, it is necessary to choose a section plane that divides the vehicle structure into high and low strain areas. The low strain areas are deleted and represented with an inertia element rigidly connected to the boundary nodes. By keeping the suspension in the deleted area and connecting it to the inertia element, the kinematics during the crash can be better replicated.

Depending on the load case, 10% to 60% of CPUh can be saved in a single FE analysis compared to the FE full model. In combination with the low working time requirement (time for submodel generation) due to the automatic tool and our choice of \(p=10\) (since \({n}_{S,q}=84\) in this paper), which influences the CPUh for submodel validation, the whole optimization is less time-consuming than calculating all required function calls with the FE full model.

It should be noted, however, that any other submodel strategy (see referred literature in Table 1 or for example Guyan (1965), Irons (1965), Craig and Bampton (1968), Rutzmoser (2018)) that meets the criteria described above can also be included in the process.

3.4 Adaptive complexity control

The fact that the new optimization process is a multiple-step approach enables a very important point: a newly implemented process component called adaptive complexity control adapts the whole process as the number of iterations \(q\) and thus the number of FE simulations increases (\({n}_{O}\) is incremented by \({n}_{O,q}\) optimization results per iteration, i.e., \({n}_{O}={n}_{O}+{n}_{O,q}\) and \({n}_{S}\) is incremented by \({n}_{S,q}\) DOE samples per iteration, i.e., \({n}_{S}={n}_{S}+{n}_{S,q}\)). The adaptation ensures a reduction in the dimensionality and complexity of the entire optimization leading to faster and more reliable results.

The adaptive complexity control obtains its information from three sources:

(1) From a metamodel quality analysis, which evaluates the approximation quality of the metamodels.

(2) From the solution space analysis described in Sect. 3.2.

(3) And from the verification simulations of the full models and, if used (i.e., FM = False, definable per discipline), of the submodels of the predicted optimum.

The number of load cases \({n}_{D,q}\) of the \(q\) th iteration can be adjusted with source 1, as no further DOE sampling is required for a discipline if the underlying metamodels are well-fitted. Threshold values are needed for the evaluation of the approximation quality, e.g., with regard to the correlation and the normalized mean absolute error (\({Th}_{Corr}\) and \({Th}_{NormMAE}\)) of the validation folds of the cross-validation procedure.
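The exclusion of well-fitted disciplines from further DOE sampling can be sketched as a threshold check per discipline. The data layout (a dictionary of per-metamodel quality values) and the function names are hypothetical, and a discipline is assumed to count as well-fitted only if every one of its metamodels meets both thresholds.

```python
def discipline_is_well_fitted(metamodel_quality, th_corr, th_norm_mae):
    """True if all metamodels of one discipline meet both quality thresholds.

    metamodel_quality: list of dicts with the cross-validation results
    'corr' (correlation) and 'norm_mae' (normalized mean absolute error).
    """
    return all(
        q["corr"] >= th_corr and q["norm_mae"] <= th_norm_mae
        for q in metamodel_quality
    )


def disciplines_to_sample(quality_by_discipline, th_corr, th_norm_mae):
    """Disciplines that still need new DOE samples in the next iteration."""
    return [
        name
        for name, quality in quality_by_discipline.items()
        if not discipline_is_well_fitted(quality, th_corr, th_norm_mae)
    ]
```

The length of the returned list corresponds to \({n}_{D,q}\) for the next iteration.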

Source 2 is used to adjust the design space of the \(q\) th iteration using the updated bounds \({{\varvec{x}}}_{q}^{l}\) and \({{\varvec{x}}}_{q}^{u}\) within which all designs based on the metamodels are valid.

It should be noted that the exclusion of the disciplines and the adjustment of the design space only applies to the setting of new DOE samples. For the optimization in each \(q\) th iteration, all existing metamodels of the disciplines of the first iteration (\({n}_{D,1}\)) initialized by the global sensitivity matrix as well as the initial design space (\({{\varvec{x}}}_{1}^{l}\) and \({{\varvec{x}}}_{1}^{u}\)) should be used. The latter is necessary because the optimum does not always have to lie in the largest possible contiguous solution space. This procedure guarantees that in case of doubt, two interesting areas are refined by additional FE simulations (via new DOE samples in the solution space and the seven optimization results) in each iteration. If any of the stopping criteria in the multiple-step-procedure is reached, a final iteration is performed in which all \({n}_{D,1}\) disciplines are used again for DOE sampling to refine the local regions of the optimal solutions rather than the solution spaces.

The last possible adaptation is the size of the discipline-specific FE submodel. For this purpose, information from both the metamodel quality analysis (source 1) and the verification simulations (source 3) can be used. If the latter leads to large deviations between full and submodels, the submodels must be adjusted at the expense of resource savings. In contrast, the increasingly precise sampling per iteration reduces the variety of solutions. This enables the precise definition of suitable FE submodel sizes and techniques and therefore positively influences the computation time. An adjustment of the submodel in favor of the computation time can also take place if design criteria that force a certain submodel size are identified as sufficiently approximated by the metamodel quality analysis (source 1). Even if the entire discipline cannot yet be excluded due to further insufficiently approximated design criteria, at least the FE submodel can be reduced in size. To adjust the submodel in the next iteration, the trigger SM_adapt. = True is set, otherwise SM_adapt. = False. This decision can be made per discipline.

This study therefore differs from the studies of Sheldon et al. (2011), Craig et al. (2002), Ryberg et al. (2015) and Schumacher et al. (2019), which propose or use only one of the above adjustments.

4 Novel, adaptively controllable MDO process

The final process, which unites the concept for a targeted integration of all disciplines as well as all the above-mentioned potentials for reducing numerical resource requirements and for increasing the quality of results, is shown in Fig. 4. The nomenclature used in this figure was introduced and explained in Sect. 3.

Novel, adaptively controllable MDO process (Büttner 2022)

As part of the process-setup (initialization step), all necessary process parameters described so far must be defined. Table 2 shows the values of these parameters chosen for the application example of this study.

Since the process is iterative, stopping criteria have to be implemented and are shown in Table 3. For the first stopping criterion, it is assumed that a total of about \({n}_{x,sens.}^{2}\) DOE samples (\({n}_{S,max}\)) are to be computed. The maximum number of iterations \({q}_{max}\) is then calculated based on the chosen number of DOE samples per iteration (Table 2) and is rounded up to the next higher integer. The second stopping criterion is related to the accuracy of the metamodels of the various disciplines. It should be noted that optimizations are performed in each iteration, even though not all metamodels meet the quality thresholds while the process is still running. There are three reasons for this: first, we want to scan the “potentially” optimal area as early as possible to store this knowledge in the metamodels in the subsequent iterations. Second, the correlation and normalized mean absolute error measurements indicate the quality only globally. This does not mean that the same quality also applies locally in the region of interest. With our implementation of the confidence functions in the optimization problem at hand, we increase the probability that we are in an area with better prediction quality than the global measurements suggest. Third, disciplines usually evaluate many structural responses and thus need to create many metamodels. Not all of them may be active constraints at the optimum, so a poorer prediction quality does not necessarily affect the validity of the FE verified result.

The last stopping criterion refers to the contraction of the PARETO-front, i.e., the situation that the two PARETO-optimal solutions become almost identical.

If any of the defined stopping criteria is fulfilled, the trigger END = False switches to END = True, a last iteration is performed and then the process terminates.
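The three stopping criteria can be summarized in a short sketch. The exact formulas of Table 3 are not reproduced in this text, so the rounding rule follows the verbal description only, and the measure and tolerance for the contraction of the PARETO-front (`pareto_gap`, `gap_tol`) are hypothetical placeholders.

```python
import math


def max_iterations(n_x_sens, n_s_q):
    """Criterion 01: about n_x_sens**2 DOE samples in total, rounded up."""
    return math.ceil(n_x_sens ** 2 / n_s_q)


def end_triggered(q, q_max, n_disciplines_left, pareto_gap, gap_tol):
    """True if any stopping criterion is met (END switches to True).

    Criterion 01: maximum number of iterations reached.
    Criterion 02: no discipline requires further DOE sampling.
    Criterion 03: the two selected PARETO-optimal solutions nearly coincide.
    """
    return q >= q_max or n_disciplines_left == 0 or pareto_gap <= gap_tol
```

As an illustration, 28 sensitive variables and 84 DOE samples per iteration give `max_iterations(28, 84) == 10`.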

5 MDO example

In order to validate the success and resource savings of the newly implemented MDO process compared to the standard process, a representative MDO full vehicle example with five crash load cases (simulated in LS-DYNA® by LSTC) and one frequency analysis (simulated in PERMAS® by INTES GmbH) is implemented. The full vehicle example is modified and simplified compared to a real-world application but is nevertheless a large FE model with, depending on the discipline, up to about 950,000 FE nodes.

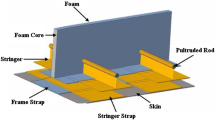

The design variables for this example are 38 sheet thicknesses of the components in the main load paths of the body in white (Fig. 5). The variable bounds depend on the different manufacturing processes (deep-drawn sheets: 0.8–2.5 mm thickness; hot-formed tubes: 1.5–5 mm thickness). The objective function is the minimization of the vehicle weight while maintaining a specific structural behavior for all disciplines. In this study, a representative set of constraints is therefore defined, which is derived from real-world application and modified for these purposes. It does not claim to be exhaustive but shows typical interdisciplinary conflicts. The target of this research is not the functional design of a vehicle but the comparison of different MDO strategies. An overview of the optimization problem is shown in Fig. 6. The limits of the constraints are chosen so that the initial design is valid. For a more detailed view, see Büttner (2022).

Overview of the design variables [modified from Büttner (2022)]

For a better understanding, Table 4 shows the global sensitivity matrix for this MDO example by presenting the maximum Sobol-Index per variable across all discipline-specific approximated constraints. Values that do not meet the Sobol-Index-threshold are hidden. The green color intensity used in Table 4 is proportional to the Sobol-Index value to highlight the importance of variables. We can identify that 10 of the 38 variables are globally (for all disciplines) insensitive and that the load cases front FN and front ODB are strongly and broadly coupled by their sensitive variables.

With this knowledge, the setups of the different MDO process variants can be made and are shown in Table 5. Analogous to the newly implemented MDO process, the standard MDO process is carried out with a total of about \({n}_{x}^{2}\) samples (Table 3, criterion 01) but, as explained, in one step via a DOE. With 38 variables, this results in about 1500 design variable configurations (\({n}_{S,max}\approx {n}_{x}^{2}\)) and thus FE simulations per load case. Afterwards, one optimization run (\({n}_{O,max}\)) is done and validated in all six load cases. Since the global sensitivity matrix initializes the newly implemented process, the number of design variables and thus the maximum numerical resource requirement can be significantly reduced. Note: For calculating \({n}_{S,max}\), \({q}_{max}\) and \({n}_{x}\) are substituted into the upper formula (left-hand side) of criterion 01 (Table 3). For the calculation of \({n}_{O,max}\) the equation \({n}_{O,q}\cdot{q}_{max}\) is used. Additionally, adding \({n}_{O,q}\) is required for the MuS variant in order to achieve interdisciplinary consistent results after the process terminates. The globally insensitive variables are excluded at the beginning of the process, either by leaving them at the values of the initial design (no postfix in Table 6) or by setting them to the lower restriction bounds \({{\varvec{x}}}^{l}\) in favor of the weight minimization (postfix “_min” in Table 6).

Note that the global sensitivity matrix in this example is based on samples from the standard MDO process to ensure correct planning of the subsequent MDO. However, it is not included in the calculation of the maximum numerical resource requirement in Table 5. There are four reasons for this: First, subsequent research has shown that this large number of samples is not strictly necessary (Büttner 2022). Figure 7 confirms this by showing the maximum Sobol-Index per variable across all approximated constraints depending on different underlying sample sizes. The largest sample size, which is the one used here, defines the “ground truth”. Second, the global sensitivity matrix only has to be generated once for each vehicle series/derivative. It can then be used for many optimizations and for other questions in the vehicle development process that go beyond optimization (Büttner et al. 2021). Third, the newly implemented process (Fig. 4) using the single-system (SiS) approach theoretically also works without a global sensitivity matrix. Only the optimization efficiency (number of variables and thus required FE simulations) would suffer, as insensitive design variables would remain undetected and could not be excluded, analogous to Eq. 6. Fourth and last, it has to be investigated to what extent existing, partly non-parameterized simulations and even the engineer’s know-how can be used to create a global sensitivity matrix to save numerical resources.

Maximum Sobol-Indices per design variable across all approximated discipline-specific constraints depending on different underlying sample sizes (Büttner 2022)

Based on these settings, the various MDO processes are executed. The best results, referred to as interesting points (if existing) since they meet the optimization success thresholds, are presented in Table 6. These results are FE verified, since they are all valid on the metamodels. In addition to the violation quantity and quality information explained above, the value of the weight (objective function) is shown. Since the initial design is completely valid, the weight reduction is an indicator for the success of the optimization strategy. The right side of the table shows the number of simulations in total and per discipline, and therefore, in contrast to Table 5, the needed instead of maximum resource requirement. The reduction in CPUh (computation time) is measured in comparison to the computationally intensive standard MDO process. If not marked with the postfix “_SUB”, FE full vehicle simulations are used to assess the success purely from the newly implemented optimization strategy.

The one-step DOE of the standard MDO process leads to 9000 FEM simulations without finding a better design than the valid initial design. Only 17 interdisciplinary valid designs are found and the lightest one weighs 15.61 kg more than the initial design. This is not unusual for such complex interdisciplinary issues, which is why one tries to find better design variable configurations through metamodels and subsequent optimizations. The optimization leads to a FE verified result which is highly invalid even though the metamodels are globally well-fitted (Büttner 2022). In the verification simulations, twelve constraints are violated by an average of 14.8%. The maximum violation of one constraint is 61.28%. Since the optimization does not meet the optimization success thresholds, the calculation of the interesting points is also applied to the DOE. This ensures that we identify a more comparable result with the results of the new MDO process. In addition to the 17 interdisciplinary valid designs, 104 more interesting points can be found. The lightest one weighs 8.76 kg more than the initial design.

In comparison, the results of the novel, adaptively controllable MDO process are promising. Conducting the MDO process in a SiS way leads to a FE verified result that saves 13.45 kg and only violates four constraints by an average of 0.64% and a maximum violation of 1.51%. In addition, this result is generated with considerably fewer simulations than the standard MDO process. The process ends with the maximum number of iterations (Table 3, criterion 01) but not every load case needs this. The load cases rear ODB, roof crush and frequency require fewer simulations (i.e., less than 854 simulations per load case, Table 5) for sufficient metamodel quality and are therefore excluded during the running process by the adaptive complexity control. This variant thus saves 48.81% of CPUh compared to the standard process.

By setting the insensitive variables to the lower bounds at the beginning of the process, as the variant SiS_min does, a better result can be found. This variant saves 17.75 kg without significant violations of the constraints. Unlike variant SiS, the process in variant SiS_min ends with the contraction of the PARETO-fronts (Table 3, criterion 03). Therefore, none of the load cases requires the maximum number of simulations (i.e., less than 854 simulations per load case, Table 5) although again the load cases rear ODB, roof crush and frequency can be excluded even earlier. This variant thus saves 64.55% of CPUh compared to the standard process.

As described in Sect. 3.1, the MuS_min variant is more resource-efficient but leads to less successful results than its counterpart SiS_min: only 12.21 kg can be saved. A subsequent investigation shows that this interdisciplinary optimal variable configuration does not fully correspond to the discipline-specific areas of interest in the individual processes. This consequently leads to insufficient sampling in the interdisciplinary area of interest (Büttner 2022). In addition, although the classification of the variables at the beginning of the process may be correct due to the global sensitivity matrix generated on the largest number of samples, this correct classification need not hold locally. A local numerical sensitivity analysis with all variables using the forward difference method shows that the global classification using a global sensitivity analysis (Sobol-method) does not match the local sensitivities at the optimal point (Büttner 2022). However, only 920 simulations are necessary for this result, which results in 87.68% less CPUh than the standard MDO process. From this point of view and compared to the result of the standard process, the achieved result is extremely good. In this MuS_min variant, all separate processes end when the maximum number of iterations is reached (Table 3, criterion 01), except the process of system frequency. This process ends by excluding all disciplines (Table 3, criterion 02), as all underlying metamodels are sufficiently approximated in an earlier iteration.

The final investigation SiS_min_SUB again uses the SiS_min variant—this time however with the help of FE submodels. Therefore, five discipline-specific FE submodels, whose sizes are mainly limited by the evaluability of design criteria, are created for the nonlinear crash load cases in the first iteration. For the rear ODB load case, a second FE submodel is created in the running process thanks to the adaptive complexity control, since the design criterion that forced the first submodel size is identified as sufficiently approximated and thus no further extraction out of simulations is required. The process ends when the maximum number of iterations is reached (Table 3, criterion 01), although some load cases can be excluded earlier. In comparison to its counterpart SiS_min, which uses full vehicle models, CPUh can be saved more significantly, if, of course, the same stopping criterion applies. Note that in this example SiS_min ends with a different stopping criterion than SiS_min_SUB. Therefore, the CPUh savings within the newly implemented process should be compared to variant SiS (same stopping criterion). Compared to the standard MDO process, 58.75% less CPUh are required, which slightly reduces to 53.27% by including all necessary full vehicle verification simulations. This resource requirement can only be reduced by even more resource-efficient submodels, a smaller value for \(p\) and the avoidance of verifying all optimization results in the FE full model as well. The presented optimization result is full vehicle verified. It saves less weight than its counterpart SiS_min, which is no surprise since the whole optimization success depends on the FE submodel quality at each point in the design space. 
This FE submodel validity in the design space and thus the optimization success can only be positively influenced by even more reliable submodels (e.g., through less resource-efficient models or other submodeling techniques) and a higher value for \(p\) for a more reliable submodel validation.

The discussion of the results so far assumes that the process is carried out in the respective variant until a stopping criterion is reached. The fact that the newly implemented process is a multiple-step approach offers an important advantage in combination with a single-system variant: since interdisciplinary consistent optimizations are carried out in each iteration, it is also possible to manually stop the process when an acceptable optimization success is reached to save numerical resources. Figure 8 therefore shows the best FE (full model) verified optimization results referred to as interesting points gained in the individual iterations and SiS variants. Especially in the first iterations the optimization result oscillates. This is not uncommon with a metamodel-based approach, since an increasing number of DOE samples might catch new structural effects. Areas of interest that were found at the beginning by chance can be approximated more poorly as the number of samples increases. The changing metamodels thus lead to optimization results in different areas of the design space. However, as the number of iterations increases, the results tend to be in the same area of the design space.

The overall best (lightest) result per variant discussed in Table 6 so far is highlighted as a square. Each variant already finds a better design variable configuration than the initial one in the first iteration. Variant SiS_min_SUB even finds the best result of its entire process loop in this iteration. Manually stopping the process of this variant at this point would save as much as 93.87% of CPUh (including all FE full vehicle verifications) compared to the standard MDO process.

A final conclusion can be drawn: the confidence integration in the optimization problem definition (Sect. 3.2) has a significant impact on the optimization quality. All results referred to as interesting points are obtained by integrating Eqs. 14, 15, 16 into the optimization problem. However, it is not possible to identify a clear winner among these three additional objective functions. The standard optimization problem definition (without confidence integration) is not able to find any interesting points during the processes.

6 Conclusion and outlook

The approach presented in this study enables the operational use of MDO in the vehicle development process, as it ensures targeted planning and thus integration of all R&D-departments relevant to a specific problem as well as an efficient use of CPU resources. Some highlights compared to the standard MDO process are the global sensitivity matrix, the metamodel-based local uncertainty measurement and its integration in the optimization problem definition and finally the adaptive adjustments during the running process. The advantages of the new MDO process over the standard approach were demonstrated using a full vehicle example. The new process led to a significant reduction in CPU resources and a significant improvement in the quality of the results compared to the standard MDO process. The latter is not even close to providing a valid result.

In the future, the new process should be tested on other industrial examples that also include disciplines other than crash and frequency analysis. If necessary, it needs to be improved or expanded on the basis of these new findings. In addition, certain assumptions, such as the setting of the process parameters (e.g., the thresholds for the Sobol index and the approximation quality), were made within the scope of this work. They are certainly not applicable in general. It therefore makes sense to collect and preserve experience for each type of problem. The same applies to the choice of whether to pursue a single-system or multi-system approach.
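To make the role of a Sobol-index threshold concrete, the following minimal sketch estimates first-order Sobol indices with a standard Monte Carlo pick-freeze estimator and keeps only the variables whose index reaches a threshold. It is an illustration under stated assumptions, not the procedure used in this work: the analytic `toy_model` stands in for a metamodel of an FE response, all inputs are assumed to be independent and uniform on [0, 1], and the function names and the threshold value are hypothetical.

```python
import random
from statistics import fmean
from typing import Callable, List, Sequence


def first_order_sobol(
    model: Callable[[Sequence[float]], float],
    dim: int,
    n_samples: int,
    seed: int = 0,
) -> List[float]:
    """Estimate first-order Sobol indices for inputs uniform on [0, 1]."""
    rng = random.Random(seed)
    # Two independent sample matrices A and B.
    a = [[rng.random() for _ in range(dim)] for _ in range(n_samples)]
    b = [[rng.random() for _ in range(dim)] for _ in range(n_samples)]
    y_a = [model(x) for x in a]
    y_b = [model(x) for x in b]

    y_all = y_a + y_b
    mean = fmean(y_all)
    variance = fmean([(y - mean) ** 2 for y in y_all])

    indices = []
    for i in range(dim):
        # Evaluate A with column i replaced by column i of B.
        y_ab = [
            model(row_a[:i] + [row_b[i]] + row_a[i + 1:])
            for row_a, row_b in zip(a, b)
        ]
        # Partial variance of variable i; centering y_b reduces the noise.
        v_i = fmean([
            (yb - mean) * (yab - ya)
            for yb, yab, ya in zip(y_b, y_ab, y_a)
        ])
        indices.append(v_i / variance)
    return indices


def screen_variables(indices: Sequence[float], threshold: float) -> List[int]:
    """Return the positions of variables whose index reaches the threshold."""
    return [i for i, s in enumerate(indices) if s >= threshold]


def toy_model(x: Sequence[float]) -> float:
    """Hypothetical response: x[2] has no influence at all."""
    return x[0] + 2.0 * x[1]
```

For `toy_model`, the analytic first-order indices are 0.2, 0.8 and 0, so a threshold of, say, 0.1 would keep the first two variables and drop the third. How strict such a threshold should be depends on the problem at hand, which is exactly why the parameter settings are not generally transferable.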

Finally, this standardized approach should be firmly anchored in the product development process.

References

Agte J, de Weck O, Sobieszczanski-Sobieski J, Arendsen P, Morris A, Spieck M (2010) MDO: assessment and direction for advancement—an opinion of one international group. Struct Multidisc Optim 40:17–33. https://doi.org/10.1007/s00158-009-0381-5

Bäck T, Foussette C, Krause P (2013) Contemporary evolution strategies. Series: Natural Computing Series. Springer, Berlin. ISBN 978-3-642-40136-7

Bäck T, Krause P, Foussette C (2015) Automatic metamodelling of CAE simulation models. ATZ Worldwide 117(5):36–41. https://doi.org/10.1007/s38311-015-0015-z

Bäckryd RD, Ryberg A-B, Nilsson L (2017) Multidisciplinary design optimisation methods for automotive structures. Int J Automotive Mech Eng 14(1):4050–4067. https://doi.org/10.15282/ijame.14.1.2017.17.0327

Bitzenbauer J, Franz U, Schulz A, Mlekusch B (2005a) Coupling of Deformable Rigid Bodies with Finite Elements to Simulate FMVSS Head Impact. In: Proceedings 4. LS-DYNA Anwenderforum, Bamberg

Bitzenbauer J, Franz U, Schweizerhof K (2005b) Deformable Rigid Bodies in LS-DYNA with Applications - Merits and Limits. In: Proceedings 5th European LS-DYNA User Conference, Birmingham

Büttner J, Schwarz S, Schumacher A (2020) Reduction of the numerical resource requirements for multidisciplinary optimization. ATZelectronics Worldwide 15(12):52–56. https://doi.org/10.1007/s38314-020-0284-1

Büttner J, Schwarz S, Schumacher A, Bäck T (2021) Global sensitivity matrix for vehicle development. ATZ Worldwide 123(3):26–31. https://doi.org/10.1007/s38311-020-0630-1

Büttner J (2022) Effiziente Lösungsansätze zur Reduktion des numerischen Ressourcenbedarfs für den operativen Einsatz der Multidisziplinären Optimierung von Fahrzeugstrukturen. Dissertation, Bergische Universität Wuppertal. Shaker-Verlag. ISBN 978-3-8440-8560-0

Craig RR Jr, Bampton MC (1968) Coupling of substructures for dynamic analyses. AIAA J 6(7):1313–1319. https://doi.org/10.2514/3.4741

Craig K, Stander N, Dooge D, Varadappa S (2002) MDO of automotive vehicle for crashworthiness and NVH using response surface methods. AIAA 2002-5607. 9th AIAA/ISSMO Symposium on Multidisciplinary Analysis and Optimization. Atlanta, Georgia, USA. https://doi.org/10.2514/6.2002-5607

Cramer EJ, Dennis JE Jr, Frank PD, Lewis RM, Shubin GR (1994) Problem formulation for multidisciplinary optimization. SIAM J Optim 4:754–776. https://doi.org/10.1137/0804044

Daub M, Duddeck F, Zimmermann M (2020) Optimizing component solution spaces for systems design. Struct Multidisc Optim 61(5):2097–2109. https://doi.org/10.1007/s00158-019-02456-8

Duddeck F (2008) Multidisciplinary optimization of car bodies. Struct Multidisc Optim 35(4):375–389. https://doi.org/10.1007/s00158-007-0130-6

Falconi DCJ, Walser AF, Singh H, Schumacher A (2017) Automatic generation, validation and correlation of the submodels for the use in the optimization of crashworthy structures. In: Advances in Structural and Multidisciplinary Optimization: Proceedings of the 12th World Congress of Structural and Multidisciplinary Optimization (WCSMO12), edited by A. Schumacher, Th. Vietor, S. Fiebig, K.-U. Bletzinger, K. Maute. pp. 1558–1571. https://doi.org/10.1007/978-3-319-67988-4_117

Fehr J, Holzwarth P, Eberhard P (2016) Interface and model reduction for efficient explicit simulations—a case study with nonlinear vehicle crash models. Math Comput Model Dyn Syst 22(4):380–396. https://doi.org/10.1080/13873954.2016.1198385

Fender J, Duddeck F, Zimmermann M (2017) Direct computation of solutions spaces. Struct Multidisc Optim 55(5):1787–1796. https://doi.org/10.1007/s00158-016-1615-y

Guyan RJ (1965) Reduction of stiffness and mass matrices. AIAA J 3(2):380. https://doi.org/10.2514/3.2874

Irons B (1965) Structural eigenvalue problems—elimination of unwanted variables. AIAA J 3(5):961–962. https://doi.org/10.2514/3.3027

Kang N, Kokkolaras M, Papalambros PY, Yoo S, Na W, Park J, Featherman D (2014) Optimal design of commercial vehicle systems using analytical target cascading. Struct Multidisc Optim 50:1103–1114. https://doi.org/10.1007/s00158-014-1097-8

Kim HM (2001) Target cascading in optimal system design. Dissertation, University of Michigan

Kim HM, Rideout DG, Papalambros PY, Stein JL (2003) Analytical target cascading in automotive vehicle design. J Mech Des 125:481–489. https://doi.org/10.1115/1.1586308

Kroo I, Altus S, Braun R, Gage P, Sobieski I (1994) Multidisciplinary optimization methods for aircraft preliminary design. In: 5th AIAA/USAF/NASA/ISSMO Symposium on Multidisciplinary Analysis and Optimization. AIAA Paper 94–4325:697–707. https://doi.org/10.2514/6.1994-4325

Martins JRRA, Lambe AB (2013) Multidisciplinary design optimization: a survey of architectures. AIAA J 51:2049–2075. https://doi.org/10.2514/1.J051895

Paas MHJW, van Dijk HC (2017) Multidisciplinary Design optimization of body exterior structures. In: Advances in Structural and Multidisciplinary Optimization: Proceedings of the 12th World Congress of Structural and Multidisciplinary Optimization (WCSMO12), edited by A. Schumacher, Th. Vietor, S. Fiebig, K.-U. Bletzinger, K. Maute; pp. 17–30. https://doi.org/10.1007/978-3-319-67988-4_2

Renaud J, Gabriele G (1991) Sequential global approximation in non-hierarchic system decomposition and optimization. In: Proceedings of the ASME 1991 Design Technical Conferences. 17th Design Automation Conference: Volume 1—Design Automation and Design Optimization. Miami, Florida, USA. September 22–25. pp. 191–200. https://doi.org/10.1115/DETC1991-0086

Roth BD (2008) Aircraft family design using enhanced collaborative optimization. Dissertation, Stanford University

Rutzmoser JB (2018) Model order reduction for nonlinear structural dynamics. Dissertation, Technical University of Munich

Ryberg A-B, Bäckryd RD, Nilsson L (2012) Metamodel-based multidisciplinary design optimization for automotive applications. Technical Report LIU-IEI-R-12/003, Linköping University

Ryberg A-B, Bäckryd RD, Nilsson L (2015) A metamodel-based multidisciplinary design optimization process for automotive structures. Eng Comput 31(4):711–728. https://doi.org/10.1007/s00366-014-0381-y

Schäfer M, Sturm R, Friedrich HE (2017) Methodological approach for reducing computational costs of vehicle frontal crashworthiness analysis by using simplified structural modelling. Int J Crashworthiness 24(1):39–53. https://doi.org/10.1080/13588265.2017.1389631

Schäfer M, Sturm R, Friedrich HE (2018) Automated generation of physical surrogate vehicle models for crash optimization. Int J Mech Mater Des 15(1):43–60. https://doi.org/10.1007/s10999-018-9407-8

Schumacher A, Singh H, Wielens S (2019) Submodel-based multi-level optimization of crash structures using statistically generated universal correlations of the different levels. In: Proceeding of the World Congress of Structural and Multidisciplinary Optimization (WCSMO13). Beijing, China

Sheldon A, Helwig E, Cho Y-B (2011) Investigation and application of multi-disciplinary optimization for automotive body-in-white development. In: Proceedings of the 8th European LS-DYNA Users Conference. Strasbourg, France

Sobieszczanski-Sobieski J (1989) Optimization by decomposition: a step from hierarchic to non-hierarchic systems. In: Second NASA/Air Force Symposium on Recent Advances in Multidisciplinary Analysis and Optimization, Hampton, VA, September 28–30, pp. 51–78

Sobieszczanski-Sobieski J (1992) Multidisciplinary design and optimization. In: Integrated design analysis and optimization of aircraft structures, AGARD Lecture Series 186, UK

Sobieszczanski-Sobieski J, Haftka RT (1987) Interdisciplinary and multilevel optimum design. Computer aided optimal design: structural and mechanical systems. Springer, Berlin, pp 655–701

Sobieszczanski-Sobieski J, Agte JS, Sandusky RR Jr (1998) Bi-level integrated system synthesis (BLISS). In: 7th AIAA/USAF/NASA/ISSMO Symposium on Multidisciplinary Analysis and Optimization. pp. 1543–1557. https://doi.org/10.2514/6.1998-4916

Sobieszczanski-Sobieski J, Altus TD, Phillips M, Sandusky R (2003) Bi-Level Integrated System Synthesis (BLISS) for concurrent and distributed processing. AIAA J 41(10):1996–2003. https://doi.org/10.2514/6.2002-5409

Sobol IM (1993) Sensitivity estimates for non-linear mathematical models. Math Modeling Comput Experiment 4:407–414

Sobol IM (2001) Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math Comput Simul 55:271–280. https://doi.org/10.1016/S0378-4754(00)00270-6

van Stein B, Wang H, Kowalczyk W, Bäck T (2018) A novel uncertainty quantification method for efficient global optimization. In: Information Processing and Management of Uncertainty in Knowledge-Based Systems. Applications. Springer International Publishing. ISBN: 978-3-319-91478-7. https://doi.org/10.1007/978-3-319-91479-4_40

Tosserams S, Etman L, Papalambros P, Rooda J (2006) An augmented Lagrangian relaxation for analytical target cascading using the alternating direction method of multipliers. Struct Multidisc Optim 31:176–189. https://doi.org/10.1007/s00158-005-0579-0

Wang W, Gao F, Cheng Y, Lin C (2017) Multidisciplinary design optimization for front structure of an electric car body-in-white based on improved collaborative optimization method. Int J Automot Technol 18(6):1007–1015. https://doi.org/10.1007/s12239-017-0098-1

Wujek BA, Renaud JE, Batill SM, Brockman JB (1995) Concurrent subspace optimization using design variable sharing in a distributed computing environment. In: Proceedings of the 1995 Design Engineering Technical Conferences, Advances in Design Automation 82:181–188. https://doi.org/10.1115/DETC1995-0024

Xue Z, Elango A, Fang J (2016) Multidisciplinary design optimization of vehicle weight reduction. SAE Int J Mater Manuf 9(2):393–399. https://doi.org/10.4271/2016-01-0301

Acknowledgements

Special thanks to Lukas Rafalski (Dr. Ing. h.c. F. Porsche AG), Dr. Christopher Ortmann and Florian Koch (Volkswagen AG) for their close collaboration in the development of the automated FE submodel generation and validation tool.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Competing interests

The authors declare that they have no conflict of interest.

Replication of results

The datasets generated during and/or analyzed during the current study are not publicly available due to confidentiality restrictions but are available from the corresponding author on reasonable request.

Additional information

Responsible editor YoonYoung Kim.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Büttner, J., Schumacher, A., Bäck, T. et al. Making multidisciplinary optimization fit for practical usage in car body development. Struct Multidisc Optim 66, 62 (2023). https://doi.org/10.1007/s00158-023-03505-z

DOI: https://doi.org/10.1007/s00158-023-03505-z