Abstract

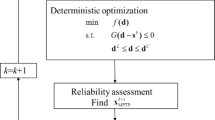

In recent years, several approaches have been proposed for solving reliability-based design optimization (RBDO) problems, where the probability of failure is a design constraint. The same cannot be said about risk optimization (RO), where probabilities of failure are part of the objective function. In this work, we propose a performance measure approach (PMA) for RO problems. We first demonstrate that RO problems can be solved as a sequence of RBDO sub-problems. The main idea is to take the target reliability indexes (i.e., the target probabilities of failure) as design variables. This allows existing RBDO algorithms to be used to solve RO problems, and it extends the RBDO literature to the context of RO. Here, we solve the resulting RBDO sub-problems using the PMA. Sensitivity expressions required by the proposed approach are also presented. The proposed approach is compared to an algorithm that employs the first-order reliability method (FORM) to evaluate the probabilities of failure. The numerical examples show that the proposed approach is efficient and more stable than direct employment of FORM. This behavior has also been observed in the context of RBDO, and was the main reason for the development of the PMA. Consequently, the proposed approach can be seen as an extension of PMA approaches to RO, which results in more stable optimization algorithms.

Change history

17 May 2019

The original article unfortunately contained an error in one of the equations and in the results of the last example. These corrections do not affect the conclusions of the paper.

Notes

In this work, the term “surrogate model” is employed if the relation between the probability of failure (alternatively the objective function/constraint of the optimization problem) and the design variables is approximated (i.e., the approximation occurs at the upper-level optimization problem). The term “stochastic expansion” is employed when the relation between the probability of failure (or statistical moments) and the random variables is approximated (i.e., the approximation occurs at the lower-level reliability analysis problem). Note that the classification is not related to the approximation technique itself. Kriging and artificial neural networks, for example, can be employed for both purposes.

References

Aoues Y, Chateauneuf A (2010) Benchmark study of numerical methods for reliability-based design optimization. Struct Multidiscip Optim 41(2):277–294. https://doi.org/10.1007/s00158-009-0412-2

Atkinson K (1989) An introduction to numerical analysis, 2nd edn. Wiley, New York

Au SK, Beck JL (2001) Estimation of small failure probabilities in high dimensions by subset simulation. Probab Eng Mech 16(4):263–277. https://doi.org/10.1016/S0266-8920(01)00019-4

Beck AT, Gomes WJS (2012) A comparison of deterministic, reliability-based and risk-based structural optimization under uncertainty. Probab Eng Mech 28:18–29. https://doi.org/10.1016/j.probengmech.2011.08.007

Beck AT, Gomes WJS, Lopez RH, Miguel LFF (2015) A comparison between robust and risk-based optimization under uncertainty. Struct Multidiscip Optim 52(3):479–492. https://doi.org/10.1007/s00158-015-1253-9

Blatman G, Sudret B (2010) An adaptive algorithm to build up sparse polynomial chaos expansions for stochastic finite element analysis. Probab Eng Mech 25(2):183–197. https://doi.org/10.1016/j.probengmech.2009.10.003

Bojórquez J, Ruiz S E, Ellingwood B, Reyes-Salazar A, Bojórquez E (2017) Reliability-based optimal load factors for seismic design of buildings. Eng Struct 151:527–539. https://doi.org/10.1016/j.engstruct.2017.08.046

Buzzard GT (2012) Global sensitivity analysis using sparse grid interpolation and polynomial chaos. Reliab Eng Syst Saf 107:82–89. https://doi.org/10.1016/j.ress.2011.07.011

Chen Z, Qiu H, Gao L, Li P (2013a) An optimal shifting vector approach for efficient probabilistic design. Struct Multidiscip Optim 47:905–920. https://doi.org/10.1007/s00158-012-0873-6

Chen Z, Qiu H, Gao L, Su L, Li P (2013b) An adaptive decoupling approach for reliability-based design optimization. Comput Struct 117:58–66. https://doi.org/10.1016/j.compstruc.2012.12.001

Chen Z, Qiu H, Gao L, Li X, Li P (2014) A local adaptive sampling method for reliability-based design optimization using Kriging model. Struct Multidiscip Optim 49(3):401–416. https://doi.org/10.1007/s00158-013-0988-4

Chen Z, Peng S, Li X, Qiu H, Xiong H, Gao L, Li P (2015) An important boundary sampling method for reliability-based design optimization using Kriging model. Struct Multidiscip Optim 52(1):55–70. https://doi.org/10.1007/s00158-014-1173-0

Cheng G, Xu L, Jiang L (2006) Sequential approximate programming strategy for reliability-based optimization. Comput Struct 84(21):1353–1367. https://doi.org/10.1016/j.compstruc.2006.03.006

Choi M J, Cho H, Choi K K, Cho S (2015) Sampling-based RBDO of ship hull structures considering thermo-elasto-plastic residual deformation. Mech Based Des Struct Mach 43(2):183–208. https://doi.org/10.1080/15397734.2014.940463

Ditlevsen O, Madsen H O (1996) Structural reliability methods. Wiley, Chichester

Du X, Chen W (2004) Sequential optimization and reliability assessment method for efficient probabilistic design. ASME J Mech Des 126(2):225–233. https://doi.org/10.1115/1.1649968

Du X, Guo J, Beeram H (2008) Sequential optimization and reliability assessment for multidisciplinary systems design. Struct Multidiscip Optim 35:117–130. https://doi.org/10.1007/s00158-007-0121-7

Dubourg V, Sudret B, Bourinet J M (2011) Reliability-based design optimization using Kriging surrogates and subset simulation. Struct Multidiscip Optim 44(5):673–690. https://doi.org/10.1007/s00158-011-0653-8

Gere J M (2004) Mechanics of materials, 6th edn. Brooks/Cole, Belmont

Ghanem R, Spanos P (1991) Stochastic finite elements: a spectral approach. Springer, New York

Gomes H M, Awruch A M, Lopes P A M (2011) Reliability based optimization of laminated composite structures using genetic algorithms and artificial neural networks. Struct Saf 33(3):186–195. https://doi.org/10.1016/j.strusafe.2011.03.001

Gomes WJS, Beck AT (2013) Global structural optimization considering expected consequences of failure and using ANN surrogates. Comput Struct 126:56–68. https://doi.org/10.1016/j.compstruc.2012.10.013

Gomes WJS, Beck AT (2016) The design space root finding method for efficient risk optimization by simulation. Probab Eng Mech 44:99–110. https://doi.org/10.1016/j.probengmech.2015.09.019

Guo X, Bai W, Zhang W, Gao X (2009) Confidence structural robust design and optimization under stiffness and load uncertainties. Comput Methods Appl Mech Eng 198(41):3378–3399. https://doi.org/10.1016/j.cma.2009.06.018

Haldar A, Mahadevan S (2000) Reliability assessment using stochastic finite element analysis. John Wiley & Sons, New York

Hawchar L, El Soueidy C P, Schoefs F (2018) Global Kriging surrogate modeling for general time-variant reliability-based design optimization problems. Struct Multidiscip Optim 58(3):955–968. https://doi.org/10.1007/s00158-018-1938-y

Hsu KS, Chan KY (2010) A filter-based sample average SQP for optimization problems with highly nonlinear probabilistic constraints. ASME J Mech Des 132(11):9. https://doi.org/10.1115/1.4002560

Hu C, Youn B D (2011) Adaptive-sparse polynomial chaos expansion for reliability analysis and design of complex engineering systems. Struct Multidiscip Optim 43(3):419–442. https://doi.org/10.1007/s00158-010-0568-9

Hu W, Choi K K, Cho H (2016) Reliability-based design optimization of wind turbine blades for fatigue life under dynamic wind load uncertainty. Struct Multidiscip Optim 54(4):953–970. https://doi.org/10.1007/s00158-016-1462-x

Huang Z L, Jiang C, Zhou Y S, Luo Z, Zhang Z (2016) An incremental shifting vector approach for reliability-based design optimization. Struct Multidiscip Optim 53(3):523–543. https://doi.org/10.1007/s00158-015-1352-7

Jiang C, Qiu H, Gao L, Cai X, Li P (2017) An adaptive hybrid single-loop method for reliability-based design optimization using iterative control strategy. Struct Multidiscip Optim 56(6):1271–1286. https://doi.org/10.1007/s00158-017-1719-z

Jiménez Montoya P, García Meseguer A, Morán Cabré F (2000) Hormigón Armado, 4th edn. Gustavo Gili, Barcelona

Keshavarzzadeh V, Meidani H, Tortorelli D A (2016) Gradient based design optimization under uncertainty via stochastic expansion methods. Comput Methods Appl Mech Eng 306:47–76. https://doi.org/10.1016/j.cma.2016.03.046

Keshtegar B, Hao P (2018a) Enriched self-adjusted performance measure approach for reliability-based design optimization of complex engineering problems. Appl Math Model 57:37–51. https://doi.org/10.1016/j.apm.2017.12.030

Keshtegar B, Hao P (2018b) A hybrid descent mean value for accurate and efficient performance measure approach of reliability-based design optimization. Comput Methods Appl Mech Eng 336:237–259. https://doi.org/10.1016/j.cma.2018.03.006

Kiureghian A D, Dakessian T (1998) Multiple design points in first and second-order reliability. Struct Saf 20(1):37–49. https://doi.org/10.1016/S0167-4730(97)00026-X

Lee I, Choi KK, Zhao L (2011) Sampling-based RBDO using the stochastic sensitivity analysis and Dynamic Kriging method. Struct Multidiscip Optim 44(3):299–317. https://doi.org/10.1007/s00158-011-0659-2

Lee TH, Jung JJ (2008) A sampling technique enhancing accuracy and efficiency of metamodel-based RBDO: constraint boundary sampling. Comput Struct 86(13-14):1463–1476. https://doi.org/10.1016/j.compstruc.2007.05.023

Li G, Li B, Hu H (2017) A novel first-order reliability method based on performance measure approach for highly nonlinear problems. Struct Multidiscip Optim 57(4):1593–1610. https://doi.org/10.1007/s00158-017-1830-1

Li HS, Cao ZJ (2016) Matlab codes of Subset Simulation for reliability analysis and structural optimization. Struct Multidiscip Optim 54(2):391–410. https://doi.org/10.1007/s00158-016-1414-5

Li X, Qiu H, Chen Z, Gao L, Shao X (2016) A local Kriging approximation method using MPP for reliability-based design optimization. Comput Struct 162:102–115. https://doi.org/10.1016/j.compstruc.2015.09.004

Li X, Gong C, Gu L, Jing Z, Fang H, Gao R (2019) A reliability-based optimization method using sequential surrogate model and Monte Carlo simulation. Struct Multidiscip Optim 59(2):439–460. https://doi.org/10.1007/s00158-018-2075-3

Lim J, Lee B (2016) A semi-single-loop method using approximation of most probable point for reliability-based design optimization. Struct Multidiscip Optim 53(4):745–757. https://doi.org/10.1007/s00158-015-1351-8

Liu P L, Der Kiureghian A (1991) Optimization algorithms for structural reliability. Struct Saf 9(3):161–177. https://doi.org/10.1016/0167-4730(91)90041-7

Liu X, Wu Y, Wang B, Ding J, Jie H (2017) An adaptive local range sampling method for reliability-based design optimization using support vector machine and Kriging model. Struct Multidiscip Optim 55(6):2285–2304. https://doi.org/10.1007/s00158-016-1641-9

Liu X, Wu Y, Wang B, Yin Q, Zhao J (2018) An efficient RBDO process using adaptive initial point updating method based on sigmoid function. Struct Multidiscip Optim, pp 1–22. https://doi.org/10.1007/s00158-018-2038-8

Lopez R, Torii A, Miguel L, Cursi JS (2015a) Overcoming the drawbacks of the FORM using a full characterization method. Struct Saf 54:57–63. https://doi.org/10.1016/j.strusafe.2015.02.003

Lopez RH, Beck AT (2012) RBDO methods based on FORM: a review. J Braz Soc Mech Sci Eng 34(4):506–514. https://doi.org/10.1590/S1678-58782012000400012

Lopez R H, Lemosse D, de Cursi J E S, Rojas J, El-Hami A (2011) An approach for the reliability based design optimization of laminated composites. Eng Optim 43(10):1079–1094. https://doi.org/10.1080/0305215X.2010.535818

Lopez R H, Miguel L F F, Belo I M, de Cursi E S (2014) Advantages of employing a full characterization method over FORM in the reliability analysis of laminated composite plates. Compos Struct 107:635–642. https://doi.org/10.1016/j.compstruct.2013.08.024

Lopez RH, Torii AJ, Miguel LFF, de Cursi JES (2015b) An approach for the global reliability based optimization of the size and shape of truss structures. Mec Ind 16(6):603. https://doi.org/10.1051/meca/2015029

Luenberger D G, Ye Y (2008) Linear and nonlinear programming, 3rd edn. Springer, New York

Madsen H O, Krenk S, Lind N C (1986) Methods of structural safety. Prentice Hall, Englewood Cliffs

McGuire W, Gallagher R H, Ziemian R D (2000) Matrix structural analysis, 2nd edn. Wiley, New York

Melchers R E, Beck A T (2018) Structural reliability analysis and prediction, 3rd edn. Wiley, Hoboken

Meng Z, Yang D, Zhou H, Wang B P (2018) Convergence control of single loop approach for reliability-based design optimization. Struct Multidiscip Optim 57(3):1079–1091. https://doi.org/10.1007/s00158-017-1796-z

Ren X, Yadav V, Rahman S (2016) Reliability-based design optimization by adaptive-sparse polynomial dimensional decomposition. Struct Multidiscip Optim 53(3):425–452. https://doi.org/10.1007/s00158-015-1337-6

Schuëller GI, Jensen HA (2008) Computational methods in optimization considering uncertainties: an overview. Comput Methods Appl Mech Eng 198(1):2–13. https://doi.org/10.1016/j.cma.2008.05.004

Strömberg N (2017) Reliability-based design optimization using SORM and SQP. Struct Multidiscip Optim 56(3):631–645. https://doi.org/10.1007/s00158-017-1679-3

Suryawanshi A, Ghosh D (2016) Reliability based optimization in aeroelastic stability problems using polynomial chaos based metamodels. Struct Multidiscip Optim 53(5):1069–1080. https://doi.org/10.1007/s00158-015-1322-0

Timoshenko S P, Gere J M (1961) Theory of elastic stability, 2nd edn. McGraw-Hill, New York

Torii A, Lopez R, Miguel L (2016) A general RBDO decoupling approach for different reliability analysis methods. Struct Multidiscip Optim 54(2):317–332. https://doi.org/10.1007/s00158-016-1408-3

Torii A J, de Faria J R (2017) Structural optimization considering smallest magnitude eigenvalues: a smooth approximation. J Braz Soc Mech Sci Eng 39(5):1745–1754. https://doi.org/10.1007/s40430-016-0583-x

Torii A J, Lopez R H, Miguel L F F (2015) Modeling of global and local stability in optimization of truss-like structures using frame elements. Struct Multidiscip Optim 51(6):1187–1198. https://doi.org/10.1007/s00158-014-1203-y

Torii A J, Lopez R H, Miguel L F F (2017a) A gradient-based polynomial chaos approach for risk and reliability-based design optimization. J Braz Soc Mech Sci Eng 39(7):2905–2915. https://doi.org/10.1007/s40430-017-0815-8

Torii AJ, Lopez RH, Miguel LFF (2017b) Probability of failure sensitivity analysis using polynomial expansion. Probab Eng Mech 48:76–84. https://doi.org/10.1016/j.probengmech.2017.06.001

Tu J, Choi K K, Park Y H (1999) A new study on reliability-based design optimization. ASME J Mech Des 121:557–564. https://doi.org/10.1115/1.2829499

Valdebenito MA, Schuëller GI (2010) A survey on approaches for reliability-based optimization. Struct Multidiscip Optim 42(5):645–663. https://doi.org/10.1007/s00158-010-0518-6

Wiener N (1938) The homogeneous chaos. Am J Math 60(23-26):897–936. https://doi.org/10.2307/2371268

Wu Y T, Millwater H R, Cruse T A (1990) Advanced probabilistic structural analysis method for implicit performance functions. AIAA J 28(9):1663–1669. https://doi.org/10.2514/3.25266

Xiong F, Greene S, Chen W, Xiong Y, Yang S (2010) A new sparse grid based method for uncertainty propagation. Struct Multidiscip Optim 41(3):335–349. https://doi.org/10.1007/s00158-009-0441-x

Xiu D, Karniadakis GE (2002) The Wiener-Askey polynomial chaos for stochastic differential equations. SIAM J Sci Comput 24(2):619–644. https://doi.org/10.1137/S1064827501387826

Yi P, Cheng G (2008) Further study on efficiency of sequential approximate programming strategy for probabilistic structural design optimization. Struct Multidiscip Optim 35(6):509–522. https://doi.org/10.1007/s00158-007-0120-8

Yi P, Cheng G, Jiang L (2008) A sequential approximate programming strategy for performance-measure-based probabilistic structural design optimization. Struct Saf 30(2):91–109. https://doi.org/10.1016/j.strusafe.2006.08.003

Yi P, Zhu Z, Gong J (2016) An approximate sequential optimization and reliability assessment method for reliability-based design optimization. Struct Multidiscip Optim 54(6):1367–1378. https://doi.org/10.1007/s00158-016-1478-2

Youn B D, Choi K K (2003) An investigation of nonlinearity of reliability-based design optimization approaches. ASME J Mech Des 126(3):403–411. https://doi.org/10.1115/1.1701880

Youn BD, Choi KK, Du L (2005) Adaptive probability analysis using an enhanced hybrid mean value method. Struct Multidiscip Optim 29:134–148. https://doi.org/10.1007/s00158-004-0452-6

Yun W, Lu Z, Jiang X (2018) An efficient reliability analysis method combining adaptive Kriging and modified importance sampling for small failure probability. Struct Multidiscip Optim 58:1383–1393. https://doi.org/10.1007/s00158-018-1975-6

Zhang J, Taflanidis A A (2018) Multi-objective optimization for design under uncertainty problems through surrogate modeling in augmented input space. Struct Multidiscip Optim, pp 1–22

Zhang X, Huang H Z (2010) Sequential optimization and reliability assessment for multidisciplinary design optimization under aleatory and epistemic uncertainties. Struct Multidiscip Optim 40(1):165–175. https://doi.org/10.1007/s00158-008-0348-y

Zhou M, Luo Z, Yi P, Cheng G (2018) A two-phase approach based on sequential approximation for reliability-based design optimization. Struct Multidiscip Optim 57(2):489–508. https://doi.org/10.1007/s00158-017-1888-9

Zou T, Mahadevan S (2006) A direct decoupling approach for efficient reliability-based design optimization. Struct Multidiscip Optim 31(3):190–200. https://doi.org/10.1007/s00158-005-0572-7

Funding

This research was financially supported by CNPq-Brazil.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Replication of results

The results obtained with the proposed approach are presented in Section 5.

Additional information

Responsible Editor: Xu Guo

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Sensitivity of the RBDO problem

The proposed approach requires the sensitivity of the optimal cost with respect to the target reliability indexes of the RBDO problems, i.e., ∇βc. This information is required to update the target reliability indexes. For this purpose, we first evaluate the sensitivity considering the RBDO problem from (6)–(7), with constraints imposed directly on the target reliability indexes β. In Appendix B, the expressions are extended to the case where the RBDO problem is solved with the PMA from (12)–(13).

The procedure employed to obtain the sensitivity of the optimization problem is similar to that described by Luenberger and Ye (2008). Take the optimization problem: Find \(\mathbf {d} \in \mathbb {R}^{n}\) that,

subject to

In the RBDO problem from (6)–(7), we have b = β.

The KKT conditions for this problem are (Luenberger and Ye 2008)

By the chain rule, we have

where dc/db is the explicit ordinary derivative of c with respect to b. Assuming active constraints, we also have the following:

Thus, the sensitivity of the Lagrangian is (Luenberger and Ye 2008)

that can be written as follows:

From (62), we get

and finally,

Note that this result is essentially the same as that presented in the literature (Luenberger and Ye 2008), with a correction dc/db that accounts for the explicit dependence of the objective function on b. Further mathematical requirements are discussed in detail by Luenberger and Ye (2008).
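The role of the correction term dc/db can be checked on a small closed-form problem. The sketch below is not taken from the paper: the toy cost `d1^2 + d2^2 + 3*b`, the constraint `d1 + d2 = b`, and the function names are hypothetical, and the sign of the multiplier assumes the Lagrangian is written as `c + lam * (b - h(d))`, which may differ from the convention used in the equations above. Under these assumptions, the total sensitivity of the optimal cost is the explicit derivative plus the multiplier, and it should agree with a finite-difference estimate.

```python
def solve_subproblem(b):
    """Closed-form solution of: minimize d1^2 + d2^2 + 3*b  s.t.  d1 + d2 = b.

    Hypothetical toy problem; b plays the role of the parameter vector b
    (a single target value here). Returns (d, lam, cost).
    """
    d = (b / 2.0, b / 2.0)              # symmetric minimizer on the constraint
    # Stationarity of L = c + lam*(b - d1 - d2):  2*d_i - lam = 0
    lam = 2.0 * d[0]
    cost = d[0] ** 2 + d[1] ** 2 + 3.0 * b
    return d, lam, cost


def sensitivity_formula(b):
    """Total derivative of the optimal cost: explicit part plus multiplier."""
    _, lam, _ = solve_subproblem(b)
    explicit = 3.0                      # partial derivative of the cost w.r.t. b
    return explicit + lam


def sensitivity_fd(b, step=1e-6):
    """Central finite-difference estimate of the same derivative."""
    c_plus = solve_subproblem(b + step)[2]
    c_minus = solve_subproblem(b - step)[2]
    return (c_plus - c_minus) / (2.0 * step)


if __name__ == "__main__":
    b = 2.0
    print("formula:", sensitivity_formula(b))
    print("finite difference:", sensitivity_fd(b))
```

For this toy problem the optimal cost is `b**2 / 2 + 3*b`, so the derivative is `b + 3`. Dropping the explicit term would leave only the multiplier `b`, which is the standard result for an objective that does not depend on b.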

Appendix B: Sensitivity for the PMA

In order to employ the results from Appendix A for the PMA (12)–(13), we first write a single constraint of the PMA as follows:

For active and inactive constraints, we have, respectively,

If the reliability constraint is inactive, then ∂gi/∂βi = 0. Otherwise,

Consequently,

From the sensitivity results presented in Appendix A, we have

where λ are Lagrange multipliers related to the PMA constraints. By multiplying the above sensitivity by ∇βb, we get the following:

and, from (72),

From this result, we conclude that in the PMA, the sensitivity expressions from Appendix A must be corrected by ∇βg. We thus require the derivatives of the constraints gi with respect to βi.

Considering a single constraint g = gi, the first-order optimality condition for the inverse reliability analysis problem of (14)–(15) is known to be (Wu et al. 1990; Yi et al. 2008)

We thus conclude that,

and

where ∇ux is evaluated from the transformation of (17). Note that ∇ux can be evaluated numerically with little computational effort, if necessary. We finally have the following:

If X is a vector of independent normal random variables, the transformation is \(\mathbf{x} = \boldsymbol{\mu} + \boldsymbol{\sigma} \mathbf{u}\),

where μ is the vector of expected values and σ is a diagonal matrix of standard deviations of the random variables. In this case, we get \(\nabla_{\mathbf{u}} \mathbf{x} = \boldsymbol{\sigma}\),

and (79) becomes the following:
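As a hedged illustration of the independent normal case, the sketch below evaluates the inverse reliability analysis for a linear performance function, where everything is available in closed form. It assumes the inverse problem is posed as minimizing g over the sphere ‖u‖ = β in standard normal space, with x = μ + σu; the linear function `g(x) = a0 + a·x`, the function name, and the numerical values are hypothetical and not taken from the paper. Under these assumptions, the minimum performance point is `u* = -beta * grad_u g / ||grad_u g||` and the derivative of the optimal performance with respect to β is `-||grad_u g||`, where `grad_u g = sigma * grad_x g` by the chain rule.

```python
import math


def inverse_reliability_linear(a, a0, mu, sigma, beta):
    """Minimum performance point of g(x) = a0 + a.x on the sphere ||u|| = beta.

    Assumes independent normal variables, x_i = mu_i + sigma_i * u_i.
    Returns (u_star, g_star, dg_dbeta).
    """
    # Chain rule: gradient in standard normal space is sigma times gradient in x
    grad_u = [ai * si for ai, si in zip(a, sigma)]
    norm = math.sqrt(sum(gi * gi for gi in grad_u))
    # Steepest-descent direction scaled to the sphere of radius beta
    u_star = [-beta * gi / norm for gi in grad_u]
    x_star = [m + s * u for m, s, u in zip(mu, sigma, u_star)]
    g_star = a0 + sum(ai * xi for ai, xi in zip(a, x_star))
    dg_dbeta = -norm
    return u_star, g_star, dg_dbeta


if __name__ == "__main__":
    a, a0 = [1.5, 2.0], 0.5
    mu, sigma = [1.0, 2.0], [2.0, 2.0]
    u_star, g_star, dg_dbeta = inverse_reliability_linear(a, a0, mu, sigma, 1.0)
    print("u* =", u_star, "g* =", g_star, "dg*/dbeta =", dg_dbeta)
```

For a linear g the optimal performance is `g(mu) - beta * ||grad_u g||`, so the derivative can be verified directly by finite differences in β. For nonlinear performance functions the point u* must be found iteratively, and the closed form above no longer applies.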

Cite this article

Torii, A.J., Lopez, R.H., Beck, A.T. et al. A performance measure approach for risk optimization. Struct Multidisc Optim 60, 927–947 (2019). https://doi.org/10.1007/s00158-019-02243-5

DOI: https://doi.org/10.1007/s00158-019-02243-5