Abstract

Neuroimaging is critical in clinical care and research, enabling us to investigate the brain in health and disease. There is a complex link between the brain’s morphological structure, physiological architecture, and the corresponding imaging characteristics. The shape, function, and relationships between various brain areas change during development and throughout life, disease, and recovery. Like few other areas, neuroimaging benefits from advanced analysis techniques to fully exploit imaging data for studying the brain and its function. Recently, machine learning has started to contribute (a) to anatomical measurements, detection, segmentation, and quantification of lesions and disease patterns, (b) to the rapid identification of acute conditions such as stroke, or (c) to the tracking of imaging changes over time. As our ability to image and analyze the brain advances, so does our understanding of its intricate relationships and their role in therapeutic decision-making. Here, we review the current state of the art in using machine learning techniques to exploit neuroimaging data for clinical care and research, providing an overview of clinical applications and their contribution to fundamental computational neuroscience.

Summary

Neuroimaging is a decisive factor in clinical care and research, making it possible to examine the brain in health and disease. There is a complex relationship between morphological structure, physiological architecture, and the corresponding image characteristics. The shape, function, and connections of different brain areas change during early development and throughout life, as well as in disease and recovery. Like few other fields, neuroimaging benefits from advanced analysis methods that are used to evaluate imaging data in order to study the brain and its function. Recently, machine learning has also been contributing (a) to anatomical measurements and to the detection, segmentation, and quantification of lesions and disease patterns, (b) to the rapid identification of acute conditions such as stroke, or (c) to the tracking of changes over time. With advances in neuroimaging and its analysis, our understanding of the complex relationships between structure and function, and of their significance for therapeutic decision-making, is also growing. For the present work, the current state of knowledge on using machine learning methods to analyze neuroimaging data for clinical care and research was surveyed; clinical applications and their contribution to the underlying computational neuroscience are presented in an overview.


Background

Ever since the brain’s significance for human cognition was recognized, scientists have studied the intricate relationship between body and mind [1]. The complexity of the human brain has fostered an array of advanced neuroimaging techniques to quantify its structure and function [2]. These techniques provide insights for neuroscientific research, clinical evaluation, and treatment decisions.

Machine learning can identify patterns and relationships of signals in neuroimaging data. Classification and regression techniques detect and quantify clinically relevant findings with increasing reliability and accuracy. Yet these models can do more than repeat what we train them to do. What if, instead of relying on neuroanatomy to guide the comparison of individual brains, we used their individual functional interaction structure itself to establish correspondences? Can machine learning help to create cortical maps of functional roles, or of the influence of genes and environment? Machine learning may offer a tool to fundamentally change our perspective on the observations we make.

A tangible visualization of the brain’s anatomy and neurophysiological properties is essential for cognitive neuroscience and clinical applications [2]. Neuroimaging can be broadly divided into two categories: structural and functional imaging. Structural neuroimaging aims to visualize the anatomy of the central nervous system and to identify and describe structural anomalies associated with traumatic brain injury, stroke, or neurological diseases such as epilepsy or cancer [1]. Structural MRI can also serve as a predictor for various neurological and psychiatric disorders [3]. Functional neuroimaging, on the other hand, captures the brain’s neurophysiological or metabolic processes. In clinical practice, it is important for understanding neurological impairment and neuropsychiatric disorders and for informing surgical treatment so that vital cognitive functions such as language or motor capabilities remain unharmed [4]. Although functional MRI (fMRI) is widely used in research, its use in clinical routine is typically limited to task-based preoperative mapping of essential functions such as motor control [5]. Resting-state fMRI has emerged as a promising tool [6, 7] because it does not rely on the patient’s ability to perform a specific task, but its translation into clinical practice is still challenging [8]. Nevertheless, the importance of the brain’s interconnectedness has been acknowledged in the clinical context [9]. While fMRI is a central modality in functional imaging [10], other imaging techniques such as positron emission tomography (PET; [11]) or MR spectroscopy [12] can capture underlying metabolic processes and thus complement structural imaging.
Characterizing metabolic properties in the brain is useful for a variety of clinical applications, such as defining infiltration zones and tumor properties in neuro-oncology [13, 14], understanding the pathogenesis of progressive diseases such as multiple sclerosis [15], or understanding neuroinflammation and psychiatric disorders [16]. Compared to structural imaging, functional neuroimaging can detect underlying neurophysiological and molecular properties, and combining both enables a multi-modal description of the brain and the modeling of its structure–function relationship as a marker for disease [17,18,19].

Computational analysis has long been part of neuroimaging, because the recorded signals must be translated into quantitative, comparable measurements whose relationship to disease and treatment response can be assessed. The toolbox includes techniques such as image-based registration, which establishes correspondence across individuals so that measurements can be summarized and compared [20]; voxel-based morphometry (VBM; [21]), which quantifies phenomena such as gray matter atrophy [22]; and statistical parametric maps obtained by general linear models (GLM; [23]).

In this context, machine learning has gained significant importance. Early work advanced functional neuroimage analysis from the univariate inspection of individual voxels, as in GLM analysis [24], to localized activity patterns [25] and to the detection of multivariate, widely distributed functional response patterns [26, 27]. These distributed functional patterns made it possible to treat and align their relationship architecture in a way similar to anatomical maps [28]. Decoupling the analysis of function from its anatomical location altogether enabled the assessment of cases in which anatomy is affected by disease, such as brain tumors, and of reorganization processes [29]. Machine learning has substantially accelerated brain segmentation [30], image registration, and the mapping of individuals to atlases [31]. Finally, it has enabled the automatic detection and segmentation of brain lesions such as tumors with high accuracy and reliability [32].

Here, we review the current state of the art of machine learning in neuroimaging. We structure the overview into three areas. The review of machine learning for structural neuroimaging covers the registration, segmentation, and quantification of anatomy, as well as the detection and analysis of findings associated with disease. The overview of machine learning for functional neuroimaging covers the multivariate analysis of functional responses and the independent representation of function and anatomy, which may become decoupled through development or disease. Third, the section on network analysis describes how brain networks can be quantitatively assessed and represented. In each section, we address research and clinically relevant applications of machine learning techniques. Finally, we summarize open challenges that machine learning approaches may help to tackle.

Machine learning in structural neuroimaging

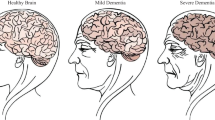

Structural neuroimaging is the mainstay of clinical diagnostic neuroradiology. Although it can only capture overt structural properties of the brain, it is of great value in supporting diagnosis and treatment decisions for various diseases [1]. It allows the size of brain structures and their disease-associated deviations to be quantified as potential markers of clinical outcome [33]. Given the wide availability of structural neuroimaging, machine learning approaches aim to use the growing number of available images to establish robust models for segmentation, classification, or prediction tasks ([3, 34]; Fig. 1).

Fig. 1 Machine learning can detect, segment, and quantify characteristics of anatomical structures and abnormalities associated with disease. Supervised machine learning learns from paired training examples such as imaging data and annotated tumors. Unsupervised machine learning identifies structures in a large set of observations, establishing normal variability or detecting groups of similar observations.

Structural imaging for quantification of anatomical structures

The accurate segmentation of neuroanatomical structures is important as a basis for their quantification. It is also a prerequisite for analyzing possible anomalies of specific areas related to clinical findings, disease, and treatment response. Early work on brain segmentation focused on atlas-based tissue probability maps [35, 36], whereas recent machine learning-based approaches aim to predict accurate segmentation labels. Deep convolutional neural networks have improved the segmentation of neuroanatomy compared to standard tools in both speed and accuracy [30]. Deep learning-based approaches have also shown promising results in challenging brain tissue segmentation applications, such as in neonates [37] and fetuses [38]. Furthermore, a U-Net deep learning model has demonstrated the feasibility of highly accurate segmentation of neuroanatomical structures from CT scans [39]. The robust quantification of anatomical structures is important for establishing markers of disease progression and outcome, and the introduction of deep learning-based U-Net models [40] has advanced the accuracy of medical image segmentation overall. Such models have been successfully applied to automatically quantify possible structural markers with prognostic value, such as temporalis muscle mass [41], white matter hyperintensities [42], or brain vessel status [43].
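Segmentation accuracy of this kind is commonly reported as the Dice overlap between predicted and reference labels. The text does not name a metric, so choosing Dice is our assumption. The sketch below computes it per structure for two hypothetical toy label volumes, using only NumPy.

```python
import numpy as np


def dice_score(pred: np.ndarray, truth: np.ndarray, label: int) -> float:
    """Dice overlap 2|A ∩ B| / (|A| + |B|) for one structure label."""
    a = pred == label
    b = truth == label
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # label absent from both volumes: count as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / denom)


def per_label_dice(pred: np.ndarray, truth: np.ndarray, labels) -> dict:
    """Dice score for each anatomical label, e.g. each structure of an atlas."""
    return {lab: dice_score(pred, truth, lab) for lab in labels}


# Hypothetical toy label volumes: 0 = background, 1 and 2 = two structures.
truth = np.zeros((4, 4, 4), dtype=int)
truth[:2, :, :] = 1
truth[2:, :2, :] = 2
pred = truth.copy()
pred[0, 0, :] = 0  # simulate 4 voxels of structure 1 missed by the model

scores = per_label_dice(pred, truth, labels=[1, 2])
print(scores)
```

Reporting Dice per structure, rather than pooled over all labels, keeps small structures from being masked by large ones.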

Structural imaging for disease assessment

One important application of structural neuroimaging in clinical routine is lesion detection and characterization. The characterization of brain tumors benefits from the wide range of available neuroimaging techniques and modalities. A multitude of machine learning approaches have been applied to brain tumors to improve their delineation and characterization, informing treatment decisions to ultimately improve patient outcome. Deep learning approaches have improved lesion detection by quantifying deviations from a model of normal brain structure [44]. Fully automated, quantitative tumor characterization with machine learning has contributed to the basis for clinical decision-making [45]. This potential benefit in neuro-oncology has also prompted scientific initiatives such as the Brain Tumor Segmentation Challenge (BraTS; [32]), highlighting the value of data sharing for methodological improvements. This challenge leverages multimodal MRI data from multiple institutions and brings together the scientific community to evaluate and advance segmentation, prediction, and classification tasks within the highly heterogeneous brain tumor landscape. In addition to raw imaging data, the initiative provides preprocessed data and radiomic features in an accessible open dataset, which lowers the barrier for developing new machine learning approaches [46].
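The idea of detecting lesions as deviations from a model of normal brain structure can be illustrated with a deliberately simple stand-in: a voxelwise Gaussian normative model fit to healthy controls, with patient voxels flagged by their z-score. This is not the deep learning approach of [44]. The function names, the threshold of 3, and the synthetic data are illustrative assumptions. Real images would first need registration to a common template and intensity normalization.

```python
import numpy as np


def fit_normative_model(controls: np.ndarray):
    """Voxelwise mean and standard deviation across healthy controls.

    controls: (n_subjects, *volume_shape), assumed already registered to a
    common template and intensity-normalized."""
    mean = controls.mean(axis=0)
    std = controls.std(axis=0, ddof=1)
    return mean, np.maximum(std, 1e-6)  # guard against zero variance


def anomaly_map(patient: np.ndarray, mean: np.ndarray, std: np.ndarray,
                z_threshold: float = 3.0):
    """z-score of each patient voxel relative to the normative model, plus a binary mask."""
    z = (patient - mean) / std
    return z, np.abs(z) > z_threshold


rng = np.random.default_rng(0)
controls = rng.normal(loc=1.0, scale=0.1, size=(50, 8, 8, 8))
patient = rng.normal(loc=1.0, scale=0.1, size=(8, 8, 8))
patient[2:4, 2:4, 2:4] += 1.0  # hypothetical "lesion": about +10 standard deviations

mean, std = fit_normative_model(controls)
z, mask = anomaly_map(patient, mean, std)
print(mask[2:4, 2:4, 2:4].all(), int(mask.sum()))
```

A learned model of normal anatomy would replace the voxelwise mean and standard deviation. The detection logic stays the same: flag what the model of normality cannot explain.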

Radiomics has become a widely adopted approach in medical image analysis [47]. It describes lesions via tissue properties based on shape, intensity, and texture features [48]. Such features form the basis for two kinds of tasks (Fig. 1). In unsupervised learning, the aim is to identify and characterize subgroups based on their radiomic features. In classification tasks, features are assessed for their discriminative power. In the clinical context, machine learning approaches based on radiomic features have been used to identify subgroups of tumor patients [49] or to differentiate between primary central nervous system lymphoma and atypical glioblastoma [50, 51].
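The sketch below makes the three radiomic feature families concrete. Inside a lesion mask, it computes a shape feature (lesion volume in voxels), first-order intensity features, and one texture feature: the contrast of a gray-level co-occurrence matrix (GLCM). It is a minimal illustration built on our own simplifying choices (8 gray levels, horizontal neighbors only). Dedicated radiomics toolkits use standardized and far more extensive feature definitions.

```python
import numpy as np


def first_order_features(image: np.ndarray, mask: np.ndarray) -> dict:
    """Shape (voxel count) and first-order intensity features inside a lesion mask."""
    vals = image[mask].astype(float)
    mean, std = vals.mean(), vals.std()
    skewness = ((vals - mean) ** 3).mean() / std**3 if std > 0 else 0.0
    return {"volume_voxels": int(mask.sum()), "mean": mean, "std": std, "skewness": skewness}


def glcm_contrast(image2d: np.ndarray, mask2d: np.ndarray, levels: int = 8) -> float:
    """Texture: contrast of a symmetric gray-level co-occurrence matrix (GLCM)
    for horizontally adjacent pixel pairs that both lie inside the mask."""
    vals = image2d[mask2d]
    lo, hi = vals.min(), vals.max()
    q = ((image2d - lo) / (hi - lo + 1e-12) * levels).astype(int)
    q = np.clip(q, 0, levels - 1)  # quantize to `levels` gray levels
    pairs = mask2d[:, :-1] & mask2d[:, 1:]
    left, right = q[:, :-1][pairs], q[:, 1:][pairs]
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (left, right), 1)  # count co-occurring gray-level pairs
    glcm = glcm + glcm.T  # count each pair in both directions
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    return float((glcm * (i - j) ** 2).sum())  # large when neighbors differ strongly


# Hypothetical lesion: a 6x6x6 cube inside a noisy volume.
rng = np.random.default_rng(1)
image = rng.normal(100, 10, size=(16, 16, 16))
mask = np.zeros(image.shape, dtype=bool)
mask[4:10, 4:10, 4:10] = True
feats = first_order_features(image, mask)
feats["glcm_contrast_mid_slice"] = glcm_contrast(image[7], mask[7])

# Texture sanity check: a checkerboard is maximally "rough", a flat patch is not.
checker = (np.indices((6, 6)).sum(axis=0) % 2).astype(float)
flat = np.full((6, 6), 5.0)
roi = np.ones((6, 6), dtype=bool)
print(glcm_contrast(checker, roi), glcm_contrast(flat, roi))
```

Feature vectors like `feats`, stacked across patients, are the kind of input that the clustering and classification tasks above would operate on.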

Another area in which structural neuroimaging and machine learning are relevant is the assessment of patients with epilepsy. Here, the detection of often subtle cortical malformations or lesions is critical for informing treatment decisions. Supervised machine learning approaches such as artificial neural networks have been shown to identify focal cortical dysplasia [52, 53]. Unsupervised approaches, including clustering techniques, revealed a landscape of structural anomalies that defines distinct subgroups of patients with epilepsy [54]. A similar application of unsupervised machine learning detected subtypes of multiple sclerosis that exhibited distinct treatment responses [55]. In acute stroke, rapid treatment decisions are essential. There, machine learning has the potential to improve patient outcome by detecting the type of arterial occlusion or hemorrhage and by informing short- and long-term prognosis [56]. Machine learning has also facilitated linking disease-related imaging phenotypes to their underlying biological processes. For instance, radiogenomics in glioma patients has shown promise for informing treatment decisions in a personalized medicine approach [57].
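Unsupervised subgrouping of patients, as described above for epilepsy and multiple sclerosis, can be sketched with k-means clustering on per-patient anomaly profiles. The cited studies do not necessarily use k-means. The algorithm, the farthest-first initialization, and the hypothetical regional z-score features are assumptions chosen for brevity.

```python
import numpy as np


def kmeans(X: np.ndarray, k: int, n_iter: int = 100, seed: int = 0):
    """Lloyd's k-means with farthest-first initialization.
    X: (n_patients, n_features), e.g. hypothetical regional anomaly z-scores per patient."""
    rng = np.random.default_rng(seed)
    centroids = [X[rng.integers(len(X))]]
    while len(centroids) < k:  # next centroid: the patient farthest from existing centroids
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[d.argmax()])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        dist = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dist.argmin(axis=1)  # assign each patient to the nearest centroid
        new = np.array([X[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
                        for c in range(k)])
        if np.allclose(new, centroids):  # converged
            break
        centroids = new
    return labels, centroids


# Hypothetical data: two patient subgroups with anomalies in different regions.
rng = np.random.default_rng(42)
group_a = rng.normal([-3, -3, 0, 0], 0.5, size=(20, 4))
group_b = rng.normal([0, 0, -3, -3], 0.5, size=(20, 4))
X = np.vstack([group_a, group_b])
labels, centroids = kmeans(X, k=2)
print(labels)
```

The centroids can be read as the typical anomaly profile of each subgroup. In practice, the number of clusters has to be chosen and validated, for example by stability analysis.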

Machine learning in functional neuroimaging

Functional neuroimaging aims to capture neurophysiological processes in the brain. Functional MRI has made it possible to map the location of cognitive functions across the cortex, a process called functional localization. This is relevant both in basic neuroscience and in clinical applications such as the presurgical localization of eloquent areas. The first functional localization techniques treated each brain region independently in a univariate fashion [23]. However, because fMRI images the entire brain, it enables the analysis of relationships between brain regions. Multivariate machine learning approaches that relate observations across multiple brain regions are therefore relevant [26].

Detecting multivariate functional response patterns: encoding and decoding

The multivariate nature of brain activity motivated machine learning approaches to functional mapping, which aims to localize cognitive functions anatomically. Initial fMRI analysis was dominated by mass univariate task activation analysis [23]. Only in the early 2000s did the analysis of multivariate patterns of neuronal signals emerge [58]. Multivariate pattern analysis (MVPA) is a broad term for machine learning methods that decode neuronal activity as response patterns rather than as isolated brain regions. MVPA demonstrated a distributed representation of faces and objects in high-level visual perception [26]. Mass univariate analysis struggles to reveal distributed effects. Machine learning approaches, in contrast, make it possible to capture and model the multivariate nature of brain activity, providing a more complete picture of neural activation.

Machine learning models link the observed neuroimaging information, such as the BOLD signal time series observed for each voxel of fMRI data, to experiment conditions. The aim is to identify brain regions whose functional signal is associated with the condition (Fig. 2). Encoding models attempt to predict the image signal at each voxel from the experiment condition. They then test, for each individual voxel, whether its signal can be predicted from experiment conditions such as the class of the visual stimulus. Univariate encoding models such as the GLM [23] are a prominent example, testing each voxel independently of the others. If prediction is possible, the GLM treats this as evidence of a significant association, or “activation,” of this region by the experiment condition. Multivariate encoding models represent the experiment condition with a feature vector instead of a single on/off label. One example is representing words by semantic features to investigate the mapping of semantic concepts across the cortex [59]. Another is extracting visual features from images or movies to establish a cortical map of the representations of visual concepts [60, 61]. Decoding models predict experiment condition features from the brain imaging data. Typically, a feature selection method is then used to identify the sets of features, i.e., voxels, that contribute to a successful prediction of the condition from the imaging data. Univariate models test each voxel’s association (e.g., correlation) with the condition independently of the others. Multivariate models, in contrast, treat the functional imaging data as a pattern that may consist of many distributed voxels [62]. Therefore, multivariate models can identify distributed areas that individual tests might miss, but that, taken as a whole, carry information about the experiment condition.
Examples of such approaches include the identification of distributed patterns associated with face processing [26]. These patterns extend beyond areas identified in a univariate manner, such as the fusiform face area [24]. The searchlight technique offered an intermediate approach. Instead of testing individual voxels, it tested whether functional patterns observed in localized cortical patches could predict experiment conditions [25]. Ensemble learning approaches such as random forests were used to identify widely distributed patterns associated with visual stimuli. These approaches exhibit a so-called “grouping effect.” They identify all informative voxels, even highly correlated ones, instead of selecting only the single most informative one. Consequently, their feature-scoring method, called “Gini importance,” makes it possible to detect activation patterns, the so-called Gini contrasts, more reliably and with higher reproducibility [27] than approaches such as support vector machine-based recursive feature elimination [63].
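A minimal searchlight analysis is sketched below. Each voxel’s neighborhood is tested for whether its pattern predicts the condition, here with a leave-one-out nearest-centroid classifier. The voxels lie on a 1D line rather than in a 3D volume. The classifier, the radius, and the synthetic data are our assumptions, not the setup of [25]. The informative pattern has mixed signs, so its average over the patch is small even though the patch as a whole is decodable.

```python
import numpy as np


def loo_nearest_centroid_accuracy(X: np.ndarray, y: np.ndarray) -> float:
    """Leave-one-out accuracy of a nearest-class-mean classifier on trial patterns X."""
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i
        classes = np.unique(y[train])
        centroids = np.array([X[train & (y == c)].mean(axis=0) for c in classes])
        pred = classes[np.argmin(np.linalg.norm(centroids - X[i], axis=1))]
        correct += int(pred == y[i])
    return correct / len(y)


def searchlight(data: np.ndarray, y: np.ndarray, radius: int = 2) -> np.ndarray:
    """Decode the condition from each voxel's local neighborhood.
    data: (n_trials, n_voxels); voxels are ordered along a line, a 1D stand-in
    for the spherical neighborhoods used on real 3D volumes."""
    n_vox = data.shape[1]
    acc = np.zeros(n_vox)
    for v in range(n_vox):
        lo, hi = max(0, v - radius), min(n_vox, v + radius + 1)
        acc[v] = loo_nearest_centroid_accuracy(data[:, lo:hi], y)
    return acc


rng = np.random.default_rng(0)
n_trials, n_vox = 40, 30
y = np.repeat([0, 1], n_trials // 2)
data = rng.normal(size=(n_trials, n_vox))
# Condition 1 adds a fine-grained pattern of mixed signs to voxels 12-16 only.
data[y == 1, 12:17] += np.array([1.5, -1.5, 1.5, -1.5, 1.5])
acc = searchlight(data, y)
print(acc.argmax(), acc.max())
```

The resulting accuracy map is then typically tested against chance (0.5 here) across subjects or by permutation.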

Fig. 2 Encoding or decoding models link a feature representation of the experiment condition during functional neuroimaging to the image information. For every time point, image information is represented as a feature vector consisting of the values of each voxel. Correspondingly, the experiment condition is represented by a feature vector, either consisting of labels (house, face, etc.) or features extracted from the condition (e.g., wavelet decomposition of the image, semantic embedding of a word). Encoding models predict image features from the experiment condition features, while decoding models predict in the opposite direction.
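The univariate encoding model described above, the GLM, can be sketched in a few lines. A design matrix with a task regressor and an intercept is fit to every voxel’s time series by least squares, and a t-statistic is computed per voxel. This is a simplified illustration with a hypothetical boxcar design. It omits the hemodynamic response convolution, the temporal autocorrelation modeling, and the multiple-comparison correction that real fMRI analyses require.

```python
import numpy as np


def glm_tstats(Y: np.ndarray, X: np.ndarray, contrast) -> np.ndarray:
    """Mass-univariate GLM: fit Y[:, v] = X @ beta_v + noise separately for every voxel v.
    Y: (n_timepoints, n_voxels) BOLD signals; X: (n_timepoints, n_regressors) design matrix.
    Returns one t-statistic per voxel for the contrast vector c (tests c @ beta_v)."""
    beta, *_ = np.linalg.lstsq(X, Y, rcond=None)        # (n_regressors, n_voxels)
    resid = Y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = (resid ** 2).sum(axis=0) / dof              # per-voxel noise variance
    c = np.asarray(contrast, dtype=float)
    var_c = c @ np.linalg.pinv(X.T @ X) @ c              # identical for all voxels
    return (c @ beta) / np.sqrt(sigma2 * var_c)


rng = np.random.default_rng(0)
n_t, n_vox = 120, 50
# Hypothetical block design: 10 scans off, 10 scans on, repeated (no HRF convolution).
boxcar = np.tile(np.r_[np.zeros(10), np.ones(10)], n_t // 20)
X = np.column_stack([boxcar, np.ones(n_t)])              # task regressor + intercept
Y = rng.normal(size=(n_t, n_vox))
Y[:, :5] += 2.0 * boxcar[:, None]                        # voxels 0-4 respond to the task
t = glm_tstats(Y, X, contrast=[1, 0])
active = np.flatnonzero(t > 4.0)
print(active)
```

Because every voxel is tested on its own, a weak signal spread over many voxels can stay below threshold everywhere. This is the limitation that the multivariate models above address.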

Decoding models have introduced the capability to reconstruct stimuli from observed brain activation. “Mind-reading applications,” such as using fMRI as a lie detector, are not feasible [64]. Nevertheless, remarkable results have been achieved for decoding visual stimuli [60] and even dreams [65]. For video decoding, a regularized regression approach was used to model dynamic brain activity. Based on the neuronal response patterns in the visual cortex, it was successfully applied to generate videos resembling those that had been viewed [66]. Similarly remarkable progress has been made in decoding the semantic landscape of brain activity related to natural speech [67]. Recent advances in brain decoding have also shown that deep learning models can predict eye movements from fMRI data [68].
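Decoding with regularized regression can be illustrated with closed-form ridge regression. The model predicts stimulus features from voxel responses and is evaluated on held-out time points. The synthetic linear “tuning” of voxels, the penalty `alpha = 100`, and all array sizes are illustrative assumptions; this is not the pipeline of [66].

```python
import numpy as np


def fit_ridge(X: np.ndarray, Y: np.ndarray, alpha: float) -> np.ndarray:
    """Closed-form ridge regression W = (X^T X + alpha I)^-1 X^T Y.
    No intercept: X and Y are assumed to be centered."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


rng = np.random.default_rng(0)
n_train, n_test, n_vox, n_feat = 400, 100, 200, 10
n = n_train + n_test
S = rng.normal(size=(n, n_feat))                     # stimulus features per time point
tuning = rng.normal(size=(n_feat, n_vox))            # hypothetical voxel tuning
B = S @ tuning + 3.0 * rng.normal(size=(n, n_vox))   # noisy voxel responses

# Decoding: predict stimulus features from held-out brain activity.
W = fit_ridge(B[:n_train], S[:n_train], alpha=100.0)
S_hat = B[n_train:] @ W
r = np.array([np.corrcoef(S_hat[:, j], S[n_train:, j])[0, 1] for j in range(n_feat)])
print(r.round(2))
```

The penalty shrinks the weights, which keeps the decoder stable when voxels are many and noisy. In practice, `alpha` would be chosen by cross-validation on the training data only.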

As the amount of available data has increased, deep learning models have become relevant in functional neuroimaging. Conventional linear models can be on par with deep learning approaches [69]. However, recent studies have demonstrated that deep learning can learn robust, discriminative representations of neuroimaging data that outperform standard machine learning [70].

Brain networks and their change during disease

Neuroimaging can capture structural connectivity in the form of nerve fiber bundles with diffusion tensor imaging (DTI), or functional connectivity in the form of correlations among fMRI signals. This enables the analysis of structural or functional brain networks, an area called “connectomics” [73]. Each point on the cortex is viewed as a node in a graph. Each pair of nodes is assigned a measure of connectivity, defined, for instance, by the correlation of the fMRI signals observed at the two nodes (Fig. 3). Graph analysis then measures the connectivity properties of individual nodes. These range from the number of connections of a node, the so-called degree, to the role of a node in connecting otherwise relatively dissociated networks, the so-called hubness [74]. Statistical analysis of networks [75] has provided insights into network differences across cohorts and into changes during disease progression or brain development [76]. Some representational approaches render global network structures comparable and find components of networks in other networks (see “Machine learning for alignment” section). They have made it possible to track network changes during reorganization in tumor patients [29] and to model the brain network during maturation [77]. Brain network structures are more challenging to process with deep learning methods than image data. Instead of a regular grid structure as in the voxel representation, graphs are largely irregular. Their neighborhood relationships, a critical component of deep artificial neural network architectures, are heterogeneous. Nevertheless, graph convolutional neural networks have become an active area of research in neuroimaging [78].
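The graph measures described above can be sketched as follows. A binary graph is built by thresholding correlations between node time series, and each node’s degree and participation coefficient are computed. We use the participation coefficient as one common way to quantify a node’s role in linking separate modules. Treating it as the “hubness” measure of [74] is our assumption, as are the threshold of 0.3 and the synthetic two-module data.

```python
import numpy as np


def connectivity_graph(ts: np.ndarray, threshold: float = 0.3) -> np.ndarray:
    """Binary adjacency matrix from thresholded Pearson correlation of node time series.
    ts: (n_timepoints, n_nodes)."""
    C = np.corrcoef(ts.T)
    A = (np.abs(C) > threshold).astype(int)
    np.fill_diagonal(A, 0)  # no self-connections
    return A


def degree(A: np.ndarray) -> np.ndarray:
    return A.sum(axis=1)


def participation_coefficient(A: np.ndarray, modules: np.ndarray) -> np.ndarray:
    """P_i = 1 - sum_s (k_is / k_i)^2, with k_is = edges from node i into module s.
    P_i = 0 if all edges stay in one module; larger values mark connector nodes."""
    k = degree(A).astype(float)
    P = np.ones_like(k)
    for s in np.unique(modules):
        k_is = A[:, modules == s].sum(axis=1)
        P -= np.divide(k_is, k, out=np.zeros_like(k), where=k > 0) ** 2
    P[k == 0] = 0.0
    return P


rng = np.random.default_rng(0)
n_t = 300
z1, z2 = rng.normal(size=(2, n_t))                               # two hypothetical network signals
ts = np.empty((n_t, 11))
ts[:, :5] = z1[:, None] + 0.7 * rng.normal(size=(n_t, 5))        # module 0
ts[:, 5:10] = z2[:, None] + 0.7 * rng.normal(size=(n_t, 5))      # module 1
ts[:, 10] = z1 + z2 + 0.7 * rng.normal(size=n_t)                 # node linking both modules
modules = np.array([0] * 5 + [1] * 5 + [0])
A = connectivity_graph(ts)
P = participation_coefficient(A, modules)
print(degree(A), P.round(2))
```

Node 10 has both the highest degree and the highest participation coefficient, which matches its role as a connector between the two modules.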

Fig. 3 Network analysis investigates the connections among all points in the brain or in the cortex. Connections can be defined by functional MRI signal correlation or by structural connectivity acquired with diffusion tensor imaging. Connection strength or presence is represented in a matrix, based on which graph analysis can quantitatively compare networks across cohorts [71]. Representational spaces can capture the connectivity structure and enable its use as a reference when tracking reorganization during disease and treatment, or when comparing the functional layout across species with substantially different anatomy [72].
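The core operation of the graph convolutional networks mentioned above can be sketched with the propagation rule of Kipf and Welling’s graph convolutional network. Each node averages its neighbors’ features using a symmetrically normalized adjacency matrix with self-loops, then applies a linear map and a ReLU. This NumPy sketch shows a single untrained layer with random weights. We do not claim that [78] uses exactly this rule, and a real model would be trained in a deep learning framework.

```python
import numpy as np


def gcn_layer(A: np.ndarray, H: np.ndarray, W: np.ndarray) -> np.ndarray:
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 H W).
    A: (n, n) adjacency (e.g. a connectivity matrix), H: (n, f_in) node features,
    W: (f_in, f_out) weight matrix, learned by gradient descent in practice."""
    A_hat = A + np.eye(A.shape[0])                 # self-loops keep each node's own features
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]  # symmetric normalization
    return np.maximum(A_norm @ H @ W, 0.0)         # aggregate neighbors, transform, ReLU


# Toy graph: path 0 - 1 - 2; identity features and weights expose the normalized adjacency.
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
out = gcn_layer(A, np.eye(3), np.eye(3))
print(out.round(3))

# Hypothetical use on a connectome: random weights, then mean pooling to one graph-level vector.
rng = np.random.default_rng(0)
conn = np.abs(rng.normal(size=(10, 10)))
conn = (conn + conn.T) / 2
np.fill_diagonal(conn, 0.0)
h = gcn_layer(conn, conn, rng.normal(size=(10, 4)))  # rows of conn serve as node features
graph_embedding = h.mean(axis=0)
```

The normalization is what lets the same weights apply to nodes with very different numbers of neighbors, which addresses the irregular neighborhoods described above.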

The relationship between biological and artificial neural systems

Machine learning provides more than a toolbox for the analysis of neuroimaging data. Methodological advances in fields such as deep learning and artificial neural networks are heavily guided by our understanding of biological networks. Three core components (objective functions, learning rules, and network architectures) have both biological instances and computational implementations, and the relationship between the two is attracting increasing scientific attention [79]. Deep neural networks have been used as a model to understand the human brain [80]. In the opposite direction, the topology of human neural networks has been used to shape the architecture of artificial neural networks [81]. The quantitative comparison of biological and artificial neural networks is challenging. One reason is that the best computational learning algorithms do not fully correspond to biologically plausible learning mechanisms [82]. Nevertheless, fMRI enables the comparative analysis of neural systems, an approach developed for cross-species analysis [83]. To this end, the dissimilarity of functional activation when processing different categories of visual stimuli can be measured with fMRI. Likewise, it can be read out from unit activations in artificial neural networks. The individual activations are not comparable, but their dissimilarity structures are. This has led to insights into how convolutional neural networks trained to classify objects resemble parts of the human inferior temporal lobe [84]. It has also inspired research into increasingly fine-grained mappings between artificial convolutional neural networks and the visual cortex [85].
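The comparison of dissimilarity structures described above, often called representational similarity analysis, can be sketched as follows. A representational dissimilarity matrix (RDM) is computed separately for fMRI patterns and for the activations of a network layer, and the two RDMs are compared by rank correlation. The choices of 1 - Pearson r as the dissimilarity and of Spearman correlation for the comparison are our assumptions for illustration, as is the synthetic data.

```python
import numpy as np


def rdm(patterns: np.ndarray) -> np.ndarray:
    """Representational dissimilarity matrix: 1 - Pearson r between condition patterns.
    patterns: (n_conditions, n_units), units = voxels (fMRI) or network units (ANN)."""
    return 1.0 - np.corrcoef(patterns)


def upper_triangle(M: np.ndarray) -> np.ndarray:
    i, j = np.triu_indices(M.shape[0], k=1)
    return M[i, j]


def spearman(a: np.ndarray, b: np.ndarray) -> float:
    """Spearman correlation via ranks; assumes no ties (true for continuous data)."""
    ra, rb = np.argsort(np.argsort(a)), np.argsort(np.argsort(b))
    return float(np.corrcoef(ra, rb)[0, 1])


def compare_representations(brain: np.ndarray, model: np.ndarray) -> float:
    """Second-order comparison: correlate the RDMs, not the raw activations."""
    return spearman(upper_triangle(rdm(brain)), upper_triangle(rdm(model)))


rng = np.random.default_rng(0)
n_stimuli = 12
latent = rng.normal(size=(n_stimuli, 6))  # hypothetical shared stimulus structure
brain = latent @ rng.normal(size=(6, 100)) + 0.5 * rng.normal(size=(n_stimuli, 100))  # voxels
layer = latent @ rng.normal(size=(6, 50)) + 0.5 * rng.normal(size=(n_stimuli, 50))    # CNN units
unrelated = rng.normal(size=(n_stimuli, 50))
sim_layer = compare_representations(brain, layer)
sim_unrelated = compare_representations(brain, unrelated)
print(round(sim_layer, 2), round(sim_unrelated, 2))
```

The brain patterns have 100 units and the layer has 50, so the raw activations could not be compared directly. Their 12 x 12 RDMs can be compared.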

Clinical relevance of machine learning and functional imaging

The brain network architecture is critical for our cognitive capabilities and can be affected by disease [86]. Hence, it is clinically relevant to quantify and understand associated changes in the network architecture, and to model reorganization mechanisms associated with disease progression and recovery. Clinical application is challenging, but initial results show promise [87]. Machine learning enables the comparison of networks, the detection of anomalies, and the identification of associations between disease and network alterations. It can even establish early markers that precede advanced disease. Deviations of connectivity structure from a normative model have been found to predict brain tumor recurrence up to 2 months in advance [88]. Alterations in functional connectivity patterns were also predictive of cognitive decline and showed different manifestations in low- and high-grade gliomas [89]. Patients with epilepsy or undergoing epilepsy surgery exhibit specific reorganization patterns of network architecture [71, 90]. Supervised machine learning using functional connectivity data has revealed disease correlates not visible in structural imaging. Examples include deep learning models that identify characteristics of autism spectrum disorders [91] and a generative autoencoder model that classifies autism [92]. The investigation of patients with schizophrenia is an example of the relevance of brain network dynamics [93]. Electroencephalography is a cost-effective and widely available tool in clinical routine for early diagnosis, and machine learning approaches can leverage its high temporal resolution [94]. Deep convolutional networks using electroencephalography recordings have been successfully applied to detect and classify seizures in patients with epilepsy [95] and to classify attention deficit hyperactivity disorder [96].

Machine learning for alignment

Establishing reliable correspondence across the brains of individuals and cohorts is essential for group studies and for probing the impact of disease or intervention on an individual’s brain. Therefore, a variety of image registration approaches have been proposed to align individual brains to a common reference frame based on their anatomical properties [97].

Machine learning for structural image registration

Structural registration methods for the entire brain volume or the cortical surface optimize an objective function by deforming one image to match another. Here, machine learning has made anatomical alignment faster, with techniques such as VoxelMorph [31] for entire volumes and methods for aligning cortical surfaces [98].
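The optimization view of registration described above can be sketched with the simplest possible case: an integer 2D translation chosen by exhaustive search to minimize the sum of squared differences (SSD). Learning-based methods such as VoxelMorph instead train a network that predicts a dense deformation field from an image pair in a single forward pass. The toy image, the SSD objective, and the search range here are illustrative assumptions.

```python
import numpy as np


def ssd(a: np.ndarray, b: np.ndarray) -> float:
    """Sum of squared intensity differences: the objective to be minimized."""
    return float(((a - b) ** 2).sum())


def register_translation(fixed: np.ndarray, moving: np.ndarray, max_shift: int = 5):
    """Exhaustive search for the integer 2D shift of `moving` that minimizes SSD to `fixed`.
    np.roll wraps intensities around the border, a simplification for this toy example."""
    best_shift, best_cost = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            cost = ssd(fixed, np.roll(moving, (dy, dx), axis=(0, 1)))
            if cost < best_cost:
                best_shift, best_cost = (dy, dx), cost
    return best_shift, best_cost


yy, xx = np.mgrid[0:32, 0:32]
fixed = np.exp(-((yy - 16) ** 2 + (xx - 14) ** 2) / 20.0)  # smooth blob as toy "anatomy"
moving = np.roll(fixed, (3, -2), axis=(0, 1))               # misaligned copy
shift, cost = register_translation(fixed, moving)
print(shift, cost)
```

Exhaustive search scales poorly beyond a few parameters. This is why classical methods use iterative optimizers for deformable transforms, and why learning-based methods amortize that optimization into a trained network.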

Machine learning for functional alignment

Can we establish alternative bases for registration if anatomical correspondence is only loosely coupled to functional roles? This is the case in the prefrontal cortex [99] and after reorganization due to disease. The anatomy of the brain is highly variable [100]. Even after structural alignment, a substantial amount of functional variability remains, heterogeneously distributed across the cortex [99]. Machine learning offers a means to align individual brains based on their functional imaging data. Individualized functional parcellation of the cortex offered the first way of establishing correspondence independently of the anatomical frame, by iterative projection [101] or Bayesian models [102]. Functional response patterns themselves can be mapped to a representational space. There, correspondence can be established by so-called hyperalignment of functional response behavior, even if that behavior occurs at different cortical locations [28]. Finally, the functional connectivity architecture can be represented in an embedding space decoupled from its anatomical anchors, enabling the tracking of reorganization across the cortex in patients with brain tumors [29]. This allows us to investigate shared functional architecture across a large population. It has led to insights into the continuous hierarchical structure of cortical processing in the form of so-called functional gradients [103]. The decoupling of anatomical and functional alignment has shown that the link between anatomy and function is not uniform across the cortex. It is strong in primary areas and comparatively weak in association areas and the prefrontal cortex [104]. Decoupling has also enabled the comparison of neural architecture across species. Despite anatomical differences, the functional connectivity structure is similar enough to match and compare human and macaque cortex [72].
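Alignment in a representational space can be illustrated with the orthogonal Procrustes problem. It finds the rotation that best maps one subject’s voxel response patterns onto another’s, assuming both subjects received the same time-locked stimulus sequence. To our understanding, Procrustes transformations are a core ingredient of hyperalignment [28]. The single-pair alignment and the synthetic data here are simplifications.

```python
import numpy as np


def procrustes_rotation(source: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Orthogonal R minimizing ||source @ R - target||_F (orthogonal Procrustes).
    source, target: (n_timepoints, n_voxels) responses to the same stimulus sequence."""
    U, _, Vt = np.linalg.svd(source.T @ target)
    return U @ Vt


rng = np.random.default_rng(0)
n_t, n_vox = 200, 20
subj_a = rng.normal(size=(n_t, n_vox))                       # subject A's responses
Q, _ = np.linalg.qr(rng.normal(size=(n_vox, n_vox)))         # hypothetical voxel "remapping"
subj_b = subj_a @ Q + 0.1 * rng.normal(size=(n_t, n_vox))    # same responses, other voxels

R = procrustes_rotation(subj_b, subj_a)
aligned_b = subj_b @ R
err_before = np.linalg.norm(subj_b - subj_a) / np.linalg.norm(subj_a)
err_after = np.linalg.norm(aligned_b - subj_a) / np.linalg.norm(subj_a)
print(round(err_before, 2), round(err_after, 2))
```

Restricting R to be orthogonal preserves the geometry of each subject’s response patterns. Only their assignment to voxels changes, which is what allows function to be matched even when it sits at different cortical locations.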
Decoupling anatomy and function has further enabled the study of the different impact of genes and environment on the cortical topography of functional units and their interconnectedness [105]. Recently, it has been shown that functional alignment can improve the generalization of machine learning algorithms to new individuals [106].
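An embedding of connectivity decoupled from anatomical position, in the spirit of the functional gradients mentioned above, can be sketched with Laplacian eigenmaps. Each node is connected to its most strongly correlated partners. The first non-trivial eigenvectors of the normalized graph Laplacian then provide low-dimensional coordinates. This is a generic spectral embedding, not necessarily the method of [29] or [103]. The k-nearest-neighbor sparsification, k = 10, and the synthetic one-dimensional hierarchy are assumptions.

```python
import numpy as np


def connectivity_embedding(ts: np.ndarray, n_components: int = 2, k: int = 10) -> np.ndarray:
    """Laplacian-eigenmap embedding of nodes by their functional connectivity.
    ts: (n_timepoints, n_nodes). Returns (n_nodes, n_components) coordinates."""
    C = np.corrcoef(ts.T)
    W = np.zeros_like(C)
    for i in range(C.shape[0]):  # keep each node's k strongest positive connections
        row = C[i].copy()
        row[i] = -np.inf
        nn = np.argsort(row)[-k:]
        W[i, nn] = np.clip(C[i, nn], 0.0, None)
    W = np.maximum(W, W.T)  # symmetrize
    d = W.sum(axis=1)
    L_sym = np.eye(len(d)) - W / np.sqrt(np.outer(d, d))  # normalized graph Laplacian
    _, evecs = np.linalg.eigh(L_sym)  # eigenvalues in ascending order
    # Skip the trivial eigenvector; rescale by D^-1/2 to solve L v = lambda D v.
    return evecs[:, 1:1 + n_components] / np.sqrt(d)[:, None]


rng = np.random.default_rng(0)
n_t, n_nodes = 1000, 40
theta = np.linspace(0, np.pi, n_nodes)  # hypothetical position along a processing hierarchy
z = rng.normal(size=(n_t, 2))
ts = np.cos(theta) * z[:, [0]] + np.sin(theta) * z[:, [1]] + 0.2 * rng.normal(size=(n_t, n_nodes))
gradient = connectivity_embedding(ts, n_components=1)[:, 0]
print(gradient.round(2))
```

The embedding uses only correlations between time series. The recovered axis orders nodes by their connectivity profile, regardless of where they sit anatomically.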

Summary and challenges

Machine learning plays an increasingly important role in exploiting neuroimaging data for research and clinical applications. Its capabilities range from the computational segmentation of anatomical structures and the quantification of their properties to the detection and characterization of disease-related findings, such as tumors. In functional imaging, machine learning can link distributed activation patterns to cognitive tasks. It is beginning to make it possible to track and model processes such as reorganization, disease progression, and recovery. Unlike many other application fields of machine learning, neuroimaging is itself a source of methodological advances in areas such as deep learning. Here, comparing artificial network architectures and learning algorithms with biological mechanisms provides anchors for novel methodology and fosters insights into the workings of the central nervous system.

The current clinical relevance of machine learning is based on its ability to detect, quantify, track, and compare anatomy and disease-related patterns. The most promising challenges currently facing the field fall into three central directions. First, to link phenotypic data observed in neuroimaging to underlying biological mechanisms, machine learning methodology can bridge the gap between representations of imaging data and molecular markers of the underlying processes. Second, embedding methods make it possible to go beyond the anatomical reference frame when studying brain architecture and its changes during disease, treatment response, and recovery. Once the link between anatomy and function is no longer a necessary basis for analysis, we gain the ability to study their intricate relationship and its variability. Finally, we need to improve our ability to distinguish the vast natural variability of the brain’s anatomy and function from the often subtle deviations associated with disease.

Practical conclusion

- Machine learning techniques can detect and segment anatomical structures and findings associated with disease to support diagnosis and to provide quantitative characterizations.
- Encoding and decoding models identify brain areas whose multivariate functional activity is associated with specific cognitive tasks.
- Graph theoretical methods can analyze and compare brain networks in individuals and across cohorts.
- Representational models can uncouple analysis of function and structure and leverage the connectivity structure to establish correspondences when anatomy is affected by disease.
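The sketch below makes the graph theoretical point in the list above concrete. It thresholds a functional connectivity matrix to a fixed edge density and computes three standard measures: degree, local clustering coefficient, and global efficiency. It is a minimal numpy illustration under several assumptions. The regional time series are random placeholders, and the 20% density is an arbitrary choice. Binary thresholding is only one of several options, and [74] discusses the pitfalls of these choices. Cohort comparisons would then operate either on per-subject values such as these or on individual edges, as in the network-based statistic [75].

```python
import numpy as np

def binarize_by_density(conn, density):
    """Keep the strongest `density` fraction of off-diagonal edges (undirected, unweighted)."""
    n = conn.shape[0]
    iu = np.triu_indices(n, k=1)
    k = int(round(density * iu[0].size))
    keep = np.argsort(conn[iu])[::-1][:k]  # indices of the k largest weights
    adj = np.zeros((n, n), dtype=bool)
    adj[iu[0][keep], iu[1][keep]] = True
    return adj | adj.T

def clustering(adj):
    """Local clustering coefficient: fraction of a node's neighbour pairs that are linked."""
    a = adj.astype(float)
    deg = a.sum(axis=1)
    triangles = np.diag(a @ a @ a) / 2  # each triangle through a node is counted twice
    possible = deg * (deg - 1) / 2
    return np.divide(triangles, possible, out=np.zeros_like(triangles), where=possible > 0)

def global_efficiency(adj):
    """Mean inverse shortest-path length over all ordered node pairs (BFS from each node)."""
    n = adj.shape[0]
    total = 0.0
    for s in range(n):
        dist = np.full(n, np.inf)
        dist[s] = 0
        frontier = np.zeros(n, dtype=bool)
        frontier[s] = True
        step = 0
        while frontier.any():
            step += 1
            frontier = adj[frontier].any(axis=0) & np.isinf(dist)  # unvisited neighbours
            dist[frontier] = step
        total += (1.0 / dist[np.arange(n) != s]).sum()  # unreachable nodes contribute 0
    return total / (n * (n - 1))

rng = np.random.default_rng(3)
ts = rng.standard_normal((200, 20))     # placeholder regional time series (time x regions)
conn = np.corrcoef(ts, rowvar=False)    # functional connectivity matrix
adj = binarize_by_density(conn, 0.2)
print(f"mean degree: {adj.sum(axis=1).mean():.1f}")
print(f"mean clustering: {clustering(adj).mean():.3f}")
print(f"global efficiency: {global_efficiency(adj):.3f}")
```

Fixing the density rather than a correlation cutoff gives every subject the same number of edges. Otherwise, differences in overall connection strength would propagate directly into the topological measures when networks are compared.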

References

Symms M, Jäger HR, Schmierer K, Yousry TA (2004) A review of structural magnetic resonance neuroimaging. J Neurol Neurosurg Psychiatry 75(9):1235–1244

Raichle ME (1998) Behind the scenes of functional brain imaging: a historical and physiological perspective. Proc Natl Acad Sci U S A 95(3):765–772

Mateos-Pérez JM, Dadar M, Lacalle-Aurioles M, Iturria-Medina Y, Zeighami Y, Evans AC (2018) Structural neuroimaging as clinical predictor: a review of machine learning applications. Neuroimage Clin 20:506–522

Silva MA, See AP, Essayed WI, Golby AJ, Tie Y (2018) Challenges and techniques for presurgical brain mapping with functional MRI. Neuroimage Clin 17:794–803

Petrella JR et al (2006) Preoperative functional MR imaging localization of language and motor areas: effect on therapeutic decision making in patients with potentially resectable brain tumors. Radiology 240(3):793–802

Lee MH, Smyser CD, Shimony JS (2013) Resting-state fMRI: a review of methods and clinical applications. AJNR Am J Neuroradiol 34(10):1866–1872

Leuthardt EC et al (2018) Integration of resting state functional MRI into clinical practice—a large single institution experience. PLoS ONE 13(6):e0198349

Specht K (2020) Current challenges in translational and clinical fMRI and future directions. Front Psychiatry. https://doi.org/10.3389/fpsyt.2019.00924

Wu C et al (2021) Clinical applications of magnetic resonance imaging based functional and structural connectivity. Neuroimage 244:118649

Logothetis NK (2008) What we can do and what we cannot do with fMRI. Nature 453(7197):869–878

Vaquero JJ, Kinahan P (2015) Positron emission tomography: current challenges and opportunities for technological advances in clinical and preclinical imaging systems. Annu Rev Biomed Eng 17:385–414

Soares DP, Law M (2009) Magnetic resonance spectroscopy of the brain: review of metabolites and clinical applications. Clin Radiol 64(1):12–21

Juweid ME, Cheson BD (2006) Positron-emission tomography and assessment of cancer therapy. N Engl J Med 354(5):496–507

Herholz K, Coope D, Jackson A (2007) Metabolic and molecular imaging in neuro-oncology. Lancet Neurol 6(8):711–724

Pelletier D et al (2014) Pathogenesis of multiple sclerosis: insights from molecular and metabolic imaging. Lancet Neurol 13(8):807–822

Meyer JH, Cervenka S, Kim MJ, Kreisl WC, Henter ID, Innis RB (2020) Neuroinflammation in psychiatric disorders: PET imaging and promising new targets. Lancet Psychiatry. https://doi.org/10.1016/S2215-0366(20)30255-8

Jirsa VK et al (2017) The virtual epileptic patient: individualized whole-brain models of epilepsy spread. Neuroimage 145:377–388

Cocchi L, Harding IH, Lord A, Pantelis C, Yucel M, Zalesky A (2014) Disruption of structure—function coupling in the schizophrenia connectome. Neuroimage Clin 4:779–787

Rosenthal G et al (2018) Mapping higher-order relations between brain structure and function with embedded vector representations of connectomes. Nat Commun 9(1):2178

Fischl B (2012) FreeSurfer. Neuroimage 62(2):774–781

Ashburner J, Friston KJ (2000) Voxel-based morphometry—the methods. Neuroimage 11(6):805–821

Ceccarelli A et al (2008) A voxel-based morphometry study of grey matter loss in MS patients with different clinical phenotypes. Neuroimage 42(1):315–322

Friston KJ, Holmes AP, Worsley KJ, Poline J‑P, Frith CD, Frackowiak RSJ (1994) Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp 2(4):189–210

Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17(11):4302–4311

Kriegeskorte N, Goebel R, Bandettini P (2006) Information-based functional brain mapping. Proc Natl Acad Sci U S A 103(10):3863–3868

Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P (2001) Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293(5539):2425–2430

Langs G, Menze BH, Lashkari D, Golland P (2011) Detecting stable distributed patterns of brain activation using Gini contrast. Neuroimage 56(2):497–507

Haxby JV et al (2011) A common, high-dimensional model of the representational space in human ventral temporal cortex. Neuron 72(2):404–416

Langs G et al (2014) Decoupling function and anatomy in atlases of functional connectivity patterns: language mapping in tumor patients. Neuroimage 103:462–475

Wachinger C, Reuter M, Klein T (2018) DeepNAT: deep convolutional neural network for segmenting neuroanatomy. Neuroimage 170:434–445

Balakrishnan G, Zhao A, Sabuncu MR, Guttag J, Dalca AV (2019) VoxelMorph: a learning framework for deformable medical image registration. IEEE Trans Med Imaging. https://doi.org/10.1109/TMI.2019.2897538

Menze BH et al (2015) The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans Med Imaging 34(10):1993–2024

Furtner J et al (2017) Survival prediction using temporal muscle thickness measurements on cranial magnetic resonance images in patients with newly diagnosed brain metastases. Eur Radiol. https://doi.org/10.1007/s00330-016-4707-6

Sabuncu MR (2015) Clinical prediction from structural brain MRI scans: a large-scale empirical study. Neuroinform 13(1):31

Ashburner J, Friston KJ (2005) Unified segmentation. Neuroimage. https://doi.org/10.1016/j.neuroimage.2005.02.018

Zhang Y, Brady M, Smith S (2001) Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Trans Med Imaging 20(1):45–57

Ding Y et al (2020) Using deep convolutional neural networks for neonatal brain image segmentation. Front Neurosci. https://doi.org/10.3389/fnins.2020.00207

Payette K et al (2021) An automatic multi-tissue human fetal brain segmentation benchmark using the fetal tissue annotation dataset. Sci Data 8(1):1–14

Cai JC et al (2020) Fully automated segmentation of head CT neuroanatomy using deep learning. Radiol Artif Intell. https://doi.org/10.1148/ryai.2020190183

Ronneberger O, Fischer P, Brox T (2015) U‑net: convolutional networks for biomedical image segmentation. Med Image Comput Comput Assist Interv. https://doi.org/10.48550/arXiv.1505.04597

Mi E, Mauricaite R, Pakzad-Shahabi L, Chen J, Ho A, Williams M (2021) Deep learning-based quantification of temporalis muscle has prognostic value in patients with glioblastoma. Br J Cancer 126(2):196–203

Park G et al (2021) White matter hyperintensities segmentation using the ensemble U‑Net with multi-scale highlighting foregrounds. Neuroimage 237:118140

Livne M et al (2019) A U‑Net deep learning framework for high performance vessel segmentation in patients with cerebrovascular disease. Front Neurosci. https://doi.org/10.3389/fnins.2019.00097

Chen X, Konukoglu E (2018) Unsupervised detection of lesions in brain MRI using constrained adversarial auto-encoders. http://arxiv.org/abs/1806.04972. Accessed 15 Feb 2022

Kickingereder P et al (2019) Automated quantitative tumour response assessment of MRI in neuro-oncology with artificial neural networks: a multicentre, retrospective study. Lancet Oncol 20(5):728–740

Bakas S et al (2017) Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features. Sci Data 4(1):1–13

Aerts HJWL et al (2014) Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat Commun 5:4006

Zhou M et al (2018) Radiomics in brain tumor: image assessment, quantitative feature descriptors, and machine-learning approaches. AJNR Am J Neuroradiol 39(2):208–216

Choi SW et al (2020) Multi-habitat radiomics unravels distinct phenotypic subtypes of glioblastoma with clinical and genomic significance. Cancers. https://doi.org/10.3390/cancers12071707

Kim Y, Cho H‑H, Kim ST, Park H, Nam D, Kong D‑S (2018) Radiomics features to distinguish glioblastoma from primary central nervous system lymphoma on multi-parametric MRI. Neuroradiology 60(12):1297–1305

Kang D et al (2018) Diffusion radiomics as a diagnostic model for atypical manifestation of primary central nervous system lymphoma: development and multicenter external validation. Neuro Oncol 20(9):1251–1261

Jin B et al (2018) Automated detection of focal cortical dysplasia type II with surface-based magnetic resonance imaging postprocessing and machine learning. Epilepsia 59(5):982–992

Ganji Z, Hakak MA, Zamanpour SA, Zare H (2021) Automatic detection of focal cortical dysplasia type II in MRI: is the application of surface-based morphometry and machine learning promising? Front Hum Neurosci 15:608285

Lee HM et al (2020) Unsupervised machine learning reveals lesional variability in focal cortical dysplasia at mesoscopic scale. Neuroimage Clin 28:102438

Eshaghi A et al (2021) Identifying multiple sclerosis subtypes using unsupervised machine learning and MRI data. Nat Commun 12(1):2078

Mouridsen K, Thurner P, Zaharchuk G (2020) Artificial intelligence applications in stroke. Stroke 51(8):2573–2579

Singh G et al (2021) Radiomics and radiogenomics in gliomas: a contemporary update. Br J Cancer 125(5):641–657

Haxby JV (2012) Multivariate pattern analysis of fMRI: the early beginnings. Neuroimage 62(2):852–855

Mitchell TM et al (2008) Predicting human brain activity associated with the meanings of nouns. Science 320(5880):1191–1195

Kay KN, Naselaris T, Prenger RJ, Gallant JL (2008) Identifying natural images from human brain activity. Nature 452(7185):352–355

Huth AG, Lee T, Nishimoto S, Bilenko NY, Vu AT, Gallant JL (2016) Decoding the semantic content of natural movies from human brain activity. Front Syst Neurosci. https://doi.org/10.3389/fnsys.2016.00081

De Martino F et al (2008) Combining multivariate voxel selection and support vector machines for mapping and classification of fMRI spatial patterns. Neuroimage 43(1):44–58. https://doi.org/10.1016/j.neuroimage.2008.06.037

Hanson SJ, Halchenko YO (2008) Brain reading using full brain support vector machines for object recognition: there is no ‘face’ identification area. Neural Comput 20(2):486–503

Farah MJ, Hutchinson JB, Phelps EA, Wagner AD (2014) Functional MRI-based lie detection: scientific and societal challenges. Nat Rev Neurosci 15(2):123–131

Horikawa T, Tamaki M, Miyawaki Y, Kamitani Y (2013) Neural decoding of visual imagery during sleep. Science 340(6132):639–642

Nishimoto S et al (2011) Reconstructing visual experiences from brain activity evoked by natural movies. Curr Biol 21(19):1641–1646

Huth AG, de Heer WA, Griffiths TL, Theunissen FE, Gallant JL (2016) Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532(7600):453–458

Frey M, Nau M, Doeller CF (2021) Magnetic resonance-based eye tracking using deep neural networks. Nat Neurosci 24(12):1772–1779

Schulz M‑A et al (2020) Different scaling of linear models and deep learning in UKBiobank brain images versus machine-learning datasets. Nat Commun 11(1):4238

Abrol A et al (2021) Deep learning encodes robust discriminative neuroimaging representations to outperform standard machine learning. Nat Commun 12(1):353

Nenning K‑H et al (2021) The impact of hippocampal impairment on task-positive and task-negative language networks in temporal lobe epilepsy. Clin Neurophysiol 132(2):404–411

Xu T et al (2020) Cross-species functional alignment reveals evolutionary hierarchy within the connectome. Neuroimage 223:117346

Sporns O (2012) Discovering the human connectome. MIT Press

Fornito A, Zalesky A, Breakspear M (2013) Graph analysis of the human connectome: promise, progress, and pitfalls. Neuroimage 80:426–444

Zalesky A, Fornito A, Bullmore ET (2010) Network-based statistic: identifying differences in brain networks. Neuroimage 53(4):1197–1207

Jakab A et al (2015) Disrupted developmental organization of the structural connectome in fetuses with corpus callosum agenesis. Neuroimage 111:277–288

Nenning K‑H et al (2020) Joint embedding: a scalable alignment to compare individuals in a connectivity space. Neuroimage 222:117232

Zhao K, Duka B, Xie H, Oathes DJ, Calhoun V, Zhang Y (2022) A dynamic graph convolutional neural network framework reveals new insights into connectome dysfunctions in ADHD. Neuroimage 246:118774

Richards BA et al (2019) A deep learning framework for neuroscience. Nat Neurosci 22(11):1761–1770

Kriegeskorte N (2015) Deep neural networks: a new framework for modeling biological vision and brain information processing. Annu Rev Vis Sci 1:417–446

Goulas A, Damicelli F, Hilgetag CC (2021) Bio-instantiated recurrent neural networks: Integrating neurobiology-based network topology in artificial networks. Neural Netw 142:608–618

Bengio Y, Lee D‑H, Bornschein J, Mesnard T, Lin Z (2015) Towards biologically plausible deep learning. http://arxiv.org/abs/1502.04156. Accessed 30 Aug 2022

Kriegeskorte N et al (2008) Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron 60(6):1126–1141

Khaligh-Razavi S‑M, Kriegeskorte N (2014) Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Comput Biol 10(11):e1003915. https://doi.org/10.1371/journal.pcbi.1003915

Dupré la Tour T, Lu M, Eickenberg M (2021) A finer mapping of convolutional neural network layers to the visual cortex. https://openreview.net/forum?id=EcoKpq43Ul8 (SVRHM 2021 Workshop). Accessed 30 Aug 2022

Fox MD, Greicius M (2010) Clinical applications of resting state functional connectivity. Front Syst Neurosci 4:19

Du Y, Fu Z, Calhoun VD (2018) Classification and prediction of brain disorders using functional connectivity: promising but challenging. Front Neurosci. https://doi.org/10.3389/fnins.2018.00525

Nenning K‑H et al (2020) Distributed changes of the functional connectome in patients with glioblastoma. Sci Rep 10(1):18312

Stoecklein VM et al (2020) Resting-state fMRI detects alterations in whole brain connectivity related to tumor biology in glioma patients. Neuro Oncol 22(9):1388–1398

Foesleitner O et al (2020) Language network reorganization before and after temporal lobe epilepsy surgery. J Neurosurg 134(6):1–9

Heinsfeld AS, Franco AR, Craddock RC, Buchweitz A, Meneguzzi F (2018) Identification of autism spectrum disorder using deep learning and the ABIDE dataset. Neuroimage Clin 17:16–23

Eslami T, Mirjalili V, Fong A, Laird AR, Saeed F (2019) ASD-DiagNet: a hybrid learning approach for detection of autism spectrum disorder using fMRI data. Front Neuroinform. https://doi.org/10.3389/fninf.2019.00070

Damaraju E et al (2014) Dynamic functional connectivity analysis reveals transient states of dysconnectivity in schizophrenia. Neuroimage Clin 5:298–308

Siddiqui MK, Morales-Menendez R, Huang X, Hussain N (2020) A review of epileptic seizure detection using machine learning classifiers. Brain Inform 7(1):1–18

Acharya UR, Oh SL, Hagiwara Y, Tan JH, Adeli H (2018) Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput Biol Med 100:270–278

Dubreuil-Vall L, Ruffini G, Camprodon JA (2020) Deep learning convolutional neural networks discriminate adult ADHD from healthy individuals on the basis of event-related spectral EEG. Front Neurosci. https://doi.org/10.3389/fnins.2020.00251

Klein A et al (2009) Evaluation of 14 nonlinear deformation algorithms applied to human brain MRI registration. Neuroimage 46(3):786–802

Cheng J, Dalca AV, Fischl B, Zöllei L, Alzheimer’s Disease Neuroimaging Initiative (2020) Cortical surface registration using unsupervised learning. Neuroimage 221:117161

Mueller S et al (2013) Individual variability in functional connectivity architecture of the human brain. Neuron 77(3):586–595

Schmitt JE, Raznahan A, Liu S, Neale MC (2021) The heritability of cortical folding: evidence from the human connectome project. Cereb Cortex 31(1):702–715

Wang D et al (2015) Parcellating cortical functional networks in individuals. Nat Neurosci 18(12):1853–1860

Kong R et al (2021) Individual-specific areal-level parcellations improve functional connectivity prediction of behavior. Cereb Cortex 31(10):4477–4500

Margulies DS et al (2016) Situating the default-mode network along a principal gradient of macroscale cortical organization. Proc Natl Acad Sci U S A 113(44):12574–12579

Nenning K‑H, Liu H, Ghosh SS, Sabuncu MR, Schwartz E, Langs G (2017) Diffeomorphic functional brain surface alignment: functional demons. Neuroimage 156:456–465

Burger B et al (2022) Disentangling cortical functional connectivity strength and topography reveals divergent roles of genes and environment. Neuroimage 247:118770

Bazeille T, DuPre E, Richard H, Poline J‑B, Thirion B (2021) An empirical evaluation of functional alignment using inter-subject decoding. Neuroimage 245:118683

Funding

This work has been partially funded by the European Commission (TRABIT 765148), the Austrian Science Fund (FWF) (P 35189, P 34198), and the Vienna Science and Technology Fund (WWTF) Project No. LS20-065 (PREDICTOME).

Open access funding provided by Medical University of Vienna.

Ethics declarations

Conflict of interest

G. Langs is co-founder and shareholder of contextflow GmbH. K.-H. Nenning declares that he has no competing interests.

For this article no studies with human participants or animals were performed by any of the authors. All studies mentioned were in accordance with the ethical standards indicated in each case.

The supplement containing this article is not sponsored by industry.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nenning, KH., Langs, G. Machine learning in neuroimaging: from research to clinical practice. Radiologie 62 (Suppl 1), 1–10 (2022). https://doi.org/10.1007/s00117-022-01051-1

DOI: https://doi.org/10.1007/s00117-022-01051-1