Abstract

Though the \({\mathcal {A}}\)-quasiconvexity condition has been thoroughly explored since its introduction, no explicit examples of associated variational principles have been considered except in the classical \({\text {curl}}\)-case. Our aim is to propose such a family of problems in the \({\text {div}}{-}{\text {curl}}\)-situation, and to explore the corresponding \({\mathcal {A}}\)-polyconvexity condition as the main structural assumption ensuring weak lower semicontinuity and existence results.


1 Introduction

Vector variational problems for an integral functional of the form

are of paramount importance for hyper-elasticity, where the internal energy density

has to comply with a number of important conditions. In particular, the structural properties of W with respect to its gradient variable U are central to the existence of equilibrium states under typical boundary conditions. Another fundamental motivation to examine this family of variational problems is the study of non-linear systems of PDEs. As a matter of fact, this variational approach is the main method to show existence of weak solutions for such systems beyond the convex case. See [7] for a discussion of these topics.

In these classic problems, the presence of the gradient variable \(U=\nabla u\) is a major feature to be understood, to the point that it determines the kind of structural assumption to be demanded on the dependence of W on U. This leads to the quasiconvexity condition in the sense of Morrey [24], a property that the community is still struggling to understand. Under suitable growth conditions, this condition is precisely equivalent to the weak lower semicontinuity of the functional E(u) above over the usual Sobolev classes of functions.

The main source of quasiconvex functions which are not convex is the class of polyconvex integrands. These can be defined and determined in a very natural way once the collection of weakly continuous integrands has been identified. In known existence results for minimizers of the functional E under the usual boundary conditions in hyper-elasticity, the polyconvexity of W with respect to the variable U is always assumed, even though the class of quasiconvex integrands is known to be strictly larger than that of polyconvex densities.

The weak lower semicontinuity property has been treated in a much more general framework in which a constant-rank, linear partial differential operator of the form

is involved. The concept of \({\mathcal {A}}\)-quasiconvexity is then introduced [10] and shown to be necessary and sufficient [16] for the weak lower semicontinuity of a functional of the form

under the differential constraint \({\mathcal {A}}v=0\), possibly in addition to suitable boundary restrictions. The importance of such an extension can hardly be overstated, as it considerably broadens the analytical framework. For the particular case \({\mathcal {A}}={\text {curl}}\), we recover the classical gradient case.

This new theory is by now very well understood, covering the most fundamental developments: Young measures and \({\mathcal {A}}\)-free measures, relaxation, homogenization, regularity, dynamics, etc. (see [1, 3, 8, 12,13,14,15, 17, 18, 20, 21, 23, 28], among others); and yet explicit variational problems under more general differential constraints of the kind \({\mathcal {A}}v=0\) have not been systematically pursued, probably due to a lack of examples of some relevance in Analysis or in applications. In the same vein, the natural and straightforward concept of \({\mathcal {A}}\)-polyconvexity, as far as we can tell, has not been explicitly examined (except recently in [18], and in a different form in [4]), again possibly because of a lack of examples where this concept goes beyond plain convexity and can be used in a fundamental way to show existence of solutions for such variational problems.

Our goal in this contribution is two-fold. On the one hand, we would like to focus on a particular differential operator of the above kind that is distinct from (but not far from) the gradient situation, to provide and motivate some examples of variational problems like (1.2) of a certain interest, and to resort to \({\mathcal {A}}\)-polyconvexity as the main hypothesis ensuring the existence of minimizers. On the other hand, given that the representation of polyconvex functions as compositions of convex and weakly continuous functions involves some ambiguity, we have pushed some standard ideas and explicit calculations to gain more insight into this issue.

1.1 A-Polyconvexity

Suppose a certain non-linear vector function \(A(x):{\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) is given. Its components will play the role of all (or some) independent weakly continuous functions for a certain constant-rank differential operator of the kind given above. For the computations to be performed, however, it does not matter where the vector function A comes from; it is simply assumed to be given. For this reason, we make no reference to the operator \({\mathcal {A}}\) here.

Definition 1

A function \(\psi (x): {\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is called A-polyconvex if there is a function \(\Psi (x, y): {\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\), convex in the usual sense, such that \(\psi (x)=\Psi (x, A(x))\).

It is well known that the representation of \(\psi (x)\) in terms of \(\Psi (x, y)\) is not unique. There is, however, a canonical representative, namely the largest such convex function \(\Psi \). This is in fact standard (see, for instance, [11]). Consider the function

for a function \(\psi (x): {\mathbb {R}}^n\rightarrow {\mathbb {R}}\). More generally, if \(\phi (x, y): {\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\), then

Proposition 2

A function \(\psi (x): {\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is A-polyconvex if and only if

where C stands for convexification (the convex hull) in the usual sense. Moreover, if \(\psi (x)=\phi (x, A(x))\) with \(\phi \) convex, then

The conclusion in this proposition lets us define a canonical representative for A-polyconvex functions.

Definition 3

Let \(\psi (x)=\phi (x, A(x))\) be A-polyconvex with \(\phi (x, y)\) convex. The function \(C\phi _A(x, y)\) is called its canonical representative as an A-polyconvex function.
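For the explicit calculations below it may help to keep in mind how \(C\phi _A\) can be computed. The display is a sketch in standard convex-analysis notation, not a reproduction of the lost formulas: it assumes that \(\phi _A\) denotes the restriction of \(\phi \) to the graph of A, extended by \(+\infty \) (as the examples in Sect. 2 indicate), and it uses Carathéodory's theorem in \({\mathbb {R}}^{n+m}\), which is why at most \(n+m+1\) points are needed (three in the scalar examples, four in the separately convex case, six in the \(2\times 2\) case).

$$\begin{aligned} \phi _A(x,y)&=\begin{cases}\phi (x,A(x))=\psi (x), & y=A(x),\\ +\infty , & y\ne A(x),\end{cases}\\ C\phi _A(x,y)&=\inf \Big \{\sum _{i=1}^{n+m+1}\alpha _i\,\psi (x_i):\ \alpha _i\ge 0,\ \sum _{i=1}^{n+m+1}\alpha _i=1,\ \sum _{i=1}^{n+m+1}\alpha _i\,(x_i,A(x_i))=(x,y)\Big \}. \end{aligned}$$

Any convex \(\Psi \) with \(\Psi (x,A(x))=\psi (x)\) lies below \(\phi _A\), and hence below \(C\phi _A\); this is why \(C\phi _A\) is the largest representative.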

Though the definition of the convex hull \(C\phi _A(x, y)\) is pretty clear, it may be worthwhile to go through explicit calculations to find its explicit form in some distinguished examples like the ones below. Even in very simple situations, the calculations turn out to be quite demanding, but they may help to gain insight into the nature of A-polyconvexity. The following are the situations examined here:

1. First scalar case. Take \(A(x)=x^3\), and find the canonical representative of \(\psi (x)=x^2\).

2. Second scalar case. Take again \(A(x)=x^3\), and find the canonical representative of \(\psi (x)=x^4+x^3\) as an A-polyconvex function.

3. Separately convex case. Take \(A(x, y)=xy\), and check whether \(\psi (x, y)=x^2+y^2\) is its own canonical representative.

4. The true polyconvex case (\(2\times 2\)-situation). In this case

$$\begin{aligned} A(\xi )=\det \xi ,\quad \psi (\xi )=|\xi |^2, \quad \xi =\begin{pmatrix}\xi _{11}&{}\xi _{12}\\ \xi _{21}&{}\xi _{22}\end{pmatrix}. \end{aligned}$$

Check whether \(\psi \) is its own canonical representative.

1.2 \({\mathcal {A}}\)-Polyconvexity

Suppose a constant-rank operator \({\mathcal {A}}\) as in (1.1) is given.

Definition 4

A continuous, non-linear function \(a(v):{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) is weakly continuous under the differential constraint \({\mathcal {A}}v=0\) if, for a suitable exponent \(p\ge 1\),

implies

Assume the components of the vector function \(A(v):{\mathbb {R}}^m\rightarrow {\mathbb {R}}^d\) are independent functions, weakly continuous under \({\mathcal {A}}v=0\) according to Definition 4. Note that this time the vector function A does come from a (partial) list of weakly continuous functions for the operator \({\mathcal {A}}\) in the sense of the above definition. It is known that every component of A is a polynomial [25].

Definition 5

Let the constant-rank operator \({\mathcal {A}}\) as in (1.1) be given. An integrand W(v) is said to be \({\mathcal {A}}\)-polyconvex if it can be written in the form \(W(v)=\Psi (A(v))\), where \(A(v):{\mathbb {R}}^m\rightarrow {\mathbb {R}}^d\) is a collection of weakly continuous functions for \({\mathcal {A}}\) according to Definition 4, and \(\Psi :{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) is a convex function in the usual sense.

Consider the variational problem

under suitable boundary conditions, for feasible fields \(v\in L^p(\Omega ,{\mathbb {R}}^m)\) (or associated potentials) complying with the previous differential constraint; here \(\Omega \subset {\mathbb {R}}^N\) is a bounded open set with Lipschitz boundary and \(W:\Omega \times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\) is a Carathéodory function. We take for granted that the boundary conditions imposed in the problem define a weakly closed set of competing fields, so that weak limits preserve such boundary conditions. We will denote by

the set of such competing fields.

The following existence result is but a natural generalization of important existence results in hyperelasticity [2].

Theorem 6

Suppose, in addition to the assumptions just indicated, that the integrand \(W(x, \cdot ):{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) complies with:

1. It satisfies the growth condition

$$\begin{aligned} W(x,F)\ge a|F|^p+b, \end{aligned}$$

for some \(p\ge 2\), \(a>0\), \(b\in {\mathbb {R}}\); and

2. It is \({\mathcal {A}}\)-polyconvex in the sense of Definition 5 for a.e. \(x\in \Omega \), for a family of weakly continuous functions \(A:{\mathbb {R}}^m\rightarrow {\mathbb {R}}^d\) such that

$$\begin{aligned} |A(F)|\le C|F|^r,\quad r<p, \quad C>0. \end{aligned}$$

Then the above variational problem admits minimizers \(v\in {\mathcal {L}}\).

The main example corresponds to \({\mathcal {A}}={\text {curl}}\). This is the case of the classic Calculus of Variations, and it is very well studied. Another one is the \({\text {div}}\) case, when complementary principles are utilized [6]. This particular case has recently been revisited [9]. We would like to focus on, and motivate, the \({\text {div}}{-}{\text {curl}}\) case. Though variational principles under the \({\text {div}}{-}{\text {curl}}\)-constraint have been considered sporadically [19], they have not been pursued systematically as far as we can tell.

To be specific, we will stick to the framework in which \(\Omega \subset {\mathbb {R}}^N\), and competing pairs of fields \((v, \nabla u)\) belong to suitable Sobolev spaces under the differential constraint \({\text {div}}v=0\) in \(\Omega \). The family of integrands we would like to consider is of the form

for

if \(t>0\), and \(\Psi =+\infty \), if \(t\le 0\), that is to say

The densities a(x) and b(x), and the exponents p, q, r, may vary within suitable ranges, and small perturbation terms are typically added to ensure coercivity. More specifically, we have the following result.

Lemma 7

If \(a,b>0\) and \(\min \{p,q\}\ge r+1\), the functionals given by (1.4) are \({\text {div}}{-}{\text {curl}}\)-polyconvex.

The proof of this lemma is elementary: simply note that the functions in (1.3) are convex under the conditions just given.

In addition to proving Theorem 6, we will justify the form of the integrands in (1.4), show an existence theorem for minimizers (which will be a corollary of Theorem 6), and give a simple argument to better understand the role of the small perturbations added to ensure coercivity. Needless to say, the div–curl Lemma [26, 30] is central to establishing some non-standard results in this context: it yields the weak continuity of the inner product \(v\cdot \nabla u\) precisely under the differential constraint \({\text {div}}v=0\).
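The role of the constraint \({\text {div}}v=0\) can be seen on a simple laminate. The Python sketch below is our own illustration, not taken from the references: the fields \(u_j=\sin (jx_1)/j\), \(v_j=(c,\cos (jx_1))\) and \(w_j=(\cos (jx_1),0)\) in the plane, the test function \(e^{-x_1}\) and the helper names are all hypothetical choices. Here \(\nabla u_j=(\cos (jx_1),0)\rightharpoonup 0\), \(v_j\) is divergence-free with \(v_j\rightharpoonup (c,0)\), while \(w_j\rightharpoonup 0\) is not divergence-free. Since everything depends on \(x_1\) only, a one-dimensional quadrature suffices.

```python
import math


def pairing(f, phi, n=20000, length=2 * math.pi):
    """Midpoint-rule approximation of the integral of f * phi over [0, length]."""
    h = length / n
    return h * sum(f((k + 0.5) * h) * phi((k + 0.5) * h) for k in range(n))


def phi(s):
    """Hypothetical test function of x1 only."""
    return math.exp(-s)


def products(j, c=2.0):
    """Pair v_j . grad u_j and w_j . grad u_j with phi, at frequency j.

    grad u_j = (cos(j x1), 0) tends weakly to 0;
    v_j = (c, cos(j x1)) is divergence-free, so v_j . grad u_j = c cos(j x1);
    w_j = (cos(j x1), 0) is not divergence-free, so w_j . grad u_j = cos(j x1)**2.
    """
    with_div = pairing(lambda s: c * math.cos(j * s), phi)
    without_div = pairing(lambda s: math.cos(j * s) ** 2, phi)
    return with_div, without_div


for j in (1, 10, 100):
    print(j, products(j))
```

In exact arithmetic the two pairings equal \(c(1-e^{-2\pi })/(1+j^2)\rightarrow 0\), which matches \((c,0)\cdot (0,0)=0\), and \(\tfrac{1}{2}(1-e^{-2\pi })\big (1+1/(1+4j^2)\big )\rightarrow \tfrac{1}{2}(1-e^{-2\pi })\ne 0\): without the divergence constraint, the weak limit of the product is not the product of the weak limits.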

2 Examples for A-Polyconvexity

We cover in this section the calculations involved for the explicit examples listed in Sect. 1.1.

Example 8

In this first scalar case, we take \(\psi ,A:{\mathbb {R}}\rightarrow {\mathbb {R}}\) with \(A(x)=x^3\) and \(\psi (x)=\phi (x,x^3)=x^2\).

The case where only one of the \(\alpha _i\) is non-zero can be treated separately, because it trivially yields \(x^2\) at points (x, y) with \(y=x^3\) and \(+\infty \) otherwise. Now, if at least two of the \(\alpha _i\) are non-zero, the above equality can be written as

As a preliminary step, we study the case where exactly one of the \(\alpha _i\) is zero. Without loss of generality, we take \(\alpha _3=0\), so that, for each (x, y), we have to minimize

subject to

From \(\alpha x_1+(1-\alpha ) x_2=x\) we get \(\alpha =\frac{x_2-x}{x_2-x_1}\), for \(x_2\ne x_1\) (otherwise we fall again into a trivial case). From here, and supposing e.g. \(x_1< x < x_2\), one is led to minimize

subject to

The obtained optimality conditions are

which have no solutions in their domain. The conclusion is that \(x^2\) is the infimum: it is attained at the points where \(y=x^3\) (with \(x_1=x_2=x\)), but it cannot be attained when \(y\ne x^3\).

To prove this, take the case where \(y>x^3\) (the other one being analogous). It is easy to observe that the infimum cannot be smaller than \(x^2\): by hypothesis, \(\alpha (x_1,x_1^3)+(1-\alpha )(x_2,x_2^3)=(x,y)\), so in particular \(\alpha x_1+(1-\alpha )x_2=x\), and, as \(x^2\) is convex, \(\alpha x_1^2+(1-\alpha )x_2^2\ge x^2\). We will prove that the infimum is \(x^2\) and that it is approached when \(x_1\nearrow x\) (and \(x_2\rightarrow +\infty \)). To do this, suppose that (x, y) is fixed (but arbitrary), with \(y>x^3\). We take three sequences of real numbers, \(\alpha _n,x_1^n,x_2^n\), such that \(x_1^n< x< x_2^n\), \(\alpha _n\in (0,1)\) and

If we take \(x_1^n\nearrow x\) then we have \(\alpha _n=\frac{x_2^n-x}{x_2^n-x_1^n}\nearrow 1\). From the other equation in (2.1), we have

One must take some care while computing this limit, to avoid indeterminate forms. But, as \(y-\alpha _n(x_1^n)^3 \rightarrow y-x^3>0\) monotonically, we get

From here, we have

and

To finish the computations, one has to consider the case with three points. But, given the solution obtained in the previous step, and again because the function \(x^2\) is convex, the best possible value is indeed \(x^2\), and this value cannot be lowered by taking convex combinations of two or more points. Hence, in this case, one has

that is, \(\psi \) is its own canonical representative as an A-polyconvex function.
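The limit in this example can also be read off from an explicit identity. The short computation below is ours rather than a reconstruction of the lost displays; it assumes the two-point constraints \(\alpha x_1+(1-\alpha )x_2=x\), \(\alpha x_1^3+(1-\alpha )x_2^3=y\) with \(x_1<x<x_2\), and writes \(\delta =x-x_1>0\):

$$\begin{aligned} 1-\alpha =\frac{\delta }{x_2-x_1},\qquad x_2^2+x_1x_2+x_1^2=\frac{y-x_1^3}{\delta },\qquad \alpha x_1^2+(1-\alpha )x_2^2=x_1^2+\delta (x_1+x_2)=x^2+\delta (x_2-x). \end{aligned}$$

As \(\delta \searrow 0\) with \(y>x^3\) fixed, the middle relation forces \(x_2\sim \sqrt{(y-x^3)/\delta }\), so \(\delta (x_2-x)\sim \sqrt{\delta (y-x^3)}\rightarrow 0\) and the cost tends to \(x^2\). The same bookkeeping for \(\psi (x)=x^4+x^3\) produces the excess \(\alpha x_1^4+(1-\alpha )x_2^4-x^4\), whose dominant part \((1-\alpha )x_2^4\sim \delta x_2^3\) blows up, which suggests why this argument does not carry over to the next example.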

We consider again a scalar function.

Example 9

In this second scalar case, we take \(\psi ,A:{\mathbb {R}}\rightarrow {\mathbb {R}}\) with \(A(x)=x^3\) and \(\psi (x)=\phi (x,x^3)=x^4+x^3\).

in which we supposed that at least two of the \(\alpha _i\) are non-zero, to avoid trivialities. We will start again by dealing with the situation where one of the \(\alpha _i\) is zero. For each (x, y), we have to minimize

subject to

For \(x_1\ne x_2\) we have \(\alpha =\frac{x_2-x}{x_2-x_1}\) (notice that, as before, if \(x_2=x_1=x\), then \(y=x^3\), and so the minimum is \(x^4+x^3\)). From here, and supposing without loss of generality that \(x_1< x < x_2\), one is led to minimize

subject to

The optimality conditions are

with \(\gamma =(1-\lambda )\) in the last system. As

is a cubic equation in \(\gamma \), it always has at least one real solution. Substituting it (or them) into the expressions of \(x_1\) and \(x_2\), and then into the function being minimized, leads to the minimum. Unfortunately, it is not possible to obtain a closed-form expression for the solution in this case, but we can still compute \(C\phi _A(x,y)\) numerically for each (x, y). Nevertheless, some interesting conclusions can be drawn: for fixed \(\gamma \), equation (2.2) defines the line that passes through the points \((x_1,x_1^3)\) and \((x_2,x_2^3)\). This means that the minimum for any (x, y) on this segment will always be attained at the end points \((x_1,x_1^3)\) and \((x_2,x_2^3)\); furthermore, \(C\phi _A(x,y)\) will be an affine function along these segments. In particular, this implies that we cannot have \(C\phi _A(x,y)=x^4+y\) if the minimum is attained in the case where one of the \(\alpha _i\) is zero.

In order to obtain \(C\phi _A(x,y)\), we must also analyze the situation when \(\alpha _1\alpha _2\alpha _3\ne 0\). Here we follow a different technique: instead of solving the corresponding system of optimality conditions, we consider two sub-cases, each of which will be reduced to the situation of the previous case. The idea of this reduction came to us after a couple of hundred numerical computations, in which we always obtained optimal solutions with only two distinct points instead of three.

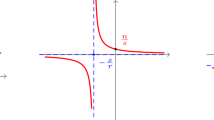

First, we consider the sub-case when one of the points \((x_3,x_3^3),(x_4,x_4^3)\) or \((x_5,x_5^3)\) of the candidate minimizer coincides with one of the two points \((x_1,x_1^3), (x_2,x_2^3)\) of the minimizer obtained from solving (2.2) (see Fig. 1). This case is easy to rule out: suppose that the coinciding point is \((x_1,x_1^3)\). It is easy to see that

with

for some \(\beta \in (0,1)\), because for any point that lies within the segment \(\overline{(x_1,x_1^3),(x_2,x_2^3)}\), the minimum is attained exactly as a convex combination of the values at \((x_1,x_1^3)\) and \((x_2,x_2^3)\).

In the second and last sub-case, none of the points \((x_3,x_3^3),(x_4,x_4^3),(x_5,x_5^3)\) coincides with either of the two points \((x_1,x_1^3),(x_2,x_2^3)\), but at least one of the three segments \(\overline{(x_3,x_3^3),(x_4,x_4^3)}\), \(\overline{(x_4,x_4^3),(x_5,x_5^3)}\) or \(\overline{(x_5,x_5^3),(x_3,x_3^3)}\) has to intersect the segment \(\overline{(x_1,x_1^3),(x_2,x_2^3)}\) (see Fig. 2). This case can also be ruled out. Suppose, without loss of generality, that \(\overline{(x_4,x_4^3),(x_5,x_5^3)}\) intersects the segment \(\overline{(x_1,x_1^3),(x_2,x_2^3)}\). Then

for some \(\beta \in (0,1)\); otherwise the minimum at the common point of the segments \(\overline{(x_4,x_4^3),(x_5,x_5^3)}\) and \(\overline{(x_1,x_1^3),(x_2,x_2^3)}\), obtained with convex combinations of two support points on the graph of \(x^3\), would be smaller than the minimum at (x, y), which is not possible.

Consequently, the minimum is attained in the case where one of the \(\alpha _i\) is zero, so we cannot have \(C\phi _A(x,y)=x^4+y\), and \(\psi \) is not its own canonical representative. Nevertheless, we can compute \(C\phi _A(x,y)\) at each (x, y) by the algorithm described before.
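Since no closed form is available here, a crude numerical sketch may help. The code is ours and uses a different technique from the one in the text: instead of solving the cubic optimality system, it scans the first support point \(x_1\) over a grid, recovers the second point from the chord condition \((x-x_1)(x_2^2+x_1x_2+x_1^2)=y-x_1^3\), and keeps the smallest two-point value. It therefore only gives an upper estimate of \(C\phi _A(x,y)\), relying on the reduction to two points argued above; the grid and the helper names are hypothetical.

```python
import math


def chord_partner(x, y, x1):
    """For x1 != x, return x2 such that (x, y) lies strictly inside the chord
    joining (x1, x1**3) and (x2, x2**3), or None if no such x2 exists."""
    k = (y - x1**3) / (x - x1)          # chord condition: x2**2 + x1*x2 + x1**2 == k
    if k <= x**2 + x1 * x + x1**2:      # then x is not strictly between the two roots
        return None
    root = math.sqrt(4 * k - 3 * x1**2)  # positive, since k > 3*x1**2/4 here
    # take the root lying on the opposite side of x from x1
    return (-x1 + root) / 2 if x1 < x else (-x1 - root) / 2


def two_point_hull(psi, x, y, x1_grid):
    """Upper estimate of C phi_A(x, y) for A(t) = t**3, using only two-point
    convex combinations of graph points, with x1 scanned over x1_grid."""
    if y == x**3:
        return psi(x)
    best = math.inf
    for x1 in x1_grid:
        if x1 == x:
            continue
        x2 = chord_partner(x, y, x1)
        if x2 is None:
            continue
        alpha = (x2 - x) / (x2 - x1)    # weight on (x1, x1**3), lies in (0, 1)
        best = min(best, alpha * psi(x1) + (1 - alpha) * psi(x2))
    return best


def psi9(t):
    return t**4 + t**3                  # the integrand of Example 9


grid = [-3 + 6 * i / 6000 for i in range(6001)]  # hypothetical search grid
x, y = 0.0, 0.5
print(two_point_hull(psi9, x, y, grid), x**4 + y)
```

By Jensen's inequality applied to \(t\mapsto t^4\), every two-point value is at least \(x^4+y\), so the first printed number should never fall below the second. With \(\psi (t)=t^2\) and a grid accumulating at x from the left, the same routine approaches \(x^2\), in line with Example 8.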

Next, we proceed with the separately convex case. Although it is not associated with a constant-rank operator, it is a well-known, important example, introduced by Tartar [31] as a model to study rank-one convexity.

Example 10

For \(\psi ,A:{\mathbb {R}}^2\rightarrow {\mathbb {R}}\) with \(A(x,y)=xy\) and \(\psi (x,y)=\phi (x,y,xy)=x^2+y^2\), we have

Again, in the last equality, we have supposed that at least two of the \(\alpha _i\) are non-zero, to avoid trivialities. To simplify the minimization problem, we start once more with the situation where two of the \(\alpha _i\) are zero; without loss of generality, \(\alpha _3=\alpha _4=0\). For each (x, y, z), we are left to minimize

subject to

The optimality conditions are

The first solution is always well defined for \(z\le xy\) and the second for \(z\ge xy\) (recall that, in particular, we can take y in the interior of the segment whose end points are \(y_1\) and \(y_2\); otherwise we interchange the roles of \(y_2\) and \(x_2\)). Computing the minimum, one gets

Notice that one need not take more than two of the \(\alpha _i\ne 0\) in the minimization problem, because the function that we have obtained is already convex and lies below \(\phi _A(x,y,z)\). As in the previous case, \(\psi (x, y)\) is not its own canonical representative.
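A short cross-check of our own (not the route through optimality conditions taken above) gives a closed form for the hull in this example. It rests on the identities

$$\begin{aligned} x^2+y^2+2(z-xy)=(x-y)^2+2z,\qquad x^2+y^2-2(z-xy)=(x+y)^2-2z. \end{aligned}$$

Both right-hand sides are convex in (x, y, z) and equal \(x^2+y^2\) on the surface \(z=xy\), so their maximum, \(x^2+y^2+2|z-xy|\), is a convex function below \(\phi _A\), hence below \(C\phi _A\). Conversely, for \(a,b>0\), the two-point combinations

$$\begin{aligned} \tfrac{b}{a+b}(x+a,y+a)+\tfrac{a}{a+b}(x-b,y-b)&=(x,y),\quad \text {mean of products}=xy+ab,\quad \text {mean of } x^2+y^2=x^2+y^2+2ab,\\ \tfrac{b}{a+b}(x+a,y-a)+\tfrac{a}{a+b}(x-b,y+b)&=(x,y),\quad \text {mean of products}=xy-ab,\quad \text {mean of } x^2+y^2=x^2+y^2+2ab, \end{aligned}$$

reach every \(z\ge xy\) (first line, with \(ab=z-xy\)) and every \(z\le xy\) (second line, with \(ab=xy-z\)) at cost \(x^2+y^2+2|z-xy|\). The two bounds therefore meet, and one obtains

$$\begin{aligned} C\phi _A(x,y,z)=x^2+y^2+2|z-xy|, \end{aligned}$$

which may be compared with the expression in Proposition 11.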

Proposition 11

The canonical representative \(C\phi _A(x, y, z)\) of \(\psi (x, y)=x^2+y^2\) as a separately convex function of the two variables x, y, is

We now proceed to the typical example of polyconvexity, the \({\mathbb {R}}^{2\times 2}\) case; see, e.g., [11].

Example 12

In this fully vector example, we take

with \(A(\xi )=\det \xi \) and

We have

(in the last equality, we have supposed, as usual, that at least two of the \(\alpha _i\) are non-zero). Once again, to simplify the minimization problem, we start with the simplest situation, which here is the case where four of the \(\alpha _i\) are zero; without loss of generality, \(\alpha _3=\alpha _4=\alpha _5=\alpha _6=0\). For each \((\xi ,t)\in {\mathbb {R}}^5\), we have to minimize

subject to

The optimality conditions are

where \(\lambda =(\lambda _1,\lambda _2,\lambda _3,\lambda _4)\), \(\langle a,b\rangle \) stands for the inner product of the vectors \(a,b\in {\mathbb {R}}^4\), and \({\text {adj}}\xi _i \) is the matrix (taken as a vector in \({\mathbb {R}}^4\)) of all the \(1\times 1\) minors of the matrix \(\xi _i\). To lighten the notation, we write, when necessary, \(\xi =(\xi _{11},\xi _{12},\xi _{21},\xi _{22})=(x,y,z,w)\) and \(\xi _i=(\xi _{i,11},\xi _{i,12},\xi _{i,21},\xi _{i,22})=(x_i,y_i,z_i,w_i)\). The solutions of the above system are

and

The first solution is well defined for \(t\ge \det \xi \) and the second for \(t\le \det \xi \) (notice that \(\xi _1,\xi _2\ne \xi \), so, by interchanging the variables for which we solve the system of optimality conditions, one can suppose without loss of generality that \((x_2-x)^2+(z_2-z)^2>0\)). Computing the minimum, one gets

Notice that, as in the separately convex case, one need not take more than two of the \(\alpha _i\ne 0\) in the minimization problem, because the function obtained is already convex and lies below \(\phi _A(\xi ,t)\). Hence, the answer to the question of whether \(\psi \) is its own canonical representative is again negative.
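The same two-bound reasoning appears to carry over to this case: since \(|\xi |^2-2\det \xi =(\xi _{11}-\xi _{22})^2+(\xi _{12}+\xi _{21})^2\) and \(|\xi |^2+2\det \xi =(\xi _{11}+\xi _{22})^2+(\xi _{12}-\xi _{21})^2\), the function \(|\xi |^2+2|t-\det \xi |\) is the maximum of two convex functions that agree with \(|\xi |^2\) on \(t=\det \xi \), hence a convex minorant of \(\phi _A\). The Python sketch below is our own numerical sanity check of the matching upper bound: for given \((\xi ,t)\) it builds a two-point convex combination of points \((\xi _k,\det \xi _k)\) along \(\eta =I\) (if \(t\ge \det \xi \)) or \(\eta ={\text {diag}}(1,-1)\) (otherwise). Matrices are stored as tuples \((\xi _{11},\xi _{12},\xi _{21},\xi _{22})\); this layout, the step length and all helper names are hypothetical.

```python
import random


def det2(m):
    """Determinant of a 2x2 matrix stored as (m11, m12, m21, m22)."""
    m11, m12, m21, m22 = m
    return m11 * m22 - m12 * m21


def sqnorm(m):
    """Squared Frobenius norm |m|^2."""
    return sum(v * v for v in m)


def candidate_hull(xi, t):
    """Convex minorant of phi_A: maximum of the two convex quadratics above."""
    return sqnorm(xi) + 2 * abs(t - det2(xi))


def two_point_split(xi, t, a=1.0):
    """Write (xi, t) as w1*(xi1, det xi1) + w2*(xi2, det xi2), moving from xi
    along eta = I (if t >= det xi) or eta = diag(1, -1) (otherwise)."""
    gap = t - det2(xi)
    eta = (1.0, 0.0, 0.0, 1.0) if gap >= 0 else (1.0, 0.0, 0.0, -1.0)
    b = abs(gap) / a                    # then a*b*det(eta) equals gap
    xi1 = tuple(p + a * e for p, e in zip(xi, eta))
    xi2 = tuple(p - b * e for p, e in zip(xi, eta))
    w1 = b / (a + b)                    # w1*a - (1 - w1)*b = 0 keeps the mean at xi
    return [(w1, xi1), (1.0 - w1, xi2)]


def split_cost(parts):
    """Value sum_k w_k |xi_k|^2 of a convex combination of graph points."""
    return sum(w * sqnorm(m) for w, m in parts)


random.seed(0)
for _ in range(3):
    xi = tuple(random.uniform(-2, 2) for _ in range(4))
    t = random.uniform(-4, 4)
    parts = two_point_split(xi, t)
    print(round(split_cost(parts), 10), round(candidate_hull(xi, t), 10))
```

The construction uses \(\det (\xi +s\eta )=\det \xi +s\,({\text {cof}}\,\xi :\eta )+s^2\det \eta \) and \(|\eta |^2=2|\det \eta |\) for the chosen \(\eta \); when the two printed columns agree, upper and lower bounds coincide at the sampled points, consistent with \(C\phi _A(\xi ,t)=|\xi |^2+2|t-\det \xi |\). For diagonal \(\xi ={\text {diag}}(x,y)\) this reduces to the separately convex computation of Example 10.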

Proposition 13

The canonical representative \(C\phi _A(\xi , t)\) of \(\psi (\xi )=|\xi |^2\) as a polyconvex function is

3 Existence Theorems

We start by proving our general existence result, Theorem 6. The proof is quite standard (see, e.g., [29]) and does not require any special ingredient. We restate the theorem here for convenience. It refers to the variational problem

where

is a weakly closed subset.

Theorem 14

Suppose that the integrand \(W(x, \cdot ):{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) is a Carathéodory integrand and complies with:

1. It satisfies the growth condition

$$\begin{aligned} W(x,F)\ge a|F|^p+b, \end{aligned}$$

for some \(p\ge 2\), \(a>0\), \(b\in {\mathbb {R}}\); and

2. It is \({\mathcal {A}}\)-polyconvex in the sense of Definition 5 for a.e. \(x\in \Omega \), for a family of weakly continuous functions \(A:{\mathbb {R}}^m\rightarrow {\mathbb {R}}^d\) such that

$$\begin{aligned} |A(F)|\le C|F|^r,\quad r<p, \quad C>0. \end{aligned}$$

Then the above variational problem admits minimizers \(v\in {\mathcal {L}}\).

Proof

Suppose that \((v_j)\) is a minimizing sequence. Then, by coercivity of W, there exists \(c\in {\mathbb {R}}^+\) such that

and so, for an appropriate subsequence (\(p\ge 2\)), one has

We first prove that I is strongly lower semicontinuous. Suppose that instead of weak convergence in \(L^p(\Omega )\) we have strong convergence, that is

Then, for an appropriate subsequence, it is true that

By the growth condition, \(W(x,F)\ge b\); hence, after adding a constant if necessary, we may assume \(W(x,F)\ge 0\), and so, applying Fatou’s Lemma,

Since every subsequence has a further subsequence for which this holds, it holds for the whole sequence. We now prove the (sequential) weak lower semicontinuity of I. From (3.1), since \(W(x,\cdot )\) is assumed to be \({\mathcal {A}}\)-polyconvex in the sense of Definition 5, and due to the upper bound assumed on A, we have

for some \(q>1\) (one can take \(q=p/r\), since \(|A(v_j)|^{p/r}\le C^{p/r}|v_j|^p\)), and so

in \(L^p(\Omega ,{\mathbb {R}}^m) \times L^{q}(\Omega ,{\mathbb {R}}^d)\). By Mazur’s Lemma, we can find a sequence of convex combinations

with \(0\le \alpha _{j,i}\le 1 \) and \(\sum \nolimits _{i=j}^{\tau (j)} \alpha _{j,i}=1\) such that

in \(L^p(\Omega ,{\mathbb {R}}^m) \times L^{q}(\Omega ,{\mathbb {R}}^d)\). As \(W(x,\cdot )\) is \({\mathcal {A}}\)-polyconvex, there exists a convex \(\Psi (x,\cdot )\) such that \(W(x,X)=\Psi (x,A(X))\), and so

Put

Taking a subsequence, we can assume that \(I ( v_i)\) converges to m; taking \(\liminf \) on both sides of (3.3), we have

On the other hand, by (3.2) and because I is strongly lower semicontinuous,

In particular the boundary conditions are ensured by the weak continuity of the trace and consequently v is a minimizer.\(\square \)

As indicated in the Introduction, Theorem 6 is a natural extension of important results in classical settings. Our motivation was to look for new, interesting explicit examples in non-standard situations. We have been inspired by some variational approaches to the classic Calderón problem [5] in inverse 3D conductivity [22, 27], in which one seeks \(v=\gamma \nabla u\) for a certain unknown conductivity coefficient \(\gamma (x)\). The aim is to recover the unknown coefficient \(\gamma \) by minimizing the functional corresponding to the integrand

with

We take \(\Psi =+\infty \) whenever \(t\le 0\). We plan to examine this approach more specifically in the future.

An existence theorem, as a corollary of Theorem 6, can then be proved based on div–curl-polyconvexity. It can easily be adapted to cover more general situations for integrands of the form (1.4).

Corollary 15

Let \(\Omega \subset {\mathbb {R}}^3\) be a bounded open set with Lipschitz boundary, and outer normal vector n to \(\partial \Omega \). Consider the class

and, for each \(\varepsilon >0\), the functional

where

Then, for each \(\varepsilon >0\), the minimization problem

has at least one solution.

Proof

Consider an arbitrary but fixed \(\varepsilon >0\). \(W_{\varepsilon }\) is \({\mathcal {A}}\)-polyconvex and satisfies the required growth condition. If \((v_j,\nabla u_j, v_j\cdot \nabla u_j)\) is a minimizing sequence, then, possibly for a subsequence, coercivity entails

From (3.6) and (3.7), remembering that \({\text {div}}v_j={\text {div}}v=0\), the div–curl Lemma [26, 30] gives us

in the sense of distributions; in fact, weakly in \(L^2(\Omega ,{\mathbb {R}})\) because of (3.8). Though elementary to check, it is worth stressing that the function in (3.4) is convex in the usual sense, so we are entitled to apply Theorem 6 (or rather its proof) with

to conclude that \((v,u)\in {\mathcal {L}}^+\) is a minimizer. \(\square \)

There is nothing to prevent us from proving a similar existence theorem for a family of integrands like those in (1.3), based on Lemma 7.

Finally, it is worth stressing that the small perturbation added in (3.5) to ensure coercivity is rather harmless, in the following sense.

Proposition 16

Let \(\{(v_\varepsilon ,u_\varepsilon )\}_{\varepsilon >0}\) be a family where, for each \(\varepsilon >0\), \((v_\varepsilon ,u_\varepsilon )\) is a minimizer of \(I_\varepsilon \), that is,

If we put \(I_0(v,u)=I_\varepsilon (v,u)|_{\varepsilon =0}\), then \(\{(v_\varepsilon ,u_\varepsilon )\}_{\varepsilon >0}\) is minimizing for \(I_0\) as \(\varepsilon \rightarrow 0\).

Proof

On the one hand, we have

and, on the other hand,

In particular,

Taking limits on both sides of this last inequality leads us to

from where it follows that \(\{(v_\varepsilon ,u_\varepsilon )\}_{\varepsilon >0}\) is a minimizing sequence for \(I_0\) due to the arbitrariness of \((v, u)\in {\mathcal {L}}^+\). \(\square \)
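A one-dimensional toy model, unrelated to the conductivity functional and chosen only for illustration, shows the mechanism of Proposition 16. Take \(I_0(x)=e^{-x}\), which has infimum 0 on \({\mathbb {R}}\) but no minimizer, and \(I_\varepsilon (x)=e^{-x}+\varepsilon x^2\), which is coercive and strictly convex, with a unique minimizer \(x_\varepsilon \) solving \(x e^{x}=1/(2\varepsilon )\). As presumably for the perturbation in (3.5), the added term is non-negative, so \(I_0\le I_\varepsilon \). The sketch (hypothetical helper names) locates \(x_\varepsilon \) by bisection.

```python
import math


def minimizer_eps(eps, tol=1e-13):
    """Unique minimizer of the toy functional I_eps(x) = exp(-x) + eps*x**2,
    i.e. the root of I_eps'(x) = -exp(-x) + 2*eps*x, found by bisection
    on the equivalent equation x*exp(x) = 1/(2*eps)."""
    target = 1.0 / (2.0 * eps)
    lo, hi = 0.0, max(1.0, math.log(target)) + 1.0  # hi*exp(hi) > target
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if mid * math.exp(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def I0(x):
    """Unperturbed toy functional: inf I0 = 0 is not attained."""
    return math.exp(-x)


for eps in (1e-1, 1e-3, 1e-6):
    x_eps = minimizer_eps(eps)
    print(eps, x_eps, I0(x_eps))  # I0(x_eps) decreases towards inf I0 = 0
```

As \(\varepsilon \) decreases, \(x_\varepsilon \) increases and \(I_0(x_\varepsilon )=2\varepsilon x_\varepsilon \rightarrow 0=\inf I_0\), so the minimizers of the perturbed problems form a minimizing family for \(I_0\), even though \(I_0\) itself has no minimizer.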

Data Availability

Not applicable.

References

Baía, M., Matias, J., Santos, P.M.: Characterization of generalized Young measures in the \({\cal{A} }\)-quasiconvexity context. Indiana Univ. Math. J. 62(2), 487–521 (2013)

Ball, J.M.: Convexity conditions and existence theorems in nonlinear elasticity. Arch. Rational Mech. Anal. 63, 337–403 (1977)

Braides, A., Fonseca, I., Leoni, G.: \({\cal{A} }\)-quasiconvexity: relaxation and homogenization. ESAIM Control Optim. Calc. Var. 5, 539–577 (2000)

Boussaid, O., Kreisbeck, C., Schlömerkemper, A.: Characterizations of symmetric polyconvexity. Arch. Ration. Mech. Anal. 234(1), 1–26 (2019)

Calderón, A.P.: On an inverse boundary value problem. In: Seminar on Numerical Analysis and its Applications to Continuum Physics (Rio de Janeiro, 1980), pp. 65–73, Soc. Brasil. Mat., Rio de Janeiro (1980)

Capecchi, D., Favata, A., Ruta, G.: On the complementary energy in elasticity and its history: the Italian school of nineteenth century. Meccanica 53(1–2), 77–93 (2018)

Ciarlet, P.G.: Mathematical Elasticity. Vol. I. Three-Dimensional Elasticity. Studies in Mathematics and its Applications, vol. 20. North-Holland Publishing Co., Amsterdam (1988)

Conti, S., Gmeineder, F.: \({\cal{A} }\)-quasiconvexity and partial regularity. Calc. Var. Partial Differ. Equ. 61(6), 215 (2022)

Conti, S., Müller, S., Ortiz, M.: Symmetric div-quasiconvexity and the relaxation of static problems. Arch. Ration. Mech. Anal. 235(2), 841–880 (2020)

Dacorogna, B.: Weak Continuity and Weak Lower Semicontinuity of Non-linear Functionals. Lecture Notes in Mathematics, vol. 922. Springer, Berlin (1982)

Dacorogna, B.: Direct Methods in the Calculus of Variations, 2nd edn. Springer, Berlin (2008)

Dacorogna, B., Fonseca, I.: A–B quasiconvexity and implicit partial differential equations. Calc. Var. Partial Differ. Equ. 14(2), 115–149 (2002)

Davoli, E., Fonseca, I.: Periodic homogenization of integral energies under space-dependent differential constraints. Port. Math. 73(4), 279–317 (2016)

Davoli, E., Fonseca, I.: Homogenization of integral energies under periodically oscillating differential constraints. Calc. Var. Partial Differ. Equ. 55(3), Art. 69 (2016)

De Philippis, G., Rindler, F.: On the structure of \({\cal{A} }\)-free measures and applications. Ann. Math. (2) 184(3), 1017–1039 (2016)

Fonseca, I., Müller, S.: \({\cal{A} }\)-quasiconvexity, lower semicontinuity, and Young measures. SIAM J. Math. Anal. 30(6), 1355–1390 (1999)

Fonseca, I., Leoni, G., Müller, S.: \({\cal{A} }\)-quasiconvexity: weak-star convergence and the gap. Ann. Inst. H. Poincaré C Anal. Non Linéaire 21(2), 209–236 (2004)

Guerra, A., Raiţă, B.: Quasiconvexity, null Lagrangians, and Hardy space integrability under constant rank constraints. Arch. Ration. Mech. Anal. 245(1), 279–320 (2022)

Kohn, R.V., Vogelius, M.: Relaxation of a variational method for impedance computed tomography. Commun. Pure Appl. Math. 40(6), 745–777 (1987)

Koumatos, K., Vikelis, A.P.: \({\cal{A} }\)-quasiconvexity, Gårding inequalities, and applications in PDE constrained problems in dynamics and statics. SIAM J. Math. Anal. 53(4), 4178–4211 (2021)

Krämer, J., Krömer, S., Kružík, M., Pathó, G.: \({\cal{A} }\)-quasiconvexity at the boundary and weak lower semicontinuity of integral functionals. Adv. Calc. Var. 10(1), 49–67 (2017)

Maestre, F., Pedregal, P.: Some non-linear systems of PDEs related to inverse problems in conductivity. Calc. Var. Partial Differ. Equ. 60(3), 110 (2021)

Matias, J., Morandotti, M., Santos, P.M.: Homogenization of functionals with linear growth in the context of \({\cal{A} }\)-quasiconvexity. Appl. Math. Optim. 72(3), 523–547 (2015)

Morrey, C.B., Jr.: Quasi-convexity and the lower semicontinuity of multiple integrals. Pac. J. Math. 2, 25–53 (1952)

Murat, F.: Compacité par compensation: condition nécessaire et suffisante de continuité faible sous une hypothèse de rang constant. Ann. Scuola Norm. Sup. Pisa Cl. Sci. (4) 8(1), 69–102 (1981)

Murat, F.: A survey on compensated compactness. In: Contributions to Modern Calculus of Variations (Bologna, 1985), Pitman Res. Notes Math. Ser., vol. 148, pp. 145–183. Longman Sci. Tech., Harlow (1987)

Pedregal, P.: On some non-linear systems of PDEs related to inverse problems in 3-d conductivity. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. RACSAM 116(3), 104 (2022)

Raiţă, B.: Potentials for \({\cal{A} }\)-quasiconvexity. Calc. Var. Partial Differ. Equ. 58(3), 105 (2019)

Rindler, F.: Calculus of Variations. Universitext, pp. xii+444. Springer, Cham (2018). (ISBN: 978-3-319-77636-1; 978-3-319-77637-8)

Tartar, L.: Compensated compactness and applications to partial differential equations. In: Nonlinear Analysis and Mechanics: Heriot–Watt Symposium, vol. IV, pp. 136–212, Res. Notes in Math., vol. 39. Pitman, London (1979)

Tartar, L.: Some remarks on separately convex functions. In: Microstructure and Phase Transition, pp. 191–204, IMA Vol. Math. Appl., vol. 54. Springer, New York (1993)

Acknowledgements

This work was done during the visit of L. Bandeira to the Omeva Research Group at Universidad de Castilla-La Mancha, which he thanks for its support and hospitality. L. Bandeira was partially supported by Centro de Investigação em Matemática e Aplicações (CIMA), through the Project UIDB/04674/2020 of FCT-Fundação para a Ciência e a Tecnologia, Portugal. P. Pedregal was supported by Agencia Estatal de Investigación, and Junta de Comunidades de Castilla-La Mancha Grants PID2020-116207GB-I00, and SBPLY/19/180501/000110.

Funding

Open access funding provided by FCT|FCCN (b-on).


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bandeira, L., Pedregal, P. \({\mathcal {A}}\)-Variational Principles. Milan J. Math. 91, 293–314 (2023). https://doi.org/10.1007/s00032-023-00382-5
