Abstract

We consider the spin boson model with external magnetic field. We prove a path integral formula for the heat kernel, known as the Feynman–Kac–Nelson (FKN) formula. We use this path integral representation to express the ground state energy as a stochastic integral. Based on this connection, we determine the expansion coefficients of the ground state energy with respect to the magnetic field strength and express them in terms of correlation functions of a continuous Ising model. From a recently proven correlation inequality, we can then deduce that the second order derivative is finite. As an application, we show the existence of ground states in infrared-singular situations.

1 Introduction

In this paper, we study the spin boson model with external magnetic field. This model describes the interaction of a two-level quantum mechanical system with a boson field in the presence of a constant external magnetic field. We derive a Feynman–Kac–Nelson (FKN) formula for this model, which relates expectation values of the semigroup generated by the Hamilton operator to the expectation value of a Poisson-driven jump process and a Gaussian random process indexed by a real Hilbert space obtained by a Euclidean extension of the dispersion relation of the bosons. In particular, when calculating expectation values with respect to the ground state of the free Hamiltonian, one can explicitly integrate out the boson field and obtain expectations only with respect to the jump process. This allows us to express the ground state energy and its derivatives in terms of correlation functions of a continuous Ising model, provided a gap assumption is satisfied. As an application, we show the existence of ground states for the spin boson model in the case of massless bosons for infrared singular interactions, using a recent correlation bound and a regularization procedure.

The history of FKN-type theorems dates back to the work of Feynman and Kac [14, 30]. Such functional integral representations were used to study the spectral properties of models in quantum field theory by Nelson [37]. Since then, many authors have used this approach to study models of non-relativistic quantum field theory, see, for example, [6, 7, 11, 15, 19, 20, 27, 28, 47] and references therein. The spin boson model without an external magnetic field has been investigated using this approach in [1, 16, 44] and recently in [24]. In [48], path measures for the spin boson model with magnetic field were studied by means of Gibbs measures. In this paper, we extend the FKN formula for the spin boson model to external magnetic fields.

This paper is structured as follows. Section 2 is devoted to the definition of the spin boson model and the statement of our main results. We start out with a rigorous definition of the spin boson Hamiltonian with external magnetic field as a self-adjoint lower-semibounded operator in Sect. 2.1. In Sect. 2.2, we then give its probabilistic description through the FKN formula stated in Theorem 2.4 and reduce the degrees of freedom to study expectation values with respect to the ground state of the free operator as expectation values of a continuous Ising model in Corollary 2.6. In Sect. 2.3, we then use the well-known connection between expectation values of the semigroup and the ground state energy to express the derivatives of the ground state energy with respect to the magnetic field strength as correlation functions of this continuous Ising model, under the assumption of massive bosons. The proofs of the results presented in Sect. 2 are given in Sect. 3.

In Sect. 4, we then apply our results and prove Theorem 4.1. Explicitly, we use the recent result from [26] to prove the existence of ground states of the spin boson Hamiltonian with vanishing external magnetic field. In particular, our proof covers the case of massless bosons with infrared-singular coupling.

The article is accompanied by a series of appendices. In Appendices A to C, we present some essential technical requirements for our proofs, including standard Fock space properties in Appendix C.1 and a construction of the so-called \({\mathcal Q}\)-space in Appendix C.2. In Appendix D, we give a proof for the existence of ground states at arbitrary external magnetic field in the case of massive bosons, a case which to our knowledge is not covered in the literature.

1.1 General Notation

\(L^2\)-spaces: For a measure space \(({\mathcal M},\mathrm{d}\mu )\) and a real or complex Hilbert space \({\mathfrak h}\), we denote by \(L^2({\mathcal M},\mathrm{d}\mu ;{\mathfrak h})\) the real or complex Hilbert space of square-integrable \({\mathfrak h}\)-valued measurable functions on \({\mathcal M}\), respectively. If \({\mathfrak h}={\mathbb C}\), we write for simplicity \(L^2({\mathcal M},\mathrm{d}\mu )=L^2({\mathcal M},\mathrm{d}\mu ;{\mathbb C})\). Further, we assume \({\mathbb R}^d\) for any \(d\in {\mathbb N}\) to be equipped with the Lebesgue measure without further mention.

Characteristic functions: For \(A\subset X\), we define the function \(\mathbf {1}_{A}:X\rightarrow {\mathbb R}\) with \(\mathbf {1}_{A}(x)=1\), if \(x\in A\), and \(\mathbf {1}_{A}(x)=0\), if \(x\notin A\).

2 Model and Results

2.1 Spin Boson Model with External Magnetic Field

In this section, we give a precise definition of the spin boson Hamiltonian with external magnetic field and prove that it defines a self-adjoint lower-semibounded operator.

Let us recall the standard Fock space construction from the Hilbert space perspective. Textbook expositions on the topic can, for example, be found in [4, 10, 38, 42].

Throughout, we assume \({\mathfrak h}\) to be a complex Hilbert space. Then, we define the bosonic Fock space over \({\mathfrak h}\) as

\[ {\mathcal F}({\mathfrak h}) = {\mathbb C}\oplus \bigoplus _{n=1}^\infty \otimes ^n_{\mathrm {s}}{\mathfrak h}, \tag{2.1} \]

where \(\otimes ^n_{\mathrm {s}}{\mathfrak h}\) denotes the n-fold symmetric tensor product of Hilbert spaces. We write Fock space vectors as sequences \(\psi =\left( \psi ^{(n)}\right) _{n\in {\mathbb N}_0}\) with \(\psi ^{(0)}\in {\mathbb C}\) and \(\psi ^{(n)}\in \otimes ^n_{\mathrm {s}}{\mathfrak h}\) for \(n\ge 1\). In particular, we define the Fock space vacuum \(\Omega =(1,0,0,\ldots )\).

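For a self-adjoint operator A on \({\mathfrak h}\), let the (differential) second quantization operator \({\mathsf d}\Gamma (A)\) on \({\mathcal F}({\mathfrak h})\) be the operator

\[ {\mathsf d}\Gamma (A) = \overline{0\oplus \bigoplus _{n=1}^\infty \sum _{k=1}^n \left( \otimes ^{k-1}{\mathbb {1}}\right) \otimes A\otimes \left( \otimes ^{n-k}{\mathbb {1}}\right) }, \tag{2.2} \]

where \(\overline{(\cdot )}\) denotes the operator closure. Next, if \({\mathfrak v}\) is another complex Hilbert space and \(B:{\mathfrak h}\rightarrow {\mathfrak v}\) is a contraction operator (i.e., \(\Vert B\Vert \le 1\)), the second quantization operator \(\Gamma (B):{\mathcal F}({\mathfrak h})\rightarrow {\mathcal F}({\mathfrak v})\) is given as

\[ \Gamma (B) = {\mathbb {1}}\oplus \bigoplus _{n=1}^\infty \otimes ^n B. \tag{2.3} \]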

Furthermore, for \(f\in {\mathfrak h}\), we define the creation and annihilation operators \(a^\dag (f)\) and a(f) as the closed linear operators acting on pure tensors as

where \(\otimes _{{\mathsf {s}}}\) denotes the symmetric tensor product and \(\ {\widehat{\cdot }}\ \) in the first line means that the corresponding entry is omitted. Note that \(a^\dagger (f)\) is the adjoint of a(f). We introduce the field operator as

In Appendix C.1, we provide a variety of well-known properties of the operators defined above, which will be used throughout this article. From now on, we also write \({\mathcal F}={\mathcal F}(L^2({\mathbb R}^d))\).
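For orientation, a few of these standard properties can be summarized as follows. This is a sketch in the usual textbook normalization of the canonical commutation relations, which is an assumption here; the precise statements used in the proofs are those of Appendix C.1.

```latex
% Standard Fock space identities (textbook normalization, assumed).
% The commutator is understood on finite-particle vectors.
\begin{align*}
  [a(f), a^\dagger(g)] &= \langle f, g\rangle, & a(f)\Omega &= 0, \\
  \Gamma(B)\Omega &= \Omega, & \mathsf{d}\Gamma(A)\Omega &= 0, \\
  \Gamma(B_1B_2) &= \Gamma(B_1)\Gamma(B_2), & \Gamma(B)^* &= \Gamma(B^*), \\
  \Gamma(e^{-tA}) &= e^{-t\,\mathsf{d}\Gamma(A)} && \text{for } A\ge 0,\ t\ge 0 .
\end{align*}
```

The last identity is the mechanism by which a contraction semigroup on the one-particle space lifts to the Fock space.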

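To define the spin boson Hamiltonian with external magnetic field, let \(\sigma _x,\sigma _y,\sigma _z\) denote the \(2\times 2\)-Pauli matrices

\[ \sigma _x = \begin{pmatrix} 0 & 1\\ 1 & 0 \end{pmatrix}, \qquad \sigma _y = \begin{pmatrix} 0 & -{\mathsf {i}}\\ {\mathsf {i}} & 0 \end{pmatrix}, \qquad \sigma _z = \begin{pmatrix} 1 & 0\\ 0 & -1 \end{pmatrix}. \tag{2.6} \]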

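We consider the Hamilton operator

\[ H(\lambda ,\mu ) = \sigma _z\otimes {\mathbb {1}} + {\mathbb {1}}\otimes {\mathsf d}\Gamma (\omega ) + \sigma _x\otimes \left( \lambda \varphi (v)+\mu {\mathbb {1}}\right) \tag{2.7} \]

acting on \({\mathcal H}={\mathbb C}^2\otimes {\mathcal F}\).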

To prove that the expression (2.7) defines a self-adjoint lower-semibounded operator, we need the following assumptions.

Hypothesis A

(i) \(\omega :{\mathbb R}^d\rightarrow [0,\infty )\) is measurable and has positive values almost everywhere.

(ii) \(v\in L^2({\mathbb R}^d)\) satisfies \(\omega ^{-1/2}v\in L^2({\mathbb R}^d)\).

Lemma 2.1

Assume Hypothesis A holds. Then, the operator \(H(\lambda ,\mu )\) given by (2.7) is self-adjoint and lower-semibounded on the domain \({\mathcal D}({\mathbb {1}}\otimes {\mathsf d}\Gamma (\omega ))\) for all values of \(\lambda ,\mu \in {\mathbb R}\).

Proof

As a sum of strongly commuting self-adjoint and lower-semibounded operators, H(0, 0) is self-adjoint and lower-semibounded, cf. Lemma C.1 (ii). Further, by Lemma C.1 (vii), with \(A=\omega \), and the boundedness of \(\sigma _x\), the operator \(\sigma _x\otimes (\lambda \varphi (v)+\mu {\mathbb {1}})\) is infinitesimally bounded with respect to H(0, 0). Hence, the statement follows from the Kato–Rellich theorem ([42, Theorem X.12]). \(\square \)
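The infinitesimal bound behind this argument can be sketched as follows. The constant \(c>0\) below stands for a convention-dependent numerical factor and is an assumption of this sketch; the precise estimate is the one referred to in Lemma C.1 (vii).

```latex
% Sketch: relative bound of the field operator with respect to dGamma(omega).
% c > 0 is a convention-dependent constant (assumption of this sketch).
\begin{align*}
  \|\varphi(v)\psi\|
    &\le c\left(\|\omega^{-1/2}v\|\,\|\mathsf{d}\Gamma(\omega)^{1/2}\psi\|
         + \|v\|\,\|\psi\|\right), \\
  \|\mathsf{d}\Gamma(\omega)^{1/2}\psi\|^2
    &= \langle\psi,\mathsf{d}\Gamma(\omega)\psi\rangle
     \le \|\psi\|\,\|\mathsf{d}\Gamma(\omega)\psi\|
     \le \varepsilon\,\|\mathsf{d}\Gamma(\omega)\psi\|^2
        + \frac{1}{4\varepsilon}\|\psi\|^2
     \qquad (\varepsilon>0).
\end{align*}
% Since epsilon can be chosen arbitrarily small, the relative bound is zero.
```

Hypothesis A (ii) enters exactly through the finiteness of \(\Vert \omega ^{-1/2}v\Vert \).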

2.2 Feynman–Kac–Nelson Formula

In this section, we move to a probabilistic description of the spin boson model. Except for Lemma 2.2, all statements are proved in Sect. 3.1.

The spin part can be described by a jump process, which we construct here explicitly. To that end, let \((N_t)_{t\ge 0}\) be a Poisson process with unit intensity, i.e., a stochastic process with state space \({\mathbb N}_0\), stationary independent increments, and satisfying

\[ {\mathbb {P}}[N_t=n] = e^{-t}\frac{t^n}{n!} \quad \text{for all } n\in {\mathbb N}_0,\ t\ge 0, \tag{2.8} \]

realized on some measurable space \(\Omega \). We refer the reader to [8] for a concrete realization of \(\Omega \). Moreover, we can choose \(\Omega \) such that \(t\mapsto N_t(\omega )\) is right-continuous for all \(\omega \in \Omega \), see for example [9, Section 23]. Further, let B be a Bernoulli random variable with \({\mathbb {P}}[B=1]={\mathbb {P}}[B=-1]=\frac{1}{2}\), which we realize on the space \(\{-1, 1\}\). Then, we define the jump process \(( {\widetilde{X}}_t)_{t\ge 0}\) on the product space \(\Omega \times \{-1 , 1\}\) (equipped with the product measure) by

\[ {\widetilde{X}}_t = B\,(-1)^{N_t}, \qquad t\ge 0. \tag{2.9} \]

To fix a suitable measure space to work with, we use the law of the process \(({\widetilde{X}}_t)_{t \ge 0}\). That is, we realize the stochastic process on the space

where we equip \({\mathscr {D}}\) with the \(\sigma \)-algebra generated by the projections \(\pi _t(x)=x_t\), \(t \ge 0\). The measure, \(\mu _X\), on \({\mathscr {D}}\) is then given by the pushforward with respect to the map

for which it is straightforward to see that it is measurable. We define the process \(X_t(x) = x_t\) for \(x \in {\mathscr {D}}\), \(t \ge 0\). It follows by construction that the stochastic processes \(X_t\) and \({\widetilde{X}}_t\) are equivalent, in the sense that they have the same finite-dimensional distributions. For random variables Y on the measure space \(({\mathscr {D}},\mu _X)\), we define

\[ {\mathbb {E}}_X[Y] = \int _{{\mathscr {D}}} Y(x)\,\mathrm{d}\mu _X(x). \]

We note that by the construction (2.8), the paths of X \(\mu _X\)-almost surely have only finitely many jumps in any compact interval. We denote the set of all such paths by \({\mathscr {D}}_{\mathsf f}\). The property \(\mu _X({\mathscr {D}}_{\mathsf f})=1\) can alternatively also be inferred from the theory of continuous-time Markov processes, cf. [33, 40].
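For orientation, the two-state jump process can be summarized by its transition probabilities. The sketch below assumes that the process flips its sign exactly at the jump times of the unit-rate Poisson process, i.e., that it is of the form \(B(-1)^{N_t}\); this form is an assumption of the illustration.

```latex
% Sketch: parity of a unit-rate Poisson variable and the resulting
% transition probabilities of the sign-flip process (assumed form B(-1)^{N_t}).
\begin{align*}
  \mathbb{P}[N_t \text{ even}]
    &= \sum_{n\ \mathrm{even}} e^{-t}\frac{t^n}{n!}
     = e^{-t}\cosh t = \tfrac12\left(1+e^{-2t}\right), \\
  \mathbb{P}[N_t \text{ odd}]
    &= e^{-t}\sinh t = \tfrac12\left(1-e^{-2t}\right), \\
  \mathbb{P}[X_{s+t}=x \mid X_s=x] &= \tfrac12\left(1+e^{-2t}\right), \qquad
  \mathbb{P}[X_{s+t}=-x \mid X_s=x] = \tfrac12\left(1-e^{-2t}\right).
\end{align*}
% The expected number of jumps in [0,T] equals T, which is finite.
```

In particular, the number of sign changes in a compact time interval is almost surely finite, in line with \(\mu _X({\mathscr {D}}_{\mathsf f})=1\).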

We now want to give a probabilistic description of the bosonic field. To that end, we define the Euclidean dispersion relation \({\omega _{{\mathsf {E}}}}:{\mathbb R}^{d+1}\rightarrow [0,\infty )\) as \({\omega _{{\mathsf {E}}}}(k,t)=\omega ^2(k)+t^2\) and the Hilbert space of the Euclidean field as

Let \({\phi _{{\mathsf {E}}}}\) be the Gaussian random variable indexed by the real Hilbert space

on the (up to isomorphisms unique) probability space \(({{\mathcal Q}_{{\mathsf {E}}}},{\Sigma _{{\mathsf {E}}}},{\mu _{{\mathsf {E}}}})\) and denote expectation values w.r.t. \({\mu _{{\mathsf {E}}}}\) as \({\mathbb {E}}_{{\mathsf {E}}}\). For the convenience of the reader, we have described a possible explicit construction in Appendix C.2. We note that the complexification \({\mathcal R}_{\mathbb C}\) is unitarily equivalent to \({\mathcal E}\), by the map \((f,g)\mapsto f+{\mathsf {i}}g\), and hence \({\mathcal F}({\mathcal E})\) and \(L^2({{\mathcal Q}_{{\mathsf {E}}}})\) are unitarily equivalent, by Proposition C.3.

For \(t\in {\mathbb R}\), we define

Lemma 2.2

(i) (2.13) defines an isometry \(j_t:L^2({\mathbb R}^d)\rightarrow {{\mathcal {E}}}\) for any \(t\in {\mathbb R}\).

(ii) If, for almost all \(k\in {\mathbb R}^d\), \(f\in L^2({\mathbb R}^d)\) satisfies \(f(k)=\overline{f(-k)}\) and \(\omega (k)=\omega (-k)\), then \(j_tf\in {\mathcal R}\).

(iii) \(j_s^*j_t = e^{-|t-s|\omega }\) for all \(s,t\in {\mathbb R}\).

Proof

The statements follow by the direct calculation

\(\square \)
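The computation behind part (iii) reduces to a classical Fourier integral. The sketch below assumes that \(j_t\) acts by a phase \(e^{-{\mathsf {i}}ts}\) in the additional variable s together with a Cauchy-type weight, so that the inner product in \({\mathcal E}\) produces the kernel shown; the precise normalization is the one fixed in (2.13).

```latex
% Sketch: the Fourier integral underlying Lemma 2.2 (i) and (iii).
% The second line restates (iii) in weak form; the first line is the
% classical Fourier transform of the Cauchy kernel.
\begin{align*}
  \frac{1}{\pi}\int_{\mathbb{R}}
    \frac{\omega\, e^{-\mathsf{i}\tau s}}{\omega^2+s^2}\,\mathrm{d}s
    &= e^{-|\tau|\omega}
    \qquad (\omega>0,\ \tau\in\mathbb{R}), \\
  \langle j_s f, j_t g\rangle_{\mathcal E}
    &= \int_{\mathbb{R}^d} \overline{f(k)}\, e^{-|t-s|\omega(k)}\, g(k)\,\mathrm{d}k
     = \langle f, e^{-|t-s|\omega} g\rangle .
\end{align*}
% Setting t = s (tau = 0) recovers the isometry property (i).
```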

Remark 2.3

In the literature, (2.13) is often defined via the Fourier transform.

We set

where \(\Theta _{{\mathcal R}}\) denotes the Wiener–Itô–Segal isomorphism introduced in Proposition C.3 and \(\Gamma \) is the second quantization of the contraction operator \(j_t\), as defined in (2.3). Further, we define the isometry \(\iota : {\mathbb C}^2 \rightarrow L^2( \{ \pm 1 \} , \mathrm{d}\mu _{1/2} )\), with \(\mu _{1/2}(\{ s \} ) = \frac{1}{2}\) for \(s \in \{\pm 1\}\), by

where \(\alpha _i\) denotes the i-th entry of the vector \(\alpha \in {\mathbb C}^2\). We define the map \(I_t := \iota \otimes \widetilde{ I}_t\), where

To formulate the Feynman–Kac–Nelson (FKN) formula, it will be suitable to work with the following transformed Hamilton operator, which is unitarily equivalent to \(H(\lambda ,\mu )\) up to a constant multiple of the identity. Explicitly, we apply the unitary

and define the transformed Hamilton operator

where we used \(U\sigma _zU^*=-\sigma _x\) and \(U\sigma _x U^*=\sigma _z\).
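To see where the transformed operator comes from, one can conjugate term by term. The sketch below assumes that \(H(\lambda ,\mu )\) has the form \(\sigma _z\otimes {\mathbb {1}}+{\mathbb {1}}\otimes {\mathsf d}\Gamma (\omega )+\sigma _x\otimes (\lambda \varphi (v)+\mu {\mathbb {1}})\), as suggested by the proof of Lemma 2.1, and that the additive constant equals one. The matrix \(U_0\) is one possible choice satisfying the stated relations and need not coincide with the unitary in (2.16).

```latex
% Sketch: a unitary U_0 (hypothetical choice) realizing the stated relations,
% and the resulting transformed operator under the assumed form of H.
\[
  U_0 = \frac{1}{\sqrt2}\begin{pmatrix} 1 & 1\\ -1 & 1\end{pmatrix}, \qquad
  U_0\sigma_zU_0^* = -\sigma_x, \qquad U_0\sigma_xU_0^* = \sigma_z,
\]
\[
  (U_0\otimes\mathbb{1})\,H(\lambda,\mu)\,(U_0\otimes\mathbb{1})^* + \mathbb{1}
  = (\mathbb{1}-\sigma_x)\otimes\mathbb{1}
    + \mathbb{1}\otimes\mathsf{d}\Gamma(\omega)
    + \sigma_z\otimes\big(\lambda\varphi(v)+\mu\mathbb{1}\big).
\]
```

In this form, the interaction is diagonal in the spin variable, and \(\sigma _x-{\mathbb {1}}\) acts on functions on \(\{\pm 1\}\) as \(f(x)\mapsto f(-x)-f(x)\), which is the generator of a process flipping between \(\pm 1\) at unit rate.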

Our result holds under the following assumptions.

Hypothesis B

Assume Hypothesis A and the following:

(i) \(\omega (k)=\omega (-k)\) for almost all \(k\in {\mathbb R}^d\).

(ii) v has real Fourier transform, i.e., \(v(k)=\overline{v(-k)}\) for almost all \(k\in {\mathbb R}^d\).

We are now ready to state the FKN formula for the spin boson model with external magnetic field.

Theorem 2.4

(FKN Formula) Assume Hypothesis B holds. Then, for all \(\Phi ,\Psi \in {\mathcal H}\) and \(\lambda ,\mu \in {\mathbb R}\), we have

We note that the integrability of the right hand side in the above theorem follows from the identity

\[ {\mathbb {E}}[\exp (Z)] = \exp \left( \tfrac{1}{2}{\mathbb {E}}[Z^2]\right) , \tag{2.18} \]

which holds for any centered Gaussian random variable Z (see, for example, [45, (I.17)]). We outline the argument in the remark below.

Remark 2.5

By (2.13), the map \([0,T]\rightarrow {\mathcal E},t\mapsto j_tv\) is strongly continuous. Hence, by (C.1), the map \({\mathbb R}\rightarrow L^2({{\mathcal Q}_{{\mathsf {E}}}}),t\mapsto {\phi _{{\mathsf {E}}}}(j_tv)\) is continuous. Thus, for \((x_t)_{t\ge 0}\in {\mathscr {D}}_{\mathsf f}\), the function \(t \mapsto {\phi _{{\mathsf {E}}}}(j_t v) x_t\) is a piecewise continuous \(L^2({{\mathcal Q}_{{\mathsf {E}}}})\)-valued function on compact intervals of \([0,\infty )\). Thus, the integral over t exists as an \(L^2({{\mathcal Q}_{{\mathsf {E}}}})\)-valued Riemann integral \(\mu _X\)-almost surely. Since Riemann integrals are given as limits of sums, the measurability with respect to the product measure \(\mu _X \otimes {\mu _{{\mathsf {E}}}}\) follows. In fact, again fixing \(x\in {\mathscr {D}}_{\mathsf f}\) and using Fubini’s theorem as well as Hölder’s inequality, one can prove that the integral \(\int _0^{ T} {\phi _{{\mathsf {E}}}}(j_tv)x_t\mathrm{d}t\) can also be calculated as a Lebesgue integral evaluated \({\mu _{{\mathsf {E}}}}\)-almost everywhere pointwise in \({{\mathcal Q}_{{\mathsf {E}}}}\) with the same result. This is outlined in Appendix A. Furthermore, \(\int _0^{ T} {\phi _{{\mathsf {E}}}}(j_tv)x_t\mathrm{d}t\) is a Gaussian random variable, since \(L^2\)-limits of linear combinations of Gaussians are Gaussian. We conclude that the right hand side of the FKN formula is finite, since exponentials of Gaussian random variables are integrable, cf. (2.18).
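The Gaussian structure can be made explicit on the level of the variance. The sketch below assumes that the covariance of the Gaussian process is normalized as \({\mathbb {E}}_{{\mathsf {E}}}[{\phi _{{\mathsf {E}}}}(f){\phi _{{\mathsf {E}}}}(g)]=c\langle f,g\rangle \) with a convention-dependent constant \(c>0\) (hypothetical notation, cf. (C.1)), and that the path x takes values in \(\{\pm 1\}\).

```latex
% Sketch: variance of the time-integrated field along a fixed path x.
% c > 0 is the (assumed) normalization of the covariance.
\begin{align*}
  Z &= \int_0^T \phi_{\mathsf E}(j_tv)\,x_t\,\mathrm{d}t, \\
  \mathbb{E}_{\mathsf E}[Z^2]
    &= c\int_0^T\!\!\int_0^T \langle j_sv, j_tv\rangle\, x_sx_t\,\mathrm{d}s\,\mathrm{d}t
     = c\int_0^T\!\!\int_0^T \langle v, e^{-|t-s|\omega}v\rangle\, x_sx_t\,\mathrm{d}s\,\mathrm{d}t
     \le c\,T^2\|v\|^2, \\
  \mathbb{E}_{\mathsf E}\big[e^{-\lambda Z}\big]
    &= \exp\Big(\tfrac{\lambda^2}{2}\,\mathbb{E}_{\mathsf E}[Z^2]\Big)
     \le \exp\Big(\tfrac{c}{2}\,\lambda^2T^2\|v\|^2\Big).
\end{align*}
```

The bound is uniform in the path x, which is what makes the subsequent integration over the jump process unproblematic.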

We now want to describe the expectation value of the semigroup associated with \(H(\lambda ,\mu )\) (cf. (2.7)) with respect to the ground state of the free operator H(0, 0), by integrating out the field contribution in the expectation value. To that end, let

and define

Corollary 2.6

Assume Hypothesis B holds. Then, for all \(\lambda ,\mu \in {\mathbb R}\), we have

Remark 2.7

For \((x_t)_{t\ge 0}\in {\mathscr {D}}_{\mathsf f}\), the functions \((s,t)\mapsto W(t-s)x_tx_s\) and \(t\mapsto x_t\) are Riemann-integrable, since W is continuous. Further, the continuity also implies that the expression on the right hand side is uniformly bounded in the paths x and hence the expectation value exists and is finite by the dominated convergence theorem.

Remark 2.8

The expectation value on the right hand side can be interpreted as the partition function of a long-range continuous Ising model on \({\mathbb R}\) with coupling function W. This model can be obtained as a limit of a discrete Ising model with long-range interactions, see [25, 44, 48].
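The Ising interpretation can be illustrated by a formal time discretization. In the sketch below, the lattice spacing \(\delta >0\), the spins \(\sigma _i\) and the nearest-neighbour coupling \(J_\delta \) are illustrative notation not used elsewhere in this paper, and the sign flips of X are assumed to occur at unit rate; the rigorous approximation is the one carried out in the cited references.

```latex
% Sketch: formal discretization of the path expectation (illustrative notation).
\begin{align*}
  \sigma_i &= X_{i\delta}\in\{\pm1\}, \qquad i=0,1,\ldots,N, \quad N\delta = T, \\
  \int_0^T\!\!\int_0^T W(t-s)X_tX_s\,\mathrm{d}t\,\mathrm{d}s
    &\approx \sum_{i,j=1}^{N} \delta^2\,W\big((i-j)\delta\big)\,\sigma_i\sigma_j, \qquad
  \int_0^T X_t\,\mathrm{d}t \approx \delta\sum_{i=1}^N \sigma_i, \\
  \mathbb{E}_X[\sigma_i\sigma_{i+1}] &= e^{-2\delta} = \tanh J_\delta .
\end{align*}
% tanh(J) is the nearest-neighbour correlation of a free one-dimensional
% Ising chain with coupling J, so J_delta diverges as delta -> 0.
```

In this picture, the path measure plays the role of a strong nearest-neighbour coupling, W contributes a long-range pair interaction, and \(\mu \) acts like an external field (up to sign conventions).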

2.3 Ground State Energy

We are especially interested in studying the ground state energy of the spin boson model

\[ E(\lambda ,\mu ) := \inf \sigma (H(\lambda ,\mu )). \]

In this section, we want to use the FKN formula from the previous section to express derivatives of the ground state energy.

The starting point of this investigation is the following well-known formula, sometimes referred to as Bloch’s formula, expressing the ground state energy as an expectation value of the semigroup, see for example [46]. We verify it in Sect. 3.2 using a positivity argument.

Lemma 2.9

Assume Hypothesis A holds. Then, for all \(\lambda ,\mu \in {\mathbb R}\),

The central statement of this section is that the above equation carries over to the derivatives with respect to \(\mu \), provided that the ground state energy of \(H(\lambda ,\mu )\) is in the discrete spectrum, i.e., \(E(\lambda ,\mu ) \in \sigma _\mathsf{disc}(H(\lambda ,\mu ))\). We note that this spectral assumption has been shown in [2, Theorem 1.2] for \(\mu =0\) if \({{\,\mathrm{ess\,inf}\,}}_{k\in {\mathbb R}^d}\omega (k)>0\) and we extend the result to arbitrary choices of \(\mu \) in Appendix D.

Theorem 2.10

Assume Hypothesis A holds. Let \(\lambda ,\mu _0 \in {\mathbb R}\) and suppose \(E(\lambda ,\mu _0) \in \sigma _\mathsf{disc}(H(\lambda ,\mu _0))\). Then, for all \(n \in {\mathbb N}\), the following derivatives exist and satisfy

We now want to combine this observation with the FKN formula from Theorem 2.4. To that end, we define

with W as defined in (2.20) and note that

by Corollary 2.6. Thus, Lemma 2.9 gives

We note that the stochastic integral in (2.24) was used in [1] to show analyticity of \( \lambda \mapsto E(\lambda ,0)\) in a neighborhood of zero. The next two statements express the derivatives of the ground state energy in terms of a stochastic integral. To that end, for a random variable Y on \(({\mathscr {D}},\mu _X)\), we define the expectation

Further, we denote by \({\mathcal P}_n\) the set of all partitions of the set \(\{1,\ldots ,n\}\) and by |M| the cardinality of a finite set M.
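Partitions enter through the standard relation between moments and cumulants. As an illustration, the following sketch writes out this relation for a generic expectation \(\langle \cdot \rangle \), which is placeholder notation here; the sign and normalization conventions in the statements below may differ.

```latex
% Sketch: moment-cumulant relation via set partitions (generic expectation).
\begin{align*}
  u_n(Y_1,\ldots,Y_n)
    &= \sum_{\mathfrak P\in\mathcal P_n} (-1)^{|\mathfrak P|-1}\,(|\mathfrak P|-1)!\,
       \prod_{M\in\mathfrak P}\Big\langle \prod_{i\in M} Y_i\Big\rangle, \\
  u_1(Y_1) &= \langle Y_1\rangle, \\
  u_2(Y_1,Y_2) &= \langle Y_1Y_2\rangle - \langle Y_1\rangle\langle Y_2\rangle, \\
  u_3(Y_1,Y_2,Y_3) &= \langle Y_1Y_2Y_3\rangle
     - \langle Y_1Y_2\rangle\langle Y_3\rangle
     - \langle Y_1Y_3\rangle\langle Y_2\rangle
     - \langle Y_2Y_3\rangle\langle Y_1\rangle
     + 2\langle Y_1\rangle\langle Y_2\rangle\langle Y_3\rangle .
\end{align*}
```

For \(n=2\), the sum runs over the two partitions \(\{\{1,2\}\}\) and \(\{\{1\},\{2\}\}\) and yields the covariance.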

Theorem 2.11

Assume Hypothesis B holds. Let \(\lambda , \mu \in {\mathbb R}\) and suppose \(E(\lambda ,\mu ) \in \sigma _\mathsf{disc}(H(\lambda ,\mu ))\). Then, for all \(n \in {\mathbb N}\), the following derivatives exist and satisfy

In addition, we can express derivatives of the ground state energy in terms of the so-called Ursell functions [39] or cumulants. This allows us to use correlation inequalities to prove bounds on derivatives. In fact, we will use this in Corollary 2.14 below to estimate the second derivative with respect to the magnetic field at zero. Given random variables \(Y_1,\ldots ,Y_n\) on \(({\mathscr {D}},\mu _X)\), we define the Ursell function

Corollary 2.12

Assume Hypothesis B holds. Let \(\lambda , \mu \in {\mathbb R}\) and suppose \(E(\lambda ,\mu ) \in \sigma _\mathsf{disc}(H(\lambda ,\mu ))\). Then, for all \(n \in {\mathbb N}\), the following derivatives exist and satisfy

Next, we show how the formulas in Theorem 2.11 and Corollary 2.12, respectively, can be used to obtain bounds on derivatives of the ground state energy. For this, we will use the following correlation bound of a continuous long-range Ising model, cf. Remark 2.8. Approximating this model by a discrete Ising model, we proved a bound on these correlation functions in [25].

Theorem 2.13

([25]) There exist \(\varepsilon >0\) and \(C>0\) such that for all \(h\in L^1({\mathbb R})\) which are even, continuous and satisfy \(\Vert h\Vert _{L^1({\mathbb R})}\le \varepsilon \), we have

As an application of Theorems 2.11 and 2.13, we obtain the following result, giving us a bound on the second derivative of the ground state energy which is uniform in the size of the spectral gap. Since the proof only demonstrates the application of these theorems, we state it here directly.

Corollary 2.14

Let \(\nu \) be a measurable function on \({\mathbb R}^d\) satisfying \(\nu > 0\) a.e. and \(\nu (-k) = \nu (k) \). Let \(v \in L^2({\mathbb R}^d)\) have real Fourier transform and \(\nu ^{-1/2}v\in L^2({\mathbb R}^d)\). Let \(m > 0\) and \(\omega = \sqrt{ \nu ^2 + m^2}\). Then, for every \(\lambda \in {\mathbb R}\), the function \( \mu \mapsto E(\lambda ,\mu )\) is twice differentiable in a neighborhood of zero and, choosing W as defined in (2.20),

Further, there exists a \(\lambda _{\mathsf c}> 0\) such that for all \(\lambda \in (- \lambda _{\mathsf c}, \lambda _{\mathsf c})\) the second derivative satisfies

Proof

Due to the definition, we have \({{\,\mathrm{ess\,inf}\,}}_{k\in {\mathbb R}^d}\omega (k)\ge m>0\) and hence \(E(\lambda ,0)\in \sigma _\mathsf{disc}(H(\lambda ,0))\), by Theorem D.1. Thus, Theorem 2.11 is applicable.

Due to the so-called spin-flip-symmetry of the model, i.e., X and \(-X\) being equivalent stochastic processes in the sense of their finite-dimensional distributions by the choice of the Bernoulli random variable in (2.9), we have

and hence

Thus, by Theorem 2.11

By the definition in (2.20), the interaction function \(W\in L^1({\mathbb R})\) satisfies

Setting \(\lambda _{\mathsf c}= (\frac{1}{2} \varepsilon )^{1/2} /\Vert \nu ^{-1/2} v \Vert _2\) with \(\varepsilon \) given as in Theorem 2.13, we can apply Theorem 2.13 with \(h=\lambda ^2 W\) for all \(\lambda \in (-\lambda _{\mathsf c},\lambda _{\mathsf c})\), which proves \(|\partial _\mu ^2 E(\lambda ,0)|\le C\) for all \(m>0\). This concludes the proof. \(\square \)
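The uniformity in m can be traced to an elementary integral. The sketch below assumes that W has the form \(W(t)=c\int |v(k)|^2e^{-|t|\omega (k)}\mathrm{d}k\) with a numerical constant \(c>0\) fixed by (2.20); the value of c is left open here.

```latex
% Sketch: L^1-norm of the interaction function (c > 0 assumed, cf. (2.20)).
\begin{align*}
  \|W\|_{L^1(\mathbb R)}
   &= c\int_{\mathbb R^d}|v(k)|^2\int_{\mathbb R}e^{-|t|\omega(k)}\,\mathrm dt\,\mathrm dk
    = 2c\int_{\mathbb R^d}\frac{|v(k)|^2}{\omega(k)}\,\mathrm dk
    = 2c\,\|\omega^{-1/2}v\|_2^2, \\
  \omega = \sqrt{\nu^2+m^2}\ \ge\ \nu
   \quad&\Longrightarrow\quad
  \|\lambda^2W\|_{L^1(\mathbb R)} \le 2c\,\lambda^2\,\|\nu^{-1/2}v\|_2^2
  \quad\text{for all } m>0 .
\end{align*}
```

The right hand side does not depend on m, so the smallness condition on h can be met uniformly in the size of the spectral gap.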

3 Proofs

In this section, we prove the results presented in Sects. 2.2 and 2.3.

3.1 The FKN Formula

We start with the proof of Theorem 2.4. To that end, we first derive an FKN formula for the spin part, which is described by the jump process. For the statement, we recall the definition of \(\iota :{\mathbb C}^2\rightarrow L^2(\{\pm 1\},\mathrm{d}\mu _{1/2})\) in (2.15).

Lemma 3.1

Let \(n\in {\mathbb N}\) and \(t_1,\ldots ,t_n\ge 0\). We set \(s_k = \sum \limits _{i=1}^{k}t_i\) for \(k=1,\ldots ,n\). Then, for all \(\alpha ,\beta \in {\mathbb C}^2\) and \(f_0,f_1,\ldots ,f_n:\{\pm 1\}\rightarrow {\mathbb C}\), we have

Proof

Since any function \(f:\{\pm 1\}\rightarrow {\mathbb C}\) is a linear combination of the identity and the constant function 1, it suffices to consider the case \(f_0=f_1=\cdots = f_n= {\text {id}}\). Further, due to bilinearity, it suffices to choose \(\alpha \) and \(\beta \) to be arbitrary basis vectors. We hereby use the basis consisting of eigenvectors of \(\sigma _x\), i.e., \(e_1=\frac{1}{\sqrt{2}}(1,1)\) and \(e_2=\frac{1}{\sqrt{2}}(1,-1)\). Then,

and hence

Now, observe that \(X_0\), \(X_tX_s\) and \(X_uX_v\) are independent random variables if \(0\le t\le s\le u\le v\), by construction. For \(i,k\in {\mathbb N}\), this yields the identities

If k is odd, then \({\mathbb {E}}_X[ (X_0)^k ]=0\), by (2.9) and the definition of B. Further, by (2.8), we find

Hence, setting \(s_0=0\), we arrive at

Combining (3.2) and (3.1), we have

Observing that \(\iota e_1(x)=1\) and \( \iota e_2(x)=x\) for \(x=\pm 1\) finishes the proof. \(\square \)
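The simplest instance of the lemma, the two-point function, illustrates the mechanism. The sketch assumes that the jump process is of the form \(X_t=X_0(-1)^{N_t}\) with a unit-rate Poisson process and that the spin semigroup is generated by \({\mathbb {1}}-\sigma _x\); it uses the eigenvectors \(e_1,e_2\) of \(\sigma _x\) introduced in the proof.

```latex
% Sketch: two-point function of the jump process versus the spin semigroup.
\begin{align*}
  \mathbb E_X[X_sX_t]
    &= \mathbb E\big[(-1)^{N_t-N_s}\big]
     = \sum_{n=0}^\infty e^{-(t-s)}\frac{(t-s)^n}{n!}(-1)^n
     = e^{-2(t-s)} \qquad (0\le s\le t), \\
  \langle e_2, e^{-t(\mathbb 1-\sigma_x)}e_2\rangle &= e^{-2t}, \qquad
  \langle e_1, e^{-t(\mathbb 1-\sigma_x)}e_1\rangle = 1 .
\end{align*}
% sigma_x e_1 = e_1 and sigma_x e_2 = -e_2, so 1 - sigma_x has eigenvalues 0 and 2.
```

Since \(\iota e_2\) is the identity function on \(\{\pm 1\}\), the decay rate 2 of the correlation matches the eigenvalue of \({\mathbb {1}}-\sigma _x\) on \(e_2\).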

We now move to proving the FKN formula for the field part. We recall the definition of the isometry \(j_t:L^2({\mathbb R}^d)\rightarrow {\mathcal E}\) in (2.13). For \(I\subset {\mathbb R}\), let \(e_I\) denote the projection onto the closed subspace \(\overline{\mathrm{lin}\{f\in {{\mathcal {E}}}:f\in {\text {Ran}}(j_t)\ \text{ for } \text{ some }\ t\in I\}}\). Further, set \(e_t=e_{\{t \}} \) for any \(t \in {\mathbb R}\).

Lemma 3.2

Assume \(a\le b\le t\le c\le d\). Then

(i) \(e_t = j_t j_t^*\),

(ii) \(e_ae_be_c = e_ae_c\),

(iii) \(e_{[a,b]}e_te_{[c,d]}=e_{[a,b]}e_{[c,d]}\).

Proof

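Lemma 2.2 (i) and the definition of \(e_{\{t\}}\) directly imply (i). Further, (ii) follows from Lemma 2.2 (iii) by

\[ e_ae_be_c = j_a(j_a^*j_b)(j_b^*j_c)j_c^* = j_ae^{-|b-a|\omega }e^{-|c-b|\omega }j_c^* = j_ae^{-|c-a|\omega }j_c^* = j_aj_a^*j_cj_c^* = e_ae_c, \]

where we used \(a\le b\le c\).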

To prove (iii), let \(f,g\in {{\mathcal {E}}}\). By the definition, there exist sequences of times \((t_k)_{k\in {\mathbb N}}\subset [a,b]\) and \((s_m)_{m\in {\mathbb N}}\subset [c,d]\) and functions \(f_k\in {\text {Ran}}(j_{t_k})\), \(g_m\in {\text {Ran}}(j_{s_m})\) such that

Furthermore, again by definition, \( {\text {Ran}}(j_{t}) = {\text {Ran}}(e_{t})\) for any \(t \in {\mathbb R}\). Hence, we can apply (ii) and obtain

Since f and g were arbitrary, this proves the statement. \(\square \)

Now, for \(t\in {\mathbb R}\) and \(I\subset {\mathbb R}\), let

Then the next statement in large part follows directly from Lemmas 2.2, 3.2 and C.1.

Lemma 3.3

Assume \(a\le b\le t\le c\le d\) and \(I\subset {\mathbb R}\). Then,

(i) \(E_I\) is the orthogonal projection onto \(\overline{\mathrm{lin}\{f\in {\mathcal F}({{\mathcal {E}}}):f{\in }{\text {Ran}}(J_t)\ \text{ for } \text{ some }\ t{\in } I\}}\).

(ii) \(E_t=J_tJ_t^*\).

(iii) \(E_aE_bE_c=E_aE_c\).

(iv) \(E_{[a,b]}E_tE_{[c,d]}=E_{[a,b]}E_{[c,d]}\).

(v) For all \(F\in {\text {Ran}}(E_{[a,b]}) \) and \(G\in {\text {Ran}}(E_{[c,d]})\), we have \(\langle F,E_tG\rangle =\langle F,G\rangle \).

(vi) \(J_s^*J_t = e^{-|t-s|{\mathsf d}\Gamma (\omega )}\) for all \(s\in {\mathbb R}\).

(vii) \(J_t\varphi (f)J_t^*=E_t\varphi (j_tf)E_t = \varphi (j_tf)E_t\) for all \(f\in L^2({\mathbb R}^d)\).

(viii) \(J_tG(\varphi (f))J_t^*=E_t G(\varphi (j_tf))E_t = G(\varphi (j_tf))E_t\) for all \(f\in L^2({\mathbb R}^d)\) and bounded measurable functions G on \({\mathbb R}\).

Proof

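All statements except for (v)–(vii) follow trivially from Lemmas 3.2 and C.1 and the definitions. (v) follows from (iv), by the simple calculation

\[ \langle F,E_tG\rangle = \langle E_{[a,b]}F,E_tE_{[c,d]}G\rangle = \langle F,E_{[a,b]}E_tE_{[c,d]}G\rangle = \langle F,E_{[a,b]}E_{[c,d]}G\rangle = \langle F,G\rangle . \]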

Finally, (vi) and (vii) follow by combining Lemmas 2.2 (iii) and C.1. Repeated application of (vii) shows that (viii) holds for G a polynomial. That it holds for arbitrary bounded measurable G follows from the measurable functional calculus [41]. \(\square \)

We can now prove the full FKN formula.

Proof of Theorem 2.4

Throughout this proof, we drop tensor products with the identity in our notation. Further, for the convenience of the reader, we explicitly state in which Hilbert space the inner product is taken.

Let \(\chi _K(x) = \min \{ x , K \} \) if \(x \ge 0\), and \(\chi _K(x) = \max \{x , -K\}\) if \(x < 0\). Further, let \(\varphi _K(v)=\chi _{K}(\varphi (v))\), \({\phi _{{\mathsf {E}},K}}(j_tv)=\chi _{K}({\phi _{{\mathsf {E}}}}(j_tv))\) and \({\widetilde{H}}_K(\lambda ,\mu )\) as in (2.17) with \(\varphi \) replaced by \(\varphi _K\). Since \({\widetilde{H}}_K(\lambda ,\mu )\) is lower-semibounded and \(\varphi _K\) is bounded, we can use the Trotter product formula (cf. [41, Theorem VIII.31]) and Lemma 3.3 Parts (vi) and (viii) (where the exponential is considered on the eigenspaces of \(\sigma _x\)) to obtain

Now we make iterated use of Lemma 3.3 (v). Explicitly, by Lemma 3.3 (viii), the vector to the left of any \(E_{k\frac{T}{N}}\), i.e.,

is an element of \({\text {Ran}}(E_{[0,k\frac{T}{N}]})\). Similarly, the vector to the right is an element of \({\text {Ran}}(E_{[k\frac{T}{N},T]})\). Hence, we can drop all the factors \(E_{k\frac{T}{N}}\). Then, using Proposition C.3 and (2.14), we derive

Hence, we can apply Lemma 3.1 to obtain

Since \(\chi _K\) is Lipschitz continuous, it follows that \( t \mapsto {\phi _{{\mathsf {E}},K}}(j_{t} v)\) is an \(L^2({{\mathcal Q}_{{\mathsf {E}}}})\)-valued continuous function. Thus, the sum in the exponential in (3.4) converges to an \(L^2({{\mathcal Q}_{{\mathsf {E}}}})\)-valued Riemann integral. By possibly going over to a subsequence the Riemann sum converges \(\mu _X \otimes {\mu _{{\mathsf {E}}}}\)-almost everywhere. Thus, it follows by dominated convergence that

(Alternatively, the convergence could also be deduced by estimating the expectation.) Since \(\varphi (v)\) is bounded with respect to \({\mathsf d}\Gamma (\omega )\) (cf. Lemma C.1), the spectral theorem implies that \({\widetilde{H}}_K(\lambda ,\mu )\) converges to \({\widetilde{H}}(\lambda ,\mu )\) in the strong resolvent sense and hence the left hand side of the above equation converges to \(\langle \Phi ,e^{-T{\widetilde{H}}(\lambda ,\mu )}\Psi \rangle _{{\mathcal H}}\) as \(K\rightarrow \infty \). On the other hand, using that for \(\mu _X \otimes {\mu _{{\mathsf {E}}}}\)-almost every \((x,q) \in {\mathscr {D}}\times {{\mathcal Q}_{{\mathsf {E}}}}\) the function \(t \mapsto ({\phi _{{\mathsf {E}},K}}(j_tv))(q)x_t\) is Lebesgue integrable, see Remark 2.5, it follows that \(\int _0^{ T} {\phi _{{\mathsf {E}},K}}(j_tv) x_t \mathrm{d}t \) converges to \(\int _0^{ T} {\phi _{{\mathsf {E}}}}(j_tv) x_t \mathrm{d}t \) almost everywhere. Hence, the right hand side of (3.5) converges to

as \(K\rightarrow \infty \), by the dominated convergence theorem. For the majorant, we use that by Jensen’s inequality

where in the second line we used \(\max \{ e^x , 1 \} \le e^x + e^{-x}\). Now the right hand side is integrable over \({{\mathcal Q}_{{\mathsf {E}}}}\)-space by (2.18). This proves the statement. \(\square \)
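The analytic ingredients of the proof above can be sketched in generic form. Here A and B stand for self-adjoint lower-semibounded operators with B bounded, and g for an integrable real function on \([0,T]\); these symbols are placeholders for this illustration only.

```latex
% Sketch: Trotter product formula, Jensen's inequality for the uniform
% probability measure dt/T on [0,T], and a K-independent bound for the cutoff.
\begin{align*}
  e^{-T(A+B)} &= \operatorname*{s-lim}_{N\to\infty}
     \Big(e^{-\frac TN A}e^{-\frac TN B}\Big)^{N}, \\
  \exp\Big(\int_0^T g(t)\,\mathrm dt\Big)
    &= \exp\Big(\frac1T\int_0^T T g(t)\,\mathrm dt\Big)
     \le \frac1T\int_0^T e^{Tg(t)}\,\mathrm dt, \\
  e^{a\chi_K(y)} &\le \max\{e^{ay},1\} \le e^{ay}+e^{-ay}
     \qquad (a,y\in\mathbb R,\ K\ge0).
\end{align*}
% The last bound holds because chi_K(y) lies between 0 and y.
```

Because the final bound does not depend on K, it provides a single majorant for the whole family of truncated integrands.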

We end this section with the following:

Proof of Corollary 2.6

First, observe that with U as in (2.16), we have \((I_t(U^*\otimes {\mathbb {1}}){\Omega _\downarrow })(x) = 1\) for \(x=\pm 1\) and \(t\in {\mathbb R}\) (cf. (2.3) and (C.3)). Hence, Theorem 2.4 implies

Now, let \(x\in {\mathscr {D}}_{\mathsf f}\). Then, Fubini’s theorem and the identity (2.18) yield

Now, the definitions of the \({\mathcal R}\)-indexed Gaussian process (cf. (C.1)) and W (2.20) imply

where we used \(j_s^*j_t = e^{-|t-s|\omega }\) (cf. Lemma 2.2). This proves the statement. \(\square \)

3.2 Derivatives of the Ground State Energy

To prove our results on derivatives of the ground state energy, we start out with the proof of Lemma 2.9. Let us first state our version of Bloch’s formula. For the convenience of the reader, we provide the simple proof. Similar arguments are, for example, used in [3, 34].

Since the notion of positivity is essential therein, we recall it here for the convenience of the reader. For an arbitrary measure space \(({\mathcal M},\mu )\), we call a function \(f\in L^2({\mathcal M},\mathrm{d}\mu )\) (strictly) positive if it satisfies \(f(x)\ge 0\) (\(f(x)>0\)) for almost all \(x\in {\mathcal M}\). If A is a bounded operator on \(L^2({\mathcal M},\mathrm{d}\mu )\), we say A is positivity preserving (improving) if Af is (strictly) positive for all nonzero positive \(f\in L^2({\mathcal M},\mathrm{d}\mu )\).

Lemma 3.4

Let \(({\mathcal M},\mu )\) be a probability space and let H be a self-adjoint and lower-semibounded operator on \(L^2({\mathcal M},\mathrm{d}\mu )\). If \(e^{-TH}\) is positivity preserving for all \(T\ge 0\) and \(f\in L^2({\mathcal M},\mathrm{d}\mu )\) is strictly positive, then

Proof

First, we note that by the spectral theorem

where \(\nu _g\) denotes the spectral measure of H associated with g. This easily follows from the inequality

Now, for \(h\in L^2({\mathcal M},\mathrm{d}\mu )\) satisfying

it follows from \(e^{-TH}\) being positivity preserving that

Combined with (3.6), it follows that \(\inf \sigma (H)\le E_f\le E_h\). Since f is strictly positive, the linear span of the set \({\mathcal X}\) of functions satisfying (3.7) is dense in \(L^2({\mathcal M},\mathrm{d}\mu )\). It follows that \(E_f = \inf \sigma (H)\), since otherwise \(E_f > \inf \sigma (H)\) and \(\chi _{(-\infty , E_f)}(H) L^2({\mathcal M}, \mathrm{d}\mu )\) would contain a nonzero vector which is orthogonal to \({\mathcal X}\). Thus, the statement follows from (3.6). \(\square \)
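The spectral inequality used at the start of the proof can be sketched as follows, writing \(E_g=\inf \mathrm{supp}\,\nu _g\) for the bottom of the support of the spectral measure of a nonzero vector g and s for the spectral variable; this notation is an assumption of the sketch.

```latex
% Sketch: Laplace-type asymptotics of the semigroup expectation.
\begin{align*}
  \langle g, e^{-TH}g\rangle &= \int_{\mathbb R} e^{-Ts}\,\mathrm d\nu_g(s), \\
  e^{-T(E_g+\varepsilon)}\,\nu_g\big([E_g,E_g+\varepsilon]\big)
    &\le \int_{\mathbb R} e^{-Ts}\,\mathrm d\nu_g(s)
     \le e^{-TE_g}\,\|g\|^2 \qquad(\varepsilon>0), \\
  \lim_{T\to\infty}-\frac1T\ln\langle g,e^{-TH}g\rangle &= E_g \ \ge\ \inf\sigma(H).
\end{align*}
% nu_g([E_g, E_g + epsilon]) > 0 by the definition of the support.
```

Taking logarithms, dividing by \(-T\), and letting first \(T\rightarrow \infty \) and then \(\varepsilon \rightarrow 0\) gives the limit in the last line.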

We now prove that the transformed spin boson Hamiltonian from (2.17) is positivity improving in an appropriate \(L^2\)-representation.

Lemma 3.5

Let \(\vartheta \) be the natural isomorphism \({\mathcal H}={\mathbb C}^2\otimes {\mathcal F}\rightarrow L^2(\{1,2\}\times {\mathcal Q}_{L^2 ({\mathbb R}^d;{\mathbb R})})\) which is determined by \( \alpha \otimes \psi \mapsto \left( (i,x)\mapsto \alpha _i (\Theta _{L^2 ({\mathbb R}^d;{\mathbb R})} \psi ) (x) \right) , \) where \(\Theta _{L^2 ({\mathbb R}^d;{\mathbb R})}:{\mathcal F}\rightarrow L^2({\mathcal Q}_{L^2 ({\mathbb R}^d;{\mathbb R})})\) is the unitary from Proposition C.3. Then, the operator \( \vartheta e^{-T{\widetilde{H}}(\lambda ,\mu )} \vartheta ^* \) is positivity improving for all \(T>0\).

Proof

First observe that

To prove that (3.8) is positivity improving, we use a perturbative argument which can be found in [43, Theorem XIII.45]. Explicitly, by the definition of \(\vartheta \) and Proposition C.3,

is a bounded multiplication operator in \(L^2(\{1,2\}\times {\mathcal Q}_{L^2 ({\mathbb R}^d;{\mathbb R})})\) for all \(n \in {\mathbb N}\). Furthermore, by the boundedness of \(\varphi (v)\) w.r.t. \({\mathsf d}\Gamma (\omega )\) (cf. Lemma C.1), we find \(\vartheta {\widetilde{H}}(0,\mu ) \vartheta ^*+\lambda V_n\) converges to \(\vartheta {\widetilde{H}}(\lambda ,\mu ) \vartheta ^*\) in strong resolvent sense and \(\vartheta {\widetilde{H}}(\lambda ,\mu ) \vartheta ^* - \lambda V_n\) converges to \(\vartheta {\widetilde{H}}(0,\mu )\vartheta ^*\) in strong resolvent sense. Hence, by [43, Theorems XIII.43,XIII.45] it follows that (3.8) is positivity improving if and only if

is. Note that in (3.9) the first factor only acts on the variables \(\{1,2\}\), and the second factor only acts on the variables in \({\mathcal Q}_{L^2({\mathbb R}^d;{\mathbb R})}\). It is well known (cf. [45, Theorem I.16]) that the second factor on the right hand side of (3.9) is positivity improving on \(L^2({\mathcal Q}_{L^2({\mathbb R}^d;{\mathbb R})})\). Further, by explicit computation, we have that the first factor on the right hand side of (3.9)

is positivity improving on \(L^2(\{1,2\})\), since all matrix elements are strictly positive. This finishes the proof. \(\square \)
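To make the explicit computation concrete, the following worked example assumes that, up to a positive scalar factor, the spin factor has the form \(e^{T(\sigma _x-\mu \sigma _z)}\) with the Pauli matrices \(\sigma _x,\sigma _z\); this form is an assumption made for illustration, and the case \(\mu =0\) reduces to \(e^{T\sigma _x}\). Since \((\sigma _x-\mu \sigma _z)^2 = (1+\mu ^2){\mathbb {1}}\), the exponential series gives, with \(r=\sqrt{1+\mu ^2}\),

$$\begin{aligned} e^{T(\sigma _x-\mu \sigma _z)} = \cosh (Tr){\mathbb {1}}+ \frac{\sinh (Tr)}{r}(\sigma _x-\mu \sigma _z) = \begin{pmatrix} \cosh (Tr) - \frac{\mu }{r}\sinh (Tr) &{} \frac{1}{r}\sinh (Tr) \\ \frac{1}{r}\sinh (Tr) &{} \cosh (Tr) + \frac{\mu }{r}\sinh (Tr) \end{pmatrix}. \end{aligned}$$

For \(T>0\), the off-diagonal entries are strictly positive, and the diagonal entries are strictly positive because \(|\mu |/r<1\) and \(\cosh (Tr)>\sinh (Tr)\). Hence such a matrix maps every nonzero positive vector to a strictly positive one.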

We now obtain Lemma 2.9 as an easy corollary of Lemma 3.5.

Proof of Lemma 2.9

Let \(\vartheta \) be defined as in Lemma 3.5. By the definitions (2.7) and (2.17), we have

By (2.16), (2.19), and (C.3), we see that \(\vartheta (U\otimes {\mathbb {1}})^*{\Omega _\downarrow }= 2^{-1/2}\), which is a constant strictly positive function. Hence, the statement follows from Lemmas 3.5 and 3.4, since \(\inf \sigma (H(\lambda ,\mu )) = \inf \sigma ({\widetilde{H}}(\lambda ,\mu )- {\mathbb {1}})\). \(\square \)

Further, the following statement is also a direct consequence of Lemma 3.5. It will be a useful ingredient in our proof of Proposition 2.10.

Proposition 3.6

If \(E(\lambda ,\mu )\) is an eigenvalue of \(H(\lambda ,\mu )\), then the corresponding eigenspace is non-degenerate. In this case, if \(\psi _{\lambda ,\mu }\) is a ground state of \(H(\lambda ,\mu )\), then \(\langle \psi _{\lambda ,\mu },{\Omega _\downarrow }\rangle \ne 0\).

Proof

By the Perron–Frobenius–Faris theorem [43, Theorem XIII.44] and Lemma 3.5, if \(E(\lambda ,\mu )\) is an eigenvalue of \(H(\lambda ,\mu )\), then there exists a strictly positive \(\phi _{\lambda ,\mu }\in L^2(\{1,2\}\times {\mathcal Q}_{L^2 ({\mathbb R}^d;{\mathbb R})})\) such that the eigenspace corresponding to \(E(\lambda ,\mu )\) is spanned by \(\vartheta (U\otimes {\mathbb {1}})^*\phi _{\lambda ,\mu }\), where \(\vartheta \) is again defined as in Lemma 3.5. Since \(\vartheta (U\otimes {\mathbb {1}})^* {\Omega _\downarrow }\) is (strictly) positive, this proves the statement. \(\square \)

We can now prove the Bloch formula for the derivatives.

Proof of Proposition 2.10

Throughout this proof, we fix \(\lambda ,\mu _0\) as in the statement of the theorem. Further, for compact notation, we write

Hence, we want to prove

where \((\cdot )^{(n)}\) as usual denotes the n-th derivative.

We observe that the ground state energy \(\mathbf {e}(\mu _0)\) is a simple eigenvalue of \(\mathbf {h}(\mu _0)\), by Proposition 3.6. Further, in view of (2.7), it is obvious that the operator-valued family \(\mu \mapsto \mathbf {h}(\mu )\) is an analytic family of type (A), cf. [31, 43]. Then, by the Kato–Rellich theorem [43, Theorem XII.8], it follows that \(\mu \mapsto \mathbf {e}(\mu )\) is analytic and \(\mathbf {e}(\mu )\) is an isolated simple eigenvalue of \(\mathbf {h}(\mu )\) in a neighborhood of \(\mu _0\).


We introduce the distance of \(\mathbf {e}(\mu _0)\) to the rest of the spectrum by

By the Kato–Rellich theorem [43, Theorem XII.8], we can choose an \(\varepsilon >0\) such that

where the second inequality can be obtained using a Neumann series, cf. (3.12), or alternatively it can be obtained from the lower boundedness of Lemma 2.1 and a compactness argument involving that the set of \((\mu ,z)\), for which \(\mathbf {h}(\mu )-z\) is invertible, is open, see [43, Theorem XII.7]. Henceforth, we assume \(\mu \in (\mu _0-\varepsilon ,\mu _0+\varepsilon )\). Then, by (3.10), we can write the ground state projection \(P(\mu )\) of \(\mathbf {h}(\mu )\) as

where \(\Gamma _0\) is a curve counterclockwise encircling the point \(\mathbf {e}(\mu _0)\) at a distance \(\delta /2\). Further, let

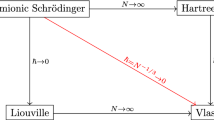

and define the curve \(\Gamma _1 = - \gamma _+ + \gamma _0 + \gamma _- \) surrounding the set \(\sigma (\mathbf {h}(\mu _0)) \setminus \{ \mathbf {e}(\mu _0) \}\) (see Fig. 1).

In view of (3.10), we can define

where the integral is understood as a Riemann integral with respect to the operator topology. The spectral theorem for the self-adjoint operator \(\mathbf {h}(\mu )\) and Cauchy’s integral formula yield

For \(z \in \rho (\mathbf {h}(\mu _0))\) and \(\mu \) in a neighborhood of \(\mu _0\) we have

Using this expansion and the following bounds obtained from (3.10)

we see that \(P(\mu )\) and \(Q_T(\mu )\) are real analytic for \(\mu \) in a neighborhood of \(\mu _0\) and, moreover, that the integrals and derivatives with respect to \(\mu \) can be interchanged due to the uniform convergence of the integrand on the curves \(\Gamma _0\) and \(\Gamma _1\). Hence, by virtue of (3.11), we see that the function  is real analytic on \((\mu _0-\widetilde{\varepsilon },\mu _0+\widetilde{\varepsilon })\) for \(\widetilde{\varepsilon }\in (0,\varepsilon )\) small enough.


Let \(\psi _\mu \) be a normalized ground state of \(\mathbf {h}(\mu )\). Then, by Proposition 3.6, we find

Further, by the spectral theorem and (3.10)

where \(\nu _{{\Omega _\downarrow }}\) denotes the spectral measure of \(\mathbf{h} (\mu )\) associated with \({\Omega _\downarrow }\), cf. [41, Section VII.2].

By (3.11) and the definition of \(\mathbf {e}_T(\mu )\), for \(\mu \in (\mu _0-\widetilde{\varepsilon },\mu _0+\widetilde{\varepsilon })\) we have

Hence, we can calculate the n-th derivative of the expression on the left hand side at \(\mu =\mu _0\), by taking the n-th derivative on the right hand side. Using the Faà di Bruno formula (Lemma B.1) and recalling the notation from Theorem 2.11, we find

By (3.14) and (3.15), the first factor is uniformly bounded in T. Hence, it remains to prove that  is uniformly bounded in T for all \(k=1,\ldots ,n\). Therefore, we explicitly calculate the derivative of \(Q_T(\mu )\) at \(\mu =\mu _0\). This is done by interchanging the integral with the derivative, which we justified above.


Note that, by the series expansion (3.12), we have

Again using Faà di Bruno’s formula (Lemma B.1) and the Leibniz rule, this yields

Applying the bounds (3.13), we find

Since \(P_{k,\ell }(T)\) only grows polynomially in T, this implies \(\Vert Q_T^{(k)}(\mu _0)\Vert \xrightarrow {T\rightarrow \infty }0\) and especially proves  is uniformly bounded in T. \(\square \)


We now combine Bloch’s formula for derivatives of the ground state energy with the FKN formula.

Proof of Theorem 2.11

First, we recall the definition of \(Z_T(\lambda ,\mu )\) in (2.22) and the notation  from (2.25). By the dominated convergence theorem, one sees that \(Z_T\) is infinitely often differentiable in \(\mu \) and has the derivatives


Further, using Proposition 2.10 and then the Faà di Bruno formula (Lemma B.1) to calculate the derivatives of the logarithm yields

where in the last line we inserted the identity (2.23) (which in turn follows from Corollary 2.6). Combining (3.16) and (3.17) proves Theorem 2.11.
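For orientation, the lowest-order instances of the Faà di Bruno expansion of a logarithm read as follows. Here \(Z\) abbreviates \(Z_T(\lambda ,\mu )\) and primes denote derivatives with respect to \(\mu \); this shorthand is introduced only for this illustration.

$$\begin{aligned} (\ln Z)' = \frac{Z'}{Z}, \qquad (\ln Z)'' = \frac{Z''}{Z} - \left( \frac{Z'}{Z}\right) ^2, \qquad (\ln Z)''' = \frac{Z'''}{Z} - 3\,\frac{Z'Z''}{Z^2} + 2\left( \frac{Z'}{Z}\right) ^3. \end{aligned}$$

These are the familiar cumulant combinations of the normalized derivatives \(Z^{(k)}/Z\), which suggests why cumulant-type objects such as Ursell functions appear naturally when derivatives of the ground state energy are expressed through derivatives of \(Z_T\).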

Proof of Corollary 2.12

By the definition of the Ursell functions (2.26), we find using the Faà di Bruno formula

Now, the Ursell functions are multilinear, cf. [39, Section 11], and by the dominated convergence theorem we can hence exchange the integrals with the expectation value, i.e.,

Inserting this into Theorem 2.11 finishes the proof of Corollary 2.12. \(\square \)

4 Existence of Ground States

In this section, we use the bound on the second derivative of the ground state energy as a function of the magnetic coupling from Corollary 2.14 to obtain the result that the spin boson Hamiltonian with massless bosons has a ground state for couplings which exhibit strong infrared singularities. This result is non-trivial, since the massless bosons imply that there is no spectral gap.

Our main result needs the following assumptions.

Hypothesis C

(i) \(\nu :{\mathbb R}^d\rightarrow [0,\infty )\) is locally Hölder continuous, positive a.e., and \(\nu (k)=\nu (-k)\).

(ii) \(\lim \limits _{|k|\rightarrow \infty }\nu (k)=\infty \).

(iii) \(v\in L^2({\mathbb R}^d)\) has real Fourier transform and there exists \(\varepsilon >0\), such that \(\nu ^{-1/2}v\in L^{2+\varepsilon }({\mathbb R}^d) \cap L^2({\mathbb R}^d)\).

(iv) \(\displaystyle \sup _{|p|\le 1}\int _{{\mathbb R}^d}\frac{|v(k)|}{\sqrt{\nu (k)}\nu (k+p)}\mathrm{d}k<\infty \) and \(\displaystyle \sup _{|p|\le 1}\int _{{\mathbb R}^d}\frac{|v(k+p)-v(k)|}{\sqrt{\nu (k)}|p|^\alpha }\mathrm{d}k<\infty \) for some \(\alpha > 0\).

We can now state the main result of this section.

Theorem 4.1

Assume Hypothesis C holds. Then there exists \(\lambda _{\mathsf c}>0\) such that for all \(\lambda \in (-\lambda _{\mathsf c},\lambda _{\mathsf c})\) the spin boson Hamiltonian

acting on \({\mathbb C}^2 \otimes {\mathcal F}\) has a ground state, i.e., the infimum of the spectrum is an eigenvalue.

Remark 4.2

This improves the previously known results on ground state existence [5, 23]. We also remark that in principle our method of proof not only gives existence of a small \(\lambda _{\mathsf c}\), but could in fact be used to estimate the critical coupling constant, due to its non-perturbative nature.

Example 4.3

Let us consider the case

where \(\chi :{\mathbb R}^d\rightarrow {\mathbb R}\) is the characteristic function of an arbitrary ball around \(k=0\). Obviously the assumptions on \(\nu \) in Hypothesis C are satisfied. Further, (iii) holds for any \(\delta >-1\), as is easily verified by integration in polar coordinates. The finiteness conditions (iv) of Hypothesis C also hold in this case by simple estimates. We remark that the previous results [5, 23] covered the situation (4.2) with \(\delta =-\frac{1}{2}\).

The method of proof relies on the approximation of the photon dispersion relation \(\nu \) by the infrared-regularized versions \(\nu _m= \sqrt{\nu ^2+m^2}\) with \(m>0\). We denote by \(H_m\) and \(E_m\) the definitions (2.7) and (2.21) with \(\omega \) replaced by \(\nu _m\). Since \(\inf _{k\in {\mathbb R}^d}\nu _m(k)\ge m >0\), the operator \(H_m(\lambda ,0)\) has a spectral gap for any \(m>0\) and hence also a ground state, cf. Theorem D.1. In the recent paper [26], we showed the following result, which together with Corollary 2.14 gives a proof of Theorem 4.1.
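The two properties of the regularization used here follow from elementary estimates, spelled out below as a short worked computation. For all \(k\in {\mathbb R}^d\) and \(m>0\),

$$\begin{aligned} \nu _m(k) = \sqrt{\nu (k)^2+m^2} \ge m \qquad \text {and}\qquad \nu (k) \le \nu _m(k) \le \nu (k)+m . \end{aligned}$$

The first bound is the mass gap of the regularized dispersion relation. The second, which uses \(\nu (k)^2+m^2\le (\nu (k)+m)^2\) for \(\nu (k)\ge 0\), shows \(\Vert \nu _m-\nu \Vert _\infty \le m\), so that \(\nu _m\rightarrow \nu \) uniformly as \(m\downarrow 0\).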

Theorem 4.4

([26]) Assume Hypothesis C holds and let \(\lambda \in {\mathbb R}\). If for small \(m > 0\) the function \(\mu \mapsto E_m(\lambda ,\mu )\) is twice differentiable at zero and \(\limsup _{m\downarrow 0} | \partial _\mu ^2 E_m(\lambda ,0)|<\infty \), then \(H_\lambda \) as defined in (4.1) has a ground state.

We conclude with the proof for existence of ground states.

Proof of Theorem 4.1

Applying Corollary 2.14 to the function \(\nu \), we see that the assumptions of Theorem 4.4 are satisfied. This proves the theorem.

References

Abdesselam, A.: The Ground State Energy of the Massless Spin-Boson Model. Ann. Henri Poincaré 12(7), 1321–1347 (2011). https://doi.org/10.1007/s00023-011-0103-6. arXiv:1005.4366

Arai, A., Hirokawa, M.: On the existence and uniqueness of ground states of the Spin-Boson Hamiltonian. Hokkaido Univ. Prepr. Ser. Math. 309, 2–20 (1995). https://doi.org/10.14943/83456

Abdesselam, A., Hasler, D.: Analyticity of the ground state energy for massless Nelson models. Commun. Math. Phys. 310(2), 511–536 (2012). https://doi.org/10.1007/s00220-011-1407-6. arXiv:1008.4628

Arai, A.: Analysis on Fock Spaces and Mathematical Theory of Quantum Fields. World Scientific, New Jersey (2018). https://doi.org/10.1142/10367

Bach, V., Ballesteros, M., Könenberg, M., Menrath, L.: Existence of ground state eigenvalues for the spin-boson model with critical infrared divergence and multiscale analysis. J. Math. Anal. Appl. 453(2), 773–797 (2017). https://doi.org/10.1016/j.jmaa.2017.03.075. arXiv:1605.08348

Betz, V., Hiroshima, F.: Gibbs measures with double stochastic integrals on a path space. Infin. Dimens. Anal. Quantum Probab. Relat. Top. 12(1), 135–152 (2009). https://doi.org/10.1142/S0219025709003574. arXiv:0707.3362

Betz, V., Hiroshima, F., Lörinczi, J., Minlos, R.A., Spohn, H.: Ground state properties of the Nelson Hamiltonian–a Gibbs measure-based approach. Rev. Math. Phys. 14(02), 173–198 (2002). https://doi.org/10.1142/S0129055X02001119. arXiv:math-ph/0106015

Billingsley, P.: Convergence of Probability Measures. Wiley Series in Probability and Statistics, 2nd edn. Wiley, New York (1999). https://doi.org/10.1002/9780470316962

Billingsley, P.: Probability and Measure. Wiley Series in Probability and Statistics, anniversary edn. Wiley, New York (2012)

Bratteli, O., Robinson, D.W.: Operator Algebras and Quantum Statistical Mechanics 2: Equilibrium States. Models in Quantum Statistical Mechanics. Texts and Monographs in Physics. Springer, Berlin, 2nd edition (1997). https://doi.org/10.1007/978-3-662-09089-3

Betz, V., Spohn, H.: A central limit theorem for Gibbs measures relative to Brownian motion. Probab. Theory Relat. Fields 131(3), 459–478 (2005). https://doi.org/10.1007/s00440-004-0381-8. arXiv:math/0308193

Dereziński, J., Gérard, C.: Asymptotic completeness in quantum field theory. Massive Pauli–Fierz Hamiltonians. Rev. Math. Phys. 11(4), 383–450 (1999). https://doi.org/10.1142/S0129055X99000155

Dam, T.N., Møller, J.S.: Spin-Boson type models analysed using symmetries. Kyoto J. Math. 60(4), 1261–1332 (2020). https://doi.org/10.1215/21562261-2019-0062. arXiv:1803.05812

Feynman, R.P.: The principle of least action in quantum mechanics. In: Brown, L.M. (ed.) Feynman’s Thesis: A New Approach to Quantum Theory. World Scientific, Singapore (2005). https://doi.org/10.1142/9789812567635_0001

Fefferman, C., Fröhlich, J., Graf, G.M.: Stability of Ultraviolet-Cutoff quantum electrodynamics with non-relativistic matter. Commun. Math. Phys. 190(2), 309–330 (1997). https://doi.org/10.1007/s002200050243

Fannes, M., Nachtergaele, B.: Translating the spin-boson model into a classical system. J. Math. Phys. 29(10), 2288–2293 (1988). https://doi.org/10.1063/1.528109

Folland, G.B.: Real Analysis: Modern Techniques and Their Applications. Pure and Applied Mathematics, 2nd edn. Wiley, New York (1999)

Fröhlich, J.: Existence of dressed one-electron states in a class of persistent models. Fortschr. Phys. 22(3), 159–198 (1974). https://doi.org/10.1002/prop.19740220304

Glimm, J., Jaffe, A.: Collected papers. Constructive quantum field theory selected papers. Birkhäuser, Boston (1985). Reprint of articles published 1968–1980

Glimm, J., Jaffe, A.: Quantum Physics. A Functional Integral Point of View, 2nd edn. Springer, New York (1987). https://doi.org/10.1007/978-1-4612-4728-9

Griesemer, M., Lieb, E., Loss, M.: Ground states in non-relativistic quantum electrodynamics. Invent. math. 145(3), 557–595 (2001). https://doi.org/10.1007/s002220100159. arXiv:math-ph/0007014

Hardy, M.: Combinatorics of partial derivatives. Electron. J. Comb. 13, R1 (2006)

Hasler, D., Herbst, I.: Ground states in the spin boson model. Ann. Henri Poincaré 12(4), 621–677 (2011). https://doi.org/10.1007/s00023-011-0091-6. arXiv:1003.5923

Hirokawa, M., Hiroshima, F., Lörinczi, J.: Spin-boson model through a Poisson-driven stochastic process. Math. Z. 277(3), 1165–1198 (2014). https://doi.org/10.1007/s00209-014-1299-1. arXiv:1209.5521

Hasler, D., Hinrichs, B., Siebert, O.: Correlation bound for a one-dimensional continuous long-range Ising model. Stoch. Proc. Appl. (2021). https://doi.org/10.1016/j.spa.2021.12.010. arXiv:2104.03013

Hasler, D., Hinrichs, B., Siebert, O.: On existence of ground states in the spin boson model. Commun. Math. Phys. 388(1), 419–433 (2021). https://doi.org/10.1007/s00220-021-04185-w. arXiv:2102.13373

Hiroshima, F.: Functional integral representation of a model in quantum electrodynamics. Rev. Math. Phys. 9(4), 489–530 (1997). https://doi.org/10.1142/S0129055X97000208

Hiroshima, F., Lörinczi, J.: Functional integral representations of the Pauli-Fierz model with spin 1/2. J. Funct. Anal. 254(8), 2127–2185 (2008). https://doi.org/10.1016/j.jfa.2008.01.002. arXiv:0706.0833

Hasler, D., Siebert, O.: Ground States for translationally invariant Pauli–Fierz Models at zero Momentum. arXiv Preprint, 2020, arXiv:2007.01250

Kac, M.: On some connections between probability theory and differential and integral equations. In: Proceedings of the Second Berkeley Symposium on Mathematical Statistics and Probability, pp. 189–215 (1951)

Kato, T.: Perturbation Theory for Linear Operators, volume 132 of Classics in Mathematics. Springer, Berlin, 2nd edition (1980). https://doi.org/10.1007/978-3-642-66282-9

Lörinczi, J., Hiroshima, F., Betz, V.: Feynman-Kac-Type Theorems and Gibbs Measures on Path Space, volume 34 of De Gruyter Studies in Mathematics. De Gruyter, Berlin (2011). https://doi.org/10.1515/9783110330397

Liggett, T.M.: Continuous Time Markov Processes: An Introduction, Volume 113 of Graduate Studies in Mathematics. AMS, Providence, RI (2010). https://doi.org/10.1090/gsm/113

Lörinczi, J., Minlos, R.A., Spohn, H.: The infrared behaviour in Nelson’s model of a quantum particle coupled to a massless scalar field. Ann. Henri Poincaré 3(2), 269–295 (2002). https://doi.org/10.1007/s00023-002-8617-6. arXiv:math-ph/0011043

Loss, M., Miyao, T., Spohn, H.: Lowest energy states in nonrelativistic QED: atoms and ions in motion. J. Funct. Anal. 243(2), 353–393 (2007). https://doi.org/10.1016/j.jfa.2006.10.012. arXiv:math-ph/0605005

Møller, J.S.: The translation invariant massive Nelson model: I. The bottom of the spectrum. Ann. Henri Poincaré 6(6), 1091–1135 (2005). https://doi.org/10.1007/s00023-005-0234-8

Nelson, E.: Quantum fields and Markoff fields. In: Partial Differential Equations, volume 23 of Proceedings of the Symposium on Pure Mathematics, pp. 413–420, Berkeley (1973). AMS. https://doi.org/10.1090/pspum/023

Parthasarathy, K.R.: An Introduction to Quantum Stochastic Calculus, volume 85 of Monographs in Mathematics. Birkhäuser, Basel (1992). https://doi.org/10.1007/978-3-0348-0566-7

Percus, J.K.: Correlation inequalities for Ising spin lattices. Commun. Math. Phys. 40(3), 283–308 (1975). https://doi.org/10.1007/BF01610004

Resnick, S.: Adventures in Stochastic Processes. Birkhäuser, Basel (1992). https://doi.org/10.1007/978-1-4612-0387-2

Reed, M., Simon, B.: Functional Analysis, volume 1 of Methods of Modern Mathematical Physics. Academic Press, San Diego (1972)

Reed, M., Simon, B.: Fourier Analysis, Self-Adjointness, volume 2 of Methods of Modern Mathematical Physics. Academic Press, San Diego (1975)

Reed, M., Simon, B.: Analysis of Operators, volume 4 of Methods of Modern Mathematical Physics. Academic Press, San Diego (1978)

Spohn, H., Dümcke, R.: Quantum tunneling with dissipation and the Ising model over \(\mathbb{R}\). J. Stat. Phys. 41(3), 389–423 (1985). https://doi.org/10.1007/BF01009015

Simon, B.: The \(P(\phi )_{2}\) Euclidean (Quantum) Field Theory. Princeton Series in Physics. Princeton University Press, Princeton (1974)

Simon, B.: Functional Integration and Quantum Physics, volume 86 of Pure and Applied Mathematics. Academic Press, New York (1979)

Spohn, H.: Effective mass of the polaron: a functional integral approach. Ann. Phys. 175(2), 278–318 (1987). https://doi.org/10.1016/0003-4916(87)90211-9

Spohn, H.: Ground state(s) of the spin-boson Hamiltonian. Commun. Math. Phys. 123(2), 277–304 (1989). https://doi.org/10.1007/BF01238859

Teschl, G.: Mathematical Methods in Quantum Mechanics, volume 157 of Graduate Studies in Mathematics, 2nd edn. AMS, Providence (2014). https://doi.org/10.1090/gsm/157

Funding

Open Access funding enabled and organized by Projekt DEAL.


Additional information

Communicated by Jan Derezinski.


Appendices

\(L^2\)-valued Riemann Integral and Pointwise Lebesgue Integrability

In Remark 2.5, we use the following lemma with \(f(t)={\phi _{{\mathsf {E}}}}(j_tv)x_t\).

Lemma A.1

Let \(({\mathcal Q},\mu )\) be a probability space and assume \(t\mapsto f_t\in L^2({\mathcal Q})\) is piecewise continuous on the interval [0, T]. Then, \([t\mapsto f_t(q) ] \in L^1([0,T])\) for almost every \(q\in {\mathcal Q}\) and

where the integral on the right hand side is the \(L^2({\mathcal Q})\)-valued Riemann integral.

Proof

Using Fubini’s theorem and Hölder’s inequality, we find

Hence, for \(\mu \)-almost all \(q\in {\mathcal Q}\), the map \(t\mapsto f_t(q)\) is Lebesgue-integrable. Let \(f_{n,t}\) be an \(L^2({\mathcal Q})\)–valued step function. Then, using the triangle inequality, Fubini’s theorem and Hölder’s inequality, we find

Now, by the piecewise \(L^2({\mathcal Q})\)-continuity of \(t \mapsto f_t\), the right hand side can be made arbitrarily small by making the mesh of the Riemann sum arbitrarily small. This implies (A.1). \(\square \)

The Faà di Bruno Formula

The following formula is used in several places throughout the paper. A proof and historical discussion can be found in [22].

Lemma B.1

Let \(J \subset {\mathbb R}\) and \(\Omega \subset {\mathbb R}^m\) be open and let \(f:J\rightarrow {\mathbb R}\) and \(g: \Omega \rightarrow J\) be n-times continuously differentiable functions. Then, \(f\circ g: \Omega \rightarrow {\mathbb R}\) is n-times continuously differentiable and for any choice of \(k_1,\ldots ,k_n \in \{1,\ldots ,m\}\)

where \({\mathcal P}_n\) denotes the set of partitions of the set \(\{1,\ldots ,n\}\).
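The partition structure of the formula can be illustrated in the one-variable case \(m=1\). The following is a minimal sketch, assuming the standard partition form of the formula as discussed in [22]: each partition of \(\{1,\ldots ,n\}\) contributes the derivative of f of order equal to the number of blocks, times the product over the blocks of the derivative of g of order equal to the block size. The function names and the test case \(f=\ln \), \(g(x)=1+x+x^2\) are hypothetical choices for this illustration.

```python
from math import factorial


def set_partitions(items):
    """Yield every partition of the list `items` into non-empty blocks."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # `first` forms a block of its own ...
        yield [[first]] + partition
        # ... or joins one of the existing blocks.
        for i, block in enumerate(partition):
            yield partition[:i] + [[first] + block] + partition[i + 1:]


def faa_di_bruno(n, f_deriv, g_deriv):
    """n-th derivative of f(g(x)) at a point x0 in the case m = 1.

    f_deriv(k) must return f^(k)(g(x0)); g_deriv(k) must return g^(k)(x0).
    """
    total = 0
    for partition in set_partitions(list(range(n))):
        term = f_deriv(len(partition))      # order = number of blocks
        for block in partition:
            term *= g_deriv(len(block))     # order = size of the block
        total += term
    return total


def log_deriv(k):
    """k-th derivative of ln(y) at y = 1, for k >= 1."""
    return (-1) ** (k - 1) * factorial(k - 1)


def g_deriv(k):
    """k-th derivative of g(x) = 1 + x + x^2 at x0 = 0, for k >= 1."""
    return {1: 1, 2: 2}.get(k, 0)


derivatives = [faa_di_bruno(n, log_deriv, g_deriv) for n in range(1, 5)]
```

The result can be compared with the power series \(\ln (1+x+x^2)=\ln (1-x^3)-\ln (1-x)\), whose n-th derivative at zero equals \((n-1)!\) if 3 does not divide n and \(-2(n-1)!\) otherwise.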

Fock Space and \({\mathcal Q}\)-Space

1.1 Standard Fock Space Properties

In this appendix, we collect well-known properties of the Fock space operators introduced in Sect. 2.1. In large parts, these can be found in standard textbooks such as [4, 10, 38, 42]. For the convenience of the reader, we give exemplary precise references to [4] below.

Lemma C.1

Let \({\mathfrak h},{\mathfrak v},{\mathfrak w}\) be complex Hilbert spaces, let A be a self-adjoint operator on \({\mathfrak h}\), let \(B:{\mathfrak v}\rightarrow {\mathfrak w}\) and \(C:{\mathfrak h}\rightarrow {\mathfrak v}\) be contraction operators and let \(f,g\in {\mathfrak h}\).

(i) \({\mathsf d}\Gamma (A)\) is self-adjoint and \(e^{{\mathsf {i}}t{\mathsf d}\Gamma (A)}=\Gamma (e^{{\mathsf {i}}tA})\).

(ii) If \(A\ge 0\), then \({\mathsf d}\Gamma (A)\ge 0\) and \(e^{-t{\mathsf d}\Gamma (A)}=\Gamma (e^{-tA})\) for \(t\ge 0\).

(iii) \(\Gamma (B)\) is a contraction operator.

(iv) If B is unitary, so is \(\Gamma (B)\).

(v) \(\Gamma (B)\Gamma (C)=\Gamma (BC)\) and \(\Gamma (B)^* = \Gamma (B^*)\).

(vi) \(\varphi (f)\) is self-adjoint.

(vii) If \(A\ge 0\) is injective and \(f\in {\mathcal D}(A^{-1/2})\), then \(\varphi (f)\) is \({\mathsf d}\Gamma (A)^{1/2}\)-bounded and for all \(\psi \in {\mathcal D}({\mathsf d}\Gamma (A)^{1/2})\)

$$\begin{aligned} \Vert \varphi (f)\psi \Vert \le \sqrt{2}\Vert A^{-1/2}f\Vert \Vert {\mathsf d}\Gamma (A)^{1/2}\psi \Vert + \frac{1}{\sqrt{2}}\Vert f\Vert \Vert \psi \Vert . \end{aligned}$$

Especially, in this case \(\varphi (f)\) is infinitesimally \({\mathsf d}\Gamma (A)\)-bounded.

(viii) \(\Gamma (B)a^*(f)=a^*(Bf)\Gamma (B)\) and \(a(f) \Gamma (B)^*= \Gamma (B)^*a(Bf) \).

(ix) If B is an isometry, i.e., \(B^*B = {\mathbb {1}}_{{\mathfrak h}}\), then \(\Gamma (B)a(f)=a(Bf)\Gamma (B)\) and hence \(\Gamma (B)\varphi (f) = \varphi (Bf)\Gamma (B)\).

References in [4]. For (i), (ii) see Theorems 5.2 and 5.7. For (iii) see Theorem 5.5. For (iv), (v) see Theorem 5.6. For (vi) see Theorem 5.22. For (vii) see Proposition 5.12. The last sentence in (vii) follows from the inequality \(\Vert {\mathsf d}\Gamma (A)^{1/2}\psi \Vert \le \varepsilon \Vert {\mathsf d}\Gamma (A)\psi \Vert + \frac{1}{\varepsilon }\Vert \psi \Vert \), which holds for any \(\varepsilon >0\).
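The inequality quoted in the last sentence can be derived in one line from the Cauchy–Schwarz inequality. In the following sketch, \(K\) abbreviates \({\mathsf d}\Gamma (A)\ge 0\) and \(\psi \in {\mathcal D}(K)\); the abbreviation is introduced only here.

$$\begin{aligned} \Vert K^{1/2}\psi \Vert ^2 = \langle \psi , K\psi \rangle \le \Vert \psi \Vert \Vert K\psi \Vert = \left( \varepsilon \Vert K\psi \Vert \right) \left( \varepsilon ^{-1}\Vert \psi \Vert \right) \le \left( \varepsilon \Vert K\psi \Vert + \varepsilon ^{-1}\Vert \psi \Vert \right) ^2 . \end{aligned}$$

Taking square roots gives the stated bound. Inserting it into the estimate in (vii) yields a relative bound \(\sqrt{2}\Vert A^{-1/2}f\Vert \varepsilon \) with respect to \({\mathsf d}\Gamma (A)\), which can be made arbitrarily small by the choice of \(\varepsilon \).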

Proof of (viii) and (ix)

(viii) follows by observing

and using that the span of vectors of the form \(g_1\otimes _{{\mathsf {s}}}\cdots \otimes _{{\mathsf {s}}} g_n\) is a core for \(a^*(f)\) by construction. Similarly, using the isometry property, we have

which proves (ix). \(\square \)

1.2 \({\mathcal Q}\)-Space Construction

In this appendix, we define Gaussian processes indexed by a real Hilbert space \({\mathfrak r}\) on a probability space \(({\mathcal Q},\Sigma ,\mu )\). We then recall the isomorphism theorem connecting \({\mathcal F}({\mathfrak r}\oplus {\mathsf {i}}{\mathfrak r})\) and \(L^2({\mathcal Q})\). More details can be found in [32, 45].

A random process indexed by \({\mathfrak r}\) is a (\({\mathbb R}\)-)linear map \(\phi \) from \({\mathfrak r}\) to the random variables on \(({\mathcal Q},\Sigma ,\mu )\). A Gaussian random process indexed by \({\mathfrak r}\) is a random process indexed by \({\mathfrak r}\), such that \(\phi (v)\) is normally distributed with mean zero for any \(v\in {\mathfrak r}\), has covariance

and \(\Sigma \) is the minimal \(\sigma \)-field generated by \(\{\phi (v):v\in {\mathfrak r}\}\).

The following lemma states existence and uniqueness of Hilbert space valued Gaussian processes. Extensive proofs can, for example, be found in [45, Theorems I.6 and I.9] or [32, Prop. 5.6, Section 5.4]. For the convenience of the reader, we add a sketch of the proof below.

Lemma C.2

For any real Hilbert space \({\mathfrak r}\) there exist a unique (up to isomorphism) probability space \(({\mathcal Q}_{\mathfrak r},\Sigma _{\mathfrak r},\mu _{\mathfrak r})\) and a unique (again up to isomorphism) Gaussian random process \(\phi _{\mathfrak r}\) indexed by \({\mathfrak r}\) on \(({\mathcal Q}_{\mathfrak r},\Sigma _{\mathfrak r},\mu _{\mathfrak r})\).

Sketch of Proof

Existence: We present one possible construction here; further constructions can be found in [32, 45]. Let \(\{e_i\}_{i\in {\mathscr {I}}}\) be a (not necessarily countable) orthonormal basis of \({\mathfrak r}\). We set  and equip it with the infinite product measure of the probability measures \(\pi ^{-1/2}\exp (-x_i^2)\mathrm{d}x_i\), \(i\in {\mathscr {I}}\), which obviously is a probability measure itself. The Gaussian random process is now defined by \(\phi _{\mathfrak r}(e_i)\) being the multiplication operator with the variable \(x_i\). Clearly, \(\mu _{\mathfrak r}\circ \phi _{\mathfrak r}(e_i)\) is normally distributed with mean zero and variance \(\frac{1}{2}\). It also easily follows that \(\int _{{\mathcal Q}_{\mathfrak r}}\phi _{\mathfrak r}(e_i)\phi _{\mathfrak r}(e_j)\mathrm{d}\mu _{\mathfrak r}= \frac{1}{2}\delta _{i,j}\), with \(\delta \) denoting the usual Kronecker symbol. Finally, the Borel \(\sigma \)-algebra on \({\mathcal Q}_{\mathfrak r}\) is generated by the set \(\{\phi _{\mathfrak r}(e_i):i\in {\mathscr {I}}\}\). Hence, extending this definition to \(\phi _{\mathfrak r}(f)\) for arbitrary \(f\in {\mathfrak r}\) by linearity finishes the construction.


Uniqueness: The uniqueness can be deduced from the Kolmogorov extension theorem [46, Theorem 2.1], which states that a probability space is uniquely determined by a consistent family of probability measures. \(\square \)
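The existence construction can be imitated numerically for a finite-dimensional index space. The following is a minimal sketch, assuming \({\mathfrak r}={\mathbb R}^3\) with the standard basis, so that the coordinates \(x_i\) are independent centered normal variables with variance \(\frac{1}{2}\) and \(\phi _{\mathfrak r}(f)=\sum _i f_i x_i\). The function names, the sample size, and the vectors \(f,g\) are hypothetical choices; the empirical covariance should then be close to \(\frac{1}{2}\langle f,g\rangle \).

```python
import math
import random


def sample_process(index_vectors, n_samples, seed=0):
    """Draw joint samples of phi(v) = sum_i v_i * x_i for each v in index_vectors.

    The coordinates x_i are independent N(0, 1/2) variables, matching the
    density pi^(-1/2) * exp(-x^2) used in the product-measure construction.
    """
    rng = random.Random(seed)
    dim = len(index_vectors[0])
    sigma = math.sqrt(0.5)
    samples = []
    for _ in range(n_samples):
        x = [rng.gauss(0.0, sigma) for _ in range(dim)]
        samples.append([sum(vi * xi for vi, xi in zip(v, x)) for v in index_vectors])
    return samples


def empirical_covariance(samples, i, j):
    """Monte Carlo estimate of E[phi(v_i) * phi(v_j)] for the mean-zero process."""
    return sum(s[i] * s[j] for s in samples) / len(samples)


f = [1.0, 2.0, 0.0]
g = [3.0, -1.0, 1.0]
samples = sample_process([f, g], n_samples=100_000)
cov_fg = empirical_covariance(samples, 0, 1)   # target: 0.5 * <f, g> = 0.5
var_f = empirical_covariance(samples, 0, 0)    # target: 0.5 * <f, f> = 2.5
```

Linearity of \(f\mapsto \phi _{\mathfrak r}(f)\) is built into the definition, so only the covariance structure needs to be checked in such an experiment.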

The Hilbert space isomorphism introduced in Lemma C.3 is often referred to as Wiener–Itô–Segal isomorphism. More details on its construction, which is sketched below, can be found in [45, Theorem I.11] or [32, Prop. 5.7]. Here, we denote the complexification of the real Hilbert space \({\mathfrak r}\) as \({\mathfrak r}_{\mathbb C}\), which is the real Hilbert space \({\mathfrak r}\times {\mathfrak r}\) with the complex structure given by \( {\mathsf {i}}(\psi ,\phi ) = (-\phi ,\psi )\).

Lemma C.3

There exists a unitary operator \(\Theta _{\mathfrak r}:{\mathcal F}({\mathfrak r}_{\mathbb C})\rightarrow L^2({\mathcal Q}_{\mathfrak r})\) such that

(i) \(\Theta _{\mathfrak r}\Omega = 1\),

(ii) \(\Theta _{\mathfrak r}^{-1} \phi _{\mathfrak r}(f)\Theta _{\mathfrak r}= \varphi (f)\) for all \(f\in {\mathfrak r}\).

Sketch of Proof

We recursively define the Wick product of \({\mathfrak r}\)-indexed Gaussian random variables by

Then, the map \(\Theta _{{\mathfrak r}}:{\mathcal F}({\mathfrak r}_{\mathbb C})\rightarrow L^2({\mathcal Q}_{\mathfrak r})\) given by (i) and

extends to a unitary. The property (ii) follows explicitly from the definitions (2.4), (2.5), and (C.1) and the fact that the pure symmetric tensors form a core for \(\varphi (f)\) by construction. \(\square \)

The Massive Spin Boson Model

In this appendix, we prove that the ground state energy of the spin boson model with external magnetic field \(H(\lambda ,\mu )\) is in the discrete spectrum for any choice of the coupling constants \(\lambda ,\mu \in {\mathbb R}\), if the dispersion relation \(\omega \) is massive, i.e.,

The statement of the following theorem for the case \(\mu =0\), except for some simple technical restrictions on Hypothesis A, can be found in [2].

Theorem D.1

Assume Hypothesis A and (D.1) hold. Then \(E(\lambda ,\mu )\in \sigma _{\mathrm{disc}}(H(\lambda ,\mu ))\) for any choice of \(\lambda ,\mu \in {\mathbb R}\).

We obtain the above theorem as a corollary of the following proposition.

Proposition D.2

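Assume Hypothesis A holds. Then, for all \(\lambda ,\mu \in {\mathbb R}\), we have

\[ \inf \sigma _{{\mathsf e}{\mathsf s}{\mathsf s}}(H(\lambda ,\mu )) \ge E(\lambda ,\mu ) + m_\omega . \]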

Remark D.3

The statement can be seen as one half of an HVZ-type theorem for the spin boson model with external magnetic field. By a slight generalization of known techniques, one can prove

see for example [2, 13]. Here, we restrict our attention to the proof of the lower bound.

In the context of non-relativistic quantum field theory, HVZ-type theorems are often proven using spatial localization of quantum particles, cf. [12, 21, 29, 35, 36]. To bound the error terms obtained by confining the system to a ball of radius L in position space, one needs to estimate the commutator of the multiplication operator \(\omega \) and the Fourier multiplier \(\eta (-{\mathsf {i}}\nabla /L)\), where \(\eta \) is a smooth and compactly supported function. Bounds on the commutator are easily obtained when \(\omega \) is Lipschitz continuous (cf. [29, Proof of Lemma 24]). However, for less regular choices of the dispersion relation, a generalization of the standard localization approach does not seem obvious.

Here, we follow an approach used by Fröhlich [18] and recently applied in [13], which allows us to work directly in momentum space and without any regularity assumptions on \(\omega \) beyond Hypothesis A. The proof needs several approximation steps, so we start with a convergence lemma. In the statement, the norms \(\Vert \cdot \Vert _\infty \) and \(\Vert \cdot \Vert _2\) are the usual norms in \(L^\infty ({\mathbb R}^d)\) and \(L^2({\mathbb R}^d)\).

Lemma D.4

Let \((\omega _k)_{k\in {\mathbb N}}\) and \((v_k)_{k\in {\mathbb N}}\) be chosen such that Hypothesis A holds, i.e., \(\omega _k :{\mathbb R}^d\rightarrow [0,\infty )\) is measurable and has positive values almost everywhere, and \(v_k \in L^2({\mathbb R}^d)\) satisfies \(\omega _k^{-1/2}v_k\in L^2({\mathbb R}^d)\). Moreover, define \(H_k(\lambda ,\mu )\) to be the operator defined in (2.7), i.e.,

Further, assume \(\Vert \omega / \omega _{k} \Vert _\infty \) and \(\Vert \omega _k / \omega \Vert _\infty \) are bounded and

Then, for all \(\lambda ,\mu \in {\mathbb R}\), the operators \(H_k(\lambda ,\mu )\) are uniformly bounded from below and converge to \(H(\lambda ,\mu )\) in the norm resolvent sense.

Remark D.5

If \(\omega \) and \(\omega _k\) are uniformly bounded above and below by some positive constants, then the uniform convergence assumptions (D.3) are easily seen to be equivalent to \(\Vert \omega _k-\omega \Vert _\infty \xrightarrow {k\rightarrow \infty }0\).

Proof

The uniform lower bound follows directly from the \(L^2\)-convergence assumptions (D.4), the bounds in Lemma C.1 (vii) and the Kato–Rellich theorem [42, Theorem X.12], see also the proof of Lemma 2.1.

By the definition and setting \(\omega _{\infty }=\omega \), for \(n\in {\mathbb N}\) and \(f_1,\ldots ,f_n\in L^2({\mathbb R}^d)\), we find

Since the vectors \(f_1\otimes _{{\mathsf {s}}}\cdots \otimes _{{\mathsf {s}}}f_n\) span a core for \({\mathsf d}\Gamma (\omega )\) by construction, we have \({\mathcal D}({\mathsf d}\Gamma (\omega _k))={\mathcal D}({\mathsf d}\Gamma (\omega ))\) for all \(k\in {\mathbb N}\). A similar argument yields

Further, observe that the assumptions (D.3) and (D.4) easily imply

Now, by Lemma 2.1, \(\big \Vert ( {\mathbb {1}}\otimes {\mathsf d}\Gamma (\omega ))(H(\lambda ,\mu )+{\mathsf {i}})^{-1}\big \Vert \) is bounded. Hence, using the resolvent identity as well as the standard bounds Lemma C.1 (vii) and

we find

where the right hand side tends to zero by (D.3), (D.4) and (D.5). \(\square \)
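The domain equality \({\mathcal D}({\mathsf d}\Gamma (\omega _k))={\mathcal D}({\mathsf d}\Gamma (\omega ))\) used in the proof above can be sketched as follows. On the n-particle sector, \({\mathsf d}\Gamma (\omega )\) acts as multiplication by \(\sum _{i}\omega (p_i)\), so the assumed bound on \(\Vert \omega _k/\omega \Vert _\infty \) carries over directly. The constant \(C_k\) and the momentum variables \(p_i\) are notation introduced only for this sketch.

```latex
% C_k and p_1,...,p_n are shorthand introduced for this sketch only.
\[
  C_k := \Vert \omega_k/\omega \Vert_\infty ,
  \qquad
  0 \le \sum_{i=1}^n \omega_k(p_i) \le C_k \sum_{i=1}^n \omega(p_i)
  \quad\text{for a.e. } (p_1,\ldots,p_n)\in({\mathbb R}^d)^n ,
\]
\[
  \Vert {\mathsf d}\Gamma(\omega_k)\psi \Vert
  \le C_k\, \Vert {\mathsf d}\Gamma(\omega)\psi \Vert
  \quad\text{for all } \psi \in {\mathcal D}({\mathsf d}\Gamma(\omega)) .
\]
```

Exchanging the roles of \(\omega \) and \(\omega _k\) gives the reverse bound with the constant \(\Vert \omega /\omega _k\Vert _\infty \), so the two domains coincide.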

Proof of Proposition D.2

It suffices to treat the case \(m_\omega >0\), since the statement is trivial otherwise. The proof has three steps, and we fix \(\lambda ,\mu \in {\mathbb R}\) throughout.

Step 1. We first prove the statement in a very simplified case: Assume \(M\subset {\mathbb R}^d\) is a bounded and measurable set, \(\omega \mathbf {1}_{M}\) and \(v\mathbf {1}_{M}\) are simple functions on M and \(v=0\) almost everywhere on \(M^c\).

Let \(M_k\) for \(k=1,\ldots ,N\) be a disjoint partition of M into measurable sets such that \(\omega \!\upharpoonright _{M_k}\) and \(v\!\upharpoonright _{M_k}\) are constant for each \(k=1,\ldots ,N\). We define

Since \({\mathcal V}\) is finite-dimensional, it is closed and we have the decomposition \(L^2({\mathbb R}^d)={\mathcal V}\oplus {\mathcal V}^\perp \). Observing that by the assumptions \(v\in {\mathcal V}\), we can define

We define a linear map \(U:{\mathbb C}^2\otimes {\mathcal F}\rightarrow \bigoplus \limits _{n=0}^\infty ({\mathbb C}^2\otimes {\mathcal F}({\mathcal V}))\otimes \left( {\mathcal V}^\perp \right) ^{\otimes _{\mathsf s}n}\), where we set \({\mathbb C}:= V^{\otimes _{\mathsf s}0}\) for any vector space V, by

where \(P_{\mathcal V}\) denotes the orthogonal projection in \(L^2({\mathbb R}^d)\) onto \({\mathcal V}\). It is straightforward to verify that U is unitary. For \(n\in {\mathbb N}_0\), we denote by \(\Pi _n\) the projection onto the subspace \({\mathbb C}^2\otimes {\mathcal F}({\mathcal V})\otimes \left( {\mathcal V}^\perp \right) ^{\otimes _{\mathsf s}n}\) in the range of U.

From the definition (2.7) and the definitions of T and U above, it is easily verified that

Thus, \(\inf \sigma (T)\ge E(\lambda ,\mu )\) and hence \(\Pi _k UH(\lambda ,\mu )U^* \Pi _k \ge E(\lambda ,\mu ) + m_\omega \) for \(k\ge 1\).

Now, assume \(\gamma \in \sigma _{{\mathsf e}{\mathsf s}{\mathsf s}}(H(\lambda ,\mu ))\). Then, by Weyl’s criterion, there exists a normalized sequence \((\psi _n)_{n\in {\mathbb N}}\) weakly converging to zero such that

Using (D.7) and (D.6), we find

We now want to show that the last term converges to zero. To that end, we write

where \(S={\mathbb {1}}\otimes ({\mathbb {1}}+ {\mathsf d}\Gamma (\omega ))\) as operator on \({\mathbb C}^2\otimes {\mathcal F}({\mathcal V})\). By (D.7), \(\Vert S \Pi _0(U^*\psi _n)\Vert \) is uniformly bounded in n. We write

The assumption \(\omega \ge m_\omega >0\) implies that

Since \({\mathcal F}^{(\le N)}({\mathcal V})\) is finite-dimensional by construction, \(S^{-1}T \!\upharpoonright _{{\mathcal F}^{(\le N)}({\mathcal V})} \) has finite rank for any \(N\in {\mathbb N}\). It follows that \(S^{-1}T\) is compact, since it is the limit of compact operators. Hence, the last term on the right hand side of (D.9) (and hence that of (D.8)) converges to zero as \(n\rightarrow \infty \).

This finishes the first step.

Step 2. We now relax the condition that \(\omega \) and v must be simple functions: Assume \(M\subset {\mathbb R}^d\) is a bounded measurable set, \(\omega \mathbf {1}_{M}\) is bounded and \(v=0\) almost everywhere on \(M^c\).

By the simple function approximation theorem [17, Theorem 2.10], we can pick a sequence \((\omega _k)_{k\in {\mathbb N}}\) of pointwise monotonically increasing simple functions on M uniformly converging to \(\omega \). Outside of M, we set \(\omega _k\) equal to \(\omega \). Further, w.l.o.g., we can assume that \(m_\omega \le \omega _k\).

For given \(k\in {\mathbb N}\), let \(M_{k,i}\), \(i=1,\ldots ,N_k\), be a disjoint partition of M into measurable sets such that \(\omega _k\!\upharpoonright _{M_{k,i}}\) is constant for all \(i=1,\ldots ,N_k\). Further, w.l.o.g., we can assume that

where \({\text {diam}}\) denotes the usual diameter of a bounded set. Then, we define a projection \(P_k\) onto the vector space of simple functions with support in M by

which can be easily verified to be well-defined for any \(f\in L^2({\mathbb R}^d)\). If f is continuous and compactly supported on M, then it is straightforward to verify \(P_kf\xrightarrow {k\rightarrow \infty } f\) in the \(L^2\)-sense. Since the continuous, compactly supported functions are dense in \(L^2(M)\), this implies \(\mathop {{\text {s-lim}}}\limits _{{k \rightarrow \infty }} P_{k} = {\mathbb {1}}_{L^2(M)}\) in the strong operator topology.
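The density argument in the last step can be spelled out as follows. This is a sketch which assumes that each \(P_k\) is an orthogonal projection, so that \(\Vert P_k\Vert \le 1\); the function g and the tolerance \(\varepsilon \) are introduced only for illustration.

```latex
% Assumption: each P_k is an orthogonal projection, hence \|P_k\| \le 1.
% Given f \in L^2(M) and \varepsilon > 0, pick g continuous and compactly
% supported on M with \|f - g\|_2 < \varepsilon.
\[
  \Vert P_k f - f \Vert_2
  \le \Vert P_k (f - g) \Vert_2 + \Vert P_k g - g \Vert_2 + \Vert g - f \Vert_2
  \le 2\varepsilon + \Vert P_k g - g \Vert_2 ,
\]
\[
  \limsup_{k\to\infty} \Vert P_k f - f \Vert_2 \le 2\varepsilon .
\]
```

Since \(\varepsilon >0\) was arbitrary, \(P_kf\) converges to f for every \(f\in L^2(M)\).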

We now define

and observe that this directly implies that \(\omega _k^{-1/2} v_k\) converges to \(\omega ^{-1/2}v\) in the \(L^2\)-sense. Further, by the triangle inequality and monotonicity of the integral, we find

By construction, the right hand side goes to zero as \(k\rightarrow \infty \).

Hence, all assumptions of Lemma D.4 are satisfied, so the operators \(H_k(\lambda ,\mu )\) defined in (D.2) are uniformly bounded from below and converge to \(H(\lambda ,\mu )\) in the norm resolvent sense. Further, \(\omega _k\) and \(v_k\) satisfy the assumptions of Step 1 by construction. The statement of the proposition now follows under the simplifying assumptions of Step 2: on the one hand, the uniform convergence of \(\omega _k\) to \(\omega \) implies that \(m_{\omega _k}\) converges to \(m_\omega \); on the other hand, norm resolvent convergence and uniform lower boundedness imply convergence of the ground state energy, cf. [49, Theorem 6.38], as well as of the infimum of the essential spectrum, cf. [43, Theorem XIII.77].
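The convergence of \(m_{\omega _k}\) to \(m_\omega \) can be made quantitative. The following sketch assumes that \(m_\omega \) denotes the essential infimum of \(\omega \), and uses that \(\omega _k-\omega \) vanishes outside the bounded set M, so that its supremum norm is finite.

```latex
% Assumption: m_\omega is the essential infimum of \omega (similarly for \omega_k).
\[
  \omega_k \ge \omega - \Vert \omega_k - \omega \Vert_\infty
  \ \text{a.e.}
  \quad\Longrightarrow\quad
  m_{\omega_k} \ge m_\omega - \Vert \omega_k - \omega \Vert_\infty ,
\]
\[
  \omega \ge \omega_k - \Vert \omega_k - \omega \Vert_\infty
  \ \text{a.e.}
  \quad\Longrightarrow\quad
  m_{\omega} \ge m_{\omega_k} - \Vert \omega_k - \omega \Vert_\infty ,
\]
\[
  | m_{\omega_k} - m_\omega | \le \Vert \omega_k - \omega \Vert_\infty
  \xrightarrow{k\to\infty} 0 .
\]
```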

Step 3. We now move to the general case.

For \(k \in {\mathbb N}\) define

Set \(v_k = \mathbf {1}_{M_k}v\). Then, taking \(k\rightarrow \infty \), it is straightforward to verify that \(v_k\) and \(\omega ^{-1/2}v_k\) converge to v and \(\omega ^{-1/2}v\) in the \(L^2\)-sense, respectively. Hence, we can once more apply Lemma D.4 to see that \(H_k(\lambda ,\mu )\), with \(\omega _k = \omega \) and \(v_k\) in (D.2), is uniformly bounded below and converges to \(H(\lambda ,\mu )\) in the norm resolvent sense as \(k\rightarrow \infty \). Since \(\omega \) and \(v_k\) also satisfy the assumptions of Step 2, the statement again follows by the spectral convergence as in Step 2. \(\square \)

We conclude this appendix with the

Proof of Theorem D.1

Since the spectrum of \(H(\lambda ,\mu )\) is the disjoint union of discrete and essential spectrum, the statement follows from Proposition D.2. \(\square \)
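The deduction can be spelled out in three lines. The sketch below takes the lower bound on the essential spectrum in the form \(\inf \sigma _{{\mathsf e}{\mathsf s}{\mathsf s}}(H(\lambda ,\mu ))\ge E(\lambda ,\mu )+m_\omega \), which is the form used in Step 1 of the preceding proof, and assumes \(E(\lambda ,\mu )=\inf \sigma (H(\lambda ,\mu ))\) as the definition of the ground state energy.

```latex
% Assumptions: E(\lambda,\mu) = \inf\sigma(H(\lambda,\mu)) and m_\omega > 0 by (D.1).
\[
  E(\lambda,\mu) \in \sigma(H(\lambda,\mu))
  \quad\text{(the spectrum is closed and bounded below)},
\]
\[
  \inf \sigma_{\mathsf{ess}}(H(\lambda,\mu)) \ge E(\lambda,\mu) + m_\omega > E(\lambda,\mu)
  \quad\Longrightarrow\quad
  E(\lambda,\mu) \notin \sigma_{\mathsf{ess}}(H(\lambda,\mu)),
\]
\[
  E(\lambda,\mu) \in \sigma(H(\lambda,\mu)) \setminus \sigma_{\mathsf{ess}}(H(\lambda,\mu))
  = \sigma_{\mathrm{disc}}(H(\lambda,\mu)).
\]
```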

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hasler, D., Hinrichs, B. & Siebert, O. FKN Formula and Ground State Energy for the Spin Boson Model with External Magnetic Field. Ann. Henri Poincaré 23, 2819–2853 (2022). https://doi.org/10.1007/s00023-022-01160-6
