Abstract

The paper presents a novel method for computing numerical solutions of linear and nonlinear ordinary differential equations with polynomial coefficients that contain any finite products of the unknown functions and/or their general derivatives, subject to initial or boundary conditions. The algorithm seeks the solution as an expansion in B-polynomials (the Bernstein polynomial basis) and then uses the orthonormal relation of B-polynomials with their weighted dual basis with respect to the Jacobi weight function to construct a linear or nonlinear system in the unknown expansion coefficients, which can be solved using a suitable solver. The properties of B-polynomials provide greater flexibility in imposing the initial or boundary conditions at the end points of the interval [0, R] and enable us to obtain some of the unknown expansion coefficients of the suggested numerical solution exactly and explicitly. Consequently, the presented algorithm leads to a linear or nonlinear algebraic system in the unknown expansion coefficients that has a simpler form than that obtained by other algorithms. This procedure is therefore a powerful tool for overcoming the difficulties associated with boundary and initial value problems with less computational effort than other techniques. Good agreement is obtained between the exact and approximate solutions for the given examples. An error analysis is also carried out, and the numerical results confirm the theoretical results.


1 Introduction

A boundary-value problem for an ordinary differential equation (or system of equations) is obtained by requiring that the dependent variable (or variables) satisfy subsidiary conditions at one or more distinct points. If these conditions are imposed at one or two points, the existence and uniqueness theory for initial/boundary-value problems can be extended to many special classes of equations and systems of equations. Many approximate methods for obtaining numerical solutions for various classes of differential equations using orthogonal polynomials are available in the literature (see, for instance, [4,5,6, 50]).

There has been great interest in using B-polynomials for solving different classes of differential equations (see, for instance, [7,8,9, 12, 16, 35, 36, 42,43,44,45]) owing to their favorable properties, such as the recursive relation, positivity, and forming a partition of unity (see, for instance, [17, 24,25,26, 28, 40]). These advantages make them the most widely used basis in approximation theory and computer-aided geometric design (CAGD) (see [23, 32, 37]). Farouki and Goodman [26] show that there is no other nonnegative basis that provides systematically smaller condition numbers than B-polynomials, in the sense that they have optimal stability. They also state that although B-polynomials are not uniquely optimal, no other basis in popular use provides this distinction, and it is uncertain whether other optimally stable bases would offer the advantages and algorithms that we associate with the Bernstein form. Farouki and Rajan [29] were early in developing Bernstein formulations for all the basic polynomial procedures required in geometric modeling algorithms, and they reported that these formulations were as simple as their customary power formulations.

In the current paper, we discuss a new numerical algorithm for finding numerical solutions of higher order linear and nonlinear ordinary differential equations with polynomial coefficients that contain any finite products of the unknown functions and/or their derivatives, subject to initial and boundary conditions. Our interest in such models stems from the fact that they appear as models of many practical problems in mechanics and other areas of mathematical physics, for instance in hydrodynamics, hydromagnetics, fluid dynamics, astrophysics, astronomy, beam and long wave theory, and applied physics. To be precise, fifth-order boundary-value problems (BVPs) can be used to model viscoelastic flows (see [19, 34]); sixth-order BVPs arise in the narrow convecting layers bounded by stable layers, which are believed to surround A-type stars [10, 14, 22, 48]; seventh-order BVPs generally arise in modeling induction motors with two rotor circuits [41]; and ordinary convection and overstability yield tenth-order and twelfth-order BVPs, respectively.

The suggested numerical algorithm approximates the solution in terms of B-polynomials and is based on the orthonormal relation of B-polynomials with their weighted dual basis with respect to the Jacobi weight function, which is used to construct a linear or nonlinear system in the unknown expansion coefficients that can be solved using a suitable solver. It will also be demonstrated that this algorithm provides excellent agreement between exact and approximate solutions, with maximum pointwise errors between \(O(10^{-16})\) and \(O(10^{-20})\) in the examples presented. In this paper, we discuss formulae related to B-polynomials, which are useful in many ways. These formulae give us great flexibility in imposing boundary conditions at \(x=0,\, R\) or initial conditions at \(x=0\). Thus, the contribution of this paper can be summarized in the following items:

- A new explicit formula expressing the general-order derivative of a B-polynomial in terms of the B-polynomials themselves is proved (see Lemma 2).
- A formula for the B-polynomial coefficients of the moments of a single B-polynomial or of its general-order derivative is given (see Theorem 1).
- An explicit formula, which expresses the B-polynomial coefficients of a general-order derivative of any polynomial in terms of its original B-polynomial coefficients, is also proved (see Theorem 2).
- An expression for the B-polynomial coefficients of finite products of polynomials and/or their general-order derivatives in terms of their original B-polynomial coefficients is given (see Theorem 3 and Corollary 3).
- An expression for the B-polynomial coefficients of the moments of finite products of polynomials and/or their general-order derivatives in terms of their original B-polynomial coefficients is given (see Corollary 2).
- An approach based on the orthonormal relation of B-polynomials with their weighted dual basis with respect to the Jacobi weight function is designed to treat two types of high-order initial and initial-boundary problems.
- The error analysis of the B-polynomial expansion is investigated.
- The accuracy of the proposed method is examined through some illustrative examples.

To the best of our knowledge, the formulae proved in Theorems 1–3, Corollaries 2 and 3, and Lemma 2 are new and do not appear in the literature. These formulae give the proposed algorithm a systematic character and simplicity, which allow it to be implemented in any symbolic computer algebra language (here, Mathematica 12). Also, the proposed algorithm provides some of the unknown expansion coefficients of the suggested numerical solution exactly and explicitly (see Eqs. (54) and (61)). Consequently, the presented algorithm leads to a linear or nonlinear algebraic system in the unknown expansion coefficients that has a simpler form than that obtained by other algorithms. This procedure is therefore a powerful tool for overcoming the difficulties associated with boundary and initial value problems with less computational effort than other techniques.

The current paper is organized as follows. In Sect. 2, the B-polynomial basis and the formulae needed to introduce the proposed algorithm are given. In Sects. 3 and 4, the details of the proposed numerical method for solving linear and nonlinear differential equations with polynomial coefficients are provided. The error analysis is presented in Sect. 5. Numerical examples are given in Sect. 6, and the obtained numerical results illustrate the validity of the theoretical results of Sect. 5.

2 Polynomial Basis

The general form of the B-polynomials of nth degree is defined by [12, pp.273–274]

which constitute the entire basis of \((n+1)\) nth-degree polynomials. For convenience, we set \(B_{i,n}(x)=0,\) if \(i<0\) or \(i>n\).
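As a minimal sketch of this basis, we assume the standard form \(B_{i,n}(x)=\binom{n}{i}x^{i}(R-x)^{n-i}/R^{n}\) on \([0,R]\), as commonly used for Bernstein polynomials on a general interval. The function name `bernstein` is ours and purely illustrative; it also applies the zero convention for out-of-range indices stated above.

```python
from math import comb


def bernstein(i, n, x, R=1.0):
    """Evaluate the i-th degree-n B-polynomial at x in [0, R].

    Assumed standard form: C(n, i) * x**i * (R - x)**(n - i) / R**n.
    Returns 0 when i < 0 or i > n, matching the convention in the text.
    """
    if i < 0 or i > n:
        return 0.0
    return comb(n, i) * x**i * (R - x) ** (n - i) / R**n


if __name__ == "__main__":
    n, R = 4, 2.0
    for x in (0.0, 0.5, 1.3, 2.0):
        # Print the n + 1 basis values at x, followed by their sum.
        values = [bernstein(i, n, x, R) for i in range(n + 1)]
        print(x, values, sum(values))
```

At the end points only one basis function is nonzero, which is the property that later makes it easy to impose initial and boundary conditions.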

A recursive definition can also be used to generate the B-polynomials over this interval, allowing us to write the ith nth-degree B-polynomial in the form

The derivative of \(B_{i,n}(x)\) is given by

Each B-polynomial is positive and the sum of all the B-polynomials is unity for all \(x \in [0,R]\), that is

The set of functions which has these properties is called a partition of unity on the interval [0, R]. In addition, any given polynomial P(x) of degree n can be expanded as follows [27, p.3]:

The dual basis to the Bernstein polynomials

with respect to the Jacobi weight function \(w(x)=2^{\alpha +\beta }x^{\beta }(1-x)^{\alpha },\,x\in [0,1]\) is characterized by the property [39, p.1587]

where the dual basis functions \(d_{k,n}(u)\) have explicit representations

and the coefficients \(c_{j,k}(n)\) are defined by

where \(A_{i}=(2i+\beta +1)\left( {\begin{array}{c}n+\alpha +\beta +i+1\\ n+\beta +i+1\end{array}}\right) \left( {\begin{array}{c} n+\alpha -i\\ n-i\end{array}}\right) ^{-1}.\) Therefore, the dual basis, \(D_{k,n}(x)=\frac{1}{R} d_{k,n}(x/R),\) to the Bernstein basis, \(\,B_{i,n}(x)=\) \( b_{i,n}(x/R),\,\)can be characterized by the property

and has the explicit representations

Lemma 1

Proof

Using relation (4), we have

then using the orthogonality relation (9), one can see that (11) is valid, and the proof of Lemma 1 is complete. \(\square \)

Remark 1

Formula (11) is a generalization for the result of Jani et al. [33, p.7666]

Lemma 2

Let \(B_{i,n}(x)\) be the ith nth-degree B-polynomial. Then, its pth derivative can be written in the form

where \(\lambda _{k}^{(p,n)}=\dfrac{(-1)^{p+k}\,n!}{R^{p}(n-p)!}\genfrac(){0.0pt}0{p}{k}.\)

Proof

To prove (12), we proceed by induction. In view of relation (3), we may write

and this in turn shows that (12) is true for \(p=1.\) Proceeding by induction, assuming that (12) is valid for p, we want to prove that

From (3) and assuming the validity for p, we have

Collecting similar terms and using the relations

we get (13) and the proof of Lemma 2 is complete. \(\square \)

Note 1

Since \(B_{i,n}(x)=0,\) if \(i<0\) or \(i>n\), then formula (12) takes the form

In particular, for the special case \(R=1\), this formula coincides with formula (3.1) in [20].
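The derivative formula of Lemma 2 can be checked with exact rational arithmetic. The sketch below assumes that (12) has the form \(D^{p}B_{i,n}(x)=\sum _{k=0}^{p}\lambda _{k}^{(p,n)}B_{i-k,n-p}(x)\), which is the standard derivative formula for Bernstein polynomials scaled to \([0,R]\), and that \(B_{i,n}(x)=\binom{n}{i}x^{i}(R-x)^{n-i}/R^{n}\). All function names are illustrative.

```python
from fractions import Fraction
from math import comb, factorial


def bernstein_power_coeffs(i, n, R=1):
    """Power-basis coefficients (index = power of x) of B_{i,n} on [0, R]."""
    coeffs = [Fraction(0)] * (n + 1)
    if 0 <= i <= n:
        for j in range(n - i + 1):
            numerator = (-1) ** j * comb(n, i) * comb(n - i, j)
            coeffs[i + j] = Fraction(numerator) / Fraction(R) ** (i + j)
    return coeffs


def differentiate(coeffs, p):
    """Differentiate a power-basis polynomial p times."""
    for _ in range(p):
        coeffs = [k * c for k, c in enumerate(coeffs)][1:] or [Fraction(0)]
    return coeffs


def lam(k, p, n, R=1):
    """lambda_k^{(p,n)} as defined in Lemma 2."""
    value = Fraction((-1) ** (p + k) * factorial(n) * comb(p, k), factorial(n - p))
    return value / Fraction(R) ** p


def derivative_via_lemma2(i, n, p, R=1):
    """Assumed form of (12): sum over k of lambda_k^{(p,n)} * B_{i-k,n-p}."""
    total = [Fraction(0)] * (n - p + 1)
    for k in range(p + 1):
        term = bernstein_power_coeffs(i - k, n - p, R)
        total = [t + lam(k, p, n, R) * c for t, c in zip(total, term)]
    return total


if __name__ == "__main__":
    n, R = 5, 3
    ok = all(
        differentiate(bernstein_power_coeffs(i, n, R), p)
        == derivative_via_lemma2(i, n, p, R)
        for i in range(n + 1)
        for p in range(1, n + 1)
    )
    print("derivative formula agrees with direct differentiation:", ok)
```

Terms with \(i-k<0\) or \(i-k>n-p\) vanish automatically, which is the truncation described in Note 1.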

Corollary 1

Let \(B_{i,n}(x)\) be the ith nth-degree B-polynomial, then it is not difficult to show that

where \((a)_{n}=\Gamma (a+n)/\Gamma (a)\) is the Pochhammer symbol.

Theorem 1

Let \(B_{i,n}(x)\) be the ith nth degree B-polynomial, then

where

Proof

Using Corollary 1 and Lemma 2, we obtain

where

By \((n-k)\)-fold degree elevation (see, [28]), we can express each Bernstein basis function of degree k in the Bernstein basis of degree n as

where

Then, by the aid of (20), formula (18) can be written in the form

Collecting similar terms and using \(B_{i,n}(x)=0\) if \(i<0\) or \(i>n\), formula (22) takes the form

Substitution of (19) and (21) into (23) gives (16), and this completes the proof of Theorem 1. \(\square \)
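The degree elevation step used in this proof can be illustrated numerically. The sketch assumes the standard elevation identity \(B_{i,k}(x)=\sum _{j=i}^{i+n-k}\binom{k}{i}\binom{n-k}{j-i}\binom{n}{j}^{-1}B_{j,n}(x)\) from the Bernstein literature; whether this matches the paper's (20) and (21) term by term is an assumption, and the function names are ours.

```python
from fractions import Fraction
from math import comb


def bernstein(i, n, x, R=1):
    """Exact value of B_{i,n}(x) on [0, R] (assumed standard form)."""
    if i < 0 or i > n:
        return Fraction(0)
    x, R = Fraction(x), Fraction(R)
    return comb(n, i) * x**i * (R - x) ** (n - i) / R**n


def elevation_coeffs(i, k, n):
    """Coefficients e_j with B_{i,k} = sum_j e_j * B_{j,n}, for n >= k."""
    e = [Fraction(0)] * (n + 1)
    for j in range(i, i + n - k + 1):
        e[j] = Fraction(comb(k, i) * comb(n - k, j - i), comb(n, j))
    return e


if __name__ == "__main__":
    i, k, n, R = 1, 2, 5, 2
    x = Fraction(3, 7)
    e = elevation_coeffs(i, k, n)
    lhs = bernstein(i, k, x, R)
    rhs = sum(e[j] * bernstein(j, n, x, R) for j in range(n + 1))
    print(lhs, rhs, lhs == rhs)
```

The identity follows from multiplying \(B_{i,k}\) by \(\left( x/R+(1-x/R)\right) ^{n-k}=1\), so it does not depend on \(R\).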

According to Theorem 1, we can state the following theorem which relates the B-polynomial basis coefficients of the moments of a general-order p-derivative of a polynomial f(x) in terms of its B-polynomial basis coefficients.

Theorem 2

Assume that a polynomial f(x) of degree N at most, and the moments of a general-order p-derivative of \(f(x),\,x^{m}f^{(p)}(x),\) are formally expanded in a finite series of B-polynomial basis

and

then

Proof

Using relation (16) gives

Collecting similar terms, we get

which completes the proof of Theorem 2. \(\square \)

Note 2

It is to be noted here that

and then

Also, it is worth noting that

In particular, for the special case \(m=r=0\), using relation (29) and after some manipulation, formula (26) takes the form

where

This shows that Theorem 3.3 in [20] is a direct consequence of Theorem 2 for \(R=1\).

Farouki and Rajan[29, pp.13–14] show that if the two functions f(x) and g(x) have the form

then the product f(x)g(x) may be expressed as

In view of this result and Theorem 2, the following theorem can be proved.
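The product rule quoted from Farouki and Rajan can be sketched as follows. We assume the standard form of the rule: if \(f\) has Bernstein coefficients \(f_{i}\) of degree \(m\) and \(g\) has coefficients \(g_{j}\) of degree \(n\), then the product has degree-\((m+n)\) coefficients \(c_{k}=\sum _{i=\max (0,k-n)}^{\min (m,k)}\binom{m}{i}\binom{n}{k-i}\binom{m+n}{k}^{-1}f_{i}\,g_{k-i}\). The helper names are hypothetical.

```python
from fractions import Fraction
from math import comb


def eval_bernstein(coeffs, x, R=1):
    """Evaluate sum_i coeffs[i] * B_{i,n}(x) on [0, R], with n = len(coeffs) - 1."""
    n = len(coeffs) - 1
    x, R = Fraction(x), Fraction(R)
    total = sum(c * comb(n, i) * x**i * (R - x) ** (n - i) for i, c in enumerate(coeffs))
    return total / R**n


def bernstein_product(f, g):
    """Bernstein coefficients (degree m + n) of the product of two Bernstein forms."""
    m, n = len(f) - 1, len(g) - 1
    c = []
    for k in range(m + n + 1):
        s = Fraction(0)
        for i in range(max(0, k - n), min(m, k) + 1):
            weight = Fraction(comb(m, i) * comb(n, k - i), comb(m + n, k))
            s += weight * f[i] * g[k - i]
        c.append(s)
    return c


if __name__ == "__main__":
    # x has degree-1 Bernstein coefficients [0, 1] on [0, 1]; x * x = x**2.
    print(bernstein_product([0, 1], [0, 1]))
```

Applying this rule repeatedly gives the coefficients of a product of several factors, which is the idea behind Theorem 3.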

Theorem 3

Assume that the polynomials \(f_{\ell }(x)\) of degrees \(N_{\ell }\,\,(\ell =1,2,\dots ,s)\) at most, respectively, are formally expanded in finite series of B-polynomial basis

and let \(M_{k}=\sum _{j=1}^{k}N_{j}\), then

where

where

and

Proof

In view of Theorem 2, we have

By repeated application of formula (32), and after some manipulation, one obtains (34). \(\square \)

Note 3

It is worth noting that formula (34) for the case of \(s=1\) can be written as

where the coefficients \(C_{j_{1}}^{(p_{1})}\) can be defined as

In view of Theorems 2 and 3, we obtain the following corollary.

Corollary 2

Under the assumptions of Theorems 2 and 3, we get

where

In view of Note 2, formula (32) can be generalized as a direct consequence of Corollary 2 as in the following corollary.

Corollary 3

Under the assumptions of Theorem 3, we get

where

Corollary 4

Under the conditions of Theorems 2 and 3, we get

3 An Application for the Solution of High-Order Linear Differential Equations with Polynomial Coefficients

In this section, we aim to discuss an algorithm for approximating solutions to qth-order ordinary linear differential equations

subject to the boundary conditions

where \(q_{1}+q_{2}+2=q\), or the initial conditions

where \(p_{i}(x),i=0,1,...,q,\) are given polynomials. Without loss of generality, we suppose that \(p_{i}(x)=\gamma _{i}x^{m_{i}},i=0,1,...,q,\) where \(m_{i}\) are positive integers and \(\gamma _{i}\) are constants. Here, f(x) is a given source function and \(\alpha _{j}\,\,(j=0,1,...,q_{1}),\beta _{j}\) \( (\,j=0,1,...,q_{2})\) are constants. An approximation to the solution of (43) may be written as

In the case of boundary-value problem (43) and (44):

We require \(y_{N}(x)\) to satisfy the following equations:

for \(n=q_{1}+1,...,N-q_{2}-1\), and

In this case, the coefficients \(a_{0},...,a_{q_{1}},a_{N-q_{2}},...,a_{N}\) can be determined as follows. According to relation (12) and \(B_{i,N}(0)=\delta _{i,0},\,B_{i,\,N}(R)=\delta _{i,N},\) we have for \(j=1,...,N-1\)

then Eq. (49) takes the form

and

Since (50) and (51) are triangular systems, we obtain [15, p.362]

and

It is not difficult to show that

Now, the coefficients \(a_{q_{1}+1},...,a_{N-q_{2}-1}\) can be obtained as follows:

In view of Theorem 2, we obtain

then substituting (55) in (47) and using the formula (26) and the orthonormal relation

lead to

where \(f_{i}=\dfrac{1}{R}\int \nolimits _{0}^{R}\omega (x)f(x)\,B_{i,N+m}(x)\,dx.\) Any appearance of \( a_{0},...,a_{q_{1}},a_{N-q_{2}},...,a_{N}\) in (57) is moved to the right-hand side, then the system of equations (57) is equivalent to the following matrix equation:

where \({a}_{{N}}^{(q)}=[a_{q_{1}+1},...,a_{N-q_{2}-1}]^{T}\), and the elements of \({A}_{{N}}^{(q)}=(a_{n+q_{1}, \,k+q_{1}}^{(q)})_{n,k=1}^{N-(q_{1}+q_{2}+1)}\) and \({B}_{{N} }^{(q)}=[b_{q_{1}+1},...,b_{N-q_{2}-1}]^{T}\) have the forms

and

respectively.

In the case of the initial value problem (43) and (45):

In a similar way, it can be shown that the coefficients \(a_{0},...,a_{q-1}\) have the form

and the coefficients \(a_{q},...,a_{N}\) can be obtained by requiring \(y_{N}(x)\) to satisfy (47) for \(n=q,...,N.\) Then, the obtained equations can be written in the matrix form (58) where \({a}_{{N}}^{(q)}=[a_{q},...,a_{N}]^{T}\), and the elements of \({A}_{{N}}^{(q)}=(a_{n+q,\,k+q}^{(q)})_{n,k=1}^{N-q}\) and \({B}_{{N}}^{(q)}=[b_{q},...,b_{N}]^{T}\) have the forms (59) and

respectively.
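The explicit determination of the leading coefficients from the initial data can be sketched by forward substitution. We assume here that the initial conditions (45) have the form \(y^{(j)}(0)=\alpha _{j},\,j=0,1,...,q-1\). Combining Lemma 2 with \(B_{i,N}(0)=\delta _{i,0}\) then gives the lower triangular system \(\alpha _{j}=\frac{N!}{(N-j)!\,R^{j}}\sum _{k=0}^{j}(-1)^{j+k}\binom{j}{k}a_{k}\). The function below solves this system row by row; it is an illustration under these assumptions and is not claimed to reproduce the closed form (61).

```python
from fractions import Fraction
from math import comb, factorial


def initial_coefficients(alphas, N, R=1):
    """Solve the assumed triangular system for a_0, ..., a_{q-1}.

    alphas[j] is the prescribed value of the j-th derivative at x = 0
    (assumed form of the initial conditions); N is the expansion degree.
    """
    a = []
    for j, alpha in enumerate(alphas):
        # Row j: alpha_j * R**j * (N - j)! / N! = sum_k (-1)**(j + k) * C(j, k) * a_k
        scale = Fraction(factorial(N - j), factorial(N)) * Fraction(R) ** j
        lower = sum(((-1) ** (j + k) * comb(j, k) * a[k] for k in range(j)), Fraction(0))
        a.append(Fraction(alpha) * scale - lower)
    return a


if __name__ == "__main__":
    # y(x) = x**2 on [0, 1]: y(0) = 0, y'(0) = 0, y''(0) = 2, with N = 3.
    print(initial_coefficients([0, 0, 2], N=3))
```

For \(y(x)=x^{2}\) with \(N=3\), the degree-3 Bernstein coefficients on \([0,1]\) are \(0,0,1/3,1\), so the first three should be recovered from the data at \(x=0\) alone.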

Note 4

It is worth noting that if the polynomials \(p_{j}(x)\, (j=0,1,\dots ,q)\) have the form \(p_{j}(x)=\sum \nolimits _{i=0}^{m_{j}}\gamma _{i}^{(j)}x^{i}\), then the elements of \({A}_{{N}}^{(q)}\) have the form

4 An Application for the Solution of High-Order Nonlinear Differential Equations with Polynomial Coefficients

In this section, we are interested in solving numerically the nonlinear differential equation with polynomial coefficients

subject to the boundary conditions (44) or the initial conditions (45), where \(Q_{i}(x)=\sum \nolimits _{k=0}^{m_{i}}\gamma _{k}^{(i)}x^{k},\, i=0,1,\dots ,q.\) Assume the proposed approximated solution \(y_{N}(x)\) as in (46).

In the case of boundary-value problem (63) and (44):

We require \(y_{N}(x)\) to satisfy the equations (48) and

where \(s=\underset{0\le \,i\,\le q}{\max }s_{i}\) and \(m=\underset{0\le \,i\,\le q}{\max }m_{i}\). Using Corollary 2 gives the expansions

Substituting (65) in (64) leads to the system of equations

Now, the coefficients \(a_{0},...,a_{q_{1}},a_{N-q_{2}},...,a_{N}\) have the form (54) and the coefficients \(a_{q_{1}+1},...,a_{N-q_{2}-1}\) can be obtained by solving \((N-q+1)\) nonlinear equations (66) using an appropriate solver. Substituting the obtained coefficients, \(a_{i}\, (i=0,1,\dots ,N)\), in the form (46) gives the approximated solution of (63).

In the case of initial value problem (63) and (45):

In a similar way, the coefficients \(a_{0},...,a_{q-1}\) have the form (61), while the coefficients \(a_{q},...,a_{N}\) can be obtained by requiring \(y_{N}(x)\) to satisfy (64) for \(n=q,...,N,\) which can be written in the form of the nonlinear equations (66) for \(n=q,...,N.\) These nonlinear equations can then be solved using an appropriate solver to obtain these coefficients.

5 Error Analysis

In this section, we study the error resulting from the presented numerical method. For a positive integer N, consider the space \(S_{N}\) defined by

Let \(P_{N}\) denote the orthogonal projection of the \(\omega \)-weighted \(L^{2}\) onto \(S_{N}.\) This projection has the following approximation properties, whose proof is standard.

Proposition 1

There exists a constant C independent of N, such that for any \(h\in H^{r}[a,b]=\{y:y^{(i)}\in L^{2},\,i=0,1,..,r\}\)

Lemma 3

The approximated solutions \(y_{N}(x),\) in the form (46), of the differential Eq. (43) with the boundary conditions (44) or the initial conditions (45) satisfy the inequality

Proof

We have

Using \(\sum \nolimits _{k=0}^{N}\,B_{k,N}(x)=1,\) we can see that

Also, we have

then

In view of relation (11), we get

From (69) and (70), we obtain (68). \(\square \)

The following lemma proves the stability of the presented solution (as an analog of the results in [8]).

Lemma 4

The obtained approximated solutions \(y_{N}(x)\) satisfy the following two inequalities:

where \(\,h_{r}^{(\alpha ,\beta )}=\,\left( 2^{\alpha +\beta }\frac{\Gamma (\alpha +1)\Gamma (\beta +1)}{\Gamma (\alpha +\beta +2)}\right) ^{\frac{1}{r} }\) and C is a constant independent of N. Hence, for sufficiently large N, we have

Proof

If f is a function defined on [0, 1], then the Bernstein polynomial \(B_{N}(f,x)\) of f, given by

converges to f(x) uniformly on [0, 1] if f is continuous there [11, 18]. Regarding the rate of convergence to f(x), Popoviciu [38] has shown that

where \(\omega _{f}\) is the modulus of continuity of f in [0, 1], which has the form \(\omega _{f}(N^{-1/2})=C\,N^{-1/2}\). Without loss of generality, we can consider \([0,R]=[0,1],\) then we obtain

and

Now, using (77), one can obtain

From (79), using \(\sum \nolimits _{k=0}^{N}\,b_{k,N}(x)=1\), we obtain

then relation (71) is proved, and this implies that for sufficiently large N, we have

and this completes the proof of (73). Also, we have

then using that \(\sum \nolimits _{k=0}^{N}\,B_{k,N}(x)=1\) leads to obtain

From (81) and using two relations (68) and (71) enable one to get

then (72) is proved, and hence, for sufficiently large N, we have

which completes the proof of (74) and the proof of Lemma 4 is complete. \(\square \)
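The classical Bernstein operator used in this proof can be explored numerically. The sketch below assumes its standard definition \(B_{N}(f,x)=\sum _{k=0}^{N}f(k/N)\,b_{k,N}(x)\) on \([0,1]\) and estimates the uniform error on a grid. It illustrates the convergence of this operator only, not the error of the proposed spectral method, and the function names are ours.

```python
from math import comb, exp


def bernstein_operator(f, N, x):
    """B_N(f, x) = sum_k f(k/N) * C(N, k) * x**k * (1 - x)**(N - k) on [0, 1]."""
    return sum(f(k / N) * comb(N, k) * x**k * (1 - x) ** (N - k) for k in range(N + 1))


def max_error(f, N, samples=201):
    """Largest |B_N(f, x) - f(x)| over an evenly spaced grid on [0, 1]."""
    grid = [j / (samples - 1) for j in range(samples)]
    return max(abs(bernstein_operator(f, N, x) - f(x)) for x in grid)


if __name__ == "__main__":
    for N in (8, 16, 32, 64):
        print(N, max_error(exp, N))
```

For a smooth function such as \(e^{x}\), the printed errors decrease as N grows, while linear functions are reproduced up to rounding.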

Theorem 4

Let \(y(x)\in H^{r}[0,R],\) for some integer \(r\ge 2,\) and \(y_{N}(x)\) be the obtained approximate solution, then we have

where \(\lambda \) is a constant independent of N. Hence, for sufficiently large N, we have

in addition to

and

Proof

Let \(P_{N}\) denote an orthogonal projection of the \(\omega \) -weighted \(L^{2}\) onto \(S_{N}.\) We can define it as follows:

where \(\mu (N)=\sum \nolimits _{i=0}^{N}\left\| D_{i,N}\right\| _{\infty }\) for \( N\ge 1\) and \(\,M<1.\) Then, we can deduce that \(P_{N}\) is bounded, such that \(\left\| P_{N}h\right\| _{\infty }\le M\,\left\| h\right\| _{\infty }.\)

We have

In view of Proposition 1, we get

However

then the two inequalities (87) and (88) lead to

The inequality (89) can be written as

where \(\lambda =\frac{\max (C_{1},C_{2})}{1-M}\) is a constant independent of N, then (82) is proved. Hence, for sufficiently large N, (74) and (82) give

However, we have

then using (90) and (91) leads to (83). Then, (84) and (85) are obtained directly from (83), which completes the proof of this theorem. \(\square \)

The following two corollaries ensure the stability of the solutions as N increases (see [1,2,3, 8]).

Corollary 5

Under the assumptions of Theorem 4, for sufficiently large N and for the minimum integer \(r\ge 2\) such that (83) is valid, we have

and

Proof

We have

In view of (83), we get (92). Then, (93) is a direct consequence of (92). \(\square \)

Corollary 6

Under the assumptions of Theorem 4, for sufficiently large N and for the minimum integer \(r\ge 2\) such that (83) is valid, we have

and

Proof

We have

In view of Corollary 5, we get (94). Then, (95) is a direct consequence of (94). \(\square \)

6 Numerical Results

In this section, we use the algorithms presented in Sects. 3 and 4 to solve several numerical examples. All computations presented were performed on a computer (Intel(R) Core(TM) i9-10850 CPU @ 3.60 GHz, 3600 MHz, 10 Core(s), 20 Logical Processor(s)) running Mathematica 12. Comparisons between the maximum absolute errors

obtained by the present method and other methods proposed in [20, 21, 31, 46, 47, 49] are made. In view of Theorem 4, we have

where

is the upper bound of absolute errors.

Example 1

Consider the following boundary-value problem to demonstrate that the B-polynomials are powerful to approximate the solution to desired accuracy:

whose exact solution is \(y(x)=e^{x}.\) Computational results for \(\left\| E_{N}\right\| _{\infty }\), with various choices of \(\alpha , \beta \) and N, are presented in Table 1, together with the computational time in seconds (CT(s)) needed to obtain them. Table 2 compares the error obtained using the presented method (with \(\alpha =\beta =2\)) with the errors of the sinc-Galerkin method, the modified decomposition method, BGM, and PBGM (see [20, 21]); the presented method shows improved performance. Figure 1a shows the absolute errors \(E_{12}(x)\) and \(E_{13}(x)\) at \(\alpha =2\) and \(\beta =2\), demonstrating the convergence of the solutions. Additionally, Fig. 1b shows excellent agreement between the exact solution and the approximate solution \(y_{14}(x)\) of problem (98).

Remark 2

In this example, we find that \(\left\| y\right\| _{\infty }=e\approx 2.71828182845904524,\) and \(\left\| y_{N}\right\| _{\infty } =2.7182817685212886,2.7182817685002005,2.7182817685001983,\) for \(N=6,10,14,\) respectively, with \(\alpha =\beta =2\). The computations of \(\left\| y_{N}\right\| _{r}\) \((r=2,...,7)\) are presented in Table 3 which show that

These results ensure the validity of results (71)–(74) for \(N=6,10,14\). Also, the computations of \(\left\| y_{N}\right\| _{r}\) and \(\left\| y\right\| _{r},\) \(r=2,3,...7,\) and using the numerical results of \(\left\| E_{N}\right\| _{\infty }=\left\| y-y_{N}\right\| _{\infty }\) in Table 1 tell us that

which ensures the validation of (82) for \(N=6,10,14\). Also, the computations of upper bound

for \(r=2,3,4,...,7\) and \(N=6,10,14\), are presented in Table 4. In view of Tables 1 and 4, we can see that \(\left\| y-y_{N}\right\| _{\infty }\le \,{UB}_{r}(N),\) for \(r=2,3,4,5,6,7,\) and \( N=6,10,14,\) which ensures the validation of (84). In fact, the computations show that the maximum values of r such that \(\left\| y-y_{N}\right\| _{\infty }\le {UB}_{r}(N)\) for \(N=6,10,14\) are \( r=7,11,14,\) respectively. Hence, the obtained approximated solutions \(y_{N}(x)\) converge to the exact solution y(x) with order 13.5 when r = 14. Additionally, the last row of Table 1 shows the upper bounds \({MUB}_{r}(N)\) which are the maximum values of the computed upper bounds \({UB}_{r}(N)\) at \((\alpha ,\beta )\in \{(2,2),(0,1),(-1/2,-1/2),(1/20,-1/20),(1/200,-1/200)\}\) using \(r=7,10,14\) and \(N=6,10,14\), respectively.

Figures of obtained errors \(E_{N}\) and approximate solution for Example 1

Example 2

Consider the following seventh-order linear boundary-value problem:

whose exact solution is given by \(y(x)=(1-x)e^{x}\). Computational results for \(\left\| E_{N}\right\| _{\infty }\)-error norm with various choices of \( \alpha ,\beta \) and N, are presented in Table 5. A comparison between the absolute errors obtained by using the present method (using \(N =17\), \(\alpha =0\), and \(\beta =0\)) and the methods in [46, 47] at various values of x, is given in Table 6. This shows that the present method is more accurate. Figure 2a shows Log-errors for various N values, when \(\alpha =0\) and \(\beta =0\), to demonstrate the stability of solutions. Additionally, Fig. 2b shows an excellent agreement between the exact and approximate solution \(y_{17}(x)\) to the given problem (99).

Graph of \(Log_{10}(E_{N})\) and approximate solution for Example 2

Example 3

Consider the following linear initial value problem:

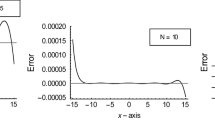

whose exact solution is given by \(y(x)=(1-x)e^{x}\). Computational results for the \(\left\| E_{N}\right\| _{\infty }\)-error norm with various choices of \( \alpha ,\beta \) and N are presented in Table 7. A comparison between the absolute errors obtained by the present method (using \(N =20\), \(\alpha =0\) and \(\beta =1\)) and the methods in [31, 49] at various values of x is given in Table 8. Figure 3a shows the absolute error \(E_{20}(x)\) at \(\alpha =0\) and \(\beta =1\), demonstrating the convergence of the solutions. Additionally, Fig. 3b shows excellent agreement between the exact solution and the approximate solution \(y_{20}(x)\) of problem (100).

Graph of \(E_{N}\) and approximate solution for Example 3

Example 4

Consider the following initial value problem to demonstrate that the B-polynomials are powerful to approximate the solution to desired accuracy:

where f(x) is selected such that the exact solution is \(y(x)=x^5\,\cos x\). In Table 9, we list the obtained maximum absolute errors with different values of \(\alpha , \beta \) at \(N=9,13,17\). Table 10 compares the absolute errors obtained by the presented method (using \(N =17\), \(\alpha =-1/2\) and \(\beta =-1/2\)) with those of [13] at various values of x. Figure 4a shows the absolute errors \(E_{16}(x)\) and \(E_{17}(x)\) at \(\alpha =1\) and \(\beta =0\), demonstrating the convergence of the solutions. Additionally, Fig. 4b shows excellent agreement between the exact solution and the approximate solution \(y_{9}(x)\) of problem (101).

Graph of \(E_{N}\) and approximate solution for Example 4

Example 5

Consider the Abel equation of the second kind

where the exact solution is \(y(x)=e^{-x}\). In Table 11, we list the obtained maximum absolute errors with different values of \(\alpha , \beta \) at \(N=5,10,20\). Table 12 compares the obtained absolute errors from the presented method (using \(N=5\), \(\alpha =0\) and \(\beta =0\)) to those of [30] at various values of x. Figure 5a shows Log-errors for various N values, when \(\alpha =0\) and \(\beta =1\), to demonstrate the stability of solutions. Additionally, Fig. 5b shows an excellent agreement between the exact and approximate solution \(y_{6}(x)\) to the given problem (102).

Graph of \(Log_{10}(E_{N})\) and approximate solutions for Example 5

Example 6

Consider the boundary value problem

where f(x) is selected, such that the exact solution is \(y(x)=x^{1.5}\,e^{-x}\).

In Table 13, we list the obtained maximum absolute errors with different values of \(\alpha , \beta \) at \(N=10,15,20\). Figure 6a shows Log-errors for various N values, when \(\alpha =1\) and \(\beta =0\), to demonstrate the stability of solutions. Additionally, Fig. 6b shows an excellent agreement between the exact and approximate solution \(y_{10}(x)\) to the given problem (103).

Remark 3

In Example 6, the proposed algorithm is applied to a nonlinear differential equation with a nonsmooth solution. Table 13 shows that the obtained accuracy is lower than that obtained in Examples 1–5, where the exact solutions are smooth. Therefore, the proposed algorithm is less effective for problems with nonsmooth solutions, but its accuracy remains acceptable.

Graph of \(Log_{10}(E_{N})\) and approximate solutions for Example 6

7 Conclusions

This paper discussed some properties and formulae related to B-polynomials, which are utilized with either the boundary conditions (44) or the initial conditions (45) to obtain explicitly the q unknown expansion coefficients (54) or (61), respectively. For a linear differential equation with polynomial coefficients, the algorithm leads to a linear algebraic system of \((N-q+1)\) equations in \((N-q+1)\) unknowns, which has the matrix form (58); for a nonlinear differential equation with polynomial coefficients, it leads to a nonlinear algebraic system of \((N-q+1)\) equations in \((N-q+1)\) unknowns. In each case, a comparison between the absolute errors obtained by the presented method and other methods is given. Furthermore, in all of the numerical examples presented, the agreement between the exact and approximate solutions was between \(10^{-16}\) and \(10^{-20}\). All computations presented were performed on a computer running Mathematica 12 [Intel(R) Core(TM) i9-10850 CPU @ 3.60 GHz, 3600 MHz, 10 Core(s), 20 Logical Processor(s)]. We have shown that the presented B-polynomial method returns a valid solution for a differential equation and is a powerful tool for overcoming the difficulties associated with boundary and initial value problems with less computational effort. We have also provided some theoretical results regarding the error resulting from the presented numerical method. These theoretical results have been validated through the presented numerical examples, and the corresponding explanations have been given.

References

Abdelhakem, M., Alaa-Eldeen, T., Baleanu, D., Alshehri, M.G., El-Kady, M.: Approximating real-life BVPs via Chebyshev polynomials first derivative Pseudo-Galerkin method. Fractal Fract. 5(4), 165 (2021)

Abdelhakem, M., Youssri, Y.H.: Two spectral Legendre’s derivative algorithms for Lane-Emden, Bratu equations, and singular perturbed problems. Appl. Numer. Math. 169, 243–255 (2021)

Abdelhakem, M., Fawzy, M., El-Kady, M., Moussa, H.: An efficient technique for approximated BVPs via the second derivative Legendre polynomials pseudo-Galerkin method: Certain types of applications. Results Phys. 43, Article 106067 (2022)

Abd-Elhameed, W.M., Ahmed, H.M.: Tau and Galerkin operational matrices of derivatives for treating singular and Emden-Fowler third-order-type equations. Int. J. Mod. Phys. C. 33(5), 2250061–22500617 (2022)

Abd-Elhameed, W.M., Alkenedri, A.M.: Spectral Solutions of Linear and Nonlinear BVPs Using Certain Jacobi Polynomials Generalizing Third- and Fourth-Kinds of Chebyshev Polynomials. Comput. Model. Eng. Sci. 126(3), 955–989 (2021)

Abd-Elhameed, W.M., Napoli, A.: A Unified Approach for Solving Linear and Nonlinear Odd-Order Two-Point Boundary Value Problems. Bull. Malays. Math. Sci. Soc. 43(3), 2835–2849 (2020)

Ahmed, H.M.: Solutions of 2nd-order linear differential equations subject to Dirichlet boundary conditions in a Bernstein polynomial basis. J. Egypt. Math. Soc. 22(2), 227–237 (2014)

Ahmed, H.M.: Numerical Solutions of Korteweg-de Vries and Korteweg-de Vries-Burger’s Equations in a Bernstein Polynomial Basis. Mediterr. J. Math. 16(4), 102 (2019)

Bhatta, D.D., Bhatti, M.I.: Numerical solution of KdV equation using modified Bernstein polynomials. Appl. Math. Comput. 174(2), 1255–1268 (2006)

Baldwin, P.: Asymptotic estimates of the eigenvalues of a sixth order boundary value problem obtained by using global phase-integral methods. Phil. Trans. R. Soc. Lond. A. 322, 281–305 (1987)

Bernšteĭn, S.: Démonstration du théorème de Weierstrass fondée sur le calcul des probabilités. Comm. Soc. Math. Kharkov 13, 1–2 (1912–1913)

Bhatti, M.I., Bracken, P.: Solutions of differential equations in a Bernstein polynomial basis. J. Comput. Appl. Math. 205(1), 272–280 (2007)

Bhrawy, A.H., Alghamdi, M.A.: Numerical Solutions of Odd Order Linear and Nonlinear Initial Value Problems Using a Shifted Jacobi Spectral Approximations, Abstr. Appl. Anal. 2012, Article ID 364360, 25 pages (2012). https://doi.org/10.1155/2012/364360

Boutayeb, A., Twizell, E.: Numerical methods for the solution of special sixth-order boundary value problems. Int. J. Comput. Math. 45, 207–233 (1992)

Burden, R.L., Faires, J.D.: Numerical Analysis. Brooks/Cole (2011)

Bushnaq, S., Shah, K., Tahir, S., Ansari, K.J., Sarwar, M., Abdeljawad, T.: Computation of numerical solutions to variable order fractional differential equations by using non-orthogonal basis. AIMS Math. 7(6), 10917–10938 (2022)

Carnicer, J.M., Peña, J.M.: Shape preserving representations and optimality of the Bernstein basis. Adv. Comput. Math. 1(2), 173–196 (1993)

Cheng, F.: On the rate of convergence of Bernstein polynomials of functions of bounded variation. J. Approx. Theory 39(3), 259–274 (1983)

Davies, A.R., Karageorghis, A., Phillips, T.N.: Spectral Galerkin methods for the primary two-point boundary-value problem in modelling viscoelastic flows. Int. J. Numer. Methods Eng. 26, 647–662 (1988)

Doha, E.H., Bhrawy, A.H., Saker, M.A.: On the Derivatives of Bernstein Polynomials: An Application for the Solution of High Even-Order Differential Equations, Bound. Value Probl., 2011, Article ID 829543, 16 pages (2011). https://doi.org/10.1155/2011/829543

El-Gamel, M.: A comparison between the sinc-Galerkin and the modified decomposition methods for solving two-point boundary-value problems. J. Comput. Phys. 223(1), 369–383 (2007)

El-Gamel, M., Cannon, J.R., Zayed, A.I.: Sinc-Galerkin method for solving linear sixth-order boundary-value problems. Math. Comput. 73, 1325–1343 (2003)

Farin, G.: Curves and Surfaces for Computer-Aided Geometric Design, 3rd edn. Academic Press, Boston (1993)

Farin, G.: Curves and Surfaces for CAGD: A Practical Guide. Academic Press, San Diego (2002)

Farouki, R.T.: On the stability of transformations between power and Bernstein polynomial forms. Comput. Aided Geom. Design 8(1), 29–36 (1991)

Farouki, R.T., Goodman, T.N.T.: On the optimal stability of the Bernstein basis. Math. Comp. 65(216), 1553–1566 (1996)

Farouki, R.T., Rajan, V.T.: On the numerical condition of polynomials in Bernstein form. Comput. Aided Geom. Design 4(3), 191–216 (1987)

Farouki, R.T., Rajan, V.T.: Algorithms for polynomials in Bernstein form. Comput. Aided Geom. Design 5(1), 1–26 (1988)

Farouki, R.T., Rajan, V.T.: Algorithms for polynomials in Bernstein form. Comput. Aided Geom. Design 5(1), 1–26 (1988). https://doi.org/10.1016/0167-8396(88)90016-7

Guler, C.: A new numerical algorithm for the Abel equation of the second kind. Int. J. Comput. Math. 84(1), 109–119 (2007)

Haq, S., Idrees, M., Islam, S.: Application of optimal homotopy asymptotic method to eighth order initial and boundary value problems. Int. J. Appl. Math. Comput. Sci. 2(4), 73–80 (2010)

Hoschek, J., Lasser, D.: Fundamentals of Computer Aided Geometric Design. Taylor and Francis (1996)

Jani, M., Babolian, E., Javadi, S.: Bernstein modal basis: application to the spectral Petrov-Galerkin method for fractional partial differential equations. Math. Methods Appl. Sci. 40(18), 7663–7672 (2017)

Karageorghis, A., Phillips, T.N., Davies, A.R.: Spectral collocation methods for the primary two-point boundary-value problem in modelling viscoelastic flows. Int. J. Numer. Methods Eng. 26, 805–813 (1988)

Karimi, K.: Numerical solution of nonlocal parabolic partial differential equation via Bernstein polynomial method. Punjab Univ. J. Math. 48(1), 47–53 (2016)

Keller, H.B.: Numerical Methods for Two-point Boundary-value Problems. Courier Dover Publications, Inc. (2018)

Lorentz, G.G.: Bernstein Polynomials. University of Toronto Press, Toronto (1953)

Popoviciu, T.: Sur l’approximation des fonctions convexes d’ordre supérieur. Mathematica (Cluj) 10, 49–54 (1935)

Rababah, A., Al-Natour, M.: The weighted dual functionals for the univariate Bernstein basis. Appl. Math. Comput. 186(2), 1581–1590 (2007)

Rababah, A., Lee, B.G., Yoo, J.: A simple matrix form for degree reduction of Bézier curves using Chebyshev-Bernstein basis transformations. Appl. Math. Comput. 181(1), 310–318 (2006)

Richards, G., Sarma, P.R.R.: Reduced order models for induction motors with two rotor circuits. IEEE Trans. Energy Convers. 9(4), 673–678 (1994)

Shah, K.: Using a numerical method by omitting discretization of data to study numerical solutions for boundary value problems of fractional order differential equations. Math. Methods Appl. Sci. 42(18), 6944–6959 (2019)

Shah, K., Abdeljawad, T., Khalil, H., Khan, R.A.: Approximate solutions of some boundary value problems by using operational matrices of Bernstein polynomials, In: Functional Calculus. IntechOpen, 1-25 (2020)

Shah, K., Wang, J.: A numerical scheme based on non-discretization of data for boundary value problems of fractional order differential equations. Revista de la Real Academia de Ciencias Exactas, Fisicas y Naturales. Serie A. Matemáticas 113(3), 2277–2294 (2019)

Shahni, J., Singh, R.: Numerical solution of system of Emden-Fowler type equations by Bernstein collocation method. J. Math. Chem. 59(4), 1117–1138 (2021)

Siddiqi, S.S., Akram, G., Iftikhar, M.: Solution of seventh order boundary value problem by differential transformation method. WASJ. 16(11), 1521–1526 (2012)

Siddiqi, S.S., Iftikhar, M.: Numerical solution of higher order boundary value problems, Abstr. Appl. Anal. 2013, Article ID 427521, 12 pages (2013). https://doi.org/10.1155/2013/427521

Twizell, E., Boutayeb, A.: Numerical methods for the solution of special and general sixth-order boundary value problems with applications to Benard layer eigenvalue problems. Proc. Roy. Soc. Lond. A. 431, 433–450 (1990)

Xu, X., Zhou, F.: Numerical solutions for the eighth-order initial and boundary value problems using the second kind Chebyshev wavelets. Adv. Math. Phys. 2015 (2015)

Youssri, Y.H., Abd-Elhameed, W.M., Abdelhakem, M.: A robust spectral treatment of a class of initial value problems using modified Chebyshev polynomials. Math. Methods Appl. Sci. 44(11), 9224–9236 (2021)

Acknowledgements

The author would like to thank the referees for their valuable comments and their constructive suggestions which improved the manuscript to its present form.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB). This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Ethics declarations

Conflict of Interest

The author declares that he has no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmed, H.M. Numerical Solutions of High-Order Differential Equations with Polynomial Coefficients Using a Bernstein Polynomial Basis. Mediterr. J. Math. 20, 303 (2023). https://doi.org/10.1007/s00009-023-02504-0

DOI: https://doi.org/10.1007/s00009-023-02504-0

Keywords

- Fourier series in special orthogonal functions

- boundary (initial) value problems for ordinary differential equation

- Bernstein polynomial

- Galerkin method