Abstract

Traditional iterative learning control (ILC) algorithms usually assume that full system information and operation data can be utilized. However, due to the uncertainty and complexity of actual systems, it is difficult to access full system information and operation data accurately and completely. In this chapter, a novel ILC scheme based on stochastic variance reduced gradient (SVRG) is proposed. This scheme is not only suitable for resolving the incomplete information problem, but also converges efficiently under both strongly convex and non-strongly convex control objectives. To demonstrate the advantages, this chapter studied two scenarios, i.e., random error data dropout and model-free data-driven approach, and proposed two SVRG-based ILC algorithms for these two scenarios, respectively. It is theoretically demonstrated and experimentally verified that the proposed SVRG-based ILC scheme converges faster than both the full gradient and stochastic gradient methods for the two involved scenarios.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

1.1 Background

Iterative learning control (ILC) is a control method applicable to systems doing repeated operations. The basic idea is to use the input and error signals from previous iteration to improve the input of the next iteration. Arimoto et al. first proposed iterative learning control for robotic arms in 1984 and clarified the basic idea of iterative learning control (Arimoto et al., 1984). Subsequently, academia has published numerous chapters around ILC. It has gradually become one of the important branches in the field of control and is widely used in robotics, industrial production and hard disk manufacturing, and other controlled systems with repeated operation.

To achieve excellent control performance, most ILC assume that full operational data and system information can be obtained and utilized. However, in real systems, data delays and dropouts often occur due to various uncertainties. On the other hand, when the system structure is complex or unstable, it is difficult to obtain the system information accurately. To solve the incomplete information problems, it is of great theoretical and practical significance to design ILC algorithms with high performance.

Information incompleteness can be classified into two categories, objective and subjective incompleteness. Information incompleteness caused by objective factors is often related to the uncertainty of the system itself. For example, during the transmission of the signal, the instability of the channel can cause data packet loss. Three main random packet dropout models have been developed for this problem: the random sequence model, the Bernoulli distribution model, and the Markov chain model. Shen (2018) designed an iterative learning control algorithm based on the stochastic approximation algorithm corresponding to the three models and proved that the algorithms satisfy mean-square convergence and probabilistic strong convergence. Information incompleteness due to subjective factors usually artificially assumes that the system information is unknown, thus avoiding the complexity of system modeling and system instability. For example, Oomen et al. (2014) designed a model-free data-driven iterative learning control algorithm for \(H_\infty \)-parametric estimation of multi-input multi-output (MIMO) systems, which obtains the full gradient by conducting \(n_0\times n_1\) experiments on \(n_0\times n_1\)-dimensional MIMO systems. However, this algorithm is difficult to be applied to large MIMO systems due to the excessive number of experiments. Subsequently, Aarnoudse et al. (Owens et al., 2009) designed an iterative learning control algorithm based on the stochastic approximation method by constructing a random matrix to estimate the gradient, which effectively reduces the number of experiments.

It is important to note that the effect of information incompleteness on ILC tracking performance is essentially the robustness of ILC. However, this robustness differs for objective and subjective-type information incompleteness problems. The former is usually model-based and emphasizes modeling to analyze the causes of information deficiency. While the latter is data-based and is generally not concerned with the causes of information deficiency but with the inherent limitations of information deficiency on control performance. Model-based and data-based control methods are not opposed. To achieve the best control effect, the two control methods can also be used in combination. Existing studies on ILC for solving the information incompleteness problems are usually based on stochastic approximation method or other gradient methods. In this chapter, we will use a stochastic variance reduction gradient (SVRG) method to give a general framework for solving the system information incompleteness problems.

1.2 Design and Analysis of SVRG-Based ILC

Modeling the control objective as an optimization function, for a deterministic discrete-time linear system, Owens et al. (Aarnoudse & Oomen, 2020) proposed a gradient-type ILC algorithm based on optimization ideas and analyzed the stability, monotonicity, and robustness of the algorithm. For noisy discrete-time linear systems, Yang and Ruan (2017) proposed an enhanced gradient-based ILC algorithm that can effectively converge in the presence of perturbations in the system. However, the above gradient-based ILC algorithm requires full error and system information for each iteration, and when this information is not fully available, the traditional gradient-based ILC algorithm is no longer applicable.

Notice that in Machine Learning, Stochastic Gradient Descent (SGD) method replaces the total gradient by randomly selecting a partial gradient each time. Corresponding to the control problems, the partial gradient can also be obtained when there is insufficient information about the error or the system. This correlation inspires us to find suitable stochastic gradient methods to solve errors or system information insufficient problems.

In order to improve the convergence speed and apply to non-smooth and non-strongly convex objective functions, recent research in Machine Learning has produced a large number of improved versions of stochastic gradient descent algorithms, including momentum method, variance reduction method, and incremental aggregated gradients. Allen-Zhu (2018) divided these algorithms to three types according to their complexity under strongly convex conditions. The first generation is the momentum-based gradient algorithm, the second generation includes the variance reduction-based gradient algorithm and the proximal stochastic variance reduction gradient algorithm, the third generation includes the Katyusha algorithm and incremental aggregated gradient algorithms. In most cases, the complexity of the algorithms decreases with the growth of generation. Considering specific control problems, the algorithms in first generation are slow to converge and often fail to meet the practical needs, while the algorithms in third generation require accurate system modeling to achieve faster convergence and are difficult to apply to data-driven ILC. Therefore, the research in this chapter is mainly based on the algorithm in second generation—Stochastic Variance Reduction Gradient (SVRG) algorithm.

1.3 Main Work and Organization

The purpose of this chapter is to construct SVRG-based ILC and use this framework to solve specific information incompleteness problems. As representatives of objective and subjective information incompleteness, two scenarios, error data random dropouts and model-free ILC, are selected in this chapter to give the corresponding SVRG-based ILC algorithms, respectively. The contribution is threefold.

-

1.

Propose a SVRG-based ILC framework for single-input single-output (SISO) systems. The algorithm is shown to converge linearly under smooth and strongly convex conditions.

-

2.

Apply the SVRG-based ILC framework to error data random data dropouts and give the convergence proof of the algorithm.

-

3.

Extend the SVRG-based ILC framework to multi-input multi-output (MIMO) systems in model-free data-driven scenario and prove the convergence of the algorithm under smooth and non-strongly convex conditions.

Section 2 serves as the basis of the chapter, giving the SVRG-based ILC framework for SISO systems. Section 3 applies the framework to error data random dropouts problem. Section 4 extends the framework to MIMO systems in model-free scenario. Since Sect. 2 only gives the algorithm framework and does not cover the specific scenario, Sect. 2 does not give numerical simulations and contains only three parts: system description, algorithm design, and convergence analysis. Both Sects. 3 and 4 include four parts: system description, algorithm design, convergence analysis, and numerical simulation.

2 SVRG-Based ILC Framework

As the basis of the following sections, this section uses SISO systems to give the basic framework of SVRG-based ILC algorithm. This section includes three parts: system description, algorithm design, and convergence analysis.

2.1 System Description

Consider the following single-input single-output (SISO) discrete-time linear system

where \(t=0, 1,\ldots , N-1\) is time index, and \(k=1, 2, 3,\ldots \) denotes the iteration index. \(x_k\left( t \right) \in \mathbb {R}^n, u_k\left( t\right) \in \mathbb {R}\), and \(y_k\left( t\right) \in \mathbb {R}\) represent the system state, input and output, respectively. \(A\in \mathbb {R}^{n\times n}, B\in \mathbb {R}^n, \text { and } C\in \mathbb {R}^{1\times n}\) are the system matrices. The initial condition is the same for each iteration, i.e., \(x_k\left( 0\right) =x_0, \forall k\in \mathbb {N}^*\).

Taking \(t=0,1, \ldots , N-1\) in (1) yields

Combining the above equations, system (1) can be rewritten in the following equivalent form

where \(u_{k}=\left[ u_{k}(0), u_{k}(1), \ldots , u_{k}(N-1)\right] ^{T} \in \mathbb {R}^{n}, y_{k}=\left[ y_{k}(1), y_{k}(2), \ldots , y_{k}(N)\right] ^{T} \in \mathbb {R}^{n}\),

For further analysis, the following assumptions are required.

Assumption 1

The input/output coupling matrix \(C B \ne 0\).

Assumption 2

For desired trajectory \(y_{d}(t)\), there exists a unique desired input \(u_{d}(t)\) and initial state \(x_{d}(0)\) such that

Also written in the form of (2), we have

Remark 1

Assumptions 1 and 2 describe the realizability of the system for desired trajectory \(y_d\). To be specific, Assumption 1 means that the relative degree of the system is 1. Assumption 2 describes the existence of an input signal \(u_{d}\) that can precisely trace \(y_{d}\). If the system does not satisfy the Assumption 2, the system output can only be as close as possible to the desired trajectory \(y_{d}\).

Assumption 3

The initial states of (1) and (3) are identical, i.e., \(x_{d}(0)=x_{k}(0)=x_{0}, \forall k\). Assume that \(x_{0}=0\).

Remark 2

Assumption 3 is based on the requirement for system repeatability in ILC. In order to simplify the algorithm, without loss of generality, take \(x_{0}=0\). It is easy to verify that the result of this chapter is also valid when \(x_{0} \ne 0\).

In this chapter, the above three assumptions will be followed, but in fact, the SVRG-based ILC can also be established when Assumptions 1 and 2 are appropriately relaxed. Section 4 will give specific explanations on how to relax these assumptions.

Figure 1 illustrates the basic framework of ILC. The plant takes input \(u_{k}\) and generates output \(y_{k}\) and gets the error \(e_{k}=y_{d}-y_{k}\) between the output and the desired trajectory \(y_{d}\), which is transmitted to the controller. The controller uses error \(e_{k}\) and input \(u_{k}\) to calculate the input signal \(u_{k+1}\) for the next batch and transmits it to the plant. Our goal is to find a sequence of input \(\left\{ u_{k}\right\} \), s.t.

where \(\Vert \cdot \Vert \) is the vector 2-norm and its induced matrix norm, and henceforth refers to this norm if not otherwise specified.

By Assumptions 2 and 3, (5) is equivalent to the optimization problem of function F:

2.2 Algorithm Design

The traditional gradient-based ILC updating law (Gu et al., 2019) is

where \(\eta _{k}\) denotes step length, and \(\nabla _{k}\) is the gradient of the objective function. From (6), we have

In (8), calculating the full gradient requires all the information of conjugate matrix \(H^{T}\) and error \(e_{k}\). To give the gradient under partial information, consider decomposing the error \(e_{k}=\left[ e_{k}(1), e_{k}(2), \ldots , e_{k}(N)\right] ^{T}\) according to the time index. Let \(f_{i}\left( u_{k}\right) =\frac{1}{2}\left\| e_{k}(i)\right\| ^{2}=\frac{1}{2}\left( y_{d}(i)-h_{i}^{T} u_{k}\right) ^{2}\), where \(h_{i}=\left[ h_{i 1}, \ldots , h_{i i}, 0, \ldots , 0\right] ^{T}\) denotes the i-th row of the matrix H. Then, equation (6) can be rewritten as

Take the gradient of both sides, we have

Define random gradient \(\tilde{\nabla }_{k}\) as a discrete random variable that takes value uniformly over \(\left\{ \nabla f_{i}\left( u_{k}\right) \right\} _{i=1}^{N}\), satisfying \(P\left( \tilde{\nabla }_{k}=\nabla f_{i}\left( u_{k}\right) \right) =\frac{1}{N}\). Therefore \(\mathbb {E}\left[ \tilde{\nabla }_{k}\right] =\nabla _{k}\), i.e., \(\tilde{\nabla }_{k}\) is unbiased estimation of \(\nabla _{k}\).

Note that by decomposing (6)–(9), calculating the specific value \(\nabla f_{i}\left( u_{k}\right) \) of random vector \(\tilde{\nabla }_{k}\) only requires one row of the system matrix H and one-dimensional information of the error \(e_{k}\). Therefore the decomposition can effectively reduce the information required for each iteration. This technique of gradient decomposition is the basis for solving the ILC of information incompleteness using SVRG method in this chapter. In Sects. 3 and 4, two specific decomposition methods are presented for incompleteness of error and system information, respectively.

Consider the stochastic gradient descent (SGD) method used in Machine Learning. Replacing the full gradient \(\nabla _{k}\) with the stochastic gradient \(\tilde{\nabla }_{k}\) in the ILC updating law (7), we can obtain the SGD-based ILC algorithm. However, the convergence rate of SGD algorithm is \(O(1 / \sqrt{k})\) even under strongly convex condition (Allen-Zhu, 2018), which cannot meet the practical requirements. This is because although the stochastic gradient \(\tilde{\nabla }_{k}\) is unbiased estimate of the full gradient \(\nabla _{k}\), the variance accumulates as the iteration increases. To reduce the variance, Johnson and Zhang (2013) proposed a general stochastic variance reducted gradient (SVRG) descent method. By recording a “snapshot” \(\tilde{u}^{s}\) every few updates to construct an converging upper bound of the gradient, the rate of convergence of SVRG method is \(O\left( \rho ^{k}\right) \) under strongly convex condition and O(1/k) under non-strongly convex condition. Based on this method, the input updated with “snapshot” \(\tilde{u}^{s}\) is denoted as \(u_{s, k}\), and the SVRG-based ILC updating law is

For system (2), the SVRG-based ILC algorithm with updating law (11) is shown in Algorithm 1.

Algorithm 1 has two loops. The outer loop updates the “snapshot” \(\tilde{u}^{s}\) once when the inner loop iterates m times. The iteration length m is taken as an integer multiple of N, which is empirically set to 2N. Line 9 and 10 of Algorithm 1 shows two ways of updating the “snapshot”, Option I and Option II. Option I takes the average of the first \(m-1\) inputs as the “snapshot”, without using \(u_{s, m}\), so actually the inner loop only requires \(m-1\) iterations. The corresponding Option II takes the mth-iteration and uses \(u_{s, m}\) as the “snapshot”. The two “snapshot” updating methods do not change the convergence of Algorithm 1 (Bottou et al., 2018). Due to the limitation of space, we only prove the convergence of Option I under strongly convex conditions and Option II under non-strongly convex conditions in this section and Sect. 4, respectively.

2.3 Convergence Analysis

This subsection is divided into two parts, first giving the convex optimization knowledge required for the proof of this chapter and then analyzing the convergence of the system (2) when the “snapshot” update method of Algorithm 1 is set for Option I.

2.3.1 Preliminaries of Convex Optimization

The basics of convex optimization required for this chapter are given below (Lyubashevsky, 2005).

Definition 1

(Smoothness) Suppose S is an nonempty convex subset of \(\mathbb {R}^{d}\), \(f: S \rightarrow \mathbb {R}\in C^1\). If \(\exists L>0\), s.t. \(\forall x, y \in S\),

then we say that f is L-smooth or \(\nabla f(x)\) is L-Lipschitz continuous on S, where L is the Lipschitz constant.

Definition 2

(Strong convexity) Suppose S is an nonempty convex subset of \(\mathbb {R}^{d}\), \(f: S \rightarrow \mathbb {R}\in C^1\). If \(\exists \sigma >0\), s.t. \(\forall x, y \in S\),

then we say that f is \(\sigma \)-strongly convex on S. When \(\sigma =0\), \(f(y) \ge f(x)+\langle \nabla f(x), y-x\rangle \), f is convex.

Definition 3

(Conditional number) If f is L-smooth and \(\sigma \)-strongly convex, \(\kappa =L / \sigma \) is the conditional number of f.

Theorem 1

For convex function f, the followings are equivalent:

a. \(\nabla f(x)\) is L-Lipschitz continuous,

b. \(f(y) \le f(x)+\langle \nabla f(x), y-x\rangle +\frac{L}{2}\Vert y-x\Vert ^{2}\),

c. \(f(y) \ge f(x)+\langle \nabla f(x), y-x\rangle +\frac{1}{2 L}\Vert \nabla f(y)-\nabla f(x)\Vert ^{2}\),

d. \(\frac{1}{L}\Vert \nabla f(y)-\nabla f(x)\Vert ^{2} \le \langle \nabla f(x)-\nabla f(y), x-y\rangle \).

Proof

\(a \rightarrow b\) : Denote \(g(t)=f(t(y-x)+x)\), then \(f(x)=g(0), f(y)=g(1)\), and \(g^{\prime }(t)=\langle \nabla f(t(y-x)+x), y-x\rangle \). Therefore,

\(b \rightarrow c\) : Denote \(f_{x}(z)=f(z)-\langle \nabla f(x), z\rangle \), for \(\forall z, z^{\prime } \in \mathbb {R}^{d}\),

By using the convexity of f, we have

Therefore \(f_{x}(z)\) is also convex, since \(\nabla f_{x}(z)=\nabla f(z)-\nabla f(x)\), \(f_{x}(z)\) achieves its minimum at \(z=x\). By (12),

Therefore \(f_{x}(z)-f_{x}(x) \ge \frac{1}{2 L}\left\| \nabla f_{x}(z)\right\| ^{2}\), which implies

\(c \rightarrow d\) : Swapping \(\textrm{x}\) and \(\textrm{y}\) in c. , we have

Summing the two equations, we have

\(d \rightarrow a\): \(\Vert \nabla f(y)-\nabla f(x)\Vert ^{2} \le L\langle \nabla f(x)-\nabla f(y), x-y\rangle \le \) \(L\Vert \nabla f(x)-\nabla f(y)\Vert \cdot \Vert x-y\Vert \) by Cauchy inequality, thus \(\Vert \nabla f(x)-\nabla f(y)\Vert \le L\Vert x-y\Vert \). \(\square \)

Theorem 2

Let \(f(x)=\frac{1}{2} x^{T} Q x+q^{T} x+c\), where \(\textrm{Q}\) is positive definite. Then f(x) is L-smooth and \(\sigma \)-strongly convex, where \(L=\lambda _{M}, and\; \sigma =\lambda _{m}\). \( \lambda _{M}, and\; \lambda _{m}\) are the maximum and minimum eigenvalues of Q, respectively.

Proof

Since \(\nabla f(x)=Q x+q\), we have

Hence f(x) is \(\lambda _{M}\)-smooth. It is easy to verify that

Since Q is positive definite, the orthogonal similarity can be diagonalized as \(Q=P^{T} \Lambda P\), where P is the orthogonal matrix, \(\Lambda \) is the diagonal matrix of eigenvalues, and \(\lambda _{m}>0\). Thus

Thus f(x) is \(\lambda _{m}\)-strongly convex. \(\square \)

For system (2) and objective function (6), we have:

Proposition 1

Each \(f_{i}\) is convex and L-smooth.

Proof

For \(f(x)=\frac{1}{2}\left( q^{T} x+c\right) ^{2}\), where \(q=\left[ q_{1}, q_{2}, \ldots , q_{n}\right] ^{T} \in \mathbb {R}^{n}, x \in \mathbb {R}^{n}, c \in \mathbb {R}\). Obviously, f is convex, and \(\nabla f(x)=q q^{T} x+c q\), \(\Vert \nabla f(x)-\nabla f(y)\Vert =\left\| q q^{T}(x-y)\right\| \le \left\| q q^{T}\right\| \cdot \Vert x-\) \(y\Vert \le \Vert q\left\| ^{2} \cdot \right\| x-y \Vert \). Therefore, for each \(f_{i}\) in (9), let \(L=\max _{i} \{\left\| h_{i}\right\| ^{2} \}>0, h_{i}=\left[ h_{i 1}, \ldots , h_{i i}, 0, \ldots , 0\right] ^{T}\), then \(f_{i}\) is L-smooth. \(\square \)

Proposition 2

F is L-smooth and \(\sigma \)-strongly convex.

Proof

By (6), we have

where \(H^{T} H\) is positive definite. Then by Theorem 2, \(F\left( u_{k}\right) \) is L-smooth and \(\sigma \)-strongly convex, where L and \(\sigma \) are the \(\frac{1}{2 N}\) of the maximal and minimal eigenvalues of \(H^{T} H\), respectively. \(\square \)

2.3.2 Proof of Convergence

In Algorithm 1, set the “snapshot” updating as Option I. Then, under the assumptions of system (2), the convergence of Algorithm 1 is given by the following theorem.

Theorem 3

If each \(f_{i}\) is convex and L-smooth, and F is \(\sigma \)-strongly convex. We denote the optimal point \(u^{*}={\text {argmin}}_{u} F(u)\), and assume that m is large enough such that

Then the convergence of Algorithm 1 satisfies

Proof

Since \(f_{i}\) is convex and L-smooth, for any i, by Theorem 1,

Since \(\frac{1}{N} \sum _{i=1}^{n} \nabla f_{i}(u)=\nabla F(u)\), and \(\nabla F\left( u^{*}\right) =0\), we regard \(\nabla f_{i}\) as random vectors which take values from \(\left\{ \nabla f_{i}\right\} _{i=1}^{N}\), then

For any fixed s, we set \(w_{k}=\nabla f_{i}\left( u_{s, k}\right) -\nabla f_{i}\left( \tilde{u}^{s}\right) +\nabla F\left( \tilde{u}^{s}\right) \), then

In the above, we have used the inequality \(\Vert a+b\Vert ^{2} \le 2\Vert a\Vert ^{2}+2\Vert b\Vert ^{2}\), and the property \(\mathbb {E}\left[ \Vert \zeta -\mathbb {E} \zeta \Vert ^{2}\right] \) \(=\mathbb {E}\Vert \zeta \Vert ^{2}-\Vert \mathbb {E} \zeta \Vert ^{2} \le \mathbb {E}\Vert \zeta \Vert ^{2}\), as well as (14). Notice that \(\mathbb {E}\left[ w_{k}\right] =\nabla F\left( u_{s, k}\right) \), thus

In the above, we have used (15) and the convexity of F, i.e., \(\left\langle \nabla F\left( u_{s, k}\right) , u_{s, k}-u^{*}\right\rangle \ge F\left( u_{s, k}\right) -F\left( u^{*}\right) \).

We sum up the expectations of the above equation for \(k=0,1, \ldots , m-1\). Using the convexity of F and the selection of \(\tilde{u}^{s+1}\) under Option I, we have \(F\left( \tilde{u}^{s+1}\right) =F\left( \frac{1}{m} \sum _{k=0}^{m-1} u_{s, k}\right) \le \frac{1}{m} \sum _{k=0}^{m-1} F\left( u_{s, k}\right) \). Therefore,

Thus, we obtain

Summing up the above equation for \(s=0,1, \ldots , S-1\), we have

\(\square \)

Remark 3

Theorem 3 indicates that Algorithm 1 has the rate of convergence \(O\left( \alpha ^{S}\right) \). And this convergence rate is related to the value of \(\alpha \). If the information of the system H is known, to make \(\alpha \) as small as possible, we generally take \(\eta =\frac{0.1}{L}, m=\Theta (n)\), so that the value of \(\alpha \) is close to \(\frac{1}{2}\). If the system information is unknown, we need to find the appropriate \(\eta \) and m by experiment.

3 SVRG-Based ILC Under Random Data Dropouts

This section follows the SISO system in Sect. 2, but assumes that random data dropouts occur in the error signal transmission. This section consists of four parts: system description, algorithm design, performance analysis, and numerical simulation.

3.1 System Description

In SISO system (2), we still hold Assumptions 1–3, but assuming that data dropouts occur in the transmission of the error signal, as shown in Fig. 2. We further assume that the dropouts satisfy the Bernoulli distribution model (Shen, 2018). Therefore, the ILC updating law (7) becomes

where \(\Gamma _{k}={\text {diag}}\left\{ \gamma _{k}(1), \gamma _{k}(2), \ldots , \gamma _{k}(N)\right\} \). \(\left\{ \gamma _{k}(i)\right\} _{i=1}^{N}\) is i.i.d following Bernoulli distribution. Let \(\gamma \triangleq \mathbb {E}\left[ \gamma _{k}(i)\right] \) be the successful transmission rate, where \(\gamma _{k}(i)=0\) means data dropout occurs in the i-th time of k-th batch, and otherwise, data dropout does not occur.

3.2 Algorithm Design

Based on gradient descent method, there are two main approaches to solve the data dropouts problem:

-

(1)

Obtain the full gradient by retransmissing. For each transmission, the controller stores the successfully transmitted data and asks the lost data to be retransmitted until all data are received. This method eliminates the effect of data dropouts by retransmissing.

-

(2)

Use successfully transmitted data to construct random gradient. The data is updated directly using successfully transmitted data each iteration, as shown in the update law (16).

The first method requires a lot of wasted time when data retransmission is slow. Although the second method saves the time of data retransmission, the actual running time may be larger than first method when data retransmission.

Based on the framework of Algorithm 1, the SVRG-based ILC under error data dropouts can be constructed by utilizing the second method for each iteration, but calculating the full gradient every several iterations using the first method. This algorithm does not require data retransmission in most cases compared to the first method and has a significant improvement in convergence speed compared to the second method. Thus it can achieve a good balance between convergence rate and data retransmission speed, and it is more suitable for general data dropout cases.

The formal construction of the algorithm is given below.

Firstly, we take the random gradient \(\tilde{\nabla }_{k} \triangleq -\frac{1}{\gamma N} H^{T} \Gamma _{k} e_{k}\), and we note that \(\mathbb {E}\left[ \tilde{\nabla }_{k}\right] =-\frac{1}{N} H^{T} e_{k}=\nabla _{k}\). For the convenience of proof, we present \(\tilde{\nabla }_{k}\) as

where \(\nabla f_{i}\) is defined in the same way as (10). Notice that if there is no dropout, \(\gamma =1\), and therefore (17) is equivalent to (10).

Remark 4

Equation (17) is similar to the Batch Gradient Descent (BGD) method in Machine Learning, but they are fundamentally different. In (17), the number of \(\nabla f_{i}\) in each summation \(\sum _{i} \nabla f_{i}\) varies according to the value of the random vector \(\{\gamma _{k}(i)\}_{i=1}^{N}\). But in BGD, the number of \(\nabla f_{i}\) is fixed. Therefore, the algorithm based on gradient \(\nabla \tilde{F}_{k}\) cannot be directly applied to BGD.

Secondly, similar to (11), ILC updating law under random data dropouts is constructed:

Finally, change the iteration length m in Algorithm 1 from 2N to \(\lceil 2 \gamma N\rceil \). Because the number of summation in each batch is \(\mathbb {E}\left[ \sum _{i=1}^{N} \gamma _{k}(i)\right] =\gamma N\) in the desired sense, \(\nabla \tilde{F}_{k}\) is equivalent to \(\gamma N\) sum of \(\nabla f_{i}\).

In conclusion, SVRG-based ILC under random data dropouts is shown in Algorithm 2.

For the “snapshot” of Algorithm 2, the update method is taken as Option I in Algorithm 1, and the recommended iteration length is set to \(\lceil 2 \gamma N\rceil \). When \(\gamma \) is unknown, we need to find the appropriate m by experiments.

3.3 Convergence Analysis

By Proposition 1, every \(f_{i}\) is convex and L-smooth, and the following proposition holds:

Proposition 3

Each value of \(\nabla \tilde{F}_{k}\left( u_{k}\right) \) is convex and \(L^{\prime }\)-smooth, where \(L^{\prime }=L / \gamma \), and L is the Lipschitz constant corresponding to the smoothness of \(f_{i}\) in Proposition 1.

Proof

Since every \(f_{i}\) is convex and L-smooth, \(\frac{1}{\gamma N} \sum _{i=1}^{N} \nabla f_{i}\left( u_{k}\right) \) is \(L^{\prime }\)-Lipschitz continuous, where \(L^{\prime }=L/\gamma \). Because each value of \(\nabla \tilde{F}_{k}\left( u_{k}\right) \) is a linear combination of \(\nabla f_{i}\), the summation number does not exceed \(\frac{1}{\gamma N} \sum _{i=1}^{N} \nabla f_{i}\left( u_{k}\right) \). Therefore \(\nabla f_{i}\left( u_{k}\right) \); as a result, \(\nabla \tilde{F}_{k}\left( u_{k}\right) \) is convex and \(L^{\prime }\)-smooth. \(\square \)

For the convergence of Algorithm 2, we have the following theorem.

Theorem 4

If each value of \(\nabla \tilde{F}_{k}\left( u_{k}\right) \) is convex and \(L^{\prime }\)-smooth, F is L-smooth and \(\sigma \)-strongly convex, and for the optimal point \(u^{*}={\text { argmin}}_{u} F(u)\), assuming that m is large enough s.t.

Then the convergence of Algorithm 2 satisfies

Proof

By Proposition 3, replacing \(f_{i}\) in the proof of Theorem 3 with \(\nabla \tilde{F}_{k}\), equation (13) is rewritten as:

The corresponding Eq. (14) is

The rest of the proof repeats the proof of Theorem 3. \(\square \)

Remark 5

Theorem 4 shows that Algorithm 2 also converges linearly, and the speed of convergence is related to \(\alpha \). For the choice of m, note that Theorem 4 differs from Theorem 3 in the Lipschitz constant corresponding to the smoothness of the condition. By Proposition 3, with \(L^{\prime }=L / \gamma \), for

We can consider multipling m by \(\gamma \) times, i.e., changing m from 2N to \(\lceil 2 \gamma N\rceil \), to approximately keep the convergence of Algorithm 2.

3.4 Numerical Simulation

In SISO system (1), take the system matrix (A, B, C) as

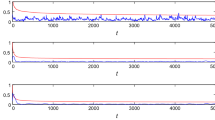

Take desired trajectory \(y_{d}(t)=\sin (2 \pi t / 50)\), time length \(N=50\), initial state \(x_{0}=0\), and initial input \(u_{0}=0\). When \(\gamma =0.9\) and \(\gamma =0.6\), the ILC based on full gradient (GD), stochastic gradient (SGD) and stochastic variance reduced gradient (SVRG) is shown in Fig. 3.

When calculating the full gradient, each data retransmission increases 1 to the iteration number, which means that the controller skips one round of computation until all error information is completely transmitted. Take the optimal step that the three methods can converge.

When \(\gamma =0.9\), Fig. 3a shows that the SVRG-based ILC converges slightly faster than the GD- and SGD-based ILC. When \(\gamma =0.6\), Fig. 3b illustrates a significant difference in the convergence speed of the three types ILC, from fast to slow for SVRG-, SGD-, and GD-based ILC. In summary, the SVRG-based ILC under error data dropouts, i.e., Algorithm 2, outperforms the GD- and SGD-based ILC under different successful transmission rates, and the difference becomes more significant as the \(\gamma \) decreases.

4 Model-Free SVRG-Based ILC for MIMO Systems

This section extends the Algorithm 1 in Sect. 2 from SISO systems to MIMO systems. Firstly, a system description of the discrete linear MIMO system is given. Secondly, the existing model-free data-driven methods are introduced, and a new model-free data-driven ILC based on SVRG method is constructed. Thirdly, the convergence of the algorithm under non-strongly convex conditions is proved. Finally, numerical simulations are established to verify the convergence performance of SVRG-based ILC in deterministic and noisy systems.

4.1 System Description

Consider the following discrete linear multi-input multi-ouput (MIMO) system \( \mathscr {J}\), which has q inputs \(u_{k}^{1}, u_{k}^{2}, \ldots , u_{k}^{q}\), and p outputs \(y_{k}^{1}, y_{k}^{2}, \ldots , y_{k}^{p}\). Rewrite the system in the form of (2),

where

Each \(J_{i j} \in \mathbb {R}^{N \times N}\) has the same properties as matrix H in (2). \(y_{k}^{i}=\left[ y_{k}^{i }(1), \ldots , y_{k}^{i}(N)\right] ^{T}\), \(u_{k}^{j}=\left[ u_{k}^{j}(0), \ldots , u_{k}^{j}(N-1)\right] ^{T}\), N is the length of time, and the desired trajectory is

For this system, consider the following assumptions:

Assumption 4

System matrix \(\mathscr {J} \ne 0\).

Assumption 5

The dimension of input signal does not exceed the dimension of output signal, i.e., \(p \ge q\).

Remark 6

If \(p=q=1\), the system (19) degenerates to SISO system (2). Unlike Assumptions 1 and 4 can no longer guarantee that the system matrix \(\mathscr {J}\) is of full rank. Assumption 4 is the most fundamental, since if \(\mathscr {J} = 0\), any input signal cannot track \(y_{d}\). Assumption 2 is also relaxed from the system, the reasons will be given in the proof of the convergence. In addition, Assumption 5 is added for the MIMO system, because if the input dimension is larger than the output dimension, it means that there is a redundant information.

For desired trajectory \(\boldsymbol{y}_d \), consider control objective similar to (6), i.e., to find a sequence \(\left\{ \boldsymbol{u}_k \right\} \), s.t.

where \(\boldsymbol{u}^{*}\) is the input when G takes the minimum value, and the error signal \(\boldsymbol{e}_k \) is

4.2 Algorithm Design

The full gradient of G in (20) is \(\nabla _{k}=\nabla G\left( \boldsymbol{u}_k \right) =-\frac{1}{p} \mathscr {J}^{T}\left( \boldsymbol{y}_d -\mathscr {J} \boldsymbol{u}_k \right) \). We need the information of \(\mathscr {J}^{T}\) to calculate the full gradient. However, in model-free learning, we want to obtain the gradient by conducting experiments on system \(\mathscr {J}\) only. For this purpose, Oomen et al. (2014) gives the following method to estimate \(\mathscr {J}^{T}\).

Lemma 1

For SISO system \(\mathscr {J}=J_{11}\), its transpose \(\mathscr {J}^{T}\) can be obtained by matrix multiplication

where \(\mathcal {T}\) is the N-order permutation matrix whose anti-diagonal is 1, i.e.,

Therefore the full gradient of SISO system \(-\frac{1}{p}\left( J_{11}\right) ^{T} \boldsymbol{e}_k =-\frac{1}{p} \mathcal {T}_{N} J_{11} \mathcal {T}_{N} \boldsymbol{e}_k \) can be obtained by a single experiment.

Lemma 2

For MIMO system \(\mathscr {J}\), whose transpose \(\mathscr {J}^{T}\) is

For symmetric MIMO systems, \(-\tilde{\mathscr {J}} \ne \mathcal {J}\), so the full gradient \(-\frac{1}{p} \mathscr {J}^{T} \boldsymbol{e}_k \) of MIMO system cannot be obtained from a single experiment on system \( \mathscr {J}\). The method proposed by Oomen et al. (2014) estimates \(\mathscr {J}^{T}\) from pq experiments:

where \(\mathcal {L}^{i j}\) is a matrix consisting of \(q \times p\) blocks. In \(\mathcal {L}^{i j}\), the (i, j) block is unit matrix of order N, and the remaining blocks are all 0:

In (21), the left multiplication matrix \(\mathcal {L}^{i j}\) takes the i-th row of \(\mathscr {J}\), and the right multiplication matrix \(\mathcal {L}^{i j}\) takes out the j-th column of \(\mathscr {J}\). The two multiplications lead to a great loss of system information. We would like to improve the above method by extracting as much system information as possible. Therefore, consider the following decomposition as (9).

Set \(g_{i}\left( \boldsymbol{u}_k \right) =\frac{1}{2}\left\| e_{k}^{i}\right\| ^{2}=\frac{1}{2}\left\| y_{d}^{i}-\sum _{j=1}^{q} J_{i j} u_{k}^{j}\right\| ^{2}\), then (20) can be written as

Taking gradient on the both sides, we have

where

Note that the \(\nabla g_{i}\left( \boldsymbol{u}_k \right) \) can be calculate by one line of the system matrix. The following lemma can help us design a controller to take out this information.

Lemma 3

Calculating \(\nabla g_{i}\left( \boldsymbol{u}_k \right) \) only needs a single experiment.

Proof

First, we note that

where \(\mathcal {T}_{i}^{p N} \in \mathbb {R}^{N \times p N}\) is the matrix whose i-th block is \(\mathcal {T}_{N}\) and the rest blocks are 0.

For \(e_{k}^{i} \in \mathbb {R}^{N}\),

where \(\mathscr {L}_{i} \in \mathbb {R}^{N \times q N}\) is the matrix whose i-th block is an identity matrix of order N and the rest blocks are 0.

The matrix multiplication method can retrieve a row of information of the system, but it cannot directly obtain the matrix for further computation. Therefore, simple and easy-to-implement linear mappings are considered to change the matrix to suitable dimension.

Set \(E_{k}^{i} \in \mathbb {R}^{q N \times q}\) and define a linear mapping \({\Psi }\):

where \(E_{k}^{i}\) is the matrix blocked by \(N\times 1\), with \(e_{k}^{i}\) on the diagonal and 0 in the rest of the blocks.

Since

we can define the linear map \(\Phi \): \(\mathbb {R}^{N \times q} \rightarrow \mathbb {R}^{q N}\). It maps matrix in \(\mathbb {R}^{N \times q}\) to \(\mathbb {R}^{q N}\) by arranging each column of the matrix in order to a vector, i.e.,

Combining above, we have

Thus, \(\nabla g_{i}\left( \boldsymbol{u}_k \right) \) can be calculated in a single experiment. \(\square \)

Based on Lemma 3, a controller can be designed as shown in Fig. 4.

This controller can reduce the calculation of the full gradient in (21) from pq experiments to p experiments. However, when the system is noisy, there is no guarantee that the partial gradient estimated for each experiment \(\nabla g_{i}\left( \boldsymbol{u}_k \right) \) all correspond to the same full gradient \(\nabla G\left( \boldsymbol{u}_k \right) \). In nosiy systems, we can use the random gradient \(\tilde{\nabla }_{k}\), which takes values uniformly \(\left\{ \nabla g_{i}\left( \boldsymbol{u}_k \right) \right\} _{i=1}^{N}\) s.t. \(P\left( \tilde{\nabla }_{k}=\nabla g_{i}\left( \boldsymbol{u}_k \right) \right) =\frac{1}{N}\). However, the SGD method converges slowly. Combining the convergence speed and the effect of noise in the system, we consider to design a SVRG-based ILC algorithm similar to Algorithm 1, as shown in Algorithm 3.

Algorithm 3 uses Option II in Algorithm 1 to update the “snapshot”. If m is twice as many as p, a total of 2p experiments are required for each internal iteration. p experiments are required to compute the full gradient, so a total of 3p system experiments are required for each iteration. Compared with the SGD-based ILC, Algorithm 3 requires p more systematic experiments per m iterations to compute the full gradient in order to accelerate the convergence.

4.3 Convergence Analysis

From the following two propositions, we will see that G is not necessarily strongly convex.

Proposition 4

G and \(g_{i}\) are convex and L-smooth.

Proof

We note that

where \(J_{i}=\left[ J_{i 1} J_{i 2} \ldots J_{i q}\right] , \boldsymbol{x} \in \mathbb {R}^{q N}. g_{i}\) is convex, and

Let \(L=\max _{i}\left\{ \sum _{j=1}^{q} \lambda _{i j}\right\} \), where \(\lambda _{i j}\) is the maximum eigenvalue of \(J_{i j}^{T} J_{i j}\). Since \(J_{i j}^{T} J_{i j}\) is always semipositive definite, \(\lambda _{i j}=0\) if and only if \(J_{i j}=0\). Hence by Assumption 4, \(L>0\), \(g_{i}\) is convex and L-smooth.

Since G is a convex combination of \(g_{i}\), G is convex and L-smooth. \(\square \)

When \(p=q=1, \mathscr {J}=J_{11}={\text {diag}}\{1,0, \ldots , 0\}\), G is not strong convex. The following proposition gives a sufficient condition for G to be strongly convex.

Proposition 5

If the system matrix \(\mathscr {J}\) is of full column rank, then G is \(\sigma \)-strongly convex.

Proof

By Assumption 5, \(p \ge q\), and

Since \(\mathscr {J}^{T} \mathscr {J}\) is positive definite if and only if \(\mathscr {J}\) has full column rank. Therefore by Theorem 2.2, \(G\left( u_{k}\right) \) is \(\sigma \)-strongly convex when \(\mathscr {J}\) has full column rank, and the strong convexity factor is \(\frac{1}{2 p}\) of the minimum eigenvalue of matrix \(\mathscr {J}^{T} \mathscr {J}\). \(\square \)

When G is strongly convex, we can prove that Algorithm 3 converges linearly similar to Algorithm 1 (Bottou et al., 2018). The convergence proof of Algorithm 3 under the strongly convex condition is omitted because of limited space. We only give the convergence proof under non-strongly convex.

First we have the following lemma (Reddi et al., 2016).

Lemma 4

Assume that \(c_{k}, c_{k+1}, \beta >0\),

If \(\eta , \beta \) and \(c_{k+1}\) are chosen such that

then each iteration of Algorithm 3 has an upper bound

where \(R_{s, k} \triangleq \mathbb {E}\left[ G\left( \boldsymbol{u_{s, k}}\right) +c_{t}\left\| \boldsymbol{u_{s, k}}-\tilde{\boldsymbol{u}}^s\right\| ^{2}\right] \).

Proof

Since \(g_{i}\) is L-smooth,

For fixed s, let \(\boldsymbol{w}_k =\nabla g_{i}\left( \boldsymbol{u_{s,k}}\right) -\nabla g_{i}\left( \tilde{\boldsymbol{u}}^s\right) +\boldsymbol{\mu }_s \), then \(\mathbb {E}\left[ \boldsymbol{w}_k \right] =\nabla G\left( \boldsymbol{u_{s,k}}\right) \). Since \(\boldsymbol{u_{s, k+1}}-\boldsymbol{u_{s,k}}=-\eta \boldsymbol{w}_k \), we use the above equation and take the expectation on both sides to obtain

In addition, for \(\left\| \boldsymbol{u_{s, k+1}}-\tilde{\boldsymbol{u}}^s\right\| ^{2}\) , we have

In the above, we have used Young’s inequality \(\langle x, y\rangle \le \frac{1}{2 \beta }\Vert x\Vert ^{2}+\frac{\beta }{2}\Vert y\Vert ^{2}\).

For \(\mathbb {E}\left[ \left\| \boldsymbol{w_{k}}\right\| ^{2}\right] \), we have the following estimation:

where the inequality is given by \(\Vert a+b\Vert ^{2} \le 2\Vert a\Vert ^{2}+2\Vert b\Vert ^{2}\), \(\mathbb {E}\left[ \Vert \zeta -\mathbb {E} \zeta \Vert ^{2}\right] =\mathbb {E}\Vert \zeta \Vert ^{2}-\Vert \mathbb {E} \zeta \Vert ^{2} \le \mathbb {E}\Vert \zeta \Vert ^{2}\) and the smoothness of \(g_{i}\), i.e., \(\Vert \nabla g_{i}\left( \boldsymbol{u}_{s,k} \right) -\) \(\nabla g_{i}\left( \tilde{\boldsymbol{u}}^s\right) \Vert \le \Vert \boldsymbol{u_{s,k}}-\tilde{\boldsymbol{u}}^s \Vert ^{2}\).

Denoting \(R_{s, k} \triangleq \mathbb {E}\left[ G\left( \boldsymbol{u_{s,k}}\right) +c_{k}\left\| \boldsymbol{u_{s,k}}-\tilde{\boldsymbol{u}}^s\right\| ^{2}\right] \), by (24) and (25), we have

From (26), we have

Let \(\textrm{T}_{k} \triangleq \left( \eta -\frac{c_{k+1} \eta }{\beta }-\eta ^{2} L-2 c_{k+1} \eta ^{2}\right) , \textrm{T}_{k}>0\), then

\(\square \)

Because of the complexity of non-strongly convex problem, we do not consider convergence criteria in Theorems 3 and 4 such as \(\mathbb {E}\left[ G(\boldsymbol{u})-G\left( \boldsymbol{u}^{*}\right) \right] \le \varepsilon \), but instead proving \(\mathbb {E}\left[ \Vert \nabla G(\boldsymbol{u})\Vert ^{2}\right] \le \varepsilon \) for Algorithm 3. Note that if G is \(\sigma \)-strongly convex, it is easy to verify that

Thus by \(\mathbb {E}\left[ \Vert \nabla G(\boldsymbol{u})\Vert ^{2}\right] \le \varepsilon \), we have \(\mathbb {E}\left[ G(\boldsymbol{u})-G\left( \boldsymbol{u}^{*}\right) \right] \le \varepsilon \). However, this relationship does not always hold under non-strongly convex case (Ghadimi & Lan, 2013). The following theorem gives the proof of the convergence \(\mathbb {E}\left[ \Vert \nabla G(\boldsymbol{u})\Vert ^{2}\right] \le \varepsilon \) of Algorithm 3.

Theorem 5

Suppose each \(g_{i}\) is convex and L-smooth, and G is convex. For \(0 \le k \le m-1\), \(c_{k}, c_{k+1}, \beta >0, c_{m}=0\) satisfying

and \(\eta \), \(\beta \), \(c_{k+1}\) are chosen such that

Let \(\tau _{m}=\min _{k} \mathrm {~T}_{k}, \boldsymbol{u}_a \) be a uniformly distributed random vector with values \(\{\boldsymbol{u}_{s,k} \mid 0 \le s \le S-1,0 \le k \le m -1\}\). Denote that \(\boldsymbol{u}^{*}={\text {argmin}}_{\boldsymbol{u}} G(\boldsymbol{u})\), then Algorithm 3 satisfies

Proof

We take \(k=0,1, \ldots , m-1\) in Lemma 1 and sum up to obtain

By the definition of \(R_{s, k}\), we choose \(\boldsymbol{u}_{s, 0}=\tilde{\boldsymbol{u}}^s, \boldsymbol{u_{s, m}}=\tilde{\boldsymbol{u}}^{s+1}\) in \(\tilde{\boldsymbol{u}}^s\). We take \(s=0,1, \ldots , S-1\) in the above and sum up to get

In the above, use the definition of \(\boldsymbol{u}_a \) and \(G\left( \tilde{\boldsymbol{u}}^s\right) \ge G\left( \boldsymbol{u}^{*}\right) \). \(\square \)

Remark 7

Theorem 5 shows that the convergence of Algorithm 3 is O(1/Sm) under non-strongly convex conditions. The convergence of the corresponding SGD-based ILC under non-strongly convex conditions is \(O(1 / \sqrt{S m})\) (Johnson & Zhang (2013)). Moreover, the theorem states that the convergence of Algorithm 3 is only related to the step size \(\eta \) but not to the choice of the number of iterations m. Since the system information is unknown, \(\eta \) needs to be estimated by experiment.

Remark 8

Theorem 5 indicates that \(G\left( \boldsymbol{u}_k \right) \) can approach the optimal value \(G\left( \boldsymbol{u}^{*}\right) =(1 / 2 p) \Vert \boldsymbol{y}_d -\) \(\mathscr {J} \boldsymbol{u}^{*} \Vert ^{2}\), i.e., \(\boldsymbol{u}_k \) can converge to the optimal input \(\boldsymbol{u}^{*}\). Similarly, the Assumption 2 can also be changed to \(\lim _{k \rightarrow \infty } F\left( u_{k}\right) =F\left( u^{*}\right) \) without affecting the convergence of Algorithm 1 and Algorithm 2. If \(F\left( u^{*}\right) =0\), then \(\lim _{k \rightarrow \infty } F\left( u_{k}\right) =0\).

Remark 9

System (21) degenerates to SISO system when both input and output are one dimension. At this point, the theorem indicates that when system (2) satisfies Assumption 4 (Assumption 1 need not to be satisfied), Algorithm 1 and Algorithm 2 still converge with updating the “snapshot” as Option II. But the convergence rate becomes O(1/Sm) when the objective function is not strongly convex.

4.4 Numerical Simulation

We take MIMO systems with \(21\times 21\) input-output dimensions randomly generated by the Matlab drss function (Aarnoudse & Oomen, 2021) and set the time length to \(N=42\). The desired trajectory \(\boldsymbol{y}_d \) is 0.025 in each dimension. Model-free ILC based on full gradient (GD), stochastic gradient (SGD), and stochastic variance reduced gradient (SVRG) are performed in Fig. 5. We take the optimal step of each algorithm after multiple experiments.

As shown in Fig. 5a, the GD- and SVRG-based ILC converge similarly for deterministic systems, and both are faster than the SGD-based ILC algorithm.

For randomly generated MIMO systems with input-output dimensions of \(21\times 21\) and \(N=42\), we add Gaussian white noise to the system.

From Fig. 5b, we can see that both GD- and SGD-based ILC converge worse than SVRG type ILC when the system is noisy, and SVRG-based ILC can still maintain excellent convergence when the system is noisy.

5 Conclusions

This chapter focuses on exploring ILC based on the SVRG method. Firstly, Sect. 2 gives the basic framework of SVRG-based ILC and proves that the algorithm converges at a rate of \( O(\alpha ^k)\) under smooth and strongly convex condition. Secondly, Sect. 3 designs a SVRG-based ILC algorithm to solve random error data dropouts and proves that the algorithm converges linearly. Finally, Sect. 4 constructs a model-free SVRG-based ILC by improving the existing model-free algorithm for MIMO systems and proves that the convergence rate is O(1/k) under smooth and convex condition. Compared to the GD- and SGD-based ILC, two numerical simulations in Sects. 3 and 4 verify that the SVRG-based ILC has superior convergence rate in both the random error dropouts and model-free contexts, respectively.

It should be noted that the SVRG-based ILC framework given in this chapter is not only applicable to the random error dropouts and model-free problems but can also be utilized to solve other error or system information deficient problems by properly decomposing the control objectives. Future research includes comparing its advantages and disadvantages with the stochastic approximation (SA) method, extending the framework to other information deficient problems, and attempting to develop algorithms with faster convergence based on this framework.

References

Aarnoudse, L., & Oomen, T. (2020). Model-free learning for massive MIMO systems: Stochastic approximation Adjoint iterative learning control. IEEE Control Systems Letters,5(6), 1946–1951.

Aarnoudse, L., & Oomen,T. (2021). Conjugate gradient MIMO iterative learning control using data-driven stochastic gradients. In 2021 60th IEEE Conference on Decision and Control (CDC) (pp. 3749–3754).

Allen-Zhu, Z. (2018). Katyusha: The first direct acceleration of stochastic gradient methods. Journal of Machine Learning Research, 18, 1–51.

Arimoto, S., Kawamura, S., & Miyazaki, F. (1984). Bettering operation of robots by learning. The Journal of Intelligent and Robotic Systems, 1(2), 123–140.

Bottou, L., Curtis, F. E., & Nocedal, J. (2018). Optimization methods for large-scale machine learning. SIAM Review,60(2), 223–311.

Ghadimi, S., & Lan, G. (2013). Stochastic first- and zeroth-order methods for nonconvex stochastic programming. SIAM Journal on Optimization,23, 2341–2368.

Gu, P., Tian, S., & Chen, Y. (2019). Iterative learning control based on Nesterov accelerated gradient method. IEEE Access,7, 115836–115842.

Johnson, R., & Zhang, T. (2013). Accelerating stochastic gradient descent using predictive variance reduction. Advances in Neural Information Processing Systems,1, 315–323.

Nesterov, Y. (2005). Introductory lectures on convex programming volume: A basic course (Vol. I). Kluwer Academic Publishers.

Oomen, T., van der Maas, R., Rojas, C. R., & Hjalmarsson, H. (2014). Iterative data-driven H-infinity norm estimation of multivariable systems with application to robust active vibration isolation. IEEE Transactions on Control Systems Technology,22(6), 2247–2260.

Owens, D. H., Hatonen, J. J., & Daley, S. (2009). Robust monotone Radient-based discrete-time iterative learning control. The International Journal of Robust and Nonlinear Control,19(6), 634–661.

Reddi, J. S., Hefny, A., Sra, S., Poczos, B., & Smola, A. (2016). Stochastic variance reduction for nonconvex optimization. In Proceedings of the 33rd International Conference on International Conference on Machine Learning (Vol. 48, pp. 314–323).

Shen, D. (2018). Iterative learning control with incomplete information: A survey. IEEE/CAA Journal of Automatica Sinica, 5(5), 885–901.

Yang, X., & Ruan, X. (2017). Reinforced gradient-type iterative learning control for discrete linear time-invariant systems with parameters uncertainties and external noises. IMA Journal of Mathematical Control and Information,34(4), 1117–1133.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (62173333), Beijing Natural Science Foundation (Z210002), and Research Fund of Renmin University of China (2021030187).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Gao, Y., Shen, D., Qian, J. (2023). Iterative Learning Control Based on Random Variance Reduction Gradient Method. In: Zheng, Z. (eds) Proceedings of the Second International Forum on Financial Mathematics and Financial Technology. IFFMFT 2021. Financial Mathematics and Fintech. Springer, Singapore. https://doi.org/10.1007/978-981-99-2366-3_5

Download citation

DOI: https://doi.org/10.1007/978-981-99-2366-3_5

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-2365-6

Online ISBN: 978-981-99-2366-3

eBook Packages: Economics and FinanceEconomics and Finance (R0)