Abstract

Image restoration under sparsity constraints has received increased attention in recent years. This problem can be formulated as a nondifferentiable convex optimization problem whose solution is challenging. In this work, the non-differentiability of the objective is addressed by reformulating the image restoration problem as a nonnegatively constrained quadratic program which is then solved by a specialized Newton projection method where the search direction computation only requires matrix-vector operations. A comparative study with state-of-the-art methods is performed in order to illustrate the efficiency and effectiveness of the proposed approach.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

This work is concerned with the general problem of image restoration under sparsity constraints formulated as

where \(\mathbf {A}\) is a \({m\times n}\) real matrix (usually \(m\le n\)), \(\mathbf {x}\in \mathbb {R}^{n}\), \(\mathbf {b}\in \mathbb {R}^{m}\) and \(\lambda \) is a positive parameter. (Throughout the paper, \(\Vert \cdot \Vert \) will denote the Euclidean norm). In some image restoration applications, \(\mathbf {A}=\mathbf {K}\mathbf {W}\) where \(\mathbf {K}\in \mathbb {R}^{m\times n}\) is a discretized linear operator and \(\mathbf {W}\in \mathbb {R}^{n\times n}\) is a transformation matrix from a domain where the image is a priori known to have a sparse representation. The variable \(\mathbf {x}\) contains the coefficients of the unknown image and the data \(\mathbf {b}\) is the measurements vector which is assumed to be affected by Gaussian white noise intrinsic to the detection process. The formulation (1) is usually referred to as synthesis formulation since it is based on the synthesis equation of the unknown image from its coefficients \(\mathbf {x}\).

The penalization of the \(\ell _1\)-norm of the coefficients vector \(\mathbf {x}\) in (1) simultaneously favors sparsity and avoids overfitting. For this reason, sparsity constrained image restoration has received considerable attention in the recent literature and has been successfully used in various areas. The efficient solution of problem (1) is a critical issue since the nondifferentiability of the \(\ell _1\)-norm makes standard unconstrained optimization methods unusable. Among the current state-of-the-art methods there are gradient descent-type methods as TwIST [5], SparSA [14], FISTA [2] and NESTA [3]. GPSR [6] is a gradient-projection algorithm for the equivalent convex quadratic program obtained by splitting the variable \(\mathbf {x}\) in its positive and negative parts. Fixed-point continuation methods [9], as well as methods based on Bregman iterations [7] and variable splitting, as SALSA [1], have also been recently proposed. In [12], the classic Newton projection method is used to solve the bound-constrained quadratic program formulation of (1) obtained by splitting \(\mathbf {x}\). A Modified Newton projection (MNP) method has been recently proposed in [11] for the analysis formulation of the \(\ell _1\)-regularized least squares problem where \(\mathbf {W}\) is the identity matrix and \(\mathbf {x}\) represents the image itself. The MNP method uses a fair regularized approximation to the Hessian matrix so that products of its inverse and vectors can be computed at low computational cost. As a result, the only operations required for the search direction computation are matrix-vector products.

The main contribution of this work is to extend the MNP method of [11], developed for the case \(\mathbf {W}=\mathbf {I}_n\), to the synthesis formulation of problem (1) where \(\mathbf {W}\ne \mathbf {I}_n\). In the proposed approach, problem (1) is firstly formulated as a nonnegatively constrained quadratic programming problem by splitting the variable \(\mathbf {x}\) into the positive and negative parts. Then, the quadratic program is solved by a special purpose MNP method where a fair regularized approximation to the Hessian matrix is proposed so that products of its inverse and vectors can be computed at low computational cost. As a result, the search direction can be efficiently obtained. The convergence of the proposed MNP method is analyzed. Even if the size of the problem is doubled, the low computational cost per iteration and less iterative steps make MNP quite efficient. The performance of MNP is evaluated on several image restoration problems and is compared with that of some state-of-the-art methods. The results of the comparative study show that MNP is competitive and in some cases is outperforming some state-of-the-art methods in terms of computational complexity and achieved accuracy.

The rest of the paper can be outlined as follows. In Sect. 2, the quadratic program formulation of (1) is derived. The MNP method is presented and its convergence is analyzed in Sect. 3. In this section, the efficient computation of the search direction is also discussed. In Sect. 4, the numerical results are presented. Conclusions are given in Sect. 5.

2 Nonnegatively Constrained Quadratic Program Formulation

The proposed approach firstly needs to reformulate (1) as a nonnegatively constrained quadratic program (NCQP). The NCQP formulation is obtained by splitting the variable \(\mathbf {x}\) into its positive and negative parts [6], i.e.

Problem (1) can be written as the following NCQP:

where \(\mathbf {1}\) denotes the n-dimensional column vector of ones. The gradient \(\mathbf {g}\) and Hessian \(\mathbf {H}\) of \(\mathcal {F}(\mathbf {u},\mathbf {v})\) are respectively defined by

We remark that the computation of the objective function and its gradient values requires only one multiplication by \(\mathbf {A}\) and one by \(\mathbf {A}^H\), nevertheless the double of the problem size. Since \(\mathbf {H}\) is positive semidefinite, we propose to approximate it with the positive definite matrix \(\mathbf {H}_\tau \):

where \(\tau \) is a positive parameter and \(\mathbf {I}\) is the identity matrix of size n.

Proposition 21

Let \(\sigma _1,\sigma _2,\ldots ,\sigma _n\) be the nonnegative eigenvalues of \(\mathbf {A}\) in nonincreasing order:

Then, \(\mathbf {H}_\tau \) is a positive definite matrix whose eigenvalues are

The proof is immediate since the spectrum of \(\mathbf {H}_\tau \) is the union of the spectra of \(\mathbf {A}^H\mathbf {A}+\tau \mathbf {I}+\mathbf {A}^H\mathbf {A}\) and \(\mathbf {A}^H\mathbf {A}+\tau \mathbf {I}-\mathbf {A}^H\mathbf {A}\).

The following proposition shows that an explicit formula for the inverse of \(\mathbf {H}_\tau \) can be derived.

Proposition 22

The inverse of the matrix \(\mathbf {H}_\tau \) is the matrix \(\mathbf {M}_\tau \) defined as

where

Proof

We have

Similarly, we can prove that

We now show that \(\mathbf {M}_1\mathbf {M}_2=\mathbf {M}_2\mathbf {M}_1\). We have

After some simple algebra, it can be proved that

and thus

From (8), (9) and (10), it follows

3 The Modified Newton Projection Method

The Algorithm. The Newton projection method [4] for problem (2) can be written as

where \([\cdot ]^+\) denotes the projection on the positive orthant, \(\mathbf {g}_\mathbf {u}^{(k)}\) and \(\mathbf {g}_\mathbf {v}^{(k)}\) respectively indicate the partial derivatives of \(\mathcal {F}\) with respect to \(\mathbf {u}\) and \(\mathbf {v}\) at the current iterate. The scaling matrix \(\mathbf {S}^{(k)}\) is a partially diagonal matrix with respect to the index set \(\mathcal {A}^{(k)}\) defined as

and \(\varepsilon \) is a small positive parameter.

The step-length \(\alpha ^{(k)}\) is computed with the Armijo rule along the projection arc [4]. Let \(\mathbf {E}^{(k)}\) and \(\mathbf {F}^{(k)}\) be the diagonal matrices [13] such that

In MNP, we propose to define the scaling matrix \(\mathbf {S}^{(k)}\) as

Therefore, the complexity of computation of the search direction \(\mathbf {p}^{(k)}=\mathbf {S}^{(k)}\mathbf {g}^{(k)}\) is mainly due to one multiplication of the inverse Hessian approximation \(\mathbf {M}_\tau \) with a vector since matrix-vector products involving \(\mathbf {E}^{(k)}\) and \(\mathbf {F}^{(k)}\) only extracts some components of the vector and do not need not to be explicitly performed. We remark that, in the Newton projection method proposed in [4], the scaling matrix is \(\mathbf {S}^{(k)}=\left( \mathbf {E}^{(k)}\mathbf {H}_\tau \mathbf {E}^{(k)}+\mathbf {F}^{(k)}\right) ^{-1}\) which requires to extract a submatrix of \(\mathbf {H}_\tau \) and then to invert it.

Convergence Analysis. As proved in [4], the convergence of Newton-type projection methods can be proved under the general following assumptions which basically require the scaling matrices \(\mathbf {S}^{(k)}\) to be positive definite matrices with uniformly bounded eigenvalues.

- A1:

-

The gradient \(\mathbf {g}\) is Lipschitz continuous on each bounded set of \(\mathbb {R}^{2n}\).

- A2:

-

There exist positive scalars \(c_1\) and \(c_2\) such that

$$\begin{aligned} c_1\Vert \mathbf {y}\Vert ^2 \le \mathbf {y}^H \mathbf {S}^{(k)} \mathbf {y} \le c_2 \Vert \mathbf {y}\Vert ^2, \; \forall \mathbf {y}\in \mathbb {R}^{2n}, \; k=0,1,\ldots \end{aligned}$$

The key convergence result is provided in Proposition 2 of [4] which is restated here for the shake of completeness.

Proposition 31

[4, Proposition 2] Let \(\{[\mathbf {u}^{(k)},\mathbf {v}^{(k)}]\}\) be a sequence generated by iteration (11) where \(\mathbf {S}^{(k)}\) is a positive definite symmetric matrix which is diagonal with respect to \(\mathcal {A}^{(k)}\) and \(\alpha ^k\) is computed by the Armijo rule along the projection arc. Under assumptions A1 and A2 above, every limit point of a sequence \(\{[\mathbf {u}^{(k)},\mathbf {v}^{(k)}]\}\) is a critical point with respect to problem (2).

Since the objective \(\mathcal {F}\) of (2) is twice continuously differentiable, it satisfies assumption A1. From Propositions 21 and 22, it follows that \(\mathbf {M}_\tau \) is a symmetric positive definite matrix and hence, the scaling matrix \(\mathbf {S}^{(k)}\) defined by (12) is a positive definite symmetric matrix which is diagonal with respect to \(\mathcal {A}^{(k)}\). The global convergence of the MNP method is therefore guaranteed provided \(\mathbf {S}^{(k)}\) verifies assumption A2.

Proposition 32

Let \(\mathbf {S}^{(k)}\) be the scaling matrix defined as \( \mathbf {S}^{(k)}=\mathbf {E}^{(k)}\mathbf {H}_\tau \mathbf {E}^{(k)}+\mathbf {F}^{(k)}. \) Then, there exist two positive scalars \(c_1\) and \(c_2\) such that

Proof

Proposition 21 implies that the largest and smallest eigenvalue of \(\mathbf {M}_\tau \) are respectively \({1}/{\tau }\) and \(1/(2\sigma _1+\tau )\), therefore

We have \( \mathbf {y}^H \mathbf {S}^{(k)} \mathbf {y}= \big ( \mathbf {E}^{(k)}\mathbf {y}\big )^H \mathbf {M}_\tau \big ( \mathbf {E}^{(k)}\mathbf {y}\big ) + \mathbf {y}^H\mathbf {F}^{(k)}\mathbf {y} . \) From (13) it follows that

Moreover we have \( \mathbf {y}^H\mathbf {F}^{(k)}\mathbf {y} = \sum _{i\in \mathcal {A}^{(k)}} y_i^2\), \(\Vert \mathbf {E}^{(k)}\mathbf {y}\Vert ^2 = \sum _{i\notin \mathcal {A}^{(k)}} y_i^2 \); hence:

and

The thesis follows by setting \(c_1=\min \{\frac{1}{2\sigma _1+\tau },1\}\) and \(c_2=\max \{\frac{1}{\tau },1\}\).

Computing the Search Direction. We suppose that \(\mathbf {K}\) is the matrix representation of a spatially invariant convolution operator with periodic boundary conditions so that \(\mathbf {K}\) is a block circulant with circulant blocks (BCCB) matrix and matrix-vector products can be efficiently performed via the FFT. Moreover, we assume that the columns of \(\mathbf {W}\) form an orthogonal basis for which fast sparsifying algorithms exist, such as a wavelet basis, for example. Under these assumptions, \(\mathbf {A}\) is a full and dense matrix but the computational cost of matrix-vector operations with \(\mathbf {A}\) and \(\mathbf {A}^H\) is relatively cheap. As shown by (12), the computation of the search direction \(\mathbf {p}^{(k)}=\mathbf {S}^{(k)}\mathbf {g}^{(k)}\) requires the multiplication of a vector by \(\mathbf {M}_\tau \). Let \( \begin{bmatrix} \mathbf {z}, \mathbf {w} \end{bmatrix} \in \mathbb {R}^{2n}\) be a given vector, then it immediately follows that

Formula (14) needs the inversion of \(2\mathbf {A}^H\mathbf {A}+\tau \mathbf {I}\). Our experimental results indicate that the search direction can be efficiently and effectively computed as follows. Using the Sherman-Morrison-Woodbury formula, we obtain

Substituting (15) and (16) in (14), we obtain

Since \(\mathbf {K}\) is BCCB, it is diagonalized by the Discrete Fourier Transform (DFT), i.e. \(\mathbf {K} = \mathbf {U}^H\mathbf {D}\mathbf {U}\) where \(\mathbf {U}\) denotes the unitary matrix representing the DFT and \(\mathbf {D}\) is a diagonal matrix. Thus, we have:

Substituting (19) and (20) in (17) and (18), we obtain

Equations (14), (21) and (22) show that, at each iteration, the computation of the search direction \(\mathbf {p}^{(k)}\) requires two products by \(\mathbf {W}\), two products by \(\mathbf {W}^H\), two products by \(\mathbf {U}\) and two products by \(\mathbf {U}^H\). The last products can be performed efficiently by using the FFT algorithm.

4 Numerical Results

In this section, we present the numerical results of some image restoration test problems. The numerical experiments aim at illustrating the performance of MNP compared with some state-of-the-art methods as SALSA [1], CGIST [8], the nonmonotonic version of GPSR [6], and the Split Bregman method [7]. Even if SALSA has been shown to outperform GPSR [1], we consider GPSR in our comparative study since it solves, as MNP, the quadratic program (2). The Matlab source code of the considered methods, made publicly available by the authors, has been used in the numerical experiments. The numerical experiments have been executed in Matlab R2012a on a personal computer with an Intel Core i7-2600, 3.40 GHz processor.

The numerical experiments are based on the well-known Barbara image (Fig. 1), whose size is \(512\times 512\) and whose pixels have been scaled into the range between 0 and 1. In our experiments, the matrix \(\mathbf {W}\) represents an orthogonal Haar wavelet transform with four levels. For all the considered methods, the initial iterate \(\mathbf {x}^{(0)}\) has been chosen as \(\mathbf {x}^{(0)}=\mathbf {W}^H\mathbf {b}\); the regularization parameter \(\lambda \) has been heuristically chosen. In MNP, the parameter \(\tau \) of the Hessian approximation has been fixed at \(\tau = 100\lambda \). This value has been fixed after a wide experimentation and has been used in all the presented numerical experiments.

The methods iteration is terminated when the relative distance between two successive objective values becomes less than a tolerance \(tol_\phi \). A maximum number of 100 iterations has been allowed for each method.

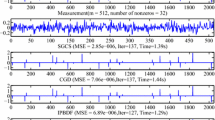

MSE (left column) and obiective function (right column) histories of MNP (blue solid line), SALSA (magenta dashed line), GPSR (red dotted line), CGIST (black dashdotted line) and Split Bregman (cyan dashdotted line) methods. Top line: Guassian blur; bottom line: out-of-focus blur. The noise level is NL = \(1.5\cdot 10^{-2}\). (Color figure online)

In the first experiment, the Barbara image has been convolved with a Gaussian PSF with variance equal to 2, obtained with the code psfGauss from [10], and then, the blurred image has been corrupted by Gaussian noise with noise level equal to \(7.5\cdot 10^{-3}\) and \(1.5\cdot 10^{-2}\). (The noise level \(\text {NL}\) is defined as \(\text {NL}:={\Vert \varvec{\eta }\Vert }\big /{\Vert \mathbf {A}\mathbf {x}_\text {original}\Vert }\) where \(\mathbf {x}_\text {original}\) is the original image and \(\varvec{\eta }\) is the noise vector.) In the second experiment, the Barbara image has been corrupted by out-of-focus blur, obtained with the code psfDefocus from [10] and by Gaussian noise with noise level equal to \(2.5\cdot 10^{-3}\) and \(7.5\cdot 10^{-3}\). The degraded images and the MNP restorations are shown in Fig. 1 for \(\text {NL}=7.5\cdot 10^{-3}\). Table 1 reports the Mean Squared Error (MSE) values, the objective function values and the CPU times in seconds obtained by using the stopping tolerance values \(tol_\phi =10^{-1},10^{-2},10^{-3}\). In Fig. 2, the MSE behavior and the decreasing of the objective function versus time (in seconds) are illustrated.

The reported numerical results indicate that MNP is competitive with the considered state-of-the-art and, in terms of MSE reduction, MNP reaches the minimum MSE value very early.

5 Conclusions

In this work, the MNP method has been proposed for sparsity constrained image restoration. In order to gain low computational complexity, the MNP method uses a fair approximation of the Hessian matrix so that the search direction can be computed efficiently by only using FFTs and fast sparsifying algorithms. The results of numerical experiments show that MNP may be competitive with some state-of-the-art methods both in terms of computational efficiency and accuracy.

References

Afonso, M.V., Bioucas-Dias, J.M., Figueiredo, M.A.T.: Fast image recovery using variable splitting and constrained optimization. IEEE Trans. Image Process. 19, 2345–2356 (2010)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Becker, S., Bobin, J., Candès, E.J.: NESTA: a fast and accurate first-order method for sparse recovery. SIAM J. Imaging Sci. 4(1), 1–39 (2011)

Bertsekas, D.: Projected Newton methods for optimization problem with simple constraints. SIAM J. Control Optim. 20(2), 221–245 (1982)

Bioucas-Dias, J., Figueiredo, M.: A new twist: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 16(12), 299173004 (2007)

Figueiredo, M.A.T., Nowak, R.D., Wright, S.J.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1, 586–597 (2007)

Goldstein, T., Osher, S.: The split Bregman method for L1 regularized problems. Siam J. Imaging Sci. 2(2), 323–343 (2009)

Goldstein, T., Setzer, S.: High-order methods for basis pursuit. Technical report CAM report 10–41, UCLA (2010)

Hale, E.T., Yin, W., Zhang, Y.: Fixed-point continuation for L1-minimization: methodology and convergence. SIAM J. Optim. 19, 1107–1130 (2008)

Hansen, P.C., Nagy, J., O’Leary, D.P.: Deblurring Images. Matrices, Spectra and Filtering. SIAM, Philadelphia (2006)

Landi, G.: A modified Newton projection method for \(\ell _1\)-regularized least squares image deblurring. J. Math. Imaging Vis. 51(1), 195–208 (2015)

Schmidt, M., Kim, D., Sra, S.: Projected Newton-type methods in machine learning. In: Optimization for Machine Learning. MIT Press (2011)

Vogel, C.R.: Computational Methods for Inverse Problems. SIAM, Philadelphia (2002)

Wright, S., Nowak, R., Figueiredo, M.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 2479172493 (2009)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 IFIP International Federation for Information Processing

About this paper

Cite this paper

Landi, G. (2016). Sparsity Constrained Image Restoration: An Approach Using the Newton Projection Method. In: Bociu, L., Désidéri, JA., Habbal, A. (eds) System Modeling and Optimization. CSMO 2015. IFIP Advances in Information and Communication Technology, vol 494. Springer, Cham. https://doi.org/10.1007/978-3-319-55795-3_32

Download citation

DOI: https://doi.org/10.1007/978-3-319-55795-3_32

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-55794-6

Online ISBN: 978-3-319-55795-3

eBook Packages: Computer ScienceComputer Science (R0)