Abstract

Our objective in this chapter is to examine the distributions of the eigenvalues and eigenvectors associated with a matrix-variate random variable. For instance, the distributions of the determinant and the trace of such a matrix are available from the distributions of simple functions of its eigenvalues. Moreover, several statistical quantities are associated with eigenvalues or eigenvectors. In order to delve into such problems, we present additional properties of the matrix-variate gamma and beta distributions previously introduced in Chap. 5. The nonsingular and singular cases are considered, both in the real and complex domains. Particular attention is paid to the distribution of the eigenvalues and eigenvectors of a Wishart or gamma matrix.



8.1. Introduction

We will utilize the same notations as in the previous chapters. Lower-case letters x, y, … will denote real scalar variables, whether mathematical or random. Capital letters X, Y, … will be used to denote real matrix-variate mathematical or random variables, whether square or rectangular matrices are involved. A tilde will be placed on top of letters such as \(\tilde {x},\tilde {y},\tilde {X},\tilde {Y}\) to denote variables in the complex domain. Constant matrices will for instance be denoted by A, B, C. A tilde will not be used on constant matrices unless the point is to be stressed that the matrix is in the complex domain. Other notations will remain unchanged.

Our objective in this chapter is to examine the distributions of the eigenvalues and eigenvectors associated with a matrix-variate random variable. Letting W be such a p × p matrix-variate random variable, its determinant is the product of its eigenvalues and its trace is their sum. Accordingly, the distributions of the determinant and the trace of W are available from the distributions of simple functions of its eigenvalues. Moreover, several statistical quantities are associated with eigenvalues or eigenvectors. In order to delve into such problems, we will require certain additional properties of the matrix-variate gamma and beta distributions previously introduced in Chap. 5. As a preamble to the study of the distributions of eigenvalues and eigenvectors, these properties are examined in the next subsections for both the real and complex cases.

8.1.1. Matrix-variate gamma and beta densities, real case

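Let W 1 and W 2 be statistically independently distributed p × p real matrix-variate gamma random variables whose respective parameters are (α 1, B) and (α 2, B) with \(\Re (\alpha _j)>\frac {p-1}{2},~ j=1,2,\) their common scale parameter matrix B being a real positive definite constant matrix. Then, the joint density of W 1 and W 2, denoted by f(W 1, W 2), is the following:
\[ f(W_1,W_2)=\frac{|B|^{\alpha_1+\alpha_2}}{\Gamma_p(\alpha_1)\Gamma_p(\alpha_2)}\,|W_1|^{\alpha_1-\frac{p+1}{2}}\,|W_2|^{\alpha_2-\frac{p+1}{2}}\,\mathrm{e}^{-\mathrm{tr}(B(W_1+W_2))} \tag{8.1.1} \]
for \(W_1>O,~W_2>O,~B>O,~\Re(\alpha_j)>\frac{p-1}{2},~j=1,2\), and zero elsewhere.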

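Consider the transformations
\[ U_1=(W_1+W_2)^{-\frac{1}{2}}W_1(W_1+W_2)^{-\frac{1}{2}}\quad\text{and}\quad U_2=W_2^{-\frac{1}{2}}W_1W_2^{-\frac{1}{2}}, \tag{8.1.2} \]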

which are matrix-variate counterparts of the changes of variables \(u_1=\frac {w_1}{w_1+w_2}\) and \( u_2=\frac {w_1}{w_2}\) in the real scalar case, that is, for p = 1. Note that the square roots in (8.1.2) are symmetric positive definite matrices. Then, we have the following result:

Theorem 8.1.1

When the real matrices U 1 and U 2 are as defined in (8.1.2), then U 1 is distributed as a real matrix-variate type-1 beta variable with the parameters (α 1, α 2) and U 2 , as a real matrix-variate type-2 beta variable with the parameters (α 1, α 2). Further, U 1 and U 3 = W 1 + W 2 are independently distributed, with U 3 having a real matrix-variate gamma distribution with the parameters (α 1 + α 2, B).

Proof

Given the joint density of W 1 and W 2 specified in (8.1.1), consider the transformation (W 1,W 2) → (U 3 = W 1 + W 2, U = W 1). On observing that its Jacobian is equal to one, the joint density of U 3 and U, denoted by f 1(U 3, U), is obtained as

where

Noting that

we now let \(U_1=U_3^{-\frac {1}{2}}UU_3^{-\frac {1}{2}}\) for fixed U 3, so that \(\mathrm {d}U_1=|U_3|{ }^{-\frac {p+1}{2}}\mathrm {d}U\). Accordingly, the joint density of U 3 and U 1, denoted by f 2(U 3, U 1), is the following, observing that U 1 is as defined in (8.1.2) with W 1 = U and U 3 = W 1 + W 2:

for \(\Re (\alpha _1)>\frac {p-1}{2},~ \Re (\alpha _2)>\frac {p-1}{2},~ U_3>O,~ O<U_1<I\), and zero elsewhere. On multiplying and dividing (iii) by Γ p(α 1 + α 2), it is seen that U 1 and U 3 = W 1 + W 2 are independently distributed as their joint density factorizes into the product of two densities g(U 1) and g 1(U 3), that is, f 2(U 1, U 3) = g(U 1)g 1(U 3) where

for \(\Re (\alpha _1)>\frac {p-1}{2}, ~\Re (\alpha _2)>\frac {p-1}{2},\) and zero elsewhere, is a real matrix-variate type-1 beta density with the parameters (α 1, α 2), and

for \(B>O,~ \Re (\alpha _1+\alpha _2)>\frac {p-1}{2}\), and zero elsewhere, which is a real matrix-variate gamma density with the parameters (α 1 + α 2, B). Thus, given two independently distributed p × p real positive definite matrices W 1 and W 2, where W 1 ∼ gamma (α 1, B), \(~B>O,~\Re (\alpha _1)>\frac {p-1}{2},\) and \(W_2\sim \,\mbox{gamma}\,(\alpha _2,~B), ~B>O,~ \Re (\alpha _2)>\frac {p-1}{2}\), one has U 3 = W 1 + W 2 ∼gamma (α 1 + α 2, B), B > O.

In order to determine the distribution of U 2, we first note that the exponent in (8.1.1) is \(\mathrm {tr}(B(W_1+W_2))=\mathrm {tr}[B^{\frac {1}{2}}W_1B^{\frac {1}{2}}+B^{\frac {1}{2}}W_2B^{\frac {1}{2}}]\). Let \(V_j=B^{\frac {1}{2}}W_jB^{\frac {1}{2}}\), so that \(\mathrm {d}V_j=|B|{ }^{\frac {p+1}{2}}\mathrm {d}W_j\), j = 1, 2. This transformation eliminates B, and the resulting joint density of V 1 and V 2, denoted by f 3(V 1, V 2), is

for \(\Re (\alpha _j)>\frac {p-1}{2},~j=1,2\), and zero elsewhere. Now, noting that \(\mathrm {tr}[V_1+V_2]=\mathrm {tr}[V_2^{\frac {1}{2}}(I+V_2^{-\frac {1}{2}}V_1V_2^{-\frac {1}{2}})V_2^{\frac {1}{2}}]\), let \(V=V_2^{-\frac {1}{2}}V_1V_2^{-\frac {1}{2}}\), that is, U 2 of (8.1.2), so that \(\mathrm {d}V=|V_2|{ }^{-\frac {p+1}{2}}\mathrm {d}V_1\) for fixed V 2. The joint density of V and V 2 = V 3, denoted by f 4(V, V 3), is then obtained as

where \(\mathrm {tr}[V_3^{\frac {1}{2}}(I+V)V_3^{\frac {1}{2}}]\) was replaced by \( \mathrm {tr}[(I+V)^{\frac {1}{2}}V_3(I+V)^{\frac {1}{2}}]\). It then suffices to integrate out V 3 from the joint density specified in (8.1.6) by making use of a real matrix-variate gamma integral, to obtain the density of V = U 2 that follows:

for \(\Re (\alpha _j)>\frac {p-1}{2},~j=1,2\), and zero elsewhere, which is a real matrix-variate type-2 beta density whose parameters are (α 1, α 2). This completes the proof.
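The Jacobians used in this proof, \(\mathrm{d}U_1=|U_3|^{-\frac{p+1}{2}}\mathrm{d}U\) and \(\mathrm{d}V_j=|B|^{\frac{p+1}{2}}\mathrm{d}W_j\), are both instances of a general rule: the linear map X ↦ AXA′ on p × p real symmetric matrices has Jacobian \(|A|^{p+1}\). The minimal numerical sketch below checks this rule. It assumes NumPy, and the helper names `sym_basis`, `vech` and `congruence_jacobian` are ours, for illustration only. The sketch writes the map as a matrix acting on the p(p+1)/2 distinct elements of X and compares its determinant with \(|U_3|^{-\frac{p+1}{2}}\) for \(A=U_3^{-\frac{1}{2}}\).

```python
import numpy as np

def sym_basis(p):
    """Basis E_ij (i <= j) of the p(p+1)/2-dimensional space of real symmetric matrices."""
    basis = []
    for i in range(p):
        for j in range(i, p):
            E = np.zeros((p, p))
            E[i, j] = E[j, i] = 1.0
            basis.append(E)
    return basis

def vech(X):
    """Distinct elements x_ij, i <= j, of a symmetric matrix, in the same order as sym_basis."""
    return X[np.triu_indices(X.shape[0])]

def congruence_jacobian(A):
    """Determinant of the linear map X -> A X A' restricted to symmetric X."""
    cols = [vech(A @ E @ A.T) for E in sym_basis(A.shape[0])]
    return np.linalg.det(np.column_stack(cols))

rng = np.random.default_rng(1)
p = 3
G = rng.standard_normal((p, p))
U3 = G @ G.T + p * np.eye(p)                    # a generic positive definite matrix
w, V = np.linalg.eigh(U3)
U3_inv_sqrt = V @ np.diag(w ** -0.5) @ V.T      # symmetric positive definite U3^{-1/2}

lhs = congruence_jacobian(U3_inv_sqrt)          # Jacobian of U -> U3^{-1/2} U U3^{-1/2}
rhs = np.linalg.det(U3) ** (-(p + 1) / 2)       # |U3|^{-(p+1)/2}
print(lhs, rhs)                                 # the two numbers agree
```

The same function applied to \(A=B^{\frac{1}{2}}\) gives the factor \(|B|^{\frac{p+1}{2}}\) in \(\mathrm{d}V_j\).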

8.1a. Matrix-variate Gamma and Beta Densities, Complex Case

Parallel results can be obtained in the complex domain. If \(\tilde {W}_1\) and \(\tilde {W}_2\) are statistically independently distributed p × p Hermitian positive definite matrices having complex matrix-variate gamma densities with the parameters \((\alpha _1,~\tilde {B})\) and \((\alpha _2,~\tilde {B}),~ \tilde {B}=\tilde {B}^{*}>O\), where an asterisk designates a conjugate transpose, then their joint density, denoted by \(\tilde {f}(\tilde {W}_1,\,\tilde {W}_2)\), is given by

for \(\tilde {B}>O,~ \tilde {W}_1>O,~\tilde {W}_2>O,~\Re (\alpha _j)>p-1,~ j=1,2\), and zero elsewhere, with \(|\mathrm {det}(\tilde {W}_j)|\) denoting the absolute value or modulus of the determinant of \(\tilde {W}_j\). Since the derivations are similar to those provided in the previous subsection for the real case, the next results will be stated without proof. Note that, in the complex domain, the square roots involved in the transformations are Hermitian positive definite matrices.

Theorem 8.1a.1

Let the p × p Hermitian positive definite matrices \(\tilde {W}_1\) and \(\tilde {W}_2\) be independently distributed as complex matrix-variate gamma variables with the parameters \((\alpha _1,~\tilde {B})\) and \((\alpha _2,~\tilde {B}), \ \tilde {B}=\tilde {B}^{*}>O,\) respectively. Letting \(\tilde {U}_3=\tilde {W}_1+\tilde {W}_2\), \(\tilde{U}_1=\tilde{U}_3^{-\frac{1}{2}}\tilde{W}_1\tilde{U}_3^{-\frac{1}{2}}\) and \(\tilde{U}_2=\tilde{W}_2^{-\frac{1}{2}}\tilde{W}_1\tilde{W}_2^{-\frac{1}{2}}\),

then (1): \(\tilde {U}_3\) is distributed as a complex matrix-variate gamma with the parameters \((\alpha _1+\alpha _2,~\tilde {B}),~\tilde {B}=\tilde {B}^{*}>O,~\Re (\alpha _1+\alpha _2)>p-1\) ; (2): \(\tilde {U}_1\) and \(\tilde {U}_3\) are independently distributed; (3): \(\tilde {U}_1\) is distributed as a complex matrix-variate type-1 beta random variable with the parameters (α 1, α 2); (4): \(\tilde {U}_2\) is distributed as a complex matrix-variate type-2 beta random variable with the parameters (α 1, α 2).

8.1.2. Real Wishart matrices

Since Wishart matrices are distributed as matrix-variate gamma variables whose parameters are \(\alpha _j=\frac {m_j}{2},~ m_j\ge p,\) and \( B=\frac {1}{2}\varSigma ^{-1}, ~\varSigma >O\), we have the following corollaries in the real and complex cases:

Corollary 8.1.1

Let the p × p real positive definite matrices W 1 and W 2 be independently Wishart distributed, W j ∼ W p(m j, Σ), j = 1, 2, with m j ≥ p degrees of freedom and common parameter matrix Σ > O, their respective densities being given by

and zero elsewhere. Then (1): U 3 = W 1 + W 2 is Wishart distributed with m 1 + m 2 degrees of freedom and parameter matrix Σ > O, that is, U 3 ∼ W p(m 1 + m 2, Σ), Σ > O; (2): \(U_1=(W_1+W_2)^{-\frac {1}{2}}W_1(W_1+W_2)^{-\frac {1}{2}}=U_3^{-\frac {1}{2}}W_1U_3^{-\frac {1}{2}}\) is a real matrix-variate type-1 beta random variable with the parameters (α 1, α 2), that is, \(U_1\sim \mathit{\mbox{type-1 beta }}(\frac {m_1}{2},~\frac {m_2}{2})\) ; (3): U 1 and U 3 are independently distributed; (4): \(U_2=W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\) is a real matrix-variate type-2 beta random variable with the parameters (α 1, α 2), that is, \(U_2\sim \mathit{\mbox{type-2 beta }}(\frac {m_1}{2},~\frac {m_2}{2})\).
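A quick Monte Carlo sketch can make Corollary 8.1.1 concrete. It assumes Σ = I, which, as noted at the beginning of Sect. 8.2, entails no loss of generality. The sketch simulates \(W_j=X_j'X_j\), where X j is an m j × p matrix of independent standard normal variables. It then forms U 3 and U 1 and compares sample averages with values implied by the corollary:

- \(E[\mathrm{tr}\,U_3]=(m_1+m_2)p\);
- \(E[U_1]=\frac{m_1}{m_1+m_2}I\), which follows from \(W_1=U_3^{\frac{1}{2}}U_1U_3^{\frac{1}{2}}\), \(E[W_1]=m_1I\), the independence of U 1 and U 3, and the orthogonal invariance of U 1.

A near-zero sample correlation between tr U 1 and tr U 3 is consistent with their independence, though it does not prove it. The sample size and degrees of freedom are arbitrary choices, and `sym_power` is an illustrative helper of ours.

```python
import numpy as np

def sym_power(S, power):
    """S**power for a stack of symmetric positive definite matrices, via eigendecomposition."""
    w, V = np.linalg.eigh(S)
    return (V * w[..., None, :] ** power) @ np.swapaxes(V, -1, -2)

rng = np.random.default_rng(0)
N, p, m1, m2 = 20000, 3, 5, 7           # replications, order, degrees of freedom (arbitrary)

# W_j = X_j' X_j with X_j an (m_j x p) standard normal matrix, so that W_j ~ W_p(m_j, I)
X1 = rng.standard_normal((N, m1, p))
X2 = rng.standard_normal((N, m2, p))
W1 = np.swapaxes(X1, -1, -2) @ X1
W2 = np.swapaxes(X2, -1, -2) @ X2

U3 = W1 + W2                            # should be W_p(m1 + m2, I)
S = sym_power(U3, -0.5)                 # symmetric U3^{-1/2}
U1 = S @ W1 @ S                         # should be type-1 beta (m1/2, m2/2)

tr_U1 = np.trace(U1, axis1=1, axis2=2)
tr_U3 = np.trace(U3, axis1=1, axis2=2)
mean_U1 = U1.mean(axis=0)
corr = np.corrcoef(tr_U1, tr_U3)[0, 1]

print(np.round(mean_U1, 3))             # close to m1/(m1+m2) * I = 0.4167 * I
print(tr_U3.mean(), (m1 + m2) * p)      # close to each other
print(corr)                             # close to 0
```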

The corresponding results for the complex case are parallel with identical numbers of degrees of freedom, m 1 and m 2, and parameter matrix \(\tilde {\varSigma }=\tilde {\varSigma }^{*}>O\) (Hermitian positive definite). Properties associated with type-1 and type-2 beta variables hold as well in the complex domain. Consider for instance the following results which are also valid in the complex case. If U is a type-1 beta variable with the parameters (α 1, α 2), then I − U is a type-1 beta variable with the parameters (α 2, α 1) and \((I-U)^{-\frac {1}{2}}U(I-U)^{-\frac {1}{2}}\) is a type-2 beta variable with the parameters (α 1, α 2).

8.2. Some Eigenvalues and Eigenvectors, Real Case

Observe that when X, a p × p real positive definite matrix, has a real matrix-variate gamma density with the parameters \((\alpha ,~ B),~ B>O, ~\Re (\alpha )>\frac {p-1}{2}\), then \(Z=B^{\frac {1}{2}}XB^{\frac {1}{2}}\) has a real matrix-variate gamma density with the parameters (α, I) where I is the identity matrix. The corresponding result for a Wishart matrix is the following: if W is a real Wishart matrix having m degrees of freedom and Σ > O as its parameter matrix, that is, W ∼ W p(m, Σ), Σ > O, m ≥ p, then \(Z=\varSigma ^{-\frac {1}{2}}W\varSigma ^{-\frac {1}{2}} \sim W_p(m,~I),~ m\ge p\), that is, Z is a Wishart matrix having m degrees of freedom and I as its parameter matrix. Now, consider the roots of the determinantal equation \(|\hat {W}_1-\lambda \hat {W}_2|=0\) where \(\hat {W}_1\sim W_p(m_1,~\varSigma )\) and \( \hat {W}_2\sim W_p(m_2,~\varSigma ),~ \varSigma >O, ~m_j\ge p,~j=1,2,\) are independently distributed. Then \(W_1=\varSigma ^{-\frac {1}{2}}\hat {W}_1\varSigma ^{-\frac {1}{2}}\) and \(W_2=\varSigma ^{-\frac {1}{2}}\hat {W}_2\varSigma ^{-\frac {1}{2}}\) are also independently distributed, with \(W_j\sim W_p(m_j,~I)\), and
\[ |W_1-\lambda W_2|=|\varSigma^{-\frac{1}{2}}(\hat{W}_1-\lambda\hat{W}_2)\varSigma^{-\frac{1}{2}}|=|\varSigma|^{-1}\,|\hat{W}_1-\lambda\hat{W}_2|. \]

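Thus, the roots of |W 1 − λW 2| = 0 and \(|\hat {W}_1-\lambda \hat {W}_2|=0\) are identical. Hence, without any loss of generality, whenever independently distributed Wishart matrices sharing a common parameter matrix are involved, one need only consider the roots of |W 1 − λW 2| = 0 where W j ∼ W p(m j, I), m j ≥ p, j = 1, 2. Observe that
\[ |W_1-\lambda W_2|=|W_2^{\frac{1}{2}}|\,|W_2^{-\frac{1}{2}}W_1W_2^{-\frac{1}{2}}-\lambda I|\,|W_2^{\frac{1}{2}}|=0\;\Leftrightarrow\;|W_2^{-\frac{1}{2}}W_1W_2^{-\frac{1}{2}}-\lambda I|=0, \]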

which means that λ is an eigenvalue of \(W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\) when \(W_j\overset {ind}{\sim } W_p(m_j,~I),~j=1,2\). If Y j is an eigenvector corresponding to the eigenvalue λ j, it must satisfy the equation

Let the eigenvalues λ j’s be distinct so that λ 1 > λ 2 > ⋯ > λ p. In fact, it can be shown that Pr{λ i = λ j} = 0 for all i≠j, that is, the eigenvalues are distinct almost surely. When the eigenvalues of \(W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\) are distinct, the eigenvectors are orthogonal since \(W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\) is symmetric. Thus, in this case, there exists a set of p linearly independent, mutually orthogonal eigenvectors. Let Y 1, …, Y p be a set of mutually orthonormal eigenvectors and let Y = (Y 1, …, Y p) be the p × p matrix whose columns are these normalized eigenvectors. Our aim is to determine the joint density of Y and λ 1, …, λ p, and thereby the marginal densities of Y and of λ 1, …, λ p. To this end, we will need the Jacobian provided in the next theorem. For its derivation and connection to other Jacobians, the reader is referred to Mathai (1997).
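The reduction described above can be checked numerically before turning to the Jacobian. The sketch below assumes SciPy is available. It draws \(\hat{W}_j\sim W_p(m_j,\varSigma)\) for an arbitrary Σ > O and obtains the roots of \(|\hat{W}_1-\lambda\hat{W}_2|=0\) as generalized symmetric eigenvalues via `scipy.linalg.eigh(a, b)`. It then compares those roots with the ordinary eigenvalues of \(W_2^{-\frac{1}{2}}W_1W_2^{-\frac{1}{2}}\), where \(W_j=\varSigma^{-\frac{1}{2}}\hat{W}_j\varSigma^{-\frac{1}{2}}\). Dimensions, seed and the helper `sym_power` are illustrative choices.

```python
import numpy as np
from scipy.linalg import eigh

def sym_power(S, power):
    """S**power for a symmetric positive definite matrix S."""
    w, V = np.linalg.eigh(S)
    return V @ np.diag(w ** power) @ V.T

rng = np.random.default_rng(2)
p, m1, m2 = 4, 6, 9
A = rng.standard_normal((p, p))
Sigma = A @ A.T + np.eye(p)             # an arbitrary positive definite Sigma
L = np.linalg.cholesky(Sigma)           # Sigma = L L'

# hat W_j = L X_j' X_j L' ~ W_p(m_j, Sigma)
X1 = rng.standard_normal((m1, p))
X2 = rng.standard_normal((m2, p))
W1_hat = L @ X1.T @ X1 @ L.T
W2_hat = L @ X2.T @ X2 @ L.T

# Roots of |W1_hat - lambda W2_hat| = 0, in ascending order
roots = eigh(W1_hat, W2_hat, eigvals_only=True)

# Standardize by Sigma^{-1/2}, then take the eigenvalues of W2^{-1/2} W1 W2^{-1/2}
S = sym_power(Sigma, -0.5)
W1, W2 = S @ W1_hat @ S, S @ W2_hat @ S
R = sym_power(W2, -0.5)
eig_T = np.linalg.eigvalsh(R @ W1 @ R)  # ascending order

print(roots)
print(eig_T)                            # the same values
```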

Theorem 8.2.1

Let Z be a p × p real symmetric matrix whose elements, apart from those determined by symmetry, are distinct real scalar variables, and let its eigenvalues, which are real owing to its symmetry, be distinct and nonzero, with λ 1 > λ 2 > ⋯ > λ p. Let D = diag(λ 1, …, λ p), dD = dλ 1 ∧… ∧dλ p, and let P be a unique orthonormal matrix such that PP′ = I, P′P = I, and Z = PDP′. Then, after integrating out the differential element of P over the full orthogonal group O p, we have
\[ \mathrm{d}Z=\frac{\pi^{\frac{p^2}{2}}}{\Gamma_p(\frac{p}{2})}\Big[\prod_{i<j}(\lambda_i-\lambda_j)\Big]\mathrm{d}D. \]

Corollary 8.2.1

Let g(Z) be a symmetric function of the p × p real symmetric matrix Z—symmetric function in the sense that g(AB) = g(BA) whenever AB and BA are defined, even if AB≠BA. Let the eigenvalues of Z be distinct such that λ 1 > λ 2 > ⋯ > λ p , D = diag(λ 1, …, λ p) and dD = dλ 1 ∧… ∧dλ p . Then,

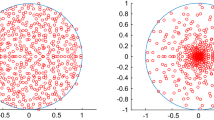
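For numerical work, the constant \(\pi^{\frac{p^2}{2}}/\Gamma_p(\frac{p}{2})\) of Theorem 8.2.1 and Corollary 8.2.1 can be computed with `scipy.special.multigammaln`. That function returns \(\ln\Gamma_p(a)\) for the real multivariate gamma function \(\Gamma_p(a)=\pi^{\frac{p(p-1)}{4}}\Gamma(a)\Gamma(a-\frac{1}{2})\cdots\Gamma(a-\frac{p-1}{2})\).

The sketch below also checks the corollary for p = 2 with the illustrative choice \(g(Z)=\mathrm{e}^{-\mathrm{tr}(Z^2)/2}\); this choice is not taken from the text. Since \(\mathrm{tr}(Z^2)=z_{11}^2+z_{22}^2+2z_{12}^2\), integrating directly over the three distinct elements of Z gives \(2\pi\sqrt{\pi}\). The eigenvalue side is integrated numerically over ∞ > λ 1 > λ 2 > −∞.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import multigammaln

def real_jacobian_constant(p):
    """pi^{p^2/2} / Gamma_p(p/2), Gamma_p being the real multivariate gamma function."""
    return np.exp((p * p / 2) * np.log(np.pi) - multigammaln(p / 2, p))

print([real_jacobian_constant(p) for p in (1, 2, 3)])   # approximately 1, pi, 2*pi**2

# Corollary 8.2.1 with p = 2 and g(Z) = exp(-tr(Z^2)/2)
# Left side: integral over z11, z22, z12 of exp(-(z11^2 + z22^2 + 2 z12^2)/2)
lhs = 2 * np.pi * np.sqrt(np.pi)
# Right side: the constant times the integral of g(D)(lambda_1 - lambda_2) over lambda_1 > lambda_2
inner, _ = dblquad(lambda l2, l1: (l1 - l2) * np.exp(-(l1**2 + l2**2) / 2),
                   -np.inf, np.inf, lambda l1: -np.inf, lambda l1: l1)
rhs = real_jacobian_constant(2) * inner
print(lhs, rhs)                                          # the two sides agree
```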

Example 8.2.1

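Consider a p × p real matrix X having a matrix-variate gamma density with shape parameter \(\alpha =\frac {p+1}{2}\) and scale parameter matrix I, whose density is
\[ f(X)=\frac{1}{\Gamma_p(\frac{p+1}{2})}\,\mathrm{e}^{-\mathrm{tr}(X)},\quad X>O, \]
and zero elsewhere.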

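A variable having this density is also said to follow the real p × p matrix-variate exponential distribution. Let p = 2 and denote the eigenvalues of X by ∞ > λ 1 > λ 2 > 0. It follows from Corollary 8.2.1 that the joint density of λ 1 and λ 2, denoted by f 1(D), with D = diag(λ 1, λ 2), is given by
\[ f_1(D)=\frac{\pi^{2}}{\Gamma_2(1)\,\Gamma_2(\frac{3}{2})}\,(\lambda_1-\lambda_2)\,\mathrm{e}^{-(\lambda_1+\lambda_2)},\quad \infty>\lambda_1>\lambda_2>0, \]
and zero elsewhere.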

Verify that f 1(D) is a density.

Solution 8.2.1

Since f 1(D) is nonnegative, it suffices to show that the total integral is equal to 1. Excluding the constant part, the integral to be evaluated is the following:

Let us now compute the constant part:

Since the product of (i) and (ii) gives 1, f 1(D) is indeed a density.
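Solution 8.2.1 can also be confirmed by numerical quadrature. The sketch below evaluates the constant \(\pi^2/(\Gamma_2(1)\Gamma_2(\frac{3}{2}))\) through `scipy.special.multigammaln` and integrates f 1(D) over ∞ > λ 1 > λ 2 > 0 with `scipy.integrate.dblquad`. It is a sanity check only, not a substitute for the exact evaluation above.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import multigammaln

p = 2
# pi^{p^2/2} / (Gamma_p(p/2) * Gamma_p((p+1)/2)), with alpha = (p+1)/2 and B = I
const = np.exp((p * p / 2) * np.log(np.pi)
               - multigammaln(p / 2, p) - multigammaln((p + 1) / 2, p))

def f1(l2, l1):                       # dblquad passes the inner variable (lambda_2) first
    return const * (l1 - l2) * np.exp(-(l1 + l2))

# Region infinity > lambda_1 > lambda_2 > 0
total, err = dblquad(f1, 0, np.inf, lambda l1: 0.0, lambda l1: l1)
print(const, total)                   # const is 2, total is 1 up to quadrature error
```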

Example 8.2.2

Consider a p × p matrix having a real matrix-variate type-1 beta density with the parameters \(\alpha =\frac {p+1}{2},~\beta =\frac {p+1}{2}\), whose density, denoted by f(X), is
\[ f(X)=\frac{\Gamma_p(p+1)}{[\Gamma_p(\frac{p+1}{2})]^2},\quad O<X<I, \]

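and zero elsewhere. This density f(X) is also referred to as a real p × p matrix-variate uniform density. Let p = 2 and the eigenvalues of X be 1 > λ 1 > λ 2 > 0. Then, the density of D = diag(λ 1, λ 2), denoted by f 1(D), is
\[ f_1(D)=\frac{\Gamma_2(3)}{[\Gamma_2(\frac{3}{2})]^2}\,\frac{\pi^{2}}{\Gamma_2(1)}\,(\lambda_1-\lambda_2),\quad 1>\lambda_1>\lambda_2>0, \]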

and zero elsewhere. Verify that f 1(D) is a density.

Solution 8.2.2

The constant part simplifies as follows:

Let us now consider the functional part of the integrand:

As the product of (i) and (ii) equals 1, it is verified that f 1(D) is a density.
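The same verification can be sketched numerically. The constant \(\Gamma_2(3)\pi^2/([\Gamma_2(\frac{3}{2})]^2\Gamma_2(1))\) is computed via `scipy.special.multigammaln`, which returns the logarithm of the real multivariate gamma function. The functional part is then integrated over 1 > λ 1 > λ 2 > 0.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import multigammaln

p = 2
# Gamma_p(p+1) / Gamma_p((p+1)/2)^2 * pi^{p^2/2} / Gamma_p(p/2)
log_const = (multigammaln(p + 1, p) - 2 * multigammaln((p + 1) / 2, p)
             + (p * p / 2) * np.log(np.pi) - multigammaln(p / 2, p))
const = np.exp(log_const)

# Region 1 > lambda_1 > lambda_2 > 0; the integrand is const * (lambda_1 - lambda_2)
total, err = dblquad(lambda l2, l1: const * (l1 - l2), 0, 1, lambda l1: 0.0, lambda l1: l1)
print(const, total)                   # const is 6, total is 1
```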

Example 8.2.3

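Consider a p × p matrix having a matrix-variate gamma density with shape parameter α and scale parameter matrix I. Let D = diag(λ 1, …, λ p) where ∞ > λ 1 > λ 2 > ⋯ > λ p > 0 are the eigenvalues of that matrix. The joint density of the λ j’s, or the density of D, is then available as
\[ f_1(D)=\frac{\pi^{\frac{p^2}{2}}}{\Gamma_p(\frac{p}{2})\,\Gamma_p(\alpha)}\Big[\prod_{j=1}^{p}\lambda_j^{\alpha-\frac{p+1}{2}}\Big]\mathrm{e}^{-(\lambda_1+\cdots+\lambda_p)}\prod_{i<j}(\lambda_i-\lambda_j), \]
and zero elsewhere.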

Even when α is specified, the integrals of f 1(D) will generally be expressible only in terms of incomplete gamma functions or confluent hypergeometric series, simple closed forms being obtainable only for certain values of α and p. Verify that f 1(D) is a density for \(\alpha =\frac {7}{2}\) and p = 2.

Solution 8.2.3

For those values of p and α, we have

whose integral over ∞ > λ 1 > λ 2 > 0 is the sum of (i) and (ii):

that is,

Now, consider the constant part:

The product of (iii) and (iv) giving 1, this verifies that f 1(D) is a density when p = 2 and \(\alpha =\frac {7}{2}\).
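Although closed forms are only available for special values of α, numerical quadrature confirms the normalization for any admissible α when p = 2. The sketch below assumes the density of Example 8.2.3 with p = 2, whose constant is \(\pi^2/(\Gamma_2(1)\Gamma_2(\alpha))=4/15\) at \(\alpha=\frac{7}{2}\). The additional values of α are arbitrary illustrative choices.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import multigammaln

def total_mass(alpha):
    """Integral of f1(D) over infinity > lambda_1 > lambda_2 > 0 for p = 2."""
    p = 2
    const = np.exp((p * p / 2) * np.log(np.pi)
                   - multigammaln(p / 2, p) - multigammaln(alpha, p))
    e = alpha - (p + 1) / 2
    f1 = lambda l2, l1: const * (l1 * l2) ** e * (l1 - l2) * np.exp(-(l1 + l2))
    return dblquad(f1, 0, np.inf, lambda l1: 0.0, lambda l1: l1)[0]

# The constant for alpha = 7/2 and p = 2
const_72 = np.exp(2 * np.log(np.pi) - multigammaln(1.0, 2) - multigammaln(3.5, 2))
print(const_72)                       # 4/15

for alpha in (3.5, 2.0, 4.75):
    print(alpha, total_mass(alpha))   # each total is close to 1
```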

8.2a. The Distributions of Eigenvalues in the Complex Case

The complex counterpart of Theorem 8.2.1 is stated next.

Theorem 8.2a.1

Let \(\tilde {Z}\) be a p × p Hermitian matrix with distinct real nonzero eigenvalues λ 1 > λ 2 > ⋯ > λ p, and let D = diag(λ 1, …, λ p) and dD = dλ 1 ∧… ∧dλ p. Let \(\tilde {Q}\) be a p × p unique unitary matrix, \(\tilde {Q}\tilde {Q}^{*}=I,~ \tilde {Q}^{*}\tilde {Q}=I\), such that \(\tilde {Z}=\tilde {Q}D\tilde {Q}^{*}\) where an asterisk designates the conjugate transpose. Then, after integrating out the differential element of \(\tilde {Q}\) over the full orthogonal group \(\tilde {O}_p\), we have
\[ \mathrm{d}\tilde{Z}=\frac{\pi^{p(p-1)}}{\tilde{\Gamma}_p(p)}\Big[\prod_{i<j}(\lambda_i-\lambda_j)^2\Big]\mathrm{d}D. \tag{8.2a.1} \]

Note 8.2a.1

When the unitary matrix \(\tilde {Q}\) has real diagonal elements, the integral of the differential element over the full orthogonal group \(\tilde {O}_p\) is the following:
\[ \int_{\tilde{O}_p}\tilde{h}(\tilde{Q})=\frac{\pi^{p(p-1)}}{\tilde{\Gamma}_p(p)}, \tag{8.2a.2} \]

where \(\tilde {h}(\tilde {Q})=\wedge [({\mathrm{{d}}}\tilde {Q})\tilde {Q}^*]\); the reader may refer to Theorem 4.4 and Corollary 4.3.1 of Mathai (1997) for details. If all the elements comprising \(\tilde {Q}\) are complex, then the numerator in (8.2a.2) will be \(\pi ^{p^2}\) instead of \(\pi^{p(p-1)}\). When unitary transformations are made on Hermitian matrices such as \(\tilde {Z}\) in Theorem 8.2a.1, the diagonal elements in the unitary matrix \(\tilde {Q}\) are real and hence the numerator in (8.2a.2) remains \(\pi^{p(p-1)}\) in this case.

Note 8.2a.2

A corollary parallel to Corollary 8.2.1 also holds in the complex domain.
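In the complex case, the constant \(\pi^{p(p-1)}/\tilde{\Gamma}_p(p)\) involves the complex multivariate gamma function \(\tilde{\Gamma}_p(a)=\pi^{\frac{p(p-1)}{2}}\Gamma(a)\Gamma(a-1)\cdots\Gamma(a-p+1)\). SciPy has no dedicated routine for it, so the sketch below assembles it from `scipy.special.gammaln`. The helper names are ours.

The sketch then checks the complex analogue of Corollary 8.2.1 for p = 2 with the illustrative choice \(g(\tilde{Z})=\mathrm{e}^{-\mathrm{tr}(\tilde{Z}^2)/2}\). Here \(\mathrm{d}\tilde{Z}\) is taken as the product of the differentials of the real diagonal elements and of the real and imaginary parts of the off-diagonal element, which is the usual convention and is assumed here. Since \(\mathrm{tr}(\tilde{Z}^2)=z_{11}^2+z_{22}^2+2|\tilde{z}_{12}|^2\), the direct integral equals \(2\pi\cdot\pi\).

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import gammaln

def log_cgamma_p(a, p):
    """ln of the complex multivariate gamma pi^{p(p-1)/2} Gamma(a) Gamma(a-1) ... Gamma(a-p+1)."""
    return p * (p - 1) / 2 * np.log(np.pi) + sum(gammaln(a - j) for j in range(p))

def complex_jacobian_constant(p):
    """pi^{p(p-1)} / tilde Gamma_p(p)."""
    return np.exp(p * (p - 1) * np.log(np.pi) - log_cgamma_p(p, p))

print([complex_jacobian_constant(p) for p in (1, 2, 3)])   # approximately 1, pi, pi**3/2

# Hermitian p = 2 and g(Z) = exp(-tr(Z^2)/2): direct integral over z11, z22, Re z12, Im z12
lhs = 2 * np.pi ** 2
# Eigenvalue side: the constant times the integral of g(D)(lambda_1 - lambda_2)^2
inner, _ = dblquad(lambda l2, l1: (l1 - l2) ** 2 * np.exp(-(l1**2 + l2**2) / 2),
                   -np.inf, np.inf, lambda l1: -np.inf, lambda l1: l1)
rhs = complex_jacobian_constant(2) * inner
print(lhs, rhs)                                             # the two sides agree
```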

Example 8.2a.1

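Consider a complex p × p matrix \(\tilde {X}\) having a matrix-variate type-1 beta density with the parameters α = p and β = p, so that its density, denoted by \(\tilde {f}(\tilde {X})\), is the following:
\[ \tilde{f}(\tilde{X})=\frac{\tilde{\Gamma}_p(2p)}{[\tilde{\Gamma}_p(p)]^2},\quad O<\tilde{X}<I, \]
and zero elsewhere,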

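which is also referred to as the p × p complex matrix-variate uniform density. Let D = diag(λ 1, …, λ p) where 1 > λ 1 > λ 2 > ⋯ > λ p > 0 are the eigenvalues of \(\tilde {X}\). Then, the density of D, denoted by f 1(D), is given by
\[ f_1(D)=\frac{\tilde{\Gamma}_p(2p)}{[\tilde{\Gamma}_p(p)]^2}\,\frac{\pi^{p(p-1)}}{\tilde{\Gamma}_p(p)}\prod_{i<j}(\lambda_i-\lambda_j)^2,\quad 1>\lambda_1>\cdots>\lambda_p>0, \tag{i} \]
and zero elsewhere.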

Verify that (i) is a density for p = 2.

Solution 8.2a.1

For Hermitian matrices the eigenvalues are real. Consider the integral over \((\lambda_1-\lambda_2)^2\):

Let us now evaluate the constant part:

The product of (ii) and (iii) equalling 1, the solution is complete.
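As a numerical cross-check of Solution 8.2a.1, the sketch below builds the constant \(\tilde{\Gamma}_2(4)\pi^2/[\tilde{\Gamma}_2(2)]^3\) from `scipy.special.gammaln`, using an illustrative helper for the complex multivariate gamma function. It then integrates \((\lambda_1-\lambda_2)^2\) over 1 > λ 1 > λ 2 > 0.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import gammaln

def log_cgamma_p(a, p):
    """ln of tilde Gamma_p(a) = pi^{p(p-1)/2} Gamma(a) Gamma(a-1) ... Gamma(a-p+1)."""
    return p * (p - 1) / 2 * np.log(np.pi) + sum(gammaln(a - j) for j in range(p))

p = 2
# tilde Gamma_p(2p) / tilde Gamma_p(p)^2 * pi^{p(p-1)} / tilde Gamma_p(p)
log_const = (log_cgamma_p(2 * p, p) - 2 * log_cgamma_p(p, p)
             + p * (p - 1) * np.log(np.pi) - log_cgamma_p(p, p))
const = np.exp(log_const)

total, _ = dblquad(lambda l2, l1: const * (l1 - l2) ** 2, 0, 1, lambda l1: 0.0, lambda l1: l1)
print(const, total)                   # const is 12, total is 1
```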

Example 8.2a.2

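Consider a p × p complex matrix \(\tilde {X}\) having a matrix-variate gamma density with the parameters (α = p, \(\tilde{B}=I\)). Let D = diag(λ 1, …, λ p), where ∞ > λ 1 > ⋯ > λ p > 0 are the eigenvalues of \(\tilde {X}\). Denoting the density of D by f 1(D), we have
\[ f_1(D)=\frac{\pi^{p(p-1)}}{[\tilde{\Gamma}_p(p)]^2}\,\mathrm{e}^{-(\lambda_1+\cdots+\lambda_p)}\prod_{i<j}(\lambda_i-\lambda_j)^2,\quad \infty>\lambda_1>\cdots>\lambda_p>0, \]
and zero elsewhere.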

When α = p, the density of \(\tilde{X}\) is referred to as the p × p complex matrix-variate exponential density. Verify that f 1(D) is a density for p = 2.

Solution 8.2a.2

The constant part simplifies to the following:

and the integrals over the λ j’s are evaluated as follows:

Now, taking the product of (i) and (ii), we obtain 1, and the result is verified.
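A short quadrature sketch confirms Solution 8.2a.2. For p = 2 the constant \(\pi^{2}/[\tilde{\Gamma}_2(2)]^2\) equals 1, so only the integral of \((\lambda_1-\lambda_2)^2\mathrm{e}^{-(\lambda_1+\lambda_2)}\) over ∞ > λ 1 > λ 2 > 0 remains. The complex multivariate gamma helper is an illustrative construction from `scipy.special.gammaln`.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import gammaln

def log_cgamma_p(a, p):
    """ln of tilde Gamma_p(a) = pi^{p(p-1)/2} Gamma(a) Gamma(a-1) ... Gamma(a-p+1)."""
    return p * (p - 1) / 2 * np.log(np.pi) + sum(gammaln(a - j) for j in range(p))

p = 2
const = np.exp(p * (p - 1) * np.log(np.pi) - 2 * log_cgamma_p(p, p))   # pi^{p(p-1)} / tilde Gamma_p(p)^2

total, _ = dblquad(lambda l2, l1: const * (l1 - l2) ** 2 * np.exp(-(l1 + l2)),
                   0, np.inf, lambda l1: 0.0, lambda l1: l1)
print(const, total)                   # const is 1, total is 1
```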

8.2.1. Eigenvalues of matrix-variate gamma and Wishart matrices, real case

Let W 1 and W 2 be two p × p real positive definite matrix-variate random variables that are independently distributed as matrix-variate gamma random variables with the parameters (α 1, B) and (α 2, B), respectively. When \(\alpha _j=\frac {m_j}{2},~ m_j\ge p, ~j=1,2,\) with m 1, m 2 = p, p + 1, …, and \(B=\frac {1}{2}I\), W 1 and W 2 are independently Wishart distributed with m 1 and m 2 degrees of freedom, respectively; refer to the earlier discussion about the elimination of the scale parameter matrix Σ > O in a matrix-variate Wishart distribution. Consider the determinantal equation

Thus, λ is an eigenvalue of \(U_2=W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\). It has already been established in Theorem 8.1.1 that \(U_2=W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\) is distributed as a real matrix-variate type-2 beta random variable with the parameters α 1 and α 2 whose density is

for \(U_2>O,~ \Re (\alpha _j)>\frac {p-1}{2},~j=1,2,\) and zero elsewhere. Note that this distribution is free of the scale parameter matrix B. Writing U 2 in terms of its eigenvalues and making use of (8.2.4), we have the following result:

Theorem 8.2.2

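Let λ 1 > λ 2 > ⋯ > λ p > 0 be the distinct roots of the determinantal equation (8.2.5) or, equivalently, let the λ j ’s be the eigenvalues of \(U_2=W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\), as defined in (8.2.5). Then, after integrating out over the full orthogonal group O p, the joint density of λ 1, …, λ p, denoted by g 1(D) with D = diag(λ 1, …, λ p), is obtained as
\[ g_1(D)=\frac{\Gamma_p(\alpha_1+\alpha_2)}{\Gamma_p(\alpha_1)\Gamma_p(\alpha_2)}\,\frac{\pi^{\frac{p^2}{2}}}{\Gamma_p(\frac{p}{2})}\Big[\prod_{j=1}^{p}\lambda_j^{\alpha_1-\frac{p+1}{2}}(1+\lambda_j)^{-(\alpha_1+\alpha_2)}\Big]\prod_{i<j}(\lambda_i-\lambda_j) \tag{8.2.7} \]
for ∞ > λ 1 > ⋯ > λ p > 0, and zero elsewhere.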

Proof

Applying the transformation U 2 = PDP′, PP′ = I, P′P = I, where P is a unique orthonormal matrix, to the density of U 2 given in (8.2.6), it follows from Theorem 8.2.1 that

after integrating out the differential element corresponding to the orthonormal matrix P. On substituting |U 2| = λ 1⋯λ p and |I + U 2| = (1 + λ 1)⋯(1 + λ p) in (8.2.6), the result is established.

Note 8.2.1

When \(\alpha _1=\frac {m_1}{2},~ \alpha _2=\frac {m_2}{2},~ m_j\ge p, \) with m 1, m 2 = p, p + 1, …, in Theorem 8.2.2, we have the corresponding result for real Wishart matrices having m 1 and m 2 degrees of freedom and parameter matrix Σ = I p, that is, scale parameter matrix \(B=\frac {1}{2}I_p\) in the gamma parameterization.
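One way to check the form of g 1(D) for p = 2 is to verify that it integrates to 1 and that it reproduces a known moment. For Wishart matrices with \(\alpha_j=\frac{m_j}{2}\), the standard result \(E[W_2^{-1}]=\frac{1}{m_2-p-1}I\) gives \(E[U_2]=\frac{m_1}{m_2-p-1}I\), so \(E[\lambda_1+\lambda_2]=E[\mathrm{tr}\,U_2]=\frac{m_1p}{m_2-p-1}\). That Wishart moment is not derived in this chapter and is assumed here. The degrees of freedom below are arbitrary, and `eigen_density` is an illustrative helper.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import multigammaln

def eigen_density(a1, a2):
    """g1(D) of Theorem 8.2.2 for p = 2, as a function of (lambda_2, lambda_1)."""
    p = 2
    const = np.exp(multigammaln(a1 + a2, p) - multigammaln(a1, p) - multigammaln(a2, p)
                   + (p * p / 2) * np.log(np.pi) - multigammaln(p / 2, p))
    e = a1 - (p + 1) / 2
    return lambda l2, l1: (const * (l1 * l2) ** e
                           * ((1 + l1) * (1 + l2)) ** (-(a1 + a2)) * (l1 - l2))

m1, m2, p = 4, 8, 2                       # Wishart degrees of freedom: alpha_j = m_j / 2
g1 = eigen_density(m1 / 2, m2 / 2)
lo, hi = (lambda l1: 0.0), (lambda l1: l1)  # region infinity > lambda_1 > lambda_2 > 0

mass = dblquad(g1, 0, np.inf, lo, hi)[0]
mean_trace = dblquad(lambda l2, l1: (l1 + l2) * g1(l2, l1), 0, np.inf, lo, hi)[0]
print(mass)                                    # close to 1
print(mean_trace, m1 * p / (m2 - p - 1))       # both close to 1.6
```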

Example 8.2.4

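Let the p × p real matrix X have a real matrix-variate type-2 beta density with the parameters \(\alpha =\frac {p+1}{2}\) and \(\beta =\frac {p+1}{2}\). Then, the joint density of its eigenvalues λ 1 > λ 2 > ⋯ > λ p > 0, or that of D = diag(λ 1, …, λ p), denoted by g 1(D), is
\[ g_1(D)=\frac{\Gamma_p(p+1)}{[\Gamma_p(\frac{p+1}{2})]^2}\,\frac{\pi^{\frac{p^2}{2}}}{\Gamma_p(\frac{p}{2})}\Big[\prod_{j=1}^{p}(1+\lambda_j)^{-(p+1)}\Big]\prod_{i<j}(\lambda_i-\lambda_j), \]
and zero elsewhere.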

Verify that g 1(D) is a density for p = 2.

Solution 8.2.4

Consider the total integral for p = 2. The constant part is

and the integral part is obtained as follows:

The first integral over λ 2 in (ii) is

then, integrating with respect to λ 1 yields

Now, after integrating by parts, the second integral over λ 2 in (ii) is the following:

then, integrating with respect to λ 1 gives

Combining (iii) and (iv), the sum is

Finally, the product of (i) and (v) is 1, which verifies that g 1(D) is indeed a density when p = 2.
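Numerically, the normalization can be confirmed either directly or after the substitution \(\mu_j=\frac{\lambda_j}{1+\lambda_j}\). For p = 2, since \(\mathrm{d}\lambda=(1+\lambda)^2\mathrm{d}\mu\) and \(\lambda_1-\lambda_2=(1+\lambda_1)(1+\lambda_2)(\mu_1-\mu_2)\), that substitution turns the integrand into \(6(\mu_1-\mu_2)\) on 1 > μ 1 > μ 2 > 0. The sketch below, assuming SciPy, evaluates both integrals.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import multigammaln

p = 2
# Gamma_p(p+1) / Gamma_p((p+1)/2)^2 * pi^{p^2/2} / Gamma_p(p/2)
const = np.exp(multigammaln(p + 1, p) - 2 * multigammaln((p + 1) / 2, p)
               + (p * p / 2) * np.log(np.pi) - multigammaln(p / 2, p))

# Direct integral over infinity > lambda_1 > lambda_2 > 0
g1 = lambda l2, l1: const * (l1 - l2) * ((1 + l1) * (1 + l2)) ** (-(p + 1))
total = dblquad(g1, 0, np.inf, lambda l1: 0.0, lambda l1: l1)[0]

# After mu = lambda / (1 + lambda): const * (mu_1 - mu_2) on 1 > mu_1 > mu_2 > 0
total_mu = dblquad(lambda m2, m1: const * (m1 - m2), 0, 1, lambda m1: 0.0, lambda m1: m1)[0]
print(const, total, total_mu)         # 6, 1, 1
```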

8.2a.1. Eigenvalues of complex matrix-variate gamma and Wishart matrices

A parallel result can be obtained in the complex domain. Let \(\tilde {W}_1\) and \(\tilde {W}_2\) be independently distributed p × p complex matrix-variate gamma random variables with parameters \((\alpha _1,\,\tilde {B})\) and \( (\alpha _2,\,\tilde {B}),\) \(\tilde {B}=\tilde {B}^{*}>O, ~\Re (\alpha _j)>p-1,~ j=1,2\). Consider the determinantal equation

It follows from Theorem 8.1a.1 that \(\tilde {U}_2=\tilde {W}_2^{-\frac {1}{2}}\tilde {W}_1\tilde {W}_2^{-\frac {1}{2}}\) has a complex matrix-variate type-2 beta distribution with the parameters (α 1, α 2), whose associated density is

for \(\tilde {U}_2=\tilde {U}_2^{*}>O,~ \Re (\alpha _j)>p-1,~ j=1,2\), and zero elsewhere. Observe that the distribution of \(\tilde {U}_2\) is free of the scale parameter matrix \(\tilde {B}>O\). Moreover, since \(\tilde {W}_1\) and \(\tilde {W}_2\) are Hermitian positive definite, so is \(\tilde {U}_2\); hence its eigenvalues λ 1 > ⋯ > λ p > 0, assumed to be distinct, are real and positive. Writing \(\tilde {U}_2\) in terms of its eigenvalues and making use of (8.2a.1), we have the following result:

Theorem 8.2a.2

Let \(\tilde {U}_2=\tilde {W}_2^{-\frac {1}{2}}\tilde {W}_1\tilde {W}_2^{-\frac {1}{2}}\) and its distinct eigenvalues λ 1 > ⋯ > λ p > 0 be as defined in the determinantal equation (8.2a.3). Then, after integrating out the differential element corresponding to the unique unitary matrix \(\tilde {Q}\), \(\tilde {Q}\tilde {Q}^{*}=I,~ \tilde {Q}^{*}\tilde {Q}=I \) , such that \( \tilde {U}_2=\tilde {Q}D\tilde {Q}^{*},\) with D = diag(λ 1, …, λ p), the joint density of λ 1, …, λ p , denoted by \(\tilde {g}_1(\tilde {D})\) , is obtained as

where

Example 8.2a.3

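Let the p × p matrix \(\tilde {X}\) have a complex matrix-variate type-2 beta density with the parameters (α = p, β = p). Let its eigenvalues be λ 1 > ⋯ > λ p > 0 and their joint density be denoted by \(\tilde {g_1}(D)\), D = diag(λ 1, …, λ p). Then,
\[ \tilde{g}_1(D)=\frac{\tilde{\Gamma}_p(2p)}{[\tilde{\Gamma}_p(p)]^2}\,\frac{\pi^{p(p-1)}}{\tilde{\Gamma}_p(p)}\Big[\prod_{j=1}^{p}(1+\lambda_j)^{-2p}\Big]\prod_{i<j}(\lambda_i-\lambda_j)^2,\quad \infty>\lambda_1>\cdots>\lambda_p>0, \]
and zero elsewhere.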

Verify that \(\tilde {g_1}(D)\) is a density for p = 2.

Solution 8.2a.3

Since the total integral must be unity, let us integrate out the λ j’s. The constant part is the following:

Now, consider the integrals over λ 1 and λ 2, noting that \((\lambda _1-\lambda _2)^2=\lambda _1^2+\lambda _2^2-2\lambda _1\lambda _2\):

As they appear in (ii), the integrals over λ 2 are

then, integrating with respect to λ 1 yields

Summing (vi), (vii) and (viii), we have

As the product of (ix) and (i) is 1, the result is established. Note that, since 2p is a positive integer, integration by parts works for a general p when the first parameter α of the type-2 beta density equals p. However, the factor \(\prod_{i<j}(\lambda_i-\lambda_j)^2\) will be difficult to handle for a general p.
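A quadrature check of Solution 8.2a.3 takes only a few lines. The constant \(\tilde{\Gamma}_2(4)\pi^2/[\tilde{\Gamma}_2(2)]^3\) is built from an illustrative complex multivariate gamma helper, and the integrand \((\lambda_1-\lambda_2)^2[(1+\lambda_1)(1+\lambda_2)]^{-4}\) is integrated over ∞ > λ 1 > λ 2 > 0.

```python
import numpy as np
from scipy.integrate import dblquad
from scipy.special import gammaln

def log_cgamma_p(a, p):
    """ln of tilde Gamma_p(a) = pi^{p(p-1)/2} Gamma(a) Gamma(a-1) ... Gamma(a-p+1)."""
    return p * (p - 1) / 2 * np.log(np.pi) + sum(gammaln(a - j) for j in range(p))

p = 2
const = np.exp(log_cgamma_p(2 * p, p) - 2 * log_cgamma_p(p, p)
               + p * (p - 1) * np.log(np.pi) - log_cgamma_p(p, p))

g1 = lambda l2, l1: const * (l1 - l2) ** 2 * ((1 + l1) * (1 + l2)) ** (-2 * p)
total = dblquad(g1, 0, np.inf, lambda l1: 0.0, lambda l1: l1)[0]
print(const, total)                   # 12 and 1
```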

Example 8.2a.4

Give an explicit representation of (8.2a.5) for p = 3, α 1 = 4 and α 2 = 3.

Solution 8.2a.4

For p = 3, α 1 − p = 4 − 3 = 1, α 1 + α 2 = 4 + 3 = 7, p(p − 1) = 6. The constant part is the following:

the functional part being the product of

and

Multiplying (i), (ii) and (iii) yields the answer.
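The constant part for p = 3, α 1 = 4 and α 2 = 3 can be evaluated numerically, assuming (8.2a.5) has the form \(\frac{\tilde{\Gamma}_p(\alpha_1+\alpha_2)}{\tilde{\Gamma}_p(\alpha_1)\tilde{\Gamma}_p(\alpha_2)}\frac{\pi^{p(p-1)}}{\tilde{\Gamma}_p(p)}\prod_j\lambda_j^{\alpha_1-p}(1+\lambda_j)^{-(\alpha_1+\alpha_2)}\prod_{i<j}(\lambda_i-\lambda_j)^2\). Under this assumption, the constant evaluates to 43200. The sketch then checks that the resulting density integrates to 1 over ∞ > λ 1 > λ 2 > λ 3 > 0. The triple integral is mapped to the unit cube through \(\lambda=\frac{\mu}{1-\mu}\), \(\mathrm{d}\lambda=(1-\mu)^{-2}\mathrm{d}\mu\), which preserves the ordering. The helper names are illustrative.

```python
import numpy as np
from scipy.integrate import tplquad
from scipy.special import gammaln

def log_cgamma_p(a, p):
    """ln of tilde Gamma_p(a) = pi^{p(p-1)/2} Gamma(a) Gamma(a-1) ... Gamma(a-p+1)."""
    return p * (p - 1) / 2 * np.log(np.pi) + sum(gammaln(a - j) for j in range(p))

p, a1, a2 = 3, 4, 3
log_c = (log_cgamma_p(a1 + a2, p) - log_cgamma_p(a1, p) - log_cgamma_p(a2, p)
         + p * (p - 1) * np.log(np.pi) - log_cgamma_p(p, p))
const = np.exp(log_c)
print(const)                          # approximately 43200

def g1(l1, l2, l3):
    """Joint eigenvalue density for p = 3, alpha_1 = 4, alpha_2 = 3."""
    lam = np.array([l1, l2, l3])
    vdm2 = ((l1 - l2) * (l1 - l3) * (l2 - l3)) ** 2
    return const * np.prod(lam ** (a1 - p) * (1 + lam) ** (-(a1 + a2))) * vdm2

def integrand(m3, m2, m1):
    """g1 after lambda = mu / (1 - mu), including the factor prod (1 - mu_j)^{-2}."""
    mu = np.array([m1, m2, m3])
    return g1(*(mu / (1 - mu))) / np.prod((1 - mu) ** 2)

total, err = tplquad(integrand, 0, 1, lambda m1: 0.0, lambda m1: m1,
                     lambda m1, m2: 0.0, lambda m1, m2: m2)
print(total)                          # close to 1
```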

8.2.2. An alternative procedure in the real case

This section describes an alternative procedure that is presented in Anderson (2003). The real case will first be discussed. Let W 1 and W 2 be independently distributed real p × p matrix-variate gamma random variables with the parameters \((\alpha _1,~B),~(\alpha _2,~B),~B>O,~\Re (\alpha _j)>\frac {p-1}{2},~ j=1,2\). We are considering the determinantal equation

where \(\lambda =\frac {\mu }{1-\mu }\). Thus, μ is an eigenvalue of \(U_1=(W_1+W_2)^{-\frac {1}{2}}W_1(W_1+W_2)^{-\frac {1}{2}}\). It follows from Theorem 8.1.1 that the joint density of W 1 and W 2, denoted by f(W 1, W 2), can be written as

where \(U_1=(W_1+W_2)^{-\frac {1}{2}}W_1(W_1+W_2)^{-\frac {1}{2}}\) and U 3 = W 1 + W 2 are independently distributed. Further,

for \(\Re (\alpha _j)>\frac {p-1}{2},~j=1,2\), and g(U 1) = 0 elsewhere, is a real matrix-variate type-1 beta density, and

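for \(B>O,~ \Re (\alpha _1+\alpha _2)>\frac {p-1}{2},\) and g 1(U 3) = 0 elsewhere, is a real matrix-variate gamma density with the parameters (α 1 + α 2, B), B > O. Now, consider the transformation U 1 = PDP′, PP′ = I, P′P = I, where D = diag(μ 1, …, μ p), 1 > μ 1 > ⋯ > μ p > 0 being the distinct eigenvalues of the real positive definite matrix U 1, and the orthonormal matrix P is unique. Given the density of U 1 specified in (8.2.10), the joint density of μ 1, …, μ p, denoted by g 4(D), which is obtained after integrating out the differential element corresponding to P, is
\[ g_4(D)=\frac{\Gamma_p(\alpha_1+\alpha_2)}{\Gamma_p(\alpha_1)\Gamma_p(\alpha_2)}\,\frac{\pi^{\frac{p^2}{2}}}{\Gamma_p(\frac{p}{2})}\Big[\prod_{j=1}^{p}\mu_j^{\alpha_1-\frac{p+1}{2}}(1-\mu_j)^{\alpha_2-\frac{p+1}{2}}\Big]\prod_{i<j}(\mu_i-\mu_j) \tag{8.2.12} \]
for 1 > μ 1 > ⋯ > μ p > 0, and zero elsewhere.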

Hence, the following result:

Theorem 8.2.3

The joint density of the eigenvalues μ 1 > ⋯ > μ p > 0 of the determinantal equation in (8.2.8) is given by the expression appearing in (8.2.12). On letting \(\mu_j=\frac{\lambda_j}{1+\lambda_j}\), j = 1, …, p, this density agrees with the density specified in (8.2.7).

Proof

It has already been established in Theorem 8.1.1 that \(U_1=(W_1+W_2)^{-\frac {1}{2}}W_1(W_1+W_2)^{-\frac {1}{2}}\) has the real matrix-variate type-1 beta density given in (8.2.10). Now, make the transformation U 1 = PDP′ where D = diag(μ 1, …, μ p) and the orthonormal matrix P is unique. Then, the first part follows from Theorem 8.2.1. It follows from (8.2.8) that \(\lambda =\frac {\mu }{1-\mu }\) or \(\mu =\frac {\lambda }{1+\lambda }\) with \(\mathrm {d}\mu =\frac {1}{(1+\lambda )^2}\mathrm {d}\lambda \) and \( 1-\mu =\frac {1}{1+\lambda }\). Observe that \(\prod _{i<j}(\mu _i-\mu _j)=\prod _{i<j}\frac {(\lambda _i-\lambda _j)}{(1+\lambda _i)(1+\lambda _j)}\) and that, in this product’s denominator, 1 + λ i appears p − 1 times for i = 1, …, p. The exponent of \(\frac {1}{1+\lambda _i}\) is therefore \(\alpha _1-\frac {p+1}{2}+\alpha _2-\frac {p+1}{2}+2+(p-1)=\alpha _1+\alpha _2\). On substituting these values in (8.2.12), a perfect agreement with (8.2.7) is established, which completes the proof.
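The change of variables used in this proof can be checked on a simulated pair of Wishart matrices. The sketch below draws one pair with Σ = I, an arbitrary choice. It takes the λ j’s as the roots of |W 1 − λW 2| = 0 and the μ j’s as the roots of |W 1 − μ(W 1 + W 2)| = 0, that is, as the eigenvalues of U 1, both obtained as generalized eigenvalues with `scipy.linalg.eigh`. It then confirms \(\lambda_j=\frac{\mu_j}{1-\mu_j}\) and the product identity for \(\prod_{i<j}(\mu_i-\mu_j)\).

```python
import numpy as np
from scipy.linalg import eigh

rng = np.random.default_rng(3)
p, m1, m2 = 4, 7, 10
X1 = rng.standard_normal((m1, p))
X2 = rng.standard_normal((m2, p))
W1, W2 = X1.T @ X1, X2.T @ X2                    # independent W_p(m_j, I) draws

lam = eigh(W1, W2, eigvals_only=True)[::-1]      # roots of |W1 - lambda W2| = 0, descending
mu = eigh(W1, W1 + W2, eigvals_only=True)[::-1]  # eigenvalues of U1, descending

i, j = np.triu_indices(p, k=1)                   # all pairs i < j
lhs = np.prod(mu[i] - mu[j])
rhs = np.prod((lam[i] - lam[j]) / ((1 + lam[i]) * (1 + lam[j])))

print(np.allclose(lam, mu / (1 - mu)))           # True
print(lhs, rhs)                                  # equal
```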

Example 8.2.5

Provide an explicit representation of (8.2.12) for p = 3, α 1 = 4 and α 2 = 3.

Solution 8.2.5

Note that \(\frac {p+1}{2}=\frac {3+1}{2}=2\), \(\alpha _1-\frac {p+1}{2}=4-2=2\) and \(\alpha _2-\frac {p+1}{2}=3-2=1\). The constant part is

the functional part being the product of

and

The product of (i), (ii) and (iii) yields the answer.
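For these values, the constant in (8.2.12) can be evaluated numerically: \(\frac{\Gamma_3(7)}{\Gamma_3(4)\Gamma_3(3)}\cdot\frac{\pi^{9/2}}{\Gamma_3(\frac{3}{2})}\) works out to 831600. The sketch below computes it via `scipy.special.multigammaln`. It also checks that the explicit density integrates to 1 over 1 > μ 1 > μ 2 > μ 3 > 0, where the exponents are \(\alpha_1-\frac{p+1}{2}=2\) and \(\alpha_2-\frac{p+1}{2}=1\) as in the solution.

```python
import numpy as np
from scipy.integrate import tplquad
from scipy.special import multigammaln

p, a1, a2 = 3, 4.0, 3.0
log_c = (multigammaln(a1 + a2, p) - multigammaln(a1, p) - multigammaln(a2, p)
         + (p * p / 2) * np.log(np.pi) - multigammaln(p / 2, p))
const = np.exp(log_c)
print(const)                          # approximately 831600

e = (p + 1) / 2
def g4(m3, m2, m1):
    """Density (8.2.12) for p = 3, alpha_1 = 4, alpha_2 = 3, at 1 > m1 > m2 > m3 > 0."""
    mu = np.array([m1, m2, m3])
    return (const * np.prod(mu ** (a1 - e) * (1 - mu) ** (a2 - e))
            * (m1 - m2) * (m1 - m3) * (m2 - m3))

total, err = tplquad(g4, 0, 1, lambda m1: 0.0, lambda m1: m1,
                     lambda m1, m2: 0.0, lambda m1, m2: m2)
print(total)                          # close to 1
```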

8.2.3. The joint density of the eigenvectors in the real case

In order to establish the joint density of the eigenvectors, we will proceed as follows, our starting equation being |W 1 − λW 2| = 0. Let λ j be a root of this equation and let Y j be the corresponding vector. Then,

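This shows that Y j is an eigenvector of \((W_1+W_2)^{-1}W_1\) corresponding to the eigenvalue μ j; equivalently, \((W_1+W_2)^{\frac{1}{2}}Y_j\) is an eigenvector of \((W_1+W_2)^{-\frac {1}{2}}W_1(W_1+W_2)^{-\frac {1}{2}}=U_1\), which is a real matrix-variate type-1 beta random variable. Since the μ j’s are distinct, μ 1 > ⋯ > μ p > 0, and U 1 is symmetric, the vectors \((W_1+W_2)^{\frac{1}{2}}Y_1,\ldots,(W_1+W_2)^{\frac{1}{2}}Y_p\) are mutually orthogonal, that is, Y 1, …, Y p are mutually orthogonal with respect to W 1 + W 2. This is verified directly as follows. Consider the equation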

For i≠j, we also have

Premultiplying (iii) by \(Y_i^{\prime }\) and (iv) by \(Y_j^{\prime }\), and observing that \(W_1^{\prime }=W_1\), it follows that \((Y_i^{\prime }W_1Y_j)'=Y_j^{\prime }W_1Y_i\). Since both are real 1 × 1 matrices and one is the transpose of the other, they are equal. Then, on subtracting the resulting right-hand sides, we obtain

Since \(Y_i^{\prime }(W_1+W_2)Y_j=Y_j^{\prime }(W_1+W_2)Y_i\) by the previous argument and μ i≠μ j, we must have

Let us normalize Y j as follows:

Then, combining (v) and (vi), we have

which is the p × p matrix of the normalized eigenvectors. Thus,

which follows from an application of Theorem 1.6.6. We are seeking the joint density of Y 1, …, Y p or, equivalently, the density of Y. The density of W 1 + W 2 = U 3, denoted by g 1(U 3), is available from (8.2.11) as

Letting U 3 = Z′Z, the connection between the differential elements dZ and dU 3 is available from Theorem 4.2.3 for the case q = p. Then,

Hence, the density of Z, denoted by g z(Z), is the following:

so that the density of Y = Z −1, denoted by g 5(Y ), is given by

Then, we have the following result:

Theorem 8.2.4

Let W 1 and W 2 be independently distributed p × p real matrix-variate gamma random variables with parameters \((\alpha _1,~B),~(\alpha _2,~B),~B>O, ~\Re (\alpha _j)>\frac {p-1}{2},~ j=1,2\) . Consider the equation

where Y j is a vector corresponding to the root λ j of the determinantal equation. Let λ 1 > ⋯ > λ p > 0 be its distinct roots, which are also the eigenvalues of the matrix \(W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\) . The eigenvalues λ 1, …, λ p and the linearly independent orthogonal eigenvectors Y 1, …, Y p are independently distributed. The joint density of the eigenvalues λ 1, …, λ p is available from Theorem 8.2.2 and the joint density of the eigenvectors is given in (8.2.15).
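The normalization used in this section is the one under which \(U_3=W_1+W_2=Z'Z\) with Y = Z −1, namely \(Y'(W_1+W_2)Y=I\). It coincides with the normalization that `scipy.linalg.eigh(a, b)` applies to generalized eigenvectors: for the default problem a v = w b v, the returned columns satisfy v′bv = I. The sketch below, on one simulated pair of Wishart matrices with Σ = I (an arbitrary choice), checks this together with \(Y'W_1Y=\mathrm{diag}(\mu_1,\ldots,\mu_p)\). Note that SciPy returns the μ j’s in ascending rather than descending order.

```python
import numpy as np
from scipy.linalg import eigh

rng = np.random.default_rng(4)
p, m1, m2 = 3, 6, 8
X1 = rng.standard_normal((m1, p))
X2 = rng.standard_normal((m2, p))
W1, W2 = X1.T @ X1, X2.T @ X2            # independent W_p(m_j, I) draws
U3 = W1 + W2

# Solve W1 y = mu (W1 + W2) y; eigh normalizes the columns so that Y' U3 Y = I
mu, Y = eigh(W1, U3)                     # mu in ascending order
Z = np.linalg.inv(Y)                     # Y = Z^{-1}

print(np.round(Y.T @ U3 @ Y, 10))        # identity matrix
print(np.round(Y.T @ W1 @ Y, 10))        # diag(mu)
print(np.allclose(Z.T @ Z, U3))          # U3 = Z'Z
```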

Example 8.2.6

Illustrate the steps to show that the solutions of the determinantal equation |W 1 − λW 2| = 0 are also the eigenvalues of \(W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\) for the following matrices:

Solution 8.2.6

First, let us assess whether W 1 and W 2 are positive definite matrices. Clearly, \(W_1=W_1^{\prime }\) and \( W_2=W_2^{\prime }\) are symmetric and their leading minors are positive:

Consider the determinantal equation |W 1 − λW 2| = 0, that is,

whose roots are \(\lambda _1=2,~ \lambda _2=\frac {4}{3}\). Let us determine \(W_2^{-\frac {1}{2}}\). To this end, let us evaluate the eigenvalues and eigenvectors of W 2. The eigenvalues of W 2 are the values of ν satisfying the equation |W 2 − νI| = 0, that is, \((2-\nu)^2-1^2=0\), so that ν 1 = 3 and ν 2 = 1 are the eigenvalues of W 2. An eigenvector corresponding to ν 1 = 3 is given by

is one solution, the normalized eigenvector corresponding to ν 1 = 3 being Y 1 whose transpose is \(Y_1^{\prime }=\frac {1}{\sqrt {2}}[1,1]\). Similarly, an eigenvector associated with ν 2 = 1 is x 1 = 1, x 2 = −1, which once normalized becomes Y 2 whose transpose is \(Y_2^{\prime }=\frac {1}{\sqrt {2}}[1,-1]\). Let Λ = diag(3, 1) be the diagonal matrix of the eigenvalues of W 2. Then,

Observe that \(W_2,~W_2^{-1},~W_2^{\frac {1}{2}}\) and \(W_2^{-\frac {1}{2}}\) share the same eigenvectors Y 1 and Y 2. Hence,

and

The eigenvalues of T are \(\frac {1}{12}\) times the solutions of \((20-\delta )^2-4^2=0\), namely δ 1 = 24 and δ 2 = 16. Thus, the eigenvalues of T are \(\frac {24}{12}=2\) and \(\frac {16}{12}=\frac {4}{3},\) which are the solutions of the determinantal equation (i). This verifies the result.
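The verification in Example 8.2.6 can also be carried out numerically. The following is a minimal Python/NumPy sketch. W 2 = [[2, 1], [1, 2]] is our reconstruction from the eigenvalues 3, 1 and the eigenvectors Y 1, Y 2 found in the solution. W 1 is a hypothetical positive definite matrix chosen only for illustration, since the example's W 1 is not reproduced here. The helper names `sym_inv_sqrt` and `roots_and_eigenvalues` are ours.

```python
import numpy as np

def sym_inv_sqrt(a):
    """Return a^{-1/2} for a symmetric positive definite matrix a = Y diag(nu) Y'."""
    nu, y = np.linalg.eigh(a)               # eigenvalues nu_j and orthonormal eigenvectors
    return y @ np.diag(nu ** -0.5) @ y.T    # same eigenvectors, eigenvalues nu_j^{-1/2}

def roots_and_eigenvalues(w1, w2):
    """Roots of |W1 - lambda W2| = 0 and eigenvalues of W2^{-1/2} W1 W2^{-1/2}, both ascending."""
    # |W1 - lambda W2| = |W2| |W2^{-1} W1 - lambda I|, so the roots are the eigenvalues of W2^{-1} W1
    roots = np.sort(np.linalg.eigvals(np.linalg.solve(w2, w1)).real)
    r = sym_inv_sqrt(w2)
    t = r @ w1 @ r                          # T = W2^{-1/2} W1 W2^{-1/2}, symmetric
    return roots, np.linalg.eigvalsh(t)     # eigvalsh returns ascending order

w2 = np.array([[2.0, 1.0], [1.0, 2.0]])    # W2 rebuilt from nu = 3, 1 and Y1, Y2 of the solution
w1 = np.array([[3.0, 1.0], [1.0, 2.0]])    # hypothetical W1 > O, for illustration only
roots, eig_t = roots_and_eigenvalues(w1, w2)
```

For this hypothetical W 1, |W 1 − λW 2| = (1 − λ)(5 − 3λ), so both arrays agree with [1, 5/3] up to rounding.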

8.2a.2. An alternative procedure in the complex case

Let \(\tilde {W}_1\) and \(\tilde {W}_2\) be independently distributed p × p complex matrix-variate gamma random variables with the parameters \((\alpha _1,~\tilde {B}),~ (\alpha _2,~\tilde {B}),~ \tilde {B}=\tilde {B}^{*}>O,~\Re (\alpha _j)>p-1,~j=1,2\). We are considering the roots λ j's and the corresponding eigenvectors \(\tilde {Y}_j\)'s of the determinantal equation

Let the eigenvalues λ 1, …, λ p of \(\tilde {W}_2^{-\frac {1}{2}}\tilde {W}_1\tilde {W}_2^{-\frac {1}{2}}>O\) be distinct and such that λ 1 > ⋯ > λ p > 0, noting that for Hermitian matrices, the eigenvalues are real. We are interested in the joint distributions of the eigenvalues λ 1, …, λ p and the eigenvectors \(\tilde {Y}_1,\ldots ,\tilde {Y}_p\). Alternatively, we will consider the equation

Proceeding as in the real case, one can observe that the joint density of \(\tilde {W}_1\) and \(\tilde {W}_2\), denoted by \(\tilde {f}(\tilde {W}_1,\tilde {W}_2)\), can be factorized into the product of the density of \(\tilde {U}_1=(\tilde {W}_1+\tilde {W}_2)^{-\frac {1}{2}}\tilde {W}_1(\tilde {W}_1+\tilde {W}_2)^{-\frac {1}{2}}\), denoted by \(\tilde {g}(\tilde {U}_1),\) which is a complex matrix-variate type-1 beta random variable with the parameters (α 1, α 2), and the density of \(\tilde {U}_3=\tilde {W}_1+\tilde {W}_2\), denoted by \(\tilde {g}_1(\tilde {U}_3),\) which is a complex matrix-variate gamma density with the parameters \((\alpha _1+\alpha _2,~\tilde {B}),~ \tilde {B}>O\). That is,

where

for \(O<\tilde {U}_1<I,~ \Re (\alpha _j)>p-1,~j=1,2\), and \(\tilde {g}=0\) elsewhere, and

for \(\tilde {U}_3=\tilde {U}_3^{*}>O,~\Re (\alpha _1+\alpha _2)>p-1, \) and \( \tilde {B}=\tilde {B}^{*}>O,\) and zero elsewhere.

Note that by making the transformation \(\tilde {U}_1=QDQ^{*}\) with QQ ∗ = I, Q ∗ Q = I, and D = diag(μ 1, …, μ p), the joint density of μ 1, …, μ p, as obtained from (8.2a.10), is given by

also refer to Note 8.2a.1. The joint density of λ 1, …, λ p is then available from (8.2a.12) by making the substitution \(\lambda =\frac {\mu }{1-\mu }\) or \(\mu =\frac {\lambda }{1+\lambda }\). Note that \(\mathrm {d}\mu _j=\frac {1}{(1+\lambda _j)^2}\mathrm {d}\lambda _j\), \(1-\mu _j=\frac {1}{1+\lambda _j}\), and

whose denominator contains the factor \((1+\lambda _j)^2\) exactly p − 1 times for each j = 1, …, p, since each index j occurs in p − 1 of the differences. Thus, the final exponent of \(\frac {1}{1+\lambda _j}\) is (α 1 − p) + (α 2 − p) + 2 + 2(p − 1) = α 1 + α 2. Hence, the following result:

Theorem 8.2a.3

In the complex case, the joint density of the eigenvalues μ 1 > ⋯ > μ p > 0 of the determinantal equation in (8.2a.8) is given by the expression appearing in (8.2a.12), which is equal to the density specified in (8.2a.5).
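The exponent count preceding Theorem 8.2a.3 can be written out term by term. The block below assumes, as the tally in the text indicates, that (8.2a.12) contains the factors \(\mu _j^{\alpha _1-p}(1-\mu _j)^{\alpha _2-p}\) and the squared product of the differences \(\mu _i-\mu _j\):

```latex
\begin{align*}
\mu_j^{\alpha_1-p} &= \lambda_j^{\alpha_1-p}\,(1+\lambda_j)^{-(\alpha_1-p)}, \qquad
(1-\mu_j)^{\alpha_2-p} = (1+\lambda_j)^{-(\alpha_2-p)}, \qquad
\mathrm{d}\mu_j = (1+\lambda_j)^{-2}\,\mathrm{d}\lambda_j,\\
\prod_{i<j}|\mu_i-\mu_j|^2 &= \prod_{i<j}\frac{|\lambda_i-\lambda_j|^2}{(1+\lambda_i)^2(1+\lambda_j)^2}
 = \frac{\prod_{i<j}|\lambda_i-\lambda_j|^2}{\prod_{j=1}^{p}(1+\lambda_j)^{2(p-1)}},\\
(\alpha_1-p)+(\alpha_2-p)+2+2(p-1) &= \alpha_1+\alpha_2 .
\end{align*}
```

Collecting the powers of \(\frac {1}{1+\lambda _j}\) therefore leaves \((1+\lambda _j)^{-(\alpha _1+\alpha _2)}\) for each j.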

We now consider the joint density of the eigenvectors \(\tilde {Y}_1,\ldots ,\tilde {Y}_p\), which will be available from (8.2a.11), thus establishing that the set of eigenvalues λ 1, …, λ p and the eigenvectors \(\tilde {Y}_1,\ldots ,\tilde {Y}_p\) are independently distributed. For determining the joint density of the eigenvectors, we start with the equation

observing that λ j and μ j share the same eigenvector \(\tilde {Y}_j\). That is,

We continue as in the real case, showing that \(\tilde {Y}_i^{*}(\tilde {W}_1+\tilde {W}_2)\tilde {Y}_j=0\) for all i≠j. Then, we normalize \(\tilde {Y}_j\) as follows: \(\tilde {Y}_j^{*}(\tilde {W}_1+\tilde {W}_2)\tilde {Y}_j=1, ~j=1,\ldots ,p\). Letting \(\tilde {Y}=(\tilde {Y}_1,\ldots ,\tilde {Y}_p)\) be the p × p matrix of the normalized eigenvectors, we have \(\tilde {Y}^{*}(\tilde {W}_1+\tilde {W}_2)\tilde {Y}=I\Rightarrow \tilde {U}_3= \tilde {W}_1+\tilde {W}_2=(\tilde {Y}^{*})^{-1}(\tilde {Y})^{-1}\). Letting \((\tilde {Y})^{-1}=\tilde {Z}\) so that \(\tilde {Z}^{*}\tilde {Z}=(\tilde {Y}\tilde {Y}^{*})^{-1}\) and applying Theorem 4.2a.3, \(\mathrm {d}(\tilde {Z}^{*}\tilde {Z})=\frac {\tilde {\varGamma }_p(p)}{\pi ^{p(p-1)}}\mathrm {d}\tilde {Z}\). Hence, given the density of \(\tilde {W}_1+\tilde {W}_2\) specified in (8.2a.11), the density of \(\tilde {Z}\), denoted by \(\tilde {g}_3(\tilde {Z})\), is obtained as

Now noting that \(\tilde {Z}=\tilde {Y}^{-1}\Rightarrow \mathrm {d}\tilde {Z}=|\mathrm {det}(\tilde {Y}^{*}\tilde {Y})|{ }^{-p}\mathrm {d}\tilde {Y}\) from an application of Theorem 1.6a.6, and substituting in (8.2a.13), we obtain the following density of \(\tilde {Y}\), denoted by \(\tilde {g}_4(\tilde {Y})\):

for \(\tilde {B}=\tilde {B}^{*}>O,~ \Re (\alpha _1+\alpha _2)>p-1\), and zero elsewhere.
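The normalization \(\tilde {Y}^{*}(\tilde {W}_1+\tilde {W}_2)\tilde {Y}=I\) used above can be illustrated numerically. In this Python/NumPy sketch, \(\tilde {W}_1\) and \(\tilde {W}_2\) are drawn as complex Wishart matrices with arbitrarily chosen degrees of freedom, a special case of the complex gamma matrices of this section. The generalized problem \(\tilde {W}_1\tilde {y}=\mu (\tilde {W}_1+\tilde {W}_2)\tilde {y}\) is reduced to a Hermitian one through a Cholesky factor. That reduction is our choice of method, not one prescribed in the text, and the function names are hypothetical.

```python
import numpy as np

def std_complex_wishart(p, n, rng):
    """W = X X^* with X p x n, iid complex entries whose real/imaginary parts are N(0, 1/2)."""
    x = (rng.standard_normal((p, n)) + 1j * rng.standard_normal((p, n))) / np.sqrt(2)
    return x @ x.conj().T

def normalized_eigenvectors(w1, w2):
    """Solve W1 y = mu (W1 + W2) y with Y^* (W1 + W2) Y = I and mu_1 > ... > mu_p."""
    l_inv = np.linalg.inv(np.linalg.cholesky(w1 + w2))   # W1 + W2 = L L^*
    c = l_inv @ w1 @ l_inv.conj().T                      # Hermitian, with eigenvalues mu_j
    mu, q = np.linalg.eigh(c)                            # ascending order, q unitary
    mu, q = mu[::-1], q[:, ::-1]                         # reorder to descending
    y = l_inv.conj().T @ q                               # Y = (L^*)^{-1} q
    return mu, y

rng = np.random.default_rng(0)
p = 3
w1 = std_complex_wishart(p, 5, rng)
w2 = std_complex_wishart(p, 6, rng)
mu, y = normalized_eigenvectors(w1, w2)
lam = mu / (1 - mu)    # lambda_j = mu_j / (1 - mu_j): roots of det(W1 - lambda W2) = 0
```

With this construction, the following hold up to rounding error:

- \(\tilde {Y}^{*}(\tilde {W}_1+\tilde {W}_2)\tilde {Y}=I\);
- \(\tilde {Y}^{*}\tilde {W}_1\tilde {Y}=\mathrm {diag}(\mu _1,\ldots ,\mu _p)\) with 0 < μ j < 1;
- \(\tilde {W}_1+\tilde {W}_2=(\tilde {Y}^{*})^{-1}\tilde {Y}^{-1}\).

The same columns \(\tilde {Y}_j\) also solve \(\tilde {W}_1\tilde {y}=\lambda \tilde {W}_2\tilde {y}\).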

Example 8.2a.5

Show that the roots of the determinantal equation \(\mathrm {det}(\tilde {W}_1-\lambda \tilde {W}_2)=0\) are the same as the eigenvalues of \(\tilde {W}_2^{-\frac {1}{2}}\tilde {W}_1\tilde {W}_2^{-\frac {1}{2}}\) for the following Hermitian positive definite matrices:

Solution 8.2a.5

Let us evaluate the eigenvalues and eigenvectors of \(\tilde {W}_2\). Consider the equation \(\mathrm {det}(\tilde {W}_2-\mu I)=0\Rightarrow (3-\mu )^2-2^2=0\Rightarrow \mu _1=5\) and μ 2 = 1 are the eigenvalues of \(\tilde {W}_2\). An eigenvector corresponding to μ 1 = 5 must satisfy the equation

Since this is a singular system of linear equations, either equation can be used to express x 1 in terms of x 2. For x 2 = 1, we have \(x_1=\frac {1}{\sqrt {2}}(1+i)\). Thus, one eigenvector is

where \(\tilde {Y}_1\) is the normalized eigenvector obtained from \(\tilde {X}_1\). Similarly, corresponding to the eigenvalue μ 2 = 1, we have the normalized eigenvector

so that

observing that the above format is \(\tilde {W}_2=\tilde {Y}D\tilde {Y}^{*}\) with \(\tilde {Y}=[\tilde {Y}_1,~\tilde {Y}_2]\) and D = diag(5, 1), the diagonal matrix of the eigenvalues of \(\tilde {W}_2\). Since \(\tilde {W}_2,~\tilde {W}_2^{\frac {1}{2}},~\tilde {W}_2^{-1}\) and \(\tilde {W}_2^{-\frac {1}{2}}\) share the same eigenvectors, we have

It is easily verified that

Letting \(Q=\tilde {W}_2^{-\frac {1}{2}}\tilde {W}_1\tilde {W}_2^{-\frac {1}{2}}\), we have

The eigenvalues of 4Q can be determined by solving the equation

Thus, the eigenvalues of Q, denoted by δ, are

Now, let us consider the determinantal equation \(\mathrm {det}(\tilde {W}_1-\lambda \tilde {W}_2)=0\), that is,

which yields

The eigenvalues obtained in (iv) and (v) being identical, the result is established.
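The construction of \(\tilde {W}_2^{-\frac {1}{2}}\) in Solution 8.2a.5 can be mirrored in a short Python/NumPy sketch. Here \(\tilde {W}_2\) = [[3, √2(1 + i)], [√2(1 − i), 3]] is our reconstruction from the solution: the diagonal entries are 3, the off-diagonal entry has modulus 2, and the eigenvector for μ 1 = 5 has x 2 = 1 and \(x_1=\frac {1}{\sqrt {2}}(1+i)\). \(\tilde {W}_1\) is a hypothetical Hermitian positive definite matrix standing in for the one in the example.

```python
import numpy as np

def herm_power(a, power):
    """a**power for Hermitian a > O, using a = Y diag(mu) Y^* and keeping the same eigenvectors."""
    mu, y = np.linalg.eigh(a)
    return y @ np.diag(mu ** power) @ y.conj().T

s = np.sqrt(2.0)
w2 = np.array([[3, s * (1 + 1j)], [s * (1 - 1j), 3]])   # our reconstruction of W2
w1 = np.array([[2, 1j], [-1j, 2]])                        # hypothetical Hermitian W1 > O

mu_w2 = np.linalg.eigvalsh(w2)                  # eigenvalues of W2, ascending
w2_inv_half = herm_power(w2, -0.5)              # W2^{-1/2} = Y D^{-1/2} Y^*
q = w2_inv_half @ w1 @ w2_inv_half              # Q = W2^{-1/2} W1 W2^{-1/2}
delta = np.linalg.eigvalsh(q)                   # eigenvalues of Q, ascending
lam = np.sort(np.linalg.eigvals(np.linalg.solve(w2, w1)).real)   # roots of det(W1 - lambda W2) = 0
```

The eigenvalues of \(\tilde {W}_2\) come out as 1 and 5, and \(\tilde {W}_2^{-\frac {1}{2}}\tilde {W}_2\tilde {W}_2^{-\frac {1}{2}}\) reduces to the identity. The eigenvalues of Q agree with the roots of \(\mathrm {det}(\tilde {W}_1-\lambda \tilde {W}_2)=0\) up to rounding.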

8.3. The Singular Real Case

If a p × p real matrix-variate gamma matrix with parameters (α, B), B > O, is singular, that is, positive semi-definite but not positive definite, its p × p-variate density does not exist. When \(\alpha =\frac {m}{2},~ m\ge p,\) and \(B=\frac {1}{2}\varSigma ^{-1},~\varSigma >O,\) the gamma density is called a Wishart density with m degrees of freedom and parameter matrix Σ > O. If the rank of the gamma or Wishart matrices is r < p, in which case they are positive semi-definite, the resulting distributions are said to be singular. It can be shown that, in this instance, we have in fact nonsingular r × r-variate gamma or Wishart distributions. In order to establish this, the matrix theory results presented next are required.

Let A = A′≥ O (non-negative definite) be a p × p real matrix of rank r < p, and the elementary matrices E 1, E 2, …, E k be such that by operating on A, one has

where O 1, O 2 and O 3 are null matrices, with O 3 being of order (p − r) × (p − r). Then,

where Q is a product of inverses of elementary matrices and hence, nonsingular. Letting

Note that

where A 1 is a full rank p × r matrix, r < p, so that the r columns of A 1 are all linearly independent. This result can also be established by appealing to the fact that when A ≥ O, its eigenvalues are non-negative, and A being symmetric, there exists an orthonormal matrix P, PP′ = I, P′P = I, such that


where P 1, …, P p are the columns of the orthonormal matrix P, λ 1, …, λ r, 0, …, 0 are the eigenvalues of A where λ j > 0, j = 1, …, r, and P (1) contains the first r columns of P. The first r eigenvalues must be positive since A is a non-negative definite matrix of rank r. Now, we can write \(A=P_{(1)}DP_{(1)}^{\prime }=A_1A_1^{\prime },\) with \( A_1=P_{(1)}D^{\frac {1}{2}}\). Observe that A 1 is p × r and of rank r < p. Thus, we have the following result:

Theorem 8.3.1

Let A = A′ be a real p × p positive semi-definite matrix, A ≥ O, of rank r < p. Then, A can be represented in the form \(A=A_1A_1^{\prime }\) where A 1 is a p × r, r < p, matrix of rank r or, equivalently, the r columns of the p × r matrix A 1 are all linearly independent.
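Theorem 8.3.1 follows the spectral route \(A=P_{(1)}DP_{(1)}^{\prime }=A_1A_1^{\prime }\) with \(A_1=P_{(1)}D^{\frac {1}{2}}\). A minimal Python/NumPy sketch of that construction is given below. In floating point, the rank has to be decided by a relative tolerance on the eigenvalues; that tolerance is our assumption. The function name `psd_factor` is ours.

```python
import numpy as np

def psd_factor(a, tol=1e-10):
    """Return a p x r matrix A1 of rank r with A = A1 A1', following A = P_(1) D P_(1)'."""
    lam, p_mat = np.linalg.eigh(a)              # eigenvalues ascending, P orthonormal
    keep = lam > tol * max(lam.max(), 1.0)      # the r strictly positive eigenvalues
    p1 = p_mat[:, keep]                         # P_(1): eigenvectors belonging to lambda_j > 0
    return p1 * np.sqrt(lam[keep])              # A1 = P_(1) D^{1/2}: column j scaled by sqrt(lambda_j)

rng = np.random.default_rng(1)
b = rng.standard_normal((5, 2))
a = b @ b.T                                     # 5 x 5, positive semi-definite, rank 2
a1 = psd_factor(a)
```

For this rank-2 example, `a1` has shape (5, 2) and `a1 @ a1.T` reproduces `a` up to rounding.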

In the case of Wishart matrices, we can interpret Theorem 8.3.1 as follows: Let the p × 1 vector random variable X j have a nonsingular Gaussian distribution whose mean value is the null vector and covariance matrix Σ is positive definite. Let the X j’s, j = 1, …, n, be independently distributed, that is, \(X_j\overset {iid}{\sim } N_p(O,~\varSigma ),~\varSigma >O,\ j=1,\ldots ,n\). Letting the p × n sample matrix be X = [X 1, …, X n], the joint density of X 1, …, X n or that of X, denoted by f(X), is the following:

Letting W = XX ′, the p × p matrix W will be positive definite (almost surely) provided n ≥ p; otherwise, that is when n < p, W will be singular. Let us consider the case n ≥ p first. This will also provide a derivation of the real Wishart density which was earlier obtained as a special case of the real matrix-variate gamma density. Observe that we can write dX in terms of dW by applying Theorem 4.2.3, namely,

Therefore, if the density of W is denoted by f 1(W), then f 1(W) is available from (8.3.1) by expressing dX in terms of dW. That is,

for n ≥ p, W > O, Σ > O, and f 1(W) = 0 elsewhere. This is the density of a nonsingular Wishart distribution with n degrees of freedom, n ≥ p, and parameter matrix Σ > O, which is denoted W ∼ W p(n, Σ), Σ > O, n ≥ p. It has previously been shown that when \(X_j\overset {iid}{\sim } N_p(\mu ,~\varSigma ),~j=1,\ldots ,n\), where μ≠O is the common p × 1 mean value vector and Σ is the positive definite covariance matrix,

where \(\bar {\mathbf {X}}=[\bar {X},\ldots ,\bar {X}],\ \bar {X}=\frac {1}{n}(X_1+\cdots +X_n)\). Thus, we have the following result:

Theorem 8.3.2

Let \(X_j\overset {iid}{\sim } N_p(\mu ,~\varSigma ), ~\varSigma >O, ~j=1,\ldots ,n\) . Let X = [X 1, …, X n] and W = XX ′. Then, when μ = O, the p × p positive definite matrix W ∼ W p(n, Σ), Σ > O for n ≥ p. If μ≠O, \(W=(\mathbf {X}-\bar {\mathbf {X}})(\mathbf {X}-\bar {\mathbf {X}})'\sim W_p(n-1,~\varSigma ),~\varSigma >O,\) for n − 1 ≥ p, where \(\bar {\mathbf {X}}=[\bar {X},\ldots ,\bar {X}],\ \bar {X}=\frac {1}{n}(X_1+\cdots +X_n)\).
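Theorem 8.3.2 can be probed by simulation. The Python/NumPy sketch below relies on the standard fact that a W p(m, Σ) matrix has mean mΣ. The particular Σ, μ, n and number of replications are arbitrary illustrative choices. After centring, the average of W should be close to (n − 1)Σ rather than nΣ, reflecting the degree of freedom lost by subtracting the sample mean.

```python
import numpy as np

rng = np.random.default_rng(2)
p, n, reps = 3, 10, 20000
sigma = np.array([[2.0, 0.5, 0.0], [0.5, 1.0, 0.3], [0.0, 0.3, 1.5]])   # illustrative Sigma > O
mu = np.array([1.0, -2.0, 0.5])                                         # illustrative mean, mu != O
chol = np.linalg.cholesky(sigma)                                        # Sigma = C C'

z = rng.standard_normal((reps, p, n))
x = mu[None, :, None] + chol @ z            # reps copies of X, columns iid N_p(mu, Sigma)
xc = x - x.mean(axis=2, keepdims=True)      # X - Xbar: subtract the sample mean from every column
w = xc @ xc.transpose(0, 2, 1)              # W = (X - Xbar)(X - Xbar)'
w_mean = w.mean(axis=0)                     # Monte Carlo estimate of E[W]
```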

Now, consider the case n < p. Let us denote n as r < p in order to avoid any confusion with n as specified in the nonsingular case. Letting X be as previously defined, X is a real matrix of order p × r, n = r < p. Let T 1 = XX ′ and T 2 = X ′ X where X ′ X is an r × r positive definite matrix since X and X ′ are full rank matrices of rank r < p. Thus, all the eigenvalues of T 2 = X ′ X are positive and the eigenvalues of T 1 are either positive or equal to zero since T 1 is a real positive semi-definite matrix. Letting λ be a nonzero eigenvalue of T 1, consider the following determinant, denoted by δ, which is expanded in two ways by making use of certain properties of the determinants of partitioned matrices that are provided in Sect. 1.3:

which shows that λ is an eigenvalue of X ′ X. Now expand δ as follows:

so that all the r nonzero eigenvalues of T 2 = X ′ X are also eigenvalues of T 1 = XX ′, the remaining eigenvalues of T 1 being zeros. As well, one has |I r −X ′ X| = |I p −XX ′|. These results are next stated as a theorem.

Theorem 8.3.3

Let X be a p × r matrix of full rank r < p. Let the real p × p positive semi-definite matrix T 1 = XX ′ and the r × r real positive definite matrix T 2 = X ′ X . Then, (a) the r positive eigenvalues of T 2 are identical to those of T 1 , the remaining eigenvalues of T 1 being equal to zero; (b) |I r −X ′ X| = |I p −XX ′|.
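Both parts of Theorem 8.3.3 are easy to check numerically for a random full-rank p × r matrix X. Here is a minimal Python/NumPy sketch; the dimensions and seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(3)
p, r = 5, 2
x = rng.standard_normal((p, r))                   # p x r, full rank r < p (almost surely)
t1 = x @ x.T                                      # p x p, positive semi-definite
t2 = x.T @ x                                      # r x r, positive definite

eig_t1 = np.sort(np.linalg.eigvalsh(t1))[::-1]    # descending
eig_t2 = np.sort(np.linalg.eigvalsh(t2))[::-1]
det_r = np.linalg.det(np.eye(r) - t2)             # |I_r - X'X|
det_p = np.linalg.det(np.eye(p) - t1)             # |I_p - XX'|
```

The r largest eigenvalues of T 1 agree with those of T 2, and the remaining p − r are zero up to rounding. The two determinants coincide.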

Additional results relating the p-variate real Gaussian distribution to the real Wishart distribution are needed in connection with the singular case. Let the p × 1 vector X j have a p-variate real Gaussian distribution whose mean value is the null vector and covariance matrix is positive definite, with \(X_j \overset {iid}{\sim } N_p(O,~\varSigma ),~\varSigma >O\), j = 1, …, r, r < p. Let X = [X 1, …, X r] be a p × r matrix, which, in this instance, is also the sample matrix. We are seeking the distribution of T 1 = XX ′ when r < p, where T 1 corresponds to a singular Wishart matrix. Letting T be an r × r lower triangular matrix with positive diagonal elements, and G be an r × p, r < p, semiorthonormal matrix, that is, GG′ = I r, we have the representation X ′ = TG, so that

where h(G) is a differential element associated with G. Then, on applying Theorem 4.2.2, we have

where V r,p is the Stiefel manifold or the space of semi-orthonormal r × p, r < p, matrices. Observe that the density of X ′, denoted by \(f_{\mathbf {X}'}(\mathbf {X}')\), is the following:

Let T 2 = X ′Σ −1 X or simply T 2 = X ′ X when Σ = I; note that Σ will vanish upon letting \(\mathbf {Y}=\varSigma ^{-\frac {1}{2}}\mathbf {X} \Rightarrow \mathrm {d}\mathbf {Y} = |\varSigma |{ }^{-\frac {r}{2}}\mathrm {d}\mathbf {X}\). Now, on expressing dX ′ in terms of dT 2 by making use of Theorem 4.2.3, the following result is obtained:

Theorem 8.3.4

Let \(X_j\overset {iid}{\sim }N_p(\mu ,~\varSigma ),~\varSigma >O,~ j=1,\ldots ,r,~ r<p\) . Let \(\mathbf {X}=[X_1,\ldots ,X_r],~\bar {X}=\frac {1}{r}(X_1+\cdots +X_r) \) and \( \bar {\mathbf {X}}=(\bar {X},\ldots ,\bar {X})\) . Letting T 2 = X ′Σ −1 X or T 2 = X ′ X when Σ = I, T 2 has the following density, denoted by f t(T 2), when μ = O:

and zero elsewhere. Note that the r × r matrix T 2 = X ′ X or T 2 = X ′Σ −1 X when Σ≠I, has a Wishart distribution with p degrees of freedom and parameter matrix I, that is, T 2 ∼ W r(p, I), r ≤ p. When μ≠O, \(T_2=(\mathbf {X}-\bar {\mathbf {X}})'\varSigma ^{-1}(\mathbf {X} - \bar {\mathbf {X}})\) has a Wishart distribution with p − 1 degrees of freedom or, equivalently, T 2 ∼ W r(p − 1, I), r ≤ p − 1.

8.3.1. Singular Wishart and matrix-variate gamma distributions, real case

We now consider the case of a singular matrix-variate gamma distribution. Let the p × 1 vector X j have a p-variate real Gaussian distribution whose mean value is the null vector and covariance matrix is positive definite, with \(X_j \overset {iid}{\sim } N_p(O,~\varSigma ),~\varSigma >O\), j = 1, …, r, r < p. For convenience, let Σ = I p. Let X = [X 1, …, X r] be the p × r sample matrix. Then, for r ≥ p, XX ′ is distributed as a Wishart matrix with r ≥ p degrees of freedom, that is, XX ′∼ W p(r, I), r ≥ p. This result still holds for any positive definite matrix Σ; it suffices then to replace I by Σ. What about the distribution of XX ′ if r < p, which corresponds to the singular case? In this instance, the real matrix XX ′≥ O (positive semi-definite) and the density of X, denoted by f 1(X), is the following:

Let W 2 be a p × p nonsingular Wishart matrix with n ≥ p degrees of freedom, that is, W 2 ∼ W p(n, I). Let X and W 2 be independently distributed. Then, the joint density of X and W 2, denoted by f 2(X, W 2), is given by

for W 2 > O, XX ′≥ O, n ≥ p, r < p. Letting U = XX ′ + W 2 > O, and the joint density of U and X be denoted by f 3(X, U), we have

where

Letting \(V=U^{-\frac {1}{2}}\mathbf {X}\) for fixed U, \(\mathrm {d}\mathbf {X}=|U|{ }^{\frac {r}{2}}\mathrm {d}V\), and the joint density of U and V , denoted by f 4(U, V ), is then

Since f 4(U, V ) factorizes into a function of U alone times a function of V alone, U and V are independently distributed. By integrating out U with the help of a real matrix-variate gamma integral, we obtain the density of V , denoted by f 5(V ), as

in view of Theorem 8.3.3(b), where V is p × r, r < p, c being the normalizing constant. Thus, we have the following result:

Theorem 8.3.5

Let X = [X 1, …, X r] and \(X_j \overset {iid}{\sim } N_p(O,\,I),~ j=1,\ldots ,r,~ r<p. \) Let the p × p real positive definite matrix W 2 be Wishart distributed with n degrees of freedom, that is, W 2 ∼ W p(n, I). Let U = XX ′ + W 2 > O and let \(V=U^{-\frac {1}{2}}\mathbf {X}\) where V is a p × r, r < p, matrix of full rank r. Observe that V V ′≥ O (positive semi-definite). Then, the densities of the r × p matrix V ′ and the matrix S = V ′V , respectively denoted by f 5(V ) and f 6(S), are as follows, U and V being independently distributed:

and

Proof

In light of Theorem 8.3.3(b), |I p − V V ′| = |I r − V ′V |. Observe that V V ′ is p × p and positive semi-definite whereas V ′V is r × r and positive definite. As well, \(\frac {n}{2}-\frac {p+1}{2}=\frac {n-p+r}{2}-\frac {r+1}{2}\). Letting S = V ′V and expressing dV ′ in terms of dS by applying Theorem 4.2.3, then for r < p,

Now, integrating out S by making use of a type-1 beta integral, we have

for n ≥ p, r < p. The normalizing constants in (8.3.11) and (8.3.12) follow from (i) and (ii). This completes the proof.
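The change of variables \(V=U^{-\frac {1}{2}}\mathbf {X}\) for fixed U, used in deriving f 4(U, V ), rests on a standard fact: the linear map X ↦ AX on real p × r matrices has Jacobian |A|^r. With \(A=U^{-\frac {1}{2}}\) this gives \(\mathrm {d}\mathbf {X}=|U|{ }^{\frac {r}{2}}\mathrm {d}V\). Stacking the columns of X turns the map into multiplication by the Kronecker product I r ⊗ A, whose determinant can be compared directly with |A|^r. The Python/NumPy sketch below does this for an arbitrary U > O.

```python
import numpy as np

rng = np.random.default_rng(4)
p, r = 4, 2
g = rng.standard_normal((p, p))
u = g @ g.T + p * np.eye(p)                           # an arbitrary fixed U > O

lam, vecs = np.linalg.eigh(u)
u_inv_half = vecs @ np.diag(lam ** -0.5) @ vecs.T     # U^{-1/2}

x = rng.standard_normal((p, r))
v = u_inv_half @ x                                    # V = U^{-1/2} X
# With column-major vec, vec(A X) = (I_r kron A) vec(X), so the Jacobian matrix is kron(I_r, A)
jac = np.kron(np.eye(r), u_inv_half)
```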

At this juncture, we are considering the singular version of the determinantal equation in (8.2.8). Let W 1 and W 2 be independently distributed p × p real matrices where W 1 = XX ′, X = [X 1, …, X r] with \(X_j\overset {iid}{\sim } N_p(O,~I), ~j=1,\ldots ,r, ~r<p\), and the positive definite matrix W 2 ∼ W p(n, I), n ≥ p. The equation

which, in turn, implies that μ is an eigenvalue of V V ′≥ O and all the eigenvalues are positive or zero. However, it follows from (8.3.3) and (8.3.4) that

Hence, the following result:

Theorem 8.3.6

Let X be a p × r matrix whose columns are iid N p(O, I) and r < p. Let W 1 = XX ′≥ O which is a p × p positive semi-definite matrix, W 2 > O be a p × p Wishart distributed matrix having n degrees of freedom, that is, W 2 ∼ W p(n, I), n ≥ p, and let W 1 and W 2 be independently distributed. Then, U = W 1 + W 2 > O and \(V=U^{-\frac {1}{2}}X\) are independently distributed. Moreover,

where the roots μ j > 0, j = 1, …, r, are the eigenvalues of V ′V > O, and the eigenvalues of V V ′ are μ j > 0, j = 1, …, r, with the remaining p − r eigenvalues of V V ′ being equal to zero.
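Theorem 8.3.6 can be checked on a single simulated draw. In the Python/NumPy sketch below, p, r < p and n ≥ p are arbitrary. The roots of |W 1 − μ(W 1 + W 2)| = 0 are computed as eigenvalues of (W 1 + W 2)^{−1}W 1 and compared with the eigenvalues of V ′V and V V ′ for \(V=U^{-\frac {1}{2}}\mathbf {X}\).

```python
import numpy as np

rng = np.random.default_rng(5)
p, r, n = 5, 2, 7
x = rng.standard_normal((p, r))                 # columns iid N_p(O, I), r < p
z = rng.standard_normal((p, n))
w1, w2 = x @ x.T, z @ z.T                       # W1 >= O of rank r, W2 ~ W_p(n, I)
u = w1 + w2

lam, vecs = np.linalg.eigh(u)
v = vecs @ np.diag(lam ** -0.5) @ vecs.T @ x    # V = U^{-1/2} X, p x r

mu_s = np.sort(np.linalg.eigvalsh(v.T @ v))[::-1]     # r eigenvalues of V'V
mu_vv = np.sort(np.linalg.eigvalsh(v @ v.T))[::-1]    # p eigenvalues of VV'
# roots of |W1 - mu (W1 + W2)| = 0 are the eigenvalues of (W1 + W2)^{-1} W1
roots = np.sort(np.linalg.eigvals(np.linalg.solve(u, w1)).real)[::-1]
```

The r nonzero roots coincide with the eigenvalues of V ′V and lie strictly between 0 and 1. The remaining p − r eigenvalues of V V ′, and the remaining p − r roots, are zero up to rounding.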

Let S = PDP′, D = diag(μ 1, …, μ r), PP′ = I r, P′P = I r. Then, on applying Theorem 8.2.2,

after integrating out over the differential element associated with the orthonormal matrix P. Substituting in f 6(S) of Theorem 8.3.5 yields the following result:

Theorem 8.3.7

Let μ 1, …, μ r be the nonzero roots of the determinantal equation |W 1 − μ(W 1 + W 2)| = 0 where \(W_1=\mathbf {XX}',~ \mathbf {X}=[X_1,\ldots ,X_r], \ X_j\overset {iid}{\sim } N_p(O,~I),~ j=1,\ldots ,r<p\) , W 2 ∼ W p(n, I), n ≥ p, and W 1 and W 2 are independently distributed. Letting μ 1 > μ 2 > ⋯ > μ r > 0, r < p, the joint density of the nonzero roots μ 1, …, μ r , denoted by f μ(μ 1, …, μ r), is given by

It can readily be observed from (8.3.9) that U = XX ′ + W 2 and \(V=U^{-\frac {1}{2}}X\) are indeed independently distributed.

8.3.2. A direct evaluation as an eigenvalue problem

Consider the singular version of the original determinantal equation

where W 1 is singular, W 2 is nonsingular, and W 1 and W 2 are independently distributed. Thus, the roots λ j’s of the equation |W 1 − λW 2| = 0 coincide with the eigenvalues of the matrix \(U=W_2^{-\frac {1}{2}}W_1W_2^{-\frac {1}{2}}\). Let W 2 be a real nonsingular matrix-variate gamma or Wishart distributed matrix. Let \(W_1=\mathbf {XX}',~ \mathbf {X}=[X_1,\ldots ,X_r],~ X_j\overset {iid}{\sim } N_p(O,~\varSigma ),~\varSigma >O,~ j=1,\ldots ,r,~ r<p,\) or, equivalently, the p × r, r < p, matrix X is a simple random sample from this p-variate real Gaussian population. We will take Σ = I without any loss of generality. In this case, X is a p × r full rank matrix with r < p. Let W 2 ∼ W p(n, I), n ≥ p, that is, W 2 is a nonsingular Wishart matrix with n degrees of freedom and parameter matrix I, and W 1 ≥ O (positive semi-definite). Then, the joint density of X and W 2, denoted by f 7(X, W 2), is the following:

Consider the exponent

where \(V=W_2^{-\frac {1}{2}}\mathbf {X}\Rightarrow \mathrm {d}\mathbf {X}=|W_2|{ }^{\frac {r}{2}}\mathrm {d}V\) for fixed W 2. The joint density of W 2 and V , denoted by f 8(V, W 2), is then

Observe that \(VV'=W_2^{-\frac {1}{2}}\mathbf {XX}'W_2^{-\frac {1}{2}}=U\) of (8.3.14). Integrating out W 2 in (ii) by using a real matrix-variate gamma integral, we obtain the marginal density of V , denoted by f 9(V ), as

where V V ′≥ O (positive semi-definite). Note that

which follows from Theorem 8.3.3(b). This last result can also be established by expanding the following determinant in two ways as was done in (8.3.3) and (8.3.4):

Hence, the density of V ′ must be of the following form where c 1 is the normalizing constant:

On applying Theorem 4.2.3, (iv) can be transformed into a function of S 1 = V ′V > O, S 1 being of order r × r. Then,

Substituting the above expression for dV ′ in f 10(V ′) and then integrating over the r × r matrix S 1 > O, we have

Accordingly, the density of V ′ is the following:

Note that \(\varGamma _r(\frac {p}{2})\) cancels out. Then, by re-expressing dV ′ in terms of dS 1 in (8.3.15), the density of S 1 = V ′V is obtained as

for S 1 = V ′V > O, n ≥ p, r < p, and zero elsewhere, which is a real r × r matrix-variate type-2 beta density with the parameters \((\frac {p}{2},~\frac {n+r-p}{2})\). Thus, the following result:

Theorem 8.3.8

Let \(W_1=\mathbf {XX}',~ \mathbf {X}=[X_1,\ldots ,X_r], ~X_j\overset {iid}{\sim } N_p(O,\,I),~ j=1,\ldots ,r,\) r < p, W 2 ∼ W p(n, I), n ≥ p, and W 1 and W 2 be independently distributed. Let \(V=W_2^{-\frac {1}{2}}X,~ VV'\ge O\) and S 1 = V ′V > O. Then, |I p + V V ′| = |I r + V ′V | = |I r + S 1|. The density of V ′ is given in (8.3.15) and that of S 1 , which is specified in (8.3.16), is a real matrix-variate type-2 beta density with the parameters \((\frac {p}{2},\, \frac {n+r-p}{2})\) . Moreover, the positive semi-definite matrix \(S_2=W_2^{-\frac {1}{2}}\mathbf {XX}'W_2^{-\frac {1}{2}}\) is distributed, almost surely, as S 1 , which has a nonsingular real matrix-variate type-2 beta distribution with the parameters \((\frac {p}{2}, \, \frac {n+r-p}{2})\).

Observe that this theorem also holds when \(X_j\overset {iid}{\sim } N_p(O,\varSigma ),~ \varSigma >O\) and W 2 ∼ W p(n, Σ), Σ > O, and the distribution of S 2 will still be free of Σ. Converting (8.3.16) in terms of the eigenvalues of S 1, which are also the nonzero eigenvalues of \(S_2=W_2^{-\frac {1}{2}}\mathbf {XX}'W_2^{-\frac {1}{2}}\) of (8.3.14), we have the following density, denoted by f 12(λ 1, …, λ r)dD, D = diag(λ 1, …, λ r), assuming that the eigenvalues are distinct and such that λ 1 > λ 2 > ⋯ > λ r > 0:

Theorem 8.3.9

Let W 2 and X be as defined in (8.3.14). Then, the joint density of the nonzero eigenvalues λ 1, …, λ r of \(S_2=W_2^{-\frac {1}{2}}\mathbf {XX}'W_2^{-\frac {1}{2}}\) , which are assumed to be distinct and such that λ 1 > ⋯ > λ r > 0, is given in (8.3.17).

In (8.3.13) we have obtained the joint density of the nonzero roots μ 1 > ⋯ > μ r of the determinantal equation

Since |W 1 − μ(W 1 + W 2)| = 0 is equivalent to \(|W_1-\frac {\mu }{1-\mu }W_2|=0\), making the substitution \(\mu _j=\frac {\lambda _j}{1+\lambda _j}\) in (8.3.13) yields the density appearing in (8.3.17). This is stated next as a theorem.

Theorem 8.3.10

When \(\mu _j=\frac {\lambda _j}{1+\lambda _j}\) or \(\lambda _j=\frac {\mu _j}{1-\mu _j},\) the distributions of the μ j ’s or the λ j ’s, as respectively specified in (8.3.13) and (8.3.17), coincide.
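Theorem 8.3.10, together with the determinant identity of Theorem 8.3.8, can be illustrated on one simulated draw. The Python/NumPy sketch below uses \(V=W_2^{-\frac {1}{2}}\mathbf {X}\) with arbitrary dimensions. The nonzero roots λ j are taken as the eigenvalues of S 1 = V ′V, and the roots μ j of |W 1 − μ(W 1 + W 2)| = 0 are computed independently.

```python
import numpy as np

rng = np.random.default_rng(6)
p, r, n = 5, 2, 8
x = rng.standard_normal((p, r))                     # columns iid N_p(O, I), r < p
z = rng.standard_normal((p, n))
w1, w2 = x @ x.T, z @ z.T                           # W1 singular, W2 ~ W_p(n, I)

lam_w2, vecs = np.linalg.eigh(w2)
v = vecs @ np.diag(lam_w2 ** -0.5) @ vecs.T @ x     # V = W2^{-1/2} X
s1 = v.T @ v                                        # S1 = V'V, r x r

lam = np.sort(np.linalg.eigvalsh(s1))[::-1]         # nonzero roots of |W1 - lambda W2| = 0
mu_roots = np.sort(np.linalg.eigvals(np.linalg.solve(w1 + w2, w1)).real)[::-1][:r]
```

One finds μ j = λ j/(1 + λ j) for j = 1, …, r, and |I p + V V ′| = |I r + S 1|, both up to rounding.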

8.3a. The Singular Complex Case

The matrix manipulations that were utilized in the real case also apply in the complex domain. The following result parallels Theorem 8.3.1:

Theorem 8.3a.1

Let the p × p matrix A = A ∗≥ O be a Hermitian positive semi-definite matrix of rank r < p, where A ∗ designates the conjugate transpose of A. Then, A can be represented as \(A=A_1A_1^{*}\) where A 1 is p × r, r < p, of rank r, that is, all the columns of A 1 are linearly independent.

A derivation of the Wishart matrix in the complex case can also be worked out from a complex Gaussian distribution. In earlier chapters, we have derived the Wishart density as a particular case of the matrix-variate gamma density, whether in the real or complex domain. Let the p × 1 complex vectors \(\tilde {X}_j,\ j=1,\ldots ,n,\) be independently distributed as p-variate complex Gaussian random variables with the null vector as their mean value and a common Hermitian positive definite covariance matrix, that is, \(\tilde {X}_j\overset {iid}{\sim } \tilde {N}_p(O,~\varSigma ),~ \varSigma =\varSigma ^{*}>O\) for j = 1, …, n. Let the p × n matrix \(\tilde {\mathbf {X}}=[\tilde {X}_1,\ldots ,\tilde {X}_n]\) be the simple random sample matrix from this complex Gaussian population. Then, the density of \(\tilde {\mathbf {X}}\), denoted by \(\tilde {f}(\tilde {\mathbf {X}})\), is given by

for n ≥ p. Let the p × p Hermitian positive definite matrix \(\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}=\tilde {W}\). For n ≥ p, it follows from Theorem 4.2a.3 that

where \(\mathrm {d}\tilde {\mathbf {X}}=\mathrm {d}Y_1\wedge \mathrm {d}Y_2, ~\tilde {\mathbf {X}}=Y_1+iY_2,~ i=\sqrt {-1},~ Y_1,Y_2\) being real p × n matrices. Given (8.3a.1) and (8.3a.2), the density of \(\tilde {W}\), denoted by \(\tilde {f}_1(\tilde {W})\), is obtained as

and zero elsewhere; this is the complex Wishart density with n degrees of freedom and parameter matrix Σ > O, which is written as \(\tilde {W}\sim \tilde {W}_p(n,~\varSigma ),~\varSigma >O,~ n\ge p\). If \(\tilde {X}_j\sim \tilde {N}_p(\tilde {\mu },~\varSigma ),~\varSigma >O,\) then letting \(\bar {\tilde {X}}=\frac {1}{n}(\tilde {X}_1+\cdots +\tilde {X}_n),~\bar {\tilde {\mathbf {X}}} = (\bar {\tilde {X}},\ldots ,\bar {\tilde {X}})\) and

we have \(\tilde {W}\sim \tilde {W}_p(n-1,~\varSigma ),~ n-1\ge p,~\varSigma >O\), or \(\tilde {W}\) has a Wishart distribution with n − 1 instead of n degrees of freedom, \(\tilde {\mu }\ne O\) being eliminated by subtracting the sample mean. The remainder of this section is devoted to the distribution of \(\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\) for n < p, that is, in the singular case. Proceeding as in the real case, we have

where \(\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\ge O\) is p × p, whereas the r × r, r < p, matrix \(\tilde {\mathbf {X}}^{*}\tilde {\mathbf {X}}>O\). Then, we have the following result:

Theorem 8.3a.2

Let \(\tilde {T}_1=\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\) and \(\tilde {T}_2=\tilde {\mathbf {X}}^{*}\tilde {\mathbf {X}}\) . Then, the eigenvalues of \(\tilde {T}_2\) are all real and positive and the nonzero eigenvalues of \(\tilde {T}_1\) are identical to those of \(\tilde {T}_2\) , the remaining ones being equal to zero.

The complex counterpart of Theorem 8.3.4 that follows can be derived using steps parallel to those utilized in the real case.

Theorem 8.3a.3

Let the p × 1 complex vectors \(\tilde {X}_j\overset {iid}{\sim } \tilde {N}_p(\tilde {\mu },~ \varSigma ),~\varSigma >O, ~j=1,\ldots ,r\) . Let \(\tilde {\mathbf {X}}=[\tilde {X}_1,\ldots ,\tilde {X}_r]\) be the simple random sample matrix from this complex p-variate Gaussian population. Let \(\tilde {T}_2=\tilde {\mathbf {X}}^{*}\varSigma ^{-1}\tilde {\mathbf {X}}\,\) or \(\,\tilde {T}_2=\tilde {\mathbf {X}}^{*}\tilde {\mathbf {X}}\) if Σ = I p . Then, the density of \(\tilde {T}_2\) , denoted by \(\tilde {f}_u(\tilde {T}_2)\) , is the following:

so that \(\tilde {T}_2\sim \tilde {W}_r(p,I)\) , that is, \(\tilde {T}_2\) has a complex Wishart distribution with p degrees of freedom. If \(\tilde {\mu }\ne O\) , let \(\tilde {T}_2=(\tilde {\mathbf {X}}-\bar {\tilde {\mathbf {X}}})^{*}(\tilde {\mathbf {X}}-\bar {\tilde {\mathbf {X}}})\) or \(\tilde {T}_2=(\tilde {\mathbf {X}}-\bar {\tilde {\mathbf {X}}})^{*}\varSigma ^{-1}(\tilde {\mathbf {X}}-\bar {\tilde {\mathbf {X}}})\) when Σ≠I with \(\bar {\tilde {\mathbf {X}}}=(\bar {\tilde {X}},\ldots ,\bar {\tilde {X}}) \) wherein \(\bar {\tilde {X}}=\frac {1}{r}(\tilde {X}_1+\cdots +\tilde {X}_r)\) . Then, \(\tilde {T}_2\sim \tilde {W}_r(p-1,~I),~ r\le p-1\).
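Theorem 8.3a.3 with \(\tilde {\mu }=O\) can be probed by simulation. The Python/NumPy sketch below assumes that a standard complex Gaussian entry has independent real and imaginary parts, each N(0, 1/2), so that \(E|\tilde {x}|^2=1\). This is consistent with a density proportional to \(\mathrm {e}^{-\mathrm {tr}(\tilde {\mathbf {X}}^{*}\tilde {\mathbf {X}})}\). Under this convention, a \(\tilde {W}_r(p,I)\) matrix has mean pI r. The same draws also illustrate Theorem 8.3a.2. Dimensions, seed and replication count are arbitrary.

```python
import numpy as np

def std_complex_normal(shape, rng):
    """iid complex entries with independent real and imaginary parts N(0, 1/2), so E|x|^2 = 1."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

rng = np.random.default_rng(7)
p, r, reps = 4, 2, 20000
x = std_complex_normal((reps, p, r), rng)       # reps independent p x r sample matrices
t2 = x.conj().transpose(0, 2, 1) @ x            # T2 = X^* X, an r x r Hermitian matrix per draw
t2_mean = t2.mean(axis=0)                       # Monte Carlo estimate of E[T2]

# Theorem 8.3a.2 on the first draw: nonzero eigenvalues of T1 = X X^* equal those of T2
eig_t1 = np.sort(np.linalg.eigvalsh(x[0] @ x[0].conj().T))[::-1]
eig_t2 = np.sort(np.linalg.eigvalsh(t2[0]))[::-1]
```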

8.3a.1. Singular gamma or singular Gaussian distribution, complex case

Let \(\tilde {\mathbf {X}}=[\tilde {X}_1,\ldots ,\tilde {X}_r]\) where the p × 1 vectors \(\tilde {X}_1,\ldots ,\tilde {X}_r\) are independently distributed as complex Gaussian vectors whose mean value is the null vector and covariance matrix is I, that is, \(\tilde {X}_j \overset {iid}{\sim } \tilde {N}_p(O,~I)\), j = 1, …, r, r < p. Then, the density of \(\tilde {\mathbf {X}}\), denoted by \(\tilde {f}_1(\tilde {\mathbf {X}})\), is

Let r < p so that \(\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\ge O\) (Hermitian positive semi-definite). Let the p × p Hermitian positive definite matrix \(\tilde {W}_2\) have a Wishart density with n ≥ p degrees of freedom and parameter matrix I, that is, \(\tilde {W}_2\sim \tilde {W}_p(n,~I),~n\ge p\). Further assume that \(\tilde {\mathbf {X}}\) and \(\tilde {W}_2\) are independently distributed. Then, the joint density of \(\tilde {\mathbf {X}}\) and \(\tilde {W}_2\), denoted by \(\tilde {f}_2(\tilde {\mathbf {X}},\,\tilde {W}_2)\), is the following:

where \(\tilde {\mathbf {X}}\) is p × r, r < p. Letting the p × p matrix \(\tilde {U}=\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}+\tilde {W}_2>O\), the joint density of \(\tilde {U}\) and \(\tilde {\mathbf {X}}\), denoted by \(\tilde {f}_3(\tilde {\mathbf {X}},\,\tilde {U})\), is given by

Letting \(\tilde {U}=\tilde {U}^{*}>O\), one has

where \(\tilde {V}\) is a p × r matrix of rank r < p. Since \(\mathrm {d}\tilde {X}=|\mathrm {det}(\tilde {U})|{ }^r\mathrm {d}\tilde {V}\) for fixed \(\tilde {U}\), the joint density of \(\tilde {U}\) and \(\tilde {V}\) is as follows:

As has been previously noted,

where \(\tilde {V}^{*}\tilde {V}\) is an r × r Hermitian positive definite matrix. Since \(\tilde {f}_4(\tilde {U},\tilde {V})\) can be factorized, \(\tilde {U}\) and \(\tilde {V}\) are independently distributed, and the marginal density of \(\tilde {V}\), denoted by \(\tilde {f}_5(\tilde {V})\), is of the following form, after integrating out \(\tilde {U}\) with the help of a complex matrix-variate gamma integral:

where \(\tilde {c}\) is the normalizing constant. Now, proceeding as in the real case, the following result is obtained:

Theorem 8.3a.4

Let the p × 1 complex vectors \(\tilde {X}_j\overset {iid}{\sim } \tilde {N}_p(O,~I), ~j=1,\ldots ,r\) , and \(\tilde {\mathbf {X}}=[\tilde {X}_1,\ldots ,\tilde {X}_r]\) be the p × r full rank sample matrix with r < p. Let \(\tilde {W}_2\) be a p × p Hermitian positive definite matrix having a nonsingular Wishart density with n degrees of freedom and parameter matrix I, that is, \(\tilde {W}_2\sim \tilde {W}_p(n,~I), ~n\ge p\) , and assume that \(\tilde {W}_2\) and \(\tilde {\mathbf {X}}\) are independently distributed. Let \(\tilde {U}=\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}+\tilde {W}_2\) be Hermitian positive definite, \(\tilde {V}=\tilde {U}^{-\frac {1}{2}}\tilde {\mathbf {X}}\) and \(\tilde {S}=\tilde {V}^{*}\tilde {V}\) . Then, \(\tilde {U}\) and \(\tilde {V}\) are independently distributed and the densities of \(\tilde {V}\) and \(\tilde {S}\) , respectively denoted by \(\tilde {f}_5(\tilde {V})\) and \(\tilde {f}_6(\tilde {S})\) , are

and

observing that n − p = (n + r − p) − r.

Let \(\tilde {W}_1=\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\) where the p × r matrix \(\tilde {\mathbf {X}}\) is the previously defined sample matrix arising from a standard complex Gaussian population. Let \(\tilde {W}_2\sim \tilde {W}_p(n,~I)\) and assume that \(\tilde {W}_1\) and \(\tilde {W}_2\) are independently distributed. Letting \(\tilde {U}=\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}+\tilde {W}_2>O\), consider the determinantal equation

\(\mathrm {det}(\tilde {W}_1-\mu \tilde {U})=0.\qquad \mathrm {(i)}\)

Then, as in the real case, the following result can be obtained:

Theorem 8.3a.5

Let \(\tilde {W}_1,~\tilde {W}_2,~\tilde {V}\) and \(\tilde {U}\) be as previously defined. Then,

This establishes that the nonzero roots \(\mu_j\)'s of the determinantal equation (i) coincide with the eigenvalues of \(\tilde {V}^{*}\tilde {V}\), and since \(\tilde {V}^{*}\tilde {V}>O\), these eigenvalues are real and positive. Assuming that they are distinct, we order them so that \(\mu_1>\cdots>\mu_r>0\). Then, steps parallel to those utilized in the real case yield the following result:

Theorem 8.3a.6

Let \(\mu_1,\ldots,\mu_r\) be the nonzero roots of the equation

\(\mathrm {det}(\tilde {W}_1-\mu (\tilde {W}_1+\tilde {W}_2))=0\)

where \(\tilde {W}_1\) and \(\tilde {W}_2\) are as previously defined. Let \(\mu_1>\cdots>\mu_r>0\), r < p, and let \(D=\mathrm {diag}(\mu_1,\ldots,\mu_r)\). Then, the joint density of the eigenvalues \(\mu_1,\ldots,\mu_r\), denoted by \(\tilde {f}_{\mu }(\mu _1,\ldots ,\mu _r)\), which is available from (8.3a.12) and the relationship between \(\mathrm {d}\tilde {S}\) and dD, is the following:

8.3a.2. A direct method of evaluation in the complex case

The steps of the derivations being analogous to those utilized in the real case, the corresponding theorems will simply be stated for the complex case. Let \(\tilde {W}_1=\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\) be a p × p singular Wishart matrix where the p × r matrix \(\tilde {\mathbf {X}}=[\tilde {X}_1,\ldots ,\tilde {X}_r],~ \tilde {X}_j\overset {iid}{\sim } \tilde {N}_p(O,~I),~j=1,\ldots ,r,\) and r < p. That is, \(\tilde {\mathbf {X}}\) is a simple random sample matrix from this complex Gaussian population. Let \(\tilde {W}_2>O\) have a complex Wishart distribution with n degrees of freedom and parameter matrix I, that is, \(\tilde {W}_2\sim \tilde {W}_p(n,~I),~n\ge p\), and assume that \(\tilde {W}_1\) and \(\tilde {W}_2\) are independently distributed. Consider the initial equation

\(\mathrm {det}(\tilde {W}_1-\lambda \tilde {W}_2)=0,\)

whose roots are \(\lambda_1,\ldots,\lambda_r,0,\ldots,0\), and the additional equation \(\mathrm {det}(\tilde {W}_1-\mu (\tilde {W}_1+\tilde {W}_2))=0,\) whose roots will be denoted by \(\mu_1,\ldots,\mu_r,0,\ldots,0\). In the following theorems, the \(\lambda_j\)'s and \(\mu_j\)'s will refer to these two sets of roots.
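Both sets of roots can be computed as eigenvalues: the roots of \(\mathrm {det}(\tilde {W}_1-t\tilde {B})=0\) with \(\tilde {B}>O\) are the eigenvalues of \(\tilde {B}^{-1}\tilde {W}_1\). The NumPy sketch below assumes standard complex Gaussian entries with independent N(0, 1/2) real and imaginary parts, a complex Wishart draw \(\tilde {W}_2=\tilde {G}\tilde {G}^{*}\), and hypothetical helper names. It checks that each equation has r nonzero roots and p − r zero roots, that the nonzero \(\mu_j\)'s are the eigenvalues of \(\tilde {V}^{*}\tilde {V}\) with \(\tilde {V}=(\tilde {W}_1+\tilde {W}_2)^{-\frac {1}{2}}\tilde {\mathbf {X}}\) (Theorem 8.3a.5), and that \(\mu_j=\lambda_j/(1+\lambda_j)\) (Theorem 8.3a.9).

```python
import numpy as np


def complex_gaussian(rng, rows, cols):
    """Matrix of iid standard complex Gaussian entries (real and imaginary parts N(0, 1/2))."""
    return (rng.standard_normal((rows, cols)) + 1j * rng.standard_normal((rows, cols))) / np.sqrt(2)


def roots_desc(a, b):
    """All p roots t of det(A - t B) = 0 for Hermitian A >= O and B > O, in decreasing order.

    They are the eigenvalues of B^{-1} A, which are real; imaginary parts are rounding noise."""
    return np.sort(np.linalg.eigvals(np.linalg.solve(b, a)).real)[::-1]


p, r, n = 5, 2, 8
rng = np.random.default_rng(1)
x = complex_gaussian(rng, p, r)
g = complex_gaussian(rng, p, n)
w1 = x @ x.conj().T                  # singular Wishart matrix of rank r
w2 = g @ g.conj().T                  # nonsingular complex Wishart W_p(n, I)

lam = roots_desc(w1, w2)             # det(W_1 - lambda W_2) = 0
mu = roots_desc(w1, w1 + w2)         # det(W_1 - mu (W_1 + W_2)) = 0

# Eigenvalues of V* V with V = U^{-1/2} X and U = W_1 + W_2 (Theorem 8.3a.5).
wu, qu = np.linalg.eigh(w1 + w2)
v = (qu * wu ** -0.5) @ qu.conj().T @ x
eig_vv = np.sort(np.linalg.eigvalsh(v.conj().T @ v))[::-1]

print(lam[:r], mu[:r])                                # the r nonzero roots of each equation
print(np.allclose(mu[:r], eig_vv))                    # nonzero mu_j's = eigenvalues of V* V
print(np.allclose(mu[:r], lam[:r] / (1 + lam[:r])))   # mu_j = lambda_j / (1 + lambda_j)
```

Sorting in decreasing order keeps the pairing of \(\lambda_j\) with \(\mu_j\), because \(\lambda\mapsto\lambda/(1+\lambda)\) is increasing.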

Theorem 8.3a.7

Let \(\tilde {W}_1=\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*},~ \tilde {\mathbf {X}}=[\tilde {X}_1,\ldots ,\tilde {X}_r],~ \tilde {X}_j\overset {iid}{\sim } \tilde {N}_p(O,~I),~ j=1,\ldots ,r,\) and r < p. Let \(\tilde {W}_2\sim \tilde {W}_p(n,~I),~ n\ge p,\) be a nonsingular complex Wishart matrix with n degrees of freedom and parameter matrix I. Further assume that \(\tilde {W}_1\) and \(\tilde {W}_2\) are independently distributed. Let \(\tilde {V}=\tilde {W}_2^{-\frac {1}{2}}\tilde {\mathbf {X}},~ \tilde {V}\tilde {V}^{*}\ge O,~ \tilde {V}^{*}\tilde {V}>O,\) and \(\tilde {S}_1=\tilde {V}^{*}\tilde {V}>O\). Then, \(\mathrm {det}(I_p+\tilde {V}\tilde {V}^{*})=\mathrm {det}(I_r+\tilde {V}^{*}\tilde {V})=\mathrm {det}(I_r+\tilde {S}_1)\), and the densities of \(\tilde {V}^{*}\) and \(\tilde {S}_1\) are respectively given by

and

which is a complex matrix-variate type-2 beta distribution with the parameters (p, n − p + r). Additionally, the p × p positive semi-definite matrix \(\tilde {S}_2=\tilde {W}_2^{-\frac {1}{2}}\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\tilde {W}_2^{-\frac {1}{2}}=\tilde {V}\tilde {V}^{*}\) is, almost surely, of rank r, and its r nonzero eigenvalues coincide with those of \(\tilde {S}_1=\tilde {V}^{*}\tilde {V}\), which has a nonsingular complex matrix-variate type-2 beta distribution with the parameters (p, n − p + r).
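The determinant identity and the relation between \(\tilde {S}_1\) and \(\tilde {S}_2\) in Theorem 8.3a.7 are purely algebraic (Sylvester's determinant identity, and the fact that \(\tilde {V}\tilde {V}^{*}\) and \(\tilde {V}^{*}\tilde {V}\) share their nonzero eigenvalues), so they can be checked on any single draw. A minimal NumPy sketch, assuming standard complex Gaussian entries with independent N(0, 1/2) real and imaginary parts and a complex Wishart draw \(\tilde {W}_2=\tilde {G}\tilde {G}^{*}\); the helper name is hypothetical:

```python
import numpy as np


def complex_gaussian(rng, rows, cols):
    """Matrix of iid standard complex Gaussian entries (real and imaginary parts N(0, 1/2))."""
    return (rng.standard_normal((rows, cols)) + 1j * rng.standard_normal((rows, cols))) / np.sqrt(2)


p, r, n = 5, 2, 8
rng = np.random.default_rng(2)
x = complex_gaussian(rng, p, r)
g = complex_gaussian(rng, p, n)
w2 = g @ g.conj().T                          # W_2 ~ complex Wishart W_p(n, I)

w, q = np.linalg.eigh(w2)
v = (q * w ** -0.5) @ q.conj().T @ x         # V = W_2^{-1/2} X, p x r
s1 = v.conj().T @ v                          # S_1 = V* V, r x r, positive definite
s2 = v @ v.conj().T                          # S_2 = W_2^{-1/2} X X* W_2^{-1/2}, p x p, rank r

det_p = np.linalg.det(np.eye(p) + s2).real   # det(I_p + V V*), real since the matrix is Hermitian
det_r = np.linalg.det(np.eye(r) + s1).real   # det(I_r + V* V)
eig1 = np.sort(np.linalg.eigvalsh(s1))[::-1]
eig2 = np.sort(np.linalg.eigvalsh(s2))[::-1]

print(np.isclose(det_p, det_r))              # Sylvester's determinant identity
print(np.allclose(eig2[:r], eig1), np.allclose(eig2[r:], 0, atol=1e-10))
```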

Theorem 8.3a.8

Let \(\tilde {W}_2\) and \(\tilde {\mathbf {X}}\) be as defined in Theorem 8.3a.7. Then, the joint density of the nonzero eigenvalues \(\lambda_1,\ldots,\lambda_r\) of \(\tilde {S}_2=\tilde {W}_2^{-\frac {1}{2}}\tilde {\mathbf {X}}\tilde {\mathbf {X}}^{*}\tilde {W}_2^{-\frac {1}{2}}\), which are assumed to be distinct and such that \(\lambda_1>\cdots>\lambda_r>0\), is given by

where \(D=\mathrm {diag}(\lambda_1,\ldots,\lambda_r)\).

Theorem 8.3a.9

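Under the one-to-one transformation \(\mu _j=\frac {\lambda _j}{1+\lambda _j}\), or equivalently \(\lambda _j=\frac {\mu _j}{1-\mu _j}\), j = 1, …, r, the joint densities of the \(\mu_j\)'s and \(\lambda_j\)'s, as respectively given in (8.3a.13) and (8.3a.17), are equivalent, that is, each can be obtained from the other.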
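The algebra behind Theorem 8.3a.9 can be written out in a few lines. Note first that μ = 1 is never a root, since \(\mathrm {det}(-\tilde {W}_2)\ne 0\). For μ ≠ 1, factoring out 1 − μ gives the first line below, so that μ is a root of \(\mathrm {det}(\tilde {W}_1-\mu (\tilde {W}_1+\tilde {W}_2))=0\) exactly when λ = μ/(1 − μ) is a root of \(\mathrm {det}(\tilde {W}_1-\lambda \tilde {W}_2)=0\), that is, a nonzero eigenvalue of \(\tilde {S}_2\). The remaining lines record the factors that arise when a density in the \(\mu_j\)'s is rewritten in terms of the \(\lambda_j\)'s; this is a sketch of the change of variables only, not a reproduction of (8.3a.13) or (8.3a.17).

```latex
\begin{aligned}
\mathrm{det}\big(\tilde W_1-\mu(\tilde W_1+\tilde W_2)\big)
  &=\mathrm{det}\big((1-\mu)\tilde W_1-\mu\tilde W_2\big)
   =(1-\mu)^p\,\mathrm{det}\Big(\tilde W_1-\frac{\mu}{1-\mu}\,\tilde W_2\Big),\\[4pt]
\mu_j=\frac{\lambda_j}{1+\lambda_j}
  &\;\Rightarrow\;
  \mathrm{d}\mu_j=\frac{\mathrm{d}\lambda_j}{(1+\lambda_j)^2},\qquad
  1-\mu_j=\frac{1}{1+\lambda_j},\\[4pt]
\mu_i-\mu_j&=\frac{\lambda_i-\lambda_j}{(1+\lambda_i)(1+\lambda_j)},\qquad i<j,\\[4pt]
\mathrm{d}\mu_1\wedge\cdots\wedge\mathrm{d}\mu_r
  &=\prod_{j=1}^{r}(1+\lambda_j)^{-2}\,\mathrm{d}\lambda_1\wedge\cdots\wedge\mathrm{d}\lambda_r .
\end{aligned}
```

Since \(\lambda\mapsto\lambda/(1+\lambda)\) is increasing, the ordering \(\mu_1>\cdots>\mu_r>0\) corresponds to \(\lambda_1>\cdots>\lambda_r>0\).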

8.4. The Case of One Wishart or Gamma Matrix in the Real Domain

If we only consider a single p × p gamma matrix W with parameters \((\alpha ,\,B),~B>O,~\Re (\alpha )>\frac {p-1}{2},\) whose density is

and zero elsewhere, then it can readily be determined that \(Z=B^{\frac {1}{2}}WB^{\frac {1}{2}}\) has the density

and zero elsewhere. When \(\alpha =\frac {m}{2}\) and \( B=\frac {1}{2}I\), Z has a Wishart density with m ≥ p degrees of freedom and parameter matrix I, its density being given by

for m ≥ p, and zero elsewhere. Since Z is symmetric, there exists an orthonormal matrix P, PP′ = I, P′P = I, such that Z = PDP′ with \(D=\mathrm {diag}(\lambda_1,\ldots,\lambda_p)\) where \(\lambda_j>0,~j=1,\ldots,p\), are the (assumed distinct) positive eigenvalues of Z, Z being positive definite. Consider the equation \(ZQ_j=\lambda_jQ_j\) where the p × 1 vector \(Q_j\) is an eigenvector corresponding to the eigenvalue \(\lambda_j\). Since Z is symmetric and its eigenvalues are distinct, eigenvectors associated with different eigenvalues are orthogonal to each other. Let \(Q_j\) be the normalized eigenvector, \(Q_j^{\prime }Q_j=1,~j=1,\ldots ,p,~ Q_i^{\prime }Q_j=0,\) for all i ≠ j. Letting \(Q=[Q_1,\ldots,Q_p]\), this p × p matrix Q is such that

where \(P_1,\ldots,P_p\) are the columns of the p × p matrix P. Yet, P is not unique, since \(P_j^{\prime }P_j=1\Rightarrow (-P_j)'(-P_j)=1\). In order to make it unique, let us require that the first nonzero element of each of the vectors \(P_1,\ldots,P_p\) be positive. Considering the transformation Z = PDP′, it follows from Theorem 8.2.1 that, before integrating over the full orthogonal group,

where h(P) is a differential element associated with the unique matrix of eigenvectors P, as is explained in Mathai (1997). The integral over h(P) gives

where \(O_p\) is the full orthogonal group of p × p orthonormal matrices. On observing that \(|Z|=\prod _{j=1}^p\lambda _j\) and \(\mathrm {tr}(Z)=\lambda_1+\cdots+\lambda_p\), it follows from (8.4.3) and (8.4.4) that the joint density of the eigenvalues \(\lambda_1,\ldots,\lambda_p\) and the matrix of eigenvectors P can be expressed as

Thus, the marginal density of \(\lambda_1,\ldots,\lambda_p\) can be obtained by integrating out P. Denoting this marginal density by \(f_4(\lambda_1,\ldots,\lambda_p)\), we have

and zero elsewhere. The density of P is then obtained by dividing the joint density by this marginal density. Denoting it by \(f_5(P)\), we have the following:

where \(P=[P_1,\ldots,P_p]\), the first nonzero element of \(P_j\) being positive for j = 1, …, p, so as to make P unique. Hence, the following result holds:

Theorem 8.4.1

Let the p × p real positive definite matrix Z have a Wishart density with m ≥ p degrees of freedom and parameter matrix I. Let \(\lambda_1,\ldots,\lambda_p\) be the distinct positive eigenvalues of Z in decreasing order and the p × p orthonormal matrix P be the matrix of normalized eigenvectors corresponding to the \(\lambda_j\)'s, the first nonzero element of each column of P being positive. Then, \(\{\lambda_1,\ldots,\lambda_p\}\) and P are independently distributed, with the densities of \(\{\lambda_1,\ldots,\lambda_p\}\) and P being respectively given in (8.4.7) and (8.4.8).
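The spectral decomposition Z = PDP′ with the sign convention adopted above can be sketched in NumPy. This is a minimal illustration, assuming that a Wishart matrix with m degrees of freedom and parameter matrix I is generated as \(GG^{\prime}\), G being a p × m matrix of iid N(0, 1) variables, and that a tolerance of 1e-12 is adequate for deciding which element of an eigenvector is its first nonzero one; the function names are hypothetical. It also checks that \(|Z|=\prod_{j=1}^p\lambda_j\) and \(\mathrm {tr}(Z)=\lambda_1+\cdots+\lambda_p\).

```python
import numpy as np


def sample_real_wishart(rng, p, m):
    """Z = G G' with G a p x m matrix of iid N(0, 1) entries, i.e. a Wishart matrix W_p(m, I)."""
    g = rng.standard_normal((p, m))
    return g @ g.T


def unique_eigendecomposition(z, tol=1e-12):
    """Eigenvalues of symmetric Z in decreasing order and orthonormal P with Z = P D P',
    each column of P multiplied by -1 if needed so that its first nonzero element is positive."""
    lam, P = np.linalg.eigh(z)               # eigh returns eigenvalues in increasing order
    order = np.argsort(lam)[::-1]
    lam, P = lam[order], P[:, order]
    for j in range(P.shape[1]):
        first = P[np.flatnonzero(np.abs(P[:, j]) > tol)[0], j]
        if first < 0:
            P[:, j] = -P[:, j]
    return lam, P


rng = np.random.default_rng(3)
Z = sample_real_wishart(rng, p=4, m=7)
lam, P = unique_eigendecomposition(Z)

print(lam)                                          # lambda_1 > ... > lambda_p > 0
print(np.allclose(P @ np.diag(lam) @ P.T, Z))       # Z = P D P'
print(np.allclose(P.T @ P, np.eye(4)))              # P'P = I
print(np.isclose(np.linalg.det(Z), np.prod(lam)), np.isclose(np.trace(Z), lam.sum()))
```

Flipping the sign of a column leaves both PDP′ and P′P unchanged, which is why the sign convention can be imposed after the decomposition.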

8.4a. The Case of One Wishart or Gamma Matrix, Complex Domain

Let \(\tilde {W}\) be a p × p complex gamma distributed matrix, \(\tilde {W}=\tilde {W}^{*}>O\), whose density is

Letting \(\tilde {Z}=\tilde {B}^{\frac {1}{2}}\tilde {W}\tilde {B}^{\frac {1}{2}}\), \(\tilde {Z}\) has the density

If α = m, m = p, p + 1, … in (8.4a.2), then we have the following Wishart density having m degrees of freedom in the complex domain:

Consider a unique unitary matrix \(\tilde {P},~ \tilde {P}\tilde {P}^{*}=I,~ \tilde {P}^{*}\tilde {P}=I\) such that \(\tilde {P}^{*}\tilde {Z}\tilde {P}=\mathrm {diag}(\lambda _1,\ldots ,\lambda _p)\) where \(\lambda_1,\ldots,\lambda_p\) are the eigenvalues of \(\tilde {Z}\), which are real and positive since \(\tilde {Z}\) is Hermitian positive definite. Letting the eigenvalues be distinct and such that \(\lambda_1>\lambda_2>\cdots>\lambda_p>0\), observe that

where \(\tilde {h}(\tilde {P})\) is the differential element corresponding to the unique unitary matrix \(\tilde {P}\). Then, as established in Mathai (1997),

where \(\tilde {O}_p\) is the full unitary group. Thus, the joint density of the eigenvalues \(\lambda_1,\ldots,\lambda_p\) and their associated normalized eigenvectors, denoted by \(\tilde {f}_3(D,\,\tilde {P})\), is

Then, integrating out \(\tilde {P}\) with the help of (8.4a.5), the marginal density of the eigenvalues \(\lambda_1>\cdots>\lambda_p>0\), denoted by \(\tilde {f}_4(\lambda _1,\ldots ,\lambda _p)\), is the following:

Thus, the joint density of the normalized eigenvectors forming \(\tilde {P}\), denoted by \(\tilde {f}_5(\tilde {P})\), is given by

These results are summarized in the following theorem.

Theorem 8.4a.1

Let \(\tilde {Z}\) have the density appearing in (8.4a.3). Then, the joint density of the distinct eigenvalues \(\lambda_1>\cdots>\lambda_p>0\) of \(\tilde {Z}\) is as given in (8.4a.7) and the joint density of the associated normalized eigenvectors comprising the unitary matrix \(\tilde {P}\) is as specified in (8.4a.8).
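A corresponding NumPy sketch for the complex case follows. It assumes that a complex Wishart matrix with m degrees of freedom and parameter matrix I is generated as \(\tilde {G}\tilde {G}^{*}\), \(\tilde {G}\) being a p × m matrix of iid standard complex Gaussian entries (real and imaginary parts N(0, 1/2)). Since a normalized eigenvector of a Hermitian matrix is determined only up to a unit-modulus factor \(e^{i\theta}\), the sketch makes \(\tilde {P}\) unique by rotating each column so that its first nonzero element is real and positive; this convention is an assumption made for illustration, the text only requiring \(\tilde {P}\) to be unique. Function names are hypothetical.

```python
import numpy as np


def sample_complex_wishart(rng, p, m):
    """Z = G G* with G a p x m matrix of iid standard complex Gaussian entries (W_p(m, I))."""
    g = (rng.standard_normal((p, m)) + 1j * rng.standard_normal((p, m))) / np.sqrt(2)
    return g @ g.conj().T


def unique_unitary_eigendecomposition(z, tol=1e-12):
    """Real eigenvalues of Hermitian Z in decreasing order and unitary P with P* Z P = D.
    Assumed convention: the first nonzero element of each column of P is real and positive."""
    lam, P = np.linalg.eigh(z)               # increasing eigenvalues, unitary eigenvector matrix
    order = np.argsort(lam)[::-1]
    lam, P = lam[order], P[:, order]
    for j in range(P.shape[1]):
        first = P[np.flatnonzero(np.abs(P[:, j]) > tol)[0], j]
        P[:, j] *= np.abs(first) / first     # multiply the column by the unit-modulus e^{-i arg(first)}
    return lam, P


rng = np.random.default_rng(4)
Z = sample_complex_wishart(rng, p=3, m=5)
lam, P = unique_unitary_eigendecomposition(Z)

print(lam)                                              # real, lambda_1 > ... > lambda_p > 0
print(np.allclose(P.conj().T @ Z @ P, np.diag(lam)))    # P* Z P = diag(lambda_1, ..., lambda_p)
print(np.allclose(P.conj().T @ P, np.eye(3)))           # P* P = I
```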

References

T.W. Anderson (2003): An Introduction to Multivariate Statistical Analysis, 3rd Edition, Wiley Interscience, New York.

A.M. Mathai (1997): Jacobians of Matrix Transformations and Functions of Matrix Argument, World Scientific Publishing, New York.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Mathai, A., Provost, S., Haubold, H. (2022). Chapter 8: The Distributions of Eigenvalues and Eigenvectors. In: Multivariate Statistical Analysis in the Real and Complex Domains. Springer, Cham. https://doi.org/10.1007/978-3-030-95864-0_8


DOI: https://doi.org/10.1007/978-3-030-95864-0_8


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-95863-3

Online ISBN: 978-3-030-95864-0

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)