Abstract

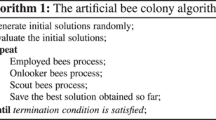

This chapter approaches the topic of Extreme Learning Machines (ELM). This technique is implemented in a single-layer feedforward neural network and gives the network fast training, because the input weights and hidden-layer biases are assigned randomly and do not need to be adjusted. ELM is implemented and combined with three metaheuristic algorithms from the family of swarm intelligence: Particle Swarm Optimization (PSO), Artificial Bee Colony (ABC) and Grey Wolf Optimization (GWO). These algorithms are applied to optimize the input weights and hidden biases in order to improve the performance of ELM in data classification, and the performance and behavior of each algorithm are also examined. The data employed in this work consist of information about Australian credit, diabetes detection and heart disease. The results show that GWO obtained the best results on the first dataset, while PSO performed best on the second and third datasets.
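As a rough illustration of how a swarm metaheuristic can be coupled with ELM, the sketch below uses a basic global-best PSO (inertia-weight form) to search the input weights and hidden biases. For each candidate, the output weights are still computed analytically by least squares. This is a minimal sketch under stated assumptions, not the chapter's implementation. The following are hypothetical choices:

* the fitness (training RMSE on a single output)
* the search bounds [-1, 1]
* the PSO coefficients
* the sigmoid activation
* the toy data

In addition, the least-squares step uses the normal equations, which match the Moore-Penrose solution only when H has full column rank.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic activation (assumed activation for this sketch)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def solve(A, c):
    """Gaussian elimination with partial pivoting; raises ValueError on a (near-)singular system."""
    n = len(A)
    M = [A[i][:] + [c[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("singular system")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def elm_rmse(params, X, t, n_hidden):
    """Fitness of one particle (assumption: training RMSE): decode input weights and biases,
    fit the output weights by least squares (normal equations), return the RMSE."""
    d = len(X[0])
    W = [params[j * d:(j + 1) * d] for j in range(n_hidden)]
    b = params[n_hidden * d:]
    H = [[sigmoid(sum(wk * xk for wk, xk in zip(w, x)) + bj) for w, bj in zip(W, b)] for x in X]
    HtH = [[sum(r[p] * r[q] for r in H) for q in range(n_hidden)] for p in range(n_hidden)]
    Htt = [sum(r[p] * ti for r, ti in zip(H, t)) for p in range(n_hidden)]
    try:
        beta = solve(HtH, Htt)
    except ValueError:
        return math.inf  # degenerate hidden layer: discard this candidate
    err = [ti - sum(h * bp for h, bp in zip(r, beta)) for r, ti in zip(H, t)]
    return math.sqrt(sum(e * e for e in err) / len(err))

def pso(fitness, dim, n_particles=15, iters=40, lo=-1.0, hi=1.0, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO with inertia weight, minimising `fitness` over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_f = [fitness(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for _ in range(iters):
        for i in range(n_particles):
            for k in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][k] = (w * vel[i][k] + c1 * r1 * (pbest[i][k] - pos[i][k])
                             + c2 * r2 * (gbest[k] - pos[i][k]))
                pos[i][k] = min(hi, max(lo, pos[i][k] + vel[i][k]))  # clamp to the search box
            f = fitness(pos[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = pos[i][:], f
    return gbest, gbest_f

# Usage on hypothetical toy regression data (2 inputs, 4 hidden neurons).
rng = random.Random(42)
X = [[rng.random(), rng.random()] for _ in range(30)]
t = [math.sin(3 * x[0]) + x[1] ** 2 for x in X]
n_hidden = 4
dim = n_hidden * (len(X[0]) + 1)  # all input weights followed by all biases
best, best_f = pso(lambda p: elm_rmse(p, X, t, n_hidden), dim)
```

Replacing PSO with ABC or GWO would only change the `pso` function. The ELM fitness evaluation stays the same, which is what makes this kind of hybrid easy to swap between metaheuristics.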

Appendix

Below is an example implementation of the Extreme Learning Machine algorithm for a single-layer feedforward neural network in MATLAB, focused on the training and testing parts. The code below covers the training part, which proceeds as follows:

1. The input weights and the biases of the hidden neurons are randomly generated and stored in two variables.
2. The input weights are multiplied by the training data, and the biases are added.
3. The output of the hidden neurons is calculated with the selected activation function.
4. The output weights are estimated using the Moore-Penrose generalized inverse. This inverse is needed because the hidden-layer output matrix H is in general not square and therefore has no ordinary inverse.
5. The network output for the training data is obtained by multiplying H by the output weights, and the training error is computed.

For a regression problem, the training accuracy is reported as the root mean square error (RMSE), i.e. the square root of MATLAB's mean squared error function `mse`.
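The relations implemented by the training code can be written as follows. They use the code's orientation, with one column per sample. N is the number of training samples (`NumberofTrainingData`), $\tilde{N}$ the number of hidden neurons, m the number of outputs, and g the activation function. These symbol names are ours, chosen for this summary.

$$
\begin{aligned}
H_{ij} &= g\!\left(\mathbf{w}_i^{\top}\mathbf{x}_j + b_i\right), \qquad i = 1,\dots,\tilde{N},\; j = 1,\dots,N,\\
\boldsymbol{\beta} &= \left(H^{\top}\right)^{\dagger} T^{\top}, \qquad \left(H^{\top}\right)^{\dagger} = \left(H H^{\top}\right)^{-1} H \ \text{ when } H^{\top} \text{ has full column rank},\\
Y &= \left(H^{\top}\boldsymbol{\beta}\right)^{\top},\\
\mathrm{RMSE} &= \sqrt{\frac{1}{mN}\sum_{k=1}^{m}\sum_{j=1}^{N}\left(T_{kj} - Y_{kj}\right)^2}.
\end{aligned}
$$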

Code 1

MATLAB code of the training process of Extreme Learning Machines

```matlab
% Randomly generate input weights in [-1, 1] and hidden biases in [0, 1]
InputWeight = rand(NumberofHiddenNeurons, NumberofInputNeurons) * 2 - 1;
BiasofHiddenNeurons = rand(NumberofHiddenNeurons, 1);

% Weighted sum of the training inputs (P holds one training sample per column)
tempH = InputWeight * P;
clear P;

% Replicate the bias vector across all training samples and add it
ind = ones(1, NumberofTrainingData);
BiasMatrix = BiasofHiddenNeurons(:, ind);
tempH = tempH + BiasMatrix;

% Hidden-layer output matrix H for the selected activation function
switch lower(ActivationFunction)
    case {'sig', 'sigmoid'}
        H = 1 ./ (1 + exp(-tempH));
    case {'sin', 'sine'}
        H = sin(tempH);
    case {'hardlim'}
        H = double(hardlim(tempH));
    case {'tribas'}
        H = tribas(tempH);
    case {'radbas'}
        H = radbas(tempH);
end
clear tempH;

% Output weights via the Moore-Penrose generalized inverse of H'
OutputWeight = pinv(H') * T';

% Network output on the training data and its error
Y = (H' * OutputWeight)';
error = T - Y;
if Elm_Type == REGRESSION
    % For regression, the training accuracy is the RMSE
    TrainingAccuracy = sqrt(mse(T - Y));
end
clear H;
```
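For readers without MATLAB, the training steps above can be sketched in plain Python. This is a hedged translation, not the authors' code:

* Samples are stored as rows, the transpose of the MATLAB layout.
* The names `elm_train`, `hidden_output` and `least_squares` are hypothetical.
* The `pinv` step is replaced by the normal equations (HᵀH)β = HᵀT. These give the same result as the pseudoinverse only when H has full column rank.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function (MATLAB 'sig')
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# Activation functions mirroring the MATLAB switch; hardlim, tribas and radbas
# follow the toolbox definitions (step, triangle, Gaussian).
ACTIVATIONS = {
    "sig": sigmoid,
    "sin": math.sin,
    "hardlim": lambda z: 1.0 if z >= 0 else 0.0,
    "tribas": lambda z: max(0.0, 1.0 - abs(z)),
    "radbas": lambda z: math.exp(-z * z),
}

def solve(A, c):
    """Solve the square system A x = c by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [A[i][:] + [c[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def hidden_output(X, W, b, act):
    """H[i][j] = g(w_j . x_i + b_j): one row per sample, one column per hidden neuron."""
    g = ACTIVATIONS[act]
    return [[g(sum(wk * xk for wk, xk in zip(w, x)) + bj) for w, bj in zip(W, b)] for x in X]

def least_squares(H, T):
    """beta = (H^T H)^-1 H^T T, column by column; equals pinv(H) T if H has full column rank."""
    L = len(H[0])
    HtH = [[sum(row[p] * row[q] for row in H) for q in range(L)] for p in range(L)]
    cols = [solve(HtH, [sum(row[p] * t[k] for row, t in zip(H, T)) for p in range(L)])
            for k in range(len(T[0]))]
    return [list(r) for r in zip(*cols)]  # n_hidden x n_outputs

def elm_train(X, T, n_hidden, act="sig", seed=0):
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) for _ in X[0]] for _ in range(n_hidden)]  # like rand(...)*2-1
    b = [rng.random() for _ in range(n_hidden)]                        # like rand(...,1)
    H = hidden_output(X, W, b, act)
    beta = least_squares(H, T)
    Y = [[sum(h * beta[p][k] for p, h in enumerate(row)) for k in range(len(T[0]))] for row in H]
    sq = [(t - y) ** 2 for tr, yr in zip(T, Y) for t, y in zip(tr, yr)]
    return W, b, beta, H, Y, math.sqrt(sum(sq) / len(sq))  # last value: training RMSE

# Usage on hypothetical toy data: two inputs, one regression output.
rng = random.Random(1)
X = [[rng.random(), rng.random()] for _ in range(40)]
T = [[x[0] + 2 * x[1]] for x in X]
W, b, beta, H, Y, rmse = elm_train(X, T, n_hidden=5)
```

Because the output weights solve a least-squares problem, the training residual is orthogonal to every column of H. As a consequence, the training RMSE can never exceed that of the all-zero prediction.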

The testing part is almost the same as the training part; in this phase, the trained network is evaluated on the testing data. The input weights and hidden-layer biases generated during training are reused rather than drawn again. The biases are added to the product of the input weights and the testing input data. The same activation function is then applied to obtain the hidden-layer output, and this output is multiplied by the output weights from training to give the network output. For a regression problem, the accuracy is measured with the RMSE. For a classification problem, the class predicted by the neural network for each training and testing sample is compared with its expected class. The training and testing classification accuracies are then calculated as 1 minus the fraction of misclassified samples.
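The test phase and the accuracy measures described above can be sketched in Python. The sketch makes these assumptions:

* a sigmoid activation
* samples stored as rows
* class labels given as integer indices
* the predicted class taken as the output with the largest value

The function names are hypothetical. The point the sketch illustrates is that W, b and beta come from training and are not regenerated.

```python
import math

def sigmoid(z):
    # Numerically stable logistic activation (assumed to match the one used in training)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def elm_predict(X, W, b, beta):
    """Test phase: reuse W, b and beta from training (no new random draw).
    Returns one row per sample: y_k = sum_j g(w_j . x + b_j) * beta[j][k]."""
    Y = []
    for x in X:
        h = [sigmoid(sum(wk * xk for wk, xk in zip(w, x)) + bj) for w, bj in zip(W, b)]
        Y.append([sum(hj * beta[j][k] for j, hj in enumerate(h)) for k in range(len(beta[0]))])
    return Y

def classification_accuracy(Y, labels):
    """1 minus the fraction of misclassified samples; predicted class = index of the largest output."""
    wrong = sum(1 for y, c in zip(Y, labels) if max(range(len(y)), key=y.__getitem__) != c)
    return 1.0 - wrong / len(labels)

def regression_rmse(Y, T):
    """Root mean square error over all outputs and samples (square root of an mse-style mean)."""
    sq = [(t - y) ** 2 for yr, tr in zip(Y, T) for y, t in zip(yr, tr)]
    return math.sqrt(sum(sq) / len(sq))

# Usage with hand-picked (hypothetical) trained parameters: 1 input, 2 hidden neurons, 2 classes.
W = [[1.0], [-1.0]]
b = [0.0, 0.0]
beta = [[1.0, 0.0], [0.0, 1.0]]
X_test = [[2.0], [-1.0], [3.0], [-4.0]]
labels = [0, 1, 1, 1]
Y_test = elm_predict(X_test, W, b, beta)
acc = classification_accuracy(Y_test, labels)  # 0.75: the third sample is misclassified
```

With these parameters, the network predicts class 0 for positive inputs and class 1 for negative inputs. One of the four expected labels disagrees, so the accuracy is 1 - 1/4 = 0.75.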

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Escobar, H., Cuevas, E. (2021). Implementation of Metaheuristics with Extreme Learning Machines. In: Oliva, D., Houssein, E.H., Hinojosa, S. (eds) Metaheuristics in Machine Learning: Theory and Applications. Studies in Computational Intelligence, vol 967. Springer, Cham. https://doi.org/10.1007/978-3-030-70542-8_6

DOI: https://doi.org/10.1007/978-3-030-70542-8_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-70541-1

Online ISBN: 978-3-030-70542-8

eBook Packages: Intelligent Technologies and Robotics, Intelligent Technologies and Robotics (R0)