Abstract

We show the potential of greedy recovery strategies for the sparse approximation of multivariate functions from a small dataset of pointwise evaluations by considering an extension of the orthogonal matching pursuit to the setting of weighted sparsity. The proposed recovery strategy is based on a formal derivation of the greedy index selection rule. Numerical experiments show that the proposed weighted orthogonal matching pursuit algorithm is able to reach accuracy levels similar to those of weighted ℓ1 minimization programs while considerably improving the computational efficiency for small values of the sparsity level.



1 Introduction

In recent years, a new class of approximation strategies based on compressive sensing (CS) has been shown to substantially lessen the curse of dimensionality in the approximation of multivariate functions from pointwise data, with applications to the uncertainty quantification of partial differential equations with random inputs. Based on random sampling from orthogonal polynomial systems and on weighted ℓ1 minimization, these techniques can accurately recover a sparse approximation to a function of interest from small datasets of pointwise samples. In this paper, we show the potential of weighted greedy techniques as an alternative to convex minimization programs based on weighted ℓ1 minimization in this context.

The contribution of this paper is twofold. First, we propose a weighted orthogonal matching pursuit (WOMP) algorithm based on a rigorous derivation of the corresponding greedy index selection strategy. Second, we numerically show that WOMP is a promising alternative to convex recovery programs based on weighted ℓ1 minimization, thanks to its ability to compute sparse approximations with an accuracy comparable to those computed via weighted ℓ1 minimization, but with a considerably lower computational cost when the target sparsity level (and, hence, the number of WOMP iterations) is small enough. It is also worth observing here that WOMP computes approximations that are exactly sparse, as opposed to approaches based on weighted ℓ1 minimization, which provide compressible approximations in general.

Brief Literature Review

Various approaches for multivariate function approximation based on CS with applications to uncertainty quantification can be found in [1, 3–6, 11–13, 17]. An overview of greedy methods for sparse recovery in CS, and in particular of OMP, can be found in [7, Section 3.2]. For a general review of greedy algorithms, we refer the reader to [15] and references therein. Some numerical experiments on a weighted variant of OMP have been performed in the context of CS methods for uncertainty quantification in [4]. Weighted variants of OMP have also been considered in [10, 16], but there the weighted procedure is tailored to specific signal processing applications, and the term “weighted” does not refer to the weighted sparsity setting of [14] employed here. To the authors’ knowledge, the weighted variant of OMP considered in this paper is proposed here for the first time.

Organization of the Paper

In Sect. 2 we describe the setting of sparse multivariate function approximation in orthonormal systems via random sampling and weighted ℓ1 minimization. Then, in Sect. 3 we formally derive a strategy for the greedy selection in the weighted sparsity setting and present the WOMP algorithm. Finally, we numerically show the effectiveness of the proposed technique in Sect. 4 and give our conclusions in Sect. 5.

2 Sparse Multivariate Function Approximation

We start by briefly introducing the framework of sparse multivariate function approximation from pointwise samples and refer the reader to [3] for further details.

Our aim is to approximate a function \(f: D \to \mathbb{C}\) defined over a high-dimensional domain \(D = [-1,1]^d\), where d ≫ 1, from a dataset of pointwise samples \(f(t_1), \dots, f(t_m)\). Let ν be a probability measure on D and let \(\{\phi _j\}_{j \in \mathbb {N}_0^d}\) be an orthonormal basis for the Hilbert space \(L^2_\nu (D)\). In this paper, we will consider \(\{\phi _j\}_{j \in \mathbb {N}_0^d}\) to be a tensorized family of Legendre or Chebyshev orthogonal polynomials, with ν being the uniform or the Chebyshev measure on D, respectively. Assuming that \(f \in L^2_\nu (D) \cap L^\infty (D)\), we consider the series expansion

\[ f = \sum_{j \in \mathbb{N}_0^d} x_j \phi_j, \qquad x_j := \langle f, \phi_j \rangle_{L^2_\nu(D)}. \]

Then, we choose a finite set of multi-indices \(\Lambda \subseteq \mathbb {N}_0^d\) with |Λ| = N and obtain the truncated series expansion

\[ f_\Lambda = \sum_{j \in \Lambda} x_j \phi_j. \]

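In practice, a convenient choice for Λ is the hyperbolic cross of order s, i.e.

\[ \Lambda = \Big\{ j \in \mathbb{N}_0^d : \prod_{k=1}^d (j_k + 1) \le s \Big\}, \]

due to the moderate growth of N with respect to d. Now, assuming we collect m ≪ N pointwise samples independently distributed according to ν, namely,

\[ t_1, \dots, t_m \overset{\text{i.i.d.}}{\sim} \nu, \]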

the approximation problem can be recast as a linear system

\[ A x_\Lambda + e = y, \tag{1} \]

with \(x_\Lambda = (x_j)_{j \in \Lambda } \in \mathbb {C}^N\), and where the sensing matrix \(A \in \mathbb {C}^{m \times N}\) and the measurement vector \(y \in \mathbb {C}^m\) are defined as

\[ A := \Big( \frac{\phi_j(t_i)}{\sqrt{m}} \Big)_{i \in [m],\, j \in \Lambda}, \qquad y := \Big( \frac{f(t_i)}{\sqrt{m}} \Big)_{i \in [m]}, \tag{2} \]

with [k] := {1, …, k} for every \(k \in \mathbb {N}\). The vector \(e \in \mathbb {C}^m\) accounts for the truncation error introduced by Λ and satisfies \(\|e\|_2 \le \eta\), where η > 0 is an a priori upper bound on the truncation \(L^\infty(D)\)-error, namely \(\|f-f_{\Lambda }\|_{L^\infty (D)} \leq \eta \). A sparse approximation to the vector \(x_\Lambda\) can then be computed by means of weighted ℓ1 minimization.
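The assembly of the linear system (1) can be sketched in Python with NumPy as follows. This is a minimal illustration, not the authors' code, and it rests on assumptions: the hyperbolic cross is taken to be \(\{j : \prod_k (j_k+1) \le s\}\), A and y carry the \(1/\sqrt{m}\) scaling (which gives \(\|e\|_2 \le \|f - f_\Lambda\|_{L^\infty(D)}\)), and the basis is the tensorized Legendre family, orthonormal with respect to the uniform probability measure on \([-1,1]^d\). The helper names `hyperbolic_cross`, `legendre_orthonormal`, `sampling_matrix` and the test function `f_example` are hypothetical; `f_example` is not the function (8) used in Sect. 4.

```python
import numpy as np


def hyperbolic_cross(d, s):
    """All multi-indices j in N_0^d with prod_k (j_k + 1) <= s (assumed definition)."""
    indices = []

    def extend(prefix, budget):
        # budget: largest admissible product of (j_k + 1) over the remaining coordinates
        if len(prefix) == d:
            indices.append(tuple(prefix))
            return
        jk = 0
        while jk + 1 <= budget:
            extend(prefix + [jk], budget // (jk + 1))
            jk += 1

    extend([], s)
    return indices


def legendre_orthonormal(t, n_max):
    """Values sqrt(2n + 1) P_n(t), n = 0..n_max, orthonormal w.r.t. dx/2 on [-1, 1]."""
    t = np.asarray(t, dtype=float)
    P = np.zeros((n_max + 1,) + t.shape)
    P[0] = 1.0
    if n_max >= 1:
        P[1] = t
    for n in range(1, n_max):
        # Bonnet's recursion: (n + 1) P_{n+1} = (2n + 1) t P_n - n P_{n-1}
        P[n + 1] = ((2 * n + 1) * t * P[n] - n * P[n - 1]) / (n + 1)
    scale = np.sqrt(2.0 * np.arange(n_max + 1) + 1.0)
    return scale.reshape((-1,) + (1,) * t.ndim) * P


def sampling_matrix(points, Lambda):
    """A[i, l] = phi_{j_l}(t_i) / sqrt(m) for tensorized orthonormal Legendre phi_j."""
    m, d = points.shape
    J = np.asarray(Lambda)                              # shape (N, d)
    vals = legendre_orthonormal(points, int(J.max()))   # shape (n_max + 1, m, d)
    A = np.ones((m, J.shape[0]))
    for k in range(d):
        A *= vals[:, :, k][J[:, k]].T                   # univariate factor in coordinate k
    return A / np.sqrt(m)


def f_example(pts):
    """Hypothetical smooth test function; NOT the function (8) of Sect. 4."""
    return np.exp(-pts.mean(axis=1) / 2.0)


rng = np.random.default_rng(0)
d, s, m = 10, 10, 60
Lambda = hyperbolic_cross(d, s)
t = rng.uniform(-1.0, 1.0, size=(m, d))                 # i.i.d. samples from the uniform measure
A = sampling_matrix(t, Lambda)
y = f_example(t) / np.sqrt(m)
print(len(Lambda), A.shape)                             # 571 (60, 571)
```

With d = 10 (our assumption here, since d is not restated in the text) and s = 10, this definition gives \(N = 1 + 9d + 8\binom{d}{2} + \binom{d}{3} = 571\), the value of N used in Sect. 4.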

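Given weights \(w \in \mathbb {R}^N\) with w > 0 (where the inequality is read componentwise), recall that the weighted ℓ1 norm of a vector \(z \in \mathbb {C}^N\) is defined as \(\|z\|_{1,w} := \sum_{j \in [N]} |z_j| w_j\). We can compute an approximation \(\hat {x}_\Lambda \) to \(x_\Lambda\) by solving the weighted quadratically-constrained basis pursuit (WQCBP) program

\[ \hat{x}_\Lambda \in \arg\min_{z \in \mathbb{C}^N} \|z\|_{1,w} \quad \text{s.t.} \quad \|Az - y\|_2 \le \eta, \tag{3} \]

where the weights \(w \in \mathbb {R}^N\) are defined as

\[ w_j := \|\phi_j\|_{L^\infty(D)}, \qquad j \in \Lambda. \tag{4} \]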

The effectiveness of this particular choice of w is supported by theoretical results and has been validated numerically (see [1, 3]). The resulting approximation \(\hat {f}_\Lambda \) to f is finally defined as

\[ \hat{f}_\Lambda := \sum_{j \in \Lambda} (\hat{x}_\Lambda)_j \phi_j. \]
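The weights (4) have closed forms for the two bases used in this paper, assuming (4) is \(w_j = \|\phi_j\|_{L^\infty(D)}\): for tensorized Legendre polynomials orthonormal with respect to the uniform probability measure, \(\|\phi_j\|_{L^\infty(D)} = \prod_k \sqrt{2j_k + 1}\); for tensorized Chebyshev polynomials orthonormal with respect to the Chebyshev probability measure, \(\|\phi_j\|_{L^\infty(D)} = 2^{\|j\|_0/2}\). A short NumPy sketch with hypothetical helper names, together with the weighted ℓ1 norm \(\|z\|_{1,w}\):

```python
import numpy as np


def linf_weights(Lambda, basis="legendre"):
    """Weights w_j = ||phi_j||_{L^inf(D)} for tensorized orthonormal polynomials on [-1, 1]^d.

    legendre:  orthonormal w.r.t. the uniform probability measure, prod_k sqrt(2 j_k + 1)
    chebyshev: orthonormal w.r.t. the Chebyshev probability measure, 2^(||j||_0 / 2)
    """
    J = np.asarray(Lambda)
    if basis == "legendre":
        return np.prod(np.sqrt(2.0 * J + 1.0), axis=1)
    if basis == "chebyshev":
        return 2.0 ** (np.count_nonzero(J, axis=1) / 2.0)
    raise ValueError(f"unknown basis: {basis!r}")


def weighted_l1(z, w):
    """Weighted l1 norm ||z||_{1,w} = sum_j |z_j| w_j."""
    return float(np.sum(np.abs(z) * np.asarray(w, dtype=float)))


Lambda = [(0, 0), (1, 0), (0, 2), (1, 1)]
w_leg = linf_weights(Lambda, "legendre")    # sqrt(1), sqrt(3), sqrt(5), sqrt(9)
w_cheb = linf_weights(Lambda, "chebyshev")  # 1, sqrt(2), sqrt(2), 2
```

Both weight families grow with the polynomial degree, so the weighted ℓ1 norm penalizes high-order coefficients more heavily than low-order ones.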

In this setting, stable and robust recovery guarantees holding with high probability can be shown for the approximation errors \(\|f-\hat{f}_\Lambda \|_{L^2_\nu (D)}\) and \(\|f-\hat{f}_\Lambda \|_{L^\infty (D)}\) under a sufficient condition on the number of samples of the form \( m \gtrsim s^\gamma \cdot \operatorname {\mathrm {polylog}}(s,d), \) with γ = 2 or \(\gamma = \log (3)/\log (2)\) for tensorized Legendre or Chebyshev polynomials, respectively. This lessens the curse of dimensionality to a substantial extent (see [3] and references therein). We also note in passing that decoders such as the weighted LASSO or the weighted square-root LASSO can be considered as alternatives to (3) for weighted ℓ1 minimization (see [2]).

3 Weighted Orthogonal Matching Pursuit

In this paper, we consider greedy sparse recovery strategies to find sparse approximate solutions to (1), as alternatives to the WQCBP optimization program (3). To this end, we propose an extension of the OMP algorithm to the weighted setting.

Before introducing weighted OMP (WOMP) in Algorithm 1, let us recall the rationale behind the greedy index selection rule of OMP (corresponding to Algorithm 1 with λ = 0 and w = 1). For a detailed introduction to OMP, we refer the reader to [7, Section 3.2]. Given a support set S ⊆ [N], OMP solves the least-squares problem

\[ \min_{z \in \mathbb{C}^N} G_0(z) \quad \text{s.t.} \quad \operatorname{supp}(z) \subseteq S, \]

where \(G_0(z) := \|y-Az\|_2^2\). In OMP, the support S is iteratively enlarged by one index at a time. Namely, we consider the update S ∪ {j}, where the index j ∈ [N] is selected in a greedy fashion. In particular, assuming that A has ℓ2-normalized columns, it is possible to show that (see [7, Lemma 3.3])

\[ \min_{t \in \mathbb{C}} G_0(x + t e_j) = G_0(x) - |(A^*(y - Ax))_j|^2, \tag{5} \]

where \(e_j\) denotes the j-th vector of the canonical basis of \(\mathbb{C}^N\).

This leads to the greedy index selection rule operated by OMP, which prescribes the selection of an index j ∈ [N] that maximizes the quantity |(A ∗(y − Ax))j|2. We will use this simple intuition to extend OMP to the weighted case by replacing the function G 0 with a suitable function G λ that takes into account the data-fidelity term and the weighted sparsity prior at the same time.
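The identity behind the OMP selection rule is easy to check numerically, assuming it takes the form \(\min_t G_0(x + te_j) = G_0(x) - |(A^*(y-Ax))_j|^2\). In the NumPy sketch below (`omp_step_gain` is a hypothetical helper name), the best one-dimensional update along a unit-norm column is \(t^* = (A^*(y - Ax))_j\), and the resulting decrease of \(G_0\) equals \(|(A^*(y-Ax))_j|^2\), which is exactly the OMP score.

```python
import numpy as np


def omp_step_gain(A, y, x, j):
    """Best coefficient update along column j and the resulting decrease of G_0.

    For a unit-norm column a_j, t* = (A^*(y - Ax))_j minimizes ||y - A(x + t e_j)||_2^2.
    """
    r = y - A @ x
    t_star = np.vdot(A[:, j], r)                 # conj(a_j)^T r = (A^* r)_j
    x_new = np.array(x, dtype=complex)
    x_new[j] += t_star
    gain = np.linalg.norm(r) ** 2 - np.linalg.norm(y - A @ x_new) ** 2
    return t_star, gain


rng = np.random.default_rng(1)
A = rng.standard_normal((20, 50)) + 1j * rng.standard_normal((20, 50))
A /= np.linalg.norm(A, axis=0)                   # l2-normalized columns
y = rng.standard_normal(20).astype(complex)
x = np.zeros(50, dtype=complex)
scores = np.abs(A.conj().T @ (y - A @ x)) ** 2   # OMP scores |(A^*(y - Ax))_j|^2
j = int(np.argmax(scores))                       # greedy OMP choice
t_star, gain = omp_step_gain(A, y, x, j)
print(np.isclose(gain, scores[j]))               # True
```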

Let us recall that, given a set of weights \(w \in \mathbb {R}^N\) with w > 0, the weighted ℓ0 norm of a vector \(z \in \mathbb {C}^N\) is defined as the quantity (see [14])¹

\[ \|z\|_{0,w} := \sum_{j \in \operatorname{supp}(z)} w_j^2. \]

Notice that when w = 1, then \(\|\cdot\|_{0,w} = \|\cdot\|_0\) is the standard ℓ0 norm. Given λ ≥ 0, we define the function

\[ G_\lambda(z) := \|y - Az\|_2^2 + \lambda \|z\|_{0,w}. \tag{6} \]

The tradeoff between the data-fidelity term and the weighted sparsity prior is balanced via the choice of the regularization parameter λ. Applying the same rationale employed in OMP for the greedy index selection and replacing \(G_0\) with \(G_\lambda\) leads to Algorithm 1, which corresponds to OMP when λ = 0 and w = 1.
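The weighted ℓ0 norm and the objective \(G_\lambda\) can be written directly, assuming the definitions \(\|z\|_{0,w} = \sum_{j \in \operatorname{supp}(z)} w_j^2\) (following [14]) and \(G_\lambda(z) = \|y - Az\|_2^2 + \lambda\|z\|_{0,w}\). The NumPy helpers below (`weighted_l0` and `G_lambda` are hypothetical names) make the reduction to OMP visible: with w = 1 the first returns the ordinary ℓ0 count, and with λ = 0 the second reduces to \(G_0\).

```python
import numpy as np


def weighted_l0(z, w):
    """Weighted l0 'norm' ||z||_{0,w}: sum of w_j^2 over the support of z."""
    support = np.abs(np.asarray(z)) > 0
    return float(np.sum(np.asarray(w, dtype=float)[support] ** 2))


def G_lambda(z, A, y, w, lam):
    """Objective G_lambda(z) = ||y - A z||_2^2 + lam * ||z||_{0,w}."""
    residual = np.asarray(y) - np.asarray(A) @ np.asarray(z)
    return float(np.linalg.norm(residual) ** 2 + lam * weighted_l0(z, w))


# with unit weights the weighted l0 norm counts nonzero entries
print(weighted_l0([1.0, 0.0, 3.0], [1.0, 1.0, 1.0]))   # 2.0
```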

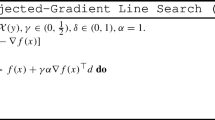

Algorithm 1 Weighted orthogonal matching pursuit (WOMP)

Inputs:

- \(A\in \mathbb {C}^{m \times N}\): sampling matrix, with ℓ2-normalized columns;
- \(y \in \mathbb {C}^m\): vector of samples;
- \(w \in \mathbb {R}^N\): weights;
- λ ≥ 0: regularization parameter;
- \(K \in \mathbb {N}\): number of iterations.

Procedure:

1. Let \(\hat {x}_0 = 0\) and \(S_0 = \emptyset\);
2. For k = 1, …, K:
   a. Find \(\displaystyle j_k \in \arg \max _{j \in [N]} \varDelta _\lambda (\hat{x}_{k-1},S_{k-1},j)\), with \(\varDelta_\lambda\) as in (7);
   b. Define \(S_k = S_{k-1} \cup \{j_k\}\);
   c. Compute \(\displaystyle \hat {x}_{k} \in \arg \min _{v \in \mathbb {C}^{N}} \|A v - y\|_2 \text{ s.t. } \operatorname {\mathrm {supp}}(v) \subseteq S_k\).

Output:

- \(\hat {x}_K \in \mathbb {C}^N\): approximate solution to Az = y.
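The steps of Algorithm 1 can be sketched in Python with NumPy as follows. This is a minimal, unoptimized illustration rather than the authors' implementation. It assumes \(\varDelta_\lambda(x,S,j) = \max\{|(A^*(y-Ax))_j|^2 - \lambda w_j^2, 0\}\) for \(j \notin S\), \(\max\{\lambda w_j^2 - |x_j|^2, 0\}\) for \(j \in S\) with \(x_j \neq 0\), and 0 otherwise; it solves step c with `numpy.linalg.lstsq`. The names `delta_lambda` and `womp` are hypothetical.

```python
import numpy as np


def delta_lambda(A, y, x, S, w, lam):
    """Delta_lambda(x, S, j) for every j in [N] (three-case rule assumed in the lead-in)."""
    N = A.shape[1]
    in_S = np.zeros(N, dtype=bool)
    in_S[np.asarray(S, dtype=int)] = True
    corr = np.abs(A.conj().T @ (y - A @ x)) ** 2          # |(A^*(y - Ax))_j|^2
    w2 = np.asarray(w, dtype=float) ** 2
    delta = np.where(in_S, 0.0, np.maximum(corr - lam * w2, 0.0))
    removable = in_S & (x != 0)                           # j in S with x_j != 0
    delta[removable] = np.maximum(lam * w2[removable] - np.abs(x[removable]) ** 2, 0.0)
    return delta


def womp(A, y, w, lam, K):
    """Sketch of Algorithm 1; A must already have l2-normalized columns (Remark 1)."""
    N = A.shape[1]
    x = np.zeros(N, dtype=complex)                        # x_0 = 0
    S = []                                                # S_0 = empty set
    for _ in range(K):
        delta = delta_lambda(A, y, x, S, w, lam)
        if delta.max() <= 0.0 and S:
            # every j in S_{k-1} is a maximizer; picking one leaves S_k = S_{k-1}
            continue
        j = int(np.argmax(delta))                         # ties: smallest index
        if j not in S:
            S.append(j)                                   # S_k = S_{k-1} U {j_k}
        # step c: least squares restricted to the columns in S_k
        coef, *_ = np.linalg.lstsq(A[:, S], y, rcond=None)
        x = np.zeros(N, dtype=complex)
        x[S] = coef
    return x, S


rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((30, 10)))      # 30 x 10, orthonormal columns
x_true = np.zeros(10)
x_true[[0, 3, 5]] = [3.0, 0.1, -2.0]
y = Q @ x_true
x_hat, support = womp(Q, y, w=np.ones(10), lam=0.5, K=5)
```

In this toy example, λ = 0.5 exceeds the squared size of the small coefficient 0.1, so the sketch keeps only the indices 0 and 5, whereas λ = 0 (plain OMP) also picks up index 3. Two design choices are ours: ties in the arg max go to the smallest index (the behavior of `numpy.argmax`), and when the maximum of \(\varDelta_\lambda\) is 0 with a nonempty support the sketch picks an index already in \(S_{k-1}\), which Algorithm 1 permits and which leaves the iterate unchanged.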

Remark 1

The ℓ2-normalization of the columns of A is required by Algorithm 1. If A does not satisfy this hypothesis, it suffices to apply WOMP to the normalized system \(\widetilde {A}z = y\), where \(\widetilde {A} = AM^{-1}\) and M is the diagonal matrix containing the ℓ2 norms of the columns of A on its main diagonal. The approximate solution \(\hat {x}_K\) to \(\widetilde {A}z = y\) computed via WOMP is then rescaled as \(M^{-1} \hat {x}_K\), which approximately solves Az = y, since \(A M^{-1}\hat{x}_K = \widetilde{A}\hat{x}_K\).
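The normalization and rescaling in Remark 1 amount to two one-line operations. In this NumPy sketch (`normalize_columns` and `rescale_solution` are hypothetical names), the columns of A are divided by their ℓ2 norms, i.e. \(\widetilde{A} = AM^{-1}\), and a solution \(\tilde{x}\) of \(\widetilde{A}z = y\) is mapped back to \(z = M^{-1}\tilde{x}\), so that \(Az = \widetilde{A}\tilde{x}\). A random vector stands in for the WOMP output.

```python
import numpy as np


def normalize_columns(A):
    """Return (A_tilde, norms) with A_tilde = A M^{-1} and M = diag(norms)."""
    norms = np.linalg.norm(A, axis=0)
    if np.any(norms == 0):
        raise ValueError("A has a zero column; it cannot be normalized")
    return A / norms, norms


def rescale_solution(x_tilde, norms):
    """Map an approximate solution of A_tilde z = y to one of A z = y: z = M^{-1} x_tilde."""
    return x_tilde / norms


rng = np.random.default_rng(2)
A = rng.standard_normal((8, 12)) * rng.uniform(0.1, 10.0, size=12)   # unequal column norms
A_tilde, norms = normalize_columns(A)
x_tilde = rng.standard_normal(12)          # stand-in for a WOMP output on A_tilde
z = rescale_solution(x_tilde, norms)
print(np.allclose(A @ z, A_tilde @ x_tilde))   # True
```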

Proposition 1 below justifies the weighted variant of OMP considered in Algorithm 1. In order to decrease \(G_\lambda\) as much as possible, at each iteration WOMP selects the index j that maximizes the quantity \(\varDelta_\lambda(x, S, j)\) defined in (7). The proposition makes the role of \(\varDelta_\lambda(x, S, j)\) transparent: it generalizes relation (5) to the weighted case, under conditions on A and x that are satisfied at each iteration of Algorithm 1.

Proposition 1

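Let λ ≥ 0, S ⊆ [N], \(A \in \mathbb {C}^{m \times N}\) with ℓ2-normalized columns, and \(x \in \mathbb {C}^N\) satisfying

\[ \operatorname{supp}(x) \subseteq S \quad \text{and} \quad x|_S \in \arg\min_{z \in \mathbb{C}^{|S|}} \|A_S z - y\|_2. \]

Then, for every j ∈ [N], the following holds:

\[ \min_{t \in \mathbb{C}} G_\lambda(x + t e_j) = G_\lambda(x) - \varDelta_\lambda(x, S, j), \]

where \(G_\lambda\) is defined as in (6) and \(\varDelta _\lambda : \mathbb {C}^N \times 2^{[N]} \times [N] \to \mathbb {R}\) is defined by

\[ \varDelta_\lambda(x,S,j) := \begin{cases} \max\{|(A^*(y-Ax))_j|^2 - \lambda w_j^2,\ 0\} & \text{if } j \notin S, \\ \max\{\lambda w_j^2 - |x_j|^2,\ 0\} & \text{if } j \in S \text{ and } x_j \neq 0, \\ 0 & \text{if } j \in S \text{ and } x_j = 0. \end{cases} \tag{7} \]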

Proof

Throughout the proof, we will denote the residual as r:= y − Ax.

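Let us first assume j ∉ S. In this case, \(x_j = 0\) (since \(\operatorname{supp}(x) \subseteq S\)) and we compute

\[ G_\lambda(x + t e_j) = \|r - t A e_j\|_2^2 + \lambda \|x\|_{0,w} + \lambda w_j^2 (1 - \delta_{t,0}), \]

where \(\delta_{x,y}\) is the Kronecker delta function. In particular, since \(\|Ae_j\|_2 = 1\), we have \(G_\lambda(x + te_j) = G_\lambda(x) + h(t)\), with

\[ h(t) := |t|^2 - 2\operatorname{Re}\big(\bar{t}\,(A^*r)_j\big) + \lambda w_j^2 (1 - \delta_{t,0}). \]

Now, if \((A^*r)_j = 0\), then h(t) is minimized for t = 0 and \(\min _{t \in \mathbb {C}} G_\lambda(x+te_j) = G_\lambda(x)\). On the other hand, if \((A^*r)_j \neq 0\), by arguing similarly to [7, Lemma 3.3], we see that

\[ \min_{t \in \mathbb{C}\setminus\{0\}} h(t) = \lambda w_j^2 - |(A^*r)_j|^2, \]

where the minimum is realized for \(t = (A^*r)_j\), so that \(|t| = |(A^*r)_j| \neq 0\). In summary,

\[ \min_{t \in \mathbb{C}} G_\lambda(x + te_j) = G_\lambda(x) - \max\{|(A^*r)_j|^2 - \lambda w_j^2,\ 0\}, \]

which concludes the case j ∉ S.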

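Now, assume j ∈ S. Since \(\operatorname{supp}(x) \subseteq S\), we have \(Ax = A_S x_S\). Moreover, since the vector \(x_S = x|_{S}\in \mathbb {C}^{|S|}\) is a least-squares solution to \(A_S z = y\), it satisfies \(A_S^*(y-A_S x_S) = 0\) and, in particular, \((A^*r)_j = 0\). (Here, \(A_S \in \mathbb {C}^{m \times |S|}\) denotes the submatrix of A corresponding to the columns in S.) Therefore, arguing similarly as before, we have

\[ G_\lambda(x + te_j) = \|r\|_2^2 + |t|^2 + \lambda \|x + te_j\|_{0,w}. \]

Considering only the terms depending on t, it is not difficult to see that the minimum of \(|t|^2 + \lambda\|x + te_j\|_{0,w}\) over \(t \in \mathbb{C}\) is attained at t = 0 or, if \(x_j \neq 0\), at \(t = -x_j\), where the weighted ℓ0 norm drops by \(w_j^2\):

\[ \min_{t \in \mathbb{C}} \big( |t|^2 + \lambda\|x + te_j\|_{0,w} \big) = \begin{cases} \min\{\lambda\|x\|_{0,w},\ |x_j|^2 + \lambda(\|x\|_{0,w} - w_j^2)\} & \text{if } x_j \neq 0, \\ \lambda\|x\|_{0,w} & \text{if } x_j = 0. \end{cases} \]

As a consequence, for every j ∈ S, we obtain

\[ \min_{t \in \mathbb{C}} G_\lambda(x + te_j) = \begin{cases} G_\lambda(x) - \max\{\lambda w_j^2 - |x_j|^2,\ 0\} & \text{if } x_j \neq 0, \\ G_\lambda(x) & \text{if } x_j = 0. \end{cases} \]

The results above, combined with simple algebraic manipulations, lead to the desired result. □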
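Proposition 1 can also be sanity-checked numerically on random data (this is not a proof). The NumPy sketch below uses a hypothetical helper, `check_proposition_1`, and assumes \(G_\lambda(z) = \|y - Az\|_2^2 + \lambda\sum_{j \in \operatorname{supp}(z)} w_j^2\) together with the three-case formula for \(\varDelta_\lambda\) (\(j \notin S\); \(j \in S\) with \(x_j \neq 0\); \(j \in S\) with \(x_j = 0\)). It builds x as the least-squares solution supported on S, checks that the candidate minimizers from the proof (\(t = (A^*r)_j\) or \(t = -x_j\)) attain \(G_\lambda(x) - \varDelta_\lambda(x,S,j)\), and probes random \(t \in \mathbb{C}\) to confirm that nothing lower is found.

```python
import numpy as np


def check_proposition_1(A, y, w, lam, S, trials=200, seed=0):
    """Return True if min_t G_lambda(x + t e_j) = G_lambda(x) - Delta_lambda(x, S, j) for all j."""
    rng = np.random.default_rng(seed)
    N = A.shape[1]
    w2 = np.asarray(w, dtype=float) ** 2

    def G(z):
        # G_lambda(z) = ||y - A z||_2^2 + lam * (sum of w_j^2 over supp(z))
        return np.linalg.norm(y - A @ z) ** 2 + lam * np.sum(w2[np.abs(z) > 0])

    x = np.zeros(N, dtype=complex)
    x[S] = np.linalg.lstsq(A[:, S], y, rcond=None)[0]   # hypothesis of Proposition 1
    c = A.conj().T @ (y - A @ x)                        # c_j = (A^* r)_j
    for j in range(N):
        if j not in S:
            delta, t_star = max(abs(c[j]) ** 2 - lam * w2[j], 0.0), c[j]
        elif x[j] != 0:
            delta, t_star = max(lam * w2[j] - abs(x[j]) ** 2, 0.0), -x[j]
        else:
            delta, t_star = 0.0, 0.0
        e_j = np.zeros(N, dtype=complex)
        e_j[j] = 1.0
        target = G(x) - delta
        attained = G(x + t_star * e_j) if delta > 0 else G(x)
        if not np.isclose(attained, target):
            return False
        # random coefficients must never beat the claimed minimum
        ts = rng.standard_normal(trials) + 1j * rng.standard_normal(trials)
        if min(G(x + t * e_j) for t in ts) < target - 1e-9:
            return False
    return True


rng = np.random.default_rng(3)
A = rng.standard_normal((15, 30)) + 1j * rng.standard_normal((15, 30))
A /= np.linalg.norm(A, axis=0)                          # l2-normalized columns
y = rng.standard_normal(15) + 1j * rng.standard_normal(15)
w = 1.0 + rng.random(30)
print(all(check_proposition_1(A, y, w, lam, S=[2, 7, 11]) for lam in (0.0, 0.05, 0.5, 5.0)))
```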

4 Numerical Results

In this section, we show the effectiveness of WOMP (Algorithm 1) in the sparse multivariate function approximation setting described in Sect. 2. In particular, we choose the weights w as in (4). We consider the function

We let \(\{\phi _j\}_{j \in \mathbb {N}_0^d}\) be the tensorized Legendre and Chebyshev bases and ν be the respective orthogonality measure. In Figs. 1 and 2 we show the relative \(L^2_\nu (D)\)-error of the approximate solution \(\hat {x}_K\) computed via WOMP as a function of the number of iterations K, for different values of the regularization parameter λ. Here, WOMP is applied to the linear system Az = y, where A and y are defined by (2) and the columns of A are ℓ2-normalized according to Remark 1. We consider λ = 0 (corresponding to OMP) and \(\lambda = 10^{-k}\), with k = 3, 3.5, 4, 4.5, 5. Here, Λ is the hyperbolic cross of order s = 10, corresponding to N = |Λ| = 571. Moreover, we consider m = 60 and m = 80. The results are averaged over 25 runs, and the \(L^2_\nu (D)\)-error is computed with respect to a reference solution approximated via least squares using 20N = 11,420 random i.i.d. samples drawn according to ν. We compare the accuracy of WOMP with that of the QCBP program (3) with η = 0 and of WQCBP with tolerance parameter \(\eta = 10^{-8}\). To solve these two programs we use CVX Version 1.2, a package for specifying and solving convex programs [8, 9]. In CVX, we use the solver ‘mosek’ and we set the CVX precision to ‘high’.
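Because \(\{\phi_j\}\) is orthonormal in \(L^2_\nu(D)\), Parseval's identity turns the relative \(L^2_\nu(D)\)-error between two expansions on the same index set into a relative ℓ2 error between coefficient vectors. The NumPy sketch below (hypothetical helper names) computes a least-squares reference from an oversampled system, for instance with 20N rows, and averages relative errors over independent runs. It assumes that both the reference and the WOMP approximation are expanded on Λ; how the reference error was actually evaluated in the experiments may differ.

```python
import numpy as np


def reference_coefficients(A_ref, y_ref):
    """Least-squares coefficients from an oversampled system (e.g. 20N samples)."""
    coef, *_ = np.linalg.lstsq(A_ref, y_ref, rcond=None)
    return coef


def relative_l2_error(x_hat, x_ref):
    """Relative L^2_nu error via Parseval: ||x_ref - x_hat||_2 / ||x_ref||_2."""
    return float(np.linalg.norm(x_ref - x_hat) / np.linalg.norm(x_ref))


def mean_relative_error(x_hats, x_ref):
    """Average relative error over independent runs (e.g. the 25 runs of Sect. 4)."""
    return float(np.mean([relative_l2_error(x, x_ref) for x in x_hats]))


rng = np.random.default_rng(4)
A_ref = rng.standard_normal((200, 5))       # stand-in for an oversampled sampling matrix
x0 = np.arange(1.0, 6.0)
x_ref = reference_coefficients(A_ref, A_ref @ x0)
```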

Fig. 1. Mean relative \(L^2_\nu (D)\)-error as a function of the number of iterations K of WOMP (Algorithm 1) for different values of the regularization parameter λ, for the approximation of the function f defined in (8) using Legendre polynomials. The accuracy of WOMP is compared with that of QCBP and WQCBP.

Fig. 2. The same experiment as in Fig. 1, with Chebyshev polynomials.

Figures 1 and 2 show the benefits of using weights compared with the unweighted OMP approach, when the parameter λ is tuned appropriately. A good choice of λ for the setting considered here seems to lie between \(10^{-4.5}\) and \(10^{-3.5}\). We also observe that WOMP is able to reach a level of accuracy similar to that of WQCBP. An interesting feature of WOMP with respect to OMP is its better stability. We observe that after the m-th iteration, the OMP accuracy starts getting substantially worse. This can be explained by the fact that once K exceeds m, the least-squares problems in Algorithm 1 become underdetermined and OMP tends to destroy sparsity by overfitting the data. This phenomenon is not observed in WOMP, thanks to its ability to keep the support of \(\hat {x}_K\) small via the explicit enforcement of the weighted sparsity prior (see Fig. 3).

Fig. 3. Support size of \(\hat {x}_K\) as a function of the number of iterations K for WOMP in the same setting as in Figs. 1 and 2, with Legendre (left) and Chebyshev (right) polynomials. The larger the regularization parameter λ, the sparser the solution (in the left plot, the curves relative to \(\lambda = 10^{-4.5}\) and \(\lambda = 10^{-4}\) overlap; in the right plot, the same happens for \(\lambda = 10^{-4}\) and \(\lambda = 10^{-3.5}\)).

We illustrate the computational advantage of WOMP over the convex minimization programs QCBP and WQCBP solved via CVX by tracking the runtimes of the different approaches. Table 1 reports the running times for the different recovery strategies.

The running times for WOMP refer to K = 25 iterations, which suffice to reach the best accuracy for every value of λ, as shown in Figs. 1 and 2. Moreover, the computational times for WOMP include the ℓ2-normalization of the columns of A (see Remark 1). WOMP consistently outperforms convex minimization, being more than ten times faster in all cases. We note that a key role in this comparison is played by the parameter K or, equivalently, by the sparsity of the solution. Indeed, in this case, a larger value of K would slow down WOMP without improving the accuracy of its solution (see Figs. 1 and 2).

5 Conclusions

We have considered a greedy recovery strategy for high-dimensional function approximation from a small set of pointwise samples. In particular, we have proposed a generalization of the OMP algorithm to the setting of weighted sparsity (Algorithm 1). The corresponding greedy selection strategy is derived in Proposition 1.

Numerical experiments show that WOMP is an effective strategy for high-dimensional approximation, able to reach the same accuracy level as WQCBP while being considerably faster when the target sparsity level is small enough. A key role is played by the regularization parameter λ, which may be difficult to tune due to its sensitivity to the parameters of the problem (m, s, and d) and to the polynomial basis employed. In other applications, where explicit formulas for the weights such as (4) are not available, there might also be a nontrivial interplay between λ and w. In summary, despite the promising nature of the numerical experiments illustrated in this paper, a more extensive numerical investigation is needed to study the sensitivity of WOMP with respect to λ. Moreover, a theoretical analysis of the WOMP approach might yield practical recipes for the choice of this parameter, similarly to [2]. This type of analysis may also help identify the sparsity regime where WOMP outperforms weighted ℓ1 minimization, which, in turn, could be formulated in terms of suitable assumptions on the regularity of f. These questions are beyond the scope of this paper and will be the subject of future work.

Notes

1. The term “norm” here is an abuse of language, but we will stick to it due to its popularity.

References

[1] Adcock, B.: Infinite-dimensional compressed sensing and function interpolation. Found. Comput. Math. 18(3), 661–701 (2018)

[2] Adcock, B., Bao, A., Brugiapaglia, S.: Correcting for unknown errors in sparse high-dimensional function approximation (2017). Preprint. arXiv:1711.07622

[3] Adcock, B., Brugiapaglia, S., Webster, C.G.: Compressed sensing approaches for polynomial approximation of high-dimensional functions. In: Boche, H., Caire, G., Calderbank, R., März, M., Kutyniok, G., Mathar, R. (eds.) Compressed Sensing and Its Applications: Second International MATHEON Conference 2015, pp. 93–124. Springer International Publishing, Cham (2017)

[4] Bouchot, J.-L., Rauhut, H., Schwab, C.: Multi-level compressed sensing Petrov-Galerkin discretization of high-dimensional parametric PDEs (2017). Preprint. arXiv:1701.01671

[5] Chkifa, A., Dexter, N., Tran, H., Webster, C.G.: Polynomial approximation via compressed sensing of high-dimensional functions on lower sets. Math. Comp. 87(311), 1415–1450 (2018)

[6] Doostan, A., Owhadi, H.: A non-adapted sparse approximation of PDEs with stochastic inputs. J. Comput. Phys. 230(8), 3015–3034 (2011)

[7] Foucart, S., Rauhut, H.: A Mathematical Introduction to Compressive Sensing. Birkhäuser, Basel (2013)

[8] Grant, M., Boyd, S.: Graph implementations for nonsmooth convex programs. In: Blondel, V., Boyd, S., Kimura, H. (eds.) Recent Advances in Learning and Control. Lecture Notes in Control and Information Sciences, pp. 95–110. Springer, Berlin (2008)

[9] Grant, M., Boyd, S.: CVX: Matlab software for disciplined convex programming, version 2.1. http://cvxr.com/cvx (2014)

[10] Li, G.Z., Wang, D.Q., Zhang, Z.K., Li, Z.Y.: A weighted OMP algorithm for compressive UWB channel estimation. In: Applied Mechanics and Materials, vol. 392, pp. 852–856. Trans Tech Publications, Zurich (2013)

[11] Mathelin, L., Gallivan, K.A.: A compressed sensing approach for partial differential equations with random input data. Commun. Comput. Phys. 12(4), 919–954 (2012)

[12] Peng, J., Hampton, J., Doostan, A.: A weighted ℓ1-minimization approach for sparse polynomial chaos expansions. J. Comput. Phys. 267, 92–111 (2014)

[13] Rauhut, H., Schwab, C.: Compressive sensing Petrov-Galerkin approximation of high-dimensional parametric operator equations. Math. Comp. 86(304), 661–700 (2017)

[14] Rauhut, H., Ward, R.: Interpolation via weighted ℓ1 minimization. Appl. Comput. Harmon. Anal. 40(2), 321–351 (2016)

[15] Temlyakov, V.N.: Greedy approximation. Acta Numer. 17, 235–409 (2008)

[16] Xiao-chuan, W., Wei-bo, D., Ying-ning, D.: A weighted OMP algorithm for Doppler superresolution. In: 2013 Proceedings of the International Symposium on Antennas & Propagation (ISAP), vol. 2, pp. 1064–1067. IEEE, Piscataway (2013)

[17] Yang, X., Karniadakis, G.E.: Reweighted ℓ1 minimization method for stochastic elliptic differential equations. J. Comput. Phys. 248, 87–108 (2013)

Acknowledgements

The authors acknowledge the support of the Natural Sciences and Engineering Research Council of Canada through grant number 611675, and of the Pacific Institute for the Mathematical Sciences (PIMS) Collaborative Research Group “High-Dimensional Data Analysis”. S.B. also acknowledges the support of the PIMS Postdoctoral Training Centre in Stochastics.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this paper

Cite this paper

Adcock, B., Brugiapaglia, S. (2020). Sparse Approximation of Multivariate Functions from Small Datasets Via Weighted Orthogonal Matching Pursuit. In: Sherwin, S.J., Moxey, D., Peiró, J., Vincent, P.E., Schwab, C. (eds) Spectral and High Order Methods for Partial Differential Equations ICOSAHOM 2018. Lecture Notes in Computational Science and Engineering, vol 134. Springer, Cham. https://doi.org/10.1007/978-3-030-39647-3_49


DOI: https://doi.org/10.1007/978-3-030-39647-3_49


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39646-6

Online ISBN: 978-3-030-39647-3

eBook Packages: Mathematics and Statistics (R0)