Abstract

Many people think “adaptive instruction” is synonymous with “personalized instruction,” or training designed to appeal to the desires and whims of the individual learner. However, since the purpose of training is to support an organization’s need for a fully competent and developed workforce, adaptive instruction is better thought of as training that changes based on the learning needs of the individual (or group). Those needs are identified by gaps in the learner’s knowledge and by the learner’s depth of understanding of the content. After all, the purpose of training is to “impact … individual, process, work team, and/or organizational performance” [1]. The adaptations are about giving each learner the training they need, based on precise gaps in the individual’s knowledge and skill level (e.g., novice or expert) with the content, while ensuring the training is designed in line with theories of how people learn. For example, if the gap is in a specific motor skill, then the training will provide opportunities for the learner to practice, develop, and hone the skill. This could be done in virtual, simulated, or live environments. The reason for physical practice is not that the learner “prefers motion” or is a so-called “kinesthetic learner,” but that the desired training outcome is physical performance of the task.

Those who are unfamiliar with instructional design approaches to adaptive instruction might view “testing out” of a course as a form of adaptive training (or at least as a precursor to the current approach). However, the test-out method is built around a linear view of training and an assumption that all learners must work through all of the training materials. The problem is that people have very different backgrounds and knowledge bases and clearly do not all require the same training to succeed in their work [2]. In addition, training gaps sometimes show that content traditionally taught in a linear fashion does not need to be delivered that way. This is not to suggest that there are no logical sequences or progressions of development. Rather, instructional design should expand its view of what is possible in training delivery and design each course to best meet the needs of the learners and the workforce. Consider that 20 years ago we had to design courses to fit into specific time blocks because of the constraints of gathering an instructor and trainees in one place. This led to some courses that follow a highly structured progression even though the concepts and skills are not logically linear at all. Adaptive instruction allows for a very different method of delivery.

Instructional designers use learning theories and best practices in instructional design to determine which teaching strategies to use for specific types of learning. Thus, it falls to the instructional designer to determine how the training ought to be developed to best meet the needs of the individual learner. The adaptive instructional system (AIS) must have methods to identify the learner’s knowledge and skill level and must deliver training which supports each unique combination of knowledge and skill level, especially as learners gain knowledge and their skill levels change.

The author investigates research-based approaches to identifying knowledge gaps and skill levels in academic settings and applies them to workplace training. Further, the author identifies specific instructional design approaches and organizational thinking needed to support this type of adaptive approach to workplace training. The paper identifies the technical components and capabilities needed for a fully functional AIS that provides adaptive training based on the learner’s knowledge gaps and skill level (from novice to expert). The discussion provides specific examples to illustrate how adaptive training would differ for individual learners in training designed for pilots, air traffic controllers, project managers, etc. Finally, the author calls on instructional designers and software engineers to collaborate in developing systems that provide the training the workforce needs and that help us learn more about how people learn, so that we can keep refining the training as we discover what works best.




1 Pilot Training: A Need for Adaptive Instructional Systems

The idea for this research paper began when the author was tasked with evaluating and redesigning the academic curriculum component of an undergraduate pilot training program. The original curriculum was designed to be delivered over an 18-month period, during which students would have a limited number of flights in high-fidelity simulators and, eventually, solo flights.

The goal of the new program was to reduce the total training time by as much as 9 months. Students were given low-fidelity simulators which they could use as often as they liked from day one of the training. To reduce the total time for the course, the academic training needed to occur in a condensed time frame (compared with its original format) and in tandem with the students’ initial simulated flight experiences. In fact, because the low-fidelity simulators allowed students to fly solo sooner, the customer even sought to provide academic instruction before the students’ arrival so that they could complete the academics before live flight.

The existing curriculum had been designed for live instruction combined with e-learning modules and reading materials. The e-learning modules were primarily click-and-read with a limited amount of animated graphics and videos to demonstrate or explain some of the concepts. The reading materials were lengthy guides of 100 or more pages per topic. Most of the live instruction was designed to provide students with support for their independent work (e-learning and readings).

The customer believed that students could likely test out of some of the lessons or pieces of the curriculum and that this would reduce the time burden. Further, the customer was interested in providing individualized training.

As the author examined the academic training content, several challenges became apparent.

1. What is the best way to determine which components students have already mastered? There was so much material (over 1,000 pages of student readings alone) that even a simple test-out method could become a burden in itself.

2. How should the training be designed to adapt to the needs of each student while still ensuring all students meet all of the requirements (i.e., master the objectives)? The program needed an adaptive delivery system that could accommodate individual needs and account for all of the variables.

3. What design tools could the author use to map all of this training material? The tool needed to support different learning paths, different learner aptitudes, sequencing, additional support for students when needed, etc.

The author conducted research to answer the questions above and, in so doing, developed an outline for an adaptive instructional system that could be used for training such as the pilot training program but that is much broader in its application to workplace training. This paper describes that adaptive instructional approach.

2 Adaptive Instruction for Organizational Training

2.1 Adaptive Training vs. Personalized Training

The purpose of training is to enable individuals and groups to improve their performance to better support the organization [1]. Adaptive instruction for workplace training should be designed to improve the employee-learner’s ability to achieve the outcome or otherwise improve the outcomes for the organization. Thus, adaptive training is defined as training which is delivered or tailored to the individual in order to best support learning mastery for the individual within the confines of the organization’s needs.

It is important to distinguish training that is tailored to meet a learner’s needs from training that is tailored to meet a learner’s preferences. Some argue that adaptive training should be developed to support the individual learner’s preferences (e.g., create videos and games for learners who prefer learning this way). However, research shows that developing training for different delivery methods based on learner preference yields no better results, and may even be harmful because learners do not develop or hone the skills needed to learn (or succeed) in different learning environments and contexts [3]. Indeed, we must ensure our workforce can learn in many different contexts because we cannot possibly deliver every training to match every learner’s preference. Further, developing duplicative training is costly. To keep the two ideas distinct, the author refers to training delivered based on learner preference as personalized training and to training delivered based on learner needs as adaptive training.

2.2 Adaptive Training for Different Skill Levels

In the workplace it is highly likely that individuals, even those working the same job, have varying skill levels with the specific content. Yet, there is a commonly held misunderstanding that all learners need all of the same content [2]. In addition to the problem of not recognizing differences among learners (or not acknowledging those differences when developing training), most organizations do not have systems in place to support the development or delivery of such differentiated training.

Consider traditional live training, in which employees are brought together for a set amount of time (e.g., a half-day training or a three-day course). In such an environment, all learners need to be ready to tackle each topic at the same time, moving at the same pace and reaching the finish line together. While it is possible, even likely, that some instructors differentiate instruction for some learners when appropriate and possible, there are also built-in constraints limiting such adaptations, if only the need to meet all course objectives. Further, such differentiation is not often built into the training.

As for computer-based training, this too is mainly designed with a one-size-fits-all approach. It is true that learners do not necessarily have to move at the same pace, but they are generally all provided the same exact training content and are expected to go through all of it.

The Learning Continuum.

Thinking of learning as a continuum, the author defines three skill levels among learners: novice, intermediate, and expert. A skill level refers to the depth of understanding or familiarity a learner has with the topic. A novice is someone who has little, if any, prior knowledge in the particular content area; an expert is someone who has a broad knowledge base and can draw on experience and a complex level of understanding. The intermediate falls somewhere between the novice and the expert. Note that the term expert describes the learner’s level of experience and is not to be confused with a subject matter expert (SME). All of the learners identified, from novice through expert, need some training on the topic; the difference lies in how much training, or what type of training, they need. A person can be a novice in one area and an expert in another, and in fact this is to be expected. Thus, it is important to determine the learner’s skill level (novice, intermediate, expert) for each topic.

The one-size-fits-all model not only wastes the valuable resource of employee time when employees are given training they do not need, but also yields diminishing returns, because people respond differently to training approaches as their knowledge, skill, and/or ability improves [4, 5]. In addition, the expertise-reversal effect shows that providing experts with training designed for novices can have negative impacts on the training outcomes for experts, and vice versa [6]. In other words, if we remove the extra supports and information that could help the novice but still require the novice to take the training, the novice is likely to do poorly. And if we require the expert to take the training designed for the novice, the expert is likely to do worse than with training designed for the expert. The expertise-reversal effect is not intuitive; we often assume that the more experienced worker will thrive regardless of the training approach. However, research has shown time and again that providing extraneous materials leads to poorer learning outcomes in various learning contexts, including math, literature, statistics, foreign language, computer skills, and accounting [7]. Since the purpose of training is to enable people to perform well at their jobs, poor training scenarios risk poor job performance.

As noted, research shows the need for differing training approaches based on the learner’s skill with the topic. Following are two examples which the author created to demonstrate how adaptive training could be provided to the novice, intermediate, and expert.

Project Manager Training.

A company develops training for new project managers that covers several topics: budgeting; reports; human resources and personnel management; and contracts and legal compliance. The new managers have different levels of experience with each topic. Some learners will be familiar with all of the required reports and will be able to tackle this part of their new role with minimal training. Others will be very comfortable with all of the information required in the reports but will have no idea how to navigate the section of the company’s intranet where the report templates and the information that goes into the reports can be found. The adaptive training would be provided as follows:

- The learner who only needs training on navigating the intranet will be provided with that information only.

- The learners who do not require information about the intranet will be given training that focuses on the reports themselves.

- For learners who need all of the information, the training will focus on one component at a time, guiding learners through the process so that they can master each task.
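The three routing rules above can be sketched as a small selection function. This is a minimal illustration in Python, not the author’s system: it assumes the needs analysis for the reports topic reduces to two yes/no gaps, and the `ReportingGaps` type and the module IDs (`reports-content`, `intranet-navigation`, and so on) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReportingGaps:
    """Gaps found for one learner on the reports topic (hypothetical fields)."""
    needs_report_content: bool  # does not yet know what the reports require
    needs_intranet_nav: bool    # cannot yet find templates and source data on the intranet


def select_report_modules(gaps: ReportingGaps) -> list[str]:
    """Return the (hypothetical) module IDs this learner should receive, in order."""
    if gaps.needs_report_content and gaps.needs_intranet_nav:
        # Needs everything: one component at a time, with guidance at each step.
        return ["reports-content-guided", "intranet-navigation-guided",
                "reports-end-to-end-practice"]
    if gaps.needs_intranet_nav:
        # Already knows the reports; only the intranet is new.
        return ["intranet-navigation"]
    if gaps.needs_report_content:
        # Already knows the intranet; focus on the reports themselves.
        return ["reports-content"]
    # No gaps on this topic: nothing to assign.
    return []


print(select_report_modules(ReportingGaps(needs_report_content=False,
                                          needs_intranet_nav=True)))
# ['intranet-navigation']
```

In practice the two flags would come from the assessments discussed in Sect. 3.1 rather than being set by hand.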

Inquiry/Discovery-Based Training for IT Security.

The following example is specifically provided to demonstrate that even inquiry-based (discovery-based) approaches can be used in an adaptive instructional model. Discovery-based training approaches are based on the work of Jerome Bruner, who said that people learn when they build meaning for themselves through the act of discovery [8]. Kalyuga identified specific approaches to discovery-based instruction for the novice [4]. When considering the discovery-based approach for adaptive instruction, it is important to recognize the challenges facing learners of different skill levels. In particular, if the training is too open-ended, the novice will expend effort trying to understand some of the basics at the expense of having cognitive resources to make discoveries. Because the novice is new to the content, s/he will not know what is most important for the task at hand. Thus, the discovery-based approach must be designed differently for the novice than for the expert. In the case of the novice, the training must limit the extraneous information (or unknowns) and focus on the area where the learner should be making the discovery.

Here is how the IT Security training might differ for the novice, the intermediate learner, and the expert. The training is about complex technical security policies. Learners are given a real-life example and asked what they should do in the situation. All learners receive an open-ended question, but the training differs as follows.

- The training for the novice will include only the terminology that is important to the task at hand and will explain that terminology. This enables the novice to focus on the challenge of figuring out what the employee should do rather than having to look up terms and struggle to understand the policy.

- The training for intermediate learners will provide explanations for some of the terminology, as well as links (or text that the learner can click to learn more) to explanations of the policy. In this way, the intermediate learner is given some guidance, but not as much as the novice.

- The training for the expert will provide an open-ended question with links to policies and terminology but will not provide any specific explanations of the policies within the body of the problem. The problem may also include extraneous policies or a more complex situation. This allows the learner to come up with a solution without being distracted by basic information that is already familiar.
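One way to picture the three versions above is as a single problem template whose supports are switched by skill level. The sketch below is a hypothetical Python illustration: the `Level` enum, the `build_problem` function, and the policy IDs are invented, and explaining "about half" of the terms inline for intermediate learners is an assumed stand-in for the text’s “some of the terminology.”

```python
from enum import Enum


class Level(Enum):
    NOVICE = "novice"
    INTERMEDIATE = "intermediate"
    EXPERT = "expert"


def build_problem(level: Level, scenario: str, key_terms: dict[str, str],
                  policy_links: list[str], extra_policies: list[str]) -> dict:
    """Assemble one open-ended security problem with level-appropriate support.

    key_terms maps each term the task depends on to a short explanation.
    policy_links and extra_policies are references to policy texts (hypothetical IDs).
    """
    terms = sorted(key_terms)
    problem = {"scenario": scenario, "question": "What should the employee do?"}
    if level is Level.NOVICE:
        # Only task-relevant terms, each explained inline; nothing extra to look up.
        problem["inline_explanations"] = {t: key_terms[t] for t in terms}
        problem["links"] = []
    elif level is Level.INTERMEDIATE:
        # Assumption: explain about half the terms inline; the rest, plus the
        # policies, become click-to-learn-more links.
        half = len(terms) // 2
        problem["inline_explanations"] = {t: key_terms[t] for t in terms[:half]}
        problem["links"] = terms[half:] + policy_links
    else:
        # Expert: no inline explanations; links only, plus extraneous policies.
        problem["inline_explanations"] = {}
        problem["links"] = terms + policy_links + extra_policies
    return problem


problem = build_problem(
    Level.INTERMEDIATE,
    "A contractor asks you to share your VPN login.",
    {"MFA": "multi-factor authentication", "VPN": "virtual private network"},
    policy_links=["POL-7"],
    extra_policies=["POL-12"],
)
print(problem["links"])  # ['VPN', 'POL-7']
```

Keeping one template and varying only the supports means the ID authors the scenario once. The open-ended question is identical at every level, as the text requires.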

2.3 Adaptations for Different Learning Paths

The adaptive training should address more than differentiation based on learner familiarity; it must also allow for different learning paths. Returning to the example of the pilot training, the academic content included material on physics and aerodynamics. Since all of the students were undergraduate college students, it is likely that some were familiar with some of the physics concepts. However, even among the students with that familiarity, some would need the training to cover more of the physics content than others. The adaptive training model could provide each student with only the components they needed. To connect this with the novice, intermediate, and expert levels, suppose two students need all of the pilot-specific aerodynamics content. The student who has a strong grasp of physics is provided training designed for an expert, and the student who has no physics background is provided training designed for a novice. A third student has already mastered the pilot-specific aerodynamics content and does not need this part of the training at all.

Figure 1 depicts how this might work by showing different learning paths as routes on a map. Each point on the map represents a knowledge, skill, or ability (KSA) that the learner must master to achieve the full course objectives. If a learner has already mastered a KSA, then the learner will not undergo training in that area (i.e., will not be directed to that point on the map). (The diagram does not indicate novice, intermediate, or expert, as this is another layer that must be addressed at each point on the map and may change as a learner progresses through the training.)

This form of adaptive training allows each individual learner to travel the path which is most expedient and appropriate for that person. One person may need to go to all locations; another learner may only need to visit one or two. Some learners will need to take more time or revisit areas while others will grasp the content or develop the skill relatively quickly.
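The map in Fig. 1 can be modeled as a directed graph of KSAs with prerequisite edges. Under that assumption, a learner’s route is a topological order of the graph with the already-mastered points removed. The Python sketch below uses the standard-library `graphlib` module (Python 3.9+). The KSA names are a hypothetical slice of the aerodynamics content, and `plan_path` is an illustrative helper, not part of any described system.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def plan_path(prerequisites: dict[str, set[str]], mastered: set[str]) -> list[str]:
    """Return the KSAs a learner still needs, in an order that respects prerequisites.

    prerequisites maps each KSA to the set of KSAs that must come before it.
    Mastered KSAs are left off the route but still count as satisfied.
    Raises graphlib.CycleError if the map contains a cycle.
    """
    order = TopologicalSorter(prerequisites).static_order()
    return [ksa for ksa in order if ksa not in mastered]


# Hypothetical slice of the aerodynamics map.
ksa_map = {
    "lift": {"basic-physics"},
    "drag": {"basic-physics"},
    "stall-recovery": {"lift", "drag"},
}
print(plan_path(ksa_map, mastered={"basic-physics", "drag"}))  # ['lift', 'stall-recovery']
```

This covers only which points a learner visits. The novice, intermediate, and expert layer at each point, and revisiting a point after a failed attempt, would sit on top of this routing.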

3 The Adaptive Instructional System

Adaptive instruction allows for individualized learning paths and accounts for the different needs of learners based on their skill level, even when providing training for large groups of people. This adaptive approach requires very specific input from instructional designers (IDs) and a technical system or architecture which delivers the adaptive training. Since the instructional design approach is embedded or integrated into the technical system, the discussion of the different technical capabilities will include specifics on the role of instructional design. The adaptive instructional system (AIS) must include capabilities, methods, and systems which:

- Determine the learner’s skill level and knowledge gaps

- Conduct periodic learner analysis to provide adaptive training delivery

- Evaluate the learner experience and the effectiveness of the adaptive system

- Provide reporting tools and metrics for students, instructors, and organizational leaders

- Support adaptive training in digital and live training environments

In addition to the components within the AIS, IDs need development tools to map the training components by learner level and by learning path, while ensuring that the learning objectives and outcomes are addressed so that all learners receive all the training they need.

The following discussion looks at each AIS requirement in greater detail from the perspective of instructional design and learning theory. While the discussion includes some specific examples, it deliberately avoids prescribing the technical solution(s), because the author believes that software engineers and computer scientists should bring their own expertise and insight to the problem and are likely to arrive at a much more elegant solution that addresses all of the instructional requirements.

3.1 Learner’s Skill Level and Knowledge Gaps

In order to ensure the learner is given the right training, the system must determine the learner’s gaps in knowledge and the learner’s skill level for each specific component of the training. The system should take into account historical information, assessments, and self-reporting about knowledge and skill level.

Learner Certifications and Training History.

There are many individual data points that can serve as a starting point for determining a learner’s knowledge base and skill level. These include professional licenses, certifications, and academic degrees that indicate a base level of knowledge or skill with the particular content. The system should also account for other training the employee has taken, particularly any training provided by the company (i.e., the same organization that is providing the adaptive training). Certifications and completed training do not guarantee that the learner has mastered the material, but they can still provide helpful information about both knowledge gaps and skill levels. The ID should provide lists of courses, certifications, and other indicators of knowledge in the particular area. As the system matures, the AIS may provide analysis showing which types of past training are strong indicators of a learner’s knowledge.
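The ID-provided lists described above could feed a simple lookup that proposes a provisional starting level per KSA. This Python sketch is hypothetical throughout: the record names, the `EVIDENCE` table, and the levels in it are invented for illustration. Keeping the highest suggested level is an assumed design choice; taking the lowest instead would be the more conservative option.

```python
# Hypothetical ID-authored evidence table: record -> {KSA: provisional level}.
# Entries are illustrative only; a real table would come from the ID's analysis.
EVIDENCE = {
    "college-physics-1": {"basic-physics": "intermediate"},
    "private-pilot-license": {"basic-aerodynamics": "intermediate"},
    "flight-instructor-certificate": {"basic-aerodynamics": "expert"},
}

LEVEL_RANK = {"novice": 0, "intermediate": 1, "expert": 2}


def provisional_levels(history: list[str]) -> dict[str, str]:
    """Combine a learner's records into a provisional starting level per KSA.

    Records are only a starting hint (they do not prove mastery): for each KSA
    the highest suggested level is kept, and later assessments confirm or revise it.
    Unrecognized records are ignored; KSAs with no evidence are left out.
    """
    levels: dict[str, str] = {}
    for record in history:
        for ksa, level in EVIDENCE.get(record, {}).items():
            current = levels.get(ksa)
            if current is None or LEVEL_RANK[level] > LEVEL_RANK[current]:
                levels[ksa] = level
    return levels


print(provisional_levels(["private-pilot-license", "flight-instructor-certificate"]))
# {'basic-aerodynamics': 'expert'}
```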

Assessing Skill Level and Perceived Effort.

One way to determine whether a learner is a novice, intermediate, or expert is to ask the learner to describe how they would solve a difficult problem. This approach is based on research and theories about the differences in the way that novices and experts approach a problem, and follows a similar process used by Kalyuga and Sweller [9]. Here is how it works. At the beginning of the training, learners are presented with a complex problem and asked to describe or tell what they would do first in solving the problem. The learner’s answer is used to identify where s/he falls on the novice to expert continuum. Obviously the ID will need to develop the complex problems, possible student responses, and the linkages between student responses and skill level.

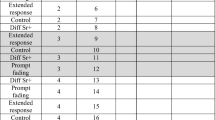

Using the example of the project manager training, the learner could be given the following problem: The quarterly report XJ5 is due next week. Describe the first thing you need to do in order to get this done. Table 1 provides examples of the different responses and the rationale the ID would use to determine the skill levels.
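The linkage between a learner’s first step and a skill level can be stored as an ID-authored answer key. Table 1 is not reproduced here, so the options below are invented placeholders, not its contents. The sketch also simplifies the free-text description to a choice among options; free-text answers would first need to be coded by a person or a classifier. `FIRST_STEP_KEY` and `classify_first_step` are hypothetical names.

```python
# Hypothetical answer key for the "quarterly report XJ5" problem. The options and
# their levels are invented for illustration; they are not the contents of Table 1.
FIRST_STEP_KEY = {
    "a": ("Ask a colleague what report XJ5 is", "novice"),
    "b": ("Find the XJ5 template on the intranet and read its instructions", "intermediate"),
    "c": ("Pull this quarter's figures from the source systems into the XJ5 template", "expert"),
}


def classify_first_step(choice: str) -> str:
    """Map the learner's chosen first step to a skill level.

    Unrecognized answers default to novice, so the learner gets more support
    rather than less; later assessments can move the learner up.
    """
    _, level = FIRST_STEP_KEY.get(choice.strip().lower(), ("", "novice"))
    return level


print(classify_first_step("C"))  # expert
```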

It is important to assess skill levels at various points in the training, as some learners will progress from novice to expert rather quickly while others may require more practice and guidance to move from novice to intermediate.

Self-reporting Skill Levels.

The training should include questions asking learners to rate their own skill level in the course material at intervals throughout the training. It is true that a novice might claim to be an expert in the hope of skipping the training. However, self-reporting does not let the learner skip the training. Instead, the learner might (depending on the other measures in this category) begin with a training component that is too difficult. When the learner does not master the concepts, the system will adapt and provide different training that is better suited to the learner.
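The self-correcting behavior described above can be sketched as a small state update. In this Python sketch the self-report sets only the starting version of a component, and each failed attempt steps the learner down one level. The one-level step and the helper names `next_level` and `levels_used` are assumptions. For brevity the sketch ignores the other measures that the text says would also inform the starting point.

```python
LEVELS = ["novice", "intermediate", "expert"]


def next_level(current: str, mastered: bool) -> str:
    """Step down one level after a component is not mastered; otherwise stay."""
    i = LEVELS.index(current)
    if not mastered and i > 0:
        return LEVELS[i - 1]
    return current


def levels_used(self_reported: str, outcomes: list[bool]) -> list[str]:
    """Return the version of the component delivered on each attempt.

    The self-report only picks the starting version; no attempt is skipped.
    """
    level = self_reported
    used = []
    for mastered in outcomes:
        used.append(level)
        level = next_level(level, mastered)
    return used


# A novice who claims to be an expert fails twice, then masters the novice version.
print(levels_used("expert", [False, False, True]))  # ['expert', 'intermediate', 'novice']
```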

3.2 Ongoing Learner Analysis and Adaptive Delivery

To ensure learners receive the training that best supports their needs, it is important to conduct ongoing assessments of each learner’s knowledge base and skill level so that further adaptations can be made throughout the training. For the knowledge base, the instructional designer needs to identify specific measures of success throughout the training as well as the indicators (data) of differing levels of success. The ID should determine metrics/data and pathways based on a “theory-based prediction model” of the training [10]. According to Reigeluth, IDs should design training based on theories about how people learn and should use data to test those theories, all the while using the data to make decisions about what learners need [11]. The ID designs the training based on a framework of how the learner will progress through it, including markers of progress and indicators that the learner needs more support. Further, the ID needs to provide specific instructions about how the course should be altered depending on what the data analysis reveals [10]. The data the ID collects should have two important characteristics: 1. it helps to answer a specific question or set of questions about the learner or the training, and 2. it provides information that can be acted upon [10].
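The two characteristics above suggest representing the ID’s instructions as rules that each pair a question with an actionable change. This Python sketch is one hypothetical encoding. The metric names, thresholds, and action labels are placeholders. Effort is assumed to be a self-rating on a 1–5 scale, like the one used in the ATC study discussed in this section.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    question: str                      # the question this data is meant to answer
    condition: Callable[[dict], bool]  # test applied to one learner's metrics
    action: str                        # what the course should do when it holds


# Hypothetical rules for one lesson. "score" is the proportion correct (0-1) and
# "effort" is a self-rated 1-5 mental-effort score; thresholds are placeholders.
RULES = [
    Rule("Is the learner ready for unguided problems?",
         lambda m: m["score"] >= 0.8 and m["effort"] <= 2, "advance-to-unguided"),
    Rule("Is the learner succeeding only with high effort?",
         lambda m: m["score"] >= 0.8 and m["effort"] >= 4, "add-practice-problems"),
    Rule("Does the learner need more worked examples?",
         lambda m: m["score"] < 0.6, "add-worked-examples"),
]


def actions_for(metrics: dict) -> list[str]:
    """Return the actions whose conditions hold for this learner's metrics."""
    return [rule.action for rule in RULES if rule.condition(metrics)]


print(actions_for({"score": 0.9, "effort": 5}))  # ['add-practice-problems']
```

Keeping each rule’s question next to its condition makes it easy to check that every piece of collected data answers a question and leads to an action.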

The system should also include a way to determine the effectiveness of the adaptations: is the training at the right level of difficulty for the learner (is the cognitive load ideal), and does it provide the learner with the content that s/he needs? The author’s research uncovered several methods that combine the difficulty level (the learner’s perceived level of effort) with the learner’s performance on the task to check whether learners were given training that matched their skill level. Using both types of data is important because one learner may do very well on a task while exerting a high level of cognitive effort and another may do very well while exerting little effort at all. Of course, there are several other possibilities as well. We must have a way to distinguish among these learners so that the training can help each of them progress to the point where they exert little effort (i.e., develop expertise). One way to determine the effectiveness of the adaptations is to check whether the level of difficulty is what would be expected given the learner’s knowledge and skill level.

As an example, the following describes the data points used in a research project on adaptive approaches to air traffic controller (ATC) training [12]. The ATC training had tasks that were scored according to their specific components. For example, if the task included a plane whose initial flight altitude differed from the desired exit flight altitude, that component of the problem was scored as 1. If the task involved the possibility of an airplane leaving the airspace unless the ATC gave a command to change its heading, that component was scored as 3. The complexity of the problem was the sum of the scores of all its components. Upon completing each problem, learners indicated how much mental effort they invested (on a five-point scale from very low to very high).
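The scoring scheme above amounts to a weighted sum over a problem’s components, paired with a bounded effort rating. The Python sketch below includes only the two weights quoted in the text; the full component list from [12] is not reproduced. Allowing a component to appear more than once, for example one entry per affected aircraft, is an assumption. The component keys and function names are hypothetical.

```python
# Component weights quoted in the text. Any other components would have to come
# from the original task analysis; they are not reproduced here.
COMPONENT_WEIGHTS = {
    "altitude_change": 1,     # entry altitude differs from the required exit altitude
    "airspace_exit_risk": 3,  # aircraft would leave the airspace without a heading command
}
EFFORT_MIN, EFFORT_MAX = 1, 5  # five-point scale: 1 = very low, 5 = very high


def task_complexity(components: list[str]) -> int:
    """Problem complexity = sum of the weights of the components it contains.

    A component may appear more than once (e.g., two aircraft needing an altitude change).
    """
    return sum(COMPONENT_WEIGHTS[c] for c in components)


def record_attempt(components: list[str], effort: int, solved: bool) -> dict:
    """Store one attempt: complexity, self-rated effort, and outcome."""
    if not EFFORT_MIN <= effort <= EFFORT_MAX:
        raise ValueError(f"effort must be between {EFFORT_MIN} and {EFFORT_MAX}")
    return {"complexity": task_complexity(components), "effort": effort, "solved": solved}


print(record_attempt(["altitude_change", "altitude_change", "airspace_exit_risk"], 4, True))
# {'complexity': 5, 'effort': 4, 'solved': True}
```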

If a learner succeeds in a task, but indicates that the problem was difficult, the system could adapt by providing the learner with guided problems or additional problems; this should be determined by the ID based on the content. The system could be designed to require learners to achieve a specific efficiency score—a measure of the perceived difficulty and success—so that learners do not move on to tasks based solely on a pass/fail score, but rather only move on when they demonstrate the appropriate skill level [9]. It is also recommended that development of the AIS involve research to determine which algorithms provide for the best learning outcomes [9].
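To make the idea of an efficiency score concrete, the sketch below implements one widely used formulation, the relative efficiency measure associated with Paas and van Merriënboer: E = (z_performance − z_effort) / √2, computed with z-scores taken across a group of learners. The measure used in [9] may be defined differently. The `may_advance` gate and its threshold of 0 are hypothetical placeholders for whatever criterion the ID sets.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(values: list[float]) -> list[float]:
    """Standardize values against the group (all zeros if there is no spread)."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0] * len(values)
    return [(v - mu) / sigma for v in values]


def efficiency(performance: list[float], effort: list[float]) -> list[float]:
    """Relative efficiency per learner: E = (z_performance - z_effort) / sqrt(2)."""
    zp, ze = z_scores(performance), z_scores(effort)
    return [(p - e) / sqrt(2) for p, e in zip(zp, ze)]


def may_advance(eff: float, passed: bool, threshold: float = 0.0) -> bool:
    """Hypothetical gate: passing is necessary but not sufficient."""
    return passed and eff > threshold


# Three learners: high score/low effort, high score/high effort, low score/medium effort.
e = efficiency([0.9, 0.9, 0.5], [1, 5, 3])
print([round(x, 2) for x in e])  # [1.37, -0.37, -1.0]
```

The second learner solved the task but reported high effort, so the gate holds them back even though they passed. That matches the case in the text of a learner who succeeds but finds the problem difficult. Because the z-scores are relative to the group, a deployed system would need a reference cohort to standardize against.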

3.3 Learner Experience and Training Effectiveness

As described in the previous section, during the training delivery, the AIS should be calculating the efficiency of the training. At the same time, the AIS should be collecting metrics on how well it is working. In other words, the system should be designed to evaluate whether the pre-determined plan or map worked as expected. It is possible to have a situation where the ID indicated a task was very difficult, but the learner experience suggests otherwise. Or the learner outcomes may show that intermediate learners are having to repeat the same content and tasks several times, which may indicate the need for additional content or problems with the design of that material (or some other issue).
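Checking whether the ID’s map “worked as expected” can start with two simple comparisons. The first compares the ID’s predicted effort for each unit with the effort learners actually reported. The second flags units that intermediate learners attempt unusually often. In the Python sketch below, the function name, unit IDs, tolerance, and attempt limit are all hypothetical placeholders.

```python
from statistics import mean


def review_map(predicted_effort: dict[str, float],
               observed_effort: dict[str, list[int]],
               mean_attempts_intermediate: dict[str, float],
               tolerance: float = 1.0,
               max_attempts: float = 2.0) -> list[str]:
    """Flag training units where learner data disagrees with the ID's plan.

    predicted_effort: the ID's expected mean effort rating (1-5) per unit.
    observed_effort: learners' actual effort ratings per unit.
    mean_attempts_intermediate: average attempts per intermediate learner per unit.
    """
    flags = []
    for unit, predicted in predicted_effort.items():
        ratings = observed_effort.get(unit, [])
        if ratings and abs(mean(ratings) - predicted) > tolerance:
            flags.append(f"{unit}: mean effort {mean(ratings):.1f}, predicted {predicted:.1f}")
        attempts = mean_attempts_intermediate.get(unit, 0.0)
        if attempts > max_attempts:
            flags.append(f"{unit}: intermediates average {attempts:.1f} attempts")
    return flags


print(review_map({"u1": 5.0, "u2": 3.0},
                 {"u1": [1, 2, 2], "u2": [3, 3]},
                 {"u2": 3.5}))
# ['u1: mean effort 1.7, predicted 5.0', 'u2: intermediates average 3.5 attempts']
```

A flag only says where to look. Whether the cause is the content, the design, or something else remains a judgment for the ID.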

3.4 Tools and Reporting Methods

For the system to create reports, it must be able to access data saved during the training event as well as collect and analyze data from various sources. As such, it is not likely that the AIS can be built on a learning management system (LMS), or at least not solely on an LMS: most LMSs do not track data about how learners interact with the training or “how they go about solving an educational task” [10, p. 158]. Learning record stores (LRSs) provide a way to capture learner experience data and may support the need for data captured throughout the training event [13].
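As an illustration of the kind of data an LRS can hold, the sketch below builds an xAPI statement that records one completed task together with the learner’s mental-effort rating. The actor, verb, object, and result structure follows the xAPI statement format. The verb IRI is the standard ADL “completed” verb. The effort extension IRI, the activity ID, and the learner details are made-up placeholders.

```python
import json
import uuid
from datetime import datetime, timezone

# Hypothetical extension IRI for the 1-5 mental-effort rating; xAPI extension keys
# must be IRIs, but this one is made up for illustration.
EFFORT_EXTENSION = "https://example.org/xapi/extensions/mental-effort"


def attempt_statement(email: str, name: str, activity_id: str, activity_name: str,
                      scaled_score: float, success: bool, effort: int) -> dict:
    """Build an xAPI statement recording one completed task with its effort rating."""
    if not -1.0 <= scaled_score <= 1.0:
        raise ValueError("xAPI scaled scores must be between -1 and 1")
    return {
        "id": str(uuid.uuid4()),
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": "http://adlnet.gov/expapi/verbs/completed",
                 "display": {"en-US": "completed"}},
        "object": {"objectType": "Activity", "id": activity_id,
                   "definition": {"name": {"en-US": activity_name}}},
        "result": {"score": {"scaled": scaled_score}, "success": success,
                   "completion": True, "extensions": {EFFORT_EXTENSION: effort}},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


stmt = attempt_statement("learner@example.com", "Learner One",
                         "https://example.org/atc/problem-17", "ATC problem 17",
                         scaled_score=0.85, success=True, effort=4)
print(json.dumps(stmt, indent=2))
```

Sending the statement would mean a POST to the LRS’s statements resource with an `X-Experience-API-Version` header and the LRS’s credentials. Those details depend on the LRS and are not shown here.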

The reporting methods and tools must provide reports that are specifically tailored to the various users, including at a minimum students, instructors, IDs, and organizational leadership. Further, the ID should develop materials that help the different users not only understand the reports but also know what to do next. For example, a report provided to a student should include recommended next steps: specific actions the learner can take to expand understanding of the concepts, to build on success within the training, to demonstrate mastery of the training on the job, etc. Reports for organizational leaders should explain the findings and make recommendations, such as how the training might be revised in the future.

3.5 Supports Many Learning Platforms

The AIS should be developed to support e-learning, instructor-led training, blended training, etc. As shown in Fig. 1, learners may need different training components, and these are not limited to computer-based training. Not only must the ID develop adaptive training components for each delivery method, but the AIS must also provide a way for the instructor to identify which training to use (e.g., when to use the training for novices and when to use the training for experts).

4 Conclusion

As employees have varying levels of experience and knowledge even when they perform the same job, there is a need to provide training which meets the unique needs of each person so that organizations are not wasting resources by providing unneeded training. Further, the expertise-reversal effect shows that it is not ideal to provide experts with training designed for novices. The experts will not do as well as they would if the training were designed for them.

An adaptive training approach should include identifying the learner’s knowledge gaps and skill level. Adaptations should be made periodically throughout the training to support the learner’s development. The AIS should evaluate the effectiveness of the adaptations in order to refine the training and inform the design of future training. The AIS needs to include reporting tools, and the ID should ensure that the reports not only explain their contents but also provide guidance and suggestions for next steps based on the findings. Finally, adaptive training systems should be designed to support all training environments, from e-learning to the instructor-led classroom.

This paper has provided the theory and concepts that should inform the AIS; the next step is for IDs and software experts to collaborate on building such a system and then to refine the design by conducting research with actual training and employees.

References

[1] Swanson, R.A.: Analysis for Improving Performance. Berrett-Koehler Publishers Inc., San Francisco (2007)

[2] Hannum, W.: Training myths: beliefs that limit the efficiency and effectiveness of training solutions, part 1. Perform. Improv. 48(2), 26–30 (2009)

[3] Hannum, W.: Training myths: false beliefs that limit the efficiency and effectiveness of training solutions, part 2. Perform. Improv. 48(6), 25–29 (2009)

[4] Kalyuga, S.: For whom explanatory learning may not work: implications of the expertise reversal effect in cognitive load theory. Technol. Instr. Cognit. Learn. 9, 63–80 (2012)

[5] Nguyen, F.: What you already know does matter: expertise and electronic performance support systems. Perform. Improv. 45(4), 9–12 (2006)

[6] Jiang, D., Kalyuga, S., Sweller, J.: The curious case of improving foreign language listening skills by reading rather than listening: an expertise reversal effect. Educ. Psychol. Rev. 30(3), 1139–1165 (2018)

[7] Chen, O., Kalyuga, S., Sweller, J.: The expertise reversal effect is a variant of the more general element interactivity effect. Educ. Psychol. Rev. 29, 393–405 (2017)

[8] Culatta, R.: InstructionalDesign.org. https://www.instructionaldesign.org/theories/constructivist/. Accessed 11 Mar 2019

[9] Kalyuga, S., Sweller, J.: Rapid dynamic assessment of expertise to improve the efficiency of adaptive e-learning. Educ. Technol. Res. Dev. 53(3), 83–93 (2005)

[10] Davies, R., Nyland, R., Bodily, R., Chapman, J., Jones, B., Young, J.: Designing technology-enabled instruction to utilize learning analytics. TechTrends 61, 155–161 (2017)

[11] Reigeluth, C.: What is instructional-design theory and how is it changing? In: Instructional-Design Theories and Models: A New Paradigm of Instructional Theory, pp. 5–30. Lawrence Erlbaum Associates, Mahwah (1999)

[12] Salden, R., Paas, F., Broers, N., Van Merriënboer, J.: Mental effort and performance as determinants for the dynamic selection of learning tasks in air traffic control training. Instr. Sci. 32, 153–172 (2004)

[13] xAPI Homepage. https://xapi.com. Accessed 11 Mar 2019


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Bove, L.K. (2019). Adaptive Training: Designing Training for the Way People Work and Learn. In: Sottilare, R., Schwarz, J. (eds) Adaptive Instructional Systems. HCII 2019. Lecture Notes in Computer Science, vol. 11597. Springer, Cham. https://doi.org/10.1007/978-3-030-22341-0_3


DOI: https://doi.org/10.1007/978-3-030-22341-0_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22340-3

Online ISBN: 978-3-030-22341-0

eBook Packages: Computer Science, Computer Science (R0)