Abstract

Quantitative systems pharmacology (QSP) places an emphasis on dynamic systems modeling, incorporating considerations from systems biology modeling and pharmacodynamics. The goal of QSP is often to quantitatively predict the effects of clinical therapeutics, their combinations, and their doses on clinical biomarkers and endpoints. In order to achieve this goal, strategies for incorporating clinical data into model calibration are critical. Virtual population (VPop) approaches facilitate model calibration while faced with challenges encountered in QSP model application, including modeling a breadth of clinical therapies, biomarkers, endpoints, utilizing data of varying structure and source, capturing observed clinical variability, and simulating with models that may require more substantial computational time and resources than often found in pharmacometrics applications. VPops are frequently developed in a process that may involve parameterization of isolated pathway models, integration into a larger QSP model, incorporation of clinical data, calibration, and quantitative validation that the model with the accompanying, calibrated VPop is suitable to address the intended question or help with the intended decision. Here, we introduce previous strategies for developing VPops in the context of a variety of therapeutic and safety areas: metabolic disorders, drug-induced liver injury, autoimmune diseases, and cancer. We introduce methodological considerations, prior work for sensitivity analysis and VPop algorithm design, and potential areas for future advancement. Finally, we give a more detailed application example of a VPop calibration algorithm that illustrates recent progress and many of the methodological considerations. In conclusion, although methodologies have varied, VPop strategies have been successfully applied to give valid clinical insights and predictions with the assistance of carefully defined and designed calibration and validation strategies. While a uniform VPop approach for all potential QSP applications may be challenging given the heterogeneity in use considerations, we anticipate continued innovation will help to drive VPop application for more challenging cases of greater scale while developing new rigorous methodologies and metrics.

You have full access to this open access chapter, Download protocol PDF

Similar content being viewed by others

Key words

- Virtual population

- Virtual patient

- In silico trial

- Virtual patient cohort

- Sensitivity analysis

- Objective functions

- Prevalence weight

1 Introduction to the Use of Virtual Populations in Quantitative Systems Pharmacology Models

Quantitative systems pharmacology (QSP) is a discipline that incorporates elements of systems biology and pharmacometrics with an emphasis on dynamic systems modeling [1], often with the goal to quantitatively predict the effects of clinical interventions, their combinations, and their doses on clinical biomarkers and endpoints. In practice, QSP model development typically emphasizes biological regulation demonstrated to play a role in pathophysiology and clinical response, integration with reported clinical biomarkers, and clinical endpoints as prime considerations for the initial model design and refinement. In addition, QSP models are inherently both quantitative and mechanistic in that they aim to model the dynamic behaviors of a system in a fashion that is biophysically constrained by factors such as molecular affinities, proliferation rates, and transport rates. QSP is therefore distinct from some approaches to systems biology that may be more qualitative in deriving insights from data and place an initial emphasis on lateral integration of many pathways [1, 2]. In addition to characterizing the biological or physiological system, QSP models relate the quantity of drug dosed, frequency of dosing, pharmacokinetics, and proximal pharmacodynamics with biological or physiological responses. From this perspective, QSP models can be described as physiologic system pharmacodynamic models that incorporate key considerations from pharmacokinetics, and similar to physiologically based pharmacokinetic models often incorporate a reasonable mechanistic strategy for linking serum or plasma concentrations of a drug to concentrations at the modeled site of action.

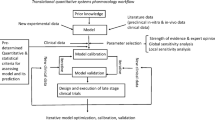

QSP models and their application have been discussed in much detail previously [3,4,5,6], and the goal of this chapter is to both provide background for how Virtual Populations (VPops) form a critical component of QSP research and an introduction to methodology for VPop development. Once established, VPops can be used to generate well-informed predictions on the patient population in new, previously untested, clinical scenarios, such as exploration of new therapeutic interventions. As shown in Fig. 1, VPops can be viewed as forming a critical link between basic pathway information, prior clinical data, quantitative clinical hypotheses, and actionable clinical decisions. Although this chapter will largely focus on clinical development applications, VPops have also served to help evaluate and prioritize new targets and pathways for therapeutic intervention in the setting of drug discovery.

2 A Brief Introduction to QSP Model Development

There exists a number of different potential mathematical modeling formalisms for QSP models [7], and QSP models intend to facilitate an understanding of a pathophysiological system. However, not all network-based analyses to identify interaction points for a pharmacological inhibition, although potentially useful, would necessarily be described as QSP modeling approaches. As the name implies, a QSP model intends to capture quantitative outcomes. QSP models mechanistically describe pathophysiological processes, model dynamic quantitative physiologic endpoints, and are used to predict how the dynamics of biomarkers and clinical endpoints change in response to a pharmacological intervention [1]. Systems of ordinary differential equations (ODEs) are commonly used for QSP modeling, and the VPop examples will focus on application with ODE models.

Determining the scale of a QSP model is an important step in planning its development, and scale can vary significantly depending on the scope and application of the model. For many discovery and preclinical applications, small-scale QSP models focusing on very specific biological pathways proximal to the drug target could be sufficient to gain some insight on the target mechanism of action and assist in drug discovery. As will be discussed later, small pathway-level QSP models may be fit to nonclinical data to extract informative model parameters to implement in a more comprehensive QSP model that integrates more of the biological system and feedbacks. The larger model therefore helps with a broader translation of nonclinical knowledge in the context of prior clinical data on targets of interest and related pathways. Small pathway-level models may also find applications in helping to optimize a specific molecule or in late clinical development to simply link a pharmacologic intervention to a biomarker of interest. For clinical applications, middle to large-scale QSP models that describe disease pathophysiology are often well suited to capture biomarker and clinical endpoint diversity representative of real patients, which is often achieved through the development of VPops. Recent progress in computational model development and application packages, advances in computing power, and the availability of richer clinical datasets have made this goal more readily achievable. It is worth noting that larger, more comprehensive models can capture many biomarkers and clinical endpoints of interest, and thus are well suited for identifying new pathways as potential therapeutic targets [8], assisting in biomarker development [9], prioritizing assets for clinical development, and mechanistically characterizing combination therapies and the quantitative contribution of components for combinations. The number of pathways in a model can increase the computational requirements for calibration and application of the model, especially when they span multiple time scales. However, opportunities to calibrate the model against a broader array of data and test salient predictions to validate the model for a particular application can also increase as more pathways and endpoints are incorporated. In the next section, we will provide a brief overview of the development of QSP models and the utility of VPops.

2.1 QSP Model Scope

Scoping is one of the most important factors that shapes the structure and complexity of a QSP model [3, 7]. In practice, an initial QSP model scope can often be achieved by thorough discussions with stakeholders in different scientific groups within pharmaceutical research and development, which may include clinicians, biomarker scientists, clinical pharmacologists, and drug discovery scientists [3]. This also serves to attain alignment on the scope of the model with these important stakeholders from the drug discovery and development matrix teams. Alignment with the matrix teams is crucial, as it helps to set concrete deliverables and timelines for model development and application. An experienced QSP modeler with significant expertise in the disease area is a primary contributor in both scoping and development stages. A thorough understanding of both the mathematics and biology ensures that key mechanistic considerations are incorporated while also resulting in a model with feedbacks structured to give reasonable physiologic behaviors.

Medium to large-scale QSP models that incorporate relevant positive and negative feedbacks based on key target as well as disease cellular and molecular physiology can provide more mechanistic insights while still giving good predictions. This requires a well-informed modeling strategy with suitable information and data to constrain the positive and negative feedbacks. Practically, if reusing and expanding an existing model that is performing well for related applications in a disease area, the expansiveness of the additional pathways to evaluate as well as amount of additional data to inform the model recalibration will have a substantial role in impacting project timelines. Like many multicompartment modeling paradigms in mathematical biology [10], the following elements should be identified to assemble QSP models for application to clinical development and discovery:

-

Compartments. The first step is the identification of biological compartments that usually represent physiological units in the broad sense. For example, a minimal set for an immuno-oncology (I-O) QSP model could include blood compartment, a tumor-draining lymph node, and tumor compartments in order to include key components of the cancer–immunity cycle [11]. Additional physiologic compartments such as a thymus might be added as needed to model specific behaviors or accommodate additional biomarkers.

-

Species. Once the compartment configuration is set up, a list of species tracked in each compartment can be identified based on the model scope. From a mathematical standpoint, a species will generally correspond to a state variable, but during the model development process there may be exceptions to this rule-of-thumb. For an I-O QSP model, these species could include the following.

-

Cancer cells and immune cells, such as CD8 T cells, CD4 Treg cells, antigen presenting cells, and others.

-

Immune checkpoints, possibly including cytotoxic T-lymphocyte-associated protein 4 (CTLA-4), programmed cell death protein 1 (PD-1), and lymphocyte-activation gene 3 (LAG3).

-

Mediators released by immune cells, including cytokines and chemokines.

-

Other important cell surface receptors, such as targeted cytokine receptors.

-

Therapeutic interventions, such as small molecules or antibodies and other biologics.

-

-

Reactions in each compartment and models for reaction fluxes. Developing a QSP model entails defining accurate mathematical description of the interactions between the species within a compartment. For an I-O QSP model, these interactions could include cell proliferation and apoptosis, cell–cell contact, mediator production, positive and negative feedbacks induced by mediators, cell surface molecule dynamics, and surface molecule induced positive and negative feedbacks, such as those caused by immune checkpoints. The dynamic behaviors of immune checkpoints, including binding, unbinding, and cell membrane diffusion, are of particular interest for linking therapeutic target engagement with pharmacodynamic effects [12].

-

Transport between compartments. Transport processes that bridge different compartments often need to be modeled. We often might not explicitly model these biological compartments spatially, therefore the transport processes should be developed to reflect the underlying biology. This is often accomplished by a series of mathematical approximations, and a relevant example is the tumor penetration of therapeutic molecules [13,14,15]. Still taking an I-O QSP model as an example, important transport processes could include the following.

-

Immune cell exchanges between compartments, such as immune cell extravasation from the blood and immune cell egress from tumor into a tumor-draining lymph node.

-

Molecular transport, such as therapeutic molecules being delivered from blood to tumor and mediator exchanges such as the transport of cytokines.

-

-

Therapeutic interventions. This includes the drug dosing regimens, pharmacokinetic models, target binding, and proximal effects in the model.

There has been much discussion of parameter estimation for systems biology and QSP models [7, 15]. From a practical standpoint, the reliability of applying the model in a predictive context for biomarker and clinical endpoint outcomes is a distinct and often more salient consideration [17, 18]. The systems biology community has been exploring strategies for direct characterization of prediction uncertainty [16,17,18]. We will touch on practical strategies for characterizing uncertainty in QSP model predictions with VPops later in the chapter. It is also worth noting that gathering relevant parameters from fitted pathway models, where a goal is often to characterize the pathway itself as opposed to immediately making a clinical prediction, is a distinct and important consideration. Pathway-level parameters extracted from other sources such as modeling well-controlled in vitro experiments can serve as a basis for parameterizing isolated pathways in a larger QSP model. Additional steps and feedbacks will play a role in translating from the in vitro pathways measured to the clinical disease system. That is, modeled reactions and intercompartment transport can be modeled and parameterized as their own constituents by controlled pathway model development, and careful integration of suitable pathway models into a larger QSP model may improve the process of translating in vitro results.

2.1.1 Pathway Model Development

A pathway model is a small-scale model that focuses on a key biological pathway and its detailed parameterization. During pathway model development, we want to ensure that estimated parameters are identifiable. To facilitate discussion, we consider the process of antibody-dependent cellular cytotoxicity (ADCC). ADCC is a mechanism of cell killing where an effector cell of the immune system binds to the fragment crystallizable (Fc) region of an antibody bound to a specific antigen at the surface of the target cell and releases cytotoxic factors to lyse the target cell. In this example, the effector cell is a natural killer cell (NK), the target cell is a CD4 Treg cell, the antigen is CTLA-4, and the antibody is a member of the class of anti-CTLA-4 antibodies. Here, we desire to get a quantitative estimation of ADCC potencies of different anti-CTLA-4 antibodies. One way to achieve this is through a well-designed in vitro experiment that reports target cell lysis when the assay cocultures different effector to target cell ratios (E:T ratios) as well as different antibody concentrations at physiological conditions (see Fig. 2). Ideally, the effector NK cells and target CD4 Treg cells are derived from human donors so we can better translate the results from this small pathway model to the QSP model.

In order to build a pathway model to describe the in vitro ADCC, we need to develop an ODE system that describes the time course of the cell–cell interaction in an assay well as antibody induced target cell lyses at different E:T ratios and antibody concentrations after a certain time of coculture. Some parameters needed for a pathway model, such as the cell’s radius, may be fixed by data in the literature or direct experimental measure. During the model development and parameter estimation process, we would want to make sure the ODE system describes the in vitro experiments accurately and the model parameters to be estimated are identifiable. For example, if effector–target cell complex formation and dissociation are modeled as a separate step, we could model the lysis rate as:

In this variation of a Hill equation [19], fc_bound_per_cell describes the amount of antibody bound to effector cell which can be modeled by the measured antibody affinities and [ET] is the effector–target cell complex. \( {K}_{\mathrm{kill}}^{\mathrm{max}},\mathrm{fc}\_\mathrm{ec}50 \), and adcc_slope are the parameters that need to be estimated from the in vitro experiment readout at different conditions. Depending on the therapeutic, clinical questions, and available data, one might need additional mechanistic detail for the pathway model. With sufficient data, we may also be able to fit nonlinear mixed-effect statistical models for the pathway model parameters [20]. When employed with an experimental model focused on cells from human subjects, the approach can start to yield estimates of salient variability that could be informative of pathway-level variability in patients when accompanied with a suitable translational strategy.

We give just one example of pathway model development to inform parameterization of a QSP model. In our experience, pathway models could be used more comprehensively through the discovery and clinical stages to support an asset to ensure better quantification of experimental readouts, aid in their interpretation, and inform translational strategies. For example, antibody binding as assessed on cells through flow cytometry and in surface plasmon resonance assays is very different, but the biophysical underpinnings for the differences can be modeled to extract a useful quantitative description that more readily translates to new physiologic scenarios of targeted receptor expression [12, 21]. To extract useful characterizations, the in vitro system often has to be quantitatively well-defined, which can be challenging in coculture designs when many cell types are involved, key quantities are not reported or readily available, or more complicated phenomena are being reported.

2.1.2 In Vitro–In Vivo Translation of Pathway Model Parameterization

Once relevant pathway models are developed and calibrated, the next step is to translate the model pathways and parameters into the QSP platform model. For example, consider if we want to add the ADCC component into the tumor compartment of an I-O QSP model for anti-CTLA-4 antibody. The I-O QSP model should represent the physiological NK cell and CD4 Treg cell interactions in the tumor microenvironment, where in vivo cell movement speeds play important roles in determining cell–cell complex formation. The cell–cell interaction parameters should be updated when translating from the in vitro experiment into the I-O QSP model, and in vivo motility data from techniques such as two-photon microscopy exist to form a rational basis for translation [22]. However, it could be reasonable to use estimated \( {K}_{\mathrm{kill}}^{\mathrm{max}},\mathrm{fc}\_\mathrm{ec}50 \), and adcc_slope in the I-O QSP model. If additional information on inhibition of ADCC processes is available, it can be quantitatively incorporated. It should be noted that other factors in the I-O QSP model are equally important and accounted for when determining in vivo ADCC, including immune cell infiltration into the tumor, antibody delivery into the tumor, cell proliferation, and cell–cell contact effects. The net in vivo ADCC employed in the platform is an integrated outcome that considers all these interactions, and is not solely determined by the in vitro parameterization. It is also worth noting that depending on variability observed in the in vitro parameters and sensitivities observed during model development, one can start to develop reasonable insights into which parameters may ultimately drive some of the biomarkers being calibrated and also formulate reasonable ranges the parameters should vary over during QSP model calibration. It is important to note that this careful translation of the in vitro characteristics is not the sole component of the strategy for clinical prediction, as the population still is ultimately calibrated to clinical variability and response data that can include biomarkers within and effects from this pathway.

Models of responses at the cellular level are additional examples of pathway models that can be integrated into larger QSP models. Such approaches also drive QSP towards a more modular design rationale. Initial advances of modeling cell types with logical network approaches appears promising as one rationale basis for modeling cellular responses in light of multiple inputs [23, 24], and additional steps can be taken to translate logical networks into quantitative ODE models with experimentally tractable and translatable concentration sensitivities [25].

2.2 Integration of Biomarker and Clinical Endpoints

Once a QSP model structure has been integrated and physiologic values or ranges are established for the parameters, the next step is to ensure the model is able to capture and explain observed clinical trial biomarker and response variability. This is important to address before the model is applied to clinical development questions. QSP, in contrast to simpler clinically relevant modeling approaches, therefore often aims to provide a multi-perturbation, multi-output analysis of a disease and one or more therapeutic interventions at a population level. Given that a QSP model usually provides an extensive quantitative framework for characterizing the mechanisms of action of therapies and their physiologic responses in a specified disease area, it can be helpful and sometimes even critical to integrate as many data-supported pharmacodynamic (PD) biomarkers as possible. As this is done, it becomes possible to constrain and calibrate the model with integrated clinical datasets. For example, given an I-O QSP model focusing on CTLA-4 and PD-1 checkpoint inhibitors in a first line melanoma patient population, ideally we would want to integrate essentially any modeled PD markers that were collected from first line melanoma patients in all available clinical trials for modeled therapies. These PD markers could be collected from patients’ blood sample or tumor biopsy. Target tissue data often have an advantage as they may more tightly link the modeled mechanisms and response, whereas data from the blood may differ more from the site of action due to differences in contributions from multiple tissues and different half-lives. Different data sources can play an important role in model development. For our considerations in I-O, the following apply:

-

Immunohistochemistry data from tissue biopsies can inform modeling the composition of tumors [26].

-

Omics data may also be used to inform modeling. In our experience, transcriptomics may correlate well with select, paired markers from immunohistochemistry, but the application context is important for implementing transcriptomics data. Immune signatures may also be useful to indicate the clinical activity of pathways modulated by multiple mediators or with substantial known posttranslational regulation, such as type I interferons and the transforming growth factor beta pathways [27, 28]. Signatures may be helpful for calibrating pharmacodynamic changes in a QSP model if implemented through net effects on the appropriate pathways and cell populations. Immune deconvolution approaches hold promise to estimate cellular compositions from historical clinical transcriptomics data [29, 30]. However, from our experience comparing between different modalities, care should be taken in the interpretation of absolute tissue cell fractions reported from deconvolution.

Expertise in a therapeutic area helps to interpret and apply data in a reasonable fashion. We often would want to integrate patient level data at different time points, such as pretreatment at day 0 vs on-treatment at day X, for multiple therapies. VPops can be used to calibrate a QSP model to clinical data for biomarkers and endpoints that are investigational, accepted as mechanistically important, or established as clinically meaningful for patient outcomes and trials. For the example in I-O, we may also want to include index lesion size and response rate data for calibration. When these data come from original electronic datasets on file, which may more often be readily available in an industry setting, they can provide a multivariate and more detailed resolution of distributions. In contrast, trial results published in journals often present only summary statistics, although they can still be useful to inform VPop calibration. In general, it is important to proactively check and ensure that these integrated biomarker or clinical endpoint data are collected from a well-defined patient population, such as first line or I-O naive melanoma. This is important as it sets the target patient population and clinical data integration criteria. Finally, relationships between the QSP model and the biomarker and clinical endpoint data are integrated from a consistent patient population through the development of virtual patients (VPs), VP cohorts, and VP populations (VPops). Ultimately VPops in conjunction with a QSP model serve to reproduce observed clinical trial outcomes by calibrating to the observed data, and then, if sufficient confidence has been established, often using a fit-for-purpose validation, the QSP model and VPop enable mechanistic extrapolation to new therapeutic regimens or interventions.

3 A Methodological Context for VPop Applications

VPop approaches have been applied to a number of questions relevant for clinical drug development in order to ensure consistency with prior clinical data. Before introducing these studies, we have to define several terms. There has been some divergence in the literature with regards to at what stage a model parameterization should be referred to as a VP [7, 31, 32]. Here, we adopt the standard that a VP is essentially any model parameterization. As we will describe in Subheading 4, if the simulated outputs for a particular model parametrization pass additional checks to verify the VP falls within reasonable clinical observations of ranges and dynamic behaviors, we may further qualify the VP as a plausible VP. A set of plausible VPs is called a VP cohort. We define prevalence weights as a vector of nonnegative weights that sum to one and are equal in length to the number of VPs in a VP cohort. Note that the prevalence weights have an analogy in importance sampling [33,34,35], where one may use sampling from one distribution to characterize another. We generally define a VPop as a set of prevalence weights and an associated VP cohort, although in some of the cases below prevalence weights are not used in the fitting process. Some of the major algorithmic and methodologic considerations and differences are briefly reviewed in order to illustrate different strategies that have been applied for VPops and motivate further discussion of methodology.

Klinke reported on a case study fitting a VPop for type 2 diabetes [36]. In this case study, the model was already well-developed, and Klinke started with a predeveloped set of VPs that were to be calibrated to real patients from the National Health and Nutrition Examination Survey III (NHANES III). Klinke used data from one intervention given in NHANES III for his model calibration, the oral glucose tolerance test. Data from different interventions, hyperinsulemic clamp studies and overnight fasting, were withheld from the calibration, and correlations between the clamp studies and less invasive fasting measures were used to check the VPop calibration. Some unique methodological considerations for Klinke’s approach were the availability of the electronic, multidimensional NHANES III dataset to guide the development of the VPop and the reduction of the dimensionality for fitting with a principal component analysis approach. To develop the VPop, Klinke derived a single variable distribution to fit by adjusting the prevalence weights for his VP cohort. The variable was derived from the radial distance of each real or virtual patient in a four-dimensional principal component space defined by the first four principal components of the original 13 taken from the NHANES III dataset.

A different approach was employed by Howell et al. that incorporated initial assumed parameter distributions and checks of resulting simulations for agreement with data [37]. The most methodological detail is given for their in vivo extrapolation for methapyrilene and to compare acetaminophen and methapyrilene. It is worth noting Howell et al. also made a clear, stepwise distinction when describing their calibration strategy for system and drug-specific parameter calibration. Distributions for each parameter were assumed a priori, VPs were sampled from the assumed distributions, and simulated VPs were compared to data. A fitness score for each VP was generated based on the likelihood of its parameter values from the comparison of simulation outputs to data and the likelihood given the assumed distributions. A genetic algorithm was applied to iteratively generate sets of VPs of improving fitness score. The final set of VPs was selected, with manual filtering to remove VPs with nearly identical parameter values. Variability in drug-specific parameters was incorporated as a second step. Howell et al. did not apply prevalence weights in their VPop calibration strategy. In contrast to many of the other QSP applications discussed here, Howell et al.’s model and VPop approach was used to investigate drug safety. In a related study [38], their group’s approach yielded additional clinically relevant insights, including using the time to reach peak serum alanine aminotransferase as a potential marker for the time to clear the toxic metabolite, N-acetyl-p-benzoquinone imine, from the liver. Their results also suggested improvements for current treatment nomograms, and that the oral N-Acetylcysteine protocol was more efficacious than the intravenous protocol for patients presenting within 24 h of acetaminophen overdose due to the treatment duration.

Later, Schmidt et al. implemented a different algorithm using hypothesis testing strategies to optimize the fits of VPops to data [39]. This approach made use of comparison methods such as t-tests, F-tests, and chi-square tests to weight differently sized clinical datasets in their comparison to VPops. These comparisons were then combined in a composite goodness-of-fit using Fisher’s method. The method was applied to calibrate alternate VPops that matched trial results nearly equally well, but had differences in the assignment of the prevalence of different VPs. The algorithm introduced an effective sample size for the VPop, similar to the effective sample sizes used for weighted sampling strategies [40], with higher effective sample size implying better spreading of the prevalence weights. The algorithm also considered VP parameter values when assigning prevalence weights by grouping parameter ranges into bins and optimizing the binned parameter probabilities. Schmidt et al. applied the method to investigate a type I interferon signature predictive of nonresponse to rituximab in rheumatoid arthritis [41], and proposed a quantitative mechanistic hypothesis for the clinical observation due to anti-inflammatory type I interferon effects that were robust across the alternate VPops. The alternate VPops emphasized different underlying pathophysiologies, exemplified by the mechanistic differences in VPops that tended to respond well to rituximab versus those that did not respond as well. Schmidt et al. also identified candidate baseline synovial biomarkers of response that tended to be selected as predictive of response to rituximab across alternate VPops, including markers of fibroblast-like synoviocyte and B cell activity. Later, Cheng et al. adapted this algorithm for implementation into the QSP Toolbox [32]. In Subheading 6, we will discuss a modification of this algorithm that includes additional types of hypothesis test comparisons, has relaxed the binned parameter prevalence groupings, and implements automated resampling and screening of plausible VPs.

Allen et al. proposed an algorithm that calibrated a VPop based on datasets where a single individual has a number of measured outcomes in a single datasource, such as NHANES III [31]. The strategy from Allen et al. was distinct from Klinke in several aspects. Notably, because the model itself was developed to simulate more quickly and readily facilitated sampling of new VPs, the algorithm was developed to enable additional sampling of the parameter space. Each sampled VP was optimized to better fall within observed physiologic ranges and yield a plausible VP. Allen et al. used estimates of the relative density in the data versus the plausible virtual patients to optimize probabilities to include their VPs in a VPop. That is, the approach from Allen et al. also employed a more direct adjustment for their metric of prevalence, inclusion probabilities, as opposed to optimization of agreement of a PCA-based marginal distribution. The algorithm from Allen et al. optimized the goodness-of-fit as evaluated by an average univariate Kolmogorov-Smirnov test-statistic over all biomarkers. When developing predictions with the virtual population, they sampled VPs according to their inclusion probability.

In a subsequent publication, Rieger et al. compared considerations of the diversity of generated VPs and goodness of fit for the algorithm proposed in Allen et al. with several new proposals [42]. Interestingly, a modified Metropolis-Hastings approach was among the strategies proposed and assessed. One key modification was that their Metropolis-Hastings approach focused on using a well-described target distribution for determining the acceptance probability, that is, distribution of model outputs postsimulation, as opposed to using target parameter distributions as in the canonical version of the algorithm.

Quadratic programming has also been used in VPop approaches. As one example, Kirouac et al. developed a virtual population in a translational application to predict responses to a novel extracellular signal-regulated kinase (ERK) inhibitor, GDC-0994, using data from epidermal growth factor receptor (EGFR), B-Raf, mitogen-activated protein kinase kinase (MEK), and ERK inhibitors [43]. Kirouac et al. implemented their match to clinical data as a constraint in the optimization, with changes in lesion size calibrated across three different targeted oncology therapy combinations. Rather than using the fit to the data as an objective function, which was successfully imposed as a constraint, their minimization objective was the sum of the square of the prevalence weights, essentially maximizing the effective sample size. For the calibration to predict the clinical response of GDC-0994, Kirouac et al. included data from other trials testing combinations of vemurafenib + cetuximab, dabrafenib + trametinib, and dabrafenib + trametinib + panitumumab. Although the clinical trial sample size was small, the approach employed by Kirouac et al. gave good prospective prediction of the observed response rate in a subsequent phase I study of GDC-0994 in colorectal cancer patients.

In addition to calibrating to pharmacodynamic markers and clinical endpoints observed for individual or multiple trials at a population level, calibration to individual responses in individual trials has also been proposed. For example, Milberg et al. fitted a model of response to I-O therapies to lesion response dynamics, and as a first step this included fits to individual patient index lesion dynamics, though not other biomarkers [44]. Once it was established that the model could reproduce the observed lesion trajectories by varying a subset of model parameters, virtual trials were simulated by sampling the subset of parameters within predefined ranges. In another study, Jafarnejad et al. used patient-specific information on tumor mutational burden and antigen affinity while sampling other varied model parameters to guide patient-specific prediction for anti-PD1 therapy responses in a neoadjuvant setting [45].

4 VP Cohorts Are a Precursor to VPop Development

It is often useful to explore the input–output behavior of a QSP model prior to the VPop calibration process. This often includes selecting a subset of the model parameters as well as determining reasonable physiologic ranges in order to generate sufficient variability for the model outputs that are to be matched to clinically observed biomarker data. Part of the parameter selection process is often manual and based on biological insight and expertise developed during model development. Important considerations include:

-

Variation of parameters in key pathways for physiology and quantitative hypothesis testing. In an I-O QSP model, this could be cytokine-mediated versus checkpoint-mediated immune suppression.

-

Characterized variation in pathway models needed to fit literature data or in-house experiments. Taking the ADCC example discussed earlier, different human NK donors might have different maximal ADCC rates. Another example is the expression level of cytokine receptors that may vary from patient to patient.

-

Ability of the model to recreate variability in observed patient characteristics. For an I-O QSP model, that could include immune cell content in the blood, the tumor, or the tumor-draining lymph node.

-

Observed variations between different literature reports.

Appropriate parameter ranges can either be derived from literature values or estimated from pathway model calibrations, as discussed in Subheading 2.1.1. Depending on the size of the QSP model, a global sensitivity analysis (GSA) of desired model outputs with respect to all model parameters, or a related multivariable sensitivity analysis strategy with a parameter subset, can be used to complement manual parameter selection. For example, GSA approaches have been used to identify and rank the most influential parameters for a particular model output given an anticipated range of possible values for the parameter [46]. One may also want to fix noninfluential parameters during the calibration. Varying the “right” set of parameters can be crucial to generate missing phenotypes (e.g., a responder to a specific therapy), which is particularly important if one wants to simultaneously calibrate a QSP model to many biomarkers and endpoints using data from multiple trials and therapies.

By imposing additional restrictions on the model outputs we can avoid behaviors that are known not to be physiologic or take into account published summary information about biomarker ranges, even if individual-level data are not available. As described previously and shown in Fig. 3, a set of plausible VPs is called a VP cohort, and it represents a natural starting point for VPop development.

Virtual patient cohort development. Alternate model parameterizations are sampled and simulated when developing the VP cohort. Multiple interventions (therapies) are simulated for each VP. Biomarker and response data are used to guide reasonable parameter bounds and set acceptance criteria on simulated outcomes. Plausible VPs must pass all acceptance criteria

4.1 VP Cohort Development

In order to illustrate the development process leading to a VP cohort we consider the ODE system of a general QSP model in the form:

Here, Yj is the vector of state variables on intervention j and Θ is a complete set of parameters. In this chapter, we adopt the convention that the term “state variable” refers to mathematical state variables with time derivatives, as defined by the form of eq. (2), and parameters are fixed, time-independent, specified quantities.

In practice, often one will want to simulate J clinical interventions which would most often be implemented as introducing dosing functions, but practically could involve intervention-specific parameter updates or initial values, which we will neglect for now. For notational simplicity, we index the state variable vector over j. Although the functions will mostly be conserved across interventions, we expect changes in considerations like functions to account for dosing that will impact the state variable values. We can also express the model with regards to the M individual state variables:

The N model parameters are denoted as:

Often, the number of parameters is by far larger than the number of state variables, that is, N ≫ M. The right-hand side of the ODE system can also be expressed as:

The right-hand side ODE system includes, for example, the reaction rates within a compartment and the transport rates between compartments.

The set of biomarker data and clinical endpoints being used for calibration may often be mapped to functions of state variables (Y ↦ g(Y)). This occurs, for example, when comparing to cell fractions while using cell numbers as state variables. For each intervention, j = 1, …, J, we can compute the value of the observed biomarkers, b = 1, …, Bj, using the results from the simulations of the ODE system:

The set of biomarker data is often smaller than the number of state variables, that is, Bj ≪ M, and reported biomarkers may also be related to combinations of state variables. The observed biomarker data are:

Each of the Bj observed biomarkers may be reported at a distinct number of Ij, b discrete time instances given by:

Note that we may have multiple observations at each time point in the data, each from different patients. Simulations of alternate model parameterizations, or VPs, will also often be of interest. Note that the number of time points for biomarker b on intervention j, Ij, b, can vary. For example, we might be able to collect many index lesion scans from a cancer patient over the duration of a therapy, but obtain only a baseline and one on-therapy measurement for a biopsy biomarker from the same patient.

To generate variability in the model predictions for biomarker and clinical endpoints we often vary a subset of model parameters:

Each varied parameter is restricted to its physiologically feasible range, that is,

Here, \( {\theta}_{i,L}^a \) and \( {\theta}_{i,U}^a \) are lower and upper bound of the parameter \( {\theta}_i^a \), respectively. We define a parameter axis as a parameter with an associated upper and lower limit that may be varied between virtual patients during model calibration. In some instances, we may wish to combine parameters into groups so they are varied together, but this case is not essential for the present purposes.

The next steps in generating a VP cohort are to simulate different parameter combinations (VPs) and then impose acceptance criteria to ensure plausibility. Often the acceptance criteria can be formulated in the simple form:

That is, we could require the simulated result for biomarker b on intervention j to fall in the same range as the observed data. Often we may want to constrain the simulations to fall within the range of observations matched for the same time point. Constraints imposed on the model outcomes may not have a simple mapping back to the parameter space. The allowed solution space can therefore be nonconvex with regards to parameters, which may pose new considerations in connection with methods such as GSA. Screening functions with boundaries that are time-varying or defined by relationships between the state variables are possible, especially when the dynamic characteristics for plausible physiologic behavior are better established [32].

There are some additional considerations for creating a VP cohort. For example, diversity in the modeled VPs is important, although we will still want to fit to clinical data. Initial iterations of VP cohort development could involve strategies such as Latin Hypercube Sampling or lower discrepancy quasi-random sequences, such as Sobol sequences [47], to more efficiently explore the parameter space. In addition, in some cases we may also incorporate known relationships between parameters based on the data, biology, or known model behaviors. For example, it could be that one model rate must be smaller than another to give realistic asymptotic behaviors or it may be established that the apoptosis rate for one cell type is smaller than another. Therefore, if we want to ensure that samples from \( {\theta}_i^a \) are always smaller than samples from \( {\theta}_j^a \):

Such constraints can be implemented as criteria to prescreen VPs, before running simulations. One could also turn the postsimulation acceptance criteria into an element of a composite objective function if one wants to write an optimization algorithm to create more plausible VPs [31, 32].

4.2 Sensitivity Analysis Techniques, Parallels and Differences with VP Cohorts

Knowledge of model sensitivities developed during pathway and larger model development as well as biological insight and literature research are important guides in developing suitable parameter axes for VP cohort development. It can often be useful to complement these strategies with a systematic approach, that is, based on the strength of the influence a parameter has on the output of interest. Sensitivity analysis (SA) can also help to determine influential model parameters and guide model calibration. Local SA methods often compute the relative change of an output with respect to a small relative deviation of a single input parameter from a nominal value. However, ranges of interest for biological parameters can span orders of magnitude, either due to intrinsic biological variability or uncertainty about the true parameter value, and the impact of changes in combinations of parameters is often of interest. As a result, GSA methods have also been developed, which can be used to quantify changes in the output variable with respect to changes of parameters over their whole range.

In the following we shall focus on three of the GSA methods used in the systems biology literature and their suitability for GSA in the context of VP cohort development in more detail [46, 48, 49]: correlation coefficients (CCs), Morris’s method based on elementary effects, and Sobol indices based on a variance decomposition of the output variable. The Pearson CC is defined as:

Here, \( \mathrm{Cov}\left({\theta}_i^a,y\right) \) denotes the covariance between the input parameter \( {\theta}_i^a \) and an output variable of interest y while \( {\sigma}_{\theta_i^a} \) and σy denote their respective standard deviations. By definition, the Pearson CC varies between −1 (total anticorrelation) and +1 (total correlation), and it measures to what extend \( {\theta}_i^a \) and y are linearly related. If one rank-transforms the data before computing the CC on obtains the Spearman CC. If one additionally discounts linear effects of the remaining parameters \( {\theta}_{j\ne i}^a \) on \( {\theta}_i^a \) and y one obtains the partial rank CC, or PRCC. The PRCC has also been shown to yield a robust global sensitivity measure for nonlinear but monotonic relationships between \( {\theta}_i^a \) and y [48]. If the relationship is nonmonotonic over the sampled parameter range positive and negative effects can average out, yielding a small PRCC and potentially giving an incorrect impression that \( {\theta}_i^a \) has negligible influence on y.

In contrast to PRCCs the applicability of the other two methods, Morris’s elementary effects and Sobol indices, can provide useful sensitivity information in the presence of nonmonotonic input–output relationships. Both methods assume that the axes parameters have been scaled to the unit cube [0, 1]Q via:

Each scaled parameter varies between 0 and 1. This framework is compatible with the bounds often placed on parameter axes. Morris defined an elementary effect of the parameter xi on an output y by the scaled difference:

Here, each of the xj in X = (x1, …, xQ) is randomly chosen from a p-level grid with points:

There is a restriction, xi ≤ 1 − Δ, so that the change in output with respect to xi can be computed with the defined parameter domain. In general, the step size, Δ, as well as the number of grid points per parameter, p, can be different for different parameters. However, the choice Δ = p/[2(p − 1)], with p being an even number, reduces the total number of required model evaluations from 2Qr to (Q + 1)r, where r is the number of independent parameter samples [50]. Efficient sampling strategies have also been proposed in revisions to the method [51]. The mean and the variance of the elementary effect with respect to parameter xi can be estimated from:

While the mean quantifies the average impact of a parameter on the output, the variance contains information about model nonlinearities and parameter interactions. Note that di can change its sign if the relationship between y and xi is nonmonotonic, so that positive and negative contributions to the mean could average out, similar as for PRCCs. However, the Morris method still allows to differentiate between parameters that have negligible impact on the output (|μi| and σi are small) and those that effect the output in a nonmonotonic manner (|μi| is small, but σi is not). Alternatively, the mean can be defined in terms of \( \left|{d}_i^{(k)}\right| \), rather than \( {d}_i^{(k)} \), which then quantifies the average total impact of a parameter on the output independent of its direction.

The elementary effects for a single parameter are defined in a similar manner as local response coefficients. However, by averaging the local sensitivities for this parameter over the whole parameter range (while sampling the other parameters) this method yields global information about the impact of a parameter on an output. Another way to obtain global information about parameter sensitivities is based on a decomposition of the output variance with respect to parameter contributions of increasing order [52]:

The first order effect of parameter xi on the output variance is:

It is computed by first taking the average of Y with respect to all parameters but xi (i.e., by keeping xi fixed). In a second step, the variance of this conditional expectation is computed by averaging over xi. Higher order terms in the expansion of V(Y) can be defined in a similar manner, for example:

Here, Vij quantifies the combined effect of xi and xj on the output variance discounting first order effects of each parameter, that is, Vij quantifies the combined effect of xi and xj due to interactions. By dividing by V(Y), one can define Sobol indices of any order. One computes the first order indices defined by:

Here, by definition several relations hold for the first order indices:

And:

To quantify interactions one can compute higher-order indices, which have an increasing computational cost. Alternatively, one can compute the total effect index associated with parameter xi, given by:

Note that the term in the numerator yields the first order effect of all parameters but xi on the output variance. Hence, V(Y) minus that term should include all terms in the expansion that involve xi, that is, it measures the first order and all higher order effects of xi on the output variance. One can show that:

In addition, parameters with small values of the total effect index can often be neglected when generating variability for the corresponding output. One might expect that variance based methods require r2Q model evaluations, but through an efficient sample design this number can be reduced to (Q + 2)r [53].

Interestingly, when applied to a model for HIV, the three GSA methods introduced here gave similar results with respect to the ranking of parameter importance [46]. This promising result suggests a simple PRCC approach that can yield insight on directionality might also be sufficient for a GSA. However, the monotonic input–output relationships can be a limitation of the PRCC method for many applications, so a combination of methods may give better understanding of the output sensitivities.

Different strategies have been proposed to assess the adequacy of sample size for the GSA methods. One method consists of ranking the parameters and checking for a high correlation of Savage scores, which weight top ranks more heavily [54], between runs of increasing sample size [48, 55]. For PRCC, checking the Symmetrized Blest Measure of Association between runs seems to be a reasonable approach [56]. For the Sobol indices, bootstrapping the simulation results has been proposed to calculate confidence intervals [32, 47], and monitoring the convergence of the sum of the sensitivity indices with increasing model evaluations has also been suggested [57].

There are several additional considerations for simulating VP cohorts that are not explicitly accounted for in the GSA methods as they are originally proposed. All of the GSA methods discussed characterize the effect of multiple parameters (inputs) on a single output for a single modeled intervention. However, when calibrating QSP models to clinical data we normally want to fit the model simultaneously to multiple outputs across multiple interventions, which requires new strategies to combine the single-output parameter sensitivities into a ranking scheme that allows one to choose a suitable set of parameter axes. For example, Campalongo et al. characterize the influence of each parameter on each output in terms of Savage scores, and use the sum of the Savage scores to identify sets of influential and least influential parameters [51].

Another consideration is the set of plausibility constraints for VPs. Although parameter ranges may be well-described by a hypercube, portions of the parameter space may result in simulations run to inform the sensitivities that violate the plausibility constraints. Consequently, the sensitivity metrics may change if we assess only the more relevant plausible regions. Note that the CCs can be computed from a VP cohort since each VP essentially represents a single parameter sample. Computing CCs from plausible VPs in a cohort has the additional advantage that all VPs in the cohort have already passed the acceptance criteria, so that the resulting input–output correlations occur in the physiologically plausible region of parameter space. Since the Sobol indices are often calculated using quasi-Monte Carlo methods, their application should be theoretically robust for sampled points removed from the analysis due to plausibility constraints. One strategy for GSA in VP cohorts could be a combination of PRCCs and Sobol indices because both measures can be directly computed from plausible VP samples. Given the necessities inherent in creating plausible VPs, VP cohorts, and VPops, there remains opportunity for innovation with approaches that facilitate assessment of the sensitivity of multiple simulated biomarkers and endpoints to multiple parameters in an efficient manner while accounting for implausible regions of the parameter space.

5 Considerations for Developing VPop Algorithms

Once the requirements for a VP cohort are established and we begin to generate a cohort of plausible VPs, we still have not yet necessarily ensured the VP simulations also closely match trends in observed data. That is, one goal early in the development of a VPop may be to capture as many distinct plausible model parameterizations as is feasible to better ensure diversity in the modeled pathways [42]. However, the distributions of simulated biomarkers thus sampled may not initially match the target distributions. VPop calibration can be described as the process of generating a VPop that matches the observed data given a QSP model structure and the target data, and may involve a prevalence weighting scheme to accelerate the convergence to the observed data.

There are several important considerations for VPop calibration:

-

It often aims to ensure agreement with data across multiple interventions simultaneously.

-

It often requires a simultaneous fitting of multiple biomarkers and responses for each intervention. In the example presented in Subheading 6, many dozens of biomarker–time point–intervention combinations are fit. One might expect this to grow as a QSP model is reused to support the development of additional therapeutic interventions.

-

It is usually subject to the same considerations for VP plausibility as described previously.

-

It usually requires fairly well-defined patient populations for fitting, for example, I-O naïve and first-line melanoma or first-line non–small cell lung cancer. Defining a population will also help to establish the guidelines for finding, assessing, and integrating clinical data.

Practically, the strategy of VPop calibration is often to collectively fit many salient data, with a goal of optimizing the predictive characteristics of the resulting fit model [16]. Although parameter estimation is often an objective in itself in pharmacometrics modeling, and pharmacometrics parameters have been used in drug labels [58], it is not necessarily a primary objective in many QSP model applications, especially after transiting from pathway models to a QSP model that can simulate the therapeutic responses. A VPop becomes a basis to use a QSP model and outcomes for biomarkers and endpoints it was trained with to predict for new interventions it was not trained with, for example interpolating between dose levels or, if the mechanisms are well-developed, extrapolating outside of dose levels it was calibrated with. In early stages of clinical development, data may be available for VPop calibration for drugs targeted to closely related mechanisms, and the VPop and model then also serve as a mechanism-informed strategy to extrapolate to predict the impact with the new intervention.

It has also been suggested to include intermediate stages of model calibrations with individual reference model parameterizations [7], although this is not always emphasized in QSP workflows [31, 32, 42]. One can define a reference VP as a plausible VP that captures some additional characteristic of interest, such as responsiveness to a therapy. Reference VPs can be mathematically convenient and have been useful in a historical context at initial project stages [59], especially when models are slow to simulate and quantitatively explore. However, with models that may initially capture many of the observed clinical behaviors well, and given advances in strategies to rapidly explore different plausible VPs, we have often found it practical, useful, and rigorous to proceed quickly to the development of VP cohorts and VPops early in a QSP workflow. There are also some cautionary points about the design, interpretation, and robustness of reference VPs. For example, if calibrating a single reference VP to the mean of the observed data, it is worth noting that it may not be feasible that a real single patient would run through the mean of all the multivariate data for the many biomarkers and endpoints from the population, given inherent heterogeneity in patients even within a well-defined clinical population. As one additional example, the relevance of a single mean calibration is challenging to interpret for bimodally distributed biomarkers or endpoints. The process of developing a VP cohort and VPop ensures that the ranges of the observed biomarkers and endpoints can each be captured, and does so simultaneously. As we will show in the example, after calibrating a VPop, one can begin to assess the variability observed in behaviors, including average behaviors, which provides an additional quantitative context for interpreting them. While developing a VPop can take substantial time and resources, the ability to simultaneously capture observed clinical population ranges, population central tendencies, and characteristics of population distributions is an important step to build confidence before making predictions to inform clinical development. It is worth noting that due to the combination of the available data and model behaviors, a retrospective assessment may also reveal some of the plausible VPs in the VPop tend toward population means across multiple biomarkers and endpoints.

As described in Subheading 3, a variety of strategies to fit QSP models have been employed before, including fitting individual patients up to calibrating population behaviors for multiple therapies across different patient sets. There are potential advantages with regards to being able to calibrate model behavior if one can use data from multiple therapies, especially when one wants to combine many mechanisms in a model and they are not generally administered to the same patient. However, in this case, one is not necessarily calibrating the model to an overlapping set of patients across all of the interventions. Care should be taken to ensure the patients being used in the combined fits are of similar background, so information from very different response classes are not being improperly combined.

One salient consideration for developing VPops is the availability of computational resources. The number of simulations needed to develop quantitatively reasonable calibrations can increase as one increases the number of biomarkers and endpoints to fit as well as the number of interventions per VP. An efficient algorithm that is still sufficiently rigorous to give good predictions is a substantial consideration. For example, if one wants to fit 20 biomarkers and responses across multiple interventions simultaneously, one might need to vary a similar number of parameters in the model. Of course, the time to simulate a model may vary substantially depending on the model processes themselves. For example, if a simulation of a VP on an intervention takes 20 s and there are 10 interventions for each VP, then a single VP takes 200 s of compute time. If one wants to simply simulate 1 × 106 VPs and had 1000 compute cores available, the computation would be done in about 55 h. However, VPop algorithms might not only involve an initial sampling but can also include iterative optimization and resampling processes.

5.1 Objective Functions for VPops

An important component to developing a VPop algorithm is the strategy for optimizing the agreement between a VPop and observed clinical data. Therefore, in addition to using objective functions in the step of developing plausible VPs, objective functions can play a role in the VPop development step and prevalence weighting strategies. A closely related consideration is how well the final fitted VPop agrees, and ideally the optimization and assessment strategies are well-aligned. It is possible for the optimization to find a solution that is optimal but does not agree very well with data, for example if two separate constraints on the optimization preclude a good global fit. In such a case, one may also need to assess the source of the problem, for example whether there are underlying issues in the data, the plausibility constraints were not set up in a reasonable fashion, or there is an issue in the model itself.

5.1.1 Objective Functions for Combining Data from Multiple Interventions and Disjoint Patient Groups

Consider a fixed set of simulation results that had already passed plausibility screening, and we want to assess the agreement of those simulation results with observed data. Say we want to assess agreement with a population, pooling together patients that have been given the different therapies that have been simulated. There are a variety of practical quantitative ways to assess the agreement, each with advantages and disadvantages.

As one example, one may use the square error between observed and simulated population values, possibly given a set of associated prevalence weights. Such an error term is often normalized by variability observed in the data to better facilitate comparisons between different types of observations.

Here, we have an objective function applied over J interventions, each with Bj, Tj, b biomarker–time point measures. Joint comparisons, for example involving using information between time points, are not shown here for simplicity. In contrast to the notation used in Eqs. (6)–(8), the individual time points are directly indicated here, but each biomarker–time point may also have distributions of patient data, so the comparison between the set of data for a given time point, dj, b, t, and corresponding weighted model-derived outputs, gj, b, t, is left generally as hj, b rather than directly showing the difference. However, a recognizable least-squares objective function will result given an appropriate selection of hj, b. Here, we have a matrix of the modeled axes coefficients for multiple plausible VPs indicated as \( {\overline{\Theta}}_{\mathrm{axes}} \), and the prevalence weights are indicated by W. E is the square error and sj, b is a normalization factor. One advantage is that such a least squares objective function formulation may be fast to solve even for a large number of biomarkers and interventions, given an appropriate selection for hj,b. Another open consideration for a least-squares approach is how to make a judgement that a fit is considered “acceptable.” One selection for the scaling factor could be:

Here, \( {\sigma}_{j,b}^2 \) is the variance for the biomarker or endpoint b on intervention j. If using this selection for a scaling factor, the minimum in a least square objective function comparing an individual simulated model parameterization with a single target value mathematically coincides with a minimum in a negative log likelihood function for fitting individual time-series data, provided model residuals are independent and normally distributed [17]. However, such a strategy for scaling does not directly take into consideration differences in underlying sample sizes if calibrating multiple datasets. An additional consideration is how to adapt the least-squares approach for fitting population characteristics including observed variability as opposed to individual time series data.

As another example, one could also use frequentist statistical methods to formulate an objective function [39]. For example, p-values are combined and a composite goodness-of-fit is assessed in meta-analysis. A related objective function can be expressed as:

Here, fj, b are functions involving a statistical comparison accounting for the simulation results, such as minus the logarithm of test p-values, and \( S\left({\overline{\Theta}}_{\mathrm{axes}},W\right) \) is a composite objective function. One advantage of such an approach is that, due to the dependence on test statistics against respective data, the normalization for sample size is fixed, and the resulting meta-analysis test statistic may provide a more direct quantitative value for assessment of how good a fit is for each constituent comparison relative to least-squares approaches. With a suitable selection of the fj, b, the p-values for each biomarker–time point also become a diagnostic to help identify any issues in the model or calibration during fitting, and the use of p-values makes it fairly straightforward to compare between them. One disadvantage is that it may be slower to optimize using hypothesis tests in an objective function, given they may necessitate the need for slower, general-purpose optimization methods.

More concretely, if one wants to use Fisher’s method to combine a series of p-values in a meta-analysis of the simulated population versus patient data, the test statistic is:

Here, pj, b, t is a p-value calculated from a statistical comparison to data and \( {S}_F\left({\overline{\Theta}}_{\mathrm{axes}},W\right) \) is a Fisher test statistic. Fisher test statistics have been utilized in meta-analysis approaches [60]. It was noted that the Fisher’s combined method may report overly low p-values when there are mechanistic or other dependencies [61], so for the present case where one wants to minimize the rejection and maximize the agreement with data, Fisher’s method is a conservative approach. Nonetheless, there exist alternative methods for meta-analysis that formally account for nonindependence between the combined tests [61,62,63,64].

Practically, one would like to find VP cohorts and weighting schemes that effectively spread the prevalence weights among many VPs. This helps to ensure a single model parameterization does not drive much of the observed response, and is a prerequisite for resampling strategies and alternate clinical trial simulations. Similar to importance sampling methodologies, one could define the effective sample size as [39, 40, 43]:

Here, N is the number of plausible VPs in the cohort and the weights sum to one.

5.1.2 Objective Functions for Combining Data from a Single Patient Population

If the structure of the data is such that we have comprehensive biomarker and endpoints from a single set of patients, different approaches to calibrating the simulations to data may be more readily used. Situations like this may arise more commonly, for example, in metabolic disease research where a single patient group may be subject to pharmacodynamic studies in addition to the investigational intervention and key indicators of efficacy such as plasma glucose and insulin levels may be subject to frequent sampling. One objective function that was previously proposed for this situation, for example when fitting baseline characteristics of cholesterol across a large database, was to take the average of Kolmogorov–Smirnov (K-S) test statistics calculated independently for each calibrated model output biomarker [31]. In this example, even though the K-S test was calculated for each calibrated biomarker separately, the population fitting step also took into consideration the local multivariate probability density around each VP relative to the observed density across all of the output biomarkers.

5.2 Types of Biomarker and Endpoint Fits

There are many types of information that might be selected for fitting to quantitatively calibrate a VPop, and their choice will depend on the sources of knowledge available for a given clinical intervention, for example whether data are available in-house or must be taken from the literature.

-

Mean and standard deviation are often available in the literature for some endpoints and biomarkers. If the underlying distributions can be verified as lognormal, it is straightforward to calculate and work with the appropriate lognormal summary information.

-

Binned distributions are sometimes natural choices for clinical biomarker and endpoint calibrations. For example, in oncology, clinical response assessments are based in a substantial part on index lesion changes [65], and hence can be grouped as complete responders, partial responders, stable disease or progressive disease.

-

Empirical distributions from source data provide another basis for VPop calibration to patient data. Although individual data points may not be given in many publications, appropriate data may be found from a database or the electronic source clinical trial data.

-

Correlations can also be used in the fitting process.

-

Multivariate distributions can also be fit.

The VPop can be calibrated to many of these characteristics in the data with a related objective function. For example, we previously described a way to calibrate means, variances, binned distributions, and empirical distributions across multiple interventions and disjoint patient groups with a least-squares objective function [66]. As we will describe soon, frequentist statistical methods can be used for these comparisons and more, with methods such as t-tests, F-tests, contingency tables, K-S tests, Fisher’s r-to-z transformation, and multidimensional K-S tests such as the Peacock test and related comparisons [67, 68]. Note that if we have multivariate data, even if not all from the same patients, it is still possible to use these relationships directly in VPop calibration. A calibration of distributions and multivariate relationships is often not possible when using literature data, but can be used with electronic data that might be available within a database or directly from a clinical trial. There are additional options, and an interesting possibility for the multivariate empirical distribution calibration is the earth-mover’s distance [69, 70], although comparisons of one or more pairs of multivariate empirical distributions in many dimensions can be computationally intensive. When combining data across many clinical interventions and trials, it often may not be practical to fit individual patient data due to issues such as the sparsity of data, missing values for many of the biomarkers, and desire to combine observations from disjoint sets of patients. Regardless, multivariate relationships observed in the population from the individual data can often still be calibrated, which we will demonstrate in Subheading 6.

Another consideration is how to best handle time series data, if available, at a population level if not performing fits to individual patient trajectories. Calibrating to distinct marginal distributions at multiple time points might yield reasonable quantitative population behaviors. However, it is worth noting that time series can also be analyzed in terms of autocorrelation characteristics, which perhaps has been more often done for population analyses with stochastic differential equation models [71, 72]. One reasonable proposal to handle this situation could be to directly calibrate, or at least check, that either time series autocorrelation or joint distributions between time point measures for the VPop are similar to the clinical data.

One additional strategy that may be helpful for calibrating QSP models in certain circumstances, especially when one has source data, is imputation. Although one has to be careful when applying data imputation techniques, be transparent about their application, and verify their application is reasonable, they have been useful in a variety of systems biology and medical applications [73,74,75]. There are some situations in QSP where imputation strategies may be useful to help guide model calibration if data are sparse. For example, when many samples from an analyte of interest are below a detection limit but they have clear correlation with other analytes in their observed data [76,77,78]. Another potential application would be to develop quantitative guidance for likely biomarker patterns when there are data for two trials testing the same therapeutic intervention with multivariable individual-level biomarker and endpoint data at multiple time points, but some of the biomarker data are more sparsely sampled in one of the trials.

5.3 Optimization Algorithms

There are two key places where objective functions can play a role in the VPop methods described here. The first is during the cohort development step, when deciding whether a VP is plausible. The second is during the VPop prevalence-weighting step. Following development of suitable objective functions, many optimization strategies have been used in VP and VPop development. For cases where an optimization problem is cast in terms of least squares, fast optimization techniques such as quadratic programming can sometimes be used [43, 66]. Otherwise, more general-purpose optimization techniques such as simulated annealing, particle swarm, or genetic algorithms have been used [31, 32]. As more clinical biomarkers and endpoints are being calibrated, general optimization methods that can scale well across clusters such as parallel asynchronous particle swarm methods may find increasing utilization [79, 80].

5.4 Strategies for Assessing Uncertainty with VPops

As with other modeling approaches, uncertainty is a consideration for VPop approaches. Uncertainty can enter into model development at the steps of defining the model structure, parameterization, and prediction [18]. Although conceptually VP cohorts and VPops can be applied in a controlled manner to address each of these considerations distinctly, we believe the consideration of prediction uncertainty merits the most attention in many practical applications [16, 18], including clinical development. From the model development workflow, considerations for how to structure and parameterize the model are often addressed at the model and cohort development stage, before the VPop is developed. The questions a QSP model is being applied to address during clinical development often depend more on predicted clinical outcomes and biomarkers, as calculated from state variables, rather than structural and parametric insights, which are often explored more during pathway model development.