Abstract

This chapter presents an attempt to estimate the displacement demands of precast cantilever columns. The purpose is to set up a more reliable design philosophy based on dynamic displacement considerations, instead of acceleration-spectrum-based design, which starts from important but unclear assumptions such as the initial stiffness and the displacement ductility ratio. The sole aim of this chapter is to define a procedure for overcoming the difficulties that arise right at the beginning of the traditional design procedure.

For that purpose, 12 groups of earthquake records are first identified. They cover far-field and near-field, firm-soil and soft-soil cases for 2/50, 10/50 and 50/50 earthquakes, with minimum scale factors associated with the current fundamental period of the structure. The records are reselected for each new period of the structure during the iterative algorithm presented here, and they are used to replace displacement calculations based on static considerations. Nonlinear time history analyses (NLTHA) are employed within the algorithm; they take into account the strength and stiffness degradation of structural elements and the duration of the records, both of which are ignored in the spectrum-based design philosophy.

8.1 Introduction

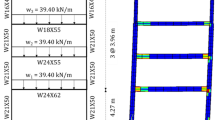

Single story precast frame type structures are widely used in the construction of industrial facilities and commercial malls in Turkey. The non-moment resisting beam-to-column connections are all wet connections. The lateral strength and stiffness of the structure depend entirely on the cantilevered columns, see Fig. 8.1.

After the August 1999 Kocaeli and November 1999 Düzce earthquakes, site investigations revealed that structural damage and collapse of one-story precast structures were common, especially in uncompleted structures (Saatcioglu et al. 2001; Ataköy 1999; Sezen et al. 2000; Bruneau 2002; Sezen and Whittaker 2006). Various types of structural damage were frequently observed in one-story precast structures, such as plasticized zones at the base of the columns and axial movement of the roof girders that led to pounding against the supporting columns or to falling of the roof girders (Wood 2003). The post-earthquake observations of one-story precast frame type structures also indicate the following:

- Lateral stiffness may not be high enough to limit the lateral displacement of the column tops, which may differ between peripheral and center columns simply because the roofing system lacks in-plane rigidity (Fig. 8.2a).

- Hence, the excessive top rotations of the columns and the relative displacements in the plane of the roof, together with the other two components of the earthquake, can readily dislodge the long-span, heavy, slender roof beams (Fig. 8.2b). They also create imperfections that promote out-of-plane buckling of the beams, which have very simple and insufficient hinge connections to the columns.

- The column displacement ductility achieved in the field is incompatible with the lateral load reduction factor used in design (Fig. 8.2c).

In addition to the observations listed above, structural alterations made after construction, the effects of nonstructural elements added without engineering consideration, and oversimplified connection details are among the other important deficiencies of these buildings that cause severe damage.

At the design stage of this type of building, the seismic weight from the tributary area of each column is easily determined for predicting the earthquake loads. However, the lateral displacement ductility ratio, which is the main parameter of the lateral load reduction factor, has to be selected at the beginning of design; this is not an easy estimate and carries its own uncertainties. Another difficulty is estimating the lateral rigidity of the column, which is used to calculate the fundamental period of vibration needed to enter the spectrum curves. Finally, the displacement limits based on static considerations do not satisfy the requirements of dynamic displacement calculations.

These factors are discussed here, following earlier experimental and theoretical work (Karadoğan 1999; Karadoğan et al. 2006). This chapter is a critical summary of the findings of the authors' earlier work and aims to establish a conclusive design algorithm, as proposed below.

8.2 Basic Structural Features Observed in the Field and Basic Features of the Current Design Practice

It is probable that the damaged and collapsed buildings mentioned above were not properly designed, manufactured or erected. From a structural engineering point of view, the following facts are important for a critique of present design practice:

- There is almost no in-plane rigidity in the roof or in the sides of the examined precast buildings.

- The connections between the long-span beams and the columns are almost hinged, and they are vulnerable to failure modes other than shear strength deficiency, such as rupture of the concrete around the shear studs.

- The tributary areas of the columns are used to define the earthquake design forces. When this is combined with the lack of in-plane rigidity of the roof, columns in different locations with different dynamic characteristics start to behave independently. Top displacements and top rotations in opposite directions then become an important issue for keeping the long-span, heavy roof beam in its required position, because all kinds of imperfections that destabilize the roof beam appear in addition to its inherent tendency towards out-of-plane buckling.

In local design practice, uncracked sections are generally used to calculate the fundamental period of the structure. Displacements are determined by static calculations, and the lateral load reduction factors suggested by the codes are used to define the design loads.

One of the main issues in precast single-story industrial buildings is that the top displacements of the center columns may not oscillate in phase with those of the perimeter columns, even though the columns often have the same cross-sectional dimensions. In most cases these structures do not possess in-plane rigidity at roof level; there is therefore no load path among the columns, and each column shakes individually (Karadoğan et al. 2013). The displacement time-history plots of Column #1, the details of which are given in Sect. 8.8, are presented in Fig. 8.3. The bottom plot in Fig. 8.3 shows that the maximum center column displacement is 26 cm, while the maximum perimeter column displacement is 20 cm. These two numbers may mislead the engineer into concluding that the differential displacement between the perimeter and center columns is just 26 − 20 = 6 cm. The top plot, which shows the differential displacement between the two columns in the time domain, reveals however that the maximum differential (i.e. asynchronous) displacement reaches 33 cm. The reason is that the peak top displacement of each column may occur at a different time, so the phase difference may cause large asynchronous displacements. In other words, the top displacements of a center column and a perimeter column may have opposite signs.
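The distinction between the difference of the peaks and the peak of the difference can be illustrated with a minimal sketch. The two displacement histories below are hypothetical sinusoids with amplitudes of 26 cm and 20 cm and arbitrarily chosen periods; they are not the records or the responses of Fig. 8.3, and the helper names are illustrative only.

```python
import math

def peak(values):
    """Largest absolute value in a sequence."""
    return max(abs(v) for v in values)

def max_differential(u_a, u_b):
    """Peak of |u_a(t) - u_b(t)|, evaluated time step by time step."""
    return peak(a - b for a, b in zip(u_a, u_b))

# Hypothetical top-displacement histories in cm (assumed, not from the chapter):
# two sinusoids with different periods, so that they drift out of phase.
dt = 0.01
t = [i * dt for i in range(2001)]  # 0 to 20 s
u_center = [26.0 * math.sin(2 * math.pi * ti / 1.5) for ti in t]
u_perimeter = [20.0 * math.sin(2 * math.pi * ti / 1.2) for ti in t]

naive = peak(u_center) - peak(u_perimeter)          # difference of the two peaks
actual = max_differential(u_center, u_perimeter)    # peak of the difference

print(f"difference of peaks: {naive:.1f} cm")
print(f"peak differential  : {actual:.1f} cm")
```

Because the two peaks need not occur at the same instant or with the same sign, the second number can be several times larger than the first; this is why the differential displacement has to be evaluated in the time domain.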

Top displacements of individual cantilever columns with opposite signs may lead to instability of the beams, which are hinged to the column tops in existing practice. Analyses of the perimeter and center columns of a single-story precast structure under 20 code-compatible records (Fig. 8.4) show that the tip rotation is always higher than the chord rotation (note that the chord rotation is equal to the drift in cantilever systems). In other words, the tip of the column, where the hinged beams are connected, rotates more than the column itself. This is a major parameter neglected in design.
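A simple elastic check, which is not part of the analyses behind Fig. 8.4, shows why the tip rotation exceeds the chord rotation. It assumes a prismatic cantilever of height $L$ and flexural rigidity $EI$ loaded by a lateral point force $P$ at the tip; these symbols are introduced here only for illustration.

```latex
\delta_{tip} = \frac{P L^{3}}{3EI}, \qquad
\theta_{tip} = \frac{P L^{2}}{2EI}, \qquad
\theta_{chord} = \frac{\delta_{tip}}{L} = \frac{P L^{2}}{3EI}
\quad\Longrightarrow\quad
\frac{\theta_{tip}}{\theta_{chord}} = \frac{3}{2}
```

Under these assumptions the tip rotates 50 % more than the chord. In a cracked or yielding column the ratio depends on how the curvature is distributed along the height, so the value 3/2 should be read as an elastic reference only.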

8.3 Why Justification of Code Based Design Procedure Is Needed?

Even if the damaged or collapsed buildings shown in Fig. 8.2 had been properly designed, manufactured and erected, one would still have the right to remain suspicious about the safety of a building unless the assumptions made at the beginning of design are justified at the end.

The basic questions to keep in mind until a satisfactory design has been reached are as follows:

- What should be the initial stiffness of the structure on which the fundamental period will be based?

- What should be the displacement ductility factor or lateral load reduction factor on which the design forces will be based?

- To what extent is the story drift calculation based on static considerations valid?

Two other deficiencies of the spectrum-based design technique are that the duration of the record is not taken into account and that the stiffness and strength deterioration of the structure is neglected. Neither effect is embedded in the procedure widely used by existing codes.

To address the doubts from which these questions arise, an algorithm to justify the design assumptions made at the beginning is presented in the following sections.

8.4 Selection of Partially Code Compatible Records

A simple engineering approach is used here for the selection of the records used in nonlinear analyses. The records have been selected from the PEER NGA Database, where 7,025 recorded motions were available. In-house software was used to list and download the records automatically and to plot the acceleration (at 5 % damping), velocity and displacement spectra.

Twelve bins of records (http://web.itu.edu.tr/~iebal/Dr_Ihsan_Engin_BAL/SafeCladding_EU_Project) are created by checking the following parameters:

1. Earthquake intensity (2/50, 10/50 or 50/50 earthquakes, 3 bins)

2. Far field or near field (2 bins)

3. Soil type (firm soil and soft soil, 2 bins)

Each of these 12 bins has 20 records.

In the selection algorithm, the acceleration spectrum of the original record is first compared with the target spectrum in the period window of 0.2–2.0 s, and a scale factor is applied to the ordinates. The record is then classified as near field or far field, where distances above 15 km are assumed to be far field. Finally, the record is classified by soil type: records taken on soil with Vs30 higher than 300 m/s are assumed to be recorded on firm soil, while records taken on soil with Vs30 lower than 700 m/s are assumed to be recorded on soft soil. There is certainly an overlap in the soil criteria; this is nevertheless unavoidable if one checks the borders between firm and soft soil in the guidelines and codes.
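The distance and soil criteria above can be sketched as a small classifier. This is only an illustration of the stated thresholds and not the in-house software; the function names are hypothetical, and the distance measure (for example, closest distance to the fault) is an assumption because the text does not specify it.

```python
FAR_FIELD_MIN_KM = 15.0   # distances above this are treated as far field
FIRM_MIN_VS30 = 300.0     # m/s, Vs30 above this qualifies as firm soil
SOFT_MAX_VS30 = 700.0     # m/s, Vs30 below this qualifies as soft soil

def distance_class(distance_km):
    """Near-field / far-field label from the source-to-site distance."""
    return "far field" if distance_km > FAR_FIELD_MIN_KM else "near field"

def soil_classes(vs30):
    """Soil labels a record qualifies for; the two ranges overlap on purpose."""
    classes = []
    if vs30 > FIRM_MIN_VS30:
        classes.append("firm soil")
    if vs30 < SOFT_MAX_VS30:
        classes.append("soft soil")
    return classes

def candidate_bins(distance_km, vs30):
    """All (distance, soil) bins in which a record may be used."""
    return [(distance_class(distance_km), soil) for soil in soil_classes(vs30)]

# A hypothetical record at 30 km on a site with Vs30 = 500 m/s falls in the overlap.
print(candidate_bins(30.0, 500.0))
```

A record with Vs30 between 300 and 700 m/s is a candidate for both soil bins, which reflects the overlap acknowledged in the text.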

The intensity levels of 2/50, 10/50 and 50/50 are defined to represent 2, 10 and 50 % probabilities of exceedance in 50 years, respectively.
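For orientation, these probabilities can be converted into mean return periods. The sketch below assumes that earthquake occurrence follows a Poisson process, which is the usual convention but is not stated in the chapter; the function name is illustrative.

```python
import math

def return_period(p_exceed, years=50.0):
    """Mean return period (years) for a probability of exceedance in a given
    exposure time, assuming Poisson occurrence: p = 1 - exp(-years / T)."""
    return -years / math.log(1.0 - p_exceed)

for label, p in (("2/50", 0.02), ("10/50", 0.10), ("50/50", 0.50)):
    print(f"{label}: about {return_period(p):.0f} years")
```

Under this assumption the three levels correspond to return periods of roughly 2,475, 475 and 72 years.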

The criteria applied determine the number of available records, but it should be mentioned that the bins do not all return the same number of available records. For instance, records from soft soil and far field constitute more than 60 % of the record pool, so the rest is shared among three groups: far field – firm soil, near field – soft soil, and near field – firm soil. As a result, the selection criteria had to be loosened in some cases.

The scale factors are set such that the average spectrum of the 20 records does not fall below the target spectrum by more than a certain percentage; in most cases the average spectrum is not allowed to fall below the target spectrum at all. Similarly, the average spectrum is not allowed to exceed the target spectrum by more than 30 % at any point within the period window. Another criterion is applied to individual records in order to control the difference between the positive and negative peaks, where positive peaks are those above the target spectrum and negative peaks are those below it. According to this criterion, an individual record is not allowed to fall more than 50 % below the target spectrum, or to rise more than 200–300 % above it, at any of the peaks. This criterion favors rather smooth records with fewer peaks, but it is a very harsh criterion to satisfy. Overall, the scale factors are not allowed to be below 0.5 or above 2 for any of the selected records, so that the energy content can be controlled.
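The bin-level checks on the average spectrum and on the scale factors can be sketched as follows. This is an illustration under stated assumptions, not the in-house selection software: spectra are assumed to be sampled at the same periods inside the window, the strict "never below the target" variant is used, the 30 % limit is read as an upper bound of 1.30 times the target, and all names and numbers are hypothetical.

```python
def scaled(spectrum, factor):
    """Spectral ordinates multiplied by a scale factor."""
    return [factor * s for s in spectrum]

def mean_spectrum(spectra):
    """Ordinate-by-ordinate average of several spectra on a common period grid."""
    return [sum(column) / len(column) for column in zip(*spectra)]

def scale_factor_ok(factor, lower=0.5, upper=2.0):
    """Scale factor limits quoted in the text."""
    return lower <= factor <= upper

def average_ok(spectra, factors, target, upper_ratio=1.30):
    """True if the average scaled spectrum never falls below the target and
    never exceeds upper_ratio times the target (assumed reading of the 30 % rule)."""
    mean = mean_spectrum([scaled(s, f) for s, f in zip(spectra, factors)])
    return all(t <= m <= upper_ratio * t for m, t in zip(mean, target))

# Hypothetical ordinates (g) at three periods inside the 0.2-2.0 s window.
target = [1.0, 0.8, 0.5]
spectra = [[0.9, 0.8, 0.5], [1.3, 1.0, 0.6]]

print(average_ok(spectra, [1.0, 1.0], target))   # average stays inside the band
print(average_ok(spectra, [0.8, 0.8], target))   # average drops below the target
```

The per-record peak criterion is left out of the sketch because its exact numerical reading depends on how the 50 % and 200–300 % limits are defined.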

Two more criteria have been applied to control the energy content: the PGV and the Arias Intensity. The purpose of these two criteria is to decrease the scatter, i.e. the record-to-record variability, of the selected records. To do so, the record that fits the target spectrum with the least error is designated as the best record, and the selected records are not allowed to have PGV or Arias Intensity values above or below certain ratios of those obtained from the best record. In some of the bins the limits for these criteria had to be set so loosely that they were practically ineffective, because the number of available records was already low even without these criteria. Generally, after the scale factors are applied, the selected records are not allowed to have PGV and Arias Intensity values above 1/0.6–1/0.7 or below 0.6–0.7 of those of the best record.
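A minimal sketch of the two energy-related checks is given below. The Arias Intensity is computed from its standard definition, $I_a = \frac{\pi}{2g}\int a(t)^2\,dt$, with trapezoidal integration; the acceleration units (m/s²), the ratio of 0.6 and the function names are assumptions made for illustration.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def arias_intensity(acc, dt):
    """Arias Intensity (m/s) of an acceleration history in m/s^2 sampled at
    a constant step dt, using trapezoidal integration of a(t)^2."""
    integral = sum(0.5 * (a0 * a0 + a1 * a1) * dt for a0, a1 in zip(acc, acc[1:]))
    return math.pi / (2.0 * G) * integral

def within_ratio(value, reference, ratio=0.6):
    """True if value lies between ratio * reference and reference / ratio."""
    return ratio * reference <= value <= reference / ratio

# Hypothetical scaled records: compare a candidate with the best-fitting record.
best = [0.0, 1.0, -2.0, 1.5, -0.5, 0.0]
candidate = [0.0, 1.2, -1.8, 1.4, -0.6, 0.0]
ia_best = arias_intensity(best, 0.01)
ia_candidate = arias_intensity(candidate, 0.01)
print(within_ratio(ia_candidate, ia_best))
```

The same `within_ratio` check can be applied to the PGV values of the scaled records.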

The records have been selected using acceleration spectra; however, similar procedures can and should be developed for velocity and displacement spectra as well.

As an example, acceleration and displacement spectra are given in Fig. 8.5. Note that the differences among the selected records are much larger in the displacement spectra when long-period structures, such as the single-story precast structures presented here, are considered.

8.5 Proposed Algorithm

The following steps are identified in the proposed algorithm; see the flow chart in Fig. 8.6 and the illustrative description in Fig. 8.7:

- It is assumed that the preliminary design of the structure has been completed so that all requirements of the selected seismic code, such as strength and displacement limits, have been satisfied. At this stage there is no need to discuss what the initial stiffness should be, what the most suitable lateral load reduction factor is, or whether the displacement equality principle is valid.

- Real earthquake records are selected so that the soil conditions, the distance to active faults and the required intensity (2/50, 10/50 or 50/50) are all satisfied within reasonable tolerances. Scaling factors are chosen as close to unity as possible so that the acceleration spectra are close to the code spectrum in the narrowest possible window around the fundamental period of the structure. About 20 records should be selected, and the most meaningful part of each record should be identified to shorten the NLTHA that is run for all records. The details of this topic are discussed in Sect. 8.4.

- The selected partially code-compatible records are used for linear and nonlinear analyses of the structure to check which of the displacement or energy equality assumptions is valid for the specific structure under consideration. It is also important to have an idea of the tolerance within which one of these equalities can be accepted as valid.

- Depending on the decision made at the end of the previous step, the lateral load reduction factor is calculated using the corresponding formula given in the flow chart.

- The mean plus one standard deviation of the maximum displacements obtained through NLTHA and the lateral load reduction factors are calculated.

- The capacity curve of the structure is obtained using any of the known techniques. These curves can be obtained not only theoretically but also experimentally, empirically or parametrically, and they can be in continuous or bilinear form.

- The yield point and the point corresponding to the maximum inelastic displacement are used to define the lateral rigidity and the achieved displacement ductility of the structure, from which more realistic lateral load reduction factors are calculated with the same formula used in the earlier step.

- The lateral load reduction factors are expected to be almost equal in the last two iteration cycles. If they are not sufficiently close, another iteration step is carried out.
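The bookkeeping of one iteration cycle can be sketched as follows. The formulas in the flow chart of Fig. 8.6 are not reproduced in the text, so the sketch assumes the classical Newmark-Hall relations, $R = \mu$ for displacement equality and $R = \sqrt{2\mu - 1}$ for energy equality; the sample standard deviation, the function names and the numerical values are likewise assumptions. The 10 % tolerance is the value used in the numerical examples of this chapter.

```python
import math
import statistics

def displacement_demand(max_displacements):
    """Mean plus one (sample) standard deviation of the NLTHA peak displacements."""
    return statistics.mean(max_displacements) + statistics.stdev(max_displacements)

def ductility(d_max, d_yield):
    """Displacement ductility from the capacity curve yield point."""
    return d_max / d_yield

def reduction_factor(mu, rule):
    """Assumed Newmark-Hall relations; the chapter's own formulas are in Fig. 8.6."""
    if rule == "displacement":
        return mu
    if rule == "energy":
        return math.sqrt(2.0 * mu - 1.0)
    raise ValueError("rule must be 'displacement' or 'energy'")

def converged(r_prev, r_new, tol=0.10):
    """True if R changed by no more than tol between two consecutive cycles."""
    return abs(r_new - r_prev) <= tol * r_prev

# Hypothetical cycle: three peak displacements (cm) and a yield displacement of 4 cm.
d_max = displacement_demand([10.0, 12.0, 14.0])
mu = ductility(d_max, 4.0)
r_new = reduction_factor(mu, "energy")
print(d_max, mu, round(r_new, 3), converged(2.6, r_new))
```

In a full implementation each cycle would also rerun the record selection and the NLTHA for the updated period; those steps are outside the scope of this sketch.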

In the following sections, several definitions and explanations are given, and some complementary results of earlier experimental and theoretical work on over-strength factors, lateral load reduction factors and capacity curves are summarized for the sake of completeness.

8.6 Over Strength and Lateral Load Reduction Factors

For the sake of completeness, the earlier results obtained by reviewing the experimentally observed and theoretically examined column behavior are included in this section (Karadoğan et al. 2006, 2013).

The 4.0 m high column with a 40 × 40 cm cross section (Fig. 8.8a), subjected to displacement reversals, exhibited the structural response shown in Fig. 8.8b. The same hysteresis loops were obtained theoretically and are compared with the experimental results in Fig. 8.8c. The material coefficients were then reduced from 1.15 and 1.4 to unity for steel and concrete, respectively, and the theoretical analysis was repeated. The envelopes of the hysteresis curves are compared in Fig. 8.8d.

Twelve more similar tests were completed and analysed in the same way, and Table 8.1 was prepared from the results (Karadoğan et al. 2013). The first two rows of the table give the ultimate loads of the columns when the material coefficients are taken as unity and as different from unity, respectively. The ratio of these two rows gives the approximate over-strength factors. It can be concluded that, for this type of column, the over-strength factor can be taken as 1.10. If the displacement ductilities obtained from the same tests, given in the fourth row of Table 8.1, are multiplied by the over-strength factor, the lateral load reduction factors in the fifth row are obtained.
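The arithmetic behind the rows of Table 8.1 can be sketched as below. The loads and the ductility are hypothetical placeholders, not values from Table 8.1, and the function names are illustrative.

```python
def over_strength(p_ult_unity, p_ult_code):
    """Ratio of the ultimate load with unit material coefficients to the
    ultimate load with the code material coefficients."""
    return p_ult_unity / p_ult_code

def reduction_factor(displacement_ductility, omega):
    """Lateral load reduction factor as ductility times over-strength."""
    return displacement_ductility * omega

# Hypothetical column: ultimate loads of 55 kN and 50 kN, ductility of 4.0.
omega = over_strength(55.0, 50.0)
r_factor = reduction_factor(4.0, omega)
print(round(omega, 2), round(r_factor, 2))
```

With an over-strength factor close to 1.10, the reduction factor is only about 10 % larger than the displacement ductility, so the ductility estimate governs the result.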

8.7 Capacity Curves

The capacity curves used in the algorithm explained above can be obtained either theoretically or by any of the known simplified techniques. They can be in continuous or bilinear form.

For columns of the same size and concrete quality but with different reinforcement ratios, simple ready-made charts can sometimes be utilized for that purpose. An example of a capacity curve for a 30 × 30 cm C25 square column, obtained experimentally, theoretically and parametrically, is presented in Fig. 8.9 (Karadoğan et al. 2006).
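A bilinear capacity curve is fully described by its yield point and post-yield slope, and the initial stiffness and period follow directly from it. The sketch below assumes a single-degree-of-freedom idealization with the tributary mass lumped at the column top; the yield point, hardening ratio and mass are hypothetical and are not taken from Fig. 8.9.

```python
import math

def bilinear_force(d, d_yield, f_yield, hardening=0.0):
    """Force on a bilinear capacity curve at displacement d.
    hardening is the post-yield stiffness as a fraction of the initial stiffness."""
    k_initial = f_yield / d_yield
    if abs(d) <= d_yield:
        return k_initial * d
    return math.copysign(f_yield + hardening * k_initial * (abs(d) - d_yield), d)

def period(mass, stiffness):
    """Period of a single-degree-of-freedom system, T = 2*pi*sqrt(m/k)."""
    return 2.0 * math.pi * math.sqrt(mass / stiffness)

# Hypothetical column: yield at 0.04 m and 80 kN, tributary mass of 50 t.
d_y, f_y = 0.04, 80.0e3           # m, N
k = f_y / d_y                     # initial stiffness, N/m
print(f"T = {period(50.0e3, k):.2f} s")
print(f"F(0.10 m) = {bilinear_force(0.10, d_y, f_y) / 1e3:.0f} kN")
```

Using the stiffness taken from the yield point of the capacity curve, rather than the uncracked section stiffness, is what ties the period used for record selection to the behavior of the column.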

8.8 Numerical Examples

The presented algorithm has been used to clarify the following issues:

- To what extent are the assumptions made at the beginning of the preliminary design satisfied? Namely, have the strength, the lateral load reduction factor and/or the displacement ductility assumptions, as well as the stiffness values used in design, been checked?

- The design is accepted when one or several of the parameters are satisfied. What are the tolerance limits for satisfaction of the design criteria?

The initial design parameters and the findings are presented for three columns, Column #1 to Column #3. Column #1 is extracted from the benchmark structure of the Safecast FP7 Project. Column #2 is one of the prefabricated columns tested at the ITU laboratories (Karadoğan et al. 2006). Column #3 is extracted from a real structure currently in use in Kocaeli, Turkey.

The algorithm proposed above was run for each of these columns and converged in three steps for all of them. The results, together with the key parameters of each analysis step, are presented in Table 8.2.

The results presented in Table 8.2 are based on the assumption that the change of the R factor between two consecutive steps will not exceed a tolerance, which is 10 % in this study. This tolerance, as well as the tolerance limits of other parameters, may be adjusted by the user depending on parametric studies and findings.

Comparisons of the assumed capacity curves with the pushover and time history analysis results for the columns are presented in Figs. 8.10, 8.11, and 8.12.

The results presented in Figs. 8.10, 8.11, and 8.12 are representative of all possible cases in design iterations when the proposed algorithm is used. In the first example, the displacement condition is not satisfied (i.e. the displacement demand of the original column is higher than the displacement capacity of the structure). The strength is not satisfied in the second example. The third example satisfies both conditions but the algorithm was still run in order to see how the design would change. It can be observed in these figures that the column dimensions and/or reinforcement need to be changed in all cases in order to satisfy the design algorithm proposed here.

Please note that the scale factors for some of the records listed in Table 8.3 are higher than 2. These are the cases where the number of available records for the set of criteria used was not high thus the scale factor condition was loosened.

One of the key points of the proposed algorithm, and one of the main motivations of the study, is that spectral displacement equality (i.e. the equal displacement rule), which is the basis of conventional design, is not valid in most cases. The analyses show that, for the three columns examined, the average plastic displacement calculated by applying the 20 selected records to the columns is always higher than the spectral displacement found from the displacement spectra of the same records. In other words, the equal displacement rule does not hold for the cases examined.

Displacement equality is the basis of conventional design because the behavior factor, R, is the most important assumption of conventional design. A graphical description of the terms and of the design assumption is presented in Fig. 8.13.

The results shown in Fig. 8.14 indicate a significant disagreement between the spectral and real displacement demands. Please note that the period of the three columns presented in the plot shown in Fig. 8.14, columns of 40 × 40, 60 × 60 and 70 × 70, are 0.64 s, 0.75 s and 1.54 s, respectively. As it can be seen in Fig. 8.14, as the period of the system increases, the disagreement becomes even more evident.
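The comparison behind Fig. 8.14 can be expressed as a ratio between the inelastic displacement from NLTHA and the elastic spectral displacement at the same period. The sketch below uses the standard pseudo-spectral relation $S_d = S_a (T/2\pi)^2$; the spectral acceleration and the NLTHA displacement are hypothetical, and only the period of 0.64 s is taken from the text.

```python
import math

def spectral_displacement(sa, period):
    """Elastic spectral displacement (m) from pseudo-acceleration sa (m/s^2)."""
    return sa * (period / (2.0 * math.pi)) ** 2

def displacement_ratio(d_inelastic, sa, period):
    """Ratio of the NLTHA displacement to the elastic spectral displacement.
    A value of 1.0 would mean that the equal displacement rule holds exactly."""
    return d_inelastic / spectral_displacement(sa, period)

# Hypothetical case: Sa = 6.0 m/s^2 at T = 0.64 s, NLTHA displacement of 0.09 m.
sd = spectral_displacement(6.0, 0.64)
ratio = displacement_ratio(0.09, 6.0, 0.64)
print(f"Sd = {sd:.3f} m, ratio = {ratio:.2f}")
```

A ratio persistently above 1.0 across the selected records, as reported for the three columns, is what indicates that the equal displacement rule underestimates the demand.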

8.9 Conclusions

The following conclusions are drawn:

- Design verification is needed and, if necessary, redesign iterations should be carried out.

- The algorithm presented makes it possible to overcome the inherent deficiencies of spectrum-based design; namely, strength and stiffness degradation and duration effects, which are not considered in code-specified spectrum analyses, can be taken into account. With this technique there is no need, at the beginning of the design stage, to make a series of assumptions such as the initial stiffness of the structure, its displacement ductility and the lateral load reduction factor, all of which affect the results. It becomes possible to trace the actual behavior of the structure during the iteration steps.

- The top displacements obtained by NLTHA with nearly code-compatible real earthquake records are generally larger than the code limits, and they are practically not equal to the elastic displacements obtained by linear time history analyses. Therefore, the widely used assumption of displacement equality cannot be generalized for the columns analyzed, and equality of velocities or energies should be considered wherever needed. The algorithm presented here provides a versatile tool for that purpose.

- The proposed procedure can be used not only for single-story precast buildings; with minor alterations it can be generalized to the design of bridge columns or piers and to the critical columns of pilotis-type building structures, where all the nonlinear behavior is concentrated in one story, generally one of the lower ones.

- The execution time of the nonlinear time history analyses needed in the proposed algorithm is not a major issue given the speed of present-day computers, but the selection of real records and their optimal number deserve further discussion.

- Several more checks can be added to the flow chart to refine it, for example to control whether the sectional ductility is sufficient to provide the required displacement ductility and to check the allowable tip rotations needed to keep the top beams stable in their original position. The proposed algorithm may also be altered to depend on other limits or other parameters based on available research.

References

Ataköy H (1999) 17 August Marmara earthquake and the precast structures built by TPCA members. Turkish Precast Concrete Association, Ankara

Bruneau M (2002) Building damage from the Marmara, Turkey earthquake of August 17, 1999. J Seismol 6(3):357–377

Bal IE Personal webpage: http://web.itu.edu.tr/~iebal/Dr_Ihsan_Engin_BAL/SafeCladding_EU_Project.html

Karadoğan F (1999) Prefabricated industrial type structures of Adapazari. In: Irregular structures asymmetric and irregular structures, vol 2. European Association of Earthquake Engineering, Task Group 8, Istanbul

Karadoğan F, Yüksel E, Yüce S, Taskın K, Saruhan H (2006) Experimental study on the original and retrofitted precast columns. Technical report (in Turkish), Istanbul Technical University

Karadoğan F, Yüce S, Yüksel E, Bal IE, Hasel FB (2013) Single story precast structures in seismic zones-I. COMPDYN 2013, 4th ECCOMAS thematic conference on computational methods in structural dynamics and earthquake engineering, Kos Island, 12–14 June 2013

PEER NGA Database. Pacific earthquake engineering research center: NGA database. http://peer.berkeley.edu/peer_ground_motion_database/

Saatcioglu M, Mitchell D, Tinawi R, Gardner NJ, Gillies AG, Ghobarah A et al (2001) The August 17, 1999, Kocaeli (Turkey) earthquake – damage to structures. Can J Civ Eng 28:715–737

SAFECAST FP7 (2008–2011) Project Performance of innovative mechanical connections in precast building structures under seismic conditions, coordinated by Dr. Antonella Colombo

Sezen H, Whittaker AS (2006) Seismic performance of industrial facilities affected by the 1999 Turkey earthquake. J Perform Constr Facil ASCE 20(1):28–36

Sezen H, Elwood KJ, Whittaker AS, Mosalam KM, Wallace JW, Stanton JF (2000) Structural engineering reconnaissance of the August 17, 1999, Kocaeli (Izmit), Turkey, earthquake. Rep No 2000/09. Pacific Earthquake Engineering Research Center, University of California, Berkeley

Wood SL (2003) Seismic rehabilitation of low-rise precast industrial buildings in Turkey. In: Wasti T, Ozcebe G (eds) Advances in earthquake engineering for urban risk reduction. NATO science series. IV. Earth and environmental sciences, vol 66. Kluwer Academic Publishers, Published by Springer, Dordrecht, pp 167–77

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution Noncommercial License, which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Copyright information

© 2015 The Author(s)

About this chapter

Cite this chapter

Karadoğan, H.F., Bal, I.E., Yüksel, E., Yüce, S.Z., Durgun, Y., Soydan, C. (2015). An Algorithm to Justify the Design of Single Story Precast Structures. In: Ansal, A. (eds) Perspectives on European Earthquake Engineering and Seismology. Geotechnical, Geological and Earthquake Engineering, vol 39. Springer, Cham. https://doi.org/10.1007/978-3-319-16964-4_8

DOI: https://doi.org/10.1007/978-3-319-16964-4_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-16963-7

Online ISBN: 978-3-319-16964-4

eBook Packages: Earth and Environmental Science (R0)