Abstract

Calibration of a camera system is essential to ensure that image measurements result in accurate estimates of locations and dimensions within the object space. In the underwater environment, the calibration must implicitly or explicitly model and compensate for the refractive effects of waterproof housings and the water medium. This chapter reviews the different approaches to the calibration of underwater camera systems in theoretical and practical terms. The accuracy, reliability, validation and stability of underwater camera system calibration are also discussed. Samples of results from published reports are provided to demonstrate the range of possible accuracies for the measurements produced by underwater camera systems.

This is a revised version based on a paper originally published in the online access journal Sensors (Shortis 2015).


2.1 Introduction

2.1.1 Historical Context

Photography has been used to document the underwater environment since the invention of the camera. In 1856 the first underwater images were captured on glass plates from a camera enclosed in a box and lowered into the sea (Martínez 2014). The first photographs captured by a diver date to 1893 and in 1914 the first movie was shot on film from a spherical observation chamber (Williamson 1936). Various experiments with camera housings and photography from submersibles followed over the next decades, but it was only after the invention of effective water-tight housings in the 1930s that still and movie film cameras were used extensively underwater. In the 1950s the use of SCUBA became more widespread, several underwater feature movies were released and the first documented uses of underwater television cameras to record the marine environment were conducted (Barnes 1952). A major milestone in 1957 was the invention of the first waterproof 35 mm camera that could be used both above and under water, later developed into the Nikonos series of cameras with interchangeable, water-tight lenses.

The first use of underwater images in conjunction with photogrammetry for heritage recording was the use of a stereo camera system in 1964 to map a late Roman shipwreck (Bass 1966). Other surveys of shipwrecks using pairs of Nikonos cameras controlled by divers (Hohle 1971), mounted on towed body systems (Pollio 1972) or mounted on submersibles (Bass and Rosencrantz 1977) soon followed. These early analyses of stereo pairs utilized the traditional techniques of mapping from stereo photographs, developed for topographic mapping from aerial photography. These first applications of photogrammetry to underwater archaeology were motivated by the well-documented advantages of the technique, especially the non-contact nature of the measurements, the impartiality and accuracy of the measurements, and the creation of a permanent record that could be reanalysed and repurposed later (Anderson 1982). Subsequently, a variety of underwater cameras have been deployed for traditional mapping techniques and cartographic representations, based on diver-controlled systems (Henderson et al. 2013) and ROVs (Drap et al. 2007), and digital images and modelling software have been used to create models of artefacts such as anchors and amphorae (Green et al. 2002). Stereo photogrammetry has the disadvantage that the capture and analysis of the measurements is a complex task that requires specific techniques and expertise; however, this complexity can be ameliorated by documenting the operations at the site and in the office (Green 2016; Green et al. 1971).

2.1.2 Modern Systems and Applications

More recent advances in equipment and techniques have dramatically improved the efficacy of the measurement technique and the production of deliverables. There is an extensive range of underwater-capable digital cameras with high-resolution sensors that can capture both still images and video sequences (Underwater Photography Guide 2017). Rather than highly constrained patterns of stereo photographs and traditional, manual photogrammetric solutions, many photographs from a single camera and the principle of Structure from Motion (SfM) (Pollefeys et al. 2000) can be used to automatically generate a detailed 3D model of the site, shipwreck or artefacts. SfM has been used effectively to map archaeological sites (McCarthy 2014; McCarthy and Benjamin 2014; Skarlatos et al. 2012; Van Damme 2015), compare sites before and after the removal of encrustations (Bruno et al. 2013) and create models of the artefacts from a shipwreck (Balletti et al. 2015; Fulton et al. 2016; Green et al. 2002; McCarthy and Benjamin 2014). Whilst there are some practical considerations that must be respected to obtain an effective and complete 3D virtual model (McCarthy and Benjamin 2014), the locations of the photographs are relatively unconstrained, which is a significant advantage in the underwater environment.

Based on citations in the literature (Mallet and Pelletier 2014; Shortis et al. 2009a), however, marine habitat conservation, biodiversity monitoring and fisheries stock assessment dominate the application of accurate measurement by underwater camera systems. The age and biomass of fish can be reliably estimated based on length measurement and a length-weight or length-age regression (Pienaar and Thomson 1969; Santos et al. 2002). When combined with spatial or temporal sampling in marine ecosystems, or counts of fish in an aquaculture cage or a trawl net, the distribution of lengths can be used to estimate distributions of or changes in biomass, and shifts in or impacts on population distributions. Underwater camera systems are now widely employed in preference to manual methods as a non-contact, non-invasive technique to capture accurate length information and thereby estimate biomass or population distributions (Shortis et al. 2009a). Underwater camera systems have the further advantages that the measurements are accurate and repeatable (Murphy and Jenkins 2010), sample areas can be very accurately estimated (Harvey et al. 2004) and the accuracy of the length measurements vastly improves the statistical power of the population estimates when sample counts are very low (Harvey et al. 2001).

Underwater stereo-video systems have been used in the assessment of wild fish stocks with a variety of cameras and modes of operation (Klimley and Brown 1983; Mallet and Pelletier 2014; McLaren et al. 2015; Santana-Garcon et al. 2014; Seiler et al. 2012; Watson et al. 2009), in pilot studies to monitor length frequencies of fish in aquaculture cages (Harvey et al. 2003; Petrell et al. 1997; Phillips et al. 2009) and in fish nets during capture (Rosen et al. 2013). Commercial systems such as the AKVAsmart, formerly VICASS (Shieh and Petrell 1998), and the AQ1 AM100 (Phillips et al. 2009) are widely used in aquaculture and fisheries.

There are many other applications of underwater photogrammetry. Stereo camera systems were used to conduct the first accurate seabed mapping applications (Hale and Cook 1962; Pollio 1971) and have been used to measure the growth of coral (Done 1981). Single and stereo cameras have been used for the monitoring of submarine structures, most notably to support energy exploration and extraction in the North Sea (Baldwin 1984; Leatherdale and Turner 1983), the mapping of seabed topography (Moore 1976; Pollio 1971), the creation of 3D models of sea grass meadows (Rende et al. 2015) and inshore sea floor mapping (Doucette et al. 2002; Newton 1989). A video camera has been used to measure the shape of fish pens (Schewe et al. 1996), a stereo camera has been used to map cave profiles (Capra 1992) and digital still cameras have been used underwater for the estimation of sponge volumes (Abdo et al. 2006). Seafloor monitoring has been carried out in deep water using continuously recorded stereo video cameras combined with a high-resolution digital still camera (Shortis et al. 2009b). A network of digital still camera images has been used to accurately characterize the shape of a semi-submerged ship hull (Menna et al. 2013).

2.1.3 Calibration and Accuracy

The common factor for all these applications of underwater imagery is a designed or specified level of accuracy. Photogrammetric surveys for heritage recording, marine biomass or fish population distributions are directly dependent on the accuracy of the 3D measurements. Any inaccuracy will lead to significant errors in the measured dimensions of artefacts (Capra et al. 2015), under- or over-estimation of biomass (Boutros et al. 2015) or a systematic bias in the population distribution (Harvey et al. 2001). Other applications such as structural monitoring or seabed mapping must achieve a specified level of accuracy for the surface shape.

Calibration of any camera system is essential to achieve accurate and reliable measurements. Small errors in the perspective projection must be modelled and eliminated to prevent the introduction of systematic errors in the measurements. In the underwater environment, the calibration of the cameras is of even greater importance because the effects of refraction through the air, housing and water interfaces must be incorporated.

Compared to in-air calibration, camera calibration under water is subject to the additional uncertainty caused by attenuation of light through the housing port and water media, as well as the potential for small errors in the refracted light path due to modelling assumptions or non-uniformities in the media. Accordingly, the precision and accuracy of calibration under water is always expected to be degraded relative to an equivalent calibration in-air. Experience demonstrates that, because of these effects, underwater calibration is more likely to result in scale errors in the measurements.

2.2 Calibration Approaches

2.2.1 Physical Correction

In a limited range of circumstances calibration may be unnecessary. If a high level of accuracy is not required, and the object to be measured approximates a 2D planar surface, a straightforward solution is possible.

Correction lenses or dome ports such as those described in Ivanoff and Cherney (1960) and Moore (1976) can be used to provide a near-perfect central projection under water by eliminating the refraction effects. Any remaining small errors or imperfections can either be corrected using a grid or graticule placed in the field of view, or simply accepted as a small deterioration in accuracy. The correction lens or dome port has the further advantage that there is little, if any, degradation of image quality near the edges of the port. In contrast, plane camera ports exhibit a loss of contrast and intensity at the extremes of the field of view due to oblique angles of incidence and the greater apparent thickness of the port material.

This simplified approach has been used, either with correction lenses or with a pre-calibration of the camera system, to carry out two-dimensional mapping. A portable control frame with a fixed grid or target reference is imaged before deployment or placed against the object to be measured, to provide calibration corrections and to position and orient the camera system relative to the object. Typical applications of this approach are shipwreck mapping (Hohle 1971), sea floor characterization surveys (Moore 1976), length measurements in aquaculture (Petrell et al. 1997) and monitoring of sea floor habitats (Chong and Stratford 2002).

If accuracy is a priority, however, and especially if the object to be measured is a 3D surface, then a comprehensive calibration is essential. The correction lens approach assumes that the camera is a perfect central projection and that the entrance pupil of the camera lens coincides exactly with the centre of curvature of the correction lens. Any simple correction approach, such as a graticule or control frame placed in the field of view, will be applicable only at the distance of the graticule or frame. Any significant extrapolation outside of the plane of the control frame will inevitably introduce systematic errors.
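The distance dependence can be illustrated with an ideal pinhole camera, deliberately ignoring refraction and lens distortion (both simplifying assumptions). A scale factor derived from a frame at one distance converts image lengths correctly only in the plane of that frame; the function names and the 5 mm principal distance below are hypothetical.

```python
def measured_length(true_length: float, object_distance: float,
                    frame_distance: float, principal_distance: float = 0.005) -> float:
    """Length recovered when a scale calibrated on a planar frame at frame_distance
    is applied to an object at object_distance (ideal pinhole, no refraction)."""
    if min(object_distance, frame_distance, principal_distance) <= 0:
        raise ValueError("distances must be positive")
    # Perspective projection: the image length shrinks in proportion to object distance.
    image_length = principal_distance * true_length / object_distance
    # Scale (object units per image unit) that is correct only in the frame plane.
    frame_scale = frame_distance / principal_distance
    return image_length * frame_scale


def relative_error(object_distance: float, frame_distance: float) -> float:
    """Relative length error; algebraically frame_distance / object_distance - 1."""
    return measured_length(1.0, object_distance, frame_distance) - 1.0


# A frame at 2.0 m applied to an object at 2.5 m under-estimates lengths by about 20%.
print(f"{relative_error(2.5, 2.0):+.1%}")
```

The error vanishes only when the object lies in the frame plane, which is the extrapolation problem described above; refraction through a plane port would add further, distance-dependent effects not modelled here.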

2.2.2 Target Field Calibration

The alternative approach of a comprehensive calibration translates a reliable technique from in-air into the underwater environment. Close range calibration of cameras is a well-established technique that was pioneered by Brown (1971), extended to include self-calibration of the camera(s) by Kenefick et al. (1972) and subsequently adapted to the underwater environment (Fryer and Fraser 1986; Harvey and Shortis 1996). The mathematical basis of the technique is reviewed in Granshaw (1980).

The essence of this approach is to capture multiple, convergent images of a fixed calibration range or portable calibration fixture to determine the physical parameters of the camera calibration (Fig. 2.1). A typical calibration range or fixture is based on discrete targets to precisely identify measurement locations throughout the camera fields of view from the many photographs (Fig. 2.1). The targets may be circular dots or the corners of a checkerboard. Coded targets or checkerboard corners on the fixture can be automatically recognized using image analysis techniques (Shortis and Seager 2014; Zhang 2000) to substantially improve the efficiency of the measurements and network processing. The ideal geometry and a full set of images for a calibration fixture are shown in Figs. 2.2 and 2.3, respectively.

Typical portable calibration fixture (left, courtesy of NOAA) and test range (right, from Leatherdale and Turner 1983)

Top: a set of calibration images from an underwater stereo-video system using a 3D calibration fixture. Both the cameras and the object have been rotated to acquire the convergent geometry of the network. Bottom: a set of calibration images of a 2D checkerboard for a single camera calibration, for which only the checkerboard has been rotated. (From Bouguet 2017)

A fixed test range, such as the ‘Manhattan’ object shown in Fig. 2.1, has the advantage that accurately known target coordinates can be used in a pre-calibration approach. The disadvantage, however, is that the camera system must be transported to the range and then back to the deployment location. In comparison, accurate information for the positions of the targets on a portable calibration fixture is not required, as coordinates of the targets can be derived as part of a self-calibration approach. Hence, it is immaterial if the portable fixture distorts or is disassembled between calibrations, although the fixture must retain its dimensional integrity during the image capture.

Scale within the 3D measurement space is determined by introducing distances measured between pre-identified targets into the self-calibration network (El-Hakim and Faig 1981). The known distances between the targets must be reliable and accurate, so known lengths are specified between targets on the rigid arms of the fixture or between the corners of the checkerboard.
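In the self-calibration adjustment itself the known distances enter as additional observations. As a simplified illustration only, the sketch below derives a single scale factor from the ratio of known to computed distances in an arbitrarily scaled network, and reports the spread of the individual ratios as a quick consistency check on the known lengths. The target identifiers, coordinates and lengths are hypothetical.

```python
import math
from statistics import mean, stdev


def scale_from_known_lengths(coords, known_lengths):
    """Estimate a network scale factor.

    coords: mapping of target id -> (x, y, z) in arbitrary network units.
    known_lengths: iterable of (id_a, id_b, true_length) in object units.
    Returns (mean scale, standard deviation of the individual scale estimates).
    """
    ratios = []
    for id_a, id_b, true_length in known_lengths:
        computed = math.dist(coords[id_a], coords[id_b])
        if computed == 0:
            raise ValueError(f"targets {id_a} and {id_b} coincide")
        ratios.append(true_length / computed)
    if not ratios:
        raise ValueError("at least one known length is required")
    spread = stdev(ratios) if len(ratios) > 1 else 0.0
    return mean(ratios), spread


def apply_scale(coords, scale):
    """Return a new coordinate mapping multiplied by the scale factor."""
    return {tid: tuple(scale * v for v in xyz) for tid, xyz in coords.items()}


network = {"A": (0.0, 0.0, 0.0), "B": (2.0, 0.0, 0.0),
           "C": (0.0, 4.0, 0.0), "D": (0.0, 4.0, 3.0)}
lengths = [("A", "B", 1.0), ("C", "D", 1.5)]   # e.g. fixture arm lengths in metres
scale, spread = scale_from_known_lengths(network, lengths)
scaled = apply_scale(network, scale)
```

A large spread relative to the expected measurement precision would suggest that one of the known lengths is unreliable, which is why the text stresses reliable and accurate reference distances.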

In practice, cameras are most often pre-calibrated using a self-calibration network and a portable calibration fixture in a venue convenient to the deployment. The refractive index of water is relatively insensitive to temperature, pressure or salinity (Newton 1989), so the conditions prevailing for the pre-calibration can be assumed to be valid for the actual deployment of the system to capture measurements. The assumption is also made that the camera configurations, such as focus and zoom, and the relative orientation for a multi-camera system, are locked down and undisturbed. In practice this means that the camera lens focus and zoom adjustments must be held in place using tape or a lock screw, and the connection between multiple cameras, usually a base bar between stereo cameras, must be rigid. A close proximity between the locations of the calibration and the deployment minimizes the risk of a physical change to the camera system.

The process of self-calibration of underwater cameras is straightforward and quick. The calibration can take place in a swimming pool, in an on-board tank on the vessel or, conditions permitting, adjacent to, or beneath, the vessel. The calibration fixture can be held in place and the cameras manoeuvred around it, or the calibration fixture can be manipulated whilst the cameras are held in position, or a combination of both approaches can be used (Fig. 2.3). For example, a small 2D checkerboard may be manipulated in front of an ROV stereo-camera system held in a tank. A large, towed body system may be suspended in the water next to a wharf and a large 3D calibration fixture manipulated in front of the stereo video cameras. In the case of a diver-controlled stereo-camera system, a 3D calibration fixture may be tethered underneath the vessel and the cameras moved around the fixture to replicate the network geometry shown in Fig. 2.2.

There are very few examples of in situ self-calibrations of camera systems, because this type of approach is not readily adapted to the dynamic and uncontrolled underwater environment. Nevertheless, there are some examples of a single camera or stereo camera in situ self-calibration (Abdo et al. 2006; Green et al. 2002; Schewe et al. 1996). In most cases a pre- or post-calibration is conducted anyway to determine an estimate of the calibration of the camera system as a contingency.

2.3 Calibration Algorithms

2.3.1 Calibration Parameters

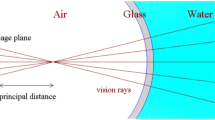

Calibration of a camera system is necessary for two reasons. First, the internal geometric characteristics of the cameras must be determined (Brown 1971). In photogrammetric practice, camera calibration is most often defined by a physical parameter set (Fig. 2.4) comprising principal distance, principal point location, radial (Ziemann and El-Hakim 1983) and decentring (Brown 1966) lens distortions, plus affinity and orthogonality terms to compensate for minor optical effects (Fraser et al. 1995; Shortis 2012). The principal distance is formally defined as the separation, along the camera optical axis, between the lens perspective centre and the image plane. The principal point is the intersection of the camera optical axis with the image plane.

Radial distortion is a by-product of the design criteria for camera lenses to produce very even lighting across the entire field of view and is defined by an odd-ordered polynomial (Ziemann and El-Hakim 1983). Three terms are generally sufficient to model the radial lens distortion of most cameras in-air or in-water. SfM applications such as Agisoft PhotoScan/Metashape (Agisoft 2017) and RealityCapture (Capturing Reality 2017) offer up to five terms in the polynomial; however, these extra terms are redundant except for camera lenses with extreme distortion profiles.

Decentring distortion is described by up to four terms (Brown 1971), but in practice only the first two terms are significant. This distortion is caused by the mis-centring of lens components in a multi-element lens and the degree of mis-centring is closely associated with the quality of the manufacture of the lens. The magnitude of this distortion is much less than radial distortion (Figs. 2.6 and 2.7) and should always be small for simple lenses with few elements when calibrated in-air.
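The radial and decentring terms described above can be written as corrections to image coordinates reduced to the principal point. The sketch below uses one common form of the Brown model, with three radial terms (K1 to K3), two decentring terms (P1, P2) and affinity and orthogonality terms (B1, B2) applied to the x coordinate. Sign conventions and the placement of the affinity terms differ between software packages, so this is an illustration under those assumptions rather than the formulation of any particular program; the principal distance is omitted because it does not enter the distortion terms.

```python
from dataclasses import dataclass


@dataclass
class CameraModel:
    """Physical calibration parameters; image units (e.g. mm) throughout."""
    xp: float = 0.0   # principal point offset, x
    yp: float = 0.0   # principal point offset, y
    k1: float = 0.0   # radial distortion terms
    k2: float = 0.0
    k3: float = 0.0
    p1: float = 0.0   # decentring distortion terms
    p2: float = 0.0
    b1: float = 0.0   # affinity
    b2: float = 0.0   # orthogonality (non-perpendicularity of the image axes)


def radial_profile(model: CameraModel, r: float) -> float:
    """Radial distortion dr = K1 r^3 + K2 r^5 + K3 r^7 (odd-ordered polynomial)."""
    return model.k1 * r**3 + model.k2 * r**5 + model.k3 * r**7


def distortion(model: CameraModel, x: float, y: float) -> tuple:
    """Distortion components (dx, dy) at measured image point (x, y)."""
    xb, yb = x - model.xp, y - model.yp          # reduce to the principal point
    r2 = xb * xb + yb * yb
    radial = model.k1 * r2 + model.k2 * r2**2 + model.k3 * r2**3   # dr / r
    dx = (xb * radial
          + model.p1 * (r2 + 2 * xb * xb) + 2 * model.p2 * xb * yb
          + model.b1 * xb + model.b2 * yb)
    dy = (yb * radial
          + model.p2 * (r2 + 2 * yb * yb) + 2 * model.p1 * xb * yb)
    return dx, dy
```

For a purely radial model the vector (dx, dy) points along the radius and its length equals `radial_profile` at that radius; the decentring terms add the asymmetric component that is typically much smaller.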

Second, the relative orientation of the cameras with respect to one another, or the exterior orientation with respect to an external reference, must be determined. Also known as pose estimation, this step determines both the location and orientation of the camera(s). For the commonly used stereo camera configuration, the relative orientation effectively defines the separation of the perspective centres of the two lenses, the pointing angles (omega and phi rotations) of the two optical axes of the cameras and the roll angles (kappa rotations) of the two focal plane sensors (Fig. 2.5).

2.3.2 Absorption of Refraction Effects

In the underwater environment the effects of refraction must be corrected or modelled to obtain an accurate calibration. The entire light path, including the camera lens, housing port and water medium, must be considered. By far the most common approach is to correct the refraction effects using absorption by the physical camera calibration parameters. Assuming that the camera optical axis is approximately perpendicular to a plane or dome camera port, the primary effect of refraction through the air-port and port-water interfaces will be radially symmetric around the principal point (Li et al. 1996). This primary effect can be absorbed by the radial lens distortion component of the calibration parameters. Figure 2.6 shows a comparison of radial lens distortion from calibrations in-air and in-water for the same camera, demonstrating the compensation effect for the radial distortion profile. There will also be some small, asymmetric effects caused by, for example, alignment errors between the optical axis and the housing port, and perhaps non-uniformities in the thickness or material of the housing. These secondary effects can be absorbed by calibration parameters such as the decentring lens distortion and the affinity term. Figure 2.7 shows a comparison of decentring lens distortion from calibrations in-air and in-water of the same camera. Similar changes in the lens distortion profiles are demonstrated in Fryer and Fraser (1986) and Lavest et al. (2000).
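Why a plane port is largely absorbed by the principal distance and the radial distortion terms can be seen by tracing single rays. The sketch assumes a thin flat port perpendicular to the optical axis (port thickness ignored), an ideal pinhole camera in air and a refractive index of 1.333 for water; these are simplifying assumptions, not a description of any particular housing. Near the axis the refracted image radius behaves like a pinhole whose principal distance is multiplied by the refractive index, and the remaining difference is radially symmetric and grows with the off-axis angle.

```python
import math

N_WATER = 1.333  # assumed refractive index of water; air is taken as 1.0


def image_radius_flat_port(theta_water: float, c: float) -> float:
    """Radial image distance for an object ray arriving at angle theta_water
    (radians from the optical axis) through a thin flat port normal to the axis."""
    s = N_WATER * math.sin(theta_water)      # Snell's law: sin(theta_air) = n * sin(theta_water)
    if abs(s) >= 1.0:
        raise ValueError("ray lies outside the refracted field of view")
    theta_air = math.asin(s)
    return c * math.tan(theta_air)           # pinhole projection inside the housing


def refraction_residual(theta_water: float, c: float) -> float:
    """Difference from a pinhole with effective principal distance N_WATER * c."""
    ideal = N_WATER * c * math.tan(theta_water)
    return image_radius_flat_port(theta_water, c) - ideal


c = 10.0  # hypothetical principal distance in mm
for deg in (1, 10, 20, 30, 40):
    theta = math.radians(deg)
    print(f"{deg:2d} deg  r = {image_radius_flat_port(theta, c):7.3f} mm  "
          f"residual = {refraction_residual(theta, c):7.4f} mm")
```

Because the residual depends only on the radial distance from the axis in this idealized geometry, it has the form of a radial distortion profile; tilts and non-uniformities of a real port would add the small asymmetric effects discussed above.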

Comparison of decentring lens distortion from in-air and in-water calibrations of a GoPro Hero4 camera operated in HD video mode. Note the much smaller range of distortion values (vertical axis) compared to Fig. 2.6

Table 2.1 shows some of the calibration parameters for the in-air and in-water calibrations of two GoPro Hero4 cameras. The ratios of the magnitudes of the parameters indicate whether there is a contribution to the refractive effects. As could be expected for a plane housing port, the principal distance is affected directly, because refraction at the port increases the effective principal distance by approximately the refractive index of water near the optical axis, whilst changes in parameters such as the principal point location and the affinity term may include the combined influences of secondary effects, correlations with other parameters and statistical fluctuation. These results are consistent between the two cameras and with other cameras tested, and Lavest et al. (2000) present similar outcomes from in-air versus in-water calibrations for flat ports. Very small percentage changes to all parameters, including the principal distance, are reported in Bruno et al. (2011) for housings with dome ports. This result is in accord with the expected physical model of the refraction.

The disadvantage of the absorption approach for the refractive effects is that there will always be some systematic errors which are not incorporated into the model. The effect of refraction invalidates the assumption of a single projection centre for the camera (Sedlazeck and Koch 2012), which is the basis for the physical parameter model. The errors are most often manifest as scale changes when measurements are taken outside of the range used for the calibration process. Experience over many years of operation demonstrates that, if the ranges for the calibration and the measurements are commensurate, then the level of systematic error is generally less than the precision with which measurements can be extracted. This masking effect is partly due to the elevated level of noise in the measurements, caused by the attenuation and loss of contrast in the water medium.
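The loss of a single projection centre can be shown with the same idealized geometry: a pinhole camera at a hypothetical distance d behind a thin flat port perpendicular to the axis, with an assumed refractive index of 1.333 for water. Each ray refracted into the water, extended backwards, crosses the optical axis at a point that depends on the ray angle, so there is no common perspective centre for all rays.

```python
import math

N_WATER = 1.333  # assumed refractive index of water; air is taken as 1.0


def virtual_centre_offset(theta_air: float, d: float) -> float:
    """Axial position, relative to the true perspective centre (positive towards
    the port), at which a refracted water ray extended backwards crosses the
    optical axis. Thin flat port normal to the axis at distance d."""
    if theta_air == 0.0:
        return d * (1.0 - N_WATER)               # paraxial limit
    theta_water = math.asin(math.sin(theta_air) / N_WATER)
    # The ray meets the port at radial distance d * tan(theta_air); extending the
    # water ray back to the axis covers an axial distance of that radius / tan(theta_water).
    return d - d * math.tan(theta_air) / math.tan(theta_water)


d = 20.0  # hypothetical distance from perspective centre to port, mm
for deg in (0, 10, 20, 30, 40):
    print(f"{deg:2d} deg  virtual centre at {virtual_centre_offset(math.radians(deg), d):7.3f} mm")
```

The crossing point moves further behind the camera as the angle increases. The physical parameter model can only approximate this behaviour with its principal distance and distortion terms, which is consistent with the observation that the approximation holds best when calibration and measurement ranges are commensurate.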

2.3.3 Geometric Correction of Refraction Effects

The alternative to the simple approach of absorption is the more complex process of geometric correction, effectively an application of ray tracing of the light paths through the refractive interfaces. A two-phase approach is developed in Li et al. (1997) for a stereo camera housing with concave lens covers. An in-air calibration is carried out first, followed by an in-water calibration that introduces 11 lens cover parameters such as the centre of curvature of the concave lens and, if not known from external measurements, refractive indices for the lens covers and water. A more general geometric correction solution is developed for plane port housings in Jordt-Sedlazeck and Koch (2012). Additional unknowns in the solution are the distance between the camera perspective centre and the housing, and the normal of the plane housing port, whilst the port thickness and refractive indices must be known. Using ray tracing, Kotowski (1988) develops a general solution to refractive surfaces that, in theory, can accommodate any shape of camera housing port. The shape of the refractive surface and the refractive indices must be known. Maas (2015) develops a modular solution to the effects of plane, parallel refraction surfaces, such as a plane camera port or the wall of a hydraulic testing facility, which can be readily included in standard photogrammetric tools.
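Geometric correction replaces absorbed distortion with explicit refraction at each interface. As a minimal sketch of the ray-tracing step only (not the full adjustment of any of the cited methods), the code below applies the vector form of Snell's law to a ray leaving the perspective centre and passing through a flat port whose faces are perpendicular to the optical axis. The port geometry, the refractive indices (1.0 for air, 1.5 for glass, 1.333 for water) and all function names are assumptions for illustration.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def refract(d: Vec, normal: Vec, n1: float, n2: float) -> Vec:
    """Vector form of Snell's law. d is the unit incident direction and normal is
    the unit surface normal pointing back into the incident medium."""
    eta = n1 / n2
    cos_i = -dot(normal, d)
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        raise ValueError("total internal reflection")
    return add(scale(d, eta), scale(normal, eta * cos_i - math.sqrt(k)))


def trace_flat_port(direction: Vec, port_distance: float, thickness: float,
                    n_air: float = 1.0, n_glass: float = 1.5, n_water: float = 1.333):
    """Trace a ray from the perspective centre (origin) along +z through a flat port
    whose faces are the planes z = port_distance and z = port_distance + thickness.
    Returns the exit point on the outer face and the unit ray direction in water."""
    d = normalize(direction)
    if d[2] <= 0.0:
        raise ValueError("ray must travel towards the port")
    normal = (0.0, 0.0, -1.0)                      # both faces point back towards the camera
    p = scale(d, port_distance / d[2])             # air: centre -> inner face
    d = refract(d, normal, n_air, n_glass)
    p = add(p, scale(d, thickness / d[2]))         # glass: inner face -> outer face
    d = refract(d, normal, n_glass, n_water)
    return p, d


exit_point, water_dir = trace_flat_port((0.2, 0.1, 1.0), port_distance=20.0, thickness=10.0)
```

Because the two faces are parallel, the final direction in water depends only on the air and water indices, while the glass shifts the ray laterally. A tilted port would replace the fixed normal with an estimated one, which corresponds to the port-normal unknown in the plane-port solutions cited above.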

A variation on the geometric correction is the perspective centre shift or virtual projection centre approach. A specific solution for a planar housing port is developed in Telem and Filin (2010). The parameters include the standard physical parameters, the refractive indices of glass and water, the distance between the perspective centre and the port, the tilt and direction of the optical axis with respect to the normal to the port, and the housing interface thickness. A modified approach neglects the direction of the optical axis and the thickness of thin ports, as these factors can be readily absorbed by the standard physical parameters. Again, a two-phase process is required: first a ‘dry’ calibration in-air and then a ‘wet’ calibration in-water (Telem and Filin 2010). A similar principle is used in Bräuer-Burchardt et al. (2015), also with a two-phase calibration approach.

The advantage of these techniques is that, without the approximations of the absorption model, the correction of the refractive effects is theoretically exact. The disadvantages are the requirement for two-phase calibrations and for additional data such as refractive indices. Further, in some cases the theoretical solution is specific to a housing type, whereas the absorption approach has the distinct advantage that it can be used with any type of underwater housing.

As well as the common approaches described above, some other investigations are worthy of note. The Direct Linear Transformation (DLT) algorithm (Abdel-Aziz and Karara 1971) is used with three different techniques in Kwon and Casebolt (2006). The first is essentially an absorption approach, but used in conjunction with a sectioning of the object space to minimize the remaining errors in the solution. A double plane correction grid is applied in the second approach. In the last technique a formal refraction correction model is included with the requirements that the camera-to-interface distance and the refractive index must be known. A review of refraction correction methods for underwater imaging is given in Sedlazeck and Koch (2012). The perspective camera model, ray-based models and physical models are analysed, including an error analysis based on synthetic data. The analysis demonstrates that perspective camera models incur increasing errors with increasing distance and tilt of the refractive surfaces, and only the physical model of refraction correction permits a complete theoretical compensation.

2.3.4 Relative Orientation

Once the camera calibration is established, single camera systems can be used to acquire measurements when used in conjunction with reference frames (Moore 1976) or sea floor reference marks (Green et al. 2002). For multi-camera systems the relative orientation is required as well as the camera calibration. The relative orientation can be included in the self-calibration solution as a constraint (King 1995) or can be computed as a post-process based on the camera positions and orientations for each set of synchronized exposures (Harvey and Shortis 1996). In either case it is important to detect and eliminate outliers, usually caused by lack of synchronization, which would otherwise unduly influence the calibration solution or the relative orientation computation. Outliers caused by synchronization effects are more common for systems based on camcorders or video cameras in separate housings, which typically use an external device such as a flashing LED light to synchronize the images to within one video frame (Harvey and Shortis 1996).
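The text does not prescribe a particular outlier test. As one possible approach, sketched here under that assumption, a quantity that should be constant for a rigid stereo system, such as the baseline length computed independently for each set of synchronized exposures, can be screened with a robust median/MAD test; a poorly synchronized set may show up as a discrepant value. The threshold of 3 and the sample values are illustrative only.

```python
from statistics import median


def reject_outliers(values, threshold=3.0):
    """Split indices into (kept, rejected) with a median / MAD test.

    A value is rejected when its distance from the median exceeds `threshold`
    robust standard deviations (1.4826 * MAD, which matches the standard
    deviation for normally distributed data).
    """
    if not values:
        return [], []
    med = median(values)
    mad = median(abs(v - med) for v in values)
    sigma = 1.4826 * mad
    kept, rejected = [], []
    for i, v in enumerate(values):
        dev = abs(v - med)
        # If sigma is zero, at least half the values equal the median, so any difference is flagged.
        outlier = dev > 0 if sigma == 0 else dev > threshold * sigma
        (rejected if outlier else kept).append(i)
    return kept, rejected


# Hypothetical baseline lengths (mm), one per synchronized set; the last set is discrepant.
baselines = [500.1, 499.9, 500.0, 500.2, 499.8, 512.0]
kept, rejected = reject_outliers(baselines)
```

The median and MAD are used instead of the mean and standard deviation because a single gross outlier would otherwise inflate the very statistic used to detect it.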

In the case of post-processing, the exterior orientations for the sets of synchronized exposures are initially in the frame of reference of the calibration fixture, so each set must be transformed into a local frame of reference with respect to a specific baseline between the cameras. In the case of stereo cameras, the local frame of reference is adopted as the centre of the baseline between the camera perspective centres, with the axes aligned with the baseline direction and the mean optical axis pointing direction (Fig. 2.5). The final parameters for the precise relative orientation are adopted as the mean values for all sets in the calibration network, after any outliers have been detected and eliminated.
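A minimal sketch of this post-processing step follows, under stated assumptions: each set is given as the two perspective centres and the two optical-axis directions in the fixture frame; camera roll (kappa) is not handled, since it would additionally require a sensor "up" vector; and directions are averaged component-wise, which is adequate only when the sets agree closely, as they should after outlier removal. All function names are hypothetical.

```python
import math
from statistics import mean
from typing import Tuple

Vec = Tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def stereo_local_frame(c_left: Vec, c_right: Vec, axis_left: Vec, axis_right: Vec):
    """Origin at the centre of the baseline, x along the baseline (left to right),
    z along the mean optical axis made orthogonal to x, y completing a right-handed set."""
    origin = scale(add(c_left, c_right), 0.5)
    x = normalize(sub(c_right, c_left))
    z_mean = normalize(add(normalize(axis_left), normalize(axis_right)))
    z = normalize(sub(z_mean, scale(x, dot(z_mean, x))))
    y = cross(z, x)
    return origin, (x, y, z)


def local_set_parameters(c_left: Vec, c_right: Vec, axis_left: Vec, axis_right: Vec):
    """Baseline length and both optical-axis directions expressed in the local frame."""
    _, axes = stereo_local_frame(c_left, c_right, axis_left, axis_right)

    def to_local(v: Vec) -> Vec:
        return tuple(dot(a, normalize(v)) for a in axes)

    return math.dist(c_left, c_right), to_local(axis_left), to_local(axis_right)


def mean_relative_orientation(sets):
    """Mean baseline and mean (renormalized) pointing directions over retained sets."""
    params = [local_set_parameters(*s) for s in sets]
    baseline = mean(p[0] for p in params)
    left = normalize(tuple(mean(p[1][i] for p in params) for i in range(3)))
    right = normalize(tuple(mean(p[2][i] for p in params) for i in range(3)))
    return baseline, left, right


# Two hypothetical sets: the second is the first rotated 90 deg about z and shifted.
sets = [
    ((0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.05, 0.0, 1.0), (-0.05, 0.0, 1.0)),
    ((1.0, 2.0, 3.0), (1.0, 2.5, 3.0), (0.0, 0.05, 1.0), (0.0, -0.05, 1.0)),
]
baseline, left_axis, right_axis = mean_relative_orientation(sets)
```

Although the two sets differ in the fixture frame, they yield identical parameters in the local frame, which is the reason for transforming each set before averaging.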

2.4 Calibration Reliability and Stability

2.4.1 Reliability Factors

The reliability and accuracy of the calibration of underwater camera systems are dependent on a number of factors. Chief amongst these factors are the geometry and redundancy of the calibration network. A high level of redundant information, provided by many target image observations on many exposures, produces high reliability so that outliers in the image observations can be detected and eliminated. An optimum 3D geometry is essential to minimize correlations between the parameters and ensure that the camera calibration is an accurate representation of the physical model (Kenefick et al. 1972). It should be noted, however, that it is not possible to eliminate all correlations between the calibration parameters. Correlations are always present between the three radial distortion terms, and between the principal point location and the two decentring terms.
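Redundancy can be estimated before a calibration by counting observations and unknowns. The sketch below is a simplified count for a free-network self-calibration with no additional constraints (for example, no relative orientation constraint). The assumption of 10 parameters per camera corresponds to the parameter set in Sect. 2.3.1 (principal distance, two principal point coordinates, three radial terms, two decentring terms, affinity and orthogonality); real software may use a different count.

```python
def network_redundancy(n_image_obs: int, n_exposures: int, n_targets: int,
                       n_cameras: int = 1, params_per_camera: int = 10,
                       n_known_distances: int = 0) -> int:
    """Degrees of freedom of a free-network self-calibration (simplified count).

    Each target image observation contributes two equations (x and y). Unknowns are
    six exterior orientation parameters per exposure, three coordinates per target
    and the calibration parameters of each camera. The datum defect is seven
    (position, orientation and scale); when known distances are included they define
    the scale, so the defect falls to six and each distance counts as an observation.
    """
    observations = 2 * n_image_obs + n_known_distances
    unknowns = 6 * n_exposures + 3 * n_targets + params_per_camera * n_cameras
    datum_defect = 6 if n_known_distances > 0 else 7
    return observations - unknowns + datum_defect


# Hypothetical network: 20 exposures of a 50-target fixture, every target seen on
# every exposure, two known distances on the fixture arms.
print(network_redundancy(n_image_obs=20 * 50, n_exposures=20, n_targets=50,
                         n_known_distances=2))
```

With this counting, a single known distance only fixes the scale and adds no redundancy; each further distance adds one degree of freedom.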

The accuracy of the calibration parameters is enhanced if the network of camera and target locations meets the following criteria:

1. The camera and target arrays are 3D in nature. 2D arrays are a source of weak network geometry. 3D arrays minimize correlations between the internal camera calibration parameters and the external camera location and orientation parameters.

2. The many, convergent camera views approach a 90° intersection at the centre of the target array. A narrowly grouped array of camera views will produce shallow intersections, weakening the network and thereby decreasing the confidence with which the calibration parameters are determined.

3. The calibration fixture or range fills the field of view of the camera(s) to ensure that image measurements are captured across the entire format. If the fixture or range is small and centred in the field of view, then the radial and decentring lens distortion profiles will be defined very poorly because measurements are captured only where the distortion signal is small in magnitude.

4. The camera(s) are rolled around the optical axis for different exposures so that 0°, 90°, 180° and 270° orthogonal rotations are spread throughout the calibration network. A variety of camera rolls in the network also minimizes correlations between the internal camera calibration parameters and the external camera location and orientation parameters.
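Criteria 2 and 4 lend themselves to simple checks on a planned or achieved network. The sketch below, with hypothetical function names and an arbitrarily chosen 20° tolerance, computes the intersection angle between two camera stations at the centre of the target array and reports which of the 0°, 90°, 180° and 270° roll orientations are present.

```python
import math

ROLL_QUADRANTS = (0, 90, 180, 270)


def intersection_angle_deg(station_a, station_b, target):
    """Angle (degrees) at `target` between the rays from two camera stations."""
    va = [t - s for t, s in zip(target, station_a)]
    vb = [t - s for t, s in zip(target, station_b)]
    cos_angle = sum(p * q for p, q in zip(va, vb)) / (math.hypot(*va) * math.hypot(*vb))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def roll_quadrants(roll_angles_deg, tolerance=20.0):
    """Which of the 0, 90, 180 and 270 degree roll orientations occur in the network."""
    covered = set()
    for roll in roll_angles_deg:
        for q in ROLL_QUADRANTS:
            diff = abs((roll - q + 180.0) % 360.0 - 180.0)   # wrapped angular difference
            if diff <= tolerance:
                covered.add(q)
    return sorted(covered)


centre = (0.0, 0.0, 0.0)
print(intersection_angle_deg((-2.0, 0.0, -2.0), (2.0, 0.0, -2.0), centre))  # wide, convergent pair
print(intersection_angle_deg((-0.1, 0.0, -2.0), (0.1, 0.0, -2.0), centre))  # narrow pair
rolls = [2.0, 88.0, 359.0, 181.0]
missing = [q for q in ROLL_QUADRANTS if q not in roll_quadrants(rolls)]
```

In this hypothetical network the 270° roll is missing, so additional exposures at that roll would be needed to satisfy criterion 4.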

If these four conditions are met, the self-calibration approach can be used to simultaneously and confidently determine the camera calibration parameters, camera exposure locations and orientations, and updated target coordinates (Kenefick et al. 1972).

In recent years there has been an increasing adoption of a calibration technique using a small 2D checkerboard and a freely available Matlab solution (Bouguet 2017). The main advantages of this approach are the simplicity of the calibration fixture and the rapid measurement and processing of the captured images, made possible by the automatic recognition of the checkerboard pattern (Zhang 2000). A practical guide to the use of this technique is provided in Wehkamp and Fischer (2014).

The small size and 2D nature of the checkerboard, however, limit the reliability and accuracy of measurements made using this technique (Boutros et al. 2015). The technique is equivalent to a fixed test range calibration rather than a self-calibration, because the coordinates of the checkerboard corners are not updated. Any inaccuracy in these coordinates, especially if the checkerboard deviates from a true 2D plane, will introduce systematic errors into the calibration. Nevertheless, the 2D fixture can produce a calibration suitable for measurements at short ranges and with modest accuracy requirements. AUV-mounted and diver-operated stereo camera systems pre-calibrated with this technique have been used to capture fish length measurements (Seiler et al. 2012; Wehkamp and Fischer 2014) and tested for the 3D reconstruction of artefacts (Bruno et al. 2011).

2.4.2 Stability Factors

The stability of the calibration of underwater camera systems has been well documented in published reports (Harvey and Shortis 1998; Shortis et al. 2000). As noted previously, basic camera settings such as focus and zoom must be kept consistent between the calibration and the deployments. This is usually ensured with tape or a locking screw that prevents the settings from being inadvertently altered. For cameras used in air, other factors relate to the handling of the camera, especially when the camera is rolled about the optical axis or a zoom lens is employed, and to the quality of the lens mount. Any distortion of the camera body or movement of the lens or optical elements will change the relationship between the perspective centre and the CMOS or CCD imager at the focal plane, which will disturb the calibration (Shortis and Beyer 1997). Fixed focal length lenses are preferred over zoom lenses to minimize these instabilities.

The most significant sensitivity for the calibration stability of underwater camera systems, however, is the relationship between the camera lens and the housing port. Rigid mounting of the camera in the housing is critical to ensure that the total optical path from the image sensor to the water medium is consistent (Harvey and Shortis 1998). Testing and validation have shown that calibration is only reliable if the camera is mounted in the housing with a rigid connection to the camera port (Shortis et al. 2000). This applies both to a single deployment and to multiple, separate deployments of the camera system. Unlike the case of correction lenses and dome ports, a specific position and alignment of the camera within the housing is not required, but the distance and orientation of the camera lens relative to the housing port must be consistent. The most reliable option is a direct, mechanical linkage between the camera lens and the housing port that can consistently re-create the physical relationship. The consistency of distance and orientation is especially important for portable camcorders, because they must be regularly removed from their housings to retrieve storage media and replenish batteries.

Finally, for multi-camera systems, whether in air or in water, the housings must have a rigid mechanical connection to a base bar to ensure that the separation and relative orientation of the cameras are also consistent. Perturbation of the separation or relative orientation often results in apparent scale errors, which can readily be confused with refractive effects. Figure 2.8 shows some results of repeated calibrations of a GoPro Hero 2 stereo-video system. The variation in the parameters between consecutive calibrations indicates a comparatively stable relative orientation but a less stable camera calibration, in this case caused by non-rigid mounting of the camera in the housing.

Fig. 2.8 Stability of the right camera calibration parameters (top) and the relative orientation parameters (bottom) for a GoPro Hero 2 stereo-video system. The vertical axis is the significance of the change in individual parameters between consecutive calibrations (Harvey and Shortis 1998)
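A change significance of this kind can be sketched as follows. The sketch assumes the common form in which the change in a parameter between two calibrations is divided by the combined standard error of the two estimates, and it treats those estimates as independent. The parameter names (`c` for principal distance, `xp` for principal point offset), their values and the threshold of 3 are all hypothetical.

```python
import math


def change_significance(p1, sigma1, p2, sigma2):
    """Change in a parameter between two calibrations, in units of its combined standard error.

    Assumes the two estimates are independent, with standard deviations sigma1 and sigma2.
    """
    return abs(p2 - p1) / math.sqrt(sigma1 ** 2 + sigma2 ** 2)


def flag_unstable(cal_a, cal_b, threshold=3.0):
    """Names of parameters whose change exceeds the (hypothetical) significance threshold."""
    flagged = []
    for name, (p1, s1) in cal_a.items():
        p2, s2 = cal_b[name]
        if change_significance(p1, s1, p2, s2) > threshold:
            flagged.append(name)
    return flagged


# Hypothetical (estimate, standard deviation) pairs in mm for two consecutive calibrations
cal_1 = {"c": (3.520, 0.002), "xp": (0.031, 0.004)}
cal_2 = {"c": (3.541, 0.002), "xp": (0.033, 0.004)}
print(flag_unstable(cal_1, cal_2))
```

Ignoring correlations between calibrations is a simplification. A full treatment would use the covariance matrices from both network solutions.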

2.5 Calibration and Validation Results

2.5.1 Quality Indicators

The first evaluation of a calibration is generally the internal consistency of the network solution used to compute the calibration parameters, the camera locations and orientations and, if applicable, the updated target coordinates. The ‘internal’ indicator is the Root Mean Square (RMS) error of image measurement, a metric for the internal ‘fit’ of the least squares estimation solution (Granshaw 1980). Note that the measurements are generally based on an intensity-weighted centroid that locates the centre of each circular target in the image (Shortis et al. 1995).
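The intensity-weighted centroid mentioned above can be sketched in a few lines. This minimal version assumes that a small window has already been cut out around one target, that the background grey level is known (the value here is hypothetical), and that pixel centres lie at integer coordinates. Operational implementations also handle thresholding, saturation and target segmentation.

```python
def weighted_centroid(window, background=0.0):
    """Intensity-weighted centroid (x, y) of a target image within a pixel window.

    window is a list of rows of grey values; pixels at or below the
    background level contribute no weight.
    """
    sum_w = sum_x = sum_y = 0.0
    for y, row in enumerate(window):
        for x, value in enumerate(row):
            w = max(value - background, 0.0)
            sum_w += w
            sum_x += w * x
            sum_y += w * y
    if sum_w == 0.0:
        raise ValueError("no signal above background in window")
    return sum_x / sum_w, sum_y / sum_w


# Hypothetical 4 x 4 window around a small bright target on a background of 10
window = [
    [10, 10, 10, 10],
    [10, 60, 110, 10],
    [10, 60, 110, 10],
    [10, 10, 10, 10],
]
print(weighted_centroid(window, background=10))
```

Because every pixel above background contributes in proportion to its intensity, the result falls between pixel centres. This is what allows sub-pixel RMS image errors.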

To allow comparison of cameras with different spacing of the light-sensitive elements in the CMOS or CCD imager, the RMS error is expressed in fractions of a pixel. In ideal conditions in air, the RMS image error is typically in the range of 0.03–0.1 pixels (Shortis et al. 1995). In the underwater environment, the attenuation of light and loss of contrast, along with small non-uniformities in the medium, degrade the RMS error to the range of 0.1–0.3 pixels (Table 2.2). This degradation results from a combination of a larger statistical signature for the image measurements and the influence of small, uncompensated systematic errors. In conditions of poor lighting or poor visibility the RMS error deteriorates rapidly (Wehkamp and Fischer 2014).
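Expressing the RMS error in pixels is a simple unit conversion. The sketch assumes residuals reported in micrometres at the sensor and a known pixel pitch; the values are hypothetical. The RMS here is taken over all x and y residual components. Some bundle adjustment packages instead normalize by the redundancy of the solution rather than by the number of observations.

```python
import math


def rms_image_error_pixels(residuals_um, pixel_pitch_um):
    """RMS of image residuals, converted from micrometres on the sensor to pixels.

    residuals_um is a list of (vx, vy) residuals; the RMS is taken over all
    x and y components, which is one of several conventions in use.
    """
    comps = [c for v in residuals_um for c in v]
    rms_um = math.sqrt(sum(c * c for c in comps) / len(comps))
    return rms_um / pixel_pitch_um


# Hypothetical residuals (micrometres) and a 2.0 micrometre pixel pitch
print(rms_image_error_pixels([(0.3, -0.4), (-0.3, 0.4)], 2.0))
```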

The second indicator that is commonly used to compare calibrations, especially for in-air operations, is the proportional error, expressed as the ratio of the RMS error in the 3D coordinates of the targets to the largest dimension of the object. This ‘external’ indicator provides a standardized, relative measure of precision in the object space. In the case of a camera calibration, the largest dimension is the diagonal span of the test range volume, or of the volume enveloping all imaged locations of the calibration fixture. Whilst the RMS image error may be favourable, the proportional error may be relatively poor if the object is contained within a small volume or the geometry of the calibration network is weak. Table 2.2 presents a sample of results for the precision of calibrations. The proportional error evidently varies substantially; however, an average figure is approximately 1:5000.
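The proportional error can be computed as shown below. The sketch makes two simplifying assumptions: the "diagonal span" is the diagonal of the axis-aligned box enclosing the target or fixture coordinates, and the RMS 3D coordinate error has already been obtained from the network solution. With a hypothetical 1.2 m × 0.9 m × 0.8 m envelope (diagonal 1.7 m) and an RMS error of 0.34 mm, the result is 1:5000, matching the average figure quoted above.

```python
import math


def diagonal_span(points):
    """Diagonal of the axis-aligned box enclosing a set of 3D points."""
    lows = [min(p[i] for p in points) for i in range(3)]
    highs = [max(p[i] for p in points) for i in range(3)]
    return math.sqrt(sum((h - l) ** 2 for h, l in zip(highs, lows)))


def proportional_error(rms_3d, points):
    """Return N, where the proportional error is expressed as 1:N (same units throughout)."""
    return diagonal_span(points) / rms_3d


# Hypothetical envelope of fixture locations (m) and RMS 3D coordinate error (m)
envelope = [(0.0, 0.0, 0.0), (1.2, 0.9, 0.8), (0.6, 0.4, 0.1)]
n = proportional_error(0.00034, envelope)
print(f"1:{n:.0f}")  # 1:5000
```

The same RMS error spread over a smaller envelope gives a proportionally smaller N. This is why a favourable image-space RMS does not guarantee a favourable proportional error.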

2.5.2 Validation Techniques

Because the proportional error can give a misleading impression of accuracy, independent testing of the accuracy of underwater camera systems is essential to ensure the validity of 3D locations and of length, area or volume measurements. For stereo and multi-camera systems, the primary interest is length measurements, which are subsequently used to estimate the size of artefacts or the biomass of fish. One validation technique is to use known distances on the rigid components of the calibration fixture (Harvey et al. 2003); however, this approach has some limitations.

As already noted, the circular, discrete targets are dissimilar to natural feature points such as a fish snout or an anchor tip, and they are measured by different techniques. The distances available on the calibration fixture may also differ in size and orientation from the objects being measured. In particular, measurements of objects of interest are often taken at greater ranges than that of the calibration fixture, partly for expediency in surveys and partly because the calibration fixture must be close enough to the cameras to fill a reasonable portion of the field of view. Given the approximations in the refraction models, it is important that accuracy validations are carried out at ranges greater than the average range to the calibration fixture. Further, it has been demonstrated that the accuracy of length measurements depends on the separation of the cameras in a multi-camera system (Boutros et al. 2015) and is significantly affected by the orientation of the artefact relative to the cameras (Harvey and Shortis 1996; Harvey et al. 2002). Accordingly, validation of underwater video measurement systems is typically carried out by introducing a known length, such as a rod or a fish silhouette, which is measured manually at a variety of ranges and orientations within the field of view (Fig. 2.9).
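The dependence on range and camera separation can be illustrated with the textbook normal-case stereo approximation. There, depth is Z = fB/p for focal length f (in pixels), base B and parallax p, so that σ_Z ≈ Z²σ_p/(fB). This ignores refraction, convergent camera axes and calibration error, so it is only a rough guide when planning where validation lengths should be placed. The focal length, parallax precision and ranges below are hypothetical.

```python
def depth_precision(range_m, baseline_m, focal_px, sigma_parallax_px):
    """Approximate depth standard deviation (m) for a normal-case (parallel-axis) stereo pair.

    From Z = f * B / p it follows that sigma_Z = Z**2 / (f * B) * sigma_p.
    Refraction, convergent axes and calibration errors are all ignored.
    """
    return range_m ** 2 / (focal_px * baseline_m) * sigma_parallax_px


# Hypothetical: 1500 px focal length, 0.3 px parallax precision
for baseline in (0.5, 1.0):
    for z in (2.0, 4.0, 6.0):
        sigma_mm = 1000.0 * depth_precision(z, baseline, 1500.0, 0.3)
        print(f"B = {baseline} m, Z = {z} m: sigma_Z = {sigma_mm:.1f} mm")
```

Doubling the range quadruples the depth uncertainty, while doubling the base halves it. This is consistent with the emphasis above on validating at the most distant ranges.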

2.5.3 Validation Results

In the best-case scenario of clear visibility and high contrast targets, the RMS error of validation measurements is typically less than 1 mm over a length of 1 m, equivalent to a length accuracy of 0.1%. In realistic, operational conditions using fish silhouettes or validated measurements of live fish, length measurements have an accuracy of 0.2–0.7% (Boutros et al. 2015; Harvey et al. 2002, 2003, 2004; Telem and Filin 2010). The accuracy is somewhat degraded if a simple correction grid is used (Petrell et al. 1997) or a simplified calibration approach is adopted (Wehkamp and Fischer 2014). A sample of published results of validations based on known lengths or geometric objects is given in Table 2.3.
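A validation result of the kind quoted above can be summarized as in the sketch below. The measurements of a 1 m rod are hypothetical. Reporting the mean error alongside the RMS error helps separate a systematic bias, such as an apparent scale error, from random scatter. In practice the summary would be computed separately for each range band, so that any degradation with range is visible.

```python
import math


def validation_summary(measured_mm, true_length_mm):
    """Mean error (bias), RMS error, and RMS error as a percentage of the known length."""
    errors = [m - true_length_mm for m in measured_mm]
    mean_error = sum(errors) / len(errors)
    rms_error = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mean_error, rms_error, 100.0 * rms_error / true_length_mm


# Hypothetical repeated measurements of a 1 m rod, in mm
mean_err, rms, pct = validation_summary([999.2, 1000.9, 1000.6, 999.3], 1000.0)
print(f"mean {mean_err:+.2f} mm, RMS {rms:.2f} mm, {pct:.3f}% of length")
```

An RMS error of 1 mm over 1 m corresponds to the 0.1% best-case figure given above.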

McCarthy and Benjamin (2014) present validation results from direct comparisons between a 3D virtual model generated by photogrammetry and taped measurements taken by divers. The artefacts in this case were cannons lying on the sea floor, and the 3D information was derived from a self-calibrating SfM solution. An accurate scale for the mesh was provided by a 1 m length bar placed within the site. The average difference for long measurements was found to be 3% and, for the longest distances, differences were typically less than 1%. Shorter distances tended to exhibit much larger errors; however, these comparisons are adversely affected by the difficulty of choosing exactly corresponding reference points on the virtual model and for the tape measurements.

Two different types of underwater cameras are evaluated in a preliminary study of accuracy for the monitoring of coral reefs (Guo et al. 2016). In-air and underwater calibrations were undertaken, validated by an accurately known target fixture and 3D point cloud models of cinder blocks. The targets on the calibration frame were divided into 12 control points and 33 check points for the calibration networks. Based on the approximate 1 m span of the fixture, the proportional errors underwater range from 1:2500 to 1:7000. Validation based on comparisons of in-air and underwater SfM 3D models of the cinder blocks indicated RMS errors of the order of 1–2 mm, corresponding to an accuracy in the range of 0.1–0.2%.

Validations of biomass estimates of Southern Bluefin Tuna measured in aquaculture pens (Harvey et al. 2003) and of sponges measured in the field (Abdo et al. 2006) have shown that volumes can be estimated with an accuracy of the order of a few percent. The Southern Bluefin Tuna validation was based on distances such as body length and span, measured with a stereo-video system and compared with manual measurements made with a length board and callipers. Each Southern Bluefin Tuna in a sample of 40 fish was also individually weighed. The stereo-video system estimated the total biomass to better than 1% (Harvey et al. 2003). Triangulation meshes on the surface of simulated and live specimens were used to estimate the volume of sponges. The resulting errors were 3–5%, and no worse than 10%, for individual sponges (Abdo et al. 2006). Greater variability is to be expected for the estimates of the sponge volumes because of the uncertainty associated with the assumed shape of the unseen substrate surface beneath each sponge.
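One simple way to turn a triangulated surface into a volume is sketched below. It is not claimed to be the procedure of Abdo et al. (2006). The sketch assumes that the surface is a height field with no overhangs and non-overlapping triangles in plan view. It also assumes a flat base plane at a hypothetical height `base_z`, which stands in for the unseen substrate. The flat-base assumption is exactly the kind of approximation that introduces the extra variability noted above.

```python
def volume_above_plane(vertices, triangles, base_z=0.0):
    """Volume between a triangulated height-field surface and a flat base at base_z.

    Each triangle contributes its area projected onto the XY plane times the
    mean height of its vertices above the base, which is exact for planar facets.
    """
    total = 0.0
    for i, j, k in triangles:
        (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = vertices[i], vertices[j], vertices[k]
        area_xy = abs((x2 - x1) * (y3 - y1) - (x3 - x1) * (y2 - y1)) / 2.0
        mean_height = (z1 + z2 + z3) / 3.0 - base_z
        total += area_xy * mean_height
    return total


# Hypothetical flat-topped 0.2 m x 0.2 m patch, 0.1 m high, split into two triangles
verts = [(0.0, 0.0, 0.1), (0.2, 0.0, 0.1), (0.2, 0.2, 0.1), (0.0, 0.2, 0.1)]
tris = [(0, 1, 2), (0, 2, 3)]
print(volume_above_plane(verts, tris))
print(volume_above_plane(verts, tris, base_z=0.05))
```

Raising the assumed base by 5 cm halves the computed volume in this example. The estimate is directly sensitive to the substrate assumption.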

Because of the nature of the conversion from length to weight, errors can be amplified significantly. Typical regression functions are power functions with an exponent close to 3 (Harvey et al. 2003; Pienaar and Thomson 1969; Santos et al. 2002). Accordingly, inaccuracies in the calibration and the precision of the measurement may combine to produce unacceptable results. Boutros et al. (2015) use a simulation to demonstrate clearly that the predicted error in the biomass of a fish, based on the error in its length, deteriorates rapidly with range from the cameras, especially with a small 2D calibration fixture and a narrow separation between the stereo cameras. Errors in weight in excess of 10% are possible, reinforcing the need for validation testing throughout the expected range of measurements. Validation at the most distant ranges, where errors in biomass can approach 40%, is critical to ensure that an acceptable level of accuracy is maintained.
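The amplification can be made concrete with a weight-length relationship of the form W = aL^b. A relative length error e gives a relative weight error of (1 + e)^b − 1, and the coefficient a cancels. The sketch assumes b = 3, which is typical but species-specific. Under that assumption, a length error of about 3.3% already gives a weight error of about 10%, and a length error of about 12% gives roughly 40%.

```python
def weight_error_pct(length_error_pct, b=3.0):
    """Percentage error in weight implied by a percentage error in length, for W = a * L**b.

    The coefficient a cancels out; b = 3 is a typical (assumed) exponent,
    but real values are species-specific.
    """
    return ((1.0 + length_error_pct / 100.0) ** b - 1.0) * 100.0


for e in (1.0, 3.3, 5.0, 12.0):
    print(f"length error {e:4.1f}% -> weight error {weight_error_pct(e):5.1f}%")
```

For small errors the weight error is close to b times the length error, so a cubic relationship roughly triples any percentage error in length.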

2.6 Conclusions

This chapter has presented a review of different calibration techniques that incorporate the effects of refraction from the camera housing and the water medium. Calibration of underwater camera systems is essential to ensure the accuracy and reliability of measurements of marine fauna, flora or artefacts. Calibration is a key process to ensure that the analysis of biomass, population distribution or dimensions is free of systematic errors.

Irrespective of whether an implicit absorption or an explicit refractive model is used in the calibration of underwater camera systems, it is clear from the sample of validation results that an accuracy of the order of 0.5% of the measured dimensions can be achieved. Less favourable results are likely when approximate methods, such as 2D planar correction grids, are used. The configuration of the underwater camera system has a primary influence on the accuracy achieved. The advantage of photogrammetric systems, however, is that the configuration can be readily adapted to suit the desired or specified accuracy.

Understanding all the complexities of calibration and applying an appropriate technique may be daunting for anyone entering this field for the first time. The first consideration should always be the accuracy requirements or expectations for the underwater measurement or modelling task. There is a clear correlation between the level of accuracy achieved and the complexity of the calibration. If accuracy is not a priority, calibration can be ignored completely, with the understanding that there is a significant risk of systematic errors in any measurements or models. The use of 2D calibration objects is a compromise between accuracy requirements and the complexity of the calibration approach, and it has gained popularity despite the potential for systematic errors in the measurements. At the other end of the scale, for the most stringent accuracy requirements, in-situ self-calibration of a high quality, high stability underwater camera system using a 3D object and an optimal network geometry is critical.

Lack of understanding of the interplay between calibration and systematic errors in the measurements can be exacerbated by ‘black box’ systems that automatically assign calibration parameters. Systems such as Agisoft Photoscan/Metashape (Agisoft 2017) and Pix4Dmapper (Pix4D 2017) incorporate ‘adaptive’ calibration that selects the parameters based on the geometry of the network, without requiring any intervention by the operator. Whilst the motivation for this functionality is clearly to aid the operator, and the operator can intervene if they wish, the risk is that the software may nominate too many parameters in order to minimize errors and achieve the ‘best’ possible result. In most circumstances, the additional and normally redundant terms in the radial and decentring distortion models only exaggerate this effect. The over-parameterization leads to over-fitting by the least squares estimation solution, produces overly optimistic estimates of errors and precisions, and generates systematic distortions in the derived model.
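The tendency described above can be reproduced with a toy radial distortion fit. In the sketch below, simulated distortion data are generated from a single K1 term plus noise; all values are hypothetical. The data are then fitted by ordinary least squares with an increasing number of odd-power terms, K1 r³ + K2 r⁵ and so on. Because the models are nested, the residual RMS can only stay the same or decrease as terms are added. A criterion based on the fit alone will therefore always appear to favour more parameters, even though only K1 is present in the simulated data. This illustrates the general risk only and does not describe how any particular software selects its parameters.

```python
import numpy as np


def fit_radial(r, dr, n_terms):
    """Least squares fit of dr = K1 r^3 + K2 r^5 + ... using n_terms odd-power terms.

    Returns the coefficients and the RMS of the residuals at the fitted points.
    """
    A = np.column_stack([r ** (2 * k + 3) for k in range(n_terms)])
    coeffs, *_ = np.linalg.lstsq(A, dr, rcond=None)
    resid = dr - A @ coeffs
    return coeffs, float(np.sqrt(np.mean(resid ** 2)))


rng = np.random.default_rng(1)
r = np.linspace(0.5, 6.0, 40)                 # radial distance, mm (hypothetical)
true_dr = -2.0e-4 * r ** 3                    # a single-term (K1 only) distortion profile
dr = true_dr + rng.normal(0.0, 1e-3, r.size)  # simulated measurement noise, mm

for n in range(1, 5):
    coeffs, rms = fit_radial(r, dr, n)
    print(n, f"{rms:.6f}", coeffs)
```

The decreasing residual RMS is an artefact of fitting the noise, not evidence that the extra terms are physically meaningful. This is why independent validation remains the decisive test.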

Irrespective of the approach to calibration, however, validation of measurements is the ultimate test of accuracy. The very straightforward task of introducing a known object into the field of view of the camera(s) and measuring lengths at a variety of locations and ranges produces an independent assessment of accuracy. This is a highly recommended, rapid test that can evaluate the actual accuracy against the specified or expected level based on the chosen approach. The system configuration and choice of calibration technique can be modified accordingly for subsequent measurement or modelling tasks until an optimum outcome is achieved.

Essential further reading for anyone entering this field includes a guide to underwater cameras, such as the Underwater Photography Guide (2017), and practical advice on recording heritage underwater, such as Green (2016, Chap. 6) and McCarthy (2014). Wehkamp and Fischer (2014) provide a practical guide to the calibration procedure based on the 2D checkerboard toolbox of Bouguet (2017). For more information on the use of 3D calibration objects, see Fryer and Fraser (1986), Harvey and Shortis (1996), Shortis et al. (2000), and Boutros et al. (2015).

References

Abdel-Aziz YI, Karara HM (1971) Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. In: Proceedings of the symposium on close-range photogrammetry. American Society of Photogrammetry, Falls Church, VA, pp 1–18

Abdo DA, Seager JW, Harvey ES, McDonald JI, Kendrick GA, Shortis MR (2006) Efficiently measuring complex sessile epibenthic organisms using a novel photogrammetric technique. J Exp Mar Biol Ecol 339(1):120–133

Agisoft (2017) Agisoft Photoscan. http://www.agisoft.com/. Accessed 27 Oct 2017

Anderson RC (1982) Photogrammetry: the pros and cons for archaeology. World Archaeol 14(2):200–205

Baldwin RA (1984) An underwater photogrammetric measurement system for structural inspection. Int Arch Photogramm 25(A5):9–18

Balletti C, Beltrame C, Costa E, Guerra F, Vernier P (2015) Underwater photogrammetry and 3d reconstruction of marble cargos shipwreck. Int Arch Photogramm Remote Sens Spat Inf Sci XL-5/W5:7–13. https://doi.org/10.5194/isprsarchives-XL-5-W5-7-2015

Barnes H (1952) Underwater television and marine biology. Nature 169:477–479

Bass GF (1966) Archaeology under water. Thames and Hudson, Bristol

Bass GF, Rosencrantz DM (1977) The ASHERAH—a pioneer in search of the past. In: Geyer RA (ed) Submersibles and their use in oceanography and ocean engineering. Elsevier, Amsterdam, pp 335–350

Bouguet J (2017) Camera calibration toolbox for MATLAB. California Institute of Technology. http://www.vision.caltech.edu/bouguetj/calib_doc/index.html. Accessed 27 Oct 2017

Boutros N, Harvey ES, Shortis MR (2015) Calibration and configuration of underwater stereo-video systems for applications in marine ecology. Limnol Oceanogr Methods 13(5):224–236

Bräuer-Burchardt C, Kühmstedt P, Notni G (2015) Combination of air- and water-calibration for a fringe projection based underwater 3d-scanner. In: Azzopardi G, Petkov N (eds) Computer analysis of images and patterns. 16th international conference, CAIP 2015, Valletta, Malta, 2–4 September 2015 Proceedings. Part I: Image processing, computer vision, pattern recognition, and graphics, vol 9257. Springer, Basel, pp 49–60

Brown DC (1966) Decentring distortion of lenses. Photogramm Eng 32:444–462

Brown DC (1971) Close range camera calibration. Photogramm Eng 37(8):855–866

Bruno F, Bianco G, Muzzupappa M, Barone S, Razionale AV (2011) Experimentation of structured light and stereo vision for underwater 3D reconstruction. ISPRS J Photogramm Remote Sens 66(4):508–518

Bruno F, Gallo A, De Filippo F, Muzzupappa M, Petriaggi BD, Caputo P (2013) 3D documentation and monitoring of the experimental cleaning operations in the underwater archaeological site of Baia (Italy). Proceedings of Digital Heritage International Congress (DigitalHeritage), IEEE, vol 1. Institute of Electrical and Electronics Engineers, Piscataway, NJ, pp 105–112

Capra A (1992) Non-conventional system in underwater photogrammetry. Int Arch Photogramm Remote Sens 29(B5):234–240

Capra A, Dubbini M, Bertacchini E, Castagnetti C, Mancini F (2015) 3D reconstruction of an underwater archaeological site: comparison between low cost cameras. Int Arch Photogramm Remote Sens Spat Inf Sci XL-5/W5:67–72. https://doi.org/10.5194/isprsarchives-XL-5-W5-67-2015

Capturing Reality (2017) Reality capture. https://www.capturingreality.com/. Accessed 27 Oct 2017

Chong AK, Stratford P (2002) Underwater digital stereo-observation technique for red hydrocoral study. Photogramm Eng Remote Sens 68(7):745–751

Done TJ (1981) Photogrammetry in coral ecology: a technique for the study of change in coral communities. In: Gomez ED (ed) 4th international coral reef symposium. Marine Sciences Center, University of the Philippines, Manila, vol 2, pp 315–320

Doucette JS, Harvey ES, Shortis MR (2002) Stereo-video observation of nearshore bedforms on a low energy beach. Mar Geol 189(3–4):289–305

Drap P, Seinturier J, Scaradozzi D, Gambogi P, Long L, Gauch F (2007) Photogrammetry for virtual exploration of underwater archaeological sites. In: Proceedings of the 21st international symposium of CIPA, Athens, 1–6 October 2007, 6 pp

El-Hakim SF, Faig W (1981) A combined adjustment of geodetic and photogrammetric observations. Photogramm Eng Remote Sens 47(1):93–99

Fraser CS, Shortis MR, Ganci G (1995) Multi-sensor system self-calibration. In: El-Hakim SF (ed) Videometrics IV, vol 2598. SPIE, pp 2–18

Fryer JG, Fraser CS (1986) On the calibration of underwater cameras. Photogramm Rec 12(67):73–85

Fulton C, Viduka A, Hutchison A, Hollick J, Woods A, Sewell D, Manning S (2016) Use of photogrammetry for non-disturbance underwater survey—an analysis of in situ stone anchors. Adv Archaeol Pract 4(1):17–30

Granshaw SI (1980) Bundle adjustment methods in engineering photogrammetry. Photogramm Rec 10(56):181–207

Green J (2016) Maritime archaeology: a technical handbook. Routledge, Oxford

Green JN, Baker PE, Richards B, Squire DM (1971) Simple underwater photogrammetric techniques. Archaeometry 13(2):221–232

Green J, Matthews S, Turanli T (2002) Underwater archaeological surveying using PhotoModeler, VirtualMapper: different applications for different problems. Int J Naut Archaeol 31(2):283–292. https://doi.org/10.1006/ijna.2002.1041

Guo T, Capra A, Troyer M, Gruen A, Brooks AJ, Hench JL, Schmitt RJ, Holbrook SJ, Dubbini M (2016) Accuracy assessment of underwater photogrammetric three dimensional modelling for coral reefs. Int Arch Photogramm Remote Sens Spat Inf Sci XLI-B5:821–828. https://doi.org/10.5194/isprs-archives-XLI-B5-821-2016

Hale WB, Cook CE (1962) Underwater microcontouring. Photogramm Eng 28(1):96–98

Harvey ES, Shortis MR (1996) A system for stereo-video measurement of sub-tidal organisms. Mar Technol Soc J 29(4):10–22

Harvey ES, Shortis MR (1998) Calibration stability of an underwater stereo-video system: implications for measurement accuracy and precision. Mar Technol Soc J 32(2):3–17

Harvey ES, Fletcher D, Shortis MR (2001) Improving the statistical power of visual length estimates of reef fish: comparison of divers and stereo-video. Fish Bull 99(1):63–71

Harvey ES, Shortis MR, Stadler M, Cappo M (2002) A comparison of the accuracy of measurements from single and stereo-video systems. Mar Technol Soc J 36(2):38–49

Harvey ES, Cappo M, Shortis MR, Robson S, Buchanan J, Speare P (2003) The accuracy and precision of underwater measurements of length and maximum body depth of Southern Bluefin Tuna (Thunnus maccoyii) with a stereo-video camera system. Fish Res 63:315–326

Harvey ES, Fletcher D, Shortis MR, Kendrick G (2004) A comparison of underwater visual distance estimates made by SCUBA divers and a stereo-video system: implications for underwater visual census of reef fish abundance. Mar Freshw Res 55(6):573–580

Henderson J, Pizarro O, Johnson-Roberson M, Mahon I (2013) Mapping submerged archaeological sites using stereo-vision photogrammetry. Int J Naut Archaeol 42(2):243–256. https://doi.org/10.1111/1095-9270.12016

Hohle J (1971) Reconstruction of an underwater object. Photogramm Eng 37(9):948–954

Ivanoff A, Cherney P (1960) Correcting lenses for underwater use. J Soc Motion Picture Telev Eng 69(4):264–266

Jordt-Sedlazeck A, Koch R (2012) Refractive calibration of underwater cameras. In: Fitzgibbon A, Lazebnik S, Perona P, Sato Y, Schmid C (eds) Computer vision—ECCV 2012: 12th European conference on Computer Vision, Florence, Italy, 7–13 October 2012. Proceedings. Part I: Image processing, computer vision, pattern recognition and graphics, vol 7572. Springer, Berlin, Heidelberg, pp 846–859

Kenefick JF, Gyer MS, Harp BF (1972) Analytical self-calibration. Photogramm Eng Remote Sens 38(11):1117–1126

King BR (1995) Bundle adjustment of constrained stereo pairs—mathematical models. Geomatics Res Australas 63:67–92

Klimley AP, Brown ST (1983) Stereophotography for the field biologist: measurement of lengths and three-dimensional positions of free-swimming sharks. Mar Biol 74:175–185

Kotowski R (1988) Phototriangulation in multi-media photogrammetry. Int Arch Photogramm Remote Sens 27(B5):324–334

Kwon YH, Casebolt JB (2006) Effects of light refraction on the accuracy of camera calibration and reconstruction in underwater motion analysis. Sports Biomech 5(1):95–120

Lavest JM, Rives G, Lapresté JT (2000) Underwater camera calibration. In: Vernon D (ed) Computer vision—ECCV 2000: 6th European conference on Computer Vision Dublin, Ireland, 26 June–2 July 2000, Proceedings. Part I. Lecture notes in computer science, vol 1842. Springer, Berlin, pp 654–668

Leatherdale JD, Turner DJ (1983) Underwater photogrammetry in the North Sea. Photogramm Rec 11(62):151–167

Li R, Tao C, Zou W, Smith RG, Curran TA (1996) An underwater digital photogrammetric system for fishery geomatics. Int Arch Photogramm Remote Sens 31(B5):319–323

Li R, Li H, Zou W, Smith RG, Curran TA (1997) Quantitative photogrammetric analysis of digital underwater video imagery. IEEE J Ocean Eng 22(2):364–375

Maas H-G (2015) A modular geometric model for underwater photogrammetry. Int Arch Photogramm Remote Sens Spat Inf Sci XL-5/W5:139–141. https://doi.org/10.5194/isprsarchives-XL-5-W5-139-2015

Mallet D, Pelletier D (2014) Underwater video techniques for observing coastal marine biodiversity: a review of sixty years of publications (1952–2012). Fish Res 154:44–62

Martínez A (2014) A souvenir of undersea landscapes: underwater photography and the limits of photographic visibility, 1890–1910. História, Ciências, Saúde-Manguinhos 21:1029–1047

McCarthy J (2014) Multi-image photogrammetry as a practical tool for cultural heritage survey and community engagement. J Archaeol Sci 43:175–185. https://doi.org/10.1016/j.jas.2014.01.010

McCarthy J, Benjamin J (2014) Multi-image photogrammetry for underwater archaeological site recording: an accessible, diver-based approach. J Marit Archaeol 9(1):95–114. https://doi.org/10.1007/s11457-014-9127-7

McLaren BW, Langlois TJ, Harvey ES, Shortland-Jones H, Stevens R (2015) A small no-take marine sanctuary provides consistent protection for small-bodied by-catch species, but not for large-bodied, high-risk species. J Exp Mar Biol Ecol 471:153–163

Menna F, Nocerino E, Troisi S, Remondino F (2013) A photogrammetric approach to survey floating and semi-submerged objects. In: Remondino F, Shortis MR (eds) Videometrics, range imaging, and applications XII, vol 8791. SPIE, paper 87910H

Moore EJ (1976) Underwater photogrammetry. Photogramm Rec 8(48):748–763

Murphy HM, Jenkins GP (2010) Observational methods used in marine spatial monitoring of fishes and associated habitats: a review. Mar Freshw Res 61:236–252

Newton I (1989) Underwater photogrammetry. In: Karara HM (ed) Non-topographic photogrammetry. American Society for Photogrammetry and Remote Sensing, Bethesda, pp 147–176

Petrell RJ, Shi X, Ward RK, Naiberg A, Savage CR (1997) Determining fish size and swimming speed in cages and tanks using simple video techniques. Aquac Eng 16(1–2):63–84

Phillips K, Boero Rodriguez V, Harvey E, Ellis D, Seager J, Begg G, Hender J (2009) Assessing the operational feasibility of stereo-video and evaluating monitoring options for the Southern Bluefin Tuna Fishery ranch sector. Fisheries Research and Development Corporation report 2008/44, 46 pp

Pienaar LV, Thomson JA (1969) Allometric weight-length regression model. J Fish Res Board Can 26:123–131

Pix4D (2017) Pix4Dmapper. https://pix4d.com/. Accessed 27 Oct 2017

Pollefeys M, Koch R, Vergauwen M, Van Gool L (2000) Automated reconstruction of 3D scenes from sequences of images. ISPRS J Photogramm Remote Sens 55(4):251–267

Pollio J (1971) Underwater mapping with photography and sonar. Photogramm Eng 37(9):955–968

Pollio J (1972) Remote underwater systems on towed vehicles. Photogramm Eng 38(10):1002–1008

Rende FS, Irving AD, Lagudi A, Bruno F, Scalise S, Cappa P, Montefalcone M, Bacci T, Penna M, Trabucco B, Di Mento R, Cicero AM (2015) Pilot application of 3d underwater imaging techniques for mapping Posidonia oceanica (L.) Delile meadows. Int Arch Photogramm Remote Sens Spat Inf Sci XL-5/W5:177–181. https://doi.org/10.5194/isprsarchives-XL-5-W5-177-2015

Rosen S, Jörgensen T, Hammersland-White D, Holst JC (2013) DeepVision: a stereo camera system provides highly accurate counts and lengths of fish passing inside a trawl. Can J Fish Aquat Sci 70(10):1456–1467

Santana-Garcon J, Newman SJ, Harvey ES (2014) Development and validation of a mid-water baited stereo-video technique for investigating pelagic fish assemblages. J Exp Mar Biol Ecol 452:82–90

Santos MN, Gaspar MB, Vasconcelos P, Monteiro CC (2002) Weight-length relationships for 50 selected fish species of the Algarve coast (southern Portugal). Fish Res 59(1–2):289–295

Schewe H, Moncreiff E, Gruendig L (1996) Improvement of fish farm pen design using computational structural modelling and large-scale underwater photogrammetry. Int Arch Photogramm Remote Sens 31(B5):524–529

Sedlazeck A, Koch R (2012) Perspective and non-perspective camera models in underwater imaging—overview and error analysis. In: Dellaert F, Frahm J-M, Pollefeys M, Leal-Taixé L, Rosenhahn B (eds) Outdoor and large-scale real-world scene analysis: 15th international workshop on theoretical foundations of computer vision, Dagstuhl Castle, Germany, 26 June–1 July 2011, Lecture notes in computer science, vol 7474. Springer, Berlin, pp 212–242

Seiler J, Williams A, Barrett N (2012) Assessing size, abundance and habitat preferences of the Ocean Perch Helicolenus percoides using a AUV-borne stereo camera system. Fish Res 129:64–72

Shieh ACR, Petrell RJ (1998) Measurement of fish size in Atlantic salmon (Salmo salar L.) cages using stereographic video techniques. Aquac Eng 17(1):29–43

Shortis MR (2012) Multi-lens, multi-camera calibration of Sony Alpha NEX 5 digital cameras. In: Proceedings on CD-ROM, GSR_2 Geospatial Science Research Symposium, RMIT University, 10–12 December 2012, paper 30, 11 pp

Shortis MR (2015) Calibration techniques for accurate measurements by underwater camera systems. Sensors 15(12):30810–30826. https://doi.org/10.3390/s151229831

Shortis MR, Beyer HA (1997) Calibration stability of the Kodak DCS420 and 460 cameras. In: El-Hakim SF (ed) Videometrics V, vol 3174. SPIE, pp 94–105

Shortis MR, Seager JW (2014) A practical target recognition system for close range photogrammetry. Photogramm Rec 29(147):337–355

Shortis MR, Clarke TA, Robson S (1995) Practical testing of the precision and accuracy of target image centring algorithms. In: El-Hakim SF (ed) Videometrics IV, vol 2598. SPIE, pp 65–76

Shortis MR, Miller S, Harvey ES, Robson S (2000) An analysis of the calibration stability and measurement accuracy of an underwater stereo-video system used for shellfish surveys. Geomatics Res Australas 73:1–24

Shortis MR, Harvey ES, Abdo DA (2009a) A review of underwater stereo-image measurement for marine biology and ecology applications. In: Gibson RN, Atkinson RJA, Gordon JDM (eds) Oceanography and marine biology: an annual review, vol 47. CRC Press, Boca Raton, pp 257–292

Shortis MR, Seager JW, Williams A, Barker BA, Sherlock M (2009b) Using stereo-video for deep water benthic habitat surveys. Mar Technol Soc J 42(4):28–37

Skarlatos D, Demesticha S, Kiparissi S (2012) An ‘open’ method for 3D modelling and mapping in underwater archaeological sites. Int J Herit Digit Era 1(1):1–24

Telem G, Filin S (2010) Photogrammetric modeling of underwater environments. ISPRS J Photogramm Remote Sens 65(5):433–444

Underwater Photography Guide (2017) Underwater Digital Cameras. http://www.uwphotographyguide.com/digital-underwater-cameras. Accessed 27 Oct 2017

Van Damme T (2015) Computer vision photogrammetry for underwater archaeological site recording in a low-visibility environment. Int Arch Photogramm Remote Sens Spat Inf Sci XL-5/W5:231–238. https://doi.org/10.5194/isprsarchives-XL-5-W5-231-2015

Watson DL, Anderson MJ, Kendrick GA, Nardi K, Harvey ES (2009) Effects of protection from fishing on the lengths of targeted and non-targeted fish species at the Houtman Abrolhos Islands, Western Australia. Mar Ecol Prog Ser 384:241–249

Wehkamp M, Fischer P (2014) A practical guide to the use of consumer-level still cameras for precise stereogrammetric in situ assessments in aquatic environments. Underw Technol 32:111–128. https://doi.org/10.3723/ut.32.111

Williamson JE (1936) Twenty years under the sea. Ralph T. Hale & Company, Boston

Zhang Z (2000) A flexible new technique for camera calibration. IEEE Trans PAMI 22(11):1330–1334

Ziemann H, El-Hakim SF (1983) On the definition of lens distortion reference data with odd-powered polynomials. Can Surveyor 37(3):135–143

Acknowledgements

This is a revised version based on a paper originally published in the online access journal Sensors (Shortis 2015). The author acknowledges the Creative Commons licence and notes that this is an updated version of the original paper.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this chapter

Cite this chapter

Shortis, M. (2019). Camera Calibration Techniques for Accurate Measurement Underwater. In: McCarthy, J., Benjamin, J., Winton, T., van Duivenvoorde, W. (eds) 3D Recording and Interpretation for Maritime Archaeology. Coastal Research Library, vol 31. Springer, Cham. https://doi.org/10.1007/978-3-030-03635-5_2

DOI: https://doi.org/10.1007/978-3-030-03635-5_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03634-8

Online ISBN: 978-3-030-03635-5

eBook Packages: Earth and Environmental Science (R0)