Abstract

In this work, we explore the temporal dynamics of spatial heterogeneity during tumorigenesis from healthy tissue. We describe this process with a spatial stochastic model of mutation accumulation and clonal expansion in a structured tissue. Under a two-step model of tumorigenesis, we first derive estimates of a non-spatial measure of diversity in the premalignant population: Simpson’s Index, the probability that two individuals sampled at random from the population are identical. We next analyze two new measures of spatial population heterogeneity. In particular, we study the typical length scale of genetic heterogeneity during carcinogenesis, and we estimate the extent of a surrounding premalignant clone given a clinical observation of a premalignant point biopsy. This evolutionary framework contributes to a growing literature on the spatial population dynamics of cancer initiation and progression.


References

Baer CF, Miyamoto MM, Denver DR (2007) Mutation rate variation in multicellular eukaryotes: causes and consequences. Nat Rev Genet 8:619–631

Bozic I, Antal T, Ohtsuki H et al (2010) Accumulation of driver and passenger mutations during tumor progression. Proc Natl Acad Sci USA 107(43):18545–18550

Bramson M, Griffeath D (1980) On the Williams–Bjerknes tumor growth model: II. Math Proc Camb Philos Soc 88:339–357

Bramson M, Griffeath D (1981) On the Williams–Bjerknes tumour growth model: I. Ann Probab 9:173–185

Brouwer AF, Eisenberg MC, Meza R (2016) Age effects and temporal trends in HPV-related and HPV-unrelated oral cancer in the United States: a multistage carcinogenesis modeling analysis. PLoS ONE 11(3):e0151098

Curtius K, Wong C-J, Hazelton WD, Kaz AM, Chak A, Willis JE et al (2016) A molecular clock infers heterogeneous tissue age among patients with Barrett’s esophagus. PLoS Comput Biol 12(5):e1004919

de Vries A, Flores ER, Miranda B, Hsieh H-M, van Oostrom CTM, Sage J, Jacks T (2002) Targeted point mutations of p53 lead to dominant-negative inhibition of wild-type p53 function. Proc Natl Acad Sci USA 99(5):2948–2953

Dhawan A, Graham TA, Fletcher AG (2016) A computational modelling approach for deriving biomarkers to predict cancer risk in premalignant disease. Cancer Prev Res 9(4):283–295. Preprint: bioRxiv 020222

Durrett R (1988) Lecture notes on particle systems and percolation. Wadsworth and Brooks/Cole Advanced Books and Software, Pacific Grove

Durrett R, Foo J, Leder K, Mayberry J, Michor F (2011) Intratumor heterogeneity in evolutionary models of tumor progression. Genetics 188:461–477

Durrett R, Foo J, Leder K (2016) Spatial Moran models II. Cancer initiation in spatially structured tissue. J Math Biol 72(5):1369–1400

Durrett R, Moseley S (2015) Spatial Moran models I. Stochastic tunneling in the neutral case. Ann Appl Probab 25(1):104–115

Fewell MP (2006) Area of common overlap of three circles. Technical Report DSTO-TN-0722, Australian Government Defence Science and Technology Organization

Foo J, Leder K, Ryser MD (2014) Multifocality and recurrence risk: a quantitative model of field cancerization. J Theor Biol 355:170–184

Gillies RJ, Gatenby RA (2015) Metabolism and its sequelae in cancer evolution and therapy. Cancer J 21(2):88–96

Iwasa Y, Michor F (2011) Evolutionary dynamics of intratumor heterogeneity. PLoS ONE 6:e17866

Kara MA, Peters FP, ten Kate FJ, van Deventer SJ, Fockens P, Bergman JJ (2005) Endoscopic video autofluorescence imaging may improve the detection of early neoplasia in patients with Barrett’s esophagus. Gastrointest Endosc 61:679–685

Komarova N (2006) Spatial stochastic models for cancer initiation and progression. Bull Math Biol 68:1573–1599

Komarova N (2013) Spatial stochastic models of cancer: fitness, migration, invasion. Math Biosci Eng 10:761–775

Kumar S, Subramanian S (2002) Mutation rates in mammalian genomes. Proc Natl Acad Sci USA 99(2):803–808

Maley CC, Galipeau PC, Finley JC et al (2006) Genetic clonal diversity predicts progression to esophageal adenocarcinoma. Nat Genet 38(4):468–473

McGranahan N, Swanton C (2015) Biological and therapeutic impact of intratumor heterogeneity in cancer evolution. Cancer Cell 27(1):15–26

Nachman MW, Crowell SL (2000) Estimate of the mutation rate per nucleotide in humans. Genetics 156(1):287–304

Nowak MA, Michor F, Iwasa Y (2003) The linear process of somatic evolution. Proc Natl Acad Sci USA 100:14966–14969

Pitman J, Tran NM (2012) Size biased permutations of a finite sequence with independent and identically distributed terms. Bernoulli 21:2484–2512

Rahman N (2014) Realizing the promise of cancer predisposition genes. Nature 505(7483):302–308

Sprouffske K, Pepper JW, Maley CC (2011) Accurate reconstruction of the temporal order of mutations in neoplastic progression. Cancer Prev Res 4(7):1135–1144

Thalhauser C, Lowengrub J, Stupack D, Komarova N (2010) Selection in spatial stochastic models of cancer: migration as a key modulator of fitness. Biol Direct 5:21

Whittaker RH (1972) Evolution and measurement of species diversity. Taxon 21:213–251

Wild CP, Scalbert A, Herceg Z (2013) Measuring the exposome: a powerful basis for evaluating environmental exposures and cancer risk. Environ Mol Mutagen 54(7):480–499

Williams T, Bjerknes R (1972) Stochastic model for abnormal clone spread through epithelial basal layer. Nature 236:19–21

Acknowledgements

JF is supported by NSF grants DMS-1224362 and DMS-134972. KZL is supported by NSF grants CMMI-1362236 and CMMI-1552764. MDR is supported by National Institutes of Health R01-GM096190 and Swiss National Science Foundation P300P2_154583.


Appendices

Appendix 1: Details for Non-spatial Simpson’s Index

1.1 Preliminary Definitions and Results

To characterize the distribution of Simpson’s Index, we need one further definition. Let \(L_1, L_2, \ldots , L_n\) be independent, identically distributed random variables with distribution F, and recall the definition of a size-biased pick from Definition 1 in Sect. 4.2. We then define a size-biased permutation as follows.

Definition 2

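We call \(\left( L_{[1]}, \ldots , L_{[n]}\right) \) a size-biased permutation (s.b.p.) of the sequence \(\left( L_i\right) \) if \(L_{[1]}\) is a size-biased pick of the sequence and, for \( 2\le k\le n\), \(L_{[k]}\) is a size-biased pick from the terms not yet chosen, i.e. given \(L_1,\ldots ,L_n\) and \(L_{[1]},\ldots ,L_{[k-1]}\), \(L_{[k]}=L_i\) with probability \(L_i/\left( L_1+\cdots +L_n-L_{[1]}-\cdots -L_{[k-1]}\right) \) for each index i not among the first \(k-1\) picks.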

The following results will be useful.

Proposition 5.1

(Proposition 2 in Pitman and Tran 2012) For \(1\le k \le n\), let \(\nu _k\) be the density of \(S_k\), the sum of k i.i.d. random variables with distribution F. Then

\(\square \)

Corollary 5.2

(Corollary 3 in Pitman and Tran 2012) Let \(T_{n-k}= X_{[k+1]}+\cdots +X_{[n]}\) denote the sum of the last \(n-k\) terms in an s.b.p. of length n of i.i.d. random variables. Then for \(k=1,\ldots ,n-1\),

\(\square \)

1.2 Conditional Expectation

Recalling that type 1 clones have a linear radial growth rate, we can rewrite Simpson’s index (4) explicitly as

where \(\left\{ t_{i}\right\} _{i=1}^{N_t}\) are the points on [0, t] of a Poisson process with constant intensity \(Nu_1 s\). In particular, we note that conditioned on \(N_t\), the \(t_i\) are i.i.d. and

We now define \(X_i:=\left( 1-t_i/t\right) ^{d}\) and let

which allows us to rewrite (15) as

Note that, conditioned on \(N_t\), the \(X_i\) are i.i.d. with \(X_i\sim Beta\left( \frac{1}{d},1\right) \).

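To see this, note first that, conditioned on \(N_t\), \(t_i/t\) is uniform on [0, 1], so by symmetry \(X_i \sim (t_i/t)^{d}\). It remains to check that for \(X\sim Beta\left( \alpha ,1\right) \) and \(Y\sim U(0,1)\) we have \(X\sim Y^n\) if and only if \(\alpha = 1/n\); this can be verified with characteristic functions, or directly from \(\mathbb {P}(Y^n\le x)=x^{1/n}\), which is the distribution function of \(Beta\left( 1/n,1\right) \) on [0, 1]. Using the above notation and recalling the notion of a size-biased pick in Definition 1, we condition (17) on \(N_t\) to find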

To compute the conditional expectation of Simpson’s Index R(t), we take the expectation of (18) to find

Setting \(k=1\), it now follows from Corollary 5.2 that

Note that the support of \(\nu _1\) is [0, 1], and the support of \(\nu _{n-1}\) is \([0, n-1]\). Now, by definition, \(\nu _1\) is the pdf of \(Beta\left( \frac{1}{d},1\right) \), i.e. \(\nu _1(x)=\frac{1}{d}x^{\frac{1}{d}-1}\) for \(x\in (0,1]\).

On the other hand, \(\nu _{n-1}\) is the density of the sum of \(n-1\) i.i.d. \(Beta(\frac{1}{d},1)\) random variables, i.e.

Finally, from (20) we find

where \(S_n:=B_1+\cdots + B_n\), and \(B_i\) are independent \(Beta(\frac{1}{d},1)\) random variables.
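The distributional fact used above, that \(X_i=(1-t_i/t)^d\) is \(Beta(\frac{1}{d},1)\) when \(t_i\) is uniform on [0, t], is easy to check numerically. The sketch below (standard-library Python; all function names are our own, not from the text) compares the empirical distribution function of simulated \(X_i\) with \(F(x)=x^{1/d}\), the \(Beta(\frac{1}{d},1)\) distribution function, on a grid of points.

```python
import random

def sample_X(d: int, rng: random.Random) -> float:
    """Draw X = (1 - t_i/t)**d, where t_i/t ~ U(0, 1) given N_t."""
    u = rng.random()              # plays the role of t_i / t
    return (1.0 - u) ** d

def beta_cdf(x: float, d: int) -> float:
    """Distribution function of Beta(1/d, 1) on [0, 1]: F(x) = x**(1/d)."""
    return x ** (1.0 / d)

def max_cdf_gap(d: int, n_samples: int = 20_000, seed: int = 1) -> float:
    """Largest gap between the empirical CDF of simulated X and F on a grid."""
    rng = random.Random(seed)
    xs = [sample_X(d, rng) for _ in range(n_samples)]
    grid = [0.05 * k for k in range(1, 20)]
    return max(abs(sum(x <= g for x in xs) / n_samples - beta_cdf(g, d)) for g in grid)

for d in (1, 2, 3):
    print(f"d={d}: max CDF gap = {max_cdf_gap(d):.4f}")
```

Gaps of the order of the Monte Carlo error (here about 0.01 or less) are what one expects if the identification is correct.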

1.3 Upper Bound for Variance

We derive an upper bound for the variance of the conditional Simpson’s Index as follows:

where the second-to-last equality follows from the fact that the sub-σ-algebra \(\sigma (N_t=n)\) is coarser than \(\sigma (X_1, \ldots , X_n)\).

1.4 Proof of Proposition 3.3

Proof

Let \(Y_n\) denote R(t) conditioned on \(N_t=n\), and note that by definition \(Y_n\ge 0\). Thus it suffices to show that \(\mathbb {E}[Y_n]\rightarrow 0\) as \(n\rightarrow \infty \). Note that

and by the strong law of large numbers \(S_1/(S_n/n)\rightarrow S_1/\mathbb {E}[B_1]\) almost surely as \(n\rightarrow \infty \). Thus if we establish that

then by uniform integrability we will have \(\mathbb {E}[(S_1/(S_n/n))^2]\rightarrow \mathbb {E}[B_1^2]/(\mathbb {E}[B_1])^2<\infty \), and thus \(\mathbb {E}[Y_n]\rightarrow 0\).

In order to establish (23), we define \(S_{2,n}=B_2+\cdots +B_n\) and for \(\varepsilon >0\) the event

We then have that

From the Azuma–Hoeffding inequality, there exists a constant \(k>0\) independent of n such that \(\mathbb {P}(A_n^c)\le e^{-kn}\), which establishes (23). \(\square \)

1.5 Monte Carlo Simulations

We evaluate the conditional expectation of Simpson’s Index for fixed time t using Monte Carlo simulations. Based on the representation in (5), we first generate M independent copies of the vector \((S_1, S_n)\) denoted by \(\{(S_1^{(i)},S_n^{(i)})\}_{i=1}^M\), and form the estimator

which satisfies \(\mathbb {E}[\hat{\mu }(n,M)]=\mathbb {E}[R(t)|N_t=n]\) and \(Var[\hat{\mu }(n,M)]=O(1/M)\). If we simulate \(M_1\) copies of \(N_t\), denoted by \(\{N_t^{(i)}\}_{i=1}^{M_1}\) and for each realization \(N_t=n\) we form the estimator \(\hat{\mu }(n,M_2)\), then we have an unbiased estimator of \(\mathbb {E}[R(t)]\) via

since \(N_t^{(j)}\) is independent of \(\hat{\mu }(n,M_2)\). Note that simulating the mesoscopic model M times and averaging R(t) over those simulations is equivalent to using the estimator \(\hat{R}(M,1)\).
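The two estimators can be sketched in a few lines of Python. Because the displayed formulas are not reproduced here, the sketch assumes that, given \(N_t=n\), \(R(t)=\sum _i (X_i/(X_1+\cdots +X_n))^2\), so that by exchangeability \(\mathbb {E}[R(t)|N_t=n]=n\,\mathbb {E}[(S_1/S_n)^2]\) with \(S_1=B_1\); this is consistent with the quantity used in the proof of Proposition 3.3. The names `mu_hat`, `R_hat` and `sample_poisson` are hypothetical; `sample_poisson` is a simple exponential-counting sampler, used because Python’s `random` module has no Poisson generator. How the case \(N_t=0\) is handled is our own choice, flagged in the code.

```python
import random

def sample_B(d: int, rng: random.Random) -> float:
    """One Beta(1/d, 1) variable, drawn as U**d with U ~ U(0, 1)."""
    return rng.random() ** d

def mu_hat(n: int, M: int, d: int, rng: random.Random) -> float:
    """Estimate E[R(t) | N_t = n] as the average of n * (S_1 / S_n)**2 over M copies."""
    total = 0.0
    for _ in range(M):
        b = [sample_B(d, rng) for _ in range(n)]
        s1, sn = b[0], sum(b)              # S_1 = B_1 and S_n = B_1 + ... + B_n
        total += n * (s1 / sn) ** 2
    return total / M

def sample_poisson(mean: float, rng: random.Random) -> int:
    """Poisson(mean) draw: count unit-rate exponential arrivals before time `mean`."""
    count, clock = 0, rng.expovariate(1.0)
    while clock < mean:
        count += 1
        clock += rng.expovariate(1.0)
    return count

def R_hat(M1: int, M2: int, mean_Nt: float, d: int, seed: int = 0) -> float:
    """Nested estimator: average mu_hat(N_t^(j), M2) over M1 independent draws of N_t.

    Hypothetical convention: a draw with N_t = 0 (no clone, R(t) undefined) is
    redrawn, so the result estimates E[R(t) | N_t >= 1].
    """
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(M1):
        n = 0
        while n == 0:
            n = sample_poisson(mean_Nt, rng)
        acc += mu_hat(n, M2, d, rng)
    return acc / M1

# Example: d = 2, E[N_t] = N u_1 s t = 10 (illustrative value only)
print(R_hat(M1=200, M2=200, mean_Nt=10.0, d=2))
```

For n = 1 every copy contributes exactly 1, as it should, since a single clone gives R(t) = 1.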

Appendix 2: \(I_1\) Calculations

Recall from Sect. 4.1 that I(r, t) is approximated by (8). It is therefore necessary to calculate \(\mathbb {P}(D_{ab}\cap E_1)\) and \(\mathbb {P}(D_{ab}\cap E_2)\). Also recall that \(V_x(t_0)\) is the space-time cone centered at x with radius \(c_dt_0\) at time 0 and radius 0 at time \(t_0\). For two points a and b in our spatial domain, we will be interested in the sets

where \(r=\Vert a-b\Vert \). We suppress the dependence on a and b in D and M to emphasize that the volume of these sets depends only on the distance \(\Vert a-b\Vert \). Denote the Lebesgue measure of a set \(A\subset \mathbb {R}^d\times [0,\infty )\) by |A|. In order to calculate I(r, t) it will be necessary to compute \(|D(r,t_0)|\) and \(|M(r,t_0)|\). Note that

In the next two subsections, we compute \(I_1\) in one and two dimensions. For ease of notation we define \(\mu =u_1s\). For a real number a, define \(a^+=\max \{a,0\}\).

2.1 \(I_1\) in 1 Dimension

We will first calculate the volumes \(|V_a(t_0)|, |M(r,t_0)|\), and from (24), \(|D(r,t_0)|\). In one dimension these calculations are simple: \(|V_x(t_0)| = t_0^2c_d\) and \(|M(r,t_0)| = \frac{[(2t_0c_d-r)^+]^2}{4c_d}\), so we have that:

Note that if \(t_0<r/(2c_d)\) then the only way sites a and b are the same at time \(t_0\) is if there are zero mutations in \(V_a(t_0)\cup V_b(t_0)\), i.e.,

Thus assume for the remainder of the subsection that \(t_0>r/(2c_d)\), in which case

Since the mutations arise according to a Poisson process with parameter \(\mu \),

From (8) it remains to compute \(P(D_{ab}|E_1)\) and \(P(D_{ab}|E_2)\). Note that if event \(E_1\) occurs, then \(D_{ab}\) can only occur if the single mutation occurs in the set \(D(r,t_0)\), and therefore

In order to calculate \(\mathbb {P}(D_{ab} | E_2)\), we must split \(V_a(t_0) \cup V_b(t_0)\) into 7 different regions because the probabilities will differ, depending on where the first mutation occurs (as shown in Fig. 6). By conditioning on \(E_2\) we assume that two mutations occur in the space-time region \(V_a(t_0)\cup V_b(t_0)\). Denote the space-time coordinates of the first mutation by \((x_1,t_1)\).
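The one-dimensional volumes and the \(E_1\) term can be collected in a minimal Python sketch (function names are ours). It assumes that \(E_k\) is the event of exactly k mutations in \(V_a(t_0)\cup V_b(t_0)\), that \(D(r,t_0)\) is the part of \(V_a(t_0)\cup V_b(t_0)\) covered by exactly one of the two cones (so \(|D|=2|V_a|-2|M|\)), and that \(\mathbb {P}(D_{ab}|E_1)=|D(r,t_0)|/|V_a(t_0)\cup V_b(t_0)|\), the form stated explicitly in the two-dimensional case.

```python
import math

def vol_V_1d(t0: float, cd: float) -> float:
    """|V_x(t0)|: a triangle with base 2*cd*t0 and height t0."""
    return cd * t0 ** 2

def vol_M_1d(r: float, t0: float, cd: float) -> float:
    """|M(r, t0)| = [(2*cd*t0 - r)^+]^2 / (4*cd), the overlap of the two cones."""
    return max(2 * cd * t0 - r, 0.0) ** 2 / (4 * cd)

def vol_union_1d(r: float, t0: float, cd: float) -> float:
    """|V_a(t0) ∪ V_b(t0)| by inclusion-exclusion."""
    return 2 * vol_V_1d(t0, cd) - vol_M_1d(r, t0, cd)

def vol_D_1d(r: float, t0: float, cd: float) -> float:
    """|D(r, t0)|, assuming D is the part of the union covered by exactly one cone."""
    return 2 * vol_V_1d(t0, cd) - 2 * vol_M_1d(r, t0, cd)

def prob_k_mutations(k: int, r: float, t0: float, cd: float, mu: float) -> float:
    """P(E_k): exactly k mutations in the union, a Poisson count with mean mu * |union|."""
    m = mu * vol_union_1d(r, t0, cd)
    return math.exp(-m) * m ** k / math.factorial(k)

def prob_D_given_E1(r: float, t0: float, cd: float) -> float:
    """P(D_ab | E_1): the single mutation must fall in D(r, t0)."""
    return vol_D_1d(r, t0, cd) / vol_union_1d(r, t0, cd)

# Example: sites a distance r = 0.5 apart, t0 = 1, cd = 1, mu = 0.5
print(prob_k_mutations(0, 0.5, 1.0, 1.0, 0.5), prob_D_given_E1(0.5, 1.0, 1.0))
```

When \(r\ge 2c_dt_0\) the cones are disjoint, so a single mutation always makes the two sites differ; when r = 0 it never does.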

If \((x_1,t_1)\) occurs outside of \(M(r,t_0)\) but between a and b (i.e. in regions \(R_6\) or \(R_7\)), then the cells will definitely be different, regardless of where the second mutation occurs. However, if the first mutation occurs in \(R_i\), \(1\le i \le 5\), then the location of the second mutation will determine whether the sampled cells are different. Thus each region \(R_i\), \(1\le i \le 5\), will have an associated region \(Z_i\) that will be used to calculate \(\mathbb {P}(D_{ab} | E_2)\). If the first mutation occurs at the point \((x_1, t_1) \in R_i\), then the shape and size of \(Z_i(x_1, t_1)\) depends on i and \((x_1, t_1)\).

First, we will consider the regions inside \(M(r,t_0)\), which are \(R_1, R_2,\) and \(R_3\). For \(i=1,2, 3\), \(Z_i(x_1,t_1)\) represents the region in which the occurrence of a second mutation would make the sampled cells different at time \(t_0\), i.e. the two clones will meet between a and b and then each will spread to one of the cells.

If \((x_1,t_1) \in R_1\), then \((x_1,t_1)\) is in \(M(r,t_0)\) and between a and b. In this case, \(Z_1(x_1,t_1)\) consists of two triangles, whose upper vertices occur at positions a and b (see Fig. 7). The base of the triangle on the left is \(2(x_1-a)\), and the base of the triangle on the right is \(2(b-x_1)\), so the total area of \(Z_1(x_1,t_1)\) is \(c_d^{-1}[(x_1-a)^2+(b-x_1)^2]\).

If \((x_1,t_1) \in R_2\), then \((x_1,t_1)\) is in \(M(r,t_0)\) but to the left of a. In this case, \(Z_2(x_1,t_1)\) is a trapezoidal region. This trapezoidal region can be constructed by taking the triangle whose upper vertex is at position b and subtracting the smaller triangle with upper vertex at position a (see Fig. 8). The base of the larger triangle is \(2(b-x_1)\), and the base of the smaller triangle is \(2(a-x_1)\). Hence, the area of \(Z_2(x_1,t_1)\) is \(c_d^{-1}[(b-x_1)^2-(a-x_1)^2]\). \(Z_3(x_1,t_1)\) is constructed analogously to \(Z_2(x_1,t_1)\).

\(Z_4(x_1,t_1)\) and \(Z_5(x_1,t_1)\) have a slightly different meaning. Given that the first mutation occurs in region 4 or 5, respectively, \(Z_4(x_1,t_1)\) and \(Z_5(x_1,t_1)\) each represent the region in which the occurrence of a second mutation would make the sampled cells genetically identical.

If \((x_1,t_1) \in R_4\), then \((x_1,t_1)\) is outside of \(M(r,t_0)\) and to the left of a. In order for a and b to be the same in this case, the second clone must meet the first clone before it reaches a, and the second clone must spread to b before \(t_0\). Hence, \(Z_4(x_1,t_1)\) is a triangle inside \(M(r,t_0)\) (see Fig. 9). In the next paragraph, we will explain how the area of \(Z_4(x_1,t_1)\) is calculated.

The distance between the right vertex of \(Z_4(x_1,t_1)\) and a is equal to the distance between a and \(x_1\), so the position of that vertex is \(a+(a-x_1)=2a-x_1\). Let \(V_b'\) be the portion of \(V_b\) that falls between the t-values \(t_1\) and \(t_0\). Then we can find the position of the left vertex of \(Z_4(x_1,t_1)\) by considering it as the left corner of \(V_b'\). The height of \(V_b'\) is \(t_0-t_1\), so its base is \(2c_d(t_0-t_1)\). Then the left vertex of \(V_b'\), and consequently the left vertex of \(Z_4(x_1,t_1)\) is \(b-c_d(t_0-t_1)\). Hence, the base of \(Z_4(x_1,t_1)\) has length \(2a-x_1-b+c_d(t_0-t_1)\). Therefore, the area of \(Z_4(x_1,t_1)\) is:

Analogously, the area of \(Z_5(x_1,t_1)\) is:

In summary, we have the following areas:

Let \(X_n\) be the position of the nth mutation. Then:

Thus to calculate \(\mathbb {P}(D_{ab})\) it remains to calculate \(\mathbb {P}(X_2\in Z_i|X_1\in R_i)\) and \(\mathbb {P}(X_1\in R_i)\) for \(i\in \{1,\ldots ,5\}\).

Let \(A_i(x,t)\) be the region inside \(V_a\cup V_b\) that is affected by a mutation at \((x,t) \in R_i\). Since type-1 mutations must occur in cells that have not yet mutated, the second type-1 mutation cannot occur inside \(A_i(x,t)\) (Fig. 10).

The area of \(A_i(x,t)\) depends on whether (x,t) is in \(M(r,t_0), V_a\setminus V_b\), or \(V_b\setminus V_a\). The following are the areas \(|A_i(x,t)|\), which will be used to calculate \(\mathbb {P}(X_2\in Z_i|X_1\in R_i)\):

We will explain how \(|A_4(x,t)|\) is calculated and leave out the calculations for \(|A_1(x,t)|\) and \(|A_5(x,t)|\), which can be done similarly.

\(|A_4(x,t)|\) is calculated by taking the area of the truncated triangle \(V_a'\) (the portion of \(V_a\) that lies between times t and \(t_0\)) and then subtracting the area of two smaller triangles that are not in \(A_4\) (see Fig. 11). The bases of these triangles lie along line t, between x and the two lower vertices of \(V_a'\). The height of \(V_a'\) is \(t_0-t\), so its base is \(2c_d(t_0-t)\). Hence the lower left vertex of \(V_a'\) is at position \(a-c_d(t_0-t)\), and the lower right vertex of \(V_a'\) is at position \(a+c_d(t_0-t)\). Therefore the base of the left small triangle is \(x-a+c_d(t_0-t)\), so its area is \(\displaystyle \frac{(x-a+c_d(t_0-t))^2}{4c_d}\). The base of the right small triangle is \(a+c_d(t_0-t)-x\), so its area is \(\displaystyle \frac{(a-x+c_d(t_0-t))^2}{4c_d}\). Since \(|V_a'| = c_d(t_0-t)^2\), we get the area listed above for \(A_4\) and \(A_6\).
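With \(w=c_d(t_0-t)\), the subtraction above simplifies to \(|A_4(x,t)|=(w^2-(x-a)^2)/(2c_d)\); equivalently, \(A_4\) is a rectangle in the light-cone coordinates \(y\pm c_ds\). The sketch below (function names ours) checks this against the subtraction formula and against a hit-or-miss Monte Carlo estimate. It assumes that \(A_4(x,t)\) is the forward cone of (x, t) intersected with \(V_a(t_0)\).

```python
import random

def area_A4_by_subtraction(x, t, a, t0, cd):
    """|A_4(x, t)| as in the text: |V_a'| minus the two small triangles on the line t."""
    w = cd * (t0 - t)                        # half-width of V_a' at time t
    left = (x - a + w) ** 2 / (4 * cd)       # triangle between a - w and x
    right = (a - x + w) ** 2 / (4 * cd)      # triangle between x and a + w
    return cd * (t0 - t) ** 2 - left - right

def area_A4_closed_form(x, t, a, t0, cd):
    """Simplified form of the same expression: (w**2 - (x - a)**2) / (2 cd)."""
    w = cd * (t0 - t)
    return (w ** 2 - (x - a) ** 2) / (2 * cd)

def area_A4_monte_carlo(x, t, a, t0, cd, n=100_000, seed=0):
    """Hit-or-miss area of (forward cone of (x, t)) ∩ V_a(t0), for comparison."""
    rng = random.Random(seed)
    w = cd * (t0 - t)
    box = 2 * w * (t0 - t)                   # bounding box [a - w, a + w] x [t, t0]
    hits = 0
    for _ in range(n):
        y, s = rng.uniform(a - w, a + w), rng.uniform(t, t0)
        in_forward_cone = abs(y - x) <= cd * (s - t)
        in_V_a = abs(y - a) <= cd * (t0 - s)
        hits += in_forward_cone and in_V_a
    return box * hits / n

args = (0.2, 0.1, 0.0, 1.0, 1.0)   # x, t, a, t0, cd with (x, t) inside V_a(t0)
print(area_A4_by_subtraction(*args), area_A4_closed_form(*args), area_A4_monte_carlo(*args))
```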

If \(X_1=(x,t)\in R_i\), then \(\mathbb {P}(X_2\in Z_i)=\displaystyle \frac{|Z_i(x,t)|}{|V_a\cup V_b \setminus A_i(x,t)|}\). We can integrate this quantity over the places where the first mutation could have occurred, which is all of \(R_i\), and then divide by \(|R_i|\) to get:

Now it remains to calculate \(\mathbb {P}(X_1 \in R_i)\). Since mutations arrive according to a Poisson process, we have \(\mathbb {P}(X_1 \in R_i) = \mu |R_i|e^{-\mu |R_i|}\), and it suffices to know the following areas:

The expression for \(|R_2|\) is obtained by viewing \(R_2\), the part of \(M(r,t_0)\) to the left of a, as a right triangle inside \(V_b\) bounded on the right by the vertical line through a. The height of \(V_b\) is \(t_0\), so its lower left vertex is at position \(b-c_dt_0\). Then the base of \(R_2\) is \(a-b+c_dt_0\) and its height is \((a-b+c_dt_0)/c_d\), which means its area is \(\displaystyle \frac{(a-b+c_dt_0)^2}{2c_d}\).

Then we can use \(|R_2|\) to calculate \(|R_1|\), \(|R_4|\), and \(|R_6|\):

\(|R_1| = |M(r,t_0)| - 2|R_2|\) and \(|R_4| = \frac{1}{2}|V_a| - |R_2|\). Finally, the height of \(R_6\) is \(t_0\) minus the height of \(R_2\), i.e. \(r/c_d\), so \(|R_6|\) simplifies to \(\displaystyle \frac{r^2}{4c_d}\).
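A useful check on this bookkeeping is that the seven regions must tile \(V_a(t_0)\cup V_b(t_0)\), whose area is \(2|V_a(t_0)|-|M(r,t_0)|\). The sketch below (function names ours) performs this check for \(0<r<c_dt_0\), where \(V_b(t_0)\) reaches past a so that \(R_2\) is nonempty. It assumes \(R_2=M(r,t_0)\cap \{y<a\}\) is the right triangle with base \(c_dt_0-r\) and height \((c_dt_0-r)/c_d\), i.e. \(|R_2|=(c_dt_0-r)^2/(2c_d)\); with this choice, which is also the one consistent with \(|R_6|=r^2/(4c_d)\), the tiling balances exactly.

```python
def region_areas_1d(r: float, t0: float, cd: float) -> dict:
    """Areas of R_1, ..., R_7 in one dimension for 0 < r < cd * t0.

    ASSUMPTION: R_2 = M(r, t0) ∩ {y < a} is a right triangle with base cd*t0 - r and
    height (cd*t0 - r)/cd, so |R_2| = (cd*t0 - r)**2 / (2*cd).
    """
    if not 0 < r < cd * t0:
        raise ValueError("this sketch assumes 0 < r < cd * t0")
    V = cd * t0 ** 2                          # |V_a(t0)| = |V_b(t0)|
    M = (2 * cd * t0 - r) ** 2 / (4 * cd)     # |M(r, t0)|
    R2 = (cd * t0 - r) ** 2 / (2 * cd)
    R1 = M - 2 * R2                           # M minus R_2 and its mirror image R_3
    R4 = V / 2 - R2                           # left half of V_a minus R_2
    R6 = r ** 2 / (4 * cd)
    # R_3, R_5, R_7 mirror R_2, R_4, R_6 through the midpoint of a and b
    return {"R1": R1, "R2": R2, "R3": R2, "R4": R4, "R5": R4, "R6": R6, "R7": R6}

def tiling_gap(r: float, t0: float, cd: float) -> float:
    """Sum of the seven areas minus |V_a ∪ V_b| = 2|V_a| - |M|; zero if they tile the union."""
    V = cd * t0 ** 2
    M = (2 * cd * t0 - r) ** 2 / (4 * cd)
    return sum(region_areas_1d(r, t0, cd).values()) - (2 * V - M)

for r in (0.1, 0.4, 0.9):
    print(r, tiling_gap(r, 1.0, 1.0))
```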

All of the equations above can be used to calculate \(\mathbb {P}(D_{ab})\):

2.2 \(I_1\) in 2 Dimensions

Similarly to the one-dimensional case, we will first calculate \(|V_a(t_0)|\) and \(|M(r,t_0)|\). In the two-dimensional setting, this is slightly more difficult. First we know that \(|V_a(t_0)|=\pi t_0^3c_d^2/3\), so it remains to find \(|M(r,t_0)|\).

Observe that if \(r>2c_dt_0\) then \(M(r,t_0)=\emptyset \), so we only need to calculate \(|M(r,t_0)|\) in the case \(r<2c_dt_0\). Consider the overlap of the two space-time cones at a fixed time \(s\in \left[ 0,t_0-r/(2c_d)\right] \). Looking at Fig. 12, half the area of this overlap is the difference between the area of the circular sector with radius \(c_d(t_0-s)\) and angle \(\theta \) and twice the area of the triangle with side lengths \(x,r/2, c_d(t_0-s)\). The area of the circular sector is given by

and twice the area of the triangle is given by

Thus the area of overlap between the two cones at time s is given by

The space-time volume of \(M(r,t_0)\) is therefore given by

Applying integration by parts to the first integral we see that

Thus we have that

which we can combine with (24) to see that for \(r<2c_dt_0\)

With these calculations we see that

We can now explicitly calculate \(\mathbb {P}(D_{ab} | E_1) = \frac{|D(r,t_0)|}{|V_a(t_0) \cup V_b(t_0)|}.\) The remainder of this section will deal with the calculation of \(\mathbb {P}(D_{ab}|E_2)\).
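The cross-sectional construction of \(|M(r,t_0)|\) can be checked numerically. The sketch below (function names ours) computes the overlap of two discs of radius \(\rho =c_d(t_0-s)\) at distance r as twice the difference between the sector of angle \(\theta =2\arccos (r/(2\rho ))\) and twice the triangle with sides \(x=\sqrt{\rho ^2-r^2/4}\), r/2 and \(\rho \), using the standard circle-overlap geometry. It then integrates over s with a midpoint rule in place of the closed form above. For r = 0 the result must equal \(|V_a(t_0)|=\pi c_d^2t_0^3/3\).

```python
import math

def lens_equal_radii(rho: float, r: float) -> float:
    """Overlap of two discs of radius rho with centres r apart, as sector minus triangles."""
    if r >= 2 * rho:
        return 0.0
    theta = 2 * math.acos(r / (2 * rho))          # angle of the circular sector
    sector = 0.5 * theta * rho ** 2
    x = math.sqrt(rho ** 2 - (r / 2) ** 2)         # half-length of the common chord
    twice_triangle = (r / 2) * x                   # twice the triangle with sides x, r/2, rho
    return 2 * (sector - twice_triangle)           # half of the overlap, doubled

def vol_M_2d(r: float, t0: float, cd: float, n_steps: int = 2000) -> float:
    """|M(r, t0)| by integrating the overlap at time s with the midpoint rule."""
    s_max = t0 - r / (2 * cd)                     # cross sections stop overlapping here
    if s_max <= 0:
        return 0.0
    h = s_max / n_steps
    return h * sum(lens_equal_radii(cd * (t0 - (k + 0.5) * h), r) for k in range(n_steps))

print(vol_M_2d(0.0, 1.0, 1.0), math.pi / 3)      # r = 0: M(r, t0) = V_a(t0)
print(vol_M_2d(0.5, 1.0, 1.0))
```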

The approach here is slightly different from the one-dimensional case because it is easier to work with the two-dimensional cross sections of \(V_a(t_0) \cup V_b(t_0)\) than with the entire three-dimensional space-time cones. We therefore split the cross sections into just two regions and, when calculating the volumes needed for \(\mathbb {P}(D_{ab}|E_2)\), split these regions into multiple cases. Overall the procedure is similar to the one-dimensional case: the setup is simpler, but the volume calculations are more involved.

If two mutations occur in \(V_a(t_0) \cup V_b(t_0)\), then the probabilities differ depending on whether the first mutation occurs in \(M(r,t_0)\) or in \(D(r,t_0)\). We assume that when two mutant clones (circles) collide, we can draw a line through the point of contact, perpendicular to the line segment connecting the two mutations (as shown in Fig. 13). Neither clone extends beyond that line, but both continue to expand in all other directions.

If the first event occurs in \(M(r,t_0)\) at position \((x_1, y_1)\) at time \(t_1\), then let \(r_a\) be the distance between \((x_1, y_1)\) and a, and let \(r_b\) be the distance between \((x_1, y_1)\) and b. Then let \(C_a(t_1)\) be the cone centered at a that extends to the edge of the expanding clone, so \(C_a(t_1)\) will have radius \(r_a\) at time \(t_1\) and radius 0 at time \((t_1 + \frac{r_a}{c_d})\). Similarly, \(C_b(t_1)\) will be the cone centered at b with radius \(r_b\) at time \(t_1\) and radius 0 at time \((t_1 + \frac{r_b}{c_d})\). Cross sections of these cones are shown in Fig. 14.

If the second mutation occurs outside of \(C_a(t_1) \cup C_b(t_1)\), then the first clone will reach both a and b before interacting with the second clone. If the second mutation occurs in \(C_a(t_1) \setminus C_b(t_1)\), then the line dividing the two clones will separate a from b, so the second clone will affect a, and the first will affect b, making the two cells different. Similarly, if the second mutation occurs in \(C_b(t_1) \setminus C_a(t_1)\), then the first clone will affect a, and the second will affect b.

However, if the second mutation occurs in \(C_b(t_1) \cap C_a(t_1)\), then both a and b will be on the same side of the line dividing the mutation circles, so the second clone will affect both a and b. Therefore, the two cells will only be different if the second mutation occurs in \(C_b(t_1) \triangle C_a(t_1)\).

If the first mutation occurs in \(D(r,t_0)\), then its position \((x_1, y_1)\) is closer to either a or b. Without loss of generality, say that \((x_1, y_1)\) is closer to a. Again let \(r_a\) be the distance between \((x_1, y_1)\) and a, and let \(C_a(t_1)\) be the cone centered at a with radius \(r_a\) at time \(t_1\) and radius 0 at time \((t_1 + \frac{r_a}{c_d})\). A cross section of this cone is shown in Fig. 15.

If the second mutation occurs outside of \(C_a(t_1)\), then the first mutation will reach a before interacting with the second mutation. Since the first mutation is outside \(V_b(t_0)\), it cannot reach b by time \(t_0\), so the sampled cells will be different.

If the second mutation occurs inside \(C_a(t_1)\) but outside \(M(r,t_0)\), then the two clones will interact before the first clone reaches a, meaning that the second clone will affect a. However, the second clone will not spread to b, since it does not start in \(V_b(t_0)\). Hence, the cells located at a and b will only be the same if the second mutation occurs in \(C_a(t_1) \cap M(r,t_0)\).

In summary, we have:

Since the mutations arise according to a Poisson process, we can use the volume calculations for \(M(r,t_0)\) and \(V_a(t_0) \setminus V_b(t_0)\) to calculate the following probabilities:

Similarly to the 1-D case:

where A(x, y, t) is the cone-shaped region inside \(V_a(t_0) \cup V_b(t_0)\) that is affected by a mutation at (x, y, t).

In addition:

We next develop formulas to compute the volumes in the previous two displays. Note that

and that \(|C_a(t_1)| = \displaystyle \frac{\pi }{3}r_a^2\cdot \frac{r_a}{c_d}=\frac{\pi r_a^3}{3c_d}\), since \(C_a(t_1)\) is a cone with base radius \(r_a\) (at time \(t_1\)) and height \(r_a/c_d\). Similarly, \(|C_b(t_1)| = \displaystyle \frac{\pi r_b^3}{3c_d}\).

We next compute \(|C_a(t_1)\cap C_b(t_1)|\). A cross section of \(C_a(t_1)\) has radius \(r_a - (s-t_1)c_d\) at time s, and a cross section of \(C_b(t_1)\) has radius \(r_b - (s-t_1)c_d\) at time s. \(C_a(t_1)\) and \(C_b(t_1)\) have a nonempty intersection until \(r_a - (s-t_1)c_d + r_b - (s-t_1)c_d = r\), i.e. until \(s=\displaystyle \frac{r_a+r_b-r}{2c_d}+t_1\).

If we denote the area of intersection of the cross sections of \(C_a(t_1)\) and \(C_b(t_1)\) at time s by I(s), then

I(s) is calculated by summing the areas of the two circular segments, each of which can be calculated by subtracting the area of a triangle from the area of a wedge of the circle,

where \(R_a(s) = r_a - (s-t_1)c_d\), \(R_b(s) = r_b - (s-t_1)c_d\), \(d_a(s) = \displaystyle \frac{r^2-R_b^2(s)+R_a^2(s)}{2r}\), and \(d_b(s) = \displaystyle \frac{r^2+R_b^2(s)-R_a^2(s)}{2r}\).
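The intersection area I(s) and the volume \(|C_a(t_1)\cap C_b(t_1)|\) can be sketched as follows (function names ours). The lens area sums the two circular segments, each a wedge minus a triangle, with the chord distances \(d_a(s)\) and \(d_b(s)\) defined above. Containment and disjointness are handled explicitly, and the time integral is replaced by a midpoint rule. The same `lens_area` function could serve for \(|C_a(t_1)\cap M(r,t_0)|\) with \(R_b\) replaced by \(\hat{R_b}(s)=c_d(t_0-s)\).

```python
import math

def lens_area(Ra: float, Rb: float, r: float) -> float:
    """Intersection area of discs with radii Ra, Rb and centres r > 0 apart."""
    if Ra <= 0 or Rb <= 0 or r >= Ra + Rb:
        return 0.0                                 # disjoint or degenerate discs
    if r <= abs(Ra - Rb):
        return math.pi * min(Ra, Rb) ** 2          # smaller disc lies inside the larger
    da = (r ** 2 - Rb ** 2 + Ra ** 2) / (2 * r)    # d_a(s): centre a to the common chord
    db = (r ** 2 + Rb ** 2 - Ra ** 2) / (2 * r)    # d_b(s): centre b to the common chord
    ca = max(-1.0, min(1.0, da / Ra))              # clamp against rounding
    cb = max(-1.0, min(1.0, db / Rb))
    # each circular segment = wedge (sector) minus triangle
    seg_a = Ra ** 2 * math.acos(ca) - da * math.sqrt(max(Ra ** 2 - da ** 2, 0.0))
    seg_b = Rb ** 2 * math.acos(cb) - db * math.sqrt(max(Rb ** 2 - db ** 2, 0.0))
    return seg_a + seg_b

def vol_Ca_cap_Cb(ra, rb, r, t1, cd, n_steps=2000):
    """|C_a(t1) ∩ C_b(t1)| = integral of I(s) from t1 until the cross sections separate."""
    s_end = t1 + (ra + rb - r) / (2 * cd)
    if s_end <= t1:
        return 0.0
    h = (s_end - t1) / n_steps
    total = 0.0
    for k in range(n_steps):
        s = t1 + (k + 0.5) * h                     # midpoint rule in s
        total += lens_area(ra - (s - t1) * cd, rb - (s - t1) * cd, r)
    return h * total

print(lens_area(1.0, 1.0, 1.0), 2 * math.pi / 3 - math.sqrt(3) / 2)
print(vol_Ca_cap_Cb(0.8, 0.6, 1.0, 0.2, 1.0))
```

As r tends to 0 with \(r_a=r_b\), the two cones coincide and the volume approaches \(\pi r_a^3/(3c_d)\).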

In order to compute the quantity \(|C_a(t_1)\cap M(r,t_0)|\) used in equation (27), we first need to determine when the cross sections of \(C_a(t_1)\) and \(V_b(t_0)\) have nonempty intersection. This occurs when \(r_a- (s-t_1)c_d + c_d(t_0-s) > r\), i.e. when \(s< \displaystyle \frac{1}{2}\left( \frac{r_a-r}{c_d}+t_0+t_1\right) \). Hence:

where

\(R_a\) is defined above, \(\hat{R_b}(s) = c_d(t_0-s)\), \(\hat{d_a}(s) = \displaystyle \frac{r^2-\hat{R_b}(s)^2+R_a^2(s)}{2r}\), and \(\hat{d_b}(s) = \displaystyle \frac{r^2+\hat{R_b}^2(s)-R_a^2(s)}{2r}\).

We finally compute \(|(V_a(t_0)\cup V_b(t_0))\setminus A(x,y,t)|\). To this end, we define \(U_1\) as the region that is affected by the mutation at \((x_1, y_1,t_1)\), i.e.,

Let \(u_1(s)\) be the cross section of \(U_1\) at time s, i.e.,

Observe that \(A(x_1,y_1,t_1)\) is the region inside \(V_a(t_0) \cup V_b(t_0)\) that is affected by a mutation at \((x_1,y_1,t_1)\), so \(A(x_1,y_1,t_1)=U_1 \cap (V_a(t_0) \cup V_b(t_0))\). This implies that

Then it remains to find \(|A(x_1,y_1,t_1)|\). This will be accomplished by looking at the cross sections of this set for each fixed time s. Define \(v_a(s)\) and \(v_b(s)\) as the cross sections of \(V_a\) and \(V_b\), respectively, at time s, i.e.,

If \((x_1,y_1, t_1) \in V_a(t_0) \setminus V_b(t_0)\), then \(U_1\) will not intersect \(V_b(t_0)\), so in this case \(A(x_1,y_1,t_1) = U_1 \cap V_a(t_0)\). To compute the volume of this set, we look at the area of its cross section at each fixed time. We can divide the interval \([t_1,t_0]\) into three distinct intervals, which determine the shape of this cross section. In the first time interval, the cross section of \(U_1\) is contained in the cross section of \(V_a\), so we determine the time interval as shown below,

In the final interval, the cross section of \(V_a\) is contained in the cross section of \(U_1\), so we have

When \(\displaystyle \frac{t_1+t_0}{2} - \frac{r_a}{2c_d}< s < \displaystyle \frac{t_1+t_0}{2} + \frac{r_a}{2c_d}\), we have

where \(R_u(s) = c_d(s-t_1)\), \(\hat{R_a}(s) = c_d(t_0-s)\), \(d_u(s) = \displaystyle \frac{r_a^2-\hat{R}_a^2(s)+R_u^2(s)}{2r_a}\), and \(\hat{d}_a(s) = \displaystyle \frac{r_a^2+\hat{R}_a^2(s)-R_u^2(s)}{2r_a}\).

Thus for \((x_1,y_1, t_1) \in V_a(t_0) \setminus V_b(t_0)\),

\(|A(x_1,y_1,t_1)|\) is computed analogously when \((x_1,y_1, t_1) \in V_b(t_0) \setminus V_a(t_0)\).

It remains to compute \(|A(x_1,y_1,t_1)|\) when \((x_1,y_1, t_1) \in V_b(t_0) \cap V_a(t_0)\). First note that if \((x_1,y_1, t_1) \in V_b(t_0) \cap V_a(t_0)\), then

Once the cross sections \(v_a(s)\) and \(v_b(s)\) are no longer intersecting, i.e., when \(s>t_0-\frac{r}{2c_d}\), then

The two quantities \(|u_1(s)\cap v_a(s)|\) and \(|u_1(s)\cap v_b(s)|\) can be calculated as shown above.

Then for \(\displaystyle \frac{t_1+t_0}{2} - \frac{\min \{r_a,r_b\}}{2c_d}< s < t_0-\frac{r}{2c_d}\), we have

The quantities \(|u_1(s) \cap v_a(s)|\) and \(|u_1(s) \cap v_b(s)|\) can be calculated as shown above, and \(|u_1(s) \cap v_a(s) \cap v_b(s)|\) can be calculated as shown in Fewell (2006).

Thus if \((x_1,y_1,t_1)\in V_a(t_0)\cap V_b(t_0)\), then

With (30) and (31) we can compute \(|A(x_1,y_1,t_1)|\) for arbitrary \((x_1,y_1,t_1)\). We can then use \(|A(x_1,y_1,t_1)|\) with (29) and (28) to compute (26) and (27). Finally we use (26) and (27) to compute \(P(D_{ab}|E_2)\) based on (25).

Appendix 3: \(I_2\) Calculations

In this section we describe how to compute \(I_2(r,t)\) and \(I_2(r,\tau )\). First recall from Sect. 4.2 that R is the radius of the clone, Y is chosen according to a size-biased pick, and X is the distance of p (a point selected at random from Y) from the center of Y.

We first describe how to estimate \(I_2(r,t)\) based on (11). In particular, we generate K i.i.d. copies of the vector (X, R), denoted by \(\{(X_i,R_i)\}_{i=1}^K\). Our method for generating \((X_1,R_1)\) based on the time interval [0, t] is as follows. First, generate the arrival times of mutations from a Poisson process with rate \(Nu_1s\), and denote these times by \(t_1,\ldots , t_n\). Then, for each mutation, calculate the size of its family at time t using formula (7); this gives the collection of family sizes \(Y_{1,1},\ldots , Y_{1,n}\) of clones \(C_{1,1},\ldots , C_{1,n}\). Choose a clone \(C=C_{[1]}\) via a size-biased pick from the collection \(C_{1,1},\ldots , C_{1,n}\), and set R to be the radius of C. Finally, let U be a uniform random variable on [0, 1], independent of R, and set \(X=R\sqrt{U}\), the distance from the center of a uniformly chosen point in a disk of radius R. With these samples, form the estimator

We can also derive an alternative representation for \(P(p_2\in C)\) that is more suitable for mathematical analysis. Denote the conditional density of X, given \(R=y\), by \(f_X(x|R=y)\) and the density of R by \(f_R\). It is easy to see that \(f_X(x|R=y)=\frac{2x}{y^2}\) for \(x\in (0,y)\) and 0 otherwise, and therefore

Define

and

Therefore we see from (32) that we have \(\mathbb {P}(p_2\in C) = \mathbb {E}[\Phi _r(R)]\).
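The sampling recipe for (X, R) can be sketched in Python for d = 2. Several assumptions here are ours, not the text’s. A clone born at \(t_i\) is taken to have radius \(c_d(t-t_i)\) at time t, with size proportional to its area and collisions ignored; this stands in for formula (7). The second point \(p_2\) lies at distance r from p in a uniformly random direction. Finally, \(I_2(r,t)\) is estimated by the fraction of samples with \(p_2\) inside the disk of radius R, rather than by the estimator (11), which is not reproduced here. Function and parameter names (`lam` for \(Nu_1s\), `cd` for \(c_d\)) are hypothetical.

```python
import math
import random

def sample_X_R(t: float, lam: float, cd: float, rng: random.Random):
    """One draw of (X, R) in d = 2, following the recipe above under our assumptions."""
    while True:
        # arrival times of type-1 mutations: Poisson process with rate lam on [0, t]
        times, clock = [], rng.expovariate(lam)
        while clock < t:
            times.append(clock)
            clock += rng.expovariate(lam)
        if times:                       # hypothetical convention: redraw if no clone arose
            break
    radii = [cd * (t - ti) for ti in times]          # assumed radius of each clone at time t
    sizes = [rho ** 2 for rho in radii]              # clone size proportional to its area
    R = rng.choices(radii, weights=sizes, k=1)[0]    # size-biased pick of a clone
    X = R * math.sqrt(rng.random())                  # distance of a uniform point from the centre
    return X, R

def estimate_I2(r: float, t: float, lam: float, cd: float, K: int = 20_000, seed: int = 0) -> float:
    """Fraction of samples whose second point, at distance r in a uniform direction, lies in C."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(K):
        X, R = sample_X_R(t, lam, cd, rng)
        phi = rng.uniform(0.0, 2 * math.pi)
        # p sits at (X, 0) relative to the clone centre; p_2 = p + r (cos phi, sin phi)
        px, py = X + r * math.cos(phi), r * math.sin(phi)
        hits += px * px + py * py <= R * R
    return hits / K

# Illustrative parameters only: lam = N u_1 s = 1, cd = 0.1, t = 10
print([round(estimate_I2(r, 10.0, 1.0, 0.1, K=5_000), 3) for r in (0.0, 0.2, 0.5, 1.0)])
```

At r = 0 the estimate is exactly 1, and it vanishes once r exceeds \(2c_dt\), the largest possible clone diameter.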

The formula \(\mathbb {P}(p_2\in C) = \mathbb {E}[\Phi _r(R)]\) is difficult to work with, due to the complex distribution of R. However, an interesting observation is that the distribution of R becomes much simpler if we assume that the sampling occurs at the random detection time \(\tau \). In this case define \(R(\tau )\) to be the radius of the clone that we choose at time \(\tau \). Then we can use equation (9) in Foo et al. (2014) to see that conditional on \(\tau =t\), \(R(\tau )\) has density

for \(x\le c_dt\) and zero otherwise. In the conditional density above, \(\theta = \mu \gamma c_d^d/(d+1)\). In order to describe the distribution of \(R(\tau )\), we then need the distribution of \(\tau \), which we can get from equation (4) of Foo et al. (2014). In particular define

and \(\lambda =Nu_1s\). Then \(\tau \) has density

Therefore we can calculate that

Differentiating, we find that \(R(\tau )\) has density given by

Note that the density \(\hat{f}\) is very similar to the Weibull density, and thus we can generate samples from \(\hat{f}\) with the acceptance–rejection algorithm, using a proposal distribution based on the Weibull distribution. With these samples from \(\hat{f}\), we can use the function \(\Phi _r\) to estimate \(I_2(r,\tau )\).
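A generic acceptance–rejection step with a Weibull proposal is sketched below. Because the explicit form of \(\hat{f}\) is not reproduced here, the target density and the envelope constant c (with target \(\le c\,\times \) proposal everywhere) are supplied by the caller. The half-normal target in the example is a stand-in, chosen only because a valid constant \(c=\sqrt{2e/\pi }\) for an exponential (Weibull shape 1) proposal is easy to derive. Python’s `random.Random.weibullvariate(alpha, beta)` uses alpha as the scale and beta as the shape; the other names are ours.

```python
import math
import random

def weibull_pdf(x: float, scale: float, shape: float) -> float:
    """Density of the Weibull proposal with the (scale, shape) used by weibullvariate."""
    if x <= 0:
        return 0.0
    z = x / scale
    return (shape / scale) * z ** (shape - 1) * math.exp(-z ** shape)

def accept_reject(target_pdf, c: float, scale: float, shape: float, n: int, seed: int = 0):
    """n draws from target_pdf, which must satisfy target_pdf(x) <= c * weibull_pdf(x) for all x."""
    rng = random.Random(seed)
    out = []
    while len(out) < n:
        y = rng.weibullvariate(scale, shape)        # proposal draw (alpha = scale, beta = shape)
        if rng.random() * c * weibull_pdf(y, scale, shape) <= target_pdf(y):
            out.append(y)                           # accept with probability f(y) / (c g(y))
    return out

def half_normal(x: float) -> float:
    """Stand-in target density (not the density of R(tau)): half-normal on [0, inf)."""
    return math.sqrt(2 / math.pi) * math.exp(-x * x / 2) if x >= 0 else 0.0

# Exponential(1) = Weibull(scale=1, shape=1); f/g <= sqrt(2e/pi), attained at x = 1
samples = accept_reject(half_normal, math.sqrt(2 * math.e / math.pi), 1.0, 1.0, 20_000)
print(sum(samples) / len(samples), math.sqrt(2 / math.pi))
```

In practice the scale and shape of the proposal would be tuned so that c is close to 1, which keeps the acceptance rate \(1/c\) high.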

Note that approximating \(I_2(r,\tau )\) does not require simulating the mesoscopic model: we simply generate random variables according to the density \(\hat{f}\) and then evaluate the function \(\Phi _r(R)\). In contrast, approximating \(I_2(r,t)\) is computationally more demanding because it requires simulating the mesoscopic model.

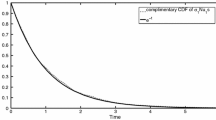

Fig. 16 (Color figure online) Plot of \(\hat{r}_{0.5}\) in 2D as a function of (a) selection strength s and (b) time of sampling t. In all panels \(N=2\times 10^5\), and \(10^4\) Monte Carlo simulations are performed. Unless varied, \(s=0.1\), \(u_1=7.5\times 10^{-7}\), and t is the median of the detection time \(\tau \) with \(\mu =2\times 10^{-6}\).

Appendix 4: Characteristic Length Scale Based on \(I_2\)

In practice, \(I_2\) can help to reduce the number of subsequent biopsy samples that should be taken after a premalignant sample has been found. For example, if a clinician wanted to take samples such that the probability that they come from the original premalignant clone is less than p, then we can define the length \(\hat{r}_{p} \equiv \inf \{ r>0 : I_2(r,t) < p \}\); provided \(I_2(r,t)\) decreases in r, samples taken at a distance greater than \(\hat{r}_{p}\) from the original sample satisfy this requirement. Figure 16 shows \(\hat{r}_{0.5}\) as a function of s and as a function of t. These plots show that \(\hat{r}_{0.5}\) increases with the selection strength: as s increases, mutant clones expand more quickly, so samples must be taken farther away from the premalignant sample to guarantee \(I_2 < 0.5\). Similarly, as the sampling time t increases, the clones are expected to be larger when the cells are sampled, so \(\hat{r}_{0.5}\) increases as well.
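Given any estimate of \(r\mapsto I_2(r,t)\) that decreases in r, the length \(\hat{r}_{p}\) can be located by bracketing and bisection. This is a minimal sketch that assumes \(I_2\) is supplied as a deterministic, continuous, decreasing function that eventually drops below p. For a Monte Carlo estimate one would fix the random seed so that repeated evaluations are consistent. The exponential curve in the example is a hypothetical stand-in, not the model’s \(I_2\).

```python
import math

def r_hat(I2, p: float, r_lo: float = 0.0, r_hi: float = 1.0, tol: float = 1e-8) -> float:
    """inf{r > 0 : I2(r) < p}, assuming I2 is continuous, decreasing, and eventually below p."""
    while I2(r_hi) >= p:              # grow the bracket until I2 falls below p
        r_hi *= 2
    while r_hi - r_lo > tol:          # bisection maintains I2(r_hi) < p
        mid = 0.5 * (r_lo + r_hi)
        if I2(mid) < p:
            r_hi = mid
        else:
            r_lo = mid
    return r_hi

# Hypothetical stand-in for r -> I_2(r, t); not the model's curve
print(r_hat(lambda r: math.exp(-r), 0.5), math.log(2))
```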


About this article

Cite this article

Storey, K., Ryser, M.D., Leder, K. et al. Spatial Measures of Genetic Heterogeneity During Carcinogenesis. Bull Math Biol 79, 237–276 (2017). https://doi.org/10.1007/s11538-016-0234-5

