Abstract

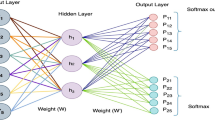

In recent years, deep neural networks have achieved great success in many natural language processing tasks. In particular, substantial progress has been made on neural text generation, which takes linguistic or non-linguistic input and generates natural language text. This survey aims to provide an up-to-date synthesis of the core tasks in neural text generation and the architectures adopted to handle them, and to draw attention to the challenges in this field. We first outline the mainstream neural text generation frameworks, and then introduce in detail the datasets, advanced models, and challenges of four core text generation tasks: AMR-to-text generation, data-to-text generation, and two text-to-text generation tasks (text summarization and paraphrase generation). Finally, we present future research directions for neural text generation. This survey can serve as a guide and reference for researchers and practitioners in this area.

Additional information

This work was supported by the National Natural Science Foundation of China (Grant No. 61772036), and the Key Laboratory of Science, Technology and Standard in Press Industry (Key Laboratory of Intelligent Press Media Technology).

About this article

Cite this article

Jin, H., Cao, Y., Wang, T. et al. Recent advances of neural text generation: Core tasks, datasets, models and challenges. Sci. China Technol. Sci. 63, 1990–2010 (2020). https://doi.org/10.1007/s11431-020-1622-y

DOI: https://doi.org/10.1007/s11431-020-1622-y