Abstract

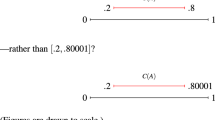

Many philosophers regard the imprecise credence framework as a more realistic model of probabilistic inference with imperfect empirical information than the traditional precise credence framework. Hence, it is surprising that the literature lacks any discussion of how to update one’s imprecise credences when the evidence itself is imprecise. To fill this gap, I consider two updating principles. Unfortunately, each of them faces a serious problem. The first updating principle, which I call “generalized conditionalization,” sometimes forces an agent to change her imprecise degrees of belief even though she has no new evidence. The second updating principle, which I call “the generalized dynamic Keynesian model,” may result in a very precise credal state even though the agent lacks evidence strong enough to justify such an informative doxastic state. This means that it is much more difficult to come up with an acceptable updating principle for the imprecise credence framework than one might have thought.


Notes

His original framework was designed to cover utilities (degrees of desire) as well as credences (degrees of belief). In this paper, I will not discuss utilities.

Formally, \( \fancyscript{R}_{n}=\left\{ r|r:\Phi \rightarrow \left[ 0,1\right] \& (\exists c\in \fancyscript{C}_{n})(\forall X\in \Phi )r(X)=c(X)\right\} \).
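To make the definition concrete, here is a minimal Python sketch under assumptions of our own: the credal state is a finite list of probability distributions over a few hypothetical worlds (`w1`, `w2`, `w3`), propositions are sets of worlds, and each member of \(\fancyscript{R}_{n}\) is encoded as the tuple of values it gives to the cells of \(\Phi \). The paper's credal states need not be finite; the sketch only shows how \(\fancyscript{R}_{n}\) collects the restrictions of members of \(\fancyscript{C}_{n}\) to \(\Phi \).

```python
from fractions import Fraction as Fr

def prob(c, prop):
    # Probability of a proposition (set of worlds) under c (dict: world -> probability)
    return sum((c[w] for w in prop), Fr(0))

def restrict(credal_state, partition):
    # R_n = { r : Phi -> [0, 1] | some c in C_n agrees with r on every X in Phi },
    # with each r encoded as the tuple of its values on the cells of Phi, in order
    return {tuple(prob(c, X) for X in partition) for c in credal_state}

E, not_E = frozenset({"w1", "w2"}), frozenset({"w3"})
C_n = [
    {"w1": Fr(1, 4), "w2": Fr(1, 4), "w3": Fr(1, 2)},
    {"w1": Fr(1, 2), "w2": Fr(0), "w3": Fr(1, 2)},
    {"w1": Fr(1, 8), "w2": Fr(1, 8), "w3": Fr(3, 4)},
]
R_n = restrict(C_n, [E, not_E])
# The first two members differ on the worlds but agree on Phi, so R_n has two elements
print(len(R_n))  # 2
```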

Of course, the more traditional form of strict conditionalization has been formulated within the precise credence framework. Nevertheless, I believe that the above and the traditional forms of SC are similar enough to justify my use of the same name.

Again, the original version of Jeffrey conditionalization was formulated within the precise credence framework, but I will use the same name for both the precise and imprecise versions.

Here, we are not assuming that \(\Phi \) is a Boolean algebra. This is why we call the members of \(\fancyscript{C}\) and \(\fancyscript{C}*\) “probability distributions” rather than “probability functions.” However, it is presupposed here that, whenever possible, those probability distributions satisfy the axioms of probability theory.

Proof: Suppose FSET and prove that RPSET is true. Consider any credal states \(\fancyscript{C}\) and \(\fancyscript{C}^{*}\) over \(\left\{ E,\lnot E\right\} \). (\(\Rightarrow \)) Assume that \(\fancyscript{C}\) and \(\fancyscript{C}^{*}\) encode the same beliefs. By supposition, \(\fancyscript{C}\) and \(\fancyscript{C}^{*}\) are the same set. Therefore, \(\fancyscript{C}\left( E\right) =\fancyscript{C}^{*}\left( E\right) \). (\(\Leftarrow \)) Assume that \(\fancyscript{C}\left( E\right) =\fancyscript{C}^{*}\left( E\right) \). Consider any probability distribution \(c\in \fancyscript{C}\), where \(c:\left\{ E,\lnot E\right\} \rightarrow \left[ 0,1\right] \). Let \(x=c\left( E\right) \). Since \(c\left( E\right) \in \fancyscript{C}\left( E\right) =\fancyscript{C}^{*}\left( E\right) \), there is \(c^{*}\in \fancyscript{C}^{*}\) such that \(c^{*}\left( E\right) =x\). Moreover, \(c\left( \lnot E\right) =1-x=c^{*}\left( \lnot E\right) \). Hence, \(c=c^{*}\), which implies that \(c\in \fancyscript{C}^{*}\). Similarly, any probability distribution \(c^{*}\in \fancyscript{C}^{*}\) is also a member of \(\fancyscript{C}\). In sum, \(\fancyscript{C}=\fancyscript{C}^{*}\). Therefore, \(\fancyscript{C}\) and \(\fancyscript{C}^{*}\) encode the same beliefs. Done.
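The key step in the (\(\Leftarrow \)) direction is that a distribution over the two-cell partition \(\left\{ E,\lnot E\right\} \) is fixed by its value on E, since its value on \(\lnot E\) must be one minus that value. A minimal Python sketch of this step, assuming finite credal states and encoding each distribution as the pair \(\left( c\left( E\right) ,c\left( \lnot E\right) \right) \); the helper names are hypothetical:

```python
from fractions import Fraction

def values_on_E(credal_state):
    # C(E) = { c(E) : c in C }; each c is encoded as the pair (c(E), c(not E))
    return {c_E for c_E, _ in credal_state}

def rebuild_from_E(values):
    # Each x in C(E) determines exactly one distribution over {E, not E}: (x, 1 - x)
    return {(x, 1 - x) for x in values}

C = {(Fraction(1, 3), Fraction(2, 3)), (Fraction(1, 2), Fraction(1, 2))}
C_star = {(Fraction(1, 2), Fraction(1, 2)), (Fraction(1, 3), Fraction(2, 3))}

# Same values on E, and the two credal states coincide as sets
print(values_on_E(C) == values_on_E(C_star), C == C_star)  # True True
# The whole credal state is recoverable from its values on E
print(rebuild_from_E(values_on_E(C)) == C)  # True
```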

Here, \(\Omega \) is assumed to be a Boolean algebra. In general, I use “\(\Omega \)” to refer to the domain of probability functions, and “\(\Phi \)” to refer to any set of propositions, especially a partition.

Proof: Obvious. Or see footnote 9 for a more general proof.

Proof: Suppose that S has a credence distribution \(r_{n}\) over \(\left\{ E,\lnot E\right\} \) as a result of her experience at \(t_{n}\) and that she updates in accordance with JC. Assume that \(r_{n}\) is not new evidence and show that S does not change her credence in any \(X\in \Phi \). It suffices to prove that \(\fancyscript{C}_{n-1}\left( X\right) =\fancyscript{C}_{n}\left( X\right) \) for any \(X\in \Omega \). For this proof, let X be an arbitrary member of \(\Omega \). \(({\subseteq })\) Consider any \(x\in \fancyscript{C}_{n-1}\left( X\right) \). By definition, there is \(c_{n-1}\in \fancyscript{C}_{n-1}\) such that \(c_{n-1}\left( X\right) =x\). Consider the probability function \(c_{n}\) defined as follows: for any \(Y\in \Omega \), \(c_{n}\left( Y\right) =_{df}c_{n-1}\left( Y/E\right) r_{n}\left( E\right) +c_{n-1}\left( Y/\lnot E\right) r_{n}\left( \lnot E\right) \). By supposition, \(c_{n}\in \fancyscript{C}_{n}\). By assumption and \(\hbox {NE}_\mathrm{precise}\), \(c_{n}\left( X\right) =c_{n-1}\left( X/E\right) r_{n}\left( E\right) +c_{n-1}\left( X/\lnot E\right) r_{n}\left( \lnot E\right) =c_{n-1}\left( X/E\right) c_{n-1}\left( E\right) +c_{n-1}\left( X/\lnot E\right) c_{n-1}\left( \lnot E\right) =c_{n-1}\left( X\right) \). Thus, \(x\in \fancyscript{C}_{n}\left( X\right) \). (\(\supseteq \)) Analogous to the proof of \((\subseteq )\).
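A small numerical check of the step from \(r_{n}\left( E\right) =c_{n-1}\left( E\right) \) to \(c_{n}=c_{n-1}\), as a Python sketch. The worlds `a`, `b`, `d` and the two prior distributions are hypothetical; both priors give E the value 1/2, matching the proof's use of the assumption and \(\hbox {NE}_\mathrm{precise}\), and the helper assumes \(0<c_{n-1}\left( E\right) <1\) so that both conditional credences are defined.

```python
from fractions import Fraction as Fr

def prob(c, prop):
    # Probability of a proposition (set of worlds) under c (dict: world -> probability)
    return sum((c[w] for w in prop), Fr(0))

def jeffrey(c, E, r_E):
    # c_n(w) = c_{n-1}(w/E) r_n(E) + c_{n-1}(w/not E) r_n(not E), world by world.
    # Assumes 0 < c(E) < 1.
    c_E = prob(c, E)
    return {w: c[w] / c_E * r_E if w in E else c[w] / (1 - c_E) * (1 - r_E)
            for w in c}

E = {"a", "b"}
prior = [
    {"a": Fr(1, 4), "b": Fr(1, 4), "d": Fr(1, 2)},
    {"a": Fr(1, 8), "b": Fr(3, 8), "d": Fr(1, 2)},
]
r_E = Fr(1, 2)                       # equals c_{n-1}(E) for every prior member
posterior = [jeffrey(c, E, r_E) for c in prior]
print(posterior == prior)            # True: no new evidence, no change

shifted = [jeffrey(c, E, Fr(3, 4)) for c in prior]
print(shifted == prior)              # False: r(E) differs from c(E)
```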

Let \(t_{2}\) be the moment of observation and \(t_{1}\) be the moment just before \(t_{2}\). Let \(\fancyscript{C}_{1}\) and \(\fancyscript{C}_{2}\) be Alex’s credal states at \(t_{1}\) and \(t_{2}\). According to GC, \( \fancyscript{C}_{2}(W)=\{ y|r_{2}\in \fancyscript{R}_{2} \& c_{1}\in \fancyscript{C}_{1} \& y=r_{2}(H)\cdot c_{1}(W/H)+r_{2}(\lnot H)c_{1}(W/\lnot H)\} =\{ y|r_{2}\in \fancyscript{R}_{2} \& c_{1}\in \fancyscript{C}_{1} \& y=r_{2}(H)\cdot c_{1}(W/H)\} \) (because \(c_{1}\left( W/\lnot H\right) =0\) for every \(c_{1}\in \fancyscript{C}_{1}\)). Therefore, \(\min _{z\in \fancyscript{C}_{2}\left( W\right) }z=\left( \min _{r_{2}\in \fancyscript{R}_{2}}r_{2}\left( H\right) \right) \cdot \left( \min _{c_{1}\in \fancyscript{C}_{1}}c_{1}\left( W/H\right) \right) =\frac{1}{2}\cdot 0=0\) and \(\max _{z\in \fancyscript{C}_{2}\left( W\right) }z=\left( \max _{r_{2}\in \fancyscript{R}_{2}}r_{2}\left( H\right) \right) \cdot \left( \max _{c_{1}\in \fancyscript{C}_{1}}c_{1}\left( W/H\right) \right) =\frac{3}{4}\cdot \frac{1}{2}=\frac{3}{8}\). Done.
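The interval arithmetic in this footnote can be checked mechanically. In the Python sketch below, the inputs \(\fancyscript{R}_{2}\left( H\right) =\left[ \frac{1}{2},\frac{3}{4}\right] \) and \(\fancyscript{C}_{1}\left( W/H\right) =\left[ 0,\frac{1}{2}\right] \) are an assumption read off from the endpoints used above. Because GC pairs every \(r_{2}\) with every \(c_{1}\) and both factors are nonnegative, the extremes of the product are the products of the matching endpoints; the brute-force grid is only a sanity check.

```python
from fractions import Fraction as Fr
from itertools import product

def gc_bounds(r_H, c_W_given_H):
    # Under GC with c1(W / not H) = 0, each value in C2(W) is r2(H) * c1(W/H),
    # and the two factors vary independently over nonnegative intervals.
    return r_H[0] * c_W_given_H[0], r_H[1] * c_W_given_H[1]

def grid(lo, hi, steps=8):
    # Evenly spaced points in [lo, hi], endpoints included
    return [lo + (hi - lo) * Fr(i, steps) for i in range(steps + 1)]

r_H = (Fr(1, 2), Fr(3, 4))          # assumed R2(H) = [1/2, 3/4]
c_W_given_H = (Fr(0), Fr(1, 2))     # assumed C1(W/H) = [0, 1/2]

low, high = gc_bounds(r_H, c_W_given_H)
print(low, high)                    # 0 3/8

values = [r * c for r, c in product(grid(*r_H), grid(*c_W_given_H))]
print(min(values) == low, max(values) == high)  # True True
```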

The proof that \(P_{1}\left( W\right) \in \left[ \frac{3}{16},\frac{1}{4}\right] \) is similar to that of the fact that \(\fancyscript{C}_{1}\left( W\right) =\left[ \frac{3}{16},\frac{1}{4}\right] \).

I am grateful to an anonymous referee for pressing me to discuss this additional problem of GC.

I owe this point to Ilho Park.

Some readers may wonder whether it is rational for Betty* at \(t_{1}\) to assign \(\left[ \frac{1}{4},\frac{1}{2}\right] \) as her credence in W conditional on H, even though she has forgotten why she did so at \(t_{0}\). More generally, is it rational for an agent to retain her previous credence when she no longer remembers the original reason for it? This is analogous to the so-called problem of forgotten reason, which has been extensively discussed by informal epistemologists (Harman 2008; Huemer 1999; Goldman 1999, 2009). Due to space limitations, I cannot discuss it in full detail here. Instead, I will point out that we tend to retain our beliefs even when we have forgotten the original reasons for them. If such beliefs are not justified, then most of our beliefs are unjustified. Answering “No” to the above question leads to a similar problem concerning the justificatory status of our credal states. Furthermore, even if, because she has forgotten (b), Betty* assigns W conditional on H a wider interval than \(\left[ \frac{1}{4},\frac{1}{2}\right] \), we can still prove that, as long as \(\fancyscript{C}_{1}^{*}\left( W/H\right) \subset \left( 0,1\right) \), \(\fancyscript{C}_{2}^{*}\left( W\right) \ne \left[ \frac{3}{16},\frac{1}{4}\right] =\fancyscript{C}_{1}^{*}\left( W\right) \).

As the anonymous referee pointed out, it is notoriously difficult to model forgetting within a Bayesian framework. So one may complain that no Bayesian model applies to this example. However, Betty*’s forgetting of (b) occurs between \(t_{0}\) and \(t_{1}\), not between \(t_{1}\) and \(t_{2}\). Thus, a reasonable model of Bayesian updating will apply to the latter credal transition, and it is only to that transition that we applied GC* in deriving the problematic result.

See Weatherson (2007, pp. 179–183).

Proof: (\(\subseteq \)) Consider any real number \(x\in \fancyscript{C}_{1}\left( W\right) \). Hence, \(x=c_{1}\left( W/H\right) c_{1}\left( H\right) \) for some \(c_{1}\in \fancyscript{C}_{1}\). Since \(\fancyscript{R}_{2}\left( H\right) =\fancyscript{C}_{1}\left( H\right) \), \(c_{1}\left( H\right) \in \fancyscript{R}_{2}\left( H\right) \). Thus, there is some \(r_{2}\in \fancyscript{R}_{2}\) such that \(c_{1}\left( H\right) =r_{2}\left( H\right) \). Since V satisfies C, \(V\left( \fancyscript{C}_{1},r_{2}\right) =\left\{ c_{1}\in \fancyscript{C}_{1}|c_{1}\left( H\right) =r_{2}\left( H\right) \right\} \). Thus, \(c_{1}\in V\left( \fancyscript{C}_{1},r_{2}\right) \). Therefore, \(x=c_{1}\left( W/H\right) r_{2}\left( H\right) \in \fancyscript{C}_{2}\left( W\right) \). (\(\supseteq \)) Consider any real number \(x\in \fancyscript{C}_{2}\left( W\right) \). Thus, \(x=c_{1}\left( W/H\right) r_{2}\left( H\right) \) for some \(r_{2}\in \fancyscript{R}_{2}\) and \(c_{1}\in V\left( \fancyscript{C}_{1},r_{2}\right) \). Since \(r_{2}\left( H\right) \in \fancyscript{R}_{2}\left( H\right) =\fancyscript{C}_{1}\left( H\right) \), there is some \(c_{1}\in \fancyscript{C}_{1}\) such that \(r_{2}\left( H\right) =c_{1}\left( H\right) \). Since V satisfies C, \(V\left( \fancyscript{C}_{1},r_{2}\right) =\left\{ c_{1}\in \fancyscript{C}_{1}|c_{1}\left( H\right) =r_{2}\left( H\right) \right\} \). As \(c_{1}\in V\left( \fancyscript{C}_{1},r_{2}\right) \), \(c_{1}\left( H\right) =r_{2}\left( H\right) \). Therefore, \(x=c_{1}\left( W/H\right) c_{1}\left( H\right) \in \fancyscript{C}_{1}\left( W\right) \). Done.

Proof: Let \(\fancyscript{C}_{n}\) be an agent S’s credal state at \(t_{n}\), \(\fancyscript{C}_{n-1}\) be S’s credal state at \(t_{n-1}\), and \(\fancyscript{R}_{n}\) be S’s imprecise evidence over \(\{ E,\lnot E\} \) at \(t_{n}\). Assume that \(\fancyscript{R}_{n}(E)=\fancyscript{C}_{n-1}(E)\) and that \(\fancyscript{C}_{n}\) is related to \(\fancyscript{C}_{n-1}\) by \(\hbox {GDKM}_{V}\), and show that, for any \(X\in \Omega \), \(\fancyscript{C}_{n}(X)=\fancyscript{C}_{n-1}(X)\). Consider any \(X\in \Omega \). (\(\subseteq \)) Let x be any real number in \(\fancyscript{C}_{n}(X)\). By \(\hbox {GDKM}_{V}\), \(x=c_{n-1}(X/E)r_{n}(E)+c_{n-1}(X/\lnot E)r_{n}(\lnot E)\) for some \(r_{n}\in \fancyscript{R}_{n}\) and \(c_{n-1}\in V(\fancyscript{C}_{n-1},r_{n})\). Since \(\fancyscript{R}_{n}(E)=\fancyscript{C}_{n-1}(E)\), there is some \(c_{n-1}^{*}\in \fancyscript{C}_{n-1}\) such that \(r_{n}(E)=c_{n-1}^{*}(E)\). Since \(V(\cdot ,\cdot )\) satisfies C, \(V(\fancyscript{C}_{n-1},r_{n})=\{ c_{n-1}\in \fancyscript{C}_{n-1}|c_{n-1}(E)=r_{n}(E)\} \). As \(c_{n-1}\in V(\fancyscript{C}_{n-1},r_{n})\), \(c_{n-1}(E)=r_{n}(E)\) and \(c_{n-1}(\lnot E)=r_{n}(\lnot E)\). Hence, \(x=c_{n-1}(X/E)c_{n-1}(E)+c_{n-1}(X/\lnot E)c_{n-1}(\lnot E)=c_{n-1}(X)\) for some \(c_{n-1}\in \fancyscript{C}_{n-1}\). Thus, \(x\in \fancyscript{C}_{n-1}(X)\). (\(\supseteq \)) Let x be any real number in \(\fancyscript{C}_{n-1}(X)\). So there is some \(c_{n-1}\in \fancyscript{C}_{n-1}\) such that \(x=c_{n-1}(X)=c_{n-1}(X/E)c_{n-1}(E)+c_{n-1}(X/\lnot E)c_{n-1}(\lnot E)\). Since \(\fancyscript{R}_{n}(E)=\fancyscript{C}_{n-1}(E)\), \(c_{n-1}(E)=r_{n}(E)\) for some \(r_{n}\in \fancyscript{R}_{n}\). As \(V(\cdot ,\cdot )\) satisfies C, \(c_{n-1}\in V(\fancyscript{C}_{n-1},r_{n})\). Thus, \(x=c_{n-1}(X/E)r_{n}(E)+c_{n-1}(X/\lnot E)r_{n}(\lnot E)\) for some \(r_{n}\in \fancyscript{R}_{n}\) and \(c_{n-1}\in V(\fancyscript{C}_{n-1},r_{n})\). By \(\hbox {GDKM}_{V}\), \(x\in \fancyscript{C}_{n}(X)\). Done.
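The result can be illustrated with a finite Python sketch. Several things here are assumptions of the sketch rather than the paper's definitions: credal states are finite lists of distributions over hypothetical worlds `a`, `b`, `d`; each \(r_{n}\) over \(\left\{ E,\lnot E\right\} \) is encoded by its value \(r_{n}\left( E\right) \); and the hypothetical selection function `select` implements only what condition C fixes, namely that \(V\left( \fancyscript{C}_{n-1},r_{n}\right) \) contains exactly the members agreeing with \(r_{n}\) on E when some member does (the sketch simply raises an error otherwise).

```python
from fractions import Fraction as Fr

def prob(c, prop):
    # Probability of a proposition (set of worlds) under c (dict: world -> probability)
    return sum((c[w] for w in prop), Fr(0))

def jeffrey(c, E, r_E):
    # Jeffrey update of c on {E, not E} with new weight r_E on E; assumes 0 < c(E) < 1
    c_E = prob(c, E)
    return {w: c[w] / c_E * r_E if w in E else c[w] / (1 - c_E) * (1 - r_E)
            for w in c}

def select(C, E, r_E):
    # Hypothetical V satisfying condition C: keep the members with c(E) = r(E)
    chosen = [c for c in C if prob(c, E) == r_E]
    if not chosen:
        raise ValueError("condition C does not fix V when no member matches r(E)")
    return chosen

def gdkm(C, E, R_E):
    # Pool the Jeffrey updates of every selected prior on every evidence value
    posterior = []
    for r_E in R_E:
        for c in select(C, E, r_E):
            updated = jeffrey(c, E, r_E)
            if updated not in posterior:
                posterior.append(updated)
    return posterior

def as_set(C):
    # Order-independent comparison of credal states
    return {frozenset(c.items()) for c in C}

E = {"a", "b"}
prior = [
    {"a": Fr(1, 4), "b": Fr(1, 4), "d": Fr(1, 2)},   # c(E) = 1/2
    {"a": Fr(1, 2), "b": Fr(1, 4), "d": Fr(1, 4)},   # c(E) = 3/4
]
R_E = {prob(c, E) for c in prior}                    # R_n(E) = C_{n-1}(E)
print(as_set(gdkm(prior, E, R_E)) == as_set(prior))  # True
```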

The proof is not difficult but somewhat lengthy, so, due to space limitations, I omit it.

Proof: Consider any \(c\in V(\fancyscript{C},Black)\subseteq \fancyscript{C}\). We know that \( c(Black)=[\sum _{1\le n\le 5}c(Black/\left\langle n\right\rangle \& Heads)c(\left\langle n\right\rangle /Heads)]c(Heads)+[\sum _{1\le n\le 5}c(Black/\left\langle n\right\rangle \& \lnot Heads)c(\left\langle n\right\rangle /\lnot Heads)]c(\lnot Heads)\). Note that \( c(Black/\left\langle n\right\rangle \& \lnot Heads)=\frac{n-1}{5}<1\) for any \(n\in \{ 1,\ldots ,5\} \), and \( c\left( Black/\left\langle n\right\rangle \& Heads\right) =\frac{n}{5}<1\) for any \(n\in \left\{ 1,\ldots ,4\right\} \). So \(c\left( Black\right) \) is a weighted average of these conditional credences, and the only one of them equal to 1 is \( c\left( Black/\left\langle 5\right\rangle \& Heads\right) \). Thus, if the value of \(c\left( Black\right) \) is to be one, it must be the case that \(c\left( Heads\right) =1\) and \(c\left( \left\langle 5\right\rangle /Heads\right) =1\). It immediately follows that \(c\left( \left\langle 5\right\rangle \right) =1\). Done.
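The weighted-average step can be checked numerically. In this Python sketch a distribution is represented (an assumption of the sketch) by its weights on the ten cells \( \left\langle n\right\rangle \& Heads\) and \( \left\langle n\right\rangle \& \lnot Heads\), and the conditional credences are the ones stated in the footnote: \(\frac{n}{5}\) given Heads and \(\frac{n-1}{5}\) given \(\lnot Heads\).

```python
from fractions import Fraction as Fr

def black_given(n, heads):
    # c(Black / <n> & Heads) = n/5 and c(Black / <n> & not Heads) = (n-1)/5
    return Fr(n, 5) if heads else Fr(n - 1, 5)

CELLS = [(n, heads) for n in range(1, 6) for heads in (True, False)]

def prob_black(weights):
    # Total probability: sum over cells of c(cell) * c(Black / cell)
    return sum((Fr(weights.get(cell, 0)) * black_given(*cell) for cell in CELLS), Fr(0))

# Only the point mass on <5> & Heads yields c(Black) = 1
certain = [cell for cell in CELLS if prob_black({cell: 1}) == 1]
print(certain)                       # [(5, True)]

# Any weight moved off <5> & Heads pulls c(Black) below 1
print(prob_black({(5, True): Fr(1, 2), (5, False): Fr(1, 2)}))  # 9/10
```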

Proof: Since there exists \(c\in \fancyscript{C}\) such that \(c\left( Black\right) =1=r_{Black}\left( Black\right) \), \(V\left( \fancyscript{C},r_{Black}\right) =\left\{ c\in \fancyscript{C}|c\left( Black\right) =1\right\} \). Hence, \(V\left( \fancyscript{C},r_{Black}\right) =U\left( \fancyscript{C},Black\right) \). Using basically the same reasoning as in footnote 20, we can then derive that \(c\left( Heads\right) =1\) and \(c\left( \left\langle 5\right\rangle \right) =1\) for every \(c\in V\left( \fancyscript{C},r_{Black}\right) \).

I am grateful for the useful responses from the audience of my talk at Imprecise Probabilities in Statistics and Philosophy 2014.

Proof: Consider any \(c_{1}\in \fancyscript{C}_{1}\). Since \(\left\{ X,Y,Z\right\} \) is a partition, \(c_{1}\left( H\right) =c_{1}\left( H/X\right) c_{1}\left( X\right) +c_{1}\left( H/Y\right) c_{1}\left( Y\right) +c_{1}\left( H/Z\right) c_{1}\left( Z\right) \) and \(c_{1}\left( Y\right) =1-\left( c_{1}\left( X\right) +c_{1}\left( Z\right) \right) \). By (i), \(c_{1}\left( H\right) =\left( c_{1}\left( H/X\right) +c_{1}\left( H/Z\right) \right) c_{1}\left( X\right) +c_{1}\left( H/Y\right) c_{1}\left( Y\right) \) and \(c_{1}\left( Y\right) =1-2c_{1}\left( X\right) \). By (ii) and (iii), \(c_{1}\left( H\right) =c_{1}\left( X\right) +\frac{1}{2}c_{1}\left( Y\right) =c_{1}\left( X\right) +\frac{1}{2}\left( 1-2c_{1}\left( X\right) \right) =\frac{1}{2}\). Therefore, \(\fancyscript{C}_{1}\left( H\right) =\left\{ \frac{1}{2}\right\} \). Next, observe that \(c_{1}\left( F\right) =c_{1}\left( F/X\right) c_{1}\left( X\right) +c_{1}\left( F/Y\right) c_{1}\left( Y\right) +c_{1}\left( F/Z\right) c_{1}\left( Z\right) \). By (iv) and (v), \(c_{1}\left( F\right) =\frac{4}{5}c_{1}\left( Y\right) \). Since no other restriction is imposed on the members of \(\fancyscript{C}_{1}\), \(c_{1}\left( Y\right) \) ranges over \(\left[ 0,1\right] \), so \(\min _{c_{1}\in \fancyscript{C}_{1}}c_{1}\left( F\right) =0\) and \(\max _{c_{1}\in \fancyscript{C}_{1}}c_{1}\left( F\right) =\frac{4}{5}\). Therefore, \(\fancyscript{C}_{1}\left( F\right) =\left[ 0,\frac{4}{5}\right] \). Done.
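The two computations in this proof can be spot-checked on a grid of admissible credence functions. The Python sketch below does not have the original statements of conditions (i) through (v); it assumes only what the proof's steps use: \(c_{1}\left( X\right) =c_{1}\left( Z\right) \), \(c_{1}\left( H/X\right) +c_{1}\left( H/Z\right) =1\), \(c_{1}\left( H/Y\right) =\frac{1}{2}\), \(c_{1}\left( F/X\right) =c_{1}\left( F/Z\right) =0\) and \(c_{1}\left( F/Y\right) =\frac{4}{5}\).

```python
from fractions import Fraction as Fr

# Assumed conditional credences, reconstructed from the steps of the proof
H_GIVEN_Y = Fr(1, 2)
F_GIVEN = {"X": Fr(0), "Y": Fr(4, 5), "Z": Fr(0)}

def credences(x, h_given_X):
    # (i): c1(X) = c1(Z) = x, so c1(Y) = 1 - 2x
    cell = {"X": x, "Y": 1 - 2 * x, "Z": x}
    h_given = {"X": h_given_X, "Y": H_GIVEN_Y, "Z": 1 - h_given_X}
    # Law of total probability over the partition {X, Y, Z}
    c_H = sum(h_given[k] * cell[k] for k in cell)
    c_F = sum(F_GIVEN[k] * cell[k] for k in cell)
    return c_H, c_F

grid_x = [Fr(i, 10) for i in range(6)]   # c1(X) in [0, 1/2]
grid_h = [Fr(j, 4) for j in range(5)]    # c1(H/X) in [0, 1]
results = [credences(x, h) for x in grid_x for h in grid_h]

H_values = {c_H for c_H, _ in results}
F_values = [c_F for _, c_F in results]
print(H_values == {Fr(1, 2)})            # True
print(min(F_values), max(F_values))      # 0 4/5
```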

Here, we can regard \(\fancyscript{R}_{2}\) as imprecise evidence defined over the partition \( \left\{ H \& Y,\lnot H \& Y\right\} \).

If the coin lands tails, she cannot distinguish the two awakenings, because her memory of the first awakening is erased before the second occurs. Thus, if the coin lands tails (T), she wakes up twice in exactly the same situation.

For example, Joyce defends the so-called Chance Grounding Thesis: “(CGT) Only on the basis of known chances can one legitimately have sharp credences. Otherwise, one’s spread of credences should cover the range of chance hypotheses left open by your evidence.” (Joyce 2010, p. 289; quoted from White 2010, p. 174.) For another example, Kaplan makes a similar claim: “And when you have no evidence whatsoever pertaining to the truth or falsehood of a hypothesis P, then, for every real number n, \(1\ge n\ge 0\), your set of con-assignments should contain at least one assignment on which \(con\left( P\right) =n\).” (Kaplan 1998, p. 28; here, \(con\left( \cdot \right) \) is a credence function.) Finally, Weatherson writes: “...the representor of the agent with no empirical evidence is \(\left\{ Pr: \text{ If } p \text { is a priori certain, then } {Pr\left( p\right) =1}\right\} \).” (Weatherson 2007, p. 181.) (Hence, if p is not a priori certain, then \(Pr\left( p\right) =\left[ 0,1\right] \).)

I owe this point to the anonymous referee.

References

Bradley, R. (2007). The kinematics of belief and desire. Synthese, 156(3), 515–535.

Elga, A. (2000). Self-locating belief and the sleeping beauty problem. Analysis, 60(2), 143–147.

Elga, A. (2010). Subjective probabilities should be sharp. Philosophers’ Imprint, 10(5), 1–11.

Goldman, A. I. (1999). Internalism exposed. The Journal of Philosophy, 96(6), 271–293.

Goldman, A. I. (2009). Internalism, externalism, and the architecture of justification. The Journal of Philosophy, 309–338.

Harman, G. (2008). Change in view: Principles of reasoning. Cambridge: Cambridge University Press.

Huemer, M. (1999). The problem of memory knowledge. Pacific Philosophical Quarterly, 80(4), 346–357.

Jeffrey, R. (1983a). Bayesianism with a human face. In J. Earman (Ed.), Testing scientific theories, Minnesota studies in the philosophy of science (Vol. 10, pp. 133–156). Minneapolis: University of Minnesota Press.

Jeffrey, R. (1983b). The logic of decision. Chicago: University of Chicago Press.

Jeffrey, R. (1987). Indefinite probability judgement. Philosophy of Science, 54(4), 588–591.

Joyce, J. M. (2010). A defense of imprecise credences in inference and decision making. Philosophical Perspectives, 24(1), 281–323.

Kaplan, M. (1998). Decision theory as philosophy. Cambridge: Cambridge University Press.

Kim, N. (2009). Sleeping Beauty and shifted Jeffrey conditionalization. Synthese, 168(2), 295–312.

Lewis, D. K. (1980). A subjectivist’s guide to objective chance. In R. C. Jeffrey (Ed.), Studies in inductive logic and probability (Vol. 2, pp. 263–293). Berkeley: University of California Press.

Lewis, D. K. (2001). Sleeping beauty: A reply to Elga. Analysis, 61(3), 171–176.

Meacham, C. (2008). Sleeping beauty and the dynamics of de se beliefs. Philosophical Studies, 138, 245–269.

Rinard, S. (2013). Against radical credal imprecision. Thought: A Journal of Philosophy, 2(2), 157–165.

Titelbaum, M. G. (2008). The relevance of self-locating beliefs. Philosophical Review, 117(4), 555–606.

Van Fraassen, B. C. (2006). Vague expectation value loss. Philosophical Studies, 127(3), 483–491.

Weatherson, B. (2007). The Bayesian and the dogmatist. Proceedings of the Aristotelian Society, 107, 169–185.

Weintraub, R. (2004). Sleeping beauty: A simple solution. Analysis, 64(1), 8–10.

White, R. (2010). Evidential symmetry and mushy credence. In T. Szabó Gendler & J. Hawthorne (Eds.), Oxford studies in epistemology (Vol. 3, pp. 161–186). Oxford: Oxford University Press.

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) under the Grant funded by the Korean government (NRF-2012S1A5B5A01025359). I would like to thank Hanjo Lee, Byeongdoek Lee, Phillip Bricker, and Chris Meacham for teaching me. I am also grateful to Ilho Park for a very insightful comment on Betty’s example. Sam Fletcher not only corrected many grammatical mistakes but also helped me articulate the three defenses of GC that I critically discuss in Sect. 8. Finally, I would like to thank the anonymous referee, who was the best referee I have ever had.


Cite this article

Kim, N. A dilemma for the imprecise Bayesian. Synthese 193, 1681–1702 (2016). https://doi.org/10.1007/s11229-015-0800-7


DOI: https://doi.org/10.1007/s11229-015-0800-7