Abstract

Sometimes we receive evidence in a form that standard conditioning (or Jeffrey conditioning) cannot accommodate. The principle of maximum entropy (maxent) provides a unique solution for the posterior probability distribution, based on the intuition that the information gain consistent with assumptions and evidence should be minimal. Opponents of objective methods for determining these probabilities prominently cite van Fraassen’s Judy Benjamin case to undermine the generality of maxent. This article shows that an intuitive approach to Judy Benjamin’s case supports maxent. This is surprising because, on the basis of independence assumptions, one would expect the intuitive approach to support the opponents. The article also demonstrates that opponents improperly apply independence assumptions to the problem.

References

Bovens, L. (2010). Judy Benjamin is a sleeping beauty. Analysis, 70(1), 23–26.

Bradley, R. (2005). Radical probabilism and Bayesian conditioning. Philosophy of Science, 72(2), 342–364.

Caticha, A. (2012). Entropic inference and the foundations of physics. Sao Paulo: Brazilian Chapter of the International Society for Bayesian Analysis.

Caticha, A., & Giffin, A. (2006). Updating probabilities. In MaxEnt 2006, the 26th international workshop on Bayesian inference and maximum entropy methods.

Cover, T., & Thomas, J. (2006). Elements of information theory (Vol. 6). Hoboken, NJ: Wiley.

Csiszár, I. (1967). Information-type measures of difference of probability distributions and indirect observations. Studia Scientiarum Mathematicarum Hungarica, 2, 299–318.

Diaconis, P., & Zabell, S. (1982). Updating subjective probability. Journal of the American Statistical Association, 77, 822–830.

Douven, I., & Romeijn, J. (2009). A new resolution of the Judy Benjamin problem. CPNSS Working Paper, 5(7), 1–22.

Gardner, M. (1959). Mathematical games. Scientific American, 201(4), 174–182.

Grove, A., & Halpern, J. (1997). Probability update: Conditioning vs. cross-entropy. In Proceedings of the thirteenth conference on uncertainty in artificial intelligence, Citeseer, Providence, RI.

Grünwald, P. (2000). Maximum entropy and the glasses you are looking through. In Proceedings of the sixteenth conference on uncertainty in artificial intelligence (pp. 238–246). Burlington: Morgan Kaufmann Publishers.

Grünwald, P., & Halpern, J. (2003). Updating probabilities. Journal of Artificial Intelligence Research, 19, 243–278.

Halpern, J. Y. (2003). Reasoning about uncertainty. Cambridge, MA: MIT Press.

Hobson, A. (1971). Concepts in statistical mechanics. New York: Gordon and Breach.

Howson, C., & Franklin, A. (1994). Bayesian conditionalization and probability kinematics. The British Journal for the Philosophy of Science, 45(2), 451–466.

Jaynes, E. (1989). Papers on probability, statistics and statistical physics. Dordrecht: Springer.

Jaynes, E., & Bretthorst, G. (1998). Probability theory: The logic of science. Cambridge, UK: Cambridge University Press.

Jeffrey, R. (1965). The logic of decision. New York: McGraw-Hill.

Shore, J., & Johnson, R. (1980). Axiomatic derivation of the principle of maximum entropy and the principle of minimum cross-entropy. IEEE Transactions on Information Theory, 26(1), 26–37.

Topsøe, F. (1979). Information-theoretical optimization techniques. Kybernetika, 15(1), 8–27.

Uffink, J. (1996). The constraint rule of the maximum entropy principle. Studies in History and Philosophy of Science Part B: Studies in History and Philosophy of Modern Physics, 27(1), 47–79.

Van Fraassen, B. (1981). A problem for relative information minimizers in probability kinematics. The British Journal for the Philosophy of Science, 32(4), 375–379.

Van Fraassen, B. (1986). A problem for relative information minimizers, continued. The British Journal for the Philosophy of Science, 37(4), 453–463.

Walley, P. (1991). Statistical reasoning with imprecise probabilities. London: Chapman and Hall.

Appendices

Appendix A: Jaynes’ constraint rule

This appendix provides a concise but comprehensive summary of Jaynes’ constraint rule not easily obtainable in the literature (although the constraint rule is a straightforward application of Lagrange multipliers). Jaynes applies it to the Brandeis Dice Problem (see Jaynes 1989, p. 243), but does not give a mathematical justification.

Let \(f\) be a probability distribution on a finite space \(x_{1},\ldots ,x_{m}\) that fulfills the constraint

\[\sum_{i=1}^{m} r(x_{i})f(x_{i})=\alpha . \tag{2}\]

An affine constraint can always be expressed by assigning a value to the expectation of a probability distribution (see Hobson 1971). In Judy Benjamin’s case, for example, let \(r(x_{1})=0, r(x_{2})=1-{\vartheta }, r(x_{3})=-{\vartheta }\hbox { and }\alpha =0\). Because \(f\) is a probability distribution it fulfills

\[\sum_{i=1}^{m} f(x_{i})=1. \tag{3}\]

We want to maximize Shannon’s entropy, given the constraints (2) and (3),

\[-\sum_{i=1}^{m} f(x_{i})\ln f(x_{i}). \tag{4}\]

We use Lagrange multipliers to define the functional

and differentiate it with respect to \(f(x_{i})\)

Set (5) to \(0\) to find the necessary condition to maximize (4)

This is the Gibbs distribution. We still need to do two things: (a) show that the entropy of \(g\) is maximal, and (b) show how to find \(\lambda _{0}\) and \(\lambda _{1}\). (a) is shown in Theorem 12.1.1 in Cover and Thomas (2006) and there is no reason to copy it here.
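The Lagrange-multiplier steps described above can be sketched as follows. This is a sketch under one common sign convention: the symbol \(J\) for the functional and the signs attached to \(\lambda _{0}\) and \(\lambda _{1}\) are assumptions and need not match the convention of the original displays.

```latex
\begin{align*}
J(f) &= -\sum_{i=1}^{m} f(x_{i})\ln f(x_{i})
        + \lambda_{0}\Bigl(\sum_{i=1}^{m} f(x_{i}) - 1\Bigr)
        + \lambda_{1}\Bigl(\sum_{i=1}^{m} r(x_{i})f(x_{i}) - \alpha\Bigr), \\
\frac{\partial J}{\partial f(x_{i})} &= -\ln f(x_{i}) - 1 + \lambda_{0} + \lambda_{1} r(x_{i}), \\
g(x_{i}) &= e^{\lambda_{0} - 1 + \lambda_{1} r(x_{i})}
\quad\text{(the stationary point, obtained by setting the derivative to } 0\text{)}.
\end{align*}
```

Whatever the sign convention, the stationary point is an exponential in \(r(x_{i})\) with a normalizing factor, which is what makes it a Gibbs distribution.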

For (b), let

To find \(\lambda _{0}\) and \(\lambda _{1}\) we introduce the constraint

To see how this constraint gives us \(\lambda _{0}\) and \(\lambda _{1}\), Jaynes’ solution of the Brandeis Dice Problem (see Jaynes 1989, p. 243) is a helpful example. We are, however, interested in a general proof that this choice of \(\lambda _{0}\) and \(\lambda _{1}\) gives us the probability distribution maximizing the entropy. That \(g\) so defined maximizes the entropy is shown in (a). We need to make sure, however, that with this choice of \(\lambda _{0}\) and \(\lambda _{1}\) the constraints (2) and (3) are also fulfilled (a standard result in the application of Lagrange multipliers).
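The verification outlined in this paragraph can be sketched in partition-function notation. The parametrization is an assumption: if the Gibbs distribution is written as \(g(x_{i})=e^{\lambda _{0}-1+\lambda _{1}r(x_{i})}\), the sketch takes \(\lambda _{1}=-\beta \) and \(\lambda _{0}=1-\ln Z(\beta )\), which may differ from the original displays by signs or constants.

```latex
\begin{align*}
Z(\beta) &= \sum_{i=1}^{m} e^{-\beta r(x_{i})},
\qquad g(x_{i}) = \frac{e^{-\beta r(x_{i})}}{Z(\beta)}, \\
\sum_{i=1}^{m} g(x_{i}) &= \frac{1}{Z(\beta)}\sum_{i=1}^{m} e^{-\beta r(x_{i})} = 1, \\
-\frac{\partial}{\partial\beta}\ln Z(\beta)
  &= \frac{1}{Z(\beta)}\sum_{i=1}^{m} r(x_{i})\,e^{-\beta r(x_{i})}
   = \sum_{i=1}^{m} r(x_{i})\,g(x_{i}).
\end{align*}
```

Under this parametrization, choosing \(\beta \) so that \(-\partial \ln Z(\beta )/\partial \beta =\alpha \) makes \(g\) satisfy the normalization (3) automatically and the expectation constraint (2) by construction.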

First, we show

Then, we show, by differentiating \(\ln (Z(\beta ))\) using the substitution \(x=e^{-\beta }\)

And, finally,

Filling in the variables from Judy Benjamin’s scenario gives us result (1). The lambdas are:

We combine the normalized odds vector \((0.16,0.48,0.36)\) following from these lambdas using Dempster’s Rule of Combination with (MAP) and get result (1).
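The calculation described above can be checked numerically. The following is a minimal sketch, not the author's code. It makes three assumptions: the constraint values are ordered by region \((A_{1},A_{2},A_{3})\) as \((-{\vartheta },1-{\vartheta },0)\); the (MAP) prior is \((0.25,0.25,0.5)\), matching the \(n\), \(n\), \(2n\) cell counts used in the powerset appendix; and Dempster's rule is applied to point-valued probabilities, where it reduces to a normalized pointwise product. The helper names (`gibbs`, `solve_beta`, `combine`) are hypothetical.

```python
import math

def gibbs(r, beta):
    """Gibbs distribution g(x_i) = exp(-beta * r(x_i)) / Z(beta) on a finite space."""
    weights = [math.exp(-beta * ri) for ri in r]
    z = sum(weights)
    return [w / z for w in weights]

def expectation(r, g):
    """Expectation of r under the distribution g."""
    return sum(ri * gi for ri, gi in zip(r, g))

def solve_beta(r, alpha, lo=-50.0, hi=50.0, iterations=200):
    """Bisection for beta with E_g[r] = alpha (E_g[r] is non-increasing in beta)."""
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if expectation(r, gibbs(r, mid)) > alpha:
            lo = mid  # expectation too large: move to a larger beta
        else:
            hi = mid
    return (lo + hi) / 2.0

def combine(p, q):
    """Normalized pointwise product (Dempster's rule for point-valued masses)."""
    prod = [a * b for a, b in zip(p, q)]
    total = sum(prod)
    return [x / total for x in prod]

theta = 0.75                       # (HDQ) with t = 3
r = [-theta, 1.0 - theta, 0.0]     # assumed ordering (A1, A2, A3)
beta = solve_beta(r, alpha=0.0)
odds = gibbs(r, beta)              # maxent distribution from a uniform start
prior = [0.25, 0.25, 0.5]          # assumed (MAP) prior
posterior = combine(odds, prior)

print("odds vector:", [round(x, 2) for x in odds])
print("posterior  :", [round(x, 3) for x in posterior])
```

In this sketch the odds vector rounds to \((0.16,0.48,0.36)\), in agreement with the text, and the posterior keeps the 3:1 ratio between \(A_{2}\) and \(A_{1}\) required by (HDQ).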

Appendix B: The powerset approach formalized

Let us assume a partition \(\{B_{i}\}_{i=1,{\ldots },4n}\) of \(A_{1}\,\cup \,A_{2}\,\cup \,A_{3}\) into sets that are of equal measure \(\mu \) and whose intersection with \(A_{i}\) is either the empty set or the whole set itself (this is the division into rectangles of scenario III). (MAP) dictates that the number of sets covering \(A_{3}\) equals the number of sets covering \(A_{1}\cup {}A_{2}\). For convenience, we assume the number of sets covering \(A_{1}\) to be \(n\). Let \(\mathcal C \), a subset of the powerset of \(\{B_{i}\}_{i=1,{\ldots },4n}\), be the collection of sets which agree with the constraint imposed by (HDQ), i.e.

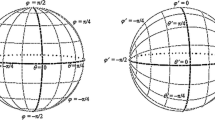

In Figs. 5 and 6 there are diagrams of two elements of the powerset of \(\{B_{i}\}_{i=1,{\ldots },4n}\). One of them (Fig. 5) is not a member of \(\mathcal C \), the other one (Fig. 6) is.

The binomial distribution dictates the expectation \(EX\) of \(X\), using simple combinatorics. In this case we require, again for convenience, that \(n\) be divisible by \(t\) and the ‘grain’ of the partition be \(s=n/t\). Remember that \(t\) is the factor by which (HDQ) indicates that Judy’s chance of being in \(A_{2}\) is greater than being in \(A_{1}\). In Judy’s particular case, \(t=3\) and \({\vartheta }=0.75\). We introduce a few variables which later on will help for abbreviation:

\(EX\), of course, depends both on the grain of the partition and the value of \(t\). It makes sense to make it independent of the grain by letting the grain become increasingly finer and by determining \(EX\) as \(s\rightarrow \infty \). This cannot be done for the binomial distribution, as it is notoriously uncomputable for large numbers (even with a powerful computer things get dicey around \(s=10\)). But, equally notorious, the normal distribution provides a good approximation of the binomial distribution and will help us arrive at a formula for \(G_{\mathrm{pws}}\) (corresponding to \(G_{\mathrm{ind}}\) and \(G_{\mathrm{max}}\)), determining the value \(q_{3}\) dependent on \({\vartheta }\) as suggested by the powerset approach.
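The quality of the normal approximation invoked here can be illustrated with a short sketch. It assumes the symmetric case \(p=1/2\), which is the case for uniformly chosen subsets, and compares one binomial probability with the corresponding normal density of mean \(n/2\) and variance \(n/4\). The function names are hypothetical.

```python
import math

def binomial_pmf_half(n, k):
    """Exact probability of k successes in n fair trials: C(n, k) / 2^n."""
    return math.comb(n, k) / 2**n

def normal_approx_half(n, k):
    """De Moivre-Laplace approximation with mean n/2 and variance n/4."""
    mean = n / 2.0
    var = n / 4.0
    return math.exp(-(k - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def relative_error(n, k):
    exact = binomial_pmf_half(n, k)
    return abs(normal_approx_half(n, k) - exact) / exact

for k in (500, 510, 520):
    print(k, binomial_pmf_half(1000, k), normal_approx_half(1000, k))
```

For \(n=1000\) the two values agree closely near the mean, and the relative error shrinks as \(n\) grows, which is what licenses replacing the binomial sums by integrals over a normal density.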

First, we express the random variable \(X\) by the two independent random variables \(X_{12}\) and \(X_{3}\). \(X_{12}\) is the number of partition elements in the randomly chosen \(C\) which are either in \(A_{1}\) or in \(A_{2}\) (the random variable of the number of partition elements in \(A_{1}\) and the random variable of the number of partition elements in \(A_{2}\) are decidedly not independent, because they need to obey (HDQ)); \(X_{3}\) is the number of partition elements in the randomly chosen \(C\) which are in \(A_{3}\). A relatively simple calculation shows that \(EX_{3}=n\), which is just what we would expect (either the powerset approach or the uniformity approach would give us this result):

The expectation of \(X\), where \(X\) is the random variable expressing the ratio of the number of sets covering \(A_{3}\) to the number of sets covering \(A_{1}\cup A_{2}\cup A_{3}\), is

If we were able to use uniformity and independence, we would have \(EX_{12}=n\) and \(EX=1/2\), just as Grove and Halpern suggest (although their uniformity approach is admittedly less crude than the one used here). Will the powerset approach concur with the uniformity approach, will it support the principle of maximum entropy, or will it make another suggestion on how to update the prior probabilities? To answer this question, we must find out what \(EX_{12}\) is, for a given value \(t\) and \(s\rightarrow \infty \), using the binomial distribution and its approximation by the normal distribution.
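For a small grain, the question posed here can be explored by exact counting. The sketch below rests on assumptions that the surviving text does not state outright. It assumes that \(\mathcal C \) contains exactly those unions of cells in which the number of cells taken from \(A_{2}\) is \(t\) times the number taken from \(A_{1}\), with \(n\) cells available in each region. It also assumes that \(EX\) is formed as \(EX_{3}/(EX_{12}+EX_{3})\) with \(EX_{3}=n\). The function names are hypothetical.

```python
from fractions import Fraction
from math import comb

def expected_x12(t, s):
    """Exact E[X_12] under the assumed reading of (HDQ).

    With n = t * s cells in each of A_1 and A_2, an admissible choice takes
    i cells from A_1 and t * i cells from A_2 (i = 0, ..., s), so X_12 = (t + 1) * i,
    and there are C(n, i) * C(n, t * i) ways to make that choice.
    """
    n = t * s
    num = sum((t + 1) * i * comb(n, i) * comb(n, t * i) for i in range(s + 1))
    den = sum(comb(n, i) * comb(n, t * i) for i in range(s + 1))
    return Fraction(num, den)

def q3_powerset(t, s):
    """Assumed estimate EX = EX_3 / (EX_12 + EX_3), using EX_3 = n."""
    n = t * s
    return float(n / (expected_x12(t, s) + n))

for s in (2, 5, 10):
    print(s, q3_powerset(3, s))
```

Under these assumptions, the value for \(t=3\) and \(s=10\) lies strictly between \(1/2\) and \(2/3\), so the constraint lowers \(EX_{12}\) below the value \(n\) that uniformity and independence would give.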

Using combinatorics,

Let us call the numerator of this fraction NUM and the denominator DEN. According to the de Moivre–Laplace Theorem,

where

Substitution yields

Consider briefly the argument of the exponential function:

with (the double prime sign corresponds to the simple prime sign for the numerator later on)

Consequently,

And, using the error function for the cumulative distribution function of the normal distribution,

with

We proceed likewise with the numerator, although the additional factor introduces a small complication:

Again, we substitute and get

where the argument for the exponential function is

and therefore

Using the error function,

with

for large \(s\), because the arguments for the error function \(w'\) and \(w''\) escape to positive infinity in both cases (NUM and DEN) so that their ratio goes to 1. The argument for the exponential function is

and, for \(s\rightarrow \infty \), goes to

Notice that, for \(t\rightarrow \infty \), \(\alpha _{t}\) goes to \(0\) and

in accordance with intuition T2.

Lukits, S. The principle of maximum entropy and a problem in probability kinematics. Synthese 191, 1409–1431 (2014). https://doi.org/10.1007/s11229-013-0335-8

