Abstract

This article proposes a total variation (TV) based model with local constraints for removing heavy multiplicative noise. The local constraint involves multiple local windows rather than the single local window used in Chen and Cheng (IEEE Trans Image Process 21(4):1650–1662, 2012). The proposed model extends the exp-model of Lu et al. (Appl Comput Harmon Anal 41(2):518–539, 2016) by incorporating a spatially adaptive regularization parameter, which enables it to handle heavy multiplicative noise and to denoise homogeneous regions sufficiently while preserving small details and edges. We also provide a theoretical analysis of the model, including the existence and uniqueness of a solution. We derive an optimization algorithm from the first-order optimality characterization of the model and use a proximal linearized alternating direction algorithm to solve the resulting subproblem efficiently. Numerical results, with comparisons against several existing TV based models, validate the effectiveness of the proposed model.

References

Chen, D.-Q., Cheng, L.-Z.: Spatially adapted total variation model to remove multiplicative noise. IEEE Trans. Image Process. 21(4), 1650–1662 (2012)

Lu, J., Shen, L., Xu, C., Xu, Y.: Multiplicative noise removal in imaging: an exp-model and its fixed-point proximity algorithm. Appl. Comput. Harmon. Anal. 41(2), 518–539 (2016)

Wagner, R.F., Smith, S.W., Sandrik, J.M., Lopez, H.: Statistics of speckle in ultrasound B-scans. IEEE Trans. Sonics Ultrason. 30(3), 156–163 (1983)

Schmitt, J.M., Xiang, S., Yung, K.M.: Speckle in optical coherence tomography. J. Biomed. Opt. 4(1), 95–105 (1999)

Lee, J.-S., Hoppel, K.W., Mango, S.A., Miller, A.R.: Intensity and phase statistics of multilook polarimetric and interferometric SAR imagery. IEEE Trans. Geosci. Remote Sens. 32(5), 1017–1028 (1994)

Lee, J.-S.: Digital image enhancement and noise filtering by use of local statistics. IEEE Trans. Pattern Anal. Mach. Intell. 2, 165–168 (1980)

Frost, V.S., Stiles, J.A., Shanmugan, K.S., Holtzman, J.C.: A model for radar images and its application to adaptive digital filtering of multiplicative noise. IEEE Trans. Pattern Anal. Mach. Intell. 2, 157–166 (1982)

Yu, Y., Acton, S.T.: Speckle reducing anisotropic diffusion. IEEE Trans. Image Process. 11(11), 1260–1270 (2002)

Krissian, K., Westin, C.-F., Kikinis, R., Vosburgh, K.G.: Oriented speckle reducing anisotropic diffusion. IEEE Trans. Image Process. 16(5), 1412–1424 (2007)

Aubert, G., Aujol, J.-F.: A variational approach to removing multiplicative noise. SIAM J. Appl. Math. 68(4), 925–946 (2008)

Li, Z., Lou, Y., Zeng, T.: Variational multiplicative noise removal by DC programming. J. Sci. Comput. 68(3), 1200–1216 (2016)

Shi, J., Osher, S.: A nonlinear inverse scale space method for a convex multiplicative noise model. SIAM J. Imaging Sci. 1(3), 294–321 (2008)

Steidl, G., Teuber, T.: Removing multiplicative noise by Douglas–Rachford splitting methods. J. Math. Imaging Vis. 36(2), 168–184 (2010)

Dong, Y., Zeng, T.: A convex variational model for restoring blurred images with multiplicative noise. SIAM J. Imaging Sci. 6(3), 1598–1625 (2013)

Yun, S., Woo, H.: A new multiplicative denoising variational model based on \(m\)th root transformation. IEEE Trans. Image Process. 21(5), 2523–2533 (2012)

Kang, M., Yun, S., Woo, H.: Two-level convex relaxed variational model for multiplicative denoising. SIAM J. Imaging Sci. 6(2), 875–903 (2013)

Parrilli, S., Poderico, M., Angelino, C.V., Verdoliva, L.: A nonlocal SAR image denoising algorithm based on LLMMSE wavelet shrinkage. IEEE Trans. Geosci. Remote Sens. 50(2), 606–616 (2012)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60(1–4), 259–268 (1992)

Rudin, L., Lions, P.-L., Osher, S.: Multiplicative denoising and deblurring: theory and algorithms. In: Geometric Level Set Methods in Imaging, Vision, and Graphics, pp. 103–119. Springer, Berlin (2003)

Grasmair, M.: Locally adaptive total variation regularization. In: International Conference on Scale Space and Variational Methods in Computer Vision, pp. 331–342. Springer, Berlin (2009)

Gilboa, G., Sochen, N., Zeevi, Y.Y.: Variational denoising of partly textured images by spatially varying constraints. IEEE Trans. Image Process. 15(8), 2281–2289 (2006)

Li, F., Ng, M.K., Shen, C.: Multiplicative noise removal with spatially varying regularization parameters. SIAM J. Imaging Sci. 3(1), 1–20 (2010)

Bertalmío, M., Caselles, V., Rougé, B., Solé, A.: TV based image restoration with local constraints. J. Sci. Comput. 19(1), 95–122 (2003)

Almansa, A., Ballester, C., Caselles, V., Haro, G.: A TV based restoration model with local constraints. J. Sci. Comput. 34(3), 209–236 (2008)

Dong, Y., Hintermüller, M., Rincon-Camacho, M.M.: Automated regularization parameter selection in multi-scale total variation models for image restoration. J. Math. Imaging Vis. 40(1), 82–104 (2011)

Chambolle, A.: An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 20(1), 89–97 (2004)

Goldstein, T., Osher, S.: The split Bregman method for L1-regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

Hale, E.T., Yin, W., Zhang, Y.: Fixed-point continuation applied to compressed sensing: implementation and numerical experiments. J. Comput. Math. 28(2), 170–194 (2010)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Yang, J., Zhang, Y.: Alternating direction algorithms for \(\ell _1\)-problems in compressive sensing. SIAM J. Sci. Comput. 33(1), 250–278 (2011)

Yang, J., Zhang, Y., Yin, W.: A fast alternating direction method for TVL1-L2 signal reconstruction from partial Fourier data. IEEE J. Sel. Top. Signal Process. 4(2), 288–297 (2010)

Wu, C., Tai, X.-C.: Augmented Lagrangian method, dual methods, and split Bregman iteration for ROF, vectorial TV, and high order models. SIAM J. Imaging Sci. 3(3), 300–339 (2010)

Osher, S., Mao, Y., Dong, B., Yin, W.: Fast linearized Bregman iteration for compressive sensing and sparse denoising. arXiv preprint arXiv:1104.0262

Ouyang, Y., Chen, Y., Lan, G., Pasiliao Jr., E.: An accelerated linearized alternating direction method of multipliers. SIAM J. Imaging Sci. 8(1), 644–681 (2015)

Woo, H., Yun, S.: Proximal linearized alternating direction method for multiplicative denoising. SIAM J. Sci. Comput. 35(2), B336–B358 (2013)

Dong, F., Zhang, H., Kong, D.-X.: Nonlocal total variation models for multiplicative noise removal using split Bregman iteration. Math. Comput. Model. 55(3), 939–954 (2012)

Chen, D.-Q., Cheng, L.-Z.: Fast linearized alternating direction minimization algorithm with adaptive parameter selection for multiplicative noise removal. J. Comput. Appl. Math. 257, 29–45 (2014)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Kornprobst, P., Deriche, R., Aubert, G.: Image sequence analysis via partial differential equations. J. Math. Imaging Vis. 11(1), 5–26 (1999)

Acknowledgements

Myeongmin Kang was supported by NRF (2016R1C1B1009808). Miyoun Jung was supported by Hankuk University of Foreign Studies Research Fund and NRF (2017R1A2B1005363). Myungjoo Kang was supported by NRF (2014R1A2A1A10050531, 2015R1A5A1009350) and IITP-MSIT (No. B0717-16-0107).

Appendix

The Proof of Lemma 1

Proof

Let us consider a minimizing sequence \(\{u_n\}\) of the problem (45).

Define \(\tilde{\gamma } = \inf (\log (t^2_{\alpha ,\beta } f))\), \(\tilde{\xi } = \sup (\log (t^2_{\alpha ,\beta } f))\), and

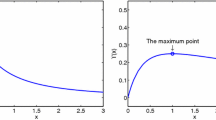

For fixed \(x\in \varOmega ,\) let \(v_0(s) = \lambda (x) \left\{ s+f(x)e^{-s}+\alpha \left( \sqrt{\frac{e^s}{f(x)}}-\beta \right) ^2 \right\} \). Then, the function \(v_0(s)\) attains its unique minimum at \(s = \log (t^2_{\alpha ,\beta }f(x))\). In addition, \(v_0(s)\) decreases on \((-\infty , \log (t^2_{\alpha ,\beta }f(x))]\) and increases on \([\log (t^2_{\alpha ,\beta }f(x)), \infty )\). This implies that if \(M_1 \geqslant \log (t^2_{\alpha ,\beta }f(x))\) and \(M_2 \leqslant \log (t^2_{\alpha ,\beta }f(x))\), then \( v_0(\min (s, M_1)) \leqslant v_0(s)\) and \(v_0(\max (s,M_2)) \leqslant v_0(s). \) Since \(\tilde{\gamma } \leqslant \log (t^2_{\alpha ,\beta }f(x)) \leqslant \tilde{\xi }\) for every \(x \in \varOmega \), this yields that

\[ V_0(\inf (u,\tilde{\xi })) \leqslant V_0(u) \quad \text{and} \quad V_0(\sup (u,\tilde{\gamma })) \leqslant V_0(u). \]

Moreover, by Lemma 1 in [39], we have that \(J(\inf (u,\tilde{\xi })) \leqslant J(u)\) and \(J(\sup (u,\tilde{\gamma })) \leqslant J(u)\), which yields that

\[ V(\inf (u,\tilde{\xi })) \leqslant V(u) \quad \text{and} \quad V(\sup (u,\tilde{\gamma })) \leqslant V(u). \]

In summary, we can assume that \( \tilde{\gamma } \leqslant u_n \leqslant \tilde{\xi }\), and hence \(u_n \in L^1(\varOmega )\) for every n. Since \(\{u_n\}\) is a minimizing sequence of V, \(V(u_n)\) is bounded. Furthermore, by (32), \(V_0(u_n) \geqslant K\) for some constant K. Then, \(J(u_n)\) is bounded, and thus \(\{u_n\}\) is bounded in BV(\(\varOmega \)). By the compactness of BV\((\varOmega )\), there exists a subsequence \(\{u_{n_k}\}\) of \(\{u_n\}\) such that \(u_{n_k} \rightarrow u\) in BV(\(\varOmega \))-weak\(^{*}\) and \(u_{n_k} \rightarrow u\) in \(L^1(\varOmega )\)-strong. It follows that \(\tilde{\gamma } \leqslant u \leqslant \tilde{\xi }\). Finally, owing to the lower semi-continuity of \(J(\cdot )\) and Fatou’s lemma, u is a solution of (45). \(\square \)
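The truncation step in this proof can be checked numerically for a single pixel. The sketch below is illustrative only: it assumes that \(t_{\alpha ,\beta }\) is the positive root of \(\alpha t^4 - \alpha \beta t^3 + t^2 - 1 = 0\), which is what setting \(v_0'(s) = 0\) gives after substituting \(t = \sqrt{e^s/f(x)}\), and the parameter values and the helper names `t_alpha_beta` and `v0` are hypothetical.

```python
import numpy as np

def t_alpha_beta(alpha, beta):
    """Positive real root of alpha*t^4 - alpha*beta*t^3 + t^2 - 1 = 0.

    Assumption: this is how t_{alpha,beta} is defined; the polynomial comes
    from v0'(s) = 0 with t = sqrt(exp(s) / f).
    """
    roots = np.roots([alpha, -alpha * beta, 1.0, 0.0, -1.0])
    real = roots[np.abs(roots.imag) < 1e-8].real
    return float(real[real > 0].min())

def v0(s, f, lam, alpha, beta):
    """Pointwise fidelity term v_0(s) from the proof of Lemma 1."""
    return lam * (s + f * np.exp(-s) + alpha * (np.sqrt(np.exp(s) / f) - beta) ** 2)

# Hypothetical values for one pixel (not taken from the article).
alpha, beta, lam, f = 2.0, 1.0, 0.5, 3.0

t = t_alpha_beta(alpha, beta)
s_star = np.log(t**2 * f)                      # claimed minimizer of v_0

s = np.linspace(s_star - 4.0, s_star + 4.0, 2001)
vals = v0(s, f, lam, alpha, beta)
s_grid_min = s[np.argmin(vals)]                # minimizer found on the grid

# Truncating at M1 >= s_star from above, or at M2 <= s_star from below,
# should never increase v_0.
M1, M2 = s_star + 0.5, s_star - 0.5
trunc_above_ok = bool(np.all(v0(np.minimum(s, M1), f, lam, alpha, beta) <= vals + 1e-12))
trunc_below_ok = bool(np.all(v0(np.maximum(s, M2), f, lam, alpha, beta) <= vals + 1e-12))

print(t, s_star, s_grid_min, trunc_above_ok, trunc_below_ok)
```

With \(\beta = 1\) the polynomial factors as \((t-1)(\alpha t^3 + t + 1)\), so under this assumption \(t_{\alpha ,1} = 1\) and the minimizer reduces to \(s = \log f(x)\).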

The Proof of Lemma 2

Proof

Let \(q(u):=\nabla F(u) =\lambda \left( 1-\frac{f}{e^u}+\alpha \left( \frac{e^u}{f}-\beta \sqrt{\frac{e^u}{f}} \right) \right) \). By the mean value theorem, we have that for all \(u,v \in \mathcal {U}\),

\[ |q(u)-q(v)| = |q'(\tilde{u})|\,|u-v| \leqslant L_q |u-v|, \]

where \(\tilde{u} = t u+(1-t)v\) for some \(t \in [0,1]\), and \(L_q = \sup _{\tilde{w} \in \mathcal {U}} |q'(\tilde{w})|\). By basic calculation, we can find an upper bound for \(L_q\) as follows:

Define \(\tilde{L} := \frac{f_{\max }}{t_{\alpha ,\beta }^2 f_{\min }} + \alpha \max \left( \frac{\beta ^2}{16}, \frac{t_{\alpha ,\beta }^2 f_{\max }}{f_{\min }}- \frac{\beta }{2} \sqrt{\frac{t_{\alpha ,\beta }^2 f_{\max }}{f_{\min }}},\frac{t_{\alpha ,\beta }^2 f_{\min }}{f_{\max }}- \frac{\beta }{2} \sqrt{\frac{t_{\alpha ,\beta }^2 f_{\min }}{f_{\max }}} \right) \). Then, \(L_q\) is bounded above by a finite constant that depends only on \(\lambda \) and \(\tilde{L}\), which proves the Lipschitz continuity of \(\nabla F\). \(\square \)
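The role of \(\tilde{L}\) can be illustrated with a small numerical check. This is a sketch under stated assumptions, not the article's argument: \(\lambda \) is taken as a constant scalar, \(\mathcal {U}\) is assumed to be the set of \(u\) with \(\log (t^2_{\alpha ,\beta } f_{\min }) \leqslant u \leqslant \log (t^2_{\alpha ,\beta } f_{\max })\), \(t_{\alpha ,\beta }\) is set to 1 by hand, and all numerical values and function names are hypothetical. Under those assumptions the derivative of the pointwise gradient should be bounded by \(\lambda \tilde{L}\).

```python
import numpy as np

def q(u, f, lam, alpha, beta):
    """Pointwise gradient of F as written in the proof of Lemma 2."""
    r = np.sqrt(np.exp(u) / f)
    return lam * (1.0 - f * np.exp(-u) + alpha * (r**2 - beta * r))

def q_prime(u, f, lam, alpha, beta):
    """Derivative of q with respect to u, computed by hand."""
    r = np.sqrt(np.exp(u) / f)
    return lam * (f * np.exp(-u) + alpha * (r**2 - 0.5 * beta * r))

def L_tilde(alpha, beta, t, f_min, f_max):
    """The constant L-tilde defined in the proof of Lemma 2."""
    g = lambda r2: r2 - 0.5 * beta * np.sqrt(r2)
    hi = t**2 * f_max / f_min
    lo = t**2 * f_min / f_max
    return f_max / (t**2 * f_min) + alpha * max(beta**2 / 16.0, g(hi), g(lo))

# Hypothetical values; t = 1.0 is an assumption, not a value from the article.
lam, alpha, beta, t = 0.5, 2.0, 1.0, 1.0
f_min, f_max = 0.5, 4.0

# Grid over the assumed admissible set U and over the range of f.
u = np.linspace(np.log(t**2 * f_min), np.log(t**2 * f_max), 400)
f = np.linspace(f_min, f_max, 300)
U, Fg = np.meshgrid(u, f)                      # u varies along axis 1

bound = lam * L_tilde(alpha, beta, t, f_min, f_max)
sup_dq = float(np.abs(q_prime(U, Fg, lam, alpha, beta)).max())

# Empirical Lipschitz ratios |q(u_{i+1}) - q(u_i)| / (u_{i+1} - u_i) for each f.
vals = q(U, Fg, lam, alpha, beta)
max_ratio = float((np.abs(np.diff(vals, axis=1)) / np.diff(u)).max())

print(sup_dq, max_ratio, bound)
```

Both the sampled supremum of \(|q'|\) and the largest finite-difference ratio should stay below `bound`; a different choice of \(\mathcal {U}\) or a spatially varying \(\lambda \) would require adjusting the grid and replacing `lam` with its supremum.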

Cite this article

Na, H., Kang, M., Jung, M. et al. An Exp Model with Spatially Adaptive Regularization Parameters for Multiplicative Noise Removal. J Sci Comput 75, 478–509 (2018). https://doi.org/10.1007/s10915-017-0550-4

DOI: https://doi.org/10.1007/s10915-017-0550-4