Abstract

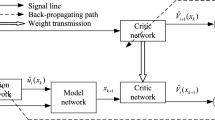

Compared with value-function-based reinforcement learning (RL) methods, policy gradient RL methods have better convergence properties, but the large variance of the policy gradient estimate degrades learning performance. To improve both the convergence speed of policy gradient RL methods and the precision of gradient estimation, an Actor-Critic (AC) learning algorithm based on incremental least-squares temporal difference with eligibility trace (iLSTD(λ)) is proposed, exploiting the characteristics of the AC framework, function approximation, and the iLSTD(λ) algorithm. The Critic estimates the value function using iLSTD(λ), and the Actor updates the policy parameters along the regular gradient. Simulation results on a 10×10 grid world show that the iLSTD(λ)-based AC algorithm converges quickly and produces good gradient estimates.
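The division of labor between Critic and Actor can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a linear value function, the commonly stated discounted form of the iLSTD(λ) update (trace decay γλ, greedy choice of the component with the largest |μ|), and a softmax policy over per-state action preferences whose regular gradient step is scaled by the TD error. The names `ILSTDLambdaCritic`, `actor_step`, and all parameters are hypothetical.

```python
import math
from typing import List


class ILSTDLambdaCritic:
    """Linear critic V(s) ~ w . phi(s) with an iLSTD(lambda)-style update (sketch)."""

    def __init__(self, n: int, gamma: float, lam: float, alpha: float, m: int = 1):
        self.n, self.gamma, self.lam, self.alpha, self.m = n, gamma, lam, alpha, m
        self.w = [0.0] * n                       # value-function weights
        self.z = [0.0] * n                       # eligibility trace
        self.mu = [0.0] * n                      # running estimate of b - A w
        self.A = [[0.0] * n for _ in range(n)]   # sum of z (phi - gamma phi')^T

    def value(self, phi: List[float]) -> float:
        return sum(wi * pi for wi, pi in zip(self.w, phi))

    def reset_trace(self) -> None:
        """Call at the start of each episode."""
        self.z = [0.0] * self.n

    def update(self, phi: List[float], reward: float, phi_next: List[float]) -> float:
        """Process one transition; return the TD error computed before the update.

        Pass an all-zero phi_next for a terminal next state.
        """
        delta = reward + self.gamma * self.value(phi_next) - self.value(phi)
        # Decay the trace and add the current features.
        self.z = [self.gamma * self.lam * zi + pi for zi, pi in zip(self.z, phi)]
        d = [p - self.gamma * pn for p, pn in zip(phi, phi_next)]
        for i in range(self.n):
            for j in range(self.n):
                self.A[i][j] += self.z[i] * d[j]
            # Delta_b - Delta_A w simplifies to z * delta.
            self.mu[i] += self.z[i] * delta
        # m cheap descent steps, each on the component with the largest |mu_j|.
        for _ in range(self.m):
            j = max(range(self.n), key=lambda k: abs(self.mu[k]))
            step = self.alpha * self.mu[j]
            self.w[j] += step
            for i in range(self.n):
                self.mu[i] -= step * self.A[i][j]
        return delta


def softmax(prefs: List[float]) -> List[float]:
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_step(prefs: List[float], action: int, delta: float, beta: float) -> List[float]:
    """Regular-gradient step: prefs += beta * delta * grad log pi(action)."""
    pi = softmax(prefs)
    return [p + beta * delta * ((1.0 if a == action else 0.0) - pi[a])
            for a, p in enumerate(prefs)]
```

In a learning loop, each observed transition would be passed to `critic.update(...)`, and the returned TD error would then scale the `actor_step(...)` update for the action taken in that state. Using the TD error as the gradient weight is one common choice and is an assumption here, not a detail confirmed by the abstract.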

Copyright information

© 2012 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Cheng, Y., Feng, H., Wang, X. (2012). Actor-Critic Algorithm Based on Incremental Least-Squares Temporal Difference with Eligibility Trace. In: Huang, DS., Gan, Y., Gupta, P., Gromiha, M.M. (eds) Advanced Intelligent Computing Theories and Applications. With Aspects of Artificial Intelligence. ICIC 2011. Lecture Notes in Computer Science, vol 6839. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-25944-9_24

DOI: https://doi.org/10.1007/978-3-642-25944-9_24

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-25943-2

Online ISBN: 978-3-642-25944-9

eBook Packages: Computer Science, Computer Science (R0)