Abstract

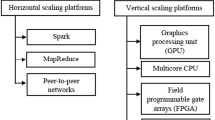

Data is often mined using clustering algorithms such as Density-Based Spatial Clustering of Applications with Noise (DBSCAN). However, clustering is computationally expensive, and thus parallel processing is required for big data. The two prevalent paradigms for parallel processing are High-Performance Computing (HPC), based on the Message Passing Interface (MPI) or Open Multi-Processing (OpenMP), and the newer big data frameworks such as Apache Spark or Hadoop. For both paradigms, this paper surveys publicly available implementations that aim at parallelizing DBSCAN and compares their performance. It is found that the big data implementations are not yet mature and that, in particular for skewed data, the way an implementation decomposes the input data into parallel tasks has a huge influence on run-time due to load imbalance.
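
The load-imbalance argument can be illustrated with a small, hypothetical experiment. The sketch makes several simplifying assumptions. It uses a one-dimensional strip decomposition along x. It treats the point count per task as a proxy for task cost; real DBSCAN cost also grows with neighbourhood density, so imbalance on skewed data may be worse. The functions `equal_width_partition`, `quantile_partition` and `imbalance` are illustrative only and are not taken from any of the surveyed implementations. A parallel step finishes only when its slowest task does, so the ratio of maximum to mean load bounds how far the step can be sped up.

```python
import bisect
from typing import List, Sequence


def equal_width_partition(xs: Sequence[float], num_tasks: int) -> List[int]:
    """Points per task when the x-range is cut into equal-width strips."""
    lo, hi = min(xs), max(xs)
    width = (hi - lo) / num_tasks or 1.0  # guard against all-equal coordinates
    counts = [0] * num_tasks
    for x in xs:
        k = min(int((x - lo) / width), num_tasks - 1)  # x == hi goes to the last strip
        counts[k] += 1
    return counts


def quantile_partition(xs: Sequence[float], num_tasks: int) -> List[int]:
    """Points per task when strip boundaries are placed at data quantiles."""
    s = sorted(xs)
    n = len(s)
    cuts = [s[(k * n) // num_tasks] for k in range(1, num_tasks)]
    counts = [0] * num_tasks
    for x in xs:
        counts[bisect.bisect_right(cuts, x)] += 1  # task = number of cuts <= x
    return counts


def imbalance(counts: Sequence[int]) -> float:
    """Largest task load divided by the mean load (1.0 means perfectly balanced)."""
    return max(counts) / (sum(counts) / len(counts))


if __name__ == "__main__":
    n, tasks = 1000, 4
    uniform = [i / n for i in range(n)]
    skewed = [(i / n) ** 4 for i in range(n)]  # most points crowd near x = 0
    for name, xs in [("uniform", uniform), ("skewed", skewed)]:
        ew, q = equal_width_partition(xs, tasks), quantile_partition(xs, tasks)
        print(name, ew, round(imbalance(ew), 2), q, round(imbalance(q), 2))
```

On the uniform data, both schemes give four tasks of 250 points. On the skewed data, the equal-width strips give 707, 134, 89 and 70 points, so the busiest task holds about 2.8 times the mean load. The data-dependent quantile cuts restore the balance, at the price of first having to inspect the data distribution.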

Notes

1. If the eps neighbourhood contains, e.g., all data points, the complexity of DBSCAN obviously grows back to \(O(n^2)\) despite these tree-based approaches.

2. Remarkably, MLlib, the machine learning library that is part of Apache Spark, does not contain a DBSCAN implementation.

3. Note that purely serial Scala implementations of DBSCAN are also available, for example GDBSCAN from the Nak machine learning library [32]. These obviously do not make use of Apache Spark's parallel processing. They can still be called in parallel from within Apache Spark code; however, each call then clusters a separate, unrelated data set [33].

4. There is another promising DBSCAN implementation for Spark by Han et al. [34]: a kd-tree is used to obtain \(O(n \log n)\) complexity. Concerning the partitioning, the authors state “We did not partition data points based on the neighborhood relationship in our work and that might cause workload to be unbalanced. So, in the future, we will consider partitioning the input data points before they are assigned to executors.” [34]. However, it was not possible to benchmark it, as it is not available as open source.

5. Note that distances between longitude/latitude points on the sphere should in general not be calculated using the Euclidean distance in the plane. However, as long as the points are sufficiently close together, clustering based on the simpler and faster-to-calculate Euclidean distance is considered appropriate.

6. Despite these exceptions, we encountered a re-submission of a failed Spark task only once during all measurements; in this case, we re-ran the job to obtain a measurement comparable to the other, non-failing executions.

7. Remarkably, the authors of RDD-DBSCAN [37] performed scalability studies only up to 10 cores.
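
Note 1 concerns the eps-neighbourhood (region) queries of DBSCAN [4]. The following is a minimal serial sketch of the classic algorithm. It assumes 2-D points, Euclidean distance, and a brute-force linear scan in place of an R-tree or kd-tree index. Each point is queried exactly once, and `work` sums the sizes of all returned neighbourhoods. When eps covers every point, each query returns all n points, so `work` reaches n². No spatial index can avoid this cost, because the result itself has n entries per query. The names `region_query` and `dbscan` are hypothetical and not taken from any surveyed implementation.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
NOISE = -1


def region_query(points: Sequence[Point], i: int, eps: float) -> List[int]:
    """Brute-force eps-neighbourhood of points[i] (contains i itself)."""
    xi, yi = points[i]
    return [j for j, (x, y) in enumerate(points) if math.hypot(x - xi, y - yi) <= eps]


def dbscan(points: Sequence[Point], eps: float,
           min_pts: int) -> Tuple[List[Optional[int]], int]:
    """Return (labels, work): labels[i] is a cluster id or NOISE, and work is the
    total number of neighbour entries returned by all region queries."""
    labels: List[Optional[int]] = [None] * len(points)  # None = not yet visited
    work = 0
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbours = region_query(points, i, eps)
        work += len(neighbours)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may later become a border point of a cluster
            continue
        labels[i] = cluster  # i is a core point: start a new cluster
        seeds, k = list(neighbours), 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: already queried, not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nj = region_query(points, j, eps)
            work += len(nj)
            if len(nj) >= min_pts:
                seeds.extend(nj)  # j is also a core point: grow the cluster
        cluster += 1
    return labels, work


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
    print(dbscan(pts, eps=1.5, min_pts=3))
    print(dbscan(pts, eps=1000.0, min_pts=3))  # eps covers every point
```

With `eps=1.5`, the seven points form two clusters and one noise point, with `work` equal to 19. With an `eps` that covers everything, all points fall into one cluster and `work` equals 7² = 49.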

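Note 5 can be made concrete with a small comparison. The sketch assumes a spherical Earth with a mean radius of 6371 km. It computes the great-circle (haversine) distance alongside the planar Euclidean distance on raw degrees. To make the two comparable, the planar value is scaled to kilometres by the arc length of one degree. The helper names `haversine_km` and `planar_km` are hypothetical. For nearby points separated along a meridian, the two values practically coincide. Along a parallel, the planar value exceeds the great-circle distance by roughly a factor of 1/cos(latitude), because meridians converge towards the poles.

```python
import math

# Assumption: spherical Earth with mean radius 6371 km (the WGS84 ellipsoid is ignored).
EARTH_RADIUS_KM = 6371.0
KM_PER_DEGREE = EARTH_RADIUS_KM * math.pi / 180.0  # arc length of 1 degree of a great circle


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two latitude/longitude points, in km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2.0 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def planar_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Euclidean distance on raw degrees, scaled to km by KM_PER_DEGREE."""
    return KM_PER_DEGREE * math.hypot(lat2 - lat1, lon2 - lon1)


if __name__ == "__main__":
    # Nearby points 0.01 degrees apart, north-south and east-west, at 55 degrees N.
    for name, p, q in [("north-south", (55.0, 10.0), (55.01, 10.0)),
                       ("east-west", (55.0, 10.0), (55.0, 10.01))]:
        print(name, round(haversine_km(*p, *q), 3), round(planar_km(*p, *q), 3))
```
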
References

1. Schmelling, M., Britsch, M., Gagunashvili, N., Gudmundsson, H.K., Neukirchen, H., Whitehead, N.: RAVEN – boosting data analysis for the LHC experiments. In: Jónasson, K. (ed.) PARA 2010. LNCS, vol. 7134, pp. 206–214. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-28145-7_21

2. Memon, S., Vallot, D., Zwinger, T., Neukirchen, H.: Coupling of a continuum ice sheet model and a discrete element calving model using a scientific workflow system. In: Geophysical Research Abstracts. European Geosciences Union (EGU) General Assembly 2017, Copernicus, vol. 19 (2017). EGU2017-8499

3. Glaser, F., Neukirchen, H., Rings, T., Grabowski, J.: Using MapReduce for high energy physics data analysis. In: 2013 International Symposium on MapReduce and Big Data Infrastructure. IEEE (2013/2014). https://doi.org/10.1109/CSE.2013.189

4. Ester, M., Kriegel, H., Sander, J., Xu, X.: Density-based spatial clustering of applications with noise. In: Proceedings of the Second International Conference on Knowledge Discovery and Data Mining. AAAI Press (1996)

5. Apache Software Foundation: Apache Hadoop (2017). http://hadoop.apache.org/

6. Neukirchen, H.: Performance of big data versus high-performance computing: some observations. In: Clausthal-Göttingen International Workshop on Simulation Science, Göttingen, Germany, 27–28 April 2017 (2017). Extended Abstract

7. Neukirchen, H.: Survey and performance evaluation of DBSCAN spatial clustering implementations for big data and high-performance computing paradigms. Technical report VHI-01-2016, Engineering Research Institute, University of Iceland (2016)

8. Neukirchen, H.: Elephant against Goliath: Performance of Big Data versus High-Performance Computing DBSCAN Clustering Implementations. EUDAT B2SHARE record (2017). https://doi.org/10.23728/b2share.b5e0bb9557034fe087651de9c263000c

9. Guttman, A.: R-trees: a dynamic index structure for spatial searching. In: Proceedings of the 1984 ACM SIGMOD International Conference on Management of Data, vol. 14, issue 2. ACM (1984). https://doi.org/10.1145/602259.602266

10. Beckmann, N., Kriegel, H.P., Schneider, R., Seeger, B.: The R*-tree: an efficient and robust access method for points and rectangles. In: Proceedings of the 1990 ACM SIGMOD International Conference on Management of Data, vol. 19, issue 2. ACM (1990). https://doi.org/10.1145/93597.98741

11. Bentley, J.L.: Multidimensional binary search trees used for associative searching. Commun. ACM 18(9), 509–517 (1975). https://doi.org/10.1145/361002.361007

12. Kjolstad, F., Snir, M.: Ghost cell pattern. In: 2nd Annual Conference on Parallel Programming Patterns (ParaPLoP), 30–31 March 2010, Carefree, AZ. ACM (2010). https://doi.org/10.1145/1953611.1953615

13. Xu, X., Jäger, J., Kriegel, H.P.: A fast parallel clustering algorithm for large spatial databases. Data Min. Knowl. Disc. 3(3), 263–290 (1999). https://doi.org/10.1023/A:1009884809343

14. Dagum, L., Menon, R.: OpenMP: an industry standard API for shared-memory programming. IEEE Comput. Sci. Eng. 5(1), 46–55 (1998). https://doi.org/10.1109/99.660313

15. MPI Forum: MPI: A Message-Passing Interface Standard. Version 3.0, September 2012. http://mpi-forum.org/docs/mpi-3.0/mpi30-report.pdf

16. OpenSFS and EOFS: Lustre (2017). http://lustre.org/

17. IBM: General Parallel File System Knowledge Center (2017). http://www.ibm.com/support/knowledgecenter/en/SSFKCN/

18. Folk, M., Heber, G., Koziol, Q., Pourmal, E., Robinson, D.: An overview of the HDF5 technology suite and its applications. In: Proceedings of the EDBT/ICDT 2011 Workshop on Array Databases, pp. 36–47. ACM (2011). https://doi.org/10.1145/1966895.1966900

19. Dean, J., Ghemawat, S.: MapReduce: simplified data processing on large clusters. In: Proceedings of the 6th Symposium on Operating Systems Design and Implementation, Berkeley, CA, USA. USENIX Association (2004). https://doi.org/10.1145/1327452.1327492

20. Apache Software Foundation: Apache Spark (2017). http://spark.apache.org/

21. Zaharia, M., Chowdhury, M., Das, T., Dave, A., Ma, J., McCauley, M., Franklin, M.J., Shenker, S., Stoica, I.: Resilient distributed datasets: a fault-tolerant abstraction for in-memory cluster computing. In: Proceedings of the 9th USENIX Conference on Networked Systems Design and Implementation. USENIX Association (2012)

22. Jha, S., Qiu, J., Luckow, A., Mantha, P., Fox, G.C.: A tale of two data-intensive paradigms: applications, abstractions, and architectures. In: 2014 IEEE International Congress on Big Data, pp. 645–652. IEEE (2014). https://doi.org/10.1109/BigData.Congress.2014.137

23. MacQueen, J., et al.: Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Statistics, University of California Press, Berkeley, California, pp. 281–297 (1967)

24. Ganis, G., Iwaszkiewicz, J., Rademakers, F.: Data analysis with PROOF. In: Proceedings of XII International Workshop on Advanced Computing and Analysis Techniques in Physics Research. Number PoS(ACAT08)007 in Proceedings of Science (PoS) (2008)

25. Wang, Y., Goldstone, R., Yu, W., Wang, T.: Characterization and optimization of memory-resident MapReduce on HPC systems. In: 2014 IEEE 28th International Parallel and Distributed Processing Symposium, pp. 799–808. IEEE (2014). https://doi.org/10.1109/IPDPS.2014.87

26. Kriegel, H.P., Schubert, E., Zimek, A.: The (black) art of runtime evaluation: are we comparing algorithms or implementations? Knowl. Inf. Syst. 52(2), 341–378 (2016). https://doi.org/10.1007/s10115-016-1004-2

27. Schubert, E., Koos, A., Emrich, T., Züfle, A., Schmid, K.A., Zimek, A.: A framework for clustering uncertain data. PVLDB 8(12), 1976–1979 (2015). https://doi.org/10.14778/2824032.2824115

28. Patwary, M.M.A., Palsetia, D., Agrawal, A., Liao, W.k., Manne, F., Choudhary, A.: A new scalable parallel DBSCAN algorithm using the disjoint-set data structure. In: International Conference for High Performance Computing, Networking, Storage and Analysis (SC), pp. 1–11. IEEE (2012). https://doi.org/10.1109/SC.2012.9

29. Götz, M., Bodenstein, C., Riedel, M.: HPDBSCAN: highly parallel DBSCAN. In: Proceedings of the Workshop on Machine Learning in High-Performance Computing Environments, held in conjunction with SC15: The International Conference for High Performance Computing, Networking, Storage and Analysis. ACM (2015). https://doi.org/10.1145/2834892.2834894

30. Patwary, M.M.A.: PDSDBSCAN source code (2017). http://users.eecs.northwestern.edu/~mpatwary/Software.html

31. Götz, M.: HPDBSCAN source code. Bitbucket repository (2016). https://bitbucket.org/markus.goetz/hpdbscan

32. Baldridge, J.: ScalaNLP/Nak source code. GitHub repository (2015). https://github.com/scalanlp/nak

33. Busa, N.: Clustering geolocated data using Spark and DBSCAN. O’Reilly (2016). https://www.oreilly.com/ideas/clustering-geolocated-data-using-spark-and-dbscan

34. Han, D., Agrawal, A., Liao, W.K., Choudhary, A.: A novel scalable DBSCAN algorithm with Spark. In: 2016 IEEE International Parallel and Distributed Processing Symposium Workshops, pp. 1393–1402. IEEE (2016). https://doi.org/10.1109/IPDPSW.2016.57

35. Litouka, A.: Spark DBSCAN source code. GitHub repository (2017). https://github.com/alitouka/spark_dbscan

36. Stack Overflow: Apache Spark distance between two points using squaredDistance. Stack Overflow discussion (2015). http://stackoverflow.com/a/31202037

37. Cordova, I., Moh, T.S.: DBSCAN on Resilient Distributed Datasets. In: 2015 International Conference on High Performance Computing and Simulation (HPCS), pp. 531–540. IEEE (2015). https://doi.org/10.1109/HPCSim.2015.7237086

38. Cordova, I.: RDD DBSCAN source code. GitHub repository (2017). https://github.com/irvingc/dbscan-on-spark

39. He, Y., Tan, H., Luo, W., Feng, S., Fan, J.: MR-DBSCAN: a scalable MapReduce-based DBSCAN algorithm for heavily skewed data. Front. Comput. Sci. 8(1), 83–99 (2014). https://doi.org/10.1007/s11704-013-3158-3

40. aizook: Spark_DBSCAN source code. GitHub repository (2014). https://github.com/aizook/SparkAI

41. Raad, M.: DBSCAN On Spark source code. GitHub repository (2016). https://github.com/mraad/dbscan-spark

42. He, Y., Tan, H., Luo, W., Mao, H., Ma, D., Feng, S., Fan, J.: MR-DBSCAN: an efficient parallel density-based clustering algorithm using MapReduce. In: 2011 IEEE 17th International Conference on Parallel and Distributed Systems (ICPADS), pp. 473–480. IEEE (2011). https://doi.org/10.1109/ICPADS.2011.83

43. Dai, B.R., Lin, I.C.: Efficient map/reduce-based DBSCAN algorithm with optimized data partition. In: 2012 IEEE Fifth International Conference on Cloud Computing, pp. 59–66. IEEE (2012). https://doi.org/10.1109/CLOUD.2012.42

44. Bodenstein, C.: HPDBSCAN Benchmark test files. EUDAT B2SHARE record (2015). https://doi.org/10.23728/b2share.7f0c22ba9a5a44ca83cdf4fb304ce44e

45. Amdahl, G.M.: Validity of the single processor approach to achieving large scale computing capabilities. In: AFIPS Conference Proceedings Volume 30: 1967 Spring Joint Computer Conference, pp. 483–485. American Federation of Information Processing Societies (1967). https://doi.org/10.1145/1465482.1465560

Acknowledgment

The author would like to thank all those who provided implementations of their DBSCAN algorithms. Special thanks go to the division Federated Systems and Data at the Jülich Supercomputing Centre (JSC), in particular to the research group High Productivity Data Processing and its head Morris Riedel. The author gratefully acknowledges the computing time granted on the supercomputer JUDGE at Jülich Supercomputing Centre (JSC).

The HPDBSCAN implementation of DBSCAN will be used as a pilot application in the research project DEEP-EST (Dynamical Exascale Entry Platform – Extreme Scale Technologies), which receives funding from the European Union Horizon 2020 – the Framework Programme for Research and Innovation (2014–2020) under Grant Agreement number 754304.

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Neukirchen, H. (2018). Elephant Against Goliath: Performance of Big Data Versus High-Performance Computing DBSCAN Clustering Implementations. In: Baum, M., Brenner, G., Grabowski, J., Hanschke, T., Hartmann, S., Schöbel, A. (eds) Simulation Science. SimScience 2017. Communications in Computer and Information Science, vol 889. Springer, Cham. https://doi.org/10.1007/978-3-319-96271-9_16

DOI: https://doi.org/10.1007/978-3-319-96271-9_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-96270-2

Online ISBN: 978-3-319-96271-9

eBook Packages: Computer Science, Computer Science (R0)