Abstract

A new Monte Carlo algorithm for solving singular linear systems of equations is introduced. In fact, we consider the convergence of resolvent operator \(R_{\lambda }\) and we construct an algorithm based on the mapping of the spectral parameter \(\lambda \). The approach is applied to systems with singular matrices. For such matrices we show that fairly high accuracy can be obtained.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Consider the linear system \(Tx=b\) where \(T\in \mathbb {R}^{n\times n}\) is a nonsingular matrix and b, T are given. If we consider \(L=I-T\), then

The iterative form of (1) is \(x^{(k+1)}=Lx^{(k)}+b, k=0,1,2...\). Let us have now \(x^{0}=0, L^0=I\), we have

If \(\Vert L\Vert <1\), then \(x^{(k)}\) tends to the unique solution x [7]. In fact, the solution of (1) can be obtained by using the iterations

We consider the stochastic approach. Suppose that we have a Markov chain given by:

where \(\alpha _i,~i=0,1,2,3...,k\) belongs to the state space \(\{1,2,...,n\}\). Then \(\alpha ,~\beta \in \{1,2...,n\}\), \(p_{\alpha }=P(\alpha _{0}=\alpha )\) is the probability that the Markov chain starts at state \(\alpha \) and \(p_{\alpha \beta }=P(\alpha _{i+1}=\beta |\alpha _{i}=\alpha )\) is the transition probability from state \(\alpha \) to \(\beta \). The set of all probabilities \(p_{\alpha \beta }\) defines a transition probability matrix \([p_{\alpha \beta }]\). We say that the distribution \([p_1,p_2 {\ldots }, p_n]^{t}\) is acceptable for a given vector h, and the distribution \(p_{\alpha \beta }\) is acceptable for matrix L, if \(p_{\alpha }>0\) when \(h_{\alpha }\ne 0\), and \(p_{\alpha }\ge 0\) when \(h_{\alpha }=0\), and \(p_{\alpha \beta }> 0\) when \(l_{\alpha \beta }\ne 0\) and \(p_{\alpha \beta }\ge 0\) when \(l_{\alpha \beta }=0\) respectively. We assume

for all \(\alpha =1,2 {\ldots },n\). The random variable whose mathematical expectation is equal to \(\langle x, h\rangle \) is given by the following expression

where \(W_0=1, W_j=W_{j-1}\frac{l_{\alpha _{j-1}\alpha _j}}{p{\alpha _{j-1}\alpha _j}}, j=1,2,3...\). We use the following notation for the partial sum:

It is shown that \(E(\theta _i(h))=\langle h, \varSigma _{m=0}^{i} L^m b \rangle =\langle h, x^{(i+1)} \rangle \) and \(E(\theta _i(h))\) tends to \(\langle x, h\rangle \) as \(i\rightarrow \infty \) [7]. To find \(r^{th}\) component of x, we put

It follows that

The number of Markov chain is given by \(N\ge (\frac{0.6745}{\epsilon }\frac{\Vert b\Vert }{(1-\Vert L\Vert )})^2\). With considering N paths \({\alpha _0}^{(m)}\rightarrow {\alpha _1}^{(m)}\rightarrow ...\rightarrow {\alpha _k}^{(m)},~m=1, 2, 3..., N\), on the coefficient matrix, we have the Monte Carlo estimated solution by

The condition \(\Vert L\Vert \le 1\) is not very strong. In [9, 10], it is shown that, it is possible to consider a Monte Carlo algorithm for which the Neumann series does not converge.

In this paper, we continue research on resolvent Monte Carlo algorithms presented in [4] and developed in [2, 3, 5]. We consider Monte Carlo algorithms for solving linear systems in the case when the corresponding Neumann series does not necessarily converge. We apply a mapping of the spectral parameter \(\lambda \) to obtain a convergent algorithm. First, sufficient conditions for the convergence of the resolvent operator are given. Then the Monte Carlo algorithm is employed.

2 Resolvent Operator Approach

2.1 The Convergence of Resolvent Operator

We study the behaviour of the equation

depending on the parameter \(\lambda .\) Define nonsingular values of L by

\(\chi (L)=(\pi (L))^c\) is called the characteristic set of L. Let X and Y be Banach spaces and let \(U=\{x\in X: \Vert x\Vert \le 1\}.\) An operator \(L:X\rightarrow Y\) is called compact if the closure of L(U) is compact. By Theorem 4.18 in [8], if dimension the rang of L is finite, then L is compact. The statement that \(\lambda \in \pi (L)\) is equivalent to asserting the existence of the two-sided inverse operator \((I-\lambda L)^{-1}\). It is shown that for compact operators, \(\lambda \) is a characteristic point of L if and only if \(\frac{1}{\lambda }\) is an eigenvalue of L. Also, it is shown that for every \(r>0\), the disk \(|\lambda |<r\) contains at most a finite number of characteristic values.

The operator \(R_\lambda \) defined by \(R_\lambda =(I-\lambda L)^{-1}\) is called the resolvent of L, and

The radius of convergence r of the series is equal to the distance \(r_0\) from the point \(\lambda =0\) to the characteristic set \(\chi (L)\). Let \(\lambda _1,\lambda _2, {\ldots }\) be the characteristic values of L that \(|\lambda _1|\le |\lambda _2|\le ...\). The systematic error of the above presentation when m terms are used is

where \(\rho \) is multiplicity of roots \(\lambda _1\). This follows that when \(|\lambda |\ge |\lambda _1|\) the series does not converge. In this case we apply the analytical method in functional analysis. The following theorem for the case of compact operators has been proved in [6].

Theorem 1

Let \(\lambda _0\) be a characteristic value of a compact operator L. Then, in a sufficiently small neighbourhood of \(\lambda _0\), we have the expansion

Here r is the rank of characteristic value \(\lambda _0\), the operators \(L_{-r},...,L_{-1}\) are finite dimensional and \(L_{-r}\ne 0\). The series on the right-hand side of (6) is convergent in the space of operators B(X, X).

3 The Convergence of Monte Carlo Method

Let \(\lambda _1, \lambda _2,..\) be real characteristic values of L such that \(\lambda _k\in (-\infty ,-a]\). In this case we may apply a mapping of the spectral parameter \(\lambda \). We consider a domain \(\varOmega \) lying inside the definition domain of \(R_{\lambda }\) as a function of \(\lambda \) such that all characteristic values are outside of \(\varOmega \), \(\lambda _{*}=1\in \varOmega \), \(0\in \varOmega \). Define \(\psi (\alpha )=\frac{4a\alpha }{(1-\alpha )^2},~(|\alpha |<1)\), which maps \(\{\alpha : |\alpha |<1 \}\) to \(\varOmega \) described in [1]. Therefore the resolvent operator can be written in the form

where \(g_k^{(m)}=\varSigma _{j=k}^{m}d_k^{(j)}\alpha ^j\) and \(c_k=L^{k+1}b\). In [1], it is shown that \(d_k^{(j)}=(4a)^kC_{k+j-1}^{2k-1}\). All in all, in the following theorem, it is shown that the random variable whose mathematical expectation is equal to \(\langle h, \varSigma _{k=0}^{m} L^{k}\rangle \), is given by the following expression:

where \(W_0=1, W_j=W_{j-1}\frac{l_{\alpha _{j-1}\alpha _j}}{p_{\alpha _{j-1}\alpha _j}}, j=1,2,3...\), \(g_0^{(m)}=1\) and \(\alpha _0,\alpha _1,...\) is a Markov chain with initial probability \(p_{\alpha _0}\) and one step transition probability \(p_{\alpha _{\nu -1}\alpha _{\nu }}\) for choosing the element \(l_{\alpha _{\nu -1}\alpha _{\nu }}\) of the matrix L [1].

Theorem 2

Consider matrix L, whose Neumann series does not necessarily converge. Let \(\psi (\alpha )=\frac{4a\alpha }{(1-\alpha )^2}\) be the required mapping, so that the presentation \(g_k^{(m)}\) exists. Then

In [5], authors have analysed the robustness of the Monte Carlo algorithm for solving a class of linear algebra problems based on bilinear form of matrix powers \(\langle h,L^{k}b\rangle \). In [5], authors have considered real symmetric matrices with norms smaller than one. In this paper, results are extended considerably compared to cases [3, 5]. We consider singular matrices. For matrices that are stochastic matrices the accuracy of the algorithm is particularly high.

3.1 Numerical Tests

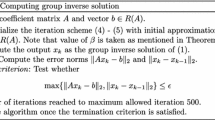

In this section of paper we employed our resolvent Monte Carlo algorithm for solving systems of singular linear algebraic equations. The test matrices are randomly generated. The factor of the improvement of the convergence depends on parameter \(\alpha \). An illustration of this fact is Table 1. We consider randomly generated matrices of order 100, 1000 and 5000. But more precise consideration shows that the error decreases with the increasing of the matrix size.

4 Conclusion

A new Monte Carlo algorithm for solving singular linear systems of equations is presented in this paper. The approach is based on the resolvent operator \(R_{\lambda }\) convergence. In fact we construct an algorithm based on the mapping of the spectral parameter \(\lambda \). The approach is applied to systems with singular matrices. The initial results show that for such matrices a fairly high accuracy can be obtained.

References

Dimov, I.T.: Monte Carlo Methods for Applide Scientists. World Scientific Publishing, Singapore (2008)

Dimov, I., Alexandrov, V.: A new highly convergent Monte Carlo method for matrix computations. Mathe. Comput. Simul. 47, 165–181 (1998)

Dimov, I., Alexandrov, V., Karaivanova, A.: Parallel resolvent Monte Carlo algorithms for linear algebra problems. Monte Carlo Method Appl. 4, 33–52 (1998)

Dimov, I.T., Karaivanova, A.N.: Iterative monte carlo algorithms for linear algebra problems. In: Vulkov, L., Waśniewski, J., Yalamov, P. (eds.) WNAA 1996. LNCS, vol. 1196, pp. 150–160. Springer, Heidelberg (1997). https://doi.org/10.1007/3-540-62598-4_89

Dimov, I.T., Philippe, B., Karaivanova, A., Weihrauch, C.: Robustness and applicability of Markov chain Monte Carlo algorithms for eigenvalue problems. Appl. Math. Model. 32, 1511–1529 (2008)

Kantorovich, L.V., Akilov, G.P.: Functional Analysis. Pergamon Press, Oxford (1982)

Rubinstein, R.Y.: Simulation and the Monte Carlo Method. Wiley, New York (1981)

Rudin, W.: Functional Analysis. McGraw Hill, New York (1991)

Sabelfeld, K.K.: Monte Carlo Methods in Boundary Value Problems. Springer, Heidelberg (1991)

Sabelfeld, K., Loshchina, N.: Stochastic iterative projection methods for large linear systems. Monte Carlo Methods Appl. 16, 1–16 (2010)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Fathi Vajargah, B., Alexandrov, V., Javadi, S., Hadian, A. (2018). Novel Monte Carlo Algorithm for Solving Singular Linear Systems. In: Shi, Y., et al. Computational Science – ICCS 2018. ICCS 2018. Lecture Notes in Computer Science(), vol 10862. Springer, Cham. https://doi.org/10.1007/978-3-319-93713-7_16

Download citation

DOI: https://doi.org/10.1007/978-3-319-93713-7_16

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-93712-0

Online ISBN: 978-3-319-93713-7

eBook Packages: Computer ScienceComputer Science (R0)