Abstract

This chapter reflects on how journals and book publishers in the humanities and social sciences are studied and evaluated in Spain, with particular regard to the assessment of books and book publishers. The lack of coverage of Spanish output in international databases is highlighted as one of the reasons for developing nationwide assessment tools for both scholarly journals and books. These tools, such as RESH and DICE (developed by the ILIA research team), are based on a methodology that does not rely exclusively on citations and thus provide a much richer set of information. They were used by the main Spanish assessment agencies, whose key criteria are discussed in this chapter. The chapter also presents the recently developed expert survey-based methodology for assessing book publishers that is included in the Scholarly Publishers Indicators system.


1 Introduction

There is little doubt that the scholarly communication, reading and citation habits of humanists and social scientists differ from those in other scientific disciplines (as studied by Glänzel and Schoepflin 1999; Hicks 2004; Nederhof and Zwaan 1991; Nederhof 2006; Thompson 2002, among many others). Considerable evidence points to the following in the social sciences and the humanities (SSH): (a) citations are more strongly oriented towards books and book chapters; (b) given the more limited use of scholarly journals, nationally oriented journals are more relevant than internationally oriented ones; (c) this last attribute is related to the local or national character of the research topics covered by SSH; and (d) the internationality of research in these branches is conditioned by the research topics.

To give a brief profile of Spanish scholarly output: Thomson Reuters Essential Science Indicators ranks Spain ninth for scientific production and eleventh for the number of citations received. The number of scholarly journals produced in Spain is striking (2012 data): 1,826 in SSH, 277 in science and technology and 240 in biomedical sciences. Of the SSH titles, 58 are covered by the Arts and Humanities Citation Index (AHCI), 44 by the Social Sciences Citation Index (SSCI) and 214 by the European Reference Index for the Humanities (ERIH), in both its 2007 and 2011 lists. These figures indicate an acceptable degree of visibility for Spanish literature in the major international databases, especially compared with its undercoverage in these databases 15 years ago. Nevertheless, this level of coverage is not sufficient for adequately evaluating researchers, departments or schools in SSH. If only the scholarly production included in Web of Science (WoS) or Scopus is taken into account, a type of output that is essential in SSH is underestimated: works published in national languages that have a regional or local scope.
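The percentages implied by these 2012 counts can be worked out directly. The short sketch below computes each source's share of the 1,826 Spanish SSH journals separately; since the overlap between AHCI, SSCI and ERIH is not reported here, the shares should not be added together as if they were a single coverage figure.

```python
# Counts for Spanish SSH journals as reported in the text (2012 data).
SSH_JOURNALS = 1826
COVERAGE = {
    "AHCI": 58,
    "SSCI": 44,
    "ERIH (2007 and 2011 lists)": 214,
}


def coverage_shares(total, covered):
    """Return each source's coverage as a percentage of all Spanish SSH journals."""
    return {source: round(100 * n / total, 1) for source, n in covered.items()}


if __name__ == "__main__":
    for source, pct in coverage_shares(SSH_JOURNALS, COVERAGE).items():
        print(f"{source}: {pct}% of {SSH_JOURNALS} titles")
```

The result (roughly 3.2 %, 2.4 % and 11.7 %) makes concrete why coverage in international databases alone cannot support a complete evaluation of SSH output.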

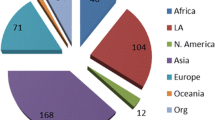

As shown in Fig. 1, the number of Spanish journals not covered by any of these sources is enormous, a group too large to be dismissed. There are at least three reasons for this lack of coverage: (a) there may simply be too many journals published in these areas, which can be explained not only by the existence of different schools of thought but also by the eagerness of universities to have their own reference publications as a marker of their status within the scholarly community; (b) some of these journals lack quality and professionalization; and (c) there are high-quality journals that will never be covered by those databases because they lack internationality (they specialize in local topics), because they are published in Spanish, and because international databases need to define a limited corpus of source journals. It is important to note, on the one hand, that indexing new journals is costly and, on the other hand, that the selective nature of these databases makes them suitable for evaluation purposes.

Providing a solution to this problem has been a priority for different research groups in Spain. In the last two decades, several open indicator systems covering Spanish scholarly journals have been created, especially for SSH. In all cases, the main motivation was to build national sources of journal indicators that complement international sources, so as to obtain a complete picture of scholarly output in SSH.

The construction of those tools constitutes the applied research carried out by the aforementioned research groups, while their theoretical research has focused on the communication and citation habits of humanists and social scientists, as well as on Spanish science policy and its research evaluation processes. This work has led to a clear conclusion: not only is it desirable to provide indirect quality indicators for the whole set of journals in a given country, but, for research evaluation in these fields to succeed, it is also necessary to pay attention to scholarly books, recognize their role as scientific output, increase their weight in assessment processes, and develop and apply indicators that can support assessment without providing the ultimate verdict (Giménez-Toledo et al. 2015).

2 Research Evaluation in Social Sciences and Humanities in Spain

Research evaluation in Spain is not centralized in a single institution. Several agencies include among their aims the assessment of higher education and research institutions, research teams, research projects and scholars. All these agencies are publicly funded and depend on the Spanish Public Administration; nevertheless, their procedures and criteria are not harmonized. This lack of coordination can be partially explained by the different objectives of each agency, but it puzzles scholars and causes confusion regarding national science policy, which ought to be a single, coherent one.Footnote 1

The three main evaluation agencies in Spain are CNEAI, ANEP and ANECA. CNEAI (National Commission for the Assessment of Research Activity) is in charge of evaluating lecturers and research staff, through assessing their scientific activity, especially their scientific output. Every 6 years, each researcher may apply for the evaluation of his/her scholarly activity during the last 6 years. A successful result means a salary complement, but what is more important is the social recognition that this evaluation entails: it enables promotions or appointment to PhD committees, or even having a lower workload as a lecturer (BOE 2009).

ANEP (National Evaluation and Foresight Agency) assesses research projects. Part of its work involves evaluating the research teams that lead them. The Ministry gives considerable weight to its reports when deciding whether or not to fund research projects.

Finally, ANECA (National Agency for Quality Assessment and Accreditation) has the ultimate goal of contributing to improving the quality of the higher education system through the assessment, certification and accreditation of university degrees, programmes, teaching staff and institutions.

The Ministry of Economy and Competitiveness, which currently handles research policy matters,Footnote 2 also performs ex ante and ex post assessments of the projects it funds, although the executive channel for that assessment is ANEP. In addition, FECYT (the Spanish Foundation for Science and Technology) deals with assessment issues, since it is tasked with evaluating the execution and results of the Spanish National Research Plan. Its conclusions, however, target neither researchers nor universities directly, but national science policy as a whole.

Unlike in other European countries, Spanish assessment agencies are not funding bodies. Each of them establishes its own evaluation procedures, criteria and sources from which to obtain indicators.

Over the past several years, all of these organizations have progressively defined specific criteria for different groups of disciplines, in recognition of their differences. This has happened not only in SSH but also in other fields, such as engineering and architecture. Some researchers regard these field-specific criteria as a less demanding subsystem for certain disciplines. Nevertheless, if communication patterns differ because of the nature of the research, research evaluation methods clearly should not ignore those differences. Moreover, assessment by field or discipline is not unique to the Spanish context; a clear example of such methodologies is the assessment system applied in the UK Research Excellence Framework (REF).Footnote 3

The difference in the assessment procedures established by Spanish agencies can be clearly seen in the criteria for publications. With respect to SSH, the following points are worth mentioning:

-

Books are taken into account. This might seem obvious, but, in other disciplines, they are not considered at all. In SSH, some quality indicators for books or book publishers are foreseen (see below).

-

Regarding journals, and as a common pattern for all fields, WoS is the main source; hierarchically, it carries much more weight than the others. Nevertheless, there are two relevant differences in journal sources for SSH. On the one hand, alternative international sources, such as ERIH, Scopus and Latindex, are also mentioned, even if they appear to carry less weight. On the other hand, national sources, such as DICEFootnote 4 or IN-RECS,Footnote 5 which provide quality and impact indicators for Spanish journals, are considered as well.

The fact that both national and international sources are used to obtain quality indicators for journals (impact, visibility, editorial management, etc.) does not mean that all sources have the same status or weight. It does, however, make a more complete research evaluation possible, one that considers most of the scholarly production of an author, research team, etc., and not only what is indexed by WoS. Since some national sources include all journals published in the country, expert panels consider the values of the indicators (level of internationalization, peer review, etc.), not merely a journal's inclusion in the information system.

This was not the case 15 years ago. Since then, the emergence of the various evaluation agencies, the development of national scientific research plans and the demands of the scientific community have led ANECA, CNEAI and ANEP to gradually refine their research evaluation criteria, specifically those referring to publications.

3 Spanish Social Sciences and Humanities Journals’ Indicators

Like some Latin American countries, such as Colombia, Mexico or Brazil, Spain has extensive experience in the study of its scholarly publications, both in their bibliographic aspects, such as identification and content indexing, and in their bibliometric or evaluative dimensions.

The Evaluation of Scientific Publications Research Group (EPUC),Footnote 6 recently transformed into ÍLIA (Research Team on Scholarly Books), is part of the Centre for Human and Social Sciences (CCHS) at the Spanish National Research Council (CSIC). It was created in 1997 to carry out the first systematic studies on the evaluation of scientific journals in SSH.

Shortly thereafter, Spain joined the Latindex system (journal evaluation system, at the basic level, for the countries of Latin America, Spain and Portugal), and this group took charge of representing Spain in this system until 2013.

The team is dedicated to the study of scholarly publications in SSH, particularly the development and application of quality indicators for scholarly journals and books. One objective of this research is to characterize published SSH research so that research evaluation systems can take into account the particularities of scholarly communication in these fields without relaxing quality requirements. Another is to raise, by means of evaluation, the average quality of Spanish publications.

During the last decade, the team developed the journal evaluation systems RESHFootnote 7 and DICE.Footnote 8 The former was built and funded within the framework of competitive research projects (Spanish National Plan for Research, Development & Innovation), while the latter was funded by ANECA between 2006 and 2013. Funding deserves mention because it is crucial not only for creating rigorous and reliable information systems but also for guaranteeing their sustainability. Going even further, public institutions should support the production of indicators that can be used to evaluate research outputs, since those outputs are mostly produced with Spanish public funds (METRIS 2012, p. 25). In this way, public funding yields open systems that are available, as a public service, to all researchers, guaranteeing transparency and avoiding the extra-scholarly interests of non-public database producers. Furthermore, these systems complement the information that can be extracted from international databases.

Unfortunately, the production of indicators for Spanish publications has not had stable funding. Even the funding of DICE by ANECA, probably the most stable source, ended in 2013 due to budgetary cuts.

As regards RESH and DICE, although they are no longer updated, they are still available online, and they have influenced other Latin American systems. Both systems provided quality indicators for Spanish SSH journals and were useful for researchers, publishers, evaluators of scientific activity and librarians. In addition, they were an essential source of information for the studies carried out by EPUC, as they permitted the recognition, for each discipline, of publication practices, the extent of the validity of each indicator, the particular characteristics of each publication, the level of compliance with editorial standards, the kind of editorial management, etc.

The most complete of these is RESH (see Fig. 2), developed in collaboration with the EC3 group from the University of Granada. It includes more than 30 indirect quality indicators for 1,800 SSH journals.

Users can browse all Spanish scholarly journals classified by field. For each title, the system shows its level of compliance with the different indicators established by evaluation agencies (ANECA 2007; see Table 1 for a list of indicators). Among them are peer review (refereed/non-refereed journal), the databases indexing/abstracting the journal, features of the editorial/advisory board (internationality and institutions represented), the percentage of international papers (international authorship) and compliance with the stated publication frequency.

This kind of layout makes the system practical. In other words, agencies may check the quality level of a journal according to their established criteria; researchers may search for journals of different disciplines and different levels of compliance with quality indicators; and editors may check how the journals are behaving according to the quality indicators (Fig. 3).
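The kind of lookup described above can be sketched as follows. This is a minimal illustration under assumptions: the `Journal` record, its field names, the criteria and the 20 % international-authorship threshold are hypothetical and are not RESH's actual data model or ANECA's official thresholds. It shows how per-journal compliance can be reported and how users could filter by discipline and minimum compliance level.

```python
from dataclasses import dataclass, field


@dataclass
class Journal:
    # Hypothetical record; field names are illustrative, not RESH's schema.
    title: str
    discipline: str
    peer_reviewed: bool
    international_board: bool     # editorial/advisory board includes foreign members
    international_authors: float  # share of papers by foreign authors (0 to 1)
    meets_frequency: bool         # published on its stated schedule
    databases: list = field(default_factory=list)


# Illustrative criteria: each maps a name to a yes/no test on a journal.
CRITERIA = {
    "peer review": lambda j: j.peer_reviewed,
    "international board": lambda j: j.international_board,
    "international authorship": lambda j: j.international_authors >= 0.2,  # assumed threshold
    "publication frequency": lambda j: j.meets_frequency,
    "indexed in databases": lambda j: len(j.databases) > 0,
}


def compliance(journal):
    """Return the names of the criteria the journal meets, in CRITERIA order."""
    return [name for name, test in CRITERIA.items() if test(journal)]


def search(journals, discipline, min_criteria):
    """Journals in a discipline meeting at least `min_criteria` criteria, best first."""
    hits = [
        j for j in journals
        if j.discipline == discipline and len(compliance(j)) >= min_criteria
    ]
    return sorted(hits, key=lambda j: len(compliance(j)), reverse=True)


if __name__ == "__main__":
    # Invented journals, for illustration only.
    sample = [
        Journal("Journal A", "History", True, True, 0.25, True, ["Scopus"]),
        Journal("Journal B", "History", False, False, 0.0, True, []),
    ]
    for j in search(sample, "History", min_criteria=3):
        print(j.title, compliance(j))
```

Keeping each criterion as a separate yes/no test, rather than collapsing them into one score, mirrors the point that agencies, researchers and editors each read the same record against their own criteria.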

RESH also included three more quality indicators not specifically mentioned by evaluation agencies:

-

Number and names of the databases indexing/abstracting the journal, as a measure of the journal's dissemination (see Fig. 3). This information was obtained by searching and analysing the lists of publications indexed in national and international databases.

-

An indicator based on expert opinion, since scholars are the only ones who can judge the quality of a journal's content. This indicator was obtained from a survey of Spanish SSH researchers carried out in 2009, which had a response rate of over 50 % (more than 5,000 answers). Including this element in the integrated assessment of a journal can reveal correlations (or the lack thereof) among different quality indicators, allowing a more accurate analysis of each journal.

-

An impact measure for each journal, similar to the Thomson Reuters Impact Factor but calculated solely on the basis of Spanish SSH journals. These data reveal how Spanish journals cite Spanish journals.
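The text states only that this measure is similar to the Thomson Reuters Impact Factor. A minimal sketch, assuming the same two-year window (citations received in year Y by items published in Y-1 and Y-2, divided by the citable items of those two years) and counting only citations made by Spanish SSH journals; the tuple layout of the citation data and the function name are hypothetical.

```python
def national_impact_factor(citations, citable_items, journal, year):
    """Two-year impact factor restricted to citations between national journals.

    citations: iterable of (citing_journal, citing_year, cited_journal, cited_year)
        tuples taken from the reference lists of the national SSH journals only.
    citable_items: dict mapping (journal, publication_year) -> number of citable items.
    Returns citations received in `year` by items published in the two previous
    years, divided by the citable items of those two years (0.0 if there are none).
    """
    window = (year - 1, year - 2)
    received = sum(
        1
        for _citing_journal, citing_year, cited_journal, cited_year in citations
        if cited_journal == journal and citing_year == year and cited_year in window
    )
    items = sum(citable_items.get((journal, y), 0) for y in window)
    return received / items if items else 0.0


if __name__ == "__main__":
    # Invented data: journal "Y" received 3 in-window citations in 2010
    # and published 20 citable items in 2008-2009.
    citations = [
        ("X", 2010, "Y", 2009),
        ("Z", 2010, "Y", 2008),
        ("Y", 2010, "Y", 2009),  # self-citation, counted as in the standard impact factor
        ("X", 2010, "Y", 2007),  # outside the two-year window, ignored
    ]
    items = {("Y", 2009): 10, ("Y", 2008): 10}
    print(national_impact_factor(citations, items, "Y", 2010))  # 0.15
```

Because the citing side is restricted to national journals, the resulting value is not comparable with an international impact factor; it describes citation flows within the Spanish SSH literature.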

Since no single indicator may summarize the quality of a journal, it seems to be more objective to take into account all these elements in order to provide a clear idea of the global quality of each publication.

4 Book Publishers Assessment

On the one hand, as mentioned previously, books are essential scholarly outputs for humanists and certain social scientists. Publishing books or using them as preferred research sources is not an arbitrary choice. On the contrary, books are the most adequate communication channel for the research carried out in the SSH fields.

On the other hand, SSH research should be evaluated not according to other fields' patterns but according to its own communication habits. This is not a question of SSH research being exceptional but of the nature and features of each discipline. Books therefore need to be given appropriate weight in the evaluation of scholarly output; otherwise humanists may, in the long run, be forced to research and publish in different formats, to the detriment of the advancement of certain kinds of knowledge.

Scholarly publications are the main pillar of the scholarly evaluation conducted by the different assessment agencies.

During the last decade, Spanish evaluation agencies have specified their journal evaluation criteria in detail. Consequently, the rules are now clearer and more specific for scholars. In the case of book assessment, however, there is still a lot of work to be done. Evaluation agencies have mentioned quality indicators for books, but apart from citation products such as the Book Citation Index, Scopus and Google Scholar, there were no sources offering data that could make the evaluation of a given book more objective.

Spanish evaluation agencies have mentioned the following indicators for assessing books in SSH: citations, editors, collections, book reviews in scholarly journals, peer review, translations into other languages, research manuscripts, dissemination in databases, library catalogues and publisher prestige. Generally speaking, however, these criteria are formulated in a diffuse or subjective way that makes an objective assessment difficult.

5 Publisher’s Prestige

One possible approach to inferring the quality of books is to focus on the publisher. In fact, publisher prestige is one of the indicators most often cited by evaluation agencies. Moreover, analysing quality at the publisher level seems more feasible and efficient than at the series or book level, at least if a qualitative approach is pursued. Once the quality or prestige of a publisher is established, the quality of the monographs it publishes can be inferred to some extent. The same happens with scholarly papers: they are valued according to the quality or impact of the journal in which they are published.

With the aim of studying the quality of books in more depth, and mainly to provide some guideline indicators on the subject, the ILIA research team has been working on the concept of publisher prestige. In the framework of our latest research projects,Footnote 9 we asked what publishing prestige is, how it could be defined, which publishers are considered prestigious and how the concept could be made more objective.

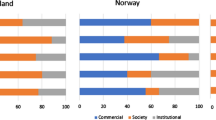

The main objectives of this researchFootnote 10 have been (a) to identify the indicators or features most valued and accepted by Spanish SSH researchers for evaluating books or book publishers, (b) to identify the most relevant publishers according to expert opinion and (c) to analyse how these results could be used in evaluation processes.

To achieve these objectives, ILIA designed a survey aimed at Spanish researchers working in the different SSH disciplines. Their opinion is the closest available approximation to the quality of the monographs a publisher issues, since they are the specialized readers and authors who can judge the content of the works, albeit globally. Because the results are opinions, there is always room for bias. Bias nevertheless weakens when the population consulted is large and the response rate is high.

The survey was sent by e-mail to about 11,000 Spanish researchers and lecturers, all of whom had at least one 6-year research period approved by CNEAI. In total, 3,045 completed surveys were returned, a response rate of 26 %.

One of the questions asked the experts to name the three most important publishers in their discipline. The Indicator of Quality of Publishers according to Experts (ICEE) was then applied to the results:

\[ \mathrm{ICEE} = \sum_{i=1}^{3} n_i \, \frac{N_i}{N_j} \]

where \(n_i\) is the number of votes received by the publisher in position i (1st, 2nd or 3rd), \(N_i\) is the number of votes received by all the publishers in each position (1st, 2nd or 3rd) and \(N_j\) is the total number of votes received by all publishers in all positions (1st, 2nd or 3rd).

The weight applied to the votes a publisher receives in each position is the mean number of votes received in that position (1st, 2nd or 3rd) divided by the sum of the means for the three positions. In the results, the weight is always larger for the first position than for the second, and larger for the second than for the third.

This indicator has allowed ILIA to produce a general ranking of publishers as well as separate rankings for each SSH discipline. The results show vast differences between the general ranking and the discipline-based ones. They therefore also highlight the value of using both kinds of ranking in any research assessment process, as each provides different and relevant information.
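The ICEE calculation and the two kinds of ranking can be sketched in a few lines of Python. Following the definitions above, each vote a publisher receives in position i is weighted by N_i/N_j, the share of all votes cast in that position, and the weighted votes are summed over the three positions. The ballot layout (an ordered list of up to three publisher names per respondent), the function names, the alphabetical tie-breaking and the choice to recompute the weights within each discipline are all assumptions of this sketch, not ILIA's implementation.

```python
from collections import Counter


def icee_scores(ballots):
    """Return {publisher: ICEE} for every publisher named in the ballots.

    Each ballot is a list of up to three publisher names in the order the
    respondent ranked them (1st, 2nd, 3rd). A vote in position i is weighted
    by N_i / N_j, the share of all votes that were cast in that position.
    """
    per_position = [Counter() for _ in range(3)]  # n_i for each publisher, i = 1..3
    for ballot in ballots:
        for position, publisher in enumerate(ballot[:3]):
            per_position[position][publisher] += 1
    totals = [sum(counts.values()) for counts in per_position]  # N_1, N_2, N_3
    grand_total = sum(totals)  # N_j
    if grand_total == 0:
        return {}
    weights = [n / grand_total for n in totals]  # N_i / N_j
    publishers = set().union(*per_position)
    return {
        p: sum(w * counts[p] for w, counts in zip(weights, per_position))
        for p in publishers
    }


def ranking(ballots):
    """Publishers ordered by descending ICEE; ties broken alphabetically (assumed)."""
    scores = icee_scores(ballots)
    return sorted(scores, key=lambda p: (-scores[p], p))


def rankings_by_discipline(tagged_ballots):
    """Map each discipline to its own ranking, recomputing N_i and N_j per discipline (assumed)."""
    groups = {}
    for discipline, ballot in tagged_ballots:
        groups.setdefault(discipline, []).append(ballot)
    return {d: ranking(b) for d, b in groups.items()}


if __name__ == "__main__":
    # Invented ballots, for illustration only.
    ballots = [["P1", "P2", "P3"], ["P1", "P3"], ["P2"]]
    print(ranking(ballots))  # ['P1', 'P2', 'P3']
```

Running `ranking` on all ballots gives the general ranking, while `rankings_by_discipline` applies the same indicator to each discipline's ballots; because respondents in different disciplines name different publishers, the two orderings can diverge, which is the difference the text reports.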

5.1 Scholarly Publishers Indicators

These rankings were first published on the Scholarly Publishers Indicators (SPI) websiteFootnote 11 in 2012. This information system aims to collect indicators of different kinds for publishers (editorial processes, transparency, etc.), not as definitive measures but as a guide to publisher quality. The indicators and information it includes are meant to inform, not to perform. To avoid the temptation of applying them automatically, responsible use of the system must be promoted.

Since 2013, SPI has been considered by CNEAI as a reference source, albeit not the only one, for the evaluation exercises in some fields of the humanities (history, geography, arts, philosophy and philology). This represents a challenge for further research and developments on this issue. It would be very interesting, for example, to extend the survey to the international scientific community, in order to consolidate and increase the robustness of the results.

6 Conclusions

The evaluation tools described above are a way to improve SSH research evaluation processes, or at least to provide them with more information. While experts provide their judgements on research results, indicators for publications offer objective information on the communication channel, serving as a guide for evaluation processes.

Complementary sources for journals, as well as indicators for books or book publishers, are needed at the national level if a fair and complete research evaluation is pursued. Although quality indicators for publications may be improved, refined or adapted to the special features of certain disciplines, three more complex problems have to be tackled: (a) gaining the acceptance of the scientific community for this kind of indicator, (b) finding a formula for funding these systems and (c) the relationship between large scientific information companies and the selection of information sources for evaluation purposes by evaluation agencies at the national and international levels. All of these should be studied in detail in order to address the underlying problems of evaluation tools. Without such research, any evaluation system will remain limited, biased or unaccepted.

Notes

- 1.

At the time of writing this chapter, ANECA (National Agency for Quality Assessment and Accreditation) and CNEAI (National Commission for the Assessment of Research Activity) are in the process of merging, and changes to the evaluation procedures have been announced, moving towards a more qualitative assessment adapted to the characteristics of each area.

- 2.

Since December 2011, as a consequence of the change of government. The former socialist government had created the Ministry for Science and Innovation, an organization more focused on research issues.

- 3.

- 4.

- 5.

http://ec3.ugr.es/in-recs/. IN-RECS was a bibliometric index that offered statistical information based on counts of bibliographic citations, seeking to determine the scientific relevance, influence and impact of Spanish social science journals.

- 6.

- 7.

- 8.

- 9.

Assessment of scientific publishers and books on humanities and social sciences: qualitative and quantitative indicators HAR2011-30383-C02-01 (2012–2014), funded by the Ministry of Economy and Competitiveness (R&D National Plan); and Categorization of scholarly publications on humanities & social sciences (2009–2010), funded by the Spanish National Research Council (CSIC).

- 10.

Some details on the first project may be found in Giménez-Toledo et al. (2013), p. 68.

- 11.

References

ANECA. (2007). Principios y orientaciones para la aplicación de los criterios de evaluación. Madrid: ANECA. Retrieved from http://www.aneca.es/content/download/11202/122982/file/pep_criterios_070515.pdf.

BOE. (2009). Resolución de 18 nov. 2009, de la Presidencia de la Comisión Nacional Evaluadora de la Actividad Investigadora, por la que se establecen los criterios específicos en cada uno de los campos de evaluación. Retrieved from https://www.boe.es/boe/dias/2009/12/01/pdfs/BOE-A-2009-19218.pdf.

Giménez-Toledo, E., Mañana-Rodriguez, J., Engels, T. C., Ingwersen, P., Pölönen, J., Sivertsen, G., & Zuccala, A. A. (2015). The evaluation of scholarly books as research output. Current developments in Europe. In A. A. Salah, Y. Tonta, A. A. Akdag Salah, C. Sugimoto, & U. Al (Eds.), Proceedings of the 15th International Society for Scientometrics and Informetrics Conference, Istanbul, Turkey, 29th June to 4th July, 2015 (pp. 469–476). Istanbul: Bogazici University. Retrieved from http://curis.ku.dk/ws/files/141056396/Giminez_Toledo_etal.pdf.

Giménez-Toledo, E., Tejada-Artigas, C., & Mañana-Rodriguez, J. (2013). Evaluation of scientific books’ publishers in social sciences and humanities: Results of a survey. Research Evaluation, 22(1), 64–77. doi:10.1093/reseval/rvs036.

Glänzel, W., & Schoepflin, U. (1999). A bibliometric study of reference literature in the sciences and social sciences. Information Processing & Management, 35(1), 31–44. doi:10.1016/S0306-4573(98)00028-4.

Hicks, D. (2004). The four literatures of social science. In H. F. Moed, W. Glänzel, & U. Schmoch (Eds.), Handbook of quantitative science and technology research: The use of publication and patent statistics in studies of S&T systems (pp. 476–496). Dordrecht: Kluwer Academic Publishers.

METRIS. (2012). Monitoring European trends in social sciences and humanities. Social sciences and humanities in Spain (Country Report). Retrieved from http://www.metrisnet.eu/metris//fileUpload/countryReports/Spain_2012.pdf.

Nederhof, A. J. (2006). Bibliometric monitoring of research performance in the social sciences and the humanities: A review. Scientometrics, 66(1), 81–100. doi:10.1007/s11192-006-0007-2.

Nederhof, A. J., & Zwaan, R. (1991). Quality judgments of journals as indicators of research performance in the humanities and the social and behavioral sciences. Journal of the American Society for Information Science, 42(5), 332–340. doi:10.1002/(SICI)1097-4571(199106)42:5<332:AID-ASI3>3.0.CO;2-8.

Thompson, J. W. (2002). The death of the scholarly monograph in the humanities? Citation patterns in literary scholarship. Libri, 52(3), 121–136. doi:10.1515/LIBR.2002.121.

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution-Noncommercial 2.5 License (http://creativecommons.org/licenses/by-nc/2.5/) which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

The images or other third party material in this chapter are included in the work’s Creative Commons license, unless indicated otherwise in the credit line; if such material is not included in the work’s Creative Commons license and the respective action is not permitted by statutory regulation, users will need to obtain permission from the license holder to duplicate, adapt or reproduce the material.

Copyright information

© 2016 The Author(s)

About this chapter

Cite this chapter

Giménez Toledo, E. (2016). Assessment of Journal & Book Publishers in the Humanities and Social Sciences in Spain. In: Ochsner, M., Hug, S., Daniel, HD. (eds) Research Assessment in the Humanities. Springer, Cham. https://doi.org/10.1007/978-3-319-29016-4_8

DOI: https://doi.org/10.1007/978-3-319-29016-4_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-29014-0

Online ISBN: 978-3-319-29016-4
