Abstract

Most video-conferencing technologies focus on 1-1, person-to-person links, typically showing the heads and shoulders of the conversants seated facing their cameras. This limits their movement and demands sustained foveal attention. Adding people to the conversation multiplies the complexity and competes for visual real estate and video bandwidth. Most coronal meaning-making activity is excised by this frontal framing of the participants, and the method does not scale well as the number of participants rises. This research presents a different approach to augmenting collaboration and learning. Instead of projecting people to remote spaces, furniture is digitally augmented to effectively exist in two (or more) locations at once. We present an autoethnographic analysis of the social protocols that emerged around this technology. We ask: how can such shared objects provide a common site for ad hoc activity in concurrent conversations among people who are not co-located but co-present via audio?

Keywords

- Collaboration technology

- Lifelong learning

- Problem-based learning

- Inquiry-based learning

- Project-based learning

- Blended learning

- Collaborative knowledge construction

- Interdisciplinary studies

- Social media

- Social networking

- Social processes

- Teams

- Communities

- Surface computing

- Technology-enhanced learning

- Technology-rich interactive learning environments

- Suturing spaces

- Tabletop displays

- Augmented reality

- Mixed reality

- Collaborative work

- Interior design

- Furniture design

- Responsive architecture

- Interactive architecture

- Smart architecture

- Smart objects

- Realtime media

- Real-time interactive media

- Responsive media

- Live video

- Live audio

- Gestural interfaces

- User interfaces

- Computational matter

1 Introduction

Since the early 2000s, video chatting has become a mainstay of everyday communication. These person-to-person links are well equipped to mimic the conditions of co-located interaction. When conversants face their cameras, the tightly coupled camera and screen of a laptop or desktop computer create the illusion of locked gaze. This has somatic and social implications: foveal attention (eye contact) is expected for the duration of the conversation, arguably for much longer than in co-located interaction. Adding people to the conversation multiplies the complexity and competes for visual real estate and video bandwidth. Most coronal meaning-making activity is excised by this frontal framing of the participants, an approach that has been adapted by some to accommodate larger numbers of co-discussants.

Instead of spotlighting single entities as talking heads, our approach to augmenting collaboration and learning focuses on making furniture and objects that effectively exist in multiple locations simultaneously. By focusing attention on a common table instead of on faces, we avoid the problems of representing people, such as the complexities of gaze tracking and of focusing cameras or microphones on individual speakers. We ask: how can such shared objects provide a common site for ad hoc activity in concurrent conversations among people who are not co-located but co-present via audio?

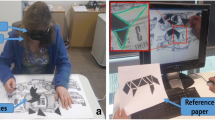

Our Table of Content (TOC) mates tables with two-way, continuous live video. Each table has a projector and a camera aimed down at its surface. Video captured on one side is transmitted to the remote projector, and vice versa. As a result, objects placed on one table appear projected on the other. This focuses attention on what is being discussed and on a common tabletop on which props, diagrams and simple gestures can be used with ad hoc freedom. Should the collaborators wish to see their remote counterparts’ faces, they can add a standard technology like Skype. We provide an omnidirectional microphone and good speakers so that people can speak at any point in the flow of conversation without the overhead of human gaze tracking. This conveys everyone’s presence and enables ad hoc concurrent engagement.

We rethink the process and methodology of designing for shared spaces. Rather than fixing on tele-projecting people or transporting things and data objects from location to location, we think of a single place that exists in two locations. We call this technique “suturing”, borrowing consciously from topology. Mated objects need not be the same size or shape, so long as symbolic and social activity coordinates those objects. Just as in topology one can suture two manifolds together with a “gluing map” that identifies dissimilar shapes, designers can identify objects that are quite different.

We emphasize the design metaphor that the TOC is furniture existing in two or more places at once. It is not a “communication channel” that requires dial-up protocols to initiate or terminate a connection; the TOC is engineered to run continuously so that people can gather around it and start using it at any time. We leverage all the existing social and technical protocols people use to gather around and share a table. Rather than treat collaboration as a telecommunication problem, we build furniture as sites of common activity.

The video [1] shows how this technology works. We implemented our common table in our labs in Montreal and Phoenix to serve several series of seminars. The seminars vary in format between round-the-table verbal discussions and single-speaker presentations.

In this paper, we focus on the social protocols that emerged from these sessions. To begin, we discuss precedent work in many-to-many conferencing and furniture-based telematic networking. Next, we briefly outline the TOC’s technical specifications. Turning to our observations of how we interacted with each other through the table, we discuss our negotiations with etiquette: how should we coordinate and interleave our interventions using tokens, gesture, vocal signals, etc.? In this vein, we share how our mediated communication affected perceptions of politeness and rudeness related to focus and attention. Here, we deal with the mixture of different streams of communication, such as augmenting the table with “foveal” media like talking-head videos of remote interlocutors or text messaging. We then discuss the interaction of sharing space, which allows people and objects to “overlap” in unique ways. Privacy and trust are important issues in the TOC’s design, which we investigate as well. Finally, we propose future work that responds to these first experiments.

2 Methods

2.1 Problem Statement

Aside from the engineering problems, some of the questions we address with this platform include:

- Coordinating Conversation. How should people coordinate and interleave their interventions using tokens, gesture, vocal signals, etc.?

- Time Zone. How can we handle time zone differences: two to three hours between Phoenix and Montreal, and seven to ten hours between Phoenix and London or Athens, depending on daylight saving time?

- Live vs Recorded. How do people mix live events with recorded audio, video or documents?

- Foveal vs Ambient Attention. How do people mix the table with foveal media such as the talking-head videos of remote interlocutors?

2.2 Literature Review

Using tabletop surfaces as communication and collaboration platforms has been a research interest for many institutions in academic and technological circles. This interest dates back to experiments at Xerox PARC such as the video walls described in [2], which connected research offices in California and Oregon to promote spontaneous interactions, ranging from social to work-related, between people in the two locations. Collaborative, networked solutions that extend beyond the computer screen into everyday spaces remain an interest of many researchers and groups, and are used in many applications that aim to enhance collaborative work across remote locations; other examples include [3–5].

Fish et al. [4] provide an affordable and easy-to-use setup for a tabletop collaboration platform, using an overhead projector, top-mounted tracking cameras, and a standard table surface for the projection. This project focuses on gesture recognition and computer vision tracking to enable collaborative manipulation of shapes, figures, and interface elements. While the setup of this project is similar to that of the Table of Content, we avoid tailoring the interaction to a specific set of tasks and instead leave the platform open to a multitude of use cases that rely on augmented presence without the computational complexity. Our work on the Table of Content draws on this long history of research and experimentation, but with a conscious effort to avoid reproducing work that has already been done and to approach the challenge from a different perspective.

Costanza et al. [6] present a survey of mixed reality applications, use cases, challenges (mostly technical), futures, and HCI considerations. In the survey, the tabletop interaction project developed at the Innovation Center Virtual Reality (ICVR) at ETH Zurich uses overhead table projection and computer vision tracking to augment people’s interaction with regular office supplies such as pens and rulers. The Holoport project extends a regular meeting table with a virtual one in a different location, allowing a face-to-face meeting with remotely connected counterparts. Our work shares similarities with these projects, since the Table of Content uses an augmented tabletop as a meeting and collaboration platform that allows participants to use everyday objects and projected imagery as tools for collaboration.

Ehnes [8] addresses content presentation, retrieval, and sharing by projecting meeting notes and relevant documents onto the tabletop. It applies a technique similar to the ETH Zurich tabletop project described in [6], allowing users to manipulate and move documents and figures through computer vision tracking, but relies on objects with special markings and shapes on and around the table to facilitate that interaction. The project is also designed to scale: it could be deployed on multiple meeting tables that share and exchange documents with each other.

Concerns over privacy and spatial social behavior in networked remote locations, as well as the distinction between place and space as two different concepts in networked mixed reality solutions, have been addressed in [9], which draws on social and linguistic definitions of everyday spatial practices and refines them as design considerations for networked systems. This is also approached in [8], where co-location and social conventions in shared and connected spaces are studied in experiments ranging from collaborative writing to collective design changes and tabletop interactions.

2.3 Methods and Materials

Each terminal point in a Table of Content network consists of the following pieces of hardware:

Furniture:

- Table: an oval table painted matte white.

Video:

- Projector: a projector mounted vertically above the table, projecting downward onto it.

- Camera: a monochrome video camera mounted vertically above the table, pointing downward to capture video of the table (see Note 1). The camera has an optical filter that passes infrared light and blocks visible light, so that it does not capture the projected image; capturing it would create a video feedback loop between the two tables.

Audio:

- Microphone(s): one omnidirectional microphone suspended above the centre of the table, or multiple shotgun or cardioid microphones positioned to pick up audio from different sectors of the table.

- Speaker(s): a wide-spectrum audio transducer mounted to the underside of the table, or an array of two to four speakers attached to the ceiling above the table.

- Amplifier: an audio power amplifier capable of driving the speaker(s).

Lighting:

- Controller: a DMX lighting controller (see Note 2) that controls the ambient lighting array, allowing the lights to be animated by data extracted from the video and/or audio feeds.

- Dimmer packs: a set of DMX dimmer packs (see Note 3), which vary the brightness of the ambient lighting array.

- IR emitter: an infrared light to illuminate the table without washing out the projected image (see Note 4).

- Ambient lighting array: an array of lightbulbs suspended from the ceiling and attached to the DMX control system to provide animatable ambient lighting.
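The lighting controller allows the lights to be animated by data extracted from the feeds. The following is a minimal Python sketch of one such mapping, assuming the video or audio analysis already yields an activity level between 0 and 1 per frame; the function names, the floor value and the smoothing constant are hypothetical. It only computes 8-bit channel values of the kind DMX512 dimmers expect (one byte per channel, up to 512 channels per universe); sending them through an interface such as the one listed in Note 2 is not shown.

```python
def activity_to_dmx(levels, floor=40, ceiling=255, smoothing=0.8):
    """Map activity levels (0.0-1.0) to 8-bit DMX dimmer values.

    floor keeps the room from going fully dark; smoothing (0-1) is the weight
    of an exponential moving average that prevents flicker. All three
    parameters are illustrative assumptions.
    """
    values = []
    smoothed = 0.0
    for level in levels:
        level = min(max(level, 0.0), 1.0)  # clamp to [0, 1]
        smoothed = smoothing * smoothed + (1.0 - smoothing) * level
        values.append(round(floor + (ceiling - floor) * smoothed))
    return values


def dmx_universe(channel_values, size=512):
    """Pack channel values into one DMX universe; unused channels stay at 0."""
    if len(channel_values) > size:
        raise ValueError("too many channels for one DMX universe")
    frame = bytearray(size)
    frame[: len(channel_values)] = bytes(channel_values)
    return frame
```

The smoothing trades responsiveness for calm: a sudden burst of activity brightens the room over several frames rather than instantly.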

Computer:

- A computer running custom patches written in Max/MSP/Jitter (available at https://github.com/Synthesis-ASU-TML/Synthesis); TML uses a 2011 Mac Pro.

The Software:

- masking and mapping

- fluids and particles

- weighted control between local and remote feeds

- Recording and playback: the Software can record the video feed acquired from the overhead camera and encode it into video files stored on the local computer’s hard drive. Once recorded, the feed can be used as a video source that is streamed and mapped onto the other tabletop during periods of inactivity or when the feed source is intentionally changed. This feature accounts for important events or meetings that happened in one of the two locations but were not streamed live because of time differences or schedule conflicts.

- key software: Max/MSP/Jitter, Syphon, Syphon Camera, and Syphon Recorder (for documentation and looping)

- ffmpeg and the Jitter [vipr] external (Fig. 1).
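The “weighted control between local and remote feeds” can be read as a crossfade. The sketch below is a hypothetical Python illustration, not the actual Max/MSP/Jitter patch: it blends two equally sized grayscale frames, represented as lists of rows with values 0 to 255, by a single weight.

```python
def mix_feeds(local, remote, weight):
    """Crossfade two equally sized grayscale frames (lists of rows, values 0-255).

    weight = 0.0 yields only the local feed, 1.0 only the remote feed.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    if len(local) != len(remote) or any(len(a) != len(b) for a, b in zip(local, remote)):
        raise ValueError("frames must have the same dimensions")
    return [
        [round((1.0 - weight) * a + weight * b) for a, b in zip(row_l, row_r)]
        for row_l, row_r in zip(local, remote)
    ]
```

In use, the weight might be driven by a slider, or by logic that fades toward a recorded source when the remote table is idle.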

2.4 Data Collection

In order to obtain relevant experimental results that are experiential in nature, we employed an autoethnographic method inside a qualitative methodology using a grounded theory approach. Data was archived as email, video collections and a series of shared online documents. Data was coded, excerpted and analyzed for relevant information regarding current behavioral practices and enhancements to the Table of Content system.

3 Analysis of Emergent Social Protocols

This section presents the design considerations resulting from the observations and analysis recorded during our TOC seminar sessions. These mainly concern the social protocols and interaction patterns that emerged during the seminars, which were discussed and categorized during the iterative design process (Fig. 2).

3.1 Turn-Taking

Imbalances between the number of people on one side of the table and the other often made it difficult for people in the less numerous group to interject. In such cases, the local band overrode the video and audio bands. Corporeal presence during a formal discussion demanded attentiveness in a way that the media streams did not; if one discussant ignores a co-present interlocutor, it is readily apparent. In our conditions, however, it was much less apparent to the speaker whether people in the remote location were paying attention to his or her transmitted speech. We believe this is also partly due to seminar convention, in which the desire to interject is signified through a silent but visible act, namely raising a hand. In our conditions, simply waving one’s arms below the camera did not provide an adequate remote analog, because the projected video was less compelling of attention, all the more so when it was rendered illegible by the plethora of notebooks, coffee cups, and snack bowls covering a well-attended seminar table.

To mediate turn-taking between the two groups of seminar discussants, we employed an intuitive solution: electing one person to act as the liaison between the groups, deliberately making space in the conversation for each side to speak and prompting responsive engagement between them. Our events organized around and through the table benefited greatly from the designation of a facilitator on each side, whose role was to monitor the conversational dynamics and actively bridge the conversation between the two groups. The delegation of speaking permissions imparted a strong feeling of administrative cohesiveness and experiential togetherness, not unlike in co-located seminars, which remedied perceived social instability or imbalance.

3.2 Etiquette of Attentiveness or Politeness and Rudeness

Our perceptions of attentiveness, and thus of politeness or rudeness, while using the TOC differed from the norms of face-to-face conversation (co-located or remotely located). In face-to-face conversation, visibly switching one’s bodily orientation or attention away from an interlocutor (e.g. by checking messages on a phone, looking something up on the computer, or fetching an object from elsewhere in the space) may be interpreted as rude. However, the many-to-many dynamic of conversations mediated by the TOC made the social dynamic pliant in this regard. If a speaker noticed in the projected image that a remote discussant was working on his or her computer, the speaker did not interpret this as distraction on the part of their remote counterpart. This derived in part from the fact that foveal attention, or Skype-style talking-head visual presence, was never established. Activity seen from a bird’s-eye view therefore did not represent a deviation from signifiers of attentiveness (e.g. eye contact) that were never present in the first place. Attentiveness was mainly signified within the audio domain via practices such as turn-taking. Once a conversation was established, each speaker in the sequence assumed (though not necessarily with justification) that the other participants were listening. In this sense, our device supported what we call “multi-band communication.”

3.3 Multi-band Communication

We designed the TOC to scaffold four separate communication bands: visual, auditory, ancillary, and corporeal. Each of these bands has specific characteristics and “bandwidth.”

In the audio band, bandwidth was relatively narrow, at least during intentional activities such as seminars when dialogue between remote locations was desirable. Typically, for reasons both of perceived attentiveness and intelligibility, turn-taking conventions precluded more than one person speaking/being heard at a time. When this one-at-a-time speaking protocol is observed, we suggest that the audio band is the main region where individual identity is established. In the absence of the habitual legibility of body language that comes from a head-on perspective, we contend that the audio region also acts as the main site of non-lexical signalling (e.g. tone of voice, rhythm of speech).

Video was the domain of (a) relatively impersonal presence and (b) symbolic information. In terms of presence, the sight of arms, cups, the tops of heads, etc. in the projected image gave a sense of the type and quantity of activity taking place at the table, but was relatively thin compared to face-based video chatting in terms of conveying personal information (e.g., body language). Our participants’ attention sometimes drifted from the projected image without feeling like the quality or focus of the conversation was compromised. This changed when deliberate visual signifying activities (e.g. collaborative drawing) occurred, since these activities required collaboration from all parties (e.g. to clear space on the table so that the drawn images or text could be seen, and to participate in the co-creation of images). Video was high-bandwidth in the sense that multiple distinct activities could take place around the table without garbling the signal, although we found that intelligibility did decrease as the visual complexity/density of the set of objects on either table increased.

The ancillary band is a catch-all term for all communication between people at remote tables that took place via media other than the tables themselves. SMS and instant messaging are two examples. During seminars, the researchers in charge of the technical setup often communicated with each other over established internet chat platforms to work out calibration issues (e.g. “what’s your port?” or “lower the contrast please”). Arguably, communication in this band could take place in parallel with the intra-table video and audio streams without disrupting them (although prominently texting during a seminar was locally interpreted as rude) (Fig. 3).

Finally, the local/corporeal band simply designates activity which took place locally at either table, with the full “bandwidth” of a conventional meeting/conversation.

3.4 Overlapping in Shared Space

The overlaying of projected images of bodies onto corporeally present bodies is a unique intersection that produced a range of effects whose specificity, we argue, is determined by the context of the conversation at hand. In some cases, occupying the same space as someone’s image produced feelings of intimacy (similar to the territory explored in [10]); this was more likely when participants were already familiar with each other, or when the event was less formal. During a seminar, on the other hand, situating oneself in the space occupied by the image of someone who is currently speaking appeared impolite, inasmuch as one is not making (visual) space for the speaker. Then again, aligning one’s body with the image of a remote speaker who is dominating the conversation at times produced or conveyed feelings of identity. In our seminars, both sides of the conversation aligned a clock on the TOC to keep track of the time difference between Phoenix and Montreal, which shifts with daylight saving time because Phoenix does not observe it. This further emphasizes the metaphorical singularity of our TOC, which exists simultaneously in two distinct time zones.

3.5 Privacy and Trust

Privacy. Insofar as the table streams video and audio data to remote locations, and also records video and audio for later viewing, we considered seriously the question of privacy. The transmit-receive function of the table was apparent even without explanation simply from its physical design (a visible microphone, a projector showing a remote location and a camera capturing the table). Often, when people encountered the table for the first time, one of the first questions they asked was, “Is that thing streaming?” or “Am I being recorded?” Arguably, the essence of surveillance technology is the non-consensual capture, remote viewing and storage of data. Surveillance practices involve a hierarchy, setting some people as informed viewing subjects over others as ignorant viewed objects. We considered it best to orient the table away from such an unethical structure at the level of engineering/design. Since the constant telematic connection of the remote tables was integral to establishing a sense of the tables existing as a single object shared between two spaces, we suggest that the media streams be regarded as providing two types of data, namely presence and representation. The video and audio components of the table provide a sense of remote presence without conveying representational content. In order to sense remote presence, we simply needed to see perturbations in the audio and video streams that have no apparent local cause.
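The idea of sensing presence through perturbations in the streams can be made concrete with simple frame differencing. This Python sketch assumes low-resolution grayscale frames from the remote camera; the function names and the threshold are illustrative assumptions, not part of the TOC software. It reports only how much changed, not what changed, which mirrors the distinction between presence and representation drawn above.

```python
def presence_score(prev_frame, frame):
    """Mean absolute per-pixel change between two grayscale frames (0-255)."""
    total, count = 0, 0
    for row_prev, row in zip(prev_frame, frame):
        for a, b in zip(row_prev, row):
            total += abs(a - b)
            count += 1
    return total / count if count else 0.0


def presence_events(frames, threshold=8.0):
    """One boolean per consecutive pair of frames: did the remote table change?"""
    return [presence_score(a, b) > threshold for a, b in zip(frames, frames[1:])]
```

A single number per frame pair is all that would need to cross the network to signal that someone is active at the other table.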

Trust. The Table of Content can be used as a playback medium for recorded materials that are managed by users on the respective sides at ASU and Concordia. These videos are designed to play in a loop during times of inactivity, or at will through user interaction for specific events of mutual interest. Earlier discussions about computing the level of activity, or performing specific gesture analysis, as a trigger for the recording process precipitated several privacy and trust concerns that needed to be dealt with at the design stage. For this reason, our implementation required a clear, intentional, and embodied gesture to start and stop recording: clicking on an unambiguous interface element. Users on each side decided whether to make their content available for streaming. This was an immediate solution to concerns about content storage and availability. New models for managing storage, content, and interaction paradigms to control these issues are being discussed for future implementations.
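The combination described here (looped playback when the table is idle, recording only on explicit request) can be summarised as a small state object. The Python sketch below is a hypothetical illustration; the class name, the five-minute default timeout and the string labels are assumptions.

```python
from dataclasses import dataclass


@dataclass
class TableSource:
    """Decide whether the table shows the live remote feed or a recorded loop.

    inactivity_timeout (seconds) is an assumed parameter. Recording is never
    triggered by activity analysis; only toggle_recording() changes it.
    """

    inactivity_timeout: float = 300.0
    last_activity: float = 0.0
    recording: bool = False
    playback_requested: bool = False

    def note_activity(self, now: float) -> None:
        """Call whenever the remote feed shows activity (e.g. a presence event)."""
        self.last_activity = now

    def toggle_recording(self) -> bool:
        """Bound to an explicit, unambiguous interface element; returns new state."""
        self.recording = not self.recording
        return self.recording

    def source(self, now: float) -> str:
        if self.playback_requested:
            return "recorded"  # users asked for a specific recorded event
        if now - self.last_activity >= self.inactivity_timeout:
            return "recorded"  # idle table: loop the shared recordings
        return "live"
```

Keeping `recording` independent of `note_activity` makes the design choice explicit: activity may change what is shown, but never what is stored.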

4 Implications and Moving Forward

4.1 Turn Taking

To facilitate turn-taking, we suggest integrating a gesture recognition engine into the table and designing a gesture that causes a difficult-to-ignore visual signal on the remote table. This could be as simple as making the table’s brightness or colour pulsate, an agreed-upon cue that the other side wishes to speak. The gesture itself could be as elegant as haptic or auditory feature detection evoked by scratching or tapping on the table, or as gross as a large red button in the middle of the table. It should not interfere with the “gesture-space” of actions that people normally perform at a table. For example, looking for hands placed on the table would not be a good strategy, as people often rest their hands on the table by default.
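One way to realise the tapping gesture and the pulsating cue is sketched below in Python, assuming a contact microphone on the table whose samples are normalised to the range -1 to 1. A deliberate double tap (two peaks within a short window) is required so that a single bump is less likely to trigger the cue. The thresholds, window lengths, brightness range and function names are all hypothetical.

```python
import math


def detect_taps(samples, sample_rate, threshold=0.5, refractory_s=0.15):
    """Return times (s) of sharp peaks; peaks within refractory_s are merged."""
    taps = []
    last = -math.inf
    for i, x in enumerate(samples):
        t = i / sample_rate
        if abs(x) >= threshold and t - last >= refractory_s:
            taps.append(t)
            last = t
    return taps


def wants_to_speak(tap_times, window_s=0.6):
    """A deliberate double tap: two consecutive taps within window_s seconds."""
    return any(b - a <= window_s for a, b in zip(tap_times, tap_times[1:]))


def pulse_brightness(t, period_s=1.0, low=0.3, high=1.0):
    """Brightness multiplier for the remote table while the cue is active."""
    phase = 0.5 * (1.0 - math.cos(2.0 * math.pi * t / period_s))  # 0 -> 1 -> 0
    return low + (high - low) * phase
```

The brightness multiplier could feed the projector output or the DMX lighting, so the cue is visible even when the tabletop is cluttered.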

4.2 Privacy

To protect users’ privacy without losing the ability to convey real-time presence, we suggest streaming a low-resolution version of the overhead camera feed from Phoenix and using it as a control matrix for a particle system in Montreal, and vice versa. In this case, only the particle system is displayed on the table; the full-resolution video feed (which we call the “representational” feed) does not appear. When someone in Phoenix moves at the table, the particles in Montreal are dispersed accordingly. The rhythm and intensity of the particles’ movement conveys a nuanced sense of presence, even of the genre of activity taking place, without literally representing the image of the remote party.
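A minimal Python sketch of this proposal follows. It assumes the sending side downsamples a frame-difference image into a coarse grid of motion magnitudes (0 to 255) and sends only that grid; the receiving side uses each cell to kick the particles above it. The block-averaging downsampler, the gain and damping constants and the function names are our assumptions, not the TOC’s fluid and particle patches.

```python
import random


def downsample(frame, factor):
    """Average non-overlapping factor x factor blocks of a grayscale frame."""
    height, width = len(frame), len(frame[0])
    return [
        [
            sum(frame[y][x] for y in range(by, by + factor) for x in range(bx, bx + factor))
            / (factor * factor)
            for bx in range(0, width - factor + 1, factor)
        ]
        for by in range(0, height - factor + 1, factor)
    ]


def step_particles(particles, control, width, height, gain=0.05, damping=0.9, rng=None):
    """Advance particles one frame, kicking those that sit over active control cells.

    particles: list of [x, y, vx, vy] in local table coordinates.
    control: low-resolution grid of motion magnitudes (0-255) received from the
    remote side; only this grid, never the camera image, crosses the network.
    """
    rng = rng if rng is not None else random.Random()
    rows, cols = len(control), len(control[0])
    for p in particles:
        x, y, vx, vy = p
        row = min(int(y / height * rows), rows - 1)
        col = min(int(x / width * cols), cols - 1)
        kick = gain * control[row][col]
        vx = damping * vx + kick * rng.uniform(-1.0, 1.0)
        vy = damping * vy + kick * rng.uniform(-1.0, 1.0)
        p[0] = min(max(x + vx, 0.0), width)  # keep particles on the table
        p[1] = min(max(y + vy, 0.0), height)
        p[2], p[3] = vx, vy
    return particles
```

Because the kicks are random in direction but scaled by remote motion, a busy remote table stirs the local particles vigorously while a quiet one lets them settle.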

Acoustically, we suggest running the microphone feed through a low-pass filter, so that the cadence of speech can be heard but the content is unintelligible. This solution will be run in parallel with a more poetic implementation, in which the microphone audio is run into a feature extraction object that sends the resulting data stream (but not the audio) to the other side, where the data controls musical or abstract sonic instruments, creating a sonification of presence. In this case, we can imagine hearing a set of synthetic chimes playing with the cadence of a remote person’s speech. Here again, we have a means of conveying presence without non-consensually broadcasting meaning.
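Both acoustic options can be sketched in a few lines of Python. The filter below is a cascade of simple one-pole low-pass stages (each rolling off at about 6 dB per octave above the cutoff); the 300 Hz cutoff and four stages are assumptions, and whether they make speech truly unintelligible would have to be tested by ear. The RMS envelope is one example of a feature stream that could be sent instead of audio to drive the proposed chimes; the window length is likewise an assumption.

```python
import math


def lowpass(samples, sample_rate, cutoff_hz=300.0, stages=4):
    """Cascaded one-pole low-pass filter.

    Each stage computes y[n] = y[n-1] + a * (x[n] - y[n-1]); cascading stages
    steepens the roll-off so syllabic rhythm survives while high-frequency
    detail is strongly attenuated.
    """
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out = list(samples)
    for _ in range(stages):
        y = 0.0
        for i, x in enumerate(out):
            y += a * (x - y)
            out[i] = y
    return out


def rms_envelope(samples, sample_rate, window_s=0.05):
    """Per-window RMS level: a compact data stream describing speech cadence."""
    n = max(1, int(window_s * sample_rate))
    return [
        math.sqrt(sum(x * x for x in samples[i:i + n]) / len(samples[i:i + n]))
        for i in range(0, len(samples), n)
    ]
```

At a 50 ms window, the envelope amounts to 20 numbers per second, far less than the audio itself and without its lexical content.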

The solutions described here could be implemented as default behaviours for the table. Should a party wish to establish representational communication, a gesture (corporeal, spoken, GUI-based, etc.) could be used to “clarify” the visual and auditory streams, replacing the low-resolution information with full-frequency audio and full-resolution video. In keeping with our concerns about the social protocol aspect of the design, “opening the table” should require a two-way handshake (the same way invitations to voice or video calls work in Skype). Alternatively, each side could autonomously decide to clarify or obscure its streams independently of the status of the other side’s streams. The important point, we believe, is that in a perpetually running communication system, broadcasting or storing data should require voluntary and intentional initiation.
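The two consent policies can be contrasted in a small Python sketch. The class, the side names and the mode labels are hypothetical; the point is that presence-only streaming is the default, and representational streams require an explicit action.

```python
class TableLink:
    """Tracks whether each side sends "representational" or "presence" streams.

    Presence-only (obscured) is the default. With mutual=True, representational
    streams open only after both sides consent (a two-way handshake); with
    mutual=False, each side clarifies its own outgoing streams independently.
    """

    def __init__(self, sides=("Phoenix", "Montreal"), mutual=True):
        self.mutual = mutual
        self.consent = {side: False for side in sides}

    def clarify(self, side):
        """An intentional gesture or GUI action by one side."""
        self.consent[side] = True

    def obscure(self, side):
        """Withdrawing consent takes effect immediately."""
        self.consent[side] = False

    def outgoing_mode(self, side):
        is_open = all(self.consent.values()) if self.mutual else self.consent[side]
        return "representational" if is_open else "presence"
```

For example, with the default handshake, `TableLink().outgoing_mode("Phoenix")` stays `"presence"` until both sides have called `clarify`.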

4.3 Recording

Managing the storage and archiving of recorded media presents multiple challenges at the technical and interaction levels, and at the level of administering the availability of the content. Recording long sessions and storing them on a local hard drive resulted in very large files and exposed us to the risk of losing the entire content if the software crashed during recording. We suggest an implementation that sets a maximum buffer length for recorded media and splits long recording sessions across multiple files. This protects the content from software-caused loss or damage and allows for a distributed archival system over multiple storage units. Future implementations will include a management console that allows users on each side to control the availability of their recorded content.
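The maximum-buffer idea amounts to rotating output files. The Python sketch below writes raw frame bytes so that it stays self-contained; a real setup would more likely let an encoder do the splitting (ffmpeg, already in the TOC’s software list, has a segment muxer for this). The class name, file naming scheme and ten-minute default are hypothetical.

```python
from pathlib import Path


class SegmentedRecorder:
    """Write a long recording as a series of bounded files.

    A new file is started every max_segment_s seconds, so a crash can only
    affect the segment being written; earlier segments are already closed.
    """

    def __init__(self, directory, max_segment_s=600.0, prefix="toc"):
        self.directory = Path(directory)
        self.directory.mkdir(parents=True, exist_ok=True)
        self.max_segment_s = max_segment_s
        self.prefix = prefix
        self.index = -1
        self.segment_start = None
        self.file = None
        self.paths = []

    def _open_next(self, t):
        if self.file is not None:
            self.file.close()
        self.index += 1
        path = self.directory / f"{self.prefix}_{self.index:04d}.raw"
        self.file = open(path, "wb")
        self.paths.append(path)
        self.segment_start = t

    def write(self, t, frame: bytes):
        """Append one frame captured at time t (seconds)."""
        if self.file is None or t - self.segment_start >= self.max_segment_s:
            self._open_next(t)
        self.file.write(frame)
        self.file.flush()  # hand buffered data to the operating system promptly

    def close(self):
        if self.file is not None:
            self.file.close()
            self.file = None
```

The list of closed segment paths is also a natural unit for the proposed management console: each segment can be made available, withheld or moved to another storage unit on its own.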

We suggest another poetic way for users to interact with the table’s video feed over time: objects left on the table for a certain period could “burn in” to the video feed, their images persisting for a duration that grows exponentially with the duration of their physical presence on the table, with the option to dismiss the burn effect by a gesture or a timed expiry. This effect could run in parallel with the recording format described above, the former being suited to giving a sense of temporally distorted presence and the latter to representational purposes (e.g. the archiving of seminars).
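One possible reading of “persisting for a duration that corresponds exponentially” to an object’s time on the table is sketched below in Python. The exponential form, the constants, the cap and the linear fade are all assumptions chosen for illustration.

```python
import math


def burn_duration(presence_s, scale_s=1.0, rate_per_min=0.5, max_s=3600.0):
    """Seconds an object's image lingers after the object is removed.

    Grows exponentially with how long the object sat on the table:
    scale_s * (exp(rate_per_min * minutes) - 1), capped at max_s.
    """
    minutes = presence_s / 60.0
    return min(scale_s * (math.exp(rate_per_min * minutes) - 1.0), max_s)


def burn_opacity(since_removed_s, duration_s, dismissed=False):
    """Opacity (0-1) of the burned-in image: linear fade, or gone if dismissed."""
    if dismissed or duration_s <= 0 or since_removed_s >= duration_s:
        return 0.0
    return 1.0 - since_removed_s / duration_s
```

With these constants, a cup left for a minute fades within a second, while a notebook left for ten minutes lingers for roughly two and a half minutes; the cap keeps long-forgotten objects from haunting the table indefinitely.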

4.4 Time Differences

The time difference between Phoenix and Montreal has so far not been of major consequence, as it is easy enough to follow standard protocol for planning geographically remote meetings and make the time difference explicit in scheduling. Larger time differences move work days further out of sync, which presents a design challenge: developing combined live and recorded effects that most effectively suture these spaces. We are interested in further investigating the social protocols that emerge from this configuration. These include scenarios where one party leads the other in time (e.g. Athens records events that Phoenix later views), and cases where the two parties treat the system as a way to leave audiovisual messages for each other. These circumstances belong to the genre of intentional, planned activity for which the table has mostly been used to date; how time differences might affect the perception of occasional or casual activity around the perpetually running table is another question we are interested in pursuing.
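The Phoenix to Montreal gap is easy to get wrong because Phoenix (Arizona) does not observe daylight saving time while Montreal does, so the gap is two hours in winter and three in summer. The following standard-library Python sketch, our own illustration rather than part of the TOC software, computes it for a given date. It hard-codes the North American daylight-saving rule in force since 2007 instead of consulting a time zone database, so it would need updating if that rule changed.

```python
from datetime import date, timedelta

PHOENIX_UTC_OFFSET = -7  # Arizona stays on MST year-round (no daylight saving time)


def nth_weekday(year, month, weekday, n):
    """Date of the n-th given weekday (Monday=0 ... Sunday=6) of a month."""
    first = date(year, month, 1)
    return first + timedelta(days=(weekday - first.weekday()) % 7 + 7 * (n - 1))


def montreal_utc_offset(day):
    """UTC offset in hours for Montreal on a given date.

    Daylight time runs from the second Sunday in March to the first Sunday in
    November. Simplification: whole days only, ignoring the 2:00 a.m. changeover.
    """
    start = nth_weekday(day.year, 3, 6, 2)
    end = nth_weekday(day.year, 11, 6, 1)
    return -4 if start <= day < end else -5


def montreal_minus_phoenix(day):
    """How many hours Montreal's clocks are ahead of Phoenix's on that date."""
    return montreal_utc_offset(day) - PHOENIX_UTC_OFFSET
```

For production use, Python’s `zoneinfo` module with the `America/Phoenix` and `America/Toronto` zones (Montreal follows the latter’s rules) would be the more robust choice, and it extends directly to London or Athens.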

5 Conclusion

In this paper, we presented the results of experiments with a table-based video conferencing system. We began by outlining the technical specifications of this system. Our focus was to share our observations of the emergent social protocols of this system, such as turn-taking, interleaving recorded and live video materials, interactions in physical space, etiquette, and multiple simultaneous streams of communication. Finally, we proposed future areas of development for this project, including changes to interfacing, considerations of privacy, and a more organic integration of playback materials.

Notes

1. Currently a Point Grey Research Flea2 1394 camera; http://www.ptgrey.com/flea2-ieee-1394b-firewire-cameras.

2. E.g. Enttec ODE: http://www.enttec.com/index.php?main_menu=Products&pn=70305.

3. E.g. the Chauvet DMX-4: http://www.chauvetlighting.com/dmx-4.html.

4. E.g. the Raytec Vario-8 LED IR emitter: http://www.rayteccctv.com/products/view/vario-low-voltage/vario-i8.

References

[1] Montpellier, E.: The Table of Content (2014). https://vimeo.com/105478904. Accessed 10 Jan 2015

[2] Goodman, G., Abel, M.: Communication and collaboration: Facilitating cooperative work through communication. Off. Technol. People 3(2), 129–146 (1987)

[3] Sirkin, D., Venolia, G., Tang, J.C., Robertson, G.G., Kim, T., Inkpen, K., Sedlins, M., Lee, B., Sinclair, M.: Motion and attention in a kinetic videoconferencing proxy. In: Proceedings of the 13th IFIP TC 13 International Conference (2011)

[4] Fish, R.S., Kraut, R.E., Chalfonte, B.L.: The videowindow system in informal communication. In: Proceedings of ACM Conference on Computer Supported Collaborative Work (1990)

[5] Johanson, B., Fox, A., Winograd, T.: The Stanford interactive workspaces project. VLSI J. 1–30 (2004)

[6] Costanza, E., Kunz, A., Fjeld, M.: Mixed reality: a survey. In: Lalanne, D., Kohlas, J. (eds.) Human Machine Interaction. LNCS, vol. 5440, pp. 47–68. Springer, Heidelberg (2009)

[7] Bekins, D., Yost, S., Garrett, M., Deutsch, J., Htay, W.M., Xu, D., Aliaga, D.: Mixed reality tabletop (MRT): a low-cost teleconferencing framework for mixed-reality applications. In: VR 2006 Proceedings of the IEEE Conference on Virtual Reality (2006)

[8] Ehnes, J.: An automated meeting assistant: a tangible mixed reality interface for the AMIDA automatic content linking device. In: Filipe, J., Cordeiro, J. (eds.) Enterprise Information Systems. LNBIP, vol. 24, pp. 952–962. Springer, Heidelberg (2009)

[9] Harrison, S., Dourish, P.: Re-placeing space: the roles of place and space in collaborative systems. In: Proceedings of ACM Conference on Computer Supported Collaborative Work (1996)

[10] Sermon, P.: Telematic dreaming. http://www.medienkunstnetz.de/works/telematic-dreaming/. Accessed 21 Dec 2014

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Montpellier, E. et al. (2015). Suturing Space: Tabletop Portals for Collaboration. In: Kurosu, M. (eds) Human-Computer Interaction: Interaction Technologies. HCI 2015. Lecture Notes in Computer Science, vol 9170. Springer, Cham. https://doi.org/10.1007/978-3-319-20916-6_44

DOI: https://doi.org/10.1007/978-3-319-20916-6_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20915-9

Online ISBN: 978-3-319-20916-6

eBook Packages: Computer Science, Computer Science (R0)