Abstract

After 20 years as an environmental economist at the University of California at Davis and the University of Michigan, I joined the US Environmental Protection Agency (EPA), where my work centered on regulatory analysis. This article draws from my experiences of using academic research in a policy context. It does not necessarily reflect the views of EPA.

Peter Berck and Gloria Helfand in Gothenburg, Sweden, July 2008, attending the European Association of Environmental and Resource Economists conference.

Of course (many of) you in academia want your work to be relevant, to serve a purpose beyond its immediate role in academic promotion and prestige. Research can get public attention, when it feeds into a current public debate, and can influence policy decisions and potentially shape the future. Funding sources often request information on the policy implications of proposed research. Contributing to public policy can be personally satisfying, career-enhancing, and maybe even welfare-improving.

Complementarily, those involved in public policy want policy-relevant academic research. They may be legally bound to justify their actions, such as choosing the level of a standard based on the best available scientific information. Using peer-reviewed academic research in those actions increases the credibility of the assessments.

With incentives for researchers to supply policy-relevant information, and for public agencies to use such information, a happy market should exist for policy-relevant research. Yet, not all research that positions itself as relevant to public policy is actually as useful in a policy setting as it might initially seem. The question being asked in the research may not reflect the current policy debate. Data may be old, or modeling may omit nuances of the policy being studied. These research traits may not diminish the publishability of the work, but they may reduce the role that the research will play in public policy. These problems suggest a potential market failure in the provision of policy-relevant research, where the incentives for relevant policy research may not align with incentives for academic advancement.

This chapter suggests ways for academics to reduce this divergence. This is not a plea to change academic research; rather, my goal is to assist those who specifically want to have greater influence on public policy. The following five principles for increasing relevance come from my personal reflections, as a former academic doing policy analysis, on the relationship between academic research and policy analysis. Although these principles may be difficult to achieve, they may increase the policy value of your work.

- Know your audience.
- What would other disciplines say?
- Magnitudes matter.
- Keep it simple, but not too simple.
- Humility.

The remainder of this article explains each of these topics, with examples provided from my work on the economic analysis involved in vehicle emissions standards regulation.

1 Know Your Audience

For whom is the research or policy recommendation intended? “Policy-makers” encompass a wide range of actors, including legislators, regulators, and external stakeholders. In the policy world, each of these groups has different roles. Understanding where a piece of research fits into the policy process can enhance its relevance.

Legislators, at national and subnational levels, can enact laws. They have tremendous discretion not only in what policies to enact, but also in the degree of specificity in the laws; they may want their legislation to be highly prescriptive, or they may write laws open to interpretation. Prescriptive legislation is more likely to be enforced as written. If, on the other hand, legislators do not have technical expertise in an issue, if they want to allow for changing circumstances without having to enact new legislation, if political compromises reduce specificity, or if they prefer to let an agency take responsibility for the impacts of an action, they may choose to leave the agencies with discretion in implementation.

Regulators then have the task of implementing and enforcing the laws. Depending on how prescriptive the laws are, regulators may also have the authority, or the responsibility, to interpret the laws through regulations. Regulators’ authority only goes as far as legislation and executive branch management allow.

Stakeholders seek to influence both legislation and regulatory actions, and they live with the consequences of the actions taken by these groups.

Each of these groups is interested in research, but the types of research of interest differ among them. Consider, for instance, papers on the relative performance of standards and price-based incentives for new vehicle emissions controls. The US Environmental Protection Agency (EPA) is unlikely to find those papers relevant, because the Clean Air Act (Note 1) requires the use of standards to limit pollutants from new motor vehicles and does not authorize taxes on fuels or vehicle emissions. Legislators, on the other hand, have the ability to implement price-based policies. They might be interested not only in the efficiency impacts of the different policies but also in their distributional effects; their positions may be affected by the impacts of the policies on key constituents.

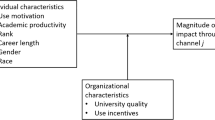

In addition, which results are presented and how they are presented are likely to affect how the information is used. Results may be taken out of context, misinterpreted, or misread. Tables and figures tend to attract more notice than text; even if the text contains significant caveats about the results, readers may focus on the numbers and not notice the limitations associated with the numbers. A researcher should consider carefully how to present and describe results, to reduce these potential misuses (Fig. 1).

Example: Presentation

In sum, the utility of research for the policy process depends on getting a relevant analysis to the right group in a way that will allow that group to understand what the researcher wants it to understand about the results.

2 What Would Other Disciplines Say?

As Irwin et al. (2018) point out, many pressing policy problems inherently bridge multiple disciplines. This is especially true in environmental economics, with its emphasis on the connection between human well-being and the natural world. The researchers who tackle these problems, on the other hand, do not always cross those bridges; Irwin et al. note academic obstacles to their doing so, including “the prevalence of the individual, disciplinary-based reward systems” (p. 324). Researchers who stay within their disciplines may miss potentially important interconnections.

Fourcade et al. (2015) noted that economists have “imperialist” tendencies: when they work on topics that have been studied by other disciplines, they are relatively unlikely to recognize the contributions of those other disciplines, citing them less often than other disciplines cite economic research. As economists have taken their statistical skills into other disciplines – not only other social sciences but also natural/physical sciences, such as biology, public health, and engineering (Note 2) – they vary in how much understanding they display of other disciplines’ literatures.

Other disciplines are likely to have a rich history in mechanisms as well as the statistical associations that economists typically pursue. Economic research that primarily cites other economic research, rather than drawing from the research in other disciplines, may contribute less to a policy debate than if the authors understood the fuller intellectual context of the problem. On the one hand, the research may reconfirm findings that already exist; reconfirmation is very valuable for the policy process (see below) but is less of a novel contribution. On the other hand, it may contradict others’ findings. In that case, a policy analyst needs to understand what leads to the different results and which research is more relevant for the particular problem being faced.

Example: Engineering Fuel Economy

Standard economic principles suggest that, if a technology will save more in fuel costs than in up-front costs, automakers should provide, and consumers should seek, vehicles with that technology. A 2010 prospective engineering analysis nevertheless identified a number of existing technologies that would reduce fuel consumption in light-duty vehicles, with payback periods as low as 1–3 years, that were not in widespread use (U.S. Environmental Protection Agency, 2010a, Chapter 3; U.S. Environmental Protection Agency, 2010b, Chapters 3, 6, 8.1.3) (Note 3). A 2016 retrospective analysis essentially confirmed those findings (U.S. Environmental Protection Agency et al., 2016). It observed significant adoption of many of those technologies once the standards were enacted, with costs and fuel savings generally similar to those previously estimated, without apparent adverse effects on other vehicle attributes. From an engineering perspective, then, the basic economic principles were not supported: those technologies, though they would save people money, were not widely implemented through free-market principles alone.

Some economists have questioned these findings on the basis that the regulatory agencies had not demonstrated evidence of the causes of limited adoption. For instance, Gayer and Viscusi (2013) argue that “the behavioral justifications offered by NHTSA and EPA [such as consumer misperception of the value of fuel savings] offer very little evidence that consumers are causing themselves harm in their vehicle-purchasing decisions and would thus accrue private benefits by having their options restricted” (p. 255). Nevertheless, it is possible – indeed, it appears to be true – both that the engineering analysis is correct and that economists have not yet explained that finding. For instance, economists may not yet have tested the right explanatory theory, or perhaps the result is due to a curious interaction of effects. Basing arguments on economic principles without addressing the engineering findings, though, does not address the fundamental paradox. Economists and engineers might mutually advance our understanding of this market by trying to solve this puzzle together.

In sum, an academic finding that does not fully address the cross-disciplinary breadth of academic literature on a topic may leave a policy analyst scrambling to understand the range of findings. Putting any one set of results into the context of the overall literature will aid in policy relevance.

3 Magnitudes Matter

Policy analysts often need to estimate the magnitude of an effect. Are changes in emissions large? How will employment, revenues, or sales be affected? How much are people willing to pay to reduce risk? These estimates are easier to make when there is some agreement about the relevant elasticities or other measures of impact. Policy analysts thus search the literature for the range of values, in the hope of finding that agreement. Are results consistently statistically significant and of the same sign? Such findings are a start, but not the end. Is the result similar in magnitude to findings in other studies? If so, the finding is robust, and a policy analyst is set to do the estimation.

If, on the other hand, results are not consistent, the policy analyst is stuck with the task of assessing those results. Is an average of disparate results an acceptable value to use? Are different studies measuring the same phenomenon? Meta-analysis can sometimes provide insight into sources of variation; at the least, critical reading of the literature is needed to determine if some estimates are better, or more applicable in specific circumstances, than others.
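One common way to check whether studies agree is to pool them and ask how much of their spread exceeds what sampling error alone would produce. The following is a minimal sketch, assuming a simple fixed-effect (inverse-variance) pooling with Cochran's Q and the I-squared statistic. The function name `pool_estimates` and all elasticities and standard errors are hypothetical, chosen only for illustration; they do not come from any study cited in this chapter.

```python
import math

def pool_estimates(estimates, std_errors):
    """Inverse-variance (fixed-effect) pooling with Cochran's Q and I-squared."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * b for w, b in zip(weights, estimates)) / total_weight
    # Cochran's Q: weighted squared deviations from the pooled estimate.
    q = sum(w * (b - pooled) ** 2 for w, b in zip(weights, estimates))
    df = len(estimates) - 1
    # I-squared: share of total variation attributed to between-study differences.
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, math.sqrt(1.0 / total_weight), q, i_squared

# Hypothetical elasticities and standard errors, for illustration only.
consistent = pool_estimates([-0.30, -0.28, -0.33], [0.05, 0.04, 0.06])
disparate = pool_estimates([-0.30, 0.10, -0.90], [0.05, 0.04, 0.06])

for label, (pooled, se, q, i2) in [("consistent", consistent), ("disparate", disparate)]:
    print(f"{label}: pooled={pooled:.3f}, se={se:.3f}, Q={q:.1f}, I^2={i2:.0%}")
```

In the consistent case, the pooled value is a defensible summary. In the disparate case, the high I-squared signals that an average hides real disagreement, so the analyst still has to ask whether the studies measure the same phenomenon.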

Concerns have been raised that academic research may face its own biases. Acceptance in journals tends to come more easily with statistically significant findings (Dwan et al., 2013), a phenomenon known as publication bias. Citations, often a measure of academic impact, may come more easily to studies with significant findings (de Vries et al., 2018). Emphasizing statistical significance of results in academic work is a rational, even if questionable, response to incentives but may bias results. An insignificant finding may be meaningful in and of itself.

In addition, sometimes lost in the concern for significance is the magnitude: even if significant, does a treatment matter (Bellemare, 2016)? Even a consensus on the order of magnitude of a result may be useful. If research shows that a result is “small,” then it will not have a strong impact; “large” results, on the other hand, deserve greater attention.
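The gap between statistical significance and magnitude can be shown with a small calculation. This is a minimal sketch, assuming a two-sided z-test on a mean effect with a known standard deviation. The function `two_sided_p_value` and every number below are hypothetical and serve only to illustrate the point.

```python
import math

def two_sided_p_value(effect, std_dev, n):
    """Two-sided p-value for a mean effect under a normal approximation."""
    z = effect / (std_dev / math.sqrt(n))
    # P(|Z| > |z|) for a standard normal variable.
    return math.erfc(abs(z) / math.sqrt(2.0))

# Hypothetical case 1: a tiny effect measured in a very large sample.
p_tiny_effect = two_sided_p_value(effect=0.05, std_dev=10.0, n=1_000_000)

# Hypothetical case 2: an effect 100 times larger measured in a very small sample.
p_large_effect = two_sided_p_value(effect=5.0, std_dev=10.0, n=9)

print(f"tiny effect, large sample:  p = {p_tiny_effect:.2g}")
print(f"large effect, small sample: p = {p_large_effect:.2g}")
```

Under these assumptions, the tiny effect passes conventional significance thresholds while the large one does not, although the large one is the result a policy analyst would most want to understand better.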

Example: Willingness to Pay for Reduced Fuel Consumption

What is the role of fuel economy in consumers’ vehicle purchase decisions? This parameter becomes important for understanding how policies that improve fuel economy might affect vehicle sales. If people are willing to buy at least as much fuel-saving technology as policy leads automakers to install, then vehicle sales might increase as a result of policy. On the other hand, of course, if people are not willing to accept increased vehicle prices in exchange for reduced fuel costs, then sales will decrease. A good estimate of the willingness to pay (WTP) for fuel savings, then, is necessary to understand impacts of standards on the auto market.

A rational, calculating vehicle buyer should be willing to pay for additional fuel-saving technologies up to the present value of the resulting fuel savings over a vehicle’s lifetime. Such a calculation requires a number of assumptions, including the expected miles of travel, fuel costs, discount rates, and technology costs; it may not be a surprise if consumers err in this calculation (Turrentine & Kurani, 2007), but it is not obvious whether errors would lead them to overestimate or underestimate it. Behavioral factors, such as myopia, risk aversion, or loss aversion, on the other hand, might lead to systematic biases. In other words, this is an empirical question.
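The present-value calculation described above can be sketched as follows. This is an illustration under simplifying assumptions not taken from the chapter: constant annual mileage, a constant per-mile fuel saving, and end-of-year discounting. The function name and all parameter values are hypothetical.

```python
def present_value_fuel_savings(annual_miles, savings_per_mile, discount_rate, years):
    """Discounted sum of yearly fuel savings, received at the end of each year."""
    yearly_saving = annual_miles * savings_per_mile
    return sum(yearly_saving / (1.0 + discount_rate) ** t for t in range(1, years + 1))

# Hypothetical buyer: 12,000 miles per year, technology saves $0.01 per mile.
pv_undiscounted = present_value_fuel_savings(12_000, 0.01, 0.00, 15)
pv_full_lifetime = present_value_fuel_savings(12_000, 0.01, 0.07, 15)
# A "myopic" buyer who only counts the first three years of savings.
pv_myopic = present_value_fuel_savings(12_000, 0.01, 0.07, 3)

print(f"undiscounted, 15 years: ${pv_undiscounted:,.0f}")
print(f"7% discount, 15 years:  ${pv_full_lifetime:,.0f}")
print(f"7% discount, 3 years:   ${pv_myopic:,.0f}")
```

Even in this stylized version, the implied willingness to pay changes several-fold with the discount rate and the time horizon, which is one reason that both random errors and systematic behavioral biases are plausible.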

Greene et al. (2018) conducted a meta-analysis of the results from 52 papers which considered the role of fuel economy in consumer vehicle purchases. In most cases, Greene et al. had to convert results from the papers into a common metric, the WTP for a one-cent reduction in fuel costs per mile, because the papers’ authors did not use common metrics. They found extremely high variation: before removing outliers, the mean WTP was -$8331, with a standard deviation of $97,820; after removing outliers, the mean WTP was $1880, with a standard deviation of $6875 (Note 4). The meta-analysis found that results differed depending on whether the papers were stated preference, revealed preference, or market studies, as well as whether they used fixed- or random-coefficient discrete choice models and whether they accounted for endogeneity. Such a lack of consensus about the role of fuel savings in consumers’ vehicle purchase decisions raises questions about the robustness of the methods used to estimate this value.
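A few extreme estimates can dominate both the mean and the standard deviation of a set of results, as the following sketch suggests. The WTP values are invented for illustration and are not data from Greene et al. (2018). The trimming rule, dropping values more than three median absolute deviations from the median, is an assumption and not necessarily the outlier procedure that study used. The only figure taken from the chapter is the reference calculation in Note 4: 115,000 discounted lifetime miles times $0.01 per mile.

```python
import statistics

def drop_outliers(values, k=3.0):
    """Keep values within k median absolute deviations of the median (assumed rule)."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    return [v for v in values if abs(v - med) <= k * mad]

# Hypothetical WTP estimates (dollars per one-cent-per-mile reduction in fuel cost).
wtp_estimates = [900, 1200, 1500, 2000, 800, 1100, -150_000, 40_000]
trimmed = drop_outliers(wtp_estimates)

raw_mean, raw_sd = statistics.mean(wtp_estimates), statistics.stdev(wtp_estimates)
trimmed_mean, trimmed_sd = statistics.mean(trimmed), statistics.stdev(trimmed)

# Reference value from Note 4: discounted lifetime miles times the per-mile saving.
benchmark = 115_000 * 0.01

print(f"all estimates:     mean={raw_mean:,.0f}, sd={raw_sd:,.0f}")
print(f"outliers removed:  mean={trimmed_mean:,.0f}, sd={trimmed_sd:,.0f}")
print(f"Note 4 benchmark:  {benchmark:,.0f}")
```

In this invented sample, two extreme values are enough to flip the sign of the mean, which mirrors the qualitative pattern in the meta-analysis and shows why the choice of outlier rule itself deserves scrutiny.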

In sum, as much as statistical significance can matter, policy analysts seek well-supported magnitudes for their estimates. Statistical significance may be necessary, but it is not sufficient for robust regulatory analyses, which are more reliable when results are robust across studies.

4 Keep It Simple, But Not Too Simple

Of course, the results of an analysis depend on the underlying assumptions and the data used. For relevance, an analysis needs to match as closely as possible the reality of the policy world, which means that the assumptions and data should align as closely as possible to that reality. Closeness is not always achievable, though. Sometimes, for analytical convenience, an assumption is made that does not match the actual policy scenario (Cherrier, 2018). Data may be old or from a specific socioeconomic setting or may be missing some key variables. Perhaps the analysis is exploratory – e.g., if the world works in the following way, then the following results will occur – without much effort going into whether the world works in that way. These adjustments may make the difference between being able to produce a publication and failing.

On the other hand, from the policy world’s perspective, getting the policy scenario wrong or using old or misaligned data puts significant question marks around the relevance of a paper. Many regulatory standards, for instance, have cost-reducing flexibilities associated with them, such as using rate-based standards or allowing trading among facilities; omitting these flexibilities will overstate program costs. Analyses done using data for one state may not be generalizable to other states without careful consideration of the representativeness of the place studied. Technologies and conditions change over time; data from 20 years ago may be available and suitable for analysis, but they may not produce estimates appropriate for the current issue. Relevance requires careful consideration of the context of the research.

Example: Pre-buy of Heavy-Duty Vehicles

Regulation of new vehicles, by increasing costs, may not only decrease sales but also lead to increased sales of vehicles before the regulations are effective – a phenomenon known as pre-buy. Estimating the effectiveness of a new regulation would benefit from understanding how people might seek to avoid its costs.

Several papers have examined pre-buy for vehicles, but it may not be possible to apply their findings prospectively. Hausman (2016), for instance, looked at pre-buy in the Great Depression; it might be difficult to rely on results from the 1930s for current policy. Lam and Bausell (2007) and Rittenhouse and Zaragoza-Watkins (2018) examine the existence and magnitude of pre-buy for heavy-duty vehicles in the 2000s, a more relevant policy setting for current regulatory analysis. For valid methodological reasons, however, they do not relate regulatory costs to sales impacts; as a result, it is unclear whether those papers can be used to predict the magnitude of pre-buy for future heavy-duty vehicle standards.

In sum, research is more likely to be relevant when it reflects the current key conditions of the policy scenarios. Each step away from those conditions reduces the ability of research to reflect current policy reality.

5 Humility

Science is a process, an accumulation of findings. Any one research effort is a contribution, but it is unusual when a finding is conclusive or ends a line of inquiry. Policy analysts frequently need a critical synthesis of the findings, in the hope of identifying an agreement. Policy analysis based on a body of robust science will produce more reliable results than analysis based on one study that, as high-quality as it may be, is only one piece of evidence. Put another way (Campbell, 2018), “Most Published Research Is Probably Wrong!”

Campbell argues that academics have low incentives to critique others’ work; the critiques may annoy the authors of the studies, who may be asked to serve as referees for the critical paper when it is submitted to a journal or to write letters of recommendation as experts in the field. Even if researchers behave more honorably than Campbell fears, academic incentives may still steer researchers away from critical syntheses of a body of research. It is likely that original research with novel findings is considered more prestigious in an academic career than literature reviews or replication studies. Policy analysts then face the task of reconciling potentially divergent results without input from the academic community on why results differ. That synthesis effort would benefit from authors’ insights on sources of variation, as well as advantages or limitations associated with using results from each study. A critical consideration of a set of research findings in the broader context of the literature should not just be an opportunity to extol the merits of one’s own work but also to show how results fit together and how knowledge is accumulating.

Example: Environmental Impacts of Electric Vehicles

Electric vehicles (EVs) produce no tailpipe emissions; compared to gasoline or diesel vehicles, they reduce air pollution in the immediate area where they are driven. On the other hand, electric vehicles increase emissions from electric power plants. Holland et al. (2016) looked at the relative effects of these emissions on air quality and human health, based on, among other assumptions, power plant emissions rates from 2010 to 2012, and found that, averaged across the USA, EVs caused more damage than gasoline vehicles. Of their various sensitivity analyses, the only one that changed this result was assuming cleaner electricity production. On that basis, they concluded that, on average in the USA, EVs were more environmentally harmful than gasoline vehicles, though with considerable geographic variation due largely to the pollution intensity of electricity production in an area.

The 2010s were a time of great changes in electricity generation, as natural gas and renewable energy sources dropped in price. Holland et al. (2020), to their great credit, recognized this change and revisited their analysis. In a mere 5–7 years, they found damages from electricity generation had decreased so much that EVs were now generally environmentally preferable, though results still varied geographically. Nevertheless, even these results may be outdated in a few years. Acknowledging that results depend on assumptions, and assumptions may need revision, may seem straightforward and appropriate; revisiting an already published study when those conditions change, though, is not common.

In sum, policy benefits from greater attention to synthesis and critical assessment of results in addition to individual findings. Each individual finding contributes to that synthesis.

6 Relevance Is Possible

As may be obvious from these principles, it may be hard to conduct academically rigorous, policy-oriented research. Finding unaddressed research questions and novel datasets is difficult enough; matching those to current public policy is an even greater challenge. Nevertheless, policy analysts find much that is useful in academic research. Theory can help policy by providing frameworks for analysis and helping explain market structures in regulated industries. Empirical evidence, as suggested by some of the examples, sometimes proves more difficult to match to public policy; nevertheless, a collection of studies that point toward a common finding, even if no individual study is an exact match to policy, may provide robust support for estimates.

For instance, a small literature examines the effects of environmental regulation on employment. EPA has used the theoretical framework from Berman and Bui (2001) and a similar one from Morgenstern et al. (2002) to explain that regulation a priori has ambiguous effects on employment; while higher costs reduce demand for a final good, and thus employment from production, part of those higher costs is additional labor required to comply with the regulation. In addition, the various empirical studies generally show small net effects in the regulated industry (Ferris et al., 2014), and more aggregate net employment impacts depend heavily on overall macroeconomic conditions (Belova et al., 2015). These findings help to develop an overall picture of the “jobs and environment” debate.

7 Conclusion

Publishing research relevant to public policy may be harder than it seems. It takes time and outreach beyond academic journals and conferences to learn the institutional context of a policy debate and to identify key questions to be analyzed. Suitable data may not exist, or it may not be possible to run an appropriate experiment. Modeling by its nature involves simplifications and assumptions, which may or may not affect the model’s ability to estimate reality. Any of these obstacles can contribute to the wedge between academic work and policy relevance.

In addition, policy analysis typically seeks a scientific consensus based on a synthesis of research, while academic researchers may benefit more from producing unique experiments and results. Any one academic paper will be one additional piece of evidence. A set composed of unique results may produce a scientific consensus, but may also produce confusing and apparently contradictory findings. Without sufficient academic reward for critical literature reviews and replication studies, policy analysts rather than academics will have the lead in figuring out what is known about various policy questions.

In sum, it is hard to do policy-relevant research, in or outside academia. To make academic findings more useful to policy, researchers should place their results in the broader context of the literature and recognize the limitations of their studies. Better understanding of how research can contribute to public policy may not only improve its relevance but also feed back to improve the quality of scientific research itself.

Notes

1. Clean Air Act Section 202(a)(1), https://www.law.cornell.edu/uscode/text/42/7521. Accessed December 10, 2019.

2. I do not wish to pick on individual researchers or research papers; thus, I present topics rather than specific citations. For biology, see, e.g., fisheries or forestry research; for public health, see, e.g., the effects of air pollution on human health; and for engineering, see, e.g., modeling of pollution flows from sources to receptors.

3. Examples include 6-speed automatic transmissions, use of downsized-turbocharged engines, and gasoline direct injection. Many of these technologies had been in limited use, commonly in high-end vehicles, for as long as decades without diffusion into more widely purchased vehicles (U.S. Environmental Protection Agency, 2019, Chapter 4).

4. For reference, Greene et al. (2018) estimate that a vehicle with 115,000 discounted lifetime miles would have a marginal willingness to pay of $1150 for a $0.01/mile reduction in fuel cost. It is provided here only to suggest an order of magnitude of the expected value.

References

Bellemare, M. F. (2016). Metrics Monday: Statistical vs. economic significance. http://marcfbellemare.com/wordpress/11680. Accessed December 30, 2019.

Belova, A., Gray, W., Linn, J., Morgenstern, R., & Pizer, W. (2015). Estimating the job impacts of environmental regulation. Journal of Benefit-Cost Analysis, 6, 325–340.

Berman, E., & Bui, L. (2001). Environmental regulation and labor demand: Evidence from the South Coast Air Basin. Journal of Public Economics, 79, 265–295.

Campbell, D. L. (2018). Reminder: Most published research is probably wrong! http://douglaslcampbell.blogspot.com/2018/01/reminder-most-published-research-is.html

Cherrier, B. (2018). How tractability has shaped economic knowledge: A few conjectures. https://beatricecherrier.wordpress.com/2018/04/20/how-tractability-has-shaped-economic-knowledge-a-follow-up/

de Vries, Y. A., Roest, A. M., de Jonge, P., Cuijpers, P., Munafò, M. R., & Bastiaansen, J. A. (2018). The cumulative effect of reporting and citation biases on the apparent efficacy of treatments: The case of depression. Psychological Medicine, 48, 2453–2455.

Dwan, K., Gamble, C., Williamson, P., & Kirkham, J. for the Reporting Bias Group (2013). Systematic review of the empirical evidence of study publication bias and outcome reporting bias — An updated review. PLoS One, 8, e66844.

Ferris, A., Shadbegian, R. J., & Wolverton, A. (2014). The effect of environmental regulation on power sector employment: Phase I of the title IV SO2 trading program. Journal of the Association of Environmental and Resource Economists, 1(4), 521–553.

Fourcade, M., Ollion, E., & Algan, Y. (2015). The superiority of economists. Journal of Economic Perspectives, 29, 89–114.

Gayer, T., & Viscusi, W. (2013). Overriding consumer preferences with energy regulations. Journal of Regulatory Economics, 43, 248–264.

Greene, D., Hossain, A., Hofmann, J., Helfand, G., & Beach, R. (2018). Consumer willingness to pay for vehicle attributes: What do we know? Transportation Research Part A, 118, 258–279.

Hausman, J. K. (2016). What was bad for General Motors was bad for America: The automobile industry and the 1937/38 recession. Journal of Economic History, 76(2), 427–477.

Holland, S. P., Mansur, E. T., Muller, N. Z., & Yates, A. J. (2016). Are there environmental benefits from driving electric vehicles? The importance of local factors. American Economic Review, 106(12), 3700–3729.

Holland, S. P., Mansur, E. T., Muller, N. Z., & Yates, A. J. (2020). Decompositions and policy consequences of an extraordinary decline in air pollution from electricity generation. American Economic Journal: Economic Policy, 12, 244–274.

Irwin, E., Culligan, P., Fischer-Kowalski, M., Law, K. L., Murtugudde, R., & Pfirman, S. (2018). Bridging barriers to advance global sustainability. Nature Sustainability, 1, 324–326.

Lam, T., & Bausell, C. (2007). Strategic behaviors toward environmental regulation: A case of trucking industry. Contemporary Economic Policy, 25(1), 3–13.

Morgenstern, R., Pizer, W., & Shih, J.-S. (2002). Jobs versus the environment: An industry-level perspective. Journal of Environmental Economics and Management, 43, 412–436.

Rittenhouse, K., & Zaragoza-Watkins, M. (2018). Anticipation and environmental regulation. Journal of Environmental Economics and Management, 89, 255–277.

Turrentine, T. S., & Kurani, K. S. (2007). Car buyers and fuel economy? Energy Policy, 35, 1213–1223.

U.S. Environmental Protection Agency. (2010a). Joint technical support document: Rulemaking to establish light-duty vehicle greenhouse gas emission standards and corporate average fuel economy standards. EPA-420-R-10-901. https://nepis.epa.gov/Exe/ZyPDF.cgi/P1006W9S.PDF?Dockey=P1006W9S.PDF. Accessed December 16, 2019.

U.S. Environmental Protection Agency. (2010b). Final regulatory impact analysis: Rulemaking to establish light-duty vehicle greenhouse gas emission standards and corporate average fuel economy standards. EPA-420-R-10-009. https://nepis.epa.gov/Exe/ZyPDF.cgi/P1006V2V.PDF?Dockey=P1006V2V.PDF. Accessed December 16, 2019.

U.S. Environmental Protection Agency, National Highway Traffic Safety Administration, & California Air Resources Board. (2016). Draft technical assessment report: Midterm evaluation of light-duty vehicle greenhouse gas emission standards and corporate average fuel economy standards for model years 2022–2025. EPA-420-D-16-900. https://nepis.epa.gov/Exe/ZyPDF.cgi/P100OXEO.PDF?Dockey=P100OXEO.PDF. Accessed December 30, 2019.

U.S. Environmental Protection Agency. (2019). The 2018 EPA automotive trends report: Greenhouse gas emissions, fuel economy, and technology since 1975. EPA-420-R-19-002. https://nepis.epa.gov/Exe/ZyPDF.cgi/P100W5C2.PDF?Dockey=P100W5C2.PDF. Accessed December 12, 2019.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Helfand, G. (2023). So You Want To Be Relevant: A Policy Analyst’s Reflections on Academic Literature. In: Zilberman, D., Perloff, J.M., Spindell Berck, C. (eds) Sustainable Resource Development in the 21st Century. Natural Resource Management and Policy, vol 57. Springer, Cham. https://doi.org/10.1007/978-3-031-24823-8_18

DOI: https://doi.org/10.1007/978-3-031-24823-8_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-24822-1

Online ISBN: 978-3-031-24823-8

eBook Packages: Economics and Finance, Economics and Finance (R0)