Abstract

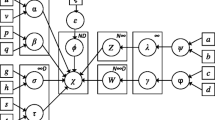

Mixture models are a powerful approach for modeling complex data in an unsupervised manner. In this paper, we introduce a finite generalized inverted Dirichlet mixture model for clustering semi-bounded data, together with a variational entropy-based method that flexibly estimates the model parameters and selects the number of components. Experiments on real-world applications, including breast cancer detection and image categorization, demonstrate the superior performance of the proposed model.
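To make the model in the abstract more concrete, here is a minimal sketch of a generalized inverted Dirichlet (GID) mixture. It assumes the common parameterization in which a positive vector X = (X_1, ..., X_D) is GID-distributed when the variables V_d = X_d / (1 + X_1 + ... + X_{d-1}) are independent beta-prime(α_d, β_d). Under that assumption the density is the product over d of Γ(α_d + β_d) / (Γ(α_d) Γ(β_d)) · X_d^(α_d - 1) / (1 + X_1 + ... + X_d)^(γ_d), with γ_d = α_d + β_d - β_{d+1} and β_{D+1} = 0. The paper's own notation may differ.

The function names (`gid_logpdf`, `gid_sample`, `responsibilities`) are hypothetical. The responsibilities are plug-in, EM-style posteriors. They are not the paper's variational updates or its entropy-based component-splitting criterion.

```python
import numpy as np
from scipy.special import gammaln, logsumexp


def gid_logpdf(X, alpha, beta):
    """Log-density of a generalized inverted Dirichlet (GID) distribution.

    X: (N, D) array of strictly positive vectors.
    alpha, beta: (D,) arrays of strictly positive shape parameters.
    Assumed parameterization: gamma_d = alpha_d + beta_d - beta_{d+1},
    with beta_{D+1} = 0.
    """
    X = np.atleast_2d(np.asarray(X, dtype=float))
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    beta_next = np.append(beta[1:], 0.0)            # beta_{d+1}, padded with 0
    gamma = alpha + beta - beta_next
    log_norm = gammaln(alpha + beta) - gammaln(alpha) - gammaln(beta)
    log_cum = np.log1p(np.cumsum(X, axis=1))        # log(1 + X_1 + ... + X_d)
    return np.sum(log_norm + (alpha - 1.0) * np.log(X) - gamma * log_cum, axis=1)


def gid_sample(alpha, beta, n, rng):
    """Draw n GID vectors from independent beta-prime variables V_d."""
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    D = len(alpha)
    B = rng.beta(alpha, beta, size=(n, D))          # B ~ Beta(alpha_d, beta_d)
    V = B / (1.0 - B)                               # V ~ BetaPrime(alpha_d, beta_d)
    X = np.empty_like(V)
    running_sum = np.zeros(n)
    for d in range(D):
        # Invert V_d = X_d / (1 + X_1 + ... + X_{d-1})
        X[:, d] = V[:, d] * (1.0 + running_sum)
        running_sum += X[:, d]
    return X


def responsibilities(X, weights, alphas, betas):
    """Plug-in posteriors r_nj proportional to pi_j * GID(X_n | alpha_j, beta_j)."""
    log_p = np.column_stack([
        np.log(w) + gid_logpdf(X, a, b)
        for w, a, b in zip(weights, alphas, betas)
    ])
    # Normalize each row in log space for numerical stability
    return np.exp(log_p - logsumexp(log_p, axis=1, keepdims=True))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a1, b1 = np.array([2.0, 3.0]), np.array([4.0, 5.0])
    a2, b2 = np.array([8.0, 1.5]), np.array([3.0, 6.0])
    X = np.vstack([gid_sample(a1, b1, 200, rng), gid_sample(a2, b2, 200, rng)])
    R = responsibilities(X, [0.5, 0.5], [a1, a2], [b1, b2])
    print(R[:3].round(3))
```

The density and the responsibilities are computed in log space, and rows are normalized with `logsumexp`. This avoids underflow when D is large or when a point is far from every component.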



Acknowledgment

The completion of this research was made possible thanks to the Natural Sciences and Engineering Research Council of Canada (NSERC) and the National Natural Science Foundation of China (61876068).


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Ahmadzadeh, M.S., Manouchehri, N., Ennajari, H., Bouguila, N., Fan, W. (2021). Entropy-Based Variational Learning of Finite Generalized Inverted Dirichlet Mixture Model. In: Nguyen, N.T., Chittayasothorn, S., Niyato, D., Trawiński, B. (eds) Intelligent Information and Database Systems. ACIIDS 2021. Lecture Notes in Computer Science, vol. 12672. Springer, Cham. https://doi.org/10.1007/978-3-030-73280-6_11


DOI: https://doi.org/10.1007/978-3-030-73280-6_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-73279-0

Online ISBN: 978-3-030-73280-6

eBook Packages: Computer Science, Computer Science (R0)