Abstract

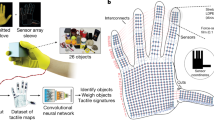

The connection between visual input and tactile sensing is critical for object manipulation tasks such as grasping and pushing. In this work, we introduce the challenging task of estimating a set of tactile physical properties from visual information. We aim to build a model that learns the complex mapping between visual information and tactile physical properties. We construct a first-of-its-kind image-tactile dataset with over 400 multiview image sequences and the corresponding tactile properties. A total of fifteen tactile physical properties, spanning categories that include friction, compliance, adhesion, texture, and thermal conductance, are measured and then estimated by our models. We develop a cross-modal framework composed of an adversarial objective and a novel visuo-tactile joint classification loss. Additionally, we introduce a neural architecture search framework capable of selecting optimal combinations of viewing angles for estimating a given physical property.
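The abstract says a neural architecture search selects the best combination of viewing angles for each property, but it does not say how that search is driven. The sketch below is a swapped-in illustration, not the authors' method. It assumes view selection can be treated as one independent Bernoulli "use this view" decision per angle, trained with the REINFORCE policy gradient and a moving-average baseline. The functions `select_views` and `toy_reward`, the per-view `utility` scores, and `cost_per_view` are all hypothetical. They stand in for the validation performance of a property estimator trained on the chosen views.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def toy_reward(mask, utility, cost_per_view):
    # Hypothetical stand-in for "validation score of an estimator trained on
    # the selected views": summed utility of chosen views minus a per-view cost.
    return sum(u for m, u in zip(mask, utility) if m) - cost_per_view * sum(mask)


def select_views(utility, cost_per_view=0.4, steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    n = len(utility)
    logits = [0.0] * n  # one Bernoulli logit per viewing angle (p = 0.5 at start)
    baseline = 0.0      # moving-average reward baseline to reduce gradient variance
    for _ in range(steps):
        probs = [sigmoid(t) for t in logits]
        # Sample a subset of views: view i is used with probability probs[i].
        mask = [1 if rng.random() < p else 0 for p in probs]
        reward = toy_reward(mask, utility, cost_per_view)
        advantage = reward - baseline
        # REINFORCE: the gradient of log Bernoulli(m_i; sigmoid(logit_i))
        # with respect to logit_i is (m_i - p_i).
        for i in range(n):
            logits[i] += lr * advantage * (mask[i] - probs[i])
        baseline = 0.9 * baseline + 0.1 * reward
    probs = [sigmoid(t) for t in logits]
    # Keep the views the learned policy prefers to include.
    return [i for i, p in enumerate(probs) if p > 0.5], probs


# Usage with eight hypothetical viewing angles.
utility = [0.9, 0.1, 0.8, 0.0, 0.1, 0.8, 0.0, 0.1]
chosen, probs = select_views(utility)
print(chosen)
```

Because this toy reward is additive, the best subset is simply the set of views whose utility exceeds the cost, here indices 0, 2, and 5. In a real search, each reward evaluation would require training and validating an estimator. That makes the signal far noisier and more expensive, which is why a learned baseline or a weight-sharing search is usually needed.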


Notes

- 1.

Tactile measurements were performed by SynTouch Inc. using the BioTac Toccare and were purchased by Rutgers.

- 2.


Acknowledgments

This research was supported by NSF Grant #1715195. We thank Eric Wengrowski, Peri Akiva, and Faith Johnson for their useful suggestions and discussions.



Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Purri, M., Dana, K. (2020). Teaching Cameras to Feel: Estimating Tactile Physical Properties of Surfaces from Images. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science, vol 12372. Springer, Cham. https://doi.org/10.1007/978-3-030-58583-9_1


DOI: https://doi.org/10.1007/978-3-030-58583-9_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58582-2

Online ISBN: 978-3-030-58583-9

eBook Packages: Computer Science, Computer Science (R0)