Abstract

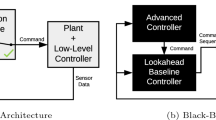

We present the Neural Simplex Architecture (NSA), a new approach to runtime assurance that provides safety guarantees for neural controllers (obtained e.g. using reinforcement learning) of autonomous and other complex systems without unduly sacrificing performance. NSA is inspired by the Simplex control architecture of Sha et al., but with some significant differences. In the traditional approach, the advanced controller (AC) is treated as a black box; when the decision module switches control to the baseline controller (BC), the BC remains in control forever. There is relatively little work on switching control back to the AC, and there are no techniques for correcting the AC’s behavior after it generates a potentially unsafe control input that causes a failover to the BC. Our NSA addresses both of these limitations. NSA not only provides safety assurances in the presence of a possibly unsafe neural controller, but can also improve the safety of such a controller in an online setting via retraining, without overly degrading its performance. To demonstrate NSA’s benefits, we have conducted several significant case studies in the continuous control domain. These include a target-seeking ground rover navigating an obstacle field, and a neural controller for an artificial pancreas system.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

Notes

- 1.

In case of partial observability, the full state can typically be reconstructed from sequences of past states and actions, but this process is error-prone.

- 2.

For nondeterministic (stochastic) systems, a (probabilistic) model checker can be used instead of a simulator, but this approach may be computationally expensive.

- 3.

Although the obstacles are fixed, the NC still generalizes well (but not perfectly) to random obstacle fields not seen during training, as shown in this video https://youtu.be/ICT8D1uniIw.

References

Achiam, J., Held, D., Tamar, A., Abbeel, P.: Constrained policy optimization. In: International Conference on Machine Learning, pp. 22–31 (2017)

Alshiekh, M., Bloem, R., Ehlers, R., Könighofer, B., Niekum, S., Topcu, U.: Safe reinforcement learning via shielding. arXiv preprint arXiv:1708.08611 (2017)

Alshiekh, M., Bloem, R., Ehlers, R., Könighofer, B., Niekum, S., Topcu, U.: Safe reinforcement learning via shielding. In: AAAI (2018). https://www.aaai.org/ocs/index.php/AAAI/AAAI18/paper/view/17211

Berkenkamp, F., Turchetta, M., Schoellig, A., Krause, A.: Safe model-based reinforcement learning with stability guarantees. In: Advances in Neural Information Processing Systems, pp. 908–918 (2017)

Bouton, M., Karlsson, J., Nakhaei, A., Fujimura, K., Kochenderfer, M.J., Tumova, J.: Reinforcement learning with probabilistic guarantees for autonomous driving. CoRR abs/1904.07189 (2019)

Chen, H., Paoletti, N., Smolka, S.A., Lin, S.: Committed moving horizon estimation for meal detection and estimation in type 1 diabetes. In: American Control Conference (ACC 2019), pp. 4765–4772 (2019)

Cheng, R., Orosz, G., Murray, R.M., Burdick, J.W.: End-to-end safe reinforcement learning through barrier functions for safety-critical continuous control tasks. AAAI (2019)

Chow, Y., Nachum, O., Duenez-Guzman, E., Ghavamzadeh, M.: A Lyapunov-based approach to safe reinforcement learning. In: Advances in Neural Information Processing Systems, pp. 8103–8112 (2018)

Dalal, G., Dvijotham, K., Vecerik, M., Hester, T., Paduraru, C., Tassa, Y.: Safe exploration in continuous action spaces. arXiv e-prints (2018)

Desai, A., Ghosh, S., Seshia, S.A., Shankar, N., Tiwari, A.: A runtime assurance framework for programming safe robotics systems. In: IEEE/IFIP International Conference on Dependable Systems and Networks (DSN) (2019)

Duan, Y., Chen, X., Houthooft, R., Schulman, J., Abbeel, P.: Benchmarking deep reinforcement learning for continuous control. In: Proceedings of the 33rd International Conference on Machine Learning ICML 2016, vol. 48, pp. 1329–1338 (2016). http://dl.acm.org/citation.cfm?id=3045390.3045531

Fu, J., Topcu, U.: Probably approximately correct MDP learning and control with temporal logic constraints. In: 2014 Robotics: Science and Systems Conference (2014)

Fulton, N., Platzer, A.: Safe reinforcement learning via formal methods. In: AAAI 2018 (2018)

Fulton, N., Platzer, A.: Verifiably safe off-model reinforcement learning. In: Vojnar, T., Zhang, L. (eds.) TACAS 2019. LNCS, vol. 11427, pp. 413–430. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-17462-0_28

García, J., Fernández, F.: A comprehensive survey on safe reinforcement learning. J. Mach. Learn. Res. 16(1), 1437–1480 (2015). http://dl.acm.org/citation.cfm?id=2789272.2886795

García, J., Fernández, F.: Probabilistic policy reuse for safe reinforcement learning. ACM Trans. Auton. Adapt. Syst. (TAAS) 13(3), 14 (2019)

Hasanbeig, M., Abate, A., Kroening, D.: Logically-correct reinforcement learning. CoRR abs/1801.08099 (2018)

Johnson, T., Bak, S., Caccamo, M., Sha, L.: Real-time reachability for verified Simplex design. ACM Trans. Embed. Comput. Syst. 15(2), 26:1–26:27 (2016). https://doi.org/10.1145/2723871, http://doi.acm.org/10.1145/2723871

Lillicrap, T., et al.: Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971 (2015)

Mason, G., Calinescu, R., Kudenko, D., Banks, A.: Assured reinforcement learning with formally verified abstract policies. In: ICAART, no. 2, pp. 105–117. SciTePress (2017)

Mnih, V., et al.: Asynchronous methods for deep reinforcement learning. In: ICML, pp. 1928–1937 (2016)

Moldovan, T.M., Abbeel, P.: Safe exploration in Markov decision processes. In: ICML. icml.cc/Omnipress (2012)

Ohnishi, M., Wang, L., Notomista, G., Egerstedt, M.: Barrier-certified adaptive reinforcement learning with applications to Brushbot navigation. IEEE Trans. Robot. 1–20 (2019). https://doi.org/10.1109/TRO.2019.2920206

Phan, D., Paoletti, N., Grosu, R., Jansen, N., Smolka, S.A., Stoller, S.D.: Neural simplex architecture. arXiv preprint arXiv:1908.00528 (2019)

Schulman, J., Levine, S., Abbeel, P., Jordan, M., Moritz, P.: Trust region policy optimization. In: ICML, pp. 1889–1897 (2015)

Seto, D., Krogh, B., Sha, L., Chutinan, A.: The Simplex architecture for safe online control system upgrades. In: Proceedings of 1998 American Control Conference, vol. 6, pp. 3504–3508 (1998). https://doi.org/10.1109/ACC.1998.703255

Seto, D., Sha, L., Compton, N.: A case study on analytical analysis of the inverted pendulum real-time control system (1999)

Sha, L.: Using simplicity to control complexity. IEEE Softw. 18(4), 20–28 (2001). https://doi.org/10.1109/MS.2001.936213

Silver, D., Hubert, T., Schrittwieser, J., et al.: Mastering chess and shogi by self-play with a general reinforcement learning algorithm. arXiv preprint arXiv:1712.01815 (2017)

Silver, D., Schrittwieser, J., Simonyan, K., et al.: Mastering the game of Go without human knowledge. Nature 550(7676), 354 (2017)

Simão, T.D., Spaan, M.T.J.: Safe policy improvement with baseline bootstrapping in factored environments. In: AAAI, pp. 4967–4974. AAAI Press (2019)

Sutton, R., Barto, A.: Reinforcement Learning: An Introduction. MIT Press, Cambridge (1998)

Tessler, C., Mankowitz, D.J., Mannor, S.: Reward constrained policy optimization. arXiv e-prints (2018)

Vivekanandan, P., Garcia, G., Yun, H., Keshmiri, S.: A Simplex architecture for intelligent and safe unmanned aerial vehicles. In: 2016 IEEE 22nd International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), pp. 69–75 (2016). https://doi.org/10.1109/RTCSA.2016.17

Wang, X., Hovakimyan, N., Sha, L.: L1Simplex: fault-tolerant control of cyber-physical systems. In: 2013 ACM/IEEE International Conference on Cyber-Physical Systems (ICCPS), pp. 41–50 (2013)

Wang, Z., et al.: Sample efficient actor-critic with experience replay. arXiv preprint arXiv:1611.01224 (2016)

Wen, M., Ehlers, R., Topcu, U.: Correct-by-synthesis reinforcement learning with temporal logic constraints. In: IROS, pp. 4983–4990. IEEE Computer Society Press (2015)

Xiang, W., et al.: Verification for machine learning, autonomy, and neural networks survey. arXiv e-prints (2018)

Acknowledgments

We thank the anonymous reviewers for their helpful comments. This material is based upon work supported in part by NSF grants CCF-191822, CPS-1446832, IIS-1447549, CNS-1445770, and CCF-1414078, FWF-NFN RiSE Award, and ONR grant N00014-15-1-2208. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of these organizations.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Phan, D.T., Grosu, R., Jansen, N., Paoletti, N., Smolka, S.A., Stoller, S.D. (2020). Neural Simplex Architecture. In: Lee, R., Jha, S., Mavridou, A., Giannakopoulou, D. (eds) NASA Formal Methods. NFM 2020. Lecture Notes in Computer Science(), vol 12229. Springer, Cham. https://doi.org/10.1007/978-3-030-55754-6_6

Download citation

DOI: https://doi.org/10.1007/978-3-030-55754-6_6

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-55753-9

Online ISBN: 978-3-030-55754-6

eBook Packages: Computer ScienceComputer Science (R0)