Abstract

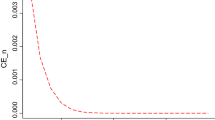

The cumulative entropy is an information measure that serves as an alternative to the differential entropy and is connected with a notion from reliability theory. Indeed, the cumulative entropy of a random lifetime X can be expressed as the expectation of its mean inactivity time evaluated at X. After a brief review of its main properties, we relate the cumulative entropy to the cumulative inaccuracy and provide some inequalities based on suitable stochastic orderings. We also show a characterization property of the dynamic version of the cumulative entropy. Finally, we investigate a stochastic comparison between the empirical cumulative entropy and the empirical cumulative inaccuracy.
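As an illustration of the identity between the cumulative entropy and the expected mean inactivity time, here is a minimal numerical sketch. It assumes the standard definitions CE(X) = -∫_0^∞ F(x) log F(x) dx for a lifetime X with distribution function F, mean inactivity time μ̃(t) = E[t − X | X ≤ t] = (1/F(t)) ∫_0^t F(x) dx, a cumulative inaccuracy of the Kerridge-type form K[F, G] = -∫_0^∞ F(x) log G(x) dx, and the plug-in empirical cumulative entropy obtained by replacing F with the empirical distribution function of a sample. All function names, the truncation point `upper`, and the naive midpoint/trapezoid quadrature are hypothetical illustrative choices, not the authors' procedures.

```python
import math


def xlogx(p):
    """p * log(p) with the usual convention 0 * log(0) = 0."""
    return 0.0 if p <= 0.0 else p * math.log(p)


def midpoint_integral(func, a, b, n=20000):
    """Composite midpoint rule on [a, b]; never evaluates func at the endpoints."""
    h = (b - a) / n
    return h * sum(func(a + (i + 0.5) * h) for i in range(n))


def cumulative_entropy(cdf, upper):
    """CE(X) = -∫_0^upper F(x) log F(x) dx (support truncated at `upper`)."""
    return -midpoint_integral(lambda x: xlogx(cdf(x)), 0.0, upper)


def cumulative_inaccuracy(cdf_f, cdf_g, upper):
    """Assumed form K[F, G] = -∫_0^upper F(x) log G(x) dx; needs G > 0 wherever F > 0."""
    def integrand(x):
        f = cdf_f(x)
        return 0.0 if f <= 0.0 else f * math.log(cdf_g(x))
    return -midpoint_integral(integrand, 0.0, upper)


def expected_mean_inactivity_time(cdf, pdf, upper, n=20000):
    """E[mu~(X)] with mu~(t) = (1/F(t)) ∫_0^t F(x) dx = E[t - X | X <= t]."""
    h = upper / n
    area = 0.0          # running trapezoid estimate of ∫_0^t F(x) dx
    prev_f = cdf(0.0)
    total = 0.0
    for i in range(1, n + 1):
        t = i * h
        f_t = cdf(t)
        area += 0.5 * h * (prev_f + f_t)
        prev_f = f_t
        if f_t > 0.0:
            # right-endpoint rule for the outer integral ∫ mu~(t) f(t) dt
            total += h * pdf(t) * area / f_t
    return total


def empirical_cumulative_entropy(sample):
    """CE(F_n) = -sum_{j=1}^{n-1} (X_(j+1) - X_(j)) (j/n) log(j/n)."""
    xs = sorted(sample)
    n = len(xs)
    return -sum((xs[j] - xs[j - 1]) * xlogx(j / n) for j in range(1, n))


# Example lifetimes: uniform on (0, 1) and unit-rate exponential.
def uniform_cdf(x):
    return min(max(x, 0.0), 1.0)


def uniform_pdf(x):
    return 1.0 if 0.0 <= x <= 1.0 else 0.0


def exp_cdf(x):
    return -math.expm1(-x) if x > 0.0 else 0.0   # 1 - exp(-x), accurate near 0


def exp_pdf(x):
    return math.exp(-x) if x >= 0.0 else 0.0


if __name__ == "__main__":
    print(cumulative_entropy(uniform_cdf, 1.0),
          expected_mean_inactivity_time(uniform_cdf, uniform_pdf, 1.0))
    print(cumulative_entropy(exp_cdf, 50.0),
          expected_mean_inactivity_time(exp_cdf, exp_pdf, 50.0))
```

For the uniform distribution on (0, 1) both printed quantities should be close to 1/4, and for the unit exponential close to π²/6 − 1 ≈ 0.6449. The quadrature is deliberately simple, so agreement is only to a few decimal places. Note also that K[F, F] reduces to CE(X) under the assumed definition of the cumulative inaccuracy.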

Bibliography

Abbasnejad, M.: Some characterization results based on dynamic survival and failure entropies. Communications of the Korean Statistical Society, 18, 1–12 (2011)

Ahmad, I. A. and Kayid, M.: Characterizations of the RHR and MIT orderings and the DRHR and IMIT classes of life distributions. Probability in the Engineering and Informational Sciences, 19, 447–461 (2005)

Ahmad, I. A., Kayid, M. and Pellerey, F.: Further results involving the MIT order and IMIT class. Probability in the Engineering and Informational Sciences, 19, 377–395 (2005)

Asadi, M. and Ebrahimi, N.: Residual entropy and its characterizations in terms of hazard function and mean residual life function. Statistics & Probability Letters, 49, 263–269 (2000)

Asadi, M. and Zohrevand, Y.: On the dynamic cumulative residual entropy. Journal of Statistical Planning and Inference, 137, 1931–1941 (2007)

Block, H. W., Savits, T. H. and Singh, H.: The reversed hazard rate function. Probability in the Engineering and Informational Sciences, 12, 69–90 (1998)

Bowden, R. J.: Information, measure shifts and distribution metrics. Statistics, 46, 249–262 (2010)

Cover, T. M. and Thomas, J. A.: Elements of Information Theory. Wiley, New York (1991)

Di Crescenzo, A.: A probabilistic analogue of the mean value theorem and its applications to reliability theory. Journal of Applied Probability, 36, 706–719 (1999)

Di Crescenzo, A. and Longobardi, M.: Entropy-based measure of uncertainty in past lifetime distributions. Journal of Applied Probability, 39, 434–440 (2002)

Di Crescenzo, A. and Longobardi, M.: On weighted residual and past entropies. Scientiae Mathematicae Japonicae, 64, 255–266 (2006)

Di Crescenzo, A. and Longobardi, M.: On cumulative entropies. Journal of Statistical Planning and Inference, 139, 4072–4087 (2009)

Di Crescenzo, A. and Longobardi, M.: On cumulative entropies and lifetime estimations. Methods and Models in Artificial and Natural Computation, Lecture Notes in Computer Science, Vol. 5601 (Mira J., Ferrandez J. M., Alvarez Sanchez J. R., Paz F., Toledo J. eds.), 132–141. Springer-Verlag, Berlin (2009)

Di Crescenzo, A. and Longobardi, M.: More on cumulative entropy. Cybernetics and Systems 2010, (Trappl R. ed.), 181–186. Austrian Society for Cybernetic Studies, Vienna (2010)

Di Crescenzo, A. and Longobardi, M.: Neuronal data analysis based on the empirical cumulative entropy. Computer Aided Systems Theory, EUROCAST 2011, Part I, Lecture Notes in Computer Science, Vol. 6927 (Moreno-Diaz R., Pichler F., Quesada-Arencibia A. eds.), 72–79. Springer-Verlag, Berlin (2012)

Di Crescenzo, A. and Shaked, M.: Some applications of the Laplace transform ratio order. Arab Journal of Mathematical Sciences, 2, 121–128 (1996)

Ebrahimi, N.: How to measure uncertainty in the residual life time distribution. Sankhyā, Series A, 58, 48–56 (1996)

Ebrahimi, N. and Kirmani, S. N. U. A.: Some results on ordering of survival functions through uncertainty. Statistics & Probability Letters, 29, 167–176 (1996)

Ebrahimi, N. and Pellerey, F.: New partial ordering of survival functions based on the notion of uncertainty. Journal of Applied Probability, 32, 202–211 (1995)

Ebrahimi, N., Soofi, E. S. and Soyer, R.: Information measures in perspective. International Statistical Review, 78, 383–412 (2010)

Jaynes, E. T.: Information theory and statistical mechanics. Statistical Physics, 1962 Brandeis Lectures in Theoretical Physics, Vol. 3 (Ford K. ed.), 181–218. Benjamin, New York (1963)

Kerridge, D. F.: Inaccuracy and Inference. Journal of the Royal Statistical Society, Series B, 23, 184–194 (1961)

Maynar, P. and Trizac, E.: Entropy of continuous mixtures and the measure problem. Physical Review Letters, 106, 160603 [4 pages] (2011)

Misagh, F., Panahi, Y., Yari, G. H. and Shahi, R.: Weighted cumulative entropy and its estimation. IEEE International Conference on Quality and Reliability, 477–480 (2011)

Misra, N., Gupta, N. and Dhariyal, I. D.: Stochastic properties of residual life and inactivity time at a random time. Stochastic Models, 24, 89–102 (2008)

Navarro, J., del Aguila, Y. and Asadi, M.: Some new results on the cumulative residual entropy. Journal of Statistical Planning and Inference, 140, 310–322 (2010)

Navarro, J. and Hernandez, P. J.: Mean residual life functions of finite mixtures, order statistics and coherent systems. Metrika, 67, 277–298 (2008)

Navarro, J. and Shaked, M.: Some properties of the minimum and the maximum of random variables with joint logconcave distributions. Metrika, 71, 313–317 (2010)

Rao, M.: More on a new concept of entropy and information. Journal of Theoretical Probability, 18, 967–981 (2005)

Rao, M., Chen, Y., Vemuri, B. C. and Wang, F.: Cumulative residual entropy: a new measure of information. IEEE Transactions on Information Theory, 50, 1220–1228 (2004)

Schroeder, M. J.: An alternative to entropy in the measurement of information. Entropy, 6, 388–412 (2004)

Shaked, M. and Shanthikumar, J. G.: Stochastic Orders. Springer, New York (2007)

Shannon, C. E.: A mathematical theory of communication. Bell System Technical Journal, 27, 379–423, 623–656 (1948)

Wang, F. and Vemuri, B. C.: Non-rigid multi-modal image registration using cross-cumulative residual entropy. International Journal of Computer Vision, 74, 201–215 (2007)

Wang, F., Vemuri, B. C., Rao, M. and Chen, Y.: Cumulative residual entropy, a new measure of information & its application to image alignment. Proceedings of the Ninth IEEE International Conference on Computer Vision (ICCV’03), 1, 548–553. IEEE Computer Society (2003)

Acknowledgements

This paper is dedicated to Moshe Shaked in admiration of his most profound contributions to stochastic orders. The corresponding author is grateful to Fabio Spizzichino for introducing him to Moshe Shaked in 1996, an introduction that started the collaboration behind the paper by Di Crescenzo and Shaked (1996). Antonio Di Crescenzo and Maria Longobardi are partially supported by MIUR-PRIN 2008 “Mathematical models and computation methods for information processing and transmission in neuronal systems subject to stochastic dynamics”.

Copyright information

© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

Di Crescenzo, A., Longobardi, M. (2013). Stochastic Comparisons of Cumulative Entropies. In: Li, H., Li, X. (eds) Stochastic Orders in Reliability and Risk. Lecture Notes in Statistics, vol 208. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-6892-9_8

DOI: https://doi.org/10.1007/978-1-4614-6892-9_8

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-6891-2

Online ISBN: 978-1-4614-6892-9

eBook Packages: Mathematics and Statistics (R0)