Abstract

Complex problem solving in naturalistic environments is fraught with uncertainty, which has significant impacts on problem-solving behavior. Thus, theories of human problem solving should include accounts of the cognitive strategies people bring to bear to deal with uncertainty during problem solving. In this article, we present evidence that analogy is one such strategy. Using statistical analyses of the temporal dynamics between analogy and expressed uncertainty in the naturalistic problem-solving conversations among scientists on the Mars Rover Mission, we show that spikes in expressed uncertainty reliably predict analogy use (Study 1) and that expressed uncertainty reduces to baseline levels following analogy use (Study 2). In addition, in Study 3, we show with qualitative analyses that this relationship between uncertainty and analogy is not due to miscommunication-related uncertainty but, rather, is primarily concentrated on substantive problem-solving issues. Finally, we discuss a hypothesis about how analogy might serve as an uncertainty reduction strategy in naturalistic complex problem solving.

Analogy as a heuristic for complex problem solving under uncertainty

Uncertainty is a driving feature of real-world complex problem solving; there is much about the past, present, and future that is uncertain, and problem solvers are repeatedly challenged with resolving many small and large uncertainties. There are accounts of uncertainty in finance (Rowe, 1994), management (Priem, Love, & Shaffer, 2002), medicine (Brashers et al., 2003), negotiation (Bottom, 1998), military tactics (Cohen, Freeman, & Thompson, 1998), and engineering (Wojtkiewicz, Eldred, Field, Urbina, & Red-Horse, 2001). Problem solvers across these settings draw on an array of domain-specific tools and methods to deal with uncertainty, such as rapid prototyping in engineering and design (Skelton & Thamhain, 2003) and statistical procedures such as Monte Carlo simulations and computational modeling in the sciences. Additionally, domain-general cognitive processes, such as mental simulation, appear to be important ways of dealing with uncertainty (Christensen & Schunn, 2009; Trickett, Trafton, & Schunn, 2009). As the range of domain-specific accounts, tools, and methods demonstrates, uncertainty is complex as a phenomenon and ubiquitous in complex real-world settings.

The focus of this article is on the cognitive mechanisms problem solvers use to deal with psychological uncertainty—an internal feeling of being uncertain about information (e.g., data, expected utility of actions/decisions) that may or may not be objectively uncertain (Jousselme, Maupin, & Bosse, 2003; Kahneman & Tversky, 1982). This psychological uncertainty can drive behavior such as decision making (Kahneman & Tversky, 1982) or problem solving to reduce uncertainty to acceptable levels (Trickett et al., 2009). If a problem solver is unaware of the uncertainty in available information, no psychological uncertainty exists, and no problem solving is required to resolve uncertainty.

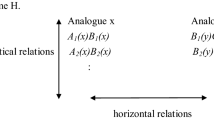

Specifically, we focus on the cognitive process of analogy and how it might be an important strategy for dealing with uncertainty. Analogy is a fundamental cognitive process in which a source (a known piece of information) and a target (a problem or current domain of knowledge) are linked to one another by a systematic mapping of attributes and/or relations, which then allows for transfer of existing knowledge to the target (Gentner, 1983; Holyoak & Thagard, 1996b). For example, despite their differences in scale and domain, the solar system and an atom can be seen as analogous by virtue of both having a nucleus and orbiting elements. The psychological literature on analogy and problem solving has often focused on analogy’s role in facilitating the creation of novel concepts and solutions, such as conceptual change in scientific discovery (Gentner et al., 1997; Holyoak & Thagard, 1996a; Langley & Jones, 1988; Nersessian, 1999), generating innovative ideas in design (Chan et al., 2011; Dahl & Moreau, 2002; Helms, Vattam, & Goel, 2009; Linsey, Laux, Clauss, Wood, & Markman, 2007; Wilson, Rosen, Nelson, & Yen, 2010), and developing creative artistic visions (Okada, Yokochi, Ishibashi, & Ueda, 2009).

A less well-studied function of analogy is its support of more "mundane" problem solving. Theoretical models of general problem solving suggest that analogy may be an important strategy for dealing with the many instances of uncertainty that occur in problem solving. Analogy has been addressed by information-processing models of problem solving based on the notion of heuristic search in a problem space (Newell & Simon, 1972): Analogy is sometimes implemented as a “weak method” for advancing problem solving when known “strong methods” that take advantage of the problem structure are unavailable or unknown (Jones & Langley, 2005; Langley, McKusick, Allen, Iba, & Thompson, 1991). Anderson and colleagues’ ACT–R cognitive architecture implements analogy as a way of generating potential production rules in situations where known/tested production rules are absent (Anderson, Fincham, & Douglass, 1997; Singley & Anderson, 1989). Across these models, analogy is used to overcome perceived cognitive obstacles.

These theoretical claims have empirical support. Houghton (1998) suggested anecdotally that analogy is an important strategy for decision making under uncertainty in policymaking. In his in vivo studies of four microbiology labs, Dunbar (1997) reported that scientists responded to anomalies and unexpected findings with analogies. When exploring potential methodological flaws that might have produced the anomalies, the scientists tended to analogize from studies and processes involving the same organism; when new causal models or modifications to theories were explored, the analogies tended to be from studies and processes involving other organisms. Finally, Ball and Christensen (2009) found, in their analysis of a professional product design team’s naturalistic problem-solving conversations, that increases in uncertainty were associated with analogy. Importantly, they also found that uncertainty generally returned to baseline levels immediately following analogy. Thus, there is both theoretical and empirical support for supposing that analogy might function as an uncertainty resolution strategy.

However, for several reasons, additional empirical work is required to establish the relationship between uncertainty and analogy. First, there are questions surrounding the statistical robustness of existing empirical findings. Dunbar’s (1997) research, while rich in qualitative detail and external validity, did not include formal statistical analyses. Ball and Christensen (2009) reported statistical analyses but employed a chi-square test that assumes independence of observations. This assumption was likely not warranted, given interdependencies among statements from the same individual and among statements closer together in time; thus, their inferences run the risk of being misleading or invalid (Lewis & Burke, 1949; Rietveld, Van Hout, & Ernestus, 2004). To validate their inferences, analyses are required that appropriately account for the inherent temporal dependencies in the data (e.g., hierarchical linear modeling, sequential analysis).

Another gap involves the generalizability of the findings. Both Dunbar (1997) and Ball and Christensen (2009) focused on specific problem-solving contexts: microbiologists’ reasoning in weekly lab meetings and two brainstorming sessions of a product design team, respectively. This narrowed scope was likely a result of the highly resource-intensive nature of their methodology, trading off breadth of scope for depth of analysis. However, it is possible that analogy might be an uncertainty resolution strategy in some problem-solving contexts (e.g., science and engineering) and not others (e.g., negotiation, planning). More data across additional types of problem-solving contexts are needed.

Additionally, prior work did not examine whether the link between analogy and uncertainty might be specific to particular types of uncertainty (i.e., about the task vs. about what people said). For example, it is possible that in the case of Ball and Christensen’s (2009) designers, the analogies might have primarily been addressing uncertainty surrounding communicative misalignments; for instance, one designer might express uncertainty in the context of being unsure about what another designer is saying, leading another designer to use an analogy as a clarifying explanation, thereby serving a communicative function. By contrast, the designers might express collective uncertainty about the viability of a design proposal, and an analogy might be brought in to help evaluate the proposal, thereby serving a function closer to that of an uncertainty resolution strategy for generative problem-solving purposes (e.g., generating solutions or predictions about solutions/problems). Given that analogy serves a range of communicative functions in complex domains (e.g., persuasion, illustration, and explanation in political argumentation; Blanchette & Dunbar, 2001; Whaley & Holloway, 1997), it is important to clarify the nature of the uncertainty being addressed.

This article addresses these gaps with detailed analyses of the interplay between uncertainty and analogy in the naturalistic problem solving of science and engineering teams. Three complementary streams of analyses (here separated as studies using the same data set) were conducted on science teams on the Mars Exploration Rover (MER) mission. Study 1 tested whether uncertainty preceded the use of different types of analogies. Study 2 then tested whether uncertainty was significantly reduced following analogy use. Study 3 was a qualitative follow-up analysis to explore (1) the links between uncertainty and analogy across different problem-solving contexts (e.g., planning, reasoning) and (2) the extent to which analogy following uncertainty served a communication clarification function or facilitated problem solving.

General method

Before discussing the method and results of the three studies, we present general information about the research context, data sampling methods, and coding.

Research context

The data analyzed in this study consist of transcribed informal, task-relevant conversations between scientists on the multidisciplinary MER science team (Squyres, 2005). The MER mission’s goal was to discover evidence for a history of surface liquid water on Mars. To meet this goal, the mission involved landing two identical rovers on opposite sides of Mars and directing them to drive, photograph, and dig. The outcome of the mission was a success, with the overall team discovering conclusive evidence for a history of water within the first 90 Martian days of the mission. The path to this significant discovery involved complex problem solving on a diverse set of tasks, varying from those specific to the science and engineering domains of this mission (e.g., interpretation of geological data) to tasks shared with all sciences (e.g., rover experiment planning, equipment debugging) and general work processes (e.g., meeting planning, group process discussions). As such, this context represents a strategic choice for testing the relationship between uncertainty and analogy within several different forms of complex problem solving.

The scientists were co-located at the Jet Propulsion Laboratory. The greater science team was broken into two largely independent groups (MER A and B) that followed each rover. Each rover science team was further made up of disciplinary science and engineering subteams. The scientists in the two teams knew of each other’s work and occasionally exchanged individuals and lessons learned but were generally separate day by day. Communication was primarily conducted via face-to-face structured and informal meetings within and between subteams.

Data collection and sampling

Data are taken from 20 data collection trips (10 observation trips of each rover team) distributed over the 90-day nominal mission. All data were collected from video cameras placed on top of large shared screens located near different subgroups’ workstation clusters. Each trip consisted of 3 days of approximately 8 h of data collection each day, resulting in almost 500 h of video. Informed consent was obtained for videotaping. The scientists quickly became habituated to the cameras—at times, discussing personal information.

We analyzed data at the level of the conversation with participants embedded in these conversations. The analyzed conversations in the data set were informal and task relevant. Structured, formal meetings were excluded because they were conducted in a highly formal round-robin presentation style. Task-relevant talk included anything regarding the mission. Off-topic talk included topics such as medical issues or family vacations. Because the conversations could include any combination of the over 100 MER scientists, conversations did not cleanly nest into stable small teams. Furthermore, given the anonymous nature of the study, the video quality, and the tendency for speakers to occasionally stand offscreen or face away from the camera, it was often not possible to identify individuals.

We sampled 11 h 25 min of informal conversation clips from early and late in the mission, with the length of clips based on when conversations naturally began and stopped. The clips were then transcribed into 12,336 utterances. We coded whether each utterance was about MER-related processes or not, broadly speaking (Cohen’s kappa = .96). The analyses were conducted on the 11,856 on-topic utterances, or roughly 11 h. These 114 conversation clips were from 8 to 760 utterances long (M = 104, median = 67, SD = 122). Clips were of groups ranging from 2 to 10 participants.

Coding of protocol data

Analogy

Transcript segments were coded for analogy following the method developed by Dunbar (1995, 1997), where an analogy was defined as a reference to another source of knowledge, with an attempt to transfer concepts from that source to the target domain. Two independent, trained coders assessed the occurrence of analogy at the utterance level, with an interrater reliability of 99 % agreement, Cohen’s kappa = .60 (see Footnote 1). All disagreements were resolved by discussion. Once analogies were found, they were then coded for analogical distance (Cohen’s kappa = .78) and purpose (Cohen’s kappa = .78). Analogical distance had two values: (1) Near analogies were mapped from the same or a similar specialty subject either within that MER mission or from the domain of geology or atmospheric science (e.g., noting that a current rock has features and/or possibilities and challenges similar to the rock the scientists analyzed the week before), and (2) far analogies were mapped from domains outside of MER and geology/atmospheric science (e.g., when a scientist compared a rover instrument planning problem with a chess game, mapping that the order of moves is key).
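The gap between 99 % raw agreement and a kappa of .60 is what one would expect when the coded event is rare, because kappa discounts the agreement that two coders would reach by chance. The following is a minimal sketch of that arithmetic, not the authors' procedure; the counts are hypothetical and were chosen only to reproduce a similar pattern.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders' labels over the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(coder_a)
    # Proportion of items on which the two coders gave the same label.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance from each coder's marginal label rates.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical coding of 1,000 utterances (1 = analogy, 0 = no analogy):
# both coders say "analogy" 8 times, each says it alone 5 times,
# and both say "no analogy" 982 times.
coder_a = [1] * 8 + [1] * 5 + [0] * 5 + [0] * 982
coder_b = [1] * 8 + [0] * 5 + [1] * 5 + [0] * 982

raw_agreement = sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)
kappa = cohens_kappa(coder_a, coder_b)
print(f"agreement = {raw_agreement:.2f}, kappa = {kappa:.2f}")
```

With these invented counts, nearly all of the agreement comes from the many utterances that neither coder marked, which is why kappa is much lower than the raw agreement rate.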

Analogies were coded for two types of purpose: (1) Problem-related analogies were used to assist in various substantive problem-solving functions, including explaining or predicting problems and generating or explaining solutions to problems (Bearman, Ball, & Ormerod, 2007) (e.g., one scientist noted that the process that produced the clumpy soil they had just observed might be the same geochemical process they were debating, but acting on soil instead of a rock), and (2) non-problem-related analogies were primarily used to show illustrative commonalities and differences not in the immediate context of attempting to solve a problem (e.g., “this [Martian rock formation] reminds of the Grand Canyon”).

Uncertainty

Two research assistants coded each utterance for the presence of uncertainty, using a syntactic approach adapted from Trickett, Trafton, Saner, and Schunn (2007), where “hedge words” were used to identify utterances potentially containing uncertainty (e.g., “I guess,” “I think,” “possibly,” “maybe,” “I believe”). Utterances containing these words were marked as uncertainty present only if it was also clear from context that the segment was spoken with uncertainty. Interrater reliability was acceptable (Cohen’s kappa = .75). All disagreements were resolved by discussion.
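The first, purely syntactic pass of this coding scheme can be sketched as a phrase filter. The sketch below is illustrative only: the hedge list contains just the five examples quoted above (the full list is not given here), the function name is hypothetical, and the second step, the human judgment of whether the utterance was actually spoken with uncertainty, is deliberately left out.

```python
import re

# Only the example hedges quoted in the text; the full list is not reproduced here.
HEDGES = ("I guess", "I think", "possibly", "maybe", "I believe")

# Match any hedge as a whole word or phrase, ignoring case.
HEDGE_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(h) for h in HEDGES) + r")\b",
    re.IGNORECASE,
)


def flag_candidates(utterances):
    """Return one bool per utterance: True if it contains a hedge phrase.

    A True value marks a candidate only; a human coder would still have to
    confirm from context that the utterance expresses uncertainty.
    """
    return [bool(HEDGE_PATTERN.search(u)) for u in utterances]


sample = [
    "Maybe it's a basalt.",
    "Drive ten meters and take the image.",
    "I think that's just dust on the lens.",
]
print(flag_candidates(sample))
```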

Study 1

Method

To test whether situations involving uncertainty reliably spark the use of analogies, a time-lagged logistic regression analysis was employed—time-lagged because this analysis would estimate the change in the probability of an analogy being used at time t + 1 on the basis of uncertainty levels at time t, and logistic because the outcome variable was binary (i.e., did a scientist use an analogy or not). This analysis assumed that (1) there was some baseline probability of an analogy being used in any given time slice and (2) a change in this probability as a function of uncertainty in the previous time slice would reflect the effects of uncertainty on analogy use.

Because the focus of our analysis was on information exchange and the interaction between cognitive events, blocks of utterances, rather than time per se, formed the unit of analysis. Blocks were created by grouping each run of 10 consecutive utterances, beginning with the first line of each clip. The choice of block size reflected our focus on relatively fine-grained moment-to-moment cognitive processes, and the relatively unanchored segmentation of the transcript into blocks approximates a random sample of fluctuations in uncertainty in the scientists’ conversations. The data associated with each block consisted of (1) a binary variable, analogy, indicating whether any analogy was used in the block, and (2) a continuous variable, uncertainty, indicating the number of uncertain statements in the block (ranging from 0 to 10).
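The block construction described above can be sketched as follows. This is a minimal illustration under assumed data structures: each utterance is represented as a dictionary with hypothetical `uncertain` and `analogy` flags, and the function and field names are not taken from the original study.

```python
def make_blocks(clip, block_size=10):
    """Split one clip's utterances into consecutive blocks.

    clip: list of dicts with boolean 'uncertain' and 'analogy' fields
    (hypothetical field names). The last block of a clip may be short.
    """
    blocks = []
    for start in range(0, len(clip), block_size):
        chunk = clip[start:start + block_size]
        blocks.append({
            "n": len(chunk),                                   # utterances in block
            "uncertainty": sum(u["uncertain"] for u in chunk),  # count, 0..block_size
            "analogy": int(any(u["analogy"] for u in chunk)),   # 1 if any analogy
        })
    return blocks


# Toy clip of 25 utterances: every fourth one is uncertain, one contains an analogy.
toy_clip = [{"uncertain": i % 4 == 0, "analogy": i == 12} for i in range(25)]
for block in make_blocks(toy_clip):
    print(block)
```

Segmenting from the first line of each clip, as here, means that only the final block of a clip can contain fewer than 10 utterances.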

Because the focus of the analysis was on predicting analogy at time t + 1, we excluded data where potential independent–dependent variable pairs involved missing data. Specifically, because the overall sample of blocks included significant conversation breaks (i.e., between video clips), dependent variable data (analogies) from 114 (out of 1,283) blocks that occurred at the beginning of clips were excluded from the time-lagged analyses; for the same reason, independent variable data (uncertainty) from the 114 blocks that occurred at the end of clips were excluded from the analyses. Additionally, most of these blocks at the end of each clip contained fewer than 10 lines. To avoid statistical noise from heterogeneity of block size, dependent variable data (analogies) from 108 of the 114 end-of-clip blocks were also excluded from the analyses, due to being in small blocks, resulting in a total of 1,063 clean time t to time t + 1 block pairs in the analyses. However, the pattern of findings remained unchanged with the dependent variable data from the shorter blocks included.
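The pairing and exclusion rules above can be sketched in a few lines. The sketch assumes each clip has already been reduced to a list of block summaries with hypothetical keys `n`, `uncertainty`, and `analogy`; it is an illustration of the logic, not the authors' code.

```python
def lagged_pairs(blocks, block_size=10):
    """Build (uncertainty at t, analogy at t + 1) pairs within one clip.

    blocks: list of dicts with 'n', 'uncertainty', 'analogy' (hypothetical keys),
    in transcript order. Pairing adjacent blocks within a clip means the first
    block never serves as an outcome and the last never serves as a predictor.
    """
    pairs = []
    for current, following in zip(blocks, blocks[1:]):
        if following["n"] < block_size:
            continue  # drop outcomes that come from short end-of-clip blocks
        pairs.append((current["uncertainty"], following["analogy"]))
    return pairs


# Toy clip: two full blocks followed by a short final block.
toy_blocks = [
    {"n": 10, "uncertainty": 2, "analogy": 0},
    {"n": 10, "uncertainty": 0, "analogy": 1},
    {"n": 4, "uncertainty": 1, "analogy": 0},
]
print(lagged_pairs(toy_blocks))
```

Running the function clip by clip and concatenating the results keeps pairs from spanning the conversation breaks between video clips.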

Results

In total, 94 analogies were identified, which were nested into 73 of the 1,063 block pairs in the analyses. Of the 94 analogies, the majority were categorized as near (72 %), with only 28 % far. Similarly, most of the analogies were problem related (70 %), rather than non-problem-related (30 %). Uncertainty occurred in about 10 % of the transcript utterances.

We estimated a model predicting analogy at time t + 1 with uncertainty at time t. Because the data presented here had an inherently multilevel structure, since blocks occurred within video clips, we first ascertained whether our model needed to account for within-clip dependencies. To test for the presence of these dependencies with respect to the analogy variable, we used the Hierarchical Linear Modeling 6 program (HLM 6; Raudenbush & Bryk, 2002) to estimate a random effects hierarchical linear model with blocks nested within clips and analogy as the dependent variable at the block level and no predictors at the block level. This model tested the degree to which variations in analogy were dependent on clip-level variations. Since the clip-level variance component was not significant (i.e., no violation of independence of blocks or events—no nesting effect), simple logistic regressions were performed.

Uncertainty was found to be a significant positive predictor of analogy at time t + 1 (see Table 1); specifically, each additional uncertain statement in the current block was associated with an approximately 27 % increase in the probability of analogy at time t + 1. The overall model was significant, χ²(1, N = 1,063) = 8.128, p = .004, Nagelkerke R² = .02, and the Hosmer–Lemeshow test was not significant, indicating a model with good fit, χ²(3) = 1.625, p = .65.
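For readers who want to see the shape of such a model, the sketch below fits a one-predictor logistic regression, logit P(analogy at t + 1) = b0 + b1 × uncertainty at t, by Newton-Raphson in plain Python. It is an illustration only: the data are invented, the function name is hypothetical, and the original analysis was presumably run in a standard statistics package. Note also that the exponentiated slope, exp(b1), is an odds ratio; if the 27 % figure above corresponds to that quantity (an assumption, since Table 1 is not reproduced here), it describes a multiplicative change in the odds of analogy per additional uncertain statement.

```python
import math


def fit_logistic(x, y, iterations=25):
    """Fit logit P(y = 1) = b0 + b1 * x by Newton-Raphson; returns (b0, b1)."""
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = 0.0          # gradient of the log-likelihood
        h00 = h01 = h11 = 0.0  # entries of the information matrix
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if det == 0.0:
            break  # predictor has no variation; slope is not identifiable
        # Newton step: solve the 2 x 2 system (information matrix) * step = gradient.
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < 1e-10:
            break
    return b0, b1


# Invented block pairs: uncertainty count at time t, analogy (0/1) at time t + 1.
uncertainty_t = [0, 0, 0, 0, 1, 1, 1, 2, 2, 3, 3, 4]
analogy_t1 = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1, 0, 1]

b0, b1 = fit_logistic(uncertainty_t, analogy_t1)
print(f"slope = {b1:.3f}, odds ratio per uncertain statement = {math.exp(b1):.3f}")
```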

Figure 1 shows the increasing trend in the proportion of blocks with analogy as a function of the number of uncertain statements in their preceding blocks, with a noticeable increase in analogy rate only when at least 40 % of the utterances in the prior block involved uncertainty. The increased width of the error bars reflects the relative rarity of blocks with high uncertainty.

Analysis by analogy subtype

To explore how the link between uncertainty and analogy might vary as a function of the distance and purpose of the analogy, the time-lagged regression models were repeated for each analogy variation. Specifically, four additional models were run, each with uncertainty at time t as the predictor (as before), but with the models differing in that the dependent variable was limited to a specific type of analogy: (1) analogy-near, the use of any near analogies, regardless of purpose; (2) analogy-far, any far analogies, regardless of purpose; (3) analogy-p+, the use of any problem-related analogies, regardless of distance; and (4) analogy-p−, the use of any non-problem-related analogies, regardless of distance. Because these variables were not defined in a mutually exclusive manner and some blocks contained more than one analogy, some blocks defined as, for example, analogy-near = 1 might also include far analogies.

The results of these models are summarized in Table 2. This exploratory analysis showed that the pattern linking uncertainty to analogy use was statistically significant with near and problem-related analogies, but not for far and non-problem-related analogies. It is difficult to infer from this analysis whether the findings imply that uncertainty triggers near analogies in general, problem-related analogies in general, or only near problem-related analogies, given that there was a significant association between distance and purpose; most near analogies were problem-related analogies (78 %), χ2(1) = 7.02, p < .01.

To clarify this issue, four additional models were run, each predicting one of the four possible combinations of distance and purpose, that is, analogy-near-p+, analogy-near-p−, analogy-far-p+, and analogy-far-p− (see Table 3). As with the previous set of models, each separate model had uncertainty at time t as the predictor.

In this set of models, only the model for uncertainty predicting near problem-related analogies reaches statistical significance. While the Ns for the other distance–purpose combinations are too small (around N = 10; see Table 3) for robust statistical tests when an interaction analysis is undertaken, the trends of those statistical tests nonetheless suggest that both near analogies in general and problem-related analogies in general are triggered by uncertainty. Specifically, the models for both near non-problem-related and far problem-related analogies yield effect sizes comparable to that of the near problem-related case, with only far non-problem-related analogies not increasing after rises in uncertainty. Additional data would be required to verify the stability of these trends.

Discussion

The time-lagged logistic regression models demonstrated that situations involving uncertainty were reliable predictors of analogy use. Follow-up exploratory analyses suggested that this relationship may be specific to near analogies and problem-related analogies. Because of the reduced Ns for far and non-problem-related analogies, we cannot be certain that there is no relationship between those kinds of analogies and uncertainty. However, the higher base rate and co-occurrence of near problem-related analogies suggest that they are the preferred approach for dealing with uncertainty, as compared with using far and/or non-problem-related analogies. Furthermore, we might expect near analogies to be more closely related to unpacking the issue at hand and, therefore, more effective for problem solving under uncertainty. Additionally, non-problem-related analogies are unlikely to be useful for dealing with uncertainty, since they did not occur in the context of the scientists and engineers attempting to solve and/or explain problems. Finally, the reduced Ns for far and non-problem-related analogies would likely result in underpowered statistical tests for follow-up analyses. Given these theoretical and pragmatic motivations, we focus in Studies 2 and 3 on near, problem-related analogies.

Study 2

While Study 1 showed that analogy use tended to follow rises in uncertainty levels, additional evidence of a different kind is needed before concluding that analogy might be a strategy for dealing with uncertainty during problem solving. One natural question is whether the use of analogy tends to alleviate elevated uncertainty levels. To address this question, Study 2 explored how uncertainty levels changed before, during, and after analogy in relation to baseline levels of uncertainty.

Method

The MER transcript was first segmented into one of five segment types: (1) preanalogy (pre-A) segments, 10 utterances just prior to an analogy episode; (2) during-analogy (during-A) segments, utterances from the beginning to end of an analogy episode; (3) postanalogy (post-A) segments, 10 utterances immediately following an analogy episode; (4) post-postanalogy (post-A + 1) segments, 10 utterances immediately following postanalogy utterances; and (5) baseline segments, each segment of 10 utterances at least 25 utterances away from the other segment types. The measure of uncertainty in each segment was the proportion of uncertain statements.

The sampling strategy for the baseline segments was designed to provide an estimate of uncertainty levels when the scientists were not engaged in preanalogy, during-analogy, or postanalogy conversation, with the logic being that a certain amount of “lag” or spillover of uncertainty was assumed to take place surrounding analogy episodes. During-A segments varied by the length and number of analogies in the analogy episode: The scientists tended to use both single analogies and multiple analogies linked together in a coherent reasoning chain, most often within 20 utterances of each other, and such chains are analytically treated as one during-A segment. To illustrate, Fig. 2 graphically represents an example pre-A to post-A segment sequence surrounding a single-analogy episode, in contrast with an example segment sequence surrounding a multiple-analogy episode.

A total of 65 analogy episodes were identified. In keeping with our focus on unpacking whether analogies sparked by uncertainty might act to alleviate that uncertainty, we focused our analysis on analogy episodes that included at least one near problem-related analogy. This filter excluded analogy episodes that consisted of only near non-problem-related or far problem-related analogies and resulted in a total of 37 analogy episodes. Additionally, some analogy episodes occurred within fewer than 10 utterances of either the beginning or the end of clips; to ensure stability of the estimates of pre- and postanalogy uncertainty, these pre- and postsegments were excluded from the analysis. Of the 37 analogy episodes that included near problem-related analogies, 5 were missing a pre-A segment, and 3 were missing a post-A segment, but none were missing both. There were a total of 908 baseline segments, which provided a stable estimate of the baseline level of uncertainty in the scientists’ conversations.
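The windowing around an analogy episode, together with the exclusion of pre- and postsegments that would run past the edge of a clip, can be sketched as follows. This is a hypothetical illustration: utterances are assumed to be indexed from 0 within a clip, the function and key names are invented, and the selection of baseline segments (at least 25 utterances from any other segment type) is not implemented.

```python
def segment_episode(start, end, clip_len, width=10):
    """Index ranges for the segments surrounding one analogy episode.

    start, end: inclusive 0-based indices of the episode's first and last
    utterance within its clip. A window that would extend beyond the clip
    is returned as None, mirroring the exclusion of truncated segments.
    """
    def window(lo, hi):
        # Keep a window only if all of its utterances exist in the clip.
        return range(lo, hi) if lo >= 0 and hi <= clip_len else None

    return {
        "pre_A": window(start - width, start),
        "during_A": range(start, end + 1),
        "post_A": window(end + 1, end + 1 + width),
        "post_A_plus_1": window(end + 1 + width, end + 1 + 2 * width),
    }


def proportion_uncertain(flags, segment):
    """Proportion of uncertain utterances in a segment (None if excluded)."""
    if segment is None:
        return None
    return sum(flags[i] for i in segment) / len(segment)


# Toy clip of 40 utterances with an analogy episode spanning utterances 12-14.
flags = [i in (3, 7, 9, 12, 13) for i in range(40)]
segments = segment_episode(12, 14, clip_len=len(flags))
for name, seg in segments.items():
    print(name, proportion_uncertain(flags, seg))
```

A chain of linked analogies would be passed as a single episode, with `start` at the first analogy's first utterance and `end` at the last analogy's final utterance.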

Results

As Fig. 3 shows, uncertainty levels, measured in terms of the proportion of statements with coded uncertainty, in both pre-A and during-A segments were significantly greater than in baseline segments, while post-A and post-A + 1 segments were not.

A one-way analysis of variance (ANOVA) model was estimated with segment type as a five-level between-subjects variable and proportion of uncertain statements as the dependent variable. The overall ANOVA was statistically significant, F(4, 1007) = 5.79, p < .001, partial η² = .022, demonstrating that uncertainty levels differed significantly across segment types. To correct for Type I error inflation due to multiple comparisons, a Dunnett’s t test was used to compare pre-A, during-A, post-A, and post-A + 1 segments with baseline segments. Uncertainty was found to be greater than baseline only for pre-A and during-A segments, d = 0.57 (95 % CI = .51–.58), p = .022, and d = 0.77 (95 % CI = .64–.78), p < .001, respectively. Inspection of the graph yields the contextualizing insight that the average difference in uncertainty levels between pre-A and post-A segments is relatively small, which is reflected in the small standardized difference, d = 0.38 (95 % CI = .32–.42), and the nonsignificant contrast.
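The omnibus test and its effect size can be sketched in plain Python. The groups below are invented toy values, and the function names are hypothetical; the Dunnett comparisons and confidence intervals reported above are not reproduced. The last lines check that the reported partial η² follows from the reported F and degrees of freedom, using the identity partial η² = (F × df_between) / (F × df_between + df_within).

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within, eta_squared) for a one-way design."""
    values = [v for g in groups for v in g]
    grand_mean = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    # In a one-way design, partial eta squared equals eta squared.
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared


def partial_eta_squared_from_f(f_stat, df_between, df_within):
    """Recover partial eta squared from a reported F and its degrees of freedom."""
    return (f_stat * df_between) / (f_stat * df_between + df_within)


# Invented toy groups, only to show the calculation.
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
print(one_way_anova(toy_groups))

# Consistency check on the values reported in the text: F(4, 1007) = 5.79.
print(round(partial_eta_squared_from_f(5.79, 4, 1007), 3))
```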

Discussion

Converging on the results of Study 1, Study 2 showed that uncertainty levels were elevated above baseline levels just prior to analogy episodes. Furthermore, uncertainty levels were found to be elevated during analogy episodes as well but returned to baseline levels immediately following the end of analogy episodes. Thus, Study 2 provided evidence that analogies (in particular, near problem-related ones) not only tend to reliably occur in the presence of increased uncertainty, but also may play a part in alleviating it. However, we also note that uncertainty was slightly higher during analogy episodes than before them, possibly because analogies were introducing some uncertainty of their own. Alternatively, this finding might simply be an indication of a tight coupling between uncertainty and analogy use, as would be expected if analogizing is a strategy for supporting the handling of problems under conditions of uncertainty. Additionally, the difference between pre-A and post-A segments is relatively small, suggesting that the alleviating effect of analogy on uncertainty is not dramatic (i.e., complete resolution) but, instead, a “good enough” effect (i.e., returning to nonzero baseline levels of uncertainty). This more nuanced effect of analogy on uncertainty is explored further in Study 3.

Study 3

Having demonstrated that situations involving uncertainty tended to predict the onset of analogies and provided evidence that analogies are directly associated with a return of uncertainty to baseline levels, we now turn to our final step in investigating whether and how analogy may actually alleviate elevated uncertainty. Understanding more about the details of the connection provides more insight into the nature of the mechanism and also reduces concerns about the relationship being a spurious statistical relationship.

Specifically, Study 3 had two objectives. First, recall that analogies classified as problem related in our coding scheme included a range of functions supporting problem solving, including explaining solutions to problems to other team members and generating solutions or predictions for problems. In this study, we sought to determine the degree to which analogy was being used in situations of uncertainty involving communicative misalignments (e.g., “I don’t understand what you are saying”) versus generative problem solving per se (e.g., “what are we looking at,” “what is the value of this potential solution,” etc.). The second objective was to formulate and explore hypotheses about the precise ways in which analogy use might function to alleviate elevated uncertainty.

Qualitative coding and analyses were conducted to address these research objectives. Similar to Study 2, analyses focused on near problem-related analogies, since the link between uncertainty and analogy was clearest for those analogy subtypes.

Method

Communicative misalignments

Qualitative judgments

To explore the extent to which analogies were brought in to deal with miscommunication-related uncertainty (vs. generative problem solving), the first and second authors independently coded the pre-A utterances in each analogy episode using a binary classification scheme. A code of “1” indicated that at least one uncertain utterance expressed uncertainty about what another speaker was saying (e.g., “I don’t understand what you are saying,” “I think I know what you are suggesting”), and “0” indicated that none of the uncertainty utterances had this feature (i.e., reflected uncertainty in the problem-solving task). Interrater agreement for this coding was perfect with a Cohen’s kappa of 1.

Exploring how analogy might alleviate uncertainty

Uncertainty topics

To explore the range of sources of uncertainty associated with analogy use, the first and second authors together, as an expert panel, coded the pre-A and post-A uncertain utterances for topic, using a classification scheme describing the major categories of problem solving that are typically seen in such contexts: science content-related issues (e.g., data analysis, theorizing), science planning-related issues (instrumentation troubleshooting, rover experiment planning), and work/team process issues (Paletz, Schunn, & Kim, 2011). The distinction between content- and planning-related issues is analogous to the distinction between problem solving in the space of hypotheses and in the space of experiments in multiple-space search theories of science (Klahr & Dunbar, 1988). From a psychology of science perspective, it is important to know for which of these types of uncertainties analogy was most helpful. Furthermore, science is also inherently social, particularly so in this large team context, which involves additional problem solving related to social and work processes (e.g., assigning people to specific tasks, negotiating team meetings and work schedules, determining budgets and staffing). Work processes may elicit different problem-solving strategies for resolving uncertainty than are used in the core task itself, or analogy may be a common strategy for solving any kind of uncertainty. Examining the relationship of uncertainty to analogy across these major topics allows for exploration of the generality of the underlying mechanism.

These three major topics were captured in the following topics coding scheme:

1. Task-science (1 = yes, 0 = no): Any of the uncertain utterances have to do with interpreting data and discussing hypotheses about the geology or atmosphere of Mars;

2. Task-planning (1 = yes, 0 = no): Any of the uncertain utterances are about planning analyses, readings, or movements of a Mars Rover;

3. Process (1 = yes, 0 = no): Any of the uncertain utterances have to do with work process issues (e.g., scheduling, paper writing, division of labor).

The codes were not mutually exclusive; that is, a given segment could contain any or all of the three code types.
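
To make the non-exclusive structure of the scheme concrete, the following minimal sketch represents each coded segment as three independent binary flags and tallies how many segments carry each topic. The class name `SegmentCodes`, the function `topic_counts`, and the example values are hypothetical and invented for illustration; they are not the study's data or the authors' analysis code.

```python
from dataclasses import dataclass


@dataclass
class SegmentCodes:
    """Binary topic codes for one pre-A or post-A segment (1 = yes, 0 = no)."""
    task_science: int
    task_planning: int
    process: int


def topic_counts(segments):
    """Count how many segments carry each topic code.

    The codes are not mutually exclusive, so the counts may sum to more
    than the number of segments.
    """
    counts = {"task_science": 0, "task_planning": 0, "process": 0}
    for seg in segments:
        counts["task_science"] += seg.task_science
        counts["task_planning"] += seg.task_planning
        counts["process"] += seg.process
    return counts


# Hypothetical codes, invented for illustration only (not the study's data).
example_segments = [
    SegmentCodes(task_science=1, task_planning=0, process=0),
    SegmentCodes(task_science=1, task_planning=1, process=0),  # two topics at once
    SegmentCodes(task_science=0, task_planning=0, process=1),
]

print(topic_counts(example_segments))
# {'task_science': 2, 'task_planning': 1, 'process': 1}
```

In this invented example, three segments yield four topic codes in total, which is why topic frequencies under such a scheme should be read as counts of segments per topic rather than as shares that sum to 100%.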

Results and discussion

Communicative misalignments

The number of episodes with either pre- or postanalogy uncertainty is given in Table 3. Of the 37 analogy episodes examined, 9 had no uncertain utterances in pre-A segments. Analysis focused only on preanalogy uncertainty to uncover what kinds of uncertainty would be associated with subsequent analogy use. Out of the 28 episodes with pre-A uncertainty, none were judged to contain uncertainty stemming primarily from communicative misalignments. Thus, the analogies predicted by increases in uncertainty in this data set were largely serving not communicative alignment functions but, rather, more generative problem-solving functions.

Exploring how analogy might alleviate uncertainty

Uncertainty topics

As is shown in Table 4, the most common topic of uncertainty was task-science uncertainty, with task-planning and process uncertainty approximately equally prevalent. Thus, the majority of the uncertainty surrounding analogy appeared to be directly related to the central problem-solving tasks of the mission, such as interpreting data, discussing hypotheses/theories, and planning rover measurement trips, with a smaller number of various uncertainties related to coordinating schedules, writing papers, and so on. Next, we present prototypical examples from each topic type to show the character of the relationship of uncertainty and analogy. In particular, we focus upon whether the analogy is connected to the uncertainty and whether the analogy resolves the uncertainty.

A prototypical example of how task-science uncertainty is related to analogy is illustrated in a protocol excerpt in Table 5. For brevity and clarity, the protocol excerpts that follow are shown by speaker turn rather than utterance. In this excerpt, the scientists discuss potential explanations for some scarring patterns observed on a sample of rocks, focusing on the implications, if any, of the observed data patterns for the viability of life and historical presence of liquid surface water at this particular location on Mars. A ventifact is a rock that has been shaped, abraded, or polished by wind-driven sand. Desert varnish on Earth is a coating of inorganic and organic matter found on exposed rock surfaces in arid environments. At this point in the mission, historical evidence of liquid surface water had only recently been discovered, but not by this particular rover team.

The scientists attempt to reason about how the rocks under analysis could have formed desert varnish, whether what they observe is indeed desert varnish, and the implications for possible historical water at their site.

Two analogies figure in their reasoning process. The first is to how desert varnish is formed on Earth, where “it’s thought to maybe have biological implications”; the second is to the findings of a prior Mars mission (“certainly with Viking we saw frost”) that helped prove that Mars did have, at least, frozen water. These analogies appear to alleviate uncertainty enough for the scientists to advance their reasoning along the same lines: Speaker 2 says that it is “not implausible” that frost might play a role in creating Martian desert varnish, and Speaker 1 seems to express his increasing certainty about an acid fog hypothesis by saying “maybe that’s a good explanation of it.” Further, Speakers 4 and 2 begin to explore additional questions, suggesting that their discussion of the desert varnish and frost hypotheses has reached a level of uncertainty low enough to allow them to explore further implications.

In the next, task-planning excerpt (Table 6), team members at the long-term planning workstations attempt to decide how long to have the rover take a Mössbauer spectrometer instrument reading. A pass refers to the satellite revolutions around Mars that dictate when data can be rapidly uploaded/downloaded from the rover to Earth. The uncertainty is expressed by Speaker 1 in particular.

An analogy to an abstract “go and touch” situation generates a proposed decision rule. The scientists at this point have already coined the term “touch and go” to indicate an instrument reading followed by a drive; the speaker extends this concept to mean a drive followed by an instrument reading. The absence of expressed uncertainty—in particular, by Speaker 1—after the analogy-generated solution is proposed suggests that the analogy at least partially helped in resolving the uncertainty surrounding their decision making.

Finally, in an example of analogy dealing with process uncertainty (Table 7), the speakers discuss a proposal they sent to headquarters requesting additional time and observations.

Although the rovers were initially built to last 90 Martian days (sols), it became increasingly obvious that the rovers would outlast this time. In fact, Spirit lasted over 6 years, and Opportunity is still operational as of this writing, over 8 years since landing. However, the initial mission was only budgeted, scheduled, and staffed for these initial 90 days, after which the scientists were expected to return to their home institutions and the engineers were expected to shift to different projects. In this conversation, scientists from MER A on sol 77 discuss how they might continue conducting science in a sped-up, remote fashion. They express uncertainty regarding which parts of their proposal will be approved and, consequently, for how long the MER mission would be extended and how much engineering support they should expect.

Speaker 1 uses two analogies to strategize about how the team could continue functioning with a huge reduction in engineering staff. When the mission began, it took a full Martian day (sol) of science planning to determine a sol of rover activities; by the end of the first 90 days, this amount had dropped to roughly 3 h (Tollinger, Schunn, & Vera, 2006). The scientists here refer to recent process improvements in which one team was able to plan 2 Martian days of rover activity in one sol and the other was able to complete a short planning session. These analogies do not completely resolve their uncertainty (a complete resolution would consist of them hearing back from headquarters), but the analogous situations appear to help the speakers understand how they might continue to manage the rover with limited staffing.

As the three excerpts illustrate, the analogies in this data set often did not completely resolve the underlying issues causing the uncertainty; rather, they seemed to help by narrowing the space of possible resolutions—for example, by generating a resolution that was then rejected or by generating a potential resolution that required additional verification. In a number of cases, some residual uncertainty persisted postanalogy. Analogies were not final, definite resolutions to the problem-solving uncertainties but, instead, appeared to be quick, approximate aids for advancing problem solving, reasoning, and decision making.

General discussion

Summary of findings

This article explored the possibility that analogy is a general strategy for problem solving under uncertainty, using three complementary streams of analyses of naturalistic records of complex problem solving. Studies 1 and 2 showed that local increases in uncertainty reliably predicted the use of mainly near problem-related analogies and that uncertainty tended to return to baseline levels immediately following these kinds of analogies. Study 3 provided evidence that the uncertain contexts that tended to predict analogy use related to more generative problem solving, such as planning rover observations, interpreting data, and dealing with work process difficulties, rather than communicative misalignments. Finally, also in Study 3, we discussed a data-generated hypothesis that, rather than completely resolving the issue underlying the immediate uncertainty, analogy supports problem solving under uncertainty by narrowing the space of possibilities to facilitate quick, approximate problem solving, reasoning, and decision making.

Study strengths and limitations

An important strength of our work is its external validity. Our data consisted of recordings of diverse scientists conversing naturally, lending strength to our ability to generalize our conclusions to real-world problem solving. Another strength is the statistical sophistication of our work, in that we employed hierarchical modeling and sequential analysis to account for the possible influences of multilevel dependencies in the data, which prior work had not accounted for. Furthermore, while our findings are correlational in nature, the sequential nature of our analysis methods provides information about temporal order, which is one of the building blocks for inferring causality beyond simple correlations (e.g., when A reliably precedes B, the possibility that B causes A is ruled out). This statistical sophistication and the broad consistency of our findings with prior work (Ball & Christensen, 2009; Dunbar, 1997; Houghton, 1998) provide increased support for the claim that analogy is a domain-general strategy for problem solving under uncertainty.

A potential limitation of our work is the focus on scientists at work. However, rather than simply focusing on a narrow range of topics, the scientists dealt with many issues: not only the science itself, which was broad (e.g., atmospheric science, geochemistry, geology, soil science), but also how to deploy the rover and its instruments, as well as many types of work processes (e.g., paper publications, obtaining future funding, scheduling). Thus, our conclusions are likely to generalize to many real-world problem-solving settings to the degree that those settings share one or more of these diverse problem-solving activities.

Implications and conclusions

Implications for complex problem solving under uncertainty

The analogies the scientists used typically did not completely resolve uncertainty directly. Analogies are generally inductive in nature; inferences generated by analogy do not provide the certainty of logical deduction but, rather, need to be checked for validity by additional reasoning or problem solving (Gentner & Colhoun, 2010; Holyoak & Thagard, 1996b). Thus, it is not entirely surprising that analogies may not completely resolve uncertainty. Furthermore, scientists and engineers do not always seek to completely eliminate uncertainty (and indeed, sometimes it is not possible to do so) but often drive problem solving with the aim of converting it into approximate ranges sufficient to continue problem solving (Schunn, 2010).

With that being said, the question remains whether analogy is an adaptive cognitive strategy for dealing with uncertainty. Information in naturalistic problem solving often comes at a cost, and decisions must often be made quickly, so it may be adaptive in those situations to “satisfice” with a heuristic like analogy (Shah & Oppenheimer, 2008; Simon, 1956). Furthermore, under certain conditions, heuristics are not only more efficient than logical or statistically normative methods, but also more accurate (Gigerenzer & Brighton, 2009). For example, in so-called “large worlds,” information is scarce or limited, the structure of the problem environment is dynamic and unstable (e.g., contingencies and probabilities may change, unexpected events may occur), and information bearing on decision rules is incomplete or unavailable (Luan, Schooler, & Gigerenzer, 2011). Under these conditions, heuristics that selectively ignore problem-relevant information outperform more "optimal" methods that take as much information into account as possible (Gigerenzer & Brighton, 2009; Gigerenzer & Gaissmaier, 2011). Thus, under certain conditions (e.g., under tight time pressures, large worlds), analogy might be an adaptive or even optimal uncertainty resolution strategy. Of course, at this juncture, it is an open question whether the expert scientists in our data set succeeded in their problem solving under uncertainty because of or in spite of analogy use. Future research should examine this question, perhaps by comparing teams either using or not using analogy, or testing computational simulations that pit analogies against other uncertainty reduction tools/techniques in “large world” conditions.

Implications for analogy and theories of cognition

Our findings bridge the gap between general theories of human problem solving and theories dealing specifically with analogy. First, our results lend support to theories of cognitive architecture that treat uncertainty as an important triggering mechanism for analogy (Jones & Langley, 2005; Langley et al., 1991; Singley & Anderson, 1989). Uncertainty is a sign of a temporary impasse, and analogy appears to be among the mechanisms for overcoming such an obstacle. From the perspective of models of analogy, too, this work provides some potentially useful theoretical contributions. While many detailed computational models of the crucial components of analogy (e.g., retrieval, mapping, inference) have been developed (Forbus, Gentner, & Law, 1994; Gentner, 1983; Hofstadter & Fluid Analogies Research Group, 1995; Holyoak & Thagard, 1989; Hummel & Holyoak, 1997; Kokinov, 1998), modeling efforts have only recently begun to explicitly integrate task-specific constraints. A notable exception is the work of Keith Holyoak, Paul Thagard, and their colleagues, whose models take into account how the pragmatic goals of the agent influence retrieval and mapping (Holyoak & Thagard, 1989; Thagard, Holyoak, & Nelson, 1990).

Our findings suggest that uncertainty may impose an additional constraint on analogical retrieval, given that near analogies figured prominently, both in the data set generally and also with respect to the use of analogy for dealing with problems under uncertainty. Given the types of uncertainty raised (science data analysis and interpretation, science-related planning, work process issues), it is not surprising that analogies from near topics were used most often. The overall preponderance of near analogies for these kinds of activities is consistent with other real-time studies of science (Dunbar, 1995, 1997; Saner & Schunn, 1999) and engineering design (Christensen & Schunn, 2007). Near analogies drawn from the team’s domain of expertise are most likely to be well understood and, therefore, effectively support making predictions under uncertainty and diagnosing possible problems. However, our data did not conclusively rule out the possible role of far analogies for dealing with uncertainty, given the lack of statistical power. Future research should continue investigating the extent to which analogies of varying distances might be used for this function.

Finally, a related open question raised by our work concerns the degree to which the triggering mechanisms for analogy under uncertainty are governed by an explicit strategy selection process (Lovett & Schunn, 1999; Schunn, Lovett, & Reder, 2001) or by more implicit mechanisms, such as associative memory retrieval or automatic strategy selection (Lovett & Anderson, 1996; Reder & Schunn, 1996). A further question is whether these mechanisms differ from those that operate when analogy is used in other cognitive tasks, such as learning and creative idea generation. Theories of analogy would be enriched by an understanding of how analogy use may be triggered in different ways across different types of cognitive tasks.

Notes

We were not able to find systematic intercoder differences in the coding of analogy. When very rare events in large data sets are coded, there is typically a high percent reliability and a much lower kappa. The discrepancy between the two is a typical statistical pattern; kappa was created to adjust for high percent reliability by chance in cases with one dominant code (Smith, 2000). The low kappa is also common for findings of rare events, since humans have trouble staying vigilant under such conditions.
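
The pattern described in this note can be illustrated with a small worked example. The sketch below computes percent agreement and Cohen's kappa for two coders applying a binary code to a rare event. The counts are hypothetical and chosen only to show the arithmetic; they are not the reliability data from this study, and the function names are our own illustrative choices.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of items on which the two coders assigned the same code."""
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(coder_a)
    p_observed = percent_agreement(coder_a, coder_b)
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    # Chance agreement: for each code, multiply the two coders' marginal
    # rates of using that code, then sum over codes.
    p_chance = sum(
        (freq_a[code] / n) * (freq_b[code] / n)
        for code in set(freq_a) | set(freq_b)
    )
    if p_chance == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical counts for 1,000 utterances (illustration only):
# both coders mark the event on 10, each coder alone marks it on 10 others,
# and both mark "no event" on the remaining 970.
coder_a = [1] * 10 + [1] * 10 + [0] * 10 + [0] * 970
coder_b = [1] * 10 + [0] * 10 + [1] * 10 + [0] * 970

print(round(percent_agreement(coder_a, coder_b), 2))  # 0.98
print(round(cohens_kappa(coder_a, coder_b), 2))       # 0.49
```

With these invented counts, the coders agree on 98% of utterances, yet chance agreement is already about 96% because "no event" dominates, so kappa is only about .49.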

References

Anderson, J. R., Fincham, J. M., & Douglass, S. (1997). The role of examples and rules in the acquisition of a cognitive skill. Journal of Experimental Psychology: Learning, Memory, and Cognition, 23, 932–945.

Ball, L. J., & Christensen, B. T. (2009). Analogical reasoning and mental simulation in design: Two strategies linked to uncertainty resolution. Design Studies, 30, 169–186.

Bearman, C. R., Ball, L. J., & Ormerod, T. C. (2007). The structure and function of spontaneous analogising in domain-based problem solving. Thinking and Reasoning, 13(3), 273–294.

Blanchette, I., & Dunbar, K. N. (2001). Analogy use in naturalistic settings: The influence of audience, emotion, and goals. Memory & Cognition, 29, 730–735.

Bottom, W. P. (1998). Negotiator risk: Sources of uncertainty and the impact of reference points on negotiated agreements. Organizational Behavior and Human Decision Processes, 76, 89–112.

Brashers, D. E., Neidig, J. L., Russell, J. A., Cardillo, L. W., Haas, S. M., Dobbs, L. K., & Nemeth, S. (2003). The medical, personal, and social causes of uncertainty in HIV illness. Issues in Mental Health Nursing, 24, 497–522.

Chan, J., Fu, K., Schunn, C. D., Cagan, J., Wood, K. L., & Kotovsky, K. (2011). On the benefits and pitfalls of analogies for innovative design: Ideation performance based on analogical distance, commonness, and modality of examples. Journal of Mechanical Design, 133, 081004.

Christensen, B. T., & Schunn, C. D. (2007). The relationship of analogical distance to analogical function and preinventive structure: The case of engineering design. Memory & Cognition, 35, 29–38.

Christensen, B. T., & Schunn, C. D. (2009). The role and impact of mental simulation in design. Applied Cognitive Psychology, 23, 327–344.

Cohen, M. S., Freeman, J. T., & Thompson, B. (1998). Critical thinking skills in tactical decision making: A model and a training strategy. In J. A. Cannon-Bowers & E. Salas (Eds.), Making decisions under stress: Implications for individual and team training (pp. 155–189). Washington, DC: American Psychological Association.

Dahl, D. W., & Moreau, P. (2002). The influence and value of analogical thinking during new product ideation. Journal of Marketing Research, 39, 47–60.

Dunbar, K. N. (1995). How scientists really reason: Scientific reasoning in real-world laboratories. In R. J. Sternberg & J. E. Davidson (Eds.), The nature of insight (pp. 365–395). Cambridge, MA: MIT Press.

Dunbar, K. N. (1997). How scientists think: On-line creativity and conceptual change in science. In T. B. Ward, S. M. Smith, & J. Vaid (Eds.), Creative thought: An investigation of conceptual structures and processes (pp. 461–493). Washington, DC: American Psychological Association.

Forbus, K. D., Gentner, D., & Law, K. (1994). MAC/FAC: A model of similarity-based retrieval. Cognitive Science, 19, 141–205.

Gentner, D. (1983). Structure mapping: A theoretical framework for analogy. Cognitive Science, 7, 155–170.

Gentner, D., Brem, S., Ferguson, R. W., Wolff, P., Markman, A. B., & Forbus, K. D. (1997). Analogy and creativity in the works of Johannes Kepler. In T. B. Ward, S. M. Smith, & J. Vaid (Eds.), Creative thought: An investigation of conceptual structures and processes (pp. 403–459). Washington, DC: American Psychological Association.

Gentner, D., & Colhoun, J. (2010). Analogical processes in human thinking and learning. In B. Glatzeder, V. Goel, & A. von Muller (Eds.), Towards a theory of thinking (pp. 35–48). Heidelberg, Germany: Springer.

Gigerenzer, G., & Brighton, H. (2009). Homo heuristicus: Why biased minds make better inferences. Topics in Cognitive Science, 1, 107–143.

Gigerenzer, G., & Gaissmaier, W. (2011). Heuristic decision making. Annual Review of Psychology, 62, 451–482.

Helms, M., Vattam, S. S., & Goel, A. K. (2009). Biologically inspired design: Process and products. Design Studies, 30, 606–622.

Hofstadter, D. R., & the Fluid Analogies Research Group. (1995). Fluid concepts and creative analogies: Computer models of the fundamental mechanisms of thought. New York, NY: Basic Books.

Holyoak, K. J., & Thagard, P. (1989). Analogical mapping by constraint satisfaction. Cognitive Science, 13, 295–355.

Holyoak, K. J., & Thagard, P. (1996a). The analogical scientist. In K. J. Holyoak & P. Thagard (Eds.), Mental leaps: Analogy in creative thought (pp. 185–209). Cambridge, MA: MIT Press.

Holyoak, K. J., & Thagard, P. (1996b). Mental leaps: Analogy in creative thought. Cambridge, MA: MIT Press.

Houghton, D. P. (1998). Historical analogies and the cognitive dimension of domestic policymaking. Political Psychology, 19, 279–303.

Hummel, J. E., & Holyoak, K. J. (1997). Distributed representations of structure: A theory of analogical access and mapping. Psychological Review, 104, 427–466.

Jones, R. M., & Langley, P. W. (2005). A constrained architecture for learning and problem solving. Computational Intelligence, 21, 480–502.

Jousselme, A., Maupin, P., & Bosse, E. (2003). Uncertainty in a situation analysis perspective. In Proceedings of the 6th International Conference on Information Fusion (pp. 1207–1214). Cairns, Australia.

Kahneman, D., & Tversky, A. (1982). Variants of uncertainty. Cognition, 11, 143–157.

Klahr, D., & Dunbar, K. N. (1988). Dual space search during scientific reasoning. Cognitive Science, 12, 1–48.

Kokinov, B. K. (1998). Analogy is like cognition: Dynamic, emergent, and context-sensitive. In K. J. Holyoak, D. Gentner, & B. K. Kokinov (Eds.), Advances in analogy research: Integration of theory and data from the cognitive, computational, and neural sciences (pp. 96–105). Sofia, Bulgaria: New Bulgarian University Press.

Langley, P. W., & Jones, R. M. (1988). A computational model of scientific insight. In R. J. Sternberg (Ed.), The nature of creativity: Contemporary psychological perspectives (pp. 177–201). New York, NY: Cambridge University Press.

Langley, P. W., McKusick, K. B., Allen, J. A., Iba, W. F., & Thompson, K. (1991). A design for the ICARUS architecture. In Proceedings of the AAAI Spring Symposium on Integrated Intelligent Architectures.

Lewis, D., & Burke, C. J. (1949). The use and misuse of the chi-square test. Psychological Bulletin, 46, 433–489.

Linsey, J. S., Laux, J. P., Clauss, E., Wood, K. L., & Markman, A. B. (2007). Increasing innovation: A trilogy of experiments towards a design-by-analogy method. In Proceedings of the ASME 2007 International Design Engineering Technical Conferences & Computers and Information in Engineering Conference.

Lovett, M. C., & Anderson, J. R. (1996). History of success and current context in problem solving: Combined influences on operator selection. Cognitive Psychology, 31, 168–217.

Lovett, M. C., & Schunn, C. D. (1999). Task representations, strategy variability, and base-rate neglect. Journal of Experimental Psychology: General, 128, 107–130.

Luan, S., Schooler, L. J., & Gigerenzer, G. (2011). A signal-detection analysis of fast-and-frugal trees. Psychological Review, 118, 316–338.

Nersessian, N. J. (1999). Model-based reasoning in conceptual change. In L. Magnani, N. J. Nersessian, & P. Thagard (Eds.), Model-based reasoning in scientific discovery (pp. 5–22). New York, NY: Kluwer Academic/Plenum.

Newell, A., & Simon, H. A. (1972). Human problem solving. Englewood Cliffs, NJ: Prentice-Hall.

Okada, T., Yokochi, S., Ishibashi, K., & Ueda, K. (2009). Analogical modification in the creation of contemporary art. Cognitive Systems Research, 10, 189–203.

Paletz, S. B. F., Schunn, C. D., & Kim, K. H. (2011). Conflict under the microscope: Micro-conflicts in naturalistic team discussions. Negotiation and Conflict Management Research, 4, 314–351.

Priem, R. L., Love, L. G., & Shaffer, M. A. (2002). Executives’ perceptions of uncertainty sources: A numerical taxonomy and underlying dimensions. Journal of Management, 28, 725–746.

Raudenbush, S. W., & Bryk, A. S. (2002). Hierarchical linear models: Applications and data analysis methods (2nd ed.). Thousand Oaks, CA: Sage.

Reder, L., & Schunn, C. D. (1996). Metacognition does not imply awareness: Strategy choice is governed by implicit learning and memory. In L. M. Reder (Ed.), Implicit memory and metacognition (pp. 45–78). Mahwah, NJ: Erlbaum.

Rietveld, T., Van Hout, R., & Ernestus, M. (2004). Pitfalls in corpus research. Computers and the Humanities, 38, 343–362.

Rowe, R. D. (1994). Understanding uncertainty. Risk Analysis, 14, 743–750.

Saner, L. D., & Schunn, C. D. (1999). Analogies out of the blue: When history seems to retell itself. In Proceedings of the 21st Annual Conference of the Cognitive Science Society.

Schunn, C. D. (2010). From uncertainly exact to certainly vague: Epistemic uncertainty and approximation in science and engineering problem solving. In B. H. Ross (Ed.), Psychology of learning and motivation (Vol. 53, pp. 227–252). San Diego, CA: Elsevier.

Schunn, C. D., Lovett, M., & Reder, L. M. (2001). Awareness and working memory in strategy adaptivity. Memory & Cognition, 29, 256–266.

Shah, A. K., & Oppenheimer, D. M. (2008). Heuristics made easy: An effort-reduction framework. Psychological Bulletin, 134, 207–222.

Simon, H. A. (1956). Rational choice and the structure of the environment. Psychological Review, 63, 129–138.

Singley, M. K., & Anderson, J. R. (1989). Transfer in the ACT* theory. In M. K. Singley & J. R. Anderson (Eds.), The transfer of cognitive skill (pp. 43–67). Cambridge, MA: Harvard University Press.

Skelton, T. M., & Thamhain, H. J. (2003). The human side of managing risks in high-tech product developments. In Proceedings of Engineering Management Conference, 2003. IEMC ’03. Managing Technologically Driven Organizations: The Human Side of Innovation and Change.

Smith, C. P. (2000). Content analysis and narrative analysis. In H. T. Reis & C. M. Judd (Eds.), Handbook of research methods in social and personality psychology (pp. 313–335). Cambridge: Cambridge University Press.

Squyres, S. (2005). Roving Mars: Spirit, Opportunity, and the exploration of the red planet. New York, NY: Hyperion.

Thagard, P., Holyoak, K. J., & Nelson, G. (1990). Analog retrieval by constraint satisfaction. Artificial Intelligence, 46, 259–310.

Tollinger, I. V., Schunn, C. D., & Vera, A. H. (2006). What changes when a large team becomes more expert? Analyses of speedup in the Mars Exploration Rovers science planning process. In Proceedings of the 28th Annual Conference of the Cognitive Science Society.

Trickett, S. B., Trafton, J. G., Saner, L. D., & Schunn, C. D. (2007). “I don’t know what’s going on there”: The use of spatial transformations to deal with and resolve uncertainty in complex visualizations. In M. C. Lovett & P. Shah (Eds.), Thinking with data (pp. 65–86). Mahwah, NJ: Erlbaum.

Trickett, S. B., Trafton, J. G., & Schunn, C. D. (2009). How do scientists respond to anomalies? Different strategies used in basic and applied science. Topics in Cognitive Science, 1, 711–729.

Whaley, B. B., & Holloway, R. L. (1997). Rebuttal analogy in political communication: Argument and attack in sound bite. Political Communication, 14, 293–305.

Wilson, J. O., Rosen, D., Nelson, B. A., & Yen, J. (2010). The effects of biological examples in idea generation. Design Studies, 31, 169–186.

Wojtkiewicz, S. F., Eldred, M. S., Field, R. V., Urbina, A., & Red-Horse, J. R. (2001). Uncertainty quantification in large computational engineering models. In Proceedings of the 42nd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics, and Materials Conference.

Author note

This research was supported in part by National Science Foundation Grants SBE-0830210, SBE-1064083, through the Science of Science and Innovation Policy Program, and CMMI-0855293. We are grateful to Carmela Rizzo for data and research assistant coordination, Irene Tollinger, Preston Tollinger, Mike McCurdy, Tyler Klein, and Alonso Vera for help with data collection, and Lauren Gunn, Carl Fenderson, Justin Krill, Tiemoko Ballo, Rebecca Sax, Michael Ye, and Candace Smalley for help with data processing and coding.

Cite this article

Chan, J., Paletz, S.B.F. & Schunn, C.D. Analogy as a strategy for supporting complex problem solving under uncertainty. Mem Cogn 40, 1352–1365 (2012). https://doi.org/10.3758/s13421-012-0227-z

DOI: https://doi.org/10.3758/s13421-012-0227-z