Abstract

Recent research has suggested that words presented with a slightly increased interletter spacing are identified faster than words presented with the default spacing settings (i.e.,

is faster to identify than

; see Perea, Moret-Tatay, & Gomez, 2011). To examine the nature of the effect of interletter spacing in visual-word recognition (i.e., affecting encoding processes vs. quality of information), we fitted Ratcliff’s (1978) diffusion model to a lexical decision experiment in which we manipulated a range of five interletter spacings (from condensed [–0.5] to expanded [1.5]). The results showed an effect of interletter spacing on latencies to word stimuli, which reflected a linear decreasing trend: Words presented with a more expanded interletter spacing were identified more rapidly than those with a narrower spacing. Fits from the diffusion model revealed that interletter spacing produces small changes in the encoding process rather than changes in the quality of lexical information. This finding opens a new window of opportunities to examine the role of interletter spacing in more applied settings.

Introduction

Although studies on the role of typographical and perceptual factors during visual-word recognition and reading have a long tradition (see Huey, 1908; Tinker, 1963), this area of research has been relatively neglected in the past decades, despite its obvious practical implications (Legge, Mansfield, & Chung, 2001; see also Moret-Tatay & Perea, 2011; Slattery & Rayner, 2010). In the present study, we focus on how variations of interletter spacing affect the recognition of visually presented words. Changes in interletter spacing can have either a beneficial or a deleterious effect in visual-word recognition, depending on its magnitude (see, e.g., Chung, 2002; Perea, Moret-Tatay, & Gómez, 2011). On the one hand, very large interletter spacings hinder the perceptual integrity of the whole word (e.g., as in

) and (unsurprisingly) produce longer word identification times—indeed, this manipulation has been employed as a way to degrade words (e.g., see Cohen, Dehaene, Vinckier, Jobert, & Montavont, 2008). But on the other hand—and more importantly for the present purposes—small increases in interletter spacing (relative to the default settings; compare

vs.

) do not destroy the integrity of the written word but do produce two potential benefits: fewer “crowding” effects (i.e., less interference from the neighboring letters; see Bouma, 1970; O’Brien, Mansfield, & Legge, 2005) and a more accurate process of letter position coding (see Davis, 2010; Gomez, Ratcliff, & Perea, 2008).

In a recent study based on the lexical decision task, Perea et al. (2011) found faster identification times for words presented with a slightly wide interletter spacing (+1.2; e.g.,

) than for words presented with the default spacing (0.0; e.g.,

; see Latham & Whitaker, 1996, and McLeish, 2007, for similar findings with other paradigms and populations). (The interletter spacing levels were taken from the values provided by Microsoft Word; e.g., the value +1.2 refers to an expanded intercharacter spacing of 1.2 points in this application.) Perea et al. concluded that small increases in interletter spacing (relative to the default settings) produced a benefit for lexical access, but they acknowledged that “more research is needed to examine in greater detail the optimal interletter value using a large set of interletter spacing conditions” (p. 350). The present experiment aims to fill this gap. In the present experiment, we employed a parametric approach with five levels of interletter spacing: condensed (–0.5), as in

; default (0.0), as in

; expanded (+0.5), as in

; expanded (+1.0), as in

; and expanded (+1.5), as in

. As in Perea et al.’s study, we used the most common word identification laboratory task: the lexical decision task (see Balota et al., 2007); note that the effects obtained with this task have typically been replicated in normal silent reading (Rayner, 1998; see also Davis, Perea, & Acha, 2009; Perea & Pollatsek, 1998). (We examine the potential implications of the present experiment for normal silent reading in the Discussion section.)

The second goal of the present experiment was to examine the nature of the effect of interletter spacing. To do that, we employed Ratcliff’s (1978) diffusion model for speeded two-choice decisions. This model has been quite successful at accounting for lexical decision data (e.g., Ratcliff, Gomez, & McKoon, 2004; see also Gomez, Ratcliff, & Perea, 2007; Ratcliff, Perea, Colangelo, & Buchanan, 2004; Wagenmakers, Ratcliff, Gomez, & McKoon, 2008). According to the diffusion model account of the lexical decision task, the visual stimulus is encoded so that the relevant stimulus features (e.g., lexical features) are utilized to accumulate evidence toward a “word” or “nonword” response. The accumulation of evidence is assumed to occur in a noisy manner. The two aforementioned processes (encoding and accumulation of evidence) are represented by two separate parameters in the model (T er and drift rate, respectively). Importantly, in a diffusion model, changes in these two parameters produce different effects in qualitative aspects of the data. If the T er parameter changes, there should be shifts in the response time (RT) distributions with no change in their shape (see Gomez et al., 2007), and in addition, there should not be any effect on error rates. On the other hand, changes in the drift rate produce greater effects in the tail than in the leading edge of the RT distributions (i.e., the .1 quantile) and also affect error rates (see Note 1). Therefore, if the effect of interletter spacing takes place in the early encoding, nondecisional stage, its effect should be a shift of the RT distribution with no effect on accuracy. Alternatively, if the impact of interletter spacing occurs in the word system, then one would expect some changes in the drift rate, with consequent changes in the RT distributions and error rates. We should note here that Perea et al. (2011) briefly discussed the RT distributions (with no fits of the diffusion model): The effect of interletter spacing on words grew very slightly as a function of RT quantiles, while the changes in error rates were minimal. However, explicit fits are necessary to corroborate that observation, in particular by using a wider range of interletter spacing conditions. To obtain stable estimations for the diffusion model, we employed a large number of items per condition (60) in the experiment.
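The contrast between the two predictions can be illustrated with a small simulation. The sketch below is not the fitting procedure used in this article; it is a bare-bones random walk approximation of a diffusion process with no across-trial variability. The boundary separation `a`, the noise scale `s`, the step size `dt`, and the exaggerated drift rates in the usage lines are all hypothetical values chosen only to make the pattern visible.

```python
import random


def simulate(drift, ter, n_trials=1000, a=0.12, s=0.1, dt=0.002, seed=1):
    """Simulate a simplified diffusion process (Euler approximation).

    Returns the sorted RTs (in seconds) of responses that reach the upper
    ("correct") boundary, plus the proportion of such responses.
    a, s, and dt are illustrative assumptions, not fitted values.
    """
    rng = random.Random(seed)
    step_sd = s * dt ** 0.5
    rts, n_correct = [], 0
    for _ in range(n_trials):
        x, t = a / 2, 0.0              # unbiased starting point
        while 0.0 < x < a:
            x += drift * dt + rng.gauss(0.0, step_sd)
            t += dt
        if x >= a:
            n_correct += 1
            rts.append(ter + t)        # nondecision time is purely additive
    return sorted(rts), n_correct / n_trials


def quantile(sorted_values, p):
    """Linear-interpolation quantile of an already sorted list."""
    pos = p * (len(sorted_values) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    return sorted_values[lo] + (pos - lo) * (sorted_values[hi] - sorted_values[lo])


if __name__ == "__main__":
    base, acc_base = simulate(drift=0.20, ter=0.492)
    ter_shift, acc_ter = simulate(drift=0.20, ter=0.473)    # T er change only
    drift_up, acc_drift = simulate(drift=0.30, ter=0.492)   # drift change only
    for label, rts, acc in [("base", base, acc_base),
                            ("T er", ter_shift, acc_ter),
                            ("drift", drift_up, acc_drift)]:
        print(label, round(quantile(rts, 0.1), 3), round(quantile(rts, 0.9), 3), acc)
```

Because the same seed is reused, changing only T er moves every quantile by the same amount and leaves accuracy untouched, whereas changing only the drift rate should move the .9 quantile more than the .1 quantile and also change accuracy.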

Method

Participants

A group of 25 students at the University of Valencia took part in the experiment voluntarily. They were native speakers of Spanish, and all had either normal or corrected-to-normal vision.

Materials

We selected a set of 300 Spanish words from the B-Pal lexical database (Davis & Perea, 2005). The mean written frequency of these words was 89 occurrences per million words (range: 24–690); the mean length was 5.6 (range: 5–6); and the mean number of substitution-letter neighbors was 1.53 (range: 1–4). For the purposes of the lexical decision task, 300 orthographically legal nonwords were also created (mean length: 5.6 letters; range: 5–6). These nonwords had been created by changing two letters from Spanish words that did not form part of the word list. The stimuli were presented in Times New Roman 14-pt font (i.e., the same font as in the Perea et al., 2011, experiments). Five lists of stimuli were created to counterbalance the materials across letter spacings, so that each target appeared only once in each list, but in a different condition. The list of stimuli is available at www.uv.es/mperea/paramspacing.pdf. The participants were randomly assigned to each list.
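The counterbalancing scheme described above can be sketched as a Latin square rotation. This is only one way to satisfy the stated constraint (each item appears once per list, in a different condition across lists); the item labels and the function name `build_lists` are hypothetical, and the actual assignment used in the experiment may have differed.

```python
SPACINGS = ["-0.5", "0.0", "+0.5", "+1.0", "+1.5"]


def build_lists(items, conditions):
    """Rotate conditions across items so that every item appears once per
    list and in a different condition in each list (Latin square)."""
    k = len(conditions)
    lists = []
    for list_idx in range(k):
        assignment = {
            item: conditions[(i + list_idx) % k]
            for i, item in enumerate(items)
        }
        lists.append(assignment)
    return lists


if __name__ == "__main__":
    words = [f"word{i:03d}" for i in range(300)]   # placeholder item labels
    for n, lst in enumerate(build_lists(words, SPACINGS), start=1):
        per_condition = {c: list(lst.values()).count(c) for c in SPACINGS}
        print(f"List {n}: {per_condition}")
```

With 300 items and five spacings, each list contains 60 items per condition, which matches the number of items per condition reported for the experiment.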

Procedure

Participants were tested individually in a quiet room. Presentation of the stimuli and recording of latencies were controlled by a computer using DMDX (Forster & Forster, 2003). On each trial, a fixation point (+) was presented for 500 ms in the center of the monitor. Then, the stimulus item (in lowercase) was presented until the participant’s response. The letter strings were presented centered, in black, on a white background. The participants were instructed to push a button labeled sí “yes” if the letter string formed an existing Spanish word and a button labeled no if the letter string was not a word. Each participant received a different order of trials, and the whole experimental session lasted about 25 min.

Results

Incorrect responses (3.9% of the data) and RTs less than 250 ms or greater than 1,500 ms (less than 1.5% of the data) were excluded from the RT analyses. The mean correct RTs and error percentages from the participant analysis are presented in Table 1. ANOVAs based on the participant and item mean correct RTs were conducted according to a 5 (interletter spacing: condensed [–0.5], default [0.0], expanded [+0.5], expanded [+1.0], or expanded [+1.5]) × 5 (list: 1–5) design. List was included as a dummy factor in the statistical analyses to remove the error variance due to the counterbalancing lists.
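The exclusion rule for the RT analyses can be written as a short filter. This is a minimal sketch that assumes each trial is stored as a dictionary with hypothetical keys `rt` (in milliseconds) and `correct`; the cutoffs are the ones stated above.

```python
def trim_trials(trials, low_ms=250, high_ms=1500):
    """Keep correct responses whose RT is neither below low_ms nor above high_ms."""
    return [
        t for t in trials
        if t["correct"] and low_ms <= t["rt"] <= high_ms
    ]


if __name__ == "__main__":
    sample = [
        {"rt": 612, "correct": True},
        {"rt": 198, "correct": True},    # too fast: excluded
        {"rt": 1730, "correct": True},   # too slow: excluded
        {"rt": 655, "correct": False},   # error: excluded from RT analyses
    ]
    print(trim_trials(sample))
```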

Word data

The ANOVA on the latency data showed an effect of interletter spacing, F1(4, 80) = 3.94, MSE = 934, p < .007, η² = .16; F2(4, 1180) = 7.81, MSE = 5,391, p < .001, η² = .03. This effect reflected a decreasing linear trend (see Table 1), F1(1, 20) = 6.59, MSE = 2,147, p < .02, η² = .25; F2(1, 295) = 29.50, MSE = 5,869, p < .001, η² = .09, while the quadratic/cubic/quartic components were not significant (all Fs < 1).
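As a quick consistency check, an effect size can be recovered from an F ratio and its degrees of freedom. The sketch below assumes that the reported η² values are partial eta squared, which is an assumption on our part rather than something stated in the text; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from F and its degrees of freedom:
    (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # Omnibus spacing effect for words, by participants and by items
    print(round(partial_eta_squared(3.94, 4, 80), 2))
    print(round(partial_eta_squared(7.81, 4, 1180), 2))
```

Under that assumption, the omnibus values reproduce the reported .16 and .03.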

The ANOVA on the error data did not reveal any significant effects (both ps > .25).

Nonword data

The ANOVAs on the latency/error data failed to show any significant effects (all Fs < 1).

Diffusion model analysis

Within the diffusion model framework, different data patterns correspond to distinct parameter behavior. The behavior of the parameters can then be interpreted in terms of psychological processes. To this end, we present the fits to the grouped data that we obtained using the fitting routines described by Ratcliff and Tuerlinckx (2002). We calculated the accuracy and latency (i.e., the RTs at the .1, .3, .5, .7, and .9 quantiles) for “word” and “nonword” responses for all conditions and for all participants, and we obtained the group-level performance by averaging across subjects (i.e., vincentizing; Ratcliff, 1978; Vincent, 1912). Fitting averaged data is an appropriate procedure for fitting the diffusion model. In previous research (e.g., Ratcliff, Gomez, & McKoon, 2004; Ratcliff, Thapar, & McKoon, 2001), fits to averaged data provided parameter values similar to the values obtained by averaging across fits to individual participants. The averaged quantile RTs were used for the diffusion model fits as follows: The model generated for each response the predicted cumulative probability within the time frames bounded by the five empirical quantiles. Subtracting the cumulative probabilities for each successive quantile from those of the next higher quantile yields the proportions of responses between each pair of quantiles, which are the expected values for the chi-square computation. The observed values are the empirical proportions of responses that fall within a bin bounded by the 0, .1, .3, .5, .7, .9, and 1.0 quantiles, multiplied by the proportion of responses for that choice (e.g., if there is a .965 response proportion for the word alternative, the proportions would be .965*.1, .965*.2, .965*.2, .965*.2, .965*.2, and .965*.1).
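The quantile-based computation just described can be sketched as follows. This is an illustration under stated assumptions, not the fitting routine of Ratcliff and Tuerlinckx (2002): `model_cdf` stands for any function that returns the model's cumulative probability of a given response by time t, and the statistic is assumed to take the usual form N × Σ (observed − expected)² / expected. All function names are hypothetical.

```python
BIN_MASS = [0.1, 0.2, 0.2, 0.2, 0.2, 0.1]  # bins bounded by 0, .1, .3, .5, .7, .9, 1.0


def vincentize(quantiles_by_participant):
    """Average each RT quantile across participants."""
    n = len(quantiles_by_participant)
    return [sum(column) / n for column in zip(*quantiles_by_participant)]


def observed_proportions(p_response):
    """Observed bin proportions, scaled by the proportion of that response."""
    return [p_response * mass for mass in BIN_MASS]


def expected_proportions(model_cdf, quantile_rts, p_response_model):
    """Differences between successive model cumulative probabilities
    evaluated at the empirical quantile RTs."""
    cumulative = [0.0] + [model_cdf(t) for t in quantile_rts] + [p_response_model]
    return [upper - lower for lower, upper in zip(cumulative, cumulative[1:])]


def chi_square(observed, expected, n_observations):
    """Chi-square over bins (assumed form: N * sum((o - e)^2 / e))."""
    return n_observations * sum(
        (o - e) ** 2 / e for o, e in zip(observed, expected)
    )


if __name__ == "__main__":
    group_quantiles = vincentize([[480, 540, 600, 680, 820],
                                  [500, 570, 640, 730, 900]])  # made-up RTs (ms)
    print(group_quantiles)
    print(observed_proportions(0.965))
```

In a full fit, the expected proportions for both responses in every condition would be summed into a single chi-square value, which a minimization routine would then reduce by adjusting the model parameters.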

In this article, we used the model as a tool to test specific hypotheses in the most principled way possible. Simply put, interletter spacing could affect the encoding time, the rate of accumulation of evidence, or a combination of these two parameters. Hence, we implemented three parameterizations of the diffusion model (see Ratcliff & McKoon, 2008, for a full description of the model and its parameters, and Table 2 for the parameter values here); the first parameterization is a fairly unconstrained implementation of the model in which the T er parameter (i.e., encoding/response process) was allowed to vary for each interletter space (same T er for words and nonwords, so five values of T er), and the drift rates (i.e., quality of information) were allowed to vary for each interletter spacing and also for words and nonwords (creating ten values of drift rate). For the next two parameterizations, we removed free parameters and obtained the loss in the quality of the fits in terms of chi-square (in the second parameterization, we allowed T er to vary, and in the third we allowed the drift rate to vary). These chi-squares are based on group data, so they cannot properly be used as absolute measures of fit; however, they provide us with an estimate of the loss or gain in the quality of the fit relative to each of the other parameterizations of the model.

The unconstrained parameterization yielded a chi-square value of 77.37. Interestingly, the value of the T er parameter decreased as a function of interletter spacing, from .492 in the condensed condition to .473 in the +1.5 condition. The value of the drift rates for words increased very slightly from the condensed condition (.264) to the +1.5 condition (.278).

Ter parameterization

Pure distributional shifts (i.e., changes in the locations of distributions) are naturally accounted for by allowing the T er parameter to vary (i.e., there were five values of T er, one for each level of spacing, and two drift rates, one for words and one for nonwords). This model yielded a chi-square value of 104.55, which was 14% greater than the value from the unconstrained model (see Ratcliff & Smith, 2010, for a similar result in a perceptual task).

Drift rate parameterization

Across a large variety of manipulations, the mean RT and the variance are correlated; this is so because effects tend to be larger in the tail of the RT distribution than in the faster responses (e.g., the word-frequency effect affects both the mean and the variance of the RTs). These effects are naturally accounted for by allowing the drift rate to vary (i.e., one value of T er and 10 values of drift rate: one for words and one for nonwords for each level of spacing); this model yielded a chi-square value of 126.63, which was 38% worse than the value for the unconstrained model.
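To keep track of how many condition-dependent parameters each parameterization estimates, the bookkeeping can be written out explicitly. The sketch counts only the T er and drift rate values described in the text; the remaining diffusion model parameters are assumed to be shared by all three parameterizations and are therefore left out. The dictionary and function names are hypothetical.

```python
N_SPACINGS = 5   # condensed, default, and three expanded levels
N_LEXICAL = 2    # words and nonwords

# Number of T er and drift rate values estimated by each parameterization
PARAMETERIZATIONS = {
    "unconstrained": {"ter": N_SPACINGS, "drift": N_SPACINGS * N_LEXICAL},
    "ter": {"ter": N_SPACINGS, "drift": N_LEXICAL},
    "drift": {"ter": 1, "drift": N_SPACINGS * N_LEXICAL},
}


def condition_parameter_count(name):
    """Total number of T er plus drift rate values for one parameterization."""
    spec = PARAMETERIZATIONS[name]
    return spec["ter"] + spec["drift"]


if __name__ == "__main__":
    for name in PARAMETERIZATIONS:
        print(name, condition_parameter_count(name))
```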

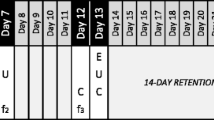

To summarize, the T er parameterization provides the best balance of parsimony and quality of fits. It has eight fewer parameters than the unconstrained model and four fewer parameters than the drift rate model. Furthermore, it nicely fits the empirical patterns, with a shift in the RT distributions and also a null effect on error rates as a function of spacing (see Fig. 1). One feature of the data, however, does not seem to be accounted for by any of the parameterizations: Interletter spacing affected only the responses to words, not to nonwords, thus suggesting an interaction between lexicality and the encoding process. One can speculate on the reasons for this pattern of results. In previous applications of the diffusion model to the lexical decision task, the encoding time has been assumed to be unaffected by lexical status. Nonetheless, in Table 3 of Ratcliff, Gomez, and McKoon (2004), it can be seen that the diffusion model consistently underestimates the empirical .1 quantile for pseudowords by 5–15 ms. Clearly, more work is needed to understand the specific nature of word-versus-nonword decisions in lexical decision (see Davis, 2010, for a discussion).

Fig. 1 Group response time (RT) distributions in the five interletter spacing conditions for word (left panel) and nonword (right panel) stimuli. Each column of points represents the five RT quantiles (.1, .3, .5, .7, and .9) in each letter spacing condition. These values were obtained by computing the quantiles for individual participants and subsequently averaging the obtained values for each quantile over the participants (see Vincent, 1912). The proportions shown at the bottom of the figure are the accuracy rates for each condition. The plus signs joined by dotted lines represent the fits of the T er parameterization of the diffusion model. The offset in the horizontal dimension represents the size of the model miss.

Discussion

The findings from the present experiment are clear. First, small increases of interletter spacing (relative to the default settings) lead to faster word identification times, extending the findings of Perea et al. (2011) to a wider range of interletter spacing conditions. Second, the effect of interletter spacing shows a decreasing linear trend (see Table 1). Third, the effect of interletter spacing occurs at the encoding level rather than at a decisional level, as deduced from the fits of the diffusion model (see Fig. 1).

What about the locus of the effect of interletter spacing for word stimuli? The fits of the diffusion model place it at an encoding level rather than at the decision level: The fits were very good when the encoding parameter (T er) was allowed to vary freely across the spacing conditions, while they were rather poor when the drift rate (i.e., the quality of lexical information) was allowed to vary across conditions (see Fig. 1). To our knowledge, this is the first time in which a manipulation at the stimulus level has produced an effect on the encoding time rather than on the quality of information (i.e., drift rates); note that this finding undermines a common criticism of the diffusion model approach, that “everything goes to drift rate” (see Note 2). This encoding advantage for words with a slightly wide interletter spacing was presumably due to less “crowding” or a more accurate “letter position coding” process; the present experiment was not designed to disentangle these two accounts, however. Thus, increased letter spacing could be thought to enhance the perceptual normalization phase, which would affect T er but not drift rates. This pattern of data is consistent with the experimental findings of Yap and Balota (2007), who found that degrading the lexical string led to a shift of the RT distribution, with little or no effect on the error data. Although Yap and Balota did not conduct any explicit modeling, this finding would be consistent with the view that stimulus degradation affects the encoding process (i.e., T er in the diffusion model), namely, that the decision process would not begin until the appropriate information had been extracted from the stimulus.

Importantly, the presence of faster identification times for words presented with a slightly wider interletter spacing than the default one (i.e.,

faster than

) has obvious practical implications. The “default” interletter settings in word-processing packages (and publishing companies) may not be optimal—keep in mind that this “default” setting was established on the basis of no empirical evidence (see McLeish, 2007). One fair question to ask is whether or not the present findings can be generalized from the recognition of single, isolated words (e.g., when reading the names of products, stores, or bus/subway stations) to the context of text reading. The vast majority of the effects obtained in visual-word recognition tasks have been generalized to normal reading experiments—with the advantage that word-identification tasks can be easily modeled in terms of the components of word processing. Nonetheless, the potential advantages of interletter spacing in the fovea during word identification (e.g., less crowding, more accurate letter position coding) may be canceled out by the fact that the N + 1 or N + 2 words in a sentence would be presented slightly farther away from fixation (i.e., with a decrease in acuity; compare the sentences “

” vs. “the cat is on the couch”). In this respect, in an unpublished study, Tai, Sheedy, and Hayes (2009) used nine conditions, from condensed interletter spacing (–1.75; e.g.,

) through expanded interletter spacing (2.00; e.g.,

) in a reading task in which participants had to read a novel while their eye movements were monitored. Tai et al. reported that fixation durations decreased with interletter spacing in a linear way—as occurred in the present experiment with lexical decision times. However, the number of fixations and the regression rate in the Tai et al. experiment also increased with interletter spacing, and the overall reading rate was not affected by interletter spacing. More research will be necessary to assess the role of interletter spacing in a normal reading scenario, not only with adult skilled readers, but also with other populations (e.g., low-vision individuals, young readers, or dyslexic readers).

In sum, the present experiment with adult skilled readers has revealed that small increases in interletter spacing (relative to the default settings) have a positive impact on lexical access, and that the locus of the effect is at an early encoding (nondecisional) stage. This finding opens a new window of opportunities to examine the role of interletter spacing not only in other well-known word identification paradigms, but also in more applied settings (i.e., normal silent reading).

Notes

1. It is important to note here that two influential factors in visual-word recognition experiments, word frequency and repetition, are related to the quality of information (e.g., RT distributions corresponding to high-frequency words [or repeated words] have a less pronounced asymmetry than do those corresponding to low-frequency words [or nonrepeated words]).

2. This is certainly the case for word stimuli. Nonetheless, we should note here that, in letter discrimination and brightness discrimination tasks, Ratcliff and Smith (2010) found that adding static or dynamic noise produced changes in the T er parameter.

References

Balota, D. A., Yap, M. J., Cortese, M. J., Hutchison, K. A., Kessler, B., Loftis, B., & Treiman, R. (2007). The English Lexicon Project. Behavior Research Methods, 39, 445–459. doi:10.3758/BF03193014

Bouma, H. (1970). Interaction effects in parafoveal letter recognition. Nature, 226, 177–178.

Chung, S. T. L. (2002). The effect of letter spacing on reading speed in central and peripheral vision. Investigative Ophthalmology and Visual Science, 43, 1270–1276.

Cohen, L., Dehaene, S., Vinckier, F., Jobert, A., & Montavont, A. (2008). Reading normal and degraded words: Contribution of the dorsal and ventral visual pathways. NeuroImage, 40, 353–366. doi:10.1016/j.neuroimage.2007.11.036

Davis, C. J. (2010). The spatial coding model of visual word identification. Psychological Review, 117, 713–758. doi:10.1037/a0019738

Davis, C. J., & Perea, M. (2005). BuscaPalabras: A program for deriving orthographic and phonological neighborhood statistics and other psycholinguistic indices in Spanish. Behavior Research Methods, 37, 665–671. doi:10.3758/BF03192738

Davis, C. J., Perea, M., & Acha, J. (2009). Re(de)fining the orthographic neighborhood: The role of addition and deletion neighbors in lexical decision and reading. Journal of Experimental Psychology: Human Perception and Performance, 35, 1550–1570. doi:10.1037/a0014253

Forster, K. I., & Forster, J. C. (2003). DMDX: A Windows display program with millisecond accuracy. Behavior Research Methods, Instruments, & Computers, 35, 116–124. doi:10.3758/BF03195503

Gomez, P., Ratcliff, R., & Perea, M. (2007). A model of the go/no-go task. Journal of Experimental Psychology: General, 136, 389–413.

Gomez, P., Ratcliff, R., & Perea, M. (2008). The overlap model: A model of letter position coding. Psychological Review, 115, 577–600. doi:10.1037/a0012667

Huey, E. B. (1908). The psychology and pedagogy of reading. New York, NY: Macmillan.

Latham, K., & Whitaker, D. (1996). A comparison of word recognition and reading performance in foveal and peripheral vision. Vision Research, 36, 2665–2674. doi:10.1016/0042-6989(96)00022-3

Legge, G. E., Mansfield, J. S., & Chung, S. T. L. (2001). Psychophysics of reading: XX. Linking letter recognition to reading speed in central and peripheral vision. Vision Research, 41, 725–734.

McLeish, E. (2007). A study of the effect of letter spacing on the reading speed of young readers with low vision. British Journal of Visual Impairment, 25, 133–143.

Moret-Tatay, C., & Perea, M. (2011). Do serifs provide an advantage in the recognition of written words? Journal of Cognitive Psychology, 23, 619–624. doi:10.1080/20445911.2011.546781

O’Brien, B. A., Mansfield, J. E., & Legge, G. E. (2005). The effect of print size on reading speed in dyslexia. Journal of Research in Reading, 28, 332–349.

Perea, M., Moret-Tatay, C., & Gómez, P. (2011). The effects of interletter spacing in visual-word recognition. Acta Psychologica, 137, 345–351. doi:10.1016/j.actpsy.2011.04.003

Perea, M., & Pollatsek, A. (1998). The effects of neighborhood frequency in reading and lexical decision. Journal of Experimental Psychology: Human Perception and Performance, 24, 767–779. doi:10.1037/0096-1523.24.3.767

Ratcliff, R. (1978). A theory of memory retrieval. Psychological Review, 85, 59–108. doi:10.1037/0033-295X.85.2.59

Ratcliff, R., Gomez, P., & McKoon, G. (2004). A diffusion model account of the lexical decision task. Psychological Review, 111, 159–182. doi:10.1037/0033-295X.111.1.159

Ratcliff, R., & McKoon, G. (2008). The diffusion decision model: Theory and data for two-choice decision tasks. Neural Computation, 20, 873–922. doi:10.1162/neco.2008.12-06-420

Ratcliff, R., Perea, M., Colangelo, A., & Buchanan, L. (2004). A diffusion model account of normal and impaired readers. Brain and Cognition, 55, 374–382. doi:10.1016/j.bandc.2004.02.051

Ratcliff, R., & Smith, P. L. (2010). Perceptual discrimination in static and dynamic noise: The temporal relation between perceptual encoding and decision making. Journal of Experimental Psychology: General, 139, 70–94. doi:10.1037/a0018128

Ratcliff, R., Thapar, A., & McKoon, G. (2001). The effects of aging on reaction time in a signal detection task. Psychology and Aging, 16, 323–341. doi:10.1037/0882-7974.16.2.323

Ratcliff, R., & Tuerlinckx, F. (2002). Estimating parameters of the diffusion model: Approaches to dealing with contaminant reaction times and parameter variability. Psychonomic Bulletin & Review, 9, 438–481. doi:10.3758/BF03196302

Rayner, K. (1998). Eye movements in reading and information processing: 20 years of research. Psychological Bulletin, 124, 372–422. doi:10.1037/0033-2909.124.3.372

Slattery, T. J., & Rayner, K. (2010). The influence of text legibility on eye movements during reading. Applied Cognitive Psychology, 24, 1129–1148.

Tai, Y. C., Sheedy, J., & Hayes, J. (2009). The effect of interletter spacing on reading. Paper presented at the Computer Displays and Vision Conference, Forest Grove, OR.

Tinker, M. A. (1963). Legibility of print. Ames, Iowa: Iowa State University Press.

Vincent, S. B. (1912). The function of the vibrissae in the behavior of the white rat. Animal Behavior Monograph, 1(5).

Wagenmakers, E.-J., Ratcliff, R., Gomez, P., & McKoon, G. (2008). A diffusion model account of criterion shifts in the lexical decision task. Journal of Memory and Language, 58, 140–159. doi:10.1016/j.jml.2007.04.006

Yap, M. J., & Balota, D. A. (2007). Additive and interactive effects on response time distributions in visual word recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33, 274–296. doi:10.1037/0278-7393.33.2.274

Author’s note

The research reported in this article was partially supported by Grant PSI2008-04069/PSIC and CONSOLIDER-INGENIO2010 CSD2008-00048 from the Spanish Ministry of Science and Innovation. We thank Colin Davis, Corey White, and Tessa Warren for helpful comments on an earlier draft. We also thank Carmen Moret for running the participants in the experiment.

Perea, M., Gomez, P. Increasing interletter spacing facilitates encoding of words. Psychon Bull Rev 19, 332–338 (2012). https://doi.org/10.3758/s13423-011-0214-6

DOI: https://doi.org/10.3758/s13423-011-0214-6